Abstract

This work focuses on elucidating issues related to an increasingly common technique of multi-model ensemble (MME) forecasting. The MME approach is aimed at improving the statistical accuracy of imperfect time-dependent predictions by combining information from a collection of reduced-order dynamical models. Despite some operational evidence in support of the MME strategy for mitigating the prediction error, the mathematical framework justifying this approach has been lacking. Here, this problem is considered within a probabilistic/stochastic framework which exploits tools from information theory to derive a set of criteria for improving probabilistic MME predictions relative to single-model predictions. The emphasis is on a systematic understanding of the benefits and limitations associated with the MME approach, on uncertainty quantification, and on the development of practical design principles for constructing an MME with improved predictive performance. The conditions for prediction improvement via the MME approach stem from the convexity of the relative entropy which is used here as a measure of the lack of information in the imperfect models relative to the resolved characteristics of the truth dynamics. It is also shown how practical guidelines for MME prediction improvement can be implemented in the context of forced response predictions from equilibrium with the help of the linear response theory utilizing the fluctuation–dissipation formulas at the unperturbed equilibrium. The general theoretical results are illustrated using exactly solvable stochastic non-Gaussian test models.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Dynamical prediction of complex multi-scale systems based on imperfect models and spatiotemporally sparse observations of the truth dynamics is a notoriously difficult problem which is, nevertheless, essential in many applications such as climate-atmosphere science (Emanuel et al. 2005; Randall 2007), materials science (Chatterjee and Vlachos 2007; Katsoulakis et al. 2003), neuroscience (Rangan et al. 2009), or systems biology and biochemistry (Noé et al. 2009; Sriraman et al. 2005; Das et al. 2006; Hummer and Kevrekidis 2003). Due to the high-dimensional, multi-scale nature of such time-dependent problems, it is challenging to obtain even statistically accurate predictions of the coarse-grained characteristics of the truth dynamics. Advances in computing power and new theoretical insights have spurred the development of a plethora of reduced-order models (e.g., Epstein 1969; Emanuel et al. 2005; Neelin et al. 2006; Randall 2007; Sapsis and Majda 2013d; Chen et al. 2014a; Thual et al. 2014) and data assimilation techniques (e.g., Anderson 2007; Houtekamer and Mitchell 2001; Harlim and Majda 2010; Gershgorin et al. 2010a; Majda and Harlim 2012; Majda et al. 2014; Grooms et al. 2014; Chen et al. 2014b). Various ways of minimizing uncertainties in imperfect predictions and validating reduced-order models have been developed in this context (e.g., Majda and Gershgorin 2010, 2011a, b; Branicki and Majda 2012c, 2014; Majda and Branicki 2012c). Data assimilation aside, one of the most important challenges in improving imperfect dynamical predictions concerns the mitigation of model error. Recent developments provide new techniques for mitigating coarse-graining errors, and for counteracting errors due to neglecting nonlinear interactions between the resolved and unresolved processes in reduced-order models; these include the stochastic superparameterization (Grooms and Majda 2013, 2014; Majda and Grooms 2014; Grooms et al. 2015; Slawinska et al. 2015) and reduced subspace closure techniques (Sapsis and Majda 2013a, b, c).

This work focuses on elucidating issues related to an increasingly common technique of multi-model ensemble (MME) predictions which is complementary to improving individual imperfect models. The heuristic idea behind MME prediction is simple: Given a collection of imperfect models, consider the prediction obtained through a linear superposition of individual model forecasts in the hope of mitigating the overall prediction error. While there is some evidence in support of the MME approach for improving imperfect predictions, particularly in atmospheric sciences (e.g., Palmer et al. 2005; Stephenson et al. 2005; Doblas-Reyes et al. 2005; Hagedorn et al. 2005; Weigel et al. 2008; Weisheimer et al. 2009; van der Linden and Mitchell 2009; Oldenborgh et al. 2012), a systematic framework justifying this approach has been lacking. In particular, it is not obvious which imperfect models, and with what weights, should be included in the MME forecast in order to improve predictions within this framework. Consequently, virtually all operational MME prediction systems for weather and climate are based on equal-weight ensembles (Hagedorn et al. 2005; Weigel et al. 2008; van der Linden and Mitchell 2009; Weisheimer et al. 2009; Oldenborgh et al. 2012) which are likely to be far from optimal (Doblas-Reyes et al. 2005) in the absence of additional restrictions imposed on the ensemble members. Our main focus is on a systematic understanding of benefits and limitations associated with the MME approach to improving imperfect predictions; important practical issues in this context are the following:

-

(a)

How to measure the skill (statistical accuracy) of dynamic MME predictions relative to single-model predictions? (This setting should not be confused with a purely statistical modeling in which the underlying dynamics is ignored.)

-

(b)

Is there a condition guaranteeing an improvement in predictions via the MME approach relative to single-model predictions?

We consider the MME prediction within a probabilistic/stochastic framework which exploits tools from information theory in order to systematically understand the characteristics of such an approach. This probabilistic framework can be utilized in two different contexts: First, when dealing with deterministic imperfect models, one can consider a time-dependent probability density function constructed by initializing the models from a given distribution of initial conditions. Second, the probabilistic prediction framework arises naturally when using stochastic reduced-order models in imperfect predictions which is an increasingly common approach (e.g., Epstein 1969; Lorenz 1968, 1969; Palmer 2001; Palmer et al. 2005; Majda et al. 2005; Majda and Wang 2010; Sapsis and Majda 2013d; Chen et al. 2014a; Thual et al. 2014). In many operational situations, dynamic predictions can be obtained through a weighted superposition of forecasts obtained from a collection of imperfect models (e.g., Hagedorn et al. 2005; Weigel et al. 2008; van der Linden and Mitchell 2009; Weisheimer et al. 2009; Oldenborgh et al. 2012). However, the individual imperfect models are usually highly complex and not easily tuneable, and it is desirable to consider the possibility of prediction improvement by adjusting only the ensemble weights. In order to shed light on the issues (a)–(b) above, we set out an information-theoretic framework capable of

-

(i)

Quantification of uncertainty and improving the imperfect predictions via the MME approach;

-

(ii)

Providing practical guidelines for improving dynamic MME predictions given a small collection of available imperfect models.

Here, we derive a simple criterion for improving probabilistic predictions via the MME approach. Moreover, we provide a simple justification of why the MME prediction can have a better prediction skill than the best single model in the ensemble. Finally, we derive systematic guidelines for constructing finite model ensembles which are likely to have a superior predictive skill over any single model in the ensemble. These results stem largely from the convexity of the relative entropy (e.g., Cover and Thomas 2006) which is used here as a measure of the lack of information in the imperfect models relative to the resolved characteristics of the truth dynamics. We show that the guidelines for MME prediction improvement in the context of a forced perturbation from an equilibrium can be implemented with the help of the linear response theory and the ‘fluctuation–dissipation’ approach for forced dissipative systems (Majda et al. 2005, 2010b, a; Leith 1975; Abramov and Majda 2007; Gritsun et al. 2008; Majda and Gershgorin 2011b); this approach follows from the earlier work on improving imperfect predictions in the presence of model error in the single-model setup (see for example, Kleeman 2002; Majda et al. 2002; Kleeman et al. 2002; Majda and Gershgorin 2010, 2011a, b; Gershgorin and Majda 2012; Branicki and Majda 2012c; Majda and Branicki 2012c). When considering prediction improvement for the initial value problem, the practical implementation of the condition for skill improvement through MME can be carried out in the hindcast/reanalysis mode (e.g., Kim et al. 2012). Although we focus here on mitigating the prediction error via the MME approach, it is worth stressing that the ultimate goal in imperfect reduced-order prediction should involve a synergistic approach that combines the improvement in reduced-order models with an MME framework for both data assimilation and prediction.

This paper is structured as follows: First, in Sect. 2, we motivate the need for a systematic analysis of the MME prediction problem. In Sect. 3, we derive the information-theoretic criterion for improving MME predictions relative to single-model predictions. A set of particularly useful results is discussed in Sect. 3.2 where Gaussian models are used in a MME; this approach provides a helpful intuition for dealing with the general results of Sect. 3. Section 4 combines the analytical estimates of Sect. 3 with simple numerical tests which are based on statistically exactly solvable models described in Sect. 4.1. We conclude in Sect. 5 by summarizing the most important results, and we discuss directions for further research in this area, including extensions of the MME approach to improving imperfect data assimilation techniques. Technical details associated with the analytical estimates derived in Sect. 3 are presented in the appendices.

2 Motivating Examples

Consider the dynamics of a high-dimensional, nonlinear system where only a small subset of its dynamical variables can be reasonably modeled or accessed through empirical measurements. The resolved dynamics of the full system is affected by nonlinear, multi-scale interactions with unresolved processes which cannot be observed or even correctly modeled (e.g., Majda and Wang 2006). Nevertheless, we are interested in a statistically accurate prediction of the resolved non-equilibrium dynamics using a collection of imperfect reduced-order dynamical models which approximate or neglect the interactions between the resolved and unresolved processes. To this end, assume that the state vector of dynamical variables in the true high-dimensional system decomposes as \(\mathbf{v}=(\pmb {u},\pmb {v})\), where \(\pmb {u}\in {\mathbb {R}}^K, K<\infty \), denotes the resolved variables and \(\pmb {v}\in {\mathbb {R}}^N\) denotes the unresolved variables; we tacitly assume that \(K\ll N\) which is natural when dealing with complex multi-scale dynamics such as the turbulent dynamics of geophysical flows (e.g., Majda and Wang 2006). The time-dependent probability density associated with the MME of imperfect reduced-order models on the subspace of the resolved variables \(\pmb {u}\) is given by a convex superposition of the model densities in the form

where \(\pi ^{{\textsc {m}}_i}_t\) represents probability densities associated with the imperfect models \({\textsc {m}}_i\) in some class \({\mathcal {M}}\). We are particularly interested in mitigating the MME prediction error by adjusting the model weights \(\alpha _i\) in (1) with fixed characteristics of the individual imperfect models \({\textsc {m}}_i\in {\mathcal {M}}\) which is desirable from the practical viewpoint. The lack of information at time \(t\) between the MME and the truth statistics on the resolved subspace of variables is measured using the relative entropy (Kullback and Leibler 1951) given by

This nonnegative functional satisfying \({\mathcal {P}}(\pi _t,\pi ^{{\textsc {mme}}}_{\pmb {\alpha },t})= 0\) only when \(\pi _t=\pi ^{{\textsc {mme}}}_{\pmb {\alpha },t}\) is not a proper metric. However, it possesses a number of desirable properties such as convexity in the pair \((\pi ,\pi ^{{\textsc {mme}}})\), and it satisfies a ‘triangle equality’ for a certain class of densities discussed later (see also Majda et al. 2005; Branicki and Majda 2012c; Majda and Branicki 2012c); moreover, the relative entropy is invariant under general changes in variables (Majda et al. 2002; Majda and Wang 2006) [i.e., (2) can be written in a form which is independent of the dominating measure which we skip here but see, e.g., Gibbs and Su 2002]. We use the relative entropy (2) as an information-based measure of the time-dependent error in the imperfect probabilistic predictions; additional measures of predictive skill were introduced earlier in the context of uncertainty quantification in the single-model context and are briefly discussed in Sect. 4.3.1 (see also Majda and Gershgorin 2011a, b; Majda and Wang 2010; Branicki and Majda 2012c; Majda and Branicki 2012c). Here, we show that the information-theoretic approach is very useful when considering prediction improvement in the MME context. In particular, this setting helps address the following general questions:

-

What characteristics of the model ensemble lead to uncertainty reduction in MME predictions relative to imperfect predictions with a single model?

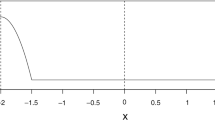

In the subsequent sections, we derive a set of information criteria for improving probabilistic dynamical predictions via the MME approach relative to the best single imperfect model. However, before embarking on a detailed analysis, some motivating examples are presented in Fig. 1 which shows that: (i) not every MME prediction is superior to the single-model prediction and (ii) the structure of the optimal-weight MME depends on both the truth dynamics and the imperfect models in the ensemble. The top-row insets show the evolution of the prediction error in terms of the relative entropy (2) in three different dynamical regimes of a non-Gaussian truth dynamics (described later in Sect. 4.1.2). In all cases, the statistics of the initial conditions and the marginal equilibrium for the resolved dynamics in the imperfect Gaussian models \({\textsc {m}}_i\) coincide with those of the truth dynamics; in addition, the single-model predictions are carried out with an imperfect model tuned to have the correct correlation time \(\tau ^\mathrm{trth}\) of the resolved dynamics at equilibrium. The bottom row in Fig. 1 shows the weight structure of the MME with individual models in the ensemble labeled by the correlation time \(\tau \) of their equilibrium dynamics; the optimal-weight MME is obtained in this case by minimizing the average relative entropy \(\frac{1}{T}\!\int _0^T\!{\mathcal {P}}(\pi _t,\pi ^{{\textsc {mme}}}_t){\mathrm{d}}t\) over the whole time interval considered. Note that the error of the MME prediction relative to the single-model prediction varies significantly between the three configurations in Fig. 1a–c; moreover, the structure of the optimal-weight MME changes drastically from an MME containing only models with \(\tau ^{{\textsc {m}}_i}>\tau ^\mathrm{trth}\) in (a), to an MME with \(\tau ^{{\textsc {m}}_i}<\tau ^\mathrm{trth}\) in (b), to an MME containing a single imperfect model with the shortest correlation time in the ensemble in (c). The difference between the configuration in (a) and (b) lies in the initial statistical conditions: In (a), the initial conditions are such that the resolved dynamics is in a stable regime, while in (b), the resolved dynamics is initially in a transient unstable phase. The configuration shown in Fig. 1c corresponds to imperfect predictions of the resolved non-Gaussian dynamics when the truth equilibrium statistics is significantly skewed. (See Sect. 4.3.2 for more details.)

Dependence of the prediction error within the MME framework on the nature of the non-Gaussian truth dynamics [given by (38) in Sect. 4.1.2]; here, the MME mixture density (1) contains Gaussian models (26) with correct statistics of the initial conditions and correct marginal equilibrium for the resolved dynamics. Top-row insets show the evolution of the error via the relative entropy (2) for predicting the resolved truth dynamics with: (left) symmetric fat-tailed equilibrium density with initial statistical conditions in a stable regime of the truth dynamics, (middle) symmetric fat-tailed equilibrium density with initial conditions in an unstable regime of the truth, and (right) skewed equilibrium density with initial conditions in a stable regime of the truth. The bottom insets show the weights \(\alpha _i\) in MME density (1); the optimal-weight MME (9) minimizes the time-averaged relative entropy by adjusting the ensemble weights. Note that the performance of MME prediction, as well as the structure of the optimal-weight MME, depends strongly on the nature of the truth dynamics

Clearly, the performance of the MME approach for improving imperfect predictions depends on both the structure of the MME and the nature of the truth dynamics. The above examples highlight the need for a more analytical insight which would allow to understand when and why the MME approach leads to improved predictions. In the next section, we focus solely on this topic and we obtain information-based criteria for prediction improvement via the MME approach. The general theoretical results derived in Sect. 3 are discussed further in Sect. 4 based on two simple but revealing test models described in Sect. 4.1.

3 Information-Theoretic Criteria for Improving MME Predictions

Here, we develop an information-theoretic framework for assessing the potential improvement in imperfect predictions through the MME approach. First, in Sect. 3.1, we derive a condition for improving the predictive skill within the MME framework; this condition requires evaluating certain least-biased estimates of the truth which are obtained by maximizing the Shannon entropy subject to a finite number of moment constraints, making this approach amenable to applications. Implications of this information-based criterion are discussed in Sect. 3.1.3 for both the initial value problem and the forced response prediction. Further insight and intuition can be gained by restricting the MME prediction problem to the Gaussian mixture configuration which is discussed in Sect. 3.2. The results presented here exploit the convexity of the relative entropy (2) between the truth and the MME density in (1) which measures the lack of information in the MME density relative to the resolved truth statistics. Further details, along with some simple proofs of the facts established below, are relegated to “Appendix 1”.

3.1 Improving Predictions Through MME Framework

Consider imperfect probabilistic predictions of the truth dynamics on the subspace of resolved variables \(\pmb {u}\in {\mathbb {R}}^K\) based on the MME with density \(\pi ^{{\textsc {mme}}}_{\pmb {\alpha },t}\) in (1). As in Sect. 2, we assume that the truth dynamics has the probability density function denoted by \(p_t({\pmb {u},\pmb {v}}),\,\pmb {v}\,{\in }\,{\mathbb {R}}^N,\;K{\ll }\,N\), and the corresponding marginal density on the resolved subspace is \(\pi _t(\pmb {u})=\int \!p_t(\pmb {u},\pmb {v}){\mathrm{d}}\pmb {v}\). Given some class \({\mathcal {M}}\) of reduced-order models for the resolved dynamics \(\pmb {u}(t)\), we define the best single model \({\textsc {m}}_*\) for making predictions at time \(t \) as the one with the smallest error in terms of the relative entropy

where \(\pi _t\) is the truth density, \(\pi ^{{\textsc {m}}}_t\) represents the probability density associated with the models \({\textsc {m}}\in {\mathcal {M}}\), and the relative entropy \({\mathcal {P}}(\pi _t,\pi ^{{\textsc {m}}}_t)\) measures the lack of information in \(\pi ^{{\textsc {m}}}_t\) relative to the truth marginal density \(\pi _t\) (see Majda and Gershgorin 2010, 2011a, b; Branicki and Majda 2012c; Majda and Branicki 2012c). Analogously, the best single model \({\textsc {m}}_{{\mathcal {I}}}^*\in {\mathcal {M}}\) for making predictions over the time interval \( {\mathcal {I}}\) is given by

where  measures the average lack of information over the time interval \({\mathcal {I}}\). We introduce the following information measures to quantify the performance of the MME prediction relative to the single-model prediction with model

measures the average lack of information over the time interval \({\mathcal {I}}\). We introduce the following information measures to quantify the performance of the MME prediction relative to the single-model prediction with model

where  denotes the integral average over the time interval \({\mathcal {I}}\), and \(\pi ^{\textsc {l}}\) in (5b) is the least-biased estimate of the truth density which maximizes the Shannon entropy subject to \({\textsc {l}}\) moment constraints (see (14) below and Mead and Papanicolaou (1984); Majda et al. (2005); Majda and Gershgorin (2011a, b); Branicki and Majda (2012c)). Importantly, the two measures in (5) have a common upper bound

denotes the integral average over the time interval \({\mathcal {I}}\), and \(\pi ^{\textsc {l}}\) in (5b) is the least-biased estimate of the truth density which maximizes the Shannon entropy subject to \({\textsc {l}}\) moment constraints (see (14) below and Mead and Papanicolaou (1984); Majda et al. (2005); Majda and Gershgorin (2011a, b); Branicki and Majda (2012c)). Importantly, the two measures in (5) have a common upper bound

if ensemble models in \(\mathcal {M}\) have the least-biased structure \({\textsc {m}}_i={\textsc {m}}_i^{{\textsc {l}}'}, {\textsc {l}}'\leqslant {\textsc {l}}\) (see (14) in §3.1.1); this fact stems from the convexity of the relative entropy (10) and the ‘triangle equality’ (11) satisfied by \(\mathcal {P_{\mathcal {I}}}\) which are discussed below. While the measure \(\mathfrak {P}^{\textsc {mme},{\textsc {m}}_{\diamond }}_{\pmb {\alpha },\mathcal {M},{\mathcal {I}}}\) is the most appropriate one, it is unrealistic to expect that it can be evaluated in practice since the exact truth density, \(\pi \), is unlikely to be known. On the other hand, the use of the least-biased estimate, \(\pi ^{\textsc {l}}\), of the truth density represents a practically achievable approach. Thus, we adopt the following:

Information Criterion I The MME prediction utilizing models \({\textsc {m}}\in {\mathcal {M}}\) with weights \(\pmb {\alpha }\) over the time interval \({\mathcal {I}}\) has a smaller error than the single model prediction with \({\textsc {m}}_{\diamond }\) if

Note that the single model \({\textsc {m}}_{\diamond }\) in (5) and (7) does not have to coincide with the best imperfect model \({\textsc {m}}^*_{\mathcal {I}}\) in (4) which is unknown in practice. For example, one might consider \({\textsc {m}}_{\diamond }\) to be the best single model \({\textsc {m}}_{{\mathcal {I}},{\textsc {l}}}^*\) relative to the least-biased truth estimate which is defined as

and it clearly depends on the \({\textsc {l}}\) moment constraints used to estimate the truth density. Note that even if \({\mathcal {P}}_{\mathcal {I}}(\pi ^{\textsc {l}},\pi ^{{\textsc {m}}_{{\mathcal {I}},{\textsc {l}}}^*})=0\), there might exist an information barrier \({\mathcal {P}}_{\mathcal {I}}(\pi ,\pi ^{{\textsc {m}}_{{\mathcal {I}},{\textsc {l}}}^*}) = {\mathcal {P}}_{\mathcal {I}}(\pi ,\pi ^{\textsc {l}})\) in the imperfect predictions, which can be reduced if more detailed truth estimates are considered (Majda and Gershgorin 2011a, b; Branicki and Majda 2012c). We now have the following two useful facts:

Fact 1

Consider the best model \({\textsc {m}}_{{\mathcal {I}},{\textsc {l}}}^*\) in (8) for predicting the resolved truth dynamics \(\pmb {u}(t)\) over the time interval \({\mathcal {I}}\). The prediction of the MME with \(\{{\textsc {m}}_i\}\in {\mathcal {M}}\) can be superior to the prediction with \({\textsc {m}}_{{\mathcal {I}},{\textsc {l}}}^*\) unless the density of \({\textsc {m}}_{{\mathcal {I}},{\textsc {l}}}^*\) coincides with the least-biased marginal density \(\pi ^{\textsc {l}}\); i.e., there might exist a set of models \(\{{\textsc {m}}_i\}\in {\mathcal {M}}\) and the corresponding weights \(\{\alpha _i\}\) such that \(\mathfrak {P}^{{\textsc {l}},\textsc {mme},{\textsc {m}}^*_{{\mathcal {I}}}}_{\pmb {\alpha },\mathcal {M},{\mathcal {I}}}<0\) in (7). The same holds in a more general but uncomputable setting for the best model \({\textsc {m}}_{{\mathcal {I}}}^*\) in (4), i.e., there might exist a set of model weights \(\pmb {\alpha }\) such that \(\mathfrak {P}^{\textsc {mme},{\textsc {m}}^*_{{\mathcal {I}}}}_{\pmb {\alpha },\mathcal {M},{\mathcal {I}}}<0\).

Fact 2

Consider the optimal-weight MME for a given set of imperfect models \(\{{\textsc {m}}_i\}\in {\mathcal {M}}\) which is defined relative to the least-biased truth estimate \(\pi ^{\textsc {l}}\) as

where \(\pmb {\alpha }\) is the vector of weights in the MME mixture density (1) containing dynamic models \(\{{\textsc {m}}_i\}\in {\mathcal {M}}\). For a fixed number of constraints \({\textsc {l}}\), the lack of information \({\mathcal {P}}_{\mathcal {I}}(\pi ^{\textsc {l}},\pi ^{{\textsc {mme}}}_{\pmb {\alpha }^*_{{\mathcal {I}},{\textsc {l}}}}) \) corresponds to an information barrier for MME predictions with models \(\{{\textsc {m}}_i\}\in {\mathcal {M}}\) over the time interval \({\mathcal {I}}\). Moreover, if the predictive skill cannot be improved via the MME approach, then \(\pi ^{{\textsc {mme}}}_{\alpha ^*_{{\mathcal {I}},{\textsc {l}}}}=\pi ^{{\textsc {m}}_{{\mathcal {I}},{\textsc {l}}}^*}\), and the information barriers in the single-model and the MME predictions coincide.

Simple justification of the above facts is illustrated in Fig. 2, and it follows immediately from the convexity of the relative entropy in the second argument (e.g., Cover and Thomas 2006)

and the ‘triangle equality’ satisfied by the relative entropy \(\mathcal {P}\) (e.g., Majda et al. (2005)), namely

where \(\pi ^{{\textsc {l}}}\) and \(\pi ^{{\textsc {m}},{\textsc {l}}}\) are, respectively, the least-biased densities associated with the resolved truth and the model dynamics. Fact 1 becomes obvious upon considering the fixed-time configuration sketched in Fig. 2a in the case when \(\mathcal {P}(\pi _t,\pi _t^{\textsc {m}^*})>0\) for the best model \({\textsc {m}}^*\) (or \(\mathcal {P}(\pi _t^\textsc {l},\pi _t^{\textsc {m}^*})>0\) for \({\textsc {m}}^*={\textsc {m}}^*_{\textsc {l}}\)) in the ensemble (extension of these arguments to the whole time interval \({\mathcal {I}}\) is straightforward due to the linearity of integration and the fact that \({\mathcal {P}}\geqslant 0\)). If \(\mathcal {P}_{\mathcal {I}}(\pi ^\textsc {l},\pi _t^{\textsc {m}^*_{\mathcal {I},\textsc {l}}}) =0\) then \(\pi _t=\pi _t^{\textsc {m}^*_{\mathcal {I},\textsc {l}}}\) by the properties of the relative entropy. Fact 2 is established by considering the two possible fixed-time configurations sketched in Fig. 2. In Fig. 2b, the MME information barrier (9) at time \(t\) (red shaded) is the same as that of the single-model prediction and equal to \({\mathcal {P}}(\pi _t^\textsc {l},\pi ^{{\textsc {m}}_*}_t)\), while the MME information barrier of MME in Fig. 2a is reduced to \({\mathcal {P}}(\pi _t^\textsc {l},\alpha ^*\pi ^{{\textsc {m}}^*}_t{+}(1-\alpha ^*) \pi ^{{\textsc {m}}_1}_t)<{\mathcal {P}}(\pi _t^\textsc {l},\pi ^{{\textsc {m}}^*}_t)\). Clearly, the choice of the imperfect models in MME is important for its improved performance over the single model \({\textsc {m}}_{\diamond }\). (Examples of prediction improvement via MME approach without reducing the single-model information barrier are shown in different configurations in Figs. 3, 6, and 8 discussed in the subsequent sections).

Consequences of the convexity of the relative entropy \({\mathcal {P}}\) in (10) which are exploited in considerations of prediction improvement within the MME framework with probability density function \(\pi _t^{{\textsc {mme}}}\) in (1). One-dimensional (left column) and two-dimensional (right column) sketches are shown for a fixed time in order to illustrate two distinct possibilities in MME prediction which depend on the class of available models \({\mathcal {M}}\): a MME can perform better than any individual model in the ensemble \({\mathcal {M}}\) (e.g., \(\pi ^{\textsc {mme}}= \frac{1}{2}(\pi ^{{\textsc {m}}_{\diamond }}+\pi ^{{\textsc {m}}_1})\) see Fact 1), b MME cannot outperform the best single model \({\textsc {m}}^*\) in \({\mathcal {M}}\). In (a), the information barrier in MME prediction is smaller than the barrier in the single-model prediction (see Fact 2). Note also that the error of MME prediction cannot exceed that of the worst model in the ensemble. The configuration in (c) illustrates the information criterion in (12) and the need for introducing the uncertainty parameter \(\varDelta \geqslant 0\) when only the estimates on the prediction error \({\mathcal {P}}(\pi ^{\textsc {l}},\pi ^{{\textsc {m}}_i})\) of individual models \({\textsc {m}}_i\in {\mathcal {M}}\) are known

The above general facts relate to important practical issues in prediction problems, such as:

-

(i)

Assessment of prediction improvement for a given MME containing a finite collection \(\{{\textsc {m}}_i\}\in {\mathcal {M}}\) of imperfect models based solely on the prediction errors \({\mathcal {P}}(\pi _t^{\textsc {l}},\pi _t^{{\textsc {m}}_i})\). Ideally, one would like to improve the MME prediction by optimizing weights the \(\alpha _i\) to minimize \({\mathcal {P}}(\pi _t^{\textsc {l}},\sum _i \alpha _i\pi _t^{{\textsc {m}}_i})\) in (7); however, this requires repeated evaluations of \({\mathcal {P}}\) which might be not feasible for realistic problems.

-

(ii)

Derivation of guidelines for constructing an MME from a given set of imperfect models that would guarantee prediction improvement when only a partial information given by the average fidelity of individual models \({\mathcal {P}}(\pi _t^{\textsc {l}},\pi _t^{{\textsc {m}}_i})\) is available. (This includes equal-weight MME’s which assign the same nonzero weights to a subset of models \({\textsc {m}}_i\in M\subset {\mathcal {M}}\) and neglect the remaining models in \({\mathcal {M}}\backslash M\)).

It turns out that a significant insight into the above issues can be derived within the information-theoretic framework by exploiting the condition (7) and the convexity of the relative entropy in (10) which leads to the following simplified but a practical criterion:

Information Criterion II Consider improving imperfect predictions via the MME approach when only the fidelity \({\mathcal {P}}_{\mathcal {I}}(\pi ^{{\textsc {l}}},\pi ^{{\textsc {m}}_i})\) of individual ensemble members \({\textsc {m}}_i\in {\mathcal {M}}\) can be estimated. MME prediction with models \(\{{\textsc {m}}_i\}\) and weights \(\{\alpha _i\}\) is preferable to single model predictions with \({\textsc {m}}_{\diamond }\in {\mathcal {M}}\) if

where \(\varDelta \geqslant 0\) is the uncertainty parameter and \(\pi ^{{\textsc {l}}}_t\) is the least-biased density maximizing the Shannon’s entropy given \({\textsc {l}}\) constraints, as in (11).

Remarks

-

If the ensemble \(\mathcal {M}\) consists of models in the least-biased form, i.e., \({\textsc {m}}_i = {\textsc {m}}_i^{{\textsc {l}}'}\), \({\textsc {l}}'\leqslant {\textsc {l}}\), considering the prediction errors \(\mathcal {P}_{\mathcal {I}}\) in the condition (12) relative to the least-biased truth estimate \(\pi ^{\textsc {l}}\) is equivalent to considering prediction errors relative to the truth density \(\pi \) due to the identity (11).

-

The uncertainty parameter \(\varDelta \) in (12) plays an important role in the above setup, and it arises as a consequence of the assumption that only the fidelity \({\mathcal {P}}_{\mathcal {I}}(\pi ^{{\textsc {l}}},\pi ^{{\textsc {m}}_i})\) of individual ensemble members is known. For \({\textsc {m}}_{\diamond },{\textsc {m}}_i\in {\mathcal {M}}\), the condition (12) implies that \(0\leqslant {\mathcal {P}}_{\mathcal {I}}( \pi ^\textsc {l},\pi ^{{\textsc {mme}}})\leqslant {\mathcal {P}}_{\mathcal {I}}( \pi ^\textsc {l},\pi ^{{\textsc {m}}_{\diamond }})+\varDelta \) (see “Appendix 1”). For \(\varDelta =0\), the criterion in (12) provides a sufficient condition for prediction improvement which is, however, too restrictive in light of Fact 1 since for \({\textsc {m}}_{\diamond }={\textsc {m}}_{{\mathcal {I}},{\textsc {l}}}^*\) no MME would satisfy it (see Fig. 2c). For \(\varDelta \ne 0\), the condition in (12) is no longer sufficient for reducing the prediction error; however, it allows for a possible improvement in the predictive performance via the MME approach at the risk of increasing the prediction error by a controllable value \(\varDelta \) relative to \({\mathcal {P}}_{\mathcal {I}}(\pi ,\pi ^{{\textsc {m}}_{\diamond }})\) which is also true when \({\textsc {m}}_{\diamond }={\textsc {m}}_{{\mathcal {I}},{\textsc {l}}}^*\) (compare the configurations \(\pi ^{\textsc {mme}}= \alpha \pi ^{{\textsc {m}}^*}+(1-\alpha )\pi ^{{\textsc {m}}_1}\) with \(\pi ^{\textsc {mme}}= \alpha \pi ^{{\textsc {m}}^*}+(1-\alpha )\pi ^{{\textsc {m}}_2}\) when \({\mathcal {P}}(\pi ^{\textsc {l}},\pi ^{{\textsc {m}}_1}) ={\mathcal {P}}(\pi ^{\textsc {l}},\pi ^{{\textsc {m}}_2}) \)). Guidelines for generating the ensemble models and for probing the local geometry of \({\mathcal {P}}_{\mathcal {I}}(\pi ^{{\textsc {l}}},\cdot )\) are presented in Sect. 3.1.4.

-

Note that, in contrast to the non-simplified criterion (7), the criterion (12) only indicates whether or not the MME prediction with given weights \(\pmb {\alpha }\) is likely to be better than the single-model prediction and it should not be used for weight optimization [unlike the non-simplified criterion (7)].

-

The formulation (12) is particularly useful when considering the improvement in the forced response prediction from equilibrium \(\pi _\mathrm{eq}\), since then \(\pi ^{{\textsc {l}}}_{t}\) in (12) can be directly estimated based on the linear response theory and the fluctuation–dissipation formulas which utilize the information from the unperturbed equilibrium (see Sect. 3.1.2 and Majda et al. 2005, 2010b, a; Abramov and Majda 2007; Gritsun et al. 2008; Majda and Gershgorin 2011b). In the case of the initial value problem with uncertain initial conditions, the criterion (12) can be evaluated based on the truth estimates obtained in the hindmost/reanalysis mode (e.g., Kim et al. 2012).

3.1.1 Condition for Improving Imperfect Predictions Via the MME Approach Based on the Least-Biased Density Representation

It turns out that a significant insight can be gained by representing the condition (12) through the least-biased densities of the imperfect models in the MME density (1) which we outline below. Given a probability density \(q\) over a domain \(\Omega \), its least-biased approximation, \(q^{\textsc {l}}\), subject to a set of \({\textsc {l}}\) statistical constraints belongs to the exponential family of densities which maximizes the Shannon entropy \(\mathcal {S} = -\int _\Omega q\ln q\) subject to (see, e.g., Mead and Papanicolaou (1984); Majda et al. (2005))

where \(E_i\) are some functionals on the space of the resolved variables \(\pmb {u}\); here we assume these functionals to be \(i\)th tensor power of \(\pmb {u}\), i.e., \(E_i(\pmb {u}) = \pmb {u}^{\,\otimes \,i}\), so that their expectations yield the components of the first \({\textsc {l}}\) statistical moments of \(\pi \) about the origin. Consequently, the least-biased densities of the truth and of the imperfect models are given by (see, e.g., Mead and Papanicolaou 1984; Abramov and Majda 2004; Majda et al. 2005; Majda and Gershgorin 2010)

where the constraints (13) in (14)a,b are satisfied, respectively, for \(q=\pi \) and \(q=\pi ^{\textsc {m}}\), and the normalization factors \(C_t\) and \(C^{\textsc {m}}_t\) are chosen so that \(\int \pi ^{{\textsc {l}}_1}_t {\mathrm{d}}\pmb {u}=\int \pi ^{{\textsc {m}},{\textsc {l}}_2}_t {\mathrm{d}}\pmb {u}=1\), with \({\textsc {l}}_1\geqslant {\textsc {l}}_2\). While the Gaussian approximations of any density \(\pi \) can always be obtained, existence of \(\pi ^{\textsc {l}}\) for \({\textsc {l}}>2\) is not guaranteed (Mead and Papanicolaou 1984). We denote the expected values of the functionals \(E_i\) in (14) with respect to \(\pi ^{{\textsc {l}}_1}_t\) as \(\bar{E}_i\) and with respect to \(\pi ^{{\textsc {m}},{\textsc {l}}_2}_t\) as \(\bar{E}^{\textsc {m}}_i\); it is convenient to write these expectations in the vector form as

note that \({\pmb {\theta }}_t = {\pmb {\theta }}\big (\bar{\pmb {E}}_t\big )\) and \({\pmb {\theta }}^{{\textsc {m}}}_t = {\pmb {\theta }}^{{\textsc {m}}}\big (\bar{\pmb {E}}^{\textsc {m}}_t\big )\) in (14) so that the normalization factors in the least-biased densities are functions of the time-dependent statistical moments, i.e, \(C_t = C\big (\bar{\pmb {E}}_t\big )\) and \(C^{\textsc {m}}_t = C^{\textsc {m}}\big (\bar{\pmb {E}}^{\textsc {m}}_t\big )\).

Based on the least-biased representations (14) of the truth and model probability densities, the criterion (12) for improvement in imperfect predictions via the MME approach can be written in a form which is particularly suited for further approximations (see “Appendix 1” for a simple proof):

Fact 3

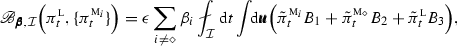

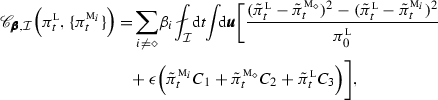

The criterion (12) for improving imperfect predictions via the MME approach with uncertainty \(\varDelta \geqslant 0\) can be expressed in terms of the statistical moments \({\overline{\pmb {E}}},\big \{{\overline{\pmb {E}}}^{{\textsc {m}}_i}\big \}\) of the truth and models as

where

is nonzero only for those model densities which are not in the least-biased form, i.e., if \(\pi ^{{\textsc {m}}_i,{\textsc {l}}_2}_t \ne \pi ^{{\textsc {m}}_i}_t\), and

where the weights \(\beta _i\) are defined in (12) and the vectors of the Lagrange multipliers are given by

Remarks

-

The second term, \(\fancyscript{B}_{\pmb {\beta },{\mathcal {I}}}\), in (16) is independent of the truth density, and it involves only the model densities, \(\pi ^{{\textsc {m}}_i}_t\), in MME.

-

The last term, \(\fancyscript{C}_{\pmb {\beta },{\mathcal {I}}}\), in (16) depends linearly on the expectations, \({\overline{\pmb {E}}}_t\), with respect to the least-biased truth density \(\pi ^{{\textsc {l}}_1}_t\); these can be estimated in the hindcast mode in the initial value problem context or from the ‘fluctuation–dissipation’ formulas when considering improvement in forced response predictions, as discussed below in Sect. 3.1.3.

-

The expected value in \(\fancyscript{A}_{\pmb {\beta },{\mathcal {I}}}\) can be evaluated as long the least-biased approximation, \(\pi ^{{\textsc {l}}_1}_t\), of the truth \(\pi _t\) is known. Moreover, \(\fancyscript{A}_{\pmb {\beta },{\mathcal {I}}}=0\) if the MME contains only least-biased models.

We will exploit the consequences of the above result extensively in the following sections; the main advantage of the above ‘least-biased’ representation of the condition (12) lies in the fact that it depends explicitly and linearly on the statistical moments \({\overline{\pmb {E}}}_t\) of the truth which are, in principle, amenable to approximations and estimates through the fluctuation–dissipation formulas when considering the forced response prediction (see Majda et al. 2005, 2010b, a; Abramov and Majda 2007; Gritsun et al. 2008; Majda and Gershgorin 2010, 2011a, b, as well as Sect. 3.1.3).

3.1.2 Predictive Skill of MME

Here, we represent the general criterion (12) for improving imperfect predictions via the MME approach in the formulation suitable for various time asymptotic estimates in the context of the initial value problem. This is obtained by using the representation (16) in terms of the least-biased densities (14) which provides a formulation that is amenable to practical approximations especially when considering the forced response predictions.

Consider the evolution of the marginal density \(\pi _t\) associated with the truth dynamics on the resolved subspace of variables in the form

which separates the initial statistical conditions from the subsequent evolution of the marginal probability density for the resolved dynamics; the parameter \(\delta \) in (18) is arbitrary at this stage, but it plays the role of an ordering parameter in the time asymptotic considerations discussed later in Sect. 3.2. The mixture density, \(\pi ^{\textsc {mme}}_t\), in (1) associated with a MME of imperfect models \({\textsc {m}}_i\) contained in a class \({\mathcal {M}}\) can be written in the same form as (18) so that

Based on decompositions (18) and (19), evolution of the statistical moments \({\overline{\pmb {E}}}_t,\,{\overline{\pmb {E}}}_t^{{\textsc {m}}_i}\) of the truth and the models can be written as

Consequently, the condition (16) for improving the imperfect predictions via the MME approach can be written in a form which is more amenable to practical estimates.

Fact 4

The condition (16) for improving imperfect predictions via the MME approach with uncertainty \(\varDelta \) can be expressed as

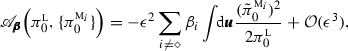

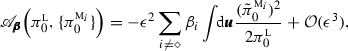

where \(\fancyscript{A}_{\pmb {\beta },{\mathcal {I}}}\) is defined in (17) and

where \(\pmb {\theta }^{{\textsc {m}}_{\diamond }}_t=\pmb {\theta }^{{\textsc {m}}_{\diamond }}_t\! \big (\,{\overline{\pmb {E}}}^{{\textsc {m}}_{\diamond }}_t\big ),\,\pmb {\theta }^{{\textsc {m}}_i}_t=\pmb {\theta }^{{\textsc {m}}_i}_t\! \big (\,{\overline{\pmb {E}}}^{{\textsc {m}}_i}_t\big )\), the weights \(\beta _i\) are defined in (12), and the least-biased truth and model densities are given in (14).

Remarks

-

The evolution of \(\,{\overline{\pmb {E}}}^{{\textsc {m}}_i}_t\) and \({\pmb {\theta }}^{{\textsc {m}}_i}_t\) can be computed directly from the imperfect models.

-

When considering the forced response prediction to perturbations of the attractor dynamics, the expected changes,

, in the truth statistics can be estimated based on the correlations on the unperturbed attractor using the fluctuation–dissipation formulas (e.g., Majda and Gershgorin 2010, 2011a, b). In the context of the initial value problem,

, in the truth statistics can be estimated based on the correlations on the unperturbed attractor using the fluctuation–dissipation formulas (e.g., Majda and Gershgorin 2010, 2011a, b). In the context of the initial value problem,  can be estimated in the hindcast/reanalysis mode (e.g., Kim et al. 2012).

can be estimated in the hindcast/reanalysis mode (e.g., Kim et al. 2012).

3.1.3 Initial Value Problem Versus Forced Response

The framework introduced in Sect. 3.1 applies, in principle, to two seemingly distinct cases: (i) improving imperfect predictions from given non-equilibrium statistical initial conditions and (ii) improving predictions of the response of the truth equilibrium dynamics to external perturbations. Given the decomposition in (20), the similarities and differences between the initial value problem and the forced response prediction can be summarized as follows:

-

For the initial value problem, the initial marginal densities for the resolved dynamics, \(\pi _0\) and \(\pi ^{{\textsc {m}}_i}_t\), correspond to any smooth probability densities with the initial statistics \({\overline{\pmb {E}}}_0\) and \({\overline{\pmb {E}}}^{\textsc {m}}_0\). However, in the case of the forced response prediction, the statistical initial conditions are restricted to the respective equilibrium states, i.e., \(\pi _0 = \pi _\mathrm{eq}\) and \(\pi ^{{\textsc {m}}_i}_{0}=\pi ^{{\textsc {m}}_i}_\mathrm{eq}\), and \({\overline{\pmb {E}}}_0 = {\overline{\pmb {E}}}_\mathrm{eq}\) and \({\overline{\pmb {E}}}^{{\textsc {m}}_i}_{0} = {\overline{\pmb {E}}}^{{\textsc {m}}_i}_\mathrm{eq}\).

-

The fundamental difference between the initial value problem discussed in Sect. 3.1.2 and the forced response prediction lies in the properties of the perturbation terms in the decomposition (18) and the existence of the decomposition (20). In particular,

-

The marginal probability density associated with the evolution of a non-degenerate truth in the initial value problem can always be written in the form (18) and (20). However, the time-dependent terms in (18) and (20) are generally small only for sufficiently short times.

-

In the case of estimating the truth response to external perturbations, the decompositions (18) and (20) apply to non-degenerate hypoelliptic noise (see Hairer and Majda 2010). For sufficiently small external perturbations, the time-dependent perturbations in (18) and (20) remain small for all time. This allows for a practical assessment of the prediction improvement in the forced response via MME through the general conditions (12), (16), or their subsidiaries discussed in Sects. 3.1.4 and 3.1.2 when combined with the linear response theory exploiting the fluctuation–dissipation formulas at the unperturbed equilibrium (see, e.g., Majda et al. 2005; Majda and Wang 2006 for more details).

-

3.1.4 Formal Guidelines for Constructing MME with Superior Predictive Skill Relative to the Single-Model Predictions

Here, we consider a perturbative approach which provides practical guidelines for constructing a useful MME from a single model \({\textsc {m}}_{\diamond }\). As discussed earlier (Fact 1 and Fig. 2), the best single model for making predictions can be inferior to an ensemble of imperfect models which appropriately ‘sample’ the relative entropy landscape \({\mathcal {P}}_{\mathcal {I}}(\pi ^{\textsc {l}},\cdot )\). Such information is inaccessible if only the estimates \({\mathcal {P}}_{\mathcal {I}}(\pi ^{\textsc {l}},\pi ^{{\textsc {m}}_i})\) for individual models \({\textsc {m}}_i\in {\mathcal {M}}\) in the ensemble are available; in such cases the criteria (12) or (16) provide the best possible guidance. However, additional MME improvements can be achieved via testing the local geometry of \({\mathcal {P}}_{\mathcal {I}}(\pi ^{\textsc {l}},\cdot )\) if there exists a possibility of perturbing a parameterized family of models.

First, we note that if a globally parameterized family of imperfect models is available, then the same convexity arguments as those used in Facts 1–3 imply that the MME with densities \(\pi ^{\textsc {m}}_{\epsilon _{\diamond }}, \{\pi ^{\textsc {m}}_{\epsilon _i}\}\) satisfying

will have an improved prediction skill relative to the single-model density \(\pi ^{\textsc {m}}_{\epsilon _{\diamond }}\). The reasons for not choosing the model with the smallest prediction error, \(\min [{\mathcal {P}}_{\mathcal {I}}(\pi ^{\textsc {l}},\pi ^{\textsc {m}}_{\epsilon _{\diamond }}), {\mathcal {P}}_{\mathcal {I}}(\pi ^{\textsc {l}},\pi ^{\textsc {m}}_{\epsilon _i})]\), are analogous to those used in Facts 1 and 3. If there is no global parameterization in the imperfect model class, consider an MME with a mixture density generated by perturbing a single-model density \(\pi ^{{\textsc {m}}_{\diamond }}\) so that for \(\varepsilon \ll 1\)

existence of such perturbed densities \(\pi ^{{\textsc {m}}_i,\varepsilon }_t\) which are non-degenerate (smooth at \(\varepsilon =0\)) was shown to exist under minimal assumptions on the model dynamics in (Hairer and Majda 2010); the interested reader should consult (Majda and Gershgorin 2011a, b; Majda and Wang 2010) for a related treatment of the predictive skill in the single-model configuration.

Based on the decomposition in (23), the evolution of the statistical moments \({\overline{\pmb {E}}}_t^{{\textsc {m}}_i,\varepsilon }\) for the ensemble members can be written as

where

The asymptotic expansions in (24) can be combined with the condition (16) to yield the following:

Fact 5

Consider a MME generated by perturbing a single model \({\textsc {m}}_{\diamond }\) so that the statistical moments \({\overline{\pmb {E}}}^{{\textsc {m}}_i,\varepsilon }_t\) and the coefficients \({\pmb {\theta }}_t^{{\textsc {m}}_i,\varepsilon }\) in the least-biased model densities \(\pi _t^{{\textsc {m}}_i,{\textsc {l}}_2}\) are given by (24). The criterion (16) for improving imperfect predictions via the MME approach with uncertainty \(\varDelta \sim \varepsilon \) can be expressed as

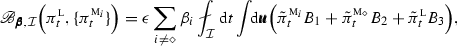

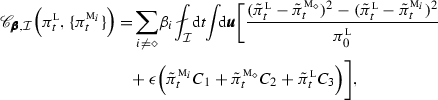

where \(\fancyscript{A}_{\pmb {\beta },{\mathcal {I}}}\) is given by (17) and

where \(\tilde{\pmb {\theta }}^{{\textsc {m}}_{\diamond }}_t\!\!= {\tilde{\pmb {\theta }}}^{{\textsc {m}}_{\diamond }}_t\!\big (\,{\overline{\pmb {E}}}^{{\textsc {m}}_{\diamond }}_t\big ),\,\tilde{\pmb {\theta }}^{{\textsc {m}}_i}_t\!\!={\tilde{\pmb {\theta }}}^{{\textsc {m}}_i}_t \!\big (\,{\overline{\pmb {E}}}^{{\textsc {m}}_{\diamond }}_t\big )\), and the weights \(\beta _i\) are defined in (12).

Remarks

-

The perturbations \({\tilde{\pmb {\theta }}}^{{\textsc {m}}_i}_t\) can be computed directly from the imperfect models \({\textsc {m}}_i\) in MME. The evolution of the truth moments \({\overline{\pmb {E}}}_t\) can be estimated in the hindcast/reanalysis mode in the context of the initial value problem or via the linear response theory and the fluctuation–dissipation formulas when considering the forced response predictions from equilibrium (e.g., Majda and Gershgorin 2010, 2011a, b).

-

The condition (25) simplifies for Gaussian mixture MME discussed in Sect. 3.2 since then \(\fancyscript{A}_{\pmb {\beta },{\mathcal {I}}}=0\).

3.2 Improving Imperfect Predictions Via MME in the Gaussian Framework

The analysis presented in Sect. 3.1–3.1.3 is particularly revealing in the Gaussian framework, i.e., when \({\textsc {l}}_1={\textsc {l}}_2=2\) in (16) or (21), due to the existence of an analytical formula for the relative entropy between two Gaussian densities (e.g., Majda et al. 2005). In such a case, the probability density, \(\pi ^{{\textsc {mme}}}_t\), in (1) of the MME is a Gaussian mixture and \(\fancyscript{A}_{\pmb {\alpha }}=0\) in the conditions (16), (25), and (21). In order to achieve the maximum simplification of the problem while retaining the crucial features of the framework, we assume here that the reduced-order models on the subspace of the resolved variables \(\pmb {u}\in {\mathbb {R}}^K\) for predicting the marginal statistics \(\pi _t\) of the resolved truth dynamics are given by the family of Gaussian Ito SDE’s (e.g., Øksendal 2010) given by

where \(\gamma ^{\textsc {m}}, F^{\textsc {m}},\sigma ^{\textsc {m}}\in {\mathbb {R}}^{K\times K}\) are diagonal matrices with \(\gamma ^{\textsc {m}}_{ii}, \sigma ^{\textsc {m}}_{ii}>0,\,\Vert \pmb {f}\Vert _\infty \leqslant 1\), and \(\pmb {W}_{\!u}(t)\) is a vector-valued Wiener process with independent components, and the mean dynamics and its covariance are given by the well-known formulas

where \(Q = \sigma ^{\textsc {m}}\otimes (\sigma ^{\textsc {m}})^T\). Consequently, the MME density, \(\pi ^{\textsc {mme}}_t\), in (1) is a linear superposition of Gaussian densities with the statistics evolving according to (27)–(28).

Consider now the time-dependent marginal density, \(\pi _t(\pmb {u})\), of the truth on the subspace of resolved variables \(\pmb {u}\in {\mathbb {R}}^K\) so that

As discussed in Sect. 3.1.3, the interpretation of the decomposition in (29) depends on the considered problem. In the context of the initial value problem, \(\pi _{0}\) corresponds to the uncertainty in the initial conditions, and \(\delta \) is an ordering parameter utilized below in short-time asymptotic expansions. When considering the forced response to small external perturbations of the truth equilibrium dynamics, \(\pi _0=\pi _\mathrm{eq}\), and we assume the perturbation in (29) is non-singular so that \(\pi _t\) is smooth at \(\delta =0\) which holds under minimal assumptions outlined in Hairer and Majda 2010. In the Gaussian setting considered here, the decomposition (29) can be used to write the second-order statistics of the truth as

with analogous expressions for the mean \(\pmb {\mu }_t^{{\textsc {m}}_i}\) and covariance \(R_t^{{\textsc {m}}_i}\) of the imperfect Gaussian models (26) in the multi-model ensemble.

The general condition (16) or (21) for improving MME predictions in the Gaussian framework can be easily rewritten in terms of the centered moments, \(\pmb {\mu }_t, R_t,\,\pmb {\mu }_t^{{\textsc {m}}_i}, R_t^{{\textsc {m}}_i}\), as discussed in “Appendix 1”. Here, we first highlight a simpler and more revealing version of this condition in the context of the initial value problem which is valid only at sufficiently short times. (The short-time constraint for the initial value problem arises from the technical requirement that the time-dependent terms in the statistical moments \(\delta {\tilde{\pmb {\mu }}}_t,\delta {\tilde{\pmb {\mu }}}_t^{{\textsc {m}}_i},\,\delta {\tilde{R}}_t,\delta {\tilde{R}}_t^{{\textsc {m}}_i}\) be small; see “Appendix 1”).

Fact 6

Consider the initial value problem and imperfect statistical predictions with Gaussian models \({\textsc {m}}_i\) in (26) with correct initial statistics, i.e., \(\pmb {\mu }^{{\textsc {m}}_i}_{0}=\pmb {\mu }_{0}, \;R^{{\textsc {m}}_i}_0{=}R_0\), and over a sufficiently short time interval \({\mathcal {I}} = [0\;T],\;T\ll 1\) so that \(\delta {\tilde{\pmb {\mu }}}_t,\delta {\tilde{\pmb {\mu }}}_t^{{\textsc {m}}_i},\,\delta {\tilde{R}}_t,\delta {\tilde{R}}_t^{{\textsc {m}}_i}\) remain small. The Gaussian mixture MME provides improved predictions relative to the single-model predictions with \({\textsc {m}}_\diamond \) over the interval \({\mathcal {I}}\) with uncertainty \(\varDelta \) when

where

with the weights \(\beta _i\) defined in (12).

Remarks

-

For an MME containing models (26), underdamped MME with \(\gamma ^{{\textsc {m}}_i}\leqslant \gamma ^{{\textsc {m}}_\diamond }\) helps improve the short-time imperfect predictions (\(E_{\mathcal {I}}>0\)), but it is not sufficient to guarantee the overall skill improvement. The interplays between the truth and model response in \(D_{\mathcal {I}}\) and the truth and model response in the variance in \(F_{\mathcal {I}}\) are both important. Moreover, when the truth response \({\tilde{R}}_t\) in the variance is sufficiently negative, the short-term prediction skill is not improved through the underdamped MME.

-

Even if the short-time condition (31) is satisfied, the medium-range predictive skill of MME might not beat the single model (see Sect. 4.3 for examples).

It turns out that the sufficient condition for improving infinite-time forced response predictions via a Gaussian mixture MME takes an even simpler form than (31) for the initial value problem. This fact follows from invariance of the equilibrium covariance with respect to forcing perturbations in linear Gaussian systems (26), i.e., \({\tilde{R}}_t=0\) in (30), and the fact that under minimal assumptions (Hairer and Majda 2010), the perturbations in the mean, \({\tilde{\mu }}_t\), remain small for all time. Thus, we have the following (see “Appendix 1” for details):

Fact 7

Consider the forced response prediction via a Gaussian mixture MME containing imperfect Gaussian models (26) with correct equilibrium mean and covariance, i.e., \(\pmb {\mu }^{{\textsc {m}}_i}_\mathrm{eq}=\pmb {\mu }_\mathrm{eq}\) and \(R^{{\textsc {m}}_i}_\mathrm{eq}=R_\mathrm{eq}\). The sufficient condition for improving forced response predictions to small external forcing perturbations via MME over the time interval \({\mathcal {I}} = [t_1 \;\;t_1{+}T]\) is independent of the truth covariance response, \({\tilde{R}}_t\), and it is given by

where \(\tilde{\pmb {\mu }}_t\) and \(\tilde{\pmb {\mu }}_t^{{\textsc {m}}_i}\) are, respectively, the perturbations of the truth and model mean from their equilibrium values and \(D_t\) has the same form as in (31) but with \(R_0=R_\mathrm{eq}\).

Remarks

-

The condition (32) for improving the infinite-time forced response can be written as

(33)

(33)where \(\Vert \pmb {\mu }\Vert _R^2 = \pmb {\mu }^\text {T} R \,\pmb {\mu }\) and the weights \(\beta _i\) are defined in (12). The choice of MME satisfying the above condition depends on the interplay between the truth and model response in the mean, and it becomes difficult for \({\tilde{\pmb {\mu }}}_t R_\mathrm{eq}^{-1}{\tilde{\pmb {\mu }}}_t^i<0\); a detailed illustration of this fact is presented in Sect. 4.2.

-

The truth response in the mean \({\tilde{\pmb {\mu }}}_t\) can be estimated from the unperturbed equilibrium based on the linear response theory incorporating fluctuation–dissipation formulas (Majda et al. 2005, 2010b, a; Abramov and Majda 2007; Gritsun et al. 2008; Majda and Gershgorin 2010, 2011a, b).

-

An MME with superior skill for predicting the infinite-time forced response, so that (32) is satisfied for \(t_1\rightarrow \infty ,\,T\rightarrow 0\), the short- or medium-range predictive skill of the same MME might not beat the single model (see examples in Sect. 4.3.3).

-

In a more general setting (see “Appendix 1”) when \(\pi _\mathrm{eq}^{{\textsc {m}}_i}\ne \pi _\mathrm{eq}\) so that \(\pmb {\mu }^{{\textsc {m}}_i}_\mathrm{eq}\ne \pmb {\mu }_\mathrm{eq}\) and \(R^{{\textsc {m}}_i}_\mathrm{eq}\ne R_\mathrm{eq}\), the interplay between the truth and model response in both the mean and covariance is important (see Majda and Gershgorin 2010, 2011a, b for related analysis in the single-model configuration).

The insights gained from the conditions highlighted in facts 8 and 9 and its generalizations presented in “Appendix 1” will be used when interpreting the numerical results in Sect. 4.3.

4 Tests of the Theory for MME Prediction

The goal of any reduced-order prediction technique is to achieve statistically accurate estimates of the evolving truth on the resolved subspace of dynamical variables given uncertain initial data and an imperfect model. MME prediction attempts to accomplish this by combining a collection of reduced-order models, and conditions for the utility of such an approach relative to single model predictions were derived in Sect. 3. Here, in order to illustrate these analytical results, we exploit two classes of exactly solvable stochastic models, described in Sect. 4.1, which are used to generate the ‘truth’ dynamics. In Sect. 4.2, we use these models to provide a cautionary analytical example illustrating the limitations of ad hoc applications of the MME framework in the presence of information barriers (Majda and Branicki 2012c). In Sect. 4.3, we test the information-theoretic criteria derived in Sect. 3 for improving predictions via the MME approach with the help of numerical simulations. While an exhaustive numerical study based on complex numerical models is certainly desirable, it is complementary to our goals and a subject for a separate publication.

4.1 Setup for Studying the Performance of MME Skill Using Exactly Solvable Test Models

Here, we consider two classes of stochastic models which provide the simplest possible setting for illustrating the consequences of interactions between the resolved and unresolved dynamics on the prediction error of reduced-order models. The stochastic dynamics in these models (one Gaussian and one non-Gaussian) may be regarded as an idealization of nonlinear couplings with a ‘bath’ of unresolved degrees of freedom in a much higher dimensional system (see, for example, Majda et al. 2003). The first class of models, described in Sect. 4.1.1, is given by a parameterized family of two-dimensional linear Gaussian systems (Majda and Yuan 2012; Majda 2012; Majda and Branicki 2012c) which linearly couple the ‘resolved’ and ‘unresolved’ dynamics. This revealing setup provides the simplest non-trivial example in which information barriers to improving imperfect dynamical predictions may arise due to neglecting the couplings between the resolved and the unresolved processes (see Majda 2012; Majda and Gershgorin 2011a, b; Majda and Branicki 2012c); we discuss this issue in detail in Sect. 4.2 and Sect. 4.3.3 in the context of MME predictions of the forced response. The nonlinear, non-Gaussian test models, outlined in Sect. 4.1.2 and introduced in (Gershgorin et al. 2010b), allow for incorporating a wealth of dynamical phenomena which are induced by nonlinear multi-scale interactions; these include the intermittent bursts of instability at the resolved scales which are typical of many turbulent regimes in geophysical flows (e.g., Majda 2000; Majda and Lee 2014).

4.1.1 The Two-Dimensional Linear Gaussian System

In this linear Gaussian system with the state vector \(\pmb {x} = (u,\,v)^T\), the ‘resolved’ dynamics \(u(t)\) is linearly coupled to the ‘unresolved’ dynamics, \(v(t)\), according to (see Majda and Yuan 2012; Majda 2012; Majda and Branicki 2012c)

where \(W(t)\) is the scalar Wiener process, and the matrix \(L\) and its eigenvalues \(\lambda _{1,2}\) are

with \(a\) the damping in the resolved dynamics \(u(t),\,A\) the damping in the unresolved dynamics \(v(t)\), and \(q\) the coupling parameter between \(u(t)\) and \(v(t)\). We assume that the deterministic forcing \(F(t)\) acts only in the resolved subspace \(u\) and the stochastic forcing affects directly only the unresolved dynamics \(v(t)\) which is linearly coupled to the resolved dynamics for \(q\ne 0\). Since the system (34) is linear with additive noise, it can be easily shown that it has a Gaussian attractor provided that

so that the stable the equilibrium mean \(\pmb {\mu }_\mathrm{eq} = (\mu ^u_\mathrm{eq}, \;\mu ^v_\mathrm{eq})\) and covariance \(R_\mathrm{eq}\) of (34) are given by

The autocovariance of (34) at equilibrium depends only on the lag, \(\tau \), and it is given by \({\mathcal {C}}_\mathrm{eq}(\tau )= R_\mathrm{eq}\, e^{ L^\text {T}\tau }\) (see Majda and Branicki 2012c for details). Extensions to the non-autonomous case are trivially obtained if the stability conditions (36) are satisfied so that there exists a Gaussian measure on the attractor (see, e.g., Arnold 1998; Majda and Wang 2006) with the attractor mean, \(\pmb {\mu }_\mathrm{att}(t)\equiv {\mathop {\lim }\nolimits _{{t_0\rightarrow -\infty }}} \pmb {\mu }(t, t_0)\), and the same autocovariance as in the autonomous case.

Despite the simplicity of the system (34), there exist distinct regimes of transient dynamics with a stable Gaussian equilibrium satisfying (36); in particular, there exist families in the \(\{a,A,q,\sigma \}\) parameter space with the same marginal statistics of the resolved attractor dynamics (see Majda and Branicki 2012c). This feature of the toy model (34) is important for our purposes since in many applications, the only reliable information that can be extracted from empirical data is the low-order statistics of the resolved dynamics at equilibrium which, in turn, can be often reproduced by many imperfect models. These are exactly the issues considered in a general setting in Sect. 3, and they will be illustrated using numerical tests in Sect. 4.3.

4.1.2 The Nonlinear, Non-Gaussian Model

The non-Gaussian dynamics of the second test model is given by the following nonlinear stochastic system (see Gershgorin et al. 2010b; Branicki et al. 2012; Branicki and Majda 2012b, c; Majda and Branicki 2012c)

where \(W_u\) is a complex Wiener processes with independent components and \(W_\gamma \) is a real Wiener process. The nonlinear system (38), introduced first in a more general form in (Gershgorin et al. 2010b) for filtering multi-scale turbulent signals with hidden instabilities, has a number of desirable properties for testing the skill of MME prediction with reduced-order models. First, it has a surprisingly rich dynamics mimicking signals in various regimes of the turbulent spectrum, including regimes with intermittently positive finite-time Lyapunov exponents due to large-amplitude bursts of instabilities in \(u(t)\) and fat-tailed probability densities for \(u(t)\) (Branicki et al. 2012; Branicki and Majda 2012b, c; Majda and Lee 2014). The equilibrium probability densities in the above regimes have nonzero skewness when \(F\ne 0\) in (38a). Moreover, exact path-wise solutions and exact second-order statistics of this non-Gaussian system can be obtained analytically, as discussed in Gershgorin et al. (2010b).

We consider \(u(t)\) in (38) to be the ‘resolved’ variable which is nonlinearly coupled with the ‘unresolved’ dynamics \(\gamma (t)\) which induces damping fluctuations in the resolved dynamics; this nonlinear coupling is capable of generating a highly non-Gaussian resolved dynamics \(u(t)\) which proved valuable for studying uncertainty quantification and filtering of turbulent dynamical systems (Majda et al. 2010c; Branicki et al. 2012; Branicki and Majda 2012c, b; Majda and Branicki 2012c). It is worth stressing that the stochastic dynamics in (38) may be regarded as an idealization of cumulative effects due to nonlinear couplings with a ‘bath’ of unresolved degrees of freedom in a much higher dimensional system (e.g., Majda et al. 2003). In Sect. 4.3, we will consider numerical tests employing an ensemble of reduced-order Gaussian models for predicting the resolved dynamics \(u(t)\) of the non-Gaussian model (38) with the hidden, unresolved dynamics of \(\gamma (t)\); these tests are used to illustrate the general information-theoretic criteria derived in Sect. 3 and provide insight into additional subtleties associated with MME prediction.

4.2 Information Barriers in MME Prediction

Prediction improvement within the MME framework is not always guaranteed, and it depends on both the choice of the imperfect model ensemble and the nature of the truth dynamics; this fact is apparent in the information criteria derived in Sect. 3.1. The example discussed below represents the simplest non-trivial configuration in which barriers to prediction improvement within the MME framework can arise, and it augments the previous considerations discussed in Majda and Gershgorin (2011a), Majda (2012), Majda and Branicki (2012c) in the context of single-model predictions.

Consider a configuration where the truth dynamics \((u(t),v(t))\) is given by (34) with a stable Gaussian attractor, and the imperfect models for the resolved dynamics \(u^{\textsc {m}}(t)\) are given by the linear Gaussian models (26) with correct marginal equilibrium statistics so that

where the equilibrium mean of the model dynamics (26) and of the resolved truth (34) are given, respectively, in (27)–(28) and (37). The two constraints in (39) imposed on the family of imperfect models (26) with parameters \((\gamma ^{\textsc {m}},\sigma ^{\textsc {m}},F^{\textsc {m}})\) leave a one-parameter family of models with a correct marginal equilibrium statistics parameterized by \(\gamma ^{\textsc {m}}\).

Consider now predictions of the infinite-time response of the truth dynamics to forcing perturbations which change the forcing by \(\delta {\tilde{F}}\) so that the marginal statistics at the new equilibrium of the truth (34) and the model (26) are given by

while the variance of \(u\) and \(u^{\textsc {m}}\) remains unchanged since the considered models are linear and Gaussian. In this case, the condition (32) for improving the infinite-time forced response prediction via the MME approach relative to singe model predictions with \({\textsc {m}}_\diamond \) becomes, at the leading order in \(\delta {\tilde{F}}\),

The above condition implies the existence of two distinct configurations which, similarly to the single-model predictions, are distinguished by the sign of the damping parameter \(A\) in the unresolved truth dynamics in (34). These two scenarios were already sketched in Fig. 2a,b, and we discuss their characteristics below:

-

(i)

No information barrier in the single-model prediction [\({A<0}\) in the unresolved part of the truth (34)] In this case there exists an imperfect model \({\textsc {m}}^*_\infty \) in (26) with

$$\begin{aligned} \gamma ^{{\textsc {m}}^*_\infty }=1/\tau ^{{\textsc {m}}^*_\infty }=-\lambda _1\lambda _2/A>0, \end{aligned}$$(42)which is optimal for doing infinite-time forced response predictions so that \(\underset{t\rightarrow \infty }{\lim }{\mathcal {P}}(\pi ^\delta _{t},\pi ^{{\textsc {m}}^*_\infty ,\,\delta }_{t})=0\) while also satisfying the constraints (39) leading to \({\mathcal {P}}(\pi _\mathrm{eq},\pi ^{{\textsc {m}}^*}_\mathrm{eq})=0\). The following hold in this case:

-

If \({\textsc {m}}_{\diamond }\ne {\textsc {m}}^*_\infty \), the MME approach can improve the infinite-time forced response prediction based on the condition (41); see also Fig. 7 discussed later in Sect. 4.3.3. In particular, the MME skill is improved for any overdamped MME with \(\gamma ^{{\textsc {m}}_i}{\geqslant } \,\gamma ^{{\textsc {m}}_{\diamond }}\) (\(\tau ^{{\textsc {m}}_i}{\leqslant } \,\tau ^{{\textsc {m}}_{\diamond }}\)). If, additionally, \({\textsc {m}}^*_\infty \notin {\mathcal {M}}\), the information barrier in MME can be reduced relative to the \({\textsc {m}}_{\diamond }\) prediction (see also Fig. 2a).

-

If \({\textsc {m}}_{\diamond }={\textsc {m}}_*\), the MME approach cannot improve the infinite-time forced response prediction based on the condition (41), see Fig. 7. The information barrier in MME cannot be reduced relative to the single-model prediction with \({\textsc {m}}_*\) (cf., Fact 2 in Sect. 3.1).

-

-

(ii)

Information barrier in the single-model prediction [\({A>0}\) in the unresolved part of the truth (34)] In this case, the infinite-time forced response prediction is improved (at least) for any MME containing models with \(\gamma ^{{\textsc {m}}_i}\geqslant \gamma ^{{\textsc {m}}_\diamond }\) (\(\tau ^{{\textsc {m}}_i}{\leqslant } \,\tau ^{{\textsc {m}}_{\diamond }}\)). The information barrier in MME prediction of the infinite-time forced response cannot be reduced relative to the single-model prediction, and it is given by

$$\begin{aligned} {\mathcal {P}}(\pi ^\delta _\infty ,\pi ^{{\textsc {m}}^*,\delta }_\infty )=\frac{|\delta {\tilde{F}}|^2}{2 R_\mathrm{eq}}\left( \frac{A}{\lambda _1\lambda _2}\right) ^2, \end{aligned}$$(43)which is achieved only when \(\gamma ^{{\textsc {m}}^*}{\rightarrow }\,\infty \); this situation represents one instance of the configuration depicted schematically in Fig. 2b (see also Figs. 8, 7, 1c for analogous situation in the context initial value problem). Recall that the information barrier in MME prediction utilizing the class of models \({\mathcal {M}}\) corresponds to model error of the optimal-weight MME (9).

This revealing example of the MME skill for forced response prediction and the associated information barriers is examined further in Sect. 4.3.3 in the case of prediction over a finite time interval, where it is shown that additional information barriers can arise if MME consists of a finite number of models.

4.3 Numerical Examples

The goal of this section is twofold. First, we illustrate the general information-theoretic criteria derived in Sect. 3 for improving imperfect predictions via the MME approach with the help of numerical simulations based on the exactly solvable stochastic test models introduced in Sect. 4.1. We stress again that while an exhaustive numerical study based on complex models is desirable, it is complementary to our goals and a subject for a separate publication. The second aim is to illustrate, in a controlled setting, differences between the single-model prediction and the MME prediction under additional constraints which arise in applications. In practice, imperfect models are often approximately tuned to the marginal equilibrium statistics of the resolved dynamics which is often the only reliable source of information. However, such a tuning procedure does not necessarily reduce the prediction error in the transient dynamics or in the response to forced perturbations from equilibrium (e.g., Majda and Gershgorin 2011a, b; Branicki and Majda 2012c; Majda and Branicki 2012c). The numerical examples studied below highlight the differences between the MME structure providing improved short- and medium-range predictions (see also “Appendix 2”). Thus, apart from validating the analytical estimates of Sect. 3, particular emphasis in this section is on the following issues:

-

How significant are the differences between the optimal-weight and equal-weight MME prediction?

-

Are MME’s with good short prediction skill likely to have good medium-range prediction skill?

These themes appear recurrently throughout the remaining sections.

4.3.1 Tuning Reduced-Order Models in the Multi-Model Ensemble

In the numerical examples discussed below, the MME density, \(\pi ^{\textsc {mme}}_t\) (1), is a Gaussian mixture involving the imperfect model densities, \(\pi ^{{\textsc {m}}_i}_{t}\), associated with the class \({\mathcal {M}}\) of linear Gaussian models (34) with correct marginal equilibrium statistics for the resolved dynamics. This setting reflects the fact that the marginal equilibrium mean and covariance, \(\langle u \rangle _\mathrm{eq},\,\hbox {Var}_\mathrm{eq}[u]\), of the resolved truth dynamics can be estimated from measurements. The following result (see Majda and Branicki 2012c) provides the basis for tuning the marginal equilibrium statistics of the imperfect models in a MME:

Proposition 1

Consider the linear Gaussian dynamics in (26) with coefficients \(\big \{\gamma ^{\textsc {m}},\sigma ^{\textsc {m}},F^{\textsc {m}}\big \}\) and constant forcing. Provided that \(\gamma ^{\textsc {m}}>0\), the equilibrium statistics of (26) is controlled by two parameters

which correspond, respectively, to the model mean and variance. There exists is a one-parameter family of models (26) with correct marginal equilibrium statistics of the resolved truth dynamics \(u(t)\) with

where \(\gamma ^{\textsc {m}}\) is a free parameter and \(\mathbb {E}_\mathrm{eq} [u]\) and \(\mathrm{Var}_\mathrm{eq}[u]\) denote the marginal equilibrium mean and variance of the resolved truth dynamics.

The class of imperfect models with correct marginal equilibrium statistics and correct statistics of the initial conditions is given by

where \({\mathcal {P}}\) is the relative entropy (2). Given the constraints on the initial conditions and the equilibrium model densities in the family \({\mathcal {M}}\), there is one free parameter left in the models (26) which we choose to be the correlation time \(\tau ^{{\textsc {m}}_i}=1/\gamma ^{{\textsc {m}}_i}\). Therefore, the mixture density (1) of the MME can be written as

which is parameterized by the weights \(\pmb {\alpha }\equiv [\alpha _1, \ldots , \alpha _I]\) and the distribution of the correlation times denoted by \([\tau ]\); here, we assume that \([\tau ]\) is given by a vector of correlation times evenly distributed between \(\tau _\mathrm{min}\) and \(\tau _\mathrm{max}\)

and that \(\tau ^\mathrm{trth}\in [\tau ]\) denotes the correct correlation time of the marginal dynamics \(u(t)\) in (38). In general, the Gaussian Itô diffusions in (26) cannot reproduce the marginal two-point equilibrium statistics of the true resolved dynamics (see Majda and Branicki 2012c for details). However, there exists a linear Gaussian model (26) with the correct correlation time, \(\tau ^{\textsc {m}}= \tau ^\mathrm{trth}\), where

In the analysis of Sects. 4.2 and 4.3, we will assume that the single-model predictions are carried out using a model with correct correlation time for the resolved dynamics; this setup is justified by the fact that the correlation time estimates are usually the next easiest quantity to estimate from measurements, apart from the mean and covariance. Finally, we adopt the following characterization of the ensemble structure:

The statistical accuracy of the imperfect dynamical predictions is assessed using two information measures (Giannakis et al. 2012; Giannakis and Majda 2012a, b; Branicki and Majda 2012c; Majda and Branicki 2012c) exploiting the relative entropy (2), namely:

-

(i)

the model error

$$\begin{aligned} \fancyscript{E}^{\textsc {m}}_t={\mathcal {P}}\left( \pi _t(u),\pi ^{{\textsc {m}}}_t(u)\right) , \qquad \fancyscript{E}^{\textsc {mme}}_t={\mathcal {P}}\left( \pi _t(u),\pi ^{{\textsc {mme}}}_t(u)\right) , \end{aligned}$$(51) -

(ii)

the internal prediction skill

$$\begin{aligned} \fancyscript{I}_t= & {} {\mathcal {P}}\left( \pi _t(u),\pi _\mathrm{eq}(u)\right) , \qquad \fancyscript{I}^{\textsc {m}}_t={\mathcal {P}}\left( \pi _t^{\textsc {m}}(u),\pi ^{{\textsc {m}}}_\mathrm{eq}(u)\right) ,\nonumber \\ \fancyscript{I}^{\textsc {mme}}_t= & {} {\mathcal {P}}\left( \pi _t^{\textsc {mme}}(u),\pi ^{{\textsc {mme}}}_t(u)\right) , \end{aligned}$$(52)for the truth, a single model \({\textsc {m}}\), and an MME relative to their respective equilibria. Note that the information criteria derived in Sect. 3.1 focus on the model error part in the overall predictive skill which combines (i) and (ii) (Giannakis et al. 2012; Giannakis and Majda 2012a, b; Branicki and Majda 2012c; Majda and Branicki 2012c). When examining the mitigation of prediction error via the MME approach, it is sufficient to consider the measure in (i) above. However, in the following tests, we show the evolution of the internal prediction skill alongside the model error in order to motivate future generalizations of this approach to account for the overall prediction skill.

4.3.2 Tests of MME Prediction in the Context of Initial Value Problem

Here, we use the test models described in Sects. 4.1.1–4.1.2 in order to provide a more complete picture of the MME prediction and augment the analytical results of Sect. 3 with simple numerical simulations. Particular focus is on the issues raised at the beginning of Sect. 4.3 which are not easily tractable analytically; these include differences between the equal-weight and optimal-weight ensembles, information barriers, and the change in structure in the optimal-weight ensemble depending on the prediction horizon.