Abstract

In this paper, we investigate the open-loop and weak closed-loop solvabilities of stochastic linear quadratic (LQ, for short) optimal control problems for Markovian regime switching systems. Interestingly, these two solvabilities are equivalent on [0, T). We first provide an alternative characterization of the open-loop solvability of the LQ problem using a perturbation approach. Then, we study the weak closed-loop solvability of the LQ problem for Markovian regime switching systems and establish the equivalence between open-loop and weak closed-loop solvabilities. Finally, we present an example to shed light on finding weak closed-loop optimal strategies within the framework of Markovian regime switching systems.

1 Introduction

Linear quadratic (LQ, for short) optimal control can be traced back to the works of Kalman [13] for the deterministic case and Wonham [25] for the stochastic case (see also [2, 6, 28] and the references therein). In the classical setting, under some mild conditions on the weighting coefficients in the cost functional, such as positive definiteness of the control weighting matrix, stochastic LQ optimal control problems can be solved elegantly via the Riccati equation approach (see [28, Chapter 6]). Chen et al. [3] studied stochastic LQ optimal control problems with an indefinite control weighting matrix, as well as financial applications such as continuous-time mean-variance portfolio selection problems (see [17, 35]). Since then, there has been increasing interest in the so-called indefinite stochastic LQ optimal control (see [1, 16]).

State systems involving random jumps, such as Poisson jumps or regime switching jumps, are of interest and importance in various fields such as engineering, management, finance, and economics. For example, Wu and Wang [26] considered stochastic LQ optimal control problems with Poisson jumps and obtained the existence and uniqueness of the solution to the deterministic Riccati equation. Using the technique of completing squares, Hu and Oksendal [10] discussed the stochastic LQ optimal control problem with Poisson jumps and partial information. Yu [29] investigated a kind of infinite horizon backward stochastic LQ optimal control problems. Li et al. [14] solved the indefinite stochastic LQ optimal control problem with Poisson jumps. Meanwhile, there has been dramatically increasing interest in studying this family of stochastic control problems as well as their financial applications; see, for example, [7, 8, 9, 19, 21, 23, 27, 31, 33, 34, 36]. Moreover, [11, 12] formulated a class of continuous-time LQ optimal controls with Markovian jumps. Zhang and Yin [30] developed hybrid controls of a class of LQ systems modulated by a finite-state Markov chain. Li et al. [16] initiated indefinite stochastic LQ optimal controls with regime switching jumps. Liu et al. [18] considered near-optimal controls of regime switching LQ problems with indefinite control weight costs. For other recent developments concerning regime switching jumps, see [4, 5, 15].

Recently, Sun and Yong [22] investigated two-person zero-sum stochastic LQ differential games. It was shown in [22] that the open-loop solvability is equivalent to the existence of an adapted solution to a forward-backward stochastic differential equation (FBSDE, for short) with constraint, and that the closed-loop solvability is equivalent to the existence of a regular solution to the Riccati equation. As a continuation of [22], the work [20] studied the open-loop and closed-loop solvabilities for stochastic LQ optimal control problems. Moreover, the equivalence between the strongly regular solvability of the Riccati equation and the uniform convexity of the cost functional was established. Wang et al. [24] introduced the notion of weak closed-loop optimal strategy for LQ problems and showed that its existence is equivalent to the open-loop solvability of the LQ problem. Zhang et al. [32] studied the open-loop and closed-loop solvabilities for stochastic LQ optimal control problems with Markovian regime switching jumps, and established the equivalence between the strongly regular solvability of the Riccati equation and the uniform convexity of the cost functional in the setting of Markovian regime switching systems. In this paper, we further study the weak closed-loop solvability of stochastic LQ optimal control problems for Markovian regime switching systems. In order to present our work more clearly, we describe the problem in detail.

Let \((\Omega ,{{{\mathcal {F}}}},{\mathbb {F}},{\mathbb {P}})\) be a complete filtered probability space on which a standard one-dimensional Brownian motion \(W=\{W(t); 0\leqslant t < \infty \}\) and a continuous time, finite-state, Markov chain \(\alpha =\{\alpha (t); 0\leqslant t< \infty \}\) are defined, where \({\mathbb {F}}=\{{{{\mathcal {F}}}}_t\}_{t\geqslant 0}\) is the natural filtration of W and \(\alpha \) augmented by all the \({\mathbb {P}}\)-null sets in \({{{\mathcal {F}}}}\), and \({\mathbb {F}}^\alpha =\{{{{\mathcal {F}}}}_t^\alpha \}_{t\geqslant 0}\) is the filtration generated by \(\alpha \), with the related expectation \({\mathbb {E}}^\alpha \). We identify the state space of the chain \(\alpha \) with a finite set \({{{\mathcal {S}}}}\triangleq \{1, 2 \ldots , D\}\), where \(D\in {\mathbb {N}}\) and suppose that the chain is homogeneous and irreducible. Let \(0\leqslant t<T\) and consider the following controlled Markovian regime switching linear stochastic differential equation (SDE, for short) over a finite time horizon [t, T]:

where \(A,C:[0,T]\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{n\times n}\) and \(B,D:[0,T]\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{n\times m}\) are given deterministic functions, called the coefficients of the state Eq. (1.1); \(b,\sigma :[0,T]\times \Omega \rightarrow {\mathbb {R}}^n\) are \({\mathbb {F}}\)-progressively measurable processes, called the nonhomogeneous terms; and \((t,x,i)\in [0,T)\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\) is called the initial pair. In the above, the process \(u(\cdot )\), which belongs to the following space:

is called the control process, and the solution \(X(\cdot )\) of (1.1) is called the state process corresponding to (t, x, i) and \(u(\cdot )\). To measure the performance of the control \(u(\cdot )\), we introduce the following quadratic cost functional:

where \(G(i)\in {\mathbb {R}}^{n\times n}\) is a symmetric constant matrix, and g(i) is an \({{{\mathcal {F}}}}_T\)-measurable random variable taking values in \({\mathbb {R}}^n\), with \(i\in {{{\mathcal {S}}}}\); \(Q:[0,T]\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{n\times n}\), \(S:[0,T]\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{m\times n}\) and \(R:[0,T]\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{m\times m}\) are deterministic functions with both Q and R being symmetric; \(q:[0,T]\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{n}\) and \(\rho :[0,T]\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{m}\) are deterministic functions. In the above, \(M^\top \) stands for the transpose of a matrix M. The problem that we are going to study is the following:

Problem (M-SLQ). For any given initial pair \((t,x,i)\in [0,T)\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\), find a control \(u^{*}(\cdot )\in {\mathcal {U}}[t,T]\), such that

The above is called a stochastic linear quadratic optimal control problem of the Markovian regime switching system. Any \(u^{*}(\cdot )\in {\mathcal {U}}[t,T]\) satisfying (1.3) is called an open-loop optimal control of Problem (M-SLQ) for the initial pair (t, x, i); the corresponding state process \(X(\cdot )=X(\cdot \ ;t,x,i,u^*(\cdot ))\) is called an optimal state process; and the function \(V(\cdot ,\cdot ,\cdot )\) defined by

is called the value function of Problem (M-SLQ). Note that in the special case when \(b(\cdot ,\cdot ),\sigma (\cdot ,\cdot ),g(\cdot ),q(\cdot ,\cdot ),\rho (\cdot ,\cdot )=0\), the state Eq. (1.1) and the cost functional (1.2), respectively, become

and

We refer to the problem of minimizing (1.6) subject to (1.5) as the homogeneous LQ problem associated with Problem (M-SLQ), denoted by Problem (M-SLQ)\(^0\). The corresponding value function is denoted by \(V^0(t,x,i)\). Moreover, when all the coefficients of (1.1) and (1.2) are independent of the regime switching term \(\alpha (\cdot )\), the corresponding problem (1.3) is called Problem (SLQ).
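For orientation, the state equation (1.1) and the cost functional (1.2) can be sketched in the following standard form. The state equation is read off from the closed-loop system (2.5) with a generic control \(u(\cdot )\) in place of the feedback outcome. The arrangement of the cost functional (in particular the factor 2 on the cross and linear terms) is an assumption based on the coefficient dimensions stated above, not a quotation of the original display:

$$\begin{aligned} \left\{ \begin{array}{l} dX(s)=\big [A(s,\alpha (s))X(s)+B(s,\alpha (s))u(s)+b(s)\big ]ds+\big [C(s,\alpha (s))X(s)+D(s,\alpha (s))u(s)+\sigma (s)\big ]dW(s),\quad s\in [t,T],\\ X(t)=x,\quad \alpha (t)=i, \end{array}\right. \end{aligned}$$

$$\begin{aligned} J(t,x,i;u(\cdot ))&={\mathbb {E}}\Big \{\big \langle G(\alpha (T))X(T),X(T)\big \rangle +2\big \langle g(\alpha (T)),X(T)\big \rangle \\&\quad +\int _t^T\Big [\big \langle Q(s,\alpha (s))X(s),X(s)\big \rangle +2\big \langle S(s,\alpha (s))X(s),u(s)\big \rangle +\big \langle R(s,\alpha (s))u(s),u(s)\big \rangle \\&\quad +2\big \langle q(s,\alpha (s)),X(s)\big \rangle +2\big \langle \rho (s,\alpha (s)),u(s)\big \rangle \Big ]ds\Big \}. \end{aligned}$$

Setting \(b,\sigma ,g,q,\rho \) to zero in this sketch leaves only the terms that are linear in the state and quadratic in the cost, which is the homogeneous problem described above.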

Following the works [20, 22], Zhang et al. [32] investigated the open-loop and closed-loop solvabilities for stochastic LQ problems of Markovian regime switching systems. It was shown that the open-loop solvability of Problem (M-SLQ) is equivalent to the solvability of a forward-backward stochastic differential equation with constraint. They also showed that the closed-loop solvability of Problem (M-SLQ) is equivalent to the existence of a regular solution of the following general Riccati equation (GRE, for short):

where

It can be found (see [32]) that, for the stochastic LQ optimal control problem of a Markovian regime switching system, the existence of a closed-loop optimal strategy implies the existence of an open-loop optimal control, but not vice versa. Thus, there are some LQ problems that are open-loop solvable but not closed-loop solvable. For such problems, the associated GRE (1.7) admits no regular solution, and therefore a state feedback representation of the open-loop optimal control obtained from the GRE might be impossible. To make this concrete, let us look at the following simple example.

Example 1.1

Consider the following one-dimensional state equation

and the nonnegative cost functional

In this example, the GRE reads (noting that \(Q(\cdot ,i)=0,R(\cdot ,i)=0,D(\cdot ,i)=0\) for every \(i\in {{{\mathcal {S}}}}\), and \(0^{-1}=0\)):

It is not hard to check that GRE (1.8) has no regular solution (see Sect. 3 for the definition of regular solution), thus the corresponding LQ problem is not closed-loop solvable. A usual Riccati equation approach specifies the corresponding state feedback control as follows (noting that \(Q(\cdot ,i)=0,R(\cdot ,i)=0,D(\cdot ,i)=0\) for every \(i\in {{{\mathcal {S}}}}\), and \(0^{-1}=0\)):

which is not an open-loop optimal control for any nonzero initial state x. In fact, let \((t,x,i)\in [0,1)\times {\mathbb {R}}\times {{{\mathcal {S}}}}\) be an arbitrary but fixed initial pair with \(x\ne 0\). By Itô’s formula, the state process \(X^*(\cdot )\) corresponding to (t, x, i) and \(u^*(\cdot )\) is expressed as

Thus,

On the other hand, let \({\bar{u}}(\cdot )\) be the control defined by

By the variation of constants formula, the state process \({\bar{X}}(\cdot )\) corresponding to (t, x, i) and \({\bar{u}}(\cdot )\) can be represented as

which satisfies \({\bar{X}}(1)=0\). Hence,

Since the cost functional is nonnegative, the open-loop control \({\bar{u}}(\cdot )\) is optimal for the initial pair (t, x, i), but \(u^*(\cdot )\) is not optimal.

The above example suggests that the usual solvability of the GRE (1.7) is no longer helpful in handling the open-loop solvability of certain stochastic LQ problems. It is then natural to ask: When Problem (M-SLQ) is merely open-loop solvable, but not closed-loop solvable, is it still possible to obtain a linear state feedback representation for an open-loop optimal control within the framework of Markovian regime switching systems? The goal of this paper is to tackle this problem.

The contribution of this paper is to study the weak closed-loop solvability of stochastic LQ optimal control problems for Markovian regime switching systems. In detail, we provide an alternative characterization of the open-loop solvability of Problem (M-SLQ) using the perturbation approach adopted in [20]. In order to obtain a linear state feedback representation of the open-loop optimal control for Problem (M-SLQ), we introduce the notion of weak closed-loop strategies in the setting of Markovian regime switching systems. We prove that as long as Problem (M-SLQ) is open-loop solvable, there always exists a weak closed-loop strategy whose outcome is an open-loop optimal control. Consequently, the open-loop and weak closed-loop solvabilities of Problem (M-SLQ) are equivalent on [0, T). Compared with [24], this paper extends the results of [24] to stochastic LQ optimal control problems with Markovian regime switching, which could be applied to financial market models in which quantities such as interest rates, stock returns, and volatility are driven by a Markov process. However, the regime switching jumps bring some difficulties. The first is how to define the closed-loop and weak closed-loop solvabilities in the setting of Markovian regime switching systems. The second is how to prove the equivalence between the open-loop and weak closed-loop solvabilities of Problem (M-SLQ) in this setting. We will use the methods of [20, 24, 32] to overcome these difficulties.

The rest of the paper is organized as follows. In Sect. 2, we collect some preliminary results and introduce a few elementary notions for Problem (M-SLQ). Section 3 is devoted to the study of open-loop solvability by a perturbation method. In Sect. 4, we show how to obtain a weak closed-loop optimal strategy and establish the equivalence between open-loop and weak closed-loop solvability. Finally, an example is presented in Sect. 5 to illustrate the results we obtained.

2 Preliminaries

Throughout this paper, as in the previous section, let \((\Omega ,{{{\mathcal {F}}}},{\mathbb {F}},{\mathbb {P}})\) be a complete filtered probability space on which a standard one-dimensional Brownian motion \(W=\{W(t); 0\leqslant t < \infty \}\) and a continuous time, finite-state, Markov chain \(\alpha =\{\alpha (t); 0\leqslant t< \infty \}\) are defined, where \({\mathbb {F}}=\{{{{\mathcal {F}}}}_t\}_{t\geqslant 0}\) is the natural filtration of W and \(\alpha \) augmented by all the \({\mathbb {P}}\)-null sets in \({{{\mathcal {F}}}}\). In the rest of our paper, we will use the following notation:

and for an \({\mathbb {S}}^n\)-valued function \(F(\cdot )\) on [t, T], we use the notation \(F(\cdot )\gg 0\) to indicate that \(F(\cdot )\) is uniformly positive definite on [t, T], i.e., there exists a constant \(\delta >0\) such that

Next, for any \(t\in [0,T)\) and Euclidean space \({\mathbb {H}}\), we further introduce the following spaces of functions and processes:

and

Now we start to formulate our system. We identify the state space of the chain \(\alpha \) with a finite set \({{{\mathcal {S}}}}\triangleq \{1, 2 \ldots , D\}\), where \(D\in {\mathbb {N}}\) and suppose that the chain is homogeneous and irreducible. To specify statistical or probabilistic properties of the chain \(\alpha \), for \(t\in [0,\infty )\), we define the generator \(\lambda (t)\triangleq [\lambda _{ij}(t)]_{i, j = 1, 2, \ldots , D}\) of the chain under \({\mathbb {P}}\). This is also called the rate matrix, or the Q-matrix. Here, for each \(i, j = 1, 2, \ldots , D\), \(\lambda _{ij}(t)\) is the constant transition intensity of the chain from state i to state j at time t. Note that \(\lambda _{ij}(t) \geqslant 0\), for \(i \ne j\) and \(\sum ^{D}_{j = 1} \lambda _{ij}(t) = 0\), so \(\lambda _{ii}(t) \leqslant 0\). In what follows for each \(i, j = 1, 2, \ldots , D\) with \(i \ne j\), we suppose that \(\lambda _{ij}(t) > 0\), so \(\lambda _{ii}(t) < 0\). For each fixed \(i,j = 1, 2, \ldots , D\), let \(N_{ij}(t)\) be the number of jumps from state i into state j up to time t and set

Then for each \(i,j=1,2,\ldots , D\), the term \({\widetilde{N}}_{ij} (t)\) defined as follows is an \(({\mathbb {F}}, {\mathbb {P}})\)-martingale:
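For \(i\ne j\), a standard way to write this compensated process is the following. It is stated here as an assumption about the usual construction for a chain with generator \(\lambda (\cdot )\), not as a quotation of the original display, and the indicator \({\mathbf {1}}_{\{\alpha (s-)=i\}}\) is our notation:

$$\begin{aligned} {\widetilde{\lambda }}_{ij}(t)\triangleq \int _0^t\lambda _{ij}(s){\mathbf {1}}_{\{\alpha (s-)=i\}}ds,\qquad {\widetilde{N}}_{ij}(t)\triangleq N_{ij}(t)-{\widetilde{\lambda }}_{ij}(t). \end{aligned}$$

In words, the jump count from state i to state j is reduced by the intensity accumulated over the time the chain has spent in state i.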

To guarantee the well-posedness of the state Eq. (1.1), we adopt the following assumption:

(H1) For every \(i\in {{{\mathcal {S}}}}\), the coefficients and nonhomogeneous terms of (1.1) satisfy

The following result, whose proof is similar to that of [22, Proposition 2.1], establishes the well-posedness of the state equation under the assumption (H1).

Lemma 2.1

Under the assumption (H1), for any initial pair \((t,x,i)\in [0,T)\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\) and control \(u(\cdot )\in {{{\mathcal {U}}}}[t,T]\), the state Eq. (1.1) has a unique adapted solution \(X(\cdot )\equiv X(\cdot \ ;t,x,i,u(\cdot ))\). Moreover, there exists a constant \(K>0\), independent of (t, x, i) and \(u(\cdot )\), such that

To ensure that the random variables in the cost functional (1.2) are integrable, we assume the following holds:

(H2) For every \(i\in {{{\mathcal {S}}}}\), the weighting coefficients in the cost functional (1.2) satisfy

Remark 2.2

Suppose that (H1) holds. Then according to Lemma 2.1, for any initial pair \((t,x,i)\in [0,T)\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\) and control \(u(\cdot )\in {{{\mathcal {U}}}}[t,T]\), the state Eq. (1.1) admits a unique (strong) solution \(X(\cdot )\equiv X(\cdot ;t,x,i,u(\cdot ))\) which belongs to the space \(L_{\mathbb {F}}^2(\Omega ;C([t,T];{\mathbb {H}}))\). In addition, if (H2) holds, then the random variables on the right-hand side of (1.2) are integrable, and hence Problem (M-SLQ) is well-posed.

Let us recall some basic notions of stochastic LQ optimal control problems.

Definition 2.3

(Open-loop) Problem (M-SLQ) is said to be

(i) (uniquely) open-loop solvable for an initial pair \((t,x,i)\in [0,T]\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\) if there exists a (unique) \(u^*(\cdot )=u^*(\cdot \ ;t,x,i)\in {{{\mathcal {U}}}}[t,T]\) (depending on (t, x, i)) such that

$$\begin{aligned} J(t,x,i;u^{*}(\cdot ))\leqslant J(t,x,i;u(\cdot )),\qquad \forall u(\cdot )\in {{{\mathcal {U}}}}[t,T]. \end{aligned}$$(2.2)

Such a \(u^*(\cdot )\) is called an open-loop optimal control for (t, x, i).

(ii) (uniquely) open-loop solvable if it is (uniquely) open-loop solvable for all the initial pairs \((t,x,i)\in [0,T]\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\).

Definition 2.4

(Closed-loop) Let \(\Theta :[t,T]\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{m\times n}\) be a deterministic function and \(v:[t,T]\times \Omega \rightarrow {\mathbb {R}}^m\) be an \({\mathbb {F}}\)-progressively measurable process.

(i) We call \((\Theta (\cdot ,\cdot ),v(\cdot ))\) a closed-loop strategy on [t, T] if

$$\begin{aligned} {\mathbb {E}}\int _t^T|\Theta (s,\alpha (s))|^2ds<\infty ,\quad \hbox {and}\quad {\mathbb {E}}\int _t^T|v(s)|^2ds<\infty . \end{aligned}$$(2.3)

The set of all closed-loop strategies \((\Theta (\cdot ,\cdot ),v(\cdot ))\) on [t, T] is denoted by \({\mathscr {C}}[t,T]\).

(ii) A closed-loop strategy \((\Theta ^*(\cdot ,\cdot ),v^*(\cdot ))\in {\mathscr {C}}[t,T]\) is said to be optimal on [t, T] if

$$\begin{aligned}&J(t,x,i;\Theta ^*(\cdot ,\alpha (\cdot ))X^*(\cdot )+v^*(\cdot ))\leqslant J(t,x,i;\Theta (\cdot ,\alpha (\cdot ))X(\cdot )+v(\cdot )),\nonumber \\&\quad \forall (x,i)\in {\mathbb {R}}^n\times {{{\mathcal {S}}}},\quad \forall (\Theta (\cdot ,\cdot ),v(\cdot ))\in {\mathscr {C}}[t,T], \end{aligned}$$(2.4)

where \(X^*(\cdot )\) is the solution to the closed-loop system under \((\Theta ^*(\cdot ,\cdot ),v^*(\cdot ))\):

$$\begin{aligned} \left\{ \negthinspace \negthinspace \begin{array}{ll} &{}dX^*(s)=\Big \{\big [A(s,\alpha (s))+B(s,\alpha (s))\Theta ^*(s,\alpha (s))\big ]X^*(s)+B(s,\alpha (s))v^*(s)+b(s)\Big \}ds\\ &{}\qquad \quad \ +\Big \{\big [C(s,\alpha (s))+D(s,\alpha (s))\Theta ^*(s,\alpha (s))\big ]X^*(s)\\ &{}\qquad \quad \ +D(s,\alpha (s))v^*(s)+\sigma (s)\Big \}dW(s),\qquad s\in [t,T],\\ &{} X^*(t)=x,\quad ~\alpha (t)=i, \end{array}\right. \end{aligned}$$(2.5)

and \(X(\cdot )\) is the solution to the closed-loop system (2.5) corresponding to \((\Theta (\cdot ,\cdot ),v(\cdot ))\).

(iii) For any \(t\in [0,T)\), if a closed-loop optimal strategy (uniquely) exists on [t, T], Problem (M-SLQ) is said to be (uniquely) closed-loop solvable.

Remark 2.5

We emphasize that, in the above definition, \(\Theta \) is a deterministic function, and in (2.3) the randomness of \(\Theta (\cdot ,\alpha (\cdot ))\) comes from \(\alpha (\cdot )\). Moreover, (2.4) must be true for all \((x,i)\in {\mathbb {R}}^n\times {{{\mathcal {S}}}}\). The same remark applies to the definition below.

Definition 2.6

(Weak closed-loop) Let \(\Theta :[t,T]\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{m\times n}\) be a deterministic function and \(v:[t,T]\times \Omega \rightarrow {\mathbb {R}}^m\) be an \({\mathbb {F}}\)-progressively measurable process such that for any \(T'\in [t,T)\),

(i) We call \((\Theta (\cdot ,\cdot ),v(\cdot ))\) a weak closed-loop strategy on [t, T) if for any initial state \((x,i)\in {\mathbb {R}}^n\times {{{\mathcal {S}}}}\), the outcome \(u(\cdot )\equiv \Theta (\cdot ,\alpha (\cdot ))X(\cdot )+v(\cdot )\) belongs to \({{{\mathcal {U}}}}[t,T]\equiv L^2_{{\mathbb {F}}}(t,T;{\mathbb {R}}^m)\), where \(X(\cdot )\) is the solution to the weak closed-loop system:

$$\begin{aligned} \left\{ \negthinspace \negthinspace \begin{array}{ll} &{} dX(s)=\Big \{\big [A(s,\alpha (s))+B(s,\alpha (s))\Theta (s,\alpha (s))\big ]X(s)+B(s,\alpha (s))v(s)+b(s)\Big \}ds\\ &{}\qquad \quad ~~~+\Big \{\big [C(s,\alpha (s))+D(s,\alpha (s))\Theta (s,\alpha (s))\big ]X(s)\\ &{}\qquad \qquad \qquad +D(s,\alpha (s))v(s)+\sigma (s)\Big \}dW(s),\qquad s\in [t,T],\\ &{} X(t)=x,\quad ~\alpha (t)=i. \end{array}\right. \end{aligned}$$(2.6)

The set of all weak closed-loop strategies is denoted by \({\mathscr {C}}_w[t,T]\).

(ii) A weak closed-loop strategy \((\Theta ^*(\cdot ,\cdot ),v^*(\cdot ))\in {\mathscr {C}}_w[t,T]\) is said to be optimal on [t, T) if

$$\begin{aligned}&J(t,x,i;\Theta ^*(\cdot ,\alpha (\cdot ))X^*(\cdot )+v^*(\cdot ))\leqslant J(t,x,i;\Theta (\cdot ,\alpha (\cdot ))X(\cdot )+v(\cdot )),\nonumber \\&\qquad \qquad \qquad \quad \forall (x,i)\in {\mathbb {R}}^n\times {{{\mathcal {S}}}},\quad \forall (\Theta (\cdot ,\cdot ),v(\cdot ))\in {\mathscr {C}}_w[t,T], \end{aligned}$$(2.7)

where \(X(\cdot )\) is the solution to the weak closed-loop system (2.6) corresponding to \((\Theta (\cdot ,\cdot ),v(\cdot ))\), and \(X^*(\cdot )\) is the solution to (2.6) corresponding to \((\Theta ^*(\cdot ,\cdot ),v^*(\cdot ))\).

(iii) For any \(t\in [0,T)\), if a weak closed-loop optimal strategy (uniquely) exists on [t, T), Problem (M-SLQ) is said to be (uniquely) weakly closed-loop solvable.

3 Open-Loop Solvability: A Perturbation Approach

In this section, we study the open-loop solvability of Problem (M-SLQ) through a perturbation approach. We begin by assuming that, for any choice of \((t,i)\in [0,T)\times {{{\mathcal {S}}}}\),

which is necessary for the open-loop solvability of Problem (M-SLQ) according to [32, Theorem 4.1]. In fact, assumption (3.1) means that \(u(\cdot )\rightarrow J^0(t,0,i;u(\cdot ))\) is convex, and one can actually prove that assumption (3.1) implies the convexity of the mapping \(u(\cdot )\rightarrow J(t,x,i;u(\cdot ))\) for any choice of \((t,x,i)\in [0,T)\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\) (see [20, 32]).
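The convexity claim can be checked directly. The following sketch assumes that (3.1) is the nonnegativity condition \(J^0(t,0,i;u(\cdot ))\geqslant 0\) for all \(u(\cdot )\in {{{\mathcal {U}}}}[t,T]\), and uses only the fact that the state depends affinely on the control, so that \(J(t,x,i;\cdot )\) is a quadratic functional whose purely quadratic part is \(J^0(t,0,i;\cdot )\). For \(u(\cdot ),v(\cdot )\in {{{\mathcal {U}}}}[t,T]\) and \(\mu \in [0,1]\) (the weight \(\mu \) is our notation):

$$\begin{aligned} \mu J(t,x,i;u(\cdot ))+(1-\mu )J(t,x,i;v(\cdot ))-J\big (t,x,i;\mu u(\cdot )+(1-\mu )v(\cdot )\big )=\mu (1-\mu )J^0\big (t,0,i;u(\cdot )-v(\cdot )\big )\geqslant 0. \end{aligned}$$

The affine and constant parts of the functional cancel on the left-hand side, which is why only the homogeneous cost at the zero initial state appears on the right.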

For \(\varepsilon >0\), consider the LQ problem of minimizing the perturbed cost functional

subject to the state Eq. (1.1). We denote this perturbed LQ problem by Problem (M-SLQ)\(_\varepsilon \) and its value function by \(V_\varepsilon (\cdot ,\cdot ,\cdot )\). Notice that the cost functional \(J^0_\varepsilon (t,x,i;u(\cdot ))\) of the homogeneous LQ problem associated with Problem (M-SLQ)\(_\varepsilon \) is

which, by (3.1), satisfies

The Riccati equations associated with Problem (M-SLQ)\(_\varepsilon \) read

where for every \((s,i)\in [0,T]\times {{{\mathcal {S}}}}\) and \(\varepsilon >0\),

A solution \(P_\varepsilon (\cdot ,\cdot )\in C([0,T]\times {{{\mathcal {S}}}};{\mathbb {S}}^n)\) of (3.3) is said to be regular if

A solution \(P_\varepsilon (\cdot ,\cdot )\) of (3.3) is said to be strongly regular if

for some \(\lambda >0\). The system of Riccati equations (3.3) is said to be (strongly) regularly solvable if it admits a (strongly) regular solution. Clearly, condition (3.8) implies (3.5)–(3.7). Thus, a strongly regular solution \(P_\varepsilon (\cdot ,\cdot )\) must be regular. Moreover, it follows from [32, Theorem 6.3] that, under the assumption (3.1), the Riccati equations (3.3) have a unique strongly regular solution \(P_\varepsilon (\cdot ,\cdot )\in C([0,T]\times {{{\mathcal {S}}}};{\mathbb {S}}^n)\), and from (3.7), we have

Furthermore, let \((\eta _\varepsilon (\cdot ),\zeta _\varepsilon (\cdot ), \xi ^\varepsilon (\cdot ))\) be the adapted solution of the following BSDE:

and let \(X_\varepsilon (\cdot )\) be the solution of the following closed-loop system:

where \(\Theta _\varepsilon :[0,T]\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{m\times n}\) and \(v_\varepsilon :[0,T]\times \Omega \rightarrow {\mathbb {R}}^m\) are defined by

with

Then from Theorem 5.2 and Corollary 6.5 in [32], the unique open-loop optimal control of Problem (M-SLQ)\(_\varepsilon \), for the initial pair (t, x, i), is given by

Before studying the main result of this section, we prove the following lemma.

Lemma 3.1

Under Assumptions (H1) and (H2), for any initial pair \((t,x,i)\in [0,T)\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\), one has

Proof

Let \((t,x,i)\in [0,T)\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\) be fixed. On the one hand, for any \(\varepsilon >0\) and any \(u(\cdot )\in {{{\mathcal {U}}}}[t,T]\), we have

Taking the infimum over all \(u(\cdot )\in {{{\mathcal {U}}}}[t,T]\) on the left hand side implies that

On the other hand, if V(t, x, i) is finite, then for any \(\delta >0\), we can find a \(u^\delta (\cdot )\in {{{\mathcal {U}}}}[t,T]\), independent of \(\varepsilon >0\), such that

It follows that

Letting \(\varepsilon \rightarrow 0\), we obtain

Since \(\delta >0\) is arbitrary, by combining (3.16) and (3.17), we obtain (3.15). A similar argument applies to the case when \(V(t,x,i)=-\infty \). \(\square \)
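The two bounds in the proof can be chained in one line. This is a sketch that assumes the perturbed cost has the form \(J_\varepsilon (t,x,i;u(\cdot ))=J(t,x,i;u(\cdot ))+\varepsilon {\mathbb {E}}\int _t^T|u(s)|^2ds\), as is usual in the perturbation approach of [20], and that V(t, x, i) is finite; \(u^\delta (\cdot )\) is the \(\delta \)-optimal control chosen in the proof:

$$\begin{aligned} V(t,x,i)\leqslant V_\varepsilon (t,x,i)\leqslant J(t,x,i;u^\delta (\cdot ))+\varepsilon {\mathbb {E}}\int _t^T|u^\delta (s)|^2ds\leqslant V(t,x,i)+\delta +\varepsilon {\mathbb {E}}\int _t^T|u^\delta (s)|^2ds. \end{aligned}$$

Because \(u^\delta (\cdot )\) does not depend on \(\varepsilon \), the last term vanishes as \(\varepsilon \rightarrow 0\), and letting \(\delta \rightarrow 0\) afterwards squeezes \(V_\varepsilon (t,x,i)\) to \(V(t,x,i)\).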

Now, we present the main result of this section, which provides a characterization of the open-loop solvability of Problem (M-SLQ) in terms of the family \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\).

Theorem 3.2

Let Assumptions (H1) and (H2) and (3.1) hold. For any given initial pair \((t,x,i)\in [0,T)\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\), let \(u_\varepsilon (\cdot )\) be defined by (3.14), which is the outcome of the closed-loop optimal strategy \((\Theta _\varepsilon (\cdot ,\cdot ),v_\varepsilon (\cdot ))\) of Problem (M-SLQ)\(_\varepsilon \). Then the following statements are equivalent:

(i) Problem (M-SLQ) is open-loop solvable at (t, x, i);

(ii) The family \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) is bounded in \(L^2_{{\mathbb {F}}}(t,T;{\mathbb {R}}^m)\), i.e.,

$$\begin{aligned} \sup _{\varepsilon >0}{\mathbb {E}}\int _t^T|u_\varepsilon (s)|^2ds<\infty ; \end{aligned}$$

(iii) The family \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) converges strongly in \(L^2_{{\mathbb {F}}}(t,T;{\mathbb {R}}^m)\) as \(\varepsilon \rightarrow 0\).

Proof

We begin by proving the implication (i) \(\mathop {\Rightarrow }\) (ii). Let \(v^*(\cdot )\) be an open-loop optimal control of Problem (M-SLQ) for the initial pair (t, x, i). Then for any \(\varepsilon >0\),

On the other hand, since \(u_\varepsilon (\cdot )\) is optimal for Problem (M-SLQ)\(_\varepsilon \) with respect to (t, x, i), we have

Combining (3.18) and (3.19) yields that

This shows that \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) is bounded in \(L^2_{{\mathbb {F}}}(t,T;{\mathbb {R}}^m)\).
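The estimate behind this bound can be written out as follows. The sketch again assumes the perturbed cost \(J_\varepsilon (t,x,i;u(\cdot ))=J(t,x,i;u(\cdot ))+\varepsilon {\mathbb {E}}\int _t^T|u(s)|^2ds\), and uses that \(J(t,x,i;u_\varepsilon (\cdot ))\geqslant V(t,x,i)=J(t,x,i;v^*(\cdot ))\):

$$\begin{aligned} V(t,x,i)+\varepsilon {\mathbb {E}}\int _t^T|u_\varepsilon (s)|^2ds&\leqslant J(t,x,i;u_\varepsilon (\cdot ))+\varepsilon {\mathbb {E}}\int _t^T|u_\varepsilon (s)|^2ds=V_\varepsilon (t,x,i)\\&\leqslant J(t,x,i;v^*(\cdot ))+\varepsilon {\mathbb {E}}\int _t^T|v^*(s)|^2ds=V(t,x,i)+\varepsilon {\mathbb {E}}\int _t^T|v^*(s)|^2ds. \end{aligned}$$

Cancelling \(V(t,x,i)\), which is finite by open-loop solvability, and dividing by \(\varepsilon >0\) gives \({\mathbb {E}}\int _t^T|u_\varepsilon (s)|^2ds\leqslant {\mathbb {E}}\int _t^T|v^*(s)|^2ds\), a bound that does not depend on \(\varepsilon \).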

For (ii) \(\mathop {\Rightarrow }\) (i), the proof is similar to that in [24] (see Remark 3.3 below), and the implication (iii) \(\mathop {\Rightarrow }\) (ii) is trivially true.

Finally, we prove the implication (ii) \(\mathop {\Rightarrow }\) (iii). We divide the proof into two steps.

Step 1: The family \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) converges weakly to an open-loop optimal control of Problem (M-SLQ) for the initial pair (t, x, i) as \(\varepsilon \rightarrow 0\).

To verify this, it suffices to show that every weakly convergent subsequence of \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) has the same weak limit which is an open-loop optimal control of Problem (M-SLQ) for (t, x, i). Let \(u^*_i(\cdot )\), \(i=1,2\) be the weak limits of two different weakly convergent subsequences \(\{u_{i,\varepsilon _k}(\cdot )\}_{k=1}^\infty \) \((i=1,2)\) of \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\). The same argument as in the proof of (ii) \(\mathop {\Rightarrow }\) (i) shows that both \(u^*_1(\cdot )\) and \(u^*_2(\cdot )\) are optimal for (t, x, i). Thus, recalling that the mapping \(u(\cdot )\mapsto J(t, x,i; u(\cdot ))\) is convex, we have

This means that \(\frac{u^*_1(\cdot )+u^*_2(\cdot )}{2}\) is also optimal for Problem (M-SLQ) with respect to (t, x, i). Then we can repeat the argument employed in the proof of (i) \(\mathop {\Rightarrow }\) (ii), replacing \(v^*(\cdot )\) by \(\frac{u^*_1(\cdot )+u^*_2(\cdot )}{2}\) to obtain (see (3.20))

Now, note that

which implies that

The definition of weak convergence yields

Adding the above two inequalities and then multiplying by 2, we get

or equivalently (by shifting the integral on the right-hand side to the left-hand side),

It follows that \(u^*_1(\cdot )=u^*_2(\cdot )\), which establishes the claim.
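The uniqueness argument of Step 1 can be condensed as follows. This is a sketch of one possible route, which uses weak lower semicontinuity of the norm in place of the intermediate inequalities; it assumes that the bound inherited from (i) \(\mathop {\Rightarrow }\) (ii) reads \({\mathbb {E}}\int _t^T|u_{j,\varepsilon _k}(s)|^2ds\leqslant {\mathbb {E}}\int _t^T\big |\frac{u^*_1(s)+u^*_2(s)}{2}\big |^2ds\) for \(j=1,2\) (we index the two subsequences by j to avoid a clash with the regime i):

$$\begin{aligned} {\mathbb {E}}\int _t^T|u^*_j(s)|^2ds&\leqslant \liminf _{k\rightarrow \infty }{\mathbb {E}}\int _t^T|u_{j,\varepsilon _k}(s)|^2ds\leqslant {\mathbb {E}}\int _t^T\Big |\frac{u^*_1(s)+u^*_2(s)}{2}\Big |^2ds,\qquad j=1,2,\\ {\mathbb {E}}\int _t^T|u^*_1(s)-u^*_2(s)|^2ds&=2{\mathbb {E}}\int _t^T|u^*_1(s)|^2ds+2{\mathbb {E}}\int _t^T|u^*_2(s)|^2ds-{\mathbb {E}}\int _t^T|u^*_1(s)+u^*_2(s)|^2ds\leqslant 0. \end{aligned}$$

The second line is the parallelogram identity, and its sign follows by adding the two inequalities of the first line and multiplying by 2.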

Step 2: The family \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) converges strongly as \(\varepsilon \rightarrow 0\).

According to Step 1, the family \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) converges weakly to an open-loop optimal control \(u^*(\cdot )\) of Problem (M-SLQ) for (t, x, i) as \(\varepsilon \rightarrow 0\). By repeating the argument employed in the proof of (i) \(\mathop {\Rightarrow }\) (ii) with \(u^*(\cdot )\) replacing \(v^*(\cdot )\), we obtain

On the other hand, since \(u^*(\cdot )\) is the weak limit of \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\), we have

Combining (3.21) and (3.22), we see that \({\mathbb {E}}\int _t^T|u_\varepsilon (s)|^2ds\) actually has the limit \({\mathbb {E}}\int _t^T|u^*(s)|^2ds\). Therefore (recalling that \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) converges weakly to \(u^*(\cdot )\)),

which means that \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) converges strongly to \(u^*(\cdot )\) as \(\varepsilon \rightarrow 0\). \(\square \)
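The last step is the standard Hilbert space fact that weak convergence together with convergence of norms implies strong convergence. Written out in \(L^2_{{\mathbb {F}}}(t,T;{\mathbb {R}}^m)\), using only the two facts just established:

$$\begin{aligned} {\mathbb {E}}\int _t^T|u_\varepsilon (s)-u^*(s)|^2ds&={\mathbb {E}}\int _t^T|u_\varepsilon (s)|^2ds-2{\mathbb {E}}\int _t^T\langle u_\varepsilon (s),u^*(s)\rangle ds+{\mathbb {E}}\int _t^T|u^*(s)|^2ds\\&\rightarrow {\mathbb {E}}\int _t^T|u^*(s)|^2ds-2{\mathbb {E}}\int _t^T|u^*(s)|^2ds+{\mathbb {E}}\int _t^T|u^*(s)|^2ds=0\qquad \hbox {as } \varepsilon \rightarrow 0. \end{aligned}$$

The first term converges by the norm limit obtained from (3.21) and (3.22), and the middle term converges by weak convergence tested against \(u^*(\cdot )\).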

Remark 3.3

A similar result recently appeared in [32], which asserts that if Problem (M-SLQ) is open-loop solvable at (t, x, i), then the limit of any weakly/strongly convergent subsequence of \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) is an open-loop optimal control for (t, x, i). Our result sharpens that in [32] by showing the family \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) itself is strongly convergent when Problem (M-SLQ) is open-loop solvable. This improvement has at least two advantages. First, it serves as a crucial bridge to the weak closed-loop solvability presented in the next section. Second, it is much more convenient for computational purposes because subsequence extraction is not required.

Remark 3.4

In Example 1.1, since \(B=1\) and \(D=S=R=0\), we have

So the condition \({{{\mathcal {R}}}}\big ({\hat{S}}_\varepsilon (s,i)\big )\subseteq {{{\mathcal {R}}}}\big ({\hat{R}}_\varepsilon (s,i)\big ),\ {\mathrm{a.e.}}~s\in [0,T],\ i\in {{{\mathcal {S}}}}\) is not satisfied, which implies that GRE (1.8) has no regular solution.

4 Weak Closed-Loop Solvability

In this section, we study the equivalence between open-loop and weak closed-loop solvabilities of Problem (M-SLQ). We shall show that \(\Theta _\varepsilon (\cdot ,\cdot )\) and \(v_\varepsilon (\cdot )\) defined by (3.11) and (3.12) converge locally in [0, T), and that the limit pair \((\Theta ^*(\cdot ,\cdot ),v^*(\cdot ))\) is a weak closed-loop optimal strategy.

We start with a simple lemma, which enables us to work separately with \(\Theta _\varepsilon (\cdot ,\cdot )\) and \(v_\varepsilon (\cdot )\). Recall that the associated Problem (M-SLQ)\(^0\) is to minimize (1.6) subject to (1.5).

Lemma 4.1

Under Assumptions (H1) and (H2), if Problem (M-SLQ) is open-loop solvable, then so is Problem (M-SLQ)\(^0\).

Proof

For arbitrary \((t,x,i)\in [0,T)\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\), we note that if \(b(\cdot ,\cdot ),\sigma (\cdot ,\cdot ),g(\cdot ),q(\cdot ,\cdot ),\rho (\cdot ,\cdot )=0\), then the adapted solution \((\eta _\varepsilon (\cdot ),\zeta _\varepsilon (\cdot ), \xi ^\varepsilon _1(\cdot ),\cdots ,\xi ^\varepsilon _D(\cdot ))\) to BSDE (3.9) is identically zero, and hence the process \(v_\varepsilon (\cdot )\) defined by (3.12) is also identically zero. By Theorem 3.2, to prove that Problem (M-SLQ)\(^0\) is open-loop solvable at (t, x, i), we need to verify that the family \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) is bounded in \(L^2_{{\mathbb {F}}}(t,T;{\mathbb {R}}^m)\), where (see (3.14) and note that \(v_\varepsilon (\cdot )=0\)),

where \(X_\varepsilon (\cdot )\) is the solution to the following equation:

To this end, we return to Problem (M-SLQ). Let \(v_\varepsilon (\cdot )\) be defined in (3.12) and denote by \(X_\varepsilon ( \cdot \ ;t,x,i)\) and \(X_\varepsilon ( \cdot \ ;t,0,i)\) solutions to (3.10) with respect to the initial pairs (t, x, i) and (t, 0, i), respectively. Since Problem (M-SLQ) is open-loop solvable at both (t, x, i) and (t, 0, i), by Theorem 3.2, the families

are bounded in \(L^2_{{\mathbb {F}}}(t,T;{\mathbb {R}}^m)\). Note that, since the process \(v_\varepsilon (\cdot )\) is independent of the initial state, the difference \(X_\varepsilon ( \cdot \ ;t,x,i)-X_\varepsilon ( \cdot \ ;t,0,i)\) also satisfies Eq. (4.2). Then, by the uniqueness of adapted solutions of SDEs, we obtain that

which, combined with (4.1) and (4.3), implies that

Since \(\{u_\varepsilon ( \cdot ,t,x,i)\}_{\varepsilon >0}\) and \(\{u_\varepsilon ( \cdot ,t,0,i)\}_{\varepsilon >0}\) are bounded in \(L^2_{{\mathbb {F}}}(t,T;{\mathbb {R}}^m)\), so is \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\). Finally, it follows from Theorem 3.2 that Problem (M-SLQ)\(^0\) is open-loop solvable. \(\square \)
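The boundedness step can be condensed into one line. The sketch below assumes, as the proof indicates, that (4.1) expresses the homogeneous control as \(\Theta _\varepsilon X_\varepsilon \) and that the two families above have the form \(\Theta _\varepsilon X_\varepsilon (\cdot \ ;t,x,i)+v_\varepsilon \) and \(\Theta _\varepsilon X_\varepsilon (\cdot \ ;t,0,i)+v_\varepsilon \), so that \(v_\varepsilon (\cdot )\) cancels in the difference. Here \(\Vert \cdot \Vert \) denotes the norm of \(L^2_{{\mathbb {F}}}(t,T;{\mathbb {R}}^m)\); this is an illustration, not the authors' display:

```latex
u_\varepsilon(s)
= \Theta_\varepsilon(s,\alpha(s))\big[X_\varepsilon(s;t,x,i)-X_\varepsilon(s;t,0,i)\big]
= u_\varepsilon(s;t,x,i)-u_\varepsilon(s;t,0,i),
\qquad
\|u_\varepsilon\|\leqslant \|u_\varepsilon(\cdot\,;t,x,i)\|+\|u_\varepsilon(\cdot\,;t,0,i)\|.
```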

Next, we prove that the family \(\{\Theta _\varepsilon (\cdot ,\cdot )\}_{\varepsilon >0}\) defined by (3.11) is locally convergent in [0, T).

Proposition 4.2

Let (H1) and (H2) hold. Suppose that Problem (M-SLQ)\(^0\) is open-loop solvable. Then the family \(\{\Theta _\varepsilon (\cdot ,\cdot )\}_{\varepsilon >0}\) defined by (3.11) converges in \(L^2(0,T';{\mathbb {R}}^{m\times n})\) for any \(0<T'<T\); that is, there exists a locally square-integrable deterministic function \(\Theta ^*:[0,T)\times {{{\mathcal {S}}}}\rightarrow {\mathbb {R}}^{m\times n}\) such that

Proof

We need to show that for any \(0<T'<T\), the family \(\{\Theta _\varepsilon (\cdot ,\cdot )\}_{\varepsilon >0}\) is Cauchy in \(L^2(0,T';{\mathbb {R}}^{m\times n})\). To this end, let us first fix an arbitrary initial pair \((t,i)\in [0,T)\times {{{\mathcal {S}}}}\) and let \(\Phi _\varepsilon (\cdot )\in L^2_{{\mathbb {F}}}(\Omega ;C([t,T];{\mathbb {R}}^{n\times n}))\) be the solution to the following SDE:

Clearly, for any initial state x, by the uniqueness of solutions to SDEs, the solution of (4.2) is given by

Since Problem (M-SLQ)\(^0\) is open-loop solvable, by Theorem 3.2, the family

is strongly convergent in \(L^2_{\mathbb {F}}(t,T;{\mathbb {R}}^{m})\) for any \(x{\in }{\mathbb {R}}^n\). It follows that \(\{\Theta _\varepsilon (\cdot ,\cdot )\Phi _\varepsilon (\cdot )\}_{\varepsilon >0}\) converges strongly in \(L^2_{\mathbb {F}}(t,T;{\mathbb {R}}^{m\times n})\) as \(\varepsilon \rightarrow 0\). Denote \(U_\varepsilon (\cdot )=\Theta _\varepsilon (\cdot ,\cdot )\Phi _\varepsilon (\cdot )\) and let \(U^*(\cdot )\) be the strong limit of \(U_\varepsilon (\cdot )\). By Jensen’s inequality, we get

Moreover, from (4.4), we see that \({\mathbb {E}}^\alpha [\Phi _\varepsilon (\cdot )]\) satisfies the following ODE:

By standard results for ODEs, together with (4.5), the family of continuous functions \({\mathbb {E}}[\Phi _\varepsilon (\cdot )]\) converges uniformly to the solution of

Noting that \(\Phi _\varepsilon (t)=I_n\), we can define the following stopping time:

We claim that the family \(\{\Theta _\varepsilon (\cdot ,i)\}_{\varepsilon >0}\) is Cauchy in \(L^2(t,\tau ;{\mathbb {R}}^{m\times n})\) for each \(i\in {{{\mathcal {S}}}}\). Indeed, first note that when \(s\in [t,\tau ]\), one has for each \(i\in {{{\mathcal {S}}}}\),

Then we have

Since \(\{U_\varepsilon (\cdot )\}_{\varepsilon >0}\) is Cauchy in \(L^2_{{\mathbb {F}}}(t,T;{\mathbb {R}}^{m\times n})\) and \(\{\Phi _\varepsilon (\cdot )\}_{\varepsilon >0}\) converges uniformly on [t, T], the last two terms of the above inequality approach zero as \(\varepsilon _1,\varepsilon _2\rightarrow 0\), which implies that \(\{\Theta _\varepsilon (\cdot ,i)\}_{\varepsilon >0}\) is Cauchy in \(L^2(t,\tau ;{\mathbb {R}}^{m\times n})\) for each \(i\in {{{\mathcal {S}}}}\).

Next we use a compactness argument to prove that, for each \(i\in {{{\mathcal {S}}}}\), \(\{\Theta _\varepsilon (\cdot ,i)\}_{\varepsilon >0}\) is actually Cauchy in \(L^2(0,T';{\mathbb {R}}^{m\times n})\) for any \(0<T'<T\). Take any \(T'\in (0,T)\). From the preceding argument we see that for each \(t\in [0,T']\), there exists a small \(\Delta _t>0\) such that \(\{\Theta _\varepsilon (\cdot ,i)\}_{\varepsilon >0}\) is Cauchy in \(L^2(t,t+\Delta _t;{\mathbb {R}}^{m\times n})\). Since \([0,T']\) is compact, we can choose finitely many \(t\in [0,T']\), say, \(t_1,t_2,\ldots ,t_k\), such that \(\{\Theta _\varepsilon (\cdot ,i)\}_{\varepsilon >0}\) is Cauchy in each \(L^2(t_j,t_j+\Delta _{t_j};{\mathbb {R}}^{m\times n})\) and \([0,T']\subseteq \bigcup _{j=1}^k[t_j,t_j+\Delta _{t_j}]\). It follows that

The proof is therefore completed. \(\square \)
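To make the notion of local convergence on [0, T) concrete, the following minimal Python sketch uses a purely hypothetical scalar family \(\Theta _\varepsilon (s)=-1/(\varepsilon +1-s)\) with \(T=1\) and pointwise limit \(\Theta ^*(s)=-1/(1-s)\). This family is an assumption chosen for illustration and is not the family defined by (3.11). The sketch checks numerically that the squared \(L^2(0,T')\) error shrinks as \(\varepsilon \rightarrow 0\) for \(T'<1\), while the squared \(L^2(0,1)\) norm of \(\Theta _\varepsilon \) blows up.

```python
def theta_eps(s, eps):
    """Hypothetical perturbed gain (illustration only, not the paper's (3.11))."""
    return -1.0 / (eps + 1.0 - s)


def theta_star(s):
    """Pointwise limit of theta_eps on [0, 1) as eps -> 0."""
    return -1.0 / (1.0 - s)


def l2_sq_error(eps, t_end, n=20000):
    """Midpoint-rule approximation of int_0^{t_end} |theta_eps - theta_star|^2 ds."""
    h = t_end / n
    total = 0.0
    for k in range(n):
        s = (k + 0.5) * h
        diff = theta_eps(s, eps) - theta_star(s)
        total += diff * diff * h
    return total


def full_sq_norm(eps):
    """Closed form of int_0^1 |theta_eps(s)|^2 ds = 1/eps - 1/(1 + eps)."""
    return 1.0 / eps - 1.0 / (1.0 + eps)


if __name__ == "__main__":
    for eps in (1e-1, 1e-2, 1e-3):
        # The error on [0, 0.9] decreases while the norm on [0, 1] grows like 1/eps.
        print(eps, l2_sq_error(eps, 0.9), full_sq_norm(eps))
```

In this toy family the limit is square-integrable on every \([0,T']\) with \(T'<1\) but not on [0, 1), which is the kind of behaviour the local convergence statement allows for.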

The following result shows that the family \(\{v_\varepsilon (\cdot )\}_{\varepsilon >0}\) defined by (3.12) is also locally convergent in [0, T).

Proposition 4.3

Let (H1) and (H2) hold. Suppose that Problem (M-SLQ) is open-loop solvable. Then the family \(\{v_\varepsilon (\cdot )\}_{\varepsilon >0}\) defined by (3.12) converges in \(L^2(0,T';{\mathbb {R}}^{m})\) for any \(0<T'<T\); that is, there exists a locally square-integrable deterministic function \(v^*(\cdot ):[0,T)\rightarrow {\mathbb {R}}^{m}\) such that

Proof

Let \(X_\varepsilon (s)\), \(0\leqslant s\leqslant T\), be the solution to the closed-loop system (3.10) with respect to initial time \(t=0\). Then, on the one hand, from the linearity of the state Eq. (1.1) and Lemma 2.1, we have

On the other hand, since Problem (M-SLQ) is open-loop solvable, Theorem 3.2 implies that the family

is Cauchy in \(L^2_{{\mathbb {F}}}(0,T;{\mathbb {R}}^m)\), i.e.,

Therefore

Now take any \(0<T'<T\). Since Problem (M-SLQ) is open-loop solvable, according to Lemma 4.1 and Proposition 4.2, the family \(\{\Theta _\varepsilon (\cdot ,i)\}_{\varepsilon >0}\) is Cauchy in \(L^2(0,T';{\mathbb {R}}^{m\times n})\) for every \(i\in {{{\mathcal {S}}}}\). Thus, by (4.8), we have

which, combined with (4.6) and (4.7), implies that

This shows that the family \(\{v_\varepsilon (\cdot )\}_{\varepsilon >0}\) converges in \(L^2_{{\mathbb {F}}}(0,T';{\mathbb {R}}^m)\). \(\square \)

We are now ready to state and prove the main result of this section, which establishes the equivalence between open-loop and weak closed-loop solvability of Problem (M-SLQ).

Theorem 4.4

Let (H1) and (H2) hold. If Problem (M-SLQ) is open-loop solvable, then the limit pair \((\Theta ^*(\cdot ,\cdot ),v^*(\cdot ))\) obtained in Propositions 4.2 and 4.3 is a weak closed-loop optimal strategy of Problem (M-SLQ) on any [t, T). Consequently, the open-loop and weak closed-loop solvability of Problem (M-SLQ) are equivalent.

Proof

From Definition 2.6, it is obvious that the weak closed-loop solvability of Problem (M-SLQ) implies its open-loop solvability. In the following, we consider the converse.

Take an arbitrary initial pair \((t,x,i)\in [0,T)\times {\mathbb {R}}^n\times {{{\mathcal {S}}}}\) and let \(\{u_\varepsilon (s);t\leqslant s\leqslant T\}_{\varepsilon >0}\) be the family defined by (3.14). Since Problem (M-SLQ) is open-loop solvable at (t, x, i), by Theorem 3.2, \(\{u_\varepsilon (s);t\leqslant s\leqslant T\}_{\varepsilon >0}\) converges strongly to an open-loop optimal control \(\{u^*(s);t\leqslant s\leqslant T\}\) of Problem (M-SLQ) (for the initial pair (t, x, i)). Let \(\{X^*(s);t\leqslant s\leqslant T\}\) be the corresponding optimal state process; i.e., \(X^*(\cdot )\) is the adapted solution of the following equation:

If we can show that

then \((\Theta ^*(\cdot ,\cdot ),v^*(\cdot ))\) is clearly a weak closed-loop optimal strategy of Problem (M-SLQ) on [t, T). To verify (4.9), we first note that, by Lemma 2.1, we obtain

where \(\{X_\varepsilon (s);t\leqslant s\leqslant T\}_{\varepsilon >0}\) is the solution of Eq. (3.10). Second, by Propositions 4.2 and 4.3, one has

It follows that for any \(0<T'<T\),

Recall that \(u_\varepsilon (s)=\Theta _\varepsilon (s,\alpha (s))X_\varepsilon (s)+v_\varepsilon (s)\) converges strongly to \(u^*(s)\), \(t\leqslant s\leqslant T\), in \(L^2_{\mathbb {F}}(t,T;{\mathbb {R}}^m)\) as \(\varepsilon \rightarrow 0\). Thus, (4.9) must hold. The above argument shows that the open-loop solvability implies the weak closed-loop solvability. Consequently, the open-loop and weak closed-loop solvability of Problem (M-SLQ) are equivalent. This completes the proof. \(\square \)
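The limiting step in the proof can be made explicit with a three-term splitting. The following is a sketch under stated assumptions, not the authors' display: it uses that \(\Theta _\varepsilon (\cdot ,i)\) and \(\Theta ^*(\cdot ,i)\) are deterministic, and it assumes that the estimate obtained from Lemma 2.1 yields \({\mathbb {E}}\big [\sup _{t\leqslant s\leqslant T}|X_\varepsilon (s)-X^*(s)|^2\big ]\rightarrow 0\), so that \({\mathbb {E}}\big [\sup _{t\leqslant s\leqslant T}|X_\varepsilon (s)|^2\big ]\) stays bounded:

```latex
\begin{aligned}
&\mathbb{E}\int_t^{T'}\big|\Theta_\varepsilon(s,\alpha(s))X_\varepsilon(s)+v_\varepsilon(s)
   -\Theta^*(s,\alpha(s))X^*(s)-v^*(s)\big|^2\,ds \\
&\quad\leqslant 3\sum_{i\in\mathcal{S}}\int_t^{T'}|\Theta_\varepsilon(s,i)-\Theta^*(s,i)|^2\,ds
   \cdot\mathbb{E}\Big[\sup_{t\leqslant s\leqslant T}|X_\varepsilon(s)|^2\Big] \\
&\qquad+3\sum_{i\in\mathcal{S}}\int_t^{T'}|\Theta^*(s,i)|^2\,ds
   \cdot\mathbb{E}\Big[\sup_{t\leqslant s\leqslant T}|X_\varepsilon(s)-X^*(s)|^2\Big]
  +3\,\mathbb{E}\int_t^{T'}|v_\varepsilon(s)-v^*(s)|^2\,ds .
\end{aligned}
```

Each term on the right tends to zero as \(\varepsilon \rightarrow 0\) by Propositions 4.2 and 4.3 and the assumed state estimate, so the feedback form of \(u_\varepsilon (\cdot )\) converges to \(\Theta ^*X^*+v^*\) on every \([t,T']\) with \(T'<T\).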

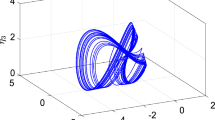

5 Examples

There are some (M-SLQ) problems that are open-loop solvable, but not closed-loop solvable; for such problems, one could not expect to get a regular solution (which does not exist) to the associated GRE (3.3), so that the state feedback representation of the open-loop optimal control might be impossible. In fact, Example 1.1 has illustrated this conclusion. However, Theorem 4.4 shows that the open-loop and weak closed-loop solvability of Problem (M-SLQ) are equivalent. In the following, we present another example to illustrate the procedure for finding weak closed-loop optimal strategies for some (M-SLQ) problems that are open-loop solvable (and hence weakly closed-loop solvable) but not closed-loop solvable.

Example 5.1

In order to present the procedure more clearly, we simplify the problem. Let \(T=1\) and \(D=2\), that is, the state space of \(\alpha (\cdot )\) is \({{{\mathcal {S}}}}=\{1,2\}\). For the generator \(\lambda (s)\triangleq [\lambda _{ij}(s)]_{i, j = 1, 2}\), since \(\sum ^{2}_{j = 1} \lambda _{ij}(s) = 0\) for \(i\in {{{\mathcal {S}}}}\), we have

Consider the following Problem (M-SLQ) with one-dimensional state equation

and the cost functional

where the nonhomogeneous term \(b(\cdot ,\cdot )\) is given by

It is easy to see that \(b(\cdot ,i)\in L^2_{\mathbb {F}}(\Omega ;L^1(0,1;{\mathbb {R}}))\) for each \(i\in {{{\mathcal {S}}}}\). In fact,

Since \(\alpha (\cdot )\) takes values in \({{{\mathcal {S}}}}=\{1,2\}\), the process \(\exp \left\{ \int _0^s\sqrt{2\alpha (r)}dW(r)-\int _0^s\alpha (r)dr\right\} \) is a square-integrable martingale, and it follows from Doob’s maximal inequality that

Thus,

which implies that \(b(\cdot ,i)\in L^2_{\mathbb {F}}(\Omega ;L^1(0,1;{\mathbb {R}}))\) for each \(i\in {{{\mathcal {S}}}}\).

We first claim that this (M-SLQ) problem is not closed-loop solvable on any [t, 1]. Indeed, the generalized Riccati equation associated with this problem reads

and

whose solution is \(P(s,1)=P(s,2)=1\), i.e., \(P(s,i)\equiv 1\) for \((s,i)\in [0,1]\times {{{\mathcal {S}}}}.\) Then for any \(s\in [t,1]\) and \(i\in {{{\mathcal {S}}}}\), we have

where

Therefore, the range inclusion condition is not satisfied. This implies that our claim holds.

In the following, we use Theorem 3.2 to conclude that the above (M-SLQ) problem is open-loop solvable (and hence, by Theorem 4.4, weakly closed-loop solvable). Without loss of generality, we consider only the open-loop solvability at \(t=0\). To this end, let \(\varepsilon >0\) be arbitrary and consider the Riccati equation (3.3), which, in our example, reads:

and

Solving the above equations yields

or

Noting that the state space of \(\alpha (s)\) is \({{{\mathcal {S}}}}=\{1,2\}\), we let

Then, the corresponding BSDE (3.9) reads

Let \(f(s)=\frac{1}{\sqrt{1-s}}\). Using the variation of constants formula for BSDEs, and noting that \(W(\cdot )\) and \({\widetilde{N}}_k(\cdot )\) are \(({\mathbb {F}}, {\mathbb {P}})\)-martingales, we obtain

It should be pointed out that, in the above equality, we use Fubini’s theorem and the martingale property, i.e.,

Now, let

Then, the corresponding closed-loop system (3.10) can be written as

By the variation of constants formula for SDEs, we get

In light of Theorem 3.2, in order to prove the open-loop solvability at (0, x, i), it suffices to show the family \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) defined by

is bounded in \(L^2_{{\mathbb {F}}}(0,1;{\mathbb {R}})\). For this, let us first simplify (5.5). On the one hand, by Fubini’s theorem,

Similarly, on the other hand,

Consequently, we get

A short calculation gives

Therefore, \(\{u_\varepsilon (\cdot )\}_{\varepsilon >0}\) is bounded in \(L^2_{{\mathbb {F}}}(0,1;{\mathbb {R}})\). Now, letting \(\varepsilon \rightarrow 0\) in (5.6), we get an open-loop optimal control:

From the above discussion, we see that, like the state process \(X(\cdot )\) of (5.1), the open-loop optimal control \(u^*(\cdot )\) also depends on the regime switching process \(\alpha (\cdot )\). That is, as the value of \(\alpha (\cdot )\) varies, the open-loop optimal control \(u^*(\cdot )\) changes as well.
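To visualize how a regime path \(\alpha (\cdot )\) on \({{{\mathcal {S}}}}=\{1,2\}\) may vary, here is a minimal Python sketch that samples a two-state continuous-time Markov chain by drawing exponential holding times. It assumes constant switching rates `rate_12` and `rate_21` (hypothetical values standing in for the off-diagonal entries of the generator, which in the example may depend on \(s\)); the function names are illustrative and not taken from the paper.

```python
import bisect
import random


def simulate_two_state_chain(rate_12, rate_21, horizon, start=1, seed=0):
    """Sample jump times and states of a chain on {1, 2} over [0, horizon).

    rate_12 and rate_21 are assumed constant switching intensities
    (hypothetical stand-ins for the generator's off-diagonal entries).
    """
    rng = random.Random(seed)
    t, state = 0.0, start
    jump_times, states = [0.0], [start]
    while True:
        rate = rate_12 if state == 1 else rate_21
        if rate <= 0.0:
            break  # absorbing state: no further switching
        t += rng.expovariate(rate)  # exponential holding time in the current state
        if t >= horizon:
            break
        state = 2 if state == 1 else 1
        jump_times.append(t)
        states.append(state)
    return jump_times, states


def regime_at(s, jump_times, states):
    """Value of the piecewise-constant, right-continuous path at time s >= 0."""
    return states[bisect.bisect_right(jump_times, s) - 1]


if __name__ == "__main__":
    times, regimes = simulate_two_state_chain(3.0, 2.0, horizon=1.0, seed=42)
    for s in (0.0, 0.25, 0.5, 0.75):
        print(s, regime_at(s, times, regimes))
```

Any quantity that depends on \(\alpha (s)\) can then be evaluated along such a sampled path through `regime_at`.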

Finally, we let \(\varepsilon \rightarrow 0\) in (5.3) and (5.4) to get a weak closed-loop optimal strategy \((\Theta ^*(\cdot ,\cdot ),v^*(\cdot ))\):

We point out that neither \(\Theta ^*(\cdot ,\cdot )\) nor \(v^*(\cdot )\) is square-integrable on [0, 1). Indeed, one has

6 Conclusions

In this paper, we mainly study the open-loop and weak closed-loop solvabilities for a class of stochastic LQ optimal control problems of Markovian regime switching system. The main result is that these two solvabilities are equivalent. First, using the perturbation approach, we provide an alternative characterization of the open-loop solvability. Then we investigate the weak closed-loop solvability of the LQ problem of Markovian regime switching system, and establish the equivalence between open-loop and weak closed-loop solvabilities. Finally, we present an example to illustrate the procedure for finding weak closed-loop optimal strategies in the setting of Markovian regime switching system.

References

Ait Rami, M., Moore, J.B., Zhou, X.Y.: Indefinite stochastic linear quadratic control and generalized differential Riccati equation. SIAM J. Control Optim. 40, 1296–1311 (2001)

Bensoussan, A.: Lectures on Stochastic Control, Part I: In Nonlinear Filtering and Stochastic Control. Lecture Notes in Mathematics, vol. 972. Springer, Berlin (1982)

Chen, S.P., Li, X.J., Zhou, X.Y.: Stochastic linear quadratic regulators with indefinite control weight costs. SIAM J. Control Optim. 36, 1685–1702 (1998)

Donnelly, C.: Sufficient stochastic maximum principle in a regime-switching diffusion model. Appl. Math. Optim. 64, 155–169 (2011)

Donnelly, C., Heunis, A.J.: Quadratic risk minimization in a regime-switching model with portfolio constraints. SIAM J. Control Optim. 50, 2431–2461 (2012)

Davis, M.H.A.: Linear Estimation and Stochastic Control. Chapman and Hall, London (1977)

Douissi, S., Wen, J.Q., Shi, Y.F.: Mean-field anticipated BSDEs driven by fractional brownian motion and related stochastic control problem. Appl. Math. Comput. 355, 282–298 (2019)

Hu, Y., Liang, G.C., Tang, S.J.: Systems of infinite horizon and ergodic BSDE arising in regime switching forward performance processes. arXiv:1807.01816

Hu, Y., Li, X., Wen, J.Q.: Anticipated backward stochastic differential equations with quadratic growth. arXiv:1807.01816

Hu, Y.Z., Oksendal, B.: Partial information linear quadratic control for jump diffusions. SIAM J. Control Optim. 47, 1744–1761 (2008)

Ji, Y., Chizeck, H.J.: Controllability, stabilizability, and continuous-time Markovian jump linear quadratic control. IEEE Trans. Autom. Control 35, 777–788 (1990)

Ji, Y., Chizeck, H.J.: Jump linear quadratic Gaussian control in continuous time. IEEE Trans. Autom. Control 37, 1884–1892 (1992)

Kalman, R.E.: Contributions to the theory of optimal control. Bol. Soc. Mat. Mex. 5, 102–119 (1960)

Li, N., Wu, Z., Yu, Z.Y.: Indefinite stochastic linear-quadratic optimal control problems with random jumps and related stochastic Riccati equations. Sci. China Math. 61, 563–576 (2018)

Li, Y.S., Zheng, H.: Weak necessary and sufficient stochastic maximum principle for Markovian regime-switching diffusion models. Appl. Math. Optim. 71, 39–77 (2015)

Li, X., Zhou, X.Y., Ait Rami, M.: Indefinite stochastic linear quadratic control with Markovian jumps in infinite time horizon. J. Global Optim. 27, 149–175 (2003)

Li, X., Zhou, X.Y., Lim, A.E.B.: Dynamic mean-variance portfolio selection with no-shorting constraints. SIAM J. Control Optim. 40, 1540–1555 (2002)

Liu, Y.J., Yin, G., Zhou, X.Y.: Near-optimal controls of random-switching LQ problems with indefinite control weight costs. Automatica 41, 1063–1070 (2005)

Mei, H.W., Yong, J.M.: Equilibrium strategies for time-inconsistent stochastic switching systems. ESAIM: COCV 25, 64 (2019)

Sun, J.R., Li, X., Yong, J.: Open-loop and closed-loop solvabilities for stochastic linear quadratic optimal control problems. SIAM J. Control Optim. 54, 2274–2308 (2016)

Sun, J.R., Wang, H.X.: Mean-field stochastic linear-quadratic optimal control problems: weak closed-loop solvability. arXiv:1907.01740

Sun, J.R., Yong, J.M.: Linear quadratic stochastic differential games: open-loop and closed-loop saddle points. SIAM J. Control Optim. 52, 4082–4121 (2014)

Shi, Y.F., Wen, J.Q., Xiong, J.: Backward doubly stochastic Volterra integral equations and applications to optimal control problems. arXiv:1906.10582

Wang, H.X., Sun, J.R., Yong, J.M.: Weak closed-loop solvability of stochastic linear-quadratic optimal control problems. Disc. Cont. Dyn. Syst. A 39, 2785–2805 (2019)

Wonham, W.M.: On a matrix Riccati equation of stochastic control. SIAM J. Control Optim. 6, 681–697 (1968)

Wu, Z., Wang, X.R.: FBSDE with Poisson process and its application to linear quadratic stochastic optimal control problem with random jumps. Acta Autom. Sin. 29, 821–826 (2003)

Yin, G., Zhou, X.Y.: Markowitz’s mean-variance portfolio selection with regime switching: from discrete-time models to their continuous-time limits. IEEE Trans. Autom. Control 49, 349–360 (2004)

Yong, J.M., Zhou, X.Y.: Stochastic Controls: Hamiltonian Systems and HJB Equations. Springer, New York (1999)

Yu, Z.Y.: Infinite horizon jump-diffusion forward-backward stochastic differential equations and their application to backward linear-quadratic problems. ESAIM 23, 1331–1359 (2017)

Zhang, Q., Yin, G.: On nearly optimal controls of hybrid LQG problems. IEEE Trans. Autom. Control 44, 2271–2282 (1999)

Zhang, X., Elliott, R.J., Siu, T.K.: A stochastic maximum principle for a Markov regime-switching jump-diffusion model and its application to finance. SIAM J. Control Optim. 50, 964–990 (2012)

Zhang, X., Li, X., Xiong, J.: Open-loop and closed-loop solvabilities for stochastic linear quadratic optimal control problems of Markov regime-switching system. https://www.researchgate.net/publication/336640066

Zhang, X., Siu, T.K., Meng, Q.B.: Portfolio selection in the enlarged Markovian regime-switching market. SIAM J. Control Optim. 48, 3368–3388 (2010)

Zhang, X., Sun, Z.Y., Xiong, J.: A general stochastic maximum principle for a Markov regime switching jump-diffusion model of mean-field type. SIAM J. Control Optim. 56, 2563–2592 (2018)

Zhou, X.Y., Li, D.: Continuous-time mean-variance portfolio selection: a stochastic LQ framework. Appl. Math. Optim. 42, 19–33 (2000)

Zhou, X.Y., Yin, G.: Markowitz’s mean-variance portfolio selection with regime switching: a continuous-time model. SIAM J. Control Optim. 42, 1466–1482 (2003)

Acknowledgements

The authors would like to thank the editors and the anonymous referees for many helpful comments and valuable suggestions on this paper.

Ethics declarations

Conflict of interest:

The authors declare that they have no conflict of interest.

Jiaqiang Wen was partially supported by National Natural Science Foundation of China (Grant Nos. 11871309, 11671229) and China Postdoctoral Science Foundation (Grant No. 2019M660968). Xun Li was partially supported by Research Grants Council of Hong Kong under Grants 15224215, 15255416 and 15213218. Jie Xiong was supported partially by Southern University of Science and Technology Start up fund Y01286120 and National Natural Science Foundation of China Grants 61873325 and 11831010.

Cite this article

Wen, J., Li, X. & Xiong, J. Weak Closed-Loop Solvability of Stochastic Linear Quadratic Optimal Control Problems of Markovian Regime Switching System. Appl Math Optim 84, 535–565 (2021). https://doi.org/10.1007/s00245-020-09653-8

Keywords

- Stochastic linear quadratic optimal control

- Markovian regime switching

- Riccati equation

- Open-loop solvability

- Weak closed-loop solvability