Abstract

We consider the nonlinear wave equation known as the \(\phi ^{6}\) model in dimension 1+1. We describe the long-time behavior of this model’s solutions close to a sum of two kinks with energy slightly larger than twice the minimum energy of non-constant stationary solutions. We prove orbital stability of two moving kinks. We show for low energy excess \(\epsilon \) that these solutions can be described for a long time of order \(-\ln {(\epsilon )}\epsilon ^{-\frac{1}{2}}\) as the sum of two moving kinks such that each kink’s center is close to an explicit function which is a solution of an ordinary differential system. We give an optimal estimate in the energy norm of the remainder and we prove that this estimate is attained at some finite time t of order \(-\ln {(\epsilon )}\epsilon ^{-\frac{1}{2}}.\)


1 Introduction

1.1 Background

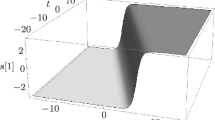

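We consider a nonlinear wave equation known as the \(\phi ^{6}\) model. For the potential function \(U(\phi )=\phi ^{2}(1-\phi ^{2})^{2}\) and \(\dot{U}(\phi )=2\phi -8\phi ^{3}+6\phi ^{5},\) the equation is written as

\[\partial _{t}^{2}\phi (t,x)-\partial _{x}^{2}\phi (t,x)+\dot{U}(\phi (t,x))=0,\quad (t,x)\in {\mathbb {R}}\times {\mathbb {R}}. \tag{1}\]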

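The potential energy \(E_{pot},\) the kinetic energy \(E_{kin}\) and total energy \(E_{total}\) associated to the Eq. (1) are given by

\[E_{pot}(\phi (t))=\frac{1}{2}\int _{{\mathbb {R}}}\partial _{x}\phi (t,x)^{2}\,dx+\int _{{\mathbb {R}}}U(\phi (t,x))\,dx,\quad E_{kin}(\phi (t))=\frac{1}{2}\int _{{\mathbb {R}}}\partial _{t}\phi (t,x)^{2}\,dx,\quad E_{total}(\phi (t),\partial _{t}\phi (t))=E_{pot}(\phi (t))+E_{kin}(\phi (t)).\]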

The vacuum set \({\mathcal {V}}\) of the potential function U is the set \(U^{{-1}}\{0\}=\{0,1,{-}1\}.\) We say that a solution \(\phi (t,x)\) of the integral equation associated to (1) is in the energy space if \(E_{total}(\phi ,\partial _{t}\phi )<+\infty .\) The solutions of (1) in the energy space have constant total energy \(E_{total}(\phi (t),\partial _{t}\phi (t)).\)
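As a quick sanity check of the formulas for \(U\), \(\dot{U}\) and the vacuum set, the following minimal sketch expands \(U\) as a polynomial with NumPy and compares it with the stated derivative. The variable names are illustrative only and are not part of the paper.

```python
import numpy as np
from numpy.polynomial import Polynomial

# U(phi) = phi^2 (1 - phi^2)^2, built from its factors.
phi = Polynomial([0.0, 1.0])
U = phi**2 * (1.0 - phi**2) ** 2
dU = U.deriv()

# Derivative stated in the text: 2 phi - 8 phi^3 + 6 phi^5
# (coefficients listed in increasing degree).
expected_dU = Polynomial([0.0, 2.0, 0.0, -8.0, 0.0, 6.0])
derivative_matches = bool(np.allclose(dU.coef, expected_dU.coef))

# The vacuum set {0, 1, -1}: U vanishes there and is positive elsewhere.
vacua = np.array([-1.0, 0.0, 1.0])
vanishes_on_vacua = bool(np.allclose(U(vacua), 0.0))

grid = np.linspace(-1.5, 1.5, 3001)
away_from_vacua = np.min(np.abs(grid[:, None] - vacua[None, :]), axis=1) > 5e-4
positive_off_vacua = bool(np.all(U(grid[away_from_vacua]) > 0.0))

print(derivative_matches, vanishes_on_vacua, positive_off_vacua)
```

The positivity check is only a grid test on \([-1.5,1.5]\); outside this interval \(U\) is increasing in \(\left|\phi \right|\), in line with \(\lim _{\phi \rightarrow \pm \infty }U(\phi )={+}\,\infty .\)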

From standard energy estimate techniques, the Cauchy Problem associated to (1) is locally well-posed in the energy space. Moreover, if \(E_{total}(\phi (0),\partial _{t}\phi (0))=E_{0}<{+}\,\infty ,\) then there exists \(M(E_{0})>0\) such that \(\left\Vert \phi (0,x)\right\Vert _{L^{\infty }({\mathbb {R}})}<M(E_{0}),\) otherwise the facts that \(U\in C^{\infty }({\mathbb {R}})\) and \(\lim _{\phi \rightarrow \pm \infty }U(\phi )={+}\,\infty \) would imply that \(\int _{{\mathbb {R}}}U(\phi (0,x))\,dx>E_{0}.\) Therefore, similarly to the proof of Theorem 6.1 from the book [27] of Shatah and Struwe, we can verify that the partial differential equation (1) is globally well-posed in the energy space since U is a Lipschitz function when restricted to the space of real functions \(\phi \) satisfying \(\left\Vert \phi \right\Vert _{L^{\infty }({\mathbb {R}})}<K_{0}\) for some positive number \(K_{0}.\)

The stationary solutions of (1) are the critical points of the potential energy. The unique constant solutions of (1) in the energy space are the functions \(\phi \equiv v,\) for any \(v\in {\mathcal {V}}.\) The only non-constant stationary solutions of (1) with finite total energy are the topological solitons called kinks and anti-kinks; for more details, see chapter 5 of [19]. Each topological soliton H connects different numbers \(v_{1},\,v_{2}\in {\mathcal {V}},\) more precisely,

\[\lim _{x\rightarrow -\infty }H(x)=v_{1},\quad \lim _{x\rightarrow +\infty }H(x)=v_{2}.\]

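The kinks of (1) are given by

\[H_{0,1}(x-a)=\frac{e^{\sqrt{2}(x-a)}}{\left( 1+e^{2\sqrt{2}(x-a)}\right) ^{\frac{1}{2}}},\quad H_{-1,0}(x-a)={-}H_{0,1}({-}x+a),\]

for any real a. The anti-kinks of (1) are given by \({-}H_{0,1}(x-a),\,H_{0,1}({-}x+a)\) for any \(a\in {\mathbb {R}}.\)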
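The kink can be checked numerically. The sketch below assumes the closed form \(H_{0,1}(x)=e^{\sqrt{2}x}(1+e^{2\sqrt{2}x})^{-\frac{1}{2}}\) and the normalization \(E_{pot}(\phi )=\frac{1}{2}\int (\partial _{x}\phi )^{2}\,dx+\int U(\phi )\,dx\); both are assumptions of this example, chosen to be consistent with the bounds (D1) to (D4) and the constants of Lemma 7. Under these assumptions the first-order relation \(\dot{H}_{0,1}=\sqrt{2}H_{0,1}(1-H_{0,1}^{2})\) gives \(E_{pot}(H_{0,1})=\sqrt{2}\int _{0}^{1}(h-h^{3})\,dh=\frac{\sqrt{2}}{4}.\)

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def U(phi):
    return phi**2 * (1.0 - phi**2) ** 2

def dU(phi):
    return 2.0 * phi - 8.0 * phi**3 + 6.0 * phi**5

def kink(x):
    # Assumed closed form of H_{0,1}, rewritten as (1 + e^{-2 sqrt(2) x})^{-1/2}.
    return 1.0 / np.sqrt(1.0 + np.exp(-2.0 * SQRT2 * x))

def kink_dx(x):
    # First-order relation H' = sqrt(2 U(H)) = sqrt(2) H (1 - H^2).
    h = kink(x)
    return SQRT2 * h * (1.0 - h**2)

def trapezoid(values, step):
    # Composite trapezoid rule on a uniform grid.
    return step * (values.sum() - 0.5 * (values[0] + values[-1]))

x = np.linspace(-30.0, 30.0, 60001)
step = x[1] - x[0]
H = kink(x)

# Stationary equation -H'' + U'(H) = 0, with H'' from centered differences.
H_xx = (H[2:] - 2.0 * H[1:-1] + H[:-2]) / step**2
residual = float(np.max(np.abs(-H_xx + dU(H[1:-1]))))

# Potential energy of one kink, to be compared with sqrt(2)/4.
energy = float(trapezoid(0.5 * kink_dx(x) ** 2 + U(H), step))

print(residual, energy, SQRT2 / 4.0)
```

The residual is limited by the second-order finite-difference error, so it is small but not at machine precision.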

In the article [21], for the \(\phi ^{6}\) model, Manton carried out approximate computations showing that the force between two static kinks is repulsive and the force between a kink and an anti-kink is attractive. Furthermore, the approximate computations in [21] also show that the force of interaction between two topological solitons of the \(\phi ^{6}\) model decays exponentially with the distance between the solitons.

The study of kink and multi-kink solutions of nonlinear wave equations has applications in many domains of mathematical physics. In particular, the model (1) that we study has applications in condensed matter physics [2] and cosmology [9, 12, 31].

It is well known that the set of solutions in energy space of (1) for any potential U is invariant under space translation, time translation, and space reflection. Moreover, if H is a stationary solution of (1) and \({-}1<v<1\), then the function

\[\phi (t,x)=H\left( \frac{x-vt}{\sqrt{1-v^{2}}}\right) ,\]

which is called the Lorentz transformation of H, is also a solution of the partial differential equation (1).
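The Lorentz invariance can be illustrated numerically. The sketch below assumes that (1) reads \(\partial _{t}^{2}\phi -\partial _{x}^{2}\phi +\dot{U}(\phi )=0,\) that the boosted profile is \(\phi (t,x)=H\big ((x-vt)/\sqrt{1-v^{2}}\big ),\) and that \(H=H_{0,1}\) has the closed form used in the code; the function names are hypothetical. The residual of the equation is evaluated with centered finite differences.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def dU(phi):
    return 2.0 * phi - 8.0 * phi**3 + 6.0 * phi**5

def kink(x):
    # Assumed closed form of the stationary kink H_{0,1}.
    return 1.0 / np.sqrt(1.0 + np.exp(-2.0 * SQRT2 * x))

def boosted_kink(t, x, v):
    # Lorentz transformation of the kink with speed v, |v| < 1.
    return kink((x - v * t) / np.sqrt(1.0 - v**2))

def wave_residual(t, x, v, h=1e-3):
    # Centered second differences approximate phi_tt and phi_xx.
    phi = boosted_kink(t, x, v)
    phi_tt = (boosted_kink(t + h, x, v) - 2.0 * phi + boosted_kink(t - h, x, v)) / h**2
    phi_xx = (boosted_kink(t, x + h, v) - 2.0 * phi + boosted_kink(t, x - h, v)) / h**2
    return phi_tt - phi_xx + dU(phi)

x = np.linspace(-20.0, 20.0, 4001)
max_residual = max(
    float(np.max(np.abs(wave_residual(0.7, x, v)))) for v in (-0.5, 0.0, 0.3, 0.8)
)
print(max_residual)
```

Analytically, \(\partial _{t}^{2}\phi -\partial _{x}^{2}\phi =\frac{v^{2}-1}{1-v^{2}}\ddot{H}=-\ddot{H},\) so the residual reduces to the stationary equation \(-\ddot{H}+\dot{U}(H)=0;\) the printed value only reflects discretization error.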

The problem of stability of multi-kinks is of great interest in mathematical physics, see for example [6, 8]. For the integrable model mKdV, Muñoz proved in [23] the \(H^{1}\) stability and asymptotic stability of multi-kinks. However, for many non-integrable models such as the \(\phi ^{6}\) nonlinear wave equation, the asymptotic and long-time dynamics of multi-kinks after the instant where the collision or interaction happens are still unknown, even though there are numerical studies of kink-kink collision for the \(\phi ^{6}\) model, see [8], which motivate our research on the topic of the description of long time behavior of a kink-kink pair.

For one-dimensional nonlinear wave equation models, stability results for a single kink are known. For example, asymptotic stability under odd perturbations of a single kink of the \(\phi ^{4}\) model was proved in [16], and the decay rate of this odd perturbation during a long time was studied in [5]. Also, in [17], Martel, Muñoz, Kowalczyk, and Van Den Bosch proved asymptotic stability of a single kink for a general class of nonlinear wave equations, including the model which we study here.

The main purpose of our paper is to describe the long time behavior of solutions \(\phi (t,x)\) of (1) in the energy space such that

with total energy equal to \(2E_{pot}(H_{0,1})+\epsilon ,\) for \(0<\epsilon \ll 1.\) More precisely, we prove orbital stability for a sum of two moving kinks with total energy \(2E_{pot}(H_{0,1})+\epsilon ,\) and we verify that the remainder satisfies a better estimate during a long time interval which tends to \({\mathbb {R}}\) as \(\epsilon \rightarrow 0;\) indeed, we prove that the estimate of the remainder during this long time interval is optimal. We also prove that the dynamics of the kinks’ movement is very close to two explicit functions \(d_{j}:{\mathbb {R}}\rightarrow {\mathbb {R}}\) defined in Theorem 4 during a long time interval. This result is important for understanding the behavior of two kinks after the instant of collision, which happens when the kinetic energy is minimal: our main results, Theorems 2 and 4, describe the dynamics of the kinks before and after the collision instant for a long time interval. The numerical study of the interaction and collision between kinks for the \(\phi ^{6}\) model was done in [8], where it was verified that the collision of kinks is close to an elastic collision when the speed of each kink is low and smaller than a critical speed \(v_{c}\).

For nonlinear wave equation models in dimension \(2+1,\) there are similar results obtained in the dynamics of topological multi-solitons. For the Higgs Model, there are results in the description of the dynamics of multi-vortices in [28] obtained by Stuart and in [11] obtained by Gustafson and Sigal. Indeed, we took inspiration from the proof and statement of Theorem 2 of [11] to construct our main results. Also, in [29], Stuart described the dynamics of monopole solutions for the Yang–Mills–Higgs equation. For more references, see also [7, 10, 20, 30].

In [1], Bethuel, Orlandi, and Smets described the asymptotic behavior of solutions of a parabolic Ginzburg–Landau equation close to multi-vortices at the initial instant. For more references, see also [14, 26].

There are also results on the dynamics of multi-vortices for nonlinear Schrödinger equations. For example, the description of the dynamics of multi-vortices for the Gross–Pitaevskii equation was obtained in [24] by Ovchinnikov and Sigal, and results on the dynamics of vortices for the Ginzburg–Landau–Schrödinger equations were proved in [4] by Colliander and Jerrard; see also [15] for more information about the Gross–Pitaevskii equation.

1.2 Main results

We recall that the objective of this paper is to show orbital stability for the solutions of the Eq. (1) which are close to a sum of two interacting kinks in an initial instant and estimate the size of the time interval where better stability properties hold. The main techniques of the proof are modulation techniques adapted from [13, 22, 25] and a refined energy estimate method to control the size of the remainder term.

Notation 1

For any \(D\subset {\mathbb {R}},\) any non-negative real function \(f:D\subset {\mathbb {R}}\rightarrow {\mathbb {R}},\) a real function g with domain D is in \(O\left( f(x)\right) \) if and only if there is a uniform constant \(C>0\) such that \(0\le \left| g(x)\right| \le C f(x).\) For two real non-negative functions \(f,g:D\subset {\mathbb {R}}\rightarrow {\mathbb {R}}_{\ge 0},\) we write \(f\lesssim g\) if there is a constant \(C>0\) such that \(f(x)\le C g(x)\) for all \(x\in D.\)

If \(f\lesssim g\) and \(g \lesssim f,\) we denote that \(f\cong g.\) We use the notation \((x)_{+}\,{:=}\,\max (x,0)\). If \(g(t,x) \in C^{1}({\mathbb {R}},L^{2}({\mathbb {R}}))\cap C({\mathbb {R}},H^{1}({\mathbb {R}})),\) then we define \(\overrightarrow{g(t)} \in H^{1}({\mathbb {R}})\times L^{2}({\mathbb {R}})\) by

and we also denote the energy norm of the remainder \(\overrightarrow{g(t)}\) as

to simplify our notation in the text, where the norms \(\left\Vert \cdot \right\Vert _{H^{1}},\,\left\Vert \cdot \right\Vert _{L^{2}}\) are defined, respectively,

for any \(f_{1}\in H^{1}({\mathbb {R}})\) and any \(f_{2}\in L^{2}({\mathbb {R}}).\) Finally, we consider the hyperbolic functions \({{\,\textrm{sech}\,}},\,\cosh :{\mathbb {R}}\rightarrow {\mathbb {R}}\) and we are going to use the following notations

Definition 1

We define S as the set \(g \in L^{\infty }({\mathbb {R}})\) such that

From the observations made about the local well-posedness of partial differential equation (1) in the energy space and, since \(1,\,{-}1\) are in \({\mathcal {V}},\) we have that (1) is also locally well-posed in the affine space \(S\times L^{2}({\mathbb {R}}).\) Motivated by the proof and computations that we are going to present, we also consider:

Definition 2

We define for \(x_{1},\,x_{2}\in {\mathbb {R}}\)

and we say that \(x_{2}\) is the kink center of \(H^{x_{2}}_{0,1}(x)\) and \(x_{1}\) is the kink center of \(H^{x_{1}}_{-1,0}(x).\)

There are also non-stationary solutions \((\phi (t,x),\partial _{t}\phi (t,x))\) of (1) with finite total energy \(E_{total}(\phi (t),\partial _{t}\phi (t))\) that satisfy for all \(t\in {\mathbb {R}}\)

However, for any \(a\in {\mathbb {R}},\) the kinks \(H_{0,1}(x-a)\) are the unique functions that minimize the potential energy in the set of functions \(\phi (x)\) satisfying condition (2). The proof of this fact follows from the Bogomolny identity, see [19] or section 2 of [13]. By similar reasoning, we can verify that all functions \(\phi (x)\in S\) have \(E_{pot}(\phi )>2E_{pot}(H_{0,1}).\)

Definition 3

We define the energy excess \(\epsilon \) of a solution \((\phi (t),\partial _{t}\phi (t))\in S\times L^{2}({\mathbb {R}})\) as the following value

We recall the notation \((x)_{+}\,{:=}\,\max (x,0)\). It’s not difficult to verify the following inequalities

(D1) \(\left|H_{0,1}(x)\right|\le e^{-\sqrt{2}(-x)_{+}},\)

(D2) \(\left|H_{-1,0}(x)\right|\le e^{-\sqrt{2}(x)_{+}},\)

(D3) \(\left|\dot{H}_{0,1}(x)\right|\le \sqrt{2}e^{-\sqrt{2}(-x)_{+}},\)

(D4) \(\left|\dot{H}_{-1,0}(x)\right|\le \sqrt{2}e^{-\sqrt{2}(x)_{+}}.\)
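The bounds (D1) to (D4) can be tested on a grid. This is a minimal sketch assuming the closed form \(H_{0,1}(x)=(1+e^{-2\sqrt{2}x})^{-\frac{1}{2}}\) and \(H_{-1,0}(x)={-}H_{0,1}({-}x);\) these formulas and all names in the code are assumptions of the example. A tiny multiplicative slack absorbs floating-point rounding in the tails, where the bounds are asymptotically sharp.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def kink(x):
    # Assumed closed form of H_{0,1}.
    return 1.0 / np.sqrt(1.0 + np.exp(-2.0 * SQRT2 * x))

def kink_dx(x):
    # H' = sqrt(2) H (1 - H^2) for the assumed kink profile.
    h = kink(x)
    return SQRT2 * h * (1.0 - h**2)

def positive_part(x):
    # (x)_+ = max(x, 0)
    return np.maximum(x, 0.0)

x = np.linspace(-15.0, 15.0, 3001)
slack = 1.0 + 1e-12  # rounding tolerance only, not part of the inequalities

h01, dh01 = kink(x), kink_dx(x)
hm10, dhm10 = -kink(-x), kink_dx(-x)  # H_{-1,0}(x) = -H_{0,1}(-x) and its derivative

bounds_hold = {
    "D1": bool(np.all(np.abs(h01) <= slack * np.exp(-SQRT2 * positive_part(-x)))),
    "D2": bool(np.all(np.abs(hm10) <= slack * np.exp(-SQRT2 * positive_part(x)))),
    "D3": bool(np.all(np.abs(dh01) <= slack * SQRT2 * np.exp(-SQRT2 * positive_part(-x)))),
    "D4": bool(np.all(np.abs(dhm10) <= slack * SQRT2 * np.exp(-SQRT2 * positive_part(x)))),
}
print(bounds_hold)
```

Under the assumed formulas the inequalities also follow by hand: \(H_{0,1}(x)\le \min (1,e^{\sqrt{2}x})\) and \(\dot{H}_{0,1}=\sqrt{2}H_{0,1}(1-H_{0,1}^{2})\le \sqrt{2}H_{0,1}.\)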

Moreover, since

we can verify by induction the following estimate

for all \(k\in {\mathbb {N}}{\setminus } \{0\}.\) The following result is crucial in the framework of this manuscript:

Lemma 1

(Modulation Lemma). There exist \(C_{0},\delta _{0}>0\), such that if \(0<\delta \le \delta _{0}\), \(x_{1},\,x_{2}\) are real numbers with \(x_{2}-x_{1}\ge \frac{1}{\delta }\) and \(g \in H^{1}({\mathbb {R}})\) satisfies \(\left\Vert g\right\Vert _{H^{1}}\le \delta \), then for \(\phi (x)=H_{-1,0}(x-x_{1})+H_{0,1}(x-x_{2})+g(x)\), there exist unique \(\, y_{1},\, y_{2}\) such that for

the four following statements are true

1. \(\langle g_{1},\, \partial _{x}H_{-1,0}(x-y_{1})\rangle _{L^{2}}=0,\)

2. \(\langle g_{1},\, \partial _{x}H_{0,1}(x-y_{2})\rangle _{L^{2}}=0,\)

3. \(\left\Vert g_{1}\right\Vert _{H^{1}}\le C_{0}\delta ,\)

4. \(\left|y_{2}-x_{2}\right|+\left|y_{1}-x_{1}\right|\le C_{0}\delta .\)

We will refer to the first and second statements as the orthogonality conditions of the Modulation Lemma.

Proof

The proof follows from the implicit function theorem for Banach spaces. \(\square \)

Now, our main results are the following:

Theorem 2

There exist \(C, \delta _{0}>0\), such that if \(\epsilon < \delta _{0}\) and

with \(E_{total}(\phi (0),\partial _{t}\phi (0))=2E_{pot}(H_{0,1})+\epsilon \), then there exist functions \(x_{1}, \,x_{2} \in C^{2}({\mathbb {R}})\) such that, for all \(t\in {\mathbb {R}},\) the unique global time solution \(\phi (t,x)\) of (1) is given by

with g(t) satisfying, for any \(t\in {\mathbb {R}},\) the orthogonality conditions of the Modulation Lemma and

Furthermore, we have that

Remark 1

In the notation of the statement of Theorem 2, for any \(\delta >0,\) there exists \(K(\delta )\in (0,1)\) such that if \(0<\epsilon <K(\delta )\) and \(E_{total}(\phi (0),\partial _{t}\phi (0))=2E_{pot}(H_{0,1})+\epsilon ,\) then we have that \(\left\Vert (g(0),\partial _{t}g(0))\right\Vert _{H^{1}\times L^{2}}<\delta \) and \(x_{2}(0)-x_{1}(0)>\frac{1}{\delta }.\) For the proof, see Lemma 21 and Corollary 22 in the Appendix Section A.

Theorem 3

In the notation of Theorem 2, there exist constants \(\delta ,\,\kappa >0\) such that if \(0<\epsilon <\delta ,\) then \(\frac{\epsilon }{\kappa +1}\le \left\Vert \overrightarrow{g(T)}\right\Vert \) for some \(T\in {\mathbb {R}}\) satisfying \(0\le T\le (\kappa +1)\frac{\ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}.\)

Proof

See the Appendix Section B. \(\square \)

Remark 2

Theorem 3 implies that estimate (7) is relevant in a time interval \(\left( {-}T,T\right) \) for a \(T>0\) of order \({-}\epsilon ^{{-}\frac{1}{2}}\ln {(\epsilon )}.\) More precisely, for any function \(r:{\mathbb {R}}_{+}\rightarrow {\mathbb {R}}_{+}\) with \(\lim _{h\rightarrow 0}r(h)=0,\) there is a positive value \(\delta (r)\) such that if \(0<\epsilon <\delta (r)\) and \(\left\Vert \overrightarrow{g(0)}\right\Vert \le r(\epsilon )\epsilon ,\) then \(\epsilon \lesssim \left\Vert \overrightarrow{g(t)}\right\Vert \) for some \(0<t=O\Big (\frac{\ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}\Big ).\)

Remark 3

Theorem 3 also implies the existence of a \(\delta _{0}>0\) such that if \(0<\epsilon <\delta _{0},\) then, for any \((\phi (0,x),\partial _{t}\phi (0,x))\in S\times L^{2}({\mathbb {R}})\) with \(E_{total}(\phi (0),\partial _{t}\phi (0))\) equal to \(2E_{pot}(H_{0,1})+\epsilon ,\) the function \(g(t,x)\) defined in identity (5) satisfies \(\epsilon \lesssim \limsup \limits _{t\rightarrow +\infty }\left\Vert \overrightarrow{g(t)}\right\Vert .\) Similarly, we have that \(\epsilon \lesssim \limsup \limits _{t\rightarrow -\infty }\left\Vert \overrightarrow{g(t)}\right\Vert .\)

Theorem 4

Let \(\phi \) satisfy the assumptions in Theorem 2 and \(x_{1},\, x_{2},\) and g be as in the conclusion of this theorem. Let the functions \(d_{1},\, d_{2}\) be defined for any \(t\in {\mathbb {R}}\) by

where \(a,\,b,\,c\in {\mathbb {R}}\) and \(v\in (0,1)\) are the unique real values satisfying \(d_{j}(0)=x_{j}(0),\,\dot{d}_{j}(0)=\dot{x}_{j}(0)\) for \(j\in \{1,\,2\}.\) Let \(d(t)=d_{2}(t)-d_{1}(t),\,z(t)=x_{2}(t)-x_{1}(t).\) Then, for all \(t\in {\mathbb {R}},\) we have

Furthermore, for any \(t\in {\mathbb {R}},\)

Remark 4

If \(\left\Vert \overrightarrow{g(0)}\right\Vert =O\left( \epsilon \right) ,\) then the estimates (10) and (11) imply that the functions \(x_{j}(t),\,\dot{x}_{j}(t)\) are very close to \(d_{j}(t),\,\dot{d}_{j}(t)\) during a time interval of order \({-}\ln {(\epsilon )}\epsilon ^{-\frac{1}{2}}.\)

Remark 5

The proof of Theorems 2 and 4 for \(t\le 0\) is analogous to the proof for \(t\ge 0,\) so we will only prove them for \(t\ge 0.\)

Theorem 4 describes the repulsive behavior of the kinks. More precisely, if the kinetic energy of the kinks and the energy norm of the remainder g are small enough in the initial instant \(t=0,\) then the kinks will move away with displacement \(z(t)\cong \epsilon ^{\frac{1}{2}}t+\ln {\frac{1}{\epsilon }}\) when \(t>0\) is big enough belonging to a large time interval.

Furthermore, using Theorem 4, we can also deduce the following corollary.

Corollary 5

With the same hypotheses as in Theorem 4, we have that

Proof of Corollary 5

It follows directly from Theorem 4 and from Lemma 19 presented in the Appendix Section A. \(\square \)

1.3 Outline of the proof

In this subsection, we present how the article is organized and explain briefly the content of each section.

Section 2. In this section, we prove orbital stability of a perturbation of a sum of two kinks. Moreover, we prove that if the initial data \((\phi (0,x),\partial _{t}\phi (0,x))\) satisfies the hypotheses of Theorem 2, then there are real functions \(x_{1},\,x_{2}\) of class \(C^{2}\) such that for all \(t\ge 0\)

First, for every \(z>0,\) we are going to demonstrate the following estimate

The proof of this inequality is similar to the demonstration of Lemma 2.7 of [13] and it follows using the Fundamental Theorem of Calculus.

The proof of the orbital stability will follow from studying the expression

using the fact that the kinks are critical points of \(E_{pot}\) and the spectral properties of the operator \(D^{2}E_{pot}\left( H_{0,1}\right) ,\) which is also non-negative. Moreover, from the modulation lemma, we will introduce the functions \(x_{2},\,x_{1}\) that will guarantee the following coercivity property

Therefore, the estimate above and (12) will imply that

From the orthogonality conditions of the Modulation Lemma and standard ordinary differential equation techniques, see Chapter 1 of [3], we also obtain uniform bounds for \(\left\Vert \dot{x}_{j}(t)\right\Vert _{L^{\infty }({\mathbb {R}})},\,\left\Vert \ddot{x}_{j}(t)\right\Vert _{L^{\infty }({\mathbb {R}})}\) for \(j\in \{1,\,2\}.\) More precisely, the modulation parameters \(x_{1}\) and \(x_{2}\) satisfy the following estimate

The main techniques of this section are an adaptation of sections 2 and 3 of [13].

Section 3. In this section, we study the long-time behavior of \(\dot{x}_{j}(t),\,x_{j}(t)\) for \(j\in \{1,\,2\}.\) More precisely, we prove that the parameters \(x_{1}\) and \(x_{2}\) satisfy the following system of differential inequalities

for \(j\in \{1,2\},\) where \(\alpha (t),\zeta (t)\) are non-negative functions depending only on the functions \(\left( x_{j}(t)\right) _{j\in \{1,2\}},\, \left( \dot{x}_{j}(t)\right) _{j\in \{1,2\}},\,\left\Vert \overrightarrow{g(t)}\right\Vert \) and satisfying

because of the estimates (13) and (14). However, the estimates (17) can be improved during a large time interval if we use the estimate (7) in place of \(\left\Vert \overrightarrow{g(t)}\right\Vert =O(\epsilon ^{\frac{1}{2}}).\)

Our proof of estimates (15), (16) is based on the proof of Lemma 3.5 from [13]. First, for each \(j\in \{1,2\},\) the estimate (15) is obtained from the time derivative of the equations

which are the orthogonality conditions of the Modulation Lemma. Indeed, we are going to obtain that

Next, we are going to construct a smooth cut-off function \(0\le \chi \le 1\) satisfying

where \(0<\gamma ,\,\theta <1\) are parameters that will be chosen later with the objective of minimizing the modulus of the time derivative of

from which with the second time derivative of the orthogonality conditions of Modulation Lemma and the partial differential equation (1), we will deduce the estimate (16) for \(j\in \{1,\,2\}.\)

Section 4. In Section 4, we introduce a function F(t) with the objective of controlling \(\left\Vert \overrightarrow{g(t)}\right\Vert \) for a long time interval. More precisely, we show that the function F(t) satisfies, for a constant \(K>0,\) the global estimate \(\left\Vert \overrightarrow{g(t)}\right\Vert ^{2}\lesssim F(t)+K\epsilon ^{2}\) and we show that \(\vert \dot{F}(t)\vert \) is small enough for a long time interval. We build this function starting from the quadratic part of the total energy of \(\phi (t),\) more precisely from

However, we obtain that the terms of worst decay that appear in the computation of \(\dot{D}(t)\) are of the form

where \(k\in \{1,2,3\}\) and the function J satisfies for some \(l\in {\mathbb {Q}}_{\ge 0}\) the following estimates

and

But, we can cancel these bad terms after we add to the function D(t) correction terms of the form

and now, in the time derivative of the sum of D(t) with these correction terms, we obtain an expression with a size of order \(\epsilon ^{l+\frac{1}{2}}\left\Vert \overrightarrow{g(t)}\right\Vert ^{k}\) which is much smaller than \(\epsilon ^{l}\left\Vert \overrightarrow{g(t)}\right\Vert ^{k}\) because of inequality (14) obtained in Section 2 of this manuscript. Next, we consider a smooth cut-off function \(0\le \omega \le 1\) satisfying

and \(\omega _{1}(t,x)=\omega \left( \frac{x-x_{1}(t)}{x_{2}(t)-x_{1}(t)}\right) .\) Based on the argument in the proof of Lemma 4.2 of [13], we add a last correction term

whose time derivative will cancel with the term

which comes from \(\dot{D}(t),\) since we cannot remove this expression using the correction terms similar to (19). Finally, we evaluate the time derivative of the function F(t) obtained from the sum D(t) with all the correction terms described above.

Remaining Sections. In the remaining part of this paper, we prove our main results. The estimate (7) of Theorem 2 is a consequence of the energy estimate obtained in Section 4 and of the high-precision estimates of the modulation parameters \(x_{1}(t),\,x_{2}(t)\) obtained in Section 5. In Section 5, we prove Theorem 4 by studying how the precision of the modulation parameter estimates evolves, comparing the parameters with a solution of a system of ordinary differential equations. Complementary information is given in Appendix Section A and the proof of Theorem 3 is in the Appendix Section B.

2 Global Stability of Two Moving Kinks

Before the presentation of the proofs of the main theorems, we define a function to study the potential energy of a sum of two kinks.

Definition 4

The function \(A:{\mathbb {R}}_{+}\rightarrow {\mathbb {R}}\) is defined by

The study of the function A is essential to obtain global control of the norm of the remainder g and the lower bound of \(x_{2}(t)-x_{1}(t)\) in Theorem 2.

Remark 6

It is easy to verify that \( E_{pot}(H_{0,1}(x-x_{2})+H_{-1,0}(x-x_{1}))=E_{pot}(H_{0,1}(x-(x_{2}-x_{1}))+H_{-1,0}(x)). \)

We will use several times the following elementary estimate from the Lemma 2.5 of [13] given by:

Lemma 6

For any real numbers \(x_{2},x_{1}\), such that \(x_{2}-x_{1}>0\) and \(\alpha ,\,\beta >0\) with \(\alpha \ne \beta \) the following bound holds:

For any \(\alpha >0\), the following bound holds

The main result of this section is the following

Lemma 7

The function A is of class \(C^{2}\) and there is a constant \(C>0\), such that

1. \(\left|{\ddot{A}}(z)-4\sqrt{2} e^{-\sqrt{2}z}\right|\le C(z+1)e^{-2\sqrt{2}z},\)

2. \(\left|\dot{A}(z)+4e^{-\sqrt{2}z}\right|\le C(z+1)e^{-2\sqrt{2}z},\)

3. \(\left|A(z)-2E_{pot}(H_{0,1})-2\sqrt{2}e^{-\sqrt{2}z}\right|\le C(z+1)e^{-2\sqrt{2}z}.\)
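The third item of Lemma 7 can be explored numerically. The sketch below assumes \(A(z)=E_{pot}(H_{0,1}(x-z)+H_{-1,0}(x))\) (the reading suggested by Remark 6), the normalization \(E_{pot}(\phi )=\frac{1}{2}\int (\partial _{x}\phi )^{2}\,dx+\int U(\phi )\,dx,\) the closed-form kink used in the code, and the resulting value \(2E_{pot}(H_{0,1})=\frac{\sqrt{2}}{2};\) all of these are assumptions of the example rather than statements taken from the text. It compares the energy excess \(A(z)-2E_{pot}(H_{0,1})\) with the leading term \(2\sqrt{2}e^{-\sqrt{2}z}.\)

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def U(phi):
    return phi**2 * (1.0 - phi**2) ** 2

def kink(x):
    # Assumed closed form of H_{0,1}.
    return 1.0 / np.sqrt(1.0 + np.exp(-2.0 * SQRT2 * x))

def kink_dx(x):
    h = kink(x)
    return SQRT2 * h * (1.0 - h**2)

def trapezoid(values, step):
    # Composite trapezoid rule on a uniform grid.
    return step * (values.sum() - 0.5 * (values[0] + values[-1]))

x = np.linspace(-40.0, 50.0, 90001)
step = x[1] - x[0]

def A(z):
    # Potential energy of H_{0,1}(x - z) + H_{-1,0}(x), with H_{-1,0}(x) = -H_{0,1}(-x).
    phi = kink(x - z) - kink(-x)
    phi_x = kink_dx(x - z) + kink_dx(-x)
    return float(trapezoid(0.5 * phi_x**2 + U(phi), step))

two_kink_energy = SQRT2 / 2.0  # 2 * E_pot(H_{0,1}) under the assumptions above

ratios = {}
for z in (6.0, 8.0, 10.0):
    excess = A(z) - two_kink_energy
    leading = 2.0 * SQRT2 * np.exp(-SQRT2 * z)
    ratios[z] = excess / leading  # should approach 1 as z grows

print(ratios)
```

Since the lemma bounds the error by \(C(z+1)e^{-2\sqrt{2}z},\) the relative error of the leading term is of order \((z+1)e^{-\sqrt{2}z},\) so the ratios are expected to approach 1 quickly as z increases.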

Proof

By the definition of A, it’s clear that

Since the functions U and \(H_{0,1}\) are smooth and \(\partial _{x}H_{0,1}(x)\) has exponential decay when \(\vert x\vert \rightarrow +\infty \), it is possible to differentiate A(z) in z. More precisely, we obtain

For similar reasons, it is also possible to differentiate A(z) twice. More precisely, we obtain

Then, integrating by parts, we obtain

Now, we consider the function

Then, we have

Also, it is not difficult to verify the following identity

So, the identities (25) and (24) imply the following inequality

Since U is smooth and \(\left\Vert H_{0,1}\right\Vert _{L^{\infty }}=1\), we have that there is a constant \(C>0\) such that

Now, applying the inequalities (D1) to (D4) and Lemma 6 to inequality (26), we obtain that there exists a constant \(C_{1}\) independent of z such that

Also, it is not difficult to verify that the estimate

and the identity (23) imply the inequality

Since we have the following identity and estimate from Lemma 6

we obtain, then:

which clearly implies with (27) the inequality

Also, we have the identity

for the proof see the end of Appendix A. Since we have the identity \({\ddot{U}}(0)-{\ddot{U}}(\phi )=24 \phi ^{2}-30 \phi ^{4}\), by integration by parts, we obtain

In conclusion, inequality (32) is equivalent to \(\left|{\ddot{A}}(z)-4\sqrt{2}e^{-\sqrt{2}z}\right|\lesssim (z+1) e^{-2\sqrt{2}z}.\)

The identities

and Lemma 6 imply the following estimate for \(z>0\)

so \(\lim _{\vert z\vert \rightarrow +\infty } \left|\dot{A}(z)\right|=0\). In conclusion, integrating inequality \(\left|\ddot{A}(z)-4\sqrt{2}e^{-\sqrt{2}z}\right|\lesssim (z+1) e^{-2\sqrt{2}z}\) from z to \(+\infty \) we obtain the second result of the lemma

Finally, from the fact that \(\lim _{z\rightarrow +\infty } E_{pot}(H_{-1,0}+H^{z}_{0,1}(x))=2E_{pot}(H_{0,1})\), we obtain the last estimate integrating inequality (34) from z to \(+\infty \), which is

\(\square \)

It is not difficult to verify that the Fréchet derivative of \(E_{pot}\) as a linear functional from \(H^{1}({\mathbb {R}})\) to \({\mathbb {R}}\) is given by

Also, for any \(v,\, w \in H^{1}({\mathbb {R}}),\) it is not difficult to verify that

Moreover, the operator \(D^{2}E_{pot}\left( H_{0,1}\right) : H^{2}({\mathbb {R}})\subset L^{2}({\mathbb {R}})\rightarrow L^{2}({\mathbb {R}})\) satisfies the following property.

Lemma 8

The operator \(D^{2}E_{pot}\left( H_{0,1}\right) \) satisfies:

for a constant \(c>0\) and any \(g\in H^{1}({\mathbb {R}}).\)

Proof

See Proposition 2.2 from [13], see also [18]. \(\square \)

Lemma 9

(Coercivity Lemma). There exist \(C, c,\,\delta >0,\) such that if \(x_{2}-x_{1}\ge \frac{1}{\delta }\), then for any \(g \in H^{1}({\mathbb {R}})\) we have

Proof of Coercivity Lemma

The proof of this Lemma is analogous to the proof of Lemma 2.4 in [13]. \(\square \)

Lemma 10

There is a constant \(C_{2}\), such that if \(x_{2}-x_{1}>0\), then

Proof

By the definition of the potential energy, the equation (3), and the exponential decay of the two kinks functions, we have that

as a bounded linear operator from \(L^{2}({\mathbb {R}})\) to \({\mathbb {C}}\). So, we have that

and, then, the conclusion follows directly from Lemma 6, (D1) and (D2). \(\square \)

Theorem 11

(Orbital Stability of a sum of two moving kinks). There exists \(\delta _{0}>0\) such that if the solution \(\phi \) of (1) satisfies \((\phi (0),\partial _{t}\phi (0))\in S\times L^{2}({\mathbb {R}})\) and the energy excess \(\epsilon =E_{total}(\phi )-2E_{pot}(H_{0,1})\) is smaller than \(\delta _{0}\), then there exist \(x_{1},\, x_{2}:{\mathbb {R}}\rightarrow {\mathbb {R}}\) functions of class \(C^{2}\), such that for all \(t \in {\mathbb {R}}\) denoting \(g(t)=\phi (t)-H_{0,1}(x-x_{2}(t))-H_{-1,0}(x-x_{1}(t))\) and \(z(t)=x_{2}(t)-x_{1}(t)\), we have:

1. \(\left\Vert g(t)\right\Vert _{H^{1}}=O(\epsilon ^{\frac{1}{2}}),\)

2. \(z(t)\ge \frac{1}{\sqrt{2}}\left[ \ln {\frac{1}{\epsilon }}+\ln {2}\right] ,\)

3. \(\left\Vert \partial _{t}\phi (t)\right\Vert _{L^{2}}^{2}\le 2\epsilon ,\)

4. \(\max _{j \in \{1,2\}}\left|\dot{x}_{j}(t)\right|^{2}+\max _{j \in \{1,2\}}\left|\ddot{x}_{j}(t)\right|=O(\epsilon ).\)
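The second item is equivalent to the exponential bound \(e^{-\sqrt{2}z(t)}\le \frac{\epsilon }{2},\) which is the form in which the separation of the kinks enters the estimates. The following short sketch works out this arithmetic for a few sample values of \(\epsilon ;\) the function names are illustrative only.

```python
import math

def separation_lower_bound(eps):
    # Right-hand side of item 2: (ln(1/eps) + ln 2) / sqrt(2).
    return (math.log(1.0 / eps) + math.log(2.0)) / math.sqrt(2.0)

def tail_at_lower_bound(eps):
    # e^{-sqrt(2) z} evaluated at the smallest separation allowed by item 2.
    return math.exp(-math.sqrt(2.0) * separation_lower_bound(eps))

for eps in (1e-2, 1e-4, 1e-6):
    z_min = separation_lower_bound(eps)
    print(f"eps={eps:.0e}  z_min={z_min:.4f}  exp(-sqrt(2) z_min)={tail_at_lower_bound(eps):.3e}")
```

Since \(z\mapsto e^{-\sqrt{2}z}\) is decreasing, any separation above the lower bound gives \(e^{-\sqrt{2}z(t)}\le \frac{\epsilon }{2}=O(\epsilon ),\) and the minimal separation grows only logarithmically as \(\epsilon \rightarrow 0.\)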

Proof

First, from the fact that \(E_{total}(\phi (x))>2E_{pot}(H_{0,1}),\) we deduce, from the conservation of total energy, the estimate

From Remark 1, we can assume if \(\epsilon \ll 1\) that there exist \(w_{1},\,w_{2} \in {\mathbb {R}}\) such that

and

for a small constant \(\delta >0\). Since the Eq. (1) is locally well-posed in the space \(S\times L^{2}({\mathbb {R}}),\) we conclude that there is a \(\delta _{1}>0\) depending only on \(\delta \) and \(\epsilon \) such that if \(-\delta _{1} \le t \le \delta _{1},\) then

If \(\delta ,\epsilon >0\) are small enough, then, from the inequality (40) and the Modulation Lemma, we obtain in the time interval \([-\delta _{1},\delta _{1}]\) the existence of modulation parameters \(x_{1}(t),\,x_{2}(t)\) such that for

we have

From now on, we denote \(z(t)=x_{2}(t)-x_{1}(t).\) From the conservation of the total energy, we have for \(-\delta _{1} \le t \le \delta _{1}\) that

From Lemma 7 and (42), the above identity implies that

for any \(t\in [-\delta _{1},\delta _{1}].\) From (38), it is not difficult to verify that \(\left|\langle DE_{pot}(H^{x_{2}(t)}_{0,1}+H^{x_{1}(t)}_{-1,0}),\,g(t)\rangle _{L^{2}({\mathbb {R}})}\right|\le C_{2}e^{-\sqrt{2}z(t)}\left\Vert g(t)\right\Vert _{H^{1}({\mathbb {R}})}.\) So, the Eq. (43) and the Coercivity Lemma imply, while \(-\delta _{1}\le t\le \delta _{1}\), the following inequality

Finally, applying the Young inequality in the term \(C_{2}e^{-\sqrt{2}z(t)}\left\Vert g(t)\right\Vert _{H^{1}({\mathbb {R}})}\), we obtain that the inequality (44) can be rewritten in the form

Then, the estimates (45), (42) imply for \(\delta >0\) small enough the following inequality

So, the inequality (46) implies the estimates

for \(t \in [-\delta _{1},\delta _{1}]\). In conclusion, if \(\frac{1}{\delta }\lesssim \ln {(\frac{1}{\epsilon })}^{\frac{1}{2}},\) we can conclude by a bootstrap argument that the inequalities (39), (47) are true for all \(t \in {\mathbb {R}}.\) More precisely, we study the set

and prove that \(M=\sup _{b \in C}b=+\infty .\) We have already checked that C is not empty, and C is closed by its definition. Now, from the previous argument, we can verify that C is open. So, by connectedness, we obtain that \(C={\mathbb {R}}_{>0}.\)

In conclusion, it remains to prove that the modulation parameters \(x_{1}(t),\,x_{2}(t)\) are of class \(C^{2}\) and that the fourth item of the statement of Theorem 11 is true.

(Proof of the \(C^{2}\) regularity of \(x_{1},\, x_{2},\) and of the fourth item.)

For \(\delta _{0}>0\) small enough, we denote \((y_{1}(t),\, y_{2}(t))\) to be the solution of the following system of ordinary differential equations, with the function \(g_{1}(t)=\phi (t,x)-H^{y_{2}(t)}_{0,1}(x)-H^{y_{1}(t)}_{-1,0}(x)\),

with initial condition \((y_{2}(0),y_{1}(0))=(x_{2}(0),x_{1}(0))\). This system of ordinary differential equations is motivated by the time derivative of the orthogonality conditions of the Modulation Lemma.

Since we have the estimate \(\ln {(\frac{1}{\epsilon })}\lesssim x_{2}(0)-x_{1}(0)\) and \(g_{1}(0)=g(0),\) Lemma 6 and the inequalities in (47) imply that the matrix

is positive, so we have from Picard–Lindelöf Theorem that \(y_{2}(t),\,y_{1}(t)\) are of class \(C^{1}\) for some interval \([-\delta ,\delta ],\) with \(\delta >0\) depending on \(\left|x_{2}(0)-x_{1}(0)\right|\) and \(\epsilon .\) From the fact that \((y_{2}(0),y_{1}(0))=(x_{2}(0),x_{1}(0)),\) we obtain, from the Eqs. (48) and (49), that \((y_{2}(t),y_{1}(t))\) also satisfies the orthogonality conditions of Modulation Lemma for \(t \in [-\delta ,\delta ].\) In conclusion, the uniqueness of Modulation Lemma implies that \((y_{2}(t),y_{1}(t))=(x_{2}(t),x_{1}(t))\) for \(t \in [-\delta ,\delta ].\) From this argument, we also have for \(t \in [-\delta ,\delta ]\) that \(e^{-\sqrt{2}(y_{2}(t)-y_{1}(t))}\le \frac{\epsilon }{2\sqrt{2}}.\) By bootstrap, we can show, repeating the argument above, that

Also, the argument above implies that if \((y_{1}(t),y_{2}(t))=(x_{1}(t),x_{2}(t))\) in an instant t, then \(y_{1},\,y_{2}\) are of class \(C^{1}\) in a neighborhood of t. In conclusion, \(x_{1}, \, x_{2}\) are functions in \(C^{1}({\mathbb {R}}).\) Finally, since \(\left\Vert g(t)\right\Vert _{H^{1}}=O(\epsilon ^{\frac{1}{2}})\) and \(e^{-\sqrt{2}z(t)}=O(\epsilon ),\) the following matrix

is uniformly positive for all \(t \in {\mathbb {R}}.\) So, from the estimate \(\left\Vert \partial _{t}\phi (t)\right\Vert _{L^{2}({\mathbb {R}})}=O(\epsilon ^{\frac{1}{2}}),\) the identities \(x_{j}(t)=y_{j}(t)\) for \(j=1,2\) and the Eqs. (48) and (49), we obtain

Since the matrix M(t) is invertible for any \(t\in {\mathbb {R}},\) we can obtain from the Eqs. (48), (49) that the functions \(\dot{x}_{1}(t),\dot{x}_{2}(t)\) are given by

Now, since we have that \((\phi (t),\partial _{t}\phi (t))\in C({\mathbb {R}},S\times L^{2}({\mathbb {R}}))\) and \(x_{1}(t),\,x_{2}(t)\) are of class \(C^{1},\) we can deduce that \((g(t),\partial _{t}g(t))\in C({\mathbb {R}},H^{1}({\mathbb {R}})\times L^{2}({\mathbb {R}})).\) So, by definition, we can verify that \(M(t) \in C^{1}({\mathbb {R}},{\mathbb {R}}^{4}).\)

Also, since \(\phi (t,x)\) is the solution in distributional sense of (1), we have that for any \(y_{1},\,y_{2} \in {\mathbb {R}}\) the following identities hold

Since (1) is locally well-posed in \(S\times L^{2}({\mathbb {R}}),\) we obtain from the identities above that the following functions \(h(t,y)\,{:=}\,\big \langle \partial _{x}H^{y}_{0,1},\,\partial ^{2}_{t}\phi (t)\big \rangle _{L^{2}}\) and \(l(t,y)\,{:=}\,\big \langle \partial _{x}H^{y}_{-1,0},\,\partial ^{2}_{t}\phi (t)\big \rangle _{L^{2}}\) are continuous in the domain \({\mathbb {R}}\times {\mathbb {R}}.\)

So, from the continuity of the functions \(h(t,y),\,l(t,y)\) and from the fact that \(x_{1},\,x_{2}\in C^{1}({\mathbb {R}}),\) we obtain that the functions

are of class \(C^{1}.\) In conclusion, from the Eq. (54), by chain rule and product rule, we verify that \(x_{1},\,x_{2}\) are in \(C^{2}({\mathbb {R}}).\)

Now, since \(x_{1},\,x_{2} \in C^{2}({\mathbb {R}})\) and \(\dot{x}_{1},\,\dot{x}_{2}\) satisfy (54), we deduce, after differentiating in time the function

the following equations

Also, from the identity \(g(t)=\phi (t)-H^{x_{1}(t)}_{-1,0}-H^{x_{2}(t)}_{0,1},\) we obtain that \(\partial _{t}g(t)=\partial _{t}\phi (t,x)+\dot{x}_{1}(t)\partial _{x}H^{x_{1}(t)}_{-1,0}+\dot{x}_{2}(t)\partial _{x}H^{x_{2}(t)}_{0,1},\) so, from the estimates (39) and (53), we obtain that

Now, since \(\phi (t)\) is a distributional solution of (1), we also have, from the global equality \(\phi (t)=H^{x_{1}(t)}_{-1,0}+H^{x_{2}(t)}_{0,1}+g(t),\) the following identity

Since \(\partial _{x}H^{x_{1}(t)}_{-1,0} \in \ker \left( D^{2}E_{pot}\left( H^{x_{1}(t)}_{-1,0}\right) \right) \), we have by integration by parts that \(\left\langle \partial _{x}H^{x_{1}(t)}_{-1,0},\,\partial ^{2}_{x}g(t)-\ddot{U}\left( H^{x_{1}(t)}_{-1,0}\right) g(t)\right\rangle _{L^{2}}=0.\) Since we have

Lemma 6 implies \(\left\langle \partial _{x}H^{x_{1}(t)}_{-1,0},\,\dot{U}\left( H^{x_{1}(t)}_{-1,0}\right) +\dot{U}\left( H^{x_{2}(t)}_{0,1}\right) -\dot{U}\left( H^{x_{1}(t)}_{-1,0}+H^{x_{2}(t)}_{0,1}\right) \right\rangle _{L^{2}}=O\Big (e^{-\sqrt{2}(z(t))}\Big ).\) Also, from Taylor’s Expansion Theorem, we have the estimate

From Lemma 6, the fact that U is a smooth function and \(H_{0,1}\in L^{\infty }({\mathbb {R}})\), we can obtain

In conclusion, we have

and by similar arguments, we have

Also, the Eqs. (55) and (56) form a linear system in the unknowns \(\ddot{x}_{1}(t),\,\ddot{x}_{2}(t).\) Recalling that the matrix M(t) is uniformly positive, we obtain from the estimates (47), (53), (57), (59) and (60) that

\(\square \)

Theorem 11 can also be improved when the kinetic energy of the solution is included in the computation and additional conditions are imposed. More precisely:

Theorem 12

There exist \(C,\,c,\,\delta _{0}>0,\) such that if \(0<\epsilon \le \delta _{0},\,(\phi (0,x),\partial _{t}\phi (0,x))\in S\times L^{2}({\mathbb {R}})\) and \(E_{total}((\phi (0,x),\partial _{t}\phi (0,x)))=2E_{pot}(H_{0,1})+\epsilon ,\) then there are \(x_{2},\,x_{1}\in C^{2}({\mathbb {R}})\) such that \(g(t,x)=\phi (t,x)-H^{x_{2}(t)}_{0,1}(x)-H^{x_{1}(t)}_{-1,0}(x)\) satisfies

and, for all \(t\in {\mathbb {R}},\)

Proof

From Modulation Lemma and Theorem 11, we can rewrite the solution \(\phi (t)\) in the form

with \(x_{1}(t),\,x_{2}(t),\,g(t)\) satisfying the conclusion of Theorem 11. First, we denote

then we apply Taylor’s Expansion Theorem in \(E(\phi (t))\) around \(\phi _{\sigma }(t).\) More precisely, for \(R_{\sigma }(t)\) the residue of quadratic order of Taylor’s Expansion of \(E(\phi (t),\partial _{t}\phi (t))\) around \(\phi _{\sigma }(t)\), we have:

such that for \((\nu _{1},\nu _{2}) \in S\times L^{2}({\mathbb {R}})\) and \((v_{1},v_{2})\in H^{1}({\mathbb {R}})\times L^{2}({\mathbb {R}})\), we have the identities

with \(D^{2}E_{total}(\nu _{1},\nu _{2})\) defined as a linear operator from \(H^{2}({\mathbb {R}})\times L^{2}({\mathbb {R}})\) to \(L^{2}({\mathbb {R}}).\)

So, from identities (65) and (66), it is not difficult to verify that

and, so,

Also, we have

The orthogonality conditions satisfied by g(t) also imply for all \(t\in {\mathbb {R}}\) that

So, the inequality (38) and the identities (68), (69), (70) imply that

From the Coercivity Lemma and the definition of \(D^{2}E_{total}(\phi _{\sigma }(t))\), we have that

Finally, there is the identity

From Lemma 6, we have that \(\left|\langle \partial _{x}H^{z}_{0,1},\,\partial _{x}H_{-1,0}\rangle _{L^{2}}\right|=O\big (ze^{-\sqrt{2}z}\big )\) for z big enough. Then, it is not difficult to verify that Lemma 7, (67), (71), (72) and (73) imply directly the statement of the Theorem 12 which finishes the proof. \(\square \)
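The size \(O\big (ze^{-\sqrt{2}z}\big )\) of the interaction term quoted from Lemma 6 can be illustrated numerically. The following is only a sketch: it assumes the explicit kink \(H_{0,1}(x)=e^{\sqrt{2}x}(1+e^{2\sqrt{2}x})^{-\frac{1}{2}}\) and the symmetry \(H_{-1,0}(x)=-H_{0,1}(-x),\) neither of which is restated in this section, and the function names, the integration window and the sample values of z are hypothetical choices made for illustration.

```python
import math

SQRT2 = math.sqrt(2.0)


def d_kink(y):
    """x-derivative of the assumed kink H_{0,1}(y) = e^{sqrt2 y} / sqrt(1 + e^{2 sqrt2 y})."""
    # Both branches equal sqrt2 * e^{sqrt2 y} * (1 + e^{2 sqrt2 y})^(-3/2);
    # they are split only to avoid overflow for large |y|.
    if y >= 0.0:
        e = math.exp(-2.0 * SQRT2 * y)
        return SQRT2 * e / (1.0 + e) ** 1.5
    return SQRT2 * math.exp(SQRT2 * y) / (1.0 + math.exp(2.0 * SQRT2 * y)) ** 1.5


def interaction(z, pad=15.0, n=4000):
    """Composite Simpson approximation of <d_x H_{0,1}(. - z), d_x H_{-1,0}>_{L^2}."""
    # Assumption: H_{-1,0}(x) = -H_{0,1}(-x), hence d_x H_{-1,0}(x) = d_kink(-x).
    a, b = -pad, z + pad
    h = (b - a) / n  # n must be even for Simpson's rule
    total = 0.0
    for i in range(n + 1):
        x = a + i * h
        weight = 1.0 if i in (0, n) else (4.0 if i % 2 else 2.0)
        total += weight * d_kink(x - z) * d_kink(-x)
    return total * h / 3.0


def ratio(z):
    """Interaction divided by the claimed size z * e^{-sqrt2 z}."""
    return interaction(z) / (z * math.exp(-SQRT2 * z))


if __name__ == "__main__":
    for z in (6.0, 8.0, 10.0, 12.0):
        print(z, ratio(z))
```

If the ratio printed for increasing z stays bounded, this is consistent with the stated order of the interaction; the check is numerical evidence under the assumptions above and does not replace Lemma 6.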

Remark 7

Theorem 12 implies that it is possible to have a solution \(\phi \) of the Eq. (1) with energy excess \(\epsilon >0\) small enough to satisfy all the hypotheses of Theorem 2. More precisely, in notation of Theorem 2, if \(\left\Vert (g(0,x),\partial _{t}g(0,x))\right\Vert _{H^{1}\times L^{2}}\ll \epsilon ^{\frac{1}{2}}\) and

then we would have that \(E_{total}(\phi (0),\partial _{t}\phi (0))-2E_{pot}(H_{0,1})\cong \epsilon .\)

3 Long Time Behavior of Modulation Parameters

Even though Theorem 11 implies the orbital stability of a sum of two kinks with low energy excess, this theorem does not explain the movement of the kinks’ centers \(x_{2}(t),\,x_{1}(t)\) and their speed for a long time. More precisely, we still don’t know if there is an explicit smooth real function d(t), such that \((z(t),\dot{z}(t))\) is close to \((d(t),\dot{d}(t))\) in a large time interval.

However, the global estimates on the modulus of the first and second derivatives of \(x_{1}(t),\,x_{2}(t)\) obtained in Theorem 11 will be very useful for estimating the functions \(x_{1}(t),\,x_{2}(t)\) with high precision during a very large time interval. We first need the following auxiliary lemma.

Lemma 13

Let \(0<\theta ,\,\gamma <1.\) We recall the function

for any \(z >0.\) We assume all the hypotheses of Theorem 11 and let \(\chi (x)\) be a smooth function satisfying

and \(0\le \chi (x)\le 1\) for all \(x\in {\mathbb {R}}.\) In notation of Theorem 11, we denote

and \(\left\Vert \overrightarrow{g(t)}\right\Vert =\left\Vert (g(t),\partial _{t}g(t))\right\Vert _{H^{1}({\mathbb {R}})\times L^{2}({\mathbb {R}})},\)

Then, for \(\theta =\frac{1-\gamma }{2-\gamma }\) and the correction terms

we have the following estimates, for \(j \in \{1,2\},\)

Remark 8

We will take \(\gamma =\frac{\ln {\ln {(\frac{1}{\epsilon })}}}{\ln {(\frac{1}{\epsilon })}}.\) With this value of \(\gamma \) and the estimates of Theorem 11, we will see in Lemma 16 that \(\exists C>0\) such that

Proof

For \(\gamma >0\) small enough and from the definition of \(\chi (x),\) it is not difficult to verify that

We will only do the proof of the estimates (76) and (77) for \(j=1,\) the proof for the case \(j=2\) is completely analogous. From the proof of Theorem 11, we know that \(\dot{x}_{1}(t),\,\dot{x}_{2}(t)\) solve the linear system

where M(t) is the matrix defined by (52). Then, from Cramer’s rule, we obtain that

Using the definition (52) of the matrix M(t), \(\left\Vert \overrightarrow{g(t)}\right\Vert =O(\epsilon ^{\frac{1}{2}})\) and Lemma 6 which implies the following estimate

we obtain that

So, from the estimate (81) and the identity (79), we obtain that

Finally, from the definition of g(t, x) in Theorem 11 we know that

from the Modulation Lemma, we also have verified that

and from Theorem 11 we have that \(\left\Vert \overrightarrow{g(t)}\right\Vert +\max _{j \in \{1,2\}}\left|\dot{x}_{j}(t)\right|\ll 1.\) In conclusion, we can rewrite the estimate (82) as

By similar reasoning, we can also deduce that

Following the reasoning of Lemma 3.5 of [13], we will use the terms \(p_{1}(t),\,p_{2}(t)\) with the objective of obtaining the estimates (77), which have high precision and will be useful later to approximate \(x_{j}(t),\,\dot{x}_{j}(t)\) by explicit smooth functions during a long time interval.

First, it is not difficult to verify that

which clearly implies with estimate (83) the inequality (76) for \(j=1.\) The proof of inequality (76) for \(j=2\) is completely analogous.

Now, the proof of the inequality (77) is similar to the proof of the second inequality of Lemma 3.5 of [13]. First, we have

and we will estimate each term one by one. More precisely, from now on, we will work with a general cut-off function \(\chi (x),\) that is a smooth function \(0\le \chi \le 1\) satisfying

with \(0<\theta ,\,\gamma <1\) and

The reason for this notation is to improve the precision of the estimate of \(\dot{p}_{1}(t)\) by searching for the values of \(\gamma ,\,\theta \) which minimize \(\alpha (t)\).

Step 1. (Estimate of I) We will only use the identity \( I=\dot{x}_{1}(t)\frac{\big \langle \partial _{t}\phi (t),\,\partial ^{2}_{x}H^{x_{1}(t)}_{-1,0}\big \rangle _{L^{2}}}{\left\Vert \partial _{x}H_{0,1}\right\Vert _{L^{2}}^{2}}.\)

Step 2. (Estimate of II.) We have, by chain rule and definition of \(\chi _{0},\) that

So, we obtain that

First, since the support of \({\dot{\chi }}\) is contained in \([\theta (1-\gamma ),\,\theta ],\) from the estimates (D3) and (D4) we obtain that

Now, we recall the identity \( \partial _{t}\phi (t,x)=-\dot{x}_{1}(t)\partial _{x}H^{x_{1}(t)}_{-1,0}-\dot{x}_{2}(t)\partial _{x}H^{x_{2}(t)}_{0,1}+\partial _{t}g(t),\) by using the estimates (90), (91) in the identity (89), we deduce that

Since \(\frac{1-\gamma }{2-\gamma }\le \max ((1-\theta ),\theta (1-\gamma ))\) for \(0<\gamma ,\theta <1,\) we have that the estimate (92) is minimal when \(\theta =\frac{1-\gamma }{2-\gamma }.\) So, from now on, we consider

which implies with (78) and (92) that \(II=O(\alpha (t)).\)

Step 3. (Estimate of III.) We deduce from the identity

that

The identity (93) and the estimates (78), (90) and (91) imply by Cauchy–Schwarz inequality that

In conclusion, we have estimated that \(III.1=O(\alpha (t)).\)

Also, from condition (87) and the estimate (4), we can deduce that

Additionally, we have that

By integration by parts, we have that

In conclusion, from the estimates (78), (90), (91) and identity (93), we obtain that

Also, from Lemma 6, the estimate (4) and the fact that \(0\le \chi _{0}\le 1,\) we deduce that

From the estimates (90), (91) and identity (93), we can verify by integration by parts the following estimates

Finally, from Cauchy–Schwarz inequality and the estimate (96) we obtain that

In conclusion, we obtain from the estimates (99), (100), (101), (102), (103) and (104) that

This estimate of III.2 and the estimate (95) of III.1 imply

In conclusion, from the estimates \(II=O(\alpha (t)),\) (106) and the definition of I, we have that \(I+II+III=O(\alpha (t)).\)

Step 4. (Estimate of V.) We recall that \(V=-\frac{\langle \partial _{x}\chi _{0}(t)g(t),\,\partial ^{2}_{t}\phi (t) \rangle _{L^{2}}}{\left\Vert \partial _{x}H_{0,1}\right\Vert _{L^{2}}^{2}},\) and that

First, by integration by parts, using estimate (78), we have the following estimate

Second, since U is smooth and \(\left\Vert g(t)\right\Vert _{L^{\infty }}=O\big (\epsilon ^{\frac{1}{2}}\big )\) for all \(t\in {\mathbb {R}},\) we deduce that

Next, from Eq. (58) and Lemma 6, we have that

then, by Hölder inequality we have that

Clearly, the estimates (108), (109) and (111) imply that \(V=O(\alpha (t)).\)

Step 5. (Estimate of VI.) We know that

We recall the Eq. (107) which implies that

By integration by parts, we have from estimate (78) that

From the estimate (110) and Cauchy–Schwarz inequality, we can obtain the following estimate

Then, to conclude the estimate of VI we just need to study the following term \(C(t)\,{:=}\,\big \langle \partial _{x}g(t)\chi _{0}(t),\,\dot{U}(H^{x_{1}(t)}_{-1,0}+H^{x_{2}(t)}_{0,1}+g(t))-\dot{U}(H^{x_{1}(t)}_{-1,0}+H^{x_{2}(t)}_{0,1})\big \rangle _{L^{2}}.\) Since we have from Taylor’s theorem that

from estimate (78), we can deduce using integration by parts that

Since

we obtain that

Also, from Lemma 6 and the fact that \(\left\Vert g(t)\right\Vert _{L^{\infty }}\lesssim \left\Vert \overrightarrow{g(t)}\right\Vert \), we deduce that

In conclusion, we obtain that

So

Step 6. (Sum of \(IV,\,VI.\)) From the identities (107) and

we obtain that

In conclusion, from the identity

and by integration by parts, we have that

From our previous results, we conclude that

The conclusion of the lemma follows from estimate (118) with identity

which can be obtained from (21) by integration by parts with the fact that

\(\square \)

Remark 9

Since we know from Lemma 6 that

and, by elementary calculus with change of variables, that \(\left\Vert \partial _{x}H_{0,1}\right\Vert _{L^{2}}^{2}=\frac{1}{2\sqrt{2}},\) then the estimates (76) and (77) obtained in Lemma 13 motivate us to study the following ordinary differential equation

Clearly, the solution of (119) satisfies the equation

As a consequence, it can be verified that if \(d(t_{0})>0\) for some \(t_{0}\in {\mathbb {R}},\) then there are real constants \(v> 0,\,c\) such that

In conclusion, the solutions of the equations

are given by

for real constants \(a,\,b.\) So, we are now motivated to study how close the modulation parameters \(x_{1},\,x_{2}\) of Theorem 11 can be to functions \(d_{1},\,d_{2}\) satisfying, respectively, the identities (123) and (122) for constants \(v\ne 0,\, a,\,b,\,c\).
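For reference, the value \(\left\Vert \partial _{x}H_{0,1}\right\Vert _{L^{2}}^{2}=\frac{1}{2\sqrt{2}}\) quoted earlier in this remark can be recovered by the following worked computation. It is a sketch which assumes the first-order identity \(\partial _{x}H_{0,1}=\sqrt{2U(H_{0,1})}=\sqrt{2}H_{0,1}(1-H_{0,1}^{2}),\) the usual consequence of the stationary equation for a kink increasing from 0 to 1; this identity is not restated in this section. With the change of variables \(h=H_{0,1}(x),\)

\[
\left\Vert \partial _{x}H_{0,1}\right\Vert _{L^{2}}^{2}=\int _{{\mathbb {R}}}\sqrt{2}\,H_{0,1}(x)\big (1-H_{0,1}(x)^{2}\big )\,\partial _{x}H_{0,1}(x)\,dx=\sqrt{2}\int _{0}^{1}h(1-h^{2})\,dh=\sqrt{2}\Big (\frac{1}{2}-\frac{1}{4}\Big )=\frac{1}{2\sqrt{2}}.
\]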

At first sight, the statement of Lemma 13 seems unnecessarily complex, and a simplified version might appear more useful for our objectives. However, we will show later that, for a suitable choice of \(\gamma \) depending on the energy excess of the solution \(\phi (t),\) we obtain high precision in the approximation of the modulation parameters \(x_{1},\,x_{2}\) by smooth functions \(d_{1},\,d_{2}\) satisfying (123) and (122) for a large time interval.

4 Energy Estimate Method

Before applying Lemma 13, we need to construct a function F(t) to get a better estimate of \(\left\Vert (g(t),\partial _{t}g(t))\right\Vert _{H^{1}\times L^{2}}\) than the one obtained in Theorem 11.

From now on, we consider \(\phi (t)=H_{0,1}(x-x_{2}(t))+H_{-1,0}(x-x_{1}(t))+g(t,x)\), with \(x_{1}(t),\,x_{2}(t)\) satisfying the orthogonality conditions of the Modulation Lemma and \(x_{1},\,x_{2}\), \((g(t),\partial _{t}g(t))\) and \(\epsilon >0\) satisfying all the properties of Theorem 11. Before we state the main theorem of this section, we introduce the following notation

We also denote \(\omega _{1}(t,x)=\omega \big (\frac{x-x_{1}(t)}{x_{2}(t)-x_{1}(t)}\big )\) for \(\omega \) a smooth cut-off function with the image contained in the interval [0, 1] and satisfying the following condition

We consider now the following function

Since \(x_{1},\,x_{2}\) are functions of class \(C^{2}\), it is not difficult to verify that \((g(t),\partial _{t}g(t))\) solves the integral equation associated to the following partial differential equation

in the space \(H^{1}({\mathbb {R}})\times L^{2}({\mathbb {R}}).\)

Theorem 14

Assuming the hypotheses of Theorem 11 and recalling its notation, let \(\delta (t)\) be the following quantity

Then, there exist positive constants \(A_{1},\,A_{2},\,A_{3}\) such that the function F(t) satisfies the inequalities

Remark 10

Proof

Since the formula defining the function F(t) is very long, we decompose it into a sum of five terms \(F_{1},\, F_{2},\, F_{3},\,F_{4}\) and \(F_{5}\). More specifically:

First, we prove that \(\left|\dot{F}(t)\right|\lesssim \delta (t).\) The main idea of the proof of this item is to estimate each derivative \(\frac{dF_{j}(t)}{dt},\) for \(1\le j\le 5,\) with an error of size \(O(\delta (t)).\) Then we will check that the sum of these estimates is of order \(O(\delta (t)),\) which means that the main terms of the estimates of these derivatives cancel.

Step 1. (The derivative of \(F_{1}(t).\)) By definition of \(F_{1}(t),\) we have that

Moreover, from the identity (II) satisfied by g(t, x), we can rewrite the value of \(\frac{dF_{1}(t)}{dt}\) as

and, from the orthogonality conditions of the Modulation Lemma, we obtain

which implies

Step 2. (The derivative of \(F_{2}(t).\)) It is not difficult to verify that

From the definition of the function U, we can deduce that

therefore, we obtain from Lemma 6 and Cauchy–Schwarz inequality that

In conclusion, we obtain from the identity satisfied by \(\frac{dF_{2}(t)}{dt}\) that

Step 3. (The derivative of \(F_{3}(t).\)) From the definition of \(F_{3}(t),\) we obtain that

which can be rewritten as

Step 4. (Sum of \(\frac{dF_{1}}{dt}, \frac{dF_{2}}{dt}, \frac{dF_{3}}{dt}.\)) If we sum the estimates (125), (126) and (127), we obtain that

More precisely, from Taylor’s Expansion Theorem and since \(\left\Vert \overrightarrow{g(t)}\right\Vert ^{4}\le \delta (t),\)

Step 5. (The derivative of \(F_{4}(t).\)) The computation of the derivative of \(F_{4}(t)\) requires more care, since the purpose of adding this term is to cancel the expression

of (128). The construction of functional \(F_{4}(t)\) is based on the momentum correction term of Lemma 4.2 of [13]. To estimate \(\frac{dF_{4}(t)}{dt}\) with precision of \(O(\delta (t))\), it is just necessary to study the time derivative of

since the estimate of the other term in \(F_{4}(t)\) is completely analogous. First, we have the identity

From the definition of \(\omega _{1}(t,x)=\omega \big (\frac{x-x_{1}(t)}{x_{2}(t)-x_{1}(t)}\big )\), we have

Since the support of \({\dot{\omega }}(x)\) is contained in the set \(\frac{3}{4}\le x \le \frac{4}{5}\), we obtain the following estimate:

Clearly, from integration by parts, we deduce that

Also, we have

So, to estimate the time derivative of (129) with precision \(O(\delta (t)),\) it is enough to estimate

We have that

From integration by parts, the first term of right-hand side of Eq. (134) satisfies

From Taylor’s Expansion Theorem, we have that

Also, we have verified the identity

which clearly implies with the inequalities (D1), (D2) and Lemma 6 the estimate

Finally, it is not difficult to verify that

Then, from estimates (136), (137) and (138) and the partial differential equation (II) satisfied by g(t, x), we can obtain the estimate

from which, by integration by parts and by the Cauchy–Schwarz inequality, using the estimate (96) for \(\omega _{1},\) we obtain that

Now, to finish the estimate of \(2\dot{x}_{1}(t)\int _{{\mathbb {R}}}\omega _{1}(t,x)\partial ^{2}_{t}g(t,x)\partial _{x}g(t,x)\,dx,\) it remains to study the integral given by

which by integration by parts is equal to

Since the support of \(\omega _{1}(t,x)\) is included in \(\{x\vert \,(x-x_{2}(t))\le -\frac{z(t)}{5}\}\) and the support of \(1-\omega _{1}(t,x)\) is included in \(\{x\vert \,(x-x_{1}(t))\ge \frac{3z(t)}{4}\},\) from the exponential decay properties of the kink solutions in (D1), (D2), (D3), (D4) we obtain the estimates

In conclusion, we obtain that the estimates (142), (143) imply the following estimate

Then, the estimates (134), (139), (144), (145) and (146) imply that

By an analogous argument, we deduce that

In conclusion, we have that

Step 6. (The derivative of \(F_{5}(t).\)) We have that

Step 7. (Conclusion of estimate of \(\vert \dot{F}(t)\vert \)) From the identities (147) and (148), we obtain that

Then, the sum of identities (128) and (149) implies \(\sum _{i=1}^{5}\frac{dF_{i}(t)}{dt}=O(\delta (t)),\) which finishes the proof of the inequality \(\left|\dot{F}(t)\right|=O(\delta (t)).\)

Proof of \(F(t)+A_{1}\epsilon ^{2}\ge A_{2}\left\Vert \overrightarrow{g(t)}\right\Vert ^{2}.\) The Coercivity Lemma implies that \(\exists \,c>0,\) such that \(F_{1}(t)\ge c\left\Vert \overrightarrow{g(t)}\right\Vert ^{2}.\) Also, from Theorem 11, we have the global estimate

which implies that \(\left|F_{3}(t)\right|=O\Big (\left\Vert \overrightarrow{g(t)}\right\Vert \epsilon \Big ),\,\left|F_{4}(t)\right|=O\Big (\left\Vert \overrightarrow{g(t)}\right\Vert ^{2}\epsilon ^{\frac{1}{2}}\Big ),\,\left|F_{5}(t)\right|=O\Big (\left\Vert \overrightarrow{g(t)}\right\Vert ^{2}\epsilon ^{\frac{1}{2}}\Big ).\) Also, since

Lemma 6 and Cauchy–Schwarz inequality imply that

Then, the conclusion of \(F(t)+A_{1}\epsilon ^{2}\ge A_{2}\left\Vert \overrightarrow{g(t)}\right\Vert ^{2}\) follows from Young inequality for \(\epsilon \) small enough. \(\square \)
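The application of Young inequality in the last step of the proof can be spelled out as follows. This is a sketch in which \(K>0\) is a hypothetical constant, not named in the text, that bounds the implicit constants in the estimates of \(F_{2},\dots ,F_{5},\) with the bound on \(F_{2}\) taken in the form recorded in Remark 11. Using \(K\epsilon \left\Vert \overrightarrow{g(t)}\right\Vert \le \frac{c}{2}\left\Vert \overrightarrow{g(t)}\right\Vert ^{2}+\frac{K^{2}}{2c}\epsilon ^{2},\) we get

\[
F(t)\ge c\left\Vert \overrightarrow{g(t)}\right\Vert ^{2}-K\epsilon \left\Vert \overrightarrow{g(t)}\right\Vert -K\epsilon ^{\frac{1}{2}}\left\Vert \overrightarrow{g(t)}\right\Vert ^{2}\ge \Big (\frac{c}{2}-K\epsilon ^{\frac{1}{2}}\Big )\left\Vert \overrightarrow{g(t)}\right\Vert ^{2}-\frac{K^{2}}{2c}\epsilon ^{2}.
\]

Hence, if \(\epsilon \) is small enough that \(K\epsilon ^{\frac{1}{2}}\le \frac{c}{4},\) the inequality holds with the (illustrative) choices \(A_{1}=\frac{K^{2}}{2c}\) and \(A_{2}=\frac{c}{4}.\)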

Remark 11

In the proof of Theorem 14, from Theorem 11 we have \(\left|F_{2}(t)\right|+\left|F_{3}(t)\right|=O\left( \left\Vert \overrightarrow{g(t)}\right\Vert \epsilon \right) .\) Since \(\left|F_{4}(t)\right|+\left|F_{5}(t)\right|=O\left( \left\Vert \overrightarrow{g(t)}\right\Vert ^{2}\epsilon ^{\frac{1}{2}}\right) \) and \(\left|F_{1}(t)\right|\lesssim \left\Vert \overrightarrow{g(t)}\right\Vert ^{2},\) then Young inequality implies that

Remark 12

(General Energy Estimate) For any \(0<\theta , \gamma <1,\) we can create a smooth cut-off function \(0\le \chi (x)\le 1\) such that

We define

If we consider the following function

then, by a similar proof to the Theorem 14, we obtain that if \(0<\epsilon \ll 1\) and

then there are positive constants \(A_{1},\,A_{2}>0\) such that

Our first application of Theorem 14 is to estimate the size of the remainder \(\left\Vert \overrightarrow{g(t)}\right\Vert \) during a long time interval. More precisely, this corresponds to the following theorem, which is a weaker version of Theorem 2.

Theorem 15

There is \(\delta >0,\) such that if \(0<\epsilon <\delta ,\) \((\phi (0),\partial _{t}\phi (0)) \in S\times L^{2}({\mathbb {R}})\) and \(E_{total}(\phi (0),\partial _{t}\phi (0))=2E_{pot}(H_{0,1})+\epsilon ,\) then there exist \(x_{1},x_{2} \in C^{2}({\mathbb {R}})\) such that the unique solution of (1) is given, for any \(t\in {\mathbb {R}},\) by

with g(t) satisfying orthogonality conditions of the Modulation Lemma and

for all \(t\in {\mathbb {R}}.\)

Proof of Theorem 15

In notation of Theorem 14, from Theorem 14 and Remark 11, there are uniform positive constants \(A_{2},\,A_{1}\) such that for all \(t\ge 0\)

From now on, we denote \(G(t)\,{:=}\,F(t)+A_{1}\left( \epsilon \ln {\frac{1}{\epsilon }}\right) ^{2}.\) From the inequality (154) and Remark 10, there is a constant \(C>0\) such that, for all \(t \ge 0,\) G(t) satisfies

In conclusion, from Gronwall Lemma, we obtain that \(G(t)\le G(0)\exp \Big (\frac{C\epsilon ^{\frac{1}{2}}t}{\ln {\frac{1}{\epsilon }}}\Big )\) for all \(t\ge 0.\) Then, from the definition of G and inequality (154), we verify the inequality (153) for any \(t\ge 0\). The proof of inequality (153) for the case \(t<0\) is completely analogous. \(\square \)
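The role of the time scale in this Gronwall bound can be made explicit by a short worked step: the exponent stays bounded on intervals of length comparable to \(\frac{\ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}.\) Here \(T>0\) is a fixed number introduced only for illustration.

\[
0\le t\le T\,\frac{\ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}\quad \Longrightarrow \quad \frac{C\epsilon ^{\frac{1}{2}}t}{\ln {\frac{1}{\epsilon }}}\le CT\quad \Longrightarrow \quad G(t)\le e^{CT}G(0).
\]

So G(t) remains comparable to its initial size on such intervals, while the bound degrades exponentially in T beyond them.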

5 Global Dynamics of Modulation Parameters

Lemma 16

In notation of Theorem 2, \(\exists C>0,\) such that if the hypotheses of Theorem 2 are true, then for \(\overrightarrow{g(0)}=\left( g(0,x),\partial _{t}g(0,x)\right) \) we have that there are functions \(p_{1}(t),\,p_{2}(t) \in C^{1}({\mathbb {R}}_{\ge 0}),\) such that for \(j \in \{1,\,2\}\) and any \(t\ge 0,\) we have:

Proof

In the notation of Lemma 13, we consider the functions \(p_{j}(t)\) for \(j \in \{1,\,2\}\) and we consider \(\theta =\frac{1-\gamma }{2-\gamma },\) the value of \(\gamma \) will be chosen later. From Lemma 13, we have that

We recall from Theorem 11 the estimates \(\max _{j\in \{1,\,2\}}\left|\dot{x}_{j}(t)\right|=O(\epsilon ^{\frac{1}{2}}),\,e^{-\sqrt{2}z(t)}=O(\epsilon ).\) From Theorem 15, we have that

To simplify our computations, we denote \(c_{0}=\frac{\left\Vert \overrightarrow{g(0)}\right\Vert +\epsilon \ln {\frac{1}{\epsilon }}}{\epsilon \ln {\frac{1}{\epsilon }}}.\) Then, we obtain for any \(j\in \{1,\,2\}\) and all \(t\ge 0\) that

Since \(e^{-\sqrt{2}z(t)}\lesssim \epsilon ,\) we deduce for \(\epsilon \ll 1\) that \(z(t)e^{-\sqrt{2}z(t)}\lesssim \epsilon \ln {\frac{1}{\epsilon }}<\epsilon ^{1-\frac{\gamma }{(2-\gamma )2}}\ln {\frac{1}{\epsilon }}.\) Then, for any \(t\ge 0,\) we obtain from the same estimates and the definition (75) of \(\alpha (t)\) that

However, if \(\gamma \ln {\frac{1}{\epsilon }}\le 1\) and \(z(0)\cong \ln {\frac{1}{\epsilon }},\) which is possible, then the right-hand side of inequality (158) is greater than or equivalent to \(\left( \epsilon \ln {\frac{1}{\epsilon }}\right) ^{2}\) while \(0\le t\lesssim \frac{\ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}.\) But, it is not difficult to verify for \(\gamma =\frac{\ln {\ln {\frac{1}{\epsilon }}}}{\ln {\frac{1}{\epsilon }}}\) that the right-hand side of inequality (158) is smaller than \(\left( \epsilon \ln {\frac{1}{\epsilon }}\right) ^{2}.\)
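One way to read the choice \(\gamma =\frac{\ln {\ln {\frac{1}{\epsilon }}}}{\ln {\frac{1}{\epsilon }}}\) is through the elementary identity \(\epsilon ^{-\gamma }=\ln {\frac{1}{\epsilon }},\) so a power loss \(\epsilon ^{-\gamma }\) costs only one logarithmic factor. The sketch below evaluates this numerically; the function name and the sample values of \(\epsilon \) are hypothetical and serve only as an illustration.

```python
import math


def gamma_choice(eps):
    """gamma = ln ln(1/eps) / ln(1/eps), defined here for 0 < eps < 1/e."""
    big_l = math.log(1.0 / eps)
    if big_l <= 1.0:
        raise ValueError("need eps < 1/e so that ln ln(1/eps) > 0")
    return math.log(big_l) / big_l


if __name__ == "__main__":
    for eps in (1e-3, 1e-6, 1e-12):
        g = gamma_choice(eps)
        # The last two printed columns agree: eps^(-gamma) = ln(1/eps).
        print(eps, g, eps ** (-g), math.log(1.0 / eps))
```

For the sample values above, \(\gamma \) lies strictly between \(\frac{1}{\ln {\frac{1}{\epsilon }}}\) and 1, which is the range of \(\gamma \) considered in the rest of the proof.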

Therefore, from now on, we are going to study the right-hand side of (158) for \(\frac{1}{\ln (\frac{1}{\epsilon })}<\gamma <1\). Since we know that \(\ln {(\frac{1}{\epsilon })}\lesssim z(t)\) from Theorem 11, the inequality (158) implies for \(\frac{1}{\ln {(\frac{1}{\epsilon })}}<\gamma <1\) and \(t\ge 0\) that

For \(\epsilon >0\) small enough, it is not difficult to verify that if \( \beta _{3}(t)\ge \beta _{1}(t),\) then \(\gamma \ge \frac{\ln {\ln {\frac{1}{\epsilon }}}}{\ln {\frac{1}{\epsilon }}}.\) Moreover, if we have that \(1>\gamma >8\frac{\ln {\ln {\frac{1}{\epsilon }}}}{\ln {\frac{1}{\epsilon }}},\) we obtain from the following estimate

that \(\beta _{3}(t)>\frac{\left( \epsilon \ln {(\frac{1}{\epsilon })}\right) ^{2}}{\ln {\ln {\frac{1}{\epsilon }}}}.\) If \(\gamma \le \frac{\ln {\ln {(\frac{1}{\epsilon })}}}{\ln {\frac{1}{\epsilon }}},\) then \(\frac{\left( \epsilon \ln {\frac{1}{\epsilon }}\right) ^{2}}{\ln {\ln {\frac{1}{\epsilon }}}}\lesssim \beta _{1}(t)\) for any \(t\ge 0.\)

In conclusion, in every case we have that \(\frac{\left( \epsilon \ln {\frac{1}{\epsilon }}\right) ^{2}}{\ln {\ln {\frac{1}{\epsilon }}}}\lesssim \beta (t)\) when \(t\ge 0,\) so we choose \(\gamma =\frac{\ln {\ln {\frac{1}{\epsilon }}}}{\ln {\frac{1}{\epsilon }}}.\) As a consequence, there exists a constant \(C_{1}>0\) such that, for any \(t\in {\mathbb {R}}_{\ge 0},\)

So, the estimates (157), (160), Remark 9 and our choice of \(\gamma \) imply the inequalities (155) and (156). \(\square \)

Remark 13

If \(\frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{m}}\lesssim \left\Vert \overrightarrow{g(0)}\right\Vert \) for a constant \(m>0,\) then, for \(\gamma =\frac{1}{8},\) we have from Lemma 13 that there is \(p(t) \in C^{2}({\mathbb {R}})\) satisfying for all \(t\ge 0\)

Then, for the smooth real function d(t) satisfying

and since \(e^{-\sqrt{2}z(t)}\lesssim \epsilon ,\,\ln {\frac{1}{\epsilon }}\lesssim z(t),\) we can deduce for any \(t\ge 0\) that \(Y(t)=(z(t)-d(t))\) satisfies the following integral inequality for a constant \(K>0\)

Indeed, for any \(k\in {\mathbb {N}}\) and all \(t\ge 0,\,\vert Y(t)\vert \le \Lambda ^{(k)}\left( \left| Y\right| \right) (t).\) We also can verify for any \(T>0\) that \(\Lambda ^{(k)}\left( \vert Y\vert \right) (t)\) is a Cauchy sequence in the Banach space \(L^{\infty }\left[ 0,T\right] .\) In conclusion, we can deduce for any \(t\ge 0\) that \(\left|Y(t)\right|\lesssim Q(t K^{\frac{1}{2}}),\) where Q(t) is the solution of the following integral equation

By standard ordinary differential equation techniques, we deduce for any \(t\ge 0\) that

and from \(\dot{z}(0)=\dot{d}(0)\) and the estimates (161) and (162), we obtain that

from which with (163) we obtain for all \(t\ge 0\) that

However, the precision of the estimates (163) and (165) is very poor when \(\epsilon ^{-\frac{1}{2}}\ll t,\) which motivates us to apply Lemma 13 to estimate the modulation parameters \(x_{1}(t),\,x_{2}(t)\) for \(\vert t\vert \lesssim \frac{\ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}.\)

Remark 14

We recall from Theorem 4 the definitions of the functions \(d_{1}(t),\,d_{2}(t).\) If \( \left\Vert \overrightarrow{g(0)}\right\Vert \ge \frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{5}},\) then, using estimates

we deduce for a positive constant C large enough the inequalities (10) and (11) of Theorem 4.

Remark 15

If

the estimates of \(\max _{j\in \{1,2\}}\left|x_{j}(t)-d_{j}(t)\right|,\,\max _{j\in \{1,\,2\}}\left|\dot{x}_{j}(t)-\dot{d}_{j}(t)\right|\) can be obtained by studying separate cases depending on the initial data \(z(0),\,\dot{z}(0).\)

Lemma 17

\(\exists K>0\) such that if \(\left\Vert \overrightarrow{g(0)}\right\Vert \le \frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{5}}, \) where \(\overrightarrow{g(0)}=\left( g(0,x),\partial _{t}g(0,x)\right) ,\) and all the hypotheses of Theorem 4 are true and \(\frac{\epsilon }{\left( \ln {\frac{1}{\epsilon }}\right) ^{8}}\lesssim e^{-\sqrt{2}z(0)}\lesssim \epsilon ,\) then we have for \( t\ge 0\) that

Proof of Lemma 17

First, in notation of Lemma 16, we consider

Also, motivated by Remark 9, we consider the smooth function d(t) solution of the following ordinary differential equation

Step 1. (Estimate of \(z(t),\,\dot{z}(t)\)) From now on, we denote the functions \(W(t)=z(t)-d(t),\,V(t)=p(t)-\dot{d}(t).\) Then, Lemma 16 implies that \(W,\,V\) satisfy for any \(t\in {\mathbb {R}}_{\ge 0}\) the following differential estimates

From the above estimates and Taylor’s Expansion Theorem, we deduce for \(t\ge 0\) the following system of differential equations, while \(\left|W(t)\right|<1:\)

Recalling Remark 9, we have that

where \(v>0\) and \(c\in {\mathbb {R}}\) are chosen such that \((d(0),\dot{d}(0))=(z(0),\dot{z}(0)).\) Moreover, it is not difficult to verify that

Moreover, since \( 8e^{-\sqrt{2}z(0)}=v^{2}{{\,\textrm{sech}\,}}{(c)}^{2}\le 4v^{2}e^{-2\left|c\right|}, \) we obtain from the hypothesis for \(e^{-\sqrt{2}z(0)}\) that \(\frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{4}}\lesssim v \lesssim \epsilon ^{\frac{1}{2}}\) and as a consequence the estimate \(\vert c\vert \lesssim \ln {(\ln {(\frac{1}{\epsilon })})}.\)
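The matching of the constants \(v,\,c\) to the initial data can be sketched numerically. The code below assumes that the model separation has the form \(d(t)=\frac{1}{\sqrt{2}}\ln {\big (\frac{8}{v^{2}}\cosh {(\sqrt{2}vt+c)}^{2}\big )}\) and solves \(\ddot{d}(t)=16\sqrt{2}e^{-\sqrt{2}d(t)};\) both displays are not reproduced in this section, so they are assumptions, chosen to be consistent with the identity \(8e^{-\sqrt{2}z(0)}=v^{2}{{\,\textrm{sech}\,}}{(c)}^{2}\) used above. All function names and sample values are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def d(t, v, c):
    """Assumed model separation d(t) = (1/sqrt2) * ln((8 / v^2) * cosh(sqrt2 v t + c)^2)."""
    s = SQRT2 * v * t + c
    return (math.log(8.0 / v ** 2) + 2.0 * math.log(math.cosh(s))) / SQRT2


def d_dot(t, v, c):
    """Time derivative of the assumed d: 2 v tanh(sqrt2 v t + c)."""
    return 2.0 * v * math.tanh(SQRT2 * v * t + c)


def match_initial_data(z0, zdot0):
    """Return (v, c) with v > 0 such that (d(0), d_dot(0)) = (z0, zdot0)."""
    # Adding d_dot(0)^2 / 4 = v^2 tanh(c)^2 and 8 e^{-sqrt2 d(0)} = v^2 sech(c)^2 gives v^2.
    v = math.sqrt(zdot0 ** 2 / 4.0 + 8.0 * math.exp(-SQRT2 * z0))
    c = math.atanh(zdot0 / (2.0 * v))
    return v, c


def ode_relative_residual(t, v, c, h=0.1):
    """Relative defect of d'' = 16 sqrt2 e^{-sqrt2 d}, with d'' from a central difference."""
    second = (d(t + h, v, c) - 2.0 * d(t, v, c) + d(t - h, v, c)) / h ** 2
    rhs = 16.0 * SQRT2 * math.exp(-SQRT2 * d(t, v, c))
    return abs(second - rhs) / rhs


if __name__ == "__main__":
    v, c = match_initial_data(z0=10.0, zdot0=1.0e-3)
    print("v =", v, "c =", c)
    print("d(0) =", d(0.0, v, c), "d_dot(0) =", d_dot(0.0, v, c))
    # sech(c)^2 <= 4 e^{-2|c|}, the elementary bound used to control |c|.
    print(1.0 / math.cosh(c) ** 2 <= 4.0 * math.exp(-2.0 * abs(c)))
    for t in (0.0, 100.0, 300.0):
        print(t, ode_relative_residual(t, v, c))
```

Under these assumptions, \(v^{2}=\frac{\dot{z}(0)^{2}}{4}+8e^{-\sqrt{2}z(0)}\) and \(\tanh {(c)}=\frac{\dot{z}(0)}{2v},\) which is how the bounds on \(e^{-\sqrt{2}z(0)}\) translate into bounds on v and c.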

Also, it is not difficult to verify that the functions

generate all solutions of the following ordinary differential equation

which is obtained from the linear part of the system (168).

To simplify our computations, we use the following notation

From the variation of parameters technique for ordinary differential equations, we can write that

such that for any \(t\ge 0\)

The presence of an error in the condition of the initial data \(c_{1}(0),\,c_{2}(0)\) comes from estimate (155) of Lemma 16. Since for all \(t \in {\mathbb {R}}\) \(m(t)\dot{n}(t)-\dot{m}(t)n(t)=\sqrt{2}v\), we can verify by Cramer’s rule and from the fact that \(\frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{4}}\lesssim v\) that

and, for all \(t\ge 0,\) the estimates

Since we have for all \(x\ge 0\) that

we deduce from the Fundamental Theorem of Calculus, the identity \(n(t)=(\sqrt{2}vt+c)\tanh (\sqrt{2}vt+c)-1,\) estimate \(\frac{\epsilon ^{\frac{1}{2}}}{\ln {(\frac{1}{\epsilon })}^{4}}\lesssim v\lesssim \epsilon ^{\frac{1}{2}}\) and the estimates (174), (175) that

for any \(t\ge 0.\) From a similar argument, we deduce that

for any \(t\ge 0.\)
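The Wronskian identity \(m(t)\dot{n}(t)-\dot{m}(t)n(t)=\sqrt{2}v\) used in this step can be checked numerically. The sketch below takes \(n(t)=(\sqrt{2}vt+c)\tanh (\sqrt{2}vt+c)-1\) from the text and assumes \(m(t)=\tanh (\sqrt{2}vt+c);\) the display defining the two functions is not reproduced here, so this form of m is an assumption, chosen because it is consistent with the stated Wronskian. The sample values of \(v,\,c\) are hypothetical.

```python
import math

SQRT2 = math.sqrt(2.0)


def m(t, v, c):
    """Assumed first solution: tanh(sqrt2 v t + c)."""
    return math.tanh(SQRT2 * v * t + c)


def n(t, v, c):
    """Second solution, as given in the text: s tanh(s) - 1 with s = sqrt2 v t + c."""
    s = SQRT2 * v * t + c
    return s * math.tanh(s) - 1.0


def derivative(f, t, v, c, h=0.1):
    """Central finite-difference approximation of df/dt."""
    return (f(t + h, v, c) - f(t - h, v, c)) / (2.0 * h)


def wronskian(t, v, c):
    """m * n' - m' * n, with both derivatives taken numerically."""
    return m(t, v, c) * derivative(n, t, v, c) - derivative(m, t, v, c) * n(t, v, c)


if __name__ == "__main__":
    v, c = 0.01, -0.7  # hypothetical sample values with v > 0
    for t in (0.0, 50.0, 200.0, 1000.0):
        # The two printed numbers should agree up to discretization error.
        print(t, wronskian(t, v, c), SQRT2 * v)
```

Since the Wronskian is a nonzero constant, Cramer's rule in the variation of parameters step divides by \(\sqrt{2}v,\) which is where the lower bound on v enters the estimates.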

From the estimates \(v\lesssim \epsilon ^{\frac{1}{2}},\,\vert c\vert \lesssim \ln {\ln {\frac{1}{\epsilon }}},\) we obtain for \(\epsilon \ll 1\) while \(t\ge 0\) and

that

Also, from \( \left|n(t)\right|\le 1+\sqrt{2}v\vert t\vert +\vert c\vert , \) we deduce for any \(t\ge 0\) that

In conclusion, the estimates (176), (177), (179), (180) and the definition of \(W(t)=z(t)-d(t)\) imply that, as long as \(t\ge 0\) and condition (178) holds,

Then, from the expression for V(t) in the equation (171) and the estimates (176), (177), (180), we obtain that if inequality (181) is true and \(t\ge 0,\) then

which implies the following estimate

Indeed, from the bound \(\left\Vert \overrightarrow{g(0)}\right\Vert \lesssim \frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{4}},\) we deduce that (178) is true if \(0\le t\le \frac{\left[ \ln {\ln {\frac{1}{\epsilon }}}\right] \ln {\frac{1}{\epsilon }}}{(4C+2)\epsilon ^{\frac{1}{2}}}.\) As a consequence, the estimates (181) and (183) are true if \(0\le t\le \frac{\left[ \ln {\ln {\frac{1}{\epsilon }}}\right] \ln {\frac{1}{\epsilon }}}{(4C+2)\epsilon ^{\frac{1}{2}}}.\)

But, for \(t\ge 0,\) we have that

Since f(t) defined in inequality (181) is strictly increasing and \(f(0)\lesssim \frac{1}{\left( \ln {\frac{1}{\epsilon }}\right) ^{2}\ln {\ln {\frac{1}{\epsilon }}}}\), there is an instant \(T_{M}>0\) such that

from which with estimate (181) and condition (178) we deduce that (181) is true for \(0\le t\le T_{M}.\) Also, from the identity (185) and the fact that \(\left\Vert \overrightarrow{g(0)}\right\Vert \lesssim \frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{4}}\) we deduce

from which we obtain that \(T_{M}\ge \frac{3}{8(C+1)}\frac{\ln {\ln {\frac{1}{\epsilon }}}\left( \ln {\frac{1}{\epsilon }}\right) }{\epsilon ^{\frac{1}{2}}}\) for \(\epsilon \ll 1.\) In conclusion, since f(t) is an increasing function, we have for \(t\ge T_{M}\) and \(\epsilon \ll 1\) that

from which with the estimates (184) and (181) we deduce for all \(t\ge 0\) that

As a consequence, we obtain from the estimates (172), (173), (176), (177) and (186) that

for all \(t\ge 0.\)

Step 2. (Estimate of \(\left|x_{1}(t)+x_{2}(t)\right|,\,\left|\dot{x}_{1}(t)+\dot{x}_{2}(t)\right|.\)) First, we define

From the inequalities (155), (156) of Lemma 16, we obtain for all \(t\ge 0,\) respectively:

Also, from inequality (155) and the fact that for \(j\in \{1,2\}\, d_{j}(0)=x_{j}(0),\,\dot{d}_{j}(0)=\dot{x}_{j}(0),\) we deduce that \(M(0)=0\) and \(\left|N(0)\right|\lesssim \max \left( \left\Vert \overrightarrow{g(0)}\right\Vert ,\epsilon \ln {\frac{1}{\epsilon }}\right) \epsilon ^{\frac{1}{2}}.\) Then, from the Fundamental Theorem of Calculus, we obtain for all \(t\ge 0\) that

In conclusion, for \(K=16C+18,\) we verify from the triangle inequality that the estimates (186) and (190) imply (166) and the estimates (187) and (189) imply (167). \(\square \)

Remark 16

The estimates (190) and (189) are true for any initial data \(\overrightarrow{g(0)}\in H^{1}({\mathbb {R}})\times L^{2}({\mathbb {R}})\) such that the hypotheses of Theorem 4 are true.

Remark 17

(Similar Case) If we add the following conditions

to the hypotheses of Theorem 4, then, by repeating the above proof of Lemma 17, we would still obtain for any \(t\ge 0\) the estimates (174), (175), (176) and (177).

However, since now \(\left|c\right|\le \left( \ln {\frac{1}{\epsilon }}\right) ^{2},\) if \(\epsilon \) is small enough, we can verify, as long as \(t\ge 0\) and

that

which implies by a similar reasoning to the proof of Lemma 17 for a uniform constant \(C>1\) and any \(t\in {\mathbb {R}}_{\ge 0}\) the following estimates

From the estimates (192), (193) and \(\left\Vert \overrightarrow{g(0)}\right\Vert \le \frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{5}}\), we deduce that the condition (191) holds while \(0\le t\le \frac{\ln {\ln {\frac{1}{\epsilon }}}\left( \ln {\frac{1}{\epsilon }}\right) }{4(C+1)\epsilon ^{\frac{1}{2}}}.\) Indeed, since \(\left\Vert \overrightarrow{g(0)}\right\Vert ^{2}\le \frac{\epsilon }{\left( \ln {\frac{1}{\epsilon }}\right) ^{10}},\) we can verify that there is an instant \(\frac{\ln {\ln {\frac{1}{\epsilon }}}\left( \ln {\frac{1}{\epsilon }}\right) }{4(C+1)\epsilon ^{\frac{1}{2}}}\le T_{M}\) such that (191) and (192) are true for \(0\le t \le T_{M}\) and

In conclusion, we can repeat the argument in the proof of step 1 of Lemma 17 and deduce that there is \(1<K\lesssim C+1\) such that for all \(t\ge 0\)

Lemma 18

In the notation of Theorem 4, there exist \(K>1\) and \(\delta >0\) such that if \(0<\epsilon<\delta ,\,0<v\le \frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{4}},\, \overrightarrow{g(0)}=\left( g(0,x),\partial _{t}g(0,x)\right) \) and \(\left\Vert \overrightarrow{g(0)}\right\Vert \le \frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{5}},\) then we have for all \(t\ge 0\) that

Proof of Lemma 18

First, we recall that

which implies that

We recall the notation \(W(t)=z(t)-d(t),\,V(t)=p(t)-\dot{d}(t).\) From the first inequality of Lemma 16, we have that

We already verified that \(W,\, V\) satisfy the following ordinary differential system

However, since \(v^{2}\le \frac{\epsilon }{\left( \ln {\frac{1}{\epsilon }}\right) ^{8}},\) we deduce from (197) that \(e^{-\sqrt{2}d(t)}\lesssim \frac{\epsilon }{\left( \ln {\frac{1}{\epsilon }}\right) ^{8}}\) for all \(t\ge 0.\) So, while \(\left\Vert W(s)\right\Vert _{L^{\infty }[0,t]}<1,\) we have from the system of ordinary differential equations above for some constant \(C>0\) independent of \(\epsilon \) that

from which we deduce the following estimate for any \(t\ge 0\)

In conclusion, while \(\left\Vert W(s)\right\Vert _{L^{\infty }[0,t]}<1,\) we have that

Finally, since \(W(0)=0,\) the Fundamental Theorem of Calculus and (200) imply the following estimate for all \(t\ge 0\)

Then, the estimates (198) and (201) imply if \(\epsilon \ll 1\) that

for \(0 \le t \le \frac{\left( \ln {\frac{1}{\epsilon }}\right) \ln {\ln {\frac{1}{\epsilon }}}}{(8C+4)\epsilon ^{\frac{1}{2}}}.\) From (202) and (200), we deduce for \(0 \le t \le \frac{\left( \ln {\frac{1}{\epsilon }}\right) \ln {\ln {\frac{1}{\epsilon }}}}{(8C+4)\epsilon ^{\frac{1}{2}}}\) that

Since \(\left|W(t)\right|\lesssim \epsilon ^{\frac{1}{2}}t,\, \left|\dot{W}(t)\right|\lesssim \epsilon t\) for all \(t\ge 0,\) we can verify by a similar argument to the proof of Step 1 of Lemma 17 that for all \(t\ge 0\) there is a constant \(1<K\lesssim (C+1)\) such that

In conclusion, estimates (195) and (196) follow from Remark 16, inequalities (204), (205) and the triangle inequality. \(\square \)

Remark 18

We recall the definition (169) of d(t). It is not difficult to verify that if \(\left\Vert \overrightarrow{g(0)}\right\Vert \le \frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{5}},\, \frac{\epsilon ^{\frac{1}{2}}}{\left( \ln {\frac{1}{\epsilon }}\right) ^{4}}\lesssim v\) and one of the following statements

1. \(e^{-\sqrt{2}z(0)}\ll \frac{\epsilon }{\left( \ln {\frac{1}{\epsilon }}\right) ^{8}}\) and \(c>0,\)

2. \(e^{-\sqrt{2}z(0)}\ll \frac{\epsilon }{\left( \ln {\frac{1}{\epsilon }}\right) ^{8}}\) and \(c\le {-}\left( \ln {\frac{1}{\epsilon }}\right) ^{2}\)

were true, then we would have that \(e^{-\sqrt{2}d(t)}\ll \frac{\epsilon }{\left( \ln {\frac{1}{\epsilon }}\right) ^{8}}\) for \(0\le t \lesssim \frac{\left( \ln {\frac{1}{\epsilon }}\right) ^{2}}{\epsilon ^{\frac{1}{2}}}.\) Moreover, assuming \(e^{-\sqrt{2}z(0)}\left( \ln {\frac{1}{\epsilon }}\right) ^{8}\ll \epsilon ,\) if \(c>0,\) then we have for all \(t\ge 0\) that

otherwise, if \(c\le {-}\left( \ln {\frac{1}{\epsilon }}\right) ^{2},\) then, since \(0<v\lesssim \epsilon ^{\frac{1}{2}},\) there is \(1\lesssim K\) such that \(2\left|\sqrt{2}vt+c\right|>\left|c\right|\) for \(0\le t \le \frac{K\left( \ln {\frac{1}{\epsilon }}\right) ^{2}}{\epsilon ^{\frac{1}{2}}},\) and so

In conclusion, the result of Lemma 18 would be true for these two cases.
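The smallness of \(e^{-\sqrt{2}d(t)}\) used in Lemma 18 and in the two cases of Remark 18 can be sketched in one place. We assume here that the definition (169) gives \(e^{-\sqrt{2}d(t)}=\frac{v^{2}}{8}{{\,\textrm{sech}\,}}{(\sqrt{2}vt+c)}^{2},\) which is the form consistent with \(8e^{-\sqrt{2}z(0)}=v^{2}{{\,\textrm{sech}\,}}{(c)}^{2}\) and \(d(0)=z(0)\); the symbol \(\theta \) is introduced only for this illustration.

```latex
% Assumption for this sketch: e^{-sqrt(2) d(t)} = (v^2/8) sech^2(theta), theta = sqrt(2) v t + c.
% (a) Setting of Lemma 18: v <= eps^{1/2} / (ln(1/eps))^4, and sech^2 <= 1.
\[
e^{-\sqrt{2}d(t)}\le\frac{v^{2}}{8}
\le\frac{\epsilon}{8\left(\ln\frac{1}{\epsilon}\right)^{8}}
\qquad\text{for all } t\ge 0 .
\]
% (b) Case c > 0: theta >= c > 0 for t >= 0 and sech is decreasing on [0, infinity).
\[
e^{-\sqrt{2}d(t)}=\frac{v^{2}}{8}\operatorname{sech}^{2}(\theta)
\le\frac{v^{2}}{8}\operatorname{sech}^{2}(c)=e^{-\sqrt{2}z(0)}
\ll\frac{\epsilon}{\left(\ln\frac{1}{\epsilon}\right)^{8}} .
\]
% (c) Case c <= -(ln(1/eps))^2: if sqrt(2) v t < |c|/2, then |theta| = |c| - sqrt(2) v t > |c|/2.
\[
\operatorname{sech}^{2}(\theta)\le 4e^{-2|\theta|}<4e^{-|c|}
\le 4e^{-\left(\ln\frac{1}{\epsilon}\right)^{2}}
\quad\Longrightarrow\quad
e^{-\sqrt{2}d(t)}\le\frac{v^{2}}{2}\,e^{-\left(\ln\frac{1}{\epsilon}\right)^{2}}
\lesssim \epsilon\cdot\epsilon^{\ln\frac{1}{\epsilon}}
\ll\frac{\epsilon}{\left(\ln\frac{1}{\epsilon}\right)^{8}} .
\]
```

In case (c) the time restriction \(\sqrt{2}vt<\frac{\left|c\right|}{2}\) holds for \(0\le t\lesssim \frac{\left( \ln {\frac{1}{\epsilon }}\right) ^{2}}{\epsilon ^{\frac{1}{2}}},\) because \(\left|c\right|\ge \left( \ln {\frac{1}{\epsilon }}\right) ^{2}\) and \(v\lesssim \epsilon ^{\frac{1}{2}}.\)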

From the following inequality

we deduce from Lemmas 17, 18 and Remarks 16, 17 and 18 the statement of Theorem 4.

6 Proof of Theorem 2

If \(\left\Vert \overrightarrow{g(0)}\right\Vert \ge \epsilon \ln {\frac{1}{\epsilon }},\) the result of Theorem 2 is a direct consequence of Theorem 15. So, from now on, we assume that \(\left\Vert \overrightarrow{g(0)}\right\Vert <\epsilon \ln {\frac{1}{\epsilon }}.\)

We recall from Theorem 4 the notations \(v,\,c,\,d_{1}(t),\,d_{2}(t)\) and we denote by \(d(t)=d_{2}(t)-d_{1}(t)\) the function which satisfies

From the definition of \(d_{1}(t),\,d_{2}(t),\,d(t)\), we know that \(\max _{j\in \{1,\,2\}}\left|\ddot{d}_{j}(t)\right|+e^{-\sqrt{2}d(t)}=O\Big (v^{2}{{\,\textrm{sech}\,}}{(\sqrt{2}vt+c)}^{2}\Big )\) and since \(z(0)=d(0),\,\dot{z}(0)= \dot{d}(0),\) we have that \(v,\,c \) satisfy the following identities

so Theorem 11 implies that \(v\lesssim \epsilon ^{\frac{1}{2}}.\)
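For orientation, the relation between \((v,c)\) and the initial data can be sketched as follows. This is a hedged reconstruction, not the display of the original: it assumes that \(d(t)=\frac{1}{\sqrt{2}}\ln {\left( \frac{8}{v^{2}}\cosh {(\sqrt{2}vt+c)}^{2}\right) },\) which is consistent with the identity \(8e^{-\sqrt{2}z(0)}=v^{2}{{\,\textrm{sech}\,}}{(c)}^{2}\) used in the proof of Lemma 17.

```latex
% Assumed explicit form of d(t); theta = sqrt(2) v t + c is notation for this sketch only.
\[
d(t)=\frac{1}{\sqrt{2}}\ln\!\left(\frac{8}{v^{2}}\cosh^{2}\theta\right),
\qquad
\dot d(t)=2v\tanh\theta,
\qquad
\ddot d(t)=2\sqrt{2}\,v^{2}\operatorname{sech}^{2}\theta=16\sqrt{2}\,e^{-\sqrt{2}d(t)} .
\]
% Matching the initial data z(0) = d(0), \dot z(0) = \dot d(0):
\[
e^{-\sqrt{2}z(0)}=\frac{v^{2}}{8}\operatorname{sech}^{2}(c),
\qquad
\dot z(0)=2v\tanh(c).
\]
% Using sech^2 + tanh^2 = 1 to solve for v and c:
\[
v^{2}=8e^{-\sqrt{2}z(0)}+\frac{\dot z(0)^{2}}{4},
\qquad
c=\operatorname{arctanh}\!\left(\frac{\dot z(0)}{2v}\right).
\]
```

Under this form, any bounds \(e^{-\sqrt{2}z(0)}\lesssim \epsilon \) and \(\left|\dot{z}(0)\right|\lesssim \epsilon ^{\frac{1}{2}}\) (which we assume is what Theorem 11 provides) immediately give \(v\lesssim \epsilon ^{\frac{1}{2}}.\)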

From Corollary 5 and Theorem 4, we deduce that \(\exists C>0\) such that if \(\epsilon \ll 1\) and \(0\le t \le \frac{\left( \ln {\ln {\frac{1}{\epsilon }}}\right) \ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}},\) then we have that

Next, we consider a smooth function \(0\le \chi _{2}(x)\le 1\) that satisfies

We denote

From Theorem 14 and Remark 12, the estimates (206) and (207) of the modulation parameters imply that for the following function

and the following quantity \(\delta _{1}(t)\) denoted by

we have \(\left|\dot{L}_{1}(t)\right|=O(\delta _{1}(t))\) for \(t\ge 0.\) Moreover, estimates (206), (207) and the bound \(\dot{L}_{1}(t)=O(\delta _{1}(t))\) imply that for

\(\left|\dot{L}_{1}(t)\right|=O(\delta _{2}(t))\) if \(0\le t \le \frac{\left( \ln {\ln {\frac{1}{\epsilon }}}\right) \ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}.\)

Now, similarly to the proof of Theorem 15, we denote \(G(s)=\max \left( \left\Vert \overrightarrow{g(s)}\right\Vert ,\epsilon \right) .\) From Theorem 14 and Remark 12, we have that there are positive constants \(K,k>0\) independent of \(\epsilon \) such that

We recall that Theorem 11 implies that

from which with the definition of G(s) and estimates (206) and (207) we deduce that

while \(0\le t \le \frac{\left( \ln {\ln {\frac{1}{\epsilon }}}\right) \ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}.\)

In conclusion, the Fundamental Theorem of Calculus implies that \(\exists K>0\) independent of \(\epsilon \) such that

while \(0\le t \le \frac{\left( \ln {\ln {\frac{1}{\epsilon }}}\right) \ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}.\)

Since \(\frac{d}{dt} [\tanh {(\sqrt{2}vt+c)}]=\sqrt{2}v{{\,\textrm{sech}\,}}{(\sqrt{2}vt+c)}^{2},\) we verify that, as long as the term \(G(s)v^{2}{{\,\textrm{sech}\,}}{(\sqrt{2}vt+c)}^{2}\epsilon ^{\frac{1}{2}}\) is dominant in the integral of the estimate (208), we have \(G(t)\lesssim G(0).\) The remaining case is the one in which \(G(s)^{2}\frac{\epsilon ^{\frac{1}{2}}}{\ln {(\frac{1}{\epsilon })}}\) is the dominant term in the integral of (208) from an instant \(0\le t_{0}\le \frac{\left( \ln {\ln {\frac{1}{\epsilon }}}\right) \ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}\) onwards. Similarly to the proof of Theorem 15, we have for \(t_{0}\le t\le \frac{\left( \ln {\ln {\frac{1}{\epsilon }}}\right) \ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}\) that \(G(t)\lesssim G(t_{0})\exp \Big (C\frac{(t-t_{0})\epsilon ^{\frac{1}{2}}}{\ln {\frac{1}{\epsilon }}}\Big ). \)
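The two elementary computations behind this dichotomy can be written out as follows. The first shows why the \({{\,\textrm{sech}\,}}^{2}\) term contributes only a bounded amount after integration in time; the second evaluates the exponential factor at the end of the time interval. The abbreviation \(T_{\epsilon }\) is introduced only for this illustration.

```latex
% (i) The sech^2 weight is integrable in time, uniformly in v and c:
\[
\int_{0}^{t}\sqrt{2}\,v\operatorname{sech}^{2}(\sqrt{2}vs+c)\,ds
=\tanh(\sqrt{2}vt+c)-\tanh(c)\in[0,2)
\qquad\text{for all } t\ge 0 .
\]
% (ii) Size of the exponential factor on the interval t_0 <= t <= T_eps,
%      where T_eps := (ln ln(1/eps)) ln(1/eps) / eps^{1/2}  (notation for this sketch).
\[
C\,\frac{(t-t_{0})\,\epsilon^{\frac{1}{2}}}{\ln\frac{1}{\epsilon}}
\le C\,\frac{T_{\epsilon}\,\epsilon^{\frac{1}{2}}}{\ln\frac{1}{\epsilon}}
=C\ln\ln\frac{1}{\epsilon}
\quad\Longrightarrow\quad
\exp\!\Big(C\,\frac{(t-t_{0})\,\epsilon^{\frac{1}{2}}}{\ln\frac{1}{\epsilon}}\Big)
\le\Big(\ln\frac{1}{\epsilon}\Big)^{C} .
\]
```

So on this time scale the exponential growth costs at most a power of \(\ln {\frac{1}{\epsilon }}.\)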

In conclusion, in any case we have for \(0\le t \le \frac{\left( \ln {\ln {\frac{1}{\epsilon }}}\right) \ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}\) that

But, for \(T\ge \frac{\left( \ln {\ln {\frac{1}{\epsilon }}}\right) \ln {\frac{1}{\epsilon }}}{\epsilon ^{\frac{1}{2}}}\) and \(K>2\) we have that

In conclusion, from the result of Theorem 15, we can replace the constant \(C>0\) by a larger constant such that estimate (209) is true for all \(t\ge 0.\)

Data Availability

The author can confirm that all relevant data are included in this article and its supplementary information files.

References

Bethuel, F., Orlandi, G., Smets, D.: Dynamics of multiple degree Ginzburg–Landau vortices. C. R. Math. 342, 837–842 (2006)

Bishop, A.R., Schneider, T., Matter, S.N.S.D.C.: National Science Foundation (U.S.). In: Solitons and Condensed Matter Physics: Proceedings of the Symposium on Nonlinear (Soliton) Structure and Dynamics in Condensed Matter, Oxford, England, June 27–29, 1978. Lecture Notes in Economic and Mathematical Systems. Springer (1978)

Coddington, A., Levinson, N.: Theory of Ordinary Differential Equations. International Series in Pure and Applied Mathematics. McGraw-Hill Companies, New York (1955)

Colliander, J.E., Jerrard, R.L.: Vortex dynamics for the Ginzburg–Landau–Schrödinger equation. Int. Math. Res. Not. 1998, 333–358 (1998)

Delort, J.-M., Masmoudi, N.: Long-Time Dispersive Estimates for Perturbations of a Kink Solution of One-Dimensional Cubic Wave Equations Space Time Dimensions, volume 1 of Memoirs of European Mathematical Society. EMS Press, Zurich (2022)