Abstract

We study the directed polymer model for general graphs (beyond \({\mathbb {Z}}^d\)) and random walks. We provide sufficient conditions for the existence or non-existence of a weak disorder phase, of an \(L^2\) region, and of very strong disorder, in terms of properties of the graph and of the random walk. We study in some detail (biased) random walk on various trees including Galton–Watson trees, and provide a range of other examples that serve as counter-examples to intuitive extensions of the \({\mathbb {Z}}^d\)/SRW result.



1 Introduction

The model of polymers in random environment, that is, of random walks weighted by a random time-space field, has a long history in statistical physics, both in its own right and as a tool for understanding interfaces; see [35] for an early occurrence. It soon also appeared in the mathematical literature, see [11, 36]. We refer to [16] for a recent overview of the subject from a mathematical perspective, and a concise history. Most of the mathematical work has focused on the model where the walk associated to the polymer is a symmetric random walk on the lattice \({\mathbb {Z}}^d\) or on approximations of the walk on the lattice, such as downward paths on trees [13, 27], diffusions on the d-dimensional discrete torus [29] or on the cylinder [12], or simple random walk on the complete graph [18]. In all these cases, the study of the polymer is closely related, via the Feynman-Kac representation, to the study of a stochastic heat equation (SHE) on the underlying lattice/tree.

Recently, as part of a study of stochastic dynamics equivalent to the SHE on large (but finite) networks, Sochen and the second author [51] discussed the effect of the underlying network topology on the dynamics. Using dynamic field theory, the multiplicative noise can be translated into an interaction term between the eigenfunctions of the graph Laplacian. The second moment of the solution is then calculated using an expansion in these eigenfunctions. As in the lattice case, for transitive graphs the different phases of the model (defined below) depend on the spectral dimension of the graph.

Motivated by that work, we study in this paper how key notions that have been developed in the study of directed polymers on \({\mathbb {Z}}^d\) translate to the situation where the associated walk is defined on various infinite graphs. Of particular interest is the case where the underlying graph is itself random (such as various percolation models), or at least irregular, and the relation between the transience or recurrence of the random walk on the graph and the phase transitions among different regimes. As we will see, new phenomena emerge, and the structure of the underlying graph has an important effect on the behavior of the polymer. Naturally, we emphasize these aspects of the theory. Our goal in this paper is to initiate the study of these interesting models and raise new questions, rather than to provide complete answers for all models. In Sect. 7, we state several open questions that we find of interest.

We mention two other papers that adopt a similar point of view. Polymers for which the underlying walk is a general Markov chain have been studied in [14], in the specific case where the chain is positive recurrent. For the related parabolic Anderson model (PAM), which studies the SHE when the noise does not depend on time, the recent [26] focuses on the PAM on Galton–Watson trees and on locally tree-like structures such as the configuration model.

In the rest of this introduction, we explicitly introduce the polymer model, define the different phases, and state some general theorems concerning the existence and properties of various phases. These are easy extensions of the standard results for simple random walk on the lattice. We also introduce certain graphs that will be a good source of counter-examples. Our main results are stated in Sect. 2. Section 3 introduces three classes of graphs with associated Markov chains, which are used to illustrate various features and are interesting in their own right. These are the supercritical percolation cluster on the lattice, the biased walk on Galton–Watson trees, and the canopy graph. The proofs of all statements appear in Sects. 4–6. Section 7 contains concluding remarks and the statement of several open problems.

1.1 The polymer model

To set the stage for a description of our results, we begin by introducing our model of random polymer. Let \(G=(V,E)\) denote a connected (undirected) graph with (infinite) vertex set V and set of edges \(E\subset V\times V\). We let d(x, y) denote the graph distance between \(x,y\in V\), i.e. the length of the shortest path connecting x, y.

Associated with the graph is a nearest-neighbor discrete time Markov chain \(S=(S_k)_{k\ge 0}\) with (time-homogeneous) transition matrix \(P(x,y), x,y\in V\), where \(P(x,y)=0\) if \((x,y)\not \in E\). We denote by \({\mathrm P}_x\) the law of \((S_k)_{k\ge 0}\) where \(S_0=x\). The expectation under \({\mathrm P}_x\) is denoted by \({\mathrm E}_x\) and we set \(p_n(x,y) = {\mathrm P}_{x}(S_n=y)\). We will often consider the simple random walk (SRW) case determined by \(P(x,y)=1/d_x\) when \((x,y)\in E\), with \(d_x\) the degree of \(x\in V\). This is of course only defined when the graph is locally finite, i.e. when \(d_x<\infty \) for all \(x\in V\). Throughout, we write \(S,S'\) for two independent copies of S and \({\mathrm E}_{x,y}^{\otimes 2}\) for the expectations of two independent copies \(S,S'\) starting from x and y, with \({\mathrm P}_{x,y}^{\otimes 2}\) the corresponding law. We also write \({\mathrm E}_{x}^{\otimes 2} = {\mathrm E}_{x,x}^{\otimes 2}\) and \({\mathrm P}_x^{\otimes 2}={\mathrm P}_{x,x}^{\otimes 2}\).

The third component in the definition of the polymer is the environment, which is a collection of i.i.d. random variables \(\omega (i,x)\) with \(i\in {\mathbb {N}}\) and \(x\in V\). For concreteness, we choose the normalization that makes \(\omega (i,x)\) of mean zero and variance one. The law of the environment is denoted \({{\mathbb {P}}}\), with expectation denoted by \({{\mathbb {E}}}\). We also let \({\mathcal {G}}_n\) denote the sigma-algebra generated by \(\{\omega (i,x),i\le n,x\in V\}\).

Throughout the paper, we make the following blanket assumption on the random walk and on the environment.

Assumption 1.1

1. The Markov chain \(((S_k)_k,V)\) is irreducible and (G, S) is locally finite, i.e. \({{\bar{d}}}_x:= \sum _{y: (x,y)\in E} \mathbf{1}_{P(x,y)>0}<\infty \) for all \(x\in V\);

2. There exists \(a>0\) so that \(\Lambda (\beta ): = \log {{\mathbb {E}}}[e^{\beta \omega (i,x)}]\) is finite for \(\beta \in [-a,\infty )\).

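Continuing with definitions, the polymer measure \({\mathrm P}^{n,\beta }_x\) of horizon n and inverse temperature \(\beta \ge 0\) is the probability measure on the paths \(S=(S_k)_{k\ge 0}\) given by

\[ {\mathrm P}^{n,\beta }_x(\mathrm {d}S) = \frac{1}{Z_n(x)}\, e^{\beta \sum _{i=1}^n \omega (i,S_i)}\, {\mathrm P}_x(\mathrm {d}S), \]

where the partition function \(Z_n(x)\) satisfies

\[ Z_n(x) = Z_n(x,\beta ) = {\mathrm E}_x\Big [e^{\beta \sum _{i=1}^n \omega (i,S_i)}\Big ]. \]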

Under the polymer measure \({\mathrm P}^{n,\beta }_x\), the polymer path \((S_k)\) favors parts of the environment that take high values, and the parameter \(\beta \) tunes the intensity of this preference. One thus expects a transition between the delocalized (small \(\beta \)) regime, where the polymer does not exhibit a qualitative change of behavior compared to the original walk, and the localized (large \(\beta \)) regime, where the polymer localizes in attractive parts of the environment.
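For readers who wish to experiment, the partition function can be evaluated exactly on a finite window by a forward recursion that follows from the Markov property: with \(q_0=\delta _x\) and \(q_i(y)=\sum _z q_{i-1}(z)P(z,y)e^{\beta \omega (i,y)}\), one has \(Z_n(x)=\sum _y q_n(y)\), and \(q_n/Z_n(x)\) is the law of \(S_n\) under \({\mathrm P}^{n,\beta }_x\). The following Python sketch is illustrative only: it assumes SRW on \({\mathbb {Z}}\), truncated to a segment that the walk cannot leave before time n, and a standard Gaussian environment, for which \(\Lambda (\beta )=\beta ^2/2\); all function names are hypothetical. A brute-force sum over the \(2^n\) paths is included as a check.

```python
import itertools

import numpy as np


def srw_segment(n):
    """Transition matrix of SRW on {0, ..., 2n}, reflected at both ends.

    Started from the midpoint n, the walk never steps from an endpoint
    within n steps, so on horizon n this is SRW on Z shifted by n.
    """
    V = 2 * n + 1
    P = np.zeros((V, V))
    P[0, 1] = 1.0
    P[V - 1, V - 2] = 1.0
    for y in range(1, V - 1):
        P[y, y - 1] = P[y, y + 1] = 0.5
    return P


def partition_function(omega, beta, P, x):
    """Return (Z_n(x), W_n(x), endpoint law) for an N(0, 1) environment.

    omega has shape (n, V) with omega[i - 1, y] = omega(i, y).
    """
    n, V = omega.shape
    q = np.zeros(V)
    q[x] = 1.0  # q_0 = delta_x
    for i in range(1, n + 1):
        # q_i(y) = sum_z q_{i-1}(z) P(z, y) exp(beta * omega(i, y))
        q = (q @ P) * np.exp(beta * omega[i - 1])
    Z = q.sum()
    W = Z * np.exp(-n * beta**2 / 2)  # Lambda(beta) = beta^2 / 2 for N(0, 1)
    return Z, W, q / Z


def partition_function_bruteforce(omega, beta, x):
    """Same Z_n(x) for SRW on Z, by summing over all 2^n paths (small n only)."""
    n = omega.shape[0]
    Z = 0.0
    for steps in itertools.product((-1, 1), repeat=n):
        y, H = x, 0.0
        for i, s in enumerate(steps, start=1):
            y += s
            H += omega[i - 1, y]
        Z += 0.5**n * np.exp(beta * H)
    return Z


rng = np.random.default_rng(0)
n, beta = 8, 0.7
omega = rng.standard_normal((n, 2 * n + 1))
Z, W, endpoint = partition_function(omega, beta, srw_segment(n), x=n)
```

The recursion costs \(O(n|V|^2)\) with a dense matrix instead of \(2^n\) paths; for nearest-neighbor walks a sparse update would be the natural refinement.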

1.2 Weak and strong disorder and their consequences

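An important quantity in the study of the localized/delocalized transition is the normalized partition function:

\[ W_n(x) = W_n(x,\beta ) = Z_n(x)\, e^{-n\Lambda (\beta )} = {\mathrm E}_x\Big [e^{\beta \sum _{i=1}^n \omega (i,S_i)-n\Lambda (\beta )}\Big ]. \]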

It is straightforward to check that for fixed x, \(W_n(x)\) defines a mean-one, positive martingale with respect to \(({\mathcal {G}}_n)_n\), which therefore converges \({{\mathbb {P}}}\)-a.s. to a limit \(W_\infty (x,\beta )\). The following easy 0-1 law holds in our general context.

Proposition 1.2

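For all \(\beta \ge 0\), exactly one of the following holds: either

\[ W_\infty (x,\beta ) > 0 \ \ {{\mathbb {P}}}\text{-a.s. for all } x\in V \qquad \text{(weak disorder)}, \]

or

\[ W_\infty (x,\beta ) = 0 \ \ {{\mathbb {P}}}\text{-a.s. for all } x\in V \qquad \text{(strong disorder)}. \]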

Moreover, there is a critical parameter \(\beta _c\in [0,\infty ]\) such that weak disorder holds if \(\beta <\beta _c\) and strong disorder holds if \(\beta >\beta _c\).

For the lattice/SRW model, it is known that \(\beta _c = 0\) in dimensions \(d=1,2\) and that \(\beta _c\in (0,\infty )\) when \(d\ge 3\), see [16, 42].

One expects that the transition between weak and strong disorder corresponds to the transition between the localized and delocalized phases. Indeed, for the lattice/SRW model, [21] show that in the whole weak disorder region, the polymer path satisfies a functional central limit theorem. Their argument adapts to our general context with some restrictions, as follows. Let \(|S_n|=d(S_n,S_0)\) and let \(|S^{(N)}| = (|S_{Nt}| / \sqrt{N})_{t\in [0,1]}\) denote the continuous time process obtained by interpolation.

Theorem 1.3

[21]. Let \(S_0=x\in V\). Assume that: (i) \((|S_k|)\) satisfies an almost-sure central limit theorem in the sense that for every sequence \(N_k\) such that \(\inf _{k} N_{k+1}/N_k > 0\), for any bounded and Lipschitz function F of the path, as \(N\rightarrow \infty \),

where \((B_t)\) is a centered, real-valued Brownian motion with \({\mathrm E}[|B_1|^2] > 0\).

(ii) \((W_n(x,\beta ))_n\) is uniformly integrable.

Then, as \(n\rightarrow \infty \),

Remark 1.4

Condition (ii) of Theorem 1.3 implies that weak disorder holds. The converse may not hold, see the discussion in Sect. 2.2.

Remark 1.5

Under weak disorder, one can consider the limit polymer measure, defined as \(\mu _{polymer} = \lim _{n\rightarrow \infty } {\mathrm P}_x^{n,\beta }\). Proposition 4.1 in [21] states that \(\mu _{polymer}\) is well defined and is absolutely continuous with respect to the original measure \({\mathrm P}_x\); the proof carries over to our general framework.

Let \(I_n(x) = ({\mathrm P}^{n-1,\beta }_x)^{\otimes 2}(S_n={S}'_n)\) denote the probability for two independent polymer paths (in the same environment) to end at the same point. As noted in [19, Remark 2.5], the next theorem holds under the mere assumption that \((S_k)\) is a Markov chain (in particular, irreducibility of (S, P) is not necessary).

Theorem 1.6

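[15, 19]. For all \(\beta > 0\), \(x\in V\),

\[ \{W_\infty (x,\beta ) = 0\} = \Big \{\sum _{n\ge 1} I_n(x) = \infty \Big \} \quad {{\mathbb {P}}}\text{-a.s.} \]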

In the other extreme, strong localization properties in the entire strong disorder region have been shown for the lattice/SRW model [15, 19].
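The overlap \(I_n(x)\) in Theorem 1.6 can also be computed exactly for a given environment. Under \({\mathrm P}^{n-1,\beta }_x\) only the first \(n-1\) steps are reweighted, so the n-th step is a free step of P and \(I_n(x)=\sum _y\big (\sum _z \mu _{n-1}(z)P(z,y)\big )^2\), where \(\mu _{n-1}\) is the law of \(S_{n-1}\) under \({\mathrm P}^{n-1,\beta }_x\). A minimal sketch, assuming SRW on \({\mathbb {Z}}\) truncated to \(\{0,\dots ,2n\}\) and a standard Gaussian environment (function names are ours):

```python
import numpy as np


def srw_segment(n):
    """SRW on {0, ..., 2n}, reflected at the ends (never felt before time n from n)."""
    V = 2 * n + 1
    P = np.zeros((V, V))
    P[0, 1] = P[V - 1, V - 2] = 1.0
    for y in range(1, V - 1):
        P[y, y - 1] = P[y, y + 1] = 0.5
    return P


def overlaps(omega, beta, P, x):
    """Return [I_1(x), ..., I_n(x)] for one environment omega of shape (n, V)."""
    n, V = omega.shape
    mu = np.zeros(V)
    mu[x] = 1.0  # endpoint law of P^{0,beta}_x: the point mass at x
    out = []
    for k in range(1, n + 1):
        nxt = mu @ P  # law of S_k under P^{k-1,beta}_x (free k-th step)
        out.append(float(np.sum(nxt**2)))  # two replicas meet at time k
        w = nxt * np.exp(beta * omega[k - 1])  # reweight by e^{beta omega(k, .)}
        mu = w / w.sum()  # endpoint law of P^{k,beta}_x
    return out


rng = np.random.default_rng(1)
n = 50
omega = rng.standard_normal((n, 2 * n + 1))
I = overlaps(omega, beta=1.0, P=srw_segment(n), x=n)
partial_sums = np.cumsum(I)  # sum_{k <= n} I_k(x)
```

Watching the partial sums grow with n gives a purely heuristic numerical view of the dichotomy in Theorem 1.6; no finite computation decides convergence of the series.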

We close this subsection by noting that while \(W_n(x)\) may be very different from its expectation, this is not the case for \(\log W_n(x)\). The next theorem was proved in the lattice case in [43]. An inspection of the proof reveals that it transfers directly to our setup.

Theorem 1.7

[43]. For all \(\beta >0\), there exists a finite constant \(C=C(\beta )\) (independent of the graph structure) such that for all \(x\in V\),

1.3 Very strong disorder

Under strong disorder, we have by Proposition 1.2 that \(W_n(x,\beta )\rightarrow _{n\rightarrow \infty } 0\), a.s. We say that very strong disorder holds if that decay is exponential, that is, if

Proposition 1.8

The limit \({\bar{p}}:={\bar{p}}(x)\) does not depend on \(x\in V\). Moreover, there exists a critical parameter \({\bar{\beta }}_c\in [0,\infty ]\), such that very strong disorder holds for \(\beta > {{\bar{\beta }}}_c\) and \({\bar{p}}=0\) when \(\beta <{{\bar{\beta }}}_c \). Finally,

From the definitions, we clearly have that \(\beta _c\le {\bar{\beta }}_c\). For the lattice/SRW model, it is known that \({\bar{\beta }}_c=0\) when \(d=1,2\) (see [20, 42]), while \({{\bar{\beta }}}_c \in (0,\infty ]\) when \(d\ge 3\) (see [16], in particular Example 3.2 for an example with \(\beta _c=\infty \)). In this latter case, the question whether or not \({{\bar{\beta }}}_c = \beta _c\) is, to our knowledge, still open. Still on the lattice, there exists a random walk with heavy-tailed jumps such that \(\beta _c < {{\bar{\beta }}}_c\), see [54].
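As a numerical illustration of exponential decay, \(\log W_n(x,\beta )\) can be computed for large n if the endpoint law is renormalized at each step and the logarithms of the normalizing constants are accumulated; they telescope to \(\log W_n\), which avoids underflow. The sketch below assumes SRW on \({\mathbb {Z}}\), where \({\bar{\beta }}_c=0\) by the results quoted above, and a standard Gaussian environment; the function name is hypothetical, and a single environment at finite n only gives a rough estimate of the decay rate.

```python
import numpy as np


def log_W_srw_Z(omega, beta):
    """log W_n(0, beta) for SRW on Z and an i.i.d. N(0, 1) environment.

    omega has shape (n, 2n + 1) with omega[k - 1, n + y] = omega(k, y).
    The endpoint law is renormalized after every step; the logs of the
    normalizing constants add up to log W_n.
    """
    n, V = omega.shape
    lam = beta**2 / 2  # Lambda(beta) for a standard Gaussian
    mu = np.zeros(V)
    mu[n] = 1.0  # start at the origin (index n)
    log_w = 0.0
    for k in range(n):
        nxt = np.zeros(V)
        nxt[1:] += 0.5 * mu[:-1]  # step to the right
        nxt[:-1] += 0.5 * mu[1:]  # step to the left
        w = nxt * np.exp(beta * omega[k] - lam)
        s = w.sum()
        log_w += np.log(s)
        mu = w / s
    return log_w


rng = np.random.default_rng(2)
n = 1000
omega = rng.standard_normal((n, 2 * n + 1))
estimates = {beta: log_W_srw_Z(omega, beta) / n for beta in (0.5, 1.0, 2.0)}
```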

In our general framework, we have the following:

Proposition 1.9

(Very strong disorder always holds for large \(\beta \)). Assume that there exists \(d<\infty \) so that \(d_x\le d\) for all \(x\in V\). Assume further that the support of the law of \(\omega (i,x)\) is unbounded from above. Then, there exists \(\beta _0\ge 0\) such that very strong disorder holds for all \(\beta >\beta _0\).

(The assumption of unbounded support in Proposition 1.9 is essential; see [16, Example 3.2] for a counter-example with Bernoulli environment.)

Very strong disorder implies the following strong localization property. The proof, given originally for the lattice/SRW model, carries over without change to our setup (see [19, Remark 2.5]).

Theorem 1.10

[19]. Very strong disorder holds if and only if there is some \(c>0\) such that

or, equivalently, if there is some \(c>0\) such that

In particular, under very strong disorder, there exists \(c>0\) such that

For the lattice/SRW model, the authors in [5] have gone deeper into the description of the (endpoint) localization phenomena. They showed that in the full very strong disorder region, the mass of the endpoint concentrates asymptotically on some small islands – a phenomenon also called asymptotic pure atomicity. More precisely, they proved that if

then, for every sequence \((\varepsilon _k)_{k\ge 0}\) vanishing as \(k\rightarrow \infty \),

In a related continuous setting, localization properties of the Brownian polymer full path (i.e. not restricted to the endpoint properties) have been shown to hold deep inside the very strong disorder region in [22].

1.4 The \(L^2\)-region

The \(L^2\)-region plays an important role in the literature because it is tailored to moment computations. It corresponds to the set of \(\beta \)’s such that the martingale \((W_n(x,\beta ))_n\) is bounded in \(L^2\), i.e.

\[ \sup _{n} {{\mathbb {E}}}\big [W_n(x,\beta )^2\big ] < \infty . \tag{11} \]

The following easy proposition allows us to define a threshold for the \(L^2\)-region, similar to \(\beta _c\).

Proposition 1.11

There is a parameter \(\beta _2\in [0,\infty ]\) such that (11) holds for \(\beta \in [0,\beta _2)\) and \(\sup \nolimits _{n} {{\mathbb {E}}}W_n(x,\beta )^2=\infty \) for all \(x\in V\) when \(\beta >\beta _2\).

Remark 1.12

It is immediate that \(\beta _2\le \beta _c\). For the lattice/SRW model, it is further known that \(0< \beta _2 < \beta _c\) for \(d\ge 3\) [6, 8,9,10] (in particular, see [9, Section 1.4] for d = 3,4). In our general setting, we will construct graphs for which SRW satisfies the a priori surprising property that \(\beta _c>0\), but \(\beta _2=0\), see Theorem 5.7.
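Second moments reduce to two independent walks. Since the \(\omega (i,x)\) are i.i.d. with \({{\mathbb {E}}}[e^{b\omega (i,x)}]=e^{\Lambda (b)}\), Fubini's theorem gives the standard identity

\[ {{\mathbb {E}}}\big [W_n(x,\beta )^2\big ] = {\mathrm E}^{\otimes 2}_x\Big [e^{\gamma (\beta )\sum _{k=1}^n \mathbf{1}_{S_k=S'_k}}\Big ], \qquad \gamma (\beta )=\Lambda (2\beta )-2\Lambda (\beta )\ge 0, \]

where \(\gamma (\beta )\ge 0\) by convexity of \(\Lambda \) and \(\Lambda (0)=0\). The \(L^2\)-region is thus the set of \(\beta \) for which the right-hand side stays bounded in n. The Python sketch below evaluates it exactly by propagating the weighted joint law of \((S_k,S'_k)\); it assumes SRW on a segment of \({\mathbb {Z}}\) and a standard Gaussian environment, for which \(\gamma (\beta )=\beta ^2\). Function names are hypothetical.

```python
import numpy as np


def srw_segment(n):
    """SRW on {0, ..., 2n}, reflected at the ends (never felt before time n from n)."""
    V = 2 * n + 1
    P = np.zeros((V, V))
    P[0, 1] = P[V - 1, V - 2] = 1.0
    for y in range(1, V - 1):
        P[y, y - 1] = P[y, y + 1] = 0.5
    return P


def second_moment(P, x, n, gamma):
    """E_x^{(2)}[exp(gamma * #{1 <= k <= n : S_k = S'_k})] for two independent copies."""
    V = P.shape[0]
    R = np.zeros((V, V))
    R[x, x] = 1.0  # both copies start at x
    boost = np.exp(gamma)
    for _ in range(n):
        R = P.T @ R @ P  # one independent step for each copy
        R[np.diag_indices(V)] *= boost  # weight e^gamma on collisions S_k = S'_k
    return float(R.sum())


def gamma_gaussian(beta):
    """Lambda(2 beta) - 2 Lambda(beta) for N(0, 1), where Lambda(b) = b^2 / 2."""
    return beta**2


n = 30
moments = [second_moment(srw_segment(n), n, k, gamma_gaussian(0.3)) for k in range(1, n + 1)]
```

For this recurrent walk, Theorem 2.1 predicts \(\beta _2=0\); the list `moments` only shows the first n terms of a sequence that is non-decreasing in n.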

Compared to the full weak disorder region, the \(L^2\)-region has the advantage of allowing second-moment computations which, for example, led to the first proofs of diffusivity of the path for the lattice in the \(L^2\)-region (and \(d\ge 3\)), see Remark 3.3 in [16] for a summary on the matter. In our general context, similar considerations bring us to the following result, whose proof is given in Sect. 5. Recall that \(|S_n|=d(S_n,S_0)\).

Theorem 1.13

Assume (11), and that there exist a random variable Z and a deterministic sequence \(a_n\rightarrow _{n\rightarrow \infty }\infty \) satisfying \(a_n/a_{n-\ell } \rightarrow 1\) for all \(\ell >0\), such that for all \(x\in V\),

Then, for all bounded and continuous functions F, as \(n\rightarrow \infty \),

Remark 1.14

Recently, the rate of convergence in \(W_n \rightarrow W_\infty \) and the nature of the fluctuations for the lattice/SRW model have been obtained in the full region \([0,\beta _2)\) in [17, 23]. It is believed that the speed and nature should be different in the region \([\beta _2,\beta _c)\). See also [24, 28, 32, 44, 48] where similar questions appear in the study of the regularized SHE and KPZ equation in dimension \(d\ge 3\). We do not touch upon these questions in this paper.

2 General Results

We state in this section our general results for the polymer model. In Sect. 2.1 we give conditions for \(\beta _2=0\) or \(\beta _2>0\) in terms of quantitative transience/recurrence of (G, S), and in particular in terms of heat kernel estimates and volume growth. We also show in Theorem 2.14 that recurrent walks admitting appropriate heat kernel bounds satisfy \(\beta _c=0\). Section 2.2 is devoted to the weak disorder regime. We give sufficient conditions for the uniform integrability of \(W_n(x,\beta )\) in terms of graph notions such as the Liouville property and existence of good graph isomorphisms. (We emphasize that weak disorder does not imply uniform integrability, see Proposition 4.1.) Along the way, we refer to counter-examples to natural conjectures; these counter-examples are constructed later in the paper.

Throughout, we assume without stating it explicitly that Assumption 1.1 holds. Recall that a random walk on G with transition probability P(x, y) is called reversible (with reversing measure \(\pi \)) if \(\pi \) is a positive measure on V so that for any \(x,y\in V\), \(\pi (x)P(x,y)=\pi (y) P(y,x)\).

2.1 Critical parameters

We begin with a sufficient condition for \(\beta _2=0\) for recurrent reversible walks. This condition covers the (known) case of SRW on \({\mathbb {Z}}^d\) for \(d=1,2\).

Theorem 2.1

Suppose that \((S_k)\) is recurrent and reversible with a reversing measure \(\pi \) satisfying \(\inf _{x\in V} \pi (x)>0\). Then \(\beta _2 = 0\).

Remark 2.2

If \((S_k)\) is a SRW then the condition on \(\pi \) in Theorem 2.1 is always satisfied, since in that case \(\pi (x)=d_x\ge 1\) is a reversing measure.

Remark 2.3

The condition that \((S_k)\) is recurrent on G is not sufficient for the conclusion of Theorem 2.1 to hold, see Sect. 6.4 for a counter-example.

Another sufficient condition for \(\beta _2=0\) involves the intersections of pairs of paths.

Theorem 2.4

Let \(S,S'\) denote two independent copies of S and assume that

Then, \(\beta _2=0\).

Note that condition (13) can be written as

A refinement of Theorem 2.4 appears in Theorem 5.4.

Remark 2.5

In Proposition 5.3, we describe a family of graphs (including transient ones) with \(\beta _2=0\). This covers the case of the supercritical percolation cluster on \({\mathbb {Z}}^d\) with \(d\ge 2\).

In the reverse direction, we require a quantitative criterion. Introduce the Green function for \((S_k)\):

\[ G(x,y) = \sum _{n\ge 0} p_n(x,y), \qquad x,y\in V. \]

Theorem 2.6

Suppose that \((S_k)\) is transient and reversible with reversing measure \(\pi \) satisfying \(\sup _{x\in V} \pi (x)<\infty \). If

then \(\beta _2>0\).

Remark 2.7

When \((S_k)\) is a SRW then \(\pi (x)=d_x\) is a reversing measure and the boundedness condition in Theorem 2.6 means that the degree is uniformly bounded in V. Under this condition, (16) is satisfied if and only if the Green function is bounded from above.
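To make the Green function concrete, consider the nearest-neighbor walk on \({\mathbb {Z}}\) that steps \(+1\) with probability \(p\ne 1/2\) and \(-1\) with probability \(q=1-p\). Only even times contribute to \(G(0,0)=\sum _{n\ge 0}p_n(0,0)\), with \(p_{2m}(0,0)=\binom{2m}{m}(pq)^m\), and the generating function \(\sum _m\binom{2m}{m}s^m=(1-4s)^{-1/2}\) yields \(G(0,0)=1/|p-q|<\infty \). This walk is transient, but its reversing measure \((p/q)^x\) is unbounded, so it illustrates the definition only and is not an instance of Theorem 2.6. A short sketch comparing the truncated series with the closed form (the function name is hypothetical):

```python
def green_00_biased_Z(p, m_max=2000):
    """Truncated G(0, 0) = sum_n p_n(0, 0) for the walk on Z stepping +1 w.p. p.

    Only even times contribute: p_{2m}(0, 0) = binom(2m, m) (pq)^m.
    """
    q = 1.0 - p
    term, total = 1.0, 1.0  # the m = 0 term
    for m in range(m_max):
        # binom(2m + 2, m + 1) / binom(2m, m) = 2 (2m + 1) / (m + 1)
        term *= 2 * (2 * m + 1) / (m + 1) * p * q
        total += term
    return total


closed_form = {p: 1.0 / abs(2 * p - 1) for p in (0.75, 0.9)}  # 1 / |p - q|
```

For \(p=1/2\) (SRW, recurrent) the same truncated sum grows without bound as `m_max` increases.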

Remark 2.8

The boundedness condition on \(\pi \) in Theorem 2.6 is not necessary: indeed, the biased random walk on a canopy tree is an example of a reversible transient walk that does not satisfy this condition, while \(\beta _2>0\) holds for the associated polymer, see Theorem 6.6.

Remark 2.9

Condition (16) is satisfied as soon as \((S_k)\) admits a uniform Gaussian heat kernel upper bound as in (18), for some \(d>2\).

Remark 2.10

There are polymers associated with transient and reversible SRW that do not possess an \(L^2\)-region. Indeed, in Theorem 5.5 we show that the SRW on the supercritical percolation cluster on \({\mathbb {Z}}^d\), \(d\ge 3\) (which is transient and reversible with reversing measure bounded from above), has \(\beta _2=0\) (in contrast with the full lattice SRW). Further, there are reversible and transient walks such that \(\beta _c=0\). For an example of the latter, take \(G={\mathbb {Z}}_+\), with \(P(i,i+1)=e^{e^{i+1}}/(e^{e^{i+1}}+e^{e^{i}})\). (This corresponds to a conductance model with conductances \(C_{i,i+1}=e^{e^{i+1}}\).) It is not hard to verify that the resulting random walk is irreducible, transient and reversible, while a repetition of the proof of Proposition 4.1 shows that \({{\bar{\beta }}}_c=\beta _c=0\).

There are also polymers associated with a transient SRW satisfying \(0=\beta _2<\beta _c\). We exhibit two examples of this phenomenon for SRW on appropriate graphs, namely a class of transient Galton–Watson trees, see Sect. 6.2, and a copy of \({\mathbb {Z}}^d\) for \(d\ge 4\) with arbitrarily long pipes attached on a line, see Theorem 5.7.

Returning to the general (not necessarily reversible) setup, we begin with the positive recurrent case.

Theorem 2.11

If \((S_k)\) is positive recurrent, then \(\beta _c=0\).

Remark 2.12

If the positive recurrent \((S_k)\) admits return times that have exponential moments, then \(\beta _c={{\bar{\beta }}}_c=0\), see [14]. However, an extra condition beyond positive recurrence cannot be omitted in general. Indeed, the positive recurrent \(\lambda \)-biased walk on a Galton–Watson tree with \(m<\lambda \) provides an example where \({{\bar{\beta }}}_c\) can be positive depending on the characteristics of the offspring distribution, see Theorem 6.1. In particular, this gives an example of a polymer where \(\beta _c < {{\bar{\beta }}}_c\). We note that the question whether \(\beta _c={{\bar{\beta }}}_c\) or not when \(d\ge 3\) is still open in the case of the lattice/SRW \({\mathbb {Z}}^d\).

We now introduce a class of walks, for which the existence of a weak disorder region is determined by the value of the spectral dimension of the walk. We say that a random walk S satisfies a sub-Gaussian heat kernel upper bound with parameters \(d_f>0,d_w>1\) if there exist a positive measure \(\mu \) on V, a vertex \(x\in V\) and constants \(C_x,c>0\), such that for all \(n>0\),

where \(d=2d_f/d_w\). We say that the sub-Gaussian heat kernel upper bound is uniform if in addition, \(\inf _{x\in V} \mu (x)>0\) and for some \(C>0\),

The estimates are called Gaussian if \(d_w=2\). The notation \(d_w,d_f\) (for the walk and fractal dimensions) is borrowed from the theory of random walks on fractals, see e.g. [41] for an extensive introduction. The exponent d, often written \(d_s\) in the literature, is referred to as the spectral dimension.
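The dichotomy in Theorem 2.14 below is governed by the single number \(d=2d_f/d_w\). The small sketch below evaluates it for the lattice (Gaussian case, \(d_f=k\), \(d_w=2\)) and for the Sierpiński gasket, using the commonly quoted values \(d_f=\log 3/\log 2\) and \(d_w=\log 5/\log 2\), which we take as assumptions here; the function name is hypothetical.

```python
import math


def spectral_dimension(d_f, d_w):
    """Spectral dimension d = 2 d_f / d_w appearing in the heat kernel bounds."""
    return 2.0 * d_f / d_w


# SRW on Z^k (Gaussian case): d_f = k and d_w = 2, so d = k.
d_lattice = {k: spectral_dimension(k, 2) for k in (1, 2, 3)}

# Sierpinski gasket (assumed standard values): d_f = log 3 / log 2, d_w = log 5 / log 2.
d_gasket = spectral_dimension(math.log(3) / math.log(2), math.log(5) / math.log(2))
print(f"gasket: d = {d_gasket:.4f}")  # 2 log 3 / log 5, approximately 1.3652
```

Since the gasket value is below 2, it falls in the regime of Theorem 2.14(i), consistent with Remark 2.16.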

Remark 2.13

The bound (18) holds with \(d_w=2\) whenever \(\mu \) is a reversing measure for S, such that \(\inf _{x\in V} \mu (x) > 0\) and such that S satisfies the d-dimensional isoperimetric inequality, see [55, pg. 40 & Section 14].

Theorem 2.14

Assume the existence of a measure \(\mu \) satisfying \(\inf _{x\in V} \mu (x)>0\) and, with \({\mathcal {S}}(x,r) = \{y\in V, d(x,y)=r\}\),

(i) If (17) holds with \(d<2\) then \(\beta _c=0\).

(ii) If (18) holds for \(d>2\) and \(\sup _{x\in V} \mu (x)<\infty \), then \(\beta _2>0\).

Remark 2.15

If \(\mu \) is reversing for S, then the condition (19) with \(d_f<2\) implies that S is recurrent, see [55, Lemma (3.12)].

Remark 2.16

The assumptions of Theorem 2.14 (in fact, with the stronger (18) replacing (17)) hold for SRW on the Sierpiński gasket [37] and on the Sierpiński carpet [2, 4]. Note that one can find a family of Sierpiński gaskets with arbitrarily large \(d_f\) while \(d<2\) [33]. The bound (18) holds for general classes of fractal graphs, see Remark 4.5.3 in [41].

Remark 2.17

The conclusion of Theorem 2.14(i) holds for SRW on a graph G satisfying the uniform volume growth \(|B(x,r)|\le Cr^2\) where \(B(x,r)=\{y\in V,d(y,x)\le r\}\), with a uniform bound on the degree of vertices. Indeed, for such graphs, the Carne-Varopoulos bound (see [55] or [47]) yields that \(p_n(x,y)\le C_x e^{-d(x,y)^2/2n}\). Together with the argument in [16, Section 6.2.1], this immediately yields that \(\beta _c=0\). This remark applies to more general walks (not necessarily reversible) satisfying the Carne-Varopoulos bound (such as in [49]) and graphs satisfying quadratic volume growth.
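For reversible chains, the Carne–Varopoulos bound is usually stated as \(p_n(x,y)\le 2\sqrt{\pi (y)/\pi (x)}\,e^{-d(x,y)^2/(2n)}\). A quick numerical sanity check for SRW on \({\mathbb {Z}}\), where \(\pi \) is constant and \(p_n(0,y)\) is an explicit binomial probability, is sketched below; function names are hypothetical.

```python
import math


def srw_Z_heat_kernel(n, y):
    """p_n(0, y) for simple random walk on Z, exactly, via the binomial law."""
    if abs(y) > n or (n + y) % 2:
        return 0.0
    return math.comb(n, (n + y) // 2) / 2**n


def carne_varopoulos_bound(n, dist, ratio=1.0):
    """2 sqrt(pi(y) / pi(x)) exp(-d(x, y)^2 / (2n)), with ratio = pi(y) / pi(x)."""
    return 2.0 * math.sqrt(ratio) * math.exp(-dist**2 / (2 * n))


violations = [
    (n, y)
    for n in range(1, 201)
    for y in range(-n, n + 1)
    if srw_Z_heat_kernel(n, y) > carne_varopoulos_bound(n, abs(y))
]
```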

To obtain very strong disorder, we need uniform covering conditions, of the following type.

Assumption 2.18

Suppose that there exist \(x_0\in V\) and \(C_G>0\), such that for n large enough, for all \(m\in {\mathbb {N}}\), one can find a sequence of sets \(A_i\subset V\) that satisfies \(B(x_0,nm)\subset \cup _{i\in I} A_i\), \(\mathrm {diam}(A_i) \le n^{1/d_w}\) and

where \(c_2>c\) and \(c,d_w\) are as in the uniform sub-Gaussian heat kernel upper bound (18).

Assumption 2.18 holds for many fractal graphs, such as the Sierpiński gasket and carpet, and their random variants.

Theorem 2.19

Assume the hypotheses of Theorem 2.14 (i) with the uniform (18) replacing (17), and in addition let Assumption 2.18 hold. Then, \({{\bar{\beta }}}_c=0\). Moreover, there exists \(C>0\) such that for all \(x_0\in V\), \(\beta \in (0,1)\),

Remark 2.20

The critical case \(d=2\) is not covered by Theorems 2.14 and 2.19. For \({\mathbb {Z}}^d\)/SRW, the conclusion holds by [42]. Unlike the proof of Theorems 2.14 and 2.19 for \(d<2\), the proof for \(d=2\) in [42] uses a change of measure that introduces correlations into the environment. We believe that the argument carries over to our setup, but we have not verified all details.

Remark 2.21

After this work was completed, we learnt of a result of Kajino et al. [38], obtained independently, where a version of Theorem 2.19 is proved, under similar but slightly different assumptions. (In particular, they work with reversible walks and with uniform lower and upper bounds on the reversing measure, and the covering condition in Assumption 2.18 is replaced by a uniform volume growth condition.) Under the extra assumption of existence of heat kernel lower bounds, they show that the estimate in Theorem 2.19 is sharp for small \(\beta \).

We close this section by mentioning a result of Birkner [7] whose proof carries over without changes to our general framework. Let

Theorem 2.22

[7]. Let \(x\in V\). Let \(S,S'\) be two independent copies of S started at x, and let \({\mathcal {F}}_S\) denote the \(\sigma \)-algebra generated by \((S_k)\). If

then \((W_n(x,\beta ))_n\) is uniformly integrable. In particular, (23) implies that \(W_\infty (x,\beta ) >0\) a.s.

Theorem 2.22 was used in the proof that \(\beta _2 < \beta _c\) for the \({\mathbb {Z}}^d\)/SRW polymer, when \(d\ge 3\) (see Remark 1.12 above). In our context, it will be used in Sect. 6.2 when showing that \(\beta _c >0\) for a transient Galton–Watson model which satisfies \(\beta _2=0\).

2.2 Uniform integrability of the partition function

Since \({{\mathbb {E}}}[W_n(x,\beta )]=1\), it is immediate that whenever \((W_n(x,\beta ))_n\) is uniformly integrable for some \(x\in V\), then weak disorder holds. In what follows, we study the converse implication and provide some conditions on \((S_k)_{k\ge 0}\) under which \((W_n(x,\beta ))_n\) is uniformly integrable in the entire weak disorder region.

Remark 2.23

The converse is not always true: Proposition 4.1 provides an example for which \(\beta _c>0\) but \((W_n(x,\beta ))_n\) is not uniformly integrable in the whole weak disorder region.

We begin with an observation. We say that \(h:V\rightarrow {\mathbb {R}}\) is a harmonic function on G whenever

\[ h(x) = \sum _{y\in V} P(x,y)\, h(y) \quad \text{for all } x\in V. \]

Proposition 2.24

1. \(h(x)= {{\mathbb {E}}}[W_\infty (x,\beta )]\) defines a bounded harmonic function on G.

2. The following properties are equivalent:

   (i) \((W_n(x,\beta ))_{n}\) is uniformly integrable for some \(x\in V\),

   (ii) \({{\mathbb {E}}}[W_\infty (x,\beta )] = 1\) for some \(x\in V\),

   (iii) \(\inf _{x\in V} {{\mathbb {E}}}[W_\infty (x,\beta )] > 0\).

3. If one of the above properties is satisfied then for all \(x\in V\), \((W_n(x,\beta ))_{n}\) is uniformly integrable and \({{\mathbb {E}}}[W_\infty (x,\beta )]=1\).

Corollary 2.25

Assume that (G, P) satisfies the Liouville property, i.e. that all bounded harmonic functions are constant. Then, \((W_n(x,\beta ))_{n}\) is uniformly integrable for all \(x\in V\) in the whole weak disorder region.

In what follows, given a graph G and a vertex \(v\in V\), we call the pair (v, G) a rooted graph. We say that two rooted graphs (v, G) and \((v',G')\) are isomorphic if there exists a graph isomorphism \(\pi \) so that \(v'=\pi (v)\) and \(G'=\pi (G)\).

Corollary 2.26

Suppose there is a finite set of vertices \(V_0\subset V\) such that for all \(x\in V\), the rooted graph (x, G) is isomorphic to one of the rooted graphs \(\{(v,G)\}_{v\in V_0}\). Then, if weak disorder holds, \((W_n(x))_{n}\) is uniformly integrable for all \(x\in V\).

3 Specific Graphs

We introduce in this short section three models, which will be used to illustrate various phenomena. These are, respectively, SRW on the infinite cluster of supercritical bond percolation on the lattice, the \(\lambda \)-biased random walk on a Galton–Watson tree, and the \(\lambda \)-biased random walk on the canopy tree.

3.1 Super-critical percolation cluster on \({\mathbb {Z}}^d\)

To each edge (x, y) of the lattice \({\mathbb {Z}}^d\) we associate a Bernoulli random variable \(a_{xy}\) such that the edge is open (i.e. \(a_{xy}=1\)) with probability p. It is well known, see e.g. [30], that for \(d\ge 2\) there exists a critical parameter \(p_c=p_c({\mathbb {Z}}^d) \in (0,1)\), such that for the super-critical regime \(p>p_c\), there exists almost-surely a unique infinite connected cluster denoted by \({\mathcal {C}}_\infty \).

The SRW on the super-critical infinite cluster shares properties similar to the SRW on \({\mathbb {Z}}^d\); indeed, the walk on \({\mathcal {C}}_\infty \) is transient when \(d\ge 3\) and recurrent when \(d=2\), almost surely [31]. The SRW further satisfies almost surely a central limit theorem and a local limit theorem [3]. We will however see that the polymer measure on the percolation model is quite different, and in particular, see Theorem 5.5, does not possess an \(L^2\) regime.
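Numerical work on \({\mathcal {C}}_\infty \) necessarily uses a finite box. A common stand-in, assumed here, is the largest open cluster in an \(L\times L\) box of \({\mathbb {Z}}^2\) with \(p>p_c({\mathbb {Z}}^2)=1/2\), which can be extracted with a union-find structure. The sketch below (function name hypothetical) returns that cluster and the open edges, from which the degrees \(d_x\) of the SRW on the cluster follow.

```python
import numpy as np


def bond_percolation_box(L, p, rng):
    """Bond percolation on the L x L box of Z^2 (vertex (i, j) -> index i * L + j).

    Returns (largest_cluster, open_edges): the vertex set of the largest open
    cluster (a finite-volume stand-in for C_infinity) and the list of open edges.
    """
    parent = list(range(L * L))

    def find(a):
        while parent[a] != a:
            parent[a] = parent[parent[a]]  # path halving
            a = parent[a]
        return a

    open_edges = []
    for i in range(L):
        for j in range(L):
            v = i * L + j
            for w, inside in ((v + L, i + 1 < L), (v + 1, j + 1 < L)):
                if inside and rng.random() < p:  # edge {v, w} is open
                    open_edges.append((v, w))
                    ra, rb = find(v), find(w)
                    if ra != rb:
                        parent[ra] = rb
    roots = np.array([find(v) for v in range(L * L)])
    labels, counts = np.unique(roots, return_counts=True)
    big = labels[np.argmax(counts)]
    return set(np.flatnonzero(roots == big).tolist()), open_edges


rng = np.random.default_rng(0)
cluster, edges = bond_percolation_box(60, 0.6, rng)
# Degrees d_x of the SRW on the cluster: only open edges count.
deg = {v: 0 for v in cluster}
for a, b in edges:
    if a in cluster:  # then b is in the same cluster, being joined by an open edge
        deg[a] += 1
        deg[b] += 1
```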

3.2 \(\lambda \)-biased random walk on Galton–Watson trees

Let \({\mathcal {T}}\) be a rooted (at a vertex o) Galton–Watson tree (conditioned on survival) with offspring distribution \(\{p_k\}\), having mean \(m=\sum k p_k>1\). (Note that Bernoulli percolation on the regular tree is a particular case of a Galton–Watson tree.) The parent of \(x\in V\) is the neighbor of x on the geodesic connecting x to the root. All other neighbors of x are called its children. Given a real \(\lambda \ge 0\), we let \(({\mathrm P},(S_k))\) denote the \(\lambda \)-biased random walk on \({\mathcal {T}}\), with transition probability \(P(x,y)=\lambda /(\lambda +d_x)\) if y is the parent of x and \(P(x,y)=1/(d_x+\lambda )\) otherwise, where here \(d_x\) denotes the number of children of x. Lyons [45] proved that the walk is transient if \(m>\lambda \), null recurrent if \(m=\lambda \) and positive recurrent if \(m<\lambda \). Let \(|S_k|\) denote the distance of \(S_k\) from the root. Laws of large numbers for \(|S_n|/n\), based on appropriate regeneration structures, were derived in [46] (for the transient case). These were complemented by large deviation principles in [25], and by central limit theorems (for the transient \(m>\lambda >0\) and null-recurrent \(m=\lambda >0\) cases) in [50]. Note that the case \(\lambda =0\) corresponds to the model of branching random walk, and \(W_n(o)\) is then the Biggins martingale. Much is known about \(W_n(o)\) and its limits, see [52].
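A \(\lambda \)-biased walk on a Galton–Watson tree can be simulated without building the tree in advance, by sampling the offspring of a vertex the first time it is visited. The sketch below assumes \(p_0=0\), so that the tree is infinite and no conditioning on survival is needed, and lets the walk at the root jump to a uniformly chosen child; both choices are our assumptions, as are all names. It uses the transition probabilities above, with \(d_x\) the number of children of x.

```python
import numpy as np


class LazyGWTree:
    """Galton-Watson tree sampled on demand; vertex 0 is the root o.

    Assumption: offspring_probs[0] == 0 (p_0 = 0), so the tree is infinite.
    """

    def __init__(self, offspring_probs, rng):
        self.probs = np.asarray(offspring_probs, dtype=float)
        self.rng = rng
        self.parent, self.depth, self.children = [-1], [0], [None]

    def kids(self, v):
        if self.children[v] is None:  # sample the offspring of v on first visit
            k = int(self.rng.choice(len(self.probs), p=self.probs))
            first = len(self.parent)
            self.parent += [v] * k
            self.depth += [self.depth[v] + 1] * k
            self.children += [None] * k
            self.children[v] = list(range(first, first + k))
        return self.children[v]


def step_probs(k, lam, is_root):
    """(P(move to parent), P(move to a given child)) at a vertex with k children."""
    if is_root:
        return 0.0, 1.0 / k  # assumption: the root moves to a uniform child
    return lam / (lam + k), 1.0 / (lam + k)


def biased_walk_distance(tree, lam, n):
    """|S_n|, the distance to the root after n steps of the lambda-biased walk."""
    v = 0
    for _ in range(n):
        ch = tree.kids(v)
        up, _ = step_probs(len(ch), lam, v == 0)
        if tree.rng.random() < up:
            v = tree.parent[v]
        else:  # given no upward move, each child is equally likely
            v = ch[int(tree.rng.integers(len(ch)))]
    return tree.depth[v]


rng = np.random.default_rng(3)
tree = LazyGWTree([0.0, 1 / 3, 1 / 3, 1 / 3], rng)  # offspring uniform on {1, 2, 3}
speed_estimate = biased_walk_distance(tree, lam=1.0, n=5000) / 5000
```

With offspring uniform on \(\{1,2,3\}\) (so \(m=2\)) and \(\lambda =1<m\), the walk is transient by Lyons' criterion, and `speed_estimate` is a crude single-run proxy for the limit of \(|S_n|/n\).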

Our results for polymers with the biased random walk on Galton–Watson trees are presented in Sects. 6.1 and 6.2.

3.3 The canopy tree

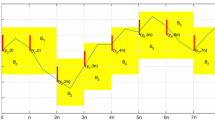

The canopy tree \(\mathtt T\) is the infinite volume limit of a finite \((d+1)\)-regular tree seen from its bottom boundary [1]. It is constructed as follows. At the ground level \(\ell =0\), put a countable number of vertices and attach to every successive pack of d vertices one parent at level \(\ell =1\). Do the same recursively at the higher levels \(\ell \) (see Fig. 1 for a pictorial representation).

The \(\lambda \)-biased random walk (with bias toward the parents) is constructed similarly to Sect. 3.2. Namely, when at vertex v at level \(l>0\), the jump probability toward the parent is \(\lambda /(\lambda +d)\) while the probability to jump to any other neighbor is \(1/(\lambda +d)\). When at vertex v at level 0, the jump probability toward the parent of v is 1. It follows from the description that the \(\lambda \)-biased walk can be represented in terms of a conductance model (see [47]) with the conductance on edges between levels \(\ell \) and \(\ell +1\) equal to \(\lambda ^\ell \). From this representation it follows at once that the \(\lambda \)-biased walk is recurrent if \(\lambda < 1\), null recurrent if \(\lambda = 1\) and transient when \(\lambda > 1\).
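The conductance representation can be checked directly: a vertex at level \(\ell \ge 1\) has one edge of conductance \(\lambda ^\ell \) to its parent and d edges of conductance \(\lambda ^{\ell -1}\) to its children, so the probability of moving up is \(\lambda ^\ell /(\lambda ^\ell +d\lambda ^{\ell -1})=\lambda /(\lambda +d)\). Moreover, everything below a vertex is a finite subtree, so the only route to infinity is the upward path, whose resistance \(\sum _\ell \lambda ^{-\ell }\) is finite exactly when \(\lambda >1\). A sketch of both computations (function names hypothetical):

```python
def canopy_up_probability(lam, d, level):
    """P(move to the parent) on the canopy tree, from conductances lam**l
    on the edges between levels l and l + 1."""
    if level == 0:
        return 1.0  # a level-0 vertex has only its parent edge
    c_parent = lam**level  # one edge up, between levels `level` and `level + 1`
    c_children = d * lam ** (level - 1)  # d edges down to level `level - 1`
    return c_parent / (c_parent + c_children)


def upward_resistance(lam, levels):
    """Resistance of the upward path from level 0 to level `levels`: sum_{l < levels} lam**(-l).

    Dangling finite subtrees carry no current to infinity, so the walk is
    transient iff this series converges as levels -> infinity, i.e. iff lam > 1.
    """
    return sum(lam ** (-l) for l in range(levels))
```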

We show in Sect. 6.3 that \(\beta _2>0\) for the polymer on \((\mathtt T,(S_k))\) whenever \(d>\lambda > 1\).

Fig. 1. The canopy tree of parameter \(d=2\). The subtrees \(\mathtt T^{(\ell )}\) are used in the proof of Theorem 6.6

4 Weak, Strong and Very Strong Disorder

We provide in this section proofs for our main results.

4.1 Proof of Proposition 1.2

Proof of Proposition 1.2

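The proof mostly follows classical techniques of directed polymers, see e.g. [16]. Introduce the notation \(e_n := e^{\beta \sum _{i=1}^n \omega (i,S_i)-n\Lambda (\beta )}\), so that \(W_n(x) = {\mathrm E}_x[e_n]\). Let \(n,m\in {\mathbb {N}}\), denote by \(\eta _n\) the time shift by n steps of the environment \(\omega (i,y)\), and observe that, by the Markov property,

\[ W_{n+m}(x) = \sum _{y\in R_n(x)} {\mathrm E}_x\left[ e_n {\mathbf {1}}_{S_n=y}\right] \, W_m(y)\circ \eta _n, \qquad (25) \]

where \(R_n(x)= \{y\in V, p_n(x,y)>0\}\). As \(R_n(x)\) is finite by assumption, taking the limit \(m\rightarrow \infty \) yields

\[ W_\infty (x) = \sum _{y\in R_n(x)} {\mathrm E}_x\left[ e_n {\mathbf {1}}_{S_n=y}\right] \, W_\infty (y)\circ \eta _n. \qquad (26) \]

Since the weights \({\mathrm E}_x\left[ e_n {\mathbf {1}}_{S_n=y}\right] ,y\in R_n(x)\), are almost surely positive,

\[ \{W_\infty (x) = 0\} = \bigcap _{y\in R_n(x)} \{W_\infty (y)\circ \eta _n = 0\}, \qquad (27) \]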
hence \(\{W_\infty (x) = 0\}\) is measurable with respect to \({\mathcal {G}}_n^+ := \sigma (\omega (i,x), i\ge n, x\in V)\) for all n. It is therefore a tail event, and by Kolmogorov's 0–1 law either \(W_\infty (x) = 0\) a.s. or \(W_\infty (x) > 0\) a.s. The first statement of Proposition 1.2 now follows from (27) and the irreducibility assumption.
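The decomposition \(W_{n+m}(x) = \sum _{y} {\mathrm E}_x[e_n {\mathbf {1}}_{S_n=y}]\, W_m(y)\circ \eta _n\) used at the start of this proof is purely algebraic and can be checked numerically. The sketch below is illustrative only; it assumes a Rademacher environment (\(\omega =\pm 1\), so that \(\Lambda (\beta )=\log \cosh \beta \)), simple random walk on a 4-cycle, and hypothetical helper names. The helper point_to_point computes \({\mathrm E}_x[e_n {\mathbf {1}}_{S_n=y}]\) by forward recursion, and its start offset implements the time shift \(\eta \).

```python
import math
import random

def point_to_point(P, omega, beta, x, start, n):
    """Return {y: E_x[e_n 1_{S_n = y}]} with the environment read at times
    start+1, ..., start+n; start = k implements the time shift eta_k."""
    log_mgf = math.log(math.cosh(beta))       # Lambda(beta) for +-1 variables
    f = {y: 0.0 for y in P}
    f[x] = 1.0
    for i in range(start + 1, start + n + 1):
        g = {y: 0.0 for y in P}
        for y, mass in f.items():
            for z, p in P[y].items():
                g[z] += mass * p * math.exp(beta * omega[(i, z)] - log_mgf)
        f = g
    return f

def W(P, omega, beta, x, start, n):
    """Normalized partition function W_n(x), composed with the shift eta_start."""
    return sum(point_to_point(P, omega, beta, x, start, n).values())

# Simple random walk on a 4-cycle, +-1 environment on times 1..n+m.
N, n, m, beta = 4, 3, 5, 0.7
P = {v: {(v - 1) % N: 0.5, (v + 1) % N: 0.5} for v in range(N)}
rng = random.Random(1)
omega = {(i, v): rng.choice((-1, 1)) for i in range(1, n + m + 1) for v in range(N)}

lhs = W(P, omega, beta, 0, 0, n + m)                       # W_{n+m}(0)
f = point_to_point(P, omega, beta, 0, 0, n)
rhs = sum(f[y] * W(P, omega, beta, y, n, m) for y in P)    # Markov decomposition
print(lhs, rhs)
```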

We turn to the second part of the proposition, namely the existence of the critical parameter \(\beta _c\). Let \(\theta \in (0,1)\) and \(x\in V\). We begin by observing that \({{\mathbb {E}}}[W_\infty (x,\beta )^\theta ]\) is non-increasing in \(\beta \), for which it is enough to prove that \(\beta \rightarrow {{\mathbb {E}}}[W_n(x,\beta )^\theta ]\) is non-increasing for all n (note that \((W_n(x,\beta )^\theta )_n\) is uniformly integrable since \({{\mathbb {E}}}[W_n(x,\beta )]=1\)).

Let \(H_n(S) = \sum _{i=1}^n \omega (i,S_i)\) and \(e_n(S) = e^{\beta H_n(S) - n\Lambda (\beta )}\). For all \(n\ge 0\), we have by Fubini:

where \(\mathrm {d}{{\mathbb {P}}}^{S,n} = e_n(S) \mathrm {d}{{\mathbb {P}}}\). The variables \(\omega (i,x)\) are still independent under \({{\mathbb {P}}}^{S,n}\). Since \(H_n(S)\) is non-decreasing with respect to the environment while \(W_n(x,\beta )^{\theta - 1}\) is non-increasing, the FKG inequality for independent random variables [34] gives

where the last equality holds since \({{\mathbb {E}}}[e_n(S)] = 1\).
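The monotonicity of \(\beta \mapsto {{\mathbb {E}}}[W_n(x,\beta )^\theta ]\) obtained from the FKG argument can be checked exactly on a toy example. The sketch below assumes a Rademacher environment (\(\Lambda (\beta )=\log \cosh \beta \)), simple random walk on a 3-cycle and \(n=3\), and enumerates all \(2^9\) environments; the helper names are hypothetical. It also confirms \({{\mathbb {E}}}[W_n]=1\).

```python
import itertools
import math

def W_n(P, omega, beta, x, n):
    """W_n(x) = E_x[exp(beta * H_n(S) - n * Lambda(beta))] for a +-1 environment."""
    log_mgf = math.log(math.cosh(beta))      # Lambda(beta) for Rademacher omega
    f = {y: 0.0 for y in P}
    f[x] = 1.0
    for i in range(1, n + 1):
        g = {y: 0.0 for y in P}
        for y, mass in f.items():
            for z, p in P[y].items():
                g[z] += mass * p * math.exp(beta * omega[(i, z)] - log_mgf)
        f = g
    return sum(f.values())

def exact_moments(P, beta, x, n, theta):
    """Exact (E[W_n], E[W_n**theta]) by enumerating all 2**(n*|V|) environments."""
    sites = [(i, y) for i in range(1, n + 1) for y in P]
    first, frac = 0.0, 0.0
    for signs in itertools.product((-1, 1), repeat=len(sites)):
        w = W_n(P, dict(zip(sites, signs)), beta, x, n)
        first += w
        frac += w ** theta
    count = 2 ** len(sites)
    return first / count, frac / count

# Simple random walk on a 3-cycle, n = 3 steps: 2**9 = 512 environments.
P = {v: {(v - 1) % 3: 0.5, (v + 1) % 3: 0.5} for v in range(3)}
betas = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0]
results = [exact_moments(P, b, 0, 3, theta=0.5) for b in betas]
for b, (m1, mt) in zip(betas, results):
    print(f"beta={b:.1f}  E[W]={m1:.12f}  E[W^0.5]={mt:.6f}")
```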

Now, observe that

does not depend on x. Indeed, if \(\beta _c(x)= \infty \) for some x, then by (27) we have that \(W_\infty (y,\beta )>0\) a.s. for all y and all \(\beta \ge 0\), hence \(\beta _c(y)=\infty \) for all y. Similarly, if \(\beta _c(x)<\infty \) for some x, then all \(\beta _c(y)\) are finite. In this case, let \(x,y\in V\) and \(\beta >\beta _c(x)\), so that \(W_\infty (y,\beta ) = 0\) for all \(y \in V\) and thus \(\beta _c(y)\le \beta _c(x)\); by exchanging the role of x and y, \(\beta _c(y) = \beta _c(x)\).

We conclude the proof by checking, similarly to what we just did, that \(\beta _c:=\beta _c(x)\) separates strong disorder from weak disorder. \(\quad \square \)

4.2 Uniform integrability: proofs for Sect. 2.2

Proof of Proposition 2.24

1. Let \(h(x) = {{\mathbb {E}}}[W_\infty (x)]\). By Fatou’s lemma, the expectation \({{\mathbb {E}}}[W_\infty (x)]\) is uniformly bounded by 1. To see that h is harmonic, take expectation with respect to the environment in (26) and let \(n=1\).

2. We begin with the implication (i) \(\Rightarrow \) (ii). If \((W_n(x))_n\) is uniformly integrable, then \(W_n(x)\) converges in \(L^1\) and so \({{\mathbb {E}}}[W_\infty (x)]=1\) since \({{\mathbb {E}}}[W_n(x)]=1\).

To see that (ii) implies (iii), suppose that \(h(x)={{\mathbb {E}}}[W_\infty (x)]=1\). Since h is bounded by 1, h attains its maximum at x. By harmonicity and irreducibility (maximum principle), h must be constant equal to 1, which implies (iii).

We now turn to (iii) \(\Rightarrow \) (i). Suppose that \(\inf _{y\in V} {{\mathbb {E}}}[W_\infty (y)] > 0\) and let \(x \in V\) be arbitrary. By identity (26) (recall the definition of \({\mathcal {G}}_k\) in the introduction),

and since \({{\mathbb {E}}}\left[ W_\infty (x) \bigg | {\mathcal {G}} _k\right] \) is UI, \((W_n(x))_n\) is also UI.

Point 3 of the proposition follows from the last arguments. \(\quad \square \)

Proof of Corollary 2.25

By point 1 of Proposition 2.24, h is constant when (G, P) satisfies the Liouville property. Hence \(\inf _x h(x)\) is non-zero since weak disorder holds and so uniform integrability holds by point 3 of Proposition 2.24. \(\quad \square \)

Proof of Corollary 2.26

Under the assumption of the corollary the function \(h(x)={{\mathbb {E}}}[W_\infty (x)]\) only takes a finite number of values. Since weak disorder holds, all of them are positive and the statement follows from Proposition 2.24. \(\quad \square \)

We next construct an example of a pair (G, S) where \(\beta _c>0\) but \(W_n(x,\beta )\) is not uniformly integrable for any \(\beta >0\) and some \(x\in V\). Let \({\mathcal {T}}_d\) denote the infinite d-ary tree rooted at a vertex o. Augment \({\mathcal {T}}_2\) by attaching to o a copy of \({\mathbb {Z}}_+\), to create a rooted tree \({\mathcal {G}}_{4.1}\), see Fig. 2.

To define the random walk on \({\mathcal {G}}_{4.1}\), assign to each edge e of \({\mathcal {T}}_2\) the conductance \(C_e=1\), while to the ith edge of \({\mathbb {Z}}_+\) (measured from the root), assign the conductance \(C_i=e^{e^i}\). For \((x,y)\in E\), write \(C_{x,y}\) for the conductance of the edge (x, y), and set \(P(x,y)=C_{x,y}/\sum _{z\sim x} C_{x,z}\).
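To see concretely how strongly the walk on the \({\mathbb {Z}}_+\) branch is pushed away from o, one can evaluate these conductance formulas numerically. The sketch below uses hypothetical helper names and the standard gambler's-ruin formula for a path with edge resistances \(r_i=1/C_i\): started from the first vertex of the branch, the walk restricted to the branch never returns to o with probability \(r_1/\sum _i r_i\). The sum is truncated after 50 edges, which is harmless since \(r_i=e^{-e^i}\) is doubly exponentially small.

```python
import math

def line_resistances(n_edges):
    """r_i = 1 / C_i = exp(-e**i), i = 1..n_edges (tiny values underflow to 0.0)."""
    return [math.exp(-math.exp(i)) for i in range(1, n_edges + 1)]

def escape_from_v1(n_edges=50):
    """Probability, from the first vertex of the Z_+ branch, of never returning
    to o: gambler's ruin on a path gives r_1 / sum_i r_i (truncated sum)."""
    r = line_resistances(n_edges)
    return r[0] / sum(r)

def step_away_prob(i):
    """P(v_i -> v_{i+1}) = C_{i+1} / (C_i + C_{i+1}), evaluated in log scale
    (valid for moderate i; math.exp(i) overflows for i > 709)."""
    return 1.0 / (1.0 + math.exp(math.exp(i) - math.exp(i + 1)))

print(escape_from_v1(), [round(step_away_prob(i), 6) for i in (1, 2, 3)])
```

Working with \(e^i-e^{i+1}\) rather than with \(C_i\) itself avoids overflowing \(e^{e^i}\), which already exceeds double precision for \(i=7\).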

Proposition 4.1

The polymer on \({\mathcal {G}}_{4.1}\) with i.i.d. bounded \(\omega (i,x)\) has \(\beta _c>0\) while for any \(\beta >0\), \(W_n(o,\beta )\) is not uniformly integrable.

Proof

Let \(v_1,v_2\) be the descendants of o that belong to \({\mathcal {T}}_2\) and let \(v_3\) denote the descendant of o that belongs to \({\mathbb {Z}}_+\). Let

Clearly, by the transience of the walk on \({\mathbb {Z}}_+\) with our conductances, \({\mathrm P}({\mathcal {C}})\in (0,1)\). Writing \(W_n=W_n(o,\beta )\), decompose \(W_n = W_n^{t} + W_n^{l},\) with \(W_n^l = {\mathrm E}[e_n {\mathbf {1}}_{{\mathcal {C}}}] = {\mathrm P}({\mathcal {C}})\, {\mathrm E}[e_n \,|\, {\mathcal {C}}]\). Set

We have that \({\mathrm P}({\mathcal {A}}_n^c|{\mathcal {C}}) < e^{-c n}\) for all n large (since the conditional walk has a conductance representation with increasingly strong drift away from the root). We now claim that for all \(\beta >0\),

Indeed, let \(v_i\) denote the ith vertex on the \({\mathbb {Z}}_+\) part of \({\mathcal {G}}_{4.1}\). By a union bound, using that the \(\omega (i,x)\) are bounded,

and thus we have that

where \({{\mathbb {E}}}[X_n]\le 1\). Hence \(W_n^l\rightarrow _{n\rightarrow \infty } 0\) in probability, and therefore \({{\mathbb {P}}}\)-a.s., since \(W_n^l\) is a non-negative martingale and thus converges a.s. On the other hand, \(W_n^t = {\mathrm E}[e_n {\mathbf {1}}_{{\mathcal {C}}^c}] \ge W_n^{t,*}\) where \(W_n^{t,*} = {\mathrm E}[e_n {\mathbf {1}}_{{\mathcal {D}}}]\) with

Note that \(W_n^{t,*}\) is a martingale and that

where \(W_n^{{\mathcal {T}}_2}(o,\beta )\) is the normalized partition function for the SRW on \({\mathcal {T}}_2\). We now claim that for \(\beta >0\) small enough,

see e.g. [27, (3.3b)] for a similar computation. Indeed, letting \(S,S'\) denote two independent copies of S, the SRW on \({{\mathcal {T}}}_2\) started at the root, and setting \(N_\infty =\sum _{i=1}^\infty \mathbf{1}_{S_i=S_i'}\), it is easy to check that there exists a constant \(c>0\) such that \({\mathrm E}(e^{cN_\infty })<\infty \). As a consequence, with \(\Lambda _2(\beta )\) as in (22), since \(\Lambda _2(\beta )\rightarrow _{\beta \rightarrow 0} 0\), we have \({{\mathbb {E}}}[(W_n^{{\mathcal {T}}_2}(o,\beta ))^2]\le {\mathrm E}(e^{\Lambda _2(\beta ) N_\infty })<\infty \) for \(\beta >0\) small enough, yielding (31). Thus, for small \(\beta \), \(W_n^{t,*}\) converges a.s. and in \(L^2\) to a limit \(W_\infty ^{t,*}\) with \({{\mathbb {E}}}W_\infty ^{t,*}= {\mathrm P}[{\mathcal {D}}] >0\). Hence, on the one hand, \(W_\infty = \lim _n W_n\) is, for small \(\beta \), positive with positive probability, which implies that it is in fact positive a.s., and thus \(\beta _c>0\). On the other hand, we have by (29) that for all \(\beta \in (0,\beta _0)\), \(W_\infty = W_\infty ^t\), where by Fatou’s lemma \({{\mathbb {E}}}[W_\infty ^t]\le {\mathrm P}({\mathcal {C}}^c)<1\). Therefore, since \({{\mathbb {E}}}[W_n]=1\) for all n, \((W_n)_{n\ge 0}\) cannot be uniformly integrable for \(\beta \in (0,\beta _0)\), and hence for any \(\beta >0\). \(\quad \square \)

4.3 Recurrence and heat kernel bounds: Proof of Theorems 2.11 and 2.14

Proof of Theorem 2.11

The proof is based on the change of measure technique introduced in [42]. By Assumption 1.1 and our convention that for any (i, x), \(\omega (i,x)\) has zero mean and variance 1, we obtain that for \(\delta >0\),

We fix \(x\in V\) and let \(W_n=W_n(x)\). Thanks to positive recurrence and the ergodic theorem, we can find an \(\varepsilon \in (0,1)\) such that under \({\mathrm P}_x\), the process \((S_k)\) spends at most a fraction \(1-\varepsilon \) of time away from x, i.e.

Now, fix \(\delta _n = n^{-1/2}\) and define the measure \(\widetilde{{{\mathbb {P}}}}\) by

where \({\mathcal {C}} = [1,n]\times \{x\}\). Under \(\widetilde{{{\mathbb {P}}}}\), the variables \((\omega (i,y))\) are independent, of mean \(-\delta _n {\mathbf {1}}_{(i,y)\in {\mathcal {C}}} (1+o_n(1))\) and variance \(1+o_n(1)\). Further, for \((i,y)\in {\mathcal {C}}\),

Then, for any \(\alpha \in (0,1)\), Hölder’s inequality yields that

The first term on the right hand side of the last display reads

On the other hand, by (35),

By our choice of \(\delta _n\) and (33), we conclude that \(\widetilde{{{\mathbb {E}}}}\left[ W_n \right] \rightarrow _{n\rightarrow \infty } 0\). Putting things together, we find that \({{\mathbb {E}}}[W_n^\alpha ]\rightarrow _{n\rightarrow \infty } 0\) for all \(\beta >0\), and since \(W_n^\alpha \) is uniformly integrable, necessarily \(W_\infty = 0\) and thus \(\beta _c=0\). \(\quad \square \)
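The Hölder step in this proof has, presumably, the following generic form, with conjugate exponents \(1/\alpha \) and \(1/(1-\alpha )\); this is a sketch of the standard inequality under the change of measure, not a verbatim reconstruction of the omitted display:

```latex
{{\mathbb E}}\left[W_n^\alpha\right]
  = \widetilde{{\mathbb E}}\left[W_n^\alpha\,\frac{\mathrm d{{\mathbb P}}}{\mathrm d\widetilde{{\mathbb P}}}\right]
  \le \widetilde{{\mathbb E}}\left[W_n\right]^{\alpha}\,
      \widetilde{{\mathbb E}}\left[\left(\frac{\mathrm d{{\mathbb P}}}{\mathrm d\widetilde{{\mathbb P}}}\right)^{\frac{1}{1-\alpha}}\right]^{1-\alpha}.
```

The factor \(\widetilde{{\mathbb E}}[W_n]^\alpha \) vanishes as \(n\rightarrow \infty \), while the density factor is controlled through the choice \(\delta _n=n^{-1/2}\), which keeps \(n\delta _n^2\) bounded.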

Proof of Theorem 2.14

The proof of point (i) parallels that of Theorem 2.11, and we use similar notation, with the change that now \(\delta _n = C_1^{-d_f/2} n^{-\frac{d_w+d_f}{2d_w}}\) where \(C_1>0\) is a parameter to be determined later, and

Note that \(n\delta _n ={ C_1^{-d_f/2}}n^{1/2-d/4}\rightarrow _{n\rightarrow \infty } \infty \) since \(d<2\). Introduce the measure \(\widetilde{{{\mathbb {P}}}}\) as in (34). Proceed as in (36) and, using that \(|B(x,n)|\le C n^{d_f}\) for some \(C>0\) and all \(n\ge 1\) by the hypotheses, bound the first term in the right hand side of (36), for all n large, by

On the other hand, as in (37), we have that

where the first term of the RHS in the last display goes to 0 as \(n\rightarrow \infty \), while by Markov’s inequality and estimate (17), we find that the second term is bounded from above by

for some positive function F such that \(F(x)\rightarrow 0\) as \(x\rightarrow \infty \), where the estimate in the second line holds for n large enough and is obtained by a Riemann-sum approximation, using that \(\mu \left( {\mathcal {S}}(x,p)\right) \le C_V p^{d_f-1}\) and \(d=2d_f/d_w <2\). We can now fix \(C_1\) large enough to make the right-hand side of the last display as small as we wish.

Putting things together, we find that for all \(\beta >0\), \({{\mathbb {E}}}[W_n^\alpha ] \rightarrow _{n\rightarrow \infty } 0\) and therefore \(W_\infty = 0\) a.s.
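For the record, the exponent bookkeeping behind the divergence of \(n\delta _n\) in point (i) is the following short computation, using \(d=2d_f/d_w\):

```latex
n\,\delta_n
  = C_1^{-d_f/2}\, n^{\,1-\frac{d_w+d_f}{2d_w}}
  = C_1^{-d_f/2}\, n^{\,\frac12-\frac{d_f}{2d_w}}
  = C_1^{-d_f/2}\, n^{\,\frac12-\frac{d}{4}},
```

so that \(n\delta _n\rightarrow \infty \) precisely when \(d<2\).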

We turn to the proof of Theorem 2.14 (ii). By Khas’minskii’s lemma [53, p. 8, Lemma 2], there is an \(L^2\)-region whenever

Note that \({\mathrm E}_{x}^{\otimes 2} [ N_\infty (S,S')] = \sum _{n=0}^\infty \sum _{y\in V} p_n(x,y)^2\). By (18) and (19), and using the uniform upper bound on \(\mu \),

The fact that \(d_f/d_w = d/2\) with \(d>2\) yields (39). \(\quad \square \)

4.4 Very strong disorder: proof of Propositions 1.8 and 1.9, and Theorem 2.19

Proof of Proposition 1.8

We claim that if \({{\bar{p}}}(x)\) in (7) satisfies \({{\bar{p}}}(x)<0\) for some \(x\in V\), then the same holds for all \(x\in V\), i.e. (7) holds. Indeed, let \(x,y\in V\) and \(m\ge 0\) such that \(y\in R_m(x)\) where \(R_m(x)=\{z\in V, p_m(x,z) > 0\}\). From (25), we see that for all \(n\ge 0\),

hence

which justifies our claim. We now complete the proof of Proposition 1.8. Let \(H_n(S) = \sum _{i=1}^n \omega (i,S_i)\). We have by Fubini:

and following the same arguments as in the proof of Proposition 1.2, we find that for all \(n\ge 1\), \(\beta \rightarrow {{\mathbb {E}}}\log W_n(x,\beta )\) is non-increasing. Therefore \({{\bar{p}}}(x)\) of (7) is also non-increasing with \(\beta \) and this concludes the proof. Finally, property (8) follows from the concentration inequality in Theorem 1.7 and the Borel–Cantelli lemma. \(\quad \square \)

We now turn to Proposition 1.9.

Proof of Proposition 1.9

Let \(d>0\) be such that the degree of every vertex is bounded by d. Let \(I(a)=\sup _{\theta >0}\left\{ \theta a -\Lambda (\theta ) \right\} \) be the rate function of the \(\omega (i,y)\)’s. For any nearest-neighbor path of vertices \({\mathbf {x}}=(x_0,\dots ,x_n)\), we write \(H_n({\mathbf {x}}) = \sum _{k=1}^n \omega (k,x_k)\). Let \(a>0\) and consider

Since \(I(a)\rightarrow \infty \) as \(a\rightarrow \infty \), we can choose a such that the last sum converges. Then, by the Borel–Cantelli lemma, \({{\mathbb {P}}}\)-almost surely, for n large enough we have \(H_n({\mathbf {x}}) \le na\) for every path \({\mathbf {x}}\), so that

Since the support of \(\omega \) is unbounded, we have \(\Lambda (\beta ) \gg \beta \) as \(\beta \rightarrow \infty \), therefore \(\limsup n^{-1} \log W_n(x_0) < 0\) a.s. for \(\beta \) large enough. The proof is concluded via (8). \(\square \)
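The omitted displays in this proof combine a union bound over paths with a Chernoff bound. A sketch of that step, assuming the paths are those of length n started at \(x_0\) (at most \(d^n\) of them, by the degree bound) and \(\beta \ge 0\): since the variables \(\omega (k,x_k)\) along a fixed path sit at distinct times, \({{\mathbb {E}}}[e^{\theta H_n({\mathbf {x}})}]=e^{n\Lambda (\theta )}\), and therefore

```latex
\begin{aligned}
{{\mathbb P}}\big(\exists\,{\mathbf x}:\ H_n({\mathbf x})\ge na\big)
  &\le d^{\,n}\,\sup_{{\mathbf x}}\,\inf_{\theta>0} e^{-\theta n a}\,
      {{\mathbb E}}\big[e^{\theta H_n({\mathbf x})}\big]
   = d^{\,n} e^{-nI(a)},\\
W_n(x_0) = {\mathrm E}_{x_0}\big[e^{\beta H_n(S)-n\Lambda(\beta)}\big]
  &\le e^{\,n(\beta a-\Lambda(\beta))}
  \quad\text{on } \{H_n({\mathbf x})\le na \text{ for all } {\mathbf x}\}.
\end{aligned}
```

The sum over n of the first line is finite as soon as \(I(a)>\log d\), and the second line gives \(\limsup n^{-1}\log W_n(x_0)\le \beta a-\Lambda (\beta )\), which is negative for \(\beta \) large.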

Proposition 1.8 provides uniform bounds on the decay exponent.

Proof of Theorem 2.19

The proof parallels that of Theorem 2.14. By Proposition 1.8, the limsup in (21) does not depend on the starting point \(x_0\).

Fix \(x_0\) as in Assumption 2.18 and let n be large enough so that \((A_i)_{i\in I}\) is a covering of \(B(x_0,nm)\). We have

where

By the elementary inequality \((a+b)^{\theta } \le a^{\theta } + b^{\theta }\) for \(a,b\ge 0\) and \(\theta \in (0,1)\), we obtain that

For \(i_1,\dots ,i_m\in I\), let \(J=J_0 \cup \dots \cup J_m\) with

Set \(\delta _n = C_1^{-d_f/2} n^{-\frac{d_w+d_f}{2d_w}}\). We have

Since \(\inf \mu > 0\), by (19), for n large enough the first factor on the right-hand side is bounded by

with \(\alpha = {\theta C_V }/{(1-\theta )}\). We will now show that for all \(m\ge 1\),

which by (42) will entail that

Using Markov’s property, the summand in (43) is bounded by

with \({\tilde{J}}_{i} = \{x\in V : d(x,A_i) \le C_1 n^{1/d_w}\}\). Hence (43) will follow once we show that

We decompose the left hand side of the last display as

The first sum is bounded by

where we have used (18), (19) and (20) with the fact that \(\mathrm {diam}(A_i) \le n^{d/2}\). For \(\theta \) close enough to 1, the last sum can be made smaller than \(\varepsilon /2\) by taking R large enough (which we fix from now on).

The second sum in (45) is bounded from above by

where by (20), the first factor is bounded by some constant \(C'=C'(R)\), and from (38) and the computation below it, we find that for \(C_1\) and n large enough (in this order), the second factor is bounded (uniformly in \(j\in I\)) by

Note that there exists \(C>0\) such that for all \(\beta \le 1\), we have \(\Lambda '(\beta ) \ge C \beta \). We now choose n to be any integer between \(C_2 \beta ^{-\frac{4}{2-d}}\) and \(2C_2 \beta ^{-\frac{4}{2-d}}\), with \(C_2\) fixed big enough to make n large enough and to ensure that the quantity in (46) is less than \(\varepsilon /(2C')\). This shows (43).

Now, let \(W_r(x,y) = {\mathrm E}[e_r {\mathbf {1}}_{S_r = y}]\) be the normalized point-to-point partition function. By Markov’s property, we have

where \(\eta _s\) is the shift of environment in time. We therefore get that

since \(\#\{x: {\mathrm P}(S_s = x)>0\} \le C_V s^{d_f}\) by (19) and \({{\mathbb {E}}}[W_r(x)] = 1\).

Hence, decomposing any \(t> n\) into \(t=nm_0+r\) with \(r\in [0,n)\), we obtain along with (44) that

Moreover, since

we find that

so letting \(t\rightarrow \infty \), we obtain that

with our choice of n [see below (46)]. This gives (21). \(\quad \square \)
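The elementary inequality \((a+b)^{\theta } \le a^{\theta } + b^{\theta }\) used in this proof follows in one line from the monotonicity of \(t\mapsto t^{\theta -1}\), which is non-increasing for \(\theta <1\):

```latex
(a+b)^{\theta}
  = a\,(a+b)^{\theta-1} + b\,(a+b)^{\theta-1}
  \le a\cdot a^{\theta-1} + b\cdot b^{\theta-1}
  = a^{\theta} + b^{\theta},
  \qquad a,b>0,\ \theta\in(0,1),
```

since \(a+b\ge \max (a,b)\); the cases \(a=0\) or \(b=0\) are trivial.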

5 Proofs for Sect. 1.4 and Theorems 2.1 and 2.6: The \(L^2\)-region

Proof of Proposition 1.11

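From the definitions it follows that the condition of \(L^2\)-boundedness in (11) reduces to a condition on two independent copies of the random walk:

\[ \sup _{n} {{\mathbb {E}}}[W_n(x)^2] = {\mathrm E}_{x}^{\otimes 2}\left[ e^{\Lambda _2(\beta ) N_\infty (S,S')}\right] , \qquad N_\infty (S,S') = \sum _{i=1}^\infty {\mathbf {1}}_{S_i=S'_i}, \qquad (47) \]

where \(\Lambda _2(\beta )\), defined in (22), is non-decreasing in \(\beta \).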

We claim that the finiteness of \(\sup _n {{\mathbb {E}}}[W_n(x)^2]\) does not depend on x. Indeed, by Markov’s property, we have for all \(x,y\in V\),

where \(\tau _{(y,y)}=\inf _n \left\{ n\ge 0: S_n=y, {S}'_n=y\right\} \) with \({\mathrm P}_{x,x}^{\otimes 2}(\tau _{(y,y)} < \infty ) > 0\) by irreducibility of the walk; this proves our claim.

Existence of the critical parameter \(\beta _2\) in Proposition 1.11 then comes from (47). \(\square \)
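The reduction to two independent walks can be spelled out as follows. This sketch assumes that (22) reads \(\Lambda _2(\beta )=\Lambda (2\beta )-2\Lambda (\beta )\), the usual definition; at a time i with \(S_i\ne S'_i\) the two environment variables are independent and their contributions cancel the normalization, while at a time with \(S_i=S'_i\) one gets \(e^{\Lambda (2\beta )-2\Lambda (\beta )}\):

```latex
\begin{aligned}
{{\mathbb E}}\big[W_n(x)^2\big]
 &= {\mathrm E}_x^{\otimes 2}\Big[\prod_{i=1}^n
     {{\mathbb E}}\big[e^{\beta(\omega(i,S_i)+\omega(i,S'_i))-2\Lambda(\beta)}\big]\Big]
  = {\mathrm E}_x^{\otimes 2}\Big[\prod_{i=1}^n
     e^{(\Lambda(2\beta)-2\Lambda(\beta))\,{\mathbf 1}_{S_i=S'_i}}\Big]\\
 &= {\mathrm E}_x^{\otimes 2}\big[e^{\Lambda_2(\beta)N_n(S,S')}\big],
 \qquad N_n(S,S')=\sum_{i=1}^n {\mathbf 1}_{S_i=S'_i}.
\end{aligned}
```

Since \(\Lambda \) is convex with \(\Lambda (0)=0\), \(\Lambda _2(\beta )\ge 0\), and monotone convergence in \(N_n\nearrow N_\infty \) gives the supremum over n.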

Proof of Theorem 1.13

We follow the lines of Section 3.3 in [16]. Let F be a test function. Since \(W_n(x,\beta )\rightarrow W_\infty (x,\beta )\) with \(W_\infty (x,\beta ) >0\) a.s., it is enough to show that

With \(S,S'\) independent copies of S, let \({\overline{F}}(x) = F(x) - {\mathrm E}[F(X)]\) and \(N_n = \sum _{k=1}^n {\mathbf {1}}_{S_k=S_k'}\) with \(N=\lim _{n\rightarrow \infty } N_n\). We have, with \(\Lambda _2=\Lambda _2(\beta )\) as in (22),

so since the above integrand is bounded by \(4\Vert F\Vert _\infty ^2 e^{\Lambda _2 N}\in L^1\), it is enough to show that as \(n\rightarrow \infty \),

where \(N,X_1,X_2\) are independent and \(X_i {\mathop {=}\limits ^{(d)}}X\) for \(i=1,2\). Let \(F_1,F_2,G\) be bounded Lipschitz functions. Consider:

where the \(\varepsilon ^i_{n,\ell }\) are defined implicitly. We have:

uniformly in \(n\ge \ell \) as \(\ell \rightarrow \infty \) since \(N_n\nearrow N\). Furthermore, for fixed \(\ell \), we obtain that \(\varepsilon ^2_{n,\ell } - \varepsilon ^1_{n,\ell } \rightarrow 0\) as \(n\rightarrow \infty \) by the Markov property, using that

with \(a_n^{-1} \rightarrow 0\) as \(n\rightarrow \infty \), where \(\Vert F_1 \Vert _{Lip}\) denotes the Lipschitz constant of \(F_1\). Now for fixed \(\ell \), we have by hypothesis that \(|{S}_{n-\ell }|/a_{n} {\mathop {\longrightarrow }\limits ^{{ (d)}}}X\) as \(n\rightarrow \infty \); therefore, letting first \(n\rightarrow \infty \) and then \(\ell \rightarrow \infty \), we obtain that \(B_n \rightarrow {\mathrm E}_{x}^{\otimes 2}[G(N)] {\mathrm E}[F_1(X)] {\mathrm E}[F_2(X)]\), which entails (48). \(\quad \square \)

Proof of Theorem 2.1

Let \((S_k)\) be recurrent and reversible with respect to a measure \((\pi (x))_{x\in V}\) which is bounded away from zero. We will show that \(\beta _2 = 0\). We first observe that \((S_{2k})\) is recurrent as well. Indeed, starting from any vertex x, either \((S_{2k})\) or \((S_{2k+1})\) visits x infinitely many times. If x has even period, then it has to be \((S_{2k})\); if it has odd period, then both visit x infinitely often by irreducibility of the walk.

Now, with \(S,S'\) denoting independent copies of S, let

Since the reversible measure satisfies \(\pi (x){\mathrm P}_x(S_n=y) = {\mathrm P}_y(S_n=x) \pi (y)\), we have:

where the last equality holds since \((S_{2k})_{k\ge 0}\) is recurrent. Hence the RHS of (47) is infinite when \(\beta > 0\) by the lower bound \(e^{x} \ge x\). \(\quad \square \)
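The reversibility computation behind this proof can be written out as follows (a sketch, with \(N_\infty \) counting coincidences at times \(i\ge 1\)). Since \(\pi (x)p_n(x,y)=\pi (y)p_n(y,x)\) and \(\pi \) is bounded away from zero,

```latex
\sum_{y\in V} p_n(x,y)^2
  = \sum_{y\in V} p_n(x,y)\,p_n(y,x)\,\frac{\pi(y)}{\pi(x)}
  \ge \frac{\inf_V \pi}{\pi(x)}\, p_{2n}(x,x),
\qquad
{\mathrm E}_x^{\otimes 2}[N_\infty]
  = \sum_{n\ge 1}\sum_{y\in V} p_n(x,y)^2
  \ge \frac{\inf_V \pi}{\pi(x)}\sum_{n\ge 1} p_{2n}(x,x) = \infty ,
```

where the last sum diverges because \((S_{2k})\) is recurrent. Combined with \(e^{\Lambda _2 N}\ge \Lambda _2 N\) and \(\Lambda _2(\beta )>0\) for \(\beta >0\), this makes the right-hand side of (47) infinite.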

Proof of Theorem 2.6

Recall the definition of the Green function of S in (15). Let \((S_k)\) be transient and reversible with respect to a measure \((\pi (x))_{x\in V}\) which is bounded from above and such that \(\sup _{x\in V} G(x,x)/\pi (x) < \infty \). We show that \(\beta _2>0\). Again, it is enough to show that (39) holds, and by (49),

from which the statement of the theorem follows. \(\quad \square \)
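The same reversibility identity gives the upper bound needed here. Assuming, as in (15), that \(G(x,x)=\sum _{n\ge 0}p_n(x,x)\), a sketch of the estimate is

```latex
\sum_{n\ge 1}\sum_{y\in V} p_n(x,y)^2
  = \sum_{n\ge 1}\sum_{y\in V} p_n(x,y)\,p_n(y,x)\,\frac{\pi(y)}{\pi(x)}
  \le \big(\sup_V \pi\big)\,\frac{1}{\pi(x)}\sum_{n\ge 1} p_{2n}(x,x)
  \le \big(\sup_V \pi\big)\,\frac{G(x,x)}{\pi(x)},
```

which is bounded uniformly in x by the hypothesis \(\sup _{x} G(x,x)/\pi (x)<\infty \), so that \(\sup _x {\mathrm E}_x^{\otimes 2}[N_\infty ]<\infty \).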

5.1 Criteria for \(\beta _2=0\) and proof of Theorem 2.4

The following expression for \({{\mathbb {E}}}(W_\infty ^2)\) will be useful in this section. Recall \(\Lambda _2=\Lambda _2(\beta )\), see (22).

Proposition 5.1

The following identity holds:

Proof

We have

Now expand the product and take \(n\rightarrow \infty \), using monotone convergence. \(\quad \square \)

Proof of Theorem 2.4

By Proposition 5.1, it is enough to show that for some \(x_0\in V\) and for all \(\delta >0\), there exists a constant \(C>0\) such that for all \(k\ge 1\),

We fix an arbitrary \(x_0\in V\) and \(\delta >0\). By the assumption of the theorem, there exists \(x^\star \in V\) such that

Therefore,

where \(C>0\) by the irreducibility of (S). This proves (50). \(\quad \square \)

We describe an application of Theorem 2.4 to the construction of a family of transient graphs satisfying \(\beta _2=0\).

Definition 5.2

A pipe in G is a chain of vertices \(v_1,\ldots ,v_L\) satisfying

1. \((v_i,v_{i+1})\in E\) for \(i=1,\ldots ,L-1\).

2. For \(i=2,\ldots ,L-1\), the degree of \(v_i\) is 2.
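Definition 5.2 translates into a small search routine. The sketch below is a hypothetical helper restricted to finite trees (on graphs with cycles a run of degree-2 vertices may close up into a cycle, and the count below would be wrong): it finds maximal runs of degree-2 vertices and adds their two outer neighbours as the endpoints \(v_1\) and \(v_L\).

```python
from collections import deque

def longest_pipe(adj):
    """Largest L such that a pipe v_1, ..., v_L exists in a finite *tree* given as
    an adjacency dict {vertex: [neighbours]}. Assumes the graph has no cycles."""
    deg2 = {v for v, nbrs in adj.items() if len(nbrs) == 2}
    best = 2 if any(adj.values()) else 1      # any single edge is a pipe with L = 2
    seen = set()
    for start in deg2:
        if start in seen:
            continue
        # breadth-first search through the maximal run of degree-2 vertices
        run, queue = 0, deque([start])
        seen.add(start)
        while queue:
            v = queue.popleft()
            run += 1
            for w in adj[v]:
                if w in deg2 and w not in seen:
                    seen.add(w)
                    queue.append(w)
        # in a tree the run is a path; its two outside neighbours are the ends
        best = max(best, run + 2)
    return best

path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}
star_with_tail = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0, 4], 4: [3, 5], 5: [4]}
print(longest_pipe(path), longest_pipe(star_with_tail))
```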

Proposition 5.3

Let G contain arbitrarily long pipes. Then \(\beta _2=0\) for the G/SRW polymer.

Proof

Consider a pipe of length L and let x be its center. Then \(p_k(x,x) = p_k^{\mathbb {Z}}(0,0)\) for \(k< L/2\), where \( p_k^{\mathbb {Z}}(x,y)\) is the transition probability for simple random walk on \({\mathbb {Z}}\). Hence \(\sum _{k} p_k(x,x)^2 \ge \sum _{k=1}^{L/2} p_k^{\mathbb {Z}}(0,0)^2 \asymp \log L \rightarrow \infty \) as \(L\rightarrow \infty \), which is condition (14). An application of Theorem 2.4 completes the proof. \(\quad \square \)
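The logarithmic growth in this proof can be seen numerically. The sketch below (hypothetical helper name) evaluates \(\sum _{k\le K} p_k^{\mathbb {Z}}(0,0)^2\) from the exact formula \(p_{2j}^{\mathbb {Z}}(0,0)=\binom{2j}{j}4^{-j}\); since \(p_{2j}^{\mathbb {Z}}(0,0)^2\approx 1/(\pi j)\), the sum grows like \(\pi ^{-1}\log K\) plus a bounded correction.

```python
import math

def sum_sq_returns(K):
    """sum_{k=1}^{K} p_k(0,0)**2 for simple random walk on Z, using
    p_{2j}(0,0) = C(2j, j) / 4**j (odd k contribute nothing)."""
    total, p = 0.0, 1.0
    for j in range(1, K // 2 + 1):
        p *= (2 * j - 1) / (2 * j)      # p_{2j} = p_{2j-2} * (2j-1) / (2j)
        total += p * p
    return total

# The sum in the proof runs over k <= L/2 for a pipe of length L.
for L in (10**2, 10**4, 10**6):
    print(L, sum_sq_returns(L // 2), math.log(L) / math.pi)
```

The two printed columns differ by a bounded amount, so multiplying L by 100 adds about \(\log (100)/\pi \approx 1.47\) to the sum.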

The condition in Theorem 2.4 can be relaxed to the following, writing \(\tau _S(x,L)\) for the hitting time of a ball of radius L around x. The proof is identical to that of Theorem 2.4 and is therefore omitted.

Theorem 5.4

Assume that

Then, \(\beta _2=0\).

5.2 \(\beta _2=0\) for the lattice supercritical percolation cluster

In this section, we consider G to be a supercritical percolation cluster on \({\mathbb {Z}}^d\), see Sect. 3.1 for definitions.

Theorem 5.5

\(\beta _2=0\) a.s. for SRW on the supercritical percolation cluster of \({\mathbb {Z}}^d\), \(d\ge 2\).

Recall the notion of pipe, see Definition 5.2. Theorem 5.5 follows at once from Proposition 5.3 and the next lemma.

Lemma 5.6

For all \(L\ge 1\) and \(d\ge 2\), there exist a.s. infinitely many pipes of length L in the supercritical percolation cluster.

Proof

Partition \({\mathbb {Z}}^d\) into boxes of side \(L+1\). Fix such a box B and let \({\mathcal {C}}\) denote the (unique) infinite cluster. Let \({\mathcal {E}}\) denote the set of all edges that connect two vertices in the boundary of B. Define the events

Note that \(A_1\) and \(A_2\) are increasing events. Hence, by the FKG inequality and \(p>p_c\),

On the other hand, let \({{\mathcal {P}}}_L\) denote the event that there exists in B a pipe \((v_1,\ldots ,v_L)\) of length L with \(v_1\) belonging to the boundary of B. Then since on \(A_2\), \(A_1\) only depends on the configuration outside B, we have that

Combining the above, we get

Thus, with positive probability, there exists a pipe of length L in the supercritical percolation cluster. By ergodicity, this implies the lemma. \(\quad \square \)

5.3 A transient graph with \(0=\beta _2<\beta _c\)

Recall Definition 5.2. Consider the graph \(G_{5.7}\) obtained by gluing to \({\mathbb {Z}}^d\), on the kth vertex of the line



a pipe of length k. See Fig. 3 for an illustration.

Theorem 5.7

The polymer on \(G_{5.7}\)/SRW with \(d\ge 4\) satisfies \(\beta _2=0\) and \(\beta _c>0\).

Proof

The first assertion \(\beta _2=0\) follows from Proposition 5.3, since \(G_{5.7}\) contains arbitrarily long pipes; it therefore remains to prove that \(\beta _c>0\). Denote by \(A=A(S)\) the event \(A=\{S \text { never enters } {\mathcal {D}}\}\). Then, when \(d\ge 4\),

where \(({\mathrm P}^{{\mathbb {Z}}^d},S)\) is the SRW on \({\mathbb {Z}}^d\).

We now introduce the martingale \(W_n(0,A)={\mathrm E}_0[e_n {\mathbf {1}}_A]\). We will prove that, for some \(\beta >0\), \(W_n(0,A)\) is uniformly bounded in \(L^2\). This will imply that for such \(\beta \), \({{\mathbb {E}}}[W_\infty (0,A)] = {\mathrm P}_0(A) > 0\), and since \(W_\infty (0) \ge W_\infty (0,A)\) a.s., we further obtain that \({{\mathbb {E}}}[W_\infty (0)]\ge {{\mathbb {E}}}[W_\infty (0,A)] > 0\) and hence, by Proposition 1.2, that \(\beta _c > 0\).

Turning to the \(L^2\) estimate, as in the proof of Proposition 5.1 we have, letting \(\mu _2=\mu _2(\beta ) = e^{\Lambda _2(\beta )} -1\),

with

Therefore, we obtain by summing that, for \(\beta \) small, since \(d\ge 4\),

where \(W_\infty ^{{\mathbb {Z}}^d}(0)\) denotes \(W_\infty ( 0)\) for the polymer on \({\mathbb {Z}}^d\)/SRW.

We turn to the last assertion of the theorem. By Corollary 2.25, it is enough to show that G satisfies the Liouville property. Let h be a bounded harmonic function on G. By harmonicity, h is constant on the kth pipe, equal to its value \(h_k\) at the vertex of the line where the pipe is attached. Thus, h restricted to \({\mathbb {Z}}^d\) is again harmonic. Since \({\mathbb {Z}}^d\) satisfies the Liouville property, h is constant on \({\mathbb {Z}}^d\), and hence on G. \(\quad \square \)

6 Polymers on Tree Structures

Throughout this section we take S to be the \(\lambda \)-biased random walk on either the Galton–Watson trees with offspring distribution \((p_k)_{k\ge 0}\) such that \(m = \sum k p_k >1\) (conditioned on non-extinction if \(p_0>0\)), or on the canopy tree, see Sects. 3.2 and 3.3 for definitions. We write \(D_n=\{v\in V: d(v,o)=n\}\) for vertices at level n of the tree. In the case of the Galton–Watson tree, expectations and probabilities with respect to the randomness of the tree will be denoted by \({\mathrm E}_\mu \) and \(\mu \) respectively. When \(p_0>0\), we write \(q=\mu (\text {extinction})\) and \(\mu _{0}(\cdot ) = \mu (\cdot |\text { non-extinction})\).

6.1 The positive recurrent Galton–Watson tree (\(\mathbf {\lambda > m}\))

Let \(({\mathcal {T}},(S_k))\) be the walk on the Galton–Watson tree \({\mathcal {T}}\) with parameters \(\lambda> m>1\), as defined in Sect. 3.2.

Theorem 6.1

Assume \(\lambda >m\). Then, almost surely on the realization of \({\mathcal {T}}\), strong disorder always holds (\(\beta _c=0\)). Moreover,

(i) If every vertex of the tree has exactly m children (i.e. \({p_m=1}\) for some integer m), then very strong disorder always holds, i.e. \(\bar{\beta _c}({\mathcal {T}}) = 0\), a.s.

(ii) More generally, if \(\sup \{{d}:p_d >0\} < \lambda \), then \(\mu _0\)-a.s., \(\bar{\beta _c}({\mathcal {T}}) = 0\).

(iii) If \({\mathrm E}_\mu [|D_1|\log ^+ |D_1|]<\infty \), \(p_0>0\) and there exists some \(d>\lambda \) such that \(p_d>0\), then \(\bar{\beta _c}({\mathcal {T}}) > 0\), \(\mu _0\)-a.s.

Remark 6.2

Point (iii) shows in particular that there are positive recurrent walks such that very strong disorder does not always hold.

Proof

The fact that \(\beta _c=0\) comes from Theorem 2.11. In order to see (i), let \(X_n=|S_n|\). Then \((X_n)\) is a random walk on \({\mathbb {Z}}_+\) which, away from 0, steps towards 0 with probability \(\lambda /(\lambda +m)\) and away from 0 with probability \(m/(\lambda +m)\), i.e. it has a constant bias towards 0. Therefore, the return time of \((S_n)\) to the root admits exponential moments and, by [14], very strong disorder always holds. Point (ii) holds since the problem can be reduced to a random walk \((Y_n)\) on half a line with inhomogeneous bounded bias towards the root. By a standard coupling argument, the walk \((X_n)\) stochastically dominates the walk \((Y_n)\), which then implies, as in (i), exponential moments for the return time.

We next prove (iii). Letting \(Z = \lim _{n} m^{-n} |D_n|\), it follows from the Kesten–Stigum theorem [39] that the condition \({\mathrm E}_\mu [|D_1|\log ^+ |D_1|]<\infty \) implies that \(\mu _{0}(Z > 0)=1\). Thus, for \(\varepsilon >0\) small and any \(c>0\),

In what follows, we write \(l=l_n=\lfloor (\log n)^c\rfloor \). Now fix any \(d>\lambda \) such that \(p_d>0\) and denote by \({\mathcal {R}}_n\) the \((d+1)\)-regular tree of depth \(L = \lfloor \log \log n \rfloor \). With a slight abuse of notation we also let

On the event \(A_n\), there are at least \((m-\varepsilon )^l\) vertices in \(D_{l}\) that may independently spawn \({\mathcal {R}}_n\) with probability \(p_d^{Q} \, p_0^{d^{L}}\), where \(Q=1+d+\dots + d^{L -1}\). Therefore,

where the finiteness of the last sum comes from taking \(c=c(d)\) large enough. By the Borel–Cantelli lemma and (52), we thus obtain that

We now fix a realization of the infinite tree \({\mathcal {T}}\) and n large so that \(B_n\) holds, and pick an \({\mathcal {R}}_n\) corresponding to that event. [Such an n exists \(\mu _0\)-almost surely by (53)]. Introduce the event

In words, the event \(F_n(S)\) means that the random walk goes directly to the bottom of one of the \({\mathcal {R}}_n\), and does not reach the root of that \({\mathcal {R}}_n\) before time n. We show next that the event \(F_n(S)\) has sub-exponential probability, namely that there exist positive constants \(c_1,c_2\) that depend only on \(\lambda \) and d such that, for all n large enough,

To prove (54), we first observe that on \(B_n\), the event \(\{S_l\in {\mathcal {R}}_n, |S_{k+1}|=|S_k|+1\; \text {for}\; k=1,\ldots l+L\}\) has probability bounded from below by \(Ce^{-(l+L)}\). Once at the bottom, the probability of reaching the top of \({\mathcal {R}}_n\) before returning to the bottom of \({\mathcal {R}}_n\) equals the probability of reaching L before reaching 1 for the random walk on \([1,L]\cap {\mathbb {N}}\) started at 2 that jumps right with probability \(\lambda /(\lambda +d)\) and left with probability \(d/ (\lambda +d)\). Since \(d>\lambda \), this probability is equivalent as \(L\rightarrow \infty \) to \(c_3 (\frac{\lambda }{d})^{L}\), with \(c_3=c_3(\lambda ,d)\). Therefore, the probability, starting from the bottom of \({\mathcal {R}}_n\), of not reaching the root of \({\mathcal {R}}_n\) at all before time n is bounded from below by (recall that \(L=\lfloor \log \log n \rfloor \))

for some constant \(c_4=c_4(d,\lambda )\). Combining these estimates with the Markov property leads to (54).
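The gambler's-ruin asymptotics used above can be checked with a short computation. The sketch below (hypothetical helper name) computes the probability of hitting L before 1 by the shooting method for the harmonic function with \(h(1)=0\), \(h(2)=1\), and compares it with the closed form \((r-1)/(r^{L-1}-1)\), \(r=d/\lambda \). The ratio to \((\lambda /d)^L\) tends to \(r(r-1)\), which identifies \(c_3=\frac{d}{\lambda }\big (\frac{d}{\lambda }-1\big )\) for this particular model.

```python
def reach_top_first(lam, d, L):
    """P(hit L before 1) for the walk on {1, ..., L} started at 2 that steps
    right w.p. lam/(lam+d) and left w.p. d/(lam+d); requires L >= 2.
    Shooting method: h(1) = 0, h(2) = 1 and h(i) = p h(i+1) + q h(i-1)."""
    p = lam / (lam + d)
    q = d / (lam + d)
    h = [0.0, 0.0, 1.0]                 # h[0] is unused; h[1] = 0, h[2] = 1
    for i in range(2, L):
        h.append((h[i] - q * h[i - 1]) / p)
    return h[2] / h[L]

lam, d = 1.5, 3.0
for L in (10, 20, 30):
    prob = reach_top_first(lam, d, L)
    r = d / lam
    closed_form = (r - 1) / (r ** (L - 1) - 1)
    print(L, prob, closed_form, prob / (lam / d) ** L)   # last ratio -> r (r - 1)
```

The forward recursion is numerically stable here because the growing mode \(r^i\) dominates the constant mode.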

We can now turn to the conclusion of the proof. Define \(Y_n:={\mathrm E}_{o}[e_n {\mathbf {1}}_{F_n}]\). We will show below that for \(\beta >0\) small enough,

Assuming (55), we have by the Paley–Zygmund inequality that

for n large enough, where we used (54) and that \({{\mathbb {E}}}[Y_n] = {\mathrm P}_{o}(F_n)\). Since \(W_n \ge Y_n\), we further obtain from (56) that there exists a positive sequence \(\alpha _n\) satisfying \(\alpha _n = o(n)\) as \(n\rightarrow \infty \), such that

for some positive constants C and \(c'\). On the other hand, if very strong disorder holds for \(\beta >0\), that is if \({{\mathbb {E}}}[\log W_n] \le -\varepsilon n\) for n large enough and some \(\varepsilon \in (0,1)\), then by the concentration inequality (6), we obtain that

which cannot hold at the same time as (57). Hence very strong disorder does not hold for such \(\beta \).
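The second-moment step above is an instance of the Paley–Zygmund inequality \({{\mathbb {P}}}(Y>\theta {{\mathbb {E}}}Y)\ge (1-\theta )^2({{\mathbb {E}}}Y)^2/{{\mathbb {E}}}[Y^2]\) for \(Y\ge 0\) and \(\theta \in [0,1]\). With \(\theta =1/2\) it reads

```latex
{{\mathbb P}}\Big(Y_n > \tfrac12\,{{\mathbb E}}[Y_n]\Big)
  \ge \frac14\,\frac{{{\mathbb E}}[Y_n]^2}{{{\mathbb E}}[Y_n^2]},
\qquad {{\mathbb E}}[Y_n] = {\mathrm P}_o(F_n),
```

so that, assuming (55) bounds \({{\mathbb {E}}}[Y_n^2]\) by a constant multiple C of \({{\mathbb {E}}}[Y_n]^2\) uniformly in n, the event \(\{W_n\ge Y_n>\frac12{\mathrm P}_o(F_n)\}\) has probability at least \(1/(4C)\), while by (54) \({\mathrm P}_o(F_n)\) is not exponentially small.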

We now come back to the proof of (55). We have,

Since on \(F_n\) both walks go directly to the bottom of some \({\mathcal {R}}_n\) as above (this takes about \((\log n)^c\) steps), we have, for \(x_0\) a leaf of \({\mathcal {R}}_n\),

Then, observe that the number of intersections of the walks before they leave \({\mathcal {R}}_n\) is stochastically dominated by the total number of intersections that would occur in any infinite tree having \({\mathcal {R}}_n\) attached to some vertex. In particular, (55) holds if we can find such an infinite tree T for which \(\beta _2>0\). We choose T to be the canopy tree with parameter d and bias \(\lambda \), see Sect. 3.3 for definitions; note that \(d>\lambda >m>1\) here. The claimed positivity of \(\beta _2\) for the canopy tree now follows from Theorem 6.6. \(\quad \square \)
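The choice \(c=c(d)\) in the Borel–Cantelli step of this proof can be made explicit. Assuming that the omitted sum has the form \(\sum _n \big (1-p_d^{Q}p_0^{d^{L}}\big )^{(m-\varepsilon )^{l}}\), as the independence of the \((m-\varepsilon )^l\) attempts suggests, and using natural logarithms together with \(d\ge 2\) and \(1-u\le e^{-u}\), a sketch of the estimate is

```latex
\begin{aligned}
Q &= \frac{d^{L}-1}{d-1} \le d^{L} \le d^{\log\log n} = (\log n)^{\log d},\\
p_d^{Q}\,p_0^{d^{L}} &\ge (p_d\,p_0)^{(\log n)^{\log d}} = e^{-\kappa(\log n)^{\log d}},
  \qquad \kappa := -\log(p_d\,p_0) > 0,\\
\big(1-p_d^{Q}\,p_0^{d^{L}}\big)^{(m-\varepsilon)^{l}}
 &\le \exp\!\Big(-(m-\varepsilon)^{(\log n)^c-1}\, e^{-\kappa(\log n)^{\log d}}\Big).
\end{aligned}
```

For \(c>\log d\) and \(m-\varepsilon >1\), the quantity inside the exponential grows faster than \(2\log n\), so the terms are eventually at most \(n^{-2}\) and the series converges.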

6.2 The transient Galton–Watson tree (\(\mathbf {\lambda < m}\))

Let \(({\mathcal {T}},(S_k))\) be the walk on the Galton–Watson tree \({\mathcal {T}}\) with parameters \(\lambda < m\), as defined in Sect. 3.2.

Theorem 6.3

Assume that \(m>1\), \(\lambda <m\), and \(p_0=0\). Then, almost surely on the realization of \({\mathcal {T}}\), \(\beta _c>0\). If further \(p_1>0\), then \(\beta _2=0\).

We remark that the particular case \(\lambda =1\), which corresponds to the SRW, is covered by Theorem 6.3.

Proof

That \(\beta _2=0\) when \(p_1>0\) follows from Proposition 5.3 upon observing that there exist (due to \(p_1>0\)) arbitrarily long pipes. We thus only need to prove that \(\beta _c>0\). Throughout the proof, we write GW for the law of \({\mathcal {T}}\), and abbreviate \({\mathrm P}={\mathrm P}_o\), \({\mathrm P}^{\otimes 2}={\mathrm P}_o^{\otimes 2}\), with similar notation for expectation. Recall that for \(v\in V\), \(\tau _v=\inf \{k>0: S_k=v\}\). A preliminary step is the following lemma.

Lemma 6.4

There exists \(c\in (0,\infty )\) such that GW-a.s., there exists \(N=N({\mathcal {T}})>0\) such that for all \(n\ge N\),

Proof

Let \(\delta >0\). For \(w\in V\) and j a descendant of w, define

For \(v\in D_n\), let \([x_0=o,\dots ,x_n=v]\) be the unique simple path connecting the root to v. It follows from [25, Lemma 2.2] that for \(\delta >0\) small enough, there exist \(\alpha >0\) and \(N_0({\mathcal {T}})\) such that for \(n \ge N_0({\mathcal {T}})\),

Fix \(v\in D_n\). By the Markov property, we have that for \(n\ge N_0({\mathcal {T}})\),

where the last bound holds uniformly over \(v\in D_n\). \(\quad \square \)

We also need the following lemma. In the statement, \(S,S'\) denote two independent copies of \((S_k)\) on \({\mathcal {T}}\). Let \({\mathcal {F}}_S\) denote the \(\sigma \)-algebra generated by S. Also, let

denote the intersection times and locations of S and \(S'\).

Lemma 6.5

There exists \(c_2\in (0,\infty )\) such that GW-a.s., there exists \(\ell _0=\ell _0({\mathcal {T}},S)\) so that

Proof

We say that \(\ell \) is a regeneration level for S if there is a time \(\sigma \ge 0\) such that \(|S_k| < \ell \) for all \(k<\sigma \) and \(|S_k| \ge \ell \) for all \(k\ge \sigma \). It follows from [25, Lemma 4.2] that S possesses infinitely many regeneration levels, whose successive differences are independent and admit exponential moments under the annealed law \(GW\times {\mathrm P}\). In particular, this implies that, for some \(c>0\),

Therefore, by the Borel–Cantelli lemma, \(GW\times {\mathrm P}\)-a.s., there is at least one regeneration level \(\ell _0\) for S in \([\ell /2,\ell ]\) for all \(\ell \ge \ell _1({\mathcal {T}},S)\). We continue the proof on that event.

Let \(h\in D_{\ell /2}\) be the last vertex visited by S at level \(\ell /2\) before it regenerates at level \(\ell _0\). Using Lemma 6.4 at the second inequality, we have that

\(\square \)
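To make the notion of regeneration level concrete, the following minimal sketch extracts regeneration levels from a finite record of levels \(|S_0|,\dots ,|S_n|\) of a nearest-neighbour path started at the root. It uses the standard form of the definition, in which \(\sigma \) is the first time level \(\ell \) is reached and the path stays at levels \(\ge \ell \) from then on. The function name is hypothetical, and on a finite horizon the output is only provisional: a level accepted here could be lost if the path were continued.

```python
def regeneration_levels(levels):
    """Return the levels ell >= 1 that are regeneration levels of the finite
    nearest-neighbour path with levels[k] = |S_k| and levels[0] = 0.

    ell qualifies if, with sigma the first hitting time of ell, every level
    from time sigma on is >= ell (finite-horizon sketch only).
    """
    n = len(levels)
    # suffix_min[k] = min(levels[k:]), filled from right to left
    suffix_min = [0] * n
    current = float("inf")
    for k in range(n - 1, -1, -1):
        current = min(current, levels[k])
        suffix_min[k] = current
    # first_hit[lev] = first time the path is at level lev
    first_hit = {}
    for k, lev in enumerate(levels):
        first_hit.setdefault(lev, k)
    return [ell for ell in range(1, max(levels) + 1)
            if ell in first_hit and suffix_min[first_hit[ell]] >= ell]


# Levels 1, 3 and 5 are never undercut once reached; 2 and 4 are.
print(regeneration_levels([0, 1, 2, 1, 2, 3, 4, 3, 4, 5]))  # [1, 3, 5]
```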

We return to the proof of Theorem 6.3. By Theorem 2.22, it is enough to show that for some \(\beta >0\),

It follows from [46] that

In particular, there is a random variable \(c_1=c_1(S)\) such that, for all \(k\ge 1\), S spends at most \(c_1 v^{-1} k\) time steps above level k. Setting

we obtain from the Borel–Cantelli lemma and (59) that \(L<\infty \), \(GW\times {\mathrm P}^{\otimes 2}\)-a.s., and therefore

where in the last inequality \(\ell _0\) is as in Lemma 6.5, \(T_{\ell _0({\mathcal {T}},S)}(S)\) denotes the last time k with \(|S_k|\le \ell _0({\mathcal {T}},S)\), and we used (59). Choosing \(\beta \) small enough that \(\Lambda _2 c_1 <c_2 v\) ensures that the right-hand side of (63) is finite, \(GW\times {\mathrm P}\)-a.s. This concludes the proof.

\(\square \)
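The argument above relies, through [46], on the walk moving away from the root at a positive linear rate (the quantity v, which we read as the speed), so that it spends only order k time steps above level k. The following sketch simulates a biased walk on a Galton–Watson tree that is generated lazily as the walk explores it, and records the levels \(|S_k|\). The transition rule is an assumption standing in for the definition of Sect. 3.2 (which is not reproduced here): from a non-root vertex with k children, move to the parent with probability \(\lambda /(\lambda +k)\) and to each child with probability \(1/(\lambda +k)\); from the root, move to a uniform child. The function name is hypothetical.

```python
import random


def biased_walk_levels(offspring, lam, n_steps, seed=0):
    """Levels |S_0|, ..., |S_n| of a lambda-biased walk on a lazily generated
    Galton-Watson tree (assumed transition rule, see the lead-in).

    offspring(rng) must return an integer >= 1, i.e. p_0 = 0.
    """
    rng = random.Random(seed)
    parent, children, depth = [None], [None], [0]  # vertex 0 is the root o
    v, levels = 0, [0]
    for _ in range(n_steps):
        if children[v] is None:  # first visit to v: sample its offspring
            k = offspring(rng)
            first = len(parent)
            children[v] = list(range(first, first + k))
            parent.extend([v] * k)
            children.extend([None] * k)
            depth.extend([depth[v] + 1] * k)
        k = len(children[v])
        if v != 0 and rng.random() < lam / (lam + k):
            v = parent[v]                  # step towards the root
        else:
            v = rng.choice(children[v])    # step to a uniform child
        levels.append(depth[v])
    return levels


# Binary tree with lam = 1 (SRW): away from the root the level moves up with
# probability 1/3 and down with probability 2/3, so |S_n|/n should be near 1/3.
levels = biased_walk_levels(lambda rng: 2, 1.0, 20_000)
print(levels[-1] / 20_000)
```

Replacing `lambda rng: 2` by, say, `lambda rng: rng.choice([1, 2, 3])` gives an offspring law with \(p_1>0\), the case in which the theorem also asserts \(\beta _2=0\).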

6.3 The canopy tree

We consider the walk \((\mathtt T,(S_k))\) on the canopy tree \(\mathtt T\) defined in Sect. 3.3, with parameters \(m=d>\lambda >1\). In this section, we prove the following:

Theorem 6.6

\(\beta _2>0\) for the polymer on \((\mathtt T,(S_k))\) with \(m>\lambda >1\).

Even though the walk is in this case reversible and transient, the existence of an \(L^2\)-region does not follow directly from Theorem 2.6: with \(c_{xy}\) denoting the conductance of the edge (x, y), the reversing measure \(\pi (x):=\sum _{x\sim y} c_{xy}\), which equals \(\lambda ^{\ell -1} (\lambda +m)\) when x belongs to level \(\ell \), diverges as \(\ell \rightarrow \infty \).

We briefly describe the situation. When \(m>\lambda \), inside each finite \((m+1)\)-regular sub-tree of the canopy tree there is a downward drift which creates traps for the walk, in the sense that the walk spends a long time in finite subtrees at the bottom of the tree before leaving them forever by transience. Even though the walk spends significant time in these finite sub-trees, the branching structure (\(m>1\)) makes it hard for two independent copies to meet frequently, and this, combined with transience, allows an \(L^2\)-region to exist.

The rest of the section is devoted to the proof of Theorem 6.6. Section 6.3.1 develops some standard preliminary estimates for one-dimensional random walks, and Sect. 6.3.2 contains the actual proof.

6.3.1 Random walk estimates

Fix \(\gamma >1\). Consider the random walk \((X_k)\) on \(\{0,1,\ldots ,\ell \}\) with conductance \(\gamma ^i\) on the edge \((i,i+1)\), \(i=0,\ldots ,\ell -1\). Let \(\tau _i=\min \{t>0: X_t=i\}\). We write \({\mathrm P}_i\) for the law of the random walk with \(X_0=i\).

Lemma 6.7

There exists a constant \(c>0\) such that for all \(\ell > 0\) and \(t>0\),

Proof

By a conductance computation, \(q_\ell := {\mathrm P}_\ell (\tau _0 < \tau _\ell ) \le c \gamma ^{-\ell }\) for some constant c. We use the pathwise decomposition \(\tau _0=\sum _{j=1}^{{\mathcal {G}}} {\hat{\tau }}^j+ {\hat{\tau }}_0\), where the \({\hat{\tau }}^j\) are i.i.d. with the law of \(\tau _\ell \) under \({\mathrm P}_\ell \) conditioned on \(\tau _\ell <\tau _0\), \({{\mathcal {G}}}\) is a geometric random variable with success parameter \(q_\ell \) (the number of failures before the first success), and \({\hat{\tau }}_0\) has the law of \(\tau _0\) under \({\mathrm P}_\ell \) conditioned on \(\tau _0<\tau _\ell \), with the \({\hat{\tau }}^j\)s, \({\mathcal {G}}\) and \({\hat{\tau }}_0\) independent. Then,

where in the last inequality we have used that the quantity inside the expectation on the second line is almost surely bounded by 1, since \(k\mapsto \sum _{j=1}^k {\hat{\tau }}^j\) takes the value t at most once (the \({\hat{\tau }}^j\)’s are positive). We finally obtain that

\(\square \)
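The conductance estimate \(q_\ell \le c\gamma ^{-\ell }\) at the start of the proof can be checked exactly for small \(\ell \). From \(\ell \) the walk steps to \(\ell -1\) with probability 1, and from \(\ell -1\) it reaches 0 before \(\ell \) with probability \(R(\ell -1,\ell )/R(0,\ell )\), where R is the series resistance. This gives \(q_\ell = \gamma ^{-(\ell -1)}/\sum _{i=0}^{\ell -1}\gamma ^{-i}\le \gamma \cdot \gamma ^{-\ell }\), so \(c=\gamma \) works. The sketch below, with hypothetical function names, computes \(q_\ell \) in two independent ways using exact rational arithmetic: from the resistance formula, and by solving the harmonic (tridiagonal) equations.

```python
from fractions import Fraction


def q_by_resistance(gamma, ell):
    """q_ell = P_ell(tau_0 < tau_ell) on {0,...,ell}, conductance gamma**i on
    (i, i+1), via P_{ell-1}(tau_0 < tau_ell) = R(ell-1, ell) / R(0, ell)."""
    g = Fraction(gamma)
    resist = [1 / g ** i for i in range(ell)]  # resistance of edge (i, i+1)
    return resist[ell - 1] / sum(resist)


def q_by_harmonic(gamma, ell):
    """Same quantity, from h(x) = P_x(tau_0 < tau_ell) solving
    c_{x-1}(h(x) - h(x-1)) = c_x(h(x+1) - h(x)), h(0) = 1, h(ell) = 0."""
    if ell == 1:
        return Fraction(1)  # from 1 the walk steps to 0 immediately
    g = Fraction(gamma)
    c = [g ** i for i in range(ell)]                # c[i] = conductance (i, i+1)
    n = ell - 1                                     # unknowns h(1), ..., h(ell-1)
    lower = [-c[k] for k in range(n)]               # coefficient of h(x-1)
    diag = [c[k] + c[k + 1] for k in range(n)]      # coefficient of h(x)
    upper = [-c[k + 1] for k in range(n)]           # coefficient of h(x+1)
    rhs = [Fraction(0)] * n
    rhs[0] = c[0]                                   # boundary value h(0) = 1
    for k in range(1, n):                           # Thomas algorithm: forward sweep
        w = lower[k] / diag[k - 1]
        diag[k] -= w * upper[k - 1]
        rhs[k] -= w * rhs[k - 1]
    h = [Fraction(0)] * n
    h[-1] = rhs[-1] / diag[-1]                      # h(ell) = 0 drops out
    for k in range(n - 2, -1, -1):                  # back substitution
        h[k] = (rhs[k] - upper[k] * h[k + 1]) / diag[k]
    return h[-1]                                    # h(ell - 1) = q_ell


print(q_by_resistance(2, 4), q_by_harmonic(2, 4))  # 1/15 1/15
```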

6.3.2 Proof of Theorem 6.6

Equipped with the estimates of Sect. 6.3.1, we can now give the proof.

Proof of Theorem 6.6

We will prove that condition (39) is verified, that is

For \(x,y\in \mathtt T\), recall the definition of the Green function:

For all \(\ell \ge 0\), we label by \(\ell \in \mathtt T\) the \(\ell \)th vertex on the left-most ray of \(\mathtt T\), see Fig. 1. We first observe that:

Indeed, by transience, the number of visits of S to \(\ell \) is stochastically dominated by the number of visits to site \(\ell \) of a biased random walk on \({\mathbb {Z}}\) started at \(\ell \), with jump probability to the right equal to \(\lambda /(\lambda +1)\) and geometric holding times. The law of the latter is independent of \(\ell \), and since this walk is transient, its Green function is bounded. This proves (66).

We next show that

Note that, by the symmetry properties of the canopy tree, this will directly entail (65). For all \(\ell \ge 0\), let \(\mathtt T^{(\ell )}\) denote the \((m+1)\)-regular tree of height \(\ell \) rooted at \(\ell \in \mathtt T\), whose root has degree m, see Fig. 1. We decompose:

We first deal with \(A_{\ell _0}\). For \(\ell \ge 0\), define \({{\bar{p}}}^{(\ell )}_{t}\) to be the transition probability of S after all vertices at the same level in \(\mathtt T^{(\ell )}\) have been glued together and the corresponding conductances have been summed. Label by \(w\in [0,\ell ]\) the node of depth w in the glued version of \(\mathtt T^{(\ell )}\), so that \({{\bar{p}}}^{(\ell )}_{t}(w,w')\) stands for the probability for \((S_k)\) to go from depth w to depth \(w'\) in \(\mathtt T^{(\ell )}\) after t steps.
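The link between the glued tree and the one-dimensional walk of Sect. 6.3.1 can be made explicit under an assumption on the conductances, which are defined in Sect. 3.3 and not reproduced here. Assuming, consistently with the expression \(\pi (x)=\lambda ^{\ell -1}(\lambda +m)\) given above, that each edge between levels j and \(j+1\) of \(\mathtt T\) carries conductance \(\lambda ^j\), the \(m^{w+1}\) edges between depths w and \(w+1\) of \(\mathtt T^{(\ell )}\) join levels \(\ell -w-1\) and \(\ell -w\), and gluing gives

```latex
\bar c^{(\ell)}_{w,w+1} \;=\; m^{w+1}\,\lambda^{\ell-w-1}
\;=\; m\,\lambda^{\ell-1}\Bigl(\frac{m}{\lambda}\Bigr)^{w},
\qquad 0\le w\le \ell-1 .
```

The constant prefactor \(m\lambda ^{\ell -1}\) does not affect transition probabilities, so inside \(\mathtt T^{(\ell )}\) the glued walk is the walk of Sect. 6.3.1 with \(\gamma =m/\lambda >1\), drifting towards the leaves; this is the trapping mechanism described at the beginning of Sect. 6.3.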

By symmetry, we observe that \(p_t(\ell ,y)=m^{-w}{{\bar{p}}}^{(\ell )}_{t}(0,w)\) for all \(y\in \mathtt T^{(\ell )}\) of depth w. Moreover, by reversibility,

Therefore,

By the Markov property, the identity \({{\bar{p}}}^{(\ell )}_{t}(0,0) = p_t(\ell ,\ell )\) and (66), we have that

and it follows from (69) that \(\sup _{\ell _0} A_{\ell _0} < \infty \) since \(\lambda > 1\).

We turn to \(B_{\ell _0}\). By the Markov property, we have that

where \(\tau _\ell \) denotes the first hitting time of \(\ell \in \mathtt T\). By symmetry, as in (69),

where in \(B_{\ell _0}^{(1)}\) the sum in w is restricted to \(w\le \ell -\ell _{0}\). By (68) and since \(\sum _{s=0}^t {\mathrm P}_{\ell _0}(\tau _\ell = s) \,{{\bar{p}}}^{(\ell )}_{t-s}(0,w)\le 1\), we have that

so by summing first over \(t\ge 0\) and using (70), we find that

Identity (68) further gives that

and, by (64) applied with \(\gamma =m/\lambda \) and by (70), letting \(k_\ell = c \left( m / \lambda \right) ^\ell \), we have

for some finite C, so that

The sum inside the parentheses above is again uniformly bounded. Moreover, there is some \(C<\infty \) such that:

In any case, \(\sum _{\ell > 0} k_\ell ^{-1} \sum _{w=1}^\ell \left( {m}/{\lambda ^2}\right) ^{w} < \infty \), so putting things together we obtain that \(\sup _{\ell _0} B_{\ell _0} <\infty \), which concludes the proof. \(\quad \square \)
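The final summability claim splits into two cases according to the value of \(m/\lambda ^2\); recall that \(k_\ell = c(m/\lambda )^\ell \), and use \(\sum _{w=1}^{\ell } r^w\le r^{\ell }\, r/(r-1)\) for \(r>1\):

```latex
\begin{aligned}
\frac{m}{\lambda^2}>1 &: \quad k_\ell^{-1}\sum_{w=1}^{\ell}\Bigl(\frac{m}{\lambda^2}\Bigr)^{w}
 \le \frac{1}{c}\,\frac{m}{m-\lambda^2}\Bigl(\frac{\lambda}{m}\Bigr)^{\ell}\Bigl(\frac{m}{\lambda^2}\Bigr)^{\ell}
 = \frac{1}{c}\,\frac{m}{m-\lambda^2}\,\lambda^{-\ell},\\
\frac{m}{\lambda^2}\le 1 &: \quad k_\ell^{-1}\sum_{w=1}^{\ell}\Bigl(\frac{m}{\lambda^2}\Bigr)^{w}
 \le \frac{\ell}{c}\Bigl(\frac{\lambda}{m}\Bigr)^{\ell}.
\end{aligned}
```

Both bounds are summable in \(\ell \): the first because \(\lambda >1\), the second because \(m>\lambda \).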

6.4 Presence of an \(L^2\)-region for a recurrent walk

Let \({\mathcal {T}}_2\) be the infinite binary tree and denote its root by o. We will define a walk on \({\mathcal {T}}_2\) which, at each step, moves from the current vertex to one of its two children, each chosen with probability 1/2, until a clock rings and brings the walk back to the root. If the distribution of the clock has a sufficiently heavy tail, the number of intersections of two independent walks will be small enough to allow for \(\beta _2>0\).

Turning to the actual construction, consider the graph product \(G_0 = {\mathcal {T}}_2 \times {\mathbb {Z}}^2\) where \({\mathbb {Z}}^2\) stands for the two-dimensional lattice. Let \(G=(V,E)\) be the subgraph of \(G_0\) where \(V=\{(v,x)\in G_0 : d(o,v) \ge \Vert x \Vert _1\}\) and E contains the edges of \(G_0\) for which both ends are in V. Let \(q(x,y)=1/4\) if \(x,y\in {\mathbb {Z}}^2\) satisfy \(|x-y|=1\). Define the walk \(S_n=(T_n,X_n)\) on G by the transition probabilities

for all edges \(((v,x),(w,y))\in E\). With this definition, \((X_n)\) is the SRW on \({\mathbb {Z}}^2\), and \(T_n\) is a walk on the binary tree that jumps to one of its two children, each with probability 1/2, when \(X_{n+1}\ne 0\), and jumps back to the root when \(X_{n+1}=0\).
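The verbal description of \((S_n)\) translates directly into a simulation. In the minimal sketch below, vertices of \({\mathcal {T}}_2\) are encoded by heap labels (root \(o=1\), children of v are 2v and \(2v+1\)) purely for convenience, so that \(d(o,v)\) is the bit length of v minus one; the function names are hypothetical. The checks confirm that every visited state lies in V, that \(T_n=o\) exactly when \(X_n=0\), and that \(d(o,T_n)\) and \(\Vert X_n\Vert _1\) always have the same parity.

```python
import random


def simulate_tree_plane_walk(n_steps, seed=0):
    """Path (T_0, X_0), ..., (T_n, X_n) of the walk on G, following the
    verbal description: X is SRW on Z^2, and T moves to a uniform child
    unless the new X is 0, in which case T jumps back to the root."""
    rng = random.Random(seed)
    v, x = 1, (0, 0)  # heap label 1 is the root o
    path = [(v, x)]
    for _ in range(n_steps):
        dx, dy = rng.choice(((1, 0), (-1, 0), (0, 1), (0, -1)))
        x = (x[0] + dx, x[1] + dy)  # q(x, y) = 1/4 for nearest neighbours
        v = 1 if x == (0, 0) else 2 * v + rng.randrange(2)
        path.append((v, x))
    return path


def depth(v):
    """d(o, v) for the heap label v."""
    return v.bit_length() - 1


path = simulate_tree_plane_walk(2000, seed=1)
assert all(depth(v) >= abs(x[0]) + abs(x[1]) for v, x in path)  # states in V
assert all((v == 1) == (x == (0, 0)) for v, x in path)          # reset rule
print(sum(v == 1 for v, _ in path), "visits to the root")
```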

Theorem 6.8

The walk \((S_n)\) is recurrent and satisfies \(\beta _2>0\) for the associated polymer.

Proof

Note that \((X_n)\) is the SRW on \({\mathbb {Z}}^2\). Since \((X_n)\) visits 0 infinitely often, and since \(T_n=o\) whenever this happens, \((S_n)\) is recurrent.

We now check that the condition in (39) is verified. For two independent copies \(S=(X,T),S'=(X',T')\) of S, let \(\tau _0=0\) and define recursively \(\tau _{n+1} = \inf \{k> \tau _n : X_k=X_k'=0\}\), with the convention that the infimum over an empty set is equal to infinity. Further let \(K=K(X,X')=\sum _{k\ge 0} {\mathbf {1}}_{X_k=X'_k=0}\).
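When both copies start at the origin, \({\mathrm E}^{\otimes 2}[K]=\sum _{n\ge 0}{\mathrm P}_0(X_n=0)^2\), and only even n contribute. Rotating \({\mathbb {Z}}^2\) by 45 degrees splits X into two independent one-dimensional simple random walks, so \({\mathrm P}_0(X_{2k}=0)=\bigl (\binom{2k}{k}4^{-k}\bigr )^2\), and the classical bound \(\binom{2k}{k}4^{-k}\le (\pi k)^{-1/2}\) gives \({\mathrm E}^{\otimes 2}[K]\le 1+\sum _{k\ge 1}(\pi k)^{-2}=7/6\). The sketch below, with hypothetical function names, evaluates these quantities numerically.

```python
import math


def origin_return_prob(k):
    """P_0(X_{2k} = 0) for SRW on Z^2, equal to (binom(2k, k) / 4^k) ** 2."""
    a = 1.0
    for j in range(1, k + 1):
        a *= (2 * j - 1) / (2 * j)  # now a = binom(2j, j) / 4^j
    return a * a


def expected_k_partial(kmax):
    """Partial sum of sum_n P_0(X_n = 0)^2 over n <= 2 * kmax
    (odd times contribute nothing when both copies start at 0)."""
    total, a = 0.0, 1.0
    for k in range(kmax + 1):
        if k > 0:
            a *= (2 * k - 1) / (2 * k)
        total += a ** 4  # P_0(X_{2k} = 0) ** 2
    return total


# Sanity check of the classical bound P_0(X_{2k} = 0) <= 1 / (pi k).
assert all(origin_return_prob(k) <= 1 / (math.pi * k) for k in range(1, 201))
print(expected_k_partial(10_000))  # stays below 1 + sum (pi k)^(-2) = 7/6
```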

For any \((v,x)\in V\), we have

Let \({\tilde{T}},{\tilde{T}}'\) be independent copies of the random walk on \({\mathcal {T}}_2\) which, at each step, moves to one of its two children, each with probability 1/2. By the Markov property, we have

where \({\mathcal {G}}\) is a geometric random variable of parameter 1/2. Therefore,

where \({\mathrm E}_{(v,x)}^{\otimes 2}\left[ K\right] = \sum _{n\ge 0} {\mathrm P}_x(X_n=0)^2 \le 1+\sum _{n\ge 1} cn^{-2}<\infty \) by the local limit theorem for the simple random walk, uniformly in \((v,x)\in V\). Hence, by Khasminskii’s lemma, \(\beta _2>0\) for the polymer associated with \((S_n)\). \(\quad \square \)
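For completeness, the discrete-time form of Khasminskii’s lemma used above reads as follows: if \((Z_n)\) is a Markov chain on a countable state space and \(V\ge 0\) is a function on that space, then

```latex
\sup_{z}\,{\mathrm E}_z\Bigl[\sum_{n\ge 0} V(Z_n)\Bigr] \le a < 1
\quad\Longrightarrow\quad
\sup_{z}\,{\mathrm E}_z\Bigl[\exp\Bigl(\sum_{n\ge 0} V(Z_n)\Bigr)\Bigr] \le \frac{1}{1-a}.
```

The proof expands the exponential, bounds \(\bigl (\sum _n V(Z_n)\bigr )^k\) by k! times the sum over ordered times \(n_1\le \dots \le n_k\), and uses the Markov property to bound the k-th term by \(a^k\). In the application above, \(Z_n=(S_n,S'_n)\), and, as we read condition (39), V is a multiple of the indicator that the two copies coincide, with a coefficient that becomes small as \(\beta \rightarrow 0\); the uniform bound on \({\mathrm E}^{\otimes 2}_{(v,x)}[K]\) then places the sum below 1.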

7 Conclusions and Open Problems

We have presented some elements of a theory of polymers on general graphs. Our study leaves several important open questions. The comments below address some of these.


1.

It is natural to wonder whether, in a conductance model, \(\beta _c\) is monotone with respect to adding edges. Perhaps counter-intuitively, the answer is no. Here is a counterexample. Consider the SRW on \({\mathbb {Z}}_+^3\), which satisfies \(\beta _c>0\). This corresponds to a conductance model on \({\mathbb {Z}}_+^3\) with all edges having conductance equal to 1. Now increase the conductance between \((i,j,k)\in {\mathbb {Z}}^3_+\) and \((i+1,j,k)\) to \(e^{e^i}\). (This corresponds to adding more and more edges between i and \(i+1\) as \(i\rightarrow \infty \).) Then, by Borel–Cantelli, the random walk eventually moves ballistically along a path \((i,a,b)\rightarrow (i+1,a,b)\rightarrow (i+2,a,b)\rightarrow \ldots \), with some random a, b; in fact, the probability that this has not happened before time n decays super-exponentially in n. Repeating the proof of Proposition 4.1 then shows that \(\beta _c=0\).


2.