Abstract

This work focuses on the study of partial differential equation (PDE)-based basis functions for Discontinuous Galerkin methods to numerically solve wave-related boundary value problems with variable coefficients. To tackle problems with constant coefficients, wave-based methods have been widely studied in the literature: they rely on the concept of Trefftz functions, i.e. local solutions to the governing PDE, using oscillating basis functions rather than polynomial functions to represent the numerical solution. Generalized Plane Waves (GPWs) are an alternative developed to tackle problems with variable coefficients, in which case Trefftz functions are not available. In a similar way, they incorporate information on the PDE; however, they are only approximate Trefftz functions, since they do not solve the governing PDE exactly but only an approximated PDE. Considering a new set of PDEs beyond the Helmholtz equation, we propose a roadmap for the construction and the study of local interpolation properties of GPWs. Carefully identifying the various steps of the process, we provide an algorithm that summarizes the construction of these functions, and we establish necessary conditions to obtain high order interpolation properties of the corresponding basis.


1 Introduction

Trefftz methods are Galerkin-type methods that rely on function spaces of local solutions to the governing partial differential equations (PDEs). They were initially introduced in [27, 35], and the original idea was to use trial functions which satisfy the governing PDE to derive error bounds. They have been widely used in the engineering community [20] since the 1960s, for instance for Laplace’s equation [30], the biharmonic equation [33] and elasticity [23]. Later, the general idea of taking advantage of analytical knowledge about the problem to build a good approximation space was used to develop numerical methods in the presence of corner and interface singularities [10, 34], boundary layers, rough coefficients, elastic interactions [2, 3, 28, 29] and wave propagation [2, 9]. In the context of boundary value problems (BVPs) for time-harmonic wave propagation, several methods have been proposed following the idea of functions that solve the governing PDE [13]; they incorporate oscillating functions in the function spaces used to derive and discretize a weak formulation. Wave-based numerical methods have received attention from several research groups around the world, from the theoretical [13] and computational [14] points of view, and the pollution effect of plane wave Discontinuous Galerkin (DG) methods was studied in [11]. Such methods have also been implemented in industry codes for acoustic applications (Footnote 1). The use of Plane Wave (PW) basis functions has been the most popular choice, while an attempt to use Bessel functions was reported in [25]. In [24], the authors present an interesting comparison of performance between high order polynomial and wave-based methods. More recently, applications to space-time problems have been studied in [4, 21, 22, 31, 32].

In this context, numerical methods rely on discretizing a weak formulation via a set of exact solutions of the governing PDE. When no exact solution to the governing PDE is available, there is no natural choice of basis functions to discretize the weak formulation. This is in particular the case for variable coefficient problems. In order to take advantage of Trefftz-type methods for problems with variable coefficients, Generalized Plane Waves (GPWs) were introduced in [17] as basis functions that are local approximate solutions, rather than exact solutions, to the governing PDE. GPWs were designed by adding higher order terms in the phase of classical PWs, these higher order terms being chosen to ensure the desired approximation of the governing PDE. In [15], the construction and interpolation properties of GPWs were studied for the Helmholtz equation

\[ -\Delta u+\beta (x,y)\, u=0, \qquad (1) \]

with a particular interest in the case of a sign-changing coefficient \(\beta \), including propagating solutions (\(\beta <0\)), evanescent solutions (\(\beta >0\)), and the smooth transition between them (\(\beta =0\)), called cut-offs in the field of plasma waves. The interpolation properties of a set \({\mathbb {V}}\) spanned by the resulting basis functions, namely \(\Vert (I-P_{{\mathbb {V}}}) u \Vert \) where \(P_{{\mathbb {V}}}\) is the orthogonal projector onto \({\mathbb {V}}\) and u is the solution to the original problem, play a crucial role in the error estimation of the corresponding numerical method [5]. For this same equation, the error analysis of a modified Trefftz method discretized with GPWs was presented in [18]. In [19], GPWs were used for the numerical simulation of mode conversion modeled by the following equation:

In the present work, we answer questions related to extending the work on GPWs developed in [15], namely the construction of GPWs on the one hand and their interpolation properties on the other hand, from the Helmholtz operator \(-\Delta +\beta \) to a wide range of partial differential operators. A construction process valid for some operators of order two or higher is presented, while the proof of interpolation properties is limited to some operators of order two. We propose a roadmap identifying the crucial steps of our work:

1. Construction of GPWs \(\varphi \) such that \({\mathcal {L}}\varphi \approx 0\)
   (a) Choose an ansatz for \(\varphi \) (Sect. 2).
   (b) Identify the corresponding \(N_{dof}\) degrees of freedom and \(N_{eqn}\) constraints (Sect. 2.1).
   (c) Choose the number of degrees of freedom adequately, \(N_{dof}\ge N_{eqn}\) (Sect. 2.1).
   (d) Study the structure of the resulting system and identify \(N_{dof}-N_{eqn}\) additional constraints (Sects. 2.2, 2.3).
   (e) Compute the remaining \(N_{eqn}\) degrees of freedom at minimal computational cost (Sect. 2.4).

2. Interpolation properties
   (a) Study the properties of the remaining \(N_{eqn}\) degrees of freedom with respect to the \(N_{dof}-N_{eqn}\) additional constraints.
   (b) Identify a simple reference case depending only on the \(N_{dof}-N_{eqn}\) additional constraints (Sect. 3).
   (c) Study the interpolation properties of this reference case (Sect. 4.1).
   (d) Relate the general case to the reference case (Sects. 3.1, 3.2).
   (e) Prove the interpolation properties of the GPWs from those of the reference case (Sect. 4.2).

We will consider linear partial differential operators with variable coefficients, defined as follows.

Definition 1

A linear partial differential operator of order \(M\ge 2\), in two dimensions, with a given set of complex-valued coefficients \(\alpha =\{\alpha _{k,\ell -k},(k,\ell )\in {\mathbb {N}}^2, 0\le k\le \ell \le M\}\) will be denoted hereafter as

\[ {\mathcal {L}}_{M,\alpha }:=\sum _{\ell =0}^{M}\sum _{k=0}^{\ell }\alpha _{k,\ell -k}(x,y)\,\partial _x^{k}\partial _y^{\ell -k}. \]
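The double sum in Definition 1 can be made concrete with a small symbolic helper. This is a minimal sketch assuming SymPy, with the coefficients stored in a dictionary keyed by the pair \((k,\ell -k)\); the names `apply_L` and `d` are hypothetical and only serve as an illustration.

```python
import sympy as sp

x, y = sp.symbols("x y")


def d(f, var, n):
    """n-th partial derivative of f with respect to var (n = 0 returns f)."""
    for _ in range(n):
        f = sp.diff(f, var)
    return f


def apply_L(alpha, f):
    """Apply L_{M,alpha} f = sum over (k, l-k) of alpha_{k,l-k}(x, y) d_x^k d_y^(l-k) f.

    `alpha` maps the pair (k, l-k) to a SymPy expression in x and y;
    pairs that are absent are treated as zero coefficients.
    """
    result = sp.Integer(0)
    for (k, m), coeff in alpha.items():
        result += coeff * d(d(f, x, k), y, m)
    return sp.simplify(result)


# Example: the Helmholtz operator -Delta - kappa^2 applied to a plane wave
kappa = sp.symbols("kappa", positive=True)
helmholtz = {(2, 0): -1, (0, 2): -1, (0, 0): -kappa**2}
print(apply_L(helmholtz, sp.exp(sp.I * kappa * x)))  # 0
```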

Our goal is to build a basis of functions well suited to approximate locally any solution u to a given homogeneous variable-coefficient partial differential equation

\[ {\mathcal {L}}_{M,\alpha }u=0 \text { on } \Omega , \]

where by locally we mean piecewise on a mesh \({\mathcal {T}}_h\) of \(\Omega \). Such interpolation properties are a building block for the convergence proof of Galerkin methods. For a constant coefficient operator, it is natural to use the same basis on each element \(K\in {\mathcal {T}}_h\). However, with variable coefficients, a single basis cannot be expected to have good approximation properties on the whole domain \(\Omega \subset {\mathbb {R}}^2\). For instance, for the Helmholtz equation with a sign-changing coefficient, it cannot be optimal to look for a single basis that would give a good approximation of solutions both in the propagating region and in the evanescent region. It is therefore natural to consider local bases defined on each \(K\in {\mathcal {T}}_h\): with GPWs we focus on local properties around a given point \((x_0,y_0)\in {\mathbb {R}}^2\) rather than on a given domain \(\Omega \). A simple idea would then be to freeze the coefficients of the operator, that is to say to study, instead of \({\mathcal {L}}_{M,\alpha }\), the constant coefficient operator \({\mathcal {L}}_{M,{\bar{\alpha }}}\) with \({\bar{\alpha }} = \{\alpha _{k,l}(x_0,y_0),0\le k+l\le M\}\). However, as observed in [15, 16], this leads to low order approximation properties, while we are interested in high order approximation properties. This is why new functions are needed to handle variable coefficients. This work focuses on two aspects: the construction and the interpolation properties of GPWs.

We follow the GPW design proposed in [15, 17]. Retaining the oscillating feature while aiming for higher order approximation, GPWs were designed with Higher Order Terms (HOT) in the phase function of a plane wave. These higher order terms are defined so as to ensure that a GPW function \(\varphi \) is an approximate solution to the PDE:

\[ {\mathcal {L}}_{M,\alpha }\varphi \approx 0. \]

In Sect. 2, the construction of a GPW \( \varphi (x,y)=e^{P(x,y)}\) will be described in detail, then a precise definition of GPW will be provided under the following hypothesis:

Hypothesis 1

Consider a given point \((x_0,y_0)\in {\mathbb {R}}^2\), a given approximation parameter \(q\in {\mathbb {N}}\), \(q\ge 1\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), and a partial differential operator \({\mathcal {L}}_{M,\alpha }\) defined by a given set of complex-valued coefficients \(\alpha = \{\alpha _{k,l},0\le k+l\le M\}\), defined in a neighborhood of \((x_0,y_0)\), satisfying

-

\(\alpha _{k,l}\) is \({\mathcal {C}}^{q-1}\) at \((x_0,y_0)\) for all (k, l) such that \(0\le k+l\le M\),

-

\(\alpha _{M,0}(x_0,y_0)\ne 0\).

This construction is equivalent to the construction of the bivariate polynomial

\[ P(x,y)=\sum _{0\le i+j\le dP}\lambda _{ij}(x-x_0)^i(y-y_0)^j, \]

and is performed by choosing the degree dP, and providing an explicit formula for the set of complex coefficients \(\{\lambda _{ij}\}_{\{ (i,j)\in {\mathbb {N}}^2, 0\le i+j\le d P \}}\), in order for \(\varphi \) to satisfy \({\mathcal {L}}_{M,\alpha }\varphi (x,y)=O\left( \Vert (x,y)-(x_0,y_0) \Vert ^q\right) \). An algorithm to construct a GPW is provided. In Sect. 3 properties of the \(\lambda _{ij}\)s are studied, while the interpolation properties of the corresponding set of basis functions are studied for the case \(M=2\) in Sect. 4, under the following hypothesis:

Hypothesis 2

Under Hypothesis 1 we consider only operators \({\mathcal {L}}_{M,\alpha }\) such that \(M\) is even and the terms of order \(M\) satisfy

for some complex numbers \((\gamma _1,\gamma _2,\gamma _3)\) such that there exist \((\mu _1,\mu _2)\in {\mathbb {C}}^2\) with \(\mu _1\mu _2\ne 0\) and a non-singular matrix \(A\in {\mathbb {C}}^{2\times 2}\) satisfying \(\Gamma = A^tDA\), where \(\Gamma =\begin{pmatrix} \gamma _1 & \gamma _2/2\\ \gamma _2/2 & \gamma _3 \end{pmatrix}\) and \(D=\begin{pmatrix} \mu _1 & 0\\ 0 & \mu _2 \end{pmatrix}\), and therefore

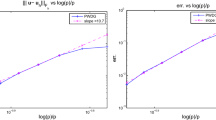

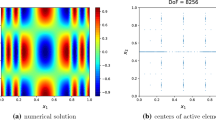

For instance, these matrices are \(\Gamma =D=Id\) for \({\mathcal {L}}_H:=-\Delta -\kappa ^2(x,y)\) or \({\mathcal {L}}_B:=\Delta {\mathcal {L}}_H\), and \(\Gamma =D=c(x_0,y_0)Id\) for \({\mathcal {L}}_C:=-\nabla \cdot (c(x,y)\nabla )-\kappa ^2(x,y)\). Note that if \(\Gamma \) is real, this condition simply states that its eigenvalues are non-zero. Finally, numerical results are provided for various operators \({\mathcal {L}}_{M,\alpha }\) of order \(M=2\) in Sect. 5.

Our previous work was limited to the Helmholtz equation (1) in propagating and evanescent regions, the transition between the two, absorbing regions, and caustics. The interpolation properties presented here cover more general second order equations, in particular equations that can be written as

with variable coefficients: A matrix-valued, real and symmetric with non-zero eigenvalues, \({\mathbf {d}}\) vector-valued and m scalar-valued. This includes, for instance,

-

the Helmholtz equation with absorption, corresponding to \(A = I\) with \(\mathfrak {R}(m)>0\) and \(\mathfrak {I}(m)\ne 0\);

-

the mild-slope equation [8], modeling the amplitude of free-surface water waves, corresponding to \(A = m Id\) with \(m=c_pc_g\), the product of the phase speed \(c_p\) and the group speed \(c_g\) of the waves;

-

the transverse-magnetic mode of Maxwell’s equations, corresponding to \(A=\frac{1}{\mu }I\) and \(m=\epsilon \), and the transverse-electric mode, corresponding to \(A=\frac{1}{\epsilon }I\) and \(m=\mu \), where \(\mu \) is the permeability and \(\epsilon \) the permittivity.

Throughout this article, we will denote by \({\mathbb {N}}\) the set of non-negative integers, by \({\mathbb {N}}^*\) the set of positive integers, by \({\mathbb {R}}^{+} = [0,+\infty )\) the set of non-negative real numbers, and by \({\mathbb {C}}[z_1,z_2]\) the space of complex polynomials in the two variables \(z_1\) and \(z_2\). As the first part of this work is dedicated to finding the coefficients \(\lambda _{ij}\), we will reserve the word “unknown” to refer to the \(\lambda _{i,j}\)s. The length of the multi-index \((i,j)\in {\mathbb {N}}^2\) of an unknown \(\lambda _{ij}\), \(|(i,j)|=i+j\), will play a crucial role in what follows.

2 Construction of a GPW

The construction of a GPW is attached to a homogeneous PDE; it is not global on \({\mathbb {R}}^2\) but local, since it is expressed in terms of a Taylor expansion. It consists of finding a polynomial \(P\in {\mathbb {C}}[x,y]\) such that the corresponding GPW, namely \( \varphi :=e^{P}\), is locally an approximate solution to the PDE.

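Consider \(M= 2\), \(\beta = \{ \beta _{0,0}, \beta _{0,1}=\beta _{1,0}=\beta _{1,1}=0, \beta _{0,2}\equiv -1,\beta _{2,0}\equiv -1 \}\), and the corresponding operator \({\mathcal {L}}_{2,\beta } = -\partial _x^2 -\partial _y^2 + \beta _{0,0}(x,y)\). Then for any polynomial \(P\in {\mathbb {C}}[x,y]\):

\[ {\mathcal {L}}_{2,\beta }e^{P}=\left( -\partial _x^2P-(\partial _xP)^2-\partial _y^2P-(\partial _yP)^2+\beta _{0,0}\right) e^{P}, \]

so the construction of an exact solution to the PDE would be equivalent to the following problem:

\[ \text {Find } P\in {\mathbb {C}}[x,y] \text { such that } \partial _x^2P+(\partial _xP)^2+\partial _y^2P+(\partial _yP)^2=\beta _{0,0}. \qquad (5) \]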
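The identity behind Problem (5) can be checked symbolically. The sketch below assumes SymPy and uses a generic cubic P whose coefficients `c00`, `c10`, ... are hypothetical symbols; the factor \(e^P\) is divided out by replacing it with a placeholder symbol before expanding.

```python
import sympy as sp

x, y = sp.symbols("x y")
E = sp.Symbol("E")                       # placeholder for e^P
beta00 = sp.Function("beta00")(x, y)     # variable coefficient beta_{0,0}(x, y)

# Generic cubic polynomial P with hypothetical symbolic coefficients c_ij
P = sum(sp.Symbol(f"c{i}{j}") * x**i * y**j for i in range(4) for j in range(4 - i))

phi = sp.exp(P)
L_phi = -sp.diff(phi, x, 2) - sp.diff(phi, y, 2) + beta00 * phi

# Factor out e^P by substituting the placeholder E and dividing by it
residual = sp.expand(L_phi.subs(phi, E) / E)
expected = sp.expand(
    -(sp.diff(P, x, 2) + sp.diff(P, x)**2 + sp.diff(P, y, 2) + sp.diff(P, y)**2) + beta00
)
print(sp.expand(residual - expected))  # 0
```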

Consider then the following examples.

-

If \(\beta _{0,0}(x,y)\) is constant, then it is straightforward to find a polynomial of degree one satisfying Problem (5); when \(\beta _{0,0}\) is negative, this corresponds to a classical plane wave.

-

If \(\beta _{0,0}(x,y)=x\), then there is no solution to (5), since the total degree of the polynomial \(\partial _x^2 P + (\partial _x P)^2+\partial _y^2 P + (\partial _y P)^2\) is always even.

-

If \(\beta _{0,0}(x,y)\) is not a polynomial function, it is also straightforward to see that no polynomial P can satisfy Problem (5).
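The constant-coefficient case of the list above can be illustrated as follows. This SymPy sketch (the helper name `problem5_lhs` is hypothetical) shows that for a degree-one phase \(P=ax+by\) the left-hand side of Problem (5) is the constant \(a^2+b^2\), and that the phase of a classical plane wave with wavenumber \(\kappa \) and direction \(\theta \) solves Problem (5) for \(\beta _{0,0}=-\kappa ^2\).

```python
import sympy as sp

x, y, a, b = sp.symbols("x y a b")
kappa, theta = sp.symbols("kappa theta", positive=True)


def problem5_lhs(P):
    """Left-hand side of Problem (5): P_xx + (P_x)^2 + P_yy + (P_y)^2."""
    return sp.diff(P, x, 2) + sp.diff(P, x)**2 + sp.diff(P, y, 2) + sp.diff(P, y)**2


# A degree-one phase P = a x + b y gives the constant a^2 + b^2,
# so Problem (5) with constant beta_00 reduces to a^2 + b^2 = beta_00.
print(problem5_lhs(a * x + b * y))  # a**2 + b**2

# beta_00 = -kappa^2 < 0: the classical plane wave with direction angle theta
P_pw = sp.I * kappa * (sp.cos(theta) * x + sp.sin(theta) * y)
print(sp.simplify(problem5_lhs(P_pw)))  # -kappa**2
```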

These simple examples show that, in general, no function \(\varphi (x,y)=e^{P(x,y)}\), with P a complex polynomial, is an exact solution to a variable coefficient partial differential equation. The restriction of P to polynomials may seem very strong. However, since we are interested in approximation and smooth coefficients, rather than looking for a more general phase function, we relax the identity \({\mathcal {L}} \varphi =0\) on \(\Omega \) into an approximation on a neighborhood of \((x_0,y_0)\in {\mathbb {R}}^2\) in the following sense: we replace the too restrictive cancellation of \({\mathcal {L}}_{M,\alpha }e^{P(x,y)}\) by the cancellation of the lowest order terms of its Taylor expansion around \((x_0,y_0)\). This section is therefore dedicated to the construction of a polynomial \(P\in {\mathbb {C}}[x,y]\), under Hypothesis 1, ensuring that the following local approximation property

\[ {\mathcal {L}}_{M,\alpha }e^{P(x,y)}=O\left( \Vert (x,y)-(x_0,y_0)\Vert ^q\right) \qquad (6) \]

is satisfied. Throughout this work, the parameter q denotes the order to which the GPW is designed to approximate the equation. In summary, the construction is performed:

-

for a partial differential operator \({\mathcal {L}}_{M,\alpha }\) of order \(M\) defined by a set of smooth coefficients \(\alpha \),

-

at a point \((x_0,y_0)\in {\mathbb {R}}^2\),

-

at order \(q\in {\mathbb {N}}^*\),

-

to ensure that \( {\mathcal {L}}_{M,\alpha }e^{P(x,y)} = O(|(x-x_0,y-y_0)|^q). \)

Even though the construction of a GPW involves a non-linear system, we propose to take advantage of the structure of this system to construct a solution via an explicit formula. In this way, even though a GPW \( \varphi :=e^{P}\) is a PDE-based function, the polynomial P can be constructed in practice from this formula, and therefore the function can be constructed without numerically solving any system, whether non-linear or even linear. This is of great interest for the use of such functions in a Discontinuous Galerkin method to numerically solve boundary value problems.

In order to illustrate the general formulas that will appear in this section, we will use the specific case \({\mathfrak {L}}_{2,\gamma }\) where \(\gamma = \{ \gamma _{0,0},\gamma _{1,0},\gamma _{0,1},\gamma _{2,0} \equiv -1,\gamma _{1,1} , \gamma _{0,2}\}\), for which many formulas can be written explicitly in a compact form. In order to simplify certain expressions that will follow, we propose the following definition.

Definition 2

Assume \((i,j)\in {\mathbb {N}}^2\) and \((x_0,y_0)\in {\mathbb {R}}^2\). We define the linear partial differential operator \(D^{(i,j)}\) by

A precise definition of GPW will be provided at the end of this section.

2.1 From the Taylor expansion to a non-linear system

We are seeking a polynomial \(P(x,y) = \sum _{0\le i+j\le d P} \lambda _{ij}(x-x_0)^i(y-y_0)^j\) satisfying the Taylor expansion (6). Defining such a polynomial is equivalent to defining the set \( \{\lambda _{ij}; (i,j)\in {\mathbb {N}}^2,0\le i+j\le d P \} \), and therefore we will refer to the \(\lambda _{ij}\)s as the unknowns throughout this construction process. The goal of this subsection is to identify the set of equations to be satisfied by these unknowns to ensure that P satisfies the Taylor expansion (6), and in particular to choose the degree of P so as to guarantee the presence of linear terms in each equation of the system.

According to the Faà di Bruno formula, the action of the partial differential operator \({\mathcal {L}}_{M,\alpha }\) on a function \(\varphi (x,y)= e^{P(x,y)}\) is given by

where the linear order \(\prec \) on \({\mathbb {N}}^2\) is defined by

and where \(p_s((i,j),\mu )\) is equal to

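For the operator \({\mathfrak {L}}_{2,\gamma }\), the Faà di Bruno formula becomes

\[ {\mathfrak {L}}_{2,\gamma }e^{P}=\Big (-\partial _x^2P-(\partial _xP)^2+\gamma _{1,1}\big (\partial _x\partial _yP+\partial _xP\,\partial _yP\big )+\gamma _{0,2}\big (\partial _y^2P+(\partial _yP)^2\big )+\gamma _{1,0}\partial _xP+\gamma _{0,1}\partial _yP+\gamma _{0,0}\Big )e^{P}. \]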

Singling out the terms depending on P in the right-hand side leads to the following definition.

Definition 3

Consider a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l},0\le k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). We define the partial differential operator \({\mathcal {L}}_{M,\alpha }^A\) associated to \({\mathcal {L}}_{M,\alpha }\) as

or equivalently, since the exponential of a bounded quantity is bounded away from zero:

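For the operator \({\mathfrak {L}}_{2,\gamma }\) this gives

\[ {\mathfrak {L}}_{2,\gamma }^AP=-\partial _x^2P-(\partial _xP)^2+\gamma _{1,1}\big (\partial _x\partial _yP+\partial _xP\,\partial _yP\big )+\gamma _{0,2}\big (\partial _y^2P+(\partial _yP)^2\big )+\gamma _{1,0}\partial _xP+\gamma _{0,1}\partial _yP. \]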

Since, for any polynomial P, the function \(e^P\) is locally bounded, and since \({{\mathcal {L}}_{M,\alpha }[ e^P]}=\big ({\mathcal {L}}_{M,\alpha }^AP+\alpha _{0,0}\big )e^P\), a polynomial P satisfies the approximation property (6) as soon as

\[ {\mathcal {L}}_{M,\alpha }^AP+\alpha _{0,0}=O\left( \Vert (x,y)-(x_0,y_0)\Vert ^q\right) . \qquad (7) \]

Therefore, the problem to be solved is now:

In order to define a polynomial \(P(x,y) = \sum _{0\le i+j\le d P} \lambda _{ij}(x-x_0)^i(y-y_0)^j\), the degree dP of the polynomial determines the number of unknowns: there are \(N_{dof} = \frac{(dP+1)(dP+2)}{2}\) unknowns to be defined, namely the \(\{\lambda _{i,j}\}_{\{(i,j)\in {\mathbb {N}}^2,0\le i+j\le dP\}}\). In order to design a polynomial P satisfying Eq. (7), the parameter q determines the number of equations to be solved: there are \(N_{eqn} = \frac{q(q+1)}{2}\) terms to be canceled from the Taylor expansion. The first step toward the construction of a GPW is to define the value of dP for a given value of q.

At this point it is clear that if \(dP<q-1\), then \(N_{dof}<N_{eqn}\) and the resulting system is over-determined. Our choice for the polynomial degree dP relies on a careful examination of the linear terms in \({\mathcal {L}}_{M,\alpha }^AP\). We can already notice that, under Hypothesis 1, \({\mathcal {L}}_{M,\alpha }^AP\) contains at least one non-zero linear term, namely \(\alpha _{M,0}(x_0,y_0)\partial _x^MP\), and at least one non-zero non-linear term, namely \(\alpha _{M,0}(x_0,y_0)(\partial _xP)^M\). This non-linear term corresponds to the following parameters from the Faà di Bruno formula: \(\mu =M\), \(s=1\), \((k_1,(i_1,j_1)) = (M,(1,0))\). The linear terms can only correspond to \(s=1\), \(\mu =1\) and \(p_1((k,\ell -k),1) = \{ (1,(k,\ell -k)) \}\), see Definition 3. We can then split \({\mathcal {L}}_{M,\alpha }^A\) into its linear and non-linear parts.
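For a pure x-derivative, the Faà di Bruno formula reduces to a complete Bell polynomial in the derivatives of P, which makes the two terms mentioned above easy to see. The SymPy sketch below (the helper name `exp_derivative_ratio` is hypothetical) computes \(e^{-P}\partial _x^M e^{P}\) for \(M=3\): the linear term \(\partial _x^3P\) and the non-linear term \((\partial _xP)^3\) both appear with coefficient one.

```python
import sympy as sp

x = sp.symbols("x")
P = sp.Function("P")(x)
E = sp.Symbol("E")  # placeholder for e^P


def exp_derivative_ratio(M):
    """e^{-P} d_x^M e^{P}, expanded as a polynomial in the derivatives of P."""
    expr = sp.diff(sp.exp(P), x, M).subs(sp.exp(P), E)
    return sp.expand(expr / E)


d1, d2, d3 = (sp.diff(P, x, k) for k in (1, 2, 3))
ratio = exp_derivative_ratio(3)
# Expected structure: d3 (linear, mu = 1) + 3 d1 d2 + d1**3 (non-linear, mu = 3)
print(ratio)
```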

Definition 4

Consider a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l},0\le k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). The linear part of the partial differential operator \({\mathcal {L}}_{M,\alpha }^A\) is defined by \({\mathcal {L}}_{M,\alpha }^L:={\mathcal {L}}_{M,\alpha }-\alpha _{0,0} \partial _x^{0}\partial _y^{0}\), or equivalently

\[ {\mathcal {L}}_{M,\alpha }^L=\sum _{\ell =1}^{M}\sum _{k=0}^{\ell }\alpha _{k,\ell -k}(x,y)\,\partial _x^{k}\partial _y^{\ell -k}, \]

and its non-linear part \({\mathcal {L}}_{M,\alpha }^N:={\mathcal {L}}_{M,\alpha }^A-{\mathcal {L}}_{M,\alpha }^L\) can equivalently be defined by

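For the operator \({\mathfrak {L}}_{2,\gamma }\) this gives respectively

\[ {\mathfrak {L}}_{2,\gamma }^LP=-\partial _x^2P+\gamma _{1,1}\partial _x\partial _yP+\gamma _{0,2}\partial _y^2P+\gamma _{1,0}\partial _xP+\gamma _{0,1}\partial _yP, \]

\[ {\mathfrak {L}}_{2,\gamma }^NP=-(\partial _xP)^2+\gamma _{1,1}\partial _xP\,\partial _yP+\gamma _{0,2}(\partial _yP)^2. \]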
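One convenient way to separate \({\mathcal {L}}^A\) into \({\mathcal {L}}^L\) and \({\mathcal {L}}^N\) symbolically is to replace P by tP: the terms of \({\mathcal {L}}^A(tP)\) of degree one in t form \({\mathcal {L}}^LP\), and the remaining terms (degree \(\mu \ge 2\) in t) form \({\mathcal {L}}^NP\). The scaling parameter t is our own device, not part of the text. This SymPy sketch applies it to \({\mathfrak {L}}_{2,\gamma }\) with \(\gamma _{2,0}\equiv -1\) and variable coefficients represented by undefined functions.

```python
import sympy as sp

x, y, t, E = sp.symbols("x y t E")
P = sp.Function("P")(x, y)
g00, g10, g01, g11, g02 = (sp.Function(name)(x, y) for name in
                           ("gamma00", "gamma10", "gamma01", "gamma11", "gamma02"))


def L2gamma(f):
    """The operator L_{2,gamma} with gamma_{2,0} = -1 applied to f."""
    return (-sp.diff(f, x, 2) + g11 * sp.diff(f, x, y) + g02 * sp.diff(f, y, 2)
            + g10 * sp.diff(f, x) + g01 * sp.diff(f, y) + g00 * f)


phi_t = sp.exp(t * P)
# L^A(tP) = e^{-tP} L[e^{tP}] - gamma00, a polynomial in the scaling parameter t
A_t = sp.expand(L2gamma(phi_t).subs(phi_t, E) / E) - g00
linear = A_t.coeff(t, 1)                             # degree one in P: L^L P
nonlinear = sp.expand(A_t - t * linear).subs(t, 1)   # degree two in P: L^N P
print(linear)
print(nonlinear)
```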

Consider the (I, J) coefficients of the Taylor expansion of \({\mathcal {L}}_{M,\alpha }^LP\) for \((I,J)\in {\mathbb {N}}^2\) and \(0\le I+J <q\):

so that in order to isolate the derivatives of highest order, i.e. of order \(M+I+J\), we can write

Back to Problem (8), the (I, J) terms (9) a priori depend on the unknowns \(\{\lambda _{i,j},(i,j)\in {\mathbb {N}}^2,0\le i+j\le dP\}\). Since

then under Hypothesis 1 any (I, J) term in System (8) has at least one non-zero linear term, as long as \(I+J\le dP-M\), namely \(\frac{(M+I)!}{I!}\alpha _{M,0}(x_0,y_0)\lambda _{M+I,J}\), while it does not necessarily have any linear term as soon as \(I+J>dP-M\). Avoiding equations with no linear terms is natural, and it will be crucial for the construction process described hereafter.

Choosing the polynomial degree to be \(dP=M+q-1\) therefore guarantees the existence of at least one linear term in every equation of System (8). From now on, the polynomial P will be of degree \(dP=M+q-1\), and the new problem to be solved is

As a consequence, the number of unknowns is \(N_{dof} = \frac{(M+q)(M+q+1)}{2}\), and the system is under-determined: \(N_{dof}-N_{eqn} = Mq+ \frac{M(M+1)}{2}\). See Fig. 1 for an illustration of the equation and unknown count.
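The counts above can be double-checked by brute-force enumeration of the index sets. In this sketch (the function name `gpw_counts` is hypothetical), the unknowns are the pairs \((i,j)\) with \(i+j\le dP\) for \(dP=M+q-1\), the equations are the pairs \((I,J)\) with \(I+J<q\), and the closed forms of the text are compared against the enumeration.

```python
def gpw_counts(M, q):
    """Return (N_dof, N_eqn) for a phase polynomial of degree dP = M + q - 1."""
    dP = M + q - 1
    unknowns = [(i, j) for i in range(dP + 1) for j in range(dP + 1 - i)]  # 0 <= i+j <= dP
    equations = [(I, J) for I in range(q) for J in range(q - I)]           # 0 <= I+J < q
    return len(unknowns), len(equations)


for M, q in [(2, 3), (4, 6)]:
    n_dof, n_eqn = gpw_counts(M, q)
    # closed forms from the text
    assert n_dof == (M + q) * (M + q + 1) // 2
    assert n_eqn == q * (q + 1) // 2
    assert n_dof - n_eqn == M * q + M * (M + 1) // 2
    print(M, q, n_dof, n_eqn, n_dof - n_eqn)
# prints "2 3 15 6 9", then "4 6 55 21 34"
```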

Note that this system is always non-linear. Indeed, under Hypothesis 1, the (0, 0) equation of the system always includes the non-zero non-linear term \(\alpha _{M,0}(x_0,y_0)(\lambda _{1,0})^{M}\), corresponding to the following parameters from the Faà di Bruno formula: \(\mu =M\), \(s=1\), \((k_1,(i_1,j_1)) = (M,(1,0))\).

The key to the construction procedure proposed next is a meticulous gathering of unknowns \(\lambda _{i,j}\) with respect to the length of their multi-index \(i+j\). As we will now see, this will lead to splitting the system into a hierarchy of simple linear sub-systems.

2.2 From a non-linear system to linear sub-systems

The different unknowns appearing in each equation of System (10) can now be studied. A careful inspection of the linear and non-linear terms will reveal the underlying structure of the system, and will lead us to identify a hierarchy of simple linear subsystems.

The inspection of the linear terms is very straightforward thanks to Eq. (9). The description of the unknowns in the linear terms is summarized here.

Lemma 1

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\in {\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\le k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). In each equation (I, J) of System (10), the linear terms can be split as follows:

-

a set of unknowns with length of the multi-index equal to \(M+I+J\), corresponding to \(\ell =M\) and \((\tilde{i},\tilde{j})=(I,J)\),

-

a set of unknowns with length of the multi-index at most equal to \(M+I+J-1\).

Under Hypothesis 1, both sets are never empty.

Proof

In terms of unknowns \(\{\lambda _{i,j},(i,j)\in {\mathbb {N}}^2, 0\le i+j\le M+q-1\} \), Eq. (9) reads:

The result is immediate for \(I=J=0\) from (11). The following comments are valid for the right hand sides of (12)–(14): the third term only contains unknowns with a length of the multi-index equal to \(\ell +{\tilde{i}}+{\tilde{j}}\le M-1 +I+J\), while the second term only contains unknowns with a length of the multi-index equal to \(M+{\tilde{i}}+{\tilde{j}}\le M+I+J-2\); as to the first term, it only contains unknowns with a length of the multi-index equal to \(M+I+J\). This proves the claim. \(\square \)

We then focus on the inspection of the non-linear terms. Each non-linear term in \({\mathcal {L}}_{M,\alpha }^AP\) reads from the definition of \({\mathcal {L}}_{M,\alpha }^N\)

and yields a sum of non-linear terms with respect to the unknowns \(\{\lambda _{ij}\}_{\{(i,j),0\le i+j\le M+q-1 \}}\), implicitly given by the following formula:

Therefore, only a restricted number of unknowns coming from the term (15) contribute to the (I, J) equation of Problem (10).

To identify the unknowns contributing to (16), two simple yet important reminders are provided in “Appendix C”.

It is now straightforward to describe the unknowns \(\lambda _{i,j}\) appearing in the non-linear terms of the equation (I, J) of System (10) by unwinding formula (16).

Lemma 2

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\in {\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\le k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). In each equation (I, J) of System (10), the unknowns \(\lambda _{i,j}\) appearing in the non-linear terms have a length of the multi-index \(i+j<M+I+J\).

Proof

Each term \(\partial _x^{{\tilde{i}}}\partial _y^{{\tilde{j}}}\left[ \prod _{m=1}^s \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\right] \) in \({\mathcal {L}}_{M,\alpha }^AP\) is a polynomial, and its constant coefficient contains coefficients of the polynomial \(\prod _{m=1}^s \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\) with a length of the multi-index at most equal to \({\tilde{i}}+{\tilde{j}}\), that is to say coefficients of the polynomials \(\partial _x^{i_m}\partial _y^{j_m} P\) with a length of the multi-index at most equal to \({\tilde{i}}+{\tilde{j}}\) for every \((i_m,j_m)\) from the Faà di Bruno formula, hence coefficients \(\lambda _{i,j}\) of the polynomial P with a length of the multi-index at most equal to \({\tilde{i}}+{\tilde{j}}+i_m+j_m\). Since the indices are such that \({\tilde{i}}\le I\), \({\tilde{j}}\le J\), and, for a non-linear term, \(i_m+j_m<\ell \le M\), the unknowns \(\lambda _{i,j}\) appearing in each term \(\partial _x^{{\tilde{i}}}\partial _y^{{\tilde{j}}}\left[ \prod _{m=1}^s \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\right] (x_0,y_0)\) have a length of the multi-index at most equal to \(M+I+J-1\). It is therefore true for any linear combination such as (16). \(\square \)
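Lemma 2, together with the first part of Lemma 1, can be checked on a concrete example. The SymPy sketch below uses hypothetical polynomial coefficients of a second order operator with \(\alpha _{2,0}(0,0)\ne 0\), a generic phase of degree \(dP=M+q-1\) centred at \((x_0,y_0)=(0,0)\), and lists, for each equation (I, J), the multi-index lengths of the unknowns present in its linear and non-linear parts. It only illustrates the lemmas on one instance and is not a proof.

```python
import sympy as sp

x, y = sp.symbols("x y")
M, q = 2, 3
dP = M + q - 1

# Unknowns lambda_ij of a phase polynomial of degree dP, centred at (x0, y0) = (0, 0)
lam = {(i, j): sp.Symbol(f"lam_{i}_{j}") for i in range(dP + 1) for j in range(dP + 1 - i)}
length = {s: i + j for (i, j), s in lam.items()}
P = sum(s * x**i * y**j for (i, j), s in lam.items())

# Hypothetical polynomial coefficients of a second-order operator, alpha_{2,0}(0,0) != 0
a20, a11, a02, a10, a01 = -1, x, -(1 + x * y), y + 2, x**2 - 1
Px, Py = sp.diff(P, x), sp.diff(P, y)
LL = (a20 * sp.diff(P, x, 2) + a11 * sp.diff(P, x, y) + a02 * sp.diff(P, y, 2)
      + a10 * Px + a01 * Py)                        # linear part L^L P
LN = a20 * Px**2 + a11 * Px * Py + a02 * Py**2      # non-linear part L^N P (M = 2)


def taylor_coeff(expr, I, J):
    """Coefficient of x^I y^J in the polynomial expr, i.e. its (I, J) Taylor coefficient at 0."""
    return sp.Poly(sp.expand(expr), x, y).nth(I, J)


def lengths(expr):
    """Set of multi-index lengths i + j of the unknowns lambda_ij present in expr."""
    return {length[s] for s in expr.free_symbols if s in length}


for I in range(q):
    for J in range(q - I):
        lin, non = taylor_coeff(LL, I, J), taylor_coeff(LN, I, J)
        print((I, J), "linear:", sorted(lengths(lin)), "non-linear:", sorted(lengths(non)))
```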

From the two previous Lemmas, we see that, in each equation (I, J) of System (10), unknowns with a length of the multi-index equal to \(M+I+J\) appear only in linear terms, namely in

whereas all the remaining unknowns have a length of the multi-index at most equal to \(M+I+J-1\). It is consequently natural to subdivide the set of unknowns with respect to the length of their multi-index \(M+\mathfrak {L}\), for \(\mathfrak {L}\) between 0 and \(q-1\) in order to take advantage of this linear structure.

2.3 Hierarchy of triangular linear systems

Our goal is now to construct a solution to the non-linear system (10), and our understanding of its linear part will lead to an explicit construction of such a solution, without any approximation.

The crucial point of our construction process is to take advantage of the underlying layer structure with respect to the length of the multi-index: it is only natural now to gather into subsystems all equations \((I,\mathfrak {L}-I)\) for I between 0 and \(\mathfrak {L}\), while gathering similarly all unknowns with length of the multi-index equal to \(M+\mathfrak {L}\). In the subsystem of layer \(\mathfrak {L}\), we know that the unknowns with a length of the multi-index equal to \(M+I+J\) only appear in linear terms, and we rewrite each equation (I, J) as

For the sake of clarity, the resulting right-hand side terms can be defined as follows.

Definition 5

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\in {\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\le k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\).

We define the quantity \(N_{I,J}\) from Equation (I, J) of System (10) as

For the operator \({\mathfrak {L}}_{2,\gamma }\) the non-linear terms in \(N_{0,0}\), \(N_{1,0}\) and \(N_{0,1}\) are respectively

We now consider the following subsystems for given \(\mathfrak {L}\) between 0 and \(q-1\):

The layer structure follows from our understanding of the non-linearity of the original system:

Corollary 1

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\in {\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\le k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). For any \((I,J)\in {\mathbb {N}}^2\) such that \(I+J<q\), the quantity \(N_{I,J}\) only depends on unknowns \(\lambda _{i,j}\) with length of the multi-index at most equal to \(M+I+J-1\).

Proof

The result is straightforward from Lemmas 1 and 2. \(\square \)

If all unknowns \(\lambda _{i,j}\) with length of the multi-index at most equal to \(M+I+J-1\) are known, then (21) is a well-defined linear under-determined system with

-

\(\mathfrak {L}+1\) linear equations, namely the \((I,J) =(I,\mathfrak {L}-I)\) equations from System (10) for I between 0 and \(\mathfrak {L}\);

-

\(M+\mathfrak {L}+1\) unknowns, namely the \(\lambda _{ij}\) for \(i+j=M+\mathfrak {L}\).

Therefore, if all unknowns \(\lambda _{i,j}\) with length of the multi-index at most equal to \(M+I+J-1\) are known, we expect to be able to compute a solution to the subsystem \(\mathfrak {L}\); this is the layer structure of our original problem (10). Figure 2 highlights the link between the layers of unknowns and equations of the initial nonlinear system on the one hand, and the layers of unknowns and equations of the linear subsystems on the other hand.

Representation of the indices of equations and unknowns from the initial nonlinear system (10) divided up into linear subsystems (21). For \(q=6\) and \(M=4\), each shape of marker corresponds to one value of \(\mathfrak {L}\): the indices (I, J) satisfying \(I+J=\mathfrak {L}\) correspond to the subsystem’s equations (Left panel), while the indices (i, j) satisfying \(i+j=\mathfrak {L}+M\) correspond to the subsystem’s unknowns (Right panel)

At this stage, we have identified a hierarchy of under-determined linear subsystems, for increasing values of \(\mathfrak {L}\) from 0 to \(q-1\), and we now propose one procedure to build a solution to each subsystem. There is no unique way to do so; however, if either \(\alpha _{M,0}(x_0,y_0)\ne 0\) or \(\alpha _{0,M}(x_0,y_0)\ne 0\), there is a natural way to proceed. Indeed, the unknowns involved in an equation \((I,J)=(I,\mathfrak {L}-I)\) are \(\{\lambda _{i,M+\mathfrak {L}-i}; i\in {\mathbb {N}}, I\le i \le I+M\}\), and the coefficient of the unknown \(\lambda _{I+M,\mathfrak {L}-I}\) is proportional to \(\alpha _{M,0}(x_0,y_0)\), which is non-zero under Hypothesis 1. Figure 3 provides two examples, in the (i, j) plane, of the indices of one equation’s unknowns: for each equation, the coefficient of the term corresponding to the rightmost marker is non-zero. By adding \(M\) constraints that fix the values of \(\lambda _{i,M+\mathfrak {L}-i}\) for \(0\le i <M\), that is, the unknowns corresponding in the (i, j) plane to the first \(M\) markers on the left at level \(M+\mathfrak {L}\), we therefore guarantee that we can successively compute \(\lambda _{I+M,\mathfrak {L}-I}\) for increasing values of I from 0 to \(\mathfrak {L}\).
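The successive computation described above can be sketched for the model operator \(-\partial _x^2-\partial _y^2+\beta (x,y)\) (so \(M=2\)) at \((x_0,y_0)=(0,0)\). This is a minimal SymPy sketch, not the explicit formula of Sect. 2.4: each equation \((I,\mathfrak {L}-I)\) is obtained by symbolic differentiation and solved for its single new unknown \(\lambda _{I+2,\mathfrak {L}-I}\). The additional constraints (\(\lambda _{0,0}=0\), and \(\lambda _{i,2+\mathfrak {L}-i}=0\) for \(i=0,1\)), the coefficient \(\beta =x-4\) and the helper names are hypothetical choices made for illustration.

```python
import sympy as sp

x, y = sp.symbols("x y")


def taylor_coeff(expr, I, J):
    """(I, J) Taylor coefficient at the origin: d_x^I d_y^J expr (0, 0) / (I! J!)."""
    for _ in range(I):
        expr = sp.diff(expr, x)
    for _ in range(J):
        expr = sp.diff(expr, y)
    return sp.expand(expr.subs({x: 0, y: 0}) / (sp.factorial(I) * sp.factorial(J)))


def residual(P, beta):
    """e^{-P} L e^{P} for the model operator L = -d_x^2 - d_y^2 + beta(x, y)."""
    return -sp.diff(P, x, 2) - sp.diff(P, x)**2 - sp.diff(P, y, 2) - sp.diff(P, y)**2 + beta


def gpw_phase(beta, q, lam10, lam01):
    """Layer-by-layer construction of P for M = 2 at (x0, y0) = (0, 0).

    Hypothetical choice of additional constraints: lambda_00 = 0 and, at each
    layer, lambda_{0, 2+layer} = lambda_{1, 1+layer} = 0.
    """
    M = 2
    lam = {(0, 0): 0, (1, 0): lam10, (0, 1): lam01}
    u = sp.Symbol("u")  # stands for the single unknown solved for in each equation
    for layer in range(q):
        for i in range(M):                       # the M additional constraints of this layer
            lam[(i, M + layer - i)] = 0
        for I in range(layer + 1):               # equations (I, layer - I), increasing I
            J = layer - I
            P = u * x**(I + M) * y**J + sum(c * x**i * y**j for (i, j), c in lam.items())
            eq = taylor_coeff(residual(P, beta), I, J)   # linear in u
            lam[(I + M, J)] = sp.solve(eq, u)[0]
    return sum(c * x**i * y**j for (i, j), c in lam.items())


theta = sp.pi / 3
beta = x - 4                                     # beta(0, 0) = -4 < 0: propagating region
P = gpw_phase(beta, q=3, lam10=2 * sp.I * sp.cos(theta), lam01=2 * sp.I * sp.sin(theta))
R = residual(P, beta)
print([sp.simplify(taylor_coeff(R, I, J)) for I in range(3) for J in range(3 - I)])
```

Each equation is linear in `u` because, by Lemmas 1 and 2, the new unknown only enters through the linear top-order term, with coefficient proportional to \(\alpha _{2,0}=-1\).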

Fig. 3 Representation of the indices of unknowns involved in two equations (I, J) of the subsystem (21). For \(q=6\), for \(M= 4\), and \(\mathfrak {L}=4\), each filled blue square marker corresponds in the (i, j) plane to an unknown \(\lambda _{ij}\), involved in the \((I,J) = (1,3)\) equation (Left panel), or in the \((I,J) = (4,0)\) equation (Right panel) (colour figure online)

We can easily recast this in terms of matrices. At each level \(\mathfrak {L}\), numbering the equations with increasing values of I and the unknowns with increasing values of i highlights the banded structure of each subsystem, and the entries of the \(M\)th superdiagonal are all proportional to \(\alpha _{M,0}(x_0,y_0)\), hence non-zero under Hypothesis 1. The matrix of the square linear system at level \(\mathfrak {L}\) is then obtained by placing the first \(M\) rows of the identity matrix, corresponding to the additional \(M\) constraints, on top of the matrix of the subsystem. This shifts the \(M\)th superdiagonal of the subsystem onto the main diagonal, so that the resulting square matrix is lower triangular.
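The assembly just described can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names are hypothetical, the band entries are supplied by a user-given callable `coeff(I, k)`, and the example weights \((I+k)!\,(M+\mathfrak {L}-I-k)!\,\alpha _{k,M-k}(x_0,y_0)\) are our own reading of how the highest-order unknowns enter equation \((I,\mathfrak {L}-I)\) when \(P=\sum \lambda _{i,j}(x-x_0)^i(y-y_0)^j\), not a formula quoted from (21). Whatever the band entries are, stacking \(M\) identity rows on top puts the entry coupling equation \((I,\mathfrak {L}-I)\) to \(\lambda _{I+M,\mathfrak {L}-I}\) on the diagonal.

```python
from math import factorial


def assemble_T(M, level, coeff):
    """Square matrix of a given level: M identity rows stacked on top of the
    (level+1) x (M+level+1) banded subsystem matrix.

    Column c stands for the unknown lambda_{c, M+level-c}. Row r < M fixes
    lambda_{r, M+level-r}; row M+I holds equation (I, level-I), whose nonzero
    entries sit in columns I..I+M. coeff(I, k) couples equation (I, level-I)
    to lambda_{I+k, M+level-I-k}.
    """
    n = M + level + 1
    T = [[0] * n for _ in range(n)]
    for r in range(M):
        T[r][r] = 1
    for I in range(level + 1):
        for k in range(M + 1):
            T[M + I][I + k] = coeff(I, k)
    return T


def is_lower_triangular(T):
    n = len(T)
    return all(T[r][c] == 0 for r in range(n) for c in range(r + 1, n))


# Hypothetical example: M = 2, level 2, alpha[k] stands for alpha_{k,M-k}(x0, y0),
# here for the principal part d_xx + d_yy. The factorial weights are an assumption.
M, level = 2, 2
alpha = {0: 1, 1: 0, 2: 1}


def coeff(I, k):
    return factorial(I + k) * factorial(level - I + M - k) * alpha[k]


T = assemble_T(M, level, coeff)
print([T[r][r] for r in range(len(T))])  # -> [1, 1, 4, 6, 24]
```

The diagonal consists of the \(M\) ones from the identity rows followed by the entries `coeff(I, M)`, each carrying the factor \(\alpha _{M,0}(x_0,y_0)\).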

Definition 6

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha \!=\!\{ \alpha _{k,l}\!\in \!{\mathcal {C}}^{q-1} \!\text { at }\!(x_0,y_0),0\!\le \! k+l\!\le \! M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). For a given level \(\mathfrak {L}\in {\mathbb {N}}\) with \(\mathfrak {L}<q\), we define the matrix of the square system of level \(\mathfrak {L}\), \(\mathsf {T} ^\mathfrak {L}\in {\mathbb {C}}^{(M+\mathfrak {L}+1)\times (M+\mathfrak {L}+1)}\), as

or equivalently

Assuming that all unknowns \(\lambda _{i,j}\) with length of the multi-index at most equal to \(M+\mathfrak {L}-1\) are known, a solution to the linear under-determined system (21) can then be computed as follows.

Proposition 1

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\in {\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\le k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). For a given level \(\mathfrak {L}\in {\mathbb {N}}\) with \(\mathfrak {L}<q\), under Hypothesis 1, the matrix \(\mathsf {T} ^\mathfrak {L}\in {\mathbb {C}}^{(M+\mathfrak {L}+1)\times (M+\mathfrak {L}+1)}\) is non-singular.

We now assume that the unknowns \(\{\lambda _{i,j}, (i,j)\in {\mathbb {N}}^2, i+j<M+\mathfrak {L}\}\) are known, so that the terms \(N_{I,\mathfrak {L}-I}\) for I from 0 to \(\mathfrak {L}\) can be computed. Consider any vector \(\mathsf {B} ^\mathfrak {L}\in {\mathbb {C}}^{M+\mathfrak {L}+1}\) satisfying

Then, independently of the first \(M\) components of \(\mathsf {B} ^\mathfrak {L}\), solving the linear system

by forward substitution provides a solution to (21) for

Proof

The matrix \(\mathsf {T} ^\mathfrak {L}\) is lower triangular, therefore its determinant is

which cannot be zero under Hypothesis 1. The second part of the claim follows directly from the definitions of \( \mathsf {T} ^\mathfrak {L}\) and \( \mathsf {B} ^\mathfrak {L}\) and from the fact that the system is lower triangular; it can be illustrated as follows:

\(\square \)
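Forward substitution, as invoked in Proposition 1, can be sketched as follows. This is a generic sketch (the function name is hypothetical): it accepts any square lower-triangular matrix with non-zero diagonal, uses exact rational arithmetic, and is not tied to the specific entries of \(\mathsf {T} ^\mathfrak {L}\), which are not reproduced here. The usage lines mimic the claim that the first \(M\) components of \(\mathsf {B} ^\mathfrak {L}\) may be chosen freely: on a toy \(4\times 4\) matrix with \(M=2\) identity rows, every choice of these components yields a solution of the remaining rows.

```python
from fractions import Fraction


def forward_substitution(T, B):
    """Solve T x = B for a square lower-triangular T with nonzero diagonal.

    Row r only involves x[0..r], so each unknown is given explicitly by the
    ones already computed: no factorization or iterative solver is needed.
    """
    n = len(T)
    x = [Fraction(0)] * n
    for r in range(n):
        partial = sum((Fraction(T[r][c]) * x[c] for c in range(r)), Fraction(0))
        x[r] = (Fraction(B[r]) - partial) / Fraction(T[r][r])
    return x


# Toy example: M = 2 identity rows on top of two band rows.
T = [[1, 0, 0, 0],
     [0, 1, 0, 0],
     [3, 1, 2, 0],
     [0, 5, 4, 7]]
for prefix in ([0, 0], [1, -1]):
    B = prefix + [1, 2]
    x = forward_substitution(T, B)
    # The first M unknowns equal the prescribed values, and every row holds.
    assert x[:2] == prefix
    assert all(sum(T[r][c] * x[c] for c in range(4)) == B[r] for r in range(4))
```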

To summarize, we have defined for increasing values of \(\mathfrak {L}\) a hierarchy of linear systems, each of which has the following characteristics:

- its unknowns are \(\{\lambda _{i,M+\mathfrak {L}-i};\ \forall i\in {\mathbb {N}} \text { such that }0\le i\le M+\mathfrak {L}\}\);
- its matrix \(\mathsf {T} ^\mathfrak {L}\in {\mathbb {C}}^{(M+\mathfrak {L}+1)\times (M+\mathfrak {L}+1)}\) is square, non-singular, and lower triangular;
- its right-hand side depends both on \(\{\lambda _{i,j};\ \forall (i,j)\!\in \!{\mathbb {N}}^2 \text { such that }0\!\le \! i\!+\!j \!<\! M+\mathfrak {L}\}\) and on \(M\) additional parameters.

At each level \(\mathfrak {L}\), assuming that the unknowns of lower levels are known and given \(M\) prescribed values for \(\lambda _{i,M+\mathfrak {L}-i}\), \(0\le i <M\), Proposition 1 provides an explicit formula to compute \(\lambda _{i,M+\mathfrak {L}-i}\) for \(M\le i\le M+\mathfrak {L}\).

2.4 Algorithm

The non-linear system (10) has \(N_{dof}^{(10)}=\frac{(M+q)(M+q+1)}{2}\) unknowns and \(N_{eqn}^{(10)}=\frac{q(q+1)}{2}\) equations, whereas each linear triangular system introduced in the previous subsection has \(N_{dof}^T=M+\mathfrak {L}+1\) unknowns and \(N_{eqn}^T=M+\mathfrak {L}+1\) equations for each level \(\mathfrak {L}\) such that \(0\le \mathfrak {L}\le q-1\). Therefore, the hierarchy of triangular systems has a total of \(N_{dof}^{H}=(M+1)q+\frac{q(q-1)}{2}\) unknowns and \(N_{eqn}^{H}=N_{eqn}^{(10)}+Mq=Mq+\frac{q(q+1)}{2}\) equations, including the \( \frac{q(q+1)}{2}\) equations of the initial non-linear system (10).

The remaining \(N_{dof}^{(10)}-N_{dof}^{H}=\frac{M(M+1)}{2}\) unknowns, which are not unknowns of any of the triangular systems but only appear on their right-hand sides, are the \(\{\lambda _{i,j},(i,j)\in {\mathbb {N}}^2,0\le i+j <M\}\). These are the unknowns with length of the multi-index at most equal to \(M-1\), and the corresponding indices (i, j) are the only ones that are not marked on the right panel of Fig. 2. It is therefore natural to add \(\frac{M(M+1)}{2}\) constraints that fix the values of these remaining unknowns \(\{\lambda _{i,j},(i,j)\in {\mathbb {N}}^2,0\le i+j <M\}\). The final system we consider consists of these \(\frac{M(M+1)}{2}\) constraints, which guarantee that the unknowns \(\{\lambda _{i,j},(i,j)\in {\mathbb {N}}^2,0\le i+j <M\}\) are known, together with the hierarchy of triangular systems (22) for increasing values of \(\mathfrak {L}\) from 0 to \(q-1\). It has \(N_{dof}^{F}=\frac{(M+q)(M+q+1)}{2} \) unknowns, namely the unknowns of the original system (10), and \(N_{eqn}^{F}=\frac{(M+q)(M+q+1)}{2}\) equations, namely the equations of the original system split into linear subsystems together with a total of \(\frac{M(M+1)}{2}+qM\) additional constraints. The counts are summarized in the following table:

| | Number of unknowns | Number of equations |
|---|---|---|
| Original non-linear system (10) | \( N_{dof}^{(10)}=\frac{(M+q)(M+q+1)}{2}\) | \(N_{eqn}^{(10)}=\frac{q(q+1)}{2}\) |
| Subsystem at level \(\mathfrak {L}\) (21) | \( N_{dof}^\mathfrak {L}=M+\mathfrak {L}+1\) | \( N_{eqn}^\mathfrak {L}=\mathfrak {L}+1\) |
| Triangular system at level \(\mathfrak {L}\) (22) | \(N_{dof}^T=M+\mathfrak {L}+1\) | \( N_{eqn}^T=M+\mathfrak {L}+1\) |
| Hierarchy of triangular systems for \(\mathfrak {L}\) from 0 to \(q-1\) | \(N_{dof}^{H}=(M+1)q+\frac{q(q-1)}{2}\) | \( N_{eqn}^{H}=Mq+\frac{q(q+1)}{2}\) |
| Final system (initial constraints + triangular systems) | \(N_{dof}^{F}=\frac{(M+q)(M+q+1)}{2}\) | \(N_{eqn}^{F}=\frac{(M+q)(M+q+1)}{2}\) |
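The closed-form counts in the table can be double-checked by brute force. The short Python sketch below (the function name is hypothetical) enumerates the index sets directly and compares them with the formulas for a range of \(M\) and \(q\); it is a sanity check of the arithmetic, not part of the construction.

```python
def counts(M, q):
    """Brute-force counts of unknowns and equations in the systems of the table."""
    dof_10 = sum(1 for i in range(M + q) for j in range(M + q - i))   # i + j <= M + q - 1
    eqn_10 = sum(1 for I in range(q) for J in range(q - I))           # I + J <= q - 1
    dof_H = sum(M + level + 1 for level in range(q))                  # square triangular systems
    fixed_low = sum(1 for i in range(M) for j in range(M - i))        # i + j <= M - 1
    return dof_10, eqn_10, dof_H, fixed_low


for M in range(2, 7):
    for q in range(1, 9):
        dof_10, eqn_10, dof_H, fixed_low = counts(M, q)
        assert dof_10 == (M + q) * (M + q + 1) // 2
        assert eqn_10 == q * (q + 1) // 2
        assert dof_H == (M + 1) * q + q * (q - 1) // 2 == M * q + q * (q + 1) // 2
        assert fixed_low == M * (M + 1) // 2 == dof_10 - dof_H
        # Final system is square: equations of (10) plus all fixed values.
        assert eqn_10 + fixed_low + M * q == dof_10
```

For instance, with \(q=6\) and \(M=4\) as in Fig. 2, the original system has 55 unknowns and 21 equations.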

Thanks to the \(\frac{M(M+1)}{2}\) constraints, for increasing values of \(\mathfrak {L}\) from 0 to \(q-1\), the hypothesis of Proposition 1 is satisfied, the right-hand side \(\mathsf {B} ^\mathfrak {L}\) can be evaluated and the triangular system (22) can be solved. The unknowns \(\{\lambda _{i,M+\mathfrak {L}-i};\ \forall i\in {\mathbb {N}} \text { such that }0\le i\le M+\mathfrak {L}\}\) can therefore be computed by induction on \(\mathfrak {L}\), which yields a solution to the initial non-linear system (10).

The following algorithm requires the values of \(\frac{M(M+1)}{2}+qM\) parameters, to fix initially the set of unknowns \(\{\lambda _{i,j},(i,j)\in {\mathbb {N}}^2,0\le i+j <M\}\) and then, at each level \(\mathfrak {L}\), the set of unknowns \(\{\lambda _{i,M+\mathfrak {L}-i},i\in {\mathbb {N}},0\le i<M\}\). Under Hypothesis 1, the algorithm explicitly constructs a solution to Problem (10) in a finite sequence of steps, without any approximation process.

From the definitions of \(\mathsf {T} ^\mathfrak {L}\) and \(\mathsf {B} ^\mathfrak {L}\), we immediately see that step 1.5 boils down to

If the set of unknowns \(\{\lambda _{i,j},(i,j)\in {\mathbb {N}}^2,0\le i+j \le M+q-1\}\) is computed by Algorithm 1, then the polynomial \(P(x,y):= \sum _{0\le i+j\le q+M-1} \lambda _{i,j}(x-x_0)^i(y-y_0)^j\) is a solution to Problem (10), and therefore the function \(\varphi ({\mathbf {x}}) :=\exp P({\mathbf {x}})\) satisfies (6). This holds regardless of the values fixed in lines 1.1 and 1.3 of the algorithm.

Remark 1

Note that the algorithm applies to a wide range of partial differential operators, including type-changing operators such as Keldysh operators, \(L_K=\partial _x^2 + y^{2m+1}\partial _y^2+\) lower order terms, or Tricomi operators, \( L_T=\partial _x^2 + x^{2m+1}\partial _y^2 +\) lower order terms, which change from elliptic to hyperbolic type along a smooth parabolic curve.

To conclude this section, we provide a formal definition of a GPW associated with a partial differential operator at a given point.

Definition 7

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\in {\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\le k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). A Generalized Plane Wave (GPW) associated to the differential operator \({\mathcal {L}}_{M,\alpha }\) at the point \((x_0,y_0)\) is a function \(\varphi \) satisfying

Under Hypothesis 1, a GPW can be constructed as the function \(\varphi (x,y)= \exp P(x,y) \), where the coefficients of the polynomial P are computed by Algorithm 1, regardless of the values fixed in the algorithm.

The crucial feature of the construction process is that the algorithm provides an exact solution: in practice, a solution to the initial non-linear rectangular system is obtained from explicit formulas, without numerically solving any system.

The choice of the fixed values in Algorithm 1 will be discussed in the next section. Even though these values affect neither the construction process nor the fact that the corresponding \(\varphi (x,y)= \exp P(x,y) \) is a GPW, their choice will be key to proving the interpolation properties of the corresponding set of GPWs.
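To make the explicit character of the construction concrete, here is a minimal Python sketch for one hypothetical special case, not the general algorithm: the Helmholtz-type operator \(\partial _x^2+\partial _y^2+\beta (x,y)\), so that \(M=2\), \(\alpha _{2,0}=\alpha _{0,2}=1\) (Hypothesis 1 holds) and \(\alpha _{0,0}=\beta \). For this operator, \(e^{-P}{\mathcal {L}}e^{P}=P_{xx}+P_{yy}+(P_x)^2+(P_y)^2+\beta \), and the coefficient of \((x-x_0)^I(y-y_0)^J\) in this expression gives equation \((I,J)\); its only unknown of length \(I+J+2\) that is neither prescribed nor computed at an earlier step is \(\lambda _{I+2,J}\). The normalization used (\(\lambda _{0,0}=0\), all other prescribed \(\lambda _{i,j}\) with \(i<2\) set to zero except \(\lambda _{1,0}\) and \(\lambda _{0,1}\)) anticipates the next section. Function names and the representation of \(\beta \) by its Taylor coefficients are assumptions made for the illustration.

```python
from math import cos, sin


def gpw_coefficients(q, beta, lam10, lam01):
    """Explicit recursion (no linear solve) for the hypothetical model operator
    d_xx + d_yy + beta(x, y), i.e. M = 2, alpha_{2,0} = alpha_{0,2} = 1.

    beta maps (I, J) to the Taylor coefficient of x^I y^J of beta at (x0, y0).
    Normalization: lambda_{0,0} = 0, lambda_{1,0} and lambda_{0,1} given, every
    other lambda_{i,j} with i < 2 set to zero. Returns {(i, j): lambda_{i,j}}.
    """
    lam = {(1, 0): lam10, (0, 1): lam01}

    def get(i, j):
        return lam.get((i, j), 0)

    for level in range(q):
        for I in range(level + 1):  # increasing I, as in the triangular solve
            J = level - I
            # Taylor coefficient of x^I y^J in (d_x P)^2 + (d_y P)^2
            quad = sum(
                (a + 1) * get(a + 1, b) * (I - a + 1) * get(I - a + 1, J - b)
                + (b + 1) * get(a, b + 1) * (J - b + 1) * get(I - a, J - b + 1)
                for a in range(I + 1)
                for b in range(J + 1)
            )
            rhs = (J + 2) * (J + 1) * get(I, J + 2) + quad + beta.get((I, J), 0)
            lam[(I + 2, J)] = -rhs / ((I + 2) * (I + 1))
    return lam


def _dx(p):
    return {(i - 1, j): i * c for (i, j), c in p.items() if i > 0}


def _dy(p):
    return {(i, j - 1): j * c for (i, j), c in p.items() if j > 0}


def _mul(p, r):
    out = {}
    for (i, j), c in p.items():
        for (k, l), d in r.items():
            out[(i + k, j + l)] = out.get((i + k, j + l), 0) + c * d
    return out


def low_order_residual(q, beta, lam):
    """Taylor coefficients (I + J < q) of P_xx + P_yy + P_x^2 + P_y^2 + beta,
    i.e. of e^{-P} (d_xx + d_yy + beta) e^P, computed by plain polynomial
    arithmetic on P = sum lam_{ij} x^i y^j, independently of the recursion."""
    px, py = _dx(lam), _dy(lam)
    F = {}
    for p in (_dx(px), _dy(py), _mul(px, px), _mul(py, py), beta):
        for e, c in p.items():
            F[e] = F.get(e, 0) + c
    return {(I, J): F.get((I, J), 0) for I in range(q) for J in range(q - I)}


kappa, theta = 2.0, 0.7
beta = {(0, 0): kappa**2, (1, 0): 0.5, (0, 1): -0.3, (1, 1): 0.2}
lam = gpw_coefficients(4, beta, 1j * kappa * cos(theta), 1j * kappa * sin(theta))
```

The residual coefficients vanish up to rounding for \(I+J<q\), which is the defining property of a GPW for this operator. With a constant \(\beta =\kappa ^2\) and \(\lambda _{1,0}=\imath \kappa \cos \theta \), \(\lambda _{0,1}=\imath \kappa \sin \theta \), every coefficient of length at least 2 vanishes, so the sketch returns the classical plane wave.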

Remark 2

Under the hypothesis \(\alpha _{0,M}(x_0,y_0)\ne 0\), it would be natural to fix the values of \(\{ \lambda _{i,j}, 0\le j\le M-1, 0\le i\le q+M-1 -j \}\) instead of those of \(\{ \lambda _{i,j}, 0\le i\le M-1, 0\le j\le q+M-1 -i \}\), and an algorithm very similar to Algorithm 1, with the roles of i and j exchanged, would construct the polynomial coefficients of a GPW.

3 Normalization

We refer to the choice of the imposed values in Algorithm 1 as normalization. The discussion presented in this section is summarized in Definition 8.

Within the construction process presented in the previous section, only the design of the function \(\varphi \) as the exponential of a polynomial is related to wave propagation, while Algorithm 1 works for partial differential operators not necessarily related to wave propagation. In particular, the property \({\mathcal {L}}_{M,\alpha }\varphi (x,y)=O\left( \Vert (x,y)-(x_0,y_0) \Vert ^q\right) \) of GPWs is independent of the choice of \((\lambda _{1,0},\lambda _{0,1})\). However, the normalization process described here carries on the idea of adding higher order terms to the phase function of a plane wave, see (3), as was proposed in [15].

We now restrict our attention to a smaller set of partial differential operators, which includes several interesting operators related to wave propagation, by means of an additional hypothesis on the highest order derivatives in \({\mathcal {L}}_{M,\alpha }\), namely Hypothesis 2. Under this hypothesis, we will be able to study the interpolation properties of the associated GPWs in a unified framework. As we will see in this section, choosing only two non-zero fixed values in Algorithm 1 is sufficient to generate a set of linearly independent GPWs. It is then natural to study how the rest of the \(\lambda _{ij}\)s depend on these two values, and the related consequences of Hypothesis 2. These consequences rely on Hypothesis 2 extending the fact that, for classical PWs, \((i\kappa \cos \theta )^2+(i\kappa \sin \theta )^2 = -\kappa ^2 \) is independent of \(\theta \).

3.1 For all GPWs

In Algorithm 1, the number of prescribed coefficients is \(\frac{M(M+1)}{2}+Mq\), and the set of coefficients to be prescribed is the set \(\{ \lambda _{i,j}, 0\le i\le M-1, 0\le j\le q+M-1 -i \}\).

For the sake of simplicity, it is natural to choose most of these values to be zero. Since the unknown \(\lambda _{0,0}\) never appears in the non-linear system, the most natural choice is to set it to zero: this ensures that any GPW \(\varphi \) satisfies \(\varphi (x_0,y_0)=1\). Concerning the subset of \(Mq\) unknowns corresponding to step 1.3 in Algorithm 1, setting these values to zero simply reduces the amount of computation involved in step 1.5 of the algorithm: indeed, for \(I=0\), \(\sum _{k=0}^{M-1}\mathsf {T} ^\mathfrak {L}_{I+M+1,I+k+1} \lambda _{I+k,M+\mathfrak {L}-I-k}=0\), while for \(0<I<M\)

As for the unknowns \(\lambda _{1,0}\) and \(\lambda _{0,1}\), they will be non-zero to mimic the classical plane wave case, and their precise choice will be discussed in the next subsection. The remaining unknowns to be fixed, that is to say the set \(\{ \lambda _{i,j}, 2\le i+j\le M-1 \}\), are also set to zero, here again in order to reduce the cost of computing the right-hand side entries \(\mathsf {B} ^\mathfrak {L}_{M+1+I}\) and of applying step 1.5.

With this normalization, for the operator \({\mathfrak {L}}_{2,\gamma }\), the non-linear terms in \(N_{1,0}\) and \(N_{0,1}\) become, respectively,

Since all but two of the unknowns to be fixed in Algorithm 1 are set to zero, it is now natural to express the \(\lambda _{i,j}\) unknowns computed in step 1.5 of the algorithm as functions of the two non-zero prescribed unknowns, \(\lambda _{1,0}\) and \(\lambda _{0,1}\).

Lemma 3

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\in {\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\!\le \! k+l\!\le \! M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). Under Hypothesis 1, consider a solution to Problem (10) constructed by Algorithm 1 with all the prescribed values \(\lambda _{i,j}\) such that \(i<M\) and \(i+j\ne 1\) set to zero. Each \(\lambda _{i+M,j}\) can be expressed as an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\).

Proof

The fact that \(\lambda _{i+M,j}\) can be expressed as a polynomial in the two variables \(\lambda _{1,0}\) and \(\lambda _{0,1}\) is a direct consequence of the explicit formula in step 1.5 of Algorithm 1, combined with the choice of setting to zero all \(\lambda _{i,j}\) such that \(i<M\) and \(i+j\ne 1\). \(\square \)

Since unknowns are expressed as elements of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\), we will now study the degree of various terms from Algorithm 1 as polynomials with respect to \(\lambda _{1,0}\) and \(\lambda _{0,1}\). To do so, we will start by inspecting the product terms appearing in Faa di Bruno’s formula.

Lemma 4

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\in {\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\le k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). Consider a given polynomial \(P\in {\mathbb {C}}[x,y]\). The non-linear terms \({\mathcal {L}}_{M,\alpha }^NP\), expressed as linear combinations of products of derivatives of P, namely \(\prod _{m=1}^s \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\), contain products of up to \(M\) derivatives of P, namely \(\partial _x^{i_m}\partial _y^{j_m} P \), counting repetitions. The only products that have exactly \(M\) terms are \((\partial _xP)^k(\partial _yP)^{M-k}\) for \(0\le k \le M\), whereas all the other products have fewer than \(M\) terms.

Proof

Since the operator \({\mathcal {L}}_{M,\alpha }^N\) is defined via Faa di Bruno’s formula, we will proceed by careful examination of the summation and product indices in the latter.

The number of distinct terms in the product is s, repetitions being accounted for by the \(k_m\)s, and the total number of terms counting repetitions is \(\mu =\sum _{m=1}^s k_m\). Since in \({\mathcal {L}}_{M,\alpha }^N\) the indices are such that \(1\le \mu \le \ell \le M\), none of the products \(\prod _{m=1}^s \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\) can have more than \(M\) terms counting repetitions.

For \(s=1\), in the set \(p_1((k,\ell -k),\mu )\), \((i_1,j_1)\in {\mathbb {N}}^2\) are such that \(i_1+j_1 \ge 1\) and \(k_1\in {\mathbb {N}}\) is such that \(k_1(i_1+j_1)=\ell \). Since \(\ell \le M\), such a term appears in Faa di Bruno’s formula as a product of \(\mu =M\) terms if and only if \(\ell =M\), \(k_1=M\), and therefore \(i_1+j_1= 1\). There are then only two possibilities: either \((i_1,j_1)=(1,0)\) corresponding to the term \((\partial _xP)^M\), or \((i_1,j_1)=(0,1)\) corresponding to the term \((\partial _yP)^M\).

For \(s=2\), in the set \(p_2((k,\ell -k),\mu )\), \((i_1,j_1,i_2,j_2)\in {\mathbb {N}}^4\) are such that \(i_1+j_1\ge 1\), \(i_2+j_2\ge 1\), \((i_1,j_1)\prec (i_2,j_2)\), and \((k_1,k_2)\in {\mathbb {N}}^2\) is such that \(\mu =k_1+k_2\) and \(k_1(i_1+j_1)+k_2(i_2+j_2)=\ell \). Since \(\ell =k_1(i_1+j_1)+k_2(i_2+j_2)\ge k_1+k_2=\mu \) and \(\ell \le M\) such a term appears in Faa di Bruno’s formula as a product of \(\mu =M\) terms if and only if \(\ell =M\) and \(k_1+k_2=M\). There are then two possible cases: either \(i_2+j_2>1\), then \(M=k_1(i_1+j_1)+k_2(i_2+j_2)>k_1+k_2=M\), so there is no such term in the sum, or \(i_2+j_2=1\), then necessarily \((i_1,j_1)=(0,1)\) and \((i_2,j_2)=(1,0)\), corresponding to the terms \((\partial _xP)^k(\partial _yP)^{M-k}\) for any k from 0 to \(M\).

For \(s\ge 3\), in the set \(p_s((k,\ell -k),\mu )\), for all \(m\in {\mathbb {N}}\) such that \(1\le m\le s\), \((i_m,j_m)\in {\mathbb {N}}^2\) and \(k_m\in {\mathbb {N}}\) are such that \(i_m+j_m\ge 1\), \(\sum _{m=1}^s k_m(i_m+j_m)=\ell \), \(\mu =\sum _{m=1}^s k_m\) and \((i_1,j_1)\prec (i_2,j_2)\prec (i_3,j_3)\). Because of this last condition, it is clear that \(i_3+j_3>1\). Since \(\ell \le M\) and \(\ell =\sum _{m=1}^s k_m(i_m+j_m)\ge \sum _{m=1}^s k_m=\mu \), such a term appears in Faa di Bruno’s formula as a product of \(\mu =M\) terms if and only if \(\ell =M\) and \(\sum _{m=1}^s k_m=M\). But then \(M=\sum _{m=1}^s k_m(i_m+j_m)> \sum _{m=1}^s k_m=M\), so there is no such term in the sum.

The claim is proved. \(\square \)
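The counting argument of Lemma 4 can also be checked mechanically for small \(M\). We assume, as in the multivariate Faa di Bruno formula for the exponential, that the products \(\prod _{m=1}^s (\partial _x^{i_m}\partial _y^{j_m}P)^{k_m}\) attached to the order \((k,\ell -k)\) are indexed by the multisets of non-zero multi-indices summing to \((k,\ell -k)\), so that \(\mu \) is the size of the multiset. The Python sketch below (function names hypothetical) enumerates these multisets for \(1\le \ell \le M\); it is a numerical illustration, not a substitute for the proof.

```python
from itertools import product


def vector_partitions(a, b):
    """Yield every multiset of nonzero multi-indices summing to (a, b) exactly
    once, as a tuple of parts listed in a fixed non-decreasing order."""
    parts = [(i, j) for i, j in product(range(a + 1), range(b + 1)) if i + j > 0]

    def rec(ra, rb, start, acc):
        if ra == 0 and rb == 0:
            yield tuple(acc)
            return
        for idx in range(start, len(parts)):
            i, j = parts[idx]
            if i <= ra and j <= rb:
                acc.append((i, j))
                yield from rec(ra - i, rb - j, idx, acc)
                acc.pop()

    yield from rec(a, b, 0, [])


def products_with_M_factors(M):
    """Scan all orders (k, ell - k), 1 <= ell <= M: check that no product has
    more than M factors (Lemma 4) and return those with exactly M factors."""
    found = []
    for ell in range(1, M + 1):
        for k in range(ell + 1):
            for p in vector_partitions(k, ell - k):
                assert len(p) <= M
                if len(p) == M:
                    found.append(p)
    return found


for M in range(2, 6):
    top = products_with_M_factors(M)
    print(M, len(top))  # M + 1 products: (d_x P)^k (d_y P)^(M-k), 0 <= k <= M
```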

Lemma 5

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\in {\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\!\le \! k+l\!\le \! M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). Consider a given polynomial \(P\in {\mathbb {C}}[x,y]\). The quantity \(\partial _x^{I_0}\partial _y^{J_0}{\mathcal {L}}_{M,\alpha }^NP\) is a linear combination of terms \(\partial _x^{I_0}\partial _y^{J_0}\left( \prod _{m=1}^s \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\right) \), where the indices come from Faa di Bruno’s formula. Each of these \(\partial _x^{I_0}\partial _y^{J_0}\left( \prod _{m=1}^s \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\right) \) can be expressed as a linear combination of products \(\prod _{m=1}^t (\partial _x^{a_m}\partial _y^{b_m} P)^{c_m}\) where the indices satisfy \(\sum _{m=1}^t c_m(a_m+b_m)\le {I_0}+{J_0}+M\).

Proof

Thanks to the product rule, the derivative \(\displaystyle \partial _x^{I_0}\partial _y^{J_0}\left( \prod _{m=1}^s \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\right) \) can be expressed as a linear combination of several terms \(\displaystyle \prod _{m=1}^s \partial _x^{I_m}\partial _y^{J_m}\left[ \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\right] \), where \(\displaystyle \sum _{m=1}^sI_m=I_0\) and \(\sum _{m=1}^sJ_m=J_0\).

We can prove by induction on k that \(\displaystyle \partial _x^{I}\partial _y^{J}\left[ \left( \partial _x^{i}\partial _y^{j} P \right) ^{k}\right] \) can be expressed, for all \(\displaystyle (i,j,I,J)\in {\mathbb {N}}^4\), as a linear combination of products \(\displaystyle \prod _{m=1}^M (\partial _x^{a_m}\partial _y^{b_m} P)^{c_m}\) where the indices satisfy \(\displaystyle \sum _{m=1}^M c_m(a_m+b_m)\le I+J+k(i+j)\):

1. it is evidently true for \(k=1\);
2. suppose that it is true for \(k_0\ge 1\); then, for any \((i,j,I,J)\in {\mathbb {N}}^4\), the product rule applied to \(\partial _x^{i}\partial _y^{j} P\times \left( \partial _x^{i}\partial _y^{j} P \right) ^{k_0}\) yields

   $$\begin{aligned} \partial _x^{I}\partial _y^{J}\left[ \left( \partial _x^{i}\partial _y^{j} P \right) ^{k_0+1}\right] = \sum _{{\tilde{i}} =0}^{I} \sum _{{\tilde{j}} =0}^{J} \begin{pmatrix} I\\ {\tilde{i}} \end{pmatrix} \begin{pmatrix} J\\ {\tilde{j}} \end{pmatrix} \partial _x^{i+I-{\tilde{i}}}\partial _y^{j+J-{\tilde{j}}} P \partial _x^{{\tilde{i}}}\partial _y^{{\tilde{j}}} \left[ \left( \partial _x^{i}\partial _y^{j} P \right) ^{k_0}\right] , \end{aligned}$$

   where, by the induction hypothesis, each \(\partial _x^{{\tilde{i}}}\partial _y^{{\tilde{j}}} \left[ \left( \partial _x^{i}\partial _y^{j} P \right) ^{k_0}\right] \) can be expressed as a linear combination of products \(\prod _{m=1}^M (\partial _x^{a_m}\partial _y^{b_m} P)^{c_m}\) with \(\sum _{m=1}^M c_m(a_m+b_m)\le {\tilde{i}}+{\tilde{j}}+k_0(i+j)\), so that each term in the double sum can be expressed as a linear combination of products \( \prod _{m=1}^{M+1} (\partial _x^{a_m}\partial _y^{b_m} P)^{c_m}\) where \(a_{M+1} :=i+I-{\tilde{i}}\), \(b_{M+1} := j+J-{\tilde{j}}\) and \(c_{M+1}:=1\), which yields \(\sum _{m=1}^{M+1} c_m(a_m+b_m)=\sum _{m=1}^{M} c_m(a_m+b_m)+(i+I-{\tilde{i}}+ j+J-{\tilde{j}})\) and therefore \(\sum _{m=1}^{M+1} c_m(a_m+b_m)\le k_0(i+j)+(i+I+ j+J)=I+J+(k_0+1)(i+j)\). This concludes the proof by induction.

Finally the derivative \(\partial _x^{I_0}\partial _y^{J_0}\left( \prod _{m=1}^s \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\right) \) can be expressed as a linear combination of several terms \(\prod _{m=1}^s \prod _{{\tilde{m}}=1}^M (\partial _x^{a_{{\tilde{m}}}}\partial _y^{b_{{\tilde{m}}}} P)^{c_{{\tilde{m}}}}\), with \(\sum _{{\tilde{m}}=1}^M c_{{\tilde{m}}}(a_{{\tilde{m}}}+b_{{\tilde{m}}})\le I_m+J_m+k_m(i_m+j_m)\), in other words it can be expressed as a linear combination of several terms \(\prod _{m=1}^{Ms} (\partial _x^{a_{ m}}\partial _y^{b_{m}} P)^{c_{m}}\), with \(\sum _{ m=1}^{Ms} c_{ m}(a_{ m}+b_{m})\le \sum _{m=1}^s I_m+J_m+k_m(i_m+j_m)=I_0+J_0+\sum _{m=1}^s k_m(i_m+j_m)\). For any \(\partial _x^{I_0}\partial _y^{J_0}\left( \prod _{m=1}^s \left( \partial _x^{i_m}\partial _y^{j_m} P \right) ^{k_m}\right) \) coming from \(\partial _x^{I_0}\partial _y^{J_0}{\mathcal {L}}_{M,\alpha }^NP\), the summation indices from Faa di Bruno’s formula satisfy \(\sum _{m=1}^s k_m(i_m+j_m)=\ell \), so the products \(\prod _{m=1}^{Ms} (\partial _x^{a_{ m}}\partial _y^{b_{m}} P)^{c_{m}}\) are such that \(\sum _{ m=1}^{Ms} c_{ m}(a_{ m}+b_{m})\le I_0+J_0+M\). \(\square \)
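The product-rule mechanism behind Lemma 5 can be illustrated on a single product with a constant coefficient. In the hypothetical representation below, a product of derivatives of P is stored as a sorted tuple of multi-indices, one per factor with repetitions, so that \(\sum c_m(a_m+b_m)\) is just the sum of all entries. Each derivative hits exactly one factor and never creates a new one, so for such a product the weight after applying \(\partial _x^{I_0}\partial _y^{J_0}\) equals \(I_0+J_0+\ell \) exactly, which attains the bound of Lemma 5 since \(\ell \le M\). Function names are illustrative assumptions.

```python
from collections import Counter


def differentiate(terms, direction):
    """Apply d_x (direction=(1, 0)) or d_y (direction=(0, 1)) to a linear
    combination of products of derivatives of P.

    terms maps a sorted tuple of multi-indices (a, b), one entry per factor
    d_x^a d_y^b P counted with repetition, to an integer coefficient.
    """
    out = Counter()
    for factors, coeff in terms.items():
        for pos, (a, b) in enumerate(factors):
            # Product rule: differentiate one factor, keep the others unchanged.
            new = list(factors)
            new[pos] = (a + direction[0], b + direction[1])
            out[tuple(sorted(new))] += coeff
    return out


def weight(factors):
    """sum_m c_m (a_m + b_m) for a product stored with repetitions."""
    return sum(a + b for a, b in factors)


def apply_derivatives(terms, I0, J0):
    for _ in range(I0):
        terms = differentiate(terms, (1, 0))
    for _ in range(J0):
        terms = differentiate(terms, (0, 1))
    return terms


start = Counter({((0, 1), (1, 0), (1, 0)): 1})  # (d_x P)^2 (d_y P), ell = 3
result = apply_derivatives(start, 2, 1)         # apply d_x^2 d_y
print({weight(f) for f in result})              # -> {6}, i.e. I0 + J0 + ell
```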

The following two results concern the coefficients \(\lambda _{i+M,j}\) computed in Algorithm 1.

Proposition 2

Consider a point \((x_0,y_0)\in \mathbb R^2\), given \(q\in \mathbb N^*\) and \(M\in \mathbb N\), with \(M\ge 2\), a set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\!\in \!{\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\!\le \! k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). Under Hypothesis 1, consider a solution to Problem (10) constructed by Algorithm 1 with all the fixed values \(\lambda _{i,j}\) such that \(i<M\) and \(i+j\ne 1\) set to zero. As an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\), \(\lambda _{M,0}\) has degree exactly \(M\).

Proof

The formula to compute \(\lambda _{M,0}\) in Algorithm 1 comes from the \((I,J)=(0,0)\) equation in System (10), that is to say \({\mathcal {L}}_{M,\alpha }^AP(x_0,y_0)=-\alpha _{0,0}(x_0,y_0)\). It reads

and the sum is actually zero since the \(\lambda _{k,M-k}\) unknowns are prescribed to zero for \(k<M\). The definitions of \(\mathsf {B} ^0\) and \(\mathsf {T} ^0\) then give

Since the \(\lambda _{k,\ell -k}\) unknowns are prescribed to zero for all \(1<\ell <M\) and all k, the double sum term reduces to \( \alpha _{0,1}(x_0,y_0)\lambda _{0,1}+\alpha _{1,0}(x_0,y_0)\lambda _{1,0}\). The non-linear terms from \({\mathcal {L}}_{M,\alpha }^NP\), namely \(\prod _{m=1}^s (\partial _x^{i_m}\partial _y^{j_m}P)^{k_m}\), are products of at most \(M\) terms, counting repetitions, according to Lemma 4. So \({\mathcal {L}}_{M,\alpha }^NP(x_0,y_0)\) is a linear combination of product terms reading \(\prod _{m=1}^s (\lambda _{i_m,j_m})^{k_m}\) with at most \(M\) factors. Moreover, since P is constructed by Algorithm 1, we know from Corollary 1 that these \( \lambda _{i_m,j_m}\)s have a length of the multi-index at most equal to \(M-1\), so they are either \(\lambda _{1,0}\) or \(\lambda _{0,1}\) or prescribed to zero. This means that in \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) each one of these \( \lambda _{i_m,j_m}\) is at most of degree one. So in \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) each \(\prod _{m=1}^s (\lambda _{i_m,j_m})^{k_m}\) is a product of at most \(M\) factors, each of degree at most one, and is therefore of degree at most \(M\). As a result

as an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) is of degree at most \(M\).

Finally, the term \((\partial _xP)^M\) from \({\mathcal {L}}_{M,\alpha }^NP\) identified in Lemma 4 corresponds to a term \(\alpha _{M,0}(x_0,y_0)(\lambda _{1,0})^M\) in the expression of \(\lambda _{M,0}\), and this term is non-zero under Hypothesis 1. As a conclusion \(\lambda _{M,0}\) as an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) is of degree equal to \(M\). \(\square \)

Proposition 3

Consider a point \((x_0,y_0)\in \mathbb R^2\), given \(q\in \mathbb N^*\) and \(M\in \mathbb N\), with \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\!\in \!{\mathcal {C}}^{q-1} \!\text { at }\!(x_0,y_0),0\!\le \! k+l\!\le \! M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). Under Hypothesis 1, consider a solution to Problem (10) constructed by Algorithm 1 with all the fixed values \(\lambda _{i,j}\) such that \(i<M\) and \(i+j\ne 1\) set to zero. As an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\), each \(\lambda _{i+M,j}\) has a total degree at most equal to the length \(i+j+M\) of its multi-index.

Proof

The formula to compute \(\lambda _{I+M,\mathfrak {L}-I}\) in Algorithm 1 comes from the \((I,J)=(I,\mathfrak {L}-I)\) equation in System (10), that is to say \(\partial _x^I\partial _y^{\mathfrak {L}-I}{\mathcal {L}}_{M,\alpha }^AP(x_0,y_0)=-\partial _x^I\partial _y^{\mathfrak {L}-I}\alpha _{0,0}(x_0,y_0)\). It reads

We will proceed by induction on \(\mathfrak {L}\):

1. the result has been proved to be true for \(\mathfrak {L}= 0\) in Proposition 2;
2. suppose the result is true for all \(\tilde{\mathfrak {L}}\in {\mathbb {N}}\) such that \(\tilde{\mathfrak {L}}\le \mathfrak {L}\). Then all the linear terms in \(N_{I,\mathfrak {L}+1-I}\) have a length of the multi-index at most equal to \(M+\mathfrak {L}\), so by hypothesis their degree as elements of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) is at most equal to \(M+\mathfrak {L}\). Thanks to Lemma 5, all the non-linear terms in \(N_{I,\mathfrak {L}+1-I}\) can be expressed as linear combinations of products \(\prod _{m=1}^t (\lambda _{a_m,b_m})^{c_m}\) where the indices satisfy \(\sum _{m=1}^t c_m(a_m+b_m)\le \mathfrak {L}+1+M\); since each factor \(\lambda _{a_m,b_m}\) is of degree at most \(a_m+b_m\) (by hypothesis, or because it is prescribed), their degree as elements of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) is at most equal to \(M+\mathfrak {L}+1\). The last step is to prove that the \(\lambda _{I+k,M+\mathfrak {L}+1-I-k}\) are also of degree at most equal to \(M+\mathfrak {L}+1\), and we proceed by induction on I:
   (a) for \(I=0\), all \(\lambda _{I+k,M+\mathfrak {L}+1-I-k}\) for \(0\le k\le M-1\) satisfy the two conditions \(I+k<M\) and \(I+k+M+\mathfrak {L}+1-I-k = M+\mathfrak {L}+1\ne 1\), so they are all prescribed to zero and their degree as elements of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) is at most equal to \(M+\mathfrak {L}+1\);
   (b) suppose that, for a given \(I\in {\mathbb {N}}\), the \(\lambda _{{\tilde{I}}+k,M+\mathfrak {L}+1-{\tilde{I}}-k}\) for all \({\tilde{I}}\in {\mathbb {N}}\) such that \({\tilde{I}}\le I\) are also of degree at most equal to \(M+\mathfrak {L}+1\); then it is clear from Eq. (24) that \(\lambda _{I+1+M,\mathfrak {L}-I}\) is also of degree at most equal to \(M+\mathfrak {L}+1\).

This concludes the proof. \(\square \)
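Propositions 2 and 3 can be checked symbolically on a hypothetical model operator, \(\partial _x^2+\partial _y^2+\beta (x,y)\) with \(M=2\), \(\alpha _{2,0}=\alpha _{0,2}=1\), \(\alpha _{0,0}=\beta \) and all other \(\alpha _{k,l}\) zero. For this operator \(e^{-P}{\mathcal {L}}e^{P}=P_{xx}+P_{yy}+(P_x)^2+(P_y)^2+\beta \), and each equation \((I,J)\) of (10) can be solved explicitly for \(\lambda _{I+2,J}\). The Python sketch below keeps \(u=\lambda _{1,0}\) and \(v=\lambda _{0,1}\) as formal variables, sets every other prescribed value to zero, stores elements of \({\mathbb {C}}[u,v]\) (here with rational coefficients) as exponent dictionaries, and reports total degrees. Function names and the choice of \(\beta \) are assumptions; this checks one example and is not a general proof.

```python
from collections import defaultdict
from fractions import Fraction

# Polynomials in (u, v) = (lambda_{1,0}, lambda_{0,1}), stored as
# {(deg_u, deg_v): coefficient}; the empty dict is the zero polynomial.


def padd(p, r):
    out = defaultdict(Fraction)
    for d in (p, r):
        for e, c in d.items():
            out[e] += c
    return {e: c for e, c in out.items() if c != 0}


def pmul(p, r):
    out = defaultdict(Fraction)
    for (a, b), c in p.items():
        for (a2, b2), c2 in r.items():
            out[(a + a2, b + b2)] += c * c2
    return {e: c for e, c in out.items() if c != 0}


def pscale(p, s):
    return {e: c * s for e, c in p.items() if c * s != 0}


def degree(p):
    """Total degree; -1 stands for the zero polynomial."""
    return max((a + b for a, b in p), default=-1)


def symbolic_lambdas(q, beta):
    """Recursion for the hypothetical model operator d_xx + d_yy + beta (M = 2),
    keeping lambda_{1,0} = u and lambda_{0,1} = v symbolic and setting every
    other prescribed lambda_{i,j} (i < 2) to zero. beta[(I, J)] is a rational
    Taylor coefficient of beta at (x0, y0)."""
    lam = {(1, 0): {(1, 0): Fraction(1)}, (0, 1): {(0, 1): Fraction(1)}}

    def get(i, j):
        return lam.get((i, j), {})

    for level in range(q):
        for I in range(level + 1):
            J = level - I
            rhs = pscale(get(I, J + 2), (J + 2) * (J + 1))
            for a in range(I + 1):
                for b in range(J + 1):
                    # Taylor coefficients of (d_x P)^2 and (d_y P)^2 at x^I y^J
                    px = pmul(pscale(get(a + 1, b), a + 1),
                              pscale(get(I - a + 1, J - b), I - a + 1))
                    py = pmul(pscale(get(a, b + 1), b + 1),
                              pscale(get(I - a, J - b + 1), J - b + 1))
                    rhs = padd(rhs, padd(px, py))
            rhs = padd(rhs, {(0, 0): Fraction(beta.get((I, J), 0))})
            lam[(I + 2, J)] = pscale(rhs, Fraction(-1, (I + 2) * (I + 1)))
    return lam


beta = {(0, 0): 1, (1, 0): 1, (0, 2): 1}  # hypothetical: beta = 1 + x + y^2 locally
lam = symbolic_lambdas(3, beta)
print(degree(lam[(2, 0)]))  # -> 2, i.e. exactly M, as in Proposition 2
```

Here \(\lambda _{2,0}=-(u^2+v^2+\beta (x_0,y_0))/2\), and every \(\lambda _{i+2,j}\) has degree at most \(i+j+2\), as in Proposition 3. Imposing the plane-wave-like relation \(u^2+v^2=-\beta (x_0,y_0)\) makes \(\lambda _{2,0}\) vanish, which hints at the degree reduction discussed in Sect. 3.2.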

As explained from an algebraic viewpoint in Sect. 3.2 of [15], the degree of \(\lambda _{i+M,j}\) as an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) will be affected by the choice of the last two prescribed values, namely \(\lambda _{1,0}\) and \(\lambda _{0,1}\). Indeed, if \(\lambda _{1,0}\) and \(\lambda _{0,1}\) satisfy a polynomial identity \(P_l(\lambda _{1,0},\lambda _{0,1})=0\), then we can consider the quotient ring \({\mathbb {C}} [\lambda _{1,0},\lambda _{0,1}]/(P_l)\).

Note that setting \(\{ \lambda _{i,j}, 1< i+j\le M-1 \}\) to non-zero values may be useful to treat operators that do not satisfy Hypothesis 2, but this is not our goal here.

3.2 For each GPW

In order to obtain a set of linearly independent GPWs, the values of \(\lambda _{1,0}\) and \(\lambda _{0,1}\) will be chosen differently for each GPW. However, the values of \(\lambda _{1,0}\) and \(\lambda _{0,1}\) will satisfy a property common to all GPWs. Just as the coefficients of any plane wave of wavenumber \(\kappa \) satisfy \((\lambda _{1,0})^2+(\lambda _{0,1})^2=-\kappa ^2\), independently of the direction of propagation \(\theta \), since \(\lambda _{1,0}=\imath \kappa \cos \theta \) and \(\lambda _{0,1}=\imath \kappa \sin \theta \), under Hypothesis 2 the coefficients of each GPW will be chosen so that the quantity

is identical for all GPWs, as we will see in the following proposition and theorem.

This common property will be crucial to prove the interpolation properties of the corresponding set of functions, through its consequences on the degree of each \(\lambda _{i+M,j}\) as an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\). As the plane wave case suggests, we will see that a judicious choice of \(\lambda _{1,0}\) and \(\lambda _{0,1}\) allows \(\lambda _{i+M,j}\) to be expressed as a polynomial of lower degree.

We first need an intermediate result concerning the polynomial \({\mathcal {L}}_{M,\alpha }^NP\).

Lemma 6

Consider a point \((x_0,y_0)\in {\mathbb {R}}^2\), a given \(q\in {\mathbb {N}}^*\), a given \(M\in {\mathbb {N}}\), \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\in {\mathcal {C}}^{q-1} \text { at }(x_0,y_0),0\le k+l\le M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). Consider a given polynomial \(P\in {\mathbb {C}}[x,y]\). For any \(\mathfrak {L}\in {\mathbb {N}}\) and any \(I\in {\mathbb {N}}\) such that \(I\le \mathfrak {L}+1\), the quantity \(\partial _x^I\partial _y^{\mathfrak {L}+1-I} \left[ {\mathcal {L}}_{M,\alpha }^NP \right] \) can be expressed as a linear combination of products \(\prod _{t=1}^\mu \partial _x^{i_t+I_t}\partial _y^{j_t+J_t}P \), with \(\sum _{t=1}^\mu I_t =I\), \(\sum _{t=1}^\mu J_t =\mathfrak {L}+1-I\), \(\sum _{t=1}^\mu i_t =k\), and \(\sum _{t=1}^\mu j_t =\ell -k\). Moreover, for each product term, there exists \(t_0\in {\mathbb {N}}\), \(1\le t_{0}\le \mu \) such that \(I_{t_0}\ne 0\) or \(J_{t_0}\ne 0\).

Proof

The quantity \({\mathcal {L}}_{M,\alpha }^NP\) can be expressed, from Faà di Bruno’s formula, as a linear combination of products \(\prod _{m=1}^s\left( \partial _x^{i_m}\partial _y^{j_m}P \right) ^{k_m}\), with \((i_{m_1},j_{m_1})\ne (i_{m_2},j_{m_2})\) for all \(m_1\ne m_2\), \(\sum _{m=1}^sk_m = \mu \), \(\sum _{m=1}^sk_mi_m =k\), and \(\sum _{m=1}^sk_mj_m =\ell -k\). Therefore, by repeating factors, \({\mathcal {L}}_{M,\alpha }^NP\) can also be expressed as a linear combination of products \(\prod _{t=1}^\mu \partial _x^{i_t}\partial _y^{j_t}P \), with possibly \((i_{t_1},j_{t_1})= (i_{t_2},j_{t_2})\) for \(t_1\ne t_2\), \(\sum _{t=1}^\mu i_t =k\), and \(\sum _{t=1}^\mu j_t =\ell -k\). So, by Leibniz’s rule, the quantity \(\partial _x^I\partial _y^{\mathfrak {L}+1-I} \left[ {\mathcal {L}}_{M,\alpha }^NP\right] \) can be expressed as a linear combination of products \(\prod _{t=1}^\mu \partial _x^{i_t+I_t}\partial _y^{j_t+J_t}P \), with \(\sum _{t=1}^\mu I_t =I\) and \(\sum _{t=1}^\mu J_t =\mathfrak {L}+1-I\).

Consider such a given product term \(\prod _{t=1}^\mu \partial _x^{i_t+I_t}\partial _y^{j_t+J_t}P \), and suppose that \(I_t=J_t=0\) for all \(t\). Then \(I=\sum _{t=1}^\mu I_t =0\) and \(\mathfrak {L}+1-I=\sum _{t=1}^\mu J_t =0\), which is impossible since \(\mathfrak {L}+1>0\). \(\square \)
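The Leibniz step of this proof, and the counting argument behind the last claim of Lemma 6, can be checked on small examples. The Python sketch below is our own illustration, not part of Algorithm 1: bivariate polynomials are stored as dictionaries `{(i, j): coefficient}`, generic polynomial factors stand in for the derivatives \(\partial _x^{i_t}\partial _y^{j_t}P\), and the helper names (`mul`, `deriv`, `leibniz`, `every_term_differentiated`) are hypothetical.

```python
from math import factorial


def mul(p, q):
    """Product of two bivariate polynomials stored as {(i, j): coefficient}."""
    r = {}
    for (a, b), c in p.items():
        for (d, e), g in q.items():
            r[(a + d, b + e)] = r.get((a + d, b + e), 0) + c * g
    return {k: v for k, v in r.items() if v != 0}


def deriv(p, I, J):
    """Apply d_x^I d_y^J to a bivariate polynomial."""
    r = {}
    for (a, b), c in p.items():
        if a >= I and b >= J:
            falling = (factorial(a) // factorial(a - I)) * (factorial(b) // factorial(b - J))
            r[(a - I, b - J)] = c * falling
    return r


def compositions(n, mu):
    """All mu-tuples of non-negative integers summing to n."""
    if mu == 1:
        yield (n,)
        return
    for first in range(n + 1):
        for rest in compositions(n - first, mu - 1):
            yield (first,) + rest


def multinomial(n, ks):
    """n! / (k_1! ... k_mu!) for non-negative ks summing to n."""
    r = factorial(n)
    for k in ks:
        r //= factorial(k)
    return r


def leibniz(factors, I, J):
    """d_x^I d_y^J of prod(factors), expanded with the general Leibniz rule."""
    mu = len(factors)
    total = {}
    for Is in compositions(I, mu):          # distribution (I_1, ..., I_mu)
        for Js in compositions(J, mu):      # distribution (J_1, ..., J_mu)
            term = {(0, 0): multinomial(I, Is) * multinomial(J, Js)}
            for f, a, b in zip(factors, Is, Js):
                term = mul(term, deriv(f, a, b))
            for k, v in term.items():
                total[k] = total.get(k, 0) + v
    return {k: v for k, v in total.items() if v != 0}


def every_term_differentiated(I, J, mu):
    """True if every Leibniz term has some factor with I_t != 0 or J_t != 0."""
    return all(any(a or b for a, b in zip(Is, Js))
               for Is in compositions(I, mu) for Js in compositions(J, mu))
```

For \(I+J>0\) every distribution returned by `compositions` has a non-zero entry, which is exactly the argument used above; for \(I=J=0\) the single distribution is the zero one.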

The following two results gather the consequences of this choice for the coefficients \(\lambda _{i+M,j}\) computed by Algorithm 1.

Proposition 4

Consider a point \((x_0,y_0)\in \mathbb R^2\), given \(q\in \mathbb N^*\) and \(M\in \mathbb N\), with \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\!\in \!{\mathcal {C}}^{q-1} \!\text { at }\!(x_0,y_0),0\!\le \! k+l\!\le \!M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). Under Hypotheses 1 and 2, consider a solution to Problem (10) constructed by Algorithm 1 with all the prescribed values \(\lambda _{i,j}\) such that \(i<M\) and \(i+j\ne 1\) set to zero, and

$$\lambda _{1,0}=\imath \kappa \cos \theta \quad \text {and}\quad \lambda _{0,1}=\imath \kappa \sin \theta , \qquad (25)$$

for some \(\theta \in {\mathbb {R}}\) and \(\kappa \in {\mathbb {C}}^*\). As an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\), \(\lambda _{M,0}\) can be expressed as a polynomial of degree at most \(M-1\), and its coefficients are independent of \(\theta \).

Note that once condition (25) is imposed on \(\lambda _{1,0}\) and \(\lambda _{0,1}\), an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) can be represented by different polynomials, possibly of different degrees, simply because under Hypothesis 2 and (25) we have

See paragraph 3.2 in [15] for an algebraic viewpoint on this remark.

Proof

Since

again, the term to investigate is \({\mathcal {L}}_{M,\alpha }^NP(x_0,y_0)\). Lemma 4 identifies the products of \(M\) factors in \({\mathcal {L}}_{M,\alpha }^NP\) and, by the definition of \({\mathcal {L}}_{M,\alpha }^N\), they appear in the following linear combination

Returning to the expression of \(\lambda _{M,0}\), Hypothesis 2 implies that the only possible terms of degree \(M\) appear in the following linear combination:

Finally, thanks to (25), these terms of degree \(M\), gathered in (26), can be expressed as a polynomial of degree at most \(M-1\). \(\square \)
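The remark following Proposition 4, that an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) can be represented by polynomials of different degrees, can be made concrete for a pair \(\lambda _{1,0}=\imath \kappa \cos \theta \), \(\lambda _{0,1}=\imath \kappa \sin \theta \), which satisfies \((\lambda _{1,0})^2+(\lambda _{0,1})^2=-\kappa ^2\) for every \(\theta \). The Python sketch below is only an illustration: it does not use Hypothesis 2, and the normal form (at most one factor \(\lambda _{0,1}\) per monomial) and the function names are our own choices. It rewrites a polynomial using \((\lambda _{0,1})^2=-\kappa ^2-(\lambda _{1,0})^2\), so that, for instance, \((\lambda _{1,0})^2+(\lambda _{0,1})^2\), of degree 2, becomes the constant \(-\kappa ^2\).

```python
import cmath
from collections import defaultdict


def reduce_with_circle(poly, kappa):
    """Rewrite a polynomial in (l10, l01) using l01**2 = -kappa**2 - l10**2.

    poly maps (i, j) to the coefficient of l10**i * l01**j. The result agrees
    with poly at every (l10, l01) = (1j*kappa*cos(t), 1j*kappa*sin(t)), and each
    of its monomials has j <= 1.
    """
    result = defaultdict(complex)
    stack = list(poly.items())
    while stack:
        (i, j), c = stack.pop()
        if j <= 1:
            result[(i, j)] += c
        else:
            # l10^i l01^j = l10^i l01^(j-2) * (-kappa^2 - l10^2)
            stack.append(((i, j - 2), -kappa**2 * c))
            stack.append(((i + 2, j - 2), -c))
    # drop coefficients that cancelled (up to round-off)
    return {m: c for m, c in result.items() if abs(c) > 1e-12}


def degree(poly):
    """Total degree of a polynomial stored as {(i, j): coefficient}; -1 for zero."""
    return max((i + j for (i, j), c in poly.items() if c != 0), default=-1)


def evaluate(poly, l10, l01):
    """Value of the polynomial at (l10, l01)."""
    return sum(c * l10**i * l01**j for (i, j), c in poly.items())
```

Applied to \(((\lambda _{1,0})^2+(\lambda _{0,1})^2)^2\), the same reduction returns the constant \(\kappa ^4\), so a degree-4 polynomial and a degree-0 polynomial represent the same element once \(\lambda _{1,0}\) and \(\lambda _{0,1}\) are tied to \(\kappa \) and \(\theta \) in this way.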

Proposition 5

Consider a point \((x_0,y_0)\in \mathbb R^2\), given \(q\in \mathbb N^*\) and \(M\in \mathbb N\), with \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\!\in \!{\mathcal {C}}^{q-1} \!\text { at }\!(x_0,y_0),0\!\le \! k+l\!\le \! M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). Under Hypotheses 1 and 2, consider a solution to Problem (10) constructed by Algorithm 1 with all the fixed values \(\lambda _{i,j}\) such that \(i<M\) and \(i+j\ne 1\) set to zero, and

$$\lambda _{1,0}=\imath \kappa \cos \theta \quad \text {and}\quad \lambda _{0,1}=\imath \kappa \sin \theta ,$$

for some \(\theta \in {\mathbb {R}}\) and \(\kappa \in {\mathbb {C}}^*\). As an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\), each \(\lambda _{i+M,j}\) can be expressed as a polynomial of degree at most \(i+j+M-1\), and its coefficients are independent of \(\theta \).

Proof

From Algorithm 1, the expression of \(\lambda _{I+M,\mathfrak {L}-I}\) reads

We will proceed again by induction on \(\mathfrak {L}\):

1. the result has been proved for \(\mathfrak {L}= 0\) in Proposition 4;

2. suppose that the result holds for all \(\tilde{\mathfrak {L}}\in {\mathbb {N}}\) such that \(\tilde{\mathfrak {L}}\le \mathfrak {L}\), for a given \(\mathfrak {L}\in {\mathbb {N}}\); we then focus on \(N_{I,\mathfrak {L}+1-I}\), given by

$$\begin{aligned} \begin{aligned} N_{0,\mathfrak {L}+1}&= \sum _{k=0}^{M} \sum _{{\tilde{j}}=0}^{\mathfrak {L}} k!\frac{\left( {M}-k+{\tilde{j}}\right) !}{{\tilde{j}} !} {\mathcal {D}}^{(0,\mathfrak {L}+1-{\tilde{j}})}\alpha _{k,{M}-k} (x_0,y_0) \lambda _{k,{M}-k+{\tilde{j}}}\\&\quad +\sum _{\ell = 1}^{M-1} \sum _{k=0}^\ell \sum _{{\tilde{j}}=0}^{\mathfrak {L}+1} \left( k\right) !\frac{\left( {\ell }-k+{\tilde{j}}\right) !}{{\tilde{j}} !} {\mathcal {D}}^{(0,\mathfrak {L}+1-{\tilde{j}})}\alpha _{k,\ell -k} (x_0,y_0) \lambda _{k,{\ell }-k+{\tilde{j}}} \\&\quad - {\mathcal {D}}^{(0,\mathfrak {L}+1)}\left[ {\mathcal {L}}_{M,\alpha }^NP \right] (x_0,y_0) - {\mathcal {D}}^{(0,\mathfrak {L}+1)}\alpha _{0,0}(x_0,y_0) \text { for } I=0 \text {; and } \end{aligned} \end{aligned}$$
$$\begin{aligned} \begin{aligned}&N_{I,\mathfrak {L}+1-I}\\&\quad =- \sum _{k=0}^{M} \sum _{{\tilde{i}}=0}^{I-1}\sum _{{\tilde{j}}=0}^{\mathfrak {L}-I} \frac{\left( k+{\tilde{i}}\right) !\left( {M}-k+{\tilde{j}}\right) !}{{\tilde{i}}!{\tilde{j}}!} {\mathcal {D}}^{(I-{\tilde{i}},\mathfrak {L}+1-I-{\tilde{j}})}\alpha _{k,{M}-k} (x_0,y_0) \lambda _{k+{\tilde{i}},{M}-k+{\tilde{j}}}\\&\qquad -\sum _{\ell = 1}^{M-1} \sum _{k=0}^\ell \sum _{{\tilde{i}}=0}^{I}\sum _{{\tilde{j}}=0}^{\mathfrak {L}+1-I} \frac{ \left( k+{\tilde{i}}\right) !\left( {\ell }-k+{\tilde{j}}\right) !}{{\tilde{i}}!{\tilde{j}}!} {\mathcal {D}}^{(I-{\tilde{i}},\mathfrak {L}+1-I-{\tilde{j}})}\alpha _{k,\ell -k} (x_0,y_0) \lambda _{k+{\tilde{i}},{\ell }-k+{\tilde{j}}} \\&\qquad -\,{\mathcal {D}}^{(I,\mathfrak {L}+1-I)}\left[ {\mathcal {L}}_{M,\alpha }^NP \right] (x_0,y_0) - {\mathcal {D}}^{(I,\mathfrak {L}+1-I)}\alpha _{0,0}(x_0,y_0) \text { otherwise;} \end{aligned} \end{aligned}$$
by the induction hypothesis, all the linear terms in \(N_{I,\mathfrak {L}+1-I}\), as elements of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\), have degree at most \((I+M)+(\mathfrak {L}+1-I)-1=M+\mathfrak {L}\), and, thanks to Lemma 6, all
the non-linear terms in \(N_{I,\mathfrak {L}+1-I}\) can be expressed as linear combinations of products \(\prod _{t=1}^\mu \lambda _{a_t,b_t}\) whose indices satisfy \(\sum _{t=1}^\mu (a_t+b_t)\le \mathfrak {L}+1+M\). In each such product, as an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\), each \(\lambda _{a_t,b_t}\) is either of degree \(a_t+b_t=1\) if \((a_t,b_t)\in \{(0,1),(1,0)\}\), or, by the induction hypothesis, of degree at most \(a_t+b_t-1\) otherwise. From Lemma 6 there is at least one \(t_0\) such that \((a_{t_0},b_{t_0})\notin \{(0,1),(1,0)\}\): every factor of \({\mathcal {L}}_{M,\alpha }^NP\) is a derivative of \(P\) of order at least one, and the factor \(t_0\) receives at least one additional derivative. Therefore each product \(\prod _{t=1}^\mu \lambda _{a_t,b_t}\), as an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\), can be expressed as a polynomial of degree at most \(\left( \sum _{t=1}^\mu (a_t+b_t)\right) -1\le \mathfrak {L}+M\). So all terms in \(N_{I,\mathfrak {L}+1-I}\), as elements of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\), have degree at most \(M+\mathfrak {L}\). The last step is to prove that the \(\lambda _{I+k,M+\mathfrak {L}+1-I-k}\), \(0\le k\le M-1\), are also of degree at most \(M+\mathfrak {L}\); we proceed by induction on \(I\):

(a) for \(I=0\), all \(\lambda _{I+k,M+\mathfrak {L}+1-I-k}\) with \(0\le k\le M-1\) satisfy the two conditions \(I+k<M\) and \(I+k+M+\mathfrak {L}+1-I-k = M+\mathfrak {L}+1\ne 1\), so they are all prescribed to zero, and their degree as an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) is trivially at most \(M+\mathfrak {L}\);

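(b) suppose that, for a given \(I\in {\mathbb {N}}\), the \(\lambda _{{\tilde{I}}+k,M+\mathfrak {L}+1-{\tilde{I}}-k}\), \(0\le k\le M-1\), are of degree at most \(M+\mathfrak {L}\) for all \({\tilde{I}}\in {\mathbb {N}}\) such that \({\tilde{I}}\le I\); then it follows from Eq. (27) that \(\lambda _{I+M,\mathfrak {L}+1-I}\) is also of degree at most \(M+\mathfrak {L}\). Since \(\lambda _{I+M,\mathfrak {L}+1-I}=\lambda _{(I+1)+(M-1),M+\mathfrak {L}+1-(I+1)-(M-1)}\), while the \(\lambda _{(I+1)+k,M+\mathfrak {L}-I-k}\) with \(0\le k\le M-2\) are covered by the hypothesis for \({\tilde{I}}=I\), the property holds for \(I+1\).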

This concludes the proof. \(\square \)
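The inner induction on \(I\) reflects the order in which the coefficients of a given level are obtained. The Python sketch below is a hypothetical bookkeeping model, not Algorithm 1 itself: it only assumes, as read off from the proof above, that at level \(\mathfrak {L}\) the equation giving \(\lambda _{I+M,\mathfrak {L}-I}\) involves the same-level coefficients \(\lambda _{I+k,M+\mathfrak {L}-I-k}\), \(0\le k\le M-1\), besides lower-level terms, and that every \(\lambda _{i,j}\) with \(i<M\) is fixed beforehand. It checks that processing \(I\) in increasing order never requires a coefficient before it is available.

```python
def computation_order(M, levels, increasing_I=True):
    """Order in which the unknowns lambda_{I+M, L-I} are obtained, level by level.

    Modelling assumption (from the proof of Proposition 5): at level L the
    equation for lambda_{I+M, L-I} involves lambda_{I+k, M+L-I-k}, 0 <= k <= M-1.
    Coefficients whose first index is < M are fixed before the loop starts.
    """
    known = set()
    order = []
    for L in range(levels + 1):
        I_values = range(L + 1) if increasing_I else range(L, -1, -1)
        for I in I_values:
            for k in range(M):
                dep = (I + k, M + L - I - k)
                # a same-level dependency with first index >= M must already be known
                if dep[0] >= M and dep not in known:
                    raise ValueError(f"lambda{dep} is needed before it is computed")
            known.add((I + M, L - I))
            order.append((I + M, L - I))
    return order
```

For \(M=2\) and levels up to 2, the sketch yields the order \(\lambda _{2,0}\); \(\lambda _{2,1},\lambda _{3,0}\); \(\lambda _{2,2},\lambda _{3,1},\lambda _{4,0}\). Running the same loop with `increasing_I=False` raises a `ValueError` already at level 1, which illustrates why the induction on \(I\) starts from \(I=0\), where all same-level coefficients are prescribed.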


Finally, since we are interested in the local approximation properties of GPWs, it is natural to study their Taylor expansion coefficients and how they can be expressed as elements of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\). In particular, we relate the Taylor expansion coefficients of a GPW, \( \partial _x^i\partial _y^j \varphi \left( x_0,y_0\right) /(i!j!)\), to those of the corresponding PW, \((\lambda _{0,1})^j (\lambda _{1,0})^i/(i!j!)\).
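To make this comparison concrete, the Taylor coefficients of \(\varphi =\exp P\) can be computed directly from the \(\lambda _{i,j}\). The Python sketch below (function names hypothetical) uses the recurrence obtained from \(\partial _x\varphi =(\partial _xP)\varphi \) and \(\partial _y\varphi =(\partial _yP)\varphi \), and returns the gap between \(\partial _x^i\partial _y^j\varphi (x_0,y_0)\) and the plane-wave value \((\lambda _{0,1})^j(\lambda _{1,0})^i\), that is, the difference studied in Proposition 6 below. It assumes \(\lambda _{0,0}=0\), as prescribed for \(i<M\) and \(i+j\ne 1\), so that \(\varphi (x_0,y_0)=1\); the value of \(\lambda _{3,0}\) in the example is arbitrary and does not come from Algorithm 1.

```python
import cmath
import math


def exp_taylor(P, order):
    """Taylor coefficients c[(i, j)] of phi = exp(P) at (x0, y0), for i + j <= order.

    P maps (i, j) to lambda_{i,j}, the coefficient of (x-x0)^i (y-y0)^j; missing
    keys are zero. The recurrence follows from phi_x = P_x phi (rows i >= 1)
    and phi_y = P_y phi (row i = 0).
    """
    def lam(i, j):
        return P.get((i, j), 0)

    c = {(0, 0): cmath.exp(lam(0, 0))}
    for n in range(1, order + 1):
        for i in range(n + 1):
            j = n - i
            if i >= 1:
                s = sum(a * lam(a, b) * c[(i - a, j - b)]
                        for a in range(1, i + 1) for b in range(j + 1))
                c[(i, j)] = s / i
            else:
                s = sum(b * lam(0, b) * c[(0, j - b)] for b in range(1, j + 1))
                c[(0, j)] = s / j
    return c


def gap_to_plane_wave(P, c, i, j):
    """d_x^i d_y^j phi(x0, y0) - lambda_{1,0}^i lambda_{0,1}^j (assumes lambda_{0,0} = 0)."""
    derivative = math.factorial(i) * math.factorial(j) * c[(i, j)]
    return derivative - P.get((1, 0), 0) ** i * P.get((0, 1), 0) ** j


# Example: a plane wave, and the same wave with a hypothetical lambda_{3,0} (M = 3).
kappa, theta, M = 2.0, 0.3, 3
pw = {(1, 0): 1j * kappa * math.cos(theta), (0, 1): 1j * kappa * math.sin(theta)}
gpw = dict(pw)
gpw[(3, 0)] = 0.7 - 0.2j  # arbitrary value, not produced by Algorithm 1
c_pw, c_gpw = exp_taylor(pw, 5), exp_taylor(gpw, 5)
```

For the plane wave the gap vanishes at every order. With the extra coefficient it remains zero for \(i+j<M\), since all \(\lambda _{i,j}\) with \(1<i+j<M\) vanish, and at \((3,0)\) it equals \(3!\,\lambda _{3,0}\).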

Proposition 6

Consider a point \((x_0,y_0)\in \mathbb R^2\), given \(q\in \mathbb N^*\) and \(M\in \mathbb N\), with \(M\ge 2\), a given set of complex-valued functions \(\alpha =\{ \alpha _{k,l}\!\in \!{\mathcal {C}}^{q-1} \!\text { at }(x_0,y_0),0\!\le \! k+l\!\le \! M\}\), and the corresponding partial differential operator \({\mathcal {L}}_{M,\alpha }\). Under Hypotheses 1 and 2, consider a solution to Problem (10) constructed by Algorithm 1 with all the fixed values \(\lambda _{i,j}\) such that \(i<M\) and \(i+j\ne 1\) set to zero, and

$$\lambda _{1,0}=\imath \kappa \cos \theta \quad \text {and}\quad \lambda _{0,1}=\imath \kappa \sin \theta ,$$

for some \(\theta \in {\mathbb {R}}\) and \(\kappa \in {\mathbb {C}}^*\), and the corresponding \( \varphi (x,y)=\exp \sum _{ 0\le i+j\le q+1} \lambda _{i,j}(x-x_0)^i (y-y_0)^j\). Then for all \((i,j) \in {\mathbb {N}}^2\) such that \(i+j\le q+1\) the difference

$$R_{i,j}:=\partial _x^i\partial _y^j \varphi \left( x_0,y_0\right) -(\lambda _{0,1})^j (\lambda _{1,0})^i$$

can be expressed as an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\) such that

- its total degree satisfies \(\mathrm{d} R_{i,j} \le i+j-1\),
- its coefficients depend only on i, j, and the derivatives of the PDE coefficients \(\alpha \) evaluated at \((x_0,y_0)\); in particular, they do not depend on \(\theta \).

Proof

Applying the chain rule introduced in Appendix A.2 to the GPW \(\varphi \), one gets, for all \( (i,j) \in {\mathbb {N}}^2\),

where \(p_s((i,j),\mu )\) is the set of partitions of (i, j) with length \(\mu \):

For each partition \((k_l,(i_l,j_l))_{l\in [\![ 1,s ]\!]}\) of (i, j), the corresponding product term, considered as an element of \({\mathbb {C}}[\lambda _{1,0},\lambda _{0,1}]\), has degree \(Deg \ \prod _{l=1}^s (\lambda _{i_l,j_l})^{k_l}=\sum _{l=1}^s k_l Deg\ \lambda _{i_l,j_l}\). Combining Proposition 5 and the fact that \(\lambda _{i,j}=0\) for all (i, j) such that \(1<i+j<M\), we can conclude that this degree is at most equal to

The partition \((i,j)=j(0,1)+i(1,0)\) corresponds to the term \((\lambda _{0,1})^j(\lambda _{1,0})^i\), which is the leading term in \(\partial _x^i\partial _y^j \varphi \left( x_0,y_0\right) \). Indeed, any other partition includes at least one part such that \(i_l+j_l>1\), and the degree corresponding to this part within the product is either \( k_l \cdot 0\), if \(\lambda _{i_l,j_l}\) is prescribed to zero, or at most \( k_l (i_l+j_l-1)\) otherwise; in both cases it is at most \(k_l (i_l+j_l)-1\). As a result, the degree of the product term in (29) is necessarily less than \(\sum _{l=1}^s k_l(i_l+j_l)= i+j\). So \(R_{i,j}\), which is defined as the difference between \(\partial _x^i\partial _y^j \varphi \left( x_0,y_0\right) \) and its leading term \((\lambda _{0,1})^j (\lambda _{1,0})^i\), is, as expected, of degree less than \(i+j\).

Finally, the coefficients of \(R_{i,j}\) share the property of the coefficients of the \(\lambda _{i,j}\) established in Proposition 5, namely their independence of \(\theta \). \(\square \)
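The degree count in this proof can be reproduced mechanically. The Python sketch below (our own illustration; names hypothetical) enumerates the partitions of \((i,j)\) into non-zero parts \((i_l,j_l)\) and bounds the degree of each product \(\prod _l(\lambda _{i_l,j_l})^{k_l}\) using only three facts from the text: \(\lambda _{1,0}\) and \(\lambda _{0,1}\) have degree one, the \(\lambda _{i,j}\) with \(i<M\) and \(i+j\ne 1\) are prescribed to zero, and the remaining ones obey the bound of Proposition 5. It checks that only the partition \(j(0,1)+i(1,0)\) reaches degree \(i+j\).

```python
def vector_partitions(i, j):
    """Multisets of non-zero parts (a, b) summing to (i, j), each listed once."""
    parts = [(a, b) for a in range(i + 1) for b in range(j + 1) if (a, b) != (0, 0)]

    def rec(ri, rj, start):
        if ri == 0 and rj == 0:
            yield []
            return
        # parts are taken in non-decreasing index order to avoid permutations
        for idx in range(start, len(parts)):
            a, b = parts[idx]
            if a <= ri and b <= rj:
                for rest in rec(ri - a, rj - b, idx):
                    yield [(a, b)] + rest

    yield from rec(i, j, 0)


def degree_bound(partition, M):
    """Bound on the degree of prod lambda_{a,b} in C[lambda_{1,0}, lambda_{0,1}].

    Returns None if some factor is prescribed to zero, so that the product vanishes.
    """
    total = 0
    for a, b in partition:
        if a + b == 1:
            total += 1            # lambda_{1,0} or lambda_{0,1}: degree exactly 1
        elif a < M:
            return None           # prescribed to zero
        else:
            total += a + b - 1    # bound of Proposition 5
    return total
```

Each non-linear part with \(a\ge M\) contributes strictly less than \(a+b\), and each other non-linear part annihilates the product, which is the argument above in executable form.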

Remark 3

As mentioned in Remark 2, under the hypothesis \(\alpha _{0,M}(x_0,y_0)\ne 0\), an algorithm very similar to Algorithm 1 would construct the polynomial coefficients of a GPW, fixing the values of \(\{ \lambda _{i,j}, 0\le j\le M-1, 0\le i\le q+M-1 -j \}\). The corresponding version of Proposition 6 could then be proved essentially by exchanging the roles of i and j in all the proofs.

3.3 Local set of GPWs

At this point, for a given value of \(\theta \in {\mathbb {R}}\), we can construct a GPW as a function \(\varphi =\exp P\), where the polynomial P is a solution to Problem (10) constructed by Algorithm 1 with all the fixed values \(\lambda _{i,j}\) such that \(i<M\) and \(i+j\ne 1\) set to zero, and