Abstract

We investigate a class of optimal stopping problems arising, for example, in studies of the timing of an irreversible investment when the underlying follows a skew Brownian motion. Our results indicate that the local directional predictability modeled by the presence of a skew point for the underlying has a nontrivial and somewhat surprising impact on the timing incentives of the decision maker. We prove that waiting is always optimal at the skew point for a large class of exercise payoffs. An interesting consequence of this finding, which is in sharp contrast with studies relying on ordinary Brownian motion, is that the exercise region for the problem can become disconnected even when the payoff is linear. We also establish that higher skewness increases the incentives to wait and postpones the optimal timing of an investment opportunity. Our general results are explicitly illustrated for a piecewise linear payoff.


1 Introduction

Standard Brownian motion is without doubt the most commonly used model for the factor dynamics driving the underlying stochasticity in financial models. Its analytical tractability and computational convenience make it a compelling model with many desirable properties, ranging from the independence of its increments to the Gaussianity of its probability distribution. Unfortunately, for many financial return variables, autocorrelation of the driving dynamics and/or skewness of the probability distributions is the rule rather than the exception. In such cases, relying on a simple Gaussian structure may lead to wrong conclusions concerning both the valuation and the timing of investment opportunities.

In contrast with the standard Gaussian framework, relatively recent empirical research indicates that even though the exact value of an asset is unpredictable, the direction in which the asset value is expected to develop may be predictable to some extent [see, for example, Anatolyev and Gerko (2005), Anatolyev and Gospodinov (2010), Bekiros and Georgoutsos (2008a, b), Chevapatrakul (2013), Christoffersen and Diebold (2006), Christoffersen et al. (2006), Nyberg (2011), Rydberg and Shephard (2003) and Skabar (2013)]. More precisely, expressing the return of an asset as the product of its sign and its absolute value and investigating the behavior of these factors separately indicates that the sign variable capturing the directional behavior of the return can be forecast correctly with an accuracy ranging from 52% to as much as 60% [for a recent survey of studies focusing on directional predictability, see Hämäläinen (2015)]. This empirical observation has not gone completely unnoticed in theoretical finance, and it has resulted in the introduction and analysis of driving dynamics possessing at least some of the skewness and the local (in space) predictive properties encountered in financial data. One of the proposed modeling approaches is based on skew Brownian motion and, more generally, skew diffusion processes (cf. Corns and Satchell 2007; Decamps et al. 2005, 2006a, b; Presman 2012; Presman and Yurinsky 2012; Rossello 2012). Basically, a skew Brownian motion behaves like an ordinary Brownian motion outside the origin, but at the origin the process has a greater tendency to move, say, upwards than downwards, resulting, in a sense, in a larger number of positive than negative excursions starting from the origin. In that way it offers a mathematical model for local directional predictability of the driving random factor and, consequently, for an asymmetric and skewed probability distribution of the underlying random dynamics.
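The mechanism just described, Brownian behavior away from the origin combined with an upward bias at the origin, can be made concrete with the classical random-walk approximation of skew Brownian motion (see, e.g., the survey by Lejay 2006): a simple symmetric random walk that, whenever it sits at 0, steps up with probability β and down with probability 1−β. The Python sketch below is only an illustration under that assumption and is not part of the analysis in this paper; the function name and its parameters are hypothetical. For an odd number of steps the walk cannot end at 0, and the sign of its endpoint is fixed by the first step after its last visit to 0, so the probability of a positive endpoint equals β, mirroring the property \(\mathbb {P}_0[X_t\ge 0]=\beta \) of skew Brownian motion.

```python
import random


def skew_walk_positive_fraction(beta, n_steps, n_paths, seed=0):
    """Estimate the probability that a skew random walk started at 0 ends above 0.

    Illustrative sketch (hypothetical helper): away from 0 the walk moves +1/-1
    with probability 1/2 each; at 0 it moves +1 with probability beta and -1
    with probability 1 - beta. Rescaled by sqrt(n_steps), such walks approximate
    skew Brownian motion on [0, 1].
    """
    if n_steps % 2 == 0:
        raise ValueError("use an odd number of steps so the walk cannot end at 0")
    rng = random.Random(seed)
    positive = 0
    for _ in range(n_paths):
        pos = 0
        for _ in range(n_steps):
            # the only asymmetry: an upward bias when the walk is at the origin
            p_up = beta if pos == 0 else 0.5
            pos += 1 if rng.random() < p_up else -1
        if pos > 0:
            positive += 1
    return positive / n_paths


if __name__ == "__main__":
    for beta in (0.5, 0.6, 0.8):
        est = skew_walk_positive_fraction(beta, n_steps=51, n_paths=5000)
        print(f"beta={beta:.1f}: estimated P(endpoint > 0) = {est:.3f}")
```

Running the script prints estimates close to 0.5, 0.6 and 0.8; the remaining discrepancy is Monte Carlo noise.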
For results on skew Brownian motion, see, for example, Appuhamillage et al. (2011), Appuhamillage and Sheldon (2012), Barlow (1988), Borodin and Salminen (2015), Burdzy and Chen (2001), Harrison and Shepp (1981), Itô and McKean (1974), Lejay (2006), Lejay et al. (2014), Ouknine (1991), Vuolle-Apiala (1996) and Walsh (1978).

Motivated by the arguments above, we investigate in this paper how the singularity generated by the positive skewness of the underlying driving diffusion affects optimal stopping policies within an infinite horizon setting. Our approach for solving the considered optimal stopping problem is based on a careful analysis of superharmonic functions. For papers on optimal stopping where superharmonic functions play a prominent role, we refer to Alvarez (2003), Beibel and Lerche (1997, 2001), Christensen and Irle (2011), Crocce and Mordecki (2014), Dayanik and Karatzas (2003), Ekström and Villeneuve (2006), Salminen (2000), Salminen and Ta (2015) and the references therein. In particular, we use the Martin representation theory of superharmonic functions (cf. Christensen and Irle 2011; Salminen 1985). We demonstrate that positive skewness increases the incentives to wait at the singularity so strongly that the skew point is always included in the continuation region provided that the exercise payoff is increasing at the skew point. This observation is in sharp contrast with results based on standard Brownian motion and illustrates how even relatively weak local predictability of the underlying diffusion generates incentives to wait and, in that way, to postpone the optimal stopping of the underlying process. An interesting and to some extent surprising implication of this observation is that the optimal stopping policy for skew BM can become a three-boundary policy even when the exercise payoff is piecewise linear (call option type). Such configurations cannot appear in models relying on standard BM. We also demonstrate that the value of the optimal policy depends positively on the skewness of the underlying diffusion. Consequently, higher skewness increases the value of the optimal policy and expands the continuation region.
An interesting implication of this observation is that the value of the optimal stopping strategy for a positively skewed BM dominates the corresponding value for standard BM.

The contents of this study are as follows. The basic properties of the underlying dynamics, i.e., skew Brownian motion, are discussed in Sect. 2. In Sect. 3 the considered stopping problem and some key facts are presented. Our main findings on optimal stopping of skew Brownian motion are summarized in Sect. 4. These results are then numerically illustrated in an explicitly parameterized piecewise linear model in Sect. 5.

2 Underlying dynamics: Skew Brownian motion

Our main objective is to investigate how the potential directional asymmetry of the underlying diffusion affects the optimal exercise strategies and their values. To this end, we assume that the underlying diffusion process is a skew Brownian motion (abbreviated from now on as SBM), characterized as the unique strong solution of the stochastic equation (cf. Harrison and Shepp 1981)

$$X_t=x+W_t+(2\beta -1)\,l_t^X,\quad t\ge 0,\tag{1}$$

where \(x\in \mathbb {R}\) is the initial value of the process, \(\beta \in [0,1]\) is a parameter capturing the skewness of the process, \(\{W_t\}_{t\ge 0}\) is a standard Brownian motion and \(\{l_t^X\}_{t\ge 0}\) is the local time at zero of the process \(\{X_t\}_{t\ge 0}\) normalized with respect to Lebesgue measure. As is clear from (1), the process \(\{X_t\}_{t\ge 0}\) coincides with standard Brownian motion when \(\beta = 1/2\) and with reflected Brownian motion when \(\beta = 0\) or \(\beta = 1\). The process \(\{X_t\}_{t\ge 0}\) behaves like ordinary Brownian motion outside the skew point 0 and has, for all \(t>0\), the property \(\mathbb {P}_0[X_t\ge 0]=\beta \) (cf. Borodin and Salminen 2015, p. 130). Thus, the process has, in a sense, a greater tendency to move up than down from the origin whenever \(\beta >1/2\). Moreover, its transition probability density with respect to Lebesgue measure is known explicitly [see, for example, Borodin and Salminen (2015, p. 130) or Lejay (2006, p. 420)]:

$$p(t;x,y)=\frac{1}{\sqrt{2\pi t}}\left( \mathrm {e}^{-\frac{(y-x)^2}{2t}}+(2\beta -1)\,\mathrm {sgn}(y)\,\mathrm {e}^{-\frac{(|x|+|y|)^2}{2t}}\right) .\tag{2}$$
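The closed-form density can be sanity-checked numerically. The sketch below assumes the standard Lebesgue density of SBM, \(p(t;x,y)=(2\pi t)^{-1/2}\big(\mathrm{e}^{-(y-x)^2/(2t)}+(2\beta -1)\,\mathrm{sgn}(y)\,\mathrm{e}^{-(|x|+|y|)^2/(2t)}\big)\) (see, e.g., Lejay 2006), and uses a plain composite Simpson rule, chosen here only to keep the example self-contained, to confirm that the density integrates to one and that \(\mathbb {P}_0[X_t\ge 0]=\beta \). The function names are illustrative. Because of the \(\mathrm{sgn}(y)\) factor the density jumps at \(y=0\), so each half-line is integrated separately.

```python
import math


def sbm_density(t, x, y, beta):
    """Lebesgue transition density p(t; x, y) of SBM (assumed closed form)."""
    sign_y = (y > 0) - (y < 0)
    gauss = math.exp(-(y - x) ** 2 / (2.0 * t))
    reflected = math.exp(-(abs(x) + abs(y)) ** 2 / (2.0 * t))
    return (gauss + (2.0 * beta - 1.0) * sign_y * reflected) / math.sqrt(2.0 * math.pi * t)


def simpson(f, a, b, n=4000):
    """Composite Simpson rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * h)
    return total * h / 3.0


def prob_nonnegative(t, x, beta):
    """P_x[X_t >= 0], truncating the Gaussian tail far from the origin."""
    upper = abs(x) + 12.0 * math.sqrt(t)
    # start just right of 0 so that sgn(y) = +1 on the whole integration range
    return simpson(lambda y: sbm_density(t, x, y, beta), 1e-12, upper)


def total_mass(t, x, beta):
    """Integral of the density over the real line (should equal 1)."""
    bound = abs(x) + 12.0 * math.sqrt(t)
    right = simpson(lambda y: sbm_density(t, x, y, beta), 1e-12, bound)
    left = simpson(lambda y: sbm_density(t, x, y, beta), -bound, -1e-12)
    return left + right


if __name__ == "__main__":
    print(prob_nonnegative(1.0, 0.0, 0.8), total_mass(1.0, 0.7, 0.3))
```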

The scale function and the speed measure of X are given by

and

respectively. The fact that \(S(x)\rightarrow \pm \infty \) as \(x\rightarrow \pm \infty \) implies that X is recurrent. Finally, the increasing and the decreasing fundamental solutions associated with X are (cf. Borodin and Salminen 2015, p. 130)

and

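respectively, where \(\theta =\sqrt{2r}\) is the so-called Wronskian of the fundamental solutions with respect to the scale function. It is easily seen that \(\psi _r\) and \(\varphi _r\) are differentiable with respect to S everywhere (including 0), but not differentiable in the ordinary sense at 0. In particular, their one-sided derivatives at the skew point satisfy

$$\beta \psi _r'(0+)=(1-\beta )\psi _r'(0-),\qquad \beta \varphi _r'(0+)=(1-\beta )\varphi _r'(0-).$$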

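Recall also that the Laplace transform of the first hitting time \(\tilde{\tau }_a=\inf \{t\ge 0:X_t=a\}\) of the state \(a\in \mathbb {R}\) can be expressed as follows (cf. Borodin and Salminen 2015, p. 18)

$$\mathbb {E}_x\left[ \mathrm {e}^{-r\tilde{\tau }_a}\right] =\begin{cases} \dfrac{\psi _r(x)}{\psi _r(a)}, & x\le a,\\[2mm] \dfrac{\varphi _r(x)}{\varphi _r(a)}, & x\ge a.\end{cases}$$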
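For numerical experiments it helps to have \(\psi _r\) and \(\varphi _r\) in closed form. The sketch below assumes \(\psi _r(x)=\mathrm{e}^{\theta x}+(2\beta -1)\mathrm{e}^{-\theta x}\) for \(x\ge 0\), which is consistent with the expression for \(\psi _r'/\psi _r\) and the normalization \(\lim _{x\rightarrow \infty }\mathrm{e}^{-\theta x}\psi _r(x)=1\) used in Remark 2, and fixes the branch on \(x<0\) by continuity at 0 together with the condition \(\beta f'(0+)=(1-\beta )f'(0-)\) on the one-sided derivatives; \(\varphi _r\) is obtained in the same way. These normalizations are assumptions and may differ from the ones used in this paper by constant factors, which cancel in the Laplace transform of hitting times. The helper names are hypothetical, and the sketch presumes \(0<\beta <1\).

```python
import math


def psi(x, beta, r):
    """Increasing fundamental solution psi_r (assumed closed form/normalization)."""
    th = math.sqrt(2.0 * r)  # theta = sqrt(2r)
    if x >= 0:
        return math.exp(th * x) + (2.0 * beta - 1.0) * math.exp(-th * x)
    return 2.0 * beta * math.exp(th * x)


def phi(x, beta, r):
    """Decreasing fundamental solution phi_r (assumed closed form/normalization)."""
    th = math.sqrt(2.0 * r)
    if x >= 0:
        return 2.0 * (1.0 - beta) * math.exp(-th * x)
    return math.exp(-th * x) - (2.0 * beta - 1.0) * math.exp(th * x)


def one_sided_derivative(f, x, side, h=1e-7):
    """Right (side=+1) or left (side=-1) difference quotient of f at x."""
    return (f(x + side * h) - f(x)) / (side * h)


def laplace_hitting_time(x, a, beta, r):
    """E_x[exp(-r * tau_a)] for the first hitting time tau_a of level a."""
    if x <= a:
        return psi(x, beta, r) / psi(a, beta, r)
    return phi(x, beta, r) / phi(a, beta, r)


if __name__ == "__main__":
    beta, r = 0.7, 0.05
    for f in (psi, phi):
        right = one_sided_derivative(lambda y: f(y, beta, r), 0.0, +1)
        left = one_sided_derivative(lambda y: f(y, beta, r), 0.0, -1)
        # beta * f'(0+) and (1 - beta) * f'(0-) should agree
        print(f.__name__, beta * right, (1.0 - beta) * left)
    print(laplace_hitting_time(-1.0, 2.0, beta, r))
```

Away from the origin both functions solve \(\tfrac12 f''=rf\), which is what the ordinary Brownian behavior outside the skew point requires.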

3 Problem setting and some preliminary results

Our task is to investigate, for SBM X with \(\beta >1/2\), how the skewness and the resulting local directional predictability of the underlying affect the value and the optimal exercise policy in the optimal stopping problem (OSP):

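Find a stopping time \(\tau ^*\) such that

$$V(x):=\sup _{\tau \in \mathcal {T}}\mathbb {E}_x\left[ \mathrm {e}^{-r\tau }g(X_\tau )\right] =\mathbb {E}_x\left[ \mathrm {e}^{-r\tau ^*}g(X_{\tau ^*})\right] ,\tag{6}$$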

where \(r>0\) denotes the prevailing discount rate, \(\mathcal {T}\) is the set of all stopping times with respect to the natural filtration generated by X, and \(g{:}\,\mathbb {R}\mapsto \mathbb {R}_+\) is the exercise reward satisfying:

- (g1): g is continuous, non-decreasing, non-negative, and has finite left and right derivatives,

- (g2): \(\lim _{x\rightarrow \infty }g(x)/\psi _r(x)=0\) and \(\lim _{x\rightarrow -\infty }g(x)/\psi _r(x)=0\).

In (6) we use the convention that if \(\tau (\omega )=\infty \) then

As is known from the literature on optimal stopping, V is the smallest r-excessive majorant of g [cf. Theorem 1 on p. 124 of Shiryaev (1978)]. Recall that for a regular diffusion on \(\mathbb {R}\) a measurable function \(h{:}\,\mathbb {R}\mapsto \mathbb {R}_+\) is called r-excessive if it satisfies for all \(x\in \mathbb {R}\) and \(t\ge 0\) the inequality (cf. Borodin and Salminen 2015, pp. 32–35)

$$\mathbb {E}_x\left[ \mathrm {e}^{-rt}h(X_t)\right] \le h(x)$$

as well as the limiting condition

$$\lim _{t\downarrow 0}\mathbb {E}_x\left[ \mathrm {e}^{-rt}h(X_t)\right] =h(x).$$

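As usual, we call \(\varGamma :=\{x:V(x)=g(x)\}\) the stopping region and \(C:=\{x:V(x)>g(x)\}\) the continuation region. Let

$$\mathcal {M}:=\left\{ x^*\in \mathbb {R}:\frac{g(x^*)}{\psi _r(x^*)}=\sup _{x\in \mathbb {R}}\frac{g(x)}{\psi _r(x)}\right\}$$

denote the set of points at which the ratio \(g/\psi _r\) is maximized. We can now prove the following: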

Lemma 1

The value of the optimal policy is finite, i.e. \(V(x)<\infty \) for all \(x\in \mathbb {R}\). The stopping region is nonempty; in fact, \(\mathcal {M}\subset \varGamma \). Moreover, if \(x^*\in \mathcal {M}\), then \((-\infty ,x^*)\backslash \mathcal {M}\subset C\).

Proof

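Assumptions (g1) and (g2) guarantee that the set of maximizers \(\mathcal {M}\) is non-empty. Hence, for any \(x^*\in \mathcal {M}\) and all \(x\in \mathbb {R}\) it holds that

$$V(x)=\sup _{\tau \in \mathcal {T}}\mathbb {E}_x\left[ \mathrm {e}^{-r\tau }\psi _r(X_\tau )\frac{g(X_\tau )}{\psi _r(X_\tau )}\right] \le \frac{g(x^*)}{\psi _r(x^*)}\sup _{\tau \in \mathcal {T}}\mathbb {E}_x\left[ \mathrm {e}^{-r\tau }\psi _r(X_\tau )\right] \le \frac{g(x^*)}{\psi _r(x^*)}\psi _r(x).\tag{8}$$

For the last inequality in (8) we use the optional sampling theorem, which is justified since \(\{\text {e}^{-rt}\psi _r(X_t)\}_{t\ge 0}\) is a positive supermartingale. This proves that \(V(x)<\infty \) for all \(x\in \mathbb {R}\). In order to show that \(\mathcal {M}\subset \varGamma \), let \(x^*\in \mathcal {M}\) and utilize (8) to obtain

$$g(x^*)\le V(x^*)\le \frac{g(x^*)}{\psi _r(x^*)}\psi _r(x^*)=g(x^*),$$

proving that \(x^*\in \varGamma \). Finally, let \(x\in (-\infty ,x^*)\setminus \mathcal {M}\). Since \(x\not \in \mathcal {M}\), the Laplace transform of the first hitting time \(\tilde{\tau }_{x^*}\) yields

$$V(x)\ge \mathbb {E}_x\left[ \mathrm {e}^{-r\tilde{\tau }_{x^*}}g(X_{\tilde{\tau }_{x^*}})\right] =\frac{g(x^*)}{\psi _r(x^*)}\psi _r(x)>g(x),$$

demonstrating that \(x\in C\) as well.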
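Lemma 1 reduces the search for points of the stopping region to maximizing \(g/\psi _r\). A minimal sketch, assuming the closed form \(\psi _r(x)=\mathrm{e}^{\theta x}+(2\beta -1)\mathrm{e}^{-\theta x}\) for \(x\ge 0\) and \(\psi _r(x)=2\beta \mathrm{e}^{\theta x}\) for \(x<0\) with \(\theta =\sqrt{2r}\), and a hypothetical call-type payoff \(g(x)=\max (x-K,0)\) (which satisfies (g1) and (g2)), locates a maximizer \(x^*\) by brute-force grid search and then checks the lower bound \(g(x^*)\psi _r(x)/\psi _r(x^*)>g(x)\) for \(x<x^*\) used at the end of the proof. The grid search is chosen only for transparency; for smooth payoffs a root finder applied to the first-order condition \(\psi _r(x)=(x-K)\psi _r'(x)\) would be more accurate.

```python
import math


def psi(x, beta, r):
    """Increasing fundamental solution psi_r of SBM (assumed closed form)."""
    th = math.sqrt(2.0 * r)
    if x >= 0:
        return math.exp(th * x) + (2.0 * beta - 1.0) * math.exp(-th * x)
    return 2.0 * beta * math.exp(th * x)


def call_payoff(x, strike):
    """Hypothetical call-type payoff g(x) = max(x - K, 0)."""
    return max(x - strike, 0.0)


def maximize_ratio(g, beta, r, lo, hi, n=200001):
    """Grid search for a maximizer of g / psi_r on [lo, hi]."""
    best_x, best_ratio = lo, -math.inf
    for i in range(n):
        x = lo + (hi - lo) * i / (n - 1)
        ratio = g(x) / psi(x, beta, r)
        if ratio > best_ratio:
            best_x, best_ratio = x, ratio
    return best_x, best_ratio


if __name__ == "__main__":
    beta, r, K = 0.7, 0.05, 1.0
    x_star, ratio = maximize_ratio(lambda x: call_payoff(x, K), beta, r, -10.0, 20.0)
    print(f"x* ~ {x_star:.4f}, max of g/psi_r ~ {ratio:.4f}")
```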

Next we establish a result used to verify that a candidate strategy is optimal. This is essentially the Corollary on p. 124 of Shiryaev (1978). We present the proof for readability and completeness.

Lemma 2

Let A be a nonempty Borel subset of \(\mathbb {R}\) and \(\tau _A:=\inf \{t\ge 0:X_t\in A\}\). Assume that the function

$$\hat{V}(x):=\mathbb {E}_x\left[ \mathrm {e}^{-r\tau _A}g(X_{\tau _A})\right]$$

is r-excessive and dominates g. Then, \(V=\hat{V}\) and \(\tau _A\) is an optimal stopping time. Moreover, \(\tau _A\) is finite almost surely.

Proof

Clearly, \(\tau _A<\infty \) almost surely since X is recurrent and A is nonempty. By the definition of V it holds for all x that

$$V(x)\ge \hat{V}(x).$$

On the other hand, since V is the smallest r-excessive majorant of g and \(\hat{V}\) is an r-excessive majorant of g, we have

$$V(x)\le \hat{V}(x).$$

Consequently, \(V=\hat{V}\) and \(\tau _A\) is an optimal stopping time.

In many optimal stopping problems the set A appearing in Lemma 2 turns out to be \(\varGamma \), which explains the terminology “stopping set” for \(\varGamma \). This is also the case in our subsequent analysis, where we establish conditions under which the optimal stopping rule equals \(\tau _{\,\varGamma }\).

4 Main results

Typically, optimal stopping problems of the type (6) can be investigated quite efficiently by relying on variational inequalities and approaches utilizing the differential operator associated with the generator of the underlying diffusion. Unfortunately, these approaches are difficult to apply to SBM due to the extra drift component involving a local time term at the skew point; see stochastic equation (1). To circumvent this problem, we first focus on the general properties of r-excessive functions and characterize general conditions under which the skew point (i.e. the origin) is in the continuation region.

Proposition 1

Assume that either \(0\le g'(0-) < g'(0+)\) or \(0<g'(0-) \le g'(0+)\). Then, for SBM with \(\beta >1/2\), the state 0 belongs to the continuation region \(C=\{x{:}\,V(x)>g(x)\}\) for all \(r>0\).

Proof

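Since \(\psi _r\) and \(\varphi _r\) are differentiable everywhere with respect to the scale function S, it follows that any r-excessive function h has left and right scale derivatives \(d^-h/dS\) and \(d^+h/dS\), respectively, and these satisfy for all x [cf. Corollary 3.7 in Salminen (1985)]

$$\frac{d^+h}{dS}(x)\le \frac{d^-h}{dS}(x).\tag{10}$$

Let V be the value function defined in (6) and recall that V is the smallest r-excessive majorant of g. Assume now that \(0\in \varGamma \). Then \(V(0)=g(0)\) and since \(V(x)\ge g(x)\) for all \(x\in \mathbb {R}\) we have for \(\delta >0\)

$$\frac{V(\delta )-V(0)}{S(\delta )-S(0)}\ge \frac{g(\delta )-g(0)}{S(\delta )-S(0)}.$$

Letting \(\delta \downarrow 0\) yields

$$\frac{d^+V}{dS}(0)\ge \frac{d^+g}{dS}(0).$$

Similarly, for \(\delta >0\)

$$\frac{V(0)-V(-\delta )}{S(0)-S(-\delta )}\le \frac{g(0)-g(-\delta )}{S(0)-S(-\delta )},$$

leading, when letting \(\delta \downarrow 0\), to

$$\frac{d^-V}{dS}(0)\le \frac{d^-g}{dS}(0).$$

Since X behaves like Brownian motion on each side of 0, its scale density is constant on each half-line; moreover, the continuity of \(d\psi _r/dS\) at 0 combined with \(\beta \psi _r'(0+)=(1-\beta )\psi _r'(0-)\) shows that \(S'(0+)/S'(0-)=(1-\beta )/\beta \). Therefore, using the assumptions on g,

$$\frac{d^+g}{dS}(0)-\frac{d^-g}{dS}(0)=\frac{g'(0+)}{S'(0+)}-\frac{g'(0-)}{S'(0-)}=\frac{\beta g'(0+)-(1-\beta )g'(0-)}{\beta S'(0+)}>0$$

since \(\beta >1/2\). Consequently,

$$\frac{d^+V}{dS}(0)\ge \frac{d^+g}{dS}(0)>\frac{d^-g}{dS}(0)\ge \frac{d^-V}{dS}(0).$$

But this contradicts (10) and, hence, \(0\not \in \varGamma \).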

Remark 1

1. In the proof of Proposition 1 we do not rely on particular properties of SBM and, therefore, the conclusions can be extended to all appropriately defined general skew diffusions.

2. The conclusions of Proposition 1 could alternatively be proved by investigating the behavior of the ratio

$$\begin{aligned} u_\lambda (x):=\frac{g(x)}{\lambda \psi _r(x)+(1-\lambda )\varphi _r(x)}, \end{aligned}$$

where \(\lambda \in [0,1]\). By Theorem 2.1 in Christensen and Irle (2011), \(0\in \varGamma \) if and only if there exists a \(\lambda \in [0,1]\) such that \(0\in \mathrm{argmax}\{u_\lambda (x)\}\). If this is the case, then \(u_\lambda '(0+)\le 0 \le u_\lambda '(0-)\), which can be shown to reduce to the requirement \(\beta g'(0+)\le (1-\beta )g'(0-)\). Since this inequality cannot be satisfied under the conditions of Proposition 1, \(0\in C\) as claimed.

3. The optimal stopping of skew geometric Brownian motion with a piecewise linear payoff has been studied in Presman and Yurinsky (2012). In particular, they show that when the process is downwards skew at, say, \(x_0>0\), then, for appropriate parameter values, \(x_0\) may be an isolated point of the stopping set.
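The necessary condition \(\beta g'(0+)\le (1-\beta )g'(0-)\) from item 2 of Remark 1 is easy to test for a given payoff. The sketch below approximates the one-sided derivatives at 0 by difference quotients (the step and tolerance are illustrative choices) and reports whether the condition holds. A result of False means that \(0\in C\); a result of True is only necessary and does not by itself imply \(0\in \varGamma \). The function names and example payoffs are hypothetical.

```python
def one_sided_slopes(g, x=0.0, h=1e-7):
    """Approximate g'(x-) and g'(x+) by one-sided difference quotients."""
    left = (g(x) - g(x - h)) / h
    right = (g(x + h) - g(x)) / h
    return left, right


def skew_point_can_be_stopping(g, beta, tol=1e-6):
    """Check the necessary condition beta * g'(0+) <= (1 - beta) * g'(0-)."""
    left, right = one_sided_slopes(g)
    # tol absorbs rounding error in the difference quotients
    return beta * right <= (1.0 - beta) * left + tol


if __name__ == "__main__":
    call = lambda x: max(x + 1.0, 0.0)  # slope 1 on both sides of 0
    for beta in (0.3, 0.5, 0.6):
        print(beta, skew_point_can_be_stopping(call, beta))
```

For the payoff with slope 1 on both sides of the origin the condition fails as soon as \(\beta >1/2\), in line with Proposition 1, while a concave kink with \(g'(0-)>g'(0+)\) can still pass the test for moderate skewness.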

Proposition 1 essentially states that if the exercise payoff is increasing in some small open neighborhood of the origin, then the skew point is always included in the continuation region. Put somewhat differently, the directional predictability of the underlying process generates incentives to wait in a neighborhood of the skew point whenever the exercise reward is locally increasing at the state where the underlying process has a greater tendency to move upwards than downwards. Since upward movements are in the present setting more favorable from the perspective of the decision maker, waiting becomes optimal even in cases where exercising would be optimal in the absence of skewness. This is an interesting and nontrivial property generated by the singularity of the process at the origin.

Combining Proposition 1 with Lemma 1 shows how the ratio \(g/\psi _r\) can be utilized in the characterization of subsets of the continuation region. An interesting implication of these findings is that \((-\infty ,\inf {\mathcal {M}})\subset C\). Hence, if the maximizing threshold \(x^*\) of the ratio \(g/\psi _r\) is negative, unique, and the exercise payoff is increasing and either differentiable or locally convex at the origin, then the continuation region must necessarily contain both the set \((-\infty ,x^*)\) as well as an open neighborhood of the origin. This result again illustrates nicely the intricacies associated with the singularity of the underlying diffusion at the skew point. As we will later observe, this phenomenon arises even for piecewise linear reward functions.

The key comparative static properties of the value and the optimal exercise strategy are given in the following proposition.

Proposition 2

The value function V is non-decreasing as a function of \(\beta \). In particular, the value function of the OSP for SBM with \(\beta >1/2\) dominates the value of the corresponding OSP for standard BM. Moreover, higher skewness expands the continuation region.

Proof

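In order to analyze the impact of skewness on the value of the optimal timing policy, we first notice that using (2) for a measurable function \(h:\mathbb {R}\mapsto \mathbb {R}\) yields

$$\mathbb {E}_x\left[ h(X_t)\right] =\mathbb {E}\left[ h(x+W_t)\right] +(2\beta -1)\int _0^\infty \big (h(y)-h(-y)\big )\frac{\mathrm {e}^{-\frac{(|x|+y)^2}{2t}}}{\sqrt{2\pi t}}\,dy$$

in case the expectation exists. Consequently, for a non-decreasing h it holds that

$$\frac{\partial }{\partial \beta }\mathbb {E}_x\left[ h(X_t)\right] =2\int _0^\infty \big (h(y)-h(-y)\big )\frac{\mathrm {e}^{-\frac{(|x|+y)^2}{2t}}}{\sqrt{2\pi t}}\,dy\ge 0.\tag{11}$$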

Consider the sequence of functions \(\{F_n\}_{n\ge 0}\) defined inductively (cf. Shiryaev 1978, pp. 121–122) by

Then \(F_{n+1}(x)\ge F_{n}(x)\) for all x and n. Moreover, \(x\mapsto F_n(x)\) is non-decreasing for every n since g is assumed to be non-decreasing and expectation preserves the ordering. Thus, by (11), increased skewness does not decrease the expected values of the functions \(F_n\). On the other hand, since \(F_n\) converges pointwise to V (cf. Shiryaev 1978, Lemma 5 on p. 121), increased skewness either increases V or leaves it unchanged. The claimed dominance follows by setting \(\beta =1/2\). Finally, assume that \(\hat{\beta }>\beta \) and denote by \(V_{\hat{\beta }}\) the value and by \(C_{\hat{\beta }}=\{x\in \mathbb {R}{:}\,V_{\hat{\beta }}(x)> g(x)\}\) the continuation region of the stopping problem associated with the SBM having skewness parameter \(\hat{\beta }\). If \(x\in C\), then \(V_{\hat{\beta }}(x)\ge V(x)>g(x)\) implies that \(x\in C_{\hat{\beta }}\) as well and, consequently, that \(C\subset C_{\hat{\beta }}\) as claimed.

Proposition 2 demonstrates that the value of the optimal exercise strategy depends positively on the skewness. This result is intuitively clear since it essentially states that the more probable upward excursions are, the larger is the value of waiting for more favorable states resulting in a higher payoff. It is worth emphasizing that positive skewness is not needed for this positive dependence: the conclusion is valid for all \(\beta \in [0,1]\).

It is also worth pointing out that, according to Proposition 2, the relationship between the skewness of the underlying SBM and the incentives to postpone rational exercise and wait for better states is positive for any \(\beta \in [0,1]\). This observation again emphasizes the interaction between the monotonicity of the payoff and the expected incidence of positive excursions resulting in higher payoffs. As \(\beta \) decreases, positive excursions become less frequent, thereby weakening the incentives to wait.
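The monotonicity mechanism behind Proposition 2 can be checked numerically. Assuming the closed-form Lebesgue density of SBM, \(p(t;x,y)=(2\pi t)^{-1/2}\big(\mathrm{e}^{-(y-x)^2/(2t)}+(2\beta -1)\,\mathrm{sgn}(y)\,\mathrm{e}^{-(|x|+|y|)^2/(2t)}\big)\), one gets \(\mathbb {E}_x[h(X_t)]=\mathbb {E}[h(x+W_t)]+(2\beta -1)B\) with \(B=\int _0^\infty (h(y)-h(-y))\,\mathrm{e}^{-(|x|+y)^2/(2t)}/\sqrt{2\pi t}\,dy\), so the expectation is affine in \(\beta \) with slope \(2B\ge 0\) for non-decreasing h. The sketch below (hypothetical helper names, a composite Simpson rule chosen for self-containment) evaluates the expectation for several values of \(\beta \), checks that it increases, that it is affine, that \(\beta =1/2\) reproduces the Brownian value, and that its slope matches \(2B\).

```python
import math


def simpson(f, a, b, n=8000):
    """Composite Simpson rule on [a, b]; n must be even."""
    step = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(a + i * step)
    return total * step / 3.0


def sbm_density(t, x, y, beta):
    """Closed-form SBM transition density with respect to Lebesgue measure."""
    sign_y = (y > 0) - (y < 0)
    gauss = math.exp(-(y - x) ** 2 / (2.0 * t))
    reflected = math.exp(-(abs(x) + abs(y)) ** 2 / (2.0 * t))
    return (gauss + (2.0 * beta - 1.0) * sign_y * reflected) / math.sqrt(2.0 * math.pi * t)


def expected_value(h, t, x, beta):
    """E_x[h(X_t)], integrating separately on each side of the jump at y = 0."""
    bound = abs(x) + 12.0 * math.sqrt(t)
    integrand = lambda y: h(y) * sbm_density(t, x, y, beta)
    return simpson(integrand, -bound, -1e-12) + simpson(integrand, 1e-12, bound)


def beta_derivative(h, t, x):
    """2 * int_0^inf (h(y) - h(-y)) exp(-(|x|+y)^2 / (2t)) / sqrt(2 pi t) dy."""
    bound = abs(x) + 12.0 * math.sqrt(t)
    kernel = lambda y: math.exp(-(abs(x) + y) ** 2 / (2.0 * t)) / math.sqrt(2.0 * math.pi * t)
    return 2.0 * simpson(lambda y: (h(y) - h(-y)) * kernel(y), 0.0, bound)


if __name__ == "__main__":
    payoff = lambda y: max(y - 0.5, 0.0)  # hypothetical non-decreasing h
    for beta in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(beta, round(expected_value(payoff, 1.0, 0.2, beta), 4))
```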

Before stating our main results on the single stopping boundary case, we introduce for a differentiable function F

and

Recall that if F is an r-excessive function of X then \(L_\psi F\) and \(L_\varphi F\) are associated with the representing measure of F [for a precise characterization and the integral representation of excessive functions, see Borodin and Salminen (2015, p. 33), Salminen (1985, (3.3) Proposition), and Salminen and Ta (2015, Theorem 2.4)]. In the proofs of Propositions 3 and 4 we use the representation theory to verify the excessivity of the proposed value function.

Proposition 3

Assume that \(\mathcal {M}=\{x^*\}\), where \(x^*>0\), and that in addition to (g1) and (g2) the reward function g has the following properties:

(i) \(g\in C^2([x^*,\infty ))\), i.e. g is twice continuously differentiable on \([x^*,\infty )\),

(ii) \(g''(x)-2rg(x)\le 0\) for all \(x\ge x^*\).

Then, \(\tau _{x^*}=\inf \{t\ge 0:X_t\ge x^*\}\) is an optimal stopping time and the value reads as

$$V(x)=\begin{cases} g(x), & x\ge x^*,\\[1mm] \dfrac{g(x^*)}{\psi _r(x^*)}\psi _r(x), & x<x^*.\end{cases}\tag{14}$$

Proof

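Let \(\tilde{V}\) denote the proposed value function on the right-hand side of (14). Since, by the Laplace transform of the first hitting time of \(x^*\),

$$\tilde{V}(x)=\mathbb {E}_x\left[ \mathrm {e}^{-r\tau _{x^*}}g(X_{\tau _{x^*}})\right] ,$$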

we find that \(V\ge \tilde{V}\).

To show that \(V=\tilde{V}\) we apply Lemma 2 and establish that \(\tilde{V}\) is an r-excessive majorant of g. Since \(x^*\in \mathcal {M}\) it is immediate that \(\tilde{V}(x)\ge g(x)\) for all \(x\in \mathbb {R}\) (cf. 9). To show the r-excessivity of \(\tilde{V}\) we use the representation theory of excessive functions (cf. Salminen 1985). Let \(x_0>x^*\) so that \(g(x_0)>0\) and define the mapping \(H{:}\,\mathbb {R}\mapsto \mathbb {R}_+\) as \(H(x):=\tilde{V}(x)/\tilde{V}(x_0)=\tilde{V}(x)/g(x_0)\). Moreover, let for \(x\ge x_0\)

and for \(x\le x_0\)

We now show that these definitions induce a probability measure on \([-\infty ,+\infty ].\) Firstly, by the monotonicity and the non-negativity of g we have that \(g'(x)+\theta g(x)\ge 0.\) Hence, \((L_\varphi g)(x)\ge 0\) for all \(x\ge x^*,\) i.e., \(\sigma _{x_0}^H((x,\infty ])\ge 0\) for all \(x\ge x_0.\) Moreover, from assumptions (i) and (ii)

for all \(x\ge x^*\) implying that \(x\mapsto \sigma _{x_0}^H((x,\infty ])\) is non-increasing. Secondly, since \(x^*\in \mathcal {M}\) we have \((L_\psi g)(x^*)=0.\) Assumptions (i) and (ii) guarantee that

and, therefore, \((L_\psi g)(x)\le 0\) for all \(x\ge x^*,\) i.e., \(\sigma _{x_0}^H([-\infty ,x))\ge 0\) for all \(x\le x_0,\) and \(x\mapsto \sigma _{x_0}^H([-\infty ,x))\) is non-decreasing. Thirdly, from the definition of the Wronskian we have that

Combining the three steps above and setting \(\sigma _{x_0}^H(\{x_0\})=0\) shows that \(\sigma _{x_0}^H\) constitutes a probability measure on \([-\infty , +\infty ]\). Thus, \(\sigma _{x_0}^H\) induces via the Martin representation an r-excessive function [cf. Borodin and Salminen (2015, p. 33) and Salminen (1985)] which coincides with H. Since \(\tilde{V}(x) = \tilde{V}(x_0)H(x)\), the proposed value \(\tilde{V}\) is r-excessive as well. Invoking Lemma 2 completes the proof.

Remark 2

1. The conclusions of Proposition 3 are also valid under the weaker assumptions:

(i) \(g\in C^1([x^*,\infty )),\)

(ii) \(L_\varphi g\) and \(L_\psi g\) are non-increasing on \([x^*,\infty )\).

2. In the proof of Proposition 3 it is seen that \(\sigma ^H_{x_0}\) induces a probability measure on \([-\infty ,+\infty ].\) In fact, \(\sigma _{x_0}^H(\{-\infty \})=0\) and \(\sigma _{x_0}^H(\{+\infty \})=0.\) Indeed, the first statement is immediate from (16). The second one follows if \(\lim _{x\rightarrow +\infty }(L_\varphi g)(x)=0\) (cf. 15). To verify this, recall from the proof of Proposition 3 that \((L_\psi g)(x)\le 0\) for \(x>x^*,\) and, hence,

$$\begin{aligned} g'(x) \le \frac{\psi _r'(x)}{\psi _r(x)}g(x)= \frac{\text {e}^{\theta x}-(2\beta -1)\text {e}^{-\theta x}}{\text {e}^{\theta x}+(2\beta -1)\text {e}^{-\theta x}}\theta g(x)\le \theta g(x). \end{aligned}$$Consequently, for \(x\ge x_0\)

$$\begin{aligned} (L_\varphi g)(x)=\frac{\beta (g'(x) +\theta g(x))}{\mathrm {e}^{\theta x}}\le 2\beta \theta \mathrm {e}^{-\theta x}g(x). \end{aligned}\tag{17}$$

Because \(\lim _{x\rightarrow \infty }\mathrm {e}^{-\theta x}\psi _r(x)=1\) and, by assumption, \(\lim _{x\rightarrow \infty } g(x)/\psi _r(x) = 0\), we have \(\sigma _{x_0}^H(\{\infty \})=\lim _{x\uparrow \infty }\sigma _{x_0}^H((x,\infty ]) =0\), as claimed.

3. Alternatively, the r-excessivity of the proposed value function \(\tilde{V}\) can be established by relying on the generalization of the Itô–Tanaka formula developed in Peskir (2005). More precisely, let \(\tau _{N}\) be an increasing sequence of bounded stopping times converging towards an arbitrary stopping time \(\tau \). Since \(\tilde{V}''(x)\) is locally bounded for all \(x\in \mathbb {R}\backslash \{0,x^*\}\) and \(\tilde{V}(x)\) is continuously differentiable for all \(x\in \mathbb {R}\backslash \{0\}\), we find by applying the extension of the change-of-variable formula presented in Remark 2.3 of Peskir (2005) to the discounted value of the proposed value function

$$\begin{aligned} e^{-r\tau _{N}}\tilde{V}(X_{\tau _{N}})&=\tilde{V}(x) +\int _0^{\tau _{N}}e^{-rs}\left( \frac{1}{2}\tilde{V}''(X_s) - r\tilde{V}(X_s)\right) \mathbbm {1}_{\{X_s\not \in \{ 0,x^*\}\}}ds\\&\quad +\frac{1}{2}\int _0^{\tau _{N}}e^{-rs}(\tilde{V}'(X_s+) +\tilde{V}'(X_s-))(dW_s+(2\beta -1)dl_s^X)\\&\quad + \frac{1}{2}\int _0^{\tau _{N}}e^{-rs}(\tilde{V}'(X_s+) -\tilde{V}'(X_s-))\mathbbm {1}_{\{X_s=0\}}dl_s^X. \end{aligned}$$Since \(\tilde{V}(x)=(g(x^*)/\psi _r(x^*))\psi _r(x)\) in a neighborhood of 0 we find by utilizing (5) that

$$\begin{aligned} (2\beta -1) (\tilde{V}'(0+)+\tilde{V}'(0-)) + \tilde{V}'(0+)-\tilde{V}'(0-)=0. \end{aligned}$$Consequently, we notice that the integrals with respect to \(l_t^X\) cancel each other and, therefore, that

$$\begin{aligned} e^{-r\tau _{N}}\tilde{V}(X_{\tau _{N}})&=\tilde{V}(x) +\int _0^{\tau _{N}}e^{-rs}\left( \frac{1}{2}\tilde{V}''(X_s)- r\tilde{V}(X_s)\right) \mathbbm {1}_{\{X_s\not \in \{ 0,x^*\}\}}ds\\ {}&\quad +\int _0^{\tau _{N}}e^{-rs}\tilde{V}'(X_s) \mathbbm {1}_{\{X_s\ne 0\}}dW_s. \end{aligned}$$Taking expectations and invoking the condition \(\tilde{V}''(x)\le 2r\tilde{V}(x)\) for all \(x\in \mathbb {R}\backslash \{0,x^*\}\) yields

$$\begin{aligned} \mathbb {E}_x\left[ e^{-r\tau _{N}}\tilde{V}(X_{\tau _{N}})\right] \le \tilde{V}(x) \end{aligned}$$which proves the r-excessivity of \(\tilde{V}\).

Proposition 3 states a set of conditions under which the general optimal timing problem constitutes a standard single exercise boundary problem where the underlying process is stopped as soon as it hits the critical threshold \(x^*>0\) at which the ratio \(g/\psi _r\) is maximized. These results can naturally be extended to the case where the maximizing threshold is negative, i.e., to the case where \(x^*< 0\). However, it is clear from Proposition 1 that in that case the exercise payoff has to be constant in a neighborhood of the skew point since otherwise the origin could not belong to the stopping set.
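A worked instance of Proposition 3, under stated assumptions: take the hypothetical call-type payoff \(g(x)=\max (x-K,0)\) with \(K>0\). On \(x\ge x^*>K\) we have \(g''(x)-2rg(x)=-2r(x-K)\le 0\), so condition (ii) holds, and the maximizer of \(g/\psi _r\) solves \(\psi _r(x^*)=(x^*-K)\psi _r'(x^*)\). The sketch below assumes the closed form \(\psi _r(x)=\mathrm{e}^{\theta x}+(2\beta -1)\mathrm{e}^{-\theta x}\) for \(x\ge 0\) and \(\psi _r(x)=2\beta \mathrm{e}^{\theta x}\) for \(x<0\), solves the first-order condition by bisection, and builds the candidate value (g above \(x^*\), \(g(x^*)\psi _r(x)/\psi _r(x^*)\) below). The tests check that the candidate dominates g, satisfies smooth fit at \(x^*\), satisfies \(\beta \tilde{V}'(0+)=(1-\beta )\tilde{V}'(0-)\) at the skew point, and is larger at 0 for \(\beta =0.7\) than for \(\beta =0.5\), in line with Proposition 2. Function names and parameter values are illustrative only.

```python
import math


def psi(x, beta, r):
    """Increasing fundamental solution psi_r of SBM (assumed closed form)."""
    th = math.sqrt(2.0 * r)
    if x >= 0:
        return math.exp(th * x) + (2.0 * beta - 1.0) * math.exp(-th * x)
    return 2.0 * beta * math.exp(th * x)


def dpsi(x, beta, r):
    """Ordinary derivative of psi_r for x != 0 (one-sided limits at 0)."""
    th = math.sqrt(2.0 * r)
    if x > 0:
        return th * (math.exp(th * x) - (2.0 * beta - 1.0) * math.exp(-th * x))
    return 2.0 * beta * th * math.exp(th * x)


def optimal_threshold(strike, beta, r, tol=1e-12):
    """Solve psi_r(x) = (x - K) psi_r'(x) on (K, oo) by bisection, assuming K > 0."""
    if strike <= 0:
        raise ValueError("this sketch assumes K > 0, so that x* > 0")
    th = math.sqrt(2.0 * r)
    foc = lambda x: psi(x, beta, r) - (x - strike) * dpsi(x, beta, r)
    lo, hi = strike, strike + 10.0 / th  # foc(lo) > 0 > foc(hi)
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if foc(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def candidate_value(x, strike, beta, r):
    """Candidate value for g(x) = max(x - K, 0): g above x*, scaled psi_r below."""
    x_star = optimal_threshold(strike, beta, r)
    if x >= x_star:
        return x - strike
    return (x_star - strike) * psi(x, beta, r) / psi(x_star, beta, r)


if __name__ == "__main__":
    K, r = 1.0, 0.05
    for beta in (0.5, 0.7):
        xs = optimal_threshold(K, beta, r)
        print(f"beta={beta}: x*={xs:.4f}, V(0)={candidate_value(0.0, K, beta, r):.4f}")
```

With \(K=1\) and \(r=0.05\) the script reports \(x^*\approx 4.16\) and \(V(0)\approx 0.85\) for \(\beta =0.5\), versus \(x^*\approx 4.33\) and \(V(0)\approx 1.16\) for \(\beta =0.7\): higher skewness raises the threshold and the value, consistent with Proposition 2.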

Our main results on the case where \(x^*<0\) are now summarized in the following proposition.

Proposition 4

Assume that \(\mathcal {M}=\{x^*\}\), where \(x^*<0\), and that in addition to conditions (g1) and (g2) the exercise payoff g satisfies the conditions

(i) \(g\in C^2([x^*,\infty ))\),

(ii) \((1-\beta )\theta \mathrm {e}^{-\theta x^*}g(x^*)>\beta g'(0) > 0,\)

(iii) \(g''(x)-2rg(x)< -\varepsilon \) for all \(x\ge x^*\) and some \(\varepsilon >0\).

Then, the equation system

has a unique solution \(\mathbf {y}^*=(y_1^*,y_2^*)\) such that \(\mathbf {y}^*\in (x^*,0)\times (0,\infty )\). Moreover, \(\tau ^*=\inf \{t\ge 0:X_t\in A\}\) with \(A = [x^*,y_1^*]\cup [y_2^*,\infty )\) is the optimal stopping time, and the value reads as

where

Proof

We first establish that equation system (18) has a unique solution \(\mathbf {y}^*\in (x^*,0)\times (0,\infty )\). In order to accomplish this task, we first observe that (18) can be re-expressed by using (12) and (13) as

where \(q_1(x):=\text {e}^{\theta x}(g'(x)-\theta g(x))\) and \(q_2(x):=\text {e}^{-\theta x}(g'(x)+\theta g(x))\). Consider now the behavior of the functions \(h_1:=q_1+q_2\) and \(h_2:=q_1-q_2.\) Since \(x^*<0\) and \((L_\psi g)(x^*)=0\) it follows from (12) that \(q_1(x^*)=0\) and, hence, \(h_1(x^*)=-h_2(x^*)=\mathrm {e}^{-\theta x^*}2\theta g(x^*) >0\). Moreover, \(h_1(0)=2g'(0)>0\), \(h_2(0)=-2\theta g(0)<0\), and

Our assumption (iii) guarantees that \(h_1'(x)<0\) for all \(x>x^*\). In a completely analogous fashion we find that \( h_2'(x)<0 \) for \(x>0\) and \(h_2'(x)>0\) for \(x\in (x^*,0)\). Moreover, if \(x>z>0\) then applying the standard mean value theorem yields

demonstrating that \(\lim _{x\rightarrow \infty }h_1(x)=-\infty \). In an analogous way we find that \(\lim _{x\rightarrow \infty }h_2(x)=-\infty \) as well. Consider now for a given \(x\in [x^*,0]\) equation \(h_2(\tilde{y}_x)=h_2(x)\) where \(\tilde{y}_x\in [0,\infty )\). The continuity of \(h_2(x)\) at the origin implies that for \(x=0\) we have \(\tilde{y}_0=0\). Utilizing (22), in turn, implies that for all \(x\in (x^*,0)\) there is a unique \(\tilde{y}_x\in (0, \tilde{y}_{x^*})\) satisfying \(h_2(\tilde{y}_x)=h_2(x)\) (since \(h_2(x)\downarrow -\infty \) as \(x\uparrow \infty \)). Implicit differentiation yields

Consider next for a given \(x\in [x^*,0]\) equation \(l(x)=l(\hat{y}_x)\), where

and \(\hat{y}_x\in [0,\infty )\). The monotonicity of \(h_1(x)\) implies that l(x) is monotonically decreasing on \((x^*,0)\cup (0,\infty )\). Moreover, since

and \(l(x)\downarrow -\infty \) as \(x\uparrow \infty \), we notice that there necessarily exists a unique \(\hat{x}\in (x^*,0)\) such that \(l(\hat{x})=l(0+)\) and, consequently, such that \(\hat{y}_{\hat{x}}=0\). On the other hand, since \(l(x)<0\) for \(x>l^{-1}(0)\), we notice that there is a unique \(\hat{y}_0\in (0,l^{-1}(0))\) such that \(l(\hat{y}_0)=l(0-)\). Moreover, implicit differentiation yields

Combining these findings shows that \(\tilde{y}_0=0<\hat{y}_0\) and \(\tilde{y}_{x^*}>\tilde{y}_{\hat{x}}>0=\hat{y}_{\hat{x}}\). The continuity and the monotonicity of the solution curves \(x\mapsto \tilde{y}_x\) and \(x\mapsto \hat{y}_x,\ x\in (x^*,0)\) then prove that they have a unique intersection point \(x^{**}\in (\hat{x},0)\) such that \(\tilde{y}_{x^{**}}=\hat{y}_{x^{**}}\) and, consequently, such that (20) holds.

We now prove that (19) constitutes the value and \(\tau ^*\) the optimal stopping strategy of (6). To this end, let \(\tilde{V}\) denote the proposed value function on the right hand side of (19) with \(y^*_1:=x^{**}\) and \(y^*_2:=\tilde{y}_{x^{**}}=\hat{y}_{x^{**}}.\) It is again clear that \(V\ge \tilde{V}\). In order to prove the opposite inequality, we first notice that \(\tilde{V}\) is continuous and non-negative. To demonstrate that \(\tilde{V}\) is r-excessive, we let \(x_0>y_2^*\) and define the mapping \(\hat{H}:\mathbb {R}\mapsto \mathbb {R}_+\) as \(\hat{H}(x):=\tilde{V}(x)/\tilde{V}(x_0)=\tilde{V}(x)/g(x_0)\). As in the proof of Proposition 3, define for \(x\ge x_0\)

and for \(x\le x_0\)

where the identity \((L_\psi g)(y_1^*)=(L_\psi g)(y_2^*)\) is used. We now show that these definitions induce a probability measure on \([-\infty ,+\infty ]\). Firstly, the monotonicity and the non-negativity of the exercise payoff g imply that \(g'(x)+\theta g(x)>0\) and, therefore, from (13) \((L_\varphi g)(x)\ge 0\) for all \(x\ge y_2^*,\) i.e., \(\sigma _{x_0}^{\hat{H}}((x,\infty ])\ge 0\) for \(x\ge x_0.\) Moreover,

for all \(x\in [x_0,\infty )\) implying that \(x\mapsto \sigma _{x_0}^{\hat{H}}((x,\infty ])\) is non-increasing. Secondly, since \((L_\psi g)(x^*)=0\) and

it is seen by applying assumption (iii) that \(L_\psi g\) is decreasing and negative on \((x^*,\infty ).\) Consequently, \(x\mapsto \sigma _{x_0}^{\hat{H}}([-\infty ,x))\) is non-negative and non-decreasing for \(x\le x_0.\) Thirdly, we should check that

but this follows similarly as in the proof of Proposition 3 exploiting the Wronskian relationship. This concludes the proof that \(\sigma _{x_0}^{\hat{H}}\) constitutes a probability measure on \([-\infty ,+\infty ].\) The probability measure \(\sigma _{x_0}^{\hat{H}}\) induces via the Martin representation an r-excessive function [cf. Borodin and Salminen (2015, p. 33) and Salminen (1985)] which coincides with \(\hat{H}\). Since \(\tilde{V}(x) = \tilde{V}(x_0)\hat{H}(x)\) we find that the proposed value \(\tilde{V}(x)\) is r-excessive as well.

It remains to prove that \(\tilde{V}\) dominates the exercise payoff g. It is clear that \(\tilde{V}\ge g\) for all \(x\in (-\infty , y_1^*]\cup [y_2^*,\infty )\). It is, thus, sufficient to analyze the difference \(\varDelta (x):=\tilde{V}(x)-g(x)\) on \((y_1^*, y_2^*)\). Notice that \(\varDelta (y_1^*)=\varDelta (y_2^*)=0\). Applying formula (3.4) in Salminen (1985) with \(x_0=y_2^*\) yields

Since \(\sigma ^{\hat{H}}_{x_0}([y^*_1,y^*_2])=0\) this expression simplifies and yields

Moreover, utilizing (23), assumption (iii), and noticing that

for \(x\in (y_1^*,0)\cup (0,y_2^*)\), we find that \(\varDelta /\psi _r\) is increasing on \((y_1^*,0)\) and, consequently, that \(\varDelta (x)>0\) for all \(x\in (y_1^*,0)\). In a completely analogous fashion, we find that \(\varDelta (x)/\psi _r(x)\) is decreasing for \(x\in (0,y_2^*)\) and, therefore, that \(\varDelta (x)>0\) for all \(x\in (0, y_2^*)\) as well. The continuity of \(\varDelta \) then proves that \(\varDelta (x)>0\) for all \(x\in (y_1^*,y_2^*)\) and, consequently, that the proposed value \(\tilde{V}\) dominates the exercise payoff g. We may now invoke Lemma 2 to complete the proof of the proposition.

Remark 3

1. The conclusions of Proposition 4 are derived from the general properties of excessive mappings and their representing measures and, as such, do not require detailed process-specific information beyond the singularity at the skew point and the generator of the driving process. In that respect, the developed proof applies in more general settings than the SBM one.

2. Establishing the r-excessivity of the proposed value using the generalized Itô–Tanaka formula is somewhat more involved in this case but can also be done, as indicated in Remark 2.3.

As the proof of Proposition 4 indicates, there are circumstances under which the problem reduces to a two-boundary problem where the lower boundary \(x^*=y_1^*\) is a tangency point of the value. Conditions under which this is the case are stated in the following corollary.

Corollary 1

Assume that \(\mathcal {M}=\{x^*,y_2^*\}\), where \(x^*<0<y_2^*\). Assume also that conditions (i)–(iii) of Proposition 4 are satisfied. Then \(\varGamma = \{x^*\}\cup [y_2^*,\infty )\), \(C=(-\infty ,x^*)\cup (x^*,y_2^*)\), and the value is

Proof

The statement follows directly from Lemma 1 and Propositions 3 and 4.

An interesting yet untreated case arises when the reward function is constant in a neighborhood of the origin. Such a configuration may appear, for instance, in the case of a capped call \(g(x) = (x-K)^+-(x-M)^+\), where \(M>K\). To treat this case, consider the exercise payoff

where \(x_M \le 0\), and \(\tilde{g}:\mathbb {R}\mapsto \mathbb {R}_+\) is a continuous and nondecreasing function such that g satisfies our standing assumptions (g1) and (g2).

Proposition 5

Assume that \(\mathcal {M}=\{x^*\}\), where \(x^*\le x_M\le 0\). Assume, in addition, that \(\tilde{g}\) has the following properties:

(i) \(\tilde{g}\in C^2([x^*,x_M])\),

(ii) \(\tilde{g}''(x)-2r\tilde{g}(x)\le 0\) for all \(x\in [x^*,x_M]\).

Then, \(\tau _{x^*}=\inf \{t\ge 0:X_t\ge x^*\}\) is an optimal stopping time and the value reads as in (14).

Proof

The proof is a slight modification of the proof of Proposition 3.

5 Explicit illustration

Our objective is now to illustrate the main results of Sect. 4 explicitly by assuming that the exercise reward is \(g(x):=(x+K)^+\) with \(K> 0\). Recall that \(\mathcal {M}\) denotes the set of maximum points of the ratio \(g/\psi _r\), cf. (7). Our main results on the value and the optimal stopping strategy are presented in the following proposition.
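For readers who want to experiment with this example, the following Python sketch evaluates the ratio \(u_r=g/\psi_r\) for \(g(x)=(x+K)^+\). The function \(\psi_r\) is not restated in this section, so the sketch assumes the normalisation \(\psi_r(x)=\text{e}^{\theta x}\) for \(x<0\) and \(\psi_r(x)=\text{e}^{-\theta x}+\beta^{-1}\sinh(\theta x)\) for \(x\ge 0\), with \(\theta=\sqrt{2r}\) and \(\beta\) the probability of an upward excursion. This choice reproduces the values \(l(-K)=\text{e}^{-\theta K}\) and \(l(0+)=1-(1/\beta-1)\theta K\) quoted in the proof of Proposition 6. The function names are illustrative only.

```python
import math

# Assumed normalisation (not restated in this section): theta = sqrt(2r),
# psi_r(x) = exp(theta x) for x < 0 and exp(-theta x) + sinh(theta x)/beta for x >= 0.

def psi(x, r, beta):
    """Increasing r-harmonic function of skew BM with psi(0) = 1."""
    theta = math.sqrt(2.0 * r)
    if x < 0.0:
        return math.exp(theta * x)
    return math.exp(-theta * x) + math.sinh(theta * x) / beta

def dpsi(x, r, beta):
    """Derivative of psi; at x = 0 the right derivative psi'(0+) is returned."""
    theta = math.sqrt(2.0 * r)
    if x < 0.0:
        return theta * math.exp(theta * x)
    return theta * (math.cosh(theta * x) / beta - math.exp(-theta * x))

def ratio(x, r, beta, K):
    """u_r(x) = g(x)/psi_r(x) for the payoff g(x) = (x + K)^+."""
    return max(x + K, 0.0) / psi(x, r, beta)

r, beta, K = 0.95, 0.6, 1.0
theta = math.sqrt(2.0 * r)
# Gluing condition at the skew point: beta * psi'(0+) = (1 - beta) * psi'(0-)
print(beta * dpsi(0.0, r, beta), (1.0 - beta) * theta)
# Away from 0, psi solves (1/2) psi'' = r psi; central differences give ~0
h = 1e-4
for x in (-0.7, 0.4):
    d2 = (psi(x + h, r, beta) - 2.0 * psi(x, r, beta) + psi(x - h, r, beta)) / h**2
    print(x, 0.5 * d2 - r * psi(x, r, beta))
print(ratio(-0.5, r, beta, K), ratio(0.5, r, beta, K))
```

The two printed checks are what the assumed normalisation is meant to encode. The first is the skew-point condition \(\beta\psi_r'(0+)=(1-\beta)\psi_r'(0-)\). The second is the ordinary equation \(\tfrac12\psi_r''=r\psi_r\) away from the origin.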

Proposition 6

For all \(\beta \in (1/2,1)\) and \(K>0\) there is a unique critical discount rate \(\hat{r}=\hat{r}(\beta ,K)\) satisfying the identity

Moreover, \(\hat{r}\) is increasing as a function of \(\beta \).

(A) Assume that \(r<\hat{r}\). Then \(\mathcal {M}=\{x^*\}\) with \(x^*>0\). The optimal stopping strategy is \(\tau ^*=\inf \{t\ge 0: X_t\ge x^*\}\) and the value is as in (14).

(B) Assume that \(r=\hat{r}\). Then \(\mathcal {M}=\{x_1^*,x^*\},\) where \(x^*>0\) and

$$\begin{aligned} x_1^*= \frac{1}{\theta }-K<0. \end{aligned}$$(26)

The optimal stopping strategy is \(\tau ^*=\inf \{t\ge 0: X_t\in \{x_1^*\}\cup [x^*,\infty )\}\) and the value is as in (24).

(C) Assume that \(r>\hat{r}\). Then \(\mathcal {M}=\{x_1^*\}\), where \(x_1^*\) is given in (26). The optimal stopping strategy is \(\tau ^*=\inf \{t\ge 0: X_t\in [x_1^*,y_1^*]\cup [y_2^*,\infty )\}\), where \((y_1^*,y_2^*)\in (x_1^*,0)\times (0,\infty )\) is the unique solution of the equation system (18), and the value is as in (19).
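The closed form (26) can be read off from a one-line computation. The derivation below assumes \(\psi_r(x)=\text{e}^{\theta x}\) for \(x<0\) with \(\theta=\sqrt{2r}\), which is consistent with the value \(l(-K)=\text{e}^{-\theta K}\) used in the proof below.

```latex
\begin{aligned}
u_r(x) &= \frac{x+K}{\psi_r(x)} = (x+K)\,\mathrm{e}^{-\theta x}, && -K<x<0,\\
u_r'(x) &= \mathrm{e}^{-\theta x}\bigl(1-\theta(x+K)\bigr) = 0
  \iff x = x_1^* = \frac{1}{\theta}-K,\\
u_r''(x_1^*) &= -\theta\,\mathrm{e}^{-\theta x_1^*}\bigl(2-\theta(x_1^*+K)\bigr) = -\theta\,\mathrm{e}^{-\theta x_1^*} < 0 .
\end{aligned}
```

Hence \(x_1^*\) is a strict local maximum of \(u_r\). It lies in \((-K,0)\) exactly when \(\theta K>1\).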

Proof

In what follows we show that the three cases (A)–(C) above, which correspond to the cases characterized in Proposition 3, Corollary 1 and Proposition 4, respectively, arise depending on the precise magnitude of the key parameters \(\beta , r\) and K. We start by proving that for any \(\beta \in (1/2,1)\) and \(K>0\) Eq. (25) has a unique solution \(\hat{r}\). To this end, fix \(K>0\) and consider for \(\theta >0\) and \(\beta \in [1/2,1]\) the function

Standard differentiation yields

Consequently, from (27), C is monotonically decreasing as a function of \(\theta \). In particular, for all \(\beta \in (1/2,1)\) we have

where

Invoking the monotonicity and the continuity of C as a function of \(\theta \) shows that Eq. (25) has a unique solution, as claimed.

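Next we show that \(\beta \mapsto \hat{r}(\beta )\) is increasing. To see that this is indeed the case, consider the function \(\hat{\theta } :=\sqrt{2\hat{r}}\) and observe that implicit differentiation of the equation \(C(\hat{\theta },\beta )=0\) yields

$$\begin{aligned} \hat{\theta }'(\beta )=-\frac{C_\beta (\hat{\theta }(\beta ),\beta )}{C_\theta (\hat{\theta }(\beta ),\beta )}. \end{aligned}$$(29)
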
Since \(C_{\theta }< 0\) by (27), it is sufficient to study the sign of \(C_\beta \) along the solution curve \(\beta \mapsto \hat{\theta }(\beta )\). Since \(\hat{\theta } < \theta ^*\) and \(\hat{\theta } K-1<\ln \left( \beta /(1-\beta )\right) \), we have from (27) using the identity \(C(\hat{\theta },\beta )=0\) that

Therefore, (27) and (29) imply that \(\hat{\theta }'>0\) and, hence, that \(\hat{r}\) is increasing.

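We now proceed to prove (A)–(C). From our general analysis we know that we should consider the maximum points of the function

$$\begin{aligned} u_r(x):=\frac{g(x)}{\psi _r(x)}=\frac{(x+K)^+}{\psi _r(x)}. \end{aligned}$$

Standard differentiation yields, for \(x\in (-K,0)\cup (0,\infty )\),

$$\begin{aligned} u_r'(x)=\frac{l(x)}{\psi _r^2(x)},\qquad l(x):=\psi _r(x)-(x+K)\psi _r'(x). \end{aligned}$$

We immediately notice that

$$\begin{aligned} l(-K)=\text {e}^{-\theta K},\qquad l(0-)=1-\theta K,\qquad l(0+)=1-\left( \frac{1}{\beta }-1\right) \theta K, \end{aligned}$$

and \(\lim _{x\rightarrow \infty }l(x)=-\infty .\) Moreover, since for \(x>-K\), \(x\ne 0\),

$$\begin{aligned} l'(x)=-(x+K)\psi _r''(x)=-2r(x+K)\psi _r(x)<0, \end{aligned}$$

two different configurations may arise depending on the precise values of \(\theta , \beta ,\) and K. First, if \(\theta K\le 1\), then \(l(0-)\ge 0\) and the monotonicity of l guarantees that \(u_r\) attains a unique global maximum at \(x^*>0\) satisfying the ordinary first order condition \(u_r'(x^*)=0\), which is equivalent to

$$\begin{aligned} \psi _r(x^*)=(x^*+K)\psi _r'(x^*). \end{aligned}$$(31)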
This case corresponds to the one characterized in Proposition 3 and, hence, proves claim (A) when \(\theta K\le 1\).
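In case (A) the threshold \(x^*\) is characterized only implicitly by (31). Since l is decreasing on \((0,\infty )\) with \(l(0+)>0\) and \(l(x)\rightarrow -\infty \), plain bisection on \(l(x)=\psi_r(x)-(x+K)\psi_r'(x)\) locates \(x^*\). The sketch below is a minimal illustration. It assumes \(\psi_r(x)=\text{e}^{\theta x}\) for \(x<0\) and \(\psi_r(x)=\text{e}^{-\theta x}+\beta^{-1}\sinh(\theta x)\) for \(x\ge 0\). This form is an assumption here, chosen to match the boundary values of l used in this proof. The function names are hypothetical.

```python
import math

# Assumed fundamental solution (theta = sqrt(2r)); an illustration, not quoted from the paper.
def psi(x, theta, beta):
    if x < 0.0:
        return math.exp(theta * x)
    return math.exp(-theta * x) + math.sinh(theta * x) / beta

def dpsi(x, theta, beta):
    # Right derivative at the origin
    if x < 0.0:
        return theta * math.exp(theta * x)
    return theta * (math.cosh(theta * x) / beta - math.exp(-theta * x))

def l(x, theta, beta, K):
    # u_r'(x) has the sign of l(x) = psi(x) - (x + K) psi'(x) for x > -K
    return psi(x, theta, beta) - (x + K) * dpsi(x, theta, beta)

def upper_threshold(theta, beta, K, iters=200):
    """Root x* > 0 of l, i.e. of psi(x) = (x + K) psi'(x); needs l(0+) > 0."""
    if l(0.0, theta, beta, K) <= 0.0:
        raise ValueError("l(0+) <= 0: no interior maximiser on (0, inf)")
    lo, hi = 0.0, 1.0
    while l(hi, theta, beta, K) > 0.0:  # terminates because l -> -inf
        lo, hi = hi, 2.0 * hi
    for _ in range(iters):  # l is decreasing: keep the sign change bracketed
        mid = 0.5 * (lo + hi)
        if l(mid, theta, beta, K) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

theta, beta, K = 0.8, 0.7, 1.0  # theta*K <= 1, i.e. case (A)
x_star = upper_threshold(theta, beta, K)
print(x_star, (x_star + K) / psi(x_star, theta, beta))
```

Bisection is used instead of Newton's method because the text only guarantees monotonicity of l, and bisection needs nothing more.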

Second, if \(\theta K>1\), then \(l(-K)=\text {e}^{-\theta K}>0>1-\theta K=l(0-)\), and the monotonicity of l on \((-K,0)\) guarantees that \(u_r\) attains a local maximum at the point

$$\begin{aligned} x_1^*=\frac{1}{\theta }-K\in (-K,0). \end{aligned}$$

If \(l(0+)=1-\left( \frac{1}{\beta }-1\right) \theta K>0\), then \(u_r\) attains a local maximum at the threshold \(x^*>0\) satisfying (31) as well. However, if \(l(0+)\le 0\), then the monotonicity of l implies that \(x_1^*\) constitutes a global maximum point of \(u_r\) and \(\mathcal {M}=\{x_1^*\}\). Hence, in the case where \(l(0+)>0\), the set \(\mathcal {M}\) has at most two points. In order to determine the parameter values for which \(\mathcal {M}=\{x_1^*,x^*\}\), we consider the equation

$$\begin{aligned} u_r(x^*)=u_r(x_1^*). \end{aligned}$$(32)

Since \(u_r'(x^*)=u_r'(x_1^*)=0\) and \(g'(x)=1\) for \(x>-K\), it holds that \(u_r(x^*)=1/\psi _r'(x^*)\) and \(u_r(x_1^*)=1/\psi _r'(x_1^*)\). Hence, (32) is equivalent to

$$\begin{aligned} \psi _r'(x^*)=\psi _r'(x_1^*)=\theta \text {e}^{1-\theta K}. \end{aligned}$$(33)

Consequently, \(\mathcal {M}=\{x_1^*, x^*\}\) with \(x^*>0\) as in (31) if and only if \(x^*\) also satisfies (33), which is equivalent to

implying that

Substituting the expression for \(2\beta -1\) obtained from (34) into (31) yields

By applying (35) in (36) we conclude that \(\mathcal {M}=\{x_1^*,x^*\}\) if and only if \(\beta \in [1/2,1]\) and \(\theta > 0\) are such that \(C(\theta ,\beta )=0\), as claimed. This proves case (B), and also (A) and (C), since the value is a non-increasing function of r.
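The equivalence established in this proof is that \(\mathcal {M}=\{x_1^*,x^*\}\) if and only if \(u_r(x^*)=u_r(x_1^*)\). This also suggests a direct numerical route to \(\hat{\theta }\) and \(\hat{r}=\hat{\theta }^2/2\) that does not need the explicit form of C.

The sketch below is a minimal illustration. It assumes \(\psi_r(x)=\text{e}^{\theta x}\) for \(x<0\) and \(\psi_r(x)=\text{e}^{-\theta x}+\beta^{-1}\sinh(\theta x)\) for \(x\ge 0\). It searches over \(1<\theta K<\beta /(1-\beta )\), which is where \(l(0-)<0<l(0+)\), so both \(x_1^*\) and \(x^*\) exist.

The bracket relies on our own elementary observation about the gap \(u_r(x^*)-u_r(x_1^*)\):
- As \(\theta K\downarrow 1\), \(u_r(x_1^*)\rightarrow K\) while \(u_r(x^*)>K\), so the gap is positive.
- As \(\theta K\uparrow \beta /(1-\beta )\), \(x^*\rightarrow 0\) while \(u_r(x_1^*)=K\text{e}^{\theta K-1}/(\theta K)>K\), so the gap is negative.

The cap \(\theta K\le 10\) is an arbitrary safeguard for \(\beta \) close to 1. The function names are hypothetical.

```python
import math

# Assumed normalisation of psi_r (theta = sqrt(2r)); names are illustrative.
def psi(x, theta, beta):
    if x < 0.0:
        return math.exp(theta * x)
    return math.exp(-theta * x) + math.sinh(theta * x) / beta

def dpsi(x, theta, beta):
    if x < 0.0:
        return theta * math.exp(theta * x)
    return theta * (math.cosh(theta * x) / beta - math.exp(-theta * x))

def upper_threshold(theta, beta, K):
    """x* > 0 solving (31); l(x) = psi - (x + K) psi' is decreasing with l(0+) > 0."""
    l = lambda x: psi(x, theta, beta) - (x + K) * dpsi(x, theta, beta)
    lo, hi = 0.0, 1.0
    while l(hi) > 0.0:
        lo, hi = hi, 2.0 * hi
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if l(mid) > 0.0 else (lo, mid)
    return 0.5 * (lo + hi)

def critical_theta(beta, K):
    """theta-hat: u_r(x*) = u_r(x1*), searched over 1 < theta*K < beta/(1 - beta)."""
    t_max = 10.0 if beta >= 1.0 else min(beta / (1.0 - beta), 10.0)  # cap: safeguard
    lo, hi = 1.0 / K, t_max / K  # gap > 0 near lo and gap < 0 near hi
    for _ in range(100):
        theta = 0.5 * (lo + hi)
        x_star = upper_threshold(theta, beta, K)
        u_upper = (x_star + K) / psi(x_star, theta, beta)
        u_lower = math.exp(theta * K - 1.0) / theta  # u_r(x1*) with x1* = 1/theta - K
        lo, hi = (theta, hi) if u_upper > u_lower else (lo, theta)
    return 0.5 * (lo + hi)

def critical_rate(beta, K):
    return 0.5 * critical_theta(beta, K) ** 2

for b in (0.55, 0.65, 0.75, 0.85, 0.95):
    print(b, critical_rate(b, 1.0))
```

For \(\beta =0.55\) and \(K=1\) this should return a value close to the \(\hat{r}\approx 0.5983\) quoted in the discussion of Fig. 3. The printed values should also increase with \(\beta \), in line with Proposition 6.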

Remark 4

For \(\beta =1\) Eq. (25) with \(\hat{\theta }:=\sqrt{2\hat{r}}\) reads as

and the unique solution is given by \(\hat{\theta }K\approx 1.64132.\) Since \(\beta \mapsto \hat{\theta }(\beta )\) is increasing, the limit of \(\hat{\theta }(\beta )\) as \(\beta \downarrow 1/2\) exists. As \(\beta \downarrow 1/2\), the point \(x^*\) in (35) necessarily tends to 0. Therefore, \(\lim _{\beta \downarrow 1/2}\hat{\theta }(\beta )=1/K.\) Consequently, the critical parameter boundary \(\beta \mapsto \hat{\theta }(\beta )\) is an increasing function connecting the extremal points \((1/2, 1/K)\) and \((1, 1.64132/K)\). This is illustrated in Fig. 1 for \(K=1.\)
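The constant \(\hat{\theta }K\approx 1.64132\) can be cross-checked without the explicit form of Eq. (25). Assume that for \(\beta =1\) the increasing fundamental solution reduces to \(\psi _r(x)=\cosh (\theta x)\) on \([0,\infty )\) (and \(\text{e}^{\theta x}\) below the origin). Then conditions (31) and (33) read \(\tanh (y)(y+k)=1\) and \(\sinh (y)=\text{e}^{1-k}\), with \(y=\theta x^*\) and \(k=\theta K\). Eliminating y leaves a scalar equation in k. The sketch solves it by bisection on the hand-picked bracket \([1,3]\), which is an assumption of the sketch rather than something established in the text.

```python
import math

def beta_one_gap(k):
    """Residual of (31) for beta = 1 after eliminating y = theta * x* via (33).

    Assumes psi_r(x) = cosh(theta x) on [0, inf), so that
    (33): sinh(y) = exp(1 - k)  and  (31): tanh(y) * (y + k) = 1,  k = theta * K.
    """
    y = math.asinh(math.exp(1.0 - k))
    return math.tanh(y) * (y + k) - 1.0

def bisect(f, lo, hi, iters=100):
    """Plain bisection; assumes f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid > 0.0) == (f_lo > 0.0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

k_hat = bisect(beta_one_gap, 1.0, 3.0)  # bracket [1, 3] chosen by hand
print(k_hat)  # theta-hat * K for beta = 1
```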

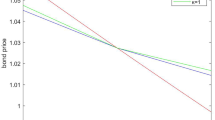

The optimal boundaries associated with the optimal exercise strategies are illustrated as functions of the skewness parameter \(\beta \) in Fig. 2 under the assumptions that \(K=1\) and \(r=0.95\). As is clear from the figure, the considered stopping problem is a three-boundary problem as long as the skewness parameter \(\beta \) remains below the critical level \(\beta ^*\), which under our parameter assumptions is \(\beta ^*\approx 0.7445\). As soon as skewness exceeds this critical level, the problem becomes a single-boundary problem, where the decision maker waits until the underlying hits the upper threshold maximizing the ratio \((x+K)^+/\psi _r(x)\). The intuition behind this observation is as follows: for sufficiently low values of \(\beta \), the attainable intertemporal gains accrued by waiting and postponing the timing decision further into the future exceed the return accrued by exercising immediately in a neighborhood of the origin. As the skewness parameter increases, a growing share of the excursions from the origin is expected to be positive, thus increasing the incentives to wait for higher payoffs.
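The critical skewness \(\beta ^*\approx 0.7445\) can be cross-checked in the same spirit. For fixed r and K, put \(k=\theta K\), \(a=\text{e}^{1-k}\) and \(y=\theta x^*\). Assume \(\psi _r(x)=\text{e}^{-\theta x}+\beta ^{-1}\sinh (\theta x)\) for \(x\ge 0\) (and \(\text{e}^{\theta x}\) for \(x<0\)). Then condition (33) gives \(1/\beta =(a+\text{e}^{-y})/\cosh y\). Substituting this into (31) leaves the single equation \(\text{e}^{-y}+\tanh (y)(a+\text{e}^{-y})=(y+k)a\). The sketch solves it by bisection and then recovers \(\beta \) from (33). It relies on the monotonicity of \(\hat{r}\) in \(\beta \) (Proposition 6) for uniqueness rather than checking it. The names are hypothetical.

```python
import math

def critical_skewness(r, K, iters=200):
    """beta* with r = r_hat(beta*) for the payoff (x + K)^+ (sketch, assumed psi_r).

    Unknowns y = theta x* > 0 and beta; with k = theta K and a = exp(1 - k):
      (33):  cosh(y)/beta - exp(-y) = a
      (31):  exp(-y) + sinh(y)/beta = (y + k) a
    Eliminating 1/beta from (31) with (33) leaves f(y) = 0 below.
    """
    k = math.sqrt(2.0 * r) * K
    a = math.exp(1.0 - k)

    def f(y):
        return math.exp(-y) + math.tanh(y) * (a + math.exp(-y)) - (y + k) * a

    lo, hi = 0.0, 1.0
    while f(hi) > 0.0:  # f(0) = 1 - k exp(1 - k) >= 0 and f(y) -> -inf
        lo, hi = hi, 2.0 * hi
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        lo, hi = (mid, hi) if f(mid) > 0.0 else (lo, mid)
    y = 0.5 * (lo + hi)
    return math.cosh(y) / (a + math.exp(-y))  # beta recovered from (33)

print(critical_skewness(0.95, 1.0))
```

With \(r=0.95\) and \(K=1\) this should return a value close to \(\beta ^*\approx 0.7445\). Eliminating \(\beta \) first reduces the task to a one-dimensional root search, which avoids a nested search over \(\beta \) and \(\theta \).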

The optimal boundaries associated with the optimal exercise strategies are, in turn, illustrated as functions of the parameter \(\theta \) in Fig. 3 under the assumptions that \(K=1\) and \(\beta =0.55\). In contrast with the effect of the skewness parameter \(\beta \), higher discounting accelerates optimal timing and, thus, decreases the incentives to wait. Accordingly, Fig. 3 shows that the considered problem is a single-boundary problem only as long as the discount rate is lower than the critical level \(\hat{r}\approx 0.5983\). Above this critical level, waiting for potentially higher future payoffs is no longer optimal at all states and the optimal exercise strategy becomes a three-boundary stopping rule.

References

Alvarez E (2003) On the properties of \(r\)-excessive mappings for a class of diffusions. Ann Appl Probab 13:1517–1533

Anatolyev S, Gerko A (2005) A trading approach to testing for predictability. J Bus Econ Stat 23:455–461

Anatolyev S, Gospodinov N (2010) Modeling financial return dynamics via decomposition. J Bus Econ Stat 28:232–245

Appuhamillage T, Sheldon D (2012) First passage time of skew Brownian motion. J Appl Probab 49:685–696

Appuhamillage T, Bokil V, Thomann E, Waymire E, Wood B (2011) Occupation and local times for skew Brownian motion with applications to dispersion across an interface. Ann Appl Probab 21:2050–2051

Barlow M (1988) Skew Brownian motion and a one-dimensional stochastic differential equation. Stochastics 25:1–2

Beibel M, Lerche HR (1997) A new look at optimal stopping problems related to mathematical finance. Stat Sin 7:93–108

Beibel M, Lerche HR (2001) A note on optimal stopping of regular diffusions under random discounting. Theory Probab Appl 45:547–557

Bekiros SD, Georgoutsos D (2008a) Nonlinear dynamics in financial asset returns: the predictive power of CBOE volatility index. Eur J Financ 14:397–408

Bekiros SD, Georgoutsos D (2008b) Direction-of-change forecasting using a volatility based recurrent neural network. J Forecast 27:407–417

Borodin A, Salminen P (2015) Handbook on Brownian motion—facts and formulae, 2nd edn. Birkhäuser, Basel (2nd printing)

Burdzy K, Chen Z-Q (2001) Local time flow related to skew Brownian motion. Ann Probab 29:1693–1715

Chevapatrakul T (2013) Return sign forecasts based on conditional risk: evidence from the UK stock market index. J Bank Financ 37:2342–2353

Christensen S, Irle A (2011) A harmonic function technique for the optimal stopping of diffusions. Stoch Int J Probab Stoch Process 83:347–363

Christoffersen PF, Diebold FX (2006) Financial asset returns, direction-of-change forecasting, and volatility dynamics. Manag Sci 52:1273–1287

Christoffersen PF, Diebold FX, Mariano RS, Tay AS, Tse YK (2006) Direction-of-change forecasts based on conditional variance, skewness and kurtosis dynamics: international evidence. J Financ Forecast 1:3–24

Corns TRA, Satchell SE (2007) Skew Brownian motion and pricing European options. Eur J Financ 13:523–544

Crocce F, Mordecki E (2014) Explicit solutions in one-sided optimal stopping problems for one-dimensional diffusions. Stoch Int J Probab Stoch Process 86:491–509

Dayanik S, Karatzas I (2003) On the optimal stopping problem for one-dimensional diffusions. Stoch Process Appl 107:173–212

Decamps M, de Schepper A, Goovaerts M, Schoutens W (2005) A note on some new perpetuities. Scand Actuar J 4:261–270

Decamps M, Goovaerts M, Schoutens W (2006a) Asymmetric skew Bessel processes and their applications to finance. J Comput Appl Math 186:130–147

Decamps M, Goovaerts M, Schoutens W (2006b) Self exciting threshold interest rates model. Int J Theor Appl Financ 9:1093–1122

Ekström E, Villeneuve S (2006) On the value of optimal stopping games. Ann Appl Probab 16:1576–1596

Harrison JM, Shepp LA (1981) On skew Brownian motion. Ann Probab 9:309–313

Hämäläinen J (2015) Portfolio selection with directional return estimates. Available at SSRN: http://ssrn.com/abstract=2279823

Itô K, McKean HP Jr (1974) Diffusion processes and their sample paths. Springer, Berlin (2nd printing)

Lejay A (2006) On the constructions of the skew Brownian motion. Probab Surv 3:413–466

Lejay A, Mordecki E, Torres S (2014) Is a Brownian motion skew? Scand J Stat 41:346–364

Nyberg H (2011) Forecasting the direction of the US stock market with dynamic binary probit models. Int J Forecast 27:561–578

Ouknine Y (1991) Skew Brownian motion and derived processes. Theory Probab Appl 35:163–169

Peskir G (2005) A change-of-variable formula with local time on curves. J Theor Probab 18:499–535

Presman E (2012) Solution of the optimal stopping problem of one-dimensional diffusion based on a modification of payoff function. In: Honor of Yuri V. Prokhorov, Prokhorov and contemporary probability theory. Springer proceedings in mathematics and statistics, vol 33. Springer, pp 371–403

Presman E, Yurinsky V (2012) Optimal stopping of geometric Brownian motion with partial reflection. In: International conference on stochastic optimization and optimal stopping, Moscow, 24–28 Sept. Book of abstracts, Steklov Mathematical Institute, Moscow, pp 89–90

Rosello D (2012) Arbitrage in skew Brownian motion models. Insur Math Econ 50:50–56

Rydberg TH, Shepard N (2003) Dynamics of trade-by-trade price movements: decomposition and models. J Financ Econom 1:2–25

Salminen P (1985) Optimal stopping of one-dimensional diffusions. Math Nachr 124:85–101

Salminen P (2000) On Russian options. Theory Stoch Process 6:161–176

Salminen P, Ta BQ (2015) Differentiability of excessive functions of one-dimensional diffusions and the principle of smooth fit, vol 104. Banach Center Publications, pp 181–199

Shiryaev AN (1978) Optimal stopping rules. Springer, New York

Skabar A (2013) Direction-of-change financial time series forecasting using a similarity based classification model. J Forecast 32:409–422

Vuolle-Apiala J (1996) Skew Brownian motion-type of extensions. J Theor Probab 9:853–861

Walsh J (1978) Diffusion with a discontinuous local time. Astérisque 52–53:37–46


Cite this article

Alvarez E., L.H.R., Salminen, P. Timing in the presence of directional predictability: optimal stopping of skew Brownian motion. Math Meth Oper Res 86, 377–400 (2017). https://doi.org/10.1007/s00186-017-0602-4


DOI: https://doi.org/10.1007/s00186-017-0602-4