Abstract

In this paper we consider a stochastic frontier model in which both the noise and inefficiency components are asymmetric, viz., the noise term is skew normal and the inefficiency term is half-normal. This formulation avoids the criticism that skewness of the composite error term (sum of the noise and inefficiency) cannot be an indicator of inefficiency because skewness can also arise from the noise term. Our estimator of inefficiency does not depend on skewness of the one-sided error alone; it controls for skewness in the noise term as well. We further generalize the model by introducing determinants of skewness of the noise term as well as determinants of inefficiency. Additionally, we test hypotheses that the noise term is either symmetric (normal) or has a constant skewness parameter. Instead of using the standard ML method, we use the indirect inference (II) approach to estimate the parameters of the proposed model. Formulae for predicting (in)efficiency are also provided. Finally, we provide both simulation and empirical results using the II estimation approach to showcase workings of our model.

1 Introduction

In stochastic frontier (SF) models, the noise term is almost universally assumed to be symmetric and normally distributed. Several competing distributions are used for the inefficiency term, the most common being half-normal, exponential and truncated normal. This makes the composite error term (noise minus inefficiency) in a production function model negatively skewed. For this reason, negative skewness is often used to test for the presence of inefficiency in a production function (Coelli 1995; Schmidt and Lin 1984). However, skewness can be significant and of the wrong sign for other reasons. For example, the empirical skewness of the residual can have the wrong (positive) sign in a production function even if the true model (or the data generating process, DGP) has the right (negative) skewness.Footnote 1 Knowing that skewness can be wrong in the empirical model even though there is nothing wrong with the model or the DGP, we consider a model in which the skewness can be either positive or negative and allow for the possibility of a skewed noise term. That is, we allow the noise term in the SF model to be positively or negatively skewed (asymmetric). This can be justified both theoretically and empirically (Badunenko and Henderson 2021). Thus, the presence of a skewed composite error term may or may not represent inefficiency. In the extreme case, inefficiency can be zero but the composite error term, which then contains only the noise, can still be skewed. Our goal is to separate out the skewness of the noise term so that it does not contaminate the estimate of inefficiency. Put differently, we guard against labeling skewness of the composed error as an indicator of inefficiency, because skewness can also arise from the noise term.

The generalization from symmetric to asymmetric noise in econometric models has been considered in many empirical applications. In financial studies, it is commonly observed that the return on a market portfolio exhibits excess kurtosis as well as skewness. Thus, skew distributions are often used in financial applications. For instance, Adcock (2010) uses a multivariate skew-Student-t distribution to study asset pricing and portfolio selection. Kraus and Litzenberger (1976) develop an asset pricing model that incorporates the effect of skewness on valuation. There are also applications in macroeconomics. For instance, Busch et al. (2020) use panel data on individuals and households from the United States, Germany and Sweden to study income risk over the business cycle. They find that the skewness of income growth is much more negative in recessions. In studies of production and productivity, Qi et al. (2015) consider the asymmetric effects of weather shocks on production, and Badunenko and Henderson (2021) consider an SF model with skew-normal noise and exponentially distributed inefficiency.

The maximum likelihood (ML) method is perhaps the most commonly used approach to estimate models with skew noise; see, for instance, Adcock (2010) and Badunenko and Henderson (2021). Unlike the existing literature, in this paper we suggest using the indirect inference (II) approach, which was first introduced in Gourieroux et al. (1993) and then applied by Lai (2022), to estimate the SF model with skew-normal (SN) noise and half-normal inefficiency. The II approach can be easily generalized to different combinations of distributions for the random noise and inefficiency, since there is no need to derive the probability density function of the composite error. Like simulated likelihood estimation, II estimation is a simulation-based approach. However, its implementation requires only least squares estimation. Specifically, we first estimate the model by least squares, obtain the coefficients, and evaluate the empirical characteristic function of the residuals. We then generate a random sample for a given value of the model parameter and apply the same estimation procedure to the generated sample, i.e., least squares estimation followed by evaluation of the empirical characteristic function of the residuals. In the final step, we match the least squares coefficients and the empirical characteristic functions of the residuals from the real and simulated data. The parameter value that minimizes the distance between these quantities computed from the real data and from the simulated data is the II estimate.

We also extend the model by using variables that are determinants of the shape parameter of the SN distribution. Similarly, inefficiency is explained by variables viewed as determinants of inefficiency. We provide simulation results to illustrate the finite sample properties of the II estimation method in the case of SN noise and a half-normal (HN) inefficiency term. For this, we consider several settings of the data generating process. For example, we allow the skew-normal noise to have a positive, negative or heterogeneous shape parameter, and we examine whether the II estimation can correctly identify the direction of the skewness of the SN noise. In order to compare the finite sample performance of the II and ML estimators, we also consider an SF model with normal noise and HN inefficiency. Finally, an empirical application is provided to illustrate the workings of our model.

The rest of the paper is structured as follows. The model and estimation method are introduced in Sect. 2. Simulation results are discussed in Sect. 3. The empirical model and results are discussed in Sect. 4. Section 5 concludes the paper with a summary and future extensions.

2 The model formulation and estimation

We consider a cross-sectional production function with SN noise and HN technical inefficiency, viz.,

$$\begin{aligned} y_{i}=\alpha +x_{i}\beta +v_{i}-u_{i}, \end{aligned}$$(1)

where \(y_i\) is log output, \(x_i\) consists of log inputs (for a Cobb-Douglas production function),Footnote 2 \(v_{i}\sim SN\left( \sigma _{v},\lambda \right) \), and \(u_i\) is HN, written as \(u_i\sim N^{+}\left( 0,\sigma ^2_{u}\right) \). If \(v_i\) is normally distributed, the model in (1) is the standard SF model with N-HN distributions for \(v_i\) and \(u_i\). Following the SF literature, we assume \(v_i\) and \(u_i\) to be independent. The input variables are assumed to be independent of \(v_i\) and \(u_i\), i.e., we assume that inputs are exogenous.Footnote 3

The probability density function (pdf) of the SN noise \(v_i\) is

$$\begin{aligned} f_{v}\left( v_{i}\right) =\frac{2}{\sigma _{v}}\phi \left( \frac{v_{i}}{\sigma _{v}}\right) \Phi \left( \frac{\lambda v_{i}}{\sigma _{v}}\right) , \end{aligned}$$

where \(\sigma _{v}\) is the scale parameter and \(\lambda \) is the shape parameter; \(\phi (.)\) and \(\Phi (.)\) are the pdf and cdf of a standard normal variable. Two special cases of the shape parameter are the following: (i) if \(\lambda =0,\) then \(v_i\sim N\left( 0,\sigma _{v}^{2}\right) \), and (ii) if \(\lambda \rightarrow \infty ,\) then \(v_i\sim N^{+}\left( 0,\sigma _{v}^{2}\right) .\)
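To make the SN specification concrete, the following is a minimal sketch of the density above and of one standard way to draw SN variates, assuming a zero location parameter. The function names `sn_pdf` and `sn_draw` and the parameter values are illustrative assumptions, not part of the paper.

```python
import math
import random

def sn_pdf(v, sigma_v, lam):
    """SN(sigma_v, lam) density with zero location: (2/sigma_v) * phi(z) * Phi(lam * z)."""
    z = v / sigma_v
    phi = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    Phi = 0.5 * (1.0 + math.erf(lam * z / math.sqrt(2.0)))
    return 2.0 / sigma_v * phi * Phi

def sn_draw(rng, sigma_v, lam):
    """One SN draw via v = sigma_v * (d * |z0| + sqrt(1 - d^2) * z1), d = lam / sqrt(1 + lam^2)."""
    d = lam / math.sqrt(1.0 + lam * lam)
    z0, z1 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    return sigma_v * (d * abs(z0) + math.sqrt(1.0 - d * d) * z1)

# Hypothetical parameter values, chosen only for illustration.
sigma_v, lam = 0.5, 3.0
rng = random.Random(0)
draws = [sn_draw(rng, sigma_v, lam) for _ in range(20000)]
sample_mean = sum(draws) / len(draws)
# Mean of a zero-location SN variable: sigma_v * d * sqrt(2 / pi).
theory_mean = sigma_v * lam / math.sqrt(1.0 + lam * lam) * math.sqrt(2.0 / math.pi)
print(round(sample_mean, 3), round(theory_mean, 3))
```

With \(\lambda =0\) the density reduces to the \(N(0,\sigma _{v}^{2})\) density, which is special case (i) above.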

For simplicity, we use \(\theta \) to denote the vector of the parameters of the model in (1). Thus \(\theta =(\beta ^{\intercal },\sigma _{v},\lambda ,\sigma _{u})^{\intercal }\) contains both the parameters in the frontier function and the parameters in the distributions of v and u. The model in (1) is usually estimated by the ML method. Here, we suggest using the II estimation method, where a linear regression model is used as an approximation of the model in (1).Footnote 4 The estimation is based on the ordinary least squares (OLS) approach. This approximated model is referred to as the instrument model in our following discussion. It is worth mentioning that although we are making distributional assumptions of the error components, there is no need to evaluate the likelihood function for the II estimation.

For the OLS estimation, we write the stochastic frontier model given in (1) as

$$\begin{aligned} y_{i}=a+x_{i}\beta +\left( v_{i}-u_{i}^{*}\right) , \end{aligned}$$(2)

where \(a=\alpha -\mathbb {E}u_{i},\) \(u_{i}^{*}=u_{i}-\mathbb {E}u_{i},\) and the composite error \(\left( v_{i}-u_{i}^{*}\right) \) has zero mean. Let \(\widehat{a}_{N}\) and \(\widehat{\beta }_{N}\) denote the OLS estimators of the intercept a and the slope vector \(\beta .\) It can be shown that \(\widehat{\beta }_{N}\) is a consistent estimator of \(\beta \), but \(\widehat{a}_{N}\) is not a consistent estimator of \(\alpha \) because \(\mathbb {E}u_{i}\ne 0\).

Define \(\xi _{i}=y_{i}-x_{i}\beta =a+v_{i}-u_{i}^{*}=\alpha +v_{i}-u_{i}.\) Although the OLS estimator of a from (2) is not a consistent estimator of \(\alpha \), it is clear that the OLS estimators \(\widehat{a}_{N}\) and \(\widehat{\beta }_{N}\) and the characteristic functions of \(\widehat{\xi }_{i}\) are functions of the true parameter \(\theta \). In other words, the information contained in the moments of the residual \(\widehat{\xi }_{i}\) can be used for identifying the parameters of the distributions of \(v_{i}\) and \(u_{i}.\)

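Now consider the characteristic function of \(\xi \), \(\varphi _{\xi }\left( .\right) \), which is defined as

$$\begin{aligned} \varphi _{\xi }\left( t\right) =\mathbb {E}\left( e^{\textbf{i}t\xi _{i}}\right) ,\quad t\in \mathbb {R}, \end{aligned}$$

where \(\textbf{i}=\sqrt{-1}\). Using the OLS estimator \(\widehat{\beta }_{N}\) and the observed sample, we obtain \(\widehat{\xi }_{i}\) as

$$\begin{aligned} \widehat{\xi }_{i}=y_{i}-x_{i}\widehat{\beta }_{N}. \end{aligned}$$

Given the set of points \(t=t_{1},\ldots ,t_{q}\), we can also compute the empirical characteristic function at t via

$$\begin{aligned} \widehat{\varphi }_{N,\xi }\left( t\right) =\frac{1}{N}\sum _{i=1}^{N}e^{\textbf{i}t\widehat{\xi }_{i}}. \end{aligned}$$(4)

We use \(\widehat{\gamma }_{N}\) to denote a vector containing the OLS estimators of a and \(\beta \) and the empirical characteristic function evaluated at \(t=t_{1},\ldots ,t_{q},\) so

$$\begin{aligned} \widehat{\gamma }_{N}=\left( \widehat{a}_{N},\widehat{\beta }_{N}^{\intercal },\widehat{\varphi }_{N,\xi }\left( t_{1}\right) ,...,\widehat{\varphi }_{N,\xi }\left( t_{q}\right) \right) ^{\intercal }. \end{aligned}$$(5)

Alternatively, the estimator \(\widehat{\gamma }_{N}\) is the solution of the moment conditions of the approximated model: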

where \(e_i = \left( \xi _{i}-a\right) \). Equations (6) and (7) come from the first order conditions of the OLS estimation, and Eq. (8) is the sample counterpart of the characteristic function \(\mathbb {E}\left( e^{\textbf{i}t\xi _{i}}\right) \). Under the distributional assumptions, the true value of \(\gamma \) is

For the II estimation, q/2 is required to be greater than the number of parameters contained in the distributions of v and u so that we have enough identification conditions. Since the empirical characteristic function has a real and an imaginary part, each point t gives two moment conditions. For the models in our simulation and empirical sections, we choose ten equally spaced points, i.e., \(q=10\), in the interval \([-0.1, 0.1]\). See also Lai (2022) for more discussion of the choice of the points and the relevant literature.
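As an illustration of the empirical characteristic function in (4) and of the choice of points, here is a minimal sketch. The helper names `equally_spaced` and `ecf` are hypothetical, and the residual values are made up purely for demonstration.

```python
import cmath

def equally_spaced(lo, hi, q):
    """Return q equally spaced points on [lo, hi], endpoints included."""
    step = (hi - lo) / (q - 1)
    return [lo + k * step for k in range(q)]

def ecf(residuals, t_points):
    """Empirical characteristic function: (1/N) * sum_i exp(i * t * xi_i) at each t."""
    n = len(residuals)
    return [sum(cmath.exp(1j * t * xi) for xi in residuals) / n for t in t_points]

# q = 10 points in [-0.1, 0.1], as in the text.
t_points = equally_spaced(-0.1, 0.1, 10)

# Hypothetical residuals xi_hat_i = y_i - x_i * beta_hat (illustrative values only).
xi_hat = [0.8, 1.1, 0.4, 1.3, 0.9]
phi_hat = ecf(xi_hat, t_points)

# Each point contributes a real and an imaginary part, i.e. two moment conditions.
moments = [c.real for c in phi_hat] + [c.imag for c in phi_hat]
print(len(moments))
```

Because the residuals are real, the value at \(-t\) is the complex conjugate of the value at t, which the sketch makes easy to verify numerically.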

The vector \(\gamma \) is a function of the parameter \(\theta \), and we denote it as

$$\begin{aligned} \gamma =\mathbb {B}(\theta ), \end{aligned}$$(10)

where \(\mathbb {B}(\theta )\) is called the binding function. It provides a link between the parameters of the true model and the approximated model. Since the characteristic function is unique and \(t\in \mathbb {R},\) we can find infinitely many moment conditions for solving for the true parameter \(\theta .\) We discuss the procedure below:

- Step 1: Given the approximate or instrumental model, we estimate \(\gamma \) by OLS using the observed data and denote it as

  $$\begin{aligned} \widehat{\gamma }_{N}=\left( \widehat{a}_{N},\widehat{\beta }_{N}^{\intercal },\widehat{\varphi }_{N,\xi }\left( t_{1}\right) ,...,\widehat{\varphi } _{N,\xi }\left( t_{q}\right) \right) ^{\intercal }, \end{aligned}$$(11)

  where \(\left( \widehat{a}_{N},\widehat{\beta }_{N}^{\intercal }\right) ^{\intercal }\) is the OLS estimate from (2) using the observed data and \(\widehat{\varphi }_{N,\xi }\left( t\right) \) is the empirical characteristic function of (4) evaluated at \(t=t_{1},t_{2},\ldots ,t_{q}\).

- Step 2: For a given \(\theta ,\) we can simulate values of the endogenous variable \(\left\{ y_{i}^{m}(\theta )\right\} _{i=1}^{N}\) using the true model given in (1). In other words, given the model specification and a value of the parameter \(\theta ,\) one can draw v and u from their distributions and then generate the simulated \(\left\{ y_{i}^{m}(\theta )\right\} _{i=1}^{N}\) conditional on the exogenous variables \(\left( x_{1},...,x_{N}\right) .\) The estimator of \(\gamma \) in the mth replication is denoted as

  $$\begin{aligned} \widehat{\gamma }_{N}^{m}\left( \theta \right) =\left( \widehat{a}_{N} ^{m}\left( \theta \right) ,\widehat{\beta }_{N}^{m\intercal }\left( \theta \right) ,\widehat{\varphi }_{N,\xi }^{m}\left( t_{1}\right) ,...,\widehat{\varphi }_{N,\xi }^{m}\left( t_{q}\right) \right) ^{\intercal }, \end{aligned}$$(12)

  where \(\left( \widehat{a}_{N}^{m}\left( \theta \right) ,\widehat{\beta } _{N}^{m\intercal }\left( \theta \right) \right) ^{\intercal }\) is the solution of the problem

  $$\begin{aligned} Q_{N}^{m}(b)=\underset{b}{\min }\frac{1}{N} {\displaystyle \sum \limits _{i=1}^{N}} \left( y_{i}^{m}-a-x_{i}\beta \right) ^{2}, \end{aligned}$$(13)

  which is estimated based on the mth simulated data set. Using the OLS residual \(\widehat{\xi }^{m},\) we obtain the empirical characteristic function \(\widehat{\varphi }_{N,\xi }^{m}\left( t\right) \), defined in (4) and used in (12), at the points \(t=t_{1},t_{2},\ldots ,t_{q}.\) Repeating the simulation M times with the simulated data \(\left\{ (y_{i}^{m},x_{i})\right\} _{i=1}^{N}\) yields M estimates of the parameter \(\gamma .\)

- Step 3: In this step, we match the estimate of \(\gamma \) from the approximate model based on the real data with that based on the simulated data. The II estimator of \(\theta \) is defined as the value that minimizes the distance between the estimates based on the real data and the simulated data, i.e.,

  $$\begin{aligned} \widehat{\theta }_{N}^{M}(\Omega )=\arg \min _{\theta \in \Theta }\text { }\left( \widehat{\gamma }_{N}-\frac{1}{M} {\displaystyle \sum \limits _{m=1}^{M}} \widehat{\gamma }_{N}^{m}(\theta )\right) ^{\intercal }\Omega \left( \widehat{\gamma }_{N}-\frac{1}{M} {\displaystyle \sum \limits _{m=1}^{M}} \widehat{\gamma }_{N}^{m}(\theta )\right) , \end{aligned}$$(14)

  where \(\Omega \) is a symmetric non-negative definite weighting matrix.

For simplicity, we choose the weighting matrix \(\Omega \) as a diagonal matrix, which assigns 1 for each element of the OLS estimators (\( \widehat{a}_{N}\) and \(\widehat{\beta }_{N}\)) and 1/q for each element of the empirical characteristic functions \(\widehat{\varphi }_{N,\xi }\left( t_{1}\right) ,...,\widehat{\varphi }_{N,\xi }\left( t_{q}\right) \).
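Steps 1 to 3 can be sketched in a few lines of code. The following is a minimal illustration under stated assumptions, not the authors' implementation: it uses a single regressor, treats the real and imaginary parts of the empirical characteristic function as separate elements with weight 1/q each, reuses the same random seeds for every \(\theta \) so that the objective is a smooth function of \(\theta \), and all function names and parameter values are hypothetical.

```python
import cmath
import math
import random

def ols(y, x):
    """OLS intercept and slope for a single regressor."""
    n = len(y)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xv - xbar) ** 2 for xv in x)
    sxy = sum((xv - xbar) * (yv - ybar) for xv, yv in zip(x, y))
    b = sxy / sxx
    return ybar - b * xbar, b

def auxiliary(y, x, t_points):
    """gamma-hat: OLS (a, b), then real and imaginary parts of the ECF of xi = y - x*b."""
    a, b = ols(y, x)
    xi = [yv - b * xv for yv, xv in zip(y, x)]
    ecf = [sum(cmath.exp(1j * t * e) for e in xi) / len(xi) for t in t_points]
    return [a, b] + [c.real for c in ecf] + [c.imag for c in ecf]

def simulate_y(theta, x, rng):
    """Draw y = alpha + beta*x + v - u with v ~ SN(sigma_v, lam) and u ~ N+(0, sigma_u^2)."""
    alpha, beta, sigma_v, lam, sigma_u = theta
    d = lam / math.sqrt(1.0 + lam * lam)
    y = []
    for xv in x:
        v = sigma_v * (d * abs(rng.gauss(0.0, 1.0)) + math.sqrt(1.0 - d * d) * rng.gauss(0.0, 1.0))
        u = abs(rng.gauss(0.0, sigma_u))
        y.append(alpha + beta * xv + v - u)
    return y

def ii_objective(theta, gamma_obs, x, t_points, M=10, seed=123):
    """Quadratic distance as in (14) with diagonal weights: 1 on OLS terms, 1/q on ECF terms."""
    q = len(t_points)
    gbar = [0.0] * len(gamma_obs)
    for m in range(M):
        rng = random.Random(seed + m)  # identical draws for every theta
        g = auxiliary(simulate_y(theta, x, rng), x, t_points)
        gbar = [s + gi / M for s, gi in zip(gbar, g)]
    weights = [1.0, 1.0] + [1.0 / q] * (2 * q)
    return sum(w * (go - gs) ** 2 for w, go, gs in zip(weights, gamma_obs, gbar))

# Illustrative data from hypothetical "true" parameters (alpha, beta, sigma_v, lam, sigma_u).
rng = random.Random(0)
x = [rng.uniform(0.0, 3.0) for _ in range(300)]
theta_true = (1.0, 0.5, 0.3, 3.0, 0.4)
y = simulate_y(theta_true, x, rng)
t_points = [-0.1 + 0.2 * k / 9 for k in range(10)]
gamma_obs = auxiliary(y, x, t_points)

obj_true = ii_objective(theta_true, gamma_obs, x, t_points)
obj_wrong = ii_objective((0.5, 1.0, 0.3, 3.0, 0.4), gamma_obs, x, t_points)
print(obj_true < obj_wrong)
```

In practice, `ii_objective` would be passed to a numerical optimizer over \(\theta \); the sketch only evaluates it at two candidate values to show that the distance is smaller near the parameter that generated the data.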

It is worth mentioning that the characteristic function contained in the binding function is evaluated by the empirical characteristic function, so it does not matter what the form of the characteristic function is under the true distributions of v and u. In other words, we estimate the parameters contained in the distribution by matching the empirical characteristic functions from the observed data and the simulated data. In order to identify the parameter \(\theta ,\) it is required that the binding function \(\mathbb {B}\left( \cdot \right) \) be a function of the true parameter \(\theta \) and the dimension of the auxiliary parameter \(\gamma \) must be larger than or equal to the dimension of \(\theta \).

Lai (2022) discussed some practical issues in implementing the II estimation and suggested selecting points that are close together and equally spaced around zero. The main idea of the II approach is to use simulations performed under the ‘true’ model to correct for the asymptotic bias of the estimator \(\widehat{\gamma }_{N}\) from the approximate model. In the above estimation procedure, steps 1 and 2 require only OLS estimation and step 3 requires nonlinear least squares estimation. Since the characteristic function is the Fourier transform of the probability density function, it completely defines the probability distribution of the random variable, and thus provides an alternative route to estimating that distribution. Therefore, \(\widehat{\gamma }_{N}\), which contains the empirical characteristic function, serves as the sample counterpart of the binding function in the estimation of the SF model. After obtaining the II estimator \(\widehat{\theta }_{N}^{M}(\Omega )\) from (14), we can compute the standard error using the approach discussed in Lai (2022). Alternatively, one can use the bootstrap to obtain standard errors, which is easier to implement in practice.

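Prediction of (in)efficiency: Once we obtain the estimate of \(\theta ,\) the prediction of inefficiency can be done using a simulation-based approach. Let g(u) be an integrable function of u. For the SF analysis, we are particularly interested in \(g(u)=u\) and \(g(u) =\exp (-u)\). When \(g(u)=u,\) we have \(\mathbb {E}\left[ g(u)|\varepsilon \right] =\mathbb {E}\left[ u|\varepsilon \right] .\) If \(g(u)=e^{-u},\) then \(\mathbb {E}\left[ g(u)|\varepsilon \right] =\mathbb {E}\left[ e^{-u} |\varepsilon \right] \). By the definition of conditional expectation, with \(\varepsilon =v-u\) and \(f_{u}\) denoting the pdf of u, we have

$$\begin{aligned} \mathbb {E}\left[ g(u)|\varepsilon \right]&=\int _{0}^{\infty }g(u)f\left( u|\varepsilon \right) du \\&=\frac{\int _{0}^{\infty }g(u)f_{v}\left( \varepsilon +u\right) f_{u}\left( u\right) du}{\int _{0}^{\infty }f_{v}\left( \varepsilon +u\right) f_{u}\left( u\right) du} \\&=\frac{\mathbb {E}_{u}\left[ g(u)f_{v}\left( \varepsilon +u\right) \right] }{\mathbb {E}_{u}\left[ f_{v}\left( \varepsilon +u\right) \right] }. \end{aligned}$$(15a–15c)

It follows from (15c) that \(\mathbb {E}\left[ g(u)|\varepsilon \right] \) can be estimated using the simulated estimator

$$\begin{aligned} \mathbb {E}^{s}\left[ g\left( u\right) |\varepsilon \right] =\frac{\sum _{r=1}^{R}g\left( u^{r}\right) f_{v}\left( \varepsilon +u^{r}\right) }{\sum _{r=1}^{R}f_{v}\left( \varepsilon +u^{r}\right) }, \end{aligned}$$(16)

where \(u^{r}\), \(r=1,\ldots ,R\), are random draws from the distribution of u. Let \(W_{i}^{r}=\frac{f_{v}\left( \varepsilon +u^{r}\right) }{\sum _{r=1} ^{R}f_{v}\left( \varepsilon +u^{r}\right) }\) denote the weight based on the simulated probability. Then the simulated estimator \(\mathbb {E}^{s}\left[ g\left( u\right) |\varepsilon \right] \) takes the form of a weighted average of \(g\left( u^{r}\right) \).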
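The weighted-average form of the simulated estimator can be sketched as follows, assuming draws \(u^{r}\) are taken from the half-normal distribution of u and that \(f_{v}\) is the zero-location SN density. The function names and the parameter values are hypothetical and serve only to illustrate the computation.

```python
import math
import random

def sn_pdf(v, sigma_v, lam):
    """Zero-location SN density: (2/sigma_v) * phi(v/sigma_v) * Phi(lam * v/sigma_v)."""
    z = v / sigma_v
    phi = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    Phi = 0.5 * (1.0 + math.erf(lam * z / math.sqrt(2.0)))
    return 2.0 / sigma_v * phi * Phi

def simulated_cond_mean(eps, g, sigma_v, lam, sigma_u, R=1000, seed=0):
    """Weighted average of g(u^r) with weights proportional to f_v(eps + u^r)."""
    rng = random.Random(seed)
    u_draws = [abs(rng.gauss(0.0, sigma_u)) for _ in range(R)]  # u^r ~ N+(0, sigma_u^2)
    weights = [sn_pdf(eps + u, sigma_v, lam) for u in u_draws]
    total = sum(weights)
    return sum(w * g(u) for w, u in zip(weights, u_draws)) / total

# Hypothetical composite residual and parameter values, for illustration only.
eps, sigma_v, lam, sigma_u = -0.3, 0.2, -3.0, 0.3
e_u = simulated_cond_mean(eps, lambda u: u, sigma_v, lam, sigma_u)             # E[u | eps]
te = simulated_cond_mean(eps, lambda u: math.exp(-u), sigma_v, lam, sigma_u)   # E[exp(-u) | eps]
print(round(e_u, 3), round(te, 3))
```

One way to sanity-check such a sketch is to set \(\lambda =0\), in which case the result should be close to the well-known closed-form conditional mean for the normal-half-normal model.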

Under the distribution \(v\sim SN(\sigma _{v},\lambda ),\) the pdf of v is

$$\begin{aligned} f_{v}\left( v\right) =\frac{2}{\sigma _{v}}\phi \left( \frac{v}{\sigma _{v}}\right) \Phi \left( \frac{\lambda v}{\sigma _{v}}\right) . \end{aligned}$$(17)

Plugging (17) into (16), we obtain the simulated estimator of the inefficiencies and TEs. The simulated estimator for predicting TE and inefficiency has been applied in various stochastic frontier models, for example, the panel stochastic frontier models in Lai and Kumbhakar (2018) and Lai and Kumbhakar (2021), and the spatial autoregressive panel stochastic frontier model in Lai and Tran (2022).

Nonconstant \(\sigma _{u}:\) A simple way to model determinants of inefficiency is to make \(\sigma _{u}\) nonconstant, for example, \(\sigma _{u,i}=\exp \left( \delta ^{\prime } w_{i}\right) ,\) where \(w_i\) is a vector of exogenous variables. When \(u_i\) is HN, \(\mathbb {E}u_{i}=\sqrt{2/\pi }\sigma _{u,i} =\sqrt{2/\pi }\exp \left( \delta ^{\prime }w_{i}\right) \). Consequently, the w variables can be interpreted as determinants of inefficiency. With nonconstant \(\sigma _{u}\) the SF model given in (1) can be written as

$$\begin{aligned} y_{i}=\left[ \alpha -h(w_{i};\delta )\right] +x_{i}\beta +v_{i}-u_{i}^{*}, \end{aligned}$$(18)

where \(u_{i}^{*}=u_{i}-h(w_{i};\delta )\) and \(h(w_{i};\delta )=\sqrt{2/\pi }\exp \left( \delta ^{\prime }w_{i}\right) ,\) which is nonlinear in \(\delta \). Define \(h_{0}=\mathbb {E}\left[ h(w_{i};\delta )\right] ;\) then \(\left[ \alpha -h(w_{i};\delta )\right] \) can be decomposed as

$$\begin{aligned} \alpha -h(w_{i};\delta )=\left( \alpha -h_{0}\right) +\left[ h_{0}-h(w_{i};\delta )\right] , \end{aligned}$$(19)

where \(\mathbb {E}\left[ h_{0}-h(w_{i};\delta )\right] =0\) by construction. Note that \(h_0\) is the expectation of the function \(h(w_i; \delta )\) taken over w, and is thus a constant. Let \(a=\alpha -h_{0}\) be the intercept of the instrument model; then the model in (18) can be represented as

where \(\xi _{i}=y_{i}-x_{i}\beta =a+\left[ h_{0}-h(w_{i};\delta )\right] +v_{i}-u_{i}^{*}.\) Note that (20) has a similar form as Eq. (2). Thus, the corresponding binding function is

It follows that the II estimation of (18) can be done in the same way. One can apply OLS to (20) and obtain the estimators \(\widehat{a}_{N}\), \(\widehat{\beta }_{N}\) and the predicted \(\widehat{\xi }_{i}\). The empirical characteristic function of \(\xi \) at points \(t_1, t_2,..., t_q\) is computed using (4). It is clear that each element of \(\gamma \) is a function of the true parameter \(\theta .\) The estimation procedure is similar to the previous case of constant \(\sigma _u\). By matching \(\widehat{\gamma }_{N}\) from the observed data and \(\widehat{\gamma }_{N}^{m}(\theta )\) from the simulated data, we obtain an estimate of \(\theta \), which minimizes the distance between the two estimates.
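The role of \(h(w_{i};\delta )\) and \(h_{0}\) can be checked numerically. The sketch below assumes a scalar w with an intercept, \(\sigma _{u,i}=\exp (\delta _{0}+\delta _{1}w_{i})\), and uses hypothetical values of \(\delta _{0}\) and \(\delta _{1}\); it replaces the population expectation \(h_{0}\) by its sample analogue.

```python
import math
import random

def h(w, delta0, delta1):
    """E[u_i | w_i] for half-normal u_i with sigma_{u,i} = exp(delta0 + delta1 * w_i)."""
    return math.sqrt(2.0 / math.pi) * math.exp(delta0 + delta1 * w)

# Hypothetical parameter values, for illustration only.
delta0, delta1 = -1.5, 0.5
rng = random.Random(0)
w = [rng.gauss(0.0, 1.0) for _ in range(20000)]

# Heteroscedastic half-normal inefficiency draws.
u = [abs(rng.gauss(0.0, math.exp(delta0 + delta1 * wi))) for wi in w]

h_vals = [h(wi, delta0, delta1) for wi in w]
h0 = sum(h_vals) / len(h_vals)          # sample analogue of h_0 = E[h(w; delta)]
mean_u = sum(u) / len(u)                # should be close to h0
centered = [h0 - hv for hv in h_vals]   # the term h_0 - h(w_i; delta), mean zero by construction
print(round(mean_u, 3), round(h0, 3))
```

The sample mean of u is close to \(h_{0}\), which is why the intercept of the instrument model absorbs \(h_{0}\) while the centered term \(h_{0}-h(w_{i};\delta )\) moves into the residual \(\xi _{i}\).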

3 Simulation

In this section, we conduct some Monte Carlo experiments to investigate the finite sample performance of the II estimator. Furthermore, we are also interested in knowing whether the proposed estimator can correctly identify the direction of the skewness from the data. In this regard, we consider opposite signs of the shape parameter that determines the skewness in the data generating process (DGP) in our experiments.

We consider the following specification of our DGP:

where

In order to allow exogenous determinants in the inefficiency u, we specify \(\sigma _{u,i}\) as \(\sigma _{u,i}=\exp \left( \delta _{0}+\delta _{1}w_{i}\right) .\) The true parameters are set as

and

The exogenous variables are randomly drawn as \(x\overset{i.i.d.}{\sim }U\left( 0,3\right) \) and \(w\overset{i.i.d.}{\sim }N\left( 0,1\right) .\) For the skew-normal random variable v, we consider three different specifications. In the first two specifications, v has the constant shape parameter \(\lambda \), where \(\lambda =3\) in Case 1 and \(\lambda =-3\) in Case 2. Empirically, we do not know the direction of the skewness of v, which crucially depends on the sign of \(\lambda .\) Thus, we consider these two opposite cases in our simulation to explore how our approach performs and whether it can correctly identify the skewness of v when the shape parameter has opposite signs. In Case 3, we allow the shape parameter \(\lambda \) to be heteroscedastic and parameterize it as

where

We assume that the exogenous determinant variable z in the shape parameter follows a normal distribution, \(z_{i}\overset{i.i.d.}{\sim }N\left( 0,1\right) .\) Under this setting, the shape parameter \(\lambda _{i}\) can be either positive or non-positive, and the sample mean of \({\lambda }_{i}\) in the generated random sample is about \(-3\).

Finally, we consider Case 4, where \(\lambda _{1} =0\) and \(\lambda _{0} =0\), i.e., \(\lambda _{i} =0\) for all i. The rest of the setting of the DGP in Case 4 is the same as in the previous case. If \(\lambda _{i} =0\), then \(v_{i}\) follows a normal distribution \(\text {N}\left( 0, \sigma _{v}^{2}\right) \). The normal-half normal specification has been used widely in the empirical application of the stochastic frontier model. Thus, we are also interested in comparing the performances of the II estimator and the ML estimator.

For the II estimation, the total number of Monte Carlo replications in all simulations is 1000. Within each estimation, we set the number of simulated samples M to \(M=\{10,\) \(100\}\) and investigate the efficiency gain as M increases.

Table 1 summarizes the simulation results for Cases 1 and 2 under four settings, labeled Case 1A, Case 1B, Case 2A and Case 2B. In Case 1, we have a positive value of \(\lambda \), so that v has positive skewness. In Case 2, \(\lambda \) is negative, and thus v has negative skewness. The letter ‘A’ denotes \(M=10\) and the letter ‘B’ denotes \(M=100.\) Columns 2 to 4 give the biases when the sample sizes are 250, 500 and 1000. Columns 5 to 7 give the root mean squared errors (RMSEs). As shown in the table, all biases and RMSEs are small in magnitude. Most of the biases, as well as the RMSEs, decrease slightly as we increase the sample size from 250 to 1000. Moreover, comparing Cases 1A (2A) and 1B (2B), we find that the RMSEs decrease slightly for most of the parameters as we increase M from 10 to 100. For the negative skewness cases (2A and 2B), the biases of \(\lambda \) are slightly larger than those in the positive skewness cases (1A and 1B). This could be due to the direction of the skewness. For example, consider three random noises \(v_{1},\) \(v_{2}\) and \(v_{3},\) where \(v_{1}\) has positive skewness, \(v_{2}\) has zero skewness and \(v_{3}\) has negative skewness. It is clear that the skewness of the composite errors satisfies the relations \(SK(v_{1}-u)>SK\left( v_{2}-u\right)>SK\left( v_{3}-u\right) \) and \(0>SK\left( v_{2}-u\right)>SK\left( v_{3}-u\right) .\) We conjecture that the bias of \(\lambda \) is slightly larger when v and \(-u\) are skewed in the same direction. However, the bias decreases quickly as we increase the sample size.

In Case 3, we let the shape parameter be heteroscedastic and depend on an exogenous variable z. The simulation results are summarized in the upper panel of Table 2. As shown in the table, the pattern of the biases and RMSEs is quite similar to what we found in Table 1. In Case 4, we compare the biases and RMSEs of the ML estimator and the II estimators with \(M =10\) and \(M=100\). The results are summarized in the lower panel of Table 2. As shown in the table, the bias of the II estimator when \(M=10\) is slightly larger than that of the ML estimator. However, as we increase the number of replications to 100, the bias of the II estimator decreases markedly and becomes quite similar to that of the ML estimator. Furthermore, the RMSEs of the ML estimator and of the II estimators with \(M=10\) and \(M=100\) are quite similar. There is no clear evidence that the ML estimator is better in terms of RMSE. The simulation results in Tables 1 and 2 are in line with the consistency of our proposed estimator. Based on these results, we conclude that our estimator performs quite well in finite samples.

Below, we use an artificial random sample of \(N=500\) to compare the predicted inefficiencies and TEs, which are computed using Eq. (16) under 1000 draws. The sample is generated according to the DGP specified in Eq. (22). We specify \(\lambda _{0}=-3.5\) and \(\lambda _{1}=-1\), so that the average ratio of \(\sqrt{\frac{Var\left( v\right) }{Var\left( u\right) }}\) is around \(\frac{0.2275}{0.2586}=0.88.\) The remaining parameters are specified as before. The true distribution of the composite error is heteroscedastic SN and half-normal distribution. This random sample is estimated under three different specifications of the composite error. The three models are labelled as follows:

- M1: heteroscedastic SN noise and half-normal inefficiency
- M2: normal noise and half-normal inefficiency
- M3: homoscedastic SN noise and half-normal inefficiency.

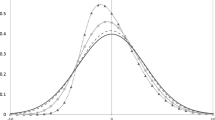

Thus, M1 is the true model. We compare the true values of the inefficiencies and TEs (u and \(e^{-u}\)) and their conditional expectations (\(\mathbb {E} \left( u|\varepsilon \right) \) and \(\mathbb {E}\left( e^{-u}|\varepsilon \right) \)) with their predictions under models M1-M3. The graphs of the true TEs, their expected values, and their predicted values are given in Fig. 1. As shown in the figure, the predicted TEs from M2 seem to be overestimated in the lower tail and underestimated in the upper tail of the distribution of TE. This is because M2 imposes a symmetric distribution on the random noise v, and thus the prediction of TE ignores the asymmetry of v. On the other hand, the predicted TEs from M1 and M3 are more similar to each other because both models assume an SN distribution for v: M1 has a heteroscedastic shape parameter and M3 assumes a homoscedastic shape parameter. The conditional expectation functions are evaluated using the true parameters. In order to compare the predicted inefficiencies and TEs under models M1-M3, we define the absolute values of the biases and prediction errors of inefficiencies and TEs as

and

where \(j=1,2,3\). We summarize the descriptive statistics of the true and predicted values of u, TE, and the absolute biases and absolute prediction errors in Table 3. The means of the true values of u and \(\mathbb {E} (u|\varepsilon )\) are about the same (0.30), but their standard deviations and ranges are different (the ranges of u and \(\mathbb {E}(u|\varepsilon )\) are 1.43 and 1.21, respectively). The means of the predicted values of \(\mathbb {E} (u|\varepsilon )\) for the three models are almost the same as the mean of the true values of \(\mathbb {E}(u|\varepsilon )\). Similarly, the means of the predicted values of \(\mathbb {E}(e^{-u}|\varepsilon )\) from M1-M3 are nearly identical and match the mean of the true values of \(\mathbb {E}(e^{-u}|\varepsilon )\) (all about 0.75). However, the standard deviation of the predicted \(\mathbb {E}(u|\varepsilon )\) from M2 (0.1055) is much smaller than those from M1 and M3 (which are around 0.20 to 0.21). It seems that the predicted \(\mathbb {E}(u|\varepsilon )\) from M2 suffers from under-dispersion. A similar finding appears in the predictions of \(\mathbb {E}(e^{-u}|\varepsilon )\). Furthermore, M2 has absolute biases that are quite different from those of the other two models. Similarly, the means of the absolute prediction errors of \(\mathbb {E}(u|\varepsilon )\) and \(\mathbb {E}(e^{-u}|\varepsilon )\) from M1 and M3 are very similar to each other and different from those of M2. These results show that, although there are some similarities, model M2 (which ignores the skewness in the noise term) differs from models M1 and M3. We find similar results for models M1-M3 in the application with real data.

4 Empirical application

In this section, we empirically demonstrate the estimation approach using real data (an unbalanced panel of 59 US electricity transmission companies for the period 2001–2009). The main objective is to showcase workings of our model instead of a thorough empirical analysis of efficiency of the electricity transmission sector as in Llorca et al. (2016). Instead of searching for the best model in terms of their specifications of inefficiency, our objective is to illustrate the working of the II estimation with SN noise and half-normal inefficiency. Having said that, we want to point out that our approach can be extended to accommodate the more general case where the mean and variance of inefficiency can be functions of exogenous variables that can potentially explain inefficiency. Thus, our model clearly extends the existing SF models by allowing an asymmetric noise term where the asymmetry (the shape parameter of the SN variable) is explained by some exogenous variables. In our application, we use weather variables as determinants of inefficiency via the variance of the half-normal inefficiency term. The shape parameter in the SN distribution of the noise term is allowed to depend on peak load. In fact, one can use other variable(s) as determinants of the shape parameters also.

Since electricity distribution and transmission are services in which outputs are considered exogenous, a cost function or an input distance function is commonly used. Here, we use a cost function approach in which the cost variable (C) is total cost (Totex). Totex is defined as the sum of Opex, which is the total of the operation and maintenance expenses, and Capex, which is the sum of annual depreciation on capital assets and the annual return on the balance of capital. Opex, Capex and Totex are measured in year 2000 US dollars. The output variables are \(Y_{1}\), electricity delivered (del); \(Y_{2}\), total capacity of substations (cap\(\_\)subs); and \(Y_{3}\), network length (pole) measured in miles. The input variables are labor and capital. The price of labor (LPR) is defined as the average annual wage for the electric power transmission and distribution industry by state. Like Totex, this variable is measured in year 2000 US dollars. The producer price index for power transmission is used as a proxy for the capital price (KPR). Since we use a cost function to represent the technology, the dependent variable y is the log of Totex, and the x variables are the logs of input prices and outputs. The inefficiency term u appears with a positive sign in the cost function because inefficiency increases cost. There is no such sign requirement for the noise term. Input prices and Totex are normalized by KPR to impose linear homogeneity (in input prices) on the cost function. Details on these variables can be found in Llorca et al. (2016).

The variables used to explain cost inefficiency are the weather variables, namely annual minimum temperature in degrees Fahrenheit (tmin), the average of the daily mean wind speeds in knots (wind), and the average of the daily precipitation in inches (prcp), together with the Capex/Opex ratio (cor). We also include positive and negative average demand growth (pogrowth and negrowth) as two separate determinants. Peak load demand (peak) is used to explain skewness of the noise term, v.
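To make the roles of these determinants concrete, the following sketch simulates one draw of the composite error of a cost frontier. It assumes a shape parameter that is linear in peak load and an inefficiency scale of the form \(\exp (\delta ^{\prime }w)\), as described later in this section. The zero location of the skew-normal draw, the function names and all numerical values are assumptions made for illustration, not the authors' code or estimates.

```python
import math
import random

def draw_skew_normal(lam, sigma_v, rng):
    """One skew-normal draw (location 0, scale sigma_v, shape lam) via
    v = sigma_v * (d*|U0| + sqrt(1 - d^2)*U1), with d = lam / sqrt(1 + lam^2)."""
    d = lam / math.sqrt(1.0 + lam * lam)
    u0 = abs(rng.gauss(0.0, 1.0))
    u1 = rng.gauss(0.0, 1.0)
    return sigma_v * (d * u0 + math.sqrt(1.0 - d * d) * u1)

def draw_half_normal(sigma_u, rng):
    """One half-normal draw: absolute value of N(0, sigma_u^2)."""
    return abs(rng.gauss(0.0, sigma_u))

def composite_error(peak, w, lam0, lam1, sigma_v, delta, rng):
    """Return (v + u, v, u) for a cost frontier, where inefficiency adds to cost."""
    lam = lam0 + lam1 * peak                                   # shape depends on peak load
    sigma_u = math.exp(sum(d * x for d, x in zip(delta, w)))   # sigma_u = exp(delta'w)
    v = draw_skew_normal(lam, sigma_v, rng)
    u = draw_half_normal(sigma_u, rng)
    return v + u, v, u

rng = random.Random(0)
# Hypothetical determinants and parameters.
eps, v, u = composite_error(peak=2.0, w=[1.0, 0.3], lam0=0.5, lam1=0.1,
                            sigma_v=0.2, delta=[-1.5, 0.4], rng=rng)
```

Setting lam0 = lam1 = 0 in this sketch gives symmetric normal noise, and lam1 = 0 gives a constant shape parameter.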

Finally, we control for regional variation in cost by including the following regional dummies in the cost function: serc - SERC Reliability Corporation, spp - Southwest Power Pool, wecc - Western Electricity Coordinating Council, npcc - Northeast Power Coordinating Council, rfc - ReliabilityFirst Corporation, mro - Midwest Reliability Organization, and ercot - Electric Reliability Council of Texas. Some of the variables are constructed from the original variables. For instance, the variable tmincor is defined as the product of tmin (temperature) and cor (the ratio of Capex to Opex). All the variables used in the translog cost function and in the skewness and inefficiency functions, as well as the regional dummies, are defined in Table 4, which also reports their means and standard deviations.

The model we have discussed so far is for cross-sectional data. However, our data set is an unbalanced panel of 59 US electricity transmission companies for the period 2001–2009, giving us a total of 402 observations. Since this is the first paper to use SN noise with determinants of the shape parameter while estimating the parameters of the stochastic frontier model by the II approach, we have not used company effects and other possible panel extensions in the present model. Instead, we use a pooled cross-sectional model with a time trend as a covariate and as a determinant of inefficiency (see Footnote 5). Using panel notation, the translog cost function, which we estimate, is written as

where \(Y_{m}, m=1,2,3,\) are outputs, \(D_{d}\) are regional dummies, and t is the time trend. Cost (C) and input price (W) are normalized by the price of capital. Since there are only two inputs, we have one normalized input price (labor), which is W. The shape parameter \(\lambda \) is a function of \(z_{it}\) variables. Here, \(z_{it}\) includes only peak. The vector \(w_{it}\) contains t, tmin, wind, prcp, tmin\(\times \)cor, wind\(\times \)cor, prcp\(\times \)cor, pogrowth and negrowth, which are used as determinants of inefficiency modeled in terms of \(\sigma ^{2}_{u}\). That is, we model inefficiency via \(\sigma _{u,it} =\exp (\delta ^{\prime }w_{it})\), where \(u_{it} \sim N^{+}(0, \sigma ^{2}_{u,it})\). We include neutral technical change by including the time trend variable (t) in the cost function. We also include t in \(w_{it}\) to allow inefficiency to change over time in a systematic manner.
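The display equation is not reproduced in this version of the text. A translog specification consistent with the description above might take the following form. This is only a sketch: the coefficient symbols \(\beta \) and \(\gamma \), the linear form of \(\lambda _{it}\) (suggested by the hypotheses on \(\lambda _{0}\) and \(\lambda _{1}\) tested below) and the exact set of included terms are assumptions.

\[
\begin{aligned}
\ln C_{it} ={}& \beta _{0} + \sum _{m=1}^{3}\beta _{m}\ln Y_{m,it} + \beta _{w}\ln W_{it} + \tfrac{1}{2}\sum _{m=1}^{3}\sum _{n=1}^{3}\beta _{mn}\ln Y_{m,it}\ln Y_{n,it} + \tfrac{1}{2}\beta _{ww}(\ln W_{it})^{2} \\
& + \sum _{m=1}^{3}\beta _{mw}\ln Y_{m,it}\ln W_{it} + \beta _{t}\,t + \sum _{d}\gamma _{d}D_{d,it} + v_{it} + u_{it},
\end{aligned}
\]

with \(\beta _{mn}=\beta _{nm}\), \(v_{it}\) skew normal with shape parameter \(\lambda _{it}=\lambda _{0}+\lambda _{1}\,peak_{it}\), and \(u_{it} \sim N^{+}(0, \sigma ^{2}_{u,it})\) with \(\sigma _{u,it}=\exp (\delta ^{\prime }w_{it})\). In this form t enters only linearly, which matches the neutral technical change assumed in the text.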

Since the model with asymmetric noise is new, we test its specification against simpler alternatives. We estimate three models, viz., M1-M3 mentioned in Sect. 3, and test the appropriateness of each of them for the data used. Because models M2 and M3 are special cases of M1, we first select between models M1 and M2 using the Wald test. That is, we test the joint hypothesis \(H_{0}:\lambda _{0}=0\) and \(\lambda _{1}=0,\) which is equivalent to testing \(\lambda _{i}=0\) for all i, because the skew-normal distribution with a zero shape parameter reduces to the normal distribution. Using the bootstrap standard errors from model M1, we compute the Wald statistic, which follows a \(\chi ^{2}(2)\) distribution under the null and takes a value of 12,880. Based on this result, we reject model M2 at the 1% level of significance. Similarly, a test between models M2 and M3 is performed using the null hypothesis \(H_{0}:\lambda _{0}=0.\) Since the shape parameter \(\lambda \) (from Table 5) is significant at the 5% level, we reject model M2. Finally, selection between models M1 and M3 is made by testing the hypothesis \(H_{0}:\lambda _{1}=0.\) Since the estimated coefficient of peak is 0.0302 with a standard error of 0.0177, the corresponding p-value is 0.087. Therefore, model M3 is rejected at the 10% level of significance, but not at the 5% level. Based on these tests, we conclude that model M1 is supported by the data. That is, we find evidence of asymmetric noise (v) even after controlling for the inefficiency term (u), which is always asymmetric. In a standard SF model (model M2), the composite error term (\(v+u\)) is skewed and the skewness of the composite error term is viewed as an indicator of inefficiency. This assertion is wrong if the noise term is skewed. Models M2 and M3 show how one can test for skewed v and still identify inefficiency.
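The arithmetic behind these tests can be checked with a short sketch. It assumes a standard normal reference distribution for the single-coefficient test and uses the closed-form survival function \(\exp (-x/2)\) of the \(\chi ^{2}(2)\) distribution; the function names are illustrative.

```python
import math

def two_sided_p_from_z(z):
    """Two-sided p-value under a standard normal reference distribution."""
    return math.erfc(abs(z) / math.sqrt(2.0))

def chi2_df2_sf(x):
    """Survival function of a chi-square variable with 2 degrees of freedom."""
    return math.exp(-x / 2.0)

# H0: lambda_1 = 0, using the estimate and standard error reported in the text.
est, se = 0.0302, 0.0177
z = est / se
p_lambda1 = two_sided_p_from_z(z)

# 1% critical value of chi-square(2), obtained by inverting exp(-x/2) = 0.01.
crit_1pct = -2.0 * math.log(0.01)

# p-value of the joint Wald test for the statistic reported in the text.
p_joint = chi2_df2_sf(12880.0)
```

The ratio of the estimate to its standard error is about 1.71, which gives a two-sided p-value of roughly 0.088, in line with the reported 0.087 up to rounding of the inputs. The 1% critical value of \(\chi ^{2}(2)\) is about 9.21, far below the reported Wald statistic.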

We report the parameter estimates of M1-M3 in Table 5. Given that the parameters of the translog cost function have no direct interpretation, we compute the cost elasticity with respect to each output (\(\partial \ln C/\partial \ln Y_{m}, m=1,2,3\)) and the elasticity with respect to the input price. These are reported in Table 6. The mean values of the elasticities with respect to \(Y_{1} - Y_{3}\) (del, cap_subs and pole) are 0.2127, 0.9039 and 1.0307, respectively, in model M1. For models M2 and M3, these elasticities are very different: 0.009, 0.5346 and 0.1093 in M2, and 0.0500, 0.4606 and 0.1012 in M3. The elasticity for each output measures the percentage change in cost for a one percent change in that output, and it is supposed to be non-negative. It can be seen from Table 6 that the estimated elasticities from M2 and M3 are negative for some observations although their mean values are positive. For example, the elasticity of del from M2 is negative for 169 of the 402 observations. In contrast, all the estimated elasticities from M1 are positive. Similarly, the input price elasticity of labor from M1 is positive for all observations (as should be the case since an increase in input prices increases cost, ceteris paribus), whereas in M2 there are some negative values. These results also support model M1, in addition to the support it receives from the statistical tests discussed above.
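As an illustration of how such observation-specific elasticities are obtained, the sketch below differentiates a generic translog cost function with symmetric second-order output coefficients. The coefficient names and all numerical values are hypothetical and are not the estimates in Table 5.

```python
def output_cost_elasticity(m, ln_y, ln_w, beta, beta_yy, beta_yw):
    """d ln C / d ln Y_m = beta_m + sum_n beta_mn * ln Y_n + beta_mw * ln W,
    assuming the quadratic output terms enter with a factor 1/2 and beta_mn = beta_nm."""
    second_order = sum(beta_yy[m][n] * ln_y[n] for n in range(len(ln_y)))
    return beta[m] + second_order + beta_yw[m] * ln_w

def share_negative(values):
    """Fraction of observations with a negative elasticity."""
    return sum(1 for v in values if v < 0) / len(values)

# Hypothetical coefficients for three outputs and one normalized input price.
beta = [0.2, 0.5, 0.3]
beta_yy = [[0.10, 0.00, 0.00],
           [0.00, 0.05, 0.00],
           [0.00, 0.00, 0.02]]
beta_yw = [0.05, 0.00, -0.01]

# Hypothetical observations: (ln Y_1, ln Y_2, ln Y_3) and ln W.
observations = [([1.0, 2.0, 3.0], 2.0), ([-4.0, 1.0, 0.5], -1.0)]
elasticities_y1 = [output_cost_elasticity(0, ln_y, ln_w, beta, beta_yy, beta_yw)
                   for ln_y, ln_w in observations]
negative_share = share_negative(elasticities_y1)
```

Because the elasticity varies with the data, its mean can be positive even when it is negative for a sizeable share of observations, which is the pattern reported for M2 and M3.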

The estimate of technical change (\(TC=\partial \ln C /\partial t\)), which is the coefficient of t, is found to be positive but insignificant in all three models. A positive (negative) value of TC indicates technical regress (progress), that is, an increase (decrease) in cost over time, holding everything else unchanged. TC in all three models is assumed to be neutral.

We predict technical (in)efficiency using the formula in (16), which gives predicted values of both inefficiency (\(u_{it}\)) and TE (\(\exp (-u_{it})\)) for each company in every year in which it is observed. It is worth mentioning that one should use \(v=\varepsilon -u^r\) in (16) since we have a cost frontier model. The inefficiencies and TEs are predicted by Eq. (16) based on 1000 draws. The mean values of inefficiency and TE in M1 are 0.1070 and 0.9032, meaning that the costs of the transmission companies are, on average, 10.7% higher because of inefficiency. Alternatively, their TE is, on average, 90.32%. However, the mean inefficiency and TE from M2 and M3 are very different. These models predict that TE is, on average, only 65%, and their mean inefficiencies are 48.45% and 50.19%. These numbers are too high to be believable. Since models M2 and M3 are rejected and model M1 is supported by the data, we argue that the results from M1 are to be trusted more than those from the other two models.

To get a closer look at the predicted inefficiency and TE, instead of their means, we report their density plots in Fig. 2. In model M1 most of the companies are more than 88% cost efficient (less than 10% inefficient). The spreads of cost inefficiency in M2 and M3 are much larger, resulting in estimated cost efficiency varying from less than 2% to almost 100% in model M2 and from 0.25% to 90% in model M3. Companies with very low cost efficiency in M2 and M3 drive the mean efficiency down, compared to M1. Model M1 shows that more than 50% of the companies are more than 90% efficient.

Since we include t as a determinant of inefficiency, we calculate technical efficiency change from \(\Delta TE_{it} = - \partial (u_{it})/\partial t \approx - \partial (\mathbb {E}u_{it})/\partial t = -\sqrt{2/\pi }\,\delta _{t} \times \exp ({\delta ^{\prime }} w_{it})\). Note that here we replace \(u_{it}\) by \(\mathbb {E} u_{it}\) and then use the estimated values of the parameters to compute \(\Delta TE_{it}\). Recall that \(\mathbb {E} u_{it}= \sqrt{2/\pi }\, \hat{\sigma }_{u,it} = \sqrt{2/\pi } \exp (\hat{\delta }^{\prime } w_{it})\). Thus, the sign of \(\delta _{t}\) indicates whether efficiency is increasing or decreasing over time: \(\Delta TE_{it}\) has the opposite sign to \(\delta _{t}\). In models M1 and M2, \(\hat{\delta }_{t}\) is positive, indicating decreasing efficiency over time. In M3, \(\hat{\delta }_{t}\) is negative, implying increasing cost efficiency. However, \(\hat{\delta }_{t}\) is statistically insignificant in all three models.
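The calculation can be sketched as follows. Differentiating \(\mathbb {E} u_{it}=\sqrt{2/\pi }\exp (\delta ^{\prime }w_{it})\) with respect to t gives \(\delta _{t}\,\mathbb {E} u_{it}\), so \(-\partial (\mathbb {E}u_{it})/\partial t=-\delta _{t}\,\mathbb {E} u_{it}\), and a positive \(\delta _{t}\) implies falling efficiency. The parameter values below are hypothetical and the function names are illustrative.

```python
import math

def expected_inefficiency(delta, w):
    """E[u] = sqrt(2/pi) * exp(delta'w) for half-normal u with sigma_u = exp(delta'w)."""
    sigma_u = math.exp(sum(d * x for d, x in zip(delta, w)))
    return math.sqrt(2.0 / math.pi) * sigma_u

def efficiency_change(delta, w, t_index):
    """-dE[u]/dt = -delta_t * E[u], where w[t_index] is the time trend."""
    return -delta[t_index] * expected_inefficiency(delta, w)

# Hypothetical values: w = (t, one other determinant); delta_t > 0.
delta = [0.02, -0.5]
w = [3.0, 1.0]
delta_te = efficiency_change(delta, w, t_index=0)

# Finite-difference check of the analytic derivative.
h = 1e-6
numeric = -(expected_inefficiency(delta, [w[0] + h, w[1]])
            - expected_inefficiency(delta, [w[0] - h, w[1]])) / (2.0 * h)
```

In this sketch a positive coefficient on t yields a negative delta_te, that is, decreasing efficiency over time.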

5 Conclusion

This paper makes three contributions to the stochastic frontier (SF) literature. First, the noise term is assumed to be asymmetric (skew normal) while the inefficiency term is assumed to be half-normal. This formulation avoids the criticism that skewness of the composite error term (sum of the noise and inefficiency) cannot be an indicator of inefficiency because skewness can arise from the noise term. Also, skewness in this model can be either positive or negative. Second, instead of using the standard ML method, we use the indirect inference (II) approach to estimate the parameters of the SF model, the parameters associated with the skewness function of the noise term, and the determinants of inefficiency modeled via the variance of the half-normal inefficiency term. We tested this model against two special cases: (i) the noise term is symmetric and (ii) the skewness parameter of the noise term is constant. Third, we generalize the SN and half-normal model even further by making the shape parameter of the SN distribution a function of exogenous variables. We also add determinants of inefficiency by making the variance of the HN distribution heteroscedastic. We provide simulation results using the II estimation approach to show the small-sample performance of our model. Finally, we use a stochastic cost frontier model to showcase the workings of our model using an unbalanced panel of 59 US electricity transmission companies observed for the period 2001–2009. Test results show that our model M1, with heterogeneity in inefficiency and in the skewness parameter, is favored by the data over the two simpler models that are special cases of it.

One caveat that we plan to address in the future is that our theoretical model is cross-sectional whereas the application uses panel data. We used a pooled model without fixed or random effects to control for company-specific heterogeneity. Another future extension of the panel model would be to accommodate persistent inefficiency along with transient inefficiency, with or without determinants.

Notes

For a translog production function, it contains the linear, squared and cross-product terms of the log inputs.

The model can be extended to relax this assumption.

Note that the model in (1) is log-linear but one can specify a nonlinear function that can be approximated by a linear function.

We plan to include panel features such as the fixed/random effects in our model while estimating it using the II approach in a separate paper.

References

Adcock CJ (2010) Asset pricing and portfolio selection based on the multivariate extended skew-Student-t distribution. Ann Oper Res 176:221–234

Badunenko O, Henderson DJ (2021) Production Analysis with Asymmetric Noise. MPRA_paper_110888.pdf

Busch C, Domeij D, Guvenen F, Madera R (2020) Skewed idiosyncratic income risk over the business cycle: sources and insurance. Am Econ J Macroecon 14(2):207–42

Coelli T (1995) Estimators and hypothesis tests for a stochastic frontier function: a monte carlo analysis. J Prod Anal 6:247–268

Gourieroux C, Monfort A, Renault E (1993) Indirect inference. J Appl Economet 8(S1):S85–S118

Hafner CM, Manner H, Simar L (2018) The “wrong skewness” problem in stochastic frontier models: a new approach. Economet Rev 37(4):380–400. https://doi.org/10.1080/07474938.2016.1140284

Kraus A, Litzenberger RH (1976) Skewness preference and the valuation of risk assets. J Financ 31:1085–1100

Lai H-P (2022) Indirect Inference of Stochastic Frontier Models, working paper

Lai H-P, Kumbhakar SC (2018) Panel data stochastic frontier model with determinants of persistent and transient inefficiency. Eur J Oper Res 271(2):746–755

Lai H-P, Kumbhakar SC (2021) Panel stochastic frontier model with endogenous inputs and correlated random components. J Bus Econ Stat, forthcoming

Lai H-P, Tran K (2022) Persistent and transient inefficiency in a spatial autoregressive panel stochastic frontier model. J Product Anal, forthcoming

Llorca M, Orea L, Pollitt MG (2016) Efficiency and environment factors in the US electricity transmission industry. Energy Econ 55:234–246

Qi L, Bravo-Ureta BE, Cabrera VE (2015) From cold to hot: climatic effects and productivity in Wisconsin dairy farms. J Dairy Sci 98:8664–8677

Schmidt P, Lin T-F (1984) Simple tests of alternative specifications in stochastic frontier models. J Econom 24:349–361

Simar L, Wilson PW (2010) Inferences from cross-sectional stochastic frontier models. Economet Rev 29:62–98

Cite this article

Lai, Hp., Kumbhakar, S.C. Indirect inference estimation of stochastic production frontier models with skew-normal noise. Empir Econ 64, 2771–2793 (2023). https://doi.org/10.1007/s00181-023-02412-y

DOI: https://doi.org/10.1007/s00181-023-02412-y