Abstract

In practical engineering applications, random variables may follow multimodal distributions, i.e., their probability density functions have multiple modes; examples include the structural fatigue stress of a steel bridge carrying both highway and railway traffic and the vibratory load of a blade subject to stochastic dynamic excitations. Traditional uncertainty propagation methods mainly treat random variables with unimodal probability density functions and therefore tend to produce large computational errors when multimodal probability density functions are involved. In this paper, an uncertainty propagation method is developed for problems involving multimodal probability density functions. First, the multimodal probability density functions of the input random variables are established using the Gaussian mixture model. Second, the uncertainties of the input random variables are propagated to the response function by integrating the sparse grid numerical integration method and the maximum entropy method. Finally, a convergence mechanism is developed to improve the uncertainty propagation accuracy step by step. Two numerical examples and one engineering application are studied to demonstrate the effectiveness of the proposed method.



1 Introduction

Uncertainties arising from heterogeneous materials, manufacturing imprecision, random loads, and so on are widespread in practical engineering problems (Melchers 1999; Schuöllera and Jensen 2008; Stefanou 2009; Papadrakakis et al. 2010; Pranesh and Ghosh 2018). They can significantly affect the performance of an engineering system, such as its reliability, safety, and robustness (Du and Chen 2002; Youn et al. 2004; Doltsinis and Kang 2004; Keshavarzzadeh et al. 2017; Zhang et al. 2018). Therefore, quantifying and analyzing the effects of input uncertainties on system performance, namely, uncertainty propagation, has become critical in practical engineering design.

Generally, the goal of uncertainty propagation (MaiTre et al. 2004; Lee and Chen 2009; Ballaben et al. 2017) is to calculate the statistical moments or the probability distribution of the system response Y = g(X), given the distribution information of the input random variables X = [X1, X2, ..., Xn]T. Significant progress has been made in uncertainty propagation, and existing methods can be roughly sorted into three categories. (1) Sampling-based methods, such as direct Monte Carlo simulation (MCS) (Augusti et al. 1984; Amirinia et al. 2017; Pan and Dias 2017), adaptive sampling (Mori and Kato 2003), and importance sampling (Bucher 1988). These methods propagate uncertainty using a large number of samples and are generally considered capable of high accuracy. (2) Most probable point (MPP)-based methods, such as the first-order reliability method (FORM) (Rackwitz and Flessler 1978; Low and Tang 2007; Keshtegar and Chakraborty 2018) and the second-order reliability method (SORM) (Breitung 1984; Zhang and Du 2010). FORM and SORM approximate the response function by a Taylor series expansion at the point on the limit-state surface with the largest probability density, i.e., the most probable point, from which the probability distribution of the response is calculated with a good balance between accuracy and efficiency. (3) Moment-based methods (Lee and Kwak 2006; Zhao and Ono 2001; Shi et al. 2018). In these methods, the statistical moments of the response function are first calculated by numerical integration, and its probability density function is then approximated from the calculated moments.

However, most existing uncertainty propagation methods assume that the random variables are unimodally distributed; in other words, the probability density function of each random variable has only one mode (local maximum). In practical engineering problems, however, a random variable may follow a multimodal distribution with more than one mode; such a variable is referred to as a multimodal random variable in this paper. For instance, long-term monitoring data of the structural fatigue stress on a steel bridge carrying both highway and railway traffic were shown to follow a bimodal distribution (Ni et al. 2011; Ni et al. 2010). In complex power grids, abrupt local voltage changes were considered to follow a bimodal distribution (Moens et al. 2014). Statistical Weibull plots of experimental data showed that the Knoop microhardness of nanostructured partially stabilized zirconia coatings follows a bimodal distribution (Lima et al. 2002). The axle load spectrum at a road site was found to follow multimodal distributions when various loading situations of passing trucks are considered (Haider et al. 2009; Timm et al. 2005). The vibratory load of a blade subject to stochastic dynamic excitations and the start-up and shutdown stresses of turbine generator rotors were also reported to follow multimodal distributions (He et al. 2016). MPP-based and moment-based methods may produce large errors when multimodal random variables are involved. More specifically, MPP-based methods require the multimodal random variables to be transformed into normally distributed variables, which generally increases the nonlinearity of the response function and hence makes the uncertainty propagation more difficult (Hu and Du 2017).
Existing moment-based methods generally use only low-order moments of the response function, which may fail to capture a multimodal response probability density function. Although sampling-based methods can handle multimodal random variables, they are widely regarded as computationally expensive, which hinders their application to practical engineering problems involving complex simulation models. It is therefore necessary to develop efficient and accurate MPP-based or moment-based uncertainty propagation methods for problems involving multimodal random variables.

Research on uncertainty propagation involving multimodal distributions is still at a preliminary stage. Only a few exploratory studies have been conducted so far, and they suffer from some inherent limitations. An uncertainty propagation method integrating the Laplace method and FORM was proposed by constructing Laplace approximations of random variables with multimodal distributions (He et al. 2016). However, it may produce large uncertainty propagation errors when the probability density function is highly asymmetric around its modes or when the response function is highly nonlinear at the MPP. A mean value saddlepoint approximation method for bimodal distributions was proposed to estimate the structural failure probability more accurately (Hu and Du 2017). Because this method linearizes the response function at the mean values, it may not provide satisfactory accuracy when the response function is highly nonlinear.

In this paper, a new uncertainty propagation method is proposed for problems involving multimodal random variables. The remainder of this paper is organized as follows. In Section 2, the input multimodal random variables are modeled using the Gaussian mixture model. In Section 3, the uncertainties of the input multimodal random variables are propagated to the response function by combining the sparse grid numerical integration method and the maximum entropy method. In Section 4, two numerical examples and an engineering application are investigated to demonstrate the effectiveness of the proposed method. Conclusions are summarized in Section 5.

2 Uncertainty modeling of input multimodal random variables

The uncertainty modeling of input multimodal random variables is the prerequisite of an uncertainty propagation problem. Generally, it involves two aspects: (i) assigning a mathematical structure to appropriately describe the uncertain variable and (ii) determining the numerical values of the parameters of the mathematical structure.

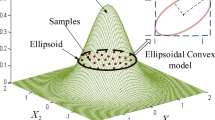

The Gaussian mixture model (GMM) (Rasmussen 2000; Zivkovic 2004) is adopted to model input random variables with multimodal distributions. It has been widely used in applied statistics, e.g., in pattern recognition, classification, and clustering (Figueiredo and Jain 2002; Ban et al. 2018; Khan et al. 2019), to describe complex data sets with multiple subpopulations. The GMM represents the multimodal random variable X by a linear combination of k Gaussian components, with the probability density function (Zivkovic 2004):

\[ f\left(X|\boldsymbol{\uptheta}\right)=\sum \limits_{m=1}^k{\alpha}_m\varphi \left(X|{\boldsymbol{\uptheta}}_m\right) \]

where αm denotes the mixing coefficient of the mth Gaussian component, satisfying αm ≥ 0 and \( \sum \limits_{m=1}^k{\alpha}_m=1 \); φ(X| θm) denotes the Gaussian probability density function of the mth component, with \( {\boldsymbol{\uptheta}}_m=\left({\mu}_m,{\delta}_m^2\right) \) its mean and variance; and k denotes the number of components, which can be determined by minimizing the Akaike information criterion (AIC) (Ni et al. 2011; Ni et al. 2010):

\[ \mathrm{AIC}=-2\ln {L}_f+2{n}_p \]

where Lf denotes the maximized value of the likelihood function of the GMM, and np denotes the number of free parameters in \( \boldsymbol{\uptheta} =\left\{{\alpha}_1,{\mu}_1,{\delta}_1^2,{\alpha}_2,{\mu}_2,{\delta}_2^2,\dots, {\alpha}_k,{\mu}_k,{\delta}_k^2\right\} \), the complete set of distribution parameters. To illustrate, the Gaussian mixture model of a bimodal distribution is presented in Fig. 1; its probability density function is:

\[ f\left(X|\boldsymbol{\uptheta}\right)={\alpha}_1\varphi \left(X|{\mu}_1,{\delta}_1^2\right)+{\alpha}_2\varphi \left(X|{\mu}_2,{\delta}_2^2\right) \]

To determine the Gaussian mixture model of a multimodal random variable, the parameter set θ must be estimated. Maximum likelihood estimation is a common choice, given a set of h independent observed samples x = {x(1), x(2), ..., x(h)}. In addition, a set of h hidden variables γ = {γ(1), γ(2), ..., γ(h)} is introduced to indicate which component produced each sample. Each hidden variable is a binary vector \( {\boldsymbol{\upgamma}}^{(i)}=\left[{\gamma}_1^{(i)},{\gamma}_2^{(i)},...,{\gamma}_k^{(i)}\right] \), where \( {\gamma}_m^{(i)}=1 \) and \( {\gamma}_p^{(i)}=0\left(p\ne m\right) \) if the sample x(i) was produced by the mth component. On this basis, the complete-data log-likelihood function is:

\[ \log f\left(X,\boldsymbol{\upgamma} |\boldsymbol{\uptheta}\right)=\sum \limits_{i=1}^h\sum \limits_{m=1}^k{\gamma}_m^{(i)}\log \left[{\alpha}_m\varphi \left({x}^{(i)}|{\boldsymbol{\uptheta}}_m\right)\right] \]

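The set of parameters θ is estimated by maximizing the log-likelihood function:

\[ \hat{\boldsymbol{\uptheta}}=\arg \underset{\boldsymbol{\uptheta}}{\max}\log f\left(X,\boldsymbol{\upgamma} |\boldsymbol{\uptheta}\right) \]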

The expectation maximization (EM) algorithm (Dempster et al. 1977; Redner and Walker 1984; Balakrishnan et al. 2017) is commonly adopted to iteratively search for a local maximum of logf(X, γ| θ), owing to its reliable convergence, low cost per iteration, and ease of programming. The EM algorithm produces a sequence of estimates \( \left\{\hat{\boldsymbol{\uptheta}}(t),t=0,1,2,...\right\} \) of the parameters θ by alternately applying the following two steps (Figueiredo and Jain 2002):

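E-step: the conditional expectation of the log-likelihood is computed, given the observed samples x and the current estimate \( \hat{\boldsymbol{\uptheta}}(t) \). Since logf(X, γ| θ) is linear in the hidden variables γ, it suffices to calculate the conditional expectation \( \varpi =\hat{E}\left[\boldsymbol{\upgamma} |X,\hat{\boldsymbol{\uptheta}}(t)\right] \), whose elements are denoted \( {\omega}_m^{(i)} \), and substitute it into logf(X, γ| θ). The result is the so-called Q-function:

\[ Q\left(\boldsymbol{\uptheta},\hat{\boldsymbol{\uptheta}}(t)\right)=\sum \limits_{i=1}^h\sum \limits_{m=1}^k{\omega}_m^{(i)}\log \left[{\alpha}_m\varphi \left({x}^{(i)}|{\boldsymbol{\uptheta}}_m\right)\right] \]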

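Since the elements of γ are binary, their conditional expectations are given by:

\[ {\omega}_m^{(i)}=\hat{E}\left[{\gamma}_m^{(i)}|{x}^{(i)},\hat{\boldsymbol{\uptheta}}(t)\right]=\Pr \left[{\gamma}_m^{(i)}=1|{x}^{(i)},\hat{\boldsymbol{\uptheta}}(t)\right]=\frac{{\hat{\alpha}}_m(t)\varphi \left({x}^{(i)}|{\hat{\boldsymbol{\uptheta}}}_m(t)\right)}{\sum \limits_{j=1}^k{\hat{\alpha}}_j(t)\varphi \left({x}^{(i)}|{\hat{\boldsymbol{\uptheta}}}_j(t)\right)} \]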

where αm is the a priori probability that \( {\gamma}_m^{(i)}=1 \), while \( {\omega}_m^{(i)} \) is the a posteriori probability that \( {\gamma}_m^{(i)}=1 \) after observing x(i).

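M-step: the parameters are updated by maximizing the expected log-likelihood (the Q-function) established in the E-step, i.e., \( \hat{\boldsymbol{\uptheta}}\left(t+1\right)=\arg {\max}_{\boldsymbol{\uptheta}}Q\left(\boldsymbol{\uptheta},\hat{\boldsymbol{\uptheta}}(t)\right) \). The two steps are repeated until the estimates converge.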
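The E- and M-steps above can be illustrated with a minimal pure-Python sketch for a one-dimensional GMM. It is not the authors' implementation: the initialization (means at evenly spaced order statistics, equal mixing coefficients), the stopping tolerance, the variance floor, and the parameter count np = 3k − 1 used in the AIC are assumptions of this sketch, and all function names are hypothetical. The optional `counts` argument weights each value by its frequency, which is one way to fit histogram-type (value, frequency) data. The M-step uses the standard closed-form updates for Gaussian components.

```python
import math


def normal_pdf(x, mu, var):
    """Gaussian density phi(x | mu, var)."""
    return math.exp(-0.5 * (x - mu) ** 2 / var) / math.sqrt(2.0 * math.pi * var)


def gmm_pdf(x, alphas, mus, variances):
    """Mixture density f(x) = sum_m alpha_m * phi(x | mu_m, var_m)."""
    return sum(a * normal_pdf(x, m, v) for a, m, v in zip(alphas, mus, variances))


def log_likelihood(xs, counts, alphas, mus, variances):
    """Frequency-weighted log-likelihood of the data under the mixture."""
    return sum(c * math.log(gmm_pdf(x, alphas, mus, variances)) for x, c in zip(xs, counts))


def fit_gmm_em(xs, k, counts=None, n_iter=500, tol=1e-10, var_floor=1e-9):
    """Fit a k-component 1-D GMM by EM; `counts` holds optional frequencies of each value."""
    counts = list(counts) if counts is not None else [1.0] * len(xs)
    total = float(sum(counts))
    # Assumed initialisation: means at evenly spaced (unweighted) order statistics,
    # equal mixing coefficients, and the overall weighted variance for every component.
    ordered = sorted(xs)
    mus = [ordered[int((j + 0.5) * len(ordered) / k)] for j in range(k)]
    mean = sum(c * x for c, x in zip(counts, xs)) / total
    variances = [sum(c * (x - mean) ** 2 for c, x in zip(counts, xs)) / total] * k
    alphas = [1.0 / k] * k
    prev = -math.inf
    for _ in range(n_iter):
        # E-step: posterior probabilities omega_m^(i) that sample i came from component m.
        resp = []
        for x in xs:
            parts = [a * normal_pdf(x, m, v) for a, m, v in zip(alphas, mus, variances)]
            s = sum(parts)
            resp.append([p / s for p in parts])
        # M-step: closed-form maximisers of the Q-function for Gaussian components.
        for j in range(k):
            nj = sum(c * r[j] for c, r in zip(counts, resp))
            alphas[j] = nj / total
            mus[j] = sum(c * r[j] * x for c, r, x in zip(counts, resp, xs)) / nj
            sq = sum(c * r[j] * (x - mus[j]) ** 2 for c, r, x in zip(counts, resp, xs))
            variances[j] = max(var_floor, sq / nj)
        ll = log_likelihood(xs, counts, alphas, mus, variances)
        if abs(ll - prev) <= tol * abs(ll):
            break
        prev = ll
    return alphas, mus, variances, ll


def aic(log_lik, k):
    """AIC = 2 n_p - 2 ln L_f, assuming n_p = 3k - 1 free parameters (weights sum to 1)."""
    return 2.0 * (3 * k - 1) - 2.0 * log_lik


def select_k_by_aic(xs, k_max, counts=None):
    """Return the component count in 1..k_max with the smallest AIC."""
    scores = {k: aic(fit_gmm_em(xs, k, counts)[3], k) for k in range(1, k_max + 1)}
    return min(scores, key=scores.get)
```

As with any EM fit, the result is a local maximum and can depend on the initialization, so in practice several starts may be compared.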

3 Propagating uncertainties to the response function

In this section, the uncertainties of the input multimodal random variables are propagated to the response function with a combination of the sparse grid numerical integration method and maximum entropy method. Furthermore, a convergence mechanism is developed to guarantee the uncertainty propagation accuracy.

3.1 Calculating the raw moments of the response function

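Based on the probability density functions obtained for the input random variables, the raw moments of the response function Y = g(X) are calculated as follows:

\[ {m}_l=\hat{E}\left[{Y}^l\right]={\int}_{{\mathbb{R}}^N}{\left[g\left(\mathbf{X}\right)\right]}^l{f}_{\mathbf{X}}\left(\mathbf{X}\right)\mathrm{d}\mathbf{X},\kern1em l=1,2,\dots \tag{9} \]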

where ml denotes the lth-order raw moment of Y, X = (X1, X2, ⋯, XN) denotes the vector of N independent multimodal random variables, fX(X) = f1(X1)f2(X2)⋯fN(XN) denotes the joint multimodal probability density function of X, ℝN denotes the N-dimensional integration domain, and \( \hat{E} \) is the expectation operator. In general, the multi-dimensional integral in (9) cannot be calculated economically by direct numerical integration because of its high dimensionality and complicated integration domain.
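When g is the identity and X is a single GMM variable, (9) reduces to the raw moments of the mixture, which follow exactly from the Gaussian recurrence M_l = μ M_{l−1} + (l − 1)σ² M_{l−2}. The sketch below (function names are illustrative) can serve as a check on numerical moment estimates; moment-based quadrature rules also build their one-dimensional points and weights from input moments of this kind.

```python
def gaussian_raw_moments(mu, sigma, l_max):
    """E[X^l] for X ~ N(mu, sigma^2), l = 0..l_max, via M_l = mu*M_{l-1} + (l-1)*sigma^2*M_{l-2}."""
    m = [1.0, mu]
    for l in range(2, l_max + 1):
        m.append(mu * m[l - 1] + (l - 1) * sigma ** 2 * m[l - 2])
    return m[: l_max + 1]


def gmm_raw_moments(alphas, mus, sigmas, l_max):
    """Raw moments of a 1-D Gaussian mixture: the alpha-weighted sum of component moments."""
    out = [0.0] * (l_max + 1)
    for a, mu, s in zip(alphas, mus, sigmas):
        for l, v in enumerate(gaussian_raw_moments(mu, s, l_max)):
            out[l] += a * v
    return out
```

For example, the mixture with α = (0.4, 0.6), μ = (−2, 3), and σ = (0.5, 1) has m1 = 1.0 and m2 = 7.7.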

The sparse grid numerical integration (SGNI) method (Gerstner and Griebel 1998; Xiong et al. 2010; He et al. 2014) is adopted to calculate the raw moments with k-level accuracy, as a weighted sum of response function values at a set of multidimensional sampling points. First, the one-dimensional quadrature points and weights (\( {U}_1^i=\left\{{\xi}_{1,1}^i,{\xi}_{1,2}^i,...,{\xi}_{1,s}^i\right\} \) and \( {\omega}_1^i=\left\{{\omega}_{1,1}^i,{\omega}_{1,2}^i,...,{\omega}_{1,s}^i\right\} \)) are obtained for each input random variable through a quadrature rule. To obtain appropriate one-dimensional quadrature points and weights for multimodal random variables, the normalized moment-based quadrature rule (NMBQR) (Echard et al. 2011; Xia et al. 2015) is used. Then, the multidimensional sampling points \( {\mathbf{U}}_N^k \), hereafter called collocation points, are defined by a special tensor product of the one-dimensional quadrature points (Smolyak 1963; Xiu and Hesthaven 2005):

\[ {\mathbf{U}}_N^k=\bigcup \limits_{k+1\le \left|\mathbf{i}\right|\le k+N}\left({U}_1^{i_1}\otimes {U}_1^{i_2}\otimes \cdots \otimes {U}_1^{i_N}\right) \tag{10} \]

where ⊗ denotes the special tensor product, defined as follows: if \( {U}_1^{i_1}=\left\{{\xi}_{1,1}^{i_1},{\xi}_{1,2}^{i_1}\right\} \) and \( {U}_1^{i_2}=\left\{{\xi}_{1,1}^{i_2},{\xi}_{1,2}^{i_2}\right\} \), then \( {U}_1^{i_1}\otimes {U}_1^{i_2}=\left\{{\boldsymbol{\upxi}}_1=\left({\xi}_{1,1}^{i_1},{\xi}_{1,1}^{i_2}\right),{\boldsymbol{\upxi}}_2=\left({\xi}_{1,1}^{i_1},{\xi}_{1,2}^{i_2}\right),{\boldsymbol{\upxi}}_3=\left({\xi}_{1,2}^{i_1},{\xi}_{1,1}^{i_2}\right),{\boldsymbol{\upxi}}_4=\left({\xi}_{1,2}^{i_1},{\xi}_{1,2}^{i_2}\right)\right\} \). |i| = i1 + i2 + ... + iN denotes the sum of the multi-indices {i1, i2, ..., iN}; bounding it excludes collocation points that contribute little to the required integration accuracy. For each collocation point \( {\boldsymbol{\upxi}}_r=\left({\xi}_{1,{j}_1}^{i_1},\dots, {\xi}_{1,{j}_N}^{i_N}\right)\in {\mathbf{U}}_N^k \) generated from the multi-index i, a corresponding weight ωr is assigned:

\[ {\omega}_r={\left(-1\right)}^{k+N-\left|\mathbf{i}\right|}\left(\begin{array}{c}N-1\\ {}k+N-\left|\mathbf{i}\right|\end{array}\right)\prod \limits_{n=1}^N{\omega}_{1,{j}_n}^{i_n} \tag{11} \]

where \( {\omega}_{1,j}^i \) denotes the weight of the one-dimensional quadrature point \( {\xi}_{1,j}^i \), the jth point of \( {U}_1^i \). Based on the collocation points and their weights, the lth raw moment of the response function is calculated as follows:

\[ {m}_l\approx \sum \limits_{r=1}^p{\omega}_r{\left[g\left({\boldsymbol{\upxi}}_r\right)\right]}^l \tag{12} \]

where p is the total number of collocation points, obtained from all combinations of the multi-indices that satisfy k + 1 ≤ |i| ≤ k + N.

The construction of collocation points by the special tensor product is illustrated for a two-dimensional problem in Fig. 2. To achieve 2-level (k = 2) accuracy, the multi-indices must satisfy 3 ≤ i1 + i2 ≤ 4, which gives five combinations: [i1, i2] = [1, 2], [1, 3], [2, 1], [2, 2], and [3, 1]. Since i1 and i2 equal the numbers of one-dimensional points in the first and second dimensions, respectively, these five combinations yield 2 + 3 + 2 + 4 + 3 = 14 collocation points. Because the combinations [1, 3] and [3, 1] share one point, only 13 distinct points are shown in Fig. 2.
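The construction of collocation points, their weights, and the moment estimate (12) can be sketched as follows. As an assumption for illustration, the NMBQR points are replaced by Gauss-Hermite rules for standard normal inputs (1, 2, and 3 points, hard-coded), so only levels up to k = 2 are supported; the combination coefficient (−1)^(k+N−|i|) C(N−1, k+N−|i|) is the standard Smolyak one, and coincident points have their weights summed. With these symmetric rules, the 1-point and 3-point rules share the node 0, so for k = 2 and N = 2 the sketch yields 14 raw and 13 distinct collocation points, the same counts as in the two-dimensional illustration above. Function names are hypothetical.

```python
import itertools
import math
from collections import defaultdict

# Assumed stand-in for NMBQR: Gauss-Hermite rules for a standard normal input,
# keyed by the number of points i as (nodes, weights); exact up to degree 2i - 1.
GAUSS_HERMITE = {
    1: ([0.0], [1.0]),
    2: ([-1.0, 1.0], [0.5, 0.5]),
    3: ([-math.sqrt(3.0), 0.0, math.sqrt(3.0)], [1.0 / 6.0, 2.0 / 3.0, 1.0 / 6.0]),
}


def multi_indices(k, n_dim):
    """All multi-indices i with entries >= 1 and k + 1 <= |i| <= k + N."""
    for i in itertools.product(range(1, k + 2), repeat=n_dim):
        if k + 1 <= sum(i) <= k + n_dim:
            yield i


def sparse_grid(k, n_dim, rules=GAUSS_HERMITE):
    """Smolyak collocation points with merged weights, returned as {point: weight}."""
    grid = defaultdict(float)
    for i in multi_indices(k, n_dim):
        q = k + n_dim - sum(i)
        coeff = (-1) ** q * math.comb(n_dim - 1, q)
        one_d = [list(zip(*rules[ij])) for ij in i]  # (node, weight) pairs per dimension
        for combo in itertools.product(*one_d):
            point = tuple(round(node, 12) for node, _ in combo)  # merge coincident points
            grid[point] += coeff * math.prod(w for _, w in combo)
    return dict(grid)


def raw_moments(g, grid, l_max):
    """m_l ~= sum_r w_r * g(xi_r)**l for l = 1..l_max, as in (12)."""
    return [sum(w * g(xi) ** l for xi, w in grid.items()) for l in range(1, l_max + 1)]
```

Because each g(ξr) is computed once and reused for every power l, the number of response evaluations equals the number of distinct collocation points, independent of the moment order.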

3.2 Calculating the probability density function of the response function

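Based on the first l raw moments obtained above, the probability density function of the response Y is computed using the maximum entropy method (MEM) (Jaynes 1957; Xu et al. 2017), which maximizes its Shannon entropy subject to the given raw moments:

\[ \underset{\rho }{\max}\ S\left(\rho \right)=-\int \rho (y)\ln \rho (y)\,\mathrm{d}y\kern1em \mathrm{s}.\mathrm{t}.\kern1em \int {y}^i\rho (y)\,\mathrm{d}y={m}_i,\kern0.5em i=0,1,\dots, l \tag{13} \]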

where ρ(y) denotes the probability density function of Y, S(ρ) denotes its Shannon entropy, and mi denotes the ith-order raw moment of Y. Commonly used unimodal probability density functions can generally be recovered with satisfactory accuracy directly from (13) using only low-order raw moments. A multimodal probability density function, however, usually requires many more raw moments. Moreover, raw moments of different orders, especially low-order and high-order ones, can differ by many orders of magnitude. This causes numerical difficulties, such as ill-conditioned matrices, when estimating multimodal probability density functions, and can significantly degrade the uncertainty propagation accuracy of the proposed method.

To overcome this numerical difficulty and calculate the multimodal probability density function of Y more accurately, a quasi-normalization transformation \( {Y}^{\prime }=\frac{Y-{\mu}_Y}{c} \) is applied to the moments used in the conventional MEM, where c is a given scaling factor. When c = σY, the standard deviation of Y, the quasi-normalization becomes a standard normalization. Accordingly, the optimization problem in (13) is reformulated as:

\[ \underset{\rho }{\max}\ S\left(\rho \right)=-\int \rho (y)\ln \rho (y)\,\mathrm{d}y\kern1em \mathrm{s}.\mathrm{t}.\kern1em \int {\left(\frac{y-{\mu}_Y}{c}\right)}^i\rho (y)\,\mathrm{d}y={m}_i^{\prime },\kern0.5em i=0,1,\dots, l \tag{14} \]

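where μY denotes the mean of Y, i.e., μY = m1, and \( {m}_i^{\prime } \) is the ith non-standard central moment of Y, which can be calculated from the raw moments mi obtained above:

\[ {m}_i^{\prime }=\frac{1}{c^i}\sum \limits_{j=1}^{i+1}{B}_i^j{\left(-{\mu}_Y\right)}^{j-1}{m}_{i-j+1} \tag{15} \]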

where \( {B}_i^j=\left(\begin{array}{c}i\\ {}j-1\end{array}\right) \) is the binomial coefficient, m0 = ∫ ρ(y)dy = 1 is the 0th raw moment of Y. The detailed derivation process of \( {m}_i^{\prime } \) is presented in the Appendix.
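Conversion (15) can be sketched directly from the binomial expansion of (Y − μY)^i; the function name is illustrative. For high orders, the alternating sum can itself lose floating-point precision through cancellation; computing the transformed moments directly from the collocation values, as Σr ωr[(g(ξr) − μY)/c]^i, is a possible alternative that this sketch does not pursue.

```python
from math import comb


def to_quasi_normalized_moments(raw, c):
    """Convert raw moments [m_0 = 1, m_1, ..., m_l] into m'_i = E[((Y - mu_Y)/c)^i], i = 0..l."""
    mu = raw[1]
    out = []
    for i in range(len(raw)):
        # Binomial expansion of (Y - mu)^i: sum_{j=1}^{i+1} C(i, j-1) (-mu)^(j-1) m_{i-j+1}.
        s = sum(comb(i, j - 1) * (-mu) ** (j - 1) * raw[i - j + 1] for j in range(1, i + 2))
        out.append(s / c ** i)
    return out
```

For a normal variable with μ = 2 and σ = 3, the raw moments [1, 2, 13, 62, 475] become [1, 0, 1, 0, 3] with c = σ, i.e., the moments of a standard normal variable, whose magnitudes are far more uniform.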

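The constrained optimization problem in (14) is converted into an unconstrained one through the Lagrange multiplier method:

\[ L\left(\rho, \boldsymbol{\uplambda} \right)=-\int \rho (y)\ln \rho (y)\,\mathrm{d}y-\left({\lambda}_0-1\right)\left[\int \rho (y)\,\mathrm{d}y-{m}_0^{\prime}\right]-\sum \limits_{k=1}^l{\lambda}_k\left[\int {\left(\frac{y-{\mu}_Y}{c}\right)}^k\rho (y)\,\mathrm{d}y-{m}_k^{\prime}\right] \tag{16} \]

where λk (k = 0, 1, ⋯, l) denote the Lagrange multipliers. From the necessary conditions for a stationary point of (16), the analytical expression of ρ(y) is obtained:

\[ \rho (y)=\exp \left[-\sum \limits_{k=0}^l{\lambda}_k{\left(\frac{y-{\mu}_Y}{c}\right)}^k\right] \tag{17} \]

and λk (k = 0, 1, ⋯, l) are calculated by solving the following system of nonlinear equations:

\[ \int {\left(\frac{y-{\mu}_Y}{c}\right)}^i\exp \left[-\sum \limits_{k=0}^l{\lambda}_k{\left(\frac{y-{\mu}_Y}{c}\right)}^k\right]\mathrm{d}y={m}_i^{\prime },\kern0.5em i=0,1,\dots, l \tag{18} \]

Here, the method of Zellner and Highfield (1988) is employed to calculate λk (k = 0, 1, ⋯, l), in which the equations are solved by the standard Newton method.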
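The Newton solution of the moment equations can be sketched in pure Python. This is an illustrative stand-in, not a reproduction of the Zellner and Highfield (1988) algorithm: it works directly in the normalized variable y′ = (y − μY)/c, truncates the domain to [−8, 8], uses trapezoidal quadrature on a uniform grid, and applies a step-halving (damped) Newton iteration to the convex dual function, whose stationarity conditions are the moment constraints. All of these choices, and the function names, are assumptions. The density of Y then follows as ρ(y) = ρ′((y − μY)/c)/c, and its entropy is S(ρ′) + ln c.

```python
import math


def _solve(a, b):
    """Solve the dense system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for cc in range(col, n + 1):
                m[r][cc] -= f * m[col][cc]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][cc] * x[cc] for cc in range(r + 1, n))) / m[r][r]
    return x


def _exp_neg(s):
    """exp(-s), capped so that trial steps of the line search cannot raise OverflowError."""
    return math.exp(min(-s, 700.0))


def max_entropy_density(moments, lo=-8.0, hi=8.0, n_grid=1601, max_iter=200, tol=1e-9):
    """Fit rho(y') = exp(-sum_k lambda_k y'^k) to [m'_0 = 1, m'_1, ..., m'_l] on [lo, hi]."""
    h = (hi - lo) / (n_grid - 1)
    ys = [lo + j * h for j in range(n_grid)]
    tw = [h * (0.5 if j in (0, n_grid - 1) else 1.0) for j in range(n_grid)]  # trapezoid weights
    n = len(moments)
    powers = [[y ** k for k in range(2 * n - 1)] for y in ys]

    def weighted_density(lam):
        return [w * _exp_neg(sum(lk * p[k] for k, lk in enumerate(lam))) for w, p in zip(tw, powers)]

    def dual(lam):
        # Convex dual D = integral of rho + sum_k lambda_k m'_k; grad D = 0 gives the constraints.
        return sum(weighted_density(lam)) + sum(lk * mk for lk, mk in zip(lam, moments))

    lam = [math.log(hi - lo)] + [0.0] * (n - 1)  # start from the uniform density on [lo, hi]
    for _ in range(max_iter):
        rho_w = weighted_density(lam)
        mu = [sum(r * p[k] for r, p in zip(rho_w, powers)) for k in range(2 * n - 1)]
        grad = [moments[k] - mu[k] for k in range(n)]
        if max(abs(g) for g in grad) < tol:
            break
        hess = [[mu[j + k] for k in range(n)] for j in range(n)]  # Hessian: moments of rho
        step = _solve(hess, [-g for g in grad])
        # Damped Newton: halve the step until the convex dual no longer increases.
        t, d0 = 1.0, dual(lam)
        while True:
            trial = [lk + t * s for lk, s in zip(lam, step)]
            if dual(trial) <= d0 or t < 1e-8:
                break
            t *= 0.5
        lam = trial
    rho = [_exp_neg(sum(lk * y ** k for k, lk in enumerate(lam))) for y in ys]
    return lam, ys, rho


def shannon_entropy(lam, ys, rho):
    """S = -integral(rho ln rho) = integral(rho * sum_k lambda_k y^k), by the trapezoid rule."""
    h = ys[1] - ys[0]
    vals = [r * sum(lk * y ** k for k, lk in enumerate(lam)) for y, r in zip(ys, rho)]
    return h * (sum(vals) - 0.5 * (vals[0] + vals[-1]))
```

For the moments of a standard normal variable, [1, 0, 1], the sketch recovers λ ≈ (ln√(2π), 0, 1/2), i.e., the Gaussian density, as expected for a maximum entropy density under mean and variance constraints.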

3.3 Convergence mechanism

As introduced above, the first l raw moments of the response function are calculated in Section 3.1 and then used to calculate the probability density function of the response in Section 3.2. However, how to choose the order of moments l for a given uncertainty propagation problem remains an important open issue. First, uncertainty propagation problems involving multimodal input random variables are complex, and the response function may have different types of probability density function: if it is multimodal, high-order raw moments are needed; if it is unimodal, low-order moments suffice. Second, even when the response probability density function is multimodal, the order of moments should not simply be made as high as possible, because high-order moments are difficult and time-consuming to calculate. More importantly, small deviations in the calculated moments may cause large errors in the estimated multimodal probability density function.

As more moments are used, the estimated probability density function generally approaches the actual one, and its Shannon entropy converges to a stable value. Therefore, a convergence mechanism proposed in our previous work (Zhang et al. 2019) is used to determine the order of moments, so that the probability density function of the response is estimated with gradually improving accuracy until the required uncertainty propagation accuracy is reached. As shown in Fig. 3, the moment calculation of Section 3.1 and the probability density function estimation of Section 3.2 are performed in a sequence. At the end of each sequence, the Shannon entropy of the estimated probability density function is calculated and compared with that of the previous sequence. If the relative change in Shannon entropy between two sequences is within a given precision, the entropy is considered converged, the probability density function with the required accuracy is obtained, and the current order of moments is taken as the appropriate one for estimating the response probability density function. The detailed procedure is as follows:

- Step 1.

Initialize the order of the moments l = 0 and Shannon entropy E0, and define the convergence precision ε;

- Step 2.

Let l = l + 2;

- Step 3.

Calculate the first l raw moments of the response function mi (i = 1, 2, ⋯, l) using (12);

- Step 4.

Convert the raw moments mi (i = 1, 2, ⋯, l) into non-standard central moments \( {m}_i^{\prime }\ \left(i=1,2,\cdots, l\right) \) using (15);

- Step 5.

Estimate the probability density function ρ(y) of the response function using (14);

- Step 6.

Calculate the Shannon entropy El of the estimated probability density function ρ(y);

- Step 7.

Check the convergence criterion. If \( \left|{E}_l-{E}_{l-2}\right|/\left|{E}_l\right|\le \varepsilon \), go to Step 8; otherwise, return to Step 2;

- Step 8.

Output the obtained order of moments l and probability density function ρ(y).
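Steps 1 to 8 amount to a short loop. In the sketch below (names hypothetical), `entropy_for_order(l)` stands for Steps 3 to 6: in practice it would compute the first l moments by (12), convert them by (15), solve (14), and return the Shannon entropy of the result. The `None` start value replaces the initial E0 of Step 1 (the first iteration simply has nothing to compare against), and the cap `l_max` is an added safeguard not mentioned in the procedure.

```python
def select_moment_order(entropy_for_order, eps, l_max=30):
    """Steps 1-8: raise l in steps of 2 until |E_l - E_{l-2}| / |E_l| <= eps."""
    l, e_prev = 0, None  # Step 1 (None: no previous entropy estimate yet)
    while l + 2 <= l_max:
        l += 2  # Step 2
        e_l = entropy_for_order(l)  # Steps 3-6: moments -> m'_i -> rho(y) -> entropy
        if e_prev is not None and abs(e_l - e_prev) <= eps * abs(e_l):  # Step 7
            return l, e_l  # Step 8
        e_prev = e_l
    raise RuntimeError(f"entropy did not converge up to l = {l_max}")
```

With a toy entropy sequence E_l = 1 + 1/l³ and ε = 10⁻³, the loop stops at l = 10.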

4 Numerical examples

4.1 Mathematical problems

In this section, two mathematical problems with different complexities are considered. For mathematical problem 1:

For mathematical problem 2:

X1, X2, and X3 are independent random variables with multimodal probability density functions (PDFs).

A set of observed data pairs is assumed to be available for each random variable Xi, i = 1, 2, 3, as shown by the histograms in Fig. 4. Each data pair contains a value of X and its frequency of occurrence, and 46 data pairs are provided for each of X1, X2, and X3. The observed data of each variable show two peaks over the range of variation, so the PDFs of X1, X2, and X3 are taken to be bimodal. Accordingly, a Gaussian mixture model with two components is used to construct the bimodal PDF of each random variable, and the estimated parameters are presented in Table 1. The resulting PDFs of X1, X2, and X3 are depicted in Fig. 4 together with the observed data. The constructed PDFs conform well to the observed data; in particular, they capture the bimodal characteristic of the data sets, which indicates the effectiveness of the Gaussian mixture model for constructing multimodal distributions.

Results of mathematical problem 1

The raw moments of the response function Y are calculated by the SGNI method and the MCS method, based on the input bimodal PDFs of X1, X2, and X3. The highest order of calculated raw moments is determined to be 10 by the convergence mechanism, with the convergence precision set to ε = 2 × 10⁻⁴. For MCS, a large number of samples (1 × 10⁶) are generated from the PDFs of X1, X2, and X3, and the response function is evaluated at each sample to obtain the raw moments; the MCS results are therefore used as the reference for evaluating the accuracy of SGNI. Table 2 presents the results obtained by SGNI and MCS. The SGNI results agree with those of MCS to the reported rounding precision, which indicates that SGNI calculates the raw moments of the response function accurately in this example. Furthermore, raw moments of different orders differ widely: for example, m1 is −39.700 while m10 is 3.108 × 10¹⁶, a ratio in magnitude of about 7.8 × 10¹⁴, i.e., nearly 15 orders of magnitude. Such large differences would lead to ill-conditioned matrices in the PDF estimation and significantly degrade its accuracy. Therefore, the raw moments are converted into non-standard central moments in this paper, which alleviates the numerical difficulty and allows the PDF of the response function to be estimated with satisfactory accuracy.
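The MCS reference described above can be sketched as follows: each input is sampled from its GMM by first choosing a component with probability αm and then drawing from that Gaussian, and the raw moments are sample averages of Y^l. The sample size, the seed, and the function names are illustrative; the paper uses 1 × 10⁶ samples.

```python
import random


def sample_gmm(rng, alphas, mus, sigmas):
    """Draw one value: pick component m with probability alpha_m, then sample N(mu_m, sigma_m^2)."""
    m = rng.choices(range(len(alphas)), weights=alphas)[0]
    return rng.gauss(mus[m], sigmas[m])


def mc_raw_moments(g, gmm_params, n_samples, l_max, seed=0):
    """Monte Carlo estimates of m_l = E[g(X)^l], l = 1..l_max, for independent GMM inputs."""
    rng = random.Random(seed)
    sums = [0.0] * l_max
    for _ in range(n_samples):
        y = g([sample_gmm(rng, *p) for p in gmm_params])
        yl = 1.0
        for l in range(l_max):
            yl *= y  # accumulate y, y^2, ..., y^l_max from one response evaluation
            sums[l] += yl
    return [s / n_samples for s in sums]
```

Each sample costs one response evaluation regardless of how many moment orders are requested, which is why MCS cost is counted as the number of samples.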

To demonstrate the effectiveness of the proposed method, the uncertainty propagation results of MCS and the proposed method, namely the probability density function and the cumulative distribution function (CDF) of Y, are presented in Fig. 5. The PDF and CDF obtained by the proposed method are almost identical to those of MCS over the range of Y. For a closer comparison, the CDF values and relative errors of the proposed method are listed in Table 3 for five cases (y = −60, −50, −40, −30, −20). The proposed method obtains accurate CDF results in every case: in four of the five cases the relative error is below 2%, and the largest relative error, 6.936%, occurs at y = −60.
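The CDF comparison in Table 3 can be outlined by integrating an estimated PDF numerically and comparing it against the empirical CDF of MCS samples. The sketch assumes a trapezoidal cumulative integral on the PDF grid and a signed relative error (estimate − reference)/reference × 100%; the exact convention is not stated here, and the function names are illustrative.

```python
def cdf_from_pdf(ys, pdf_values):
    """Cumulative trapezoidal integration: F(y_j) ~= integral of the PDF from ys[0] to y_j."""
    cdf = [0.0]
    for j in range(1, len(ys)):
        cdf.append(cdf[-1] + 0.5 * (ys[j] - ys[j - 1]) * (pdf_values[j] + pdf_values[j - 1]))
    return cdf


def empirical_cdf(samples, y):
    """Fraction of reference samples (e.g., MCS responses) that are <= y."""
    return sum(1 for s in samples if s <= y) / len(samples)


def relative_error_percent(estimate, reference):
    """Signed relative error in percent (assumed convention)."""
    return 100.0 * (estimate - reference) / reference
```

Relative errors of this kind grow quickly in the tails, where the reference CDF value in the denominator is small.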

To illustrate the role of the convergence mechanism in the proposed method, Fig. 6 shows the evolution of the calculated PDF and its Shannon entropy as the order of calculated moments l increases from 2 to 10. Fig. 6a shows that the calculated PDF gradually approaches the reference PDF obtained by MCS, and at l = 10 it is almost consistent with the reference. Fig. 6b shows that the Shannon entropy of the calculated PDF changes from 3.406 to 3.392 as l increases from 2 to 10. Furthermore, the change in Shannon entropy between consecutive steps becomes smaller and smaller, and at l = 10 it falls below the given convergence value. Therefore, with the convergence mechanism, the PDF of the response function is calculated with gradually improving accuracy as the order of moments increases, until the appropriate order of moments for the required uncertainty propagation accuracy is determined.

The number of evaluations of the response function Y is used to measure the computational cost of the proposed method. As discussed above, the response function is evaluated only when calculating the response moments ml by SGNI, so the computational cost of the proposed method is determined by the accuracy level k and the problem dimension N. In this example, k = 6 and N = 3, which requires 410 response function evaluations, whereas MCS evaluates the response function at each of its 1 × 10⁶ samples. The proposed method therefore requires far fewer function evaluations than MCS.

Results of mathematical problem 2

The first 12 raw moments of Y are calculated by SGNI and MCS, as presented in Table 4. Two observations can be made from Table 4. (1) All the moments calculated by SGNI are very close to those of MCS: the relative error with the smallest magnitude is only −4.77 × 10⁻⁴%, and the largest is 1.486%. The high accuracy of the calculated raw moments provides a sound basis for estimating the PDF of Y. (2) Raw moments of different orders differ widely, growing rapidly with the order. Between consecutive moments \( {m}_i \) and \( {m}_{i+1} \), the difference is 1 to 2 orders of magnitude, and the largest difference, between m1 and m12, reaches 19 orders of magnitude. Therefore, the raw moments are converted into non-standard central moments to mitigate the numerical difficulty.

The uncertainty propagation results of MCS and the proposed method, namely the PDF and CDF of Y, are presented in Fig. 7. Fig. 7a shows that the PDF of Y obtained by MCS is bimodal, with modes at approximately y = −40 and y = 0. The PDF obtained by the proposed method agrees well with that of MCS and captures the bimodal shape of the reference PDF. Fig. 7b shows that the CDF of Y obtained by the proposed method is almost identical to that obtained by MCS. The CDF values and relative errors of the proposed method are listed in Table 5 for five cases (y = −80, −50, −30, 0, 30). The relative errors are very small: the largest in magnitude is −4.056% and the smallest is 1.680 × 10⁻²%, which demonstrates the high uncertainty propagation accuracy of the proposed method. For comparison, Table 5 also lists the results of the widely used AK-MCS method (Echard et al. 2011), which combines a Kriging metamodel with Monte Carlo simulation and uses active learning to gradually improve the accuracy of the Kriging model. AK-MCS gives high-precision results for y = −30, 0, and 30; however, at y = −80 and y = −50, its relative errors reach −90.123% and −20.692%, respectively.

In the proposed method, the convergence mechanism is used to gradually improve the uncertainty propagation accuracy. Fig. 8 shows the evolution of the calculated PDF and its Shannon entropy as the order of calculated moments l increases from 2 to 12. Fig. 8a shows that the PDF calculated by the proposed method gradually approaches the reference PDF. When l ≤ 6, only a unimodal PDF is obtained, which deviates greatly from the reference. When 8 ≤ l ≤ 10, a bimodal PDF is obtained, but it still deviates considerably from the reference, especially near the modes. When l = 12, the obtained bimodal PDF is almost identical to that of MCS, indicating a high-precision uncertainty propagation result. Fig. 8b shows that the Shannon entropy of the calculated PDF gradually decreases from 4.546 to 3.392 as l increases from 2 to 12. More importantly, the change in Shannon entropy between consecutive steps becomes smaller and smaller, and at l = 12 it falls below the given convergence value. In other words, the search converges at l = 12, and satisfactory uncertainty propagation accuracy is obtained by the proposed method.

The computational cost of the proposed method is dominated by the number of response function evaluations needed to calculate the moments \( m_l \) using SGNI. For this example, the accuracy level is set to 6 and the dimensionality of the problem is 3, so the proposed method requires 410 response function evaluations. MCS uses 1 × 10⁶ response function evaluations. For AK-MCS, the computational cost is determined by the number of response function evaluations used to build the Kriging metamodel: the response function is first evaluated at 15 sampling points to establish an initial Kriging metamodel, and then at 80 additional sampling points, added one at a time, to update it, giving 95 evaluations in total. Therefore, the proposed method is much more efficient than MCS but less efficient than AK-MCS.
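Since SGNI evaluates the response only at sparse-grid nodes, its cost equals the number of distinct nodes, which grows with the dimensionality d and the accuracy level k. The sketch below builds a Smolyak sparse grid (Smolyak 1963; Gerstner and Griebel 1998) from non-nested Gauss-Hermite rules and uses it to compute raw moments \( m_l = E[g(X)^l] \). It assumes independent normal inputs. The node count depends on this choice of one-dimensional rule and is not claimed to reproduce the 410 evaluations quoted above. Mapping the paper's Gaussian-mixture inputs onto the grid is not shown, and all function names are hypothetical.

```python
import itertools
from math import comb, pi, sqrt

import numpy as np
from numpy.polynomial.hermite_e import hermegauss


def gauss_hermite_normal(n):
    """n-point Gauss-Hermite rule for a standard normal variable (weights sum to 1)."""
    x, w = hermegauss(n)
    return x, w / sqrt(2.0 * pi)


def smolyak_normal(d, k):
    """Smolyak sparse grid of accuracy level k for d independent standard normals.

    Duplicate nodes from different tensor grids are merged by summing their weights.
    The rule is exact for polynomials of total degree <= 2k - 1.
    """
    nodes = {}
    for q in range(max(0, k - d), k):
        coef = (-1) ** (k - 1 - q) * comb(d - 1, k - 1 - q)
        for idx in itertools.product(range(1, q + 2), repeat=d):
            if sum(idx) != d + q:
                continue
            rules = [gauss_hermite_normal(n) for n in idx]
            for combo in itertools.product(*(range(n) for n in idx)):
                x = tuple(round(float(rules[j][0][c]), 12) for j, c in enumerate(combo))
                w = coef * float(np.prod([rules[j][1][c] for j, c in enumerate(combo)]))
                nodes[x] = nodes.get(x, 0.0) + w
    return np.array(list(nodes)), np.array(list(nodes.values()))


def raw_moments_sparse(g, mu, sigma, k, orders):
    """E[g(X)^l] for X_j ~ N(mu_j, sigma_j^2) independent; also returns the evaluation count."""
    mu, sigma = np.asarray(mu, dtype=float), np.asarray(sigma, dtype=float)
    z, w = smolyak_normal(mu.size, k)
    gx = np.array([g(mu + sigma * zi) for zi in z])  # one response evaluation per node
    return [float(np.sum(w * gx ** l)) for l in orders], len(z)
```

All raw moments are obtained from the same set of response values, so raising the moment order l in the convergence mechanism does not add response evaluations; only the accuracy level k and the dimension d do.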

4.2 Speed reducer shaft

As shown in Fig. 9, a speed reducer shaft (Hu and Du 2017) is subjected to a random force P and a random torque T; d and l denote the diameter and length of the shaft, respectively. The response function of the speed reducer shaft is defined as the difference between the strength Sy and the maximum equivalent stress σmax:

where X = (Sy, d, l, P, T) is the vector of random variables. Sy, d, and l follow unimodal normal distributions, while P and T follow multimodal distributions; the detailed distribution information is given in Table 6. The multimodal probability density functions of P and T, shown in Fig. 10, are constructed from a set of observed data using the Gaussian mixture model.
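Fitting a Gaussian mixture model to observed data is usually done with the expectation-maximization (EM) algorithm (Dempster et al. 1977). The sketch below fits a one-dimensional mixture with a user-chosen number of components. The quantile-based initialization, the stopping rule, and the function names are assumptions; how the authors selected the number of components for P and T is not reproduced, and the data in the usage note are synthetic.

```python
import numpy as np


def fit_gmm_1d(data, n_components, n_iter=200, tol=1e-8):
    """Fit a 1D Gaussian mixture by EM; returns (weights, means, variances)."""
    x = np.asarray(data, dtype=float)
    # Initialise means at evenly spaced quantiles, a common variance, equal weights
    means = np.quantile(x, (np.arange(n_components) + 0.5) / n_components)
    variances = np.full(n_components, x.var())
    weights = np.full(n_components, 1.0 / n_components)
    prev_ll = -np.inf
    for _ in range(n_iter):
        # E-step: log of weight_k * N(x | mean_k, var_k) for every sample and component
        log_dens = (np.log(weights) - 0.5 * np.log(2.0 * np.pi * variances)
                    - 0.5 * (x[:, None] - means) ** 2 / variances)
        log_norm = np.logaddexp.reduce(log_dens, axis=1)
        resp = np.exp(log_dens - log_norm[:, None])  # responsibilities
        ll = log_norm.sum()                          # log-likelihood
        # M-step: update weights, means and variances from the responsibilities
        nk = resp.sum(axis=0)
        weights = nk / x.size
        means = (resp * x[:, None]).sum(axis=0) / nk
        variances = (resp * (x[:, None] - means) ** 2).sum(axis=0) / nk
        if ll - prev_ll < tol * abs(ll):
            break
        prev_ll = ll
    return weights, means, variances


def gmm_pdf(y, weights, means, variances):
    """Mixture density sum_k w_k N(y | mean_k, var_k), evaluated elementwise."""
    y = np.asarray(y, dtype=float)[..., None]
    comps = np.exp(-0.5 * (y - means) ** 2 / variances) / np.sqrt(2.0 * np.pi * variances)
    return np.sum(weights * comps, axis=-1)
```

For instance, 10,000 samples drawn half from N(−3, 1) and half from N(4, 0.5²) are recovered by `fit_gmm_1d(data, 2)` as two components with means close to −3 and 4 and weights close to 0.5.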

Fig. 9 A speed reducer shaft (Hu and Du 2017)

The raw moments of the response function are calculated from the distributions of P, T, Sy, d, and l by SGNI and MCS, as listed in Table 7. The highest order of the calculated raw moments is determined as 6 by the convergence mechanism. First, the SGNI results are very close to those of MCS, with a largest relative error of only 0.1091%, indicating that SGNI calculates the raw moments of the response function accurately. Second, raw moments of different orders differ greatly in magnitude. For instance, m1 is 1.210e+02 while m6 is 8.676e+12, a difference of about 10 orders of magnitude. Such large differences would make the matrix in the calculation of the PDF of g ill-conditioned, so the raw moments are transformed into non-standard central moments.
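A common way to remove such scale disparities is to shift and scale the variable before forming moments, using the binomial expansion \( E[((Y-a)/b)^i] = b^{-i}\sum_{j=0}^{i}\binom{i}{j}(-a)^{i-j} m_j \). The sketch below assumes the shift a (for example, the mean) and the scale b are chosen by the user. This form is consistent with the binomial coefficients in the Appendix, but the exact shift and scale behind the paper's non-standard central moments are an assumption here.

```python
from math import comb


def shifted_scaled_moments(raw, a, b):
    """Return E[((Y - a) / b)^i] for i = 0..n from raw moments raw[j] = E[Y^j] (raw[0] = 1)."""
    n = len(raw) - 1
    out = []
    for i in range(n + 1):
        # Binomial expansion of (Y - a)^i, then division by b^i
        s = sum(comb(i, j) * (-a) ** (i - j) * raw[j] for j in range(i + 1))
        out.append(s / b ** i)
    return out


if __name__ == "__main__":
    # Raw moments E[Y^0], ..., E[Y^4] of N(2, 3^2)
    raw = [1.0, 2.0, 13.0, 62.0, 475.0]
    # Shifting by the mean and scaling by the standard deviation gives 1, 0, 1, 0, 3
    print(shifted_scaled_moments(raw, a=2.0, b=3.0))
```

After the transformation the moments are of order one rather than spanning many orders of magnitude, which keeps the linear systems in the maximum entropy step well conditioned.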

The PDFs of g calculated by the proposed method and by MCS are shown in Fig. 11a. First, the PDF of the response function g is unimodal in this example, even though the input random variables P and T follow multimodal distributions. Second, the PDF curve obtained by the proposed method coincides well with that of MCS. The CDFs of g calculated by the proposed method and MCS are shown in Fig. 11b; the CDF of the proposed method agrees very well with that of MCS over the whole range of g. Table 8 lists the values at four points (\( \overline{g}=0,60,120,180 \)). The relative errors of the proposed method remain very low throughout, the largest being only 6.94e−02%, which is negligible in most practical situations. Therefore, the proposed method achieves satisfactory uncertainty propagation accuracy for this example.

The convergence of the calculated PDF and its Shannon entropy is shown in Fig. 12, where the order of the calculated moments increases from 2 to 6. The calculated PDF of the response function gradually approaches the reference result and the change in Shannon entropy gradually decreases. At l = 6, the PDF obtained by the proposed method is almost identical to the reference PDF and the change in Shannon entropy falls below the prescribed threshold. Therefore, the convergence mechanism yields the PDF of the response function with progressively improved accuracy.

The computational cost of the proposed method is quantified by the number of response function evaluations needed to calculate \( m_l \) using SGNI. For this example, the accuracy level is set to 6 and the dimensionality of the problem is 5, so the proposed method requires 2502 response function evaluations. MCS uses 1 × 10⁶ response function evaluations to obtain the response moments.

4.3 Vehicle disc brake system

Consider a vehicle disc brake system (Xia et al. 2015) consisting of a brake disc and a pair of brake pads made of friction material and back plates, as shown in Fig. 13a. To avoid strong vibration and loud noise in harsh working environments, the damping ratio of the vehicle disc brake system is constrained to be less than −0.01. The response function of this problem is therefore defined as:

where p, ω, h1, and h2 are four random variables denoting the brake pressure, friction coefficient, friction material thickness, and disc thickness, respectively. Their detailed distribution information is given in Table 9.

Fig. 13 Vehicle disc brake system: (a) CAD model and (b) FEM model (Xia et al. 2015)

To conduct the uncertainty propagation for this problem, a continuum 3D finite element model with 26,125 elements and 37,043 nodes is established for the vehicle disc brake system, as shown in Fig. 13b. Based on 35 finite element analyses of the system, the following quadratic polynomial response surface approximation model is established:

With this approximation model, the uncertainty propagation can be carried out with much higher computational efficiency than with direct finite element analysis.
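A full quadratic polynomial in four variables (here p, ω, h1, and h2) has 1 + 4 + 10 = 15 coefficients, so 35 finite element runs give an overdetermined least-squares problem. The sketch below assumes an ordinary least-squares fit of all linear, square, and cross terms on scaled inputs. The paper's sampling design, variable scaling, and fitted coefficients are not given here; the test function in the usage note is synthetic, and the function names are hypothetical.

```python
import itertools

import numpy as np


def quadratic_features(X):
    """Columns: 1, x_i, and all products x_i * x_j with i <= j (full quadratic)."""
    X = np.atleast_2d(np.asarray(X, dtype=float))
    n, d = X.shape
    cols = [np.ones(n)] + [X[:, i] for i in range(d)]
    cols += [X[:, i] * X[:, j] for i, j in itertools.combinations_with_replacement(range(d), 2)]
    return np.column_stack(cols)


def fit_quadratic_rs(X, y):
    """Least-squares coefficients of a full quadratic response surface."""
    coef, *_ = np.linalg.lstsq(quadratic_features(X), np.asarray(y, dtype=float), rcond=None)
    return coef


def predict_quadratic_rs(coef, X):
    """Evaluate the fitted response surface at the rows of X."""
    return quadratic_features(X) @ coef


def synthetic_response(X):
    """Hypothetical stand-in for the finite element response (not the brake model)."""
    return 1.0 + X[:, 0] - 2.0 * X[:, 1] * X[:, 2] + 0.5 * X[:, 3] ** 2


if __name__ == "__main__":
    rng = np.random.default_rng(3)
    X_train = rng.uniform(-1.0, 1.0, size=(35, 4))  # 35 runs in 4 scaled variables
    coef = fit_quadratic_rs(X_train, synthetic_response(X_train))
    print(coef.size)  # 15 coefficients
```

Scaling each input to a common range (here [−1, 1]) before fitting keeps the least-squares system well conditioned. Once fitted, the surface is cheap enough to be evaluated at every sparse-grid node or at 10⁶ MCS samples.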

The first 12 raw moments of the damping ratio of the vehicle disc brake system are calculated using SGNI and MCS, and the results and their relative errors are compared in Table 10. The raw moments obtained by SGNI are very close to those of MCS: the smallest relative error is 5.731e−02% and the largest is only 0.2492%. This indicates that SGNI accurately calculates the raw moments of a response function involving multimodal random variables. In addition, raw moments of different orders of the damping ratio differ greatly in magnitude: each time the order l increases, the magnitude changes by at least one order of magnitude. When l = 12, the difference between m1 and m12 reaches its largest value, 17 orders of magnitude. Such a large difference would cause significant computational errors in the calculation of the PDF of the damping ratio, so the raw moments are converted into non-standard central moments to alleviate this issue.

The uncertainty propagation results obtained by MCS and the proposed method are shown in Fig. 14. In Fig. 14a, the PDF obtained by the proposed method agrees very well with that of MCS; in Fig. 14b, the CDF curve of the proposed method also coincides well with that of MCS.

For this example, the order of moments used to estimate the PDF of the response function is determined as l = 12 by the convergence mechanism. The evolution of the calculated PDF and its Shannon entropy for different orders of moments is shown in Fig. 15. As l increases from 2 to 12, the PDF of the response function calculated by the proposed method gradually approaches the reference PDF obtained by MCS, and at l = 12 the estimated PDF is the most accurate. Furthermore, the Shannon entropy of the response PDF converges to a steady value as l increases from 2 to 12. Therefore, through the convergence mechanism, the accuracy of the calculated PDF improves as l increases until the most appropriate order of moments for the required uncertainty propagation accuracy is determined.

The computational cost of the proposed method is assessed by the number of response function evaluations in the uncertainty propagation process. Since the dimensionality of this problem is 4 and the accuracy level is 8, the proposed method requires 4900 response function evaluations in this example, whereas MCS uses 1 × 10⁶.

5 Conclusions

In this paper, an uncertainty propagation method is developed for problems involving multimodal probability density functions. First, the multimodal probability density functions of the input random variables are established using the Gaussian mixture model. Second, the uncertainties of the input random variables are propagated to the response function by integrating sparse grid numerical integration with maximum entropy estimation. Finally, a convergence mechanism is developed to improve the uncertainty propagation accuracy step by step. Two numerical examples and one engineering application are used to demonstrate the effectiveness of the proposed method, and the following conclusions are drawn.
(i) The proposed method achieves high accuracy for uncertainty propagation problems involving multimodal probability density functions: in the examples, the PDF and CDF results obtained by the proposed method agree very well with the reference results.
(ii) With the proposed convergence mechanism, the method calculates the probability density function of the response with progressively improved accuracy until the required uncertainty propagation accuracy is reached.
(iii) The response probability density function may be either multimodal or unimodal. If it is multimodal, higher-order moments are generally required, and the highest order needed can be determined by the proposed convergence mechanism. If it is unimodal, the first four, or at most six, moments are commonly sufficient to estimate the response PDF.
(iv) The computational cost of the proposed method is dominated by the number of response function evaluations needed to calculate the response moments using SGNI, which is determined mainly by the dimensionality of the problem and the accuracy level used in SGNI. In the examples, the proposed method is much more efficient than MCS, but less efficient than AK-MCS in the first numerical example, where the two were compared.

6 Replication of results

To facilitate replication of the results in this paper, the MATLAB code files for the vehicle disc brake system in Section 4.3 are provided as supplementary material, and the function of each file is briefly described in Table 11. The results for the speed reducer shaft and the mathematical example can also be reproduced easily by modifying the problem characteristics, such as dimensionality, initial conditions, and performance function.

In total, 11 MATLAB code files are provided to implement the proposed method. “Iteration_E5.m” is the main program, which conducts the uncertainty propagation by integrating SGNI and MEM within the convergence mechanism proposed in Section 3.3. The main program calls four subprograms, namely “SGNI.m,” “MEM.m,” “gplot.m,” and “Hankel.m.” “SGNI.m” calculates the raw moments of the response function by SGNI and “MEM.m” calculates the PDF of the response function by MEM, as introduced in Sections 3.1 and 3.2, respectively. “gplot.m” plots the uncertainty propagation results, and “Hankel.m” calculates the Hankel determinant, which is used to check whether a solution to MEM exists. The remaining files, “nwspgr.m,” “gfun.m,” “me_dens2.m,” “NMBQR.m,” “get_seq.m,” and “tensor_product.m,” are subprograms of “SGNI.m” or “MEM.m”; the function of each is described in Table 11.
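For a density supported on the whole real line, the moments \( m_0, \dots, m_{2n} \) can only come from such a density if the Hankel matrix \( H_{ij} = m_{i+j} \) is positive definite, that is, if all its leading principal minors (Hankel determinants) are positive. The sketch below checks this necessary condition with a Cholesky factorization. It is an assumed stand-in for the kind of test “Hankel.m” performs, not a transcription of that file, and the function names are hypothetical.

```python
import numpy as np


def hankel_moment_matrix(moments):
    """Hankel matrix H[i, j] = m_{i+j} built from moments m_0..m_{2n} (m_0 = 1)."""
    m = np.asarray(moments, dtype=float)
    if m.size % 2 == 0:
        raise ValueError("need an odd number of moments m_0..m_2n")
    n = (m.size - 1) // 2
    return np.array([[m[i + j] for j in range(n + 1)] for i in range(n + 1)])


def moments_admissible(moments):
    """True if the Hankel matrix is positive definite (Cholesky factorization succeeds)."""
    try:
        np.linalg.cholesky(hankel_moment_matrix(moments))
        return True
    except np.linalg.LinAlgError:
        return False


if __name__ == "__main__":
    normal = [1.0, 0.0, 1.0, 0.0, 3.0]  # m_0..m_4 of N(0, 1)
    print(np.linalg.det(hankel_moment_matrix(normal)), moments_admissible(normal))
    bad = [1.0, 0.0, 1.0, 0.0, 0.5]     # E[Y^4] < (E[Y^2])^2 cannot occur
    print(moments_admissible(bad))
```

A Cholesky factorization is used instead of computing each determinant separately because it succeeds exactly when all leading principal minors are positive and is numerically more stable. Moments transformed to a common scale make this check more reliable at high orders.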

References

Amirinia G, Kamranzad B, Mafi S (2017) Wind and wave energy potential in southern Caspian Sea using uncertainty analysis. Energy 120:332–345

Augusti G, Baratta A, Casciati F (1984) Probabilistic methods in structural engineering. Chapman and Hall

Balakrishnan S, Wainwright MJ, Yu B (2017) Statistical guarantees for the EM algorithm: from population to sample-based analysis. Ann Stat 45(1):77–120

Ballaben JS, Sampaio R, Rosales MB (2017) Uncertainty quantification in the dynamics of a guyed mast subjected to wind load. Eng Struct 132:456–470

Ban Z, Liu J, Cao L (2018) Superpixel segmentation using Gaussian mixture model. IEEE Trans Image Process 27(8):4105–4117

Brietung K (1984) Asymptotic approximations for multinormal integrals. J Eng Mech 110(3):357–366

Bucher CG (1988) Adaptive sampling-an iterative fast Monte Carlo procedure. Struct Saf 5(2):119–126

Dempster A, Laird N, Rubin D (1977) Maximum likelihood estimation from incomplete data via the EM algorithm. J R Stat Soc B 39:1–38

Doltsinis I, Kang Z (2004) Robust design of structures using optimization methods. Comput Methods Appl Mech Eng 193(23–26):2221–2237

Du X, Chen W (2002) Sequential optimization and reliability assessment method for efficient probabilistic design. J Mech Des 126(2):871–880

Echard B, Gayton N, Lemaire M (2011) AK-MCS: an active learning reliability method combining kriging and Monte Carlo simulation. Struct Saf 33:145–154

Figueiredo MAT, Jian AK (2002) Unsupervised learning of finite mixture models. IEEE Trans Pattern Anal Mach Intell 24(3):381–396

Gerstner T, Griebel M (1998) Numerical integration using sparse grids. Numer Algorithms 18(3–4):209–232

Haider SW, Harichandran RS, Dwaikat MB (2009) Closed-form solutions for bimodal axle load spectra and relative pavement damage estimation. J Transp Eng 135(12):974–983

He J, Gao S, Gong J (2014) A sparse grid stochastic collocation method for structural reliability analysis. Struct Saf 51:29–34

He J, Guan X, Jha R (2016) Improve the accuracy of asymptotic approximation in reliability problems involving multimodal distributions. IEEE Trans Reliab 65(4):1724–1736

Hu Z, Du X (2017) A mean value reliability method for bimodal distributions, in: Proceedings of the ASME 2017 International Design Engineering Technical Conference & Computers and Information in Engineering Conference, Paper DETC 2017–67279

Jaynes ET (1957) Information theory and statistical mechanics. Phys Rev 106(4):620

Keshavarzzadeh V, Fernandez F, Tortorelli DA (2017) Topology optimization under uncertainty via non-intrusive polynomial chaos expansion. Comput Methods Appl Mech Eng 318:120–147

Keshtegar B, Chakraborty S (2018) A hybrid self-adaptive conjugate first order reliability method for robust structural reliability analysis. Appl Math Model 53:319–332

Khan FA, Khelifi F, Tahir MA, Bouridane A (2019) Dissimilarity Gaussian mixture models for efficient offline handwritten text-independent identification using SIFT and RootSIFT descriptors. IEEE T Inf Foren Sec 14(2):289–303

Lee SH, Chen W (2009) A comparative study of uncertainty propagation methods for black-box-type problems. Struct Multidiscip Optim 37(3):239–253

Lee SH, Kwak BM (2006) Response surface augmented moment method for efficient reliability analysis. Struct Saf 28:261–272

Lima RS, Kucuk A, Berndt CC (2002) Bimodal distribution of mechanical properties on plasma sprayed nanostructured partially stabilized zirconia. Mater Sci Eng A 327(2):224–232

Low BK, Tang WH (2007) Efficient spreadsheet algorithm for first-order reliability method. J Eng Mech 133(12):1378–1387

MaiTre OPL, Knio OM, Najm HN, Ghanem RG (2004) Uncertainty propagation using Wiener–Haar expansions. J Comput Phys 197(1):28–57

Melchers RE (1999) Structural reliability analysis and prediction. John Wiley & Sons

Moens E, Araújo NA, Vicsek T, Herrmann HJ (2014) Shock waves on complex networks. Sci Rep 4:4949

Mori Y, Kato T (2003) Multinormal integrals by importance sampling for series system reliability. Struct Saf 25(4):363–378

Ni YQ, Ye XW, Ko JM (2010) Monitoring-based fatigue reliability assessment of steel bridges: analytical model and application. J Struct Eng 136(12):1563–1573

Ni YQ, Ye XW, Ko JM (2011) Modeling of stress spectrum using long-term monitoring data and finite mixture distributions. J Eng Mech 138(2):175–183

Pan Q, Dias D (2017) An efficient reliability method combining adaptive support vector machine and Monte Carlo simulation. Struct Saf 67:85–95

Papadrakakis M, Stefanou G, Papadopoulos V (2010) Computational methods in stochastic dynamics. Springer

Pranesh S, Ghosh D (2018) A FETI-DP based parallel hybrid stochastic finite element method for large stochastic systems. Comput Struct 195:64–73

Rackwitz R, Flessler B (1978) Structural reliability under combined random load sequences. Comput Struct 9(5):489–494

Rasmussen CE (2000) The infinite Gaussian mixture model. Adv Neural Inf Proces Syst 12(11):554–560

Redner RA, Walker HF (1984) Mixture densities, maximum likelihood and the EM algorithm. SIAM Rev 26(2):195–239

Schuöllera GI, Jensen HA (2008) Computational methods in optimization considering uncertainties - an overview. Comput Methods Appl Mech Eng 198(1):2–13

Shi Y, Lu Z, Chen S, Xu L (2018) A reliability analysis method based on analytical expressions of the first four moments of the surrogate model of the performance function. Mech Syst Signal Process 111:47–67

Smolyak SA (1963) Quadrature and interpolation formulas for tensor products of certain classes of functions. Dokl Akad Nauk SSSR 4:240–243

Stefanou G (2009) The stochastic finite element method: past, present and future. Comput Methods Appl Mech Eng 198(9–12):1031–1051

Timm DH, Tisdale SM, Turochy RE (2005) Axle load spectra characterization by mixed distribution modelling. J Transp Eng 131(2):83–88

Xia B, Lü H, Yu D, Jiang C (2015) Reliability-based design optimization of structural systems under hybrid probabilistic and interval model. Comput Struct 160:126–134

Xiong F, Greene S, Chen W, Xiong Y, Yang S (2010) A new sparse grid based method for uncertainty propagation. Struct Multidiscip Optim 41(3):335–349

Xiu D, Hesthaven J (2005) High order collocation method for differential equations with random inputs. SIAM J Sci Comput 27(3):1118–1139

Xu J, Dang C, Kong F (2017) Efficient reliability analysis of structures with the rotational quasi-symmetric point- and the maximum entropy methods. Mech Syst Signal Process 95:58–76

Youn BD, Choi KK, Yang RJ, Gu L (2004) Reliability-based design optimization for crashworthiness of vehicle side impact. Struct Multidiscip Optim 26(3–4):272–283

Zellner A, Highfield RA (1988) Calculation of maximum entropy distributions and approximation of marginal posterior distributions. J Econom 37:195–209

Zhang J, Du X (2010) A second-order reliability method with first-order efficiency. J Mech Des 132(10):1006–1014

Zhang X, He J, Takezawa A, Kang Z (2018) Robust topology optimization of phononic crystals with random field uncertainty. Int J Numer Methods Eng 115(5):1154–1173

Zhang Z, Jiang C, Han X, Ruan XX (2019) A high-precision probabilistic uncertainty propagation method for problems involving multimodal distributions. Mech Syst Signal Pr, accepted

Zhao YG, Ono T (2001) Moment methods for structural reliability. Struct Saf 23(1):47–75

Zivkovic Z (2004) Improved adaptive Gaussian mixture model for background subtraction. In: Proceedings of the 17th international conference on pattern recognition

Funding

This work is supported by the National Natural Science Foundation of China (Grant No. 51805157, Grant No. 51725502 and Grant No. 51490662), Hunan Natural Science Foundation (Grant No. 2019JJ40015).


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Responsible Editor: Byeng D Youn


Electronic supplementary material

ESM 1

(RAR 6 kb)

Appendix


Derivation of the i-th non-standard central moment \( {m}_i^{\prime } \):

where

Therefore, \( {m}_i^{\prime } \) is rewritten as:

According to the rules of integral operation:

where \( {B}_i^{j+1}=\left(\begin{array}{c}i\\ {}j\end{array}\right) \) is the binomial coefficient. Renaming j + 1 as j (i.e., shifting the summation index by one), we obtain:

where \( {B}_i^j=\left(\begin{array}{c}i\\ {}j-1\end{array}\right) \).


About this article

Cite this article

Zhang, Z., Wang, J., Jiang, C. et al. A new uncertainty propagation method considering multimodal probability density functions. Struct Multidisc Optim 60, 1983–1999 (2019). https://doi.org/10.1007/s00158-019-02301-y


DOI: https://doi.org/10.1007/s00158-019-02301-y