Abstract

During the past decade, considerable research has been conducted on constrained optimization problems (COPs) which are frequently encountered in practical engineering applications. By introducing resource limitations as constraints, the optimal solutions in COPs are generally located on boundaries of feasible design space, which leads to search difficulties when applying conventional optimization algorithms, especially for complex constraint problems. Even though penalty function method has been frequently used for handling the constraints, the adjustment of control parameters is often complicated and involves a trial-and-error approach. To overcome these difficulties, a modified particle swarm optimization (PSO) algorithm named parallel boundary search particle swarm optimization (PBSPSO) algorithm is proposed in this paper. Modified constrained PSO algorithm is adopted to conduct global search in one branch while Subset Constrained Boundary Narrower (SCBN) function and sequential quadratic programming (SQP) are applied to perform local boundary search in another branch. A cooperative mechanism of the two branches has been built in which locations of the particles near boundaries of constraints are selected as initial positions of local boundary search and the solutions of local boundary search will lead the global search direction to boundaries of active constraints. The cooperation behavior of the two branches effectively reinforces the optimization capability of the PSO algorithm. The optimization performance of PBSPSO algorithm is illustrated through 13 CEC06 test functions and 5 common engineering problems. The results are compared with other state-of-the-art algorithms and it is shown that the proposed algorithm possesses a competitive global search capability and is effective for constrained optimization problems in engineering applications.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Constrained optimization problems (COPs) are the most popular type of problems in engineering optimization. COPs are generally composed of objective function, constrained functions, variables and bounds (search space) and can be formulated as (1):

Here f and g are objective function and constrained functions, respectively. \( \overrightarrow{\mathrm{x}} \) are variables and S represents design space defined on RD. q is the number of inequalities and m − q is equalities constrained functions.

For practical engineering optimization problems, the objective function value is generally an engineering objective such as the minimization of cost or mass. The resource limitation is often expressed as constraint. When an optimal solution sits on a boundary of feasible design space, it implies resource is exhausted. By agreement, boundary of constraint refers to as boundary of feasible design space in this article. At boundaries of constraints, values of constraint become zero and these constraints are called active constraints (Bonyadi and Michalewicz 2014). Many existing optimization algorithms have difficulties in handling constraints. Taking penalty function method as an example, a mild punishment may lead to infeasible solutions while excessive punishment may restrict particles moving to regions near boundaries of constraints and therefore, optimal solutions are likely to be missed.

In this paper, one of the existing global optimization algorithms, Particle Swarm Optimization algorithm (PSO), is extended to solve the constrained optimization problem combined with a novel local boundary search method. PSO algorithm was originally proposed by Kennedy and Eberhart (Kennedy and Eberhart 1995). Imitating the cooperative behaviors of fish schooling and bird flocking, the algorithm is based on particle swarm to converge to an optimal solution. Two characteristics of a particle include position and velocity guide motivation. Position is the current location in a search region and velocity is updated according to the individual and the global search history.

While the concept is simple, the PSO algorithm has a strong search capability and becomes a very popular global optimization algorithm. It also has been extended to solve COPs. Parsopoulos (Parsopoulos and Vrahatis 2002) incorporated the non-stationary multi-stage penalty function method into PSO algorithm and the testing results demonstrated the advantage of PSO for solving COPs. Pulido (Pulido and Coello 2004) proposed the feasibility-based rules method for handling constraints in which the global best of particle swarm was chosen based on feasibility rules. A turbulence operator was also used in this method to improve the exploratory ability. Hu (Hu and Eberhart 2002) and Guo (Guo et al. 2004) used a fly-back mechanism to keep particles from escaping the feasible region so that the feasibility of solutions can be proved. He (He and Wang 2007a) proposed a co-evolution PSO approach to solve COPs. In that method, along with the optimization process, the penalty function evolves in a self-adaptive way. He and Wang (He and Wang 2007b) employed the simulated annealing method with PSO algorithm to improve the global search capability of the standard PSO and the feasibility-based rules were used for handling the constrain functions. Paquet (Paquet and Engelbrecht 2007) proposed a PSO variant to solve linear equality constrained problems. All particles positions are initialized at the position satisfying the constraints so that all further generated particles are feasible. Liang (Liang et al. 2010) proposed a CoCLPSO method to deal with COPs in which particles were adaptively assigned to explore different constraints according to their difficulties and the SQP method is also combined in CoCLPSO to improve its local search ability. Sun (C-l et al. 2011) proposed the IVPSO to update the position of particles in a vector pattern and the one-dimensional search method was applied to find feasible positions for particles escaping from the feasible region. Bonyadi (Bonyadi et al. 2013) proposed a constraint handling method in cooperation with MLPSO method to solve COPs. It was assumed that any particles with feasible positions are better than infeasible particles and infeasible particles with smaller constraint violation values are better than those with larger values. Mezura-Montes and Coello (Mezura-Montes and Coello Coello 2011) published a survey paper summarizing considerable amount of existing constraint-handling techniques within nature-inspired algorithms. Main features of each constraint-handling technique have been given and it should be noted that extra parameters are generally required in most of the methods.

As stated above, present researches on constrained PSO algorithms focus on constraint handling mechanism to keep solution feasible while ignoring the fact that a global optimum generally locates near boundaries of constraints. Also, to improve the accuracy of constraint handling, control parameters are often used in these algorithms but adjusting parameters for different problems is always complicated and time-consuming. To overcome the limitations of existing methods, our goal in this work is to improve the constrained PSO algorithm by enhancing local search near boundaries.

The key issue in local boundary search is how to find boundaries of constraints. This research builds on the concept of subset constraints boundary narrower (SCBN) function proposed by Bonyadi MR. (Bonyadi and Michalewicz 2014) The SCBN function was originally proposed to narrow a feasible region of COPs to its boundaries of constraints and particles within the narrowed band region can then be picked and stored during the optimization process of PSO. The area of band region is controlled by adjusting a positive parameter ε. The SCBN function is defined as (2):

From its formulation, HΩ, ε ≤ 0 if and only if at least one of the constraints is 2ε-active in the subset Ω while others are satisfied. After using the SCBN function, particles moving near boundaries of constraints can be easily picked out and their positions will be provided for local boundary search.

Based on the SCBN function, this paper comes up with a parallel boundary search particle swarm optimization (PBSPSO) algorithm which has two parallel branches with cooperative mechanism. In one branch, constrained PSO based on the penalty function method is adopted and global search is conducted. The particles near boundaries of constraints are picked out iteratively using the SCBN function and locations of these particles are stored. In another branch, the local boundary search process is performed from the stored positions as initial points based on the sequential quadratic programming (SQP). The results of local boundary search will reversely influence the search program of the other branch by guiding particles motion direction in the next generation. With the proposed algorithm, the cooperative mechanism of the global and local search processes not only directly increases the search intensity near boundaries of constraints, but also avoids the trial-and-error in adjusting penalty parameters. Note the increase of search intensity improves the chance of finding an optimal solution. The proposed algorithm is relatively suitable for real engineering optimization problems where solution often sits on the boundary of constraint.

This paper is organized as follows. Section 2 provides a brief introduction of the standard PSO algorithm. In Section 3, the proposed PBSPSO algorithm is described covering the constraint handling mechanism, diversity enhanced technique and the algorithm execution process. The result of numerical experiment is presented in Section 4. CEC06 benchmark test functions and five common engineering problems are chosen to test the proposed algorithm. In the last section, conclusions are drawn and the future research work is presented.

2 Standard particle swarm optimization

The basic particle swarm optimization algorithm was originally proposed by Kennedy and Eberhart (Kennedy and Eberhart 1995) in 1995 for unconstrained global optimization problems. After years of development, the standard PSO is widely used now and many modified PSO algorithms are revised from the standard version.

The mathematical expression of the standard PSO algorithm is summarized as follows. Assuming a problem with D-dimensions, \( {x}_k^i \) stands for the position of the ith particle and \( {v}_k^i \) is the velocity. \( {p}_k^i \) and \( {p}_k^g \) are the historically best position of each particle and the global best position, respectively. The updating regulations of all particles are based on two rules: each particle will pursue the historical best position and global best position iteratively. The whole swarm is controlled by equations below. (3) is velocity update equation and (4) is position update equation.

There are three parts in (3). The first part contains the velocity of earlier generation and inertia factor ω. The latter ensures that particles are able to “fly” across the design space and also guarantees the balance between local and global search capacities. A linearly varying inertia weight proposed by Shi and Eberhart (Shi and Eberhart 1999) has significantly improved the standard PSO, shown as (5). iter is the current generation and itermax is the maximum generation. The second part called the cognition component expresses the personality of each particle and motivates particles to pursue its personal best position in the search history. The third part is called the social component part (Kennedy 1997) and represents the collaborative behaviors among particles. c1 and c2 are named cognitive scaling parameter and social scaling parameter, respectively (Eberhart and Shi 2001). r1 and r2 are two random numbers within the range [0,1].

3 The parallel boundary search particle swarm optimization (PBSPSO) algorithm

Conventional constrained PSO algorithms ensure the feasibility of solutions by constraint handling mechanism, such as the penalty function method, but the process of adjusting parameters is complicated in various problems. Meanwhile, optimal solutions may be missed since the inadequate search near boundaries of constraints. To overcome these difficulties, in this work, the constrained PSO is modified based on a local boundary search procedure so that the parameter adjustment process is more straightforward and search capability near boundaries is observably improved. In this section, mechanism of the proposed parallel boundary search particle swarm optimization (PBSPSO) algorithm is introduced in detail.

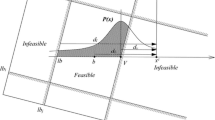

The schematic diagram of the proposed PBSPSO algorithm is shown in Fig. 1. In one branch of PBSPSO, PSO algorithm based on non-stationary penalty function method (Yang et al. 1997) is adopted and PSO global search is conducted. In order to avoid the procedure from stagnating, a judgement criterion has been built and a velocity reset operator proposed in our previous study (Liu et al. 2016) is used to scatter particles away from the stagnation position. Boundary bounce method (Clerc 2006) is applied to pull back particles escaping from the given design space. In each generation, particles are calculated and picked by the SCBN function and location of the particle closest to boundaries of constraints is recorded for the other branch.

Schematic diagram of PBSPSO algorithm. Green area represents feasible region, particles with black full lines are previous generation while others with dotted line are updated, the purple particle is the initial point of SQP search and it is chosen by SCBN function. Blue dot is the result of global search and red dot is the result of SQP search

In the second branch, the sequential quadratic programming (SQP) is executed and the location information recorded in the first branch is set as the initial position of SQP local search. The Hessian matrix is calculated using the BFGS method for the SQP implementation. The results of SQP local search and PSO global search (red and blue dots in Fig. 1, respectively) are compared with each other and the better one is set to be the globally optimal solution of the present generation. When search result near boundary of constraint in the second branch is chosen as the current global optimal solution, the search direction of the PSO algorithm in the next generation will be influenced and particles in next generation will be led to the sensitive boundaries of constraint.

A cooperative mechanism is hereby established that the global search process of the PSO algorithm provides initial points iteratively for the local search process and the best solutions of local boundary search will influence the particles movement direction in return. The termination conditions of the proposed method are set as follows: the maximum generation is reached or relative difference between the results of last two generations is less than ε. The value ε is set as 10−5 in this study.

The parallel structure of PBSPSO algorithm has three major advantages than the existing algorithms. Firstly, local boundary search approach increases search intensity near boundaries of constraints so that there is no need to spend much time on adjusting the control parameter. Secondly, the local boundary search improves the overall search ability of the algorithm and increases the chances of finding a global optimal solution. Thirdly, two branches of the algorithm are not independent with each other while PSO provides initial location information for local boundary search and the results of local boundary search may lead PSO global search to sensitive boundaries of constraint. This treatment ensures the algorithm efficiency. Together, the parallel algorithm structure and the cooperative mechanism between global and local boundary search method effectively improved the optimization capability of the algorithm.

The algorithm flow chart is displayed in Fig. 2.

3.1 Constraint handling for the PBSPSO algorithm

As a constraint handling mechanism, the penalty function technique is simple in concept and convenient in operation. So, the widely-used non-stationary penalty functions (Yang et al. 1997) are employed in our work to handle constrain functions. The penalty functions are defined as in (6) and (7):

Here qi(x) = max {0, gi(x)}, i = 1, …, m. θ(qi(x)) is the multi-stage assignment function. γ(qi(x)) is the power of the penalty function and gi(x) represents the constrained functions. The rules of parameters are specified below (Eberhart and Shi 2001):

\( h(k)=k\sqrt{k} \) for our optimization problem.

3.2 Diversity enhancement in the PBSPSO algorithm

It has been noted that the PSO may be trapped into stagnation for numbers of generations without any improvement. For the purpose of escaping from stagnation, a velocity reset operator presented in our previous work (Liu et al. 2016) is employed.

Once the programming is judged to trap into stagnation, velocity reset operator is activated and velocity of swarm will be reset according to the current generation number and the inertia weight factor ω. The velocity reset equation is shown as (8).

where Vrand is a velocity matrix in which elements are randomly defined between ‐Vmax and Vmax.μ records the search process and will linearly decrease when the iterations increases. itermax and itercurrent are the max generation and the current generation, respectively. rw is named velocity correlation coefficient and its boundary is within a predefined range [rwmin, rwmax] referring to the inertia weight factor ω of the standard PSO. Along with the optimization process, (itermax − itercurrent) decreases and the value of μ is diminished. rw is used to control the distribution range of reset velocities. Finally, Vreset shrinks and the algorithm’s convergence property is guaranteed. Once the adaptive reset operator is activated, velocities are reset by (8) and particles are rebounded off the stagnation position by (9).

3.3 Implementation of the PBSPSO algorithm

The implementation of the proposed PBSPSO algorithm is described as follows.

4 Numerical experiment

In this section, thirteen benchmark test functions from CEC06 (Liang 2006) and five real world engineering problems were chosen to test the proposed algorithm. The benchmark test functions from CEC06 are used to test the ability of the proposed algorithm to deal with conventional constrained optimization problems while five engineering problems are used to evaluate its ability to solve practical engineering optimization problems.

The initial population is set to be 40 for both test functions and engineering problems. The population of g02 and g03 is 100 because of the strong nonlinearity of these problems. The thickness parameter ε in SCBN is set as 0.5 and tolerance δ for the translation of equality constraints into inequalities is set as 1.0E-4. 25 runs have been completed for each problem. The tests were run on a computer with 3.40GHz core i7 CPU and 8 GB RAM.

4.1 Benchmark test functions

The chosen 13 functions from CEC06 are characterized as several types based on the number of variables, degree of nonlinearity, proportion of feasible region, constraint type and the number of active constraints. See Table 1.

Table 2 shows the results of benchmark test functions of PBSPSO and contains the best, mean, worst, SD values, the maximum, average and minimum iteration numbers and the initial population size for different functions. It must be noted that the average iteration of four functions are below 100 while that of ten functions are below 500, which indicates relatively high levels of efficiency of the proposed algorithm.

The proposed algorithm is compared with the existing most advanced algorithms including SMES (Mezura-Montes and Coello 2005), HCOEA (Wang et al. 2007), ATMES (Wang et al. 2008), M-ABC (Mezura-Montes and Cetina-Dominguez 2012), PSGA (Dhadwal et al. 2014), MOPSO (Venter and Haftka 2010) and DSS-MDE (Zhang et al. 2008). Instead of using the conventional penalty function, SMES (Mezura-Montes and Coello 2005) is based on a diversity mechanism and feasibility-based comparison mechanism to handle infeasible solutions and guide particles to the feasible region. HCOEA (Wang et al. 2007) effectively combines multi-objective optimization with a niching genetic algorithm for global search and a parallel local search adopting a clustering partition of the population and multiparent crossover. To achieve a balance between optimizing the objective function and reducing constraint violations, an adaptive tradeoff model (ATM) is combined with the evolutionary strategy (ES) in ATMES (Wang et al. 2008). M-ABC (Mezura-Montes and Cetina-Dominguez 2012) is a constrained optimization algorithm containing four modifications such as selection mechanism, the scout bee operator and the equality constraint and constraints of boundaries. PSGA (Dhadwal et al. 2014) is a novel hybrid evolutionary algorithm composed of PSO and GAs, in which PSO is aimed for improving the worst solutions and efficiency while GAs is to keep population diversity. A constrained, single objective optimization problem is converted into an unconstrained, bi-objective problem in Multi-objective Particle Swarm Optimization (Venter and Haftka 2010) (MOPSO) algorithm and the method does not need any problem dependent parameters while it provides performance that is similar or superior to that of a tuned penalty function approach. Considering the promise in feasible solutions during the evolution process, DSS-MDE (Zhang et al. 2008) assembles the dynamic stochastic selection (DSS) with multimember differential evolution.

The comparison is made based on the statistical performance of each algorithm. The PBSPSO achieves the present optimum solutions for all test functions except for g03. Apart from the optimum, mean and worst values of PBSPSO are also better than using other algorithms in full. The results of g02 and g03 are not good because the two problems are of strong nonlinearity. Overall, PBSPSO is strong to be competitive against other algorithms on CEC06 benchmark test functions. The results are displayed in Table 3 and numbers in bold represent optimal results.

To detect significant differences between algorithms in Table 3, the Wilcoxon matched-pairs signed-ranks test (Garcia et al. 2009), a non-parametric pairwise statistical test has been made in this study. The Wilcoxon tests have been conducted with three levels of significance including α = 0.05, α = 0.1 and α = 0.2. The results of Wilcoxon test are listed in Table 4. The statistical hypotheses for the Wilcoxon test are as follows:

-

(1)

The null hypothesis H0: The results of two algorithms come from one statistical population.

-

(2)

The alternative hypothesis H1: The results of two algorithms come from different statistical populations.

According to results of Wilcoxon test in Table 4, conclusions can be drawn:

-

(1)

The PBSPSO algorithm outperforms SMES and M-ABC(2/7) with a level of significance α = 0.05.

-

(2)

The PBSPSO algorithm outperforms SMES, M-ABC and MOPSO(3/7) with a level of significance α = 0.1.

-

(3)

The PBSPSO algorithm outperforms SMES, M-ABC, MOPSO, HCOEA and ATMES(5/7) with a level of significance α = 0.2.

By means of the Wilcoxon test, the statement: "PBSPSO is strong to be competitive against other algorithms." could be statistically supported.

4.2 Engineering problems

Five common engineering problems have been tested in this part.

4.2.1 Tension/compression spring design optimization problem

Tension/compression spring design optimization problem aims at minimizing the weight of the spring constrained on minimum deflection, shear stress, surge frequency and outside diameter. Three variables are the wire diameter (x1), the mean coil diameter (x2) and the number of active coils (x3), respectively. Fig. 3 presents a schematic view of the problem. The mathematical model of the problem is shown in Appendix 1.

The proposed PBSPSO is compared with a number of advanced constrained optimization algorithms including DEDS (Zhang et al. 2008), HEAA (Wang et al. 2009), WCA (Eskandar et al. 2012a), DELC (Wang and Li 2010), G-QPSO (Coelho 2010), MBA (Sadollah et al. 2013), NM-PSO (Zahara and Kao 2009), IAPSO (Guedria 2016), GA2 (Coello and Montes 2002), CAEP (Coello and Becerra 2004), CPSO (He and Wang 2007a), HPSO (He and Wang 2007b), DE (Lampinen 2002), PSO-DE (Liu et al. 2010), LCA (Kashan 2011), APSO (Yang 2010), ABC (Cuevas and Cienfuegos 2014). The best solutions and the statistical performance of results are summarized in Table 5 and Table 6, respectively.

The active constraints of the problem are g1 and g2. The values of the two constraints in best results of WCA, MBA and PBSPSO are closer to zero than others, which indicates that the optimal solutions of these three algorithms impend over boundaries of active constraints. In other words, deflection and shear stress are quite close to the minimum deflection (represented by g1) and the limited shear stress (represented by g2), respectively. The mean and the worst results of PBSPSO are also better than most of other algorithms. The standard deviation results show that results centrality of PBSPSO is greater. All the 25 runs finished within 100 iterations.

4.2.2 Three bar truss design problem

The problem aims at minimizing the total volume of bar truss system. Refer to Appendix 2 for mathematical form of the problem.

The best solutions and statistic results of PBSPSO are compared with DEDS (Zhang et al. 2008), PSO-DE (Liu et al. 2010), WCA (Eskandar et al. 2012a), MBA (Sadollah et al. 2013), SCA (Ray and Liew 2003), HEAA (Wang et al. 2009), see Table 7 and Table 8.

The active constraint of the problem is g1. The best solutions of DEDS, PSO-DE and PBSPSO are closer to boundaries of constraints and are also better than other algorithms. The mean, worst and SD values of PBSPSO are better than all of others. It should be noted that the SD value of PBSPSO is 1E-14 order of magnitude, which is much smaller and far better than other algorithms. The maximum iteration number is 51 in 25 runs.

4.2.3 Welded beam design optimization problem

The welded beam design optimization problem aims to minimize the fabrication cost of welded beam. The problem is constrained by shear stress, bending stress in the beam, buckling load on the bar and deflection on the beam. The design variables include the thickness of the welding line (x1), length of the weld (x2), beam thickness (x3) and width of the beam (x4). Fig. 4 shows the schematic view of the problem. Mathematical description of the problem is presented in Appendix 3.

Algorithms chosen for comparison in this problem include: GA2 (Coello and Montes 2002), CPSO (He and Wang 2007a), CAEP (Coello and Becerra 2004), NM-PSO (Zahara and Kao 2009), WCA (Eskandar et al. 2012b), MBA (Sadollah et al. 2013), IAPSO (Guedria 2016), HPSO (He and Wang 2007b), PSO-DE (Liu et al. 2010), LCA (Kashan 2011), APSO (Yang 2010),SSO-C (Cuevas and Cienfuegos 2014) and FA (Gandomi et al. 2011). See Table 9 and Table 10.

The active constraints of the problem are g1, g2 and g3. The optimal solutions of IAPSO and PBSPSO are closer to the boundaries of constraints defined by g1, g2 and g3 than any other solutions and the optimal solutions of the two algorithms are also the best. The result of CAEP is superior but not feasible. Statistic results show that the optimal solution of PBSPSO algorithm is superior to 13 state-of-the-art algorithms in Table 10. The maximum iteration number is 81 in 25 runs among all algorithms.

4.2.4 Pressure vessel design optimization problem

Pressure vessel design optimization problem is considered here as the fourth problem. The target of the problem is to minimize the total fabricating cost of the pressure vessel. The constraints include materials, forming and welding costs. The design variables are thickness of the shell (x1), thickness of the head (x2), the inner radius (x3) and the length of the cylindrical section of the vessel (x4) as plotted in Fig. 5. Mathematical description is presented in Appendix 4.

The optimum and statistical performance of results of PBSPSO are compared with CDE (Fz et al. 2007), GA2 (Coello and Montes 2002), G-OPSO (Coelho 2010),WCA (Eskandar et al. 2012b), MBA (Sadollah et al. 2013), APSO (Yang 2010), IAPSO (Guedria 2016), NM-PSO (Zahara and Kao 2009), G-QPSO (Coelho 2010) and LCA (Kashan 2011) as summarized in Table 11 and Table 12.

The active constraints are g1, g2 and g3 in this problem as shown in Table 11. The best solutions of WCA and PBSPSO are closer to the three boundaries of constraints and the performance of the two algorithms precedes others for this problem. The best solution of MBA is quite close to two of the active constraints (g1 and g2), however, it is far from the boundary of constraint defined by g3. As a result, it is worse than solutions of WCA and PBSPSO. The statistic results shown in Table 12 demonstrate that the solution of PBSPSO possesses a strong centrality and is superior to other constrained algorithms for this problem. The maximum iteration number is 69 in 25 runs.

4.2.5 Speed reducer design optimization problem

This problem aims to minimize the mass of the speed reducer as shown in Fig. 6. The problem is constrained by the bending stress of gear teeth, the surface stress, transverse deflections of the shafts and stress in the shafts. Variables are the face width (x1), module of teeth (x2), number of teeth in the pinion (x3), length of the first shaft between bearings (x4), length of the second shaft between bearings (x5) and the diameter of the first (x6) and the second shafts (x7), respectively. See Appendix 5 for mathematical expression.

The following algorithms are involved in comparison of this problem: DEDS (Zhang et al. 2008), HEAA (Wang et al. 2009), PSO-DE (Liu et al. 2010), WCA (Eskandar et al. 2012b), MBA (Sadollah et al. 2013), LCA (Kashan 2011), IAPSO (Guedria 2016), SCA (Ray and Liew 2003), ABC (Akay and Karaboga 2012), SC (Ray and Liew 2003) and APSO (Yang 2010). See Table 13 and Table 14.

There are 4 active constraints including g5, g6, g8 and g11. The best solution of PBSPSO is closer to the boundaries of active constraints as indicated by values of active constraints. The best solution of ABC is the worst in all algorithms because the solution is respectively far from the boundary of the active constraint (g11) indicating that resource expressed by g11 was not made full use of. The mean and worst solutions of PBSPSO are also better than other algorithms and the S.D value is relatively small. The maximum iteration number is 45 for this problem.

4.2.6 Further discussion

As mentioned before, optimal solutions of actual engineering problems generally locate on boundaries of active constraints because of the limitation in resources (Bonyadi and Michalewicz 2014). In some ways, the close distance in which solutions get to boundaries of constraints indicates the strong capability algorithms possess to conduct boundary search. As a result, solutions found are relatively superior. In order to quantitatively describe this significant characteristic of constrained optimization algorithms, a generalized distance \( \tilde{\mathrm{D}} \) is defined in this article as (10).

Diis formulated as (11).

i is the number of constraints. |gi(x)| is the absolute value of the ith constraint and there are three components in (11). If |gi(x)| ≥ 1, it means the solution is far away from the ith constraint, Di equals zero. If 10−20 < |gi(x)| < 1, the solution is near the ith constraint and Di is defined as log10|gi(x)|. If f|gi(x)| ≤ 10−20, the solution is almost on the ith boundary of constraint and Di is set to be −20. The value of \( \tilde{\mathrm{D}} \) is a negative real number without unit. The smaller the value, the closer the solutions get to boundaries of constraints. The \( \tilde{\mathrm{D}} \)values in the above 5 engineering problems are presented using the bar charts as shown in Fig. 7.

As displayed in Fig. 7, generalized distance values of PBSPSO are relatively small in all the five problems, which indicates that the optimal solutions are close to active constraints and the algorithm possesses strong capability of boundary search. Summarized the overall performance of the modified constrained PSO algorithms in boundary search, the proposed algorithm behaves better than other modified PSO algorithms such as G-QPSO, IAPSO, CPSO and APSO. Although some algorithms, e.g., MBA, WCA and DEDS are better than PBSPSO in some problems, their performance fluctuates from one problem to another, which reveals the relative instability of these algorithms in different engineering problems. For example, MBA performs well in the spring design problem and the vessel design problem. In other three problems, its solutions are ordinary while performance of PBSPSO is stable and competitive in all problems.

5 Conclusions and future research work

In real world engineering optimization problems, Optimal solutions generally locate on boundaries of active constraints because of limitation of resources. Considering this characteristic, a parallel boundary search particle swarm optimization (PBSPSO) method is proposed as a novel constrained optimization algorithm in this paper. The PBSPSO has two branches with cooperative mechanism. In the global search branch, PSO with the penalty function method conducts the search process. The velocity reset operator in our prior development of PSO algorithm is adopted to enhance swarm diversity. Particles are calculated by the SCBN function and locations of those close to the boundaries of constraints are selected as initial points in the branch of local boundary search. In the local boundary search branch, SQP proceeds at the points identified by the former. The results from the local boundary search procedure will reversely influence the global search process in the next generation and particles will be led to boundaries of active constraints. The proposed algorithm relieves the complex parameters adjustment course in traditional constrained PSO methods. Its cooperative mechanism increases the chance of finding global optimal solutions, which is suitable for practical engineering constrained optimization problems. PBSPSO is tested by thirteen CEC06 benchmark test functions and five common engineering optimization problems. Conclusions are summarized as follows:

-

(a)

From the results of benchmark functions, the PBSPSO algorithm with parallel structure and cooperative mechanism is shown to be effective in search capabilities and results stability.

-

(b)

The employment of a local boundary search process significantly increases the chance of finding global optima near the boundaries of constraints. This local boundary search procedure is suitable for practical engineering problems. The test results of five engineering problems support the above conclusion.

-

(c)

By combining velocity reset operator with the PSO, the proposed method overcomes the limitation of existing modified constrained PSO algorithms in falling into local solutions. As a result, the global search capability of the PBSPSO algorithm is improved effectively.

In future work, the selection strategy of initial points picking will be further studied and the local boundary search process will be further improved.

References

Bonyadi MR, Michalewicz Z (2014) On the edge of feasibility: a case study of the particle swarm optimizer. Ieee Congress on Evolutionary Computation. p. 3059–66

Kennedy J, Eberhart R (1995) Particle swarm optimization, in: 1995 I.E. international conference on neural networks proceedings, pp. 1942–1948(vol 48)

Parsopoulos KE, Vrahatis MN (2002) Particle Swarm Optimization Method for Constrained Optimization Problem: CiteSeer,

Pulido GT, Coello CAC (2004) A constraint-handling mechanism for particle swarm optimization. Cec2004: Proceedings of the 2004 Congress on Evolutionary Computation. p. 1396–403 Vol.2

Hu XH, Eberhart R (2002) Solving constrained nonlinear optimization problems with particle swarm optimization, in: 6th world multi-conference on Systemics, cybernetics and informatics (SCI 2002)/8th international conference on information systems analysis and synthesis (ISAS 2002)

Guo CX, Hu JS, Ye B, Cao YJ (2004) Swarm intelligence for mixed-variable design optimization. J Zhejiang Univ (Sci) 5:851–860

He Q, Wang L (2007a) An effective co-evolutionary particle swarm optimization for constrained engineering design problems. Eng Appl Artif Intell 20:89–99

He Q, Wang L (2007b) A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl Math Comput 186:1407–1422

Paquet U, Engelbrecht AP (2007) Particle swarms for linearly constrained optimisation. Fundamenta Informaticae 76:147–170

Liang JJ, Shang Z, Li Z (2010) Coevolutionary comprehensive learning particle swarm optimizer. IEEE Congress on Evolutionary Computation:1–8

C-l S, J-c Z, J-s P (2011) An improved vector particle swarm optimization for constrained optimization problems. Inf Sci 181:1153–1163

Bonyadi M, Li X, Michalewicz Z (2013) A hybrid particle swarm with velocity mutation for constraint optimization problems. Proceeding of the Fifteenth Conference on Genetic and Evolutionary Computation Conference. p. 1–8

Mezura-Montes E, Coello Coello CA (2011) Constraint-handling in nature-inspired numerical optimization: past, present and future. Swarm and Evolutionary Computation 1:173–194

Shi Y, Eberhart R (1999) Modified particle swarm optimizer. IEEE international conference on evolutionary computation proceedings, 1998 I.E. world congress on Computational Intelligence 1999. p. 69–73

Kennedy J (1997) The particle swarm: social adaptation of knowledge. IEEE International Conference on Evolutionary Computation 1997. p. 303–8

Eberhart RC, Shi YH (2001) Particle swarm optimization: Developments, applications and resources,

Yang JM, Chen YP, Horng JT, Kao CY (1997) Applying family competition to evolution strategies for constrained optimization. Evolutionary Programming Vi, International Conference, Ep97, Indianapolis, Indiana, Usa, April 13-16, , Proceedings1997. P. 201–11

Liu Z, Lu J, Zhu P (2016) Lightweight design of automotive composite bumper system using modified particle swarm optimizer. Compos Struct 140:630–643

Clerc M (2006) Confinements and biases in particle swarm optimisation

Liang JJ (2006) PNS. Problem Definitions and Evaluation criteria for the CEC 2006 special session on constrained real-parameter Optimization

Mezura-Montes E, Coello CACA (2005) Simple multimembered evolution strategy to solve constrained optimization problems. IEEE Trans Evol Comput 9:1–17

Wang Y, Cai Z, Guo G, Zhou Y (2007) Multiobjective optimization and hybrid evolutionary algorithm to solve constrained optimization problems. Ieee Transactions on Systems Man and Cybernetics Part B-Cybernetics 37:560–575

Wang Y, Cai Z, Zhou Y, Zeng W (2008) An adaptive tradeoff model for constrained evolutionary optimization. IEEE Trans Evol Comput 12:80–92

Mezura-Montes E, Cetina-Dominguez O (2012) Empirical analysis of a modified artificial bee Colony for constrained numerical optimization. Appl Math Comput 218:10943–10973

Dhadwal MK, Jung SN, Kim CJ (2014) Advanced particle swarm assisted genetic algorithm for constrained optimization problems. Comput Optim Appl 58:781–806

Venter G, Haftka RT (2010) Constrained particle swarm optimization using a bi-objective formulation. Struct Multidiscip Optim 40:65–76

Zhang M, Luo W, Wang X (2008) Differential evolution with dynamic stochastic selection for constrained optimization. Inf Sci 178:3043–3074

Garcia S, Molina D, Lozano M, Herrera F (2009) A study on the use of non-parametric tests for analyzing the evolutionary algorithms' behaviour: a case study on the CEC'2005 special session on real parameter optimization. J Heuristics 15:617–644

Wang Y, Cai Z, Zhou Y, Fan Z (2009) Constrained optimization based on hybrid evolutionary algorithm and adaptive constraint-handling technique. Struct Multidiscip Optim 37:395–413

Eskandar H, Sadollah A, Bahreininejad A, Hamdi M (2012a) Water cycle algorithm - a novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput Struct 110:151–166

Wang L, Li LP (2010) An effective differential evolution with level comparison for constrained engineering design. Struct Multidiscip Optim 41:947–963

Coelho LDS (2010) Gaussian quantum-behaved particle swarm optimization approaches for constrained engineering design problems. Expert Syst Appl 37:1676–1683

Sadollah A, Bahreininejad A, Eskandar H, Hamdi M (2013) Mine blast algorithm: a new population based algorithm for solving constrained engineering optimization problems. Appl Soft Comput 13:2592–2612

Zahara E, Kao YT (2009) Hybrid Nelder–mead simplex search and particle swarm optimization for constrained engineering design problems. Expert Syst Appl 36:3880–3886

Guedria NB (2016) Improved accelerated PSO algorithm for mechanical engineering optimization problems. Appl Soft Comput 2016:455–467

Coello CAC, Montes EM (2002) Constraint-handling in genetic algorithms through the use of dominance-based tournament selection. Adv Eng Inform 16:193–203

Coello CAC, Becerra RL (2004) Efficient evolutionary optimization through the use of a cultural algorithm. Eng Optim 36:219–236

Lampinen J (2002) A constraint handling approach for the differential evolution algorithm. Cec'02: proceedings of the 2002 congress on evolutionary computation, Vols 1 and 2, . p. 1468–73

Liu H, Cai Z, Wang Y (2010) Hybridizing particle swarm optimization with differential evolution for constrained numerical and engineering optimization. Appl Soft Comput 10:629–640

Kashan AH (2011) An efficient algorithm for constrained global optimization and application to mechanical engineering design: league championship algorithm (LCA): Butterworth-Heinemann

Yang XS (2010) Engineering optimization—an introduction with metaheuristic applications. New York. John Wiley & Sons, Wiley, pp 291–298

Cuevas E, Cienfuegos M (2014) A new algorithm inspired in the behavior of the social-spider for constrained optimization. Expert Syst Appl 41:412–425

Ray T, Liew KM (2003) Society and civilization: an optimization algorithm based on the simulation of social behavior. IEEE Trans Evol Comput 7:386–396

Eskandar H, Sadollah A, Bahreininejad A, Hamdi M (2012b) Water cycle algorithm – a novel metaheuristic optimization method for solving constrained engineering optimization problems. Comput Struct:151–166

Gandomi AH, Yang X-S, Alavi AH (2011) Mixed variable structural optimization using firefly algorithm. Comput Struct 89:2325–2336

He Q, Wang L (2007c) A hybrid particle swarm optimization with a feasibility-based rule for constrained optimization. Appl Math Comput 186:1407–1422

Fz H, Wang L, He Q (2007) An effective co-evolutionary differential evolution for constrained optimization. Appl Math Comput 186:340–356

Akay B, Karaboga D (2012) Artificial bee colony algorithm for large-scale problems and engineering design optimization. J Intell Manuf 23:1001–1014

Acknowledgements

The research leading to the above results was supported by National Natural Science Foundation of China (Grant No. 11772191), National Science Foundation for Young Scientists of China (Grant No. 51705312) and National Postdoctoral Foundation of China (Grant No. 17Z102060055). The authors also acknowledge the support from the Adjunct Professor position provided by the Shanghai Jiao Tong University to Prof. Wei Chen.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix 1 Tension/compression spring design problem

subject to:

Appendix 2 Three bar truss design problem

subject to:

Appendix 3 Welded beam design problem

subject to:

Appendix 4 Pressure vessel design problem

subject to:

Appendix 5 Speed reducer design problem

subject to:

Rights and permissions

About this article

Cite this article

Liu, Z., Li, Z., Zhu, P. et al. A parallel boundary search particle swarm optimization algorithm for constrained optimization problems. Struct Multidisc Optim 58, 1505–1522 (2018). https://doi.org/10.1007/s00158-018-1978-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00158-018-1978-3