Abstract

The importance sampling method (ISM) is a widely used numerical simulation method in reliability analysis. In this paper, a modification of the ISM is proposed. The modified ISM divides the sample set of input variables into different subsets based on two quantities: the contributive weight of the importance samples, defined in this paper, and the maximum hypersphere in the safe domain, denoted the β-sphere, introduced by truncated importance sampling. With this modification, only samples that have large contributive weights and lie outside the β-sphere need to call the limit state function. This remarkably reduces the number of limit state function evaluations required in the simulation without sacrificing the precision of the results, because the relative error is kept below a prescribed level. Based on the modified ISM and the space-partition idea from variance-based sensitivity analysis, the global reliability sensitivity indices can be estimated as byproducts, which is especially useful for reliability-based design optimization. Estimating these indices requires only the sample points already used in the reliability analysis, and the procedure is independent of the dimensionality of the input variables. A roof truss structure and a composite cantilever beam structure are analyzed with the modified ISM. The results demonstrate the efficiency, accuracy, and robustness of the proposed method.

1 Introduction

Reliability analysis measures the ability of a system or a component to fulfill its intended function without failure, taking uncertainties into account. The fundamental problem is to determine the failure probability, typically expressed as \( P_f = \Pr\{g(\boldsymbol{x}) = \varepsilon - \psi(\boldsymbol{x}) < 0\} \), where ε is a failure threshold, ψ(x) is a response function such as the deformation of the structure, g(x) is the limit state function, and x is the vector of random input variables, including the applied loads, material properties, operating conditions, and geometry or configuration.

Theoretically, the failure probability can be obtained once the cumulative distribution function (CDF) \( F_G(g) \) of the limit state function \( g(\boldsymbol{x}) \) is available, i.e., \( P_f = F_G(0) \). However, an analytical derivation of \( F_G(g) \) is infeasible for complex limit state functions.

During the past several decades, various approximate methods have been developed to estimate the failure probability. The first-order reliability method (FORM) (Hasofer and Lind 1974; Zhao and Ono 1999a) and the second-order reliability method (SORM) (De Der Kiureghian 1991; Zhao and Ono 1999b) are widely used because of their efficiency. FORM in particular is considered one of the most acceptable and feasible computational methods, and it has two variants. One is the mean value FORM (MVFORM), which linearizes the limit state function around the mean point of the input variables; the other is the advanced FORM (AFORM), which linearizes it around the most probable point (MPP). However, the accuracy of both FORM and SORM may deteriorate for strongly nonlinear problems. Moreover, FORM and SORM require the first- and second-order partial derivatives, respectively, of the limit state function g(x) with respect to the model input variables. To overcome these shortcomings of FORM and SORM, moment methods for structural reliability have been developed (Zhao and Ono 2001; Zhao et al. 2006; Zhao and Ono 2004). Moment methods do not need the partial derivatives of the limit state function and are simple to implement. However, for some problems with small failure probability, moment methods cannot provide accurate results. Moreover, moment methods may yield entirely different results for different but equivalent formulations of the same limit state function (Xu and Cheng 2003). The main source of error in moment methods is that the CDF of the limit state function, especially its tail, is difficult to determine from its first four moments alone. In addition, higher-order moments such as the third- and fourth-order moments of strongly nonlinear limit state functions are difficult to estimate accurately.
Therefore, Zhang and co-workers (Zhang and Pandey 2013) investigated the fractional moment-based maximum entropy method for reliability analysis, in which the probability density function (PDF) of the limit state function is approximated by maximizing entropy under given fractional moment constraints. Fractional moment constraints are superior to integer moment constraints because a single fractional moment embodies information about a large number of central moments (Zhang and Pandey 2013). To estimate the fractional moments efficiently, Ref. (Zhang and Pandey 2013) proposed a multiplicative dimensional reduction method (M-DRM). Its obvious advantage is the rather low computational cost, but it also has some deficiencies: it is limited to positive limit state functions with weak interaction effects, and the limit state value must be nonzero when all input variables are fixed at their mean values. To reduce the number of limit state function calls, the limit state function is often replaced by a meta-model such as a quadratic response surface (Kim and Na 1997), neural networks (Papadrakakis and Lagaros 2001), support vector machines (Song et al. 2013; Bourinet et al. 2011), or Kriging (Echard et al. 2011; Hu and Mahadevan 2016). Such surrogate methods still require a post-processing step to evaluate the reliability; this cost is usually ignored because it is much smaller than that of evaluating the limit state function, but it does exist. Therefore, an efficient post-processing reliability analysis method can effectively enhance the efficiency of meta-model methods.

To avoid estimating the moments and the partial derivatives of the limit state function, Monte Carlo simulation (MCS) is a good choice because it is universal: it applies to all problems and all distribution types. To improve the efficiency and accuracy of direct MCS, variance reduction techniques such as the importance sampling method (ISM) (Zhang et al. 2014; Melchers 1989; Harbitz 1986; Au and Beck 2002; Zhou et al. 2015) are preferred. The ISM shifts the sampling center from the mean point of the input variables to the MPP. To construct an IS density closer to the optimal one, kernel density estimation (Au and Beck 1999) has been employed to estimate the failure probability adaptively.

This paper investigates a modified version of the original ISM that saves part of its computational cost and thus improves its efficiency. First, the truncated importance sampling (TIS) procedure (Grooteman 2008) is employed, which introduces the β-sphere: all sample points falling inside the β-sphere are safe. These points therefore do not need to call the true model, which is the first source of savings with respect to the original ISM. Second, a contributive weight function is defined in this paper as the ratio of the original PDF to the importance sampling PDF; it measures the contribution of each importance sample point to the failure probability. Sample points with small contributions are selected using a specified tolerance, and these points also do not need to call the true model to decide whether they fail. Because importance sample points with small contributions usually lie in sparse regions with very small PDF values, whereas the interior of the β-sphere usually lies in a dense region with large PDF values, the proposed method reduces the computational cost of the original ISM in two different domains, which are either disjoint or have only a small intersection.

Using the information already obtained by the modified ISM in failure probability analysis, the space-partition method is extended to estimate the global reliability sensitivity indices defined in Refs. (Cui et al. 2010; Li et al. 2012; Wei et al. 2012), which are useful in reliability-based design optimization. The space-partition method was originally used in variance-based sensitivity analysis. The proposed method is independent of the dimensionality of the model input variables because it repeatedly divides the same vector of failure indicator values into different subsets according to each input.

The main contributions of this work are as follows. ① A modified ISM is developed that uses the β-sphere from TIS and the contributive weight function defined in this paper to reduce the computational cost of the original ISM in two different domains, thereby enhancing its efficiency. This remarkably reduces the number of limit state function evaluations required in the simulation without sacrificing the precision of the results, because the relative error is controlled. ② The space-partition method combined with the modified ISM is proposed to estimate the global reliability sensitivity indices efficiently, and this method is independent of the dimension of the model input variables. ③ The failure probability and the global reliability sensitivity indices are estimated simultaneously from one set of model evaluations.

The rest of this paper is organized as follows. Section 2 briefly reviews the definitions of structural reliability and of the global reliability sensitivity indices. Section 3 briefly introduces the original ISM for reliability analysis and describes in detail the modified ISM proposed in this paper. Building on the modified ISM, Section 4 proposes a new method for computing the global reliability sensitivity indices based on the space-partition idea. Section 5 analyzes a roof truss structure and a composite cantilever beam structure to verify the accuracy, efficiency, and robustness of the proposed method. Finally, conclusions are summarized in Section 6.

2 Reviews of structural reliability analysis and global reliability sensitivity analysis

2.1 Structural reliability analysis

Suppose the limit state function of the structural system of interest is Y = g(x), where \( \boldsymbol{x} = (x_1, x_2, \ldots, x_n) \) is the random input vector and \( f_{\boldsymbol{X}}(\boldsymbol{x}) \) is the joint PDF of the model input variables. If all the input variables are mutually independent, the joint PDF is the product of the marginal PDFs of \( x_i \) (i = 1, 2, …, n), i.e., \( {f}_{\boldsymbol{X}}\left(\boldsymbol{x}\right)=\prod_{i=1}^n{f}_{X_i}\left({x}_i\right) \). The failure domain is defined as the region where the limit state function is less than zero. Therefore, the failure probability of this structural system is

\[ P_f = \Pr\{g(\boldsymbol{x}) < 0\} = \int_{g(\boldsymbol{x}) < 0} f_{\boldsymbol{X}}(\boldsymbol{x})\, d\boldsymbol{x} \qquad (1) \]

Equation (1) is a multidimensional integral, and the most direct way to estimate it is MCS:

\[ \widehat{P_f} = \frac{1}{N} \sum_{i=1}^{N} I_F(\boldsymbol{x}_i) \qquad (2) \]

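where N is the number of sample points, the \( \boldsymbol{x}_i \) (i = 1, …, N) are generated from \( f_{\boldsymbol{X}}(\boldsymbol{x}) \), and \( I_F(\boldsymbol{x}_i) \) is the indicator function of the failure domain, defined as

\[ I_F(\boldsymbol{x}) = \begin{cases} 1, & g(\boldsymbol{x}) < 0 \\ 0, & g(\boldsymbol{x}) \ge 0 \end{cases} \qquad (3) \]
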
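A minimal sketch of the crude MCS estimator of Eqs. (2) and (3) is given below in Python with NumPy. The limit state `g` is a hypothetical linear function of two independent standard normal inputs, not one of the case studies in this paper; it is chosen only because its exact failure probability, Φ(−3) ≈ 1.35 × 10⁻³, is known. The coefficient of variation uses the standard binomial formula.

```python
import numpy as np
from statistics import NormalDist

def g(x):
    # Hypothetical linear limit state in standard normal space; exact Pf = Phi(-3)
    return 3.0 - (x[:, 0] + x[:, 1]) / np.sqrt(2.0)

def crude_mcs(limit_state, n_samples, n_dim, seed=0):
    """Crude MCS estimate of Pf = Pr{g(x) < 0} with x ~ N(0, I), Eqs. (2)-(3)."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_samples, n_dim))   # samples from f_X
    indicator = limit_state(x) < 0.0              # I_F(x_i), Eq. (3)
    pf = indicator.mean()                         # Eq. (2)
    # Coefficient of variation of the estimator: sqrt((1 - Pf) / (N * Pf))
    cov = np.sqrt((1.0 - pf) / (n_samples * pf)) if pf > 0 else np.inf
    return pf, cov

exact = NormalDist().cdf(-3.0)
pf, cov = crude_mcs(g, 1_000_000, 2)
```

With \( N = 10^6 \), only about 1350 samples fail here, which illustrates why crude MCS becomes expensive as the failure probability decreases.
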
2.2 Global reliability sensitivity analysis

To measure the effect of the model input variables on the failure probability, Cui and Li (Cui et al. 2010; Li et al. 2012) proposed the failure probability-based global sensitivity index:

\[ \delta_i^P = E_{X_i}\left[\left(P_f - P_{f\mid X_i}\right)^2\right] \qquad (4) \]

where \( P_f \) is the unconditional failure probability and \( {P}_{f\mid {X}_i} \) is the conditional failure probability when \( X_i \) is fixed. \( {\delta}_i^P \) reflects the average effect of the input variable \( X_i \) on the failure probability of the model. The larger \( {\delta}_i^P \) is, the more important \( X_i \) is to the failure probability.

Ref. (Li et al. 2012) proved that (4) has the same form as the variance-based sensitivity index, i.e.,

\[ \delta_i^P = V_{X_i}\left(E\left(I_F \mid X_i\right)\right) \qquad (5) \]

Wei (Wei et al. 2012) standardized it by dividing (5) by the unconditional variance of the failure domain indicator function:

\[ S_i = \frac{V_{X_i}\left(E\left(I_F \mid X_i\right)\right)}{V\left(I_F\right)} \qquad (6) \]

Equation (6) shows that the failure probability-based global sensitivity index is the first-order variance effect of the failure indicator function. Therefore, methods for estimating Sobol' indices can be extended to this index.
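
For a linear limit state in independent standard normal variables, the conditional failure probability in (4) has a closed form, which makes Eqs. (4) to (6) easy to check numerically. The sketch below assumes the hypothetical limit state \( g(\boldsymbol{u}) = c - \boldsymbol{a}^{\mathrm{T}}\boldsymbol{u} \) (not from this paper) and evaluates the outer expectation over \( U_i \) with a simple Riemann sum on a truncated grid. It is meant as a reference computation for intuition, not as the estimator proposed in this paper.

```python
import numpy as np
from statistics import NormalDist

PHI = np.vectorize(NormalDist().cdf)   # standard normal CDF, elementwise

def linear_reliability_indices(a, c, n_grid=24001, half_width=12.0):
    """delta_i^P (Eq. 4) and S_i (Eq. 6) for g(u) = c - a.u with u ~ N(0, I).

    Assumes at least two nonzero coefficients, so that the standard
    deviation of the remaining terms below is positive.
    """
    a = np.asarray(a, dtype=float)
    norm_a = np.linalg.norm(a)
    pf = NormalDist().cdf(-c / norm_a)                     # unconditional Pf
    grid = np.linspace(-half_width, half_width, n_grid)
    du = grid[1] - grid[0]
    phi = np.exp(-0.5 * grid**2) / np.sqrt(2.0 * np.pi)   # density of U_i
    var_if = pf * (1.0 - pf)                               # V(I_F) of a 0/1 indicator
    delta = np.empty(a.size)
    for i, ai in enumerate(a):
        sigma_rest = np.sqrt(norm_a**2 - ai**2)            # std of sum_{j != i} a_j U_j
        pf_cond = PHI((ai * grid - c) / sigma_rest)        # P_{f | U_i = u}
        delta[i] = np.sum((pf - pf_cond) ** 2 * phi) * du  # Eq. (4)
    return pf, delta, delta / var_if                       # S_i, Eq. (6)

pf, delta, s = linear_reliability_indices(np.array([2.0, 1.0]) / np.sqrt(5.0), 3.0)
```

Because \( a_1 > a_2 \), the index of \( U_1 \) is the larger one, and the first-order indices sum to less than one, as expected for independent inputs.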

3 Modified importance sampling method (ISM) for structural reliability analysis

3.1 Original ISM for structural reliability analysis

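For problems with relatively large failure probabilities (\( 10^{-1} \sim 10^{-2} \)), MCS is efficient and accurate enough. For problems with small failure probabilities (\( 10^{-2} \sim 10^{-3} \) or even smaller), a large number of sample points (more than \( 10^4 \)) must be generated to guarantee accuracy. The ISM was proposed to improve the computational efficiency for such problems. Its formula is

\[ P_f = \int_{\mathbb{R}^n} I_F(\boldsymbol{x}) \frac{f_{\boldsymbol{X}}(\boldsymbol{x})}{h_{\boldsymbol{X}}(\boldsymbol{x})} h_{\boldsymbol{X}}(\boldsymbol{x})\, d\boldsymbol{x} \approx \frac{1}{N} \sum_{i=1}^{N} I_F(\boldsymbol{x}_i) \frac{f_{\boldsymbol{X}}(\boldsymbol{x}_i)}{h_{\boldsymbol{X}}(\boldsymbol{x}_i)}, \quad \boldsymbol{x}_i \sim h_{\boldsymbol{X}}(\boldsymbol{x}) \qquad (7) \]
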
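where \( h_{\boldsymbol{X}}(\boldsymbol{x}) \) is the importance sampling PDF. The optimal \( h_{\boldsymbol{X}}(\boldsymbol{x}) \) is given by (Melchers 1989; Wei et al. 2014)

\[ h_{\boldsymbol{X}}^{\mathrm{opt}}(\boldsymbol{x}) = \frac{I_F(\boldsymbol{x})\, f_{\boldsymbol{X}}(\boldsymbol{x})}{P_f} \qquad (8) \]
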
Equation (8) indicates that \( P_f \) must be known in advance to determine the optimal \( h_{\boldsymbol{X}}(\boldsymbol{x}) \), which is impossible because \( P_f \) is exactly the quantity to be estimated. Therefore, the optimal importance sampling PDF cannot be obtained in practice. Generally, the importance sampling PDF is constructed by shifting the sampling center from the mean point of the input variables to the MPP, because the MPP is the point with the highest probability density in the failure domain.
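
The shifted-center ISM of Eq. (7) can be sketched as follows. The sketch assumes that the inputs are already independent standard normal variables and that the IS PDF is a unit-covariance normal centred at the MPP, a common choice assumed here. The hypothetical linear limit state has β = 3, and its MPP is known analytically.

```python
import numpy as np
from statistics import NormalDist

def g(u):
    # Hypothetical linear limit state with beta = 3; exact Pf = Phi(-3)
    return 3.0 - (u[:, 0] + u[:, 1]) / np.sqrt(2.0)

def importance_sampling(limit_state, mpp, n_samples, seed=0):
    """Eq. (7) with f = N(0, I) and h = N(mpp, I) in standard normal space."""
    rng = np.random.default_rng(seed)
    mpp = np.asarray(mpp, dtype=float)
    u = mpp + rng.standard_normal((n_samples, mpp.size))   # u_i ~ h
    # f/h for two unit-covariance normals: exp(-u.mpp + |mpp|^2 / 2)
    weights = np.exp(-u @ mpp + 0.5 * mpp @ mpp)
    terms = (limit_state(u) < 0.0) * weights               # I_F * f/h
    pf = terms.mean()
    std_err = terms.std(ddof=1) / np.sqrt(n_samples)
    return pf, std_err

mpp = 3.0 * np.ones(2) / np.sqrt(2.0)      # MPP of this toy limit state
pf, se = importance_sampling(g, mpp, 20_000)
exact = NormalDist().cdf(-3.0)
```

Computing the weight from the log-ratio of the two normal densities avoids evaluating each density separately. For this toy problem the standard error with 20000 samples is around 1–2% of the estimate, whereas crude MCS with the same N would have a coefficient of variation near 20%.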

3.2 Modified ISM for structural reliability analysis

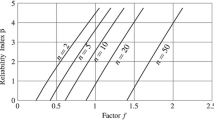

To reduce the number of model evaluations required by the ISM, the TIS procedure was proposed in Ref. (Grooteman 2008). It introduces the β-sphere shown in Fig. 1, where β is the minimum distance from the origin to the failure surface in the standard normal space and can be found by the constrained optimization

\[ \beta = \min_{\boldsymbol{u}} \left\| \boldsymbol{u} \right\| \quad \text{s.t.} \quad g(\boldsymbol{x}) = 0 \qquad (9) \]

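where \( \boldsymbol{u} \) is the vector of uncorrelated standard normal variables obtained from the random variables \( \boldsymbol{x} \) by the equivalent probability transformation, i.e.,

\[ u_i = \Phi^{-1}\left(F_{X_i}(x_i)\right), \quad i = 1, 2, \ldots, n \qquad (10) \]
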
where \( {F}_{X_i}\left(\cdot \right) \) is the cumulative distribution function (CDF) of \( X_i \), and \( \Phi(\cdot) \) and \( \Phi^{-1}(\cdot) \) are the CDF and inverse CDF of the standard normal variable, respectively.
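
The constrained optimization (9) is commonly solved with the Hasofer–Lind–Rackwitz–Fiessler (HL-RF) recursion, one standard way to implement AFORM. The sketch below is a minimal version under stated assumptions: gradients by central finite differences, no step-size control (so convergence is not guaranteed for strongly nonlinear limit states), and independent normal inputs, for which the transformation (10) reduces to \( x_i = \mu_i + \sigma_i u_i \). The resistance-minus-load example and its parameters are hypothetical.

```python
import numpy as np

def hlrf_mpp(g_u, n_dim, tol=1e-8, max_iter=100, step=1e-6):
    """MPP search in standard normal space by the HL-RF recursion (Eq. 9).

    g_u: callable returning G(u) = g(x(u)) for a 1-D array u.
    Returns the MPP u* and beta = ||u*||.
    """
    u = np.zeros(n_dim)
    for _ in range(max_iter):
        g_val = g_u(u)
        # Central finite-difference gradient of G at u
        grad = np.array([(g_u(u + step * e) - g_u(u - step * e)) / (2.0 * step)
                         for e in np.eye(n_dim)])
        # HL-RF update: closest point to the origin on the linearized surface
        u_new = (grad @ u - g_val) / (grad @ grad) * grad
        converged = np.linalg.norm(u_new - u) < tol
        u = u_new
        if converged:
            break
    return u, float(np.linalg.norm(u))

# Hypothetical example: resistance R ~ N(5, 0.5) and load S ~ N(2, 0.4), g = R - S
mu = np.array([5.0, 2.0])
sigma = np.array([0.5, 0.4])

def g_u(u):
    x = mu + sigma * u        # Eq. (10) for normal marginals
    return x[0] - x[1]

mpp, beta = hlrf_mpp(g_u, 2)
```

For non-normal marginals, the linear map inside `g_u` would be replaced by \( x_i = F_{X_i}^{-1}(\Phi(u_i)) \).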

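Therefore, the indicator function of the region outside the β-sphere is defined as

\[ I_{\beta}(\boldsymbol{x}) = \begin{cases} 1, & \left\| \boldsymbol{u} \right\| \ge \beta \\ 0, & \left\| \boldsymbol{u} \right\| < \beta \end{cases} \qquad (11) \]
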
where the interior of the β-sphere lies entirely within the safe domain, while the exterior contains the whole failure domain and part of the safe domain. Therefore, no failure point exists inside the β-sphere, and the failure domain indicator function can be rewritten as

\[ I_F(\boldsymbol{x}) = I_F(\boldsymbol{x})\, I_{\beta}(\boldsymbol{x}) \qquad (12) \]

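Equation (12) shows that the safe points falling inside the β-sphere do not need to call the true model to determine whether they fail. Therefore, the failure probability can be rewritten as

\[ P_f = \int_{\mathbb{R}^n} I_F(\boldsymbol{x}) I_{\beta}(\boldsymbol{x}) \frac{f_{\boldsymbol{X}}(\boldsymbol{x})}{h_{\boldsymbol{X}}(\boldsymbol{x})} h_{\boldsymbol{X}}(\boldsymbol{x})\, d\boldsymbol{x} \qquad (13) \]
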
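The main difference between the ISM and the TIS method is that the latter first checks whether a sample point lies inside the β-sphere; if it does, the limit state function need not be evaluated at that point. To estimate (13), N sample points of the model inputs are generated from \( h_{\boldsymbol{X}}(\boldsymbol{x}) \), and the failure probability is estimated by

\[ \widehat{P_f} = \frac{1}{N} \sum_{i=1}^{N} I_F(\boldsymbol{x}_i) I_{\beta}(\boldsymbol{x}_i) \frac{f_{\boldsymbol{X}}(\boldsymbol{x}_i)}{h_{\boldsymbol{X}}(\boldsymbol{x}_i)} \qquad (14) \]
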
where \( \boldsymbol{x}_i \) is the ith sample point of the model inputs.

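The expectation of the estimator \( \widehat{P_f} \) is

\[ E\left(\widehat{P_f}\right) = \frac{1}{N} \sum_{i=1}^{N} E_h\left[I_F(\boldsymbol{x}_i) I_{\beta}(\boldsymbol{x}_i) \frac{f_{\boldsymbol{X}}(\boldsymbol{x}_i)}{h_{\boldsymbol{X}}(\boldsymbol{x}_i)}\right] = \int_{\mathbb{R}^n} I_F(\boldsymbol{x}) I_{\beta}(\boldsymbol{x}) f_{\boldsymbol{X}}(\boldsymbol{x})\, d\boldsymbol{x} = P_f \qquad (15) \]
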
Thus, (14) is an unbiased estimator of the failure probability \( P_f \).
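
A sketch of the TIS estimator (14) follows. It assumes standard normal inputs, an IS PDF \( h_{\boldsymbol{X}} = N(\boldsymbol{u}^*, \mathbf{I}) \) centred at the MPP, and a hypothetical linear limit state with β = 3. Only points with \( I_{\beta} = 1 \) are passed to the limit state function, and the function returns the number of such calls together with the estimate.

```python
import numpy as np
from statistics import NormalDist

def g(u):
    # Hypothetical linear limit state with beta = 3; exact Pf = Phi(-3)
    return 3.0 - (u[:, 0] + u[:, 1]) / np.sqrt(2.0)

def truncated_is(limit_state, mpp, beta, n_samples, seed=0):
    """TIS estimate (14); limit_state is called only outside the beta-sphere."""
    rng = np.random.default_rng(seed)
    mpp = np.asarray(mpp, dtype=float)
    u = mpp + rng.standard_normal((n_samples, mpp.size))   # u_i ~ h = N(mpp, I)
    weights = np.exp(-u @ mpp + 0.5 * mpp @ mpp)           # f/h
    outside = np.linalg.norm(u, axis=1) >= beta            # I_beta(u_i), Eq. (11)
    failed = np.zeros(n_samples, dtype=bool)               # points inside stay "safe"
    failed[outside] = limit_state(u[outside]) < 0.0        # model calls happen here only
    pf = np.sum(failed * weights) / n_samples              # Eq. (14)
    return pf, int(outside.sum())                          # estimate, number of calls

mpp = 3.0 * np.ones(2) / np.sqrt(2.0)
pf, n_calls = truncated_is(g, mpp, beta=3.0, n_samples=20_000)
exact = NormalDist().cdf(-3.0)
```

Calling the same function with `beta=0.0` evaluates every point and, for this limit state, returns exactly the same estimate, because no failure point lies inside the β-sphere; the difference lies only in the number of model calls.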

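From the definitions of \( I_F(\cdot) \) and \( I_{\beta}(\cdot) \), the term \( {I}_F\left({\boldsymbol{x}}_i\right){I}_{\beta}\left({\boldsymbol{x}}_i\right)\frac{f_{\boldsymbol{X}}\left({\boldsymbol{x}}_i\right)}{h_{\boldsymbol{X}}\left({\boldsymbol{x}}_i\right)} \) takes the values

\[ I_F(\boldsymbol{x}_i) I_{\beta}(\boldsymbol{x}_i) \frac{f_{\boldsymbol{X}}(\boldsymbol{x}_i)}{h_{\boldsymbol{X}}(\boldsymbol{x}_i)} = \begin{cases} \dfrac{f_{\boldsymbol{X}}(\boldsymbol{x}_i)}{h_{\boldsymbol{X}}(\boldsymbol{x}_i)}, & I_{\beta}(\boldsymbol{x}_i) = 1 \text{ and } g(\boldsymbol{x}_i) < 0 \\ 0, & \text{otherwise} \end{cases} \qquad (16) \]
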
A sample point inside the β-sphere is certainly safe, whereas the state of a sample point outside the β-sphere generally has to be determined by evaluating the limit state function. Equation (16) indicates that a safe sample point \( \boldsymbol{x}_i \) contributes zero to the sum in (14), whereas a failed sample point contributes \( \frac{f_{\boldsymbol{X}}\left({\boldsymbol{x}}_i\right)}{h_{\boldsymbol{X}}\left({\boldsymbol{x}}_i\right)} \). Thus, the contributive weight of a sample point is defined as

\[ W(\boldsymbol{x}) = \frac{f_{\boldsymbol{X}}(\boldsymbol{x})}{h_{\boldsymbol{X}}(\boldsymbol{x})} \qquad (17) \]

Equation (17) applies to the case of a single MPP. For multiple MPPs, the failure probability is expressed as follows, according to Ref. (Lu and Feng 1995):

where m is the number of MPPs and \( h_k(\boldsymbol{x}) \) is the kth importance sampling PDF, obtained by shifting the sampling center to the kth MPP.

Therefore, N sample points are generated from each \( h_k(\boldsymbol{x}) \) (k = 1, 2, …, m), and the failure probability is estimated as follows:

where \( \boldsymbol{x}_{k,i} \) is the ith sample generated by the kth importance sampling PDF.

Thus, the contributive weight function for the case of multiple MPPs is constructed as follows:

For convenience, the following derivation is based on (17); it also applies to the case of multiple MPPs when (17) is replaced by (20).
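
For the multiple-MPP case, one common construction is assumed in the sketch below; it may differ in detail from Eqs. (18) to (20). It draws N points from each shifted density \( h_k \) and weights every point by \( f_{\boldsymbol{X}} / \left(\frac{1}{m}\sum_{k} h_k\right) \), the deterministic-mixture (balance-heuristic) weight. This weight plays the role of the contributive weight, so the sorting and truncation steps that follow carry over unchanged. The two-sided series system is hypothetical, with MPPs at \( (\pm 3, 0) \) and exact \( P_f = 2\Phi(-3) \).

```python
import numpy as np
from statistics import NormalDist

def g_series(u):
    # Hypothetical series system: fails if u1 > 3 or u1 < -3 (two MPPs)
    return np.minimum(3.0 - u[:, 0], 3.0 + u[:, 0])

def mixture_is(limit_state, mpps, n_per_mpp, seed=0):
    """IS with N points from each h_k = N(mpp_k, I), weighted by f / mean_k(h_k)."""
    rng = np.random.default_rng(seed)
    mpps = np.asarray(mpps, dtype=float)                    # shape (m, n)
    n = mpps.shape[1]
    u = np.concatenate([c + rng.standard_normal((n_per_mpp, n)) for c in mpps])
    # log(h_k / f) = u.c_k - |c_k|^2 / 2 for unit-covariance normals
    log_ratio = u @ mpps.T - 0.5 * np.sum(mpps**2, axis=1)  # shape (m * N, m)
    w = 1.0 / np.mean(np.exp(log_ratio), axis=1)            # contributive weight
    terms = (limit_state(u) < 0.0) * w
    return terms.mean(), w

pf, w = mixture_is(g_series, [[3.0, 0.0], [-3.0, 0.0]], 20_000)
exact = 2.0 * NormalDist().cdf(-3.0)
```

Each point is weighted against the whole mixture rather than against the single density that produced it, which keeps the weights bounded when the shifted densities overlap.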

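The N generated sample points are first sorted in descending order of their contributive weights. Then, (14) can be rewritten as

\[ \widehat{P_f} = \frac{1}{N} \left[\sum_{i=1}^{k} I_F(\boldsymbol{x}_i) I_{\beta}(\boldsymbol{x}_i) \frac{f_{\boldsymbol{X}}(\boldsymbol{x}_i)}{h_{\boldsymbol{X}}(\boldsymbol{x}_i)} + \sum_{j=k+1}^{N} I_F(\boldsymbol{x}_j) I_{\beta}(\boldsymbol{x}_j) \frac{f_{\boldsymbol{X}}(\boldsymbol{x}_j)}{h_{\boldsymbol{X}}(\boldsymbol{x}_j)}\right] \qquad (21) \]
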
where \( f_{\boldsymbol{X}}(\boldsymbol{x}_j)/h_{\boldsymbol{X}}(\boldsymbol{x}_j) \) (\( j \in [k+1, N] \)) is no larger than any \( f_{\boldsymbol{X}}(\boldsymbol{x}_i)/h_{\boldsymbol{X}}(\boldsymbol{x}_i) \) (\( i \in [1, k] \)).

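Ignoring the second sum in (21) gives the estimate

\[ P_f^{(k)} = \frac{1}{N} \sum_{i=1}^{k} I_F(\boldsymbol{x}_i) I_{\beta}(\boldsymbol{x}_i) \frac{f_{\boldsymbol{X}}(\boldsymbol{x}_i)}{h_{\boldsymbol{X}}(\boldsymbol{x}_i)} \qquad (22) \]
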
A convergence criterion is needed to choose a proper value of k. Based on (21) and (22), the relative error between the current estimate \( {P}_f^{(k)} \) and the full estimate \( \widehat{P_f} \) of (21) is computed by

The states of the samples in the first group are identified exactly by checking whether each sample point lies inside the β-sphere and, if it does not, calling the limit state function; thus \( \sum_{i=1}^{k} I_F(\boldsymbol{x}_i) I_{\beta}(\boldsymbol{x}_i) f_{\boldsymbol{X}}(\boldsymbol{x}_i)/h_{\boldsymbol{X}}(\boldsymbol{x}_i) \) is known. In contrast, the states of the sample points in the second group are not determined because of their small contributive weights, so \( \sum_{j=k+1}^{N} I_F(\boldsymbol{x}_j) I_{\beta}(\boldsymbol{x}_j) f_{\boldsymbol{X}}(\boldsymbol{x}_j)/h_{\boldsymbol{X}}(\boldsymbol{x}_j) \) is unknown to the analyst. To control the error caused by ignoring this sum and to determine a suitable k, note that \( I_F I_{\beta} \le 1 \), so the sum is bounded from above by \( \sum_{j=k+1}^{N} f_{\boldsymbol{X}}(\boldsymbol{x}_j)/h_{\boldsymbol{X}}(\boldsymbol{x}_j) \). This bound is independent of the indicator functions and requires no call to the limit state function. Based on it, the maximum relative error of the estimate in (22) is

Then, k is increased from 1 to N, and the search stops at the first k satisfying \( {\varepsilon}_k^{\mathrm{max}}<{C}_r \), where \( C_r \) is the prescribed accuracy level (the maximum relative error limit).
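
The truncation by contributive weight, Eqs. (21) and (22) with the stopping rule above, can be sketched as below. The sketch assumes that the relative error is measured against the full estimate (21). Under that assumption, since the ignored sum lies between 0 and \( T_k = \sum_{j>k} f_{\boldsymbol{X}}(\boldsymbol{x}_j)/h_{\boldsymbol{X}}(\boldsymbol{x}_j) \), the maximum relative error is \( T_k/(S_k + T_k) \), where \( S_k \) is the known sum in (22); this form is an assumption, not necessarily the authors' expression. Inputs are standard normal, \( h_{\boldsymbol{X}} \) is a unit-covariance normal at the MPP, and the limit state is a hypothetical linear one with β = 3.

```python
import numpy as np
from statistics import NormalDist

def g(u):
    # Hypothetical linear limit state with beta = 3; exact Pf = Phi(-3)
    return 3.0 - (u[:, 0] + u[:, 1]) / np.sqrt(2.0)

def modified_is(limit_state, mpp, beta, n_samples, c_r=0.02, seed=0):
    """Weight-sorted truncation of Eq. (21) into Eq. (22), assumed bound T/(S+T)."""
    rng = np.random.default_rng(seed)
    mpp = np.asarray(mpp, dtype=float)
    u = mpp + rng.standard_normal((n_samples, mpp.size))   # u_i ~ h
    w = np.exp(-u @ mpp + 0.5 * mpp @ mpp)                 # contributive weight, Eq. (17)
    order = np.argsort(-w)                                 # descending weights
    u, w = u[order], w[order]
    outside = np.linalg.norm(u, axis=1) >= beta            # I_beta
    tail = np.cumsum(w[::-1])[::-1]                        # tail[j] = w[j] + ... + w[N-1]
    s_known, n_calls = 0.0, 0
    for k in range(n_samples):
        if outside[k]:                                     # only these points call g
            n_calls += 1
            if limit_state(u[k:k + 1])[0] < 0.0:
                s_known += w[k]
        t_rest = tail[k + 1] if k + 1 < n_samples else 0.0 # bound on the ignored sum
        if t_rest < c_r * (s_known + t_rest):              # eps_k^max < C_r
            break
    return s_known / n_samples, n_calls, k + 1             # P_f^(k), calls, points used

mpp = 3.0 * np.ones(2) / np.sqrt(2.0)
pf_k, n_calls, k_used = modified_is(g, mpp, beta=3.0, n_samples=20_000)
exact = NormalDist().cdf(-3.0)
```

Points are processed in order of decreasing weight, points inside the β-sphere are skipped without a model call, and the loop stops as soon as the bound drops below `c_r`; the returned estimate can only underestimate the full IS estimate, by at most the fraction `c_r`.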

3.3 Computational cost

The modification described above reduces the number of limit state function evaluations in two different domains: the interior of the β-sphere and the region of small contributive weights. Therefore, the sample set can be divided into three categories:

where U is the universal set containing all the generated sample points. Because the sample points in sets A and B do not need to call the limit state function, the actual number of limit state function evaluations of the modified ISM is

\[ N_{\mathrm{call}} = \mathrm{card}(C) \]

where card(·) denotes the number of elements in a set, here the set C.

4 Modified ISM for estimating the global sensitivity indices

To estimate (5) from the input samples and the corresponding outputs already generated by the modified ISM for reliability analysis, the space-partition method proposed in Ref. (Zhai et al. 2014) is extended in this paper.

Suppose the sample space of input \( X_i \) is \( (b_l, b_u) \), and partition it into s successive, equiprobable, and non-overlapping subintervals \( A_k = [a_{k-1}, a_k) \), \( 1 \le k \le s \), with \( {p}_k={\int}_{a_{k-1}}^{a_k}{f}_{X_i}\left({x}_i\right){dx}_i \). Then, (6) can be equivalently expressed as

where \( {E}_{A_k}\left(\cdot \right) \) is the expectation operator when \( X_i \) is fixed in \( A_k = [a_{k-1}, a_k) \) (k = 1, 2, …, s). Ref. (Zhai et al. 2014) proved that \( \sum_{k=1}^{s} p_k V_{X_i}\left(E(I_F \mid X_i) \mid X_i \in A_k\right) \to 0 \) when \( \varDelta a = \max_k \left| a_k - a_{k-1} \right| \to 0 \). Thus, as \( \varDelta a \to 0 \), \( S_i \) is approximated by

The law of total expectation over successive non-overlapping intervals is proved as follows:

Based on (31), the law of total variance over successive non-overlapping intervals is proved as follows:

Then,

Thus, (30) can be equivalently written as

Generally, \( E(I_F \mid X_i \in A_k) \) is easier to estimate and requires far fewer sample points in each subinterval \( A_k \) than \( V(I_F \mid X_i \in A_k) \). Therefore, the conflict between the accuracy of estimating \( E(I_F \mid X_i \in A_k) \) and the convergence condition \( \varDelta a \to 0 \) of (35) is alleviated.
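
The space-partition idea can be illustrated first with plain MCS samples, for which equal-count subintervals of each input are approximately equiprobable. The sketch below uses a hypothetical limit state with a dummy third input that does not affect g. It sorts one shared failure-indicator vector by each input in turn and estimates \( S_i \approx \sum_k p_k \left(E(I_F \mid X_i \in A_k) - P_f\right)^2 / V(I_F) \); the numerator equals the outer variance \( V_{A_k}(E(I_F \mid X_i \in A_k)) \) because the subinterval means average to \( P_f \). No new model evaluations are needed per input, which is why the cost does not grow with the number of inputs.

```python
import numpy as np

def space_partition_indices(x, indicator, n_bins=None):
    """S_i from one shared vector of failure indicators (crude MCS samples).

    x: (N, n) samples drawn from f_X; indicator: (N,) boolean values of I_F.
    Each input is cut into n_bins equal-count, hence roughly equiprobable,
    successive subintervals A_k.
    """
    n_samples, n_dim = x.shape
    if n_bins is None:
        n_bins = int(np.sqrt(n_samples))                 # s = [sqrt(N)]
    y = indicator.astype(float)
    pf = y.mean()
    var_if = pf * (1.0 - pf)                             # V(I_F) for a 0/1 variable
    s = np.empty(n_dim)
    for i in range(n_dim):
        order = np.argsort(x[:, i])                      # re-sort the same outputs by X_i
        bins = np.array_split(y[order], n_bins)
        p_k = np.array([len(b) for b in bins]) / n_samples
        mean_k = np.array([b.mean() for b in bins])      # E(I_F | X_i in A_k)
        s[i] = np.sum(p_k * (mean_k - pf) ** 2) / var_if # outer variance / V(I_F)
    return s

rng = np.random.default_rng(1)
x = rng.standard_normal((200_000, 3))
# Hypothetical limit state g = 1.5 - (2 x1 + x2)/sqrt(5); x3 is a dummy input
indicator = 1.5 - (2.0 * x[:, 0] + x[:, 1]) / np.sqrt(5.0) < 0.0
s = space_partition_indices(x, indicator)
```

The dummy input receives an index close to zero; a small positive bias of order s/N remains because the subinterval means are themselves noisy.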

4.1 Estimation of \( {V}_{A_k}\left(E\left({I}_F|{X}_i\in {A}_k\right)\right) \)

According to (13), \( E(I_F \mid X_i \in A_k) \) is estimated as

Define \( IW\left(\boldsymbol{x}\right)={I}_F\left(\boldsymbol{x}\right){I}_{\beta}\left(\boldsymbol{x}\right)\frac{f_{\boldsymbol{X}}\left(\boldsymbol{x}\right)}{h_{\boldsymbol{X}}\left(\boldsymbol{x}\right)} \) and set \( IW(\boldsymbol{x}_j) = 0 \) (j = k + 1, …, N) because of the small contributive weights of these points. Then,

where \( m_k \) is the number of sample points \( {\boldsymbol{x}}_r^{(k)} \) (r = 1, …, \( m_k \)) in subinterval \( A_k \).

Using the relationship between expectation and variance together with the law of total expectation over successive non-overlapping intervals proved above, the estimator of \( {V}_{A_k}\left(E\left({I}_F|{X}_i\in {A}_k\right)\right) \) is derived as follows:

where

4.2 Estimation of \( V(I_F) \)

Because \( I_F \) takes only the values 0 and 1, \( E(I_F^2) = E(I_F) = P_f \), so \( V(I_F) = P_f(1 - P_f) \), which is estimated with \( {P}_f^{(k)} \):

\[ \widehat{V}(I_F) = P_f^{(k)}\left(1 - P_f^{(k)}\right) \]

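With importance samples, the subinterval means must be reweighted. The sketch below assumes one unbiased choice, which is not necessarily identical to the estimator given in Section 4.1: \( \widehat{E}(I_F \mid X_i \in A_k) = \sum_{\boldsymbol{x}_r \in A_k} IW(\boldsymbol{x}_r) / (N p_k) \), with \( p_k \) computed under the original marginal PDF. Summing \( p_k \widehat{E}(I_F \mid X_i \in A_k) \) over the subintervals then recovers \( P_f^{(k)} \) exactly. The sketch uses equal-count subintervals on the IS samples, so that the subintervals are narrow where the IS points concentrate. It also assumes standard normal inputs and a hypothetical linear limit state with a dummy third input, and it estimates \( V(I_F) \) by \( P_f^{(k)}(1 - P_f^{(k)}) \).

```python
import numpy as np
from statistics import NormalDist

PHI = np.vectorize(NormalDist().cdf)            # standard normal CDF, elementwise

def is_partition_indices(u, iw, n_bins=None):
    """Space-partition estimate of S_i from importance samples (hypothetical estimator).

    u  : (N, n) samples drawn from h; inputs assumed independent N(0, 1) under f.
    iw : (N,) values of IW = I_F * I_beta * f/h (zero for screened-out points).
    """
    n_samples, n_dim = u.shape
    if n_bins is None:
        n_bins = int(np.sqrt(n_samples))         # s = [sqrt(N)]
    pf = iw.sum() / n_samples                    # P_f^(k) of Eq. (22)
    var_if = pf * (1.0 - pf)                     # V(I_F) = P_f (1 - P_f)
    s = np.empty(n_dim)
    for i in range(n_dim):
        order = np.argsort(u[:, i])
        ui, iwi = u[order, i], iw[order]
        groups = np.array_split(np.arange(n_samples), n_bins)   # equal-count bins
        # Subinterval edges halfway between neighbouring groups; open outer ends
        inner = [(ui[left[-1]] + ui[right[0]]) / 2.0
                 for left, right in zip(groups[:-1], groups[1:])]
        edges = np.concatenate(([-np.inf], inner, [np.inf]))
        lo, hi = edges[:-1], edges[1:]
        # Bin probabilities under f; the upper-tail form is more accurate above 0
        p_bin = np.where(hi > 0.0, PHI(-lo) - PHI(-hi), PHI(hi) - PHI(lo))
        e_bin = np.array([iwi[grp].sum() for grp in groups]) / (n_samples * p_bin)
        s[i] = np.sum(p_bin * (e_bin - pf) ** 2) / var_if
    return s

# Hypothetical toy: g(u) = 3 - a.u with a dummy third input (a_3 = 0)
rng = np.random.default_rng(2)
a = np.array([2.0, 1.0, 0.0]) / np.sqrt(5.0)
mpp = 3.0 * a                                    # MPP of the toy limit state
u = mpp + rng.standard_normal((20_000, 3))       # u ~ h = N(mpp, I)
w = np.exp(-u @ mpp + 0.5 * mpp @ mpp)           # contributive weight f/h
iw = (3.0 - u @ a < 0.0) * w                     # IW without screening, for brevity
s = is_partition_indices(u, iw)
```

The same `u` and `iw` arrays serve every input, so the indices for all inputs come from the single set of model evaluations used for the failure probability.
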
4.3 The partition strategies

The partition scheme notably affects the estimates of the global reliability sensitivity indices. A larger s may improve the accuracy of the outer variance and guarantee the convergence condition, but it reduces the accuracy of the inner expectation, and an inaccurate inner expectation leads to errors in the estimate of \( S_i \). A smaller s may improve the accuracy of the inner expectation, but it decreases the accuracy of the outer variance and may violate the convergence condition of the space-partition method. Therefore, a compromise is necessary for a given number of input-output sample points. To balance the number of subintervals against the number of samples in each subinterval, the intermediate choice \( s=\left[\sqrt{N}\right] \) is suggested in Ref. (Li and Mahadevan 2016). This paper suggests an alternative scheme in which a fixed number of sample points is assigned to each subinterval. When N is small, each subinterval is wide and only a few subintervals are available for estimating the outer variance, so the estimates of the global reliability sensitivity indices are quite inaccurate. As N increases, the subintervals become narrower and more of them are available for estimating the outer variance. Consequently, increasing the number of sample points N ensures the accuracy of both the inner expectation and the outer variance, as well as the convergence condition of the space partition. Fig. 2 shows the process of the proposed alternative partition scheme: the blue circles represent all sample points, and the red circles mark the boundaries of the subintervals.
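
The two partition schemes differ only in how the number of subintervals s grows with N. The small sketch below (all function names are hypothetical) computes s for \( s = [\sqrt{N}] \) and for a fixed number of points per subinterval, and builds successive, non-overlapping equal-count subintervals from the sorted samples of one input.

```python
import numpy as np

def n_bins_sqrt(n_samples):
    """Scheme of Li and Mahadevan (2016): s = [sqrt(N)]."""
    return max(1, int(np.sqrt(n_samples)))

def n_bins_fixed(n_samples, points_per_bin):
    """Alternative scheme: a fixed number of points in each subinterval."""
    return max(1, n_samples // points_per_bin)

def equal_count_subintervals(x_i, n_bins):
    """Indices of successive, non-overlapping subintervals of one input X_i."""
    order = np.argsort(x_i)                 # sort samples along X_i
    return np.array_split(order, n_bins)    # near-equal counts per subinterval

for n in (400, 2500, 10000, 40000):
    s_a, s_b = n_bins_sqrt(n), n_bins_fixed(n, 50)
    print(f"N={n:6d}  sqrt scheme: s={s_a:4d}, {n // s_a} points each;  "
          f"fixed scheme: s={s_b:4d}, 50 points each")
```

With the fixed-count scheme, s grows linearly with N, so the subintervals shrink faster (helping the condition Δa → 0) while each inner expectation keeps the same sample size; with \( s = [\sqrt{N}] \), both the number of subintervals and the points per subinterval grow as \( \sqrt{N} \).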

5 Case studies

In this section, a roof truss structure and a composite cantilever beam structure are used to verify the effectiveness of the proposed method. In both studies, a low-discrepancy sequence sampling procedure (Sobol 1976; Sobol 1998) is used to generate the sample points of the model input variables. The MPP can be found by many existing methods (Hasofer and Lind 1974; Rashki et al. 2012); in this paper, it is computed by the advanced first-order reliability method (AFORM) (Hasofer and Lind 1974). According to Hasofer and Lind (1974), the AFORM algorithm is globally convergent when the limit state function is continuous and differentiable.

5.1 Case study I: A roof truss structure

A roof truss is shown in Fig. 3. The top boom and the compression bars are made of reinforced concrete, and the bottom boom and the tension bars are made of steel. A uniformly distributed load q is applied to the roof truss and can be converted into the nodal load P = ql/4. The vertical deflection \( \Delta_C \) of node C, obtained by mechanical analysis, is a function of the input variables:

where \( A_C \) and \( A_S \) are the cross-sectional areas and \( E_C \) and \( E_S \) the elastic moduli of the concrete and steel bars, respectively, and l is the length. Considering safety and serviceability, the limit state function is established as follows:

where ε is the failure threshold. The random input variables are assumed to be independent normal variables with the distribution parameters listed in Table 1.

The sample reduction ratio is defined to measure the efficiency of each reliability analysis method:

where N is the number of samples used in MCS and \( N_{\mathrm{call}} \) is the number of samples evaluated by the compared method, including those used to find the MPP where applicable. The higher the sample reduction ratio, the more efficient the method.

Tables 2, 3, and 4 show the failure probabilities estimated by MCS, ISM, TIS, and the proposed modified ISM; the three tables correspond to different maximum relative error limits, and the MPP is found with 35 model evaluations. Five observations can be drawn from these tables:

(1) The proposed modified ISM inherits the variance reduction property of the original ISM and remarkably decreases the computational cost, which can be adjusted flexibly through the maximum relative error limit.

(2) The higher the maximum relative error limit, the larger the sample reduction ratio.

(3) As the maximum relative error limit decreases, the failure probability estimated by the proposed modified ISM approaches the true value.

(4) In this case study, the sample reduction ratio of the proposed ISM is more than twice that of the TIS method when the maximum relative error limit is 5%, twice that of the TIS method when the limit is 2%, and approximately 1.5 times that of the TIS method when the limit is 0.5%.

(5) The sample reduction ratio of the modified ISM increases with the number of samples N, mainly because the cost of finding the MPP becomes negligible compared with the savings in sample evaluations.

To further illustrate the ability of the modified ISM to handle small failure probabilities, the failure threshold is varied for comparison. Tables 3, 5, and 6 give the failure probabilities for different failure thresholds with the maximum relative error limit set to 2%. The MPPs are found with 35, 56, and 49 model evaluations for the three failure thresholds, respectively. The numerical results lead to the following conclusions:

(1) The proposed modified ISM is competent for cases with small failure probability.

(2) At an acceptable precision level, the sample reduction ratio of the proposed method is twice that of the TIS method.

(3) At the same precision level, the smaller the failure probability, the larger the sample reduction ratio. The main reason is that when the system is not highly reliable, the β-sphere is small, so few samples fall inside it and the savings in function evaluations are limited.

Table 7 shows the results estimated by MVFORM, AFORM, and MaxEnt + M-DRM. These methods require 8, 35/56/49, and 25 model evaluations, respectively, and are therefore more efficient than the sampling-based methods. However, for this problem their accuracy is lower than that of the sampling-based methods, which further illustrates the generality of sampling-based methods.

By reusing the samples generated in failure probability estimation, the global reliability sensitivity indices can be obtained as byproducts.

Figs. 4 and 5 show the global reliability sensitivity indices for ε = 0.025. The figures show that acceptable accuracy is achieved with either partition scheme, \( s=\left[\sqrt{N}\right] \) or a fixed number of sample points in each subinterval. Table 8 compares the global reliability sensitivity indices estimated by the proposed modified ISM with those of the single-loop IS method proposed in Ref. (Wei et al. 2012). The importance rankings of the random input variables obtained by Ref. (Wei et al. 2012), the modified ISM, and MCS are identical, i.e., \( A_C > q > E_S > A_S > l > E_C \). The standard deviation (SD) of each estimate \( {\widehat{S}}_i \) is used to measure convergence. Table 8 shows that the SDs of the proposed method are smaller than those of the method in Ref. (Wei et al. 2012), which demonstrates the robustness of the proposed modified ISM. The slight errors of the proposed modified ISM in global reliability sensitivity analysis may come from the small errors of the modified ISM in reliability analysis and from the space-partition approximation in sensitivity analysis.

Global reliability sensitivity indices estimated by the modified ISM combined with the space-partition method (Modified ISM(2) denotes the partition scheme with a fixed number of sample points in each subinterval; the references are MCS results obtained with a large number of model evaluations)

5.2 Case study II: A composite cantilever beam structure

A composite cantilever beam structure with the load \( F_0 \) is shown in Fig. 6. The displacement \( \Delta_{\mathrm{Tip}} \) of the free end is obtained by mechanical analysis of the composite structure:

where \( F_0 \), L, and h are the applied load per unit width, the length of the beam, and the height of the beam, respectively, and \( E_L \), \( E_T \), \( G_{LT} \), and \( v_{LT} \) are the longitudinal Young's modulus, transverse Young's modulus, shear modulus, and Poisson's ratio, respectively. Because the tip displacement must not exceed 9.59 cm, the limit state function of the reliability analysis is established as follows:

All the input variables are normal and mutually independent, with the distribution parameters listed in Table 9.

Table 10 shows the failure probability results for ε = 9.59 cm and \( C_r = 2\% \). The results demonstrate that the proposed modified ISM not only inherits the advantages of the original ISM but also reduces the number of model evaluations by more than 5% compared with the original ISM. The failure probability in this case is 0.0876. Here the savings come mainly from the small-contributive-weight domain; because β is small in this case, hardly any sample points fall inside the β-sphere. For comparison, ε is adjusted to 19.59 cm and 29.59 cm, which gives smaller failure probabilities of 0.00197 and \( 1.068 \times 10^{-4} \), respectively. Tables 11 and 12 show that the number of sample points inside the β-sphere increases and that, for both of these thresholds, the number of model evaluations saved in the small-contributive-weight domain is about as large as that saved in the β-sphere.

Table 13 also lists the failure probabilities of this composite cantilever beam structure estimated by AFORM and MaxEnt + M-DRM. AFORM uses 24 model evaluations to find the MPP for each failure threshold, whereas MaxEnt + M-DRM uses only 29 model evaluations to estimate the failure probabilities for all the failure thresholds. Table 13 shows that AFORM is more accurate than MaxEnt + M-DRM, which cannot accurately estimate the small failure probability in this case study.

Using the \( s=\left[\sqrt{N}\right] \) partition scheme and reusing the sample points from the failure probability estimation, the global reliability sensitivity indices are computed and listed in Table 14. The results in Table 14 demonstrate that the proposed method converges faster than the existing efficient single-loop IS method. The importance ranking is \(h > L > F_0 > G_{LT} > E_L > E_T = \nu_{LT}\), which indicates that the uncertainty of \(h\) has the greatest effect on the failure probability; reducing the uncertainty of \(h\) therefore yields the largest reduction in failure probability. The sensitivity indices of \(E_T\) and \(\nu_{LT}\) are close to zero, so the uncertainty of these two input variables can be neglected.
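Reusing the reliability samples for the sensitivity indices can be sketched in the same spirit. The sketch assumes the variance-based definition \(S_i = \mathrm{Var}[E(I_F \mid X_i)] / \mathrm{Var}(I_F)\), standard normal inputs split into \(s = [\sqrt{N}]\) equal-probability subintervals \(A_k\), and the law of total variance over those non-overlapping subintervals, so that \(\mathrm{Var}[E(I_F \mid X_i)] \approx \sum_k p_k (P_{f,k} - P_f)^2\), with each conditional failure probability \(P_{f,k}\) estimated from the same weighted IS samples. The helper name `reliability_sensitivity`, the three-variable linear limit state and the use of all IS samples (rather than the screened subset) are illustrative assumptions, not the paper's code.

```python
import math
from statistics import NormalDist

import numpy as np


def reliability_sensitivity(u, fail, w, s=None):
    """First-order reliability sensitivity indices by space partition.

    u    : (N, d) importance samples in standard normal space
    fail : (N,) boolean failure indicators from the reliability run
    w    : (N,) contributive weights f(u) / h(u)
    """
    n, d = u.shape
    s = s or int(math.sqrt(n))                  # s = [sqrt(N)]
    contrib = fail * w                          # IS contribution of each sample
    pf = contrib.mean()                         # failure probability estimate
    var_indicator = pf * (1.0 - pf)             # Var(I_F)
    # Interior edges of s equal-probability subintervals of N(0, 1)
    edges = np.array([NormalDist().inv_cdf(k / s) for k in range(1, s)])
    p_k = 1.0 / s
    indices = np.empty(d)
    for i in range(d):
        k = np.searchsorted(edges, u[:, i])     # subinterval index 0..s-1
        # P(F | X_i in A_k) = P(F and X_i in A_k) / p_k, via IS weights
        pf_k = np.bincount(k, weights=contrib, minlength=s) / (n * p_k)
        # Law of total variance: Var[E(I_F | A_k)] = sum_k p_k (P_f,k - P_f)^2
        indices[i] = np.sum(p_k * (pf_k - pf) ** 2) / var_indicator
    return indices


# Illustrative run: g = 3 - (u1 + u2) / sqrt(2); u3 does not enter g.
rng = np.random.default_rng(1)
n = 20000
mpp = np.array([3.0 / math.sqrt(2.0), 3.0 / math.sqrt(2.0), 0.0])
u = mpp + rng.standard_normal((n, 3))           # IS density h = N(mpp, I)
w = np.exp(-u @ mpp + 0.5 * mpp @ mpp)          # f/h for a mean shift
fail = 3.0 - (u[:, 0] + u[:, 1]) / math.sqrt(2.0) < 0.0
S = reliability_sensitivity(u, fail, w)
print("first-order reliability sensitivity indices:", np.round(S, 4))
```

The third input does not enter the limit state, so its index should come out near zero, mirroring the behaviour of \(E_T\) and \(\nu_{LT}\) in Table 14.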

6 Conclusion

This paper aims to improve the efficiency of ISM in reliability analysis. The proposed modified ISM inherits the advantages of the original ISM and further reduces its computational cost. Firstly, based on the idea of TIS, we screen out the samples in the β-sphere. Secondly, we define the contributive weight as the ratio of the original PDF to the importance sampling PDF, and the samples with small contributive weights are screened out under an acceptable precision level. Because the samples falling inside the β-sphere are safe, the model does not need to be run to determine their states. Samples with small contributive weights make only a small contribution to the failure probability; therefore, we regard them as safe directly without running the model. Thus, the proposed modified ISM reduces the computational cost of ISM in two different domains. Because the modified ISM is based on the original ISM, some limitations of the original ISM also exist in the modified ISM. To further estimate the global reliability sensitivity indices as byproducts, the original space-partition method for variance-based sensitivity analysis is extended to global reliability sensitivity analysis, and the law of total variance over successive non-overlapping intervals is additionally proved. The analyses of a roof truss structure and a composite cantilever beam structure verify the effectiveness of the proposed modified ISM in both reliability analysis and global reliability sensitivity analysis.

References

Au SK, Beck JL (1999) A new adaptive importance sampling scheme. Struct Saf 21:135–158

Au SK, Beck JL (2002) Importance sampling in high dimensions. Struct Saf 25:139–163

Bourinet JM, Deheeger F, Lemaire M (2011) Assessing small failure probabilities by combined subset simulation and support vector machines. Struct Saf 33(6):343–353

Cui LJ, Lu ZZ, Zhao XP (2010) Moment-independent importance measure of basic random variable and its probability density evolution solution. Sci China Technol Sci 53(4):1138–1145

Der Kiureghian A, De Stefano M (1991) Efficient algorithm for second-order reliability analysis. J Eng Mech ASCE 117(12):2904–2923

Echard B, Gayton N, Lemaire M (2011) AK-MCS: An active learning reliability method combining Kriging and Monte Carlo Simulation. Struct Saf 33:145–154

Grooteman F (2008) Adaptive radial-based importance sampling method for structural reliability. Struct Saf 30:533–542

Harbitz A (1986) An efficient sampling method for probability of failure calculation. Struct Saf 3:109–115

Hasofer AM, Lind NC (1974) An exact and invariant first order reliability format. J Eng Mech ASCE 100(1):111–121

Hu Z, Mahadevan S (2016) Global sensitivity analysis-enhanced surrogate (GSAS) modeling for reliability analysis. Struct Multidiscip Optim 53:501–521

Kim SH, Na SW (1997) Response surface method using vector projected sampling points. Struct Saf 19(1):3–19

Li CZ, Mahadevan S (2016) An efficient modularized sample-based method to estimate the first-order Sobol' index. Reliab Eng Syst Saf 153:110–121

Li LY, Lu ZZ, Feng J, Wang BT (2012) Moment-independent importance measure of basic variable and its state dependent parameter solution. Struct Saf 38:40–47

Lu ZZ, Feng YS (1995) An importance sampling function for computing failure probability for a limit state equation with multiple design point. Acta Aeronautica et Astronautica Sinica 16(4):484–487 (in Chinese)

Melchers RE (1989) Importance sampling in structural systems. Struct Saf 6:3–10

Papadrakakis M, Lagaros N (2001) Reliability-based structural optimization using neural networks and Monte Carlo simulation. Comput Methods Appl Mech Eng 191(32):3491–3507

Rashki M, Miri M, Moghaddam MA (2012) A new efficient simulation method to approximate the probability of failure and most probable point. Struct Saf 39:22–29

Sobol IM (1976) Uniformly distributed sequences with additional uniformity properties. USSR Comput Math Math Phys 16:236–242

Sobol IM (1998) On quasi-Monte Carlo integrations. Math Comput Simul 47:103–112

Song H, Choi KK, Lee CI, Zhao L, Lamb D (2013) Adaptive virtual support vector machine for reliability analysis of high-dimensional problems. Struct Multidiscip Optim 47:479–491

Wei P, Lu ZZ, Hao WR, Feng J, Wang BT (2012) Efficient sampling methods for global reliability sensitivity analysis. Comput Phys Commun 183:1728–1743

Wei PF, Lu ZZ, Song JW (2014) Extended Monte Carlo simulation for parametric global sensitivity analysis and optimization. AIAA J 52(4):867–878

Xu L, Cheng GD (2003) Discussion on: moment methods for structural reliability. Struct Saf 25:193–199

Zhai QQ, Yang J, Zhao Y (2014) Space-partition method for the variance-based sensitivity analysis: Optimal partition scheme and comparative study. Reliab Eng Syst Saf 131:66–82

Zhang XF, Pandey MD (2013) Structural reliability analysis based on the concepts of entropy, fractional moment and dimensional reduction method. Struct Saf 43:28–40

Zhang XF, Pandey MD, Zhang YM (2014) Computationally efficient reliability analysis of mechanisms based on a multiplicative dimensional reduction method. J Mech Des ASME 136(6):061006

Zhao YG, Ono T (1999a) A general procedure for first/second-order reliability method (FORM/SORM). Struct Saf 21(2):95–112

Zhao YG, Ono T (1999b) New approximations for SORM: part 1. J Eng Mech ASCE 125(1):79–85

Zhao YG, Ono T (2001) Moment method for structural reliability. Struct Saf 23(1):47–75

Zhao YG, Ono T (2004) On the problems of the fourth moment method. Struct Saf 26(3):343–347

Zhao YG, Lu ZH, Ono T (2006) A simple-moment method for structural reliability. J Asian Archit Build Eng 5(1):129–136

Zhou CC, Lu ZZ, Zhang F, Yue ZF (2015) An adaptive reliability method combining relevance vector machine and importance sampling. Struct Multidiscip Optim 52:945–957

Acknowledgements

This work was supported by the Natural Science Foundation of China (Grant 51475370, 51775439) and the Innovation Foundation for Doctor Dissertation of Northwestern Polytechnical University (Grant CX201708).


Cite this article

Yun, W., Lu, Z. & Jiang, X. A modified importance sampling method for structural reliability and its global reliability sensitivity analysis. Struct Multidisc Optim 57, 1625–1641 (2018). https://doi.org/10.1007/s00158-017-1832-z


DOI: https://doi.org/10.1007/s00158-017-1832-z