Abstract

Neural networks have been proved efficient in improving many machine learning tasks such as convolutional neural networks and recurrent neural networks for computer vision and natural language processing, respectively. However, the inputs of these deep learning paradigms all belong to the type of Euclidean structure, e.g., images or texts. It is difficult to directly apply these neural networks to graph-based applications such as node classification since graph is a typical non-Euclidean structure in machine learning domain. Graph neural networks are designed to deal with the particular graph-based input and have received great developments because of more and more research attention. In this paper, we provide a comprehensive review about applying graph neural networks to the node classification task. First, the state-of-the-art methods are discussed and divided into three main categories: convolutional mechanism, attention mechanism and autoencoder mechanism. Afterward, extensive comparative experiments are conducted on several benchmark datasets, including citation networks and co-author networks, to compare the performance of different methods with diverse evaluation metrics. Finally, several suggestions are provided for future research based on the experimental results.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

With the rapid increase in computing resources and trainable data, the performance of neural networks has been excellent improved in various fields. In computer vision area, convolutional neural networks (CNNs) [1] are widely exploited for different research directions such as image classification [2, 3], semantic segmentation [4, 5] and image captioning [6, 7]. In natural language processing area, recurrent neural networks (RNNs) [8] or long short-term network (LSTM) [9] are used for several important tasks including sentiment analysis [10, 11], machine translation [12, 13], question-answering system [14, 15]. In addition, deep neural networks can also be used for cancer detection [16, 17], earthquake magnitude prediction [18, 19], or intelligent game [20].

Deep learning architectures have achieved great success in the Euclidean domain, including image, text and audio [21, 22]. As one of the typical non-Euclidean structures in the machine learning area, graph data have the characteristics of arbitrary size, complex topological structure and always have an unfixed node ordering [23]. Therefore, it is difficult to directly exploit existing learning paradigms (e.g., convolutional or pooling operation) to graph structure data. However, graph data are a ubiquitous and essential structure in machine learning domain due to the powerful ability to represent objects and their relationships in various scenarios such as community detection [24, 25], traffic flow prediction [26, 27], knowledge graphs [28, 29]. It is imperative to generalize these successful neural networks to graph analysis, and more and more researchers are devoting themselves to do this task [30]. As a result, graph neural networks (GNNs) have been developed rapidly and achieved a series of breakthroughs [31,32,33].

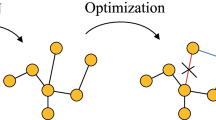

In the past few years, GNNs have been widely used in various graph analysis tasks, including node-focused tasks (e.g., node classification, link prediction) [34,35,36] and graph-focused tasks (e.g., graph similarity detection, graph classification) [37,38,39]. Among them, node classification is one of the most typical research directions of graph analysis due to the widespread application scenarios. In particular, the objective of the node classification task is to predict a particular class for each unlabeled node in the graph based on the graph information [40]. For example, node classification can predict the research topic to which each article belongs in the citation networks [41, 42]. In the protein-protein interaction network, each node can be assigned several gene ontology types [43, 44]. Figure 1 shows the objective of semi-supervised node classification in which only a few nodes have labels in the training dataset.

To provide a comparison of different algorithms in node classification, we present a review of graph neural networks based on comprehensive experiments. The contributions of this paper are shown as follows:

-

This survey provides a comprehensive review of existing graph neural network models in the scene of node classification. It introduces a new taxonomy of these models and presents several popular algorithms for each category.

-

Several popular algorithms derived from each category are compared based on a comprehensive experiment. In detail, these algorithms are rerunning on several popular benchmark datasets with four evaluation metrics. Besides, an objective analysis is conducted based on the experiment results.

-

According to the experimental analysis, several challenges of existing GNNs models are discussed and several future research directions are provided for interested researchers.

The rest of this paper is organized as follows. In Sect. 2, we first define several commonly used notations and then introduce some definitions related to node classification. Then, various graph neural network models of different categories are introduced in Sect. 3. In Sect. 4, we conduct experiments about node classification by using graph neural networks on several datasets and discuss the experimental results. Based on the experimental analysis, several open problems and future directions are provided in Sect. 5. In Sect. 6, a conclusion of this survey is conducted.

2 Notations and definitions

In this section, we provide a set of commonly used notations and present some critical definitions that will be used in this article. Specifically, we provide a number of notations summarized in Table 1 before going further into the following contents.

The degree matrix \({\mathbf {D}}\) is derived from the corresponding adjacency matrix \({\mathbf {A}}\) and can be defined as

For other variables, we use lowercase italic characters to represent scalers, bold lowercase characters to denote vectors and bold uppercase characters to represent matrices. Then, several definitions are introduced in the following.

Definition 1

(graph) Let \({\mathcal {G}} = ({\mathcal {V}}, {\mathcal {E}})\) be a graph where \({\mathcal {V}} = \left\{ v_1, v_2, \ldots , v_n \right\} \) is a set of nodes, \({\mathcal {E}} \subseteq {\mathcal {V}} \times {\mathcal {V}}\) is a set of edges between nodes in \({\mathcal {V}}\).

Note that \({\mathcal {G}}\) can be weighted or unweighted (the element’s value of \({\mathbf {A}}\) is either 0 or 1), directed or undirected. In this paper, we focus on dealing with undirected graphs, which are augmented with the node attribute feature \({\mathbf {X}} = \left\{ {\mathbf {X}}_1, {\mathbf {X}}_2, \ldots , {\mathbf {X}}_n \right\} \), where \({\mathbf {X}}_i\) is the corresponding feature vector of node \(v_i\). In addition, for unattributed graphs, one-hot encoding is used as the features for all nodes, i.e., \({\mathbf {X}}={\mathbf {I}}\).

Definition 2

(K-hop neighbors) Let \({\mathcal {G}} = ({\mathcal {V}}, {\mathcal {E}})\) be a graph, the k-hop neighbors of \(v_i\) denote the set of nodes which are exactly k hops away from \(v_i\) and is formulated as

Here, \({\text {sp}}(i,j)\) denotes the shortest path between \(v_i\) and \(v_j\), and it will be infinite when there is no edge between them. K represents the maximum number of hops.

Definition 3

(node classification) Let \({\mathcal {G}} = ({\mathcal {V}}, {\mathcal {E}})\) be a graph, the objective of node classification is to produce labels for each node which can be formed as \({\varvec{y}} = \left\{ y_1, y_2, \ldots , y_n \right\} \) or \({\mathbf {Y}} = \left\{ {\varvec{y}}_1, {\varvec{y}}_2, \ldots , {\varvec{y}}_n \right\} \) for multi-labels classification.

Note that the information of graph structure, node features, edge connections can be helpful to complete the node classification task.

3 Graph neural networks

In this section, we provide a comprehensive overview of various graph neural networks, which can be used for node classification, through three categories: convolutional mechanism, attention mechanism, and autoencoder mechanism. Several popular algorithms of each category are introduced.

3.1 Convolutional mechanism

Graph convolutional mechanism is one of the most commonly used information aggregation paradigms in graph analysis. The basic idea of this mechanism is that it utilizes convolutional or pooling operations on graph structure to extract higher representation for each node and then used in node classifier. GNNs models based on this mechanism are denoted as graph convolutional networks (GCNs), which are unrelated to node order and different from CNNs on images.

3.1.1 ChebNet

In order to generalize operators of CNNs to graph domain, Defferrard et al. [45] proposed a spectral-based graph convolutional network called ChebNet, which contains a fast localized spectral graph filter derived from Chebyshev polynomial. Specifically, ChebNet includes three main steps: the design of localized convolutional filters, a graph coarsening procedure, and a graph pooling operation.

Graph Laplacian is an important operator of spectral graph analysis [46] and can be defined as \({\mathbf {L}} = {\mathbf {D}}- {\mathbf {A}} \in {\mathbb {R}}^{n \times n}\). In addition, \({\mathbf {L}}\) has a normalized form which is formed as

The diagonalized form of graph Laplacian is defined as \({\mathbf {L}}={\mathbf {U}} {\varvec{\varLambda }} {\mathbf {U}}^{T},\) where \({\varvec{\varLambda }}\) is the diagonal matrix of eigenvalues derived from \({\mathbf {L}}\) and \({\mathbf {U}}\) denotes the Fourier basis. \({\text {diag}}(\cdot )\) denotes a diagonal matrix derived from the input matrix or vector.

Then, a spectral filter \(g_{\theta }\) is designed to filter the input signal \({\mathbf {x}} \in {\mathbb {R}}^n\) (each element associate with a node), which is shown as follows:

Here, \(g_{\theta }({\varvec{\varLambda }}) = {\text {diag}}(\theta )\) is a nonparametric filter, \(\theta \in {\mathbb {R}}^n\) denotes the Fourier coefficients, and \({\mathbf {U}}^T{\mathbf {x}} \in {\mathbb {R}}^{n}\) represents the graph Fourier transform of \({\mathbf {x}}\).

However, the nonparametric filter \(g_{\theta }\) cannot localize in space and is complex to learn. To alleviate these problems, Defferrard et al. [45] introduce a polynomial filter and compute \(g_{\theta }({\mathbf {L}})\) in a recursive formulation based on the Chebyshev polynomial function. As a result, the filter can be parametrized as

where \(\tilde{{\varvec{\varLambda }}}=2 {\varvec{\varLambda }} / \lambda _{\max }-{\mathbf {I}}_{n}\) denotes a diagonal matrix which all elements in the range \([-1,1],\) \(\lambda _{max}\) represents the maximum eigenvalue of \({\mathbf {L}}\) and the parameter \(\theta \in {\mathbb {R}}^K\) denotes all coefficients of the Chebyshev polynomial \(T_{k}({\mathbf {x}})\). In detail, \(T_{k}({\mathbf {x}})\) can be computed in a recursive way \(T_{k}({\mathbf {x}})=2 {\mathbf {x}} T_{k-1}({\mathbf {x}})-T_{k-2}({\mathbf {x}})\), where \(T_{0}({\mathbf {x}})=1\) and \(T_{1}({\mathbf {x}})={\mathbf {x}}\). Thus, Eq. (4) can be reformed as follows:

where \(\tilde{{\mathbf {L}}}=2 {\mathbf {L}} / \lambda _{\max }-{\mathbf {I}}_{n}\) is a scaled graph Laplacian and can then be used to evaluate the k-th order Chebyshev polynomial \(T_{k}(\tilde{{\mathbf {L}}}) \in {\mathbb {R}}^{n \times n}.\) Thus, the central vertex depends on its K-hop neighbors since Eq. (6) is a K-order polynomial in the Laplacian. Benefit from Eq. (6), ChebNet bypasses the computation of the graph Fourier basis.

Furthermore, Defferrard et al. [45] denoted \(\overline{{\mathbf {x}}}_{k}=T_{k}(\tilde{{\mathbf {L}}}) {\mathbf {x}} \in {\mathbb {R}}^{n}\) and the hidden state is recursively updated as \(\overline{{\mathbf {x}}}_{k}=2 \tilde{{\mathbf {L}}} \overline{{\mathbf {x}}}_{k-1}-\overline{{\mathbf {x}}}_{k-2}\), where \(\overline{{\mathbf {x}}}_{0}={\mathbf {x}}\) and \(\overline{{\mathbf {x}}}_{1}=\tilde{{\mathbf {L}}} {\mathbf {x}}\). This iterative process is shown in Fig. 2. Finally, the complete filter can be defined as \({\mathbf {z}} = g_{\theta }({\mathbf {L}}){\mathbf {x}} = \left[ \overline{{\mathbf {x}}}_{0}, \overline{{\mathbf {x}}}_{1}, \ldots , \overline{{\mathbf {x}}}_{K-1}\right] \theta \) which contains \({\mathcal {O}}(Km)\) operations.

Illustration of the iterative process used in ChebNet. The initial states \(\overline{{\mathbf {x}}}_{0}\) and \(\overline{{\mathbf {x}}}_{1}\) is set by \(\overline{{\mathbf {x}}}_{0} = {\mathbf {x}}\) and \(\overline{{\mathbf {x}}}_{1} =\tilde{{\mathbf {L}}} {\mathbf {x}}\), respectively. The final representation \(\overline{{\mathbf {x}}}_{K}\) is obtained by several iterative computations

To provide a meaningful graph for pooling operation, similar nodes should be clustered together where this process can be regarded as graph clustering, which is NP-hard. Thus, Defferrard et al. [45] utilized the Graclus multi-level clustering algorithm [47] to aggregate similar nodes and generate a coarsening graph. However, the order of vertices is not arranged in a meaningful way after coarsening. Thus, it needs to store all matched vertices when applying pooling operation, which would cause inefficient memory and computation. In order to accelerate the pooling operations, Defferrard et al. [45] proposed a pooling strategy that has the same efficiency as a 1D pooling. In detail, the procedure contains two steps: (i) construct a balanced binary tree and (ii) rearrange the nodes. As a result, it makes the pooling fast and suitable for parallel architectures.

3.1.2 GCN

Instead of using the information derived from K-hop neighbors to represent each node introduced in ChebNet [45], Kipf and Welling [48] also propose a spectral-based graph convolutional network (GCN) that encapsulates the hidden representation of each target node by aggregating the feature information of its first-order approximate neighbors [49]. Then, a deep neural network model is built by stacking such graph convolutional layers multi-times and is then used to obtain the final hidden representation of each node. As a result, the learned representation also contains information of its multi-hop neighbors similar to ChebNet [45].

Specifically, Kipf and Welling [48] set the number of hops as \(K=1\). Therefore, Eq. (6) becomes a linear function and is reformed as

with only two parameters \(\theta _{0}\) and \(\theta _{1}.\) Furthermore, Kipf and Welling [48] set the maximum eigenvalue as a constant \(\lambda _{max}=2\) and used a unique parameter \(\theta =\theta _{0}=-\theta _{1}\) to address the problem of overfitting on the local structure of a graph and minimize the number of operations. Under this setting and Eqs. (3), (7) is reformed as

Here, all eigenvalues of expression \({\mathbf {I}}_{n}+{\mathbf {D}}^{-\frac{1}{2}} {\mathbf {A}} {\mathbf {D}}^{-\frac{1}{2}}\) are lying in [0, 2] and the parameters of the filter are shared by all layers.

Note that stacking such convolutional operator to build a deep neural network model might produce some problems such as numerical instabilities and exploding/vanishing gradients. To solve these problems, Kipf and Welling [48] used a \( r enormalization~trick\) strategy for the above expression as

where \(\tilde{{\mathbf {A}}}={\mathbf {A}}+{\mathbf {I}}_{n}\) is the adjacency matrix of the graph with a self-connection. Similar to Eq. (1), \(\tilde{{\mathbf {D}}}_{i i}=\sum _{j} \tilde{{\mathbf {A}}}_{i j}\) is the degree matrix with respect to \(\tilde{{\mathbf {A}}}.\)

Then, Kipf and Welling [48] generalized the definition of Eq. (9), which is used for input signal \({\mathbf {x}} \in {\mathbb {R}}^n\) with only one channel, to the situation where each signal has multiple channels \({\mathbf {X}} \in {\mathbb {R}}^{n \times d}\). Here, d denotes the number of input channels (i.e., the dimension of node feature vector). To this end, the convolutional filter for signal \({\mathbf {X}}\) is defined as follows:

Here, \({\mathbf {W}} \in {\mathbb {R}}^{d \times f}\) is the matrix of filter parameters, \({\mathbf {Z}} \in {\mathbb {R}}^{n \times f}\) is the convolved feature matrix for all nodes and f denotes the dimensional of the embedding feature.

After defining the convolutional filter of each layer, Kipf and Welling [48] intent to build a multi-layer GCN with a layer-wise propagation rule:

where \({\mathbf {W}}^{(l)}\) is a trainable weighted matrix, \({\mathbf {H}}^{(l)} \in {\mathbb {R}}^{n \times h}\) is the matrix of hidden states for the l-th layer, and h denotes the dimension of higher representation. \({\mathbf {H}}^{(0)}\) is initialized by using the input signal \({\mathbf {X}}.\)

For semi-supervised node classification, Kipf and Welling [48] proposed a two-layer GCN model, which is schematically depicted in Fig. 3. In practice, Kipf and Welling [48] computed \(\hat{{\mathbf {A}}}=\tilde{{\mathbf {D}}}^{-1/2} \tilde{{\mathbf {A}}} \tilde{{\mathbf {D}}}^{-1/2}\) in pre-processing phase and introduced a forward propagation model as follows:

Schematic diagram of a two-layer GCN model. The dark green denotes target nodes that need to aggregate information from neighbors. The orange and purple lines denote the information stream for first-hop and second-hop neighbors, respectively. As a result, the output contains both first-hop and second-hop information

where \({\mathbf {W}}^{(0)} \in {\mathbb {R}}^{d \times h}\) is a matrix that maps the input feature to the hidden representation, \({\mathbf {W}}^{(1)} \in {\mathbb {R}}^{h \times f}\) is a hidden-to-output matrix, and \(\sigma (\cdot )\) is implemented by a \({\text {softmax}}\) function.

3.1.3 GraphSAGE

However, ChebNet and GCN are inherently transductive that need all nodes are existing during the training process and cannot generalize to previously unseen nodes. In order to enable a model to become inductive that has the ability to deal with those unseen nodes, Hamilton et al. [50] proposed a spatial-based graph convolutional network called GraphSAGE (SAmple and aggreGatE), which utilizes both the feature information of nodes (e.g., the TF-IDF feature when one node represents for one document) and the structural attributes of a node’s local neighborhood to learn a higher representation for each node.

Instead of training different hidden representations for each node separately, Hamilton et al. [50] designed a group of aggregators to learn embeddings by combining the information of nearby nodes of the current central node. Then, these aggregators are combined to form the forward propagation algorithm of GraphSAGE. There are existing K aggregators, which can be denoted as \({\text {AGGREGATE}}_{k}, \forall k \in \{1, \ldots , K\},\) and K parameter matrices \({\mathbf {W}}^{k}, \forall k \in \{1, \ldots , K\},\) which are used as the transformer between different hops.

In order to obtain the hidden state \({\mathbf {h}}_i^k\) of each target node \(v_i\) in each step \(k \in \left\{ 1, \ldots , K\right\} ,\) Hamilton et al. [50] firstly used \({\text {AGGREGATE}}_{k}\) to produce the aggregated neighborhood vector \({\mathbf {h}}_{N(i)}^{k}\) by using the information of all immediate neighbors of \(v_i\) which was produced in the previous time step. Then, \({\mathbf {h}}_{N(i)}^{k}\) is concatenated with the previous hidden state \({\mathbf {h}}_i^{k-1}\) of node \(v_i,\) and this concatenated vector is then transformed by \({\mathbf {W}}^{k}\) with an activation function to form the current state of the target node \(v_i\). Repeats the above process, the final feature representation of each node \({\mathbf {Z}}_i\) is produced in the K-th step.

In order to reduce the complexity of GraphSAGE and scale to a large graph, Hamilton et al. [50] utilized a sample strategy to choose a fixed-size set of neighbors for each target node. The illustration of the GraphSAGE model with a sampling strategy is shown in Fig. 4.

Illustration of GraphSAGE model with a sampling strategy. Here, the maximum number of neighbors for each hop is set as one and the maximum hop is set as two. The orange and purple edges denote different aggregators. After sampling, the information of white nodes are not used in the aggregation stage

Therefore, the complexity of GraphSAGE becomes \(O\left( \prod _{k=1}^{K} S_{k}\right) ,\) where \(S_{k}\) denotes the size of the neighbor’s set in the k-th step.

Additionally, Hamilton et al. [50] introduced three different aggregators, including mean aggregator, LSTM aggregator and pooling aggregator. In the mean aggregator, the aggregated neighbor vector \({\mathbf {h}}_{N(i)}^{k}\) is derived by simply utilizing the element-wise mean operation on the hidden state of neighbor \(\left\{ {\mathbf {h}}_{j}^{k-1}, \forall v_j \in N(i)\right\} ,\) which can be formed as

Equation (13) is similar to the convolutional operator in the GCN [48] and can be modified to an inductive variant from the original transductive framework. In the LSTM aggregator, Hamilton et al. [50] simply used an unordered sequence of node’s neighbors as the input of an LSTM model. In the pooling method, an element-wise max-pooling operator is used to gather information for each node, which is shown as follows:

where \(\max \) represents the pooling operator. Note that the mean and pooling aggregator are symmetric means that they are permutation invariant.

3.1.4 APPNP

Recently, Xu et al. [51] proposed an algorithm to reduce the complexity of GNNs by using the relationship between a random walk algorithm and a common message passing algorithm. However, this model considers a graph as a whole and loses the ability to capture the different information of local neighbors for each starting root node. Instead of using the random walk, Klicpera et al. [52] utilized the relationship between GCN and PageRank [53] to formulate a personalized PageRank-based propagation architecture. This propagation scheme is then used to design a graph convolution-based network called personalized propagation of neural prediction (PPNP).

In detail, Klicpera et al. [52] defined the personalized PageRank for each starting node \(v_i\) by using a recursive formulation, which is shown as follows:

where \({\mathbf {R}}_{i}\) is a one-hot indicator vector that denotes the teleport vector of node \(v_i\), and \(\alpha \in (0,1]\) is the teleport (or restart) probability that can be used to control the range of neighbor for each target node. Thus, Eq. (15) can be solved as the following form

Note that \({\mathbf {R}}_{i}\) is helpful to preserve the information of a node’s local neighbors. Further, Klicpera et al. [52] defined the fully personalized PageRank matrix by stacking all \(\varvec{\pi }_{\mathrm {ppr}}\left( {\mathbf {R}}_{i}\right) \) and replacing it with an identity matrix. The final representation of this PageRank matrix is shown as

Here, each element of \(\varvec{\varPi }_{\mathrm {ppr}}\) represents the influence score between two nodes. For example, the influence score of node \(v_i\) on node \(v_j\) is proportional to the value of \(\varvec{\varPi }_{\mathrm {ppr}}^{(j i)}\) and can be defined as \(I(i, j) \propto \varvec{\varPi }_{\mathrm {ppr}}^{(j i)}.\)

The process of the PPNP model can be divided into two consecutive parts, prediction and propagation, which is illustrated by Fig. 5. In the prediction stage, PPNP uses a neural network \(f_{\theta }\) with parameter set \(\theta \) to generate predictions for each node based on the input node features \({\mathbf {X}}.\) In the propagation step, the predictions matrix \({\mathbf {H}} \in {\mathbb {R}}^{n \times f}\) is used to produce the final result via the fully personalized PageRank scheme. So, the equation of PPNP can be defined as

where \({\mathbf {H}}_i = f_{\theta } ({\mathbf {x}}_i)\) and \(\sigma (\cdot )\) is implemented by a \({\text {softmax}}\) function. Note that the propagation matrix \(\hat{{\mathbf {A}}}\) can be replaced with other forms such as \({\mathbf {A}}_{\mathrm {rw}}={\mathbf {A}} {\mathbf {D}}^{-1}\). Furthermore, the number of propagation layers is arbitrary (in fact, infinitely many) and can avoid the oversmoothing problem.

The computational complexity and memory requirement of the PPNP model is \({\mathcal {O}}\left( n^{2}\right) .\) It is inefficient and may cause the outing of memory more easily for a large graph. In order to solve this problem, Klicpera et al. [52] proposed an approximate personalized propagation of neural predictions (APPNP) by replacing the full personalized PageRank with a variant of topic-sensitive PageRank [54]. In APPNP, the final predictions of all nodes are calculated via some propagation layer by a recursive formulation, which is shown as

where \({\mathbf {Z}}^{0}={\mathbf {H}}=f_{\theta }({\mathbf {X}})\) and the output of the last layer is followed by a \({\text {softmax}}\) function.

3.1.5 SGC

The development of traditional neural networks is from simplicity to complexity, e.g., from the logistic regression to CNNs for grid-like data or RNNs used in the sequence-like data. However, the computational complexity and memory requirement of most existing GNNs are always higher even in the early algorithms [48] and do not follow the trend that appears in the development of neural networks used for Euclidean data. In order to reduce the complexity of existing models, Wu et al. [55] proposed a linear graph neural network called simple graph convolutional (SGC) which is illustrated in Fig. 6.

The SGC model holds a hypothesis that the improved performance of GCNs is derived from the usage of information aggregated from neighborhoods.

Under this assumption, Wu et al. [55] removed all nonlinear transition functions except the \({\text {softmax}}\) function in the original GCNs to reduce the cost of computing. Therefore, the definition of this linear network can be obtained by modifying Eq. (12) and is shown as follows:

where \(\sigma (\cdot )\) is implemented by a \({\text {softmax}}\) function. Note that Eq. (20) can aggregate information from K-hop neighbors similar to the K-layer GCN model. Further, Wu et al. [55] collapse all the weight matrices between different GCN layers into a single matrix \({\mathbf {W}} = {\mathbf {W}}^{0} {\mathbf {W}}^{1} \ldots {\mathbf {W}}^{K}.\) Similarly, the repeated multiplication of \(\hat{{\mathbf {A}}}\) is collapsed into K power \(\hat{{\mathbf {A}}}^K.\) After this simplify, the definition of SGC can be reformed as

where \(\hat{{\mathbf {A}}}^K\) is a low-pass-type filter and calculated in the pre-processing stage. SGC is naturally to interpret because of the dividing of feature extraction \({\mathbf {H}} = \hat{{\mathbf {A}}}^K {\mathbf {X}}\) and classification \({\mathbf {Z}} = \sigma ({\mathbf {H}}{\mathbf {W}}).\) The latter is implemented by logistic regression and trained with any second-order method or stochastic gradient descent [56].

3.2 Attention mechanism

Attention mechanism is one of the most useful architecture in artificial intelligence, including computer vision, graph analysis. Instead of using a constant weight, different neighbors should have variant contributions for the target node. Additionally, the number of neighbors is variant to each node. The benefits of attention mechanism are dealing with variable-sized inputs and focusing on the most relevant part. Thus, it is natural to apply attention mechanism in node classification.

3.2.1 GAT

In order to capture different importance of neighborhood for a target node when producing the higher hidden representation, Veličković et al. [57] introduced an attention-based graph convolutional layer and is then used to construct arbitrary deep graph attention networks (GAT) by stacking such attention layers.

Illustration of multi-head attention mechanism used in the GAT model. Here, the number of attention is set as \(K = 3\). The aggregated features from each head are concatenated or averaged to obtain a higher representation for each node. The average operation is only used in the output layer. The self-connection is not considered

On the whole, the objective of the GAT model is to produce a new set of features \({\mathbf {Z}} = \left\{ {\mathbf {Z}}_1, {\mathbf {Z}}_2, \ldots , {\mathbf {Z}}_n \right\} \in {\mathbb {R}}^{n \times f}\) for all nodes by using \({\mathbf {X}} = \left\{ {\mathbf {X}}_1, {\mathbf {X}}_2, \ldots , {\mathbf {X}}_n \right\} \) as the model inputs. In detail, GAT firstly utilizes a learnable linear transformer to obtain the hidden representations \({\mathbf {H}}_i ={\mathbf {W}} {\mathbf {X}}_{i} \in {\mathbb {R}}^{f}\) for each node \(v_i\). Here, \({\mathbf {W}} \in {\mathbb {R}}^{f \times d}\) denotes the parametrized weight matrix of this transformer. Then, Veličković et al. [57] designed an attentional function \({\text {ATTENTION}}: {\mathbb {R}}^{f} \times {\mathbb {R}}^{f} \rightarrow {\mathbb {R}},\) to compute attention weights between each pair of nodes by using the above hidden representation

Specifical, \(e_{i j}\) denotes the importance of node \(v_j\) when computing the representation of node \(v_i.\) Note that, Veličković et al. [57] only use the first-order appropriate neighbors in Eq. (22). In order to make the weight coefficients more comparable across different nodes, a softmax function is used to normalize \(e_{ij}\) and the improved form is defined as

In fact, Veličković et al. [57] only used a single forward neural network with an activation function to compute the normalized coefficients. Thus, Eq. (23) is reformed as

where \({\mathbf {a}} \in {\mathbb {R}}^{2f}\) is the weight vector of the proposed attention mechanism implemented by a single-layer feedforward network and \(\sigma (\cdot )\) is implemented by function \({\text {LeakyReLU}}\). Once the coefficients of a node’s neighbors are all computed, a linear combination for all neighbors is used to obtain the final representation for each target node which is shown as follows:

Furthermore, Veličković et al. [57] applied a multi-head attention mechanism to improve the stability of the learning process, which is inspired by Vaswani et al. [58]. This improved mechanism is illustrated in Fig. 7 and can be defined as

where \(\alpha _{i j}^{k}\) denotes the normalized coefficients computed by the k-th attention function \({\text {ATTENTION}}_k\) and \(\parallel \) represents the operator which concatenating the output feature of all attention functions. Note that the concatenate operator of Eq. (26) will be replaced with an averaging operator if the multi-head attention is used in the last layer, and then, Eq. (26) can be redefined as

Here, \({\mathbf {H}}_j^k = {\mathbf {W}}^{k}{\mathbf {X}}_{j}\) is computed by the k-th linear transformer. Additional, GAT is efficient due to the parallelized compute of the attention layer and the complexity of one layer is appropriate to \({\mathcal {O}}\left( ndf+mf\right) .\)

3.2.2 AGNN

Similarly, Kiran et al. [59] proposed a new attention-based graph neural network (AGNN) with a dynamic and adaptive propagation layer and then used it to deal with the situation where the supervised information in the original data is scarce.

The AGNN model consists of one word-embedding layer and several attention-guided propagation layers. The front is used to map a bag-of-words representation of a document into an averaged word embedding. In the word-embedding layer, a linear transformer followed by an activation function is used to derive the initially hidden representation for each node by using the input features. Thus, the first layer of the AGNN model can be defined as \({\mathbf {H}}^{0}=\sigma \left( {\mathbf {X}} {\mathbf {W}}^{0}\right) ,\) where \({\mathbf {W}}^{0} \in {\mathbb {R}}^{d \times h}\) denotes the transform matrix, and \(\sigma (\cdot )\) is implemented by the rectified linear unit (ReLU) that can be calculated as \({\text {ReLU}}(x) = \max (0, x)\). In fact, \({\mathbf {H}}^{0} \in {\mathbb {R}}^{n \times h}\) is a hidden matrix by stacking the hidden state of all nodes.

Then, the normalized attention coefficient from node \(v_j\) to node \(v_i\) in the k-th layer is computed as follow

Here, \(\cos ({\mathbf {x}}, {\mathbf {y}})={\mathbf {x}}^{T} {\mathbf {y}} /\Vert {\mathbf {x}}\Vert \Vert {\mathbf {y}}\Vert \) with an \(L_2\) norm, e.g., \(\Vert x\Vert \) and \(\beta ^k \in {\mathbb {R}}\) is the only scalar parameter of k-th attention-guided propagation layer. Note that in Eq. (28), the self-connected attention coefficient is also considered. Similar to the work produced by Veličković et al. [57], the coefficient of a node’s neighbors is used to compute the next hidden representation of target node \(v_i\) and is defined as

Since the whole AGNN model consists of several same propagation layer, Kiran et al. [59] propose the following layer-wise propagation rule based on a recursive formulation \({\mathbf {H}}^{k+1}={\mathbf {P}}^{k} {\mathbf {H}}^{k},\) where \({\mathbf {P}}^{k} \in {\mathbb {R}}^{n \times n}\) is the coefficient matrix derived from the k-th propagation layer. Finally, the output layer consists of a linear transformer and an activation function to produce the final representation for each node

where \({\mathbf {W}}^{1} \in {\mathbb {R}}^{h \times f}\) is the transformation weight matrix, K denotes the number of attentional propagation layers, and \(\sigma (\cdot )\) is implemented by \({\text {softmax}}\) function. Note that \({\mathbf {W}}^{0},\) \({\mathbf {W}}^{1},\) and \(\beta ^{(k)}\) are the parameters that need to learn in the training process.

Instead of using all nodes in a graph to compute attention coefficients [60, 61], Kiran et al. [59] only used the first-order neighbors similar to the GAT model [57]. The illustration of AGNN is depicted in Fig. 8.

3.3 Autoencoder mechanism

Autoencoder mechanism is one of the most commonly used unsupervised technology to learn a low-dimensional embedding from large unlabeled training data. Moreover, there is existing a large number of node classification data with scarce labeled nodes. Thus, it is essential to utilize this mechanism to learn a higher representation for all nodes.

3.3.1 VGAE

Kipf and Welling [62] firstly employed the variational autoencoder (VAE) [63] to deal with the graph-structured data and proposed an unsupervised learning algorithm variational graph autoencoder (VGAE). The framework of VGAE can be separated into two parts: the inference model (encoder) and the generative model (decoder).

In detail, the inference model is implemented by only using a two-layer GCN model [48] and can be defined as

where \(q\left( {\mathbf {Z}}_{i} | {\mathbf {X}}, {\mathbf {A}}\right) ={\mathcal {N}}\left( {\mathbf {Z}}_{i} | \varvec{\mu }_{i}, \varvec{\varSigma }_i \right) \), \(\varvec{\mu }_{i} \in {\mathbb {R}}^{f}\) is the mean vector, \(\varvec{\varSigma }_i \in {\mathbb {R}}^{f \times f}\) denotes the covariance matrix of above Gaussian distribution. \({\mathbf {Z}}_{i}\) denotes the stochastic latent variable for the i-th node and is used to construct the latent matrix \({\mathbf {Z}} \in {\mathbb {R}}^{n \times f}.\) Specifically, \(\varvec{\mu }_{i}\) and \(\varvec{\varSigma }_i\) are obtained by using the GCN layer which is defined as \(\varvec{\mu }_{i} = {\text {GCN}}_{\varvec{\mu }} ({\mathbf {X}}_i, {\mathbf {A}})\) and \({\text {log}}\varvec{\varSigma }_i = {\text {GCN}}_{\varvec{\varSigma }} ({\mathbf {X}}_i, {\mathbf {A}}),\) respectively. Then, the definition of this two-layer GCN model is shown as follows:

where \(\mathring{{\mathbf {A}}}={\mathbf {D}}^{-\frac{1}{2}} {\mathbf {A}} {\mathbf {D}}^{-\frac{1}{2}}\) denotes the symmetrically normalized adjacency matrix and \(\sigma (\cdot )\) is implemented by a \({\text {ReLU}}\) function; \({\mathbf {W}}^{0}\) and \({\mathbf {W}}^{1}\) are the learnable weight matrices and \({\mathbf {W}}^{0}\) is shared between \({\text {GCN}}_{\varvec{\mu }} ({\mathbf {X}}_i, {\mathbf {A}})\) and \({\text {GCN}}_{\varvec{\varSigma }} ({\mathbf {X}}_i, {\mathbf {A}}).\)

In the generative process, Kipf and Welling [62] simply employed an inner product operation between different learned latent variables to implement the decoder and can be formed as:

Here, \(\sigma (\cdot )\) is implemented by a sigmoid activation function \({\text {sigmoid}}(x)={1}/{\left( 1 + e^{-x}\right) }\). Finally, the goal of VGAE is to minimize the variational lower bound:

with a Gaussian prior distribution

Here, \({\mathbf {0}}\) is a zero vector and \({\text {KL}}[\cdot \parallel \cdot ]\) operation denotes the Kullback–Leibler (KL) divergence between two distributions. Note that, Kipf and Welling [62] set \({\mathbf {A}}_{ij}=1\) in Eq. (34) when \({\mathbf {A}}\) is very sparse and used the \( r enormalization~trick\) strategy during the training process.

Additionally, Kipf and Welling [62] proposed a non-probabilistic variant of VGAE called graph autoencoder (GAE). In the GAE model, the embeddings of all nodes are computed as same as Eq. (32) and are then used to reconstruct \({{\mathbf {A}}}=\sigma \left( {\mathbf {Z}} {\mathbf {Z}}^{T}\right) .\)

3.3.2 G2G

In fact, the previous approaches represent each node by a single point vector in a low-dimensional continuous space, and it is difficult to obtain information about the uncertainty of representation. However, diverse nodes may contain different uncertainties. On the other hand, identical uncertainty is insufficient for modeling [64]. As a solution to this problem, Bojchevski et al. [65] proposed a new graph autoencoder model called Graph2Gauss (G2G) to represent each node as a low-dimensional Gaussian distribution which allows capturing the uncertainty of representations.

In detail, G2G uses a deep encoder \(f_\theta ({{\mathbf {X}}}_i)\) to map nodes to a middle representation \({{\mathbf {M}}}_i\) which is then used as an input to obtain the mean vector \(\varvec{\mu }_i = \sigma (\mu _\theta ({{\mathbf {M}}}_i))\) and covariance matrix \(\varvec{\varSigma }_i = \sigma (\varSigma _\theta ({{\mathbf {M}}}_i))\) of the Gaussian distribution, respectively. Here, \(\varvec{\mu }_i \in {{\mathbb {R}}}^f\), \(\varvec{\varSigma }_i \in {{\mathbb {R}}}^{f \times f}\) with \(f \ll n, d\), and \(\sigma (\cdot )\) is implemented by \({\text {ReLU}}\) or other nonlinear functions. \(\theta \) are parameters that need to be trained and shared by all instances. Although \(\mu _\theta (\cdot )\) and \(\varSigma _\theta (\cdot )\) are usually feed-forward neural networks, it can be CNNs or RNNs model where each node denotes an image or a sequence, respectively. Then, \(\varvec{\mu }_i\) and \(\varvec{\varSigma }_i\) are pass through a Gaussian distribution to obtain the node embedding \({{\mathbf {H}}}_i\), which is shown as follows:

In order to utilize the information of graph structure during learning node representation, Bojchevski et al. [65] proposed an unsupervised personalized ranking algorithm. The algorithm is constrained to satisfy the following pairwise constraints

where \(\forall v_i \in {\mathcal {V}}\), \(\forall v_j \in N_{k}(i)\), \(\forall v_{j^{\prime }} \in N_{k^{\prime }}(i)\), \(\forall k < k'\) and \(\varDelta ({{\mathbf {H}}}_i, {{\mathbf {H}}}_j)\) denotes the dissimilarity measure between the Gaussian distribution embedding of \(v_i\) and \(v_j\). Equation (37) expresses the intuition which the 1-hop neighbors of \(v_i\) should be closer to node \(v_i\) than the 2-hop neighbors in the embedding space and so on.

Since the dissimilarity measure \(\varDelta ({{\mathbf {H}}}_i, {{\mathbf {H}}}_j)\) is defined between the Gaussian distribution of two nodes, it is natural to use the (KL) divergence similar to He et al. [64] and Santos et al. [66]. Specifically, \(\varDelta ({{\mathbf {H}}}_i, {{\mathbf {H}}}_j)\) can be defined by an asymmetric KL divergence which is shown as follows:

where \({\mathbf {P}} = {(\varvec{\mu }_i-\varvec{\mu }_j)}^T\varvec{\varSigma }_i^{-1}(\varvec{\mu }_i-\varvec{\mu }_j)\), \(tr(\cdot )\) denotes the trace of a matrix, and \(det(\cdot )\) denotes the determinant of a matrix.

Since it is difficult to solve Eq. (37), Bojchevski et al. [65] introduced an energy-based method to define the energy between two nodes by using the KL divergence. Then, the objective of G2G is to optimize the following loss function

where \(E_{ij}={\text {KL}}({\mathcal {N}}(i)||{\mathcal {N}}(j))\) is the energy between two nodes, and \({\mathcal {D}}_t = \{(i,j_k,j_l)|{\text {sp}}(i,j_k)<{\text {sp}}(i,j_l)\}\) is the set of all valid instances. Additionally, Bojchevski et al. [65] proposed an unbiased node-anchored sampling (NaS) strategy to solve the unbalanced problem, which may occur in the original sampling strategy.

3.3.3 DGI

The previous unsupervised learning methods used for generating node embedding are mostly based on the random walk objective. However, these approaches pay too much attention to the localized structure information [67], and the performance relies heavily on the setting of hyperparameter [68]. To solve this problem, Veličković et al. [69] proposed an unsupervised learning method call deep graph infomax (DGI), which is based on the mutual information between the global representation of the entire graph and the patch representation of special input. Specifically, mutual information maximization is introduced into the graph data by DGI.

In detail, Veličković et al. [69] produced a high-level embedding \({\mathbf {H}}_{i}\) for each node \(v_i\) by using a graph convolutional encoder \(f: {\mathbb {R}}^{n \times d} \times {\mathbb {R}}^{n \times n} \rightarrow {\mathbb {R}}^{n \times f},\) such that \({\mathbf {H}} = f({\mathbf {X}}, {\mathbf {A}})\). Note that Veličković et al. [69] referred \({\mathbf {H}}_{i}\) as the patch representation of \(v_i\) since this embedding is formed by summarizing a patch of graph information. Veličković et al. [69] introduced a summary vector \({\mathbf {s}}\) to denote the global information of the entire graph. The summary vector \({\mathbf {s}}\) is computed by using a readout function \({\mathcal {R}}: {\mathbb {R}}^{n \times d} \rightarrow {\mathbb {R}}^{d}\), which uses the derived patch representations as input and can be formulated as

Then, Veličković et al. [69] assigned a probability score for each patch-summary pair by using a discriminator, \({\mathcal {D}}: {\mathbb {R}}^{d} \times {\mathbb {R}}^{d} \rightarrow {\mathbb {R}}.\) Note that this score will then be used in the objective function.

Furthermore, a negative sample strategy is employed to produce the product of marginals (negative samples). Specifically, an explicit (stochastic) corruption function, \({\mathcal {C}} : {\mathbb {R}}^{n \times d} \times {\mathbb {R}}^{n \times n} \rightarrow {\mathbb {R}}^{h \times d} \times {\mathbb {R}}^{h \times h},\) is used to obtain the alternative graph derived from the original graph which is shown as follows:

where h denotes the number of nodes in the alternative graph \(\breve{{\mathcal {G}}}.\) Note that Eq. (41) is only used for the situation where the dataset only contains one single graph. For the multi-graphs situation, some other graphs of the dataset will be used as \(\breve{{\mathcal {G}}}.\) Thus, the negative samples can be derived from the alternative graph.

Finally, in order to maximize the mutual information between \({\mathbf {H}}_i\) and \({\mathbf {s}},\) Veličković et al. [69] used a noise-contrastive type objective with a standard binary cross-entropy loss between the samples from the joint and the product of marginals and can be formed as

with

and

Here, the calculation of Eq. (42) is based on the Jensen–Shannon divergence between the positive examples and the negative examples. The DGI model is illustrated in Fig. 9.

A high-level overview of DGI. f represents a graph convolutional encoder. \({\mathcal {R}}\), \({\mathcal {D}}\), \({\mathcal {C}}\) denote the readout function, discriminator and corruption function, respectively. The hidden embedding \({\mathbf {H}}\) is used as the input of \({\mathcal {R}}\) to obtain the summary vector \({\mathbf {s}}\)

4 Experimental analysis

In this section, we compare the aforementioned graph neural networks on several popular node classification datasets. Firstly, we describe the statistic information of datasets and parameter settings in detail. Then, four commonly used evaluation metrics are introduced to evaluate the performance of various algorithms. The classification results of different methods are provided at the end.

4.1 Datasets

We conduct our experiments on several applications, including citation networks, co-author networks, Amazon networks, protein-protein interaction (PPI) networks. The detailed descriptions of each dataset are introduced in the following, and the statistic information of all datasets is summarized in Table 2.

4.1.1 Transductive learning

Transductive learning means that the trained models miss the ability to generalize to unseen nodes. In this category, several standard benchmark datasets, including five citation networks, one co-author network and two Amazon networks, are used to compare. For all of these datasets, the transductive experimental setup is similar to Yang et al. [70].

In detail, Cora, Citeseer and Pubmed are firstly introduced by Sen et al. [41] and DBLP is proposed by Pan et al. [71]. The Cora-ML dataset is similar to Cora and was produced by Bojchevski et al. [65]. In all of these citation datasets, each node represents a scientific paper and edges correspond to citations. Note that the citation networks are represented as undirected graphs according to [70]. Node features are bag-of-words encoded documents. The class labels represent the research domains to which each document belongs, and each node has a unique tag. For co-author graph CS, each node represents a researcher connected by an edge if they co-author a paper. Node features correspond to paper keywords for each author’s papers. The labels of nodes denote the most active fields of study for each author. In Amazon networks, Computers and Photo are segments of the Amazon co-purchase graph [72], where vertices denote goods and node features correspond to elements of a bag-of-words representation of a review. If two products are frequently bought together, there is an edge between them. The class labels represent the category of each product.

In order to obtain the performance of each model in all transductive datasets, only 20 nodes for each class are used to train, and the other 1000 nodes are used in the test phase. Note that all of the node features are utilized in the unsupervised learning methods and all graphs are undirected.

4.1.2 Inductive learning

Contrary to transductive learning, an algorithm is inductive means that it can be generalized to unseen graphs or nodes. In our experiments, a popular PPI network benchmark dataset is used in this setting.

In detail, the complete PPI dataset is provided by Zitnik and Leskovec [73] and then was preprocessed again by Hamilton et al. [50]. The version of PPI used in our experiments is the latter one. In this dataset, each graph corresponds to different human tissues, and nodes represent various protein functions. The features of each node are derived from positional gene sets, motif gene sets and immunological signatures. Each label represents a gene ontology set collected from the Molecular Signatures Database [43], and each node can hold several labels simultaneously. The dataset contains 20 graphs used in the training stage and two completely unobserved graphs for testing.

4.2 Experimental setup

Since most parameters of all algorithms are set according to the original papers, the validation procedure is stripped in our experiments. For all models, they have some identical settings introduced in the following. The maximum number of training epochs is 2000, and the training stage would be early stopped when the loss is not reducing in one consecutive hundred epochs. We repeatedly conduct each experiment five times and provide the values of mean and variance across all repeats. The parameters of all methods are optimized by using Adam [74]. For supervised or semi-supervised learning models, the objective of model training is to minimize the cross-entropy loss. On the other hand, G2G and DGI both have a customize loss function. For instance, cross-entropy and KL divergence are both used in VGAE. Similar to transductive datasets, the multi-label classification of the PPI dataset is regarded as a multi-class classification problem.

For those models in which node classification is not considered in the original paper, the initial configurations are referring to algorithms similar to their architecture. For instance, the parameter initialization of ChebNet is derived from GCN’s settings, and VGAE is similar to G2G. Note that the sampling technology of GraphSAGE introduced in Sect. 3 is not used in our experiments, and the mean aggregator is used to propagate information. After the embedding matrix is obtained, a linear transform function is trained to gain the probability distribution of classes for unsupervised learning methods, including VGAE, G2G and DGI.

The major software and hardware devices used in our experiments are listed in the following. Based on the deep learning framework PyTorchFootnote 1, an open source graph representation learning tool called PyTorch GeometricFootnote 2 (PyG) was used in our experiments. Specifically, the latest released version of PyG, 1.3.0, is used to implement all baseline methods except the G2G model. G2G is implemented by using the code provided by Bojchevski et al. [65] based on the TensorFlow frameworkFootnote 3. Note that the train data and test data used in G2G are proportional to the full dataset according to a rule in which the final size of training data is approximate to other baseline methods. In order to accelerate the training process, one graphics processing unit (GPU) card with 16 GB memory is used in all experiments. The implementations of all algorithms are available at https://github.com/Xiaoshunxin/GNN-Survey.

4.3 Evaluation metrics

In order to compare the performance of diverse algorithms, several commonly used evaluation metrics of the classification task, including macro-precision (macro-P), macro-recall (macro-R), macro-F1 and micro-F1, are used in our experiments.

Firstly, the true positive, false positive, true negative and false negative of i-th class are denoted as \(TP_i\), \(FP_i\), \(TN_i\) and \(FN_i\), respectively. Then, the definitions of these metrics are introduced in the following.

-

In order to obtain the macro-precision of multi-class classification, the precision of each class should be calculated at first and can be formed as \(P_i = TP_i / (TP_i + FP_i)\). Then, macro-P is obtained by averaging all the precisions, which is shown as follow

$$\begin{aligned} {\text {macro-P}} = \frac{1}{n} \sum _{i=1}^{n}P_i, \end{aligned}$$(43)where n denotes the number of classes.

-

Similar, the value of macro-R can be obtained by averaging all the recalls

$$\begin{aligned} {\text {macro-R}} = \frac{1}{n} \sum _{i=1}^{n}R_i, \end{aligned}$$(44)where \(R_i = TP_i / (TP_i + FN_i)\) denotes the recall of the i-th class.

-

In order to balance macro-P and macro-R, Macro-F1 is introduced and can be formed as

$$\begin{aligned} {\text {macro-F1}} = \frac{2 \times {\text {macro-P}} \times {\text {macro-R}}}{{\text {macro-P}} + {\text {macro-R}}}. \end{aligned}$$(45) -

Since macro-F1 does not consider the sample size of each class, it is suitable to use micro-F1 when the distribution of classes is unbalanced. The definition of micro-F1 is formed as

$$\begin{aligned} {\text {micro-F1}} = \frac{2 \times {\text {micro-P}} \times {\text {micro-R}}}{{\text {micro-P}} + {\text {micro-R}}}, \end{aligned}$$(46)with

$$\begin{aligned} {\text {micro-P}} = \frac{\sum _{i=1}^{n}TP_i}{\sum _{i=1}^{n}(TP_i + FP_i)}, \end{aligned}$$and

$$\begin{aligned} {\text {micro-R}} = \frac{\sum _{i=1}^{n}TP_i}{\sum _{i=1}^{n}(TP_i + FN_i)}. \end{aligned}$$

4.4 Experimental results

In this section, the compared algorithms are tested on nine publicly available datasets with several evaluation metrics mentioned above. The macro-P, macro-R, macro-F1 and micro-F1 of all algorithms on all datasets are listed in Table 3.

The blank cell described by one horizontal line represents that this algorithm is essential unsuitable to current dataset except for G2G and DGI. For instance, transductive learning algorithms including GCN, VGAE, ChebNet, APPNP and SGC cannot be used on the PPI dataset because this dataset is inductive. However, the performance of G2G and DGI on the PPI dataset is not obtained because the setting of inductive learning is not provided in the source code. In addition, the SGC model and GAT model have both obtained the best performance on the Computers dataset in macro-recall metric. We regard SGC as the best model because of the smaller variance.

Furthermore, several observations are derived from the results shown in Table 3. From the perspective of the algorithm, some algorithms are suitable for a special graph. For instance, GAT achieves the best performance on two transductive datasets (Cora and Photo) and one inductive benchmark (PPI) with all evaluation metrics. This observation can reflect the importance of attention mechanism in both transductive and inductive learning. SGC model obtains the minimum variances on all transductive learning datasets with all evaluation metrics. This phenomenon may reflect that the simpler a model is, the more stable it is. In addition, SGC achieves comparable performance on several datasets and obtains the best result on the CiteSeer benchmark with all evaluation metrics. It demonstrates that a simple method, which only has a few layers, may have comparable performance to complex models. G2G algorithm also achieves the best performance on three datasets (PubMed, DBLP and Cora-ML) in four criterions, except for the Macro-recall result on the DBLP dataset. This phenomenon demonstrates that not only the supervised information (e.g., node labels) is critical, but also the extensive unsupervised information (e.g., the information of graph structure) is all important for the node classification task. On the other hand, observations would be derived from the perspective of datasets. AGNN usually has a big variance on large datasets such as DBLP, Photo and Computers. In addition, the performance of different algorithms is greatly different on the same dataset. For instance, the gap between the best method and the worst network on the CiteSeer dataset is close to forty percent. It demonstrates that different algorithms may be suitable for different tasks.

The runtimes of each training epoch of different GNNs algorithms on several datasets are illustrated in Fig. 10. For those models which training embeddings in an unsupervised way, only the time cost of training hidden representation for each node is considered in our experiments. We also have some observations from this figure. The training time of unsupervised learning algorithms including VGAE, G2G and DGI is longer than those semi-supervised or supervised methods on most datasets. ChebNet has a larger time cost on CS because this model is sensitive to the size of nodes set and edge set, as well as the dimension of node features. The SGC model spends very little time on all datasets because there is only one linear transformation in the training stage.

5 Challenges and future directions

In this section, several challenges of the development of GNNs would be introduced based on the experimental results mentioned above. Then, some potential future directions are provided for interested researchers.

Based on the observations derived from the experimental results, we can find many existing problems in previous research works.

-

In transductive learning, several aforementioned graph analysis algorithms are difficultly scaled to large graphs in real-world applications such as community detection, which usually contains millions of nodes and edges.

-

Most of the existing graph neural networks are essential transductive learning algorithms. This means that they cannot handle nodes or graphs that never appear and are unsuitable for many applications.

-

Several deep learning paradigms used for graph analysis are supervised or semi-supervised learning. These methods often require lots of supervised information in the training stage to obtain a good performance. However, most of the existing graph data are unsupervised and it is difficult to apply the supervised algorithms to these unlabeled data.

-

The applications of node classification are mainly focused on those data in which there are existing real-world relationships between samples. However, it is a possible way to apply GNNs in different kinds of data via node classification.

-

Stacking several layers of graph convolution may cause over-smoothing that the learned representations of all nodes tend to be the same. As a result, the performance of graph convolution models would decrease dramatically.

Then, several potential future directions are introduced based on the challenges mentioned above.

-

The ability to deal with a large graph is important in many scenes. From the perspective of computational resources, the possible solution is to design a parallelized algorithm that can run with multiple GPU cards. On the other hand, many effective sampling techniques would be introduced to reduce the scale of large graphs.

-

Generalizing to unseen nodes or graphs has many benefits. For instance, the model can be trained only in some parts of the whole graph and then used to predict other parts. One of the essential reasons for losing the inductive ability is that they usually apply the information aggregation on the propagation matrix. As a result, we suggest that the information aggregation should be partial instead of global.

-

There is a lot of unsupervised information in graph data. Using this information can produce a meaningful representation for nodes or graphs. We can use unsupervised deep learning technologies such as autoencoder mechanism to achieve this objective.

-

For common classification or clustering tasks, heuristic methods can be used to model the real relationship between samples. Then, these tasks can be converted to node classification and be learned by various GNNs models.

-

To solve the over-smoothing problem, one can introduce external information to reduce the distortion caused by deep training. For example, the relationships between nodes can be used as supervised information to guide the training process.

Finally, we believe that the designed GNNs model would be more accurate and more quickly by further studying these directions.

6 Conclusion

In this paper, we introduced an overview of graph neural networks and provided a comparison among them in node classification tasks. According to the major learning paradigms, these graph analysis algorithms were divided into three categories: convolutional mechanism, attention mechanism and autoencoder mechanism. A comprehensive introduction of several popular methods for each category was provided. Furthermore, extensive comparative experiments were conducted on nine benchmark datasets to compare the performance of node classification. Finally, a number of challenges are introduced based on the experimental results and several potential future directions are provided to interested researchers. In the future work, we will explore more efficient graph neural networks for node classification based on these future directions.

References

LeCun, Y., Boser, B., Denker, J.S., Henderson, D., Howard, R.E., Hubbard, W., Jackel, L.D.: Backpropagation applied to handwritten zip code recognition. Neural Comput. 1(4), 541–551 (1989)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: Proceedings of the 26th Conference on Neural Information Processing Systems, pp. 1097–1105 (2012)

Wan, W., Zhong, Y., Li, T., Chen, J.: Rethinking feature distribution for loss functions in image classification. In: Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, pp. 9117–9126 (2018)

Long, J., Shelhamer, E., Darrell, T.: Fully convolutional networks for semantic segmentation. In: Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, pp. 3431–3440 (2015)

Papadomanolaki, M., Vakalopoulou, M., Karantzalos, K.: A novel object-based deep learning framework for semantic segmentation of very high-resolution remote sensing data: Comparison with convolutional and fully convolutional networks. Remote Sens. 11(6), 684 (2019)

Chen, L., Zhang, H., Xiao, J., Nie, L., Shao, J., Liu, W., Chua, T.S.: Sca-cnn: Spatial and channel-wise attention in convolutional networks for image captioning. In: Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition, pp. 5659–5667 (2017)

Aneja, J., Deshpande, A., Schwing, A.G.: Convolutional image captioning. In: Proceedings of the 2018 IEEE Conference on Computer Vision and Pattern Recognition, pp. 5561–5570 (2018)

Elman, J.L.: Finding structure in time. Cogn. Sci. 14(2), 179–211 (1990)

Hochreiter, S., Schmidhuber, J.: Long short-term memory. Neural Comput. 9(8), 1735–1780 (1997)

Tang, D., Qin, B., Liu, T.: Document modeling with gated recurrent neural network for sentiment classification. In: Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, pp. 1422–1432 (2015)

Ma, Y., Peng, H., Cambria, E.: Targeted aspect-based sentiment analysis via embedding commonsense knowledge into an attentive lstm. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence, pp. 5876–5883 (2018)

Liu, S., Yang, N., Li, M., Zhou, M.: A recursive recurrent neural network for statistical machine translation. In: Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics, pp. 1491–1500 (2014)

Su, J., Wu, S., Xiong, D., Lu, Y., Han, X., Zhang, B.: Variational recurrent neural machine translation. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence, pp. 5488–5495 (2018)

Xiong, C., Merity, S., Socher, R.: Dynamic memory networks for visual and textual question answering. In: Proceedings of the 33nd International Conference on Machine Learning, pp. 2397–2406 (2016)

Lin, Y., Ji, H., Liu, Z., Sun, M.: Denoising distantly supervised open-domain question answering. In: Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics, pp. 1736–1745 (2018)

Karabatak, M., Ince, M.C.: An expert system for detection of breast cancer based on association rules and neural network. Expert Syst. Appl. 36(2), 3465–3469 (2009)

Cireşan, D.C., Giusti, A., Gambardella, L.M., Schmidhuber, J.: Mitosis detection in breast cancer histology images with deep neural networks. In: Proceedings of the 16th International Conference on Medical Image Computing and Computer-assisted Intervention, pp. 11–418 (2013)

Panakkat, A., Adeli, H.: Neural network models for earthquake magnitude prediction using multiple seismicity indicators. Int. J. Neural Syst. 17(1), 13–33 (2007)

Adeli, H., Panakkat, A.: A probabilistic neural network for earthquake magnitude prediction. Neural Netw. 22(7), 1018–1024 (2009)

Silver, D., Huang, A., Maddison, C.J., Guez, A., Sifre, L., Van Den Driessche, G., Schrittwieser, J., Antonoglou, I., Panneershelvam, V., Lanctot, M., et al.: Mastering the game of go with deep neural networks and tree search. Nature. 529(7587), 484 (2016)

Liu, W., Wang, Z., Liu, X., Zeng, N., Liu, Y., Alsaadi, F.E.: A survey of deep neural network architectures and their applications. Neurocomputing. 234, 11–26 (2017)

Zhang, Q., Yang, L.T., Chen, Z., Li, P.: A survey on deep learning for big data. Inf. Fusion. 42, 146–157 (2018)

Bullmore, E., Sporns, O.: Complex brain networks: graph theoretical analysis of structural and functional systems. Nat. Rev. Neurosci. 10(3), 186 (2009)

Yang, J., Leskovec, J.: Community-affiliation graph model for overlapping network community detection. In: Proceedings of 12th IEEE International Conference on Data Mining, pp. 1170–1175 (2012)

Bhatia, V., Rani, R.: A distributed overlapping community detection model for large graphs using autoencoder. Futur. Gener. Comp. Syst. 94, 16–26 (2019)

Huang, W., Song, G., Hong, H., Xie, K.: Deep architecture for traffic flow prediction: Deep belief networks with multitask learning. IEEE Trans. Intell. Transp. Syst. 15(5), 2191–2201 (2014)

Qi, H.: Graphical solution for arterial road traffic flow model considering spillover. IEEE Access. 6, 6755–6764 (2017)

Ji, G., Liu, K., He, S., Zhao, J.: Knowledge graph completion with adaptive sparse transfer matrix. In: Proceedings of the 30th AAAI Conference on Artificial Intelligence, pp. 985–991 (2016)

Hamaguchi, T., Oiwa, H., Shimbo, M., Matsumoto, Y.: Knowledge transfer for out-of-knowledge-base entities: A graph neural network approach. In: Proceedings of the 26th International Joint Conference on Artificial Intelligence, pp. 1802–1808 (2017)

Gori, M., Monfardini, G., Scarselli, F.: A new model for learning in graph domains. In: Proceedings of the 2005 IEEE International Joint Conference on Neural Networks, pp. 729–734 (2005)

Scarselli, F., Gori, M., Tsoi, A.C., Hagenbuchner, M., Monfardini, G.: The graph neural network model. IEEE Trans. Neural Netw. 20(1), 61–80 (2009)

Duvenaud, D.K., Maclaurin, D., Iparraguirre, J., Bombarell, R., Hirzel, T., Aspuru-Guzik, A., Adams, R.P.: Convolutional networks on graphs for learning molecular fingerprints. In: Proceedings of the 29th Conference on Neural Information Processing Systems, pp. 2224–2232 (2015)

Li, Y., Tarlow, D., Brockschmidt, M., Zemel, R.S.: Gated graph sequence neural networks. In: Proceedings of the 4th International Conference on Learning Representations (2016)

Atwood, J., Towsley, D.: Diffusion-convolutional neural networks. In: Proceedings of the 30th Conference on Neural Information Processing Systems, pp. 1993–2001 (2016)

Li, Q., Han, Z., Wu, X.M.: Deeper insights into graph convolutional networks for semi-supervised learning. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence, pp. 3538–3545 (2018)

Zhang, M., Chen, Y.: Link prediction based on graph neural networks. In: Proceedings of the 32nd Conference on Neural Information Processing Systems, pp. 5165–5175 (2018)

Xu, X., Liu, C., Feng, Q., Yin, H., Song, L., Song, D.: Neural network-based graph embedding for cross-platform binary code similarity detection. In: Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, pp. 363–376 (2017)

Niepert, M., Ahmed, M., Kutzkov, K.: Learning convolutional neural networks for graphs. In: Proceedings of the 33rd International Conference on Machine Learning, pp. 2014–2023 (2016)

Zhang, M., Cui, Z., Neumann, M., Chen, Y.: An end-to-end deep learning architecture for graph classification. In: Proceedings of the 32nd AAAI Conference on Artificial Intelligence, pp. 4438–4445 (2018)

Kazienko, P., Kajdanowicz, T.: Label-dependent node classification in the network. Neurocomputing. 75(1), 199–209 (2012)

Sen, P., Namata, G., Bilgic, M., Getoor, L., Galligher, B., Eliassi-Rad, T.: Collective classification in network data. AI Mag. 29(3), 93–93 (2008)

Tang, J., Qu, M., Wang, M., Zhang, M., Yan, J., Mei, Q.: Line: Large-scale information network embedding. In: Proceedings of the 24th International Conference on World Wide Web, pp. 1067–1077 (2015)

Subramanian, A., Tamayo, P., Mootha, V.K., Mukherjee, S., Ebert, B.L., Gillette, M.A., Paulovich, A., Pomeroy, S.L., Golub, T.R., Lander, E.S., et al.: Gene set enrichment analysis: a knowledge-based approach for interpreting genome-wide expression profiles. Proc. Natl. Acad. Sci USA 102(43), 15545–15550 (2005)

Xu, J., Li, Y.: Discovering disease-genes by topological features in human protein-protein interaction network. Bioinformatics. 22(22), 2800–2805 (2006)

Defferrard, M., Bresson, X., Vandergheynst, P.: Convolutional neural networks on graphs with fast localized spectral filtering. In: Proceedings of the 30th Conference on Neural Information Processing Systems, pp. 3844–3852 (2016)

Spielman, D.A.: Spectral graph theory and its applications. In: Proceedings of the 48th Annual IEEE Symposium on Foundations of Computer Science, pp. 29–38. IEEE (2007)

Dhillon, I.S., Guan, Y., Kulis, B.: Weighted graph cuts without eigenvectors a multilevel approach. IEEE Trans. Pattern Anal. Mach. Intell. 29(11), 1944–1957 (2007)

Kipf, T.N., Welling, M.: Semi-supervised classification with graph convolutional networks. In: Proceedings of the 4th International Conference on Learning Representations (2016)

Hammond, D.K., Vandergheynst, P., Gribonval, R.: Wavelets on graphs via spectral graph theory. Appl. Comput. Harmon. Anal. 30(2), 129–150 (2011)

Hamilton, W., Ying, Z., Leskovec, J.: Inductive representation learning on large graphs. In: Proceedings of the 31st Conference on Neural Information Processing Systems, pp. 1024–1034 (2017)

Xu, K., Li, C., Tian, Y., Sonobe, T., Kawarabayashi, K., Jegelka, S.: Representation learning on graphs with jumping knowledge networks. In: Proceedings of the 35th International Conference on Machine Learning, pp. 5449–5458 (2018)

Klicpera, J., Bojchevski, A., Günnemann, S.: Predict then propagate: Graph neural networks meet personalized pagerank. In: Proceedings of the 7th International Conference on Learning Representations (2018)

Page, L., Brin, S., Motwani, R., Winograd, T.: The pagerank citation ranking: Bringing order to the web. Tech. rep, Stanford InfoLab (1999)

Haveliwala, T.H.: Topic-sensitive pagerank. In: Proceedings of the 11st International Conference on World Wide Web, pp. 517–526. ACM (2002)

Wu, F., Zhang, T., Souza, A.H.J., Fifty, C., Yu, T., Weinberger, K.Q.: Simplifying graph convolutional networks. In: Proceedings of the 36th International Conference on Machine Learning, pp. 6861–6871 (2019)

Bottou, L.: Large-scale machine learning with stochastic gradient descent. In: Proceedings of the 19th International Conference on Computational Statistics, pp. 177–186 (2010)

Veličković, P., Cucurull, G., Casanova, A., Romero, A., Lio, P., Bengio, Y.: Graph attention networks. In: Proceedings of the 5th International Conference on Learning Representations (2017)

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. In: Proceedings of the 31st Conference on Neural Information Processing Systems, pp. 5998–6008 (2017)

Thekumparampil, K.K., Wang, C., Oh, S., Li, L.J.: Attention-based graph neural network for semi-supervised learning (2018). arXiv:1803.03735

Duan, Y., Andrychowicz, M., Stadie, B., Ho, O.J., Schneider, J., Sutskever, I., Abbeel, P., Zaremba, W.: One-shot imitation learning. In: Proceedings of the 31st Conference on Neural Information Processing Systems, pp. 1087–1098 (2017)

Hoshen, Y.: Vain: Attentional multi-agent predictive modeling. In: Proceedings of the 31st Conference on Neural Information Processing Systems, pp. 2701–2711 (2017)

Kipf, T.N., Welling, M.: Variational graph auto-encoders (2016). arXiv:1611.07308

Kingma, D.P., Welling, M.: Auto-encoding variational bayes. In: Proceedings of the 2nd International Conference on Learning Representations (2013)

He, S., Liu, K., Ji, G., Zhao, J.: Learning to represent knowledge graphs with gaussian embedding. In: Proceedings of the 24th ACM International on Conference on Information and Knowledge Management, pp. 623–632 (2015)

Bojchevski, A., Günnemann, S.: Deep gaussian embedding of graphs: Unsupervised inductive learning via ranking. In: Proceedings of the 5th International Conference on Learning Representations (2017)

Dos Santos, L., Piwowarski, B., Gallinari, P.: Multilabel classification on heterogeneous graphs with gaussian embeddings. In: Proceedings of the 2016 Joint European Conference on Machine Learning and knowledge Discovery in Databases, pp. 606–622 (2016)

Ribeiro, L.F., Saverese, P.H., Figueiredo, D.R.: Struc2vec: Learning node representations from structural identity. In: Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 385–394 (2017)

Grover, A., Leskovec, J.: Node2vec: Scalable feature learning for networks. In: Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 855–864 (2016)

Veličković, P., Fedus, W., Hamilton, W.L., Liò, P., Bengio, Y., Hjelm, R.D.: Deep graph infomax. In: Proceedings of the 6th International Conference on Learning Representations (2018)

Yang, Z., Cohen, W.W., Salakhutdinov, R.: Revisiting semi-supervised learning with graph embeddings. In: Proceedings of the 33rd International Conference on Machine Learning, pp. 40–48 (2016)

Pan, S., Wu, J., Zhu, X., Zhang, C., Wang, Y.: Tri-party deep network representation. In: Proceedings of the 25th International Joint Conference on Artificial Intelligence, pp. 1895–1901 (2016)

McAuley, J., Targett, C., Shi, Q., Van Den Hengel, A.: Image-based recommendations on styles and substitutes. In: Proceedings of the 38th International ACM SIGIR Conference on Research and Development in Information Retrieval, pp. 43–52 (2015)

Zitnik, M., Leskovec, J.: Predicting multicellular function through multi-layer tissue networks. Bioinformatics. 33(14), i190–i198 (2017)

Kingma, D.P., Ba, J.: Adam: A method for stochastic optimization. In: Proceedings of the 4th International Conference on Learning Representations (2014)

Acknowledgements

This work is partly supported by the National Natural Science Foundation of China under Grant Nos. 61502104 and 61672159.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Xiao, S., Wang, S., Dai, Y. et al. Graph neural networks in node classification: survey and evaluation. Machine Vision and Applications 33, 4 (2022). https://doi.org/10.1007/s00138-021-01251-0

Received:

Revised:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00138-021-01251-0