Abstract

Finger knuckle print (FKP), a physiological trait with a small image size and a highly distinctive pattern, can be used as a reliable biometric identifier. In this paper, a new effective biometric authentication system using FKP texture based on the relaxed local ternary pattern (RLTP) is presented. To further improve performance, cascading, overlapped patching and uniform rotation-invariant pattern selection are used. In addition, to obtain more discriminative dominant patterns, an efficient learning framework is integrated with the RLTP feature vectors. Identification and verification experiments conducted on the standard PolyU FKP dataset show the effectiveness of the proposed scheme.


1 Introduction

Personal authentication, including both identification and verification, is important in applications such as access to buildings, cars, computers and mobile devices. Biometric authentication based on physiological or behavioral attributes is much more reliable than an ID card or a password, because identity cards can be forged and passwords can be hacked. Various physiological traits such as face, fingerprint, iris and voice have been used. Among these, hand-based biometric features such as fingerprint, palm print, hand geometry and finger knuckle print (FKP) have attracted considerable attention because they are efficient in terms of accuracy and computational complexity [1]. In this paper, we focus on identification and verification of a person using FKP images. An FKP image captures the inherent skin pattern of the outer surface around the phalangeal joint of a human finger [2], and it serves as an efficient biometric trait that is invariant, measurable, acceptable and permanent [3]. As shown in Fig. 1a, an FKP image is small compared to other features such as palm print and therefore takes a shorter processing time [4]. Despite its small size, it is more reliable because its image is more distinctive than that of other traits such as fingerprint. The surface of the finger knuckle is extremely rich in lines and creases that are rather rounded but unique to individuals [3]. Also, in comparison with other hand-based biometric features such as fingerprint and palm print, the FKP is hard to abrade because it is the inner side of the hand that is typically used for holding objects and other tasks [3].

FKP extraction: (a) a typical FKP image, (b) FKP image acquisition device [6]

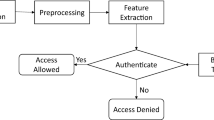

A biometric-based authentication system typically contains four main modules. The first module acquires the biometric samples, the second extracts the features, the third compares the features with the templates stored in the dataset, and the fourth decides whether there is a match [5]. Ideally, a biometric characteristic should be easy to collect and digitize [3]. FKP acquisition with a device like the one shown in Fig. 1b is easy to use because it relies on contactless imaging techniques that are economical, architecturally simple and convenient for large-population applications. Because the finger knuckle is slightly bent when imaged, the inherent finger knuckle print patterns can be clearly captured and, therefore, the distinctive features of the FKP can be better extracted [6].

In this paper, we focus on the second stage, with the aim of finding a compact and effective set of features to represent the FKP information. As in other pattern classification tasks, the feature extraction step plays an important role in an FKP-based personal authentication system [7]. The features used for FKP can be categorized into geometric and texture features. In [3], conventional FKP recognition algorithms are grouped into coding methods, subspace methods, texture analysis methods and other image processing methods. In the method proposed in this paper, we use an enhanced texture feature extraction method and an efficient dominant pattern selection approach to improve the performance of FKP-based recognition and verification of individuals.

In the rest of this paper, Sect. 2 presents an overview of the related works. In Sect. 3, the proposed algorithm is outlined. In Sect. 4, the feature extraction stage of the proposed algorithm is discussed in more detail. The experimental results are provided in Sect. 5. Finally, a summary and conclusions are presented in Sect. 6.

2 Related works

Woodward and Flynn were the first researchers to use the fine characteristics of the finger surface such as skin folds and crease patterns for human authentication [8]. Previous works used geometric features like length, width and shape of the finger [9] [10]. Subject similarity is calculated in [8] by capturing 3D range images of three adjacent fingers back surface and their curvature-based representation. The characteristics of the image texture which can be derived simultaneously from the intensity images of the hands are not used in this work. The system developed by Kumar and Ravikanth [4] extracts the knuckle texture and finger geometry features simultaneously in a peg-free and touchless imaging system and authenticates users based on the combination of these features. The accuracy of the proposed algorithm is highly dependent on knuckle segmentation performance. Further precision enhancement is accomplished with a more precise segmentation of the knuckle, which can also be obtained as a tradeoff in user convenience by using user pegs during imaging. Kumar and Zhou [11] proposed a FKP identification approach based on finite radon transform for the extraction of the orientation characteristics; which was previously proposed for palm print recognition in [12]. Zhang et al. [6] proposed a specially designed acquisition device as shown in Fig. 1b to collect FKP images. Unlike the devices proposed in [4] and [8], which first take the entire hand image and then extract the finger or finger knuckle surface regions, this system captures the FKP image directly. So with such a design, not only preprocessing steps are simplified considerably, but also the size of the imaging system is greatly reduced, which improves greatly its applicability. Zhang et al. in this work combine orientation and magnitude information extracted by Gabor filters for feature extraction. Zhang et al. 
in another research in [13] used the competitive coding method based on Gabor filters to extract local orientation information image as FKP features. The angular distance is used in the matching stage to measure the similarity between two competitive codes. The competitive coding scheme was proposed before for palm print recognition in [14]. Zhang et al. in another work in [15] used the Fourier transform based on band-limited phase-only correlation (BLPOC) to obtain global features of FKP images for matching process. Zhang et al. in the next work in [2] proposed a new approach for extracting and coding of features based on the monogenic signal theory. The 3-bit code created in each pixel implicitly represents the pixel's local orientation and phase information. Experiments conducted on standard FKP dataset show that the proposed system matches twice as fast while achieving competitive reliability with state-of-the-art methods of verification. In another research work, Zhang et al. [7] proposed a Riesz transform-based coding scheme for palm print and finger knuckle print recognition to extract local image features and used the normalized Hamming distance to match. Compared to other successful coding approaches, this approach achieved similar verification performance, but is about three times faster in the extraction of features.

The local binary pattern (LBP) has recently been used in various pattern recognition applications as a simple, powerful and highly reliable means of representing texture. After log Gabor filtering of the image, Shariatmadar et al. [16] calculate LBP histograms as feature vectors for personal identification and identity verification based on FKP. The bio-hashing method is used for the matching process. Gao et al. [17] discuss the handling of the scaling, rotation and translation variations of the FKP that result from flexibility in positioning the finger knuckle during the capturing process. These variations are handled using a dictionary learning method to reconstruct the captured finger knuckle image. Yu Pengfei et al. [18] proposed another LBP-based approach to identify individuals using FKP. The experimental results show that the method based on LBP outperforms the methods based on PCA and LDA. Gao et al. [19] proposed an integration scheme that combines multiple orientation and texture information through score level fusion in order to further enhance FKP verification accuracy. In this method, orientation coding is obtained by a multi-level image thresholding scheme after Gabor filtering of the FKP image. Also, for feature extraction, the LBP operator is applied to each Gabor filtering response. To achieve improved performance in personal authentication, Kumar [20] used minor finger knuckle patterns along with the major finger knuckle print. Local binary patterns (LBPs), improved local binary patterns (ILBPs) and 1D log Gabor filters were used to extract texture patterns of the finger knuckle surface. In subsequent work, Kumar et al. [21] investigate the effective use of minutiae quality in improving knuckle pattern matching efficiency, based on the fact that much of the law enforcement and forensic analysis of hand biometrics relies on the recovery and matching of minutiae patterns. Grover et al. 
[22] present the combination of the adaptive fuzzy decision level fusion and the FKP-based authentication score level fusion to enhance the individual fusion methods. Kulkarni et al. [23] use kekre wavelet transform-based energy coefficients as unique features for matching. In Nigam et al. [24], each pixel is represented by its vertical and horizontal Gorp code instead of its gray value, which is obtained using the gradient of its eight adjacent pixels calculated using Scharr kernels x and y directions, respectively. Usha et al. [25] suggest an integrated approach for FKP-based personal authentication based on two methodologies: angular geometric analysis-based feature extraction method (AGFEM) and contourlet transform-based feature extraction method (CTFEM). Usha et al. in another research work in [26] introduce a new approach based on two methods, including geometric and texture analysis. For angular geometric analysis, the shape-oriented characteristics of the finger knuckle print are extracted, while the knuckle texture feature analysis is performed using multi-resolution transform known as curvelet transform. Khellat-kihel et al. [27] using FKP, fingerprint and finger’s venous network establish a multimodal system which uses different resources to obtain a reliable recognition system. Considering the much information collected from various resources, in this approach the selection of the best features is considered as a solution to reduce memory space and execution time. Waghode et al. [28] use Gabor filter in preprocessing stage to remove noise, PCA for extraction of features and LDA for classification. Jaswal et al. [29] use gradient ordinal pattern (GOP) and star GOP (SGOP) to obtain robust edge information against varying illumination and a new deep-matching technique in the classification process. The matching algorithm has a multistage architecture with n layers like a construction similar to deep convolution neural nets. 
But this architecture for feature representation does not learn any NN model. Sid et al. [30] propose a biometric system based on FKP using a deep learning algorithm called discrete cosine transform network (DCTNet) and support vector machine (SVM) classifier. Proposed DCTNet architecture is composed of convolution layer, binary hashing stage and block-wise histogram extraction. Amraoui et al. [31] use compound LBP (CLBP) to create a robust feature descriptor that incorporates both the sign and inclination information of the differences between the center and the neighboring gray values. Usha et al. [32] propose a strategy for improved performance by simultaneously extracting and incorporating geometric and texture features of the finger knuckle by score level fusion on the entire dorsal surface of the finger. Completed local ternary pattern (CLTP), 2D log Gabor filter (2DLGF) and Fourier scale invariant feature transform (F-SIFT) were implemented to extract the local texture features of an acquired finger back knuckle surface. Gao et al. [33] perform reconstruction in Gabor filter response to handle variations caused in the image acquisition stage and use a score level adaptive binary fusion rule to adaptively fuse the matching distances before and after reconstruction. Muthukumar et al. [34] proposed a score level fusion FKP recognition technique with short and long Gabor wavelet and SVM classification process. In [35] by Zhai et al., a batch-normalized CNN with deep learning method is proposed. By the proposed CNN, more distinctive features can be extracted and satisfying recognition performance is achieved. Chlaoua et al. [36] apply deep learning to create a multimodal biometric system based on images of FKP modalities. FKP features are extracted by principal component analysis network (PCANet). Joshi et al. [37] use images extracted from a preprocessed knuckle region of interest (ROI) to train a Siamese convolutional neural network for FKP recognition. 
Network training uses the Euclidean distance as output to maximize the dissimilarity between images from different persons or fingers and to minimize the distance between images from the same finger. The proposed network contains three convolutional layers, each followed by the ReLU activation function, batch normalization and dropout. Fei et al. [38] suggest a discriminative direction binary feature learning (DDBFL) method for FKP recognition. In that work, a direction convolution difference vector (DCDV) is proposed to better describe the direction information of FKP images. Then, the DDBFL method is employed to learn K hash functions that map a DCDV into a binary feature vector. The final descriptor for FKP recognition is formed by concatenating block-wise histograms of the DDBFL codes. Choudhury et al. [39] propose a method for personal authentication using deep learning and the fusion of two biometric attributes, fingernail plates and finger knuckles. Kim et al. [40] propose a biometric system based on a finger-wrinkle image captured by the camera of a smartphone. A deep residual network is employed to improve the recognition performance degraded by misalignment and illumination variation during image capturing. Thapar et al. [41] propose matching the full dorsal fingers instead of the major/minor ROIs alone. They use FKIMNet, a Siamese CNN matching framework that produces a 128-D feature vector for an image and incorporates a dynamically adaptive margin, hard negative mining and data augmentation.

Usha et al. [1], Jaswal et al. [3] and Sadik et al. [5] provide overviews of the methods proposed for FKP-based authentication systems.

3 Proposed algorithm

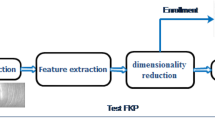

The algorithm proposed in this paper is based on texture feature extraction, and no geometric features are used. The overall architecture of a typical FKP authentication system based on texture feature extraction and matching is presented in Fig. 2.

Overall architecture of a typical FKP authentication system based on texture feature extraction and matching [24]

Region-of-interest (ROI) extraction is not considered in this paper, because the ROIs are already provided in the standard dataset used for evaluation. Image enhancement is needed before the feature extraction stage, not only to emphasize the essential elements of visual appearance, but also to counter the effects of illumination variations, local shadowing and highlights. The preprocessing stage follows the method proposed for face recognition in [42] and includes gamma correction, difference of Gaussians (DoG) filtering and contrast equalization.

Gamma correction is a nonlinear gray-level transformation that changes gray level \(I\) to \(I^{\gamma}\), where \(\gamma \in \left[ {0,1} \right]\) is a predefined parameter. This transformation expands the local dynamic range of the image in dark and shadowed regions while compressing it in highlights and bright regions. Difference of Gaussians (DoG) filtering is an easy way to achieve band-pass filtering. The DoG, as the difference between two differently low-pass filtered images in Eq. (1), removes high-frequency components representing noise, as well as some low-frequency components representing the homogeneous areas in the image. It is assumed that the frequency components in the pass band are associated with the image edges:

The DoG as an operator or convolution kernel is defined as follows:

Contrast equalization is the final stage of image enhancement chain. This process rescales the image intensities in order to obtain a consistent measure of total contrast or intensity variation. Using a robust estimator is important because the image typically contains extreme values produced by highlights, small dark regions, garbage at the image borders, etc. For this purpose, a simple and rapid approximation based on a two-stage process as follows is used:

In Eq. (3), a is a constant that reduces the influence of large values, τ is a threshold used to truncate large values after the first stage of normalization, and the mean is over the whole image. By default, values a = 0.1 and τ = 10 are used.
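The two-stage normalization can be sketched in plain Python as follows. This is a minimal illustration that assumes the commonly stated form of the normalization in [42] (divide by the a-th root of the mean of |I|^a, then repeat with magnitudes truncated at τ); the function name `contrast_equalize` and the list-of-lists image layout are choices made for this example, not taken from the paper.

```python
def contrast_equalize(image, a=0.1, tau=10.0):
    """Two-stage contrast equalization of a 2D list of floats.

    Assumes the image has at least one non-zero pixel; a DoG-filtered
    image is signed, so magnitudes are used in the normalizers.
    """
    width = len(image[0])
    pixels = [v for row in image for v in row]

    # Stage 1: divide by (mean(|I|^a))^(1/a).
    mean1 = sum(abs(v) ** a for v in pixels) / len(pixels)
    stage1 = [v / mean1 ** (1.0 / a) for v in pixels]

    # Stage 2: same normalizer, with magnitudes truncated at tau
    # so that a few extreme values cannot dominate the estimate.
    mean2 = sum(min(tau, abs(v)) ** a for v in stage1) / len(stage1)
    stage2 = [v / mean2 ** (1.0 / a) for v in stage1]

    # Restore the original row structure.
    return [stage2[r * width:(r + 1) * width] for r in range(len(image))]
```

Because the first stage divides out the overall scale, multiplying the input by a constant leaves the output unchanged as long as no value is truncated in the second stage.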

The effect of these three mentioned stages is shown on a sample FKP image in Fig. 3.

In the classification stage, the feature vector of a test image is compared to the feature vectors of the training patterns, and the closest match determines the recognized FKP. In the proposed algorithm, a simple nearest-neighbor classifier with the Chi-square distance is used in the FKP feature matching stage. The Chi-square distance between two vectors S and M is calculated from Eq. 4:
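A minimal sketch of this matching step is given below. It assumes the common form of the Chi-square distance, the sum over bins of (S_i − M_i)² / (S_i + M_i); the exact normalization in Eq. 4 may differ by a constant factor, which would not change the nearest neighbor. The names `chi_square` and `nearest_neighbor` and the gallery layout are hypothetical.

```python
def chi_square(s, m, eps=1e-12):
    """Chi-square distance between two equal-length histograms."""
    total = 0.0
    for si, mi in zip(s, m):
        denom = si + mi
        if denom > eps:  # skip bins that are empty in both histograms
            total += (si - mi) ** 2 / denom
    return total


def nearest_neighbor(query, gallery):
    """Return the label of the gallery histogram closest to `query`.

    `gallery` is a list of (label, histogram) pairs.
    """
    return min(gallery, key=lambda item: chi_square(query, item[1]))[0]
```

For instance, `nearest_neighbor([3, 1, 0], [("A", [3, 1, 0]), ("B", [0, 1, 3])])` selects `"A"`, whose histogram is at distance zero from the query.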

A strategy to increase recognition accuracy is biometric parameter fusion. In the proposed algorithm in this paper, no different biometric trait is used, but to achieve better performance, the fusion of the extracted texture features from two, three and four fingers is investigated.

As in any other pattern classification task, the feature extraction stage plays a key role in improving the results of FKP-based authentication systems. This stage is often what distinguishes one recognition system from another, and finger knuckle print recognition is no exception: differences in efficiency between finger knuckle print recognition algorithms are largely determined by the feature extraction scheme. The main contribution of this paper also lies in the feature extraction stage, which is considered in detail in the next section.

4 Feature extraction

Block diagram of the feature extraction stage for FKP recognition algorithm proposed in this paper is shown in Fig. 4.

In the proposed method, before the main feature extraction step, LBP features are extracted from the input image and an LBP image is obtained. Applying an additional stage of feature extraction before the main stage is called cascading in [43]. This idea was proposed in [43] for local Zernike moments feature extraction in a face recognition application, where it resulted in more robust and reliable features. In the next stage, the generated LBP image is divided into patches in such a way that neighboring patches overlap. Overlapping may lead to continuity between feature vectors, to a better description of the image texture and thus to improved results. Then, in each patch, the relaxed local ternary pattern (RLTP) features are extracted and their histogram forms the feature vector of that patch. RLTP, which introduces an uncertain state to encode the small pixel differences of the traditional local ternary pattern (LTP), was proposed in [44] for face recognition. It showed strong resistance to noise and illumination variations compared with traditional LBP and LTP.
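Overlapped patching can be illustrated by listing the top-left corners of square patches taken at a stride smaller than the patch size. In the sketch below, the stride of 8 pixels is an assumption made for illustration (this section does not state the amount of overlap), and `patch_origins` is a hypothetical helper name.

```python
def patch_origins(height, width, size=16, stride=8):
    """Top-left corners of size x size patches taken every `stride` pixels.

    A stride smaller than `size` makes neighboring patches overlap;
    stride == size gives non-overlapping patches.
    """
    rows = range(0, height - size + 1, stride)
    cols = range(0, width - size + 1, stride)
    return [(r, c) for r in rows for c in cols]
```

On a 32 × 32 image, 16 × 16 patches yield 4 origins without overlap (stride 16) and 9 origins with half-patch overlap (stride 8), so overlap increases the number of per-patch histograms that are concatenated.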

Feature patterns should be refined to remove noisy and irrelevant features, which mislead the classification process while increasing the dimension of the feature vectors. The rotation-invariant uniform patterns proposed in [45] can be used for this purpose, but that method relies on a single predefined type of pattern. In this paper, to obtain more discriminative patterns of FKP images, a learning framework formulated as a three-layered model in [46] is integrated with the RLTP feature vectors of the previous stage. In the remaining part of this section, two important stages of the algorithm, RLTP feature extraction and feature refinement, are investigated in more detail.

4.1 Relaxed local ternary pattern

As a nonparametric descriptor, local binary patterns (LBPs) are simple but discriminative features for texture classification [47]. \({\text{LBP}}_{P,R}\) encodes the pixel differences between the center pixel and its P neighbors on a circle of radius R. This LBP code is obtained by Eq. 5:

In Eq. 5, \(i_{c}\) denotes the gray level of the center pixel and \(i_{p}\) the gray levels of its circular neighbors; s(z) is a threshold function defined in Eq. 6:

An example of basic LBP operator is illustrated in Fig. 5.
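The basic operator can be sketched for a single pixel as follows. The sketch assumes the usual definition, in which s(z) is 1 for z ≥ 0 and 0 otherwise and neighbor p receives weight 2^p; the ordering of the neighbors and the name `lbp_code` are assumptions of this example.

```python
def lbp_code(center, neighbors):
    """LBP code of one pixel; neighbors[p] contributes weight 2**p."""
    code = 0
    for p, value in enumerate(neighbors):
        if value - center >= 0:  # s(z) = 1 for z >= 0, else 0
            code |= 1 << p
    return code
```

With center value 5 and neighbors `[6, 5, 4, 9, 1, 2, 7, 3]`, bits 0, 1, 3 and 6 are set, giving the code 1 + 2 + 8 + 64 = 75.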

The basic LBP in Eq. 5 is sensitive to noise because it thresholds exactly at the value of the center pixel [42]. A small amount of image noise can therefore change an encoded pixel difference from 0 to 1 or vice versa. The local ternary pattern (LTP), introduced in [42], is less sensitive to noise than LBP. In this method, a small pixel difference is coded into a separate state. The LTP code is calculated by Eq. 7:

In Eq. 7, \(s^{\prime}\left( {z, t} \right)\) is a threshold function and t is a predefined threshold value. Threshold function is defined in Eq. 8:

The dimension of the histogram formed by the LTP features of Eq. 7 is too large. For example, \({\text{LTP}}_{8,2}\) results in a histogram of \(3^{8} = 6561\) bins. For this reason, in [42] the LTP code is divided into a positive and a negative LBP code:

However, some information may be lost in this process. Moreover, because the positive and negative LBP histograms are strongly correlated, the split may leave much redundant information in both histograms.
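The split into two binary codes can be sketched as below, assuming the usual three-way threshold of [42] (a difference of at least t maps to +1, at most −t maps to −1, anything in between maps to 0). The function name `ltp_split` and the neighbor ordering are assumptions of this example.

```python
def ltp_split(center, neighbors, t):
    """Return (upper, lower) LBP codes of the LTP code of one pixel.

    upper has bit p set where the difference is >= t (ternary +1);
    lower has bit p set where the difference is <= -t (ternary -1).
    """
    upper = lower = 0
    for p, value in enumerate(neighbors):
        z = value - center
        if z >= t:
            upper |= 1 << p
        elif z <= -t:
            lower |= 1 << p
    return upper, lower
```

The two codes never share a set bit, and positions where both are zero are the small differences that LTP treats as a separate state.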

The relaxed local ternary pattern in [44], by proposing the concept of uncertain state to encode the small pixel differences, solves many shortcomings of LBP and LTP. In this method, the threshold function of the local ternary pattern (LTP) is defined as follows:

Here, 1 and 0 denote the two certain states, for strongly positive and strongly negative pixel differences, respectively. These states are less affected by noise and are more reliable. State X, the uncertain or unknown state, is assigned to small pixel differences, for which noise can easily flip the state from 0 to 1 or vice versa. A small pixel difference is therefore encoded as an uncertain state irrespective of its sign and magnitude, instead of as a strong 0 or 1. However, these uncertain states must be resolved in order to form the feature vector of each image patch. The approach suggested in [44] resolves state X by encoding it into both strong states equally, on the grounds that a small pixel difference is equally likely to be positive or negative. With only one uncertain bit, RLTP and LTP both generate two LBP codes. For LTP, however, the two codes are accumulated in two different histograms, whereas for RLTP a weight of 0.5 is accumulated in each of two bins of a single histogram. The RLTP and LTP codes differ more significantly when there is more than one uncertain bit. For each pixel whose RLTP code has n uncertain states, \(2^{n}\) LBP codes are generated.

The encoding process for two uncertain bits is shown with an example in Fig. 6. First, the image patch is encoded as a ternary code. Then, the ternary code is encoded into binary codes. The bit 0 and bit 5 are encoded in both states of 0 and 1. In this example, the ternary code results in four LBP codes.

Illustration of encoding scheme of relaxed LTP; firstly, the ternary code 11X1001X is obtained from the image and then turns into four binary codes [44]

To build the histogram of RLTP codes in an image patch, each generated LBP code j is accumulated in bin j of the RLTP histogram with the weight given by Eq. 11:

Therefore, for the example illustrated in Fig. 6, each of the four generated binary codes contributes 0.25 to one of the bins 210, 211, 242 and 243 of the histogram. In [44], RLTP is reported to outperform LBP and LTP in the face recognition application.
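The expansion of a ternary code into weighted binary codes can be sketched as follows. The sketch takes the weight of each generated code to be 2^(−n) for n uncertain bits, which matches the 0.5 and 0.25 contributions described in the text; the string representation of the ternary code (most significant bit first) and the name `rltp_expand` are assumptions of this example.

```python
from itertools import product


def rltp_expand(ternary):
    """Map a ternary string over '0', '1', 'X' to {lbp_code: weight}.

    Every 'X' is resolved to both 0 and 1, so n uncertain bits give
    2**n binary codes, each with weight 2**(-n).
    """
    n_uncertain = ternary.count("X")
    weight = 0.5 ** n_uncertain
    contributions = {}
    for fill in product("01", repeat=n_uncertain):
        bits = iter(fill)
        binary = "".join(next(bits) if ch == "X" else ch for ch in ternary)
        contributions[int(binary, 2)] = weight
    return contributions
```

Applied to the ternary code 11X1001X of Fig. 6, the function returns the bins 210, 211, 242 and 243, each with weight 0.25. Adding these weights into a single 256-bin histogram for every pixel of a patch gives the RLTP histogram of that patch.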

4.2 Efficient feature refinement

LBP and other LBP-based patterns, such as the RLTP used in this paper, produce some pattern types that rarely occur in the image. Such sparse high-dimensional data contributes noisy and ambiguous patterns that may distort the classification process [46]. It is therefore necessary to refine feature vectors without great loss of information. Using uniform patterns or rotation-invariant patterns is one way to discard such superfluous patterns [45]. A uniform pattern is a binary pattern with at most two transitions from 0 to 1 or vice versa when the pattern is traversed circularly. For example, 01110000 is a uniform pattern and 01010011 is a non-uniform pattern. This choice is justified by the fact that most local binary patterns in natural images are uniform; they are dominant and therefore sufficient to represent texture data. Non-uniform patterns, in contrast, are either noisy or related to information that contributes little to the overall texture representation. So after calculating LBP codes from RLTPs, only the uniform patterns are kept and the remaining patterns are discarded.

Because rotation invariance is a desirable property for texture classification, in the rotation-invariant uniform pattern, uniform patterns are circularly shifted to the right until they reach their minimum value. Thus, if an \({\text{LBP}}_{N,R}\) code obtained from an RLTP code is non-uniform, it is ignored, and if it is a uniform code, \({\text{LBP}}_{N,R}^{u}\) is changed to \({\text{LBP}}_{N,R}^{{{\text{uri}}}}\) as defined in Eq. 12 before being added to the histogram:

For example, according to Eq. 12, the patterns 01110000, 00011100 and 00000111 are all mapped to the minimum code 00000111. Applying these two concepts in the extraction of RLTP patterns of FKP images strongly reduces the dimension of the feature vectors. For example, instead of the 256 patterns of traditional LBP, \({\text{LBP}}_{8,R}\), only 58 and 9 unique binary patterns can occur in uniform LBP, \({\text{LBP}}_{8,R}^{u}\), and uniform rotation-invariant LBP, \({\text{LBP}}_{8,R}^{{{\text{uri}}}}\), respectively.
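Both refinements can be checked by enumerating all 8-bit codes. The sketch below counts circular bit transitions to test uniformity and takes the minimum over all circular rotations for rotation invariance; the function names are hypothetical, and the exact form of Eq. 12 is assumed to be this minimum-over-rotations mapping, as the example in the text suggests.

```python
def transitions(code, bits=8):
    """Number of circular 0/1 transitions in a `bits`-wide pattern."""
    rotated = (code >> 1) | ((code & 1) << (bits - 1))
    return bin(code ^ rotated).count("1")


def is_uniform(code, bits=8):
    """A pattern is uniform if it has at most two circular transitions."""
    return transitions(code, bits) <= 2


def rotation_min(code, bits=8):
    """Smallest value reachable by circularly rotating the pattern."""
    best = code
    for _ in range(bits - 1):
        code = (code >> 1) | ((code & 1) << (bits - 1))
        best = min(best, code)
    return best
```

For example, `rotation_min(0b01110000)` and `rotation_min(0b00011100)` both return `0b00000111`, and mapping every uniform 8-bit code through `rotation_min` leaves 9 distinct codes.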

In uniform pattern, the basic assumption is that a set of predefined patterns are dominant within all patterns and are enough to reflect all textural structures. This assumption, however, could not provide robust and discriminative descriptions in some textures, particularly for images with complex patterns and structures which include irregular edges and shapes [46] [48].

In [48], the several patterns that occur most frequently were chosen as the dominant LBP patterns. Although this method does not rely on predefined patterns, it loses pattern type information because it uses only the occurrence counts of the dominant patterns [46]. To compensate for the information missing from the dominant LBP features, an additional feature set based on Gabor filter responses is also used in [48].

A method of adaptive extraction of features is developed in [46] to learn from original patterns the most discriminative patterns. In the method of [46], the subset of efficient patterns is adaptively learned from the dataset. This learning model is configured as a three-layer model to estimate the efficient subset of patterns from the original patterns by simultaneously considering features' robustness, discriminative power and representation capability. The performance of this model is evaluated in [46] on two publicly available texture datasets for texture classification, two medical image datasets for protein cellular classification and disease classification, and a neonatal facial expression dataset (infant COPE dataset) for facial expression classification. Experimental results demonstrate that the obtained descriptors lead to considerable classification performance. This model is used in this paper to integrate with RLTP feature vectors in order to extract more efficient FKP texture features.

Suppose a training image set \({\text{FKP}}_{1}, {\text{FKP}}_{2}, \ldots, {\text{FKP}}_{m}\) belongs to C classes with \(n_{c}\) images in class c, and let \({\text{FKP}}_{c, i}\) be image i of class c, where c = 1, 2, …, C and, for each c, i = 1, 2, …, \(n_{c}\). The learning model contains three layers, as illustrated in Fig. 7, and obtains dominant patterns based on three targets: feature robustness, discriminative power and representation capability. Suppose that \({\text{RLTP}}_{c, i}\) is the RLTP histogram vector with \(p_{c}\) bins or pattern types extracted from training image \({\text{FKP}}_{c, i}\).

Three-layered algorithm for dominant feature extraction [46]

The robustness of a pattern in the feature vector is related to how often it occurs in the image. Therefore, in the first layer, the patterns that appear frequently in each input image, which reflect textural structures more reliably, are selected, while patterns that occur rarely and are sensitive to noise are ignored. Selecting, for each training image, the subset consisting of its most dominant patterns ensures feature vector robustness. The dominant pattern set of training image \({\text{FKP}}_{c, i}\), denoted as \(J_{c, i}\) (\(J_{c, i} \subseteq \left[ {1,2, \ldots, p_{c} } \right]\)), is the minimum set of pattern types that covers a fraction n (0 < n < 1) of all pattern occurrences of that image, and is calculated from Eq. 13:

In Eq. 13, \(f_{c, i, j}\) is the number of occurrences of pattern type j in the image \({\text{FKP}}_{c, i}\) and \(\left| {J_{c, i} } \right|\) denotes the number of elements in set \(J_{c, i}\).

Due to the existence of noise and variations in image illumination, the dominant patterns of images are different, even in the same class. Therefore, in layer two in order to identify texture images belonging to the same class, dominant pattern types that are largely consistent among all images in the class are selected by taking the intersection set of dominant pattern sets of all training images in that class. So the discriminative and robust pattern set is constructed as:

The dominant patterns of a single class, \(JC_{c}\), cannot describe the textural structures of the whole dataset well. Therefore, the union of all pattern sets \(JC_{c} \left( {c = 1,2 ,\ldots ,C} \right)\), denoted as \(J_{{{\text{global}}}}\), is constructed in layer three as the dominant pattern set that covers the information of all classes:

The histogram of pattern types in \(J_{{{\text{global}}}}\) is used as feature vector to represent training and test images. Figure 7 illustrates all three mentioned stages.
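The three layers can be sketched in a few lines of plain Python. This is an illustrative reading of the description above, not the reference implementation of [46]: the per-image set is built greedily from the most frequent bins (which yields a smallest covering set), and the function names, the dictionary layout of the training data and the 0-based bin indices are assumptions of this example.

```python
def dominant_set(hist, n=0.9):
    """Layer 1: a smallest set of bins covering fraction n of all counts."""
    total = sum(hist)
    order = sorted(range(len(hist)), key=lambda j: hist[j], reverse=True)
    chosen, covered = set(), 0.0
    for j in order:
        if covered >= n * total:
            break
        chosen.add(j)
        covered += hist[j]
    return chosen


def learn_global_patterns(train, n=0.9):
    """`train` maps class label -> non-empty list of histograms."""
    j_global = set()
    for hists in train.values():
        per_image = [dominant_set(h, n) for h in hists]  # layer 1
        j_class = set.intersection(*per_image)           # layer 2
        j_global |= j_class                              # layer 3
    return sorted(j_global)


def project(hist, j_global):
    """Keep only the bins selected in J_global as the final feature vector."""
    return [hist[j] for j in j_global]
```

For a toy training set with two classes, `{"a": [[8, 1, 1, 0], [7, 2, 0, 1]], "b": [[0, 1, 9, 0], [1, 0, 8, 1]]}` and n = 0.75, the per-class intersections are {0} and {2}, so the global set is `[0, 2]` and every histogram is reduced to those two bins.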

5 Experimental results

5.1 Dataset

To evaluate the proposed algorithm, the PolyU FKP dataset [49], the largest publicly available FKP dataset, is used. It was collected with the device depicted in Fig. 1. The dataset contains both the raw FKP images and their corresponding regions of interest (ROIs), and the ROI images are used in this research. The capture device shown in Fig. 1 is designed in such a way that the ROI in the output image is easily accessible and does not change significantly in geometric position or dimensions across multiple images, so ROI extraction is an easy task in this setup. The FKP images were collected from 165 persons, including 125 males and 40 females. Of these, 143 persons are 20–30 years old and the others are 30–50 years old. The dataset is available on the website of the Biometric Research Center, The Hong Kong Polytechnic University [49]. The images were collected in two separate sessions. In each session, the subject was asked to provide 6 images for each of the left index (LI) finger, the left middle (LM) finger, the right index (RI) finger and the right middle (RM) finger. Therefore, 48 images from 4 fingers were collected from each subject. In total, the dataset contains 7,920 images from 660 different fingers. The original image size is 220 × 110. The images exhibit finger artifacts, low contrast, illumination variation, reflection and non-rigid deformations, and are therefore well suited to evaluating the performance of different algorithms. The algorithm is implemented in MATLAB and run on a 2.6 GHz Core i5 CPU with 4 GB of RAM under Windows 8.1.

5.2 Identification results

In this experiment, the user identity is unknown. The biometric information acquired from the input user is compared with the templates of all users in the dataset, and the identity of the most similar template is assigned to the unknown user. To show the effect of each idea used in the proposed algorithm, the rows of Table 1 report the identification accuracy as the ideas are added one by one, with the preprocessing and classification methods kept unchanged in all cases. Each row gives the accuracy of identification based on a single finger, two fingers, three fingers and four fingers. Since the dataset contains images of four different fingers (LI, LM, RI and RM), each column reports the average performance of the proposed algorithm over all possible finger combinations.
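The identification protocol can be sketched as follows. The sketch assumes that each enrolled user is represented by one histogram template and that similarity is measured with the L1 distance. Both are assumptions made for illustration, since this section does not specify the matcher. The second function enumerates the finger combinations over which the multi-finger results would be averaged.

```python
from itertools import combinations

FINGERS = ("LI", "LM", "RI", "RM")


def l1_distance(a, b):
    """City-block distance between two equal-length feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def identify(probe, templates):
    """Return the label whose template is closest to the probe (L1 distance is an assumption)."""
    return min(templates, key=lambda label: l1_distance(probe, templates[label]))


def finger_subsets(k):
    """All ways of choosing k of the four fingers."""
    return list(combinations(FINGERS, k))


# Toy templates with made-up histogram values.
templates = {"user_a": [4, 0, 1], "user_b": [0, 3, 2]}
predicted = identify([3, 1, 1], templates)

# Number of finger combinations for the one- to four-finger states.
subset_counts = [len(finger_subsets(k)) for k in range(1, 5)]
```

With four fingers there are 4, 6, 4 and 1 combinations for the single-, two-, three- and four-finger states, so the two-finger column, for example, would be an average over six finger pairs.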

The first row of Table 1 gives the identification results using the original LBP feature vector with R = 2 and P = 8. The second row gives the identification rates based on the RLTP feature vector with a patch size of 16 × 16, a radius of 2 and 8 points. Applying RLTP instead of LBP improves the identification rates by 37.9% on average with respect to the original LBP. The third row gives the identification results obtained by cascading LBP and RLTP features. This approach improves the identification rates by 42.8% compared to the original LBP in the first row and by 3.4% compared to RLTP without cascading in the second row. The fourth row adds overlapping pixels in the patching to the ideas of the third row, which improves the identification results by 1.7% compared to the previous state. The fifth row shows the results of the proposed algorithm when the rotation invariant uniform idea is used in RLTP feature extraction. The results show that dominant pattern extraction based on this idea does not work very well: the identification results are better than those of the original LBP but worse than those of the other states. However, it should be noted that the length of the feature vectors, and therefore the running time of the overall algorithm, is reduced considerably. The sixth row reports the identification rates after adding dominant pattern extraction based on the three-layered model. The results of this row demonstrate that this idea, while reducing the computational complexity, improves the results by 0.7% on average.
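As a rough illustration of overlapped patching, the Python sketch below lists the top-left corners of 16 × 16 patches and concatenates per-patch histograms of integer pattern codes. The patch size comes from the text. The stride of 8 pixels, the reading of the image size as 110 rows by 220 columns, and the function names are assumptions made only for this example.

```python
def patch_origins(height, width, patch=16, stride=8):
    """Top-left corners of patch x patch windows; a stride smaller than patch gives overlap."""
    rows = range(0, height - patch + 1, stride)
    cols = range(0, width - patch + 1, stride)
    return [(r, c) for r in rows for c in cols]


def patch_histograms(code_image, n_bins, patch=16, stride=8):
    """Concatenate the per-patch histograms of an image of integer pattern codes."""
    height, width = len(code_image), len(code_image[0])
    feature = []
    for r, c in patch_origins(height, width, patch, stride):
        hist = [0] * n_bins
        for i in range(r, r + patch):
            for j in range(c, c + patch):
                hist[code_image[i][j]] += 1
        feature.extend(hist)
    return feature


# Patch counts for a 110 x 220 image (rows x columns, an assumed orientation).
non_overlapping = len(patch_origins(110, 220, patch=16, stride=16))
overlapping = len(patch_origins(110, 220, patch=16, stride=8))

# Tiny made-up code image with two pattern codes, using 2 x 2 patches and stride 1.
toy = [[0, 1, 0, 1],
       [1, 1, 0, 0],
       [0, 0, 1, 1],
       [1, 0, 1, 0]]
toy_feature = patch_histograms(toy, n_bins=2, patch=2, stride=1)
```

Under these assumptions, halving the stride raises the number of patches from 78 to 312, which shows why overlapping lengthens the feature vector and why the later dominant pattern selection is useful for keeping it compact.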

To examine the effect of the parameters of the proposed algorithm, Fig. 8 shows the four-finger identification results as the radius and the number of neighborhood points of the LBP in the cascading process are varied. The best accuracy is achieved with a radius of two and a neighborhood of eight points. Fig. 9 shows the identification results as the radius and the number of neighborhood points in RLTP extraction are varied. The best accuracy is again achieved with a radius of two and a neighborhood of eight points.

Table 2 reports the identification performance of some state-of-the-art algorithms and of the proposed algorithm. For the proposed algorithm, the average identification rate over the single-, two-, three- and four-finger states is used. The comparison shows that the proposed algorithm performs better than the traditional methods in the first six rows of the table and is comparable to the newer enhanced methods based on NN and deep learning in the next six rows. These latter methods, however, have a more complicated multistage architecture and, because of their many layers, require a more complex and time-consuming training process.

5.3 Verification results

Identity verification requires comparing the input pattern with the corresponding patterns of the claimed identity.
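A minimal Python sketch of this one-to-one comparison is given below. It assumes that the claimed identity has several enrolled templates, that the L1 distance is used, and that a claim is accepted when the smallest distance does not exceed a threshold. The distance, the threshold value and the function names are illustrative assumptions, not details taken from the paper.

```python
def l1_distance(a, b):
    """City-block distance between two equal-length feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def verify(probe, claimed_templates, threshold):
    """Accept the claim if the closest template of the claimed identity is within threshold."""
    best = min(l1_distance(probe, t) for t in claimed_templates)
    return best <= threshold


# Made-up templates enrolled for the claimed identity.
claimed = [[4, 0, 1], [3, 1, 2]]

genuine_accepted = verify([3, 1, 1], claimed, threshold=1)
impostor_accepted = verify([0, 3, 2], claimed, threshold=1)
```

Unlike identification, only the templates of the claimed identity are compared, so the cost of one verification does not grow with the number of enrolled users.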

The results of some of the state-of-the-art algorithms and the proposed algorithm are reported in Table 3. Comparing the results demonstrates the better performance of the proposed algorithm.

6 Conclusion and future work

This paper presented a new approach for personal authentication using the finger knuckle print. The knuckle print is a unique and stable biological trait that requires only contactless, inexpensive acquisition equipment and a low processing time. In the proposed method, RLTP feature vectors are extracted from overlapped patches of the LBP image of the original image. Then, dominant features are extracted using an efficient three-layered model. Experimental results on the standard dataset in identification and verification applications demonstrate the effectiveness of the proposed method. Because it uses small low-resolution images, binary patterns and dominant patterns, the proposed algorithm is fast enough to be used in real time. In addition, depending on whether speed and convenience or accuracy matters more, the proposed algorithm can be used in any state from single-finger to four-finger.

In future research, we plan to add an ROI extraction stage to our algorithm and to study its sensitivity to ROI variations and quality.

References

Usha, K., Ezhilarasan, M.: Finger knuckle biometrics–a review. Comput. Elect. Eng. 45, 249–259 (2015)

Zhang, L., Zhang, L., Zhang, D.: Monogenic code: a novel fast feature coding algorithm with applications to finger-knuckle-print recognition. IEEE International Workshop on Emerging Techniques and Challenges for Hand-Based Biometrics (ETCHB), 2010, pp. 1–4.

Jaswal, G., Kaul, A., Nath, R.: Knuckle print biometrics and fusion schemes overview, challenges, and solutions. ACM Comput. Surv. 49(2), 1–46 (2016)

Kumar, A., Ravikanth, C.: Personal authentication using finger knuckle surface. IEEE Trans. Inform. Foren. Sec. 4(1), 98–110 (2009)

Sadik, M.A., Al-Berry, M.N., Roushdy, M.: A survey on the finger knuckle prints biometric. In: Eighth International Conference on Intelligent Computing and Information Systems (ICICIS), pp. 197–204 (2017)

Zhang, L., Zhang, L., Zhang, D., Zhu, H.: Online finger-knuckle-print verification for personal authentication. Pattern Recog. 43(7), 2560–2571 (2010)

Zhang, L., Li, H.: Encoding local image patterns using riesz transforms: with applications to palm print and finger-knuckle-print recognition. J. Image Vis. Comput. 30(12), 1043–1051 (2012)

Woodard, D.L., Flynn, P.J.: Finger surface as a biometric identifier. Comput. Vis. Image Understand. 100(3), 357–384 (2005)

Jain, A.K., Duta, N.: Deformable matching of hand shapes for verification. Int. Conf. Image Process. 857–861 (1999)

Sanchez-Reillo, R., Sanchez-Avila, C., Gonzalez-Marcos, A.: Biometric identification through hand geometry measurements. IEEE Trans. Pattern Anal. Mach. Intell. 22(3), 1168–1171 (2000)

Kumar, A., Zhou, Y.: Personal identification using finger knuckle orientation features. Electron. Lett. 45(20), 1023–1025 (2009)

Jia, W., Huang, D.S., Zhang, D.: Palm print verification based on robust line orientation code. Pattern Recog. 41(5), 1504–1513 (2008)

Zhang, L., Zhang, L., Zhang, D.: Finger-knuckle-print: a new biometric identifier. In: Proceedings of the 16th IEEE international conference on image processing (ICIP), pp 1981–1984 (2009)

Kong, A.K., Zhang, D.: Competitive coding scheme for palm print verification. In: Proceedings of the 17th international conference on pattern recognition (ICPR), pp 520–523 (2004)

Zhang, L., Zhang, L., Zhang, D.: Finger-knuckle-print verification based on band limited phase only correlation. Springer Computer analysis of images and patterns, pp 141–148 (2009)

Shariatmadar, Z.S., Faez, K.: Finger-knuckle-print recognition via encoding local binary pattern. J. Circ. Syst. Comput. 22(06), 1350050 (2013)

Guangwei, G., Lei, Z., Jian, Y., et al.: Reconstruction based finger knuckle print verification with score level adaptive binary fusion. IEEE Trans. Image Process. 22(12), 5050–5062 (2013)

Yu, P.F., Zhou, H., Li, H.Y.: Personal identification using finger-knuckle-print based on local binary pattern. Appl. Mech. Mater. 441, 703–706 (2014)

Gao, G., Yang, J., Qian, J., Zhang, L.: Integration of multiple orientation and texture information for finger-knuckle-print verification. Neurocomputing 135, 180–191 (2014)

Kumar, A.: Importance of being unique from finger dorsal patterns: exploring minor finger knuckle patterns in verifying human identities. IEEE Trans. Inf. Foren. Sec. 9(8), 1288–1298 (2014)

Kumar, A., Wang, B.: Recovering and matching minutiae patterns from finger knuckle images. Pattern Recogn. Lett. 68(2), 361–367 (2015)

Grover, J., Hanmandlu, M.: Hybrid fusion of score level and adaptive fuzzy decision level fusions for the finger-knuckle-print based authentication. Appl. Soft Comput. 31, 1–13 (2015)

Kulkarni, S., Raut, R.D., Dakhole, P.K.: Wavelet based modern finger knuckle authentication. Proced. Comput. Sci. 70, 649–657 (2015)

Nigam, A., Tiwari, K., Gupta, P.: Multiple texture information fusion for finger-knuckle-print authentication system. Neurocomputing 188, 190–205 (2016)

Usha, K., Ezhilarasan, M.: Fusion of geometric and texture features for finger knuckle surface recognition. Alexand. Eng. J. 55(1), 683–697 (2016)

Usha, K., Ezhilarasan, M.: Personal recognition using finger knuckle shape oriented features and texture analysis. J. King Saud Univ. Comput. Inf. Sci. 28(4), 416–431 (2016)

Khellat-Kihel, S., Abrishambaf, R., Monteiro, J.L., Benyettou, M.: Multimodal fusion of the finger vein, fingerprint and the finger knuckle print using kernel fisher analysis. Appl. Soft Comput. 42, 439–447 (2016)

Waghode, A.B., Manjare, C.A.: Biometric authentication of person using finger knuckle. Int. Conf. Comput. Commun. Control Autom. (ICCUBEA) 1–6 (2017)

Jaswal, G., Nigam, A., Nath, R.: Finger Knuckle image based personal authentication using deepmatching. Int. Conf. Ident. Sec. Behav. Anal. (ISBA) 1–8 (2017)

Sid, K.B., Laallam, F.Z., Samai, D., Tidjani, A.: Finger Knuckle print features extraction using simple deep learning method. Int. J. Comput. Sci. Commun. Inf. Technol. (CSCIT) 5, 12–18 (2017)

Amraoui, A., Fakhri, Y., Kerroum, M.A.: Finger Knuckle print recognition system using compound local binary pattern. Int. Conf. Elect. Inf. Technol. (ICEIT), pp 1–5 (2017)

Usha, K., Ezhilarasan, M.: Robust personal authentication using finger knuckle geometric and texture features. Ain Shams Eng J 9(4), 549–565 (2018)

Gao, G., Huang, P., Wu, S., Gao, H., Yue, D.: Reconstruction in gabor response domain for efficient finger-knuckle-print verification. Aust. N. Zeal. Control Conf. (ANZCC), pp 110–114 (2018)

Muthukumar, A., Kavipriya, A.: A biometric system based on Gabor feature extraction with SVM classifier for finger-knuckle-print. Pattern Recog. Lett. 125, 150–156 (2019)

Zhai, Y., Cao, H., Cao, L., Ma, H., Gan, J., Zeng, J., Piuri, V., Scotti, F., Deng, W., Zhi, Y., Wang, J.: A novel finger-knuckle-print recognition based on batch-normalized CNN. Chin. Conf. Biomet. Recog. CCBR: Biometric Recognition, pp 11–21 (2018)

Chlaoua, R., Meraoumia, A., Aiadi, K.E., Korichi, M.: Deep learning for finger-knuckle-print identification system based on PCANet and SVM classifier. Evolv. Syst. 10(2), 261–272 (2018)

Joshi, J.C., Nangia, S.A., Tiwari, K., Gupta, K.K.: Finger Knuckleprint based personal authentication using siamese network. In: Proceedings of the 6th international conference on signal processing and integrated networks (SPIN), pp 282–286 (2019)

Fei, L., Zhang, B., Teng, S., Zeng, A., Tian, C., Zhang, W.: Learning discriminative Finger-Knuckle-print descriptor. IEEE Int. Conf. Acoust. Speech Signal Process. (ICASSP), pp 2137–2141 (2019)

Choudhury, S., Kumar, A., Laskar, S.H.: Biometric authentication through unification of finger dorsal biometric traits. Inform. Sci. 497, 202–218 (2019)

Kim, C.S., Cho, N.S., Park, K.R.: Deep residual network-based recognition of finger wrinkles using smartphone camera. IEEE Access 7, 71270–71285 (2019)

Thapar, D., Jaswal, G., Nigam, A.: FKIMNet a finger dorsal image matching network comparing component (Major, Minor and Nail) matching with holistic (Finger Dorsal) matching. Int. Joint Conf. Neural Netw, IJCNN (2019)

Tan, X., Triggs, B.: Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans. Image Process. 19(6), 1635–1650 (2010)

Sarıyanidi, E., Dağlı, V., Tek, S.C., Tunc, B., Gökmen, M.: Local zernike moments: a new representation for face recognition. IEEE Int. Conf. Image Process. (ICIP), 585–588 (2012)

Jianfeng, R., Xudong, J., Junsong, Y.: Relaxed local ternary pattern for face recognition. IEEE Int. Conf. Image Process. (ICIP) (2013)

Ojala, T., Pietikainen, M., Maenpaa, T.: Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 24(7), 971–987 (2002)

Guo, Y., Zhao, G., Pietikäinen, M.: Discriminative features for texture description. Pattern Recog. 45(10), 3834–3843 (2012)

Huang, D., Shan, C., Ardabilian, M., Wang, Y., Chen, L.: Local binary patterns and its application to facial image analysis: a survey. IEEE Trans. Syst. Man Cybern. 41(6), 765–781 (2011)

Liao, S., Law, M.W.K., Chung, A.C.S.: Dominant local binary patterns for texture classification. IEEE Trans. Image Process. 18(5), 1107–1118 (2009)

PolyU finger knuckle print dataset. (2010). http://www.comp.polyu.edu.hk/biometrics.

Cite this article

Anbari, M., Fotouhi, A.M. Finger knuckle print recognition for personal authentication based on relaxed local ternary pattern in an effective learning framework. Machine Vision and Applications 32, 55 (2021). https://doi.org/10.1007/s00138-021-01178-6

DOI: https://doi.org/10.1007/s00138-021-01178-6