Abstract

This paper proposes a novel finger knuckle pattern (FKP) based biometric recognition system that utilizes a multi-scale bank of binarized statistical image features (B-BSIF), chosen for their improved expressive power. The proposed system learns a set of convolution filters to form different BSIF feature representations. The learnt filters are then applied to each FKP trait to determine the top-performing BSIF features, and the respective filters are used to create a bank of features named B-BSIF. In particular, the presented framework first extracts the region of interest (ROI) from FKP images. In the second step, the B-BSIF coding method is applied to the ROIs to obtain enhanced multi-scale BSIF features characterized by the top-performing convolution filters. In the third step, the extracted feature histograms are concatenated to produce a large feature vector. Then, a dimensionality reduction procedure based on principal component analysis and linear discriminant analysis (PCA + LDA) is carried out to attain a compact feature representation. Finally, a nearest neighbor classifier based on the cosine Mahalanobis distance is used to ascertain the identity of the person. Experiments with the publicly available PolyU FKP dataset show that the presented framework outperforms previously proposed methods and attains very high accuracy in both identification and verification modes.


1 Introduction

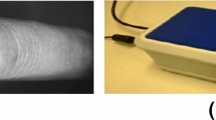

In today’s highly interconnected society, automated personal identification techniques have become a vital part of security systems, which require highly accurate classification schemes (Angelov and Gu 2018; Bao et al. 2018). One such person recognition technique is biometrics, that is, identifying a person based on their physiological and behavioral characteristics (Adeoye 2010). Biometrics have several advantages over conventional methods such as passwords or ID cards, which can be forgotten or lost (Adeoye 2010; Rani and Shanmugalakshmi 2013; Akhtar and Alfarid 2011). In fact, many biometric traits have been proposed and are widely accepted and used in diverse applications ranging from border crossing to mobile authentication (Zhang et al. 2018b; Akhtar et al. 2011). Recently, the FKP has emerged as a prominent hand-based biometric trait owing to several advantages: (1) the acquisition of finger-knuckle surfaces is relatively easy with low-cost, low-resolution cameras, (2) FKP based access systems can be used under various environmental conditions, both indoor and outdoor, (3) FKP features are stable and remain unchanged over time, i.e., FKP patterns are age-invariant, and (4) FKP based biometric recognition systems are very reliable and give high performance (Rani and Shanmugalakshmi 2013).

In the literature, a few methods for FKP based biometric recognition have been proposed. For instance, Zhang et al. (2011) presented an algorithm for FKP person authentication that integrates local orientations, obtained via an ensemble of Gabor filters, with global orientations, obtained via Fourier coefficient features. Zhang and Li (2012) enhanced FKP recognition accuracy using two Riesz-transform-based coding schemes (i.e., RCode1 and RCode2). Shariatmadar and Faez (2013) proposed an FKP recognition scheme usable in both personal identification and verification modes. Specifically, the scheme divides the ROI of the captured image into a set of blocks and applies Gabor filters to each block, followed by binary pattern extraction to obtain feature histograms. Lastly, the BioHashing method is employed to match the template and query histograms. Zeinali et al. (2014) proposed to use Directional Filter Bank (DFB) feature extraction with LDA-based dimensionality reduction for FKP recognition. Shariatmadar and Faez (2014) devised a method based on multi-instance FKP fusion, which integrates the information from different fingers [i.e., the left index/middle fingers (LIF, LMF) and right index/middle fingers (RIF, RMF)]. The information is fused at different levels, i.e., the feature, matching score and decision levels. Nigam et al. (2016) introduced an FKP based authentication system that relies on multiple textures with score level fusion. First, FKP images are pre-processed by Gabor filters. Second, quality parameters are extracted for later use in the recognition procedure. Kong et al. (2014) designed a hierarchical classification method for FKP recognition. The system consists of two stages. In the first stage, recognition is conducted using a basic Gabor feature and a novel decision rule with a fixed threshold. In the second stage, the speeded-up robust feature is used only when the basic feature is not able to perform recognition successfully. In turn, Chaa et al. (2018) introduced a method that combines two types of histograms of oriented gradients (HOG), extracted from the reflectance and illumination components of the FKP trait obtained by the Adaptive Single Scale Retinex (ASSR) algorithm. A reconstruction-based FKP verification method that decreases the false rejections caused by finger pose variations during data capture was devised by Zhang et al. (2018a, b). Recently, Chlaoua et al. (2018) employed a simple deep learning method known as the principal component analysis network (PCANet). In that model, PCA is employed to learn two stages of filter banks, followed by simple binary hashing and block histograms for clustering of feature vectors. The resultant features are finally fed to the classification step, i.e., a linear multiclass Support Vector Machine (SVM). The authors also studied a multimodal biometric system based on matching score level fusion. Zhai et al. (2018) designed an FKP recognition system using a batch-normalized convolutional neural network with random histogram equalization as the data augmentation scheme.

All in all, despite the FKP being an easy-to-use trait for person recognition, comparatively little research has been conducted on FKP biometrics. Moreover, the majority of existing FKP methods are not able to attain very high accuracy because they use features with low discriminative power. They are also very sensitive to image orientation and scale. Therefore, in this paper, we propose an automated method for finger knuckle pattern recognition that can attain excellent accuracy. The presented framework extracts ROIs from the captured finger knuckle images and then applies the BSIF image descriptor to each ROI. BSIF is very robust to rotation and illumination variations. Then, the best features (corresponding to BSIF convolution filters), i.e., those that achieve the highest performance, are selected to construct a bank of BSIF image representations. Next, a PCA + LDA based dimensionality reduction scheme is employed to obtain a compact representation of the FKP trait. Lastly, a nearest neighbor classifier is utilized to authenticate the user.

The remainder of this paper is organized as follows. Section 2 describes the proposed system, including the feature extraction and matching processes. The experimental protocol, dataset, figures of merit and experimental results are presented in Sect. 3. Finally, conclusions and future work are given in Sect. 4.

2 Proposed FKP biometric system

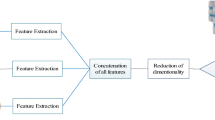

Figure 1 depicts the schematic diagram of the proposed finger knuckle pattern (FKP) based person recognition system. The framework consists of two phases: enrollment and identification. During the enrollment phase, each user presents their finger knuckle to the sensor. The FKP image of the user is captured and processed to produce the region of interest, as described in Sect. 2.1. Then, the features are extracted using the B-BSIF method, which is detailed in Sect. 2.2. The top-performing features extracted from the ROI are concatenated to form a raw histogram (a long feature vector), to which the PCA + LDA dimensionality reduction technique discussed in Sect. 2.3 is applied to obtain a concise feature representation. The compact feature representation is stored in the database as a template. All templates of enrolled users are used as training data for the decision-making classifier explained in Sect. 2.4. During the identification phase, the captured FKP image of the user undergoes the same process, and this time the extracted features are fed to the decision-making classifier to determine the identity of the person.

2.1 Extraction of the region of interest (ROI)

The procedure for extracting the region of interest from FKP images is similar to the method presented in (Zhang et al. 2010). The ROI extraction technique is composed of four steps, which are explained as follows:

Step 1: A Gaussian smoothing process is applied to the original FKP image, and the smoothed image is then down-sampled to 150 dpi (dots per inch).

Step 2: The X-axis of the coordinate system is fixed so that the bottom boundary of the finger can be easily extracted. The bottom boundary of the finger is determined by a Canny edge detector.

Step 3: The Y-axis of the coordinate system is determined by applying a Canny edge detector to the cropped sub-image extracted from the original FKP image based on the X-axis. The resulting edge map supports the convex direction coding scheme used to locate the Y-axis.

Step 4: The ROI regions indicated by the rectangle are extracted.

Figure 2 illustrates the overall steps of the ROI extraction.

2.2 Binarized statistical image features extraction

Binarized Statistical Image Features (BSIF) is a local image descriptor constructed by binarizing the responses to linear filters (Kannala and Rahtu 2012). BSIF learns a set of convolution filters from natural images in an unsupervised manner using Independent Component Analysis (ICA). These learned filters are used to represent each pixel of the captured FKP image as a binary string by computing its response to the learned convolution filters. The binary string for each pixel can be considered a local descriptor of the image intensity pattern in the neighborhood of that pixel. Finally, the histogram of the pixels’ binary string values characterizes the texture properties within the image sub-regions.

In this paper, we have used the open-source filters (Kannala and Rahtu 2012), which were trained using 50,000 image patches randomly sampled from 13 different natural scenic images. The BSIF filters are built in three main steps: mean subtraction of each patch, dimensionality reduction using PCA (Principal Component Analysis), and estimation of statistically independent filters (or basis) using ICA. Given an FKP image sample I of size n × m and a filter \(F_i\) of the same size, the filter response is obtained as follows (Kannala and Rahtu 2012):

\[ r_i = \sum_{u,v} F_i(u,v)\, I(u,v) \]

where \(F_i\), \(i=\left\{ {1,2, \ldots ,{\text{m}}} \right\}\), stands for the convolution filters, which are statistically independent and whose responses can be calculated simultaneously and then binarized in order to obtain the binary string as follows (Kannala and Rahtu 2012):

\[ b_i = \begin{cases} 1, & \text{if } r_i > 0 \\ 0, & \text{otherwise} \end{cases} \]

Finally, the BSIF features are obtained as a histogram of the pixels’ binary codes, which can efficiently describe the texture components of the FKP image. The BSIF descriptor has two essential parameters: the filter size and the filter length (i.e., the number of filters, which is also the number of bits in the binary string). A single set of filters with a fixed size and length may not generalize well to finger knuckle patterns with varying intensities, scales and orientations. Therefore, we propose to utilize multiple high-performing filters with different scales in order to capture salient features, hence the name bank of binarized statistical image features (B-BSIF); the bank is further detailed in the experimental section.
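The coding steps described above (filter response, binarization at zero, histogram of binary codes, and concatenation over a bank of filter sizes) can be sketched as follows. This is only an illustrative sketch under stated assumptions: the function names are hypothetical, the filters are random stand-ins for the ICA-learned filters of Kannala and Rahtu (2012), the ROI is a random placeholder array, and 8-bit filters are used instead of the 12-bit filters of the paper to keep the example small.

```python
import numpy as np

def filter_responses(image, filters):
    """Correlate a 2-D image with each k x k filter (valid region only)."""
    k = filters.shape[1]
    # All k x k patches of the image, shape (H-k+1, W-k+1, k, k).
    patches = np.lib.stride_tricks.sliding_window_view(image, (k, k))
    # r_i = sum_{u,v} F_i(u,v) * patch(u,v), for every pixel and filter i.
    return np.einsum("hwuv,nuv->hwn", patches, filters)

def bsif_histogram(image, filters):
    """Binarize responses at zero, pack the bits into codes, histogram them."""
    n = filters.shape[0]
    bits = (filter_responses(image, filters) > 0).astype(np.int64)
    weights = 1 << np.arange(n)      # bit i contributes 2**i to the code
    codes = bits @ weights           # one integer code in [0, 2**n) per pixel
    hist = np.bincount(codes.ravel(), minlength=2 ** n).astype(float)
    return hist / hist.sum()         # normalized histogram

def bank_bsif(image, bank):
    """Concatenate the histograms obtained with every filter set in the bank."""
    return np.concatenate([bsif_histogram(image, f) for f in bank])

rng = np.random.default_rng(0)
roi = rng.random((64, 128))          # placeholder ROI, not real FKP data
# ASSUMPTION: random filters stand in for the learned BSIF filters.
bank = [rng.standard_normal((8, k, k)) for k in (7, 9, 11)]
feature = bank_bsif(roi, bank)
print(feature.shape)
```

With real learned filters in place of the random ones, `feature` would play the role of the long concatenated histogram that is later passed to dimensionality reduction.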

Figure 3 shows an example of an FKP image and the BSIF filter processing. Figure 3a presents the input ROI FKP image. Figure 3b illustrates the learned BSIF filters with a size of 11 × 11 and a length of 12 bits. Figure 3c depicts the results of the individual convolutions of the ROI FKP image with the respective BSIF filters. Figure 3d presents the final BSIF encoded feature image.

2.3 Dimensionality reduction

The histograms extracted from the encoded FKP image using the B-BSIF descriptor are merged into one large, high-dimensional feature vector, which is difficult to process and evaluate directly. Therefore, we perform dimensionality reduction before matching. Principal Component Analysis (PCA) is a simple and widely used method for dimensionality reduction, but it ignores the separability between classes (Turk and Pentland 1991). To avoid this issue and to achieve better separability in the feature subspace, Linear Discriminant Analysis (LDA) (Belhumeur et al. 1997) may be deployed, which can lead to attractive performance in recognition tasks. However, LDA assumes normally distributed classes that share a common covariance matrix, an assumption that may not hold in practice. To mitigate these limitations of PCA and LDA while exploiting their strengths, PCA + LDA has been adopted in this paper: the PCA algorithm is applied to reduce the dimensionality of the large feature vectors, and the LDA algorithm is then applied to the PCA weights to increase the separability between the classes.
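A minimal sketch of the PCA + LDA idea is given below, assuming NumPy only. The function name, the number of retained PCA components and the synthetic data are all illustrative assumptions, not the settings used in the experiments. PCA is computed via the SVD of the centered data, and LDA is then fitted on the PCA weights by solving the eigenproblem of the within-class and between-class scatter matrices.

```python
import numpy as np

def fit_pca_lda(X, y, n_pca):
    """Return (mean, W) such that (x - mean) @ W is the PCA + LDA projection."""
    mean = X.mean(axis=0)
    Xc = X - mean
    # PCA via SVD: the rows of Vt are the principal directions.
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    W_pca = Vt[:n_pca].T                     # shape (d, n_pca)
    Z = Xc @ W_pca                           # PCA weights (zero mean)

    classes = np.unique(y)
    Sw = np.zeros((n_pca, n_pca))            # within-class scatter
    Sb = np.zeros((n_pca, n_pca))            # between-class scatter
    for c in classes:
        Zc = Z[y == c]
        mu = Zc.mean(axis=0)
        Sw += (Zc - mu).T @ (Zc - mu)
        Sb += len(Zc) * np.outer(mu, mu)     # global mean of Z is zero
    # LDA directions: leading eigenvectors of Sw^{-1} Sb.
    evals, evecs = np.linalg.eig(np.linalg.solve(Sw, Sb))
    order = np.argsort(-evals.real)[: len(classes) - 1]
    W_lda = evecs[:, order].real
    return mean, W_pca @ W_lda

# Synthetic stand-in data: 3 classes, 10 samples each, 50-D features.
rng = np.random.default_rng(0)
y = np.repeat(np.arange(3), 10)
X = rng.standard_normal((30, 50)) + 3.0 * y[:, None]
mean, W = fit_pca_lda(X, y, n_pca=10)
projected = (X - mean) @ W
print(projected.shape)
```

Keeping the number of PCA components below the number of samples minus the number of classes keeps the within-class scatter matrix invertible, which is one practical reason for running PCA before LDA.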

2.4 Matching module and normalization score

In the matching module of the proposed system, a nearest neighbor classifier based on the cosine Mahalanobis distance is used. The matching criterion is to minimize the distance (score) between the input query sample and the stored template. Assume that two vectors \({{\text{V}}_{\text{i}}}\) and \({{\text{V}}_{\text{j}}}\) stand for the feature vectors of the query and of a template in the database, respectively. Then the distance between \({{\text{V}}_{\text{i}}}\) and \({{\text{V}}_{\text{j}}}\) is calculated by the following equation:

where C refers to the covariance matrix. Prior to making the decision, Min-Max normalization is employed to transform the matching scores into [0, 1]. Given a set of matching scores \(\left\{ {{{\text{X}}_{\text{K}}}} \right\}\), where \({\text{K}}=1,2, \ldots ,{\text{n}}\), the normalized scores are given as:

\[ X_{K}^{'} = \frac{X_{K} - \min\left(\left\{ X_{K} \right\}\right)}{\max\left(\left\{ X_{K} \right\}\right) - \min\left(\left\{ X_{K} \right\}\right)} \]

where \(X_{K}^{'}\) represents the normalized scores. The final decision is made using the normalized scores.
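The matching and normalization steps can be sketched as below. The exact form of the distance is not reproduced in this text, so the sketch assumes one common definition of the cosine Mahalanobis distance, namely the negative cosine of the angle between the two vectors measured with the inverse covariance matrix; the function names and the random templates are likewise illustrative assumptions.

```python
import numpy as np

def mahalanobis_cosine(vi, vj, C_inv):
    """ASSUMED form: negative cosine between vi and vj under the metric C^{-1}."""
    num = vi @ C_inv @ vj
    den = np.sqrt(vi @ C_inv @ vi) * np.sqrt(vj @ C_inv @ vj)
    return -num / den

def min_max(scores):
    """Map a set of matching scores linearly onto [0, 1]."""
    scores = np.asarray(scores, dtype=float)
    lo, hi = scores.min(), scores.max()
    return (scores - lo) / (hi - lo)

rng = np.random.default_rng(0)
templates = rng.standard_normal((20, 4))       # placeholder enrolled templates
C_inv = np.linalg.inv(np.cov(templates, rowvar=False))

query = templates[2]                            # query identical to template 2
distances = np.array([mahalanobis_cosine(query, t, C_inv) for t in templates])
normalized = min_max(distances)
best = int(np.argmin(normalized))               # nearest neighbor decision
print(best)
```

Because min-max normalization is monotonic, the nearest neighbor is the same before and after normalization; the normalization matters when scores from several matchers are compared or fused.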

3 Experiments

In this section, we present an experimental evaluation of the proposed FKP person authentication system based on Bank BSIF descriptor.

3.1 Database

The proposed system has been tested on the publicly available FKP dataset provided by the Hong Kong Polytechnic University (PolyU) (PolyU 2010). This database has 7920 images collected from 165 persons (125 males and 40 females) aged between 20 and 50 years. The images were captured in two sessions, with 48 different FKP images of each individual. Four finger types were collected from every person: left index (LIF), left middle (LMF), right index (RIF) and right middle (RMF). Every finger type has 6 images in each session, giving a total of 1980 images per finger type.

3.2 Experimental protocol

The evaluation of biometric recognition systems can be done in two modes: identification and verification. For the identification mode, the results are presented in the form of the Rank-1 recognition rate, which is given by:

\[ \text{Rank-1} = \frac{N_{i}}{N} \times 100\% \]

where \({{\text{N}}_{\text{i}}}\) denotes the number of images successfully assigned to the right identity and \({\text{N}}\) stands for the overall number of images to be identified. The cumulative match characteristic (CMC) curve is used in closed-set identification tasks. The CMC curve presents the accuracy of a biometric system and shows how frequently the individual’s template appears within the top ranks based on the match rates (Jain et al. 2007). Thus, we have also adopted the CMC curve in the experimental results. For the verification mode, we present the results in the form of the equal error rate (EER), i.e., the error rate at the operating point where the FAR (false accept rate) equals the FRR (false reject rate). Moreover, to visually depict the performance of the biometric system, Receiver Operating Characteristic (ROC) curves are also reported. A ROC curve shows how the Genuine Acceptance Rate (GAR) changes with the FAR. In addition, in verification mode the measures VR@1%FAR (i.e., the verification rate at an operating point of 1% FAR), VR@0.1%FAR and VR@0.01%FAR have been used. For example, VR@0.1%FAR refers to the verification rate (i.e., 1 − FRR) calculated at a FAR equal to 0.1%. This operating point is widely adopted in the biometric research community, especially when the number of comparisons for “inter-class” tests (or “impostor tests”) is more than 1000. The verification rate is significant for studying the behavior of systems at low FAR using a large database, or for simulating large-scale applications in order to improve a system’s security and performance.
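As a small worked illustration of these figures of merit, the sketch below computes the Rank-1 rate and an EER estimate from distance scores (lower means more similar). The function names and the toy scores are made up for illustration only and are not taken from the experiments; the EER is approximated by sweeping the threshold over the observed scores and taking the point where FAR and FRR are closest.

```python
import numpy as np

def rank1_rate(predicted_ids, true_ids):
    """Percentage of probes whose top-ranked identity is the correct one."""
    predicted_ids = np.asarray(predicted_ids)
    true_ids = np.asarray(true_ids)
    return 100.0 * np.mean(predicted_ids == true_ids)

def far_frr(genuine, impostor, threshold):
    """FAR and FRR when a comparison is accepted if distance <= threshold."""
    far = np.mean(np.asarray(impostor) <= threshold)   # impostors accepted
    frr = np.mean(np.asarray(genuine) > threshold)     # genuines rejected
    return far, frr

def equal_error_rate(genuine, impostor):
    """Approximate EER (%) at the threshold where |FAR - FRR| is smallest."""
    thresholds = np.unique(np.concatenate([genuine, impostor]))
    rates = [far_frr(genuine, impostor, t) for t in thresholds]
    far, frr = min(rates, key=lambda r: abs(r[0] - r[1]))
    return 100.0 * (far + frr) / 2.0

# Toy distance scores (illustrative only).
genuine = [0.1, 0.2, 0.3, 0.6]
impostor = [0.4, 0.7, 0.8, 0.9]
eer = equal_error_rate(genuine, impostor)
rank1 = rank1_rate([1, 2, 3, 4], [1, 2, 3, 5])
print(eer, rank1)
```

The verification rate at a fixed FAR can be read off in the same way: choose the threshold that gives the target FAR and report 1 − FRR at that threshold.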

3.3 Experiment results

Here, we report three different experiments: Experiment I, construction of the bank of BSIF filters; Experiment II, comparisons between BSIF and B-BSIF and with the existing FKP recognition systems; and Experiment III, a multibiometric FKP recognition system.

3.3.1 Experiment I: construction of bank of BSIF filters

The goal of this experiment is to construct a bank of BSIF filters. For a comprehensive analysis, each individual BSIF filter with a different parameter combination is applied to each individual FKP modality (LIF, LMF, RIF and RMF) in order to select the best BSIF parameters and respective filters. Since the filter parameters (filter size \(k\) and filter length \(n\)) have a great influence on the performance of the proposed system, several sub-experiments were performed in both FKP identification and verification modes. The results are reported in Table 1, where it is easy to see that the performance increases with the length \(n\) of the BSIF descriptor. The (k, n) parameter combinations that achieve the highest-ranking performance on a single modality were selected and used to build the bank of BSIF (B-BSIF). These parameters, presented in Table 2, are fixed at this stage and used as the estimated parameters for subsequent experiments. The model of the B-BSIF descriptor is illustrated in Fig. 4. As can be noticed, the bank is composed of different BSIF filter sizes, namely (17 × 17), (15 × 15), (13 × 13), (11 × 11), (9 × 9) and (7 × 7), each with a length of 12 bits. This bank of filters is applied to each input FKP trait; Fig. 4 also shows an example of using B-BSIF.

3.3.2 Experiment II: comparisons between BSIF and B-BSIF and with the existing FKP recognition systems

Here, we present two sets of experiments. The first is an experimental analysis showing the efficacy of B-BSIF in comparison with the BSIF image texture descriptor for FKP based person recognition. The second provides a comparison of the proposed system with prior works in the literature. In these sets of experiments, the best BSIF parameters estimated in Experiment I are utilized to build the B-BSIF descriptor. The results of BSIF and B-BSIF on individual FKP traits are presented in Table 3. We can observe in Tables 1 and 3 that B-BSIF performs better than BSIF. The proposed method using B-BSIF achieves higher accuracy on the LMF, RMF and LIF modalities; e.g., on the RMF modality the system achieved EER = 0.03% and Rank-1 accuracy = 99.70% in verification and identification modes, respectively.

The BSIF and B-BSIF comparison results are also presented in terms of CMC and ROC curves, which can be seen in Figs. 5, 6, 7 and 8. These graphs also report a comparison between BSIF + PCA + LDA and the proposed method (B-BSIF + PCA + LDA) on each FKP modality. The results clearly show that the performance is higher when the system uses the features of the B-BSIF descriptor.

To further demonstrate the efficacy of the proposed system, a comparison with prior works in the literature on individual FKP modalities is given in Table 4. The proposed scheme outperforms the existing FKP person recognition systems. For instance, on the RMF modality the proposed method attains 99.70% accuracy in the identification scenario and a 0.03% error rate in the verification scenario, whereas the FKP based person recognition system proposed in (Shariatmadar and Faez 2013) achieved 96.72% accuracy and a 0.354% error rate in the identification and verification scenarios, respectively.

3.3.3 Experiment III: Multibiometric FKP recognition system

The aim of this experiment is to study the performance of the system in the case of information fusion, since multimodal systems that fuse information from different sources are able to alleviate the limitations (e.g., accuracy, noise, etc.) of unimodal biometric systems. Thus, we studied different scenarios in which the information provided by different finger types (LIF, LMF, RIF and RMF) is integrated. The information was fused at score level using the sum and min fusion rules. Four distinct experimental investigations were conducted: fusion of two finger types (Table 5), fusion of three finger types (Table 6), fusion of all finger types (Table 7), and a comparison with existing FKP multimodal methods (Table 8). In Tables 5, 6 and 7, we can see that the multimodal FKP systems that fuse information from more than one source improve on the accuracy of the unimodal systems. For example, using only LIF modality features for person authentication (Table 4) resulted in 0.11% (EER) and 99.70% (rank-1 accuracy), while sum fusion of LIF and RMF (Table 5) yielded 0.00% (EER) and 100% (rank-1 accuracy).
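The sum and min fusion rules at score level can be illustrated with the short sketch below. The function name and the score values are hypothetical; the scores are assumed to be already normalized to [0, 1], with one array per finger type and one entry per comparison.

```python
import numpy as np

def fuse_scores(score_lists, rule="sum"):
    """Fuse per-modality normalized scores for the same set of comparisons."""
    scores = np.vstack(score_lists)   # rows: modalities, columns: comparisons
    if rule == "sum":
        return scores.sum(axis=0)
    if rule == "min":
        return scores.min(axis=0)
    raise ValueError(f"unknown fusion rule: {rule}")

# Illustrative normalized scores for two finger types (not real results).
lif_scores = [0.2, 0.9, 0.5]
rmf_scores = [0.1, 0.7, 0.8]
fused_sum = fuse_scores([lif_scores, rmf_scores], rule="sum")
fused_min = fuse_scores([lif_scores, rmf_scores], rule="min")
print(fused_sum, fused_min)
```

The fused scores are then thresholded or ranked exactly like unimodal scores, so the same EER and Rank-1 evaluation applies to the multimodal system.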

The comparison of the proposed multimodal system with existing systems in the literature is presented in Table 8. We can notice in Table 8 that, in verification mode, the proposed system attained a lower EER than the systems in (Shariatmadar and Faez 2014) and (Morales et al. 2011). In identification mode, the proposed system achieved a higher recognition rate than the schemes designed in (Shariatmadar and Faez 2013) and (Zeinali et al. 2014).

4 Conclusion

This paper presents a new method for recognizing individuals based on their finger knuckle patterns (FKP). Specifically, the proposed method uses a bank of multi-scale binarized statistical image features (B-BSIF). The system first extracts features using different best-performing convolution filters to encode the FKP trait. Then, the histograms extracted from the encoded FKP images are concatenated into a large feature vector. A PCA + LDA technique is used to reduce the dimensionality of the feature vector. Finally, a nearest neighbor classifier based on the cosine Mahalanobis distance is used to recognize the user. The experimental results on the publicly available PolyU FKP database show that the FKP modality is quite useful in biometric systems, that the presented B-BSIF scheme is very powerful and effective for FKP recognition, and that the proposed mechanism outperforms other existing state-of-the-art techniques. Furthermore, the reported experiments show that the use of multiple finger types (i.e., a multimodal system) improves on the performance of systems using only single-finger FKP information. In the future, to improve the security and robustness of the system against spoofing, we aim to fuse the FKP modality with other modalities (e.g., iris or voice) on mobile devices.

References

Adeoye OS (2010) A survey of emerging biometric technologies. Int J Comput Appl 9(10):1–5

Akhtar Z, Alfarid N (2011) Secure learning algorithm for multimodal biometric systems against spoof attacks. In: Proc. international conference on information and network technology (IPCSIT), vol. 4, pp 52–57

Akhtar Z, Fumera G, Marcialis GL, Roli F (2011) Robustness evaluation of biometric systems under spoof attacks. In: International conference on image analysis and processing, pp 159–168

Angelov PP, Gu X (2018) Deep rule-based classifier with human-level performance and characteristics. Inf Sci (Ny) 463–464:196–213

Bao R-J, Rong H-J, Angelov PP, Chen B, Wong PK (2018) Correntropy-based evolving fuzzy neural system. IEEE Trans Fuzzy Syst 26(3):1324–1338

Belhumeur PN, Hespanha JP, Kriegman DJ (1997) Eigenfaces vs. fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell 19(7):711–720

Chaa M, Boukezzoula N, Meraoumia A (2018) Features-level fusion of reflectance and illumination images in finger-knuckle-print identification system. Int J Artif Intell Tools 27(3):1850007

Chlaoua R, Meraoumia A, Aiadi KE, Korichi M (2018) Deep learning for finger-knuckle-print identification system based on PCANet and SVM classifier. Evol Syst. https://doi.org/10.1007/s12530-018-9227-y

El-Tarhouni W, Shaikh MK, Boubchir L, Bouridane A (2014) Multi-scale shift local binary pattern based-descriptor for finger-knuckle-print recognition. In: 26th International Conference on Microelectronics (ICM), 2014, pp 184–187

Jain AK, Flynn P, Ross AA (2007) Handbook of biometrics. Springer, Berlin

Kannala J, Rahtu E (2012) Bsif: binarized statistical image features. In: 21st International Conference on Pattern Recognition (ICPR), 2012, pp 1363–1366

Kong T, Yang G, Yang L (2014) A hierarchical classification method for finger knuckle print recognition. EURASIP J Adv Signal Process 2014(1):44

Morales A, Travieso CM, Ferrer MA, Alonso JB (2011) Improved finger-knuckle-print authentication based on orientation enhancement. Electron Lett 47(6):380–381

Nigam A, Tiwari K, Gupta P (2016) Multiple texture information fusion for finger-knuckle-print authentication system. Neurocomputing 188:190–205

PolyU (2010) The Hong Kong polytechnic university (PolyU) Finger-Knuckle-Print Database [Online]. http://www.comp.polyu.edu.hk/ biometrics/FKP.html

Rani E, Shanmugalakshmi R (2013) Finger knuckle print recognition techniques—a survey. Int J Eng Sci 2(11):62–69

Shariatmadar ZS, Faez K (2013) Finger-knuckle-print recognition via encoding local-binary-pattern. J Circuits Syst Comput 22(6):1350050

Shariatmadar ZS, Faez K (2014) Finger-Knuckle-Print recognition performance improvement via multi-instance fusion at the score level. Opt J Light Electron Opt 125(3):908–910

Turk M, Pentland A (1991) Eigenfaces for recognition. J Cogn Neurosci 3(1):71–86

Zeinali B, Ayatollahi A, Kakooei M (2014) A novel method of applying directional filter bank (DFB) for finger-knuckle-print (FKP) recognition. In: 22nd Iranian Conference on Electrical engineering (ICEE), 2014, pp 500–504

Zhai Y et al. (2018) A novel finger-knuckle-print recognition based on batch-normalized CNN. In: Chinese conference on biometric recognition, pp 11–21

Zhang L, Li H (2012) Encoding local image patterns using Riesz transforms: With applications to palmprint and finger-knuckle-print recognition. Image Vis Comput 30(12):1043–1051

Zhang L, Zhang L, Zhang D, Zhu H (2010) Online finger-knuckle-print verification for personal authentication. Pattern Recognit 43(7):2560–2571

Zhang L, Zhang L, Zhang D, Zhu H (2011) Ensemble of local and global information for finger–knuckle-print recognition. Pattern Recognit 44(9):1990–1998

Zhang D, Lu G, Zhang L (2018a) Finger-knuckle-print verification with score level adaptive binary fusion. In: Advanced Biometrics. Springer, Cham, pp 151–174

Zhang D, Lu G, Zhang L (2018b) Finger-knuckle-print verification. In: Advanced biometrics. Springer, Cham, pp 85–109


Cite this article

Attia, A., Chaa, M., Akhtar, Z. et al. Finger kunckcle patterns based person recognition via bank of multi-scale binarized statistical texture features. Evolving Systems 11, 625–635 (2020). https://doi.org/10.1007/s12530-018-9260-x


DOI: https://doi.org/10.1007/s12530-018-9260-x