Abstract

Based on the criterion of minimum error entropy, this paper proposes a novel conjugate gradient algorithm, called MEE-CG. This algorithm has robust performance under non-Gaussian interference. Theoretical analysis and experimental results demonstrate that the proposed algorithm displays more robust performance than the conventional conjugate gradient methods on the basis of the mean square error and the maximum correntropy criterion. Compared with the stochastic gradient minimum error entropy algorithm and the recursive minimum error entropy algorithm, the proposed algorithm provides a trade-off between computational complexity and convergence speed.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Over the past decades, the rapid development in adaptive filtering (AF) algorithms is regarded as a significance for practical applications [20]. The AF algorithms have been widely adopted in the field of signal processing including noise cancellation [11], channel equalization [8] and system identification [30]. The fundamental AF algorithms cover the famous least mean squares (LMS) [9], recursive least squares (RLS) [22] and conjugate gradient (CG) algorithm [29]. The LMS with the fixed step size always presents slow convergent speed [19]. Although the conventional RLS algorithm has a faster convergent speed, it suffers from higher computation complexity and numerical instability [25]. Comparing with the well-known RLS and LMS algorithms, the CG algorithm provides a trade-off between computational complexity and convergence speed [31]. Because of the variable step length according to the real-time input and the avoidance for calculating matrix inversion during derivation, the CG algorithm exhibits comparable performance in convergence with a smaller burden in computational complexity than the RLS algorithm [7].

The mean square error (MSE) acts as one of the most popular criteria in AF algorithms, which works well under Gaussian noises. However, signals will be most likely contaminated by non-Gaussian noise in various physical applications, including wireless channel tracking [18], multipath estimation [12] and acoustic echo cancellation [15]. Under these circumstances, the performance of the adaptive filter under the MSE criterion may work poorly [3, 5]. Therefore, combining with the Information Theoretic Learning (ITL) [23], various AF algorithms have been proposed to cope with inference from the non-Gaussian noise. The maximum correntropy criterion (MCC) [17, 24, 27] and minimum error entropy (MEE) [2, 4, 14], acting as the two typical examples for ITL, are insensitive to larger outliers, and improve the performance under impulsive noise. Several algorithms based on the MCC criterion have been proposed, such as the LMS-type algorithm [16, 21, 26], the RLS-type algorithm named the recursive maximum correntropy (RMC) [32] and the CG-type algorithm called the q-Gaussian MCC-CG [6]. Recent research has shown that the MEE criterion presents more robust performance than the MCC [10], and has been effectively utilized in the LMS-type algorithm [26], RLS-type algorithm named the recursive minimum error entropy (RMEE) [23] and the Kalman filter called the MEE-KF [2]. To the best of our knowledge, the CG has not been applied to the MEE criterion.

Under the MEE criterion, the LMS-type algorithm has slow convergent speed or poor steady-state error [14], and the RLS-type algorithm, or the RMEE, has improved both the convergent speed and the steady state error at the cost of a high computational complexity [23]. In this paper, we derive an CG-typed algorithm based on the MEE criterion, called MEE-CG. It can be expected that the MEE-CG algorithm will achieve a trade-off between computational complexity and convergence speed, compared with the LMS-type and RLS-type algorithms based on the MEE criterion.

For comparison, the LMS-type, CG-type [6] and RLS-type [32] algorithm under the MCC criterion are called MCC-LMS, MCC-CG and MCC-RLS, respectively. The LMS-type, CG-type and RLS-type [23] algorithm under the MEE criterion are called MEE-LMS, MEE-CG (proposed in this paper) and MEE-RLS, respectively.

The organization of the paper is structured as follows: in Sect. 2, the problem is addressed; in the following Sect. 3, we derive the MEE-CG algorithm; the analyses on the convergence and computational complexity are given in Sect. 4 and then experimental simulations for system identification are provided in Sect. 5; in the final Sect. 6, we summarize the paper and obtain the conclusion.

2 Problem Statement

2.1 Adaptive Filtering Theory

The block diagram of the basic adaptive filter is shown in Fig. 1. When taking the adaptive filtering theory into consideration, the desired response \({{d}}\in {{\textbf{R}}^1}\) are generated via an input \({\textbf{u}}\in {{\textbf{R}}^M}\) at instant n

where \({\textbf {w}}_{o}\in {{\textbf{R}}^M}\) denotes the unknown coefficient, and v(n) represents the zero-mean observation noise with variance \(\sigma ^{2}_{v}\). The estimation error can be represented as

where \(y(n)={\textbf{w}}_{}^T\left( {n -1} \right) {\textbf{u}}\left( n \right) \), and \({\textbf {w}}(n-1)\) accounts for the estimation of \({\textbf {w}}_{o}\) at instant \(n-1\). For simplification, we make the assumptions as follows: 1) The additive noise is white, and we have

2) The inputs \({\textbf{u}}(n)\) is composed of a zero-mean white sequence

3) The inputs are uncorrelated with the additive noise at moments (m, n)

2.2 Minimum Error Entropy (MEE) Criterion

From the Information Theoretic Learning (ITL), we can obtain the empirical version of the quadratic information potential [14, 26] for filters with sliding window in length L

where \({G_\sigma }\) stands for the Gaussian kernel function with bandwidth \(\sigma \), and \(\lambda \left( {0 < \lambda \le 1} \right) \) denotes the forgetting factor

Assume that the error is a random variable with probability density function and an estimator of Renyi’s quadratic entropy [14] for the error can be written as

According to ITL, minimizing the error entropy is equivalent to maximizing the formula (8). Therefore, based on the MEE [2, 4, 14], the cost function can be obtained as

We get the gradient of (9) as

For each instant n, we simplify the following expressions

Thus, we are able to change the form of the following expressions

So (10) can be rewritten as

Then we obtain

Through expressions (12–14), we can obtain the \({\Phi _L}\) (see the formula (11) in [26] for details) of the objective function under the MEE criterion and its recursive method.

3 Proposed Minimum Error Entropy Conjugate Gradient

Through the derivations in Sect. 2, we have

From (12), (14) and (15), we have

After applying the attenuation window to the correlation function for the data matrix in the CG, we achieve the same expressions as the RLS-type algorithm [1, 23]

The online CG method aims to minimize the following cost function [7]

and the solution of (18) is

The weight vector \({\textbf{w}}_L^{}\) and the direction vector \(\textbf{p}_L\) can be updated as

where step factor \(\alpha _L^{}\) and direction factor \({\beta _L}\) will update for each iteration and \(\textbf{g}_L\) denotes residual vector.

Substituting (17) into (20) and (21), the residual vector \(\textbf{g}_L\) for the online CG method is

According to the conjugate properties for \(\textbf{p}_L\) of the CG, we can get

and then the expression of \({\beta _L}\) can be derived as [1]

According to the definition of the step factor \(\alpha _L^{}\) in (20), substituting (20) into the objective function \(F({\textbf{w}}_L^{})\) in (18) yields

Setting the derivative of (18) to zero, \(\alpha _L^{}\) in (25) becomes

According to the derivations above, the detailed description for the MEE-CG algorithm is given in Algorithm 1.

4 Performance Analyses

4.1 Mean Value Behavior

Assuming the input signal to be ergodic and wide-sense stationary, \(\alpha \left( n \right) \), \(\beta \left( n \right) \), \(p\left( n \right) \), \({\textbf{w}}\left( n \right) \), \(\textbf{g}\left( n \right) ,{\textbf{r}}\left( n \right) \) and \({\textbf{R}}\left( n \right) \) are independent with each other, and then we have \(E\left[ {\alpha \left( n \right) } \right] = {\bar{\alpha }} \), \(E\left[ {\beta \left( n \right) } \right] = {\bar{\beta }} \), \(E\left[ {{\textbf{r}}\left( n \right) } \right] = r\), and \(E\left[ {{\textbf{R}}\left( n \right) } \right] = R\) for simplicity. After applying Z-transform to the proposed MEE-CG, to ensure the stability of the described system, we obtain the same conclusion about the range of \(\alpha _L^{}\) and \({\bar{\beta }} \) in [1]

where \(\lambda _i\) is the ith eigenvalue of R. When \({\bar{\beta }} \rightarrow 0\), we have \(0 \le {\bar{\alpha }} \le \mathrm{{2}}\lambda _{\max }^{\mathrm{{ - 1}}}\), which also supports the steepest descent algorithm in [9].

Besides, after M iterations, we have \(\tilde{\textbf{R}}(n)\tilde{\textbf{w}}(n) \approx \tilde{\textbf{r}}(n)\), where \( {\tilde{\textbf{x}}}\) represents the estimation for \(\textbf{x}\). Hence, the norm of \( \textbf{g}(M)\) satisfies

where \(\varepsilon \) can be an arbitrary small value. According to [1], we get the bound for the norm of \(\parallel \textbf{w}_o -\textbf{w}(n) \parallel \) as follows

where \(\textbf{R}_1 = \tilde{\textbf{R}}(n)\) is positive define, \(\Vert \textbf{w} \Vert _{{\textbf{R}}_1} = \sqrt{{{\textbf{w}}^T}{{\textbf{R}}_1}{\textbf{w}}},\) and the condition number is defined as \(\kappa = {\left\| {\textbf{R}} \right\| _2}{\left\| {{{\textbf{R}}^{ - 1}}} \right\| _2}.\) After taking the expectations on both side of (29), we can reach the conclusion that MEE-CG is convergent in mean value.

4.2 Mean Square Behavior

After reaching the steady state, we have \({{\textbf{w}}(n)} \approx {{\textbf{w}}(n - 1)}\). From (20), we have \(\alpha (n) \textbf{p}(n) \approx \textbf{0}\). Then multiplying \(\alpha (n)\) with (26), we can have \(\alpha (n)^{} \approx 0\), and thus \(\textbf{g}(n) \approx \textbf{g}(n-1)\). According to (24), \({\beta (n)} \approx 0\), we can obtain \(\textbf{p}(n+1) \approx \textbf{g}(n)\) from (21). Taking this equation into (26), one can obtain \(\textbf{g}(n) \approx \textbf{0}\). Since \(\textbf{g}(n) = {\textbf{r}}(n) - {\textbf{R}}(n)^{}{\textbf{w}}(n) \approx {\textbf{r}}(n)^{} - {\textbf{R}}(n){\textbf{w}}(n-1)\), we can easily get \({\textbf{w}}(n) \approx {\textbf{R}}(n)^{ - 1}{\textbf{r}}(n)\), which is the same as the MEE-RLS algorithm. Therefore, it can be concluded that the steady state behavior for both CG and RLS algorithms are equivalent [7]. Then, according to [23], the total weighted error in steady state for the proposed MEE-CG is the same as the RMEE algorithm

The following numerical simulations also verify the correctness of the theoretical values.

4.3 Computational Complexity

Table 1 displays the computational complexities of the MSE-RLS, MSE-CG, MCC-RLS, MCC-CG, MEE-RLS and MEE-CG per iteration. Table 1 shows that the computational complexity of the MSE-CG, MCC-CG, and MEE-CG is lower than that of the MSE-RLS, MCC-RLS, and MEE-RLS, respectively.

Sections 4.2 and 4.3 show that the proposed MEE-CG algorithm are capable of achieving the same steady error as the MEE-RLS with lower burden in computation than the MEE-RLS.

5 Experimental Results

In this section, the simulations will be carried out to prove the theoretical analysis and verify the superiority of the proposed MEE-CG algorithm. We gain all the simulations from the average of independent 100 Monte Carlo trials and the performances of the algorithms are evaluated by the steady-state mean-square deviation (MSD)

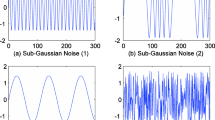

In the simulations, the unknown parameter \({\textbf {w}}_{o}\) is chosen to be a vector with the size \(12\times 1\), and we set the sliding window in the length of L=15. We choose the white Gaussian random sequences as inputs \({\textbf {u}}\) where the covariance matrix \(E\{{\textbf {uu}}^{T}\}\)=\({\textbf {I}}_{5}\) and \(E\{{\textbf {u}}^{T}{} {\textbf {u}}\}\)=5. The additive impulsive noise is \(v(n) \sim 0.94N(0,0.01) + 0.06N(0,225).\) Figure 1 compares the proposed MEE-CG under \(\sigma \)=1. The \(\lambda ^{2}\) parameters for MEE-CG1, MEE-CG2, MEE-CG3, MEE-CG4 and MEE-CG5 denote 0.942, 0.965, 0.980, 0.990 and 0.995 respectively. The corresponding theoretical values (30) are TH-MEE-CG1, TH-MEE-CG2, TH-MEE-CG3, TH-MEE-CG4 and TH-MEE-CG5 respectively.

Figure 2 shows that the convergence rate of the MCC-CG algorithm of these five sets of parameters has proportional to the value of \(\lambda \), and their steady-state errors are inversely proportional to the value of \(\lambda \). The theoretical values for steady-state error will be increased under the decrease of the forgetting factor \(\lambda \) as well as the increase of the bandwidth \(\sigma \).

Figure 3 shows that the convergence rate of the MEE-CG algorithm of these five sets of parameters has proportional to the value of \(\lambda \), and their steady-state errors are inversely proportional to the value of \(\lambda \). The theoretical values for steady-state error will be increased when decreasing the forgetting factor \(\lambda \) or increasing the bandwidth \(\sigma \). And, the convergence rates of the MCC-CG and MEE-CG can be found from Figs. 2 and 3, respectively. One can see that the MEE-CG1 reaches the same MSD for about 800 iterations than the MCC-CG1 and the MEE-CG4 is 400 iterations faster than the MCC-CG4 in Figs. 2 and 3. Thus, the proposed MEE-CG algorithm achieves faster convergence speed than the MCC-CG. When the kernel width sets within an appropriate range through the experimental results and related researches, the MEE-type algorithms can have good filtering performance and the kernel width can be chosen by cross-validation or trial and error methods in practical applications [2, 4, 14, 26]. In this paper, \(\sigma = 1\) is a good choice to ensure the performance of the proposed algorithm by trial and error methods.

Then, the comparison between MEE-LMS1 \(\left( {\mu = 0.2} \right) \), MEE-LMS2 \(\left( {\mu = 0.4} \right) \), MEE-RLS1 [23] \(\left( {{\lambda ^2} = 0.990} \right) \), MEE-RLS2 [23] \(\left( {{\lambda ^2} = 0.965} \right) \), MEE-CG1 \(\left( {{\lambda ^2} = 0.990} \right) \) and MEE-CG2 \(\left( {{\lambda ^2} = 0.965} \right) \) are given in Fig. 4. The proposed MEE-CG has a smaller MSD value and a faster convergence rate than MEE-LMS algorithm. Under the same \({\lambda ^2}\) values, it has the same MSD as MEE-RLS, and the convergence rate of MEE-CG becomes slightly slower than the MEE-RLS.

6 Conclusion

We have proposed a new MEE-CG method based on MEE criterion, which has the same theoretical steady state error as that of the MEE-RLS. The numerical simulations reveal that when coping with non-Gaussian noise, the MEE-CG has faster convergence speed than MEE-LMS algorithm and smaller steady state error than MCC-CG algorithm. In addition, the MEE-CG has comparable performance with the MEE-RLS, and its computational complexity is lower than the MEE-RLS.

Data Availability

All data generated or analysed during this study are included in this published article.

References

P.S. Chang, A.N. Willson, Analysis of conjugate gradient algorithms for adaptive filtering. IEEE Trans. Signal Process. 48(2), 409–418 (2000)

B. Chen, L. Dang, Y. Gu, N. Zheng, J.C. Principe, Minimum error entropy Kalman filter. IEEE Trans. Syst. Man. Cybern. Syst. 51(9), 5819–5829 (2021)

B. Chen, J.S. Zhao, P. Zhu, J.C. Principe, Quantized Kernel least mean square algorithm. IEEE Trans. Neural Networks Learn. Syst. 23(1), 22–32 (2012)

B. Chen, Y. Zhu, J. Hu, Mean\(-\)square convergence analysis of Adaline training with minimum error entropy criterion. IEEE Trans. Neural Netw. 21(7), 1168–1179 (2010)

B. Chen, Y. Zhu, J. Hu, J.C. Principe, System Parameter Identification: Information Criteria and Algorithms (Newnes, Oxford, 2013)

T. Chen, K. Xiong, H. Xie, G. Li, S. Wang, q-Gaussian Maximum Correntropy Adaptive Filter with Conjugate Gradient Method. In: 2019 Chinese Automation Congress (CAC) (IEEE, 2019), pp. 1164–1168

P.S.R. Diniz, M.O.K. Mendonca, J.O. Ferreira, T.N. Ferreira, Data-Selective Conjugate Gradient Algorithm. In: 2018 26th European Signal Processing Conference (EUSIPCO) (IEEE, 2018), pp. 707–711

Y. Ge, W. Zhang, H. Wang, On adaptive length of temporal filter for space-time equalization with cochannel interference. IEEE Signal Process. Lett. 25(7), 999–1003 (2018)

S. Haykin, Adaptive Filter Theory (Prentice-Hall, Hoboken, 2020)

A.R. Heravi, G.A. Hodtani, Where does minimum error entropy outperform minimum mean square error? A new and closer look. IEEE Access 6, 5856–5864 (2018)

E.P. Jayakumar, P.S. Sathidevi, An integrated acoustic echo and noise cancellation system using cross-band adaptive filters and wavelet thresholding of multitaper spectrum. Appl. Acoust. 141, 9–18 (2018)

C. Lan, H. Yue, Y. Xing, M. Ren, Multipath estimation based on modified epsilon-constrained rank-based differential evolution with minimum error entropy. IEEE Access 6, 61569–61582 (2018)

L. Li, X. Zhao, Variable step-size LMS algorithm based on hyperbolic tangent function. Circuits Syst. Signal Process. 42, 4415–4431 (2023)

Z. Li, L. Xing, B. Chen, Adaptive filtering with quantized minimum error entropy criterion. Signal Process. 172, 107534 (2020)

W. Liu, I. Park, J.C. Principe, An information theoretic approach of designing sparse Kernel adaptive filters. IEEE Trans. Neural Networks 20(12), 1950–1961 (2009)

W. Liu, P. Pokharel, J.C. Principe, Correntropy: properties and applications in non-Gaussian signal processing. IEEE Trans. Signal Process. 55(11), 5286–5298 (2007)

X. Liu, B. Chen, H. Zhao, J. Qin, J. Cao, Maximum correntropy Kalman filter with state constraints. IEEE Access 5, 25846–25853 (2017)

R. Pogula, T.K. Kumar, F. Albu, Robust sparse normalized LMAT algorithms for adaptive system identification under impulsive noise environments. Circuits Syst. Signal Process. 38(11), 5103–5134 (2019)

H. Radmanesh, M. Hajiabadi, Recursive maximum correntropy learning algorithm with adaptive Kernel size. Circuits and Syst. II: IEEE Trans. Exp. Briefs 65(7), 958–962 (2018)

A.H. Sayed, Adaptive networks. Proc. IEEE 102(4), 460–497 (2014)

A. Singh, J.C Principe, Using Correntropy as a Cost Function in Linear Adaptive Filters. In: 2009 International Joint Conference on Neural Networks (IJCNN) (IEEE, 2009), pp. 2950–2955

S.D. Stearns, Adaptive Signal Processing (Prentice-Hall, Hoboken, 1985)

G. Wang, B. Peng, Z. Feng, X. Yang, J. Deng, N. Wang, Adaptive filtering based on recursive minimum error entropy criterion. Signal Process. 179, 107836 (2021)

G. Wang, R. Xue, J. Wang, A distributed maximum correntropy Kalman filter. Signal Process. 160, 247–251 (2019)

S. Wang, W. Wang, S. Duan, L. Wang, Kernel recursive least squares with multiple feedback and its convergence analysis. IEEE Trans. Circuits Syst. II Exp. Briefs 64(10), 1237–1241 (2017)

Z. Wu, S. Peng, W. Ma, B. Chen, C. Jose, Principe, minimum error entropy algorithms with sparsity penalty constraints. Entropy 17(5), 3419–3437 (2015)

Z. Wu, J. Shi, X. Zhang, W. Ma, B. Chen, Kernel recursive maximum correntropy. Signal Process. 117, 11–16 (2015)

H. Xia, W. Ren, M. Han, Kernel Generalized half-quadratic correntropy conjugate gradient algorithm for online prediction of chaotic time series. Circuits Syst. Signal Process. 42, 2698–2722 (2023)

K. Xiong, S. Wang, The online random Fourier features conjugate gradient algorithm. IEEE Signal Process. Lett. 26(5), 740–744 (2019)

L. Yang, J. Liu, R. Yan, X. Chen, Spline adaptive filter with arctangent-momentum strategy for nonlinear system identification. Signal Process. 164, 99–109 (2019)

M. Zhang, X. Wang, X. Chen, A. Zhang, The Kernel conjugate gradient algorithms. IEEE Trans. Signal Process. 66(16), 4377–4387 (2018)

X. Zhang, K. Li, Z. Wu, Y. Fu, H. Zhao, B. Chen, Convex regularized recursive maximum correntropy algorithm. Signal Process. 129, 12–16 (2016)

Acknowledgements

The authors would like to thank all the reviewers who participated in the review.

Funding

This work was supported by the National Natural Science Foundation of China under Grant 61371182, 61971100 and 51975107.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The author declares no Conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Li, G., Sun, Q., Zhang, Y. et al. Adaptive Filtering Based on Minimum Error Entropy Conjugate Gradient. Circuits Syst Signal Process 43, 4662–4674 (2024). https://doi.org/10.1007/s00034-024-02654-w

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00034-024-02654-w