Abstract

This article addresses the simultaneous estimation of both the states and the unknown inputs of linear time-invariant fractional-order systems (LTI-FOSs) with the order \(0<\alpha <2\). Firstly, a necessary and sufficient stability criterion for LTI-FOSs with the order \(0<\alpha <1\) is derived by the linear matrix inequality technique. Secondly, a novel fractional-order observer combining state vectors and ancillary vectors is given, which generalizes several forms of existing observers. Moreover, the parameter matrices of the desired observer for both \(0<\alpha <1\) and \(1<\alpha <2\) are solved on the basis of the stability theorems and the solution to the generalized inverse matrix. Finally, a fractional-order observer design algorithm is proposed and then applied to an illustrative example, in which simulation results verify the effectiveness of the proposed approach.

1 Introduction

Fractional-order derivatives (FODs) have become a hot topic during the past decades because they are widely used in the control of dynamical processes [9, 13], and many real-world physical systems can be well described by FODs. There are three commonly used definitions of FODs, namely the Riemann–Liouville derivative, the Grünwald–Letnikov derivative, and the Caputo derivative. During the past two decades, various types of stability and stabilization problems of linear time-invariant fractional-order systems (LTI-FOSs) have been widely investigated and many results are available; for example, see [8, 16, 19, 20, 22, 25]. The robust stability and stabilization of fractional-order interval systems with the order \(\alpha \in (0,1)\) were investigated in [19]. The Mikhailov stability criterion and a finite-time Lyapunov stability criterion for fractional-order linear time-delay systems were derived in [22] and [8], respectively. The robust stability and stabilization of fractional-order interval systems with coupling relationships were considered for the case \(\alpha \in (0,1)\) in [16]. Additionally, the control problems of fractional-order descriptor systems have been widely studied. The robust stabilization of uncertain descriptor fractional-order systems was solved by designing fractional-order controllers [25]. Both state and output feedback controllers were presented to stabilize fractional-order singular uncertain systems with the order \(\alpha \in (0,2)\) [20]. Moreover, a stabilization criterion for the fractional-order triangular system was derived and the obtained stabilization results were utilized to consider the control problems of fractional-order chaotic systems in three cases [26]. Linear feedback controllers were proposed to address the synchronization and anti-synchronization of a class of fractional-order chaotic systems based on the triangular structure [27]. Passivity was considered for fractional-order neural networks affected by time-varying delay [31]. A sliding mode controller was designed for the synchronization of fractional-order chaotic systems with disturbances [28].

It is worth mentioning that, since Luenberger introduced the concept of an observer for dynamic systems, it has become one of the fundamental system concepts in modern control theory. An observer is a dynamic system which utilizes the available information of inputs and outputs to reconstruct the unmeasured states of the original system. Observer design for linear systems is a popular problem in control theory that has been studied in many aspects, because state estimation is generally required for control when not all states of the system are available. Observers, observer-based controllers and observer-based compensators designed for integer-order systems, together with applications, can be found in [17, 18, 32]. More recently, many types of full-order and reduced-order observers for LTI-FOSs have become available. Observers were designed for LTI-FOSs with unknown inputs in [24]. By decomposing the parameter matrices, an observer was derived for LTI-FOSs with \(0<\alpha \le 1\) without considering the unknown input [2]. On the basis of the solutions to generalized inverse matrices, an observer was presented for singular LTI-FOSs [23]. To satisfy the fault sensitivity, disturbance robustness and admissibility of singular LTI-FOSs, an \(H_-/H_\infty \) fault detection observer was considered [6]. In addition, a nonasymptotic pseudo-state estimator was proposed for a class of LTI-FOSs which can be transformed into the Brunovsky’s observable canonical form of pseudo-state space representation with unknown initial conditions and \(H_\infty \)-like observer [33]. A dynamic compensator based on a disturbance estimator was designed for fractional-order time-delay fuzzy systems with nonlinearities and unknown external disturbances in [12]. A robust fractional-order observer was designed for a class of disturbed LTI-FOSs in both the time domain and the frequency domain [3]. Notice that the above observers are based on the Caputo derivative, whereas [34] designed observers for LTI-FOSs under the definitions of the Riemann–Liouville derivative and the Grünwald–Letnikov derivative. On the other hand, observer-based controllers (OBCs) have been applied to the control of LTI-FOSs effectively. Robust \(H_\infty \) OBCs were derived by use of the generalized Kalman–Yakubovich–Popov lemma [4], and a novel sufficient criterion was given by means of linear matrix inequalities (LMIs) to guarantee the stabilization of disturbed uncertain LTI-FOSs [10]. Several LMIs were presented to obtain the stabilization of uncertain LTI-FOSs on the basis of robust OBCs [11, 14]. An observer-based event-triggered output feedback controller was given to investigate fractional-order cyber-physical systems with \(0<\alpha <1\), in which the system was affected by stochastic network attacks [35]. An OBC was derived for the stabilization of LTI-FOSs with input delay [7].

Additionally, state estimation of LTI-FOSs in the presence of unknown inputs has been another fascinating and relevant topic in modern control theory. Two different observers, an \(H_\infty \) filter for the estimation of the states and a fractional-order sliding mode uncertain input observer for the simultaneous estimation of both states and unknown inputs, have been addressed for LTI-FOSs with the consideration of proper initial memory effect [15]. A high-order sliding mode observer was proposed for the simultaneous estimation of the pseudo-state and the unknown input of LTI-FOSs with a single unknown input and a single output, in both noisy and noise-free cases [1]. Nevertheless, the problem of reconstructing unknown inputs is still open. The motivation for reconstructing unknown inputs is that, in some applications, measuring some of the inputs is either costly or not feasible at all. There are many situations where an input observer is required, for instance to estimate the cutting force of a machine tool or the exerted force/torque of a robotic system. In chaotic systems, one wishes to estimate not only the state for chaos synchronization but also the information signal input for secure communication. Compared with state estimation, less research has been carried out on simultaneously estimating the state of a dynamic system and its inputs. Notice that both the state estimation and the unknown input estimation are of significance: the state estimate is generally required for control when not all states of the system are available, while the unknown input can represent the impact of the failure of actuators or plant components and is thus worth estimating for use in fault detection and isolation. Consequently, a new fractional-order observer is presented to estimate both states and unknown inputs simultaneously. To the best of the authors’ knowledge, this kind of observer for LTI-FOSs is quite new and not fully investigated. Motivated by the above discussions, we investigate the interesting problem of simultaneously estimating both states and unknown inputs for LTI-FOSs by reconstructing the original systems. In comparison with the aforementioned papers, the main contributions of this work are summarized as follows.

1. Necessary and sufficient conditions are presented to guarantee the stability of LTI-FOSs with the case \(\alpha \in (0,1)\). Note that the stability criterion obtained can be applied to [23] but it is incorrect when it comes to the result in [19].

2. A novel fractional-order dynamic observer consisting of state vectors, ancillary vectors and the estimations is presented, which shows that the observers designed in [2, 3, 4, 10, 11, 23, 24, 33, 34] are special forms of the observer obtained in this paper.

3. Not only the states but also the unknown inputs are simultaneously estimated, in contrast to [2, 3, 23, 24, 33]. The reconstruction of the initial LTI-FOSs makes the estimation more efficient than the results derived in [14, 15].

The rest of this paper is arranged as follows. The definition of the Caputo derivative and the stability criteria for LTI-FOSs are presented in Sect. 2. To estimate the states and unknown inputs simultaneously, a fractional-order dynamic observer is proposed, and the parameter matrices of the observer are solved by the solutions to the generalized inverse matrix in Sect. 3. In Sect. 4, an illustrative example is given to verify the correctness and efficiency of the obtained results. Section 5 draws the conclusion of the paper.

Notation: \(A^T\): the transpose of a matrix A; \(R^n\): the real n-dimensional Euclidean space; \(R^{n\times m}\) (\({\mathbb {C}}^{n\times m}\)): the set of all \(n\times m\) matrices defined in the real (complex) plane; \(A^*\): the conjugate transpose of Hermitian matrix A; \(\otimes \): the Kronecker product; \({\mathcal {Y}}^+\): the generalized inverse of matrix \({\mathcal {Y}}\); Re(A) (Im(A)): the real (imaginary) part of Hermitian matrix A; sym(A): \(A^T+A\).

2 Preliminaries and Problem Formulation

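There are three commonly used definitions of the fractional-order derivative: the Riemann–Liouville derivative, the Grünwald–Letnikov derivative and the Caputo derivative. In this paper, only the Caputo derivative is used, since its Laplace transform allows the use of initial values of classical integer-order derivatives with clear physical interpretations. The Caputo derivative of order \(\alpha \) is defined as

$$\begin{aligned} D_t^{\alpha }f(t)=\frac{1}{\Gamma (n-\alpha )}\int _0^t (t-\tau )^{n-\alpha -1}f^{(n)}(\tau )\,\mathrm {d}\tau , \end{aligned}$$

where \(n\) is the integer satisfying \(n-1<\alpha <n\), and the notation \(\Gamma (\cdot )\) denotes the Gamma function, which is given by

$$\begin{aligned} \Gamma (z)=\int _0^{\infty }e^{-t}t^{z-1}\,\mathrm {d}t. \end{aligned}$$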

In the sequel, the following system is considered

$$\begin{aligned} D_t^{\alpha }x(t)=Ax(t), \qquad (1) \end{aligned}$$

where \(\alpha \in (0,2)\), \(x(t)\in R^n\) denotes the state, and \(A\in R^{n\times n}\).
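As a quick numerical sanity check of the Caputo derivative used throughout this section, the following minimal sketch approximates it for \(0<\alpha <1\) with the standard L1 finite-difference scheme and compares the result with the known closed form \(D_t^{\alpha }t^p=\frac{\Gamma (p+1)}{\Gamma (p+1-\alpha )}t^{p-\alpha }\). The function names, the step counts and the test functions are illustrative assumptions and are not taken from the paper.

```python
import math


def caputo_l1(f, t_end, alpha, n_steps):
    """L1 approximation of the Caputo derivative of order alpha in (0, 1) at t_end.

    The derivative f' is replaced by a piecewise-constant slope on a uniform
    grid, and the weakly singular kernel is integrated exactly on each cell.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("this sketch only covers 0 < alpha < 1")
    h = t_end / n_steps
    total = 0.0
    for k in range(n_steps):
        # Exact integral of (t_end - tau)^(-alpha) over cell k, up to common factors.
        weight = (n_steps - k) ** (1.0 - alpha) - (n_steps - k - 1) ** (1.0 - alpha)
        total += weight * (f((k + 1) * h) - f(k * h))
    return total * h ** (-alpha) / math.gamma(2.0 - alpha)


def caputo_power(t, alpha, power):
    """Closed-form Caputo derivative of t**power for power >= 1 and 0 < alpha < 1."""
    return math.gamma(power + 1.0) / math.gamma(power + 1.0 - alpha) * t ** (power - alpha)


# Hypothetical check at t = 1 with alpha = 0.5.
approx_linear = caputo_l1(lambda t: t, 1.0, 0.5, 200)
approx_square = caputo_l1(lambda t: t * t, 1.0, 0.5, 1000)
```

For a linear function the L1 scheme is exact up to rounding, while for \(t^2\) the discrepancy shrinks as the grid is refined.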

To proceed, we begin with the following Lemmas.

Lemma 1

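System (1) with the order \(\alpha \in (0,2)\) is stable iff [21]

$$\begin{aligned} |\arg (\mathrm {spec}(A))|>\alpha \frac{\pi }{2}, \qquad (2) \end{aligned}$$

where \(\mathrm {spec}(A)\) denotes the spectrum of A.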
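The criterion of [21] is commonly stated as a sector condition: every eigenvalue \(\lambda \) of A must satisfy \(|\arg (\lambda )|>\alpha \pi /2\). A minimal sketch of this test is given below; to stay within the Python standard library it is restricted to real \(2\times 2\) matrices, and the demo matrix is a hypothetical example rather than data from the paper.

```python
import cmath
import math


def eigenvalues_2x2(a):
    """Eigenvalues of a real 2x2 matrix from its trace and determinant."""
    trace = a[0][0] + a[1][1]
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    disc = cmath.sqrt(trace * trace - 4.0 * det)
    return [(trace + disc) / 2.0, (trace - disc) / 2.0]


def satisfies_sector_condition(a, alpha):
    """True if every eigenvalue lam of `a` has |arg(lam)| > alpha * pi / 2."""
    if not 0.0 < alpha < 2.0:
        raise ValueError("alpha must lie in (0, 2)")
    bound = alpha * math.pi / 2.0
    return all(abs(cmath.phase(lam)) > bound for lam in eigenvalues_2x2(a))


# Hypothetical matrix with eigenvalues 1 +/- 2i, i.e. in the open right half-plane.
A_demo = [[1.0, 2.0], [-2.0, 1.0]]
stable_for_half = satisfies_sector_condition(A_demo, 0.5)
stable_for_point_nine = satisfies_sector_condition(A_demo, 0.9)
```

The demo matrix illustrates that a matrix which is unstable in the integer-order sense can still satisfy the sector condition for a sufficiently small order, since the admissible sector widens as \(\alpha \) decreases.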

Lemma 2

When \(\alpha \in (0,1)\), (1) is stable iff [30]

where \(\theta =(1-\alpha )\frac{\pi }{2}\), and \(Q_1, Q_2\) are Hermitian matrices, i.e., \(Q_1=Q_1^*\in {\mathbb {C}}^{n \times n}\), \(Q_2=Q_2^*\in {\mathbb {C}}^{n \times n}\), with \(Q_1>0, Q_2>0\).

Lemma 3

If (1) with the order \(\alpha \in (0,1)\) satisfies the following conditions

where

then it is stable, where \(P_{\kappa 1}\in R^{n \times n}\), \(\kappa =1,2\) are symmetric positive definite matrices, and \(P_{\kappa 2} \in R^{n \times n}\) are skew-symmetric matrices.

Proof

To begin with, we define \(P_{\kappa 1}=Re(Q_{\kappa })\), \(P_{\kappa 2}=Im(Q_{\kappa })\), \(\kappa =1,2\). It follows from Lemma 2 that \(P_{\kappa 1}-iP_{\kappa 2}^T=P_{\kappa 1}+iP_{\kappa 2}\). Moreover, the asymptotic stability of (1) can be guaranteed iff there exist two skew-symmetric matrices \(P_{\kappa 2} \in R^{n \times n}\) and two symmetric positive definite matrices \(P_{\kappa 1}\in R^{n \times n}\) such that

Substituting (5) in (3) and combining with Euler's formula yields that

Considering that a Hermitian matrix Q is positive definite iff \(\left[ \begin{matrix} Re(Q)&\quad Im(Q)\\ -Im(Q)&\quad Re(Q) \end{matrix}\right] >0\), the above inequality is equivalent to

which completes the proof. \(\square \)
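The proof relies on the fact that a Hermitian matrix Q is positive definite exactly when the real symmetric block matrix built from \(Re(Q)\) and \(Im(Q)\) is positive definite. The sketch below illustrates this equivalence on two hypothetical \(2\times 2\) Hermitian matrices: positivity of the complex matrix is tested with Sylvester's criterion, and positivity of the real embedding with an attempted Cholesky factorization. All names and numerical values are assumptions made for illustration.

```python
import math


def real_embedding(q):
    """Map a complex matrix Q to the real block matrix [[Re Q, Im Q], [-Im Q, Re Q]]."""
    n = len(q)
    top = [[q[i][j].real for j in range(n)] + [q[i][j].imag for j in range(n)]
           for i in range(n)]
    bottom = [[-q[i][j].imag for j in range(n)] + [q[i][j].real for j in range(n)]
              for i in range(n)]
    return top + bottom


def is_positive_definite(sym):
    """Attempt a Cholesky factorization of a real symmetric matrix; success means sym > 0."""
    n = len(sym)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sym[i][j] - sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                low[i][j] = math.sqrt(s)
            else:
                low[i][j] = s / low[j][j]
    return True


def hermitian_2x2_is_pd(q):
    """Sylvester's criterion for a 2x2 Hermitian matrix."""
    det = (q[0][0] * q[1][1] - q[0][1] * q[1][0]).real
    return q[0][0].real > 0.0 and det > 0.0


# Hypothetical Hermitian matrices: eigenvalues {1, 3} and {-1, 3}, respectively.
Q_pos = [[2 + 0j, 1j], [-1j, 2 + 0j]]
Q_indef = [[1 + 0j, 2j], [-2j, 1 + 0j]]
```

Working with the real embedding is what allows a complex LMI to be handed to solvers that only accept real symmetric matrix variables.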

Lemma 4

(1) is stable with the order \(\alpha \in [1,2)\) iff

where \(P=P^T>0\) [5].

Consider the following fractional-order system

where \(\alpha \in (0,2)\), \(x(t)\in R^n\) is the state vector, \(y(t)\in R^p\) is the output vector, \(u(t)\in R^m\) is the control input, \(d(t)\in R^r\) is the unknown input vector, and \(\mathcal {A}\in R^{n\times n}, {\mathcal {B}}\in R^{n\times m}, {\mathcal {F}}\in R^{n\times r}, {\mathcal {C}}\in R^{p\times n}, {\mathcal {D}}\in R^{p\times r}\) are known matrices with appropriate dimensions.

Lemma 5

Consider the following matrix equation

The above equation has a solution iff [29]

and the general solution can be expressed as

where \({\mathcal {U}}\) can be selected arbitrarily and \(I_n\) is an \(n\times n\) identity matrix. In addition, a particular solution of the matrix equation can be expressed as

where \({\mathcal {Y}}^+=({\mathcal {Y}}^T{\mathcal {Y}})^{-1}\mathcal {Y}^T\).
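To make the role of the generalized inverse concrete, the following sketch assumes that the matrix equation of Lemma 5 has the form \({\mathcal {X}}{\mathcal {Y}}={\mathcal {Z}}\) with \({\mathcal {Y}}\) of full column rank, and that the general solution is \({\mathcal {X}}={\mathcal {Z}}{\mathcal {Y}}^+-{\mathcal {U}}(I-{\mathcal {Y}}{\mathcal {Y}}^+)\). Both the equation form and the sign convention are assumptions, since they are common in the observer literature but are not spelled out in the text above. Exact rational arithmetic is used so that the identities hold without rounding; the matrices are hypothetical.

```python
from fractions import Fraction


def matmul(a, b):
    """Product of two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def inverse(a):
    """Gauss-Jordan inverse of a square nonsingular matrix, computed with Fractions."""
    n = len(a)
    aug = [list(map(Fraction, row)) + identity(n)[i] for i, row in enumerate(a)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [v - factor * w for v, w in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def left_pseudo_inverse(y):
    """Y^+ = (Y^T Y)^{-1} Y^T, valid when Y has full column rank."""
    yt = transpose(y)
    return matmul(inverse(matmul(yt, y)), yt)


def general_solution(y, z, u):
    """X = Z Y^+ - U (I - Y Y^+) for the assumed equation X Y = Z."""
    y_plus = left_pseudo_inverse(y)
    proj = matmul(y, y_plus)
    n = len(y)
    eye = identity(n)
    comp = [[eye[i][j] - proj[i][j] for j in range(n)] for i in range(n)]
    zy = matmul(z, y_plus)
    uc = matmul(u, comp)
    return [[zy[i][j] - uc[i][j] for j in range(n)] for i in range(len(z))]


# Hypothetical data: Y has full column rank, so X Y = Z is solvable for any Z.
Y = [[1, 0], [0, 1], [1, 1]]
Z = [[1, 2]]
X_particular = general_solution(Y, Z, [[0, 0, 0]])   # U = 0
X_other = general_solution(Y, Z, [[5, -7, 3]])       # an arbitrary U
```

Both choices of \({\mathcal {U}}\) solve the equation, which is the degree of freedom later exploited when the observer matrices are parameterized by arbitrary matrices.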

3 Main Results

To cope with the simultaneous estimations of the states and unknown inputs, system (9) can be rewritten as

where \(\xi (t)=\left[ \begin{matrix} x(t)\\ d(t) \end{matrix}\right] \), \({\mathbb {A}}=[{\mathcal {A}} \quad {\mathcal {F}}]\), \({\mathbb {C}}=[{\mathcal {C}} \quad {\mathcal {D}}]\), \(\mathcal {E}=[I_{n\times n} \quad 0_{n\times r}]\).

To make the estimation meaningful, we first give the following assumption.

Assumption 1

rank \(\left[ \begin{matrix} {\mathcal {E}}\\ {\mathbb {C}} \end{matrix}\right] =n+r.\)
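Assumption 1 can be checked mechanically once the system matrices are known. The sketch below assembles \({\mathbb {A}}=[{\mathcal {A}}\ {\mathcal {F}}]\), \({\mathbb {C}}=[{\mathcal {C}}\ {\mathcal {D}}]\) and \({\mathcal {E}}=[I\ 0]\) as defined above and computes the rank of the stacked matrix by exact Gaussian elimination. The function names and the numerical matrices are hypothetical and only serve as an illustration.

```python
from fractions import Fraction


def rank(matrix):
    """Rank of a matrix by exact Gaussian elimination over the rationals."""
    rows = [[Fraction(v) for v in row] for row in matrix]
    n_cols = len(rows[0]) if rows else 0
    rank_count = 0
    for col in range(n_cols):
        pivot = next((r for r in range(rank_count, len(rows)) if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[rank_count], rows[pivot] = rows[pivot], rows[rank_count]
        for r in range(rank_count + 1, len(rows)):
            factor = rows[r][col] / rows[rank_count][col]
            rows[r] = [v - factor * w for v, w in zip(rows[r], rows[rank_count])]
        rank_count += 1
    return rank_count


def augmented_matrices(a, f, c, d):
    """Return ([A F], [C D], [I 0]) for the augmented state xi = [x; d]."""
    n, r = len(a), len(f[0])
    bb_a = [row_a + row_f for row_a, row_f in zip(a, f)]
    bb_c = [row_c + row_d for row_c, row_d in zip(c, d)]
    cal_e = [[int(i == j) for j in range(n)] + [0] * r for i in range(n)]
    return bb_a, bb_c, cal_e


def assumption_1_holds(a, f, c, d):
    """Check rank [E; C_aug] == n + r."""
    n, r = len(a), len(f[0])
    _, bb_c, cal_e = augmented_matrices(a, f, c, d)
    return rank(cal_e + bb_c) == n + r


# Hypothetical system with n = 2 states, r = 1 unknown input, p = 2 outputs.
A_demo = [[0, 1], [-2, -3]]
F_demo = [[0], [1]]
C_demo = [[1, 0], [0, 1]]
D_full = [[1], [0]]   # unknown input appears in the output
D_zero = [[0], [0]]   # unknown input does not appear in the output
```

Because \({\mathcal {E}}=[I\ 0]\), the rank of the stacked matrix equals \(n\) plus the rank of \({\mathcal {D}}\), so in this sketch the assumption holds exactly when \({\mathcal {D}}\) has full column rank.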

3.1 Observer Design for LTI-FOSs with Unknown Inputs

Consider the following fractional-order observer

where \(z(t)\in R^n\) is the state vector of the observer, \(\psi (t)\in R^n\) is the ancillary vector, and \({\hat{\xi }}(t)\in R^{n+r}\) is the estimate of \(\xi (t)\), i.e., of x(t) and d(t). \({\mathbb {N}}\), \({\mathbb {J}}\), \({\mathbb {H}}\), \({\mathbb {M}}\), \({\mathbb {P}}\), \({\mathbb {Q}}\), \({\mathbb {G}}\), \({\mathbb {R}}\) and \({\mathbb {S}}\) are unknown matrices with appropriate dimensions, which are determined in the sequel.

Remark 1

Without considering the unknown input and the ancillary vector, i.e., \(d(t)=0\), \(\psi (t)=0\), system (10) and observer (11) reduce to the usual form. Specifically, (11) generalizes several existing observers in the following two forms.

1. When \({\mathbb {R}}=I\), \({\mathbb {P}}=0\), \({\mathbb {Q}}=0\), \({\mathbb {G}}=0\) and \({\mathbb {M}}=0\), (11) gives that

$$\begin{aligned} D_t^{\alpha }z(t)&={\mathbb {N}}z(t)+{\mathbb {H}}u(t)+{\mathbb {J}}y(t),\\ {\hat{x}}(t)&=z(t)+{\mathbb {S}}y(t). \end{aligned}$$

2. When \({\mathbb {R}}=I\), \({\mathbb {S}}=0\), \({\mathbb {P}}=0\), \({\mathbb {Q}}=0\), \({\mathbb {G}}=0\), \({\mathbb {M}}=0\) and \(\mathcal {A}-{\mathbb {J}}{\mathcal {C}}={\mathbb {N}}\), we obtain the following observer

$$\begin{aligned} D_t^{\alpha }{\hat{x}}(t)&={{\mathcal {A}}{\hat{x}}(t)}+\mathcal {B}u(t)+{\mathbb {J}}(y(t)-{\mathcal {C}}{\hat{x}}(t)),\\ {\hat{y}}(t)&={\mathcal {C}}{\hat{x}}(t). \end{aligned}$$
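The second special case is a fractional-order Luenberger-type observer, whose estimation error \(e=x-{\hat{x}}\) obeys \(D_t^{\alpha }e=({\mathcal {A}}-{\mathbb {J}}{\mathcal {C}})e\). The following minimal scalar sketch simulates the plant and this observer with \(u(t)=0\), using an explicit Grünwald–Letnikov-type discretization applied to the deviation from the initial value. The discretization scheme, the step size and all numerical values are assumptions chosen for illustration and are not the simulation setup of the paper.

```python
def gl_coefficients(alpha, n_steps):
    """Grunwald-Letnikov weights c_j = (-1)^j * binom(alpha, j), j = 0..n_steps."""
    coeffs = [1.0]
    for j in range(1, n_steps + 1):
        coeffs.append(coeffs[-1] * (1.0 - (alpha + 1.0) / j))
    return coeffs


def simulate_observer(alpha, a, c, gain, x0, xhat0, h, n_steps):
    """Simulate D^alpha x = a*x, y = c*x and D^alpha xhat = a*xhat + gain*(y - c*xhat)."""
    coeffs = gl_coefficients(alpha, n_steps)
    h_alpha = h ** alpha
    x, xhat = [x0], [xhat0]
    for k in range(1, n_steps + 1):
        y_prev = c * x[-1]
        fx = a * x[-1]
        fxhat = a * xhat[-1] + gain * (y_prev - c * xhat[-1])
        # Memory terms: weighted history of the deviation from the initial value.
        mem_x = sum(coeffs[j] * (x[k - j] - x0) for j in range(1, k + 1))
        mem_xhat = sum(coeffs[j] * (xhat[k - j] - xhat0) for j in range(1, k + 1))
        x.append(x0 + h_alpha * fx - mem_x)
        xhat.append(xhat0 + h_alpha * fxhat - mem_xhat)
    return x, xhat


# Hypothetical scalar example: a - gain*c = -2, simulated on [0, 5] with alpha = 0.9.
x_traj, xhat_traj = simulate_observer(
    alpha=0.9, a=-0.5, c=1.0, gain=1.5, x0=1.0, xhat0=0.0, h=0.01, n_steps=500
)
final_error = abs(x_traj[-1] - xhat_traj[-1])
```

With \({\mathcal {A}}-{\mathbb {J}}{\mathcal {C}}=-2\) the error is expected to decay like a Mittag-Leffler function, that is, algebraically rather than exponentially, so a small but visible residual error at the final time is normal.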

Lemma 6

Observer (11) is effective for system (10) if there exists a matrix \({\mathbb {T}}\) such that

and the matrix

satisfies

Proof

We define the following error

where the matrix \({\mathbb {T}}\in R^{n\times n}\) is arbitrary with an appropriate dimension. Thus

and

If there exists a matrix \({\mathbb {T}}\) such that

then it holds that

Moreover, let

which yields that if \(\zeta (t)\rightarrow 0\), then \(e(t)\rightarrow 0\). In addition, the errors \(\zeta (t)\rightarrow 0\) and \(\psi (t)\rightarrow 0\) iff the matrix \(\Xi \) satisfies Lemma 1, which completes the proof. \(\square \)

3.2 Parameterization of the Observer

The design of observer (11) amounts to determining the matrices \({\mathbb {N}}\), \({\mathbb {J}}\), \({\mathbb {H}}\), \({\mathbb {M}}\), \({\mathbb {P}}\), \({\mathbb {Q}}\), \({\mathbb {G}}\), \({\mathbb {R}}\) and \({\mathbb {S}}\) appropriately. In the following, we solve for these matrices step by step.

Firstly, it follows from (12c) and (12d) that

In light of Lemma 5, (15) has a solution iff

Let \({\mathbb {E}}\in R^{{n\times (n+r)}}\) be an arbitrary matrix with full row rank such that

that is, \(\left[ \begin{matrix} {\mathbb {E}}\\ {\mathbb {C}} \end{matrix}\right] \) is equivalent to \(\left[ \begin{matrix} {\mathbb {T}}{\mathcal {E}}\\ {\mathbb {C}} \end{matrix}\right] \). Hence, there exists a matrix \({\mathbb {K}}\) such that

which yields that

i.e.,

By Lemma 5, (17) has a solution iff

and the solutions to (17) can be expressed as

Secondly, since \(\left[ \begin{matrix} {\mathbb {E}}\\ {\mathbb {C}} \end{matrix}\right] \) is of full column rank, (15) can be rewritten as

which admits a solution iff

Furthermore, on the basis of Lemma 5, the general solution to (20) is given as

where \(\Upsilon =\left[ \begin{array}{ccc} {\mathbb {E}} \\ {\mathbb {C}}\\ \end{array} \right] \), \(O_1\), \(O_2\) are arbitrary matrices with appropriate dimensions.

Finally, the solution to (22) can be described specifically as

where

Remark 2

It follows from Assumption 1 and \({\mathbb {E}}\) with full row rank that (17) holds. Additionally, once \({\mathbb {E}}\) is given, \({\mathbb {T}}\) and \({\mathbb {K}}\) can be calculated directly.

Remark 3

It follows from (14) that if \(\zeta (t) \rightarrow 0\), then \(e(t)\rightarrow 0\), i.e., e(t) is independent of \({\mathbb {R}}\). If we set \(O_2=0\) then (23c) and (23d) yield that

Notice that (12a) is equal to

or equivalently,

According to Lemma 5, the solution to (27) is presented as

in which \(O_3\) is an arbitrary matrix with an appropriate dimension. As a consequence,

where

Based on the above discussions, (13) can be rewritten as

where

Consequently, the design of observer (11) is reduced to the determination of the matrix Z such that (31) is stable. The matrix Z can be obtained in the following two cases.

3.2.1 \(\alpha \in (0,1)\)

Theorem 1

There exists a matrix Z such that (31) is stable iff there exist a symmetric positive definite matrix \(P_1\) and a matrix \(Y_1\) such that

In this case, Z is determined by \(Z=P_1^{-1}Y_1\).

Proof

Substituting (31) in (3) in Lemma 3 yields that

To simplify the calculation, by setting \( P_{12}=P_{22}=0\), \(P_{11}=P_{21}=P_1\), we have

where \(Y_1=P_1Z\), which completes the proof. \(\square \)

3.2.2 \(\alpha \in [1,2)\)

Theorem 2

There exists a parameter matrix Z such that (31) is stable iff there exist a symmetric positive definite matrix \(P_2\) and a matrix \(Y_2\) such that

In such a case, Z is determined by \(Z=P_2^{-1}Y_2\).

Proof

Substituting (31) in Lemma 4, we have that

where \(Y_2=P_2Z\), which completes the proof. \(\square \)

Based on the above results, we present the following design algorithm to determine the desired fractional-order observer.

4 Simulation Results

The following numerical example is presented to verify the derived theoretical results.

Example 1

The parameters of fractional-order system (9) are chosen as

then we have that

Clearly, \(rank \left[ \begin{matrix} {\mathcal {E}}\\ {\mathbb {C}} \end{matrix}\right] =5\), which satisfies Assumption 1.

Moreover, set

such that \(rank \left[ \begin{matrix} {\mathbb {E}}\\ {\mathbb {C}} \end{matrix}\right] =n+r\).

It follows from step 2 in Algorithm 1 that

According to step 4, we obtain that

Furthermore, step 5 deduces that

Hence, by step 6, \({\mathbb {R}}=U_1\) and \({\mathbb {S}}=U_2\).

In the sequel, set \(d(t)=[0.2\ 0.2]^T\). In such a case, by Theorem 1, we achieve that

As a consequence,

Therefore, \({\mathbb {M}}=O_1=O_3={\textbf {0}}_{3\times 3}\), and

It is easy to see that by step 9, \({\mathbb {P}}={\mathbb {Q}}={\textbf {0}}_{3\times 3}\). Finally, step 10 indicates that \({\mathbb {N}}=U_3\) and \({\mathbb {J}}=U_4\).

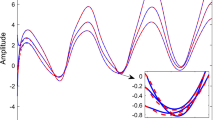

Based on the above calculations, the simulation results are presented in Figs. 1, 2 and 3 with \(\alpha =0.9\) and the initial states \((x_1(t),x_2(t),x_3(t))=(15,-5,5)\), \((z_1(t),z_2(t),z_3(t))=(0,5,5)\) and \((\psi _1(t),\psi _2(t),\psi _3(t))=(5,-10,5)\). The simulation results show that the designed fractional-order observer can effectively estimate both the states (see Figs. 1, 2) and the unknown inputs (see Fig. 3) simultaneously. Furthermore, with the same initial states, the simulation results with \(\alpha =0.6\) and \(\alpha =0.8\) are shown in Figs. 4 and 5, respectively. The simulation results indicate that the convergence becomes faster as the order \(\alpha \) increases.

5 Conclusion

In this paper, a fractional-order observer has been proposed to estimate both the states and the unknown inputs for LTI-FOSs with \(0<\alpha <2\). A necessary and sufficient criterion has been derived to guarantee the stability of LTI-FOSs with \(0<\alpha <1\). The parameter matrices of the derived fractional-order observer have been solved on the basis of the stability theorems and the solution to the generalized inverse matrix. A fractional-order observer design algorithm has been presented, and simulation results have shown that the designed observer can estimate both the states and the unknown inputs simultaneously.

For future investigations, we may consider the simultaneous estimation of both states and unknown inputs for fractional-order nonlinear systems, as well as related fractional-order observer-based controller design problems.

References

Z. Belkhatir, T.K. Laleg-Kirati, High-order sliding mode observer for fractional commensurate linear systems with unknown input. Automatica 82, 209–217 (2017)

E.A. Boroujeni, M. Pourgholi, H.R. Momeni, Reduced order linear fractional order observer, in Proceedings of the International Conference on Control Communication and Computing (ICCC), Thiruvananthapuram, India (2013), pp. 1–4

Y. Boukal, M. Darouach, M. Zasadzinski, N.E. Radhy, \(H_\infty \) observer design for linear fractional-order systems in time and frequency domains, in Proceedings of the European Control Conference (ECC), Strasbourg, France (2014), pp. 2975–2980

Y. Boukal, M. Darouach, M. Zasadzinski, N.E. Radhy, Robust \(H_\infty \) observer-based control of fractional-order systems with gain parametrization. IEEE Trans. Autom. Control 62(11), 5710–5723 (2017)

M. Chilali, P. Gahinet, P. Apkarian, Robust pole placement in LMI regions. IEEE Trans. Autom. Control 44(12), 2257–2270 (1999)

R.J. Cui, J.G. Lu, \(H_-/H_\infty \) fault detection observer design for fractional-order singular systems in finite frequency domains. ISA Trans. 129, 100–109 (2022)

W.T. Geng, C. Lin, B. Chen, Observer-based stabilizing control for fractional-order systems with input delay. ISA Trans. 100, 103–108 (2020)

P. Gokul, R. Rakkiyappan, New finite-time stability for fractional-order time-varying time-delay linear systems: a Lyapunov approach. J. Frankl. Inst. 359(14), 7620–7631 (2022)

R. Hilfer, Applications of Fractional Calculus in Physics (World Scientific Publishing, Singapore, 2001)

S. Ibrir, M. Bettayeb, Observer-based control of fractional-order linear systems with norm-bounded uncertainties: new convex-optimization conditions, in Proceedings of the 19th World Congress on the International Federation of Automatic Control, Cape Town, South Africa, vol. 47(3) (2014), pp. 2903–2908

S. Ibrir, M. Bettayeb, New sufficient conditions for observer-based control of fractional-order uncertain systems. Automatica 59, 216–223 (2015)

S. Kanakalakshmi, R. Sakthivel, L.S. Ramya, A. Parivallal, Disturbance estimator based dynamic compensator design for fractional order fuzzy control systems. Iran. J. Fuzzy Syst. 18(4), 79–93 (2021)

A. Kilbas, H. Srivastava, J. Trujillo, North-Holland Mathematics Studies: Theory and Applications of Fractional Differential Equations (Elsevier, Amsterdam, 2006)

Y. Lan, H. Huang, Y. Zhou, Observer-based robust control of \(\alpha (1\le \alpha <2)\) fractional-order uncertain systems: a linear matrix inequality approach. IET Control Theory Appl. 6(2), 229–234 (2012)

S.C. Lee, Y. Li, Y.Q. Chen, H.S. Ahn, \(H_\infty \) and sliding mode observers for linear time-invariant fractional-order dynamic systems with initial memory effect. J. Dyn. Syst. Trans. ASME 136(5), 051022 (2014)

C. Li, J.C. Wang, Robust stability and stabilization of fractional order interval systems with coupling relationships: the \(0<\alpha <1\) case. J. Frankl. Inst. 349(7), 2406–2419 (2012)

Y.B. Liu, W.C. Sun, H.J. Gao, High precision robust control for periodic tasks of linear motor via B-spline wavelet neural network observer. IEEE Trans. Ind. Electron. 69(8), 8255–8263 (2022)

Z.T. Liu, H.J. Gao, X.H. Yu, W.Y. Lin, J.B. Qiu, J.J. Rodríguez-Andina, D.S. Qu, B-spline wavelet neural network-based adaptive control for linear motor-driven systems via a novel gradient descent algorithm. IEEE Trans. Ind. Electron. 71(2), 1896–1905 (2022)

J.G. Lu, Y.Q. Chen, Robust stability and stabilization of fractional-order interval systems with the fractional order \(\alpha \): the \(0 < \alpha < 1\) case. IEEE Trans. Autom. Control 55(1), 152–158 (2010)

S. Marir, M. Chadli, D. Bouagada, New admissibility conditions for singular linear continuous-time fractional-order systems. J. Frankl. Inst. 354(2), 752–766 (2016)

D. Matignon, Stability result on fractional differential equations with applications to control processing, in Proceedings of the Computation Engineering in System and Application Multiconference (IMACS, IEEE-SMC), Lille, France (1996), pp. 963–968

D. Melchor-Aguilar, Mikhailov stability criterion for fractional commensurate order systems with delays. J. Frankl. Inst. 359(15), 8395–8408 (2022)

I. N’Doye, M. Darouach, M. Zasadzinski, N.E. Radhy, Observers design for singular fractional-order systems, in Proceedings of the IEEE Conference on Decision and Control and European Control Conference (CDC-ECC) Orlando, FL, USA (2011), pp. 4017–4022

I. N’Doye, M. Darouach, H. Voos, M. Zasadzinski, Design of unknown input fractional-order observers for fractional-order systems. Int. J. Appl. Math. Comput. Sci. 23(3), 491–500 (2013)

I. N’Doye, M. Darouach, M. Zasadzinski, N.E. Radhy, Robust stabilization of uncertain descriptor fractional-order systems. Automatica 49(6), 1907–1913 (2013)

C.C. Peng, W.H. Zhang, Back-stepping stabilization of fractional-order triangular system with applications to chaotic systems. Asian J. Control 23(1), 143–154 (2021)

C.C. Peng, W.H. Zhang, Linear feedback synchronization and anti-synchronization of a class of fractional-order chaotic systems based on triangular structure. Eur. Phys. J. Plus 134(6), 292 (2019)

K. Rabah, S. Ladaci, A fractional adaptive sliding mode control configuration for synchronizing disturbed fractional-order chaotic systems. Circuits Syst. Signal Process. 39(3), 1244–1264 (2020)

C. Rao, S. Mitra, Generalized Inverse of Matrices and Its Applications (Wiley, New York, 1971)

J. Sabatier, M. Moze, C. Farges, On stability of fractional order systems, in Plenary Lecture VIII on 3rd IFAC Workshop on Fractional Differentiation and Its Applications, Ankara, Turkey (2008)

N.H. Sau, N.T.T. Huyen, Passivity analysis of fractional-order neural networks with time-varying delay based on LMI approach. Circuits Syst. Signal Process. 39(12), 5906–5925 (2020)

P.W. Shi, W.C. Sun, X.B. Yang, I.J. Rudas, H.J. Gao, Master-slave synchronous control of dual-drive gantry stage with cogging force compensation. IEEE Trans. Syst. Man Cybern. Syst. 53(1), 216–225 (2023)

X. Wei, D.Y. Liu, D. Boutat, Non-asymptotic pseudo-state estimation for a class of fractional order linear systems. IEEE Trans. Autom. Control 62(3), 1150–1164 (2017)

M. Wyrwasm, Full-order observers for linear fractional multi-order difference systems. Bull. Pol. Acad. Sci. Tech. 65(6), 891–898 (2017)

M.H. Xiong, Y.S. Tan, D.S. Du, B.Y. Zhang, S.M. Fei, Observer-based event-triggered output feedback control for fractional-order cyber-physical systems subject to stochastic network attacks. ISA Trans. 104, 15–25 (2020)

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No. 62203247)

Ethics declarations

Conflict of interest

The authors declared that they have no conflicts of interest to this work. We declare that we do not have any commercial or associative interest that represents a conflict of interest in connection with the work submitted.

About this article

Cite this article

Peng, C., Ren, L. & Zhao, Z. Both States and Unknown Inputs Simultaneous Estimation for Fractional-Order Linear Systems. Circuits Syst Signal Process 43, 895–915 (2024). https://doi.org/10.1007/s00034-023-02522-z

DOI: https://doi.org/10.1007/s00034-023-02522-z