Abstract

This study proposes a spline adaptive filter (SAF) algorithm based on the generalized maximum correntropy criterion (GMCC), named the SAF-GMCC algorithm. Compared with traditional spline algorithms, the SAF-GMCC copes effectively with impulsive interference because the GMCC has low sensitivity to abrupt signal changes. A GMCC-based variable step-size spline filtering algorithm (SAF-VGMCC) is then proposed to overcome the limitation that a fixed step-size imposes on the SAF-GMCC algorithm and to improve both the convergence rate and the steady-state error. Combining these algorithms with the active noise control (ANC) model, this study proposes the filtered-c generalized maximum correntropy criterion (FcGMCC) and variable step-size filtered-c generalized maximum correntropy criterion (FcVGMCC) algorithms. Simulations of nonlinear system identification under impulsive interference show that the SAF-GMCC and SAF-VGMCC algorithms are more robust than the existing algorithms. In addition, simulations of the ANC model in alpha-stable noise with different impulsive strengths verify the robustness of the FcGMCC and FcVGMCC algorithms in nonlinear and non-Gaussian noise environments.

1 Introduction

Adaptive filtering theory is an optimal filtering method developed from linear filtering theories such as Wiener filtering theory [31] and Kalman filtering theory [7]. The Wiener filter is designed from known statistical properties of the signal to obtain optimal estimates. However, this design is unsuitable when the statistical properties of the signal are unknown or non-stationary. The Kalman filter is applicable when the input signal is non-stationary, but it requires the previous estimate and the current observation to estimate the signal value. In practical signal processing applications, a priori knowledge of the statistical properties of the signal and noise is often unavailable, so an adaptive filter is required. The adaptive filter learns and adjusts its parameters according to the sampled signal values and thereby approaches optimal filtering. It therefore offers excellent adaptive and real-time characteristics for signal processing problems in unknown or non-stationary environments. The adaptive filter is also applicable in a broad range of fields, such as distributed linear cyber-physical systems [10], wireless sensor networks [29], and other adaptive signal processing tasks [32, 33, 35–41]. Among linear adaptive filtering algorithms, the standard choices are the least mean square (LMS) algorithm with low computational complexity, the normalized LMS (NLMS) algorithm with strong tracking performance, and the affine projection algorithm with fast convergence speed. Although linear filtering algorithms have flourished, linear adaptive filters are unsuitable for practical applications that involve nonlinear systems. Research on nonlinear filters is therefore needed.

Many scholars have developed nonlinear filter models, proposing the Volterra adaptive filtering [1, 16, 34], functional link adaptive filtering [5] and neural network adaptive filtering [6] algorithms. The spline adaptive filtering (SAF) algorithm was developed to achieve high efficiency in nonlinear adaptive filtering algorithms, obtain lower computational complexity, and obtain better adaptability [18, 26, 31]. The SAF consists of a linear combiner and spline interpolation, with the spline control points stored in a set of discrete look-up tables (LUTs). The SAF adjusts the filter’s coefficients and spline control points through the error signal and input signal to identify the nonlinear system.

Depending on the order in which the linear and nonlinear blocks are cascaded, the SAF can be divided into the Wiener model [11], the Hammerstein model [20], and variants derived from these two types with different topologies [24]. In recent years, some scholars have combined the block-oriented architecture with the spline interpolation function to propose a new Wiener model, called the Wiener SAF [23]. The spline adaptive algorithms in this study all use the Wiener-type structure.

The SAF-LMS algorithm is built on the SAF. Previous simulations and steady-state performance analysis show that the SAF-LMS algorithm handles nonlinear problems well [25]. However, LMS-type algorithms use only the second-order statistics of the error and therefore deviate from the optimal solution when the signals are non-Gaussian. This shortcoming of LMS-type algorithms in non-Gaussian environments carries over to the SAF, which calls for further exploration of SAF algorithms.

Many improved spline-type algorithms have been proposed to solve various problems. Peng applied the maximum correntropy criterion (MCC) to the SAF algorithm to obtain the SAF-MCC algorithm [18], which exploits the robustness of the MCC to abrupt signal changes and performs excellently in heavy-tailed non-Gaussian environments. Subsequently, further algorithms suited to non-Gaussian environments have been proposed to combat impulsive interference, including the spline set-membership normalized least M-estimate algorithm [12], the spline algorithm based on the Versoria function criterion [14], and a family of logarithmic spline adaptive algorithms built on the hyperbolic cosine function [17].

Active noise control (ANC) technology integrates several modern technologies to alleviate the space and cost limitations of passive noise control. Its advantages are small equipment size, low cost, easy installation and debugging, and a remarkable control effect on low-frequency noise. As mainstream noise control technology has matured, the ANC algorithm has come to play a critical role in control performance. For example, the classic filtered-x LMS algorithm has been widely used for ANC. However, this algorithm generally assumes that the secondary channel loudspeaker is an ideal, distortion-free linear model. In real situations, ANC systems are likely to be nonlinear: the model input and output signals exhibit a nonlinear relationship, harmonics appear in the output signal, and the original signal spectrum changes [19]. A linear ANC system is inapplicable in these cases, whereas the SAF described above is a good solution.

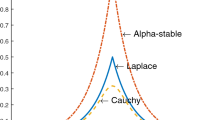

In general, the MCC uses the Gaussian kernel by default. Although not perfect, the Gaussian kernel is accepted as maximally noncommittal to outliers, and it expresses maximum uncertainty concerning missing information [3, 28]. With this kernel, however, one must choose a suitable kernel width, and an inappropriate choice significantly degrades the algorithm’s behavior. The generalized correntropy, which adopts the generalized Gaussian density function as its kernel, was subsequently proposed. This study focuses on the unsatisfactory performance of plain spline filtering under non-Gaussian noise. The SAF-GMCC algorithm is proposed by introducing the generalized maximum correntropy criterion (GMCC) into the SAF. A variable step-size is then introduced, giving the SAF-VGMCC algorithm, to improve the performance of the SAF-GMCC algorithm. The SAF-GMCC and SAF-VGMCC algorithms are analyzed theoretically, and their performance is compared through simulations in an impulsive interference environment. To address the nonlinearity of ANC systems, this study also proposes the filtered-c generalized maximum correntropy criterion (FcGMCC) and variable step-size filtered-c generalized maximum correntropy criterion (FcVGMCC) algorithms. Finally, the effectiveness of the proposed algorithms in nonlinear and non-Gaussian noise environments is verified through simulations in alpha-stable noise with different impulsive strengths.

The rest of this paper is structured as follows: Sect. 2 introduces the SAF and GMCC. Section 3 proposes the SAF-GMCC and SAF-VGMCC algorithms. Section 4 analyzes the range of the step-size and the steady-state of the SAF-GMCC algorithm under some simplifying assumptions. Section 5 provides some numerical simulations and Sect. 6 introduces the FcGMCC and FcVGMCC algorithms. Finally, Sect. 7 concludes this paper.

2 Spline Adaptive Filter and Generalized Correntropy

2.1 Spline Adaptive Filter

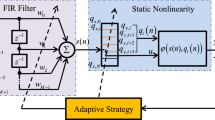

Figure 1 shows a structural schematic of the SAF. It is a cascade of an adaptive linear filter and a memoryless nonlinear function implemented by a spline interpolation scheme [22]. In Fig. 1, \(n\) is the time index, and \({\mathbf{x}}\left( n \right)\) is the input signal of the whole system and of the linear adaptive filter. The output of the linear network, \(s\left( n \right)\), is the input of the nonlinear network, where it is processed by the spline interpolation function and the LUT to generate the output signal of the system. The output of the linear network is

\[ s\left( n \right) = {\mathbf{w}}^{T} \left( n \right){\mathbf{x}}\left( n \right) \]

where \({\mathbf{w}}\left( n \right) = \left[ {w_{0} , \, w_{1} , \ldots ,w_{N - 1} } \right]^{T}\) is the adaptive weight vector for the finite impulse response filter. The tapped delayed input signal is represented as \({\mathbf{x}}\left( n \right) = \left[ {x\left( n \right), \, x\left( {n - 1} \right), \ldots , \, x\left( {n - N + 1} \right)} \right]^{T}\), where \(N\) is the number of filter taps. The input signal of the nonlinear spline filter, namely \(s\left( n \right)\), is the output signal of the linear time-invariant filter, and the final system output signal is \(y\left( n \right)\). The parameters \(i\) and \(u\) are the span index and local parameters, respectively, where \(u \in \left[ {0,1} \right]\).

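The local parameter \(u\left( n \right)\) is calculated as

\[ u\left( n \right) = \frac{s\left( n \right)}{\vartriangle x} - \left\lfloor {\frac{s\left( n \right)}{\vartriangle x}} \right\rfloor \]

The span index \(i(n)\) is calculated as

\[ i\left( n \right) = \left\lfloor {\frac{s\left( n \right)}{\vartriangle x}} \right\rfloor + \frac{H - 1}{2} \]

where \(H\) is the total number of control points, \(\vartriangle x\) is the sampling interval between adjacent control points, and \(\left\lfloor \cdot \right\rfloor\) denotes the floor operator. For notational simplicity, we use \(i \equiv i(n)\).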

The output of the SAF is as follows:

\[ y\left( n \right) = \varphi_{i} \left( {u\left( n \right)} \right) = {\mathbf{u}}_{n}^{T} {\mathbf{Ch}}_{i} \]

where \({\mathbf{u}}_{n} = \left[ {u^{3} \left( n \right), \, u^{2} \left( n \right), \, u\left( n \right),1} \right]^{T}\); for notational simplicity, we use \({\mathbf{u}} \equiv {\mathbf{u}}_{n}\). The span index determines the starting position of a group of four consecutive control points in the LUT, which form the control point vector \({\mathbf{h}}_{i} = \left[ { \, h_{i} , \, h_{i + 1} , \, h_{i + 2} , \, h_{i + 3} } \right]^{T}\). \({\mathbf{C}}\) is the spline basis matrix. The CR-spline and B-spline basis matrices are the most widely used in SAFs, and the CR-spline basis matrix [2] produces a more local approximation than the B-spline basis matrix [22]. To improve the accuracy of the nonlinearity estimate, the CR-spline basis matrix is used:

\[ {\mathbf{C}} = \frac{1}{2}\begin{bmatrix} - 1 & 3 & - 3 & 1 \\ 2 & - 5 & 4 & - 1 \\ - 1 & 0 & 1 & 0 \\ 0 & 2 & 0 & 0 \end{bmatrix} \]
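The forward pass of the Wiener-type SAF described in this subsection can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the function name `saf_forward`, the 0-based index shift, and the clamping of the span index at the table edges are assumptions made here, and the matrix is the standard Catmull-Rom basis.

```python
import math
import numpy as np

# Catmull-Rom (CR) spline basis matrix in its standard form.
C_CR = 0.5 * np.array([
    [-1.0,  3.0, -3.0,  1.0],
    [ 2.0, -5.0,  4.0, -1.0],
    [-1.0,  0.0,  1.0,  0.0],
    [ 0.0,  2.0,  0.0,  0.0],
])

def saf_forward(w, x_vec, lut, dx):
    """Evaluate a Wiener-type SAF for one tapped-delay input vector.

    Returns (y, s, u, i0); i0 is the 0-based start of the 4-point span.
    """
    H = len(lut)
    s = float(np.dot(w, x_vec))      # linear filter output s(n)
    z = s / dx
    fl = math.floor(z)
    u = z - fl                       # local parameter u(n) in [0, 1)
    # Assumption: the span index floor(s/dx) + (H-1)/2 refers to 1-based
    # control points, so a 0-based array starts one position earlier.
    i0 = fl + (H - 1) // 2 - 1
    i0 = min(max(i0, 0), H - 4)      # edge clamping is an assumption
    u_vec = np.array([u**3, u**2, u, 1.0])
    h = np.asarray(lut[i0:i0 + 4], dtype=float)
    y = float(u_vec @ C_CR @ h)      # y(n) = u^T C h_i
    return y, s, u, i0

# A LUT that samples the identity function: the CR spline reproduces
# linear data exactly, so the output should equal s(n).
dx = 0.2
lut = (np.arange(21) - 10) * dx
w = np.array([1.0, 0.0, 0.0, 0.0])
y, s, u, i0 = saf_forward(w, np.array([0.33, 0.5, -0.2, 0.1]), lut, dx)
```

With the identity LUT the spline output equals the linear filter output, which is a convenient sanity check before adapting the control points.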

2.2 Generalized Maximum Correntropy Criterion

Given two random variables X and Y, the correntropy is defined by [4]

where \(\varphi ( \cdot )\) represents the nonlinear mapping determined by the kernel function. Additionally, \(\varphi ( \cdot )\) satisfies the following equation

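where \(\kappa \left( { \cdot , \cdot } \right)\) denotes the Mercer kernel. The kernel function of the GMCC is the generalized Gaussian density function:

\[ G_{\alpha ,\beta } \left( e \right) = \frac{\alpha }{{2\beta \Gamma \left( {1/\alpha } \right)}}\exp \left( { - \left| {\frac{e}{\beta }} \right|^{\alpha } } \right) = \gamma_{\alpha ,\beta } \exp \left( { - \lambda \left| e \right|^{\alpha } } \right) \]

where \(e = x - y\), \(\Gamma ( \cdot )\) represents the gamma function, \(\alpha\) is the shape parameter greater than zero, \(\beta\) is the scale coefficient, \(\lambda = 1/\beta^{\alpha }\) represents the kernel parameter, and \(\gamma_{\alpha ,\beta }\) is the normalization constant:

\[ \gamma_{\alpha ,\beta } = \frac{\alpha }{{2\beta \Gamma \left( {1/\alpha } \right)}} \]

From the above formulas, the generalized correntropy is

\[ V_{\alpha ,\beta } \left( {X,Y} \right) = E\left[ {G_{\alpha ,\beta } \left( {X - Y} \right)} \right] \]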
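As a quick numerical check of the quantities defined in this subsection, the sketch below evaluates the generalized Gaussian kernel and a sample-mean estimate of the generalized correntropy. The function names are illustrative only, and the sample mean is used here as the usual estimator of the expectation.

```python
import math
import numpy as np

def generalized_gaussian_kernel(e, alpha, beta):
    """gamma * exp(-lambda * |e|^alpha) with lambda = 1 / beta^alpha."""
    gamma_ab = alpha / (2.0 * beta * math.gamma(1.0 / alpha))
    lam = 1.0 / beta**alpha
    return gamma_ab * np.exp(-lam * np.abs(e) ** alpha)

def sample_generalized_correntropy(x, y, alpha, beta):
    """Sample-mean estimate of E[G(X - Y)] from paired observations."""
    e = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(np.mean(generalized_gaussian_kernel(e, alpha, beta)))

# alpha = 2 gives a Gaussian-shaped kernel; a large error (an outlier)
# contributes almost nothing, which is the source of the robustness.
k_small = generalized_gaussian_kernel(0.5, alpha=2.0, beta=1.0)
k_large = generalized_gaussian_kernel(10.0, alpha=2.0, beta=1.0)
```

Because the kernel saturates toward zero for large errors, a few impulsive samples barely change the estimated correntropy, unlike a mean-square cost.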

3 Proposed Algorithms

3.1 Proposed SAF-GMCC Algorithm

A sample-averaged estimate of the GMCC is given as follows

Under the condition of adaptive filtering, the cost function is defined as follows:

When \(\alpha = 2\), the GMCC-type algorithms will degenerate into the MCC-type algorithms. The cost function of the SAF-GMCC algorithm is as follows:

Previous studies [4, 28, 30] examined the differentiability of the absolute value function in \(J\left( {{\mathbf{w}}_{n} ,{\mathbf{h}}_{i,n} } \right)\) and concluded that the GMCC kernel function is differentiable. Using the gradient descent method, the update of the filter weight coefficients is as follows:

where \(\varphi^{\prime}_{i} \left( u \right) = {\dot{\mathbf{u}}}^{T} {\mathbf{Ch}}_{i,n}\), \({\dot{\mathbf{u}}} = \frac{{\partial {\mathbf{u}}}}{\partial u} = \left[ {3u^{2} \left( n \right),2u\left( n \right),1,0} \right]^{T}\) and \(f\left( {e\left( n \right)} \right)\) expresses

Similarly, it is deduced that the control point update method is as follows

In summary, the updated formulas of the SAF-GMCC algorithm are as follows:

where \(\mu_{{\mathbf{w}}}\) and \(\mu_{{\mathbf{h}}}\) represent the step-size convergence factor of the weights and control points, respectively.
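One iteration of a GMCC-driven SAF update can be sketched as follows. This is a hedged illustration under stated assumptions: the error nonlinearity is taken as the derivative of the generalized Gaussian kernel with all constant factors absorbed into the step-sizes, the updates follow the usual SAF gradient form with the error replaced by \(f(e(n))\), and the names `gmcc_error_weight` and `saf_gmcc_step` as well as the 0-based span indexing are hypothetical.

```python
import math
import numpy as np

C_CR = 0.5 * np.array([[-1.0, 3.0, -3.0, 1.0],
                       [2.0, -5.0, 4.0, -1.0],
                       [-1.0, 0.0, 1.0, 0.0],
                       [0.0, 2.0, 0.0, 0.0]])

def gmcc_error_weight(e, alpha, lam):
    """Assumed f(e): exp(-lam|e|^alpha) |e|^(alpha-1) sign(e), constants dropped."""
    if e == 0.0:
        return 0.0
    return float(np.exp(-lam * abs(e) ** alpha) * abs(e) ** (alpha - 1) * np.sign(e))

def saf_gmcc_step(w, lut, x_vec, d, dx, mu_w, mu_h, alpha, lam):
    """One adaptation step; returns (w_new, lut_new, e)."""
    H = len(lut)
    s = float(np.dot(w, x_vec))
    fl = math.floor(s / dx)
    u = s / dx - fl
    i0 = min(max(fl + (H - 1) // 2 - 1, 0), H - 4)   # 0-based span start
    u_vec = np.array([u**3, u**2, u, 1.0])
    u_dot = np.array([3 * u**2, 2 * u, 1.0, 0.0])    # derivative of u_vec
    h = lut[i0:i0 + 4]
    e = d - float(u_vec @ C_CR @ h)                  # output error
    fe = gmcc_error_weight(e, alpha, lam)
    dphi = float(u_dot @ C_CR @ h)                   # spline slope
    w_new = w + mu_w * fe * dphi * x_vec / dx
    lut_new = np.array(lut, dtype=float)
    lut_new[i0:i0 + 4] += mu_h * fe * (C_CR.T @ u_vec)
    return w_new, lut_new, e

dx = 0.2
lut0 = (np.arange(21) - 10) * dx
w0 = np.array([1.0, 0.0, 0.0, 0.0])
x0 = np.array([0.33, -0.1, 0.2, 0.05])
w1, lut1, e1 = saf_gmcc_step(w0, lut0, x0, 0.5, dx, 0.01, 0.01, 2.0, 1.0)
```

The exponential factor in the assumed \(f(e)\) shrinks the update for very large errors, so an impulse in the desired signal moves the weights far less than a moderate error does.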

3.2 Proposed SAF-VGMCC Algorithm

Like the linear adaptive filter algorithm, the SAF algorithm with a fixed step-size faces a trade-off between convergence speed and steady-state error. Inspired by a previous study [13], we introduce a variable step-size into the SAF-GMCC algorithm and propose the SAF-VGMCC algorithm.

In the SAF-GMCC algorithm, the step-size of the weights \(\mu_{{\mathbf{w}}}\) and the step-size of the control points \(\mu_{{\mathbf{h}}}\) are fixed values. We replace them with \(\mu_{{\mathbf{w}}} \left( n \right)\) and \(\mu_{{\mathbf{h}}} \left( n \right)\). Then, (19) and (20) are rewritten as follows:

In addition, the adjustments of the variable step-sizes are all controlled by the squared value of the impulsive-free error.

where \(a < 1\) is a forgetting factor close to one. Furthermore, \(\hat{e}_{o}^{2} \left( n \right)\) is an estimate of the squared value of the impulse-free error [42], which drives the variable step-size adjustment and is obtained as in [13].

where \(\lambda\) is another forgetting factor, less than but close to one; \(c_{1} = 1.483\left( {1 + 5/\left( {N - 1} \right)} \right)\) is a finite correction factor; \({\text{med}}( \cdot )\) represents the median operator; and \(\gamma_{n}\) is expressed as follows

Table 1 shows the pseudocode of the SAF-VGMCC algorithm.
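The role of the median-based error estimate can be illustrated with a short sketch. The exact recursions are not reproduced in this text, so the forms below are assumptions: the impulse-free error power is smoothed as \(\hat{e}_{o}^{2}(n) = \lambda \hat{e}_{o}^{2}(n-1) + c_{1}(1-\lambda)\,{\text{med}}(\gamma_{n})\) with \(\gamma_{n}\) a sliding window of recent squared errors, and the step-size follows \(\mu(n) = a\mu(n-1) + (1-a)\min(\hat{e}_{o}^{2}(n), \mu(n-1))\). The class name, the window length `n_w`, and its use in \(c_{1}\) are likewise hypothetical.

```python
from collections import deque
import numpy as np

class RobustVariableStep:
    """Variable step-size driven by a median-filtered squared error (assumed form)."""

    def __init__(self, mu0, a=0.99, lam=0.99, n_w=9):
        self.mu = mu0                                  # initial step-size
        self.a = a                                     # forgetting factor of the step-size
        self.lam = lam                                 # forgetting factor of the estimate
        self.c1 = 1.483 * (1.0 + 5.0 / (n_w - 1))      # finite correction factor
        self.window = deque(maxlen=n_w)                # recent squared errors
        self.e2_hat = 0.0                              # impulse-free error power

    def update(self, e):
        self.window.append(e * e)
        med = float(np.median(list(self.window)))      # median rejects isolated impulses
        self.e2_hat = self.lam * self.e2_hat + self.c1 * (1.0 - self.lam) * med
        self.mu = self.a * self.mu + (1.0 - self.a) * min(self.e2_hat, self.mu)
        return self.mu

# A single large impulse in an otherwise small error sequence.
errors = [0.1] * 100
errors[50] = 100.0
vs = RobustVariableStep(mu0=0.05, n_w=5)
steps = [vs.update(e) for e in errors]
```

Because the median of the window ignores an isolated impulse, the step-size keeps shrinking smoothly at sample 50 instead of jumping.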

3.3 Proposed Algorithms Applied to ANC

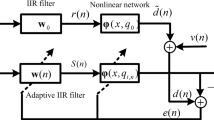

Figure 2 shows a schematic of a nonlinear ANC system based on the SAF algorithm.

The residual noise sensed by the error microphone is mathematically obtained as follows:

where \(*\) represents the linear convolution operator and \(s_{N} \left( n \right)\) is the impulse response of the secondary path.

This section discusses the practical application of the proposed algorithms. For the ANC model, we propose a new ANC algorithm that resists impulsive interference, namely the FcGMCC algorithm. Its cost functions are the same as (21) and (22). The FcVGMCC algorithm is derived in the same way as the FcGMCC algorithm, except that the FcVGMCC step-sizes satisfy (23) and (24). The update formulas of the FcGMCC algorithm are as follows:

where \({\mathbf{x}}^{\prime } (n) = {\dot{\mathbf{u}}}^{T} {\mathbf{Cq}}_{i} \frac{1}{\Delta x}{\mathbf{x}}\left( n \right) * s_{N} \left( n \right)\) and \({\mathbf{u}}^{\prime } = {\mathbf{u}} * s_{N} \left( n \right)\).

4 Mean Analysis of SAF-GMCC

This section analyzes the range of step-sizes which makes the SAF-GMCC algorithm stable.

4.1 Mean Analysis of \(\mu_{{\mathbf{w}}}\)

Averaging two sides of (19) yields

Referring to Fig. 3, \({\mathbf{w}}_{0}\) and \(\varphi_{0} (p(n),{\mathbf{h}}_{0} )\) are the unknown systems to be identified and \(v(n)\) denotes additional ambient noise. The output error of the adaptive structure is as follows:

Since the noise signal \(v(n)\) is independent of \(x(n)\), using the Taylor formula in (30) yields:

Since \(f\left( {v\left( n \right)} \right)\) is an odd function, (32) is rewritten as follows:

The process \(p\left( n \right)\) is Gaussian because \({\mathbf{x}}\left( n \right)\) is a Gaussian signal and the model \({\mathbf{w}}_{0}\) is non-random. Furthermore, the Lyapunov version of the central limit theorem [21] guarantees that the probability distribution of \(s\left( n \right)\) approaches the Gaussian distribution as the length of \({\mathbf{w}}_{n}\) grows. These assumptions and the following extension of the Bussgang theorem are used to evaluate the second term on the right side of (33).

Corollary: Let \({{\varvec{\Theta}}} = \left[ {\theta_{1} , \ldots ,\theta_{n} } \right]\) be an n-dimensional Gaussian variate and let \(G\left( {\mathbf{z}} \right)\) be any analytic function defined on the subspace \({\mathbf{z}} = \left[ {\theta_{2} , \ldots ,\theta_{n} } \right]\) [21]. Then, the expectation of the product of \(\theta_{1}\) and \(G\left( {\mathbf{z}} \right)\) is as follows:

where \(m_{\theta 1}\) denotes the mean of \(\theta_{1}\) and \(K_{1,i}\) is the covariance between \(\theta_{1}\) and \(\theta_{i}\).

Using the corollary in (33), we derive the following expression:

where \({\mathbf{R}}_{x} = E\{ {\mathbf{x}}(n){\mathbf{x}}^{T} (n)\}\) represents the autocorrelation matrix of the input signal. Combining (34), (35) and (36), the following equation is calculated as:

where

The expectation of \({\mathbf{w}}_{n}\) is obtained by solving the recursion after ignoring the time dependency of the expectations, as follows:

where \({\mathbf{w}}_{ - 1}\) is the initial condition of the linear filter.

Unbiased estimates are obtained if and only if the matrix \(\left[ {{\mathbf{I}} - \mu_{{\mathbf{w}}} a{\mathbf{R}}_{x} E\left\{ {f^{\prime } \left( {v\left( n \right)} \right)} \right\}} \right]\) is stable. This condition is equivalent to \(\left| {{\mathbf{I}} - \mu_{{\mathbf{w}}} a{\mathbf{R}}_{x} E\left\{ {f^{\prime } \left( {v\left( n \right)} \right)} \right\}} \right| < 1\), and the following bound is obtained:

Therefore, if the nonlinearity converges to the actual value at the steady-state, namely \(\varphi (s(n)) \to \varphi_{0} (p(n))\), then we derive this expression:

4.2 Mean Analysis of \(\mu_{{\mathbf{h}}}\)

The mean analysis of \(\mu_{{\mathbf{h}}}\) is obtained similarly to that of the previous subsection. The output error of the adaptive structure is as follows:

where \({\mathbf{u}}_{p,n}\) and \({\mathbf{u}}_{s,n}\) are the local variables \({\mathbf{u}}_{n}\) for evaluating \(p\left( n \right)\) and \(s\left( n \right)\), respectively.

Similar to (34), the expectation of \({\mathbf{h}}_{i,n}\) is calculated as follows:

where \({\mathbf{U}}_{s} = E\left\{ {{\mathbf{u}}_{s,n} {\mathbf{u}}_{s,n}^{T} } \right\}\) is the autocorrelation matrix and \({\mathbf{U}}_{sp} = E\left\{ {{\mathbf{u}}_{s,n} {\mathbf{u}}_{p,n}^{T} } \right\}\) is a cross correlation matrix. The recursion Eq. (44) is solved by

where \({\mathbf{h}}_{ - 1}\) indicates the initial condition of the spline control points.

Similar to the linear case, if and only if the matrix \(\left[ {{\mathbf{I}} - \mu_{{\mathbf{h}}} {\mathbf{C}}^{{\text{T}}} {\mathbf{U}}_{s} {\mathbf{C}}E\left\{ {f^{\prime } \left( {v\left( n \right)} \right)} \right\}} \right]\) is stable, the SAF-GMCC algorithm is stable. Thus, \(\left| {{\mathbf{I}} - \mu_{{\mathbf{h}}} \lambda \left( {{\mathbf{C}}^{{\text{T}}} {\mathbf{U}}_{s} {\mathbf{C}}} \right)E\left\{ {f^{\prime } \left( {v\left( n \right)} \right)} \right\}} \right| < 1\) can be obtained. The following equation can be obtained:

At the same time, when the system converges to the steady state, its steady-state solution is

4.3 Selection of Optimal Parameters

So far, the parameters have been selected by trial and error, which is unrealistic in practical applications; the optimal parameter values must be found to obtain accurate results. We therefore start from (28) and (29), the cost functions of the SAF-GMCC algorithm. First, \(\mu_{{\mathbf{w}}}\), \(\mu_{{\mathbf{h}}}\), \(\alpha\) and \(\beta\) in (28) and (29) are replaced by \(\mu_{{\mathbf{w}}} (n)\), \(\mu_{{\mathbf{h}}} (n)\), \(\alpha (n)\) and \(\beta (n)\) to indicate that these variables are all time-varying. Let \({{\varvec{\upomega}}}_{n} = {\mathbf{w}}_{n} - {\mathbf{w}}_{0}\) and \({\mathbf{H}}_{n} = {\mathbf{h}}_{i,n} - {\mathbf{h}}_{i,0}\); then, \({\mathbf{w}}_{0}\) and \({\mathbf{h}}_{i,0}\) are subtracted from both sides of (28) and (29):

From a previous study [25], we have

where \({\mathbf{c}}_{k}\) expresses the k-th row of \({\mathbf{C}}\). \(v(n)\) is the measurement noise. Substituting (50) into (48) and (49), we obtain

where \(m = \frac{{{\mathbf{c}}_{3} {\mathbf{h}}_{i,0} }}{\vartriangle x}\) and \(g(e(n)) = \lambda_{1} \exp \left( { - \lambda_{2} |e(n)|^{\alpha } } \right)\left| {e\left( n \right)} \right|^{\alpha - 2}\). Multiplying (51) and (52) by their transpose from the left and taking expectation with the above approximations, we obtain

We aim to optimize the step-sizes and the other variable factors of the SAF-GMCC algorithm by minimizing \({\mathbf{H}}_{n + 1}^{2}\) and \({{\varvec{\upomega}}}_{n + 1}^{2}\). We take the partial derivatives of (53) and (54) with respect to all variables and set them to zero. After simplification, the following equation is obtained:

where

Following the method used in the previous study [9], we estimate \({{\varvec{\upomega}}}_{n}\) and \({\mathbf{H}}_{n}\) through a one-step approximation as follows:

where \(\tilde{\mu }_{{\mathbf{w}}} (n)\) and \(\tilde{\mu }_{{\mathbf{h}}} (n)\) are the optimal step-sizes for estimating \({{\varvec{\upomega}}}_{n}\) and \({\mathbf{H}}_{n}\), respectively, and \(\tilde{f}(e\left( n \right))\) is the value of \(f(e\left( n \right))\) when \(\alpha (n)\) and \(\beta (n)\) take their optimal values.

When (55) has a unique solution and the functions of the different parameters are convex in the vicinity of that solution, the time-varying optimal values of the variables can be solved for [8, 34].

5 Numerical Simulations

The performance of the proposed algorithms is evaluated through MATLAB simulations [15]. Let the unknown system order be N = 4. The linear component of the unknown system has the weight vector \({\mathbf{w}}_{0} = \left[ {0.6, - 0.4,0.25, - 0.15} \right]^{T}\). The nonlinear memoryless target function is implemented by a LUT of 21 control points that takes the following values:

where sample interval \(\vartriangle x = 0.2\).

In this experiment, a Gaussian signal and a first-order colored signal (\(AR(1) = [1, - 0.8]\) model) are used as the input signals to the system. The desired signal is expressed as follows:

where \(v\left( n \right)\) is the observation noise, which contains the background noise and the impulsive noise, \(v\left( n \right) = v_{A} \left( n \right) + v_{B} \left( n \right)\). The background noise \(v_{A} \left( n \right)\) is Gaussian white noise with a signal-to-noise ratio (\(SNR = 10\log_{10} (\sigma_{x}^{2} /\sigma_{v}^{2} )\)) of 30 dB, where \(\sigma_{x}^{2}\) is the variance of the input signal and \(\sigma_{v}^{2}\) is the variance of the Gaussian white noise. The impulsive noise \(v_{B} \left( n \right)\) is a Bernoulli-Gaussian (BG) process, i.e., \(v_{B} \left( n \right) = b\left( n \right)q\left( n \right)\), where \(q\left( n \right)\) is a zero-mean Gaussian process with variance \(\sigma_{B}^{2}\), \(\sigma_{B}^{2} \gg \sigma_{A}^{2}\), and \(b\left( n \right)\) is a Bernoulli process with the probability mass function

\[ P\left( {b\left( n \right) = 1} \right) = {\text{P}}_{r} ,\quad P\left( {b\left( n \right) = 0} \right) = 1 - {\text{P}}_{r} \]

where \({\text{P}}_{r}\) is the probability that an impulse occurs. The larger the \({\text{P}}_{r}\) value, the stronger the impulsive interference. \({\text{P}}_{r} = 0\) and \({\text{P}}_{r} = 0.01\) are chosen for comparing simulation results.
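The noise model above can be generated as in the following sketch. The function name and the value chosen for the impulse variance are illustrative assumptions; the background noise variance is derived from the input variance and the 30 dB SNR.

```python
import numpy as np

def observation_noise(x, snr_db, p_r, sigma_b2, rng):
    """Return (v, b): Gaussian background noise plus Bernoulli-Gaussian impulses."""
    n = len(x)
    sigma_v2 = np.var(x) / 10.0 ** (snr_db / 10.0)   # background variance from the SNR
    v_a = rng.normal(0.0, np.sqrt(sigma_v2), n)      # background noise v_A(n)
    b = rng.random(n) < p_r                          # Bernoulli process, P(b = 1) = p_r
    q = rng.normal(0.0, np.sqrt(sigma_b2), n)        # impulse amplitudes q(n)
    return v_a + b * q, b

rng = np.random.default_rng(0)
x = rng.standard_normal(20000)                       # Gaussian input signal
# sigma_b2 = 1000 * var(x) is a hypothetical choice of a "much larger" variance.
v, b = observation_noise(x, snr_db=30.0, p_r=0.01, sigma_b2=1000.0 * np.var(x), rng=rng)
```

Setting `p_r=0.0` removes the impulses entirely and leaves only the 30 dB Gaussian background noise.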

The mean square deviation (\({\text{MSD}} = 10\log_{10} \left\| {{\mathbf{w}}(n) - {\mathbf{w}}_{0} } \right\|_{2}^{2}\)) measures the algorithm’s performance [23].
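For reference, the MSD of a single weight estimate can be computed directly from its definition, as in this small sketch (the function name is illustrative; learning curves are typically obtained by evaluating it at every iteration and averaging over independent runs).

```python
import numpy as np

def msd_db(w, w0):
    """MSD in dB: 10 log10 of the squared Euclidean distance between w and w0."""
    diff = np.asarray(w, dtype=float) - np.asarray(w0, dtype=float)
    return 10.0 * np.log10(np.sum(diff**2))

w0 = np.array([0.6, -0.4, 0.25, -0.15])
w_est = w0 + np.array([0.1, 0.0, 0.0, 0.0])   # estimate that is off by 0.1 in one tap
value = msd_db(w_est, w0)                      # squared distance 0.01
```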

Figure 4a, b, and c show the algorithms’ performance at different SNRs. The parameters of each algorithm are listed in Table 2. As the SNR decreases, the two proposed algorithms consistently outperform the other algorithms. However, the effect of the variable step-size strategy becomes less evident as the SNR decreases, as shown in Fig. 4a, b, and c. This may be because the stability range of the step-sizes narrows when the SNR is small, so that the variable step-size strategy does not operate correctly. The possible causes and their analysis will be part of our future research.

Figure 5a and b show the MSD curves of all algorithms in environments where the impulse probabilities are 0 and 0.01, respectively. The parameter settings of all algorithms are listed in Table 3. The SAF-LMS algorithm diverges under impulsive interference. The SAF-SNLMS and SAF-VSNLMS algorithms remain convergent, but the proposed SAF-GMCC and SAF-VGMCC algorithms also remain stable under impulsive interference and perform significantly better than the other algorithms. In addition, the SAF-VGMCC algorithm performs better than the SAF-GMCC algorithm (Fig. 6).

However, the step-size of the original variable step-size algorithm does not effectively track abrupt changes in the system. We therefore propose an improved strategy for the variable step-size algorithm. As shown in Fig. 7, when \(n = 15000\), \({\mathbf{w}}_{0} = \left[ {0.6, - 0.4,0.25, - 0.15} \right]^{T}\) is reversed. The improved variable step-size algorithm exhibits excellent tracking of this abrupt change, where \(\mu_{{\mathbf{w}}} = \mu_{{\mathbf{h}}} = 0.05\) and the other parameters are the same as in Fig. 6.

The improved variable step-size strategy adds a stage that detects abrupt changes: when \(\left| {e(n) - e(n - 1)} \right| \ge \vartheta_{n}\), the step-size is reset to the initial step-size \(\mu_{{\mathbf{w}}} = \mu_{{\mathbf{h}}} = 0.05\) and the variable step-size process restarts, where \(\vartheta_{n} = M\,{\text{med}}(\kappa_{n} )\) and \(\kappa_{n} = [\left| {e(n - 1) - e(n - 2)} \right|,\left| {e(n - 2) - e(n - 3)} \right|, \ldots ,\left| {e(n - M) - e(n - M - 1)} \right|]\).
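The detection rule can be sketched as a small helper that compares the newest error difference with \(M\) times the median of the previous \(M\) differences. The function name and the choice to return `False` until enough history is available are assumptions made for this illustration.

```python
import numpy as np

def abrupt_change_detected(e_hist, M):
    """e_hist holds errors from oldest to newest and ends with e(n).

    Returns True when |e(n) - e(n-1)| >= M * med(kappa_n), where kappa_n
    contains the M preceding absolute error differences.
    """
    e = np.asarray(e_hist, dtype=float)
    if len(e) < M + 2:                    # kappa_n needs e(n-M-1), ..., e(n-1)
        return False
    diffs = np.abs(np.diff(e))            # |e(k) - e(k-1)| for consecutive samples
    current = diffs[-1]                   # newest difference
    kappa = diffs[-(M + 1):-1]            # the M differences before it
    return bool(current >= M * np.median(kappa))

steady = [0.0, 0.1, 0.0, 0.1, 0.0, 0.1, 0.0]
jump = [0.0, 0.1, 0.0, 0.1, 0.0, 0.1, 5.0]
flag_steady = abrupt_change_detected(steady, M=4)
flag_jump = abrupt_change_detected(jump, M=4)
# When a flag is raised, the step-sizes would be reset to their initial values.
```

Note that if the recent errors are exactly constant, the median is zero and the rule fires on any sample; a small floor on the threshold would avoid this, although the text does not mention one.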

6 Application of ANC

6.1 Comparative Experiment of ANC

In this experiment, the secondary channel of the system is non-minimum phase. The first step is to set N = 6 for the nonlinear spline structure. The standard averaged noise reduction (ANR) is used as the evaluation index to unify the criteria for judging the performance of these algorithms, and its expression is as follows:

where \(\lambda\) is a smoothing parameter, and \(\lambda \to 1,\;\lambda \ne 1\). In this experiment, \(\lambda = 0.999\).
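A common way to compute the ANR is sketched below. Since the expression is not reproduced in this text, the recursion is an assumption: \(A_{e}(n) = \lambda A_{e}(n-1) + (1-\lambda)|e(n)|\), \(A_{d}(n) = \lambda A_{d}(n-1) + (1-\lambda)|d(n)|\), and \({\text{ANR}}(n) = 20\log_{10}(A_{e}(n)/A_{d}(n))\), with both averages started at zero. The function name is illustrative.

```python
import numpy as np

def anr_db(e, d, lam=0.999):
    """Averaged noise reduction in dB from residual e(n) and primary noise d(n).

    Assumes d is nonzero at the first sample so the ratio is defined.
    """
    a_e = 0.0
    a_d = 0.0
    out = np.empty(len(e))
    for n in range(len(e)):
        a_e = lam * a_e + (1.0 - lam) * abs(e[n])   # smoothed residual magnitude
        a_d = lam * a_d + (1.0 - lam) * abs(d[n])   # smoothed primary magnitude
        out[n] = 20.0 * np.log10(a_e / a_d)
    return out

d = np.linspace(1.0, 2.0, 500)   # stand-in for the primary noise at the microphone
e = 0.1 * d                      # residual that is 10 times smaller
curve = anr_db(e, d)             # stays at 20*log10(0.1) = -20 dB
```

More negative values indicate stronger noise reduction, so curves that fall faster and settle lower correspond to better ANC performance.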

In the experiment, we select the ubiquitous \(\alpha\)-stable noise defined as follows:

where

The simulation is based on the standard symmetric \(\alpha\)-stable distribution [27], with parameter values \(\beta = 0\), \(\gamma = 1\), and \(\vartheta = 0\), for which the characteristic function is as follows:

\[ \varphi \left( t \right) = \exp \left( { - \left| t \right|^{\alpha } } \right) \]
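Samples from the standard symmetric \(\alpha\)-stable law can be drawn with the Chambers-Mallows-Stuck method, sketched here with NumPy only. This is a swapped-in, widely used generator and not necessarily the one used for the reported simulations; the function name is illustrative.

```python
import numpy as np

def sas_noise(alpha, size, rng):
    """Standard symmetric alpha-stable samples (beta = 0, scale 1, location 0)."""
    U = rng.uniform(-np.pi / 2.0, np.pi / 2.0, size)   # uniform phase
    W = rng.exponential(1.0, size)                     # unit-mean exponential
    if alpha == 1.0:
        return np.tan(U)                               # Cauchy case
    return (np.sin(alpha * U) / np.cos(U) ** (1.0 / alpha)
            * (np.cos(U - alpha * U) / W) ** ((1.0 - alpha) / alpha))

rng = np.random.default_rng(0)
gaussian_like = sas_noise(2.0, 200000, rng)   # alpha = 2: Gaussian with variance 2
impulsive = sas_noise(1.7, 200000, rng)       # smaller alpha: heavier tails
```

For \(\alpha = 2\) the generator reduces to a Gaussian variable with variance 2, which gives a simple check; lowering \(\alpha\) produces increasingly frequent large-amplitude samples.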

The noise intensity is controlled by adjusting the \(\alpha\)-parameter. The smaller the \(\alpha\), the greater the impact strength. The primary channel noise observed by the error microphone is given by [19]

The coefficients \(\delta_{i} ,\;i = 1,2, \ldots\) measure the strength of the nonlinearity of the secondary channel, and this simulation takes \(\delta_{1} = 0.08\) and \(\delta_{2} = 0.04\). The input signal \({\mathbf{x}}\left( n \right)\) is \(\alpha\)-stable distributed noise, and \(u\left( n \right) = x\left( n \right) * p\left( n \right)\), where \(p\left( n \right)\) is the impulse response of the primary channel. Hereafter, \(\alpha \equiv \alpha^{ * }\) denotes the parameter of the \(\alpha\)-stable noise, to distinguish it from the GMCC shape parameter. The transfer functions of the primary and the secondary channels with non-minimum phase characteristics are as follows:

Figure 5 shows the performance of the FcNLMS, FcSNLMS, FcMCC, FcGMCC, and FcVGMCC algorithms based on the SAF-NLMS, SAF-SNLMS, SAF-MCC, SAF-GMCC, and SAF-VGMCC algorithms, respectively, where the impact strength is 2, 1.9, 1.8, and 1.7. Table 4 shows the parameters (Fig. 8).

The FcGMCC and FcVGMCC algorithms maintain stable convergence under the non-minimum phase channel when the input signal does not contain impulsive noise. Overall, the convergence speed and steady-state ANR of the new algorithms are better than those of the other algorithms, and the FcVGMCC algorithm performs better than the FcGMCC algorithm. When \(\alpha = 1.9\), the FcGMCC and FcVGMCC algorithms again perform well, and the steady-state ANR of the proposed FcVGMCC algorithm is better than that of the other algorithms. When the input contains impulsive noise, the FcNLMS algorithm diverges severely with the same step-size parameter. When \(\alpha = 1.8\) and \(\alpha = 1.7\), the FcGMCC and FcVGMCC algorithms offer clear advantages. Although the steady-state ANR of the FcVGMCC algorithm is similar to that of the FcGMCC algorithm, the FcVGMCC algorithm converges faster.

7 Conclusions

This study proposes the SAF-GMCC and SAF-VGMCC algorithms to improve the robustness of traditional SAF-type algorithms. Unlike algorithms based on the mean square error criterion, which rely on a Gaussian environment, the SAF-GMCC algorithm is not very sensitive to abnormal data because of the kernel function. Thus, the SAF-GMCC algorithm offers good robustness to impulsive interference. In addition, a variable step-size is introduced to improve the SAF-GMCC algorithm’s performance, giving the SAF-VGMCC algorithm. Compared with the fixed step-size algorithm, the SAF-VGMCC algorithm improves both the convergence rate and the steady-state error. Simulations show that the proposed algorithms are effective under impulsive interference. Finally, this study presents a practical application of the proposed algorithms: the SAF-GMCC and SAF-VGMCC algorithms are applied to nonlinear ANC, and the FcGMCC and FcVGMCC algorithms are proposed. The nonlinear problem in the ANC model is addressed by introducing the nonlinear spline adaptive structure. In addition, owing to the algorithms’ robustness, a better noise removal effect is achieved even when the noise source contains impulsive interference. Simulation experiments show the feasibility of the proposed FcGMCC and FcVGMCC algorithms in nonlinear and non-Gaussian noise environments.

Data Availability

The data that support the findings of this study are available from the corresponding author on request.

References

J. Caillec, Spectral inversion of second order volterra models based on the blind identification of wiener models. Signal Process. 91(11), 2541–2555 (2011). https://doi.org/10.1016/j.sigpro.2011.05.007

E. Catmull, R. Rom, A class of local interpolating splines. Comput. Aided Geom. Des. (1974). https://doi.org/10.1016/B978-0-12-079050-0.50020-5

B. Chen, J.C. Principe, J. Hu, Y. Zhu, Stochastic information gradient algorithm with generalized Gaussian distribution model. J. Circuits Syst. Comput. (2012). https://doi.org/10.1142/S0218126612500065

B. Chen, L. Xing, H. Zhao, N. Zheng, J.C. Principe, Generalized correntropy for robust adaptive filtering. IEEE Trans. Signal Process. 64(13), 3376–3387 (2016). https://doi.org/10.1109/TSP.2016.2539127

D. Comminiello, M. Scarpiniti, L.A. Azpicueta-Ruiz, J. Arenas-García, A. Uncini, Functional link adaptive filters for nonlinear acoustic echo cancellation. IEEE Trans. Audio Speech Lang. Process. 21(7), 1502–1512 (2013). https://doi.org/10.1109/TASL.2013.2255276

J. Gong, B. Yao, Neural network adaptive robust control of nonlinear systems in semi-strict feedback form. Automatica 37(8), 1149–1160 (2001). https://doi.org/10.1016/S0005-1098(01)00069-3

S. Haykin, Kalman filtering and neural networks (Wiley, 2001)

L. Ji, J. Ni, Sparsity-aware normalized subband adaptive filters with jointly optimized parameters. J. Franklin Inst. 357(17), 13144–13157 (2020). https://doi.org/10.1016/j.jfranklin.2020.09.015

D. Jin, J. Chen, C. Richard, J. Chen, Model-driven online parameter adjustment for zero-attracting LMS. Signal Process. 152, 373–383 (2018). https://doi.org/10.1016/j.sigpro.2018.06.020

X. Li, G.L. Wei, L.C. Wang, Distributed set-membership filtering for discrete-time systems subject to denial-of-service attacks and fading measurements: a zonotopic approach. Inf. Sci. 547, 49–67 (2021). https://doi.org/10.1016/j.ins.2020.07.041

F. Lindsten, T.B. Schon, M.I. Jordan, Bayesian semi-parametric wiener system identification. Automatica 49(7), 2053–2063 (2013). https://doi.org/10.1016/j.automatica.2013.03.021

C. Liu, Z. Zhang, Set-membership normalised least M-estimate spline adaptive filtering algorithm in impulsive noise. Electron. Lett. 54(6), 393–395 (2018). https://doi.org/10.1049/el.2017.4434

C. Liu, Z. Zhang, X. Tang, Sign normalised spline adaptive filtering algorithms against impulsive noise. Signal Process. 148, 234–240 (2018). https://doi.org/10.1016/j.sigpro.2018.02.022

Q. Liu, Y. He, Robust geman-mcclure based nonlinear spline adaptive filter against impulsive noise. IEEE Access. 8, 22571–22580 (2020). https://doi.org/10.1109/ACCESS.2020.2969219

P. Lueg, Process of silencing sound oscillations, U. S. Patent 2043416 (1936)

T. Ogunfunmi, Adaptive nonlinear system identification: the volterra and wiener model approaches (Springer, Berlin, Germany, 2007)

V. Patel, S. Bhattacharjee, N. George, A family of logarithmic hyperbolic cosine spline nonlinear adaptive filters. Appl. Acoust. 178, 107973 (2021). https://doi.org/10.1016/j.apacoust.2021.107973

S. Peng, Z. Wu, X. Zhang, B. Chen, Nonlinear spline adaptive filtering under maximum correntropy criterion. In TENCON 2015–2015 IEEE Region 10 Conference, IEEE, pp. 1–5 (2015). https://doi.org/10.1109/TENCON.2015.7373051

N. Quaegebeur, A. Chaigne, Nonlinear vibrations of loudspeaker-like structures. J. Sound Vibr. 309, 178–196 (2008). https://doi.org/10.1016/j.jsv.2007.06.040

M. Rasouli, D. Westwick, W. Rosehart, Quasiconvexity analysis of the Hammerstein model. Automatica 50(1), 277–281 (2014). https://doi.org/10.1016/j.automatica.2013.11.004

G. Scarano, D. Caggiati, G. Jacovitti, Cumulant series expansion of hybrid nonlinear moments of n variates. IEEE Trans. Signal Process. 41(1), 486–489 (1993). https://doi.org/10.1109/TSP.1993.193184

M. Scarpiniti, D. Comminiello, R. Parisi, A. Uncini, Spline adaptive filters: theory and applications, in Adaptive learning methods for nonlinear system modeling (Butterworth-Heinemann, 2018), pp. 47–69

M. Scarpiniti, D. Comminiello, R. Parisi, A. Uncini, Nonlinear spline adaptive filtering. Signal Process. 93(4), 772–783 (2013). https://doi.org/10.1016/j.sigpro.2012.09.021

M. Scarpiniti, D. Comminiello, R. Parisi, A. Uncini, Novel cascade spline architectures for the identification of nonlinear systems. IEEE Trans. Circuits Syst. I-Regul. Pap. 62(7), 1825–1835 (2015). https://doi.org/10.1109/TCSI.2015.2423791

M. Scarpiniti, D. Comminiello, G. Scarano, R. Parisi, A. Uncini, Steady-state performance of spline adaptive filters. IEEE Trans. Signal Process. 64(4), 816–828 (2016). https://doi.org/10.1109/TSP.2015.2493986

A. Singh, J. C. Principe, Using correntropy as a cost function in linear adaptive filters. In 2009 International Joint Conference on Neural Networks (IJCNN), IEEE, pp. 2950–2955 (2009). https://doi.org/10.1109/IJCNN.2009.5178823

S. Talebi, S. Godsill, D. Mandic, Filtering structures for α-stable systems. IEEE Control Syst. Lett. 7, 553–558 (2023). https://doi.org/10.1109/LCSYS.2022.3202827

M. Varanasi, B. Aazhang, Parametric generalized gaussian density estimation. J. Acoust. Soc. Amer. 86(4), 1404–1415 (1989). https://doi.org/10.1121/1.398700

X. Wang, J.H. Park, H. Liu, X. Zhang, Cooperative output-feedback secure control of distributed linear cyber-physical systems resist intermittent DoS attacks. IEEE Trans. Cybernetics. 51(10), 4924–4933 (2021). https://doi.org/10.1109/TCYB.2020.3034374

P. Wen, J. Zhang, S. Zhang, B. Qu, Normalized subband spline adaptive filter: algorithm derivation and analysis. Circuits Syst. Signal Process. 40, 2400–2418 (2021). https://doi.org/10.1007/s00034-020-01577-6

N. Wiener, Extrapolation, interpolation, and smoothing of stationary time series (Wiley, New York, 1964)

J. Zhang, Y. Jiang, X. Li, M. Huo, H. Luo, S. Yin, An adaptive remaining useful life prediction approach for single battery with unlabeled small sample data and parameter uncertainty. Reliab. Eng. Syst. Saf. 222, 108357 (2022). https://doi.org/10.1016/j.ress.2022.108357

J. Zhang, Y. Jiang, X. Li, H. Luo, S. Yin, O. Kaynak, Remaining useful life prediction of Lithium-Ion battery with adaptive noise estimation and capacity regeneration detection. IEEE/ASME Trans. Mechatron. (2022). https://doi.org/10.1109/tmech.2022.3202642

J. Zhang, X. Xiao, Predicting low-dimensional chaotic time series using volterra adaptive filters. Acta Phys. Sin. 49, 403–408 (2000). https://doi.org/10.1016/S0370-2693(00)00081-2

H. Zhao, Y. Gao, Y. Zhu, Robust subband adaptive filter algorithms-based mixture correntropy and application to acoustic echo cancellation. IEEE Trans. Audio Speech Lang. Process. 31, 1223–1233 (2023). https://doi.org/10.1109/TASLP.2023.3250845

H. Zhao, B. Tian, Robust power system forecasting-aided state estimation with generalized maximum mixture correntropy unscented Kalman filter. IEEE Trans. Instrum. Meas. 71, 1–10 (2022). https://doi.org/10.1109/TIM.2022.3160562

H. Zhao, B. Tian, B. Chen, Robust stable iterated unscented kalman filter based on maximum correntropy criterion. Automatica (2022). https://doi.org/10.1016/j.automatica.2022.110410

H. Zhao, W. Xiang, S. Lv, A variable parameter LMS algorithm based on generalized maximum correntropy criterion for graph signal processing. IEEE Trans. Signal Inf. Process. Netw. 9, 140–151 (2023). https://doi.org/10.1109/TSIPN.2023.3248948

S. Zhou, H. Zhao, Statistics variable kernel width for maximum correntropy criterion algorithm. Signal Process. 176, 107589 (2020). https://doi.org/10.1016/j.sigpro.2020.107589

Y. Zhu, H. Zhao, X. He, Z. Shu, B. Chen, Cascaded random fourier filter for robust nonlinear active noise control. IEEE Trans. Audio Speech Lang. Process. 30, 2188–2200 (2022). https://doi.org/10.1109/TASLP.2021.3126943

Y. Zhu, H. Zhao, X. Zeng, B. Chen, Robust generalized maximum correntropy criterion algorithms for active noise control. IEEE Trans. Audio Speech Lang. Process. 28, 1282–1292 (2020). https://doi.org/10.1109/TASLP.2020.2982030

Y. Zou, S. Chan, T. Ng, Least mean M-estimate algorithms for robust adaptive filtering in impulse noise. IEEE Trans. Circuits Syst. II Analog Digit. Signal Process. 47(12), 1564–1569 (2000). https://doi.org/10.1109/82.899657

Acknowledgements

This work was partially supported by the National Natural Science Foundation of China (Grant Nos. 62171388, 61871461, 61571374) and the Fundamental Research Funds for the Central Universities (Grant No. 2682021ZTPY091).

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

About this article

Cite this article

Gao, Y., Zhao, H., Zhu, Y. et al. Spline Adaptive Filtering Algorithm-based Generalized Maximum Correntropy and its Application to Nonlinear Active Noise Control. Circuits Syst Signal Process 42, 6636–6659 (2023). https://doi.org/10.1007/s00034-023-02411-5

DOI: https://doi.org/10.1007/s00034-023-02411-5