Abstract

This paper investigates the exponential synchronization problem for a Markovian jump complex dynamical network through the design of a state feedback controller. Both the time delay and the coefficient matrices are assumed to switch among finitely many modes governed by a time-varying Markov process. The transition rate (TR) matrix of this Markov process is assumed to be time-varying and piecewise-constant. Two cases of time-varying transition rates are investigated: completely known TRs and partly unknown TRs. The synchronization problem is analyzed by constructing a Lyapunov–Krasovskii functional with Markov-dependent Lyapunov matrices, and the controller gain matrices that guarantee synchronization are derived in terms of linear matrix inequalities. The resulting criteria depend on both the delay size and the probability of the delay taking each value. Finally, a numerical example is provided to demonstrate the effectiveness of the theoretical results.


1 Introduction

Over the last decades, much interest has focused on complex dynamical networks (CDNs) because of their importance in real-world applications. A CDN is composed of a set of interconnected nodes, each of which is a basic unit with its own dynamics. Applications of CDNs are found in various fields, such as biology, physics, and technology [40, 44]. In engineering, examples of CDNs include communication networks, artificial neural networks, electric power grids, and multi-agent systems for cooperative applications [16, 20, 34, 45]. CDNs can exhibit many dynamical behaviors, such as synchronization [2, 21, 47], multi-synchronization [26], and group synchronization [18]. Network synchronization has been observed in various physical and man-made networks, such as fireflies flashing in unison, the routing of messages in the Internet, and the dynamics of a power grid [2]. Synchronization means that the state vectors of all the nodes in a CDN converge to a common trajectory. For example, in the synchronization problem of two coupled chaotic neurons, synchronization means that the two neurons always generate spikes almost simultaneously [39]. There are many different types of synchronization, such as exponential synchronization [1, 48], finite-time synchronization [33], and asymptotic synchronization [14, 23, 27]. The synchronization problem is a kind of stability problem, as will be shown in the next section. Exponential stability is a stronger property than asymptotic stability, since it also guarantees a rate of convergence; therefore, it has received much attention from researchers [3, 8].

There exist many studies on the synchronization of CDNs addressing different challenges. Synchronization of CDNs has been used to characterize several natural phenomena, and it has many useful applications in image processing [6], secure communication [5, 49], power systems [9], and multi-agent systems [30, 31, 51]. Time delays are unavoidable in practical systems because of the limited speed of signal propagation or traffic congestion, and they can cause instability or oscillation [22]; hence, time delays must be taken into account. A wide variety of methods have been used to study time delays in CDNs [19, 58]. Coupling time delays, which exist in most CDNs, are caused by the exchange of data among nodes [17, 24, 42, 54]. In addition, practical systems often have a finite set of modes, and the parameters of the network switch among these modes. The switching may be caused by two kinds of factors [57]: arbitrary factors [29] or stochastic factors [11, 41, 50, 53]. Examples of stochastic factors include abrupt natural variations [12, 38] and accidental component failures or damage to the communication topology [10]. Stochastic switching with the memoryless property is usually modeled by a Markov chain. Systems subject to stochastic switching modeled by a Markov chain are called Markovian jump systems, which are a special case of hybrid systems [4]. Wang et al. [43] investigated the mean-square exponential synchronization problem for Markovian jump CDNs (MJCDNs) under feedback control and derived sufficient conditions in terms of linear matrix inequalities (LMIs). Global exponential synchronization of a network with Markovian jumping parameters and time-varying delay was discussed in [50].
Li and Yue [25] investigated the synchronization problem of MJCDNs with mixed time delays and derived delay-dependent synchronization criteria using LMIs. In the Markov model for switching between modes, a TR matrix specifies the jumping behavior of the system at all times. The above-mentioned references assume that the transition probabilities of the jumping process are completely known. In practice, however, TR information is rarely complete, so the study of Markovian jump systems with partly unknown TRs (PUTRs) is important. For example, load changes in the DC motors of various servomechanisms are random and uncertain, so completely known TRs (CKTRs) cannot be obtained. PUTRs are a form of TR uncertainty that, unlike other uncertainty descriptions, requires no bound or structural information about the uncertainty. Some papers have addressed the synchronization problem of MJCDNs with PUTRs [55, 59]. Zhou et al. [59] studied the exponential synchronization problem for an array of Markovian jump delayed complex networks with PUTRs under a new randomly occurring event-triggered control strategy. The exponential synchronization problem of Markovian jump genetic oscillators with PUTRs was reported in [55]. In traditional Markovian jump systems, known as homogeneous Markovian jump systems, the TRs are assumed to be constant over time [15, 56]. Obtaining accurate and complete TR information is unrealistic, because measuring TRs accurately is both difficult and costly [11]. Therefore, recent studies assume that the TRs of a Markov process are uncertain. Another class of uncertain TRs is time-varying TRs; a Markov process with time-varying TRs is called a non-homogeneous Markov process.
One physical example of a non-homogeneous Markovian jump system is an unmanned aerial vehicle (UAV), in which the airspeed variation in the system matrices is ideally modeled as a homogeneous Markov process. Because of weather changes, such as changes in wind speed, the transition probabilities among the different airspeeds are not fixed but vary with time. Thus, this practical problem can be modeled by a non-homogeneous Markovian structure [52]. In non-homogeneous Markovian jump systems, the TRs change instantaneously, so measuring their values is time-consuming and practically impossible; modeling the process as piecewise-homogeneous is therefore a practical alternative. In a piecewise-homogeneous Markov process, the TRs are time-varying but constant within each interval. A piecewise-homogeneous Markovian jump system thus involves two Markovian signals: (1) a low-level Markov chain with time-varying TRs that governs switching between the different dynamics and topologies, and (2) a high-level Markov chain with constant TRs that governs switching among the TR matrices of the low-level signal. This two-level Markovian structure is suitable for modeling time-varying TRs, and models with time-varying TRs are less conservative than those with time-invariant TRs.
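The two-level mechanism described above can be illustrated by sampling one path of the joint process \((r_t,\sigma_t)\). The following is a minimal Python sketch, not the authors' code. It assumes that while \(\sigma_t=m\) the low-level signal jumps with the rates of a TR matrix \({\varPi}^{m}\), and that the high-level signal jumps with the constant TR matrix \({\varLambda}\). Under these assumptions the pair can be simulated with competing exponential clocks. The function name `simulate_two_level`, the 0-based mode labels, and the numerical TR matrices are hypothetical.

```python
import random


def _pick(rng, row, current, out_rate):
    """Choose a target mode j != current with probability row[j] / out_rate."""
    u = rng.random() * out_rate
    acc = 0.0
    for j, rate in enumerate(row):
        if j == current:
            continue
        acc += rate
        if u < acc:
            return j
    # Guard against floating-point round-off: fall back to the last admissible mode.
    return max(j for j, rate in enumerate(row) if j != current and rate > 0)


def simulate_two_level(Pi, Lam, r0, s0, T, seed=0):
    """Sample (jump_time, r, sigma) triples on [0, T] for a piecewise-homogeneous
    Markov process (hypothetical helper, 0-based modes).

    Pi[m] : w x w TR matrix of the low-level signal r_t while sigma_t = m
    Lam   : v x v constant TR matrix of the high-level signal sigma_t
    """
    rng = random.Random(seed)
    t, r, s = 0.0, r0, s0
    path = [(t, r, s)]
    while True:
        out_r = -Pi[s][r][r]   # exit rate of r_t under the current TR matrix
        out_s = -Lam[s][s]     # exit rate of sigma_t
        total = out_r + out_s
        if total <= 0.0:       # both signals absorbed: no further jumps
            break
        t += rng.expovariate(total)  # time of the next jump of either signal
        if t > T:
            break
        if rng.random() * total < out_r:
            r = _pick(rng, Pi[s][r], r, out_r)   # low-level jump
        else:
            s = _pick(rng, Lam[s], s, out_s)     # high-level jump: new TR matrix for r_t
        path.append((t, r, s))
    return path


# Hypothetical data: w = 2 low-level modes, v = 2 high-level modes.
Pi = [[[-1.0, 1.0], [2.0, -2.0]],
      [[-3.0, 3.0], [0.5, -0.5]]]
Lam = [[-0.4, 0.4], [0.6, -0.6]]
path = simulate_two_level(Pi, Lam, r0=0, s0=0, T=10.0)
```

At each jump exactly one of the two signals changes. A high-level jump changes nothing about the current low-level mode. It only swaps the TR matrix that drives the next low-level jump.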

Most articles on the synchronization of CDNs with Markovian jump parameters consider a homogeneous Markovian structure, which is far from real systems [7, 36, 37]. To the best of the authors’ knowledge, only one study has addressed the exponential synchronization problem of CDNs with a piecewise-homogeneous Markovian structure [28]. Li et al. [28] discussed the synchronization problem of an MJCDN with piecewise-homogeneous TRs for a simple model. In that model, only one matrix (the outer coupling matrix) is mode-dependent and governed by the piecewise-homogeneous Markov process. All other parameters are constant at all times, and the time-varying coupling delay is not mode-dependent. In this study, we consider a piecewise-homogeneous MJCDN model with more complicated dynamics for each coupled node, which covers a larger class of MJCDNs. The contributions of this paper compared with existing studies are as follows:

- All matrices in the model are mode-dependent on the piecewise-homogeneous Markov process. To the best of the authors’ knowledge, the synchronization problem for such an MJCDN with piecewise-constant TRs has not yet been sufficiently investigated. We adopt this assumption to model the conditions of physical systems more realistically.

- The time-varying coupling delay switches according to the piecewise-homogeneous Markovian modes; that is, the coupling delay depends on both Markovian signals, a case that has received little attention.

- The exponential synchronization problem of piecewise-homogeneous Markovian jump delayed complex networks with PUTRs has seldom been investigated and remains challenging. The PUTR assumption is more general than that of completely known TRs.

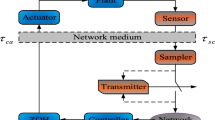

To the best of the authors’ knowledge, these contributions have not been reported in other studies. The model considered here is also more general than those in [28, 32]. It includes a mode-dependent time-varying coupling delay and an uncertain Markovian jump process with piecewise-homogeneous and partly unknown transition rates. We investigate the synchronization problem for a new class of MJCDNs with mode-dependent coefficient matrices and a mode-dependent time-varying coupling delay. Mode-dependent time delays are present in many real networks, such as networked control systems and sensor/actuator networks [12].

The rest of this paper is organized as follows. The problem formulation and some preliminary results are presented in Sect. 2. The main results for the synchronization problem of the piecewise-homogeneous MJCDN with CKTRs are given in Theorems 1 and 2 in Sect. 3. In Sect. 3.1, the results are extended to the case of PUTRs in Theorems 3 and 4. In Sect. 4, a numerical example demonstrates the effectiveness of the obtained results under both cases. Finally, conclusions are given. To achieve synchronization, a controller is designed for each node in the network based on the Lyapunov–Krasovskii functional method and LMIs.

Notations The notations used in this paper are standard. \({\mathbb {R}}^n\) denotes the n-dimensional Euclidean space, and \({\mathbb {R}}^{n\times m}\) denotes the set of real \({n\times m}\) matrices. For symmetric real matrices \({\mathbf {A}}\) and \({\mathbf {B}}\), \({\mathbf {A}}>{\mathbf {B}}\) (\({\mathbf {A}}\ge {\mathbf {B}}\)) means that \({\mathbf {A}}-{\mathbf {B}}\) is positive definite (positive semidefinite). \(\mathbf {I_n}\) denotes the identity matrix of order n, and \({\mathbf {0}}\) denotes the zero matrix of appropriate dimension. The superscript “T” denotes the transpose, and \(\mathrm{{diag}}\{ .\}\) denotes a block-diagonal matrix. \(\left\| . \right\| \) is the Euclidean norm in \({\mathbb {R}}^p\). \({{\mathbb {E}}}\{ x\}\) (\({{\mathbb {E}}}\{ x\mathrm{{|}}y\}\)) denotes the expectation of the stochastic variable x (conditional on y). \(({\mathbf{L}} \otimes {\mathbf{K}})\) denotes the Kronecker product of matrices \({\mathbf{L}} \in {{\mathbb {R}}^{m \times n}}\) and \({\mathbf{K}}\in {{\mathbb {R}}^{p \times q}}\), which is a matrix of order \({mp \times nq}\). Symmetric terms in a symmetric matrix are denoted by \(*\). \({\lambda _{\hbox {max}}(.)}\) and \({\lambda _{\hbox {min}}(.)}\) denote the largest and smallest eigenvalues of a given matrix.
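The Kronecker product defined above later turns node-level matrices into network-level ones, for example \({\bar{\mathbf{A}}}={\mathbf I}_N\otimes{\mathbf A}\) and \({\bar{\mathbf G}}={\mathbf G}\otimes{\varvec\Gamma}\). The small pure-Python sketch below uses helper names (`kron`, `eye`, `matvec`) and example matrices of our own, for illustration only. It also checks a consequence of the zero-row-sum assumption on \({\mathbf G}\) stated in Sect. 2. By the mixed-product property, \(({\mathbf G}\otimes{\varvec\Gamma})(\mathbf 1_N\otimes{\mathbf s})=({\mathbf G}\mathbf 1_N)\otimes({\varvec\Gamma}{\mathbf s})=\mathbf 0\), so the coupling term vanishes once all nodes share the same state.

```python
def kron(L, K):
    """Kronecker product of an m x n matrix L and a p x q matrix K (lists of rows).
    The result is (m*p) x (n*q); block (a, b) equals L[a][b] * K."""
    m, n = len(L), len(L[0])
    p, q = len(K), len(K[0])
    return [[L[a // p][b // q] * K[a % p][b % q] for b in range(n * q)]
            for a in range(m * p)]


def eye(N):
    """N x N identity matrix."""
    return [[1 if i == j else 0 for j in range(N)] for i in range(N)]


def matvec(M, x):
    """Matrix-vector product."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]


# Hypothetical 3-node network (zero row sums) with 2-dimensional nodes.
G = [[-2, 1, 1],
     [1, -1, 0],
     [1, 0, -1]]
Gamma = [[1, 0],
         [0, 2]]
A = [[0, 1],
     [-1, 0]]

Gbar = kron(G, Gamma)      # outer coupling lifted to the network state (6 x 6)
Abar = kron(eye(3), A)     # block-diagonal copy of A for every node (6 x 6)

s = [1.5, -2.0]            # common state shared by all nodes
stacked = s * 3            # list repetition gives 1_N (x) s, the synchronized state
coupling = matvec(Gbar, stacked)   # zero vector because every row of G sums to zero
```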

2 Problem Formulation and Preliminaries

Consider the following CDN with piecewise-homogeneous Markovian jump structure, in which the dynamical equation of each node, the coupling time delay, and the coefficient matrices are assumed to be mode-dependent:

where \({{\mathbf{x}}_b}(t) = {[{x_{b1}}(t),{x_{b2}}(t), \ldots ,{x_{bn}}(t)]^T}\in {{\mathbb {R}}^n}\) denotes the state vector of the bth node at time t, and \({\mathbf{f}}({{\mathbf{x}}_b}(t))\) and \({\mathbf {u}}_b(t)\) are the continuous nonlinear vector function and the control input of node b, respectively. \({\mathbf{A}}({r_t}),{\mathbf{B}}({r_t}) \in {{\mathbb {R}}^{n \times n}}\) are the mode-dependent coefficient matrices. \({{\varvec{\Gamma }} ({r_t})} = {[{\gamma _{bd}({r_t})}]_{n \times n}}\) denotes the inner coupling between the state components of each node. \({{\mathbf {G}}}({r_t}) = {[{g_{bd}}({r_t})]_{N \times N}}\) denotes the outer coupling between the nodes and represents the topological structure of the network. If there is a connection between node b and node d, then \({g_{bd}}({r_t}) = {g_{db}}({r_t}) \ne 0 \), \(b \ne d\); otherwise, \({g_{bd}}({r_t}) = {g_{db}}({r_t}) = 0\). Each row of \({\mathbf{G}}({r_t})\) is assumed to sum to zero, i.e., \(\sum \nolimits _{d = 1,b \ne d}^N {{g_{bd}}({r_t})} = - {g_{bb}}({r_t})\), \(b = 1,2, \ldots ,N\). The function \({{\tau }^{{r_t},{\sigma _t}}}(t)\) represents the mode-dependent time-varying coupling delay satisfying:

where \({{{\underline{\tau }}} \ge 0}\), \({{{\bar{\tau }}}\ge 0}\), and \(\mu \ge 0\) are known constants. The process \(\{r_t,t \ge 0\}\) is a continuous-time piecewise-homogeneous Markov process taking values in the finite set \({\mathcal {W}} = \{ 1,2, \ldots ,w\}\), which governs switching between the different modes. The transition probability of the process \(\{r_t,t \ge 0\}\) with TR matrix \({{\varPi }^{{\sigma _{t + {{\varDelta } t}}}}} = [\pi _{ij}^{{\sigma _{t + {{\varDelta } t}}}}]_{w \times w}\) is defined by

where \({{\varDelta } t}>0\), \(\lim \limits _{{{\varDelta } t} \rightarrow 0} o({{\varDelta } t})/{{\varDelta } t} = 0\), and \(\pi _{ij}^{{\sigma _{t + {{\varDelta } t}}}} \ge 0\) for \(i \ne j\) denotes the TR from mode i at time t to mode j at time \(t+{{\varDelta } t}\) with the following condition (4). The time-varying TR matrix \({{\varPi }^{{\sigma _{t + {{\varDelta } t}}}}}\) is defined by (5).
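A valid TR matrix needs two properties. Its off-diagonal rates must be nonnegative, and each row must sum to zero, i.e. \(\pi_{ii}=-\sum_{j\ne i}\pi_{ij}\), the relation used in the proof of Theorem 3. Both are easy to check numerically. The helper below is a hypothetical sketch, and the same check applies to every \({\varPi}^{m}\) and to \({\varLambda}\).

```python
def is_tr_matrix(M, tol=1e-12):
    """Check that M is a valid transition-rate (generator) matrix:
    square, nonnegative off-diagonal entries, and zero row sums."""
    n = len(M)
    for i, row in enumerate(M):
        if len(row) != n:
            return False
        if any(row[j] < -tol for j in range(n) if j != i):
            return False          # negative rate between distinct modes
        if abs(sum(row)) > tol:
            return False          # diagonal must equal minus the off-diagonal sum
    return True


# Hypothetical examples.
valid = [[-1.0, 0.6, 0.4],
         [0.3, -0.5, 0.2],
         [0.0, 2.0, -2.0]]
bad_sum = [[-1.0, 0.5],
           [0.3, -0.3]]           # first row does not sum to zero
bad_sign = [[0.5, -0.5],
            [1.0, -1.0]]          # negative off-diagonal rate
```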

Similarly, the process \(\{ {\sigma _t},t \ge 0\}\) is a continuous-time Markov process taking values in the finite set \({\mathcal {V}} = \{ 1,2,\ldots ,v\}\). Unlike \(\{r_t,t \ge 0\}\), this process is homogeneous (time-invariant). Its transition probability with TR matrix \({\varLambda } = [\rho _{mn}]_{v \times v}\) is given by:

where \({{\varDelta } t}>0\), \(\lim \limits _{{{\varDelta } t} \rightarrow 0} o({{\varDelta } t})/{{\varDelta } t} = 0\), and \({\rho _{mn} \ge 0}\) for \(m \ne n\) denotes the TR from mode m at time t to mode n at time \(t+{{\varDelta } t}\), subject to the following condition

and the TR matrix \({\varLambda }\) is given below

Moreover, we assume that the TRs of the two-level Markovian signals may be partly unknown, i.e., some elements of the TR matrices \({{\varPi }^{{\sigma _{t + {{\varDelta } t}}}}}\) of the Markov process \(\{r_t,t \ge 0\}\) and of the TR matrix \({\varLambda }\) of the Markov process \(\{ {\sigma _t},t \ge 0\}\) are unknown. For example, (5) and (8) may take the form:

where \(``?''\) represents an unknown TR value. To handle the unknown entries, the following assumption is made for the two Markov processes \(\{r_t,t \ge 0\}\) and \(\{ {\sigma _t},t \ge 0\}\):

Remark 2.1

If all TR values are available, then \({{\mathcal {W}}^i}={{\mathcal {W}}^i_{k}}, {{\mathcal {V}}^m}= {{\mathcal {V}}^m_{k}}\) and \({{\mathcal {W}}^i_{uk}=\emptyset }, {{\mathcal {V}}^m_{uk}}=\emptyset \). Conversely, if \({{\mathcal {W}}^i}= {{\mathcal {W}}^i_{uk}}, {{\mathcal {V}}^m}={{\mathcal {V}}^m_{uk}}\) and \({{\mathcal {W}}^i_{k}=\emptyset }, {{\mathcal {V}}^m_{k}}=\emptyset \), then no TR values are available.
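The index sets in Remark 2.1 can be formed row by row. In the hypothetical sketch below, an unknown entry \(``?''\) is represented by `None`, and modes are numbered from 0. Each row of a TR matrix sums to zero, so the unknown entries of a row must together equal minus the sum of the known ones, even though their individual values are never used.

```python
def split_known_unknown(row):
    """Return (known, unknown, residual) for one row of a partly unknown TR matrix.

    known / unknown : lists of column indices (0-based) whose entries are
                      known / marked unknown (None stands for "?")
    residual        : sum of the unknown entries implied by the zero row sum
    """
    known = [j for j, v in enumerate(row) if v is not None]
    unknown = [j for j, v in enumerate(row) if v is None]
    residual = -sum(row[j] for j in known)
    return known, unknown, residual


# Hypothetical row i = 0 of a 3 x 3 TR matrix with one unknown rate.
k, uk, res = split_known_unknown([-1.2, None, 0.5])
# All entries known: the unknown set is empty, as in Remark 2.1.
k_all, uk_all, res_all = split_known_unknown([-1.2, 0.7, 0.5])
```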

Assumption 1

The function \({\mathbf{f}}:{{\mathbb {R}}^n} \rightarrow {{\mathbb {R}}^n}\) in system (1) has a sector-bounded property which satisfies the following condition [46]

where \({\mathbf{x}},{\mathbf{y}} \in {{\mathbb {R}}^n}\) and \({\mathbf {U}}\), \({\mathbf {V}}\) are known constant matrices of appropriate dimensions. Note that this sector-bounded condition is more general than the Lipschitz condition.
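The sector-bounded condition is commonly written as \([{\mathbf f}({\mathbf x})-{\mathbf f}({\mathbf y})-{\mathbf U}({\mathbf x}-{\mathbf y})]^T[{\mathbf f}({\mathbf x})-{\mathbf f}({\mathbf y})-{\mathbf V}({\mathbf x}-{\mathbf y})]\le 0\), and we assume that (11) has this form. The sketch below evaluates the left-hand side at random pairs for an illustrative choice: \({\mathbf f}=\tanh\) applied componentwise, with \({\mathbf U}={\mathbf 0}\) and \({\mathbf V}={\mathbf I}\). This is not the function used in this paper. Random sampling can only reveal violations; it does not prove that the condition holds.

```python
import math
import random


def sector_lhs(f, U, V, x, y):
    """Left-hand side [f(x)-f(y)-U(x-y)]^T [f(x)-f(y)-V(x-y)]; <= 0 inside the sector."""
    dx = [a - b for a, b in zip(x, y)]
    df = [a - b for a, b in zip(f(x), f(y))]
    left = [d - sum(u * e for u, e in zip(row, dx)) for d, row in zip(df, U)]
    right = [d - sum(v * e for v, e in zip(row, dx)) for d, row in zip(df, V)]
    return sum(a * b for a, b in zip(left, right))


def f(x):
    return [math.tanh(xi) for xi in x]   # illustrative nonlinearity, slopes in (0, 1]


U = [[0.0, 0.0], [0.0, 0.0]]
V = [[1.0, 0.0], [0.0, 1.0]]
rng = random.Random(1)
worst = max(
    sector_lhs(f, U, V,
               [rng.uniform(-5, 5) for _ in range(2)],
               [rng.uniform(-5, 5) for _ in range(2)])
    for _ in range(1000)
)
# A too-narrow sector (V = 0.5 I) is violated near the origin, where tanh has slope ~1.
narrow = sector_lhs(f, U, [[0.5, 0.0], [0.0, 0.5]], [0.1, 0.1], [0.0, 0.0])
```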

This paper aims to synchronize all nodes in the network with an isolated node whose state vector is \({\mathbf{s}}(t) \in {{\mathbb {R}}^n}\). Let \({{\mathbf{e}}_b}(t) = {{\mathbf{x}}_b}(t) - {\mathbf{s}}(t)\) denote the synchronization error of node b, where the state trajectory of the isolated (uncoupled) node is given by

Here, \( {{\mathbf{s}}}(t)\) is a particular solution of system (12). It is assumed that \({{\mathbf{s}}}(.)\) and \({{\mathbf{x}}_b}(t)\), \(b={1,2,\ldots ,N}\), are well defined for \(t \ge {{-{\bar{\tau }}}}\). Then, the synchronization problem of the piecewise-homogeneous MJCDN (1) is converted into the stabilization problem of the following error dynamic system:

where \({{\mathbf {g}}}({{{\mathbf {e}}}_b}(t)) = {{\mathbf {f}}} ({{{\mathbf {x}}}_b}(t)) - {{\mathbf {f}}}({{\mathbf {s}}}(t))\).

The controller structure is assumed as

where \({{\mathbf{K}}_b}({r_t},{\sigma _t}) \in {{\mathbb {R}}^{n \times n}}\) are the controller gain matrices to be determined for each mode; that is, \(w \times v\) gain matrices are designed for each node in the network. Substituting (14) into (13) yields the error dynamic system

where \({\mathbf{e}}(t) = {[{\mathbf{e}}_1^T(t),{\mathbf{e}}_2^T(t), \ldots ,{\mathbf{e}}_N^T(t)]^T}\), \({\bar{\mathbf{g}}}({\mathbf{e}}(t)) = {[{{\mathbf{g}}^T}({{\mathbf{e}}_1}(t)),{{\mathbf{g}}^T}({{\mathbf{e}}_2}(t)), \ldots ,{{\mathbf{g}}^T}({{\mathbf{e}}_N}(t))]^T}\), \({\bar{\mathbf{A}}}({r_t})={\mathbf {I}}_N \otimes {\mathbf{A}}({r_t})\), \({\bar{\mathbf{B}}}({r_t})={\mathbf {I}}_N \otimes {\mathbf{B}}({r_t})\), \({\bar{\mathbf{G}}({r_t})}={\mathbf{G}}({r_t}) \otimes {{\varvec{\Gamma }}}({r_t})\) and

Definition 1

The MJCDN (1) is said to be exponentially mean-square synchronized if the error system (15) is exponentially mean-square stable, that is, if there exist scalars \(\alpha >0\) and \(\beta >0\), the decay rate and decay coefficient, respectively, such that:

The following lemmas are used throughout the paper.

Lemma 1

(Jensen inequality [13]). For any matrix \({{\varvec{\Delta }}} \ge 0\), scalars \(\eta _1\), \(\eta _2\) (\(\eta _2 > \eta _1\)), and a vector function \({{\varphi }}:[{\eta _1},{\eta _2}] \rightarrow {{\mathbb {R}}^n}\) such that the integrations concerned are well defined, the following inequality holds:

Lemma 2

[35] For any matrix \(\left[ {\begin{array}{*{20}{c}} {\mathbf{M}}&{}{\mathbf{S}}\\ *&{}{\mathbf{M}} \end{array}} \right] \; \ge 0\), scalars \(\tau \ge 0\), \(\tau (t) \ge 0\), and \(0 < \tau (t) \le \tau \), vector function \({\dot{\mathbf{z}}}(t + .):[ - \tau ,0] \rightarrow {{\mathbb {R}}^n}\), such that the concerned integrations are well defined, then

where \({{\omega }}(t) = {[{{\mathbf{z}}^T}(t),{{\mathbf{z}}^T}(t - \tau (t)),{{\mathbf{z}}^T}(t - \tau )]^T}\),

Remark 2.2

To simplify notation throughout the paper, we write \(\{ {r_t}=i\}\) and \(\{ {\sigma _t}=m\}\), and denote the corresponding matrices by \({\bar{\mathbf{A}}}_{i}\), \({{\bar{\mathbf{B}}}_{i}}\), \( {{\bar{\mathbf{G}}}_{i}}\), and \( {\mathbf{K}}_{i,m}\).

The exponential synchronization problem for (1) is addressed in Theorem 1 for \({{\mathcal {W}}^i_{k}}={{\mathcal {W}}^i}, {{\mathcal {V}}^m_{k}}={{\mathcal {V}}^m}\) in Sect. 3 and in Theorem 3 for \({{\mathcal {W}}^i_{uk}}\subset {{\mathcal {W}}^i}, {{\mathcal {V}}^m_{uk}}\subset {{\mathcal {V}}^m}\) in Sect. 3.1. The design of the desired state feedback controller based on the conditions of Theorem 1 is presented in Theorem 2, and controllers based on the conditions of Theorem 3 are designed in Theorem 4. For simplicity, we denote \({\bar{\pi }}= \max \limits _{1 \le i \le w}\{-\pi _{ii}\}\) and \({\bar{\rho }}= \max \limits _{1 \le {m} \le v}\{-\rho _{mm}\}\).
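The constants \({\bar\pi}\) and \({\bar\rho}\) are the largest exit rates of the two signals. Since \(\pi_{ii}\) also depends on the high-level mode, the hypothetical sketch below assumes that \({\bar\pi}\) is taken over all TR matrices \({\varPi}^{m}\), \(m\in{\mathcal V}\). It also evaluates the factor \(({\bar\pi}+{\bar\rho})({\bar\tau}-{\underline\tau})\) that multiplies \({\mathbf Q}\) in \({\varvec\Xi}_{11}\) of Theorem 1. The TR matrices are made-up values; the delay bounds are those used in Sect. 4.

```python
def max_exit_rate(matrices):
    """Largest -M[i][i] over all given TR matrices."""
    return max(-M[i][i] for M in matrices for i in range(len(M)))


# Hypothetical TR data (w = 2, v = 2); delay bounds taken from Sect. 4.
Pi = [[[-1.0, 1.0], [2.0, -2.0]],
      [[-3.0, 3.0], [0.5, -0.5]]]
Lam = [[-0.4, 0.4], [0.6, -0.6]]
tau_low, tau_up = 0.35, 0.95

pi_bar = max_exit_rate(Pi)          # max over i and over the high-level mode m
rho_bar = max_exit_rate([Lam])
q_factor = (pi_bar + rho_bar) * (tau_up - tau_low)   # coefficient of Q in Xi_11
```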

3 Main Results

In this section, the exponential synchronization problem for (1) and the design of a suitable controller are investigated in Theorems 1 and 2 for the case \({{\mathcal {W}}^i_{k}} ={{\mathcal {W}}^i}, {{\mathcal {V}}^m_{k}}= {{\mathcal {V}}^m}\).

Theorem 1

For a given mode-dependent controller gain matrix \({\mathbf{K}}({r_t},{\sigma _t})\) and positive scalars \(\alpha \), \({\bar{\tau }}\), \({\underline{\tau }}\), \(\mu \), the error system (15) with \({{\mathcal {W}}^i_{k}}={{\mathcal {W}}^i}, {{\mathcal {V}}^m_{k}}={{\mathcal {V}}^m}\) is exponentially mean-square stable if there exist symmetric positive definite matrices \({\mathbf {P}}_{i,m}\), \({\mathbf {Q}}\), \({\mathbf {Z}}\), any matrix \({\mathbf {S}}\), and scalars \(\lambda _{i,m}> 0\) such that, for any \((i,j \in {\mathcal {W}}^i_{k}\), \({\mathcal {W}}^i_{uk}=\emptyset )\) and \({(m,n \in {\mathcal {V}}^m_{k}}\), \({{\mathcal {V}}^m_{uk}=\emptyset )}\), the following conditions hold

where \({{{\varvec{\Xi }}}_{11}} = 2\alpha {{\mathbf{P}}_{i,m}} + {{\mathbf{P}}_{i,m}}{{\mathbf{K}}_{i,m}} + {\mathbf{K}}_{i,m}^T{{\mathbf{P}}_{i,m}}+ {{\mathbf{P}}_{i,m}}{{\bar{\mathbf{A}}}_{i}} + {\bar{\mathbf{A}}}_{i}^T{{\mathbf{P}}_{i,m}} + {{\mathbf{Q}}} - {\lambda _{i,m}}{\bar{\mathbf{U}}}+({{\bar{\pi }}+ {\bar{\rho }}})({{\bar{\tau }}}-{{\underline{\tau }}}) {{\mathbf{Q}}} + \sum \nolimits _{n \in {\mathcal {V}}} {{\rho _{mn}}{{\mathbf{P}}_{i,n}} + \sum \nolimits _{j \in {\mathcal {W}}} {\pi _{ij}^m{{\mathbf{P}}_{j,m}}} }\),

Proof

Construct the following Lyapunov–Krasovskii functional candidate

where

The infinitesimal generator \({\mathcal {L}}\) of the Markov process acting on \(V({{{\mathbf {e}}}_t},{r_t},{\sigma _t})\) is described as:

Then, by applying the total probability law [59], the above equality can be expressed as

Hence, for each \((i,m) \in {\mathcal {W}} \times {\mathcal {V}}\), it follows that

It is straightforward to obtain:

Also

Based on Lemma 2 and (21), the integral term in (28) is bounded as follows:

where \({{\omega }}(t) = {[{{{\mathbf {e}}}^T}(t-{{\bar{\tau }}}), {{{\mathbf {e}}}^T} (t-\tau ^{i,m}(t)),{{{\mathbf {e}}}^T}(t - {{\underline{\tau }}} )]^T}\),

For any scalars \({\lambda _{i,m}} > 0\), \((i \in {\mathcal {W}},m \in {\mathcal {V}})\), (11) is rewritten as

where

Moreover, the above inequality can be written in vector-matrix form as follows:

Also, based on Eqs. (4) and (7), for the integrals in (27) and (28) we have:

Hence, by substituting (35)–(38) and (15) into (25)–(28), Eq. (22) can be written as

Then, it follows:

where \( {{\xi }}(t) = {[\;\;{{{\mathbf {e}}}^T}(t),{{{\mathbf {e}}}^T}(t - {{\bar{\tau }}}),{{{\mathbf {e}}}^T}(t - {{\tau }^{i,m}(t)} ),{{{\mathbf {e}}}^T}(t - {{\underline{\tau }}}),{\bar{{\mathbf {g}}}^T}({{\mathbf {e}}}(t))}]^T\) and

By the Schur complement, the negative definiteness of the matrix in (40) is equivalent to \({\varvec{\Phi }} < 0\) with \({\varvec{\Phi }}\) given in (20). If \({\varvec{\Phi }} < 0\), then

Therefore, relying on Dynkin’s formula, it can be found that

From the definition of \( V({{{\mathbf {e}}}_0},{r_0},{\sigma _0})\), we obtain

where,

Therefore, (42) is rewritten as

According to Definition 1, it is proved that the error system (15) is exponentially mean-square stable which means that the exponential synchronization problem of the piecewise-homogeneous MJCDN (1) is realized. \(\square \)
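The proof above relies on the Schur complement. For a symmetric block matrix with \({\mathbf M}_{22}<0\), \(\begin{bmatrix}{\mathbf M}_{11}&{\mathbf M}_{12}\\ *&{\mathbf M}_{22}\end{bmatrix}<0\) holds if and only if \({\mathbf M}_{11}-{\mathbf M}_{12}{\mathbf M}_{22}^{-1}{\mathbf M}_{12}^T<0\). The pure-Python sketch below checks this equivalence on two small made-up matrices, using a Cholesky factorization of \(-{\mathbf M}\) as the negative-definiteness test. It only illustrates the lemma. It is not a solver for the LMIs of Theorem 1, which in practice would be passed to a semidefinite-programming tool.

```python
import math


def is_negative_definite(M):
    """True if symmetric M is negative definite, i.e. -M has a Cholesky factor."""
    n = len(M)
    A = [[-M[i][j] for j in range(n)] for i in range(n)]
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False      # -M is not positive definite
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True


def schur_last(M):
    """Schur complement M11 - M12 M22^{-1} M12^T for a scalar last block M22."""
    n = len(M) - 1
    m22 = M[n][n]
    return [[M[i][j] - M[i][n] * M[j][n] / m22 for j in range(n)] for i in range(n)]


# Two made-up 3 x 3 symmetric matrices with M22 = -1 < 0.
M_ok = [[-3.0, 1.0, 1.0],
        [1.0, -2.0, 0.5],
        [1.0, 0.5, -1.0]]
M_bad = [[-3.0, 1.0, 2.0],
         [1.0, -2.0, 0.0],
         [2.0, 0.0, -1.0]]

# For each matrix: (full matrix negative definite?, Schur complement negative definite?)
results = [(is_negative_definite(M), is_negative_definite(schur_last(M)))
           for M in (M_ok, M_bad)]
```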

Remark 3.1

If the coupling time delay in (1) does not switch, the Lyapunov–Krasovskii functional candidate reduces to the following one:

where

Remark 3.2

The matrix \({\mathbf{Z}}\) in \(V_4({{{\mathbf {e}}}_t},{r_t},{\sigma _t})\) can be a mode-dependent one \({\mathbf{Z}}_{i,m}\); therefore, \(V_4({{{\mathbf {e}}}_t},{r_t},{\sigma _t})\) is defined as follows:

where \({\mathbf{L}}\) is a symmetric positive definite matrix. That is, if \({\mathbf{Z}}_{i,m}\) is taken to be mode-dependent, a triple-integral term with the mode-independent matrix \({\mathbf {L}}\) is added to \({V_4}({{{\mathbf {e}}}_t},{r_t},{\sigma _t})\). In addition, the matrix \({\mathbf{S}}\) introduced in Lemma 2 can be taken as a mode-dependent matrix \({\mathbf{S}}_{i,m}\).

Taking the Lyapunov–Krasovskii matrices to be mode-dependent increases the number of decision variables, so the LMIs can be feasible under less conservative conditions. Despite this advantage, a larger number of decision variables makes the computation more complicated, which is a disadvantage.
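The trade-off described above can be quantified by counting scalar decision variables. The sketch below rests on several assumptions, consistent with \({\varvec\Xi}_{11}\). First, \({\mathbf P}_{i,m}\), \({\mathbf Q}\), \({\mathbf Z}\) (or \({\mathbf Z}_{i,m}\)), \({\mathbf L}\), and \({\mathbf S}\) (or \({\mathbf S}_{i,m}\)) are all \(Nn\times Nn\). Second, each symmetric matrix contributes \(Nn(Nn+1)/2\) unknowns and \({\mathbf S}\) contributes \((Nn)^2\). The function names are hypothetical, and the count ignores the block-diagonal structure imposed in Theorem 2. The dimensions follow Sect. 4, with \(N=3\) Chua circuits of \(n=3\) states each, \(w=2\), and \(v=3\).

```python
def sym(k):
    """Number of free entries in a symmetric k x k matrix."""
    return k * (k + 1) // 2


def count_theorem1(N, n, w, v):
    """Decision variables for Theorem 1: P_{i,m}, Q, Z, S, lambda_{i,m}."""
    k, modes = N * n, w * v
    return modes * sym(k) + sym(k) + sym(k) + k * k + modes


def count_mode_dependent(N, n, w, v):
    """Remark 3.2 variant: P_{i,m}, Q, Z_{i,m}, L, S_{i,m}, lambda_{i,m}."""
    k, modes = N * n, w * v
    return modes * sym(k) + sym(k) + modes * sym(k) + sym(k) + modes * k * k + modes


basic = count_theorem1(3, 3, 2, 3)
richer = count_mode_dependent(3, 3, 2, 3)
```

Under these assumptions the mode-dependent variant has roughly 2.5 times as many unknowns (1122 versus 447).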

The next theorem proposes some sufficient conditions to achieve the feedback controller gain for each node in the piecewise-homogeneous MJCDN (1) with CKTRs.

Theorem 2

Under \({{\mathcal {W}}^i_{k}}={{\mathcal {W}}^i}, {{\mathcal {V}}^m_{k}}={{\mathcal {V}}^m}\), for given positive scalars \(\alpha \), \({\bar{\tau }}\), \({\underline{\tau }}\), \(\mu \), the piecewise-homogeneous MJCDN (1) is exponentially synchronized in the mean-square sense if there exist symmetric positive definite matrices \({{{\mathbf {P}}}_{i,m}} = {\text {diag}}\{{{\mathbf {P}}}_{i,m}^1,{{\mathbf {P}}}_{i,m}^2, \ldots ,{{\mathbf {P}}}_{i,m}^N\}\), \({\mathbf {Q}}\), \({\mathbf {Z}}\), block-diagonal matrices \({{{\mathbf {X}}}_{i,m}} = {\text {diag}}\{{{\mathbf {X}}}_{i,m}^1, {{\mathbf {X}}}_{i,m}^2,\ldots ,{{\mathbf {X}}}_{i,m}^N\}\), any matrix \({\mathbf {S}}\), and scalars \(\lambda _{i,m}> 0\) for any \(i\in {\mathcal {W}}\), \(m \in {\mathcal {V}}\) such that (21) and the following LMI hold:

where:

and the other parameters are defined as in Theorem 1. In addition, the controller gain matrices are given by:

Proof

Pre- and post-multiplying both sides of (20) by \({{{\mathbf {L}}}^T} = {\text {diag}}\{ {{\mathbf {I}}},{{\mathbf {I}}},{{\mathbf {I}}},{{\mathbf {I}}}, {{\mathbf {I}}},{{{\mathbf {P}}}_{i,m}}{{\mathbf {Z}}^{ - 1}}\}\) and \({\mathbf {L}}\), respectively, and substituting \({{{\mathbf {X}}}_{i,m}} = {{{\mathbf {P}}}_{i,m}}{{{\mathbf {K}}}_{i,m}}\), yields

Inequality (48) is not an LMI because of the nonlinear term \( - {{{\mathbf {P}}}_{i,m}}{{\mathbf {Z}}^{ - 1}}{{{\mathbf {P}}}_{i,m}}\), so it must be converted into one. Since \({\mathbf {Z}} > 0\), we have \(({{{\mathbf {P}}}_{i,m}}-{\mathbf {Z}}){{\mathbf {Z}}^{ - 1}}({{{\mathbf {P}}}_{i,m}}-{\mathbf {Z}}) \geqslant 0\), that is, \(- {{{\mathbf {P}}}_{i,m}}{{\mathbf {Z}}^{ - 1}}{{{\mathbf {P}}}_{i,m}} \leqslant {\mathbf {Z}} - 2{{{\mathbf {P}}}_{i,m}}\); then, by the Schur complement, we can get

Then, following [28], it can be shown that:

Hence, if (46) holds, then (48) holds, which completes the proof. \(\square \)
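The linearization step in the proof of Theorem 2 rests on an identity that holds for symmetric \({\mathbf Z}>0\): \(({\mathbf P}-{\mathbf Z}){\mathbf Z}^{-1}({\mathbf P}-{\mathbf Z})={\mathbf P}{\mathbf Z}^{-1}{\mathbf P}-2{\mathbf P}+{\mathbf Z}\). Its left-hand side is positive semidefinite, so \(-{\mathbf P}{\mathbf Z}^{-1}{\mathbf P}\le {\mathbf Z}-2{\mathbf P}\). The hypothetical sketch below checks this numerically for random \(2\times 2\) symmetric positive definite matrices. It illustrates the standard bound and does not reproduce (49) or (50).

```python
import random


def mm(A, B):
    """Product of two 2 x 2 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def inv2(A):
    """Inverse of a nonsingular 2 x 2 matrix."""
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    return [[A[1][1] / det, -A[0][1] / det], [-A[1][0] / det, A[0][0] / det]]


def random_spd(rng):
    """R R^T + 0.1 I with R = [[a, 0], [b, c]]: symmetric positive definite."""
    a, b, c = (rng.uniform(-1.0, 1.0) for _ in range(3))
    return [[a * a + 0.1, a * b], [a * b, b * b + c * c + 0.1]]


def gap(P, Z):
    """(Z - 2P) - (-P Z^{-1} P); the bound holds iff this is positive semidefinite."""
    PZP = mm(mm(P, inv2(Z)), P)
    return [[Z[i][j] - 2 * P[i][j] + PZP[i][j] for j in range(2)] for i in range(2)]


def is_psd2(M, tol=1e-9):
    """PSD test for a symmetric 2 x 2 matrix: nonnegative diagonal and determinant."""
    scale = 1.0 + abs(M[0][0] * M[1][1])
    return (M[0][0] >= -tol and M[1][1] >= -tol
            and M[0][0] * M[1][1] - M[0][1] * M[1][0] >= -tol * scale)


rng = random.Random(7)
pairs = [(random_spd(rng), random_spd(rng)) for _ in range(500)]
all_hold = all(is_psd2(gap(P, Z)) for P, Z in pairs)
```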

3.1 Development of the Markovian Jump Problem with PUTRs (\({{\mathcal {W}}^i_{k}} \subset {{\mathcal {W}}^i}\) and \({{{\mathcal {V}}^m_{k}} \subset {{\mathcal {V}}^m}}\))

In this section, we extend the synchronization problem of Sect. 3 to the piecewise-homogeneous MJCDN with PUTRs (\({{\mathcal {W}}^i_{k}} \subset {{\mathcal {W}}^i}\) and \({{{\mathcal {V}}^m_{k}} \subset {{\mathcal {V}}^m}}\)). The TRs determine the behavior of a Markovian jump system during the jumping process. In practice, obtaining the exact values of the TR matrices is impossible or at least challenging. To broaden the analysis and control of Markovian jump systems, uncertain TRs have been assumed for the Markov chains. One description of uncertain TRs is PUTRs, in which each entry is either completely known or completely unknown. Hence, it is important to consider PUTRs, which require no knowledge of the unknown elements.

Theorem 3

For a given controller gain matrix \({\mathbf{K}}({r_t},{\sigma _t})\) and positive scalars \(\alpha \), \({\bar{\tau }}\), \({\underline{\tau }}\), \(\mu \), the error system (15) with \({{\mathcal {W}}^i_{k}} \subset {{\mathcal {W}}^i}\) and \({{{\mathcal {V}}^m_{k}} \subset {{\mathcal {V}}^m}}\) is exponentially mean-square stable if there exist symmetric positive definite matrices \({\mathbf {P}}_{i,m}\), \({\mathbf {Q}}\), \({\mathbf {Z}}\), any matrices \({\mathbf {S}}\), \({{\mathbf {E}}}_{i,m}\), \({{\mathbf {H}}}_{i,m}\), and scalars \(\lambda _{i,m}> 0\) such that, for any \((i,j \in {\mathcal {W}}^i)\) and \((m,n \in {\mathcal {V}}^m)\), (21) and the following LMIs hold:

where  , and the other parameters follow the same definitions in Theorem 1.


Proof

Consider the same Lyapunov–Krasovskii functional as in Theorem 1. Equation (25) can then be expressed as follows:

According to Eqs. (4) and (7), for arbitrary mode-dependent matrices \({{\mathbf {E}}}_{i,m}\) and \({{\mathbf {H}}}_{i,m}\), the following zero equalities hold:

In view of (26)–(38), (54), and (55), it follows that

where

Since \(\pi _{ii}^{\sigma _{t + {{\varDelta } t}}} = - \sum \nolimits _{j = 1,i \ne j}^w {{\pi _{ij}^{\sigma _{t + {{\varDelta } t}}}}}\) and \({\rho _{mm}} = - \sum \nolimits _{n = 1,m \ne n}^v {{\rho _{mn}}}\), the Schur complement shows that (41) holds if the LMIs (51)–(53) and (21) are satisfied. \(\square \)
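The role of the free matrices \({\mathbf E}_{i,m}\) and \({\mathbf H}_{i,m}\) can be seen from the zero row sums of the TR matrices. The following is a sketch of the standard step, written with the notation of \({\varvec\Xi}_{11}\) in Theorem 1; it is not a reproduction of (54)–(55):

\[
\begin{aligned}
\sum_{j\in\mathcal W}\pi_{ij}^{m}\mathbf P_{j,m}
&=\sum_{j\in\mathcal W^i_{k}}\pi_{ij}^{m}\left(\mathbf P_{j,m}-\mathbf E_{i,m}\right)
+\sum_{j\in\mathcal W^i_{uk}}\pi_{ij}^{m}\left(\mathbf P_{j,m}-\mathbf E_{i,m}\right),\\
\sum_{n\in\mathcal V}\rho_{mn}\mathbf P_{i,n}
&=\sum_{n\in\mathcal V^m_{k}}\rho_{mn}\left(\mathbf P_{i,n}-\mathbf H_{i,m}\right)
+\sum_{n\in\mathcal V^m_{uk}}\rho_{mn}\left(\mathbf P_{i,n}-\mathbf H_{i,m}\right).
\end{aligned}
\]

Both identities hold because \(\sum_{j}\pi^{m}_{ij}=0\) and \(\sum_{n}\rho_{mn}=0\), so subtracting \(\mathbf E_{i,m}\) or \(\mathbf H_{i,m}\) inside the sums adds zero. Only the known rates then appear explicitly. The terms with unknown rates can be bounded through additional matrix inequalities on \({\mathbf P}_{j,m}-{\mathbf E}_{i,m}\) and \({\mathbf P}_{i,n}-{\mathbf H}_{i,m}\), without any bound on the unknown rates themselves.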

In Theorem 4, a Markovian mode-dependent controller is designed for every node in the piecewise-homogeneous MJCDN (1) with PUTRs.

Theorem 4

For given scalars \(\alpha \), \({\bar{\tau }}\), \({\underline{\tau }}\), and \(\mu \), and based on the conditions of Theorem 3, the piecewise-homogeneous MJCDN (1) with PUTRs (\({{\mathcal {W}}^i_{k}} \subset {{\mathcal {W}}^i}\) and \({{{\mathcal {V}}^m_{k}} \subset {{\mathcal {V}}^m}}\)) is exponentially synchronized in the mean-square sense by the feedback controller (14) if there exist symmetric positive definite matrices \({{{\mathbf {P}}}_{i,m}} = {\text {diag}}\{ {{\mathbf {P}}}_{i,m}^1,{{\mathbf {P}}}_{i,m}^2,\ldots ,{{\mathbf {P}}}_{i,m}^N\}\), \({\mathbf {Q}}\), \({\mathbf {Z}}\), block-diagonal matrices \({{{\mathbf {X}}}_{i,m}} = {\text {diag}}\{ {{\mathbf {X}}}_{i,m}^1,{{\mathbf {X}}}_{i,m}^2,\ldots ,{{\mathbf {X}}}_{i,m}^N\}\), any matrices \( {\mathbf {S}}\), \({{\mathbf {E}}}_{i,m}\), \({{\mathbf {H}}}_{i,m}\), and scalars \(\lambda _{i,m}> 0\) for any \(i \in {\mathcal {W}}\), \(m \in {\mathcal {V}}\) such that (21), (52), (53), and the following LMI hold:

where

and the other parameters are defined as in Theorem 1 in Sect. 3. In addition, the controller gain matrices are given by:

Proof

Pre- and post-multiplying both sides of (51) by \({{{\mathbf {L}}}^T} = {\text {diag}}\{ {{\mathbf {I}}},{{\mathbf {I}}},{{\mathbf {I}}}, {{\mathbf {I}}},{{\mathbf {I}}}, {{{\mathbf {P}}}_{i,m}}{{\mathbf {Z}}^{ - 1}}\}\) and \({\mathbf {L}}\), respectively, and substituting \({{{\mathbf {X}}}_{i,m}} = {{{\mathbf {P}}}_{i,m}}{{{\mathbf {K}}}_{i,m}}\), yields

Similarly to the proof of Theorem 2 in Sect. 3, using (50), it can be shown that (57) implies (59). This completes the proof. \(\square \)

Remark 3.3

For a given example, Theorem 2 in Sect. 3 and Theorem 4 in Sect. 3.1 give the controller gain matrix \({{{\mathbf {K}}}_{i,m}} \in {{\mathbb {R}}^{n \times n}}\) for every mode \(i \in {\mathcal {W}}\) and \(m \in {\mathcal {V}}\). In practice, the current values of the stochastic variables \(r_t\) and \(\sigma _t\) must be known in order to select the proper gain matrix \({{\mathbf{K}}_k}({r_t},{\sigma _t})\), \(k=1,2,\ldots ,N\), for the controller (14). Thus, implementation requires a mode observer that identifies the current modes of the system.
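The remark above, together with the proofs of Theorems 2 and 4, suggests how the controller is used online. The gains follow from \({\mathbf X}_{i,m}={\mathbf P}_{i,m}{\mathbf K}_{i,m}\), i.e. \({\mathbf K}^b_{i,m}=({\mathbf P}^b_{i,m})^{-1}{\mathbf X}^b_{i,m}\) for each diagonal block. The block matching the currently observed modes is then applied to each node's error. The sketch below assumes that the controller (14) has the form \({\mathbf u}_b(t)={\mathbf K}_b(r_t,\sigma_t){\mathbf e}_b(t)\), consistent with the terms \({\mathbf P}_{i,m}{\mathbf K}_{i,m}+{\mathbf K}_{i,m}^T{\mathbf P}_{i,m}\) in \({\varvec\Xi}_{11}\). It also assumes that a mode observer supplies \(r_t\) and \(\sigma_t\). The function names and all numbers are hypothetical.

```python
def solve(P, X):
    """Solve P K = X for K by Gauss-Jordan elimination with partial pivoting."""
    n = len(P)
    A = [list(P[i]) + list(X[i]) for i in range(n)]   # augmented matrix [P | X]
    for c in range(n):
        p = max(range(c, n), key=lambda row: abs(A[row][c]))
        A[c], A[p] = A[p], A[c]
        piv = A[c][c]
        A[c] = [a / piv for a in A[c]]
        for r in range(n):
            if r != c:
                factor = A[r][c]
                A[r] = [a - factor * b for a, b in zip(A[r], A[c])]
    return [row[n:] for row in A]


def control_inputs(gains, r, sigma, errors):
    """u_b = K_b(r, sigma) e_b for every node b, given the observed modes."""
    return [[sum(k * e for k, e in zip(row, e_b)) for row in K_b]
            for K_b, e_b in zip(gains[(r, sigma)], errors)]


# Hypothetical LMI solution blocks for one node in mode (r, sigma) = (1, 2).
P_b = [[2.0, 0.0], [0.0, 4.0]]
X_b = [[-4.0, 0.0], [0.0, -8.0]]
K_b = solve(P_b, X_b)                 # K = P^{-1} X
gains = {(1, 2): [K_b]}               # a single node, for brevity
u = control_inputs(gains, 1, 2, [[1.0, -0.5]])
```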

Remark 3.4

Non-homogeneous Markovian jump models are more general than homogeneous ones and apply to a wider range of practical situations. In this paper, setting \({\mathcal {V}} = \{ 1\}\) reduces the problem to a homogeneous Markovian jump problem.

4 Numerical Example

This section presents an example that demonstrates the usefulness of the proposed results. Consider the piecewise-homogeneous MJCDN (1) with three coupled Chua’s circuits and \({\mathcal {W}} = \{ 1,2\}\) for the low-level signal \(\{r_t, t \geqslant 0\}\). The mode-dependent coefficient matrices for each \(i \in {{\mathcal {W}}}\) are given as in [60]:

and the nonlinear function vector is

where \(h({{\mathbf {x}}}_{b1}(t))=-0.5(m_1-m_0) (|{{\mathbf {x}}}_{b1}(t)+ 1|-|{{\mathbf {x}}}_{b1}(t)-1|)\), \(a=9\), \(m_0=\frac{-1}{7}\), and \(m_1=\frac{2}{7}\).
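As a quick sanity check on the nonlinearity, the sketch below samples the difference quotient of \(h\) with \(m_0=-1/7\) and \(m_1=2/7\). The function \(h\) is piecewise linear, with slope \(m_0-m_1=-3/7\) on \(|x|<1\) and slope zero elsewhere, so every quotient should lie in \([-3/7, 0]\). Sector matrices such as those in (7) encode this kind of slope information. The sampling grid is an arbitrary choice.

```python
from itertools import combinations

M0, M1 = -1.0 / 7.0, 2.0 / 7.0  # m_0 and m_1 from the example


def h(x: float) -> float:
    """Chua-type nonlinearity h(x) = -0.5 (m_1 - m_0)(|x + 1| - |x - 1|)."""
    return -0.5 * (M1 - M0) * (abs(x + 1.0) - abs(x - 1.0))


def slope_range(samples):
    """Min and max of (h(x) - h(y)) / (x - y) over distinct sample pairs."""
    quotients = [(h(x) - h(y)) / (x - y)
                 for x, y in combinations(samples, 2) if x != y]
    return min(quotients), max(quotients)


grid = [k / 10.0 for k in range(-50, 51)]  # x in [-5, 5], an arbitrary sampling choice
lower, upper = slope_range(grid)
# Expected analytically: lower ~ -3/7 (both points in |x| <= 1), upper ~ 0 (both beyond +-1).
```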

The following two matrices satisfy the sector-bounded condition (7):

The chaotic behavior of the isolated node (12) is shown in Fig. 1.

Fig. 1 The chaotic behavior (double-scroll attractor) of the isolated node (12)

Moreover, the outer and inner coupling matrices are, respectively, given as

We consider \({\mathcal {V}} = \{ 1,2,3\}\) for the high-level Markov process \(\{{\sigma _t},t \ge 0\}\). The piecewise-homogeneous transition rate matrices of the low-level Markov process \(\{r_t,t \ge 0\}\) for each mode in \({\mathcal {V}}\) are given as

Furthermore, the homogeneous transition rate matrix that governs switching among the three transition rate matrices above is as follows:
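The two-level switching mechanism can be simulated with competing exponential clocks. In mode pair \((r,\sigma)\), \(r_t\) leaves its state at a rate equal to minus the \(r\)-th diagonal entry of \({{\varPi }^{\sigma }}\), and \(\sigma_t\) leaves its state at a rate equal to minus the \(\sigma\)-th diagonal entry of \({\varLambda }\). The sketch assumes that both processes are continuous-time and that \(r_t\) uses the generator of the current high-level mode. The numerical matrices of the example are not reproduced here, so PI and LAM are hypothetical generators, and modes are 0-based.

```python
import random
from typing import List, Sequence, Tuple

RateMatrix = Sequence[Sequence[float]]


def _jump(row: Sequence[float], current: int, rng: random.Random) -> int:
    """Pick the next state with probability proportional to its off-diagonal rate."""
    weights = [0.0 if j == current else q for j, q in enumerate(row)]
    return rng.choices(range(len(row)), weights=weights)[0]


def simulate_two_level(Pi: Sequence[RateMatrix], Lam: RateMatrix,
                       r0: int, s0: int, t_end: float,
                       seed: int = 0) -> List[Tuple[float, int, int]]:
    """Simulate (r_t, sigma_t): sigma_t has generator Lam, r_t has generator Pi[sigma_t].

    Returns the initial point and every jump as (time, r, sigma), with 0-based modes.
    """
    rng = random.Random(seed)
    t, r, s = 0.0, r0, s0
    path = [(t, r, s)]
    while True:
        rate_r = -Pi[s][r][r]  # exit rate of the low-level chain under high-level mode s
        rate_s = -Lam[s][s]    # exit rate of the high-level chain
        total = rate_r + rate_s
        if total <= 0.0:       # both chains are absorbed: no further jumps
            break
        t += rng.expovariate(total)  # time until the first of the two clocks rings
        if t >= t_end:
            break
        if rng.random() * total < rate_r:  # the low-level clock rang first
            r = _jump(Pi[s][r], r, rng)
        else:                              # the high-level clock rang first
            s = _jump(Lam[s], s, rng)
        path.append((t, r, s))
    return path


# Hypothetical generators: two low-level modes, three high-level modes (rows sum to zero).
PI = [[[-0.8, 0.8], [0.5, -0.5]],
      [[-0.3, 0.3], [0.6, -0.6]],
      [[-1.0, 1.0], [0.2, -0.2]]]
LAM = [[-0.6, 0.4, 0.2],
       [0.3, -0.5, 0.2],
       [0.1, 0.4, -0.5]]
path = simulate_two_level(PI, LAM, r0=0, s0=0, t_end=50.0, seed=1)
```

The call simulate_two_level([PI[0]], [[0.0]], 0, 0, t_end) corresponds to \({\mathcal {V}} = \{ 1\}\). In that case \(\sigma_t\) never switches and \(r_t\) reduces to a homogeneous Markov process, as noted in Remark 3.4.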

The mode-dependent coupling delays are assumed to lie in the range \({{\tau }^{r(t),\sigma (t)}(t)}\in {[0.35, 0.95]}\). That is, the lower and upper bounds of the mode-dependent time-varying coupling delay are 0.35 and 0.95, respectively. The time-varying delays \({{\tau }^{r(t),\sigma (t)}(t)}\) for the different mode pairs of the two Markov processes \(\{r_t,t \ge 0\}\) and \(\{\sigma _t,t \ge 0\}\) are chosen as follows:

\({\tau ^{(1,1)}}(t)=0.9+0.05 \sin (2t)\), \({\tau ^{(1,2)}}(t)=0.6+0.05 \sin (10t)\), \({\tau ^{(1,3)}}(t)=0.5+0.05 \sin (10t)\), \({\tau ^{(2,1)}}(t)=0.4+0.05 \sin (10t)\), \({\tau ^{(2,2)}}(t)=0.9+0.05 \sin (4t)\), and \({\tau ^{(2,3)}}(t)=0.7+0.05 \sin (3t)\). Hence, the maximum absolute value of the derivatives of the mode-dependent time delays over all modes is \({\mu }=0.5\).
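The delay range and \(\mu\) follow directly from the chosen delays. For \(\tau(t)=c+a\sin(\omega t)\) with \(a>0\), \(\tau(t)\in[c-a,c+a]\) and \(|\dot\tau(t)|\le a\omega\). The short check below recomputes both quantities from the six delays listed above. The dictionary layout is only an illustrative choice.

```python
# (c, a, w) for tau^{(i,m)}(t) = c + a * sin(w * t), taken from the list above.
DELAYS = {
    (1, 1): (0.9, 0.05, 2.0),
    (1, 2): (0.6, 0.05, 10.0),
    (1, 3): (0.5, 0.05, 10.0),
    (2, 1): (0.4, 0.05, 10.0),
    (2, 2): (0.9, 0.05, 4.0),
    (2, 3): (0.7, 0.05, 3.0),
}


def delay_bounds(delays):
    """Return (lower bound, upper bound, mu) over all mode pairs.

    For a > 0, tau(t) = c + a sin(w t) ranges over [c - a, c + a]
    and its derivative a w cos(w t) satisfies |d tau / dt| <= a w.
    """
    lower = min(c - a for c, a, _ in delays.values())
    upper = max(c + a for c, a, _ in delays.values())
    mu = max(a * w for _, a, w in delays.values())
    return lower, upper, mu


lower, upper, mu = delay_bounds(DELAYS)
# lower ~ 0.35 (mode (2, 1)), upper ~ 0.95 (modes (1, 1) and (2, 2)), mu ~ 0.5 (w = 10)
```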

The initial state of the isolated node is assumed to be \({\mathbf {s}}(0)=[-0.2,-0.3,0.2]^T\), and the initial states of the network nodes are \({\mathbf {x}}_1(0)=[-3,6,0]^T\), \({\mathbf {x}}_2(0)=[4,2,0]^T\), and \({\mathbf {x}}_3(0)=[-7,4,0]^T\).

The two switching signals are shown in Figs. 2 and 3 for the piecewise-homogeneous process \(\{r_t,t \ge 0\}\) and the homogeneous process \(\{ {\sigma _t},t \ge 0\}\), respectively. Figure 4 shows the synchronization error vectors when no feedback control inputs are applied to the MJCDN. As this figure shows, without control inputs the coupled nodes \({\mathbf{x}}_b(t), b=1,2,3,\) in the MJCDN cannot synchronize to the isolated node \({\mathbf{s}}(t)\), and the error vectors do not converge to zero. Therefore, the LMIs in Theorem 2 are solved with \(\alpha =0.2\) using the MATLAB LMI Control Toolbox. This yields the following controller gain matrices for each mode \(i \in {\mathcal {W}}\), \(m \in {\mathcal {V}}\):

With the control law (14) applied, Fig. 5 shows that the synchronization errors \({\mathbf{e}}_{b}\), \(b=1,2,3,\) between the state vectors of the coupled nodes \({\mathbf{x}}_b(t)\) and the isolated node \({\mathbf{s}}(t)\) converge to zero. That is, the trajectories of the coupled nodes \({\mathbf{x}}_b(t),b=1,2,3,\) in the MJCDN synchronize to the trajectory of the isolated node \({\mathbf{s}}(t)\). Synchronization between the state vectors of the isolated node \({\mathbf{s}}(t)\) and the coupled nodes \({\mathbf{x}}_b(t),b=1,2,3,\) is shown in Fig. 6, and the corresponding control inputs are shown in Fig. 7.

Table 1 lists the maximum allowable upper bound of the mode-dependent delays for \(\mu =0.8\) and different values of the decay rate \(\alpha \).

4.1 Case 2

The transition rate matrices \({{\varPi }^{m}},m \in {\mathcal {V}}\) for the Markov process \(\{{r_t},t \ge 0\}\) are assumed to be partially unknown as follows:

Furthermore, the transition rate matrix \({{\varLambda } }\) of the Markov process \(\{{\sigma _t},t \ge 0\}\), which governs switching among the three transition rate matrices \({{\varPi }^{m}}\), is assumed to be partially unknown as follows:
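Partly unknown transition rates are usually handled by splitting each row of a TR matrix into known and unknown entries, in the spirit of the index sets \({{\mathcal {W}}^i_{k}}\) and \({{\mathcal {V}}^m_{k}}\) used in Theorem 4. The sketch below marks unknown entries with None. It then checks whether the known entries of a row can still be completed to a valid TR row, meaning one with nonnegative off-diagonal rates and a zero row sum. The helper names and example rows are hypothetical.

```python
from typing import Optional, Sequence, Set, Tuple

Row = Sequence[Optional[float]]  # None marks an unknown transition rate


def split_known_unknown(row: Row) -> Tuple[Set[int], Set[int], float]:
    """Return (known indices, unknown indices, sum of the known rates) for one TR row."""
    known = {j for j, q in enumerate(row) if q is not None}
    unknown = set(range(len(row))) - known
    known_sum = sum(row[j] for j in sorted(known))
    return known, unknown, known_sum


def is_consistent(row: Row, i: int, tol: float = 1e-12) -> bool:
    """Can row i still be completed to a valid TR row (off-diagonals >= 0, row sum 0)?"""
    known, unknown, known_sum = split_known_unknown(row)
    if any(row[j] < 0.0 for j in known if j != i):
        return False                  # known off-diagonal rates must be nonnegative
    if not unknown:
        return abs(known_sum) <= tol  # a fully known row must sum to zero
    if i in unknown:
        return True                   # an unknown diagonal can be set to minus the off-diagonal sum
    return known_sum <= tol           # unknown off-diagonal rates must add up to -known_sum >= 0


known, unknown, known_sum = split_known_unknown([-1.3, None, 0.4])
# known == {0, 2}, unknown == {1}, known_sum is about -0.9
```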

The mode-dependent coupling delays, \({\mu }\), and \(\alpha \) are the same as in Case 1. By applying Theorem 4, the following controller gain matrices are obtained for each mode \(i \in {\mathcal {W}}\), \(m \in {\mathcal {V}}\):

The initial states of the isolated node and the coupled nodes are also the same as in Case 1. Figure 8 shows the error vectors \({\mathbf{e}}_b(t), b=1,2,3,\) when no feedback control inputs are applied. In this case the trajectories of the coupled nodes in the MJCDN do not converge to the trajectory of the isolated node. Figure 9 shows the synchronization errors \({\mathbf{e}}_b(t), b=1,2,3,\) when the control inputs (14) are applied to each coupled node in the MJCDN with PUTRs. Figure 10 shows that the state trajectories of the coupled nodes converge to the state trajectory of the isolated node when properly designed gain matrices are applied to each coupled node. The control inputs applied to the coupled nodes are shown in Fig. 11.

5 Conclusion

The present study investigates the exponential stochastic synchronization of the piecewise-homogeneous MJCDN (1). In the proposed model, all coefficient matrices and the time-varying coupling delay are mode-dependent. The piecewise-homogeneous Markovian structure can represent abrupt changes in the transition rates, which reduces the conservatism of traditional Markovian jump systems. Treating the coefficient matrices and the time delay as mode-dependent inevitably increases the degrees of freedom of the synchronization problem, but it yields a more realistic model for applications. Delay-dependent sufficient criteria are first derived to guarantee the synchronization of the piecewise-homogeneous MJCDN. Mode-dependent synchronization controllers are then designed in terms of LMIs based on these criteria. A numerical example with two cases demonstrates the effectiveness and usefulness of the proposed method. In future work, the authors plan to consider the Wirtinger-based integral inequality approach, the improved reciprocally convex technique, and semi-Markovian jump systems.

References

N. Akbari, A. Sadr, A. Kazemy, Exponential synchronization of a Markovian jump complex dynamic network with piecewise-constant transition rates and distributed delay. Trans. Inst. Meas. Control. 41(9), 2535–2544 (2019)

A. Arenas, A. Díaz-Guilera, J. Kurths, Y. Moreno, C. Zhou, Synchronization in complex networks. Phys. Rep. 469(3), 93–153 (2008)

T. Botmart, W. Weera, Guaranteed cost control for exponential synchronization of cellular neural networks with mixed time-varying delays via hybrid feedback control. Abstr. Appl. Anal. 2013, 1–12 (2013)

C.G. Cassandras, J. Lygeros, Stochastic hybrid systems: research issues and areas, in Stochastic Hybrid Systems (CRC Press, 2006), pp. 11–24

J.F. Chang, T.L. Liao, J.J. Yan, H.C. Chen, Implementation of synchronized chaotic Lü systems and its application in secure communication using PSO-based PI controller. Circuits Syst. Signal Process. 29(3), 527–538 (2010)

J.L. Chen, C.H. Huang, Y.C. Du, C.H. Lin, Combining fractional-order edge detection and chaos synchronisation classifier for fingerprint identification. IET Image Process. 8(6), 354–362 (2014)

Y. Chen, L. Yang, A. Xue, Finite-time passivity of stochastic Markov jump neural networks with random distributed delays and sensor nonlinearities. Circuits Syst. Signal Process. 38(6), 2422–2444 (2019)

C.J. Cheng, T.L. Liao, C.C. Hwang, Exponential synchronization of a class of chaotic neural networks. Chaos, Solitons Fractals 24(1), 197–206 (2005)

F. Dorfler, F. Bullo, Synchronization and transient stability in power networks and nonuniform Kuramoto oscillators. SIAM J. Control Optim. 50(3), 1616–1642 (2012)

M. Faraji-Niri, M.R. Jahed-Motlagh, M. Barkhordari-Yazdi, Stabilization of active fault-tolerant control systems by uncertain nonhomogeneous Markovian jump models. Complexity 21(S1), 318–329 (2016)

M. Faraji-Niri, M.R. Jahed-Motlagh, M. Barkhordari-Yazdi, Stochastic stability and stabilization of a class of piecewise-homogeneous Markov jump linear systems with mixed uncertainties. Int. J. Robust Nonlinear Control 27(6), 894–914 (2017)

G. Guo, Linear systems with medium-access constraint and Markov actuator assignment. IEEE Trans. Circuits Syst. I Regul. Pap. 57(11), 2999–3010 (2010)

K. Gu, J. Chen, V.L. Kharitonov, Stability of Time-Delay Systems (Springer, Boston, 2003)

E. Gyurkovics, K. Kiss, A. Kazemy, Non-fragile exponential synchronization of delayed complex dynamical networks with transmission delay via sampled-data control. J. Franklin Inst. 355(17), 8934–8956 (2018)

S. He, F. Liu, Robust stabilization of stochastic Markovian jumping systems via proportional-integral control. Signal Process. 91(11), 2478–2486 (2011)

C. Huang, D.W. Ho, J. Lu, Partial-information-based distributed filtering in two-targets tracking sensor networks. IEEE Trans. Circuits Syst. I Regul. Pap. 59(4), 820–832 (2012)

Y. Ji, X. Liu, Unified synchronization criteria for hybrid switching-impulsive dynamical networks. Circuits Syst. Signal Process. 34(5), 1499–1517 (2015)

Z. Jia, X. Fu, G. Deng, K. Li, Group synchronization in complex dynamical networks with different types of oscillators and adaptive coupling schemes. Commun. Nonlinear Sci. Numer. Simul. 18(10), 2752–2760 (2013)

B. Kaviarasan, R. Sakthivel, Y. Lim, Synchronization of complex dynamical networks with uncertain inner coupling and successive delays based on passivity theory. Neurocomputing 186, 127–138 (2016)

A. Kazemy, Global synchronization of neural networks with hybrid coupling: a delay interval segmentation approach. Neural Comput. Appl. 30(2), 627–637 (2018)

A. Kazemy, J. Cao, Consecutive synchronization of a delayed complex dynamical network via distributed adaptive control approach. Int. J. Control Autom. 16(6), 2656–2664 (2018)

A. Kazemy, M. Farrokhi, Delay-dependent robust absolute stability criteria for uncertain multiple time-delayed Lur’e systems. Proc. Inst. Mech. Eng. Pt. I J. Syst. Contr. Eng. 227(3), 286–297 (2013)

A. Kazemy, É. Gyurkovics, Sliding mode synchronization of a delayed complex dynamical network in the presence of uncertainties and external disturbances. Trans. Inst. Meas. Control. 41(9), 2623–2636 (2019)

S.H. Lee, M.J. Park, O.M. Kwon, R. Sakthivel, Advanced sampled-data synchronization control for complex dynamical networks with coupling time-varying delays. Inf. Sci. 420, 454–465 (2017)

H. Li, D. Yue, Synchronization of Markovian jumping stochastic complex networks with distributed time delays and probabilistic interval discrete time-varying delays. J. Phys. A Math. Theor. 43(10), 105101 (2010)

X. Li, D. Bi, X. Xie, Y. Xie, Multi-synchronization of stochastic coupled multi-stable neural networks with time-varying delay by impulsive control. IEEE Access 7, 15641–15653 (2019)

Z. Li, G. Chen, Global synchronization and asymptotic stability of complex dynamical networks. IEEE Trans. Circuits Syst. II Express Briefs 53(1), 28–33 (2006)

Z.X. Li, J.H. Park, Z.G. Wu, Synchronization of complex networks with nonhomogeneous Markov jump topology. Nonlinear Dyn. 74(1–2), 65–75 (2013)

T. Liu, J. Zhao, D.J. Hill, Exponential synchronization of complex delayed dynamical networks with switching topology. IEEE Trans. Circuits Syst. I Regul. Pap. 57(11), 2967–2980 (2010)

M. Long, H. Su, B. Liu, Group controllability of two-time-scale multi-agent networks. J. Franklin Inst. 355(13), 6045–6061 (2018)

M. Long, H. Su, B. Liu, Second-order controllability of two-time-scale multi-agent systems. Appl. Math. Comput. 343, 299–313 (2019)

Q. Ma, S. Xu, Y. Zou, Stability and synchronization for Markovian jump neural networks with partly unknown transition probabilities. Neurocomputing 74(17), 3404–3411 (2011)

J. Mei, M. Jiang, W. Xu, B. Wang, Finite-time synchronization control of complex dynamical networks with time delay. Commun. Nonlinear Sci. Numer. Simul. 18(9), 2462–2478 (2013)

G.A. Pagani, M. Aiello, The power grid as a complex network: a survey. Phys. A Stat. Mech. Appl. 392(11), 2688–2700 (2013)

P. Park, J.W. Ko, C. Jeong, Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47(1), 235–238 (2011)

R. Rakkiyappan, V.P. Latha, K. Sivaranjani, Exponential \(H_{\infty }\) synchronization of Lur’e complex dynamical networks using pinning sampled-data control. Circuits Syst. Signal Process. 36(10), 3958–3982 (2017)

R. Rakkiyappan, N. Sakthivel, Stochastic sampled-data control for exponential synchronization of Markovian jumping complex dynamical networks with mode-dependent time-varying coupling delay. Circuits Syst. Signal Process. 34(1), 153–183 (2015)

P. Selvaraj, R. Sakthivel, C.K. Ahn, Observer-based synchronization of complex dynamical networks under actuator saturation and probabilistic faults. IEEE Trans. Syst. Man Cybern. Syst. 49(7), 1516–1526 (2018)

J.W. Shuai, D.M. Durand, Phase synchronization in two coupled chaotic neurons. Phys. Lett. A 264(4), 289–297 (1999)

S.H. Strogatz, Exploring complex networks. Nature 410(6825), 268 (2001)

G. Wang, Q. Yin, Y. Shen, F. Jiang, \(H_\infty \) synchronization of directed complex dynamical networks with mixed time-delays and switching structures. Circuits Syst. Signal Process. 32(4), 1575–1593 (2013)

J.A. Wang, C. Zeng, X. Wen, Synchronization stability and control for neutral complex dynamical network with interval time-varying coupling delay. Circuits Syst. Signal Process. 36(2), 559–576 (2017)

X. Wang, J.A. Fang, A. Dai, W. Cui, G. He, Mean square exponential synchronization for a class of Markovian switching complex networks under feedback control and M-matrix approach. Neurocomputing 144, 357–366 (2014)

X.F. Wang, Complex networks: topology, dynamics and synchronization. Int. J. Bifurc. Chaos 12(5), 885–916 (2002)

Y.W. Wang, Y.W. Wei, X.K. Liu, N. Zhou, C.G. Cassandras, Optimal persistent monitoring using second-order agents with physical constraints. IEEE Trans. Automat. Contr. 64(8), 3239–3252 (2018)

Z. Wang, Y. Liu, X. Liu, \(H_{\infty }\) filtering for uncertain stochastic time-delay systems with sector-bounded nonlinearities. Automatica 44(5), 1268–1277 (2008)

Q. Wei, X.Y. Wang, X.P. Hu, Chaos synchronization in complex oscillators networks with time delay via adaptive complex feedback control. Circuits Syst. Signal Process. 33(8), 2427–2447 (2014)

J. Xiao, Z. Zeng, Robust exponential stabilization of uncertain complex switched networks with time-varying delays. Circuits Syst. Signal Process. 33(4), 1135–1151 (2014)

T. Yang, L.O. Chua, Impulsive stabilization for control and synchronization of chaotic systems: theory and application to secure communication. IEEE Trans. Circuits Syst. I Fundam. Theory Appl. 44(10), 976–988 (1997)

X. Yang, J. Lu, Finite-time synchronization of coupled networks with Markovian topology and impulsive effects. IEEE Trans. Automat. Contr. 61(8), 2256–2261 (2015)

J.W. Yi, Y.W. Wang, J.W. Xiao, Y. Huang, Exponential synchronization of complex dynamical networks with Markovian jump parameters and stochastic delays and its application to multi-agent systems. Commun. Nonlinear Sci. Numer. Simul. 18(5), 1175–1192 (2013)

Y. Yin, P. Shi, F. Liu, K.L. Teo, C.C. Lim, Robust filtering for nonlinear nonhomogeneous Markov jump systems by fuzzy approximation approach. IEEE Trans. Cybern. 45(9), 1706–1716 (2014)

D. Zhang, S.K. Nguang, L. Yu, Distributed control of large-scale networked control systems with communication constraints and topology switching. IEEE Trans. Syst. Man Cybern. Syst. 47(7), 1746–1757 (2017)

L. Zhang, Y. Wang, Y. Huang, X. Chen, Delay-dependent synchronization for non-diffusively coupled time-varying complex dynamical networks. Appl. Math. Comput. 259, 510–522 (2015)

W. Zhang, J.A. Fang, Q. Miao, L. Chen, W. Zhu, Synchronization of Markovian jump genetic oscillators with nonidentical feedback delay. Neurocomputing 101, 347–353 (2013)

W. Zhang, C. Li, T. Huang, J. Qi, Global stability and synchronization of Markovian switching neural networks with stochastic perturbation and impulsive delay. Circuits Syst. Signal Process. 34(8), 2457–2474 (2015)

J. Zhao, D.J. Hill, T. Liu, Synchronization of complex dynamical networks with switching topology: a switched system point of view. Automatica 45(11), 2502–2511 (2009)

Y.P. Zhao, P. He, H. Saberi Nik, J. Ren, Robust adaptive synchronization of uncertain complex networks with multiple time-varying coupled delays. Complexity 20(6), 62–73 (2015)

J. Zhou, H. Dong, J. Feng, Event-triggered communication for synchronization of Markovian jump delayed complex networks with partially unknown transition rates. Appl. Math. Comput. 293, 617–629 (2017)

W. Zhou, T. Wang, Q.C. Zhong, J.A. Fang, Proportional-delay adaptive control for global synchronization of complex networks with time-delay and switching outer-coupling matrices. Int. J. Robust Nonlinear Control 23(5), 548–561 (2013)


About this article

Cite this article

Akbari, N., Sadr, A. & Kazemy, A. Exponential Synchronization of Markovian Jump Complex Dynamical Networks with Uncertain Transition Rates and Mode-Dependent Coupling Delay. Circuits Syst Signal Process 39, 3875–3906 (2020). https://doi.org/10.1007/s00034-020-01346-5


DOI: https://doi.org/10.1007/s00034-020-01346-5