Abstract

To alleviate the effect of additive noise and reduce the computational burden, we propose a computationally efficient cross-correlation-based two-dimensional (2-D) frequency estimation method for multiple real-valued sinusoidal signals. The frequencies in each dimension are estimated independently with a one-dimensional (1-D) subspace-based estimation technique without eigendecomposition, where the null spaces are obtained through a linear operation on matrices formed from the cross-correlation matrix between the received data. The estimated frequencies in the two dimensions are automatically paired. Simulation results show that the proposed method offers competitive performance compared with existing approaches at a lower computational complexity. The proposed method is also shown to perform well at low signal-to-noise ratio (SNR) and with a small number of snapshots.

1 Introduction

In this paper, we consider the problem of estimating the frequencies of multiple two-dimensional (2-D) real-valued sinusoids in the presence of additive white Gaussian noise. This problem is a more specific case of estimating the parameters of a 2-D regular and homogeneous random field from a single observed realization, as in [1]. Real-valued 2-D sinusoidal signal models are also known as X-texture modes. These modes arise naturally in experimental, analytical, modal, and vibrational analysis of circular objects. X-texture modes are often used for modelling the displacements in the cross-sectional planes of isotropic, homogeneous, thick-walled cylinders [2–4], laminated composite cylindrical shells [5], and circular plates [6]. They have also been used to describe the radial displacements of logs of spruce subjected to continuous sinusoidal excitation [7] and of standing trunks of spruce subjected to impact excitation [8–10]. This signal model poses considerable challenges for 2-D joint frequency estimation algorithms. Many algorithms for estimating complex-valued frequencies are well documented in the literature [11, 12], as are algorithms for 1-D real-valued frequencies [13–15]. A detailed discussion of the problem of analyzing 2-D homogeneous random fields with discontinuous spectral distribution functions can be found in [16]. Parameter estimation techniques for sinusoidal signals in additive white noise include the periodogram-based approximation (applicable to widely spaced sinusoids) to the maximum-likelihood (ML) solution [17–19], the Pisarenko harmonic decomposition [20], and the singular value decomposition [21]. A matrix enhancement and matrix pencil method for estimating the parameters of 2-D superimposed complex-valued exponential signals was suggested in [11].
In [22], the concept of partial forward–backward averaging is proposed as a means of enhancing the frequency and damping estimation of 2-D multiple real-valued sinusoids (X-texture modes), where each mode is considered as a mechanism for forcing the two plane waves towards mirrored directions of arrival. In [23], a 2-D parameter estimation method for a single damped/undamped real/complex tone is proposed, referred to as principal-singular-vector utilization for modal analysis (PUMA).

We present a new approach to the 2-D real-valued sinusoidal frequency estimation problem, based on a cross-correlation technique, that can resolve identical frequencies. The proposed idea is based on the computationally efficient subspace-based method without eigendecomposition (SUMWE) [24, 25]. The paper is organized as follows. The signal model, together with a definition of the addressed problem, is presented in Sect. 2. The basic definitions and the proposed technique are detailed in Sect. 3, followed by simulation results and the conclusion in Sects. 4 and 5, respectively. Throughout this paper, uppercase bold letters denote matrices, whereas lowercase bold letters denote vectors. The superscript T denotes transposition of a matrix.

2 Data Model and Problem Definition

Consider the following set of noisy data:

\[ y(m,n) = x(m,n) + e(m,n), \tag{1} \]

where 0 ≤ m ≤ N1−1 and 0 ≤ n ≤ N2−1. The model of the noiseless data x(m,n) is described by

\[ x(m,n) = \sum_{k=1}^{D} a_k \cos(\omega_k m + \alpha_k)\cos(v_k n + \beta_k). \tag{2} \]

The signal x(m,n) consists of D two-dimensional real-valued sinusoids described by the normalized 2-D frequencies {ωk, vk} (k = 1,2,…,D), the real amplitudes {ak} (k = 1,2,…,D), and the phases αk and βk, which are independent random variables uniformly distributed over [0,2π]. e(m,n) is zero-mean additive white Gaussian noise with variance σ². It is further assumed that αk and βk are independent of e(m,n).
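As a minimal sketch of this data model, the snippet below generates one realization of the noisy field. The function name `simulate_field`, its arguments, and the use of a seeded generator are illustrative assumptions rather than part of the paper; the frequencies are the ones used later in Sect. 4.1.

```python
import math
import random

def simulate_field(n1, n2, omegas, vs, amps, sigma, seed=0):
    """Return the noisy field as n1 rows of n2 samples (hypothetical helper)."""
    rng = random.Random(seed)
    # One phase pair (alpha_k, beta_k) per sinusoid, uniform over [0, 2*pi].
    phases = [(rng.uniform(0.0, 2.0 * math.pi), rng.uniform(0.0, 2.0 * math.pi))
              for _ in omegas]
    field = []
    for m in range(n1):
        row = []
        for n in range(n2):
            # Noiseless part: sum of products of cosines in the two dimensions.
            x = sum(a * math.cos(w * m + al) * math.cos(v * n + be)
                    for w, v, a, (al, be) in zip(omegas, vs, amps, phases))
            # Additive white Gaussian noise with standard deviation sigma.
            row.append(x + rng.gauss(0.0, sigma))
        field.append(row)
    return field

y = simulate_field(50, 50,
                   [0.1 * math.pi, 0.13 * math.pi],
                   [0.13 * math.pi, 0.16 * math.pi],
                   [1.0, 1.0], sigma=0.1)
print(len(y), len(y[0]))
```

Setting `sigma=0.0` returns the noiseless field, which is convenient for checking the algebra of the later sections.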

Let us define two M × 1 snapshot vectors with assumption M > D, described as follows

where,

From the above set of equations, we obtain a pair of expressions for the two M × 1 snapshot vectors by substituting (4a, b) in (3a) and (4c, d) in (3b):

\[ \mathbf{y}_{\omega}(m,n) = \mathbf{A}(\omega)\,\mathbf{s}(m,n) + \mathbf{g}(m,n), \tag{5} \]

\[ \mathbf{y}_{v}(m,n) = \mathbf{A}(v)\,\mathbf{s}(m,n) + \mathbf{h}(m,n), \tag{6} \]

where A(ω) = [γ(ω1) … γ(ωD)] and A(v) = [ρ(v1) … ρ(vD)] are M × D matrices, and s(m,n) = [a1cos(ω1m + α1)cos(v1n + β1) … aDcos(ωDm + αD)cos(vDn + βD)]T is the D × 1 signal vector. γ(ωi) and ρ(vi) are M × 1 vectors defined respectively as γ(ωi) = [1 cos(ωi) … cos((M−1)ωi)]T and ρ(vi) = [1 cos(vi) … cos((M−1)vi)]T. The modified M × 1 error vectors g(m,n) and h(m,n) are defined respectively as \( \mathbf{g}(m,n) \triangleq [g_1(m,n)\; g_2(m,n) \ldots g_M(m,n)]^T \) and \( \mathbf{h}(m,n) \triangleq [h_1(m,n)\; h_2(m,n) \ldots h_M(m,n)]^T \), where \( g_j(m,n) = \tfrac{1}{2}[e(m+j-1,n) + e(m-j+1,n)] \) and \( h_j(m,n) = \tfrac{1}{2}[e(m,n+j-1) + e(m,n-j+1)] \). The matrices A(ω) and A(v) have full column rank because their columns are linearly independent.
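The cosine structure of γ(ωi) can be checked with the product-to-sum identity. As a brief worked step, assume (consistently with the definition of gj(m,n)) that the j-th entry of the first snapshot vector is the symmetric average of two samples taken j−1 steps on either side of m. For the k-th sinusoid this gives

\[ \tfrac{1}{2}\left[\cos\big(\omega_k (m+j-1) + \alpha_k\big) + \cos\big(\omega_k (m-j+1) + \alpha_k\big)\right] = \cos(\omega_k m + \alpha_k)\,\cos\big((j-1)\omega_k\big), \]

so, after multiplying by \( a_k \cos(v_k n + \beta_k) \), the j-th entry equals \( \cos((j-1)\omega_k) \) times the k-th entry of s(m,n). Stacking j = 1,…,M yields the column γ(ωk), and the same argument along n yields ρ(vk).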

2.1 Data Model Modification

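We first obtain two new data models as follows,

\[ \mathbf{z}_{\omega}(m,n) = \mathbf{A}(\omega)\,\boldsymbol{\Omega}_{\omega}\,\mathbf{s}(m,n) + \mathbf{q}_{r}(m,n), \tag{7} \]

\[ \mathbf{z}_{v}(m,n) = \mathbf{A}(v)\,\boldsymbol{\Omega}_{v}\,\mathbf{s}(m,n) + \mathbf{q}_{e}(m,n), \tag{8} \]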

by applying the following mathematical operations,

where

where zi(m,n) for i = 1,2,…,8 are M × 1 observation vectors. Ωω and Ωv are two D × D diagonal matrices defined respectively as Ωω = diag{cos ω1 … cos ωD} and Ωv = diag{cos v1 … cos vD}. The two M × 1 modified noise vectors qr(m,n) and qe(m,n) are defined respectively as qr(m,n) = [qr1(m,n) … qrM(m,n)]T and qe(m,n) = [qe1(m,n) qe2(m,n) … qeM(m,n)]T, where \( q_{ri}(m,n) = \tfrac{1}{4}[e(m-i+2,n) + e(m+i-2,n) + e(m+i,n) + e(m-i,n)] \) and \( q_{ei}(m,n) = \tfrac{1}{4}[e(m,n-i+2) + e(m,n+i-2) + e(m,n+i) + e(m,n-i)] \) for i = 1,2,…,M.
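The extra factor cos ωk carried by Ωω can be checked numerically. The sketch below assumes that the i-th entry of the first modified snapshot vector combines the same four shifted samples that appear in qri(m,n); the single sinusoid, the fixed phases, and the helper names are illustrative choices, not taken from the paper.

```python
import math

omega, v = 0.1 * math.pi, 0.13 * math.pi   # one sinusoid, values from Sect. 4.1
alpha, beta = 0.7, 1.9                     # arbitrary fixed phases (illustrative)

def x(m, n):
    """Noiseless unit-amplitude 2-D sinusoid."""
    return math.cos(omega * m + alpha) * math.cos(v * n + beta)

def z_entry(m, n, i):
    """Four-sample average with the same shifts as q_ri(m,n)."""
    return 0.25 * (x(m - i + 2, n) + x(m + i - 2, n) + x(m + i, n) + x(m - i, n))

def model_entry(m, n, i):
    """i-th row of gamma(omega), times cos(omega), times the signal sample."""
    return math.cos((i - 1) * omega) * math.cos(omega) * x(m, n)

M, m, n = 20, 25, 3
max_gap = max(abs(z_entry(m, n, i) - model_entry(m, n, i)) for i in range(1, M + 1))
print(max_gap)
```

The gap is at the level of floating-point rounding, which is what the diagonal factor Ωω in the modified model predicts for the noiseless case.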

2.2 Further Modification of Data Model

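As in Sect. 2.1, we deduce another set of modified data models described by

\[ \mathbf{p}_{\omega}(m,n) = \mathbf{J}\mathbf{A}(\omega)\,\boldsymbol{\Omega}_{\omega}\,\mathbf{s}(m,n) + \mathbf{q}_{w}(m,n), \tag{12} \]

\[ \mathbf{p}_{v}(m,n) = \mathbf{J}\mathbf{A}(v)\,\boldsymbol{\Omega}_{v}\,\mathbf{s}(m,n) + \mathbf{q}_{u}(m,n). \tag{13} \]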

The above two data models are obtained by applying mathematical operations similar to those of (9a, b), that is,

where

where pi(m,n) for i = 1,2,…,8 are M × 1 observation vectors and J is the M × M counter-identity (exchange) matrix, which has ones on the principal anti-diagonal and zeros elsewhere. The two M × 1 modified noise vectors qw(m,n) and qu(m,n) are defined respectively as qw(m,n) = Jqr(m,n) and qu(m,n) = Jqe(m,n).
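To make the role of J concrete, the short sketch below builds a small counter-identity matrix and applies it to a vector. The helper names and the sample values are illustrative assumptions; the point is only that multiplying by J reverses the order of the entries, which is what "backward" means for these data models.

```python
def exchange_matrix(size):
    """size x size matrix with ones on the anti-diagonal and zeros elsewhere."""
    return [[1 if r + c == size - 1 else 0 for c in range(size)] for r in range(size)]

def matvec(mat, vec):
    """Plain matrix-vector product on nested lists."""
    return [sum(a * b for a, b in zip(row, vec)) for row in mat]

J = exchange_matrix(4)
q_r = [0.1, 0.2, 0.3, 0.4]   # made-up noise vector for illustration
q_w = matvec(J, q_r)         # reversed copy of q_r
print(q_w)
```

Applying J twice restores the original vector, since J is its own inverse.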

3 Proposed Algorithm

In this section, we present the algorithm for 2-D frequency estimation for multiple real-valued sinusoidal signals.

3.1 Estimation of First Dimension Frequencies

Under the assumptions of the data model, from (5) and (7) we obtain the cross-correlation matrix Ryz1 between the received data yω(m,n) and zω(m,n) as

where Rss is the source signal correlation matrix defined by \( \mathbf{R}_{ss} \triangleq E\{\mathbf{s}(m,n)\,\mathbf{s}^{T}(m,n)\} \). From (12), we have another data model, pω(m,n), in the backward direction such that pω(m,n) = Jzω(m,n). Similarly, from (5) and (12) we can obtain another cross-correlation matrix between the two received data,

In the noise-free case Ryp1 = JRyz1, but in practice, when the signal is corrupted by noise, the relation holds only approximately, that is, \( \mathbf{R}_{yp1} \cong \mathbf{J}\mathbf{R}_{yz1} \). Under these assumptions, we form an extended cross-correlation matrix of size M × 2M as

Since A(ω) has full column rank, we can partition it into two submatrices as A(ω) = [(A1(ω))T (A2(ω))T]T, where A1(ω) and A2(ω) are the D × D and (M−D) × D submatrices consisting of the first D rows and the last M−D rows of A(ω), respectively. There exists a D × (M−D) linear operator P1 between A1(ω) and A2(ω) [26] such that \( \mathbf{A}_2(\omega) = \mathbf{P}_1^{T}\mathbf{A}_1(\omega) \). Using these assumptions, we can partition (20) into the following two matrices:

\[ \mathbf{R}_{\omega} = \left[\mathbf{R}_{\omega 1}^{T} \;\; \mathbf{R}_{\omega 2}^{T}\right]^{T}, \tag{21} \]

where Rω1 and Rω2 consist of the first D rows and the last M−D rows of the matrix Rω, and \( \mathbf{R}_{\omega 2} = \mathbf{P}_1^{T}\mathbf{R}_{\omega 1} \). Hence, the linear operator P1 can be found from Rω1 and Rω2 as in [26]. A least-squares solution [27] for the entries of the propagator matrix P1 satisfying \( \mathbf{R}_{\omega 2} = \mathbf{P}_1^{T}\mathbf{R}_{\omega 1} \) is obtained by minimizing the cost function

\[ \xi(\mathbf{P}_1) = \left\| \mathbf{R}_{\omega 2} - \mathbf{P}_1^{T}\mathbf{R}_{\omega 1} \right\|_{F}^{2}, \tag{22} \]

where \( \|\cdot\|_{F}^{2} \) denotes the squared Frobenius norm. The cost function ξ(P1) is a quadratic (convex) function of P1, and its minimization gives the unique least-squares solution for P1,

\[ \mathbf{P}_1 = \left(\mathbf{R}_{\omega 1}\mathbf{R}_{\omega 1}^{T}\right)^{-1}\mathbf{R}_{\omega 1}\mathbf{R}_{\omega 2}^{T}. \tag{23} \]

Further, define the matrix \( \mathbf{Q}_{\omega} = [\mathbf{P}_{1}^{T} \;\; -\mathbf{I}_{M-D}]^{T} \), so that \( \mathbf{Q}_{\omega}^{T}\mathbf{A}(\omega) = \mathbf{0}_{(M-D) \times D} \). This relation can be used to estimate the real-valued harmonic frequencies of the first dimension {ωk}, k = 1,2,…,D, as in [25]. When the number of snapshots is finite, the first-dimension frequencies are estimated by minimizing the cost function \( \hat{f}(\omega) = \mathbf{a}^{T}(\omega)\,\hat{\mathbf{E}}\,\mathbf{a}(\omega) \), where \( \mathbf{a}(\omega) = [1 \;\; \cos\omega \;\ldots\; \cos((M-1)\omega)]^{T} \) and \( \hat{\mathbf{E}} \triangleq \hat{\mathbf{Q}}_{\omega}(\hat{\mathbf{Q}}_{\omega}^{T}\hat{\mathbf{Q}}_{\omega})^{-1}\hat{\mathbf{Q}}_{\omega}^{T} \). The orthonormalization of \( \hat{\mathbf{Q}}_{\omega} \) built into \( \hat{\mathbf{E}} \) is used to improve the estimation performance, and \( \hat{\mathbf{E}} \) is calculated implicitly using the matrix inversion lemma as in [24]. Here \( \hat{\mathbf{E}} \) and \( \hat{\mathbf{Q}}_{\omega} \) are the estimates of E and Qω.

Steps for estimating ωk:

- Calculate the estimate \( \hat{\mathbf{R}}_{\omega} \) of the cross-correlation matrix Rω using (20).
- Partition \( \hat{\mathbf{R}}_{\omega} \) and determine \( \hat{\mathbf{R}}_{\omega 1} \) and \( \hat{\mathbf{R}}_{\omega 2} \).
- Determine the estimate of the propagator matrix P1 using (23).
- Define \( \hat{\mathbf{Q}}_{\omega} = [\hat{\mathbf{P}}_{1}^{T} \;\; -\mathbf{I}_{M-D}]^{T} \) and, from \( \hat{\mathbf{Q}}_{\omega} \), compute \( \hat{\mathbf{E}} \triangleq \hat{\mathbf{Q}}_{\omega}(\hat{\mathbf{Q}}_{\omega}^{T}\hat{\mathbf{Q}}_{\omega})^{-1}\hat{\mathbf{Q}}_{\omega}^{T} \) using the matrix inversion lemma.
- Estimate the first-dimension frequencies {ωk}, k = 1,2,…,D, by minimizing the cost function \( \hat{f}(\omega) = \mathbf{a}^{T}(\omega)\,\hat{\mathbf{E}}\,\mathbf{a}(\omega) \).
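The steps above can be sketched in NumPy under several simplifying assumptions that are not taken from the paper: only the M × M cross-correlation between the two symmetric-average snapshot vectors is used (not the extended M × 2M matrix), the snapshot entries are inferred from the definitions of gj(m,n) and qri(m,n), the projector is formed by a direct linear solve rather than the matrix inversion lemma, and the minimization is a plain grid search. All function names are hypothetical, and pairing of the two dimensions is not addressed.

```python
import numpy as np

def simulate_field(n1, n2, omegas, vs, sigma, rng):
    """Unit-amplitude 2-D real sinusoids with random phases plus white noise."""
    m = np.arange(n1)[:, None]
    n = np.arange(n2)[None, :]
    y = sigma * rng.standard_normal((n1, n2))
    for w, v in zip(omegas, vs):
        alpha, beta = rng.uniform(0.0, 2.0 * np.pi, size=2)
        y = y + np.cos(w * m + alpha) * np.cos(v * n + beta)
    return y

def cross_correlation(y, M):
    """Sample cross-correlation of the two snapshot vectors along axis 0."""
    n1, n2 = y.shape
    j = np.arange(M)                       # plays the role of j-1 (or i-1) in the text
    R = np.zeros((M, M))
    count = 0
    for m in range(M, n1 - M):
        yw = 0.5 * (y[m + j] + y[m - j])   # M x n2, one snapshot per column
        zw = 0.25 * (y[m - j + 1] + y[m + j - 1] + y[m + j + 1] + y[m - j - 1])
        R += yw @ zw.T
        count += n2
    return R / count

def estimate_frequencies(y, M, D, grid_size=2001):
    """Propagator-style estimate of the D frequencies along axis 0 of y."""
    R = cross_correlation(y, M)
    R1, R2 = R[:D], R[D:]                          # first D rows, last M-D rows
    P = np.linalg.solve(R1 @ R1.T, R1 @ R2.T)      # least-squares propagator, D x (M-D)
    Q = np.vstack([P, -np.eye(M - D)])             # M x (M-D), Q^T A is approximately 0
    E = Q @ np.linalg.solve(Q.T @ Q, Q.T)          # orthogonal projector onto span(Q)
    grid = np.linspace(0.0, np.pi, grid_size)
    A = np.cos(np.outer(np.arange(M), grid))       # columns are a(omega) on the grid
    cost = np.einsum("ij,ij->j", A, E @ A)         # a^T E a for every grid point
    inner = np.arange(1, grid_size - 1)
    is_min = (cost[inner] < cost[inner - 1]) & (cost[inner] < cost[inner + 1])
    candidates = inner[is_min]
    best = candidates[np.argsort(cost[candidates])[:D]]   # D deepest local minima
    return np.sort(grid[best])

rng = np.random.default_rng(0)
true_w = np.array([0.1, 0.13]) * np.pi
true_v = np.array([0.13, 0.16]) * np.pi
y = simulate_field(50, 50, true_w, true_v, sigma=0.0, rng=rng)
w_hat = estimate_frequencies(y, M=20, D=2)
v_hat = estimate_frequencies(y.T, M=20, D=2)       # second dimension: transpose the field
print(w_hat / np.pi, v_hat / np.pi)
```

The example runs on noiseless data, where the propagator relation holds exactly; passing `sigma > 0` gives a noisy realization, and the accuracy reported in Sect. 4 should not be expected from this simplified sketch.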

3.2 Estimation of Second Dimension Frequencies

The method adopted for estimating the first-dimension frequencies ωi, i = 1,2,…,D, can also be used for estimating the second-dimension frequencies vi, i = 1,2,…,D. That is, the procedure of Sect. 3.1 is applied to the data models developed in (6), (8) and (13) to obtain the second-dimension frequencies.

The proposed method has notable advantages over the conventional MUSIC algorithm [15], such as computational simplicity and a less restrictive noise model. Although it requires a peak search, no eigendecomposition (EVD or SVD) is involved, unlike MUSIC, where the EVD of the autocorrelation matrix is needed. It also provides quite efficient estimates of the frequencies, and the estimated frequencies in both dimensions are automatically paired.

4 Simulation Results

Computer simulations have been carried out to evaluate the frequency estimation performance of the proposed algorithm for 2-D multiple real-valued sinusoids in the presence of additive white Gaussian noise. The average root-mean-square error (RMSE) is employed as the performance measure; in addition, further simulations are conducted to show the detection capability and the bias of estimation. Besides the Cramér–Rao lower bound (CRLB), the performance of the proposed algorithm is compared with that of the 2-D MUSIC and 2-D ESPRIT [28] algorithms for real-valued sinusoids. Four types of analysis have been performed.

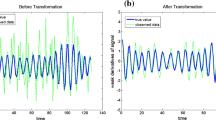

4.1 Analysis of Frequency Spectra

The signal parameters are N1 = N2 = 50 and the snapshot vector dimension is M = 20. The number of undamped 2-D real-valued sinusoids is D = 2, with amplitudes ak = 1 for k = 1,2,…,D. The first-dimension and second-dimension frequencies are (ω1,ω2) = (0.1π,0.13π) and (v1,v2) = (0.13π,0.16π), respectively. Note that the frequency separation is 0.03, which is smaller than the Fourier resolution limit 1/M (= 0.05). This means that a classic FFT-based method cannot resolve these two frequencies. The proposed method can also resolve identical frequencies present in different dimensions (ω2 = v1 = 0.13π). Figure 1 displays the spectra of the proposed algorithm at SNR = 10 dB. We can see from Fig. 1 that the frequency parameters in both dimensions are accurately resolved. The estimated frequencies are shown in Table 1.
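The resolution comparison can be reproduced with a few lines of arithmetic. The sketch assumes that the quoted separation of 0.03 is the frequency difference expressed in units of π, which is an interpretation of the text rather than something it states explicitly.

```python
import math

M = 20                                      # snapshot vector dimension
omega = (0.1 * math.pi, 0.13 * math.pi)     # first-dimension frequencies

separation = (omega[1] - omega[0]) / math.pi   # difference in units of pi
resolution = 1.0 / M                           # Fourier resolution limit quoted in the text

print(separation, resolution, separation < resolution)
```

The same check with M = 50, as in the second analysis, gives a limit of 0.02, so the two frequencies are then separated by more than the limit.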

In the second analysis, shown in Fig. 2, the signal parameters are N1 = N2 = 100 and the snapshot vector dimension is M = 50, with all other parameters kept the same as in the previous experiment. The detection of the frequencies in both dimensions is found to be more accurate. The estimated frequencies of this analysis are shown in Table 2.

4.2 Performance Analysis Considering RMSE

The same signal parameters as in the first analysis of Sect. 4.1 are considered. We compare the root-mean-square error (RMSE) of the estimates for the proposed algorithm, the MUSIC algorithm, and the 2-D ESPRIT algorithm as a function of SNR. A Monte Carlo simulation of 500 runs was performed. Figure 3a shows the RMSEs and the corresponding CRB of the first-dimension frequencies {ωk}, while Fig. 3b shows those of the second-dimension frequencies {vk} (k = 1,2,…,D). The proposed algorithm outperforms the ESPRIT algorithm, and at low SNR its performance is similar to that of the MUSIC algorithm. As the SNR increases, the proposed algorithm performs the same as the MUSIC algorithm.
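One common way to compute an average RMSE over Monte Carlo runs is sketched below. The exact averaging used in the paper is not stated, so pooling the squared errors over all runs and all D frequencies is an assumption, and the three "runs" are made-up numbers used only to exercise the function.

```python
import math

def rmse(estimates, true_freqs):
    """Root-mean-square error pooled over runs and over the D frequencies."""
    squared = [(est - tru) ** 2
               for run in estimates
               for est, tru in zip(run, true_freqs)]
    return math.sqrt(sum(squared) / len(squared))

true_freqs = [0.31, 0.41]                            # illustrative values only
runs = [[0.31, 0.41], [0.32, 0.40], [0.30, 0.42]]    # made-up estimates, one list per run
value = rmse(runs, true_freqs)
print(value)
```

In an actual experiment each inner list would hold the sorted estimates from one noisy realization, and the function would be called once per SNR value.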

4.3 Performance Analysis Considering Probability of Correct Estimation and Bias of Estimation

In this analysis, we consider the probability of correct estimation of the frequencies as the performance measure. Taking the same signal parameters as in the last two sections, we determine the probability of correct estimation of the 2-D real-valued sinusoidal signal frequencies in both dimensions as the SNR is varied. The results are shown in Fig. 4a, b. From this analysis, it is evident that the proposed method performs far better than 2-D ESPRIT and behaves in the same way as the conventional MUSIC algorithm, but without any eigendecomposition (EVD/SVD). Similarly, we analyze the bias of estimation for each dimension; the results are plotted in Fig. 5a, b, respectively. The bias analysis shows that the proposed method performs much better than ESPRIT and almost the same as the conventional MUSIC algorithm over the range of SNRs considered.
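The two measures can be computed from the Monte Carlo estimates as sketched below. The paper does not state its criterion for a "correct" estimate, so the tolerance `tol` is a hypothetical parameter, and the sample data are made-up numbers for illustration.

```python
def probability_correct(estimates, true_freqs, tol):
    """Fraction of runs in which every frequency lies within tol of its true value."""
    hits = sum(all(abs(est - tru) <= tol for est, tru in zip(run, true_freqs))
               for run in estimates)
    return hits / len(estimates)

def bias(estimates, true_freqs):
    """Mean estimate minus true value, one entry per frequency."""
    runs = len(estimates)
    return [sum(run[k] for run in estimates) / runs - tru
            for k, tru in enumerate(true_freqs)]

true_freqs = [0.31, 0.41]                            # illustrative values only
runs = [[0.31, 0.41], [0.33, 0.41], [0.31, 0.45]]    # made-up estimates, one list per run
p_correct = probability_correct(runs, true_freqs, tol=0.01)
b = bias(runs, true_freqs)
print(p_correct, b)
```

Only the first run has both estimates within the tolerance, so the probability is one third for this toy data set.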

4.4 Performance Analysis Considering Computational Time

In this section, we compare the proposed method and the conventional MUSIC algorithm in terms of computation time. Using the same signal parameters at a fixed SNR of 10 dB, we vary the snapshot vector dimension M; the results are plotted in Fig. 6. From Fig. 6, it is clear that the proposed method requires less computation time than the conventional MUSIC algorithm.

5 Conclusion

We have proposed a new approach, based on a subspace method without eigendecomposition and using the cross-correlation matrix, for estimating the frequencies of multiple real-valued 2-D sinusoidal signals embedded in additive white Gaussian noise. We have analytically quantified the performance of the proposed algorithm. It is shown that the algorithm remains operational when identical frequencies exist in both dimensions. Simulation results show that the proposed algorithm offers performance comparable to that of the MUSIC algorithm at a lower computational complexity, and far superior performance compared with the ESPRIT algorithm. The frequency estimates obtained are automatically paired without an extra pairing algorithm.

References

1. Francos JM, Friedlander B (1998) Parameter estimation of two-dimensional moving average random fields. IEEE Trans Signal Process 46:2157–2165

2. Wang H, Williams K (1996) Vibrational modes of cylinders of finite length. J Sound Vib 191(5):955–971

3. Verma S, Singal R, Williams K (1987) Vibration behavior of stators of electrical machines, part I: theoretical study. J Sound Vib 115(1):1–12

4. Singal R, Williams K, Verma S (1987) Vibration behavior of stators of electrical machines, part II: experimental study. J Sound Vib 115(1):13–23

5. Zhang XM (2001) Vibration analysis of cross-ply laminated composite cylindrical shells using the wave propagation approach. Appl Acoust 62:1221–1228

6. So J, Leissa A (1998) Three-dimensional vibrations of thick circular and annular plates. J Sound Vib 209(1):15–41

7. Skatter S (1996) TV holography as a possible tool for measuring transverse vibration of logs: a pilot study. Wood Fiber Sci 28(3):278–285

8. Axmon J, Hansson M (1999) Estimation of circumferential mode parameters of living trees. In: Proceedings of the IASTED international conference on signal image process (SIP’99), pp 188–192

9. Axmon J (2000) On detection of decay in growing Norway spruce via natural frequencies. Licentiate of Engineering Thesis, Lund University, Lund, Sweden, Oct 2000

10. Axmon J, Hansson M, Sörnmo L (2002) Modal analysis of living spruce using a combined Prony and DFT multichannel method for detection of internal decay. Mech Syst Signal Process 16(4):561–584

11. Hua Y (1992) Estimating two-dimensional frequencies by matrix enhancement and matrix pencil. IEEE Trans Signal Process 40(9):2267–2280

12. Haardt M, Roemer F, DelGaldo G (2008) Higher-order SVD-based subspace estimation to improve the parameter estimation accuracy in multidimensional harmonic retrieval problems. IEEE Trans Signal Process 56(7):3198–3213

13. Mahata K (2005) Subspace fitting approaches for frequency estimation using real-valued data. IEEE Trans Signal Process 53(8):3099–3110

14. Palanisamy P, Sambit PK (2011) Estimation of real-valued sinusoidal signal frequencies based on ESPRIT and propagator method. In: Proceedings of the IEEE-international conference on recent trends in information technology, pp 69–73, June 2011

15. Stoica P, Eriksson A (1995) MUSIC estimation of real-valued sine-wave frequencies. Signal Process 42(2):139–146

16. Priestley MB (1981) Spectral analysis and time series. Academic, New York

17. Walker M (1971) On the estimation of a harmonic component in a time series with stationary independent residuals. Biometrika 58:21–36

18. Rao CR, Zhao L, Zhou B (1994) Maximum likelihood estimation of 2-D superimposed exponential signals. IEEE Trans Signal Process 42:1795–1802

19. Kundu D, Mitra A (1996) Asymptotic properties of the least squares estimates of 2-D exponential signals. Multidim Syst Signal Process 7:135–150

20. Lang SW, McClellan JH (1982) The extension of Pisarenko’s method to multiple dimensions. In: Proceedings of the international conference on acoustics, speech, and signal processing, pp 125–128

21. Kumaresan R, Tufts DW (1981) A two-dimensional technique for frequency wave number estimation. Proc IEEE 69:1515–1517

22. Axmon J, Hansson M, Sörnmo L (2005) Partial forward–backward averaging for enhanced frequency estimation of real X-texture modes. IEEE Trans Signal Process 53(7):2550–2562

23. So HC, Chan FKW, Chan CF (2010) Utilizing principal singular vectors for two-dimensional single frequency estimation. In: Proceedings of the IEEE international conference on acoustics, speech, signal process, pp 3882–3886, March 2010

24. Xin J, Sano A (2004) Computationally efficient subspace-based method for direction-of-arrival estimation without eigen decomposition. IEEE Trans Signal Process 52(4):876–893

25. Sambit PK, Palanisamy P (2012) A propagator method like algorithm for estimation of multiple real-valued sinusoidal signal frequencies. Int J Electron Electr Eng 6:254–258

26. Marcos S, Marsal A, Benidir M (1995) The propagator method for source bearing estimation. Signal Process 42(2):121–138

27. Golub GH, Van Loan CF (1980) An analysis of the total least squares problem. SIAM J Numer Anal 17:883–893

28. Rouquette S, Najim M (2001) Estimation of frequencies and damping factors by two-dimensional ESPRIT type methods. IEEE Trans Signal Process 49(1):237–245

© 2013 Springer India

About this paper

Cite this paper

Sambit, P.K., Palanisamy, P. (2013). An Efficient Two Dimensional Multiple Real-Valued Sinusoidal Signal Frequency Estimation Algorithm. In: S, M., Kumar, S. (eds) Proceedings of the Fourth International Conference on Signal and Image Processing 2012 (ICSIP 2012). Lecture Notes in Electrical Engineering, vol 221. Springer, India. https://doi.org/10.1007/978-81-322-0997-3_9

DOI: https://doi.org/10.1007/978-81-322-0997-3_9

Publisher Name: Springer, India

Print ISBN: 978-81-322-0996-6

Online ISBN: 978-81-322-0997-3

eBook Packages: Engineering, Engineering (R0)