Abstract

A finite-time observer is designed for linear time-invariant systems subject to unknown inputs or disturbances whose upper bound is unavailable. The main condition for designing the observer is strong detectability. Geometric control theory is used to decompose the given system into two parts: a strongly observable subsystem and a nonstrongly observable (but strongly detectable) subsystem. Through a series of transformations, the former is further partitioned into a part unaffected by the unknown inputs (UI-free) and a part affected by them (UI-dependent), and its states are then estimated exactly by a time-delayed observer within a pre-defined time. The states of the nonstrongly observable subsystem are estimated asymptotically. Even without an upper bound on the disturbances, the observer allows the convergence time to be set arbitrarily. A numerical example illustrates the effectiveness of the proposed estimation scheme.


1 Introduction

1.1 Antecedents and Motivation

The problem of state observation for systems with disturbances or unknown inputs is important in modern control theory. It plays an essential role in model-based fault detection [9, 25, 42]: the residuals of a faulty system are formed from the difference between the actual system outputs and the outputs estimated by a state observer, and when the system is affected by disturbances or unknown inputs, their effects have to be decoupled from the residuals to avoid false alarms [31]. Another application field is feedback control: after the unmeasured states and the disturbances have been estimated, robust feedback controllers can be designed [7, 27].

The Luenberger observer [29], one of the earliest linear observers, has been widely applied and extensively improved [15, 37]. The convergence of Luenberger-type observers is asymptotic: the estimation error decays exponentially, at a rate that can be assigned by a suitable choice of the observer gain.

For systems with disturbances or unknown inputs, unknown input observers (UIOs) have been developed. The UIO problem was initiated by Basile and Marro [1] and by Guidorzi and Marro [19], and it has since been developed further [8, 10, 23, 38]. Hou and Müller proposed a reduced-order observer for LTI systems through a simple transformation [23], in which the states are decomposed into disturbance-free and disturbance-affected components. A different decomposition approach, given by M. Lungu, reduces the LTI system with unknown inputs to a standard one [30]. Considering disturbances and actuator faults, Park decouples the state and output equations into three subsystems and then reconstructs the states and faults [38]. Darouach designs full-order observers with unknown inputs in the state and measurement equations [10, 11], in which certain matrices are designed to eliminate the influence of the unknown inputs and to ensure asymptotic convergence of the observer error. Hui and Zak give a design procedure for full-order UIOs using a projection operator approach [24]. For non-minimum phase systems or systems with non-unity relative degree, M.S. Chen and C.C. Chen estimate the states and unknown inputs accurately [8].

For the majority of unknown input observers, one disadvantage is that only asymptotic convergence of the observation error to zero is guaranteed [31]. Sometimes, however, the observation must converge in less than the dwell time (e.g., in walking robots [40]).

Sliding mode observers are widely used because of their insensitivity to certain classes of unknown inputs. The conventional sliding mode observer (SMO) [14, 47, 48] feeds back the output estimation error via a nonlinear term. Given a bound on the magnitude of the disturbances, an SMO can force the output estimation error to converge to zero in finite time, while the observer states converge asymptotically to the system states. Based on SMOs, unknown inputs can be estimated [3, 25] and faults can be detected [42] or even reconstructed [21, 49]. A disadvantage of these observers is the obligatory filtering, which causes an intrinsic error in the observed states that cannot be eliminated. A new generation of observers based on higher-order sliding mode (HOSM) differentiators, which avoid the filtering, has recently been studied [17, 18, 50]. In particular, HOSM-based observers can be regarded as a successful technique for the state observation of perturbed systems, owing to their high precision and robustness with respect to parametric uncertainties [44]. Fridman et al. [17, 18] establish finite-time convergence of the strongly observable states and asymptotic convergence of the strongly detectable states for LTI systems. One disadvantage of HOSM observers is that a bound on the disturbances must be known.

The problem of finite-time stabilization is attractive because it usually brings desirable features such as faster convergence and higher accuracy [13]. Besides HOSM, other observers also ensure finite-time convergence. Engel and Kreisselmeier designed a continuous observer for linear systems that converges exactly to the state after a pre-defined time delay [16]; the finite-time estimate is computed from the present and delayed estimates of two distinct state observers. The time-delayed observer has been extended to nonlinear systems [41] and linear time-varying systems [34]. Using homogeneous systems theory, a continuous finite-time convergent observer was first proposed for double integrator systems. For higher-dimensional nonlinear systems, Shen et al. [12, 43] develop semi-global finite-time observers rooted in [39], which have only very small variations in the homogeneous weights. Menard et al. add a linear error feedback term to the semi-global observer to ensure global convergence [33]. Another route to global convergence is an appropriate choice of observer gain [13, 28]. Other finite-time observers include the terminal sliding-mode observer [45] and the moving horizon observer [35].

Aiming at finite-time observation of strongly detectable systems, Bejarano decomposes a nonstrongly observable system into a strongly observable part and a nonstrongly observable part. The states of the strongly observable part are expressed as a function of the output and its derivatives, so their accuracy is easily degraded by noise [4–6].

In the presence of unknown inputs or faults, Menold and Findeisen [34] and Lee and Park [26] construct finite-time observers for LTI systems based on a reduced-order UIO and the time-delayed observer. Lee and Park decompose a strongly detectable (observable) LTI system into a UI-free subsystem and two UI-dependent subsystems, and then observe the UI-free subsystem using the time-delayed observer. Park [38] shows that strong detectability (observability) of the original system is equivalent to detectability (observability) of the UI-free subsystem. The time-delayed observer requires the system to be observable [16], so that the observer poles can be placed arbitrarily; however, the poles of the detectable subsystem obtained from a strongly detectable system cannot be assigned arbitrarily. Therefore, not all states of a strongly detectable system can be observed in finite time, which is consistent with the definition of strong detectability [21].

As stated above, most UIOs provide only asymptotic convergence [1, 8, 10, 11, 19, 24, 30, 38]. Some finite-time observers do not consider the influence of disturbances [12, 22, 28, 33, 34, 39, 43]. Other observers, such as SMO and HOSM observers, also provide finite-time convergence, but they need a bound on the disturbances [3, 14, 17, 18, 21, 25, 42, 44, 47–50]. The methods of Bejarano [4–6] are sensitive to noise because they use derivatives of the outputs. The combination of a reduced-order UIO and the time-delayed observer can hardly estimate all strongly detectable states in finite time [26, 34]. In this paper, for strongly detectable systems with disturbances, the strongly observable states and the remaining strongly detectable states are handled separately, and neither an upper bound on the disturbances nor derivatives of the outputs are needed for finite-time observation.

1.2 Contribution

In this paper, an observer is developed for strongly detectable systems with disturbances whose upper bound is unavailable. The states of the strongly observable subsystem are reconstructed exactly within an arbitrary pre-defined time without using output derivatives, and those of the nonstrongly observable part are estimated asymptotically.

1.3 Structure of the Paper

In Sect. 2 we state the problem and give some essential definitions. Section 3 is devoted to decomposing the given strongly detectable system. Section 4 deals with the design of the finite-time observer and the asymptotically convergent observer. A numerical example is presented in Sect. 5.

2 Problem Formulation

2.1 System Description

Let us consider the following continuous-time linear system affected by disturbances:

\[ \dot{x}(t) = Ax(t) + Bu(t) + D\xi (t), \qquad y(t) = Cx(t), \tag{1} \]

where \(x(t)\in \mathbb {R}^{n}\) is the state vector, \(u(t)\in \mathbb {R}^{m}\) is the known control input, \(y(t)\in \mathbb {R}^{p}\) is the measured output, and \(\xi (t)\in \mathbb {R}^{q}\) is the vector of disturbances or unknown inputs. \(A\in \mathbb {R}^{n\times n}\), \(B\in \mathbb {R}^{n\times m}\), \(C\in \mathbb {R}^{p\times n}\) and \(D\in \mathbb {R}^{n\times q}\) are known constant matrices.

2.2 Preliminaries

The following definitions concerning the properties of the system \(\Sigma \left( {A,C,D} \right) \) are needed for the observer design.

Definition 2.1

[20] \(\Sigma \) is strongly observable if, for every initial state \(x_0\in \mathbb {R}^{n}\) and every unknown input \(\xi (t)\), \({y_\xi }\left( {t,{x_0}} \right) = 0\) for all \(t \ge 0\) implies \({x_0} = 0\). Here \({y_\xi }(t,{x_0})\) denotes the output of \(\Sigma \) with initial state \(x_0\) and unknown input \(\xi \).

Definition 2.2

[20] \(\Sigma \) is strongly detectable if, for every initial state \(x_0\in \mathbb {R}^{n}\) and every unknown input \(\xi (t)\), \({y_\xi }\left( {t,{x_0}} \right) = 0\) for all \(t \ge 0\) implies \(x(t) \rightarrow 0\) as \(t \rightarrow \infty \).

Definition 2.3

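[18] \(s_0\in \mathbb {C}\) is called an invariant zero of \(\Sigma \) if \(\operatorname{rank} R(s_0) < n + \operatorname{rank} D\), where

\[ R(s) = \left[ {\begin{array}{ll}{s{I_n} - A}&\quad D\\ C&\quad 0\end{array}} \right] \]

is the Rosenbrock matrix of system \(\Sigma \).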
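As a numerical illustration of Definition 2.3, the sketch below forms the Rosenbrock matrix and tests the rank condition at a candidate point \(s_0\). The system matrices are a hypothetical three-state example chosen only for illustration (they are not the matrices of the paper's example system), and the rank tolerance `tol` is likewise an assumption.

```python
import numpy as np

# Hypothetical example system (not the paper's example): n = 3, p = 2, q = 1.
A = np.array([[0.0, 1.0, 0.0],
              [0.0, 0.0, 0.0],
              [1.0, 0.0, -1.0]])
D = np.array([[0.0], [1.0], [0.0]])
C = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0]])

def rosenbrock(s, A, C, D):
    """Rosenbrock matrix R(s) = [[sI - A, D], [C, 0]] of the triple (A, C, D)."""
    n, q, p = A.shape[0], D.shape[1], C.shape[0]
    top = np.hstack([s * np.eye(n) - A, D])
    bottom = np.hstack([C, np.zeros((p, q))])
    return np.vstack([top, bottom])

def is_invariant_zero(s, A, C, D, tol=1e-9):
    """Definition 2.3: s is an invariant zero iff rank R(s) < n + rank(D)."""
    full = A.shape[0] + np.linalg.matrix_rank(D)
    return np.linalg.matrix_rank(rosenbrock(s, A, C, D), tol=tol) < full

print(is_invariant_zero(-1.0, A, C, D))   # True
print(is_invariant_zero(0.0, A, C, D))    # False
```

For this particular triple, the third column of \(R(s)\) equals \([0, 0, s+1, 0, 0]^T\) and the remaining columns are always independent, so \(s = -1\) is its only invariant zero; by Lemmas 2.1 and 2.2 below, the hypothetical system is strongly detectable but not strongly observable. Locating all invariant zeros of a general (possibly non-square) pencil requires a generalized-eigenvalue computation, which this sketch does not attempt.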

Definition 2.4

[46] A subspace \(\nu \) is called an output-nulling controlled invariant subspace if for every \(x_0\in \nu \) there exists a \(\xi \) such that \(A{x_0} + D\xi \in \nu \) and \(Cx_0 = 0\). \(\nu ^*\) is called the maximal output-nulling controlled invariant subspace if \(\nu \subseteq \nu ^*\) for every output-nulling controlled invariant subspace \(\nu \).

Remark 2.1

[46] The weakly unobservable subspace coincides with the maximal output-nulling controlled invariant subspace \(\nu ^*\).

Lemma 2.1

[20, 46] The following statements are equivalent:

(i) the system \(\Sigma \) is strongly observable;
(ii) the Rosenbrock matrix of system \(\Sigma \) has no invariant zeros;
(iii) \(\nu ^* = 0\).

Lemma 2.2

[20, 46] The following statements about strong detectability are equivalent:

(i) the system \(\Sigma \) is strongly detectable;
(ii) all invariant zeros of the Rosenbrock matrix of system \(\Sigma \) satisfy \(\operatorname{Re} s<0\).

Definition 2.5

[46] For a linear map \(A:X \rightarrow Y\), we define

\[ \ker A := \{ x \in X \mid Ax = 0\} , \qquad \operatorname{im} A := \{ Ax \mid x \in X\} , \]

called the kernel and image of \(A\), respectively.
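Numerically, orthonormal bases of \(\ker A\) and \(\operatorname{im} A\) are conveniently obtained from the singular value decomposition. The helpers below are a minimal NumPy sketch of this; the rank threshold `tol` is an assumption and should be scaled to the problem at hand. Subspace computations such as those in Sect. 3 operate on bases of exactly this kind.

```python
import numpy as np

def kernel(M, tol=1e-10):
    """Orthonormal basis (as columns) of ker M = {x : M x = 0}."""
    M = np.atleast_2d(np.asarray(M, dtype=float))
    _, s, Vt = np.linalg.svd(M)
    rank = int(np.sum(s > tol))
    return Vt[rank:].T          # right singular vectors of the zero singular values

def image(M, tol=1e-10):
    """Orthonormal basis (as columns) of im M = {M x}."""
    M = np.atleast_2d(np.asarray(M, dtype=float))
    U, s, _ = np.linalg.svd(M)
    rank = int(np.sum(s > tol))
    return U[:, :rank]          # left singular vectors of the nonzero singular values

C = np.array([[1.0, 0.0, 0.0],
              [0.0, 1.0, 0.0]])
print(kernel(C).shape, image(C).shape)   # (3, 1) (2, 2)
```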

As shown in the following section, a strongly observable subsystem can be extracted from a strongly detectable system, so this paper studies the more general case of strongly detectable systems. Without loss of generality, it is assumed that \(R(C) = p\), \(R(D) = q\) and \(q < p\), where \(R(\cdot )\) denotes the rank of a matrix. The aim of this article is to build a scheme that provides exact (finite-time) estimates of the strongly observable states and asymptotic estimates of the remaining states.

3 System Decomposition

3.1 Decomposition into Strongly Observable and Nonstrongly Observable Subsystems

Now we decompose the strongly detectable system \(\Sigma \left( {A,C,D} \right) \) into a strongly observable part and a nonstrongly observable part by employing geometric control theory [17]. To this end, a basis of \(\nu ^*\) is needed; the following algorithm constructs a basis of the weakly unobservable subspace \(\nu ^*\).

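According to the definition of a controlled invariant subspace [46], \(\nu ^*\) is the maximal \(( A, \operatorname{im} D)\)-controlled invariant subspace contained in \(\ker C\), so \(\nu ^*\) can be computed with the sequence [32, 46]

\[ \nu _0 = \mathbb {R}^n, \qquad \nu _{t+1} = \ker C \cap A^{-1}\left( \nu _t + \operatorname{im} D\right) , \quad t = 0, 1, 2, \ldots , \tag{2} \]

where \(A^{-1}(\cdot )\) denotes the preimage under \(A\). This sequence converges in a finite number of steps, i.e., for some integer \(k \le n\) we have \({\nu _t} = {\nu _k}\) for all \(t \ge k\). Consequently, the inclusion chain for \({\nu _t}\) has the form

\[ \mathbb {R}^n = \nu _0 \supseteq \nu _1 \supseteq \cdots \supseteq \nu _k = \nu _{k+1} = \cdots \tag{3} \]

for some integer \(k\), and \({\nu ^*} = {\nu _k}\).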
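The recursion for \(\nu ^*\) can be sketched in NumPy by representing each subspace by an orthonormal basis: the preimage \(A^{-1}(\nu _t + \operatorname{im} D)\) is the kernel of \(W_\perp ^T A\), where the columns of \(W_\perp \) span \((\nu _t + \operatorname{im} D)^\perp \), and intersecting with \(\ker C\) amounts to stacking \(C\) on top. Because the chain is nested, the iteration can stop as soon as the dimension no longer drops. The example matrices are hypothetical (not the paper's example) and `tol` is an assumed rank threshold.

```python
import numpy as np

def null_space(M, tol=1e-10):
    """Orthonormal basis (columns) of ker M."""
    _, s, Vt = np.linalg.svd(M)
    return Vt[int(np.sum(s > tol)):].T

def orth(M, tol=1e-10):
    """Orthonormal basis (columns) of im M; an empty input gives an empty basis."""
    if M.shape[1] == 0:
        return M
    U, s, _ = np.linalg.svd(M)
    return U[:, :int(np.sum(s > tol))]

def weakly_unobservable_subspace(A, C, D, tol=1e-10):
    """Iterate nu_0 = R^n, nu_{t+1} = ker C ∩ A^{-1}(nu_t + im D) until it stops shrinking."""
    n = A.shape[0]
    V = np.eye(n)                                   # nu_0 = R^n
    while True:
        W = orth(np.hstack([V, D]), tol)            # basis of nu_t + im D
        W_perp = null_space(W.T, tol)               # basis of (nu_t + im D)^perp
        # A x in nu_t + im D  <=>  W_perp^T A x = 0; stacking C also imposes C x = 0.
        V_next = null_space(np.vstack([C, W_perp.T @ A]), tol)
        if V_next.shape[1] == V.shape[1]:           # nested chain: equal dimension => equal subspace
            return V_next
        V = V_next

# Hypothetical example (not the paper's example system).
A = np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 0.0], [1.0, 0.0, -1.0]])
D = np.array([[0.0], [1.0], [0.0]])
C = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
V_star = weakly_unobservable_subspace(A, C, D)
print(V_star.shape)    # (3, 1): nu* is one-dimensional
```

For this hypothetical system the iteration stops after two steps with \(\nu ^* = \operatorname{span}\{e_3\}\); for a strongly observable system it returns an empty basis, in line with Lemma 2.1 (iii).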

Next, a matrix \(V\) whose columns form a basis of \({\nu ^*}\) is chosen. F.J. Bejarano [4] constructs a nonsingular matrix, rooted in [36], as follows

where \({V^ \bot }\) satisfies \({V^ \bot }V = 0\).

The nonsingular matrices

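where \({V^ + } = \left( {{V^T}V} \right) ^{ - 1}{V^T}\) and \({M^ + } = {M^T}\left( {M{M^T}} \right) ^{ - 1}\) are a left inverse of the full-column-rank matrix \(V\) and a right inverse of the full-row-rank matrix \(M\), respectively.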
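The sketch below checks, for a random full-column-rank \(V\), that these two pseudo-inverses make \(P = [V^\perp ; V^+]\) and \(P^{-1} = [M^+ \;\; V]\) mutually inverse when \(M = V^\perp \). This block form is an assumption about the construction (the matrices themselves are not reproduced here), and the random \(V\) is purely illustrative.

```python
import numpy as np

def left_pinv(V):
    """V^+ = (V^T V)^{-1} V^T, a left inverse of a full-column-rank V."""
    return np.linalg.solve(V.T @ V, V.T)

def right_pinv(M):
    """M^+ = M^T (M M^T)^{-1}, a right inverse of a full-row-rank M."""
    return M.T @ np.linalg.inv(M @ M.T)

def complement_rows(V, tol=1e-10):
    """Full-row-rank V_perp whose rows span (im V)^perp, so that V_perp @ V = 0."""
    _, s, Vt = np.linalg.svd(V.T)
    return Vt[int(np.sum(s > tol)):]

rng = np.random.default_rng(0)
V = rng.standard_normal((5, 2))        # hypothetical basis of nu* (n = 5, dim nu* = 2)
M = complement_rows(V)                 # plays the role of V^perp, shape (3, 5)
P = np.vstack([M, left_pinv(V)])       # assumed P = [V^perp; V^+]
P_inv = np.hstack([right_pinv(M), V])  # assumed P^{-1} = [M^+, V]

print(np.allclose(P @ P_inv, np.eye(5)))   # True
print(np.allclose(M @ V, 0))               # True
```

The off-diagonal blocks of \(PP^{-1}\) vanish because \(MV = 0\) implies \(V^+M^+ = (V^TV)^{-1}(MV)^T(MM^T)^{-1} = 0\).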

Lemma 3.1

[2] A subspace \(\nu \) with basis \(V\) is an \(( A, \operatorname{im} D)\)-controlled invariant subspace if and only if there exist matrices \({Q^*}\) and \({K^*}\) such that

\[ AV = V{Q^*} + D{K^*}. \tag{5} \]

From Eq. (3), \({\nu ^*}\) is a controlled invariant subspace, so its basis \(V\) satisfies Eq. (5), which can be rewritten as \(AV = \left[ {\begin{array}{ll}V&\quad D\end{array}} \right] \left[ {\begin{array}{l}{{Q^*}}\\ {{K^*}}\end{array}} \right] \). Then all solutions of Eq. (5) are

where \(im{H_1} = ker \left[ {\begin{array}{ll}V&D\end{array}} \right] \) and \({K_1}\) is arbitrary, so \({Q^*}\) and \({K^*}\) are not unique.

Taking into account that \({V^ + }V = I\), from Eq. (5) it is easy to obtain the following equation:

\[ \left( A - D{\bar{K}^*}\right) V = V{Q^*}, \]

where \({\bar{K}^*} = {K^*}{V^ + }\), so that \({\bar{K}^*}V = {K^*}\).
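For a given basis \(V\), one solution of Eq. (5) can be obtained by linear least squares, from which \({\bar{K}^*}\) follows. The sketch below uses a hypothetical three-state example whose weakly unobservable subspace is \(\operatorname{span}\{e_3\}\); `np.linalg.lstsq` returns the minimum-norm solution, i.e., one particular member of the (generally non-unique) family of solutions \(({Q^*}, {K^*})\).

```python
import numpy as np

# Hypothetical three-state example (not the paper's example); nu* = span{e3}.
A = np.array([[0.0, 1.0, 0.0],
              [0.0, 0.0, 0.0],
              [1.0, 0.0, -1.0]])
D = np.array([[0.0], [1.0], [0.0]])
V = np.array([[0.0], [0.0], [1.0]])

# Solve A V = [V D][Q*; K*]; lstsq gives the minimum-norm member of the solution family.
VD = np.hstack([V, D])
sol, *_ = np.linalg.lstsq(VD, A @ V, rcond=None)
r = V.shape[1]
Q_star, K_star = sol[:r], sol[r:]

V_plus = np.linalg.solve(V.T @ V, V.T)   # V^+ = (V^T V)^{-1} V^T
K_bar = K_star @ V_plus                  # \bar K^* = K^* V^+

print(np.allclose(A @ V, V @ Q_star + D @ K_star))    # True: Eq. (5) holds
print(np.allclose((A - D @ K_bar) @ V, V @ Q_star))   # True: nu* is (A - D K_bar)-invariant
print(np.linalg.eigvals(Q_star))                      # spectrum of A - D K_bar restricted to nu*
```

Here \({Q^*} = -1\), which coincides with the single invariant zero of this particular system; since \(\nu ^*\) is invariant under \(A - D{\bar{K}^*}\), the dynamics restricted to \(\nu ^*\) are governed by \({Q^*}\).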

Let \(\bar{x} = Px\); in the new coordinates the system can be rewritten as follows:

where

The first subsystem \(({\bar{A}_{11}},{\bar{C}_1},{\bar{D}_1})\) is strongly observable, with \({\bar{x}_1}(t){ \in \mathbb {R}^l}\) and \(R({\bar{D}_1}) = q\) [6].
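To make the decomposition concrete, the sketch below applies \(\bar{x} = Px\) to a hypothetical three-state example with \(\nu ^* = \operatorname{span}\{e_3\}\), using the assumed form \(P = [V^\perp ; V^+]\), and checks the structural facts stated above: \(\bar{x}_2\) does not appear in the output (because \(\nu ^* \subseteq \ker C\)), and \(\bar{D}_1\) has rank \(q\).

```python
import numpy as np

# Hypothetical example (not the paper's example): n = 3, p = 2, q = 1.
A = np.array([[0.0, 1.0, 0.0], [0.0, 0.0, 0.0], [1.0, 0.0, -1.0]])
D = np.array([[0.0], [1.0], [0.0]])
C = np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
V = np.array([[0.0], [0.0], [1.0]])          # basis of nu* for this example

# Assumed form P = [V_perp; V^+], with the rows of V_perp spanning the complement of nu*.
_, s, Vt = np.linalg.svd(V.T)
V_perp = Vt[int(np.sum(s > 1e-10)):]
P = np.vstack([V_perp, np.linalg.solve(V.T @ V, V.T)])
P_inv = np.linalg.inv(P)

A_bar, C_bar, D_bar = P @ A @ P_inv, C @ P_inv, P @ D
l = V_perp.shape[0]                          # dimension of x_bar_1
A11, C1, D1 = A_bar[:l, :l], C_bar[:, :l], D_bar[:l]

print(np.allclose(C_bar[:, l:], 0))          # True: x_bar_2 is not seen in y
print(np.linalg.matrix_rank(D1))             # 1, i.e. rank(D1_bar) = q
print(A_bar[l:, l:])                         # x_bar_2 block; carries the zero s0 = -1 here
```

In this small example \(\bar{C}_1\) is square and invertible, so strong observability of \(({\bar{A}_{11}},{\bar{C}_1},{\bar{D}_1})\) is immediate; in general it would be checked through the invariant zeros (Lemma 2.1).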

3.2 Decomposition into UI-Free and UI-Dependent Subsystems

Consider the first strongly observable subsystem of (8)

Choose the nonsingular matrix

\[ T = \left[ {\begin{array}{ll}N&\quad {{\bar{D}}_1}\end{array}} \right] \]

for a coordinate transformation of the strongly observable subsystem (10), where \(N \in \mathbb {R}^{l\times (l-q)}\) is an arbitrary matrix making \(T\) nonsingular. Let \({\bar{x}_1}(t) = T{\tilde{x}_1}(t)\); the subsystem can then be separated into the following form:

where \({\tilde{x}_{11}}(t){ \in \mathbb {R}^{l - q}},{\tilde{x}_{12}}(t){ \in \mathbb {R} ^q}\). In this form, the second differential equation involves the unknown input. Dropping this UI-dependent equation leaves the UI-free differential equation:

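Assuming \(R({\bar{C}_1}{\bar{D}_1}) = R({\bar{D}_1}) = q\), a nonsingular matrix can be chosen as

\[ U = \left[ {\begin{array}{ll}{{\bar{C}}_1}{{\bar{D}}_1}&\quad Q\end{array}} \right] , \]

where \(Q \in \mathbb {R}^{p\times (p-q)}\) is an arbitrary matrix making \(U\) nonsingular.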
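The sketch below builds \(T\) and \(U\) for a hypothetical strongly observable subsystem (\(l = 3\), \(p = 2\), \(q = 1\)), with \(N\) and \(Q\) picked by hand; any choice making \(T\) and \(U\) nonsingular would do. It confirms the two identities that drive the decomposition: \(T^{-1}\bar{D}_1 = [0;\, I_q]\), so only \(\tilde{x}_{12}\) is driven by \(\xi \), and \(U^{-1}\bar{C}_1\bar{D}_1 = [I_q;\, 0]\). The column ordering of \(U\) is an assumption.

```python
import numpy as np

# Hypothetical strongly observable subsystem: l = 3 states, p = 2 outputs, q = 1 unknown input.
A11 = np.array([[0.0, 1.0, 0.0],
                [0.0, 0.0, 1.0],
                [-1.0, -2.0, -3.0]])
C1b = np.array([[1.0, 0.0, 0.0],
                [0.0, 0.0, 1.0]])
D1b = np.array([[0.0], [0.0], [1.0]])
l, q = D1b.shape
p = C1b.shape[0]
assert np.linalg.matrix_rank(C1b @ D1b) == q     # standing assumption rank(C1 D1) = q

# Hand-picked (hypothetical) N and Q; any choice making T and U nonsingular works.
N = np.array([[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]])
Q = np.array([[1.0], [2.0]])
T = np.hstack([N, D1b])              # T = [N, D1]
U = np.hstack([C1b @ D1b, Q])        # U = [C1 D1, Q] (assumed ordering)

A_t = np.linalg.solve(T, A11 @ T)    # x1_bar = T x1_tilde  =>  A_tilde = T^{-1} A11 T
# UI-free equation: d/dt x~11 = A_t1 x~11 + A_t2 x~12 (no xi term)
A_t1, A_t2 = A_t[:l - q, :l - q], A_t[:l - q, l - q:]
D_t = np.linalg.solve(T, D1b)        # = [0; I_q]: xi enters only the x~12 equation
CD_t = np.linalg.solve(U, C1b @ D1b) # = [I_q; 0]

print(np.allclose(D_t, np.vstack([np.zeros((l - q, q)), np.eye(q)])))   # True
print(np.allclose(CD_t, np.vstack([np.eye(q), np.zeros((p - q, q))])))  # True
```

Both identities hold by construction for any admissible \(N\) and \(Q\), because \(\bar{D}_1\) forms the last columns of \(T\) and \(\bar{C}_1\bar{D}_1\) the first columns of \(U\).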

Defining

so

Pre-multiplying both sides of (12b) by \({U^{ - 1}}\), we have

Substituting (14) into (13), we have

where

4 Reconstruction of System States

Lemma 4.1

Assume \(R({\bar{C}_1}{\bar{D}_1}) = R({\bar{D}_1}) = q\). Then \(({\bar{A}_{11}},{\bar{C}_1},{\bar{D}_1})\) is strongly observable if and only if \((\mathbf {A}_1,\mathbf {C}_1)\) is observable.

Proof

Let

Define the nonsingular matrices

where \(V = [ {\begin{array}{ll}{ - {{\tilde{A}}_3}}&\quad {s{I_q} - {{\tilde{A}}_4}}\end{array}}] \). Obviously, \(R(M) = l + p,R(H) = l + q\).

The Rosenbrock matrix of \(({\bar{A}_{11}},{\bar{C}_1},{\bar{D}_1})\) can be expressed as follows:

And then

Obviously, \( \left[ {\begin{array}{ll}{s{I_l} - {{\bar{A}}_{11}}}&{}\quad {{{\bar{D}}_1}}\\ {{{\bar{C}}_1}}&{}0\end{array}} \right] \) loses rank if and only if \(\left[ {\begin{array}{l}{s{I_{l - q}} - \mathbf {A}_1}\\ {\mathbf {C}_1}\end{array}} \right] \) loses rank. By Lemma 2.1, \(({\bar{A}_{11}},{\bar{C}_1},{\bar{D}_1})\) is strongly observable if and only if the former matrix never loses rank, and by the PBH test \((\mathbf {A}_1,\mathbf {C}_1)\) is observable if and only if \( R\left[ {\begin{array}{l}{s{I_{l - q}} - \mathbf {A}_1}\\ {\mathbf {C}_1}\end{array}} \right] = l - q\) for all \(s\in \mathbb {C}\). The two conditions are therefore equivalent. \(\square \)
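Lemma 4.1 can be spot-checked numerically. The sketch below uses a hypothetical strongly observable subsystem (if \(y \equiv 0\) then \(x_1 = x_3 = 0\), hence \(x_2 = \dot{x}_1 = 0\)). Since the intermediate equations of Sect. 3.2 are not shown here, it assumes the standard elimination of Hou and Müller [23]: writing \(U^{-1}y = [y_a;\, y_b]\) and \(U^{-1}\bar{C}_1N = [C_{11};\, C_{12}]\), one has \(\tilde{x}_{12} = y_a - C_{11}\tilde{x}_{11}\) and \(y_b = C_{12}\tilde{x}_{11}\), so that \(\mathbf {A}_1 = \tilde{A}_1 - \tilde{A}_2C_{11}\) and \(\mathbf {C}_1 = C_{12}\). The names \(C_{11}\), \(C_{12}\), \(\tilde{A}_1\), \(\tilde{A}_2\) are illustrative, not the paper's notation.

```python
import numpy as np

# Hypothetical strongly observable subsystem (l = 3, p = 2, q = 1), not taken from the paper.
A11 = np.array([[0.0, 1.0, 0.0],
                [0.0, 0.0, 1.0],
                [-1.0, -2.0, -3.0]])
C1b = np.array([[1.0, 0.0, 0.0],
                [0.0, 0.0, 1.0]])
D1b = np.array([[0.0], [0.0], [1.0]])
l, q = D1b.shape

# Hand-picked (hypothetical) N and Q; T = [N, D1b], U = [C1b D1b, Q] (assumed ordering).
N = np.array([[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]])
Q = np.array([[1.0], [2.0]])
T = np.hstack([N, D1b])
U = np.hstack([C1b @ D1b, Q])

A_t = np.linalg.solve(T, A11 @ T)             # T^{-1} A11 T
A_t1, A_t2 = A_t[:l - q, :l - q], A_t[:l - q, l - q:]
C_t = np.linalg.solve(U, C1b @ N)             # U^{-1} C1b N = [C11; C12]
C11, C12 = C_t[:q], C_t[q:]

# U^{-1} y = [C11 x~11 + x~12; C12 x~11]. Eliminating x~12 = y_a - C11 x~11 from the
# UI-free equation gives the reduced pair below (assumed form).
A1 = A_t1 - A_t2 @ C11
C1 = C12

obs = np.vstack([C1 @ np.linalg.matrix_power(A1, k) for k in range(l - q)])
print(np.linalg.matrix_rank(obs) == l - q)    # True: (A1, C1) is observable
```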

4.1 Reconstruction of Strongly Observable States

From Lemma 4.1, \((\mathbf {A}_1,\mathbf {C}_1)\) is observable, so a finite-time observer (time-delayed observer) can be designed for Eq. (15) [16]. One can always find two different gain matrices \({L_1}\) and \({L_2}\) such that

are stable (Hurwitz). Hence two standard Luenberger observers can be designed:

Designing

The state estimate of system (15) is then obtained by combining the current observer states \({\tilde{z}_1}(t)\), \({\tilde{z}_2}(t)\) with the delayed observer states \({\tilde{z}_1}(t - \tau )\), \({\tilde{z}_2}(t - \tau )\), where \(\tau \) is the time delay, i.e.,

where \(\det \left[ {\begin{array}{ll}K&\quad {{e^{J\tau }}K}\end{array}} \right] \ne 0\) and \(S: = \left[ {\begin{array}{ll}{{I_{(l - q) \times (l - q)}}}&\quad {{0_{(l - q) \times (l - q)}}}\end{array}} \right] {\left[ {\begin{array}{ll}K&\quad {{e^{J\tau }}K}\end{array}} \right] ^{ - 1}}\). The observer (17) therefore guarantees that \({\hat{\tilde{x}}_{11}}(t)\) converges to \({\tilde{x}_{11}}(t)\) in the finite time \(\tau \), i.e., \({\hat{\tilde{x}}_{11}}(t) = {\tilde{x}_{11}}(t)\) for all \(t \ge {t_0} + \tau \). On the initial interval \(t \in \left[ {{t_0},{t_0} + \tau } \right) \), however, the delayed observer states are not yet available, so the observer output must be defined separately there in such a way that the estimation error \(e(t) = {\hat{ \tilde{x}}_{11}}(t) - {\tilde{x}_{11}}(t)\) stays bounded for all \(t \in \left[ {{t_0},{t_0} + \tau } \right) \).
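The sketch below simulates the time-delayed observer on a hypothetical observable pair (a double integrator with position output) driven by a known signal \(w(t) = \sin t\), which stands in for the known terms of (15). The gains, the delay \(\tau = 0.5\), the step size and the RK4 integrator are all assumptions. It also shows why the construction is exact: each observer error obeys \(\dot{e}_i = J_ie_i\), so \(z(t) - e^{J\tau }z(t-\tau ) = Kx(t) - e^{J\tau }Kx(t-\tau )\) with \(K = [I;\, I]\), and the first block row of \(\left[ K \;\; e^{J\tau }K\right] ^{-1}\) applied to this recovers \(x(t)\). NumPy has no matrix exponential, so a small scaling-and-squaring routine is included (`scipy.linalg.expm` would be the usual choice).

```python
import numpy as np

def expm_taylor(M, terms=30):
    """Matrix exponential by scaling and squaring with a truncated Taylor series."""
    nrm = np.linalg.norm(M, 1)
    s = int(np.ceil(np.log2(nrm))) + 1 if nrm > 1 else 0
    X = M / 2.0**s
    E, term = np.eye(M.shape[0]), np.eye(M.shape[0])
    for k in range(1, terms):
        term = term @ X / k
        E = E + term
    for _ in range(s):
        E = E @ E
    return E

# Hypothetical observable pair (double integrator, position measured), known input w(t).
A1 = np.array([[0.0, 1.0], [0.0, 0.0]])
B1 = np.array([[0.0], [1.0]])
C1 = np.array([[1.0, 0.0]])
n = 2

# A1 - L C1 has characteristic polynomial s^2 + L[0] s + L[1].
L1 = np.array([[2.5], [1.5]])     # poles -1, -1.5 (slow observer, J1)
L2 = np.array([[13.0], [42.0]])   # poles -6, -7   (fast observer, J2)
J1, J2 = A1 - L1 @ C1, A1 - L2 @ C1
J = np.block([[J1, np.zeros((n, n))], [np.zeros((n, n)), J2]])
K = np.vstack([np.eye(n), np.eye(n)])
tau = 0.5
E = expm_taylor(J * tau)
S = np.hstack([np.eye(n), np.zeros((n, n))]) @ np.linalg.inv(np.hstack([K, E @ K]))

def rhs(t, w):
    """Joint dynamics of the plant x and the two Luenberger observers z1, z2."""
    x, z1, z2 = w[:n], w[n:2 * n], w[2 * n:]
    u = np.array([np.sin(t)])
    y = C1 @ x
    return np.concatenate([A1 @ x + B1 @ u,
                           J1 @ z1 + L1 @ y + B1 @ u,
                           J2 @ z2 + L2 @ y + B1 @ u])

dt, t_end = 1e-3, 3.0
steps, d = int(round(t_end / dt)), int(round(tau / dt))
w = np.concatenate([[1.0, -1.0], np.zeros(2 * n)])   # x(0) = [1, -1], observers start at 0
hist = np.empty((steps + 1, 3 * n))
hist[0] = w
for k in range(steps):                                # classical RK4
    t = k * dt
    k1 = rhs(t, w)
    k2 = rhs(t + dt / 2, w + dt / 2 * k1)
    k3 = rhs(t + dt / 2, w + dt / 2 * k2)
    k4 = rhs(t + dt, w + dt * k3)
    w = w + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
    hist[k + 1] = w

# x_hat(t) = S [z(t) - e^{J tau} z(t - tau)], available for t >= tau.
errs = [np.max(np.abs(S @ (hist[k, n:] - E @ hist[k - d, n:]) - hist[k, :n]))
        for k in range(d, steps + 1)]
max_err_after_tau = max(errs)
slow_observer_err_at_tau = np.max(np.abs(hist[d, n:2 * n] - hist[d, :n]))
print(f"finite-time estimate, max error for t >= tau: {max_err_after_tau:.1e}")
print(f"slow Luenberger observer error at t = tau:    {slow_observer_err_at_tau:.3f}")
```

Each Luenberger observer on its own is still only asymptotic; the combination of current and delayed values yields an estimate that is exact (up to integration round-off) from \(t = \tau \) on. As \(\tau \rightarrow 0\), \(e^{J\tau } \rightarrow I\) and \(\left[ K \;\; e^{J\tau }K\right] \) approaches the singular matrix \(\left[ K \;\; K\right] \), so very small delays make the inversion ill-conditioned.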

Hence, for \(t \ge {t_0} + \tau \), from (14) and \({\bar{x}_1}(t) = T{\tilde{x}_1}(t)\), the state estimate of (10) is

Remark 4.1

[37] If the gains \({L_i}\) are chosen such that \(\operatorname{Re}{\lambda _i}({J_2}) < \sigma < \operatorname{Re}{\lambda _i}({J_1}),\ i = 1,2, \cdots ,l - q\), for some \(\sigma < 0\), where \(\operatorname{Re}{\lambda _i}({J_j})\) denotes the real part of the \(i\)-th pole of the \(j\)-th Luenberger observer, then \(\det \left[ {\begin{array}{ll} K&\quad {{e^{J\tau }}K}\end{array}} \right] \ne 0\) for almost all \(\tau \in \mathbb {R}^+\).

Remark 4.2

If the Luenberger observer poles can be assigned arbitrarily, the condition of Remark 4.1 is easily met. An observable system allows arbitrary pole placement, but a detectable system does not, so it may be difficult or impossible to satisfy the condition of Remark 4.1 for a detectable system. The scheme of Lee and Park [26] can deal only with the observable case, i.e., it applies only to strongly observable systems.

After the strongly observable part of system (1) has been estimated within the pre-defined time, the nonstrongly observable but strongly detectable subsystem can be estimated asymptotically as follows.

4.2 Reconstruction of Nonstrongly Observable States

Since it has been assumed that \(R({\bar{D}_1}) = q\), system (8) gives

Substituting (19) into the second differential equation of (8a) gives:

The observer for (20) can be designed as follows:

so the error is governed by the following equation:

The strong detectability of system \(\Sigma \) ensures the asymptotic convergence of the estimate of \({\bar{x}_2}(t)\) [6], i.e.,

Since the transformed states are estimated through a series of transformations, the inverse transformations can be applied to recover the states of system (1).

5 Numerical Examples

For the observation problem, the known input plays no role [4]. Therefore, without loss of generality, it is assumed that the control input is \(u(t) = 0\), so that system (1) simplifies to

\[ \dot{x}(t) = Ax(t) + D\xi (t), \qquad y(t) = Cx(t). \]

Consider the following strongly detectable example:

It can be verified that the system has an invariant zero at \({s_0} = - 1\). The unknown input is chosen as \(\xi (t) = \sin (t)\).

To decompose the system into a strongly observable subsystem and a nonstrongly observable subsystem, the transformation matrices are chosen as follows:

Then the strongly observable subsystem \(({\bar{A}_{11}},{\bar{C}_1},{\bar{D}_1})\) is obtained. The following two nonsingular matrices \(T\) and \(U\) are used to decompose this subsystem into UI-free and UI-dependent subsystems.

The time-delayed observer estimates the state of the UI-free subsystem within a pre-defined time; at the same time, the UI-dependent state can be calculated exactly.

Once the states of the strongly observable subsystem have been obtained, the nonstrongly observable (strongly detectable) state is estimated by (21), which converges asymptotically.

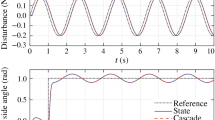

With the initial condition \({x_0} = \left[ 1, 1, -1\right] ^T\) for system (24), all initial observer states equal to zero, and the convergence time set to \(\tau = 0.1\) s, the estimation errors of the strongly observable states are exactly zero after \(\tau \). In this example, it is easy to verify that \(x_1\) and \(x_2\) are the strongly observable states. The finite-time convergence and good accuracy of the estimation are apparent from the plots of Fig. 1. Meanwhile, the following figure illustrates the difference between the asymptotic and the finite-time convergence behavior.

The high precision and the pre-defined convergence time are not affected by changes in the initial state. To validate this, the initial condition of system (24) is set to \(\left[ {{x_{in}},{x_{in}}, - {x_{in}}} \right] ^T\), where \({x_{in}}\) equals 0.5, 1, 1.5, 2, 2.5, 3, 3.5, 4, 4.5 and 5, respectively; the resulting error trajectories are shown in Figs. 2 and 3. These two figures show that the strongly observable states converge exactly to the true states within the pre-defined time regardless of the initial condition.

6 Conclusions

We proposed a state observer for linear time-invariant strongly detectable systems in the presence of unknown inputs whose upper bound is unavailable. Geometric control theory is used to decompose a strongly detectable system into a strongly observable part and a nonstrongly observable (but strongly detectable) part. For the former, a time-delayed observer estimates the states exactly within an arbitrary pre-defined time; for the latter, the states are estimated asymptotically. The simulation confirms the high precision and finite-time convergence of the proposed observer, which are not affected by changes in the initial state.

References

G. Basile, G. Marro, On the observability of linear time-invariant systems with unknown inputs. J. Optim. Theory Appl. 3(6), 410–415 (1969)

G. Basile, G. Marro, Controlled and Conditioned Invariants in Linear System Theory (Prentice Hall, Englewood Cliffs, 1992)

F. Bejarano, L. Fridman, A. Pisano, Global Hierarchical Observer for Linear Systems with Unknown Inputs, in 47th IEEE Conference on Decision and Control. Mexico (2008)

F. Bejarano, L. Fridman, A. Poznyak, Unknown input and state estimation for unobservable systems. SIAM J. Control Optim. 48(2), 1155–1178 (2009)

F. Bejarano, T. Floquet, W. Perruquetti, G. Zheng, Observability and detectability of singular linear systems with unknown inputs. Automatica 49(3), 793–800 (2013)

F. Bejarano, Partial unknown input reconstruction for linear systems. Automatica 47(8), 1751–1756 (2011)

H. Chehimi, S.H. Said, F. Msahli, Unknown inputs observer based output feedback controller for rotor resistance estimation in IMs. Int. J. Sci. Tech. Autom. Control Comput. Eng. 5(1), 1532–1543 (2011)

M.S. Chen, C.C. Chen, Unknown input observer for linear non-minimum phase systems. J. Frankl. Inst. 347(2), 577–588 (2010)

J. Chen, R.J. Patton, H.Y. Zhang, Design of unknown input observers and robust fault detection filters. Int. J. Control 63(1), 85–105 (1996)

M. Darouach, M. Zasadzinski, S.J. Xu, Full-order observers for linear systems with unknown inputs. IEEE Trans. Autom. Control 39(3), 606–609 (1994)

M. Darouach, Complements to full order observers design for linear systems with unknown inputs. Appl. Math. Lett. 22(7), 1107–1111 (2009)

X. Deng, Y. Shen, Approach to design semi-global finite-time observers for a class of nonlinear systems. Sci. China Ser. F Inf. Sci. 52(10), 1746–1753 (2009)

H. Du, C. Qian, S. Yang, S. Li, Recursive design of finite-time convergent observers for a class of time-varying nonlinear systems. Automatica 49(2), 601–609 (2013)

C. Edwards, S.K. Spurgeon, On the development of discontinuous observers. Int. J. Control 59(5), 1211–1229 (1994)

M. Ekramian, F. Sheikholeslam, S. Hosseinnia, Adaptive state observer for Lipschitz nonlinear systems. Syst. Control Lett. 62(4), 319–323 (2013)

R. Engel, G. Kreisselmeier, A continuous-time observer which converges in finite time. IEEE Trans. Autom. Control 47(7), 1202–1204 (2002)

L. Fridman, J. Davila, A. Levant, High-order sliding-mode observation for linear systems with unknown inputs. Nonlinear Anal. Hybrid Syst. 5(2), 189–205 (2011)

L. Fridman, A. Levant, J. Davila, Observation of linear systems with unknown inputs via high-order sliding-mode. Int. J. Syst. Sci. 38(10), 773–791 (2007)

R. Guidorzi, G. Marro, On Wonham stabilizability condition in the synthesis of observers for unknown input systems. IEEE Trans. Autom. Control 16(5), 499–500 (1971)

M. Hautus, Strong detectability and observers. Linear Algebra Appl. 50, 353–368 (1983)

J. He, C. Zhang, Fault reconstruction based on sliding mode observers for nonlinear systems. Math. Probl. Eng. (2012). doi:10.1155/2012/451843

Y. Hong, J. Huang, Y. Xu, On an output feedback finite-time stabilization problem. IEEE Trans. Autom. Control 46(2), 305–309 (2001)

M. Hou, P.C. Müller, Design of observers for linear systems with unknown inputs. IEEE Trans. Autom. Control 37(6), 871–875 (1992)

S. Hui, S.H. Zak, Observer design for systems with unknown inputs. Int. J. Appl. Math. Comput. Sci. 15(4), 431–446 (2005)

B. Kulcsar, J. Bokor, J. Shinar, Unknown input reconstruction for LPV systems. Int. J. Robust Nonlinear Control 20(5), 579–595 (2010)

K.S. Lee, T.G. Park, New results on fault reconstruction using a finite-time converging unknown input observer. IET Control Theory Appl. 6(9), 1258–1269 (2012)

J. Li, Y. Fang, S. Shi, Robust output-feedback for hydraulic servo-position system of cold-strip rolling mill. Control Theory Appl. 29(3), 331–336 (2012)

Y. Li, X. Xia, Y. Shen, A high-gain-based global finite-time nonlinear observer, in 9th IEEE International Conference on Control and Automation (ICCA), Santiago, Chile (2011)

D.G. Luenberger, An introduction to observers. IEEE Trans. Autom. Control 16(6), 596–602 (1971)

M. Lungu, R. Lungu, Reduced order observer for linear time-invariant multivariable systems with unknown inputs. Circuits Syst. Signal Process. 32(6), 2883–2898 (2013)

M. Lungu, R. Lungu, Full-order observer design for linear systems with unknown inputs. Int. J. Control 85(10), 1602–1615 (2012)

G. Marro, F. Morbidi, L. Ntogramatzidis, D. Prattichizzo, Geometric Control Theory for Linear Systems: A Tutorial, in Proceedings of the 19th International Symposium on MTNS, Budapest, Hungary (2010)

T. Menard, E. Moulay, W. Perruquetti, A global high-gain finite-time observer. IEEE Trans. Autom. Control 55(6), 1500–1506 (2010)

P.H. Menold, R. Findeisen, F. Allgower, Finite time convergent observers for linear time-varying systems, in Proceedings of the 11th Mediterranean Conference on Control and Automation (MED’03), Rhodos, Greece (2003)

H. Michalska, D. Mayne, Moving horizon observers and observer-based control. IEEE Trans. Autom. Control 40(6), 995–1006 (1995)

B.P. Molinari, A strong controllability and observability in linear multivariable control. IEEE Trans. Autom. Control 21(5), 761–764 (1976)

J. O’Reilly, Observers for Linear Systems (Academic Press, New York, 1983)

T.G. Park, Estimation strategies for fault isolation of linear systems with disturbances. IET Control Theory Appl. 4(12), 2781–2792 (2010)

W. Perruquetti, T. Floquet, E. Moulay, Finite-time observers: application to secure communication. IEEE Trans. Autom. Control 53(1), 356–360 (2008)

F. Plestan, J.W. Grizzle, E.R. Westervelt, G. Abba, Stable walking of a 7-dof biped robot. IEEE Trans. Robot. Autom. 19(4), 653–668 (2003)

F. Sauvage, M. Guay, D. Dochain, Design of a nonlinear finite-time converging observer for a class of nonlinear systems. J. Control Sci. Eng. (2007). doi:10.1155/2007/36954

S. Shao, P.W. Wheeler, J.C. Clare, Fault detection for modular multilevel converters based on sliding mode observer. IEEE Trans. Power Electron. 28(11), 4867–4872 (2013)

Y. Shen, X. Xia, Semi-global finite-time observers for nonlinear systems. Automatica 44(12), 3152–3156 (2008)

Y. Shtessel, C. Edwards, L. Fridman, A. Levant, Sliding Mode Control and Observation (Springer, New York, 2013)

C.P. Tan, X. Yu, Z. Man, Terminal sliding mode observers for a class of nonlinear systems. Automatica 46(8), 1401–1404 (2010)

H. Trentelman, A.A. Stoorvogel, M. Hautus, Control Theory for Linear Systems, Communications and Control Engineering (Springer, New York, 2001)

V.I. Utkin, Sliding Modes in Control and Optimization (Springer, Berlin, 1992)

B. Walcott, S. Zak, State observation of nonlinear uncertain dynamical systems. IEEE Trans. Autom. Control 32(2), 166–170 (1987)

X.G. Yan, C. Edwards, Adaptive sliding-mode-observer-based fault reconstruction for nonlinear systems with parametric uncertainties. IEEE Trans. Ind. Electron. 55(11), 4029–4036 (2008)

F. Zhu, State estimation and unknown input reconstruction via both reduced-order and high-order sliding mode observers. J. Process. Control 22(1), 296–302 (2012)


Li, F., Zhao, G. & Huang, J. Exact State Estimation for Linear Systems with Unbounded Disturbances. Circuits Syst Signal Process 34, 1519–1533 (2015). https://doi.org/10.1007/s00034-014-9918-y
