Abstract

Mathematical models play a crucial role in controlling and preventing the spread of diseases. Depending on the transmission characteristics of a disease, it is necessary to take into account essential epidemiological factors such as the time an individual needs to progress from being latent to becoming infectious, the infection age, which refers to the time elapsed since the initial infection, and reinfection after a period of improvement, known as relapse. Age-structured models are a powerful tool for incorporating age variables into the modeling process and for understanding how these factors affect the transmission mechanism of diseases. Motivated by these facts, in this paper we reformulate an SEIR model with relapse and age structure in both the latent and infected classes. We then investigate the asymptotic behavior of the model by using the stability theory of differential equations. For this purpose, we introduce the basic reproduction number \(\mathcal {R}_0\) of the model and show that this threshold parameter completely governs the stability of each equilibrium. Our approach to global attractivity is based on the fluctuation lemma and the Lyapunov functional method, combined with results from persistence theory. We conclude that the disease-free equilibrium is globally asymptotically stable if \(\mathcal {R}_0<1\), whereas the system has a unique positive endemic equilibrium, which is globally asymptotically stable, whenever \(\mathcal {R}_0>1\). Our results suggest that early diagnosis of latent infection, together with a decrease in both the transmission and relapse rates, may help control and restrict the spread of the disease. The theoretical results are illustrated with numerical simulations, which indicate that the age variable is an essential factor affecting the spread of the epidemic.


1 Introduction

Infectious diseases pose a significant threat to human health and economic growth. Consequently, controlling and reducing these diseases has become an increasingly shared priority. Mathematical modeling is a useful tool to investigate various characteristics of diseases, such as their rapid spread, transmission routes, incubation period, and relapse phase. Compartmental models are an elegant way to understand how infectious diseases spread and to identify the epidemiological factors that influence the propagation of a disease throughout the population [1,2,3]. Usually, epidemiological models assume that susceptible individuals can progress to different categories, such as exposed, infected, reinfected, and recovered. Among the many models used to study the transmission dynamics of infectious diseases, susceptible-infective-recovered (SIR) models have received particular attention. In 1927, Kermack and McKendrick [4] were the first to develop the SIR model, in which the total population is divided into three classes: susceptible, infective, and recovered. The model was initially designed to study infectious diseases such as measles, chickenpox, or mumps, for which infection confers immunity. Since then, several versions of the SIR compartmental model have been developed to study different characteristics of infectious diseases; see, for example, [5,6,7,8,9] and the references therein.

In some infectious diseases, there is a significant incubation period during which an individual has been infected but is not yet infectious [10]. For example, tuberculosis may take several months to develop into the infectious stage. To account for this fact, a class of individuals who have been exposed to the disease but cannot yet transmit it has been added to the SIR epidemic model, yielding the SEIR epidemic model (see, e.g., [11,12,13]). Moreover, due to several factors, a recovered individual can become infected again after a period of improvement, which is known as the relapse phase (see, e.g., [14,15,16]). Identifying the factors that lead to reinfection in recovered individuals allows public health services to create prevention programs and develop control strategies that eliminate such factors and reduce the relapse rate [17, 18].

Most of these models are based on ordinary differential equations (ODEs), which assume that all individuals in a given class have the same waiting time. However, the duration of the latent period of exposed individuals may vary significantly depending on factors such as the specificity of the infection and the individual’s health status. For example, latent tuberculosis can remain inactive without causing disease for months, years, or even decades before becoming infectious, and it can then develop into a severe, highly contagious, and deadly disease if left untreated or incompletely treated [19, 20]. Furthermore, the infection age, which refers to the time elapsed since the initial infection, is an important epidemiological element that plays a vital role in the modeling of infectious diseases, particularly HIV/AIDS and hepatitis B (see, e.g., [21,22,23,24]).

In fact, age-structured models are a powerful tool for studying the epidemiology of infectious diseases (see, e.g., [24,25,26]). Moreover, by investigating the global dynamics of these epidemic models, we can understand how a disease spreads through the population, with the aim of developing control strategies that reduce or even eradicate it. For example, Magal et al. [27] investigated the global stability of the endemic equilibrium of an age-structured model with infection age using classical Volterra-type Lyapunov functions. These functions were also used by McCluskey [28] to establish the global stability of a delayed SIR (susceptible-infected-removed) model with a fixed infection period, and by Melnik and Korobeinikov [29] to obtain the global stability of SIR and SEIR models with age-dependent susceptibility. For further developments of the Lyapunov functional approach to the global dynamics of infectious diseases, we refer to [1, 18, 30,31,32,33,34,35,36,37].

Motivated by the above works, this paper introduces and analyzes an SEIR epidemic model with latency, infection age structure, and relapse. This model is appropriate for diseases that have latent periods and can relapse, such as tuberculosis and herpes virus infections. To the best of our knowledge, the model is new: both the latent and the infected classes are structured by continuous age, and the model includes a relapse phase.

This study aims to clarify the global asymptotic behavior of the model by using the Lyapunov function method, which consists of constructing an appropriate Lyapunov function. For this purpose, we establish the threshold parameter \(\mathcal {R}_0\) in connection with the existence of the endemic equilibrium of the model. Then, we show that it determines the global asymptotic stability (or attractivity) of each equilibrium, that is, if \(\mathcal {R}_0<1\), then the disease-free equilibrium is globally asymptotically stable, whereas if \(\mathcal {R}_0>1\), then the endemic equilibrium uniquely exists and it is globally attractive.

The rest of this paper is organized as follows. In Sect. 2, we reformulate the mathematical model and provide some important preliminary concepts. In Sect. 3, we show the existence of the disease-free and endemic equilibria of the model and analyze their local asymptotic stability by the linearization approach. Section 4 is devoted to the relative compactness of the solution semi-flow and the existence of a global attractor. In Sect. 5, we prove the uniform persistence of the model. Section 6 discusses the global asymptotic stability of the disease-free and endemic equilibria by employing the Lyapunov functional technique. Finally, Sect. 7 presents numerical simulations that illustrate our theoretical results.

2 Model formulation

To build our model, we assume that the population is divided into four classes, namely, susceptible, exposed, infected, and recovered. Let S(t) denote the number of susceptible individuals at time t, e(t, a) the density of exposed individuals (infected but not yet infectious) at time t with latency age a, i(t, b) the density of infected individuals at time t with infection age b, and R(t) the number of recovered individuals at time t. The model is based on the following assumptions:

- i. Susceptible individuals become infected when they come into contact with infectious individuals. We use the bilinear incidence rate \(\displaystyle S(t) \int \nolimits _{0}^{\infty } \vartheta (b) i(t,b) \text {d} b\), where \(\vartheta (b)\) is the age-dependent transmission coefficient describing the contact process between susceptible and infectious individuals.

- ii. Exposed individuals leave the latent class and become infectious at the age-dependent rate \(\varphi (a)\). Thus, the total rate at which exposed individuals progress to the infectious class is \(\displaystyle \int \nolimits _{0}^{\infty } \varphi (a) e(t,a) \text {d} a\).

- iii. Infected individuals move to the recovered class at the age-dependent rate \(\psi (b)\). As a result, the total rate at which infected individuals recover is \(\displaystyle \int \nolimits _{0}^{\infty } \psi (b) i(t,b)\text {d} b\).

- iv. After the improvement period, a recovered individual may relapse and become infectious again at rate \(\delta \). Consequently, the flow of relapsing individuals is given by the linear relapse term \(\delta R(t)\).

- v. The natural mortality rate for all individuals is \(\mu \). Moreover, the disease-induced death rates of exposed and infectious individuals are \(\nu _1(a)\) and \(\nu _2(b)\), respectively.

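Therefore, according to the above assumptions, the model of disease spread reads as follows:

$$\begin{aligned} \frac{\text {d} S(t)}{\text {d} t}&= A - \mu S(t) - S(t) J(t), \\ \frac{\partial e(t,a)}{\partial t} + \frac{\partial e(t,a)}{\partial a}&= -\big (\varphi (a) + \nu _1(a) + \mu \big ) e(t,a), \\ \frac{\partial i(t,b)}{\partial t} + \frac{\partial i(t,b)}{\partial b}&= -\big (\psi (b) + \nu _2(b) + \mu \big ) i(t,b), \\ \frac{\text {d} R(t)}{\text {d} t}&= \int \nolimits _{0}^{\infty } \psi (b) i(t,b) \text {d} b - (\mu + \delta ) R(t). \end{aligned}\tag{1}$$

Here, \(A>0\) denotes the recruitment rate of susceptible individuals. System (1) is subject to the boundary conditions

$$\begin{aligned} e(t,0) = S(t) J(t), \qquad i(t,0) = W(t), \end{aligned}\tag{2}$$

and to initial conditions, which for biological reasons are nonnegative,

$$\begin{aligned} S(0) = S_0, \quad e(0,a) = e_0(a), \quad i(0,b) = i_0(b), \quad R(0) = R_0, \end{aligned}\tag{3}$$

where

$$\begin{aligned} J(t) := \int \nolimits _{0}^{\infty } \vartheta (b) i(t,b) \text {d} b, \end{aligned}\tag{4}$$

$$\begin{aligned} W(t) := \int \nolimits _{0}^{\infty } \varphi (a) e(t,a) \text {d} a + \delta R(t). \end{aligned}\tag{5}$$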

Throughout this paper, we consider the following assumptions and notations:

Assumption A

Assume that

- i.: A, \(\mu \), \(\delta >0\), and \(\nu _1, \nu _2, \varphi , \psi , \vartheta \in L_{+}^{\infty }(0, \infty )\).

- ii.: \(\vartheta \) and \(\varphi \) are Lipschitz continuous functions on \(\mathbb {R}_+\), with Lipschitz coefficients \(L_\vartheta \) and \(L_\varphi \), respectively.

- iii.: For any function \(\pi \in L_{+}^{\infty }(0, \infty )\), we denote

$$\begin{aligned} \underline{\pi }:={\text {ess}}\inf \limits _{\tau \in \mathbb {R}_+} \pi (\tau )<+\infty , \quad \text {and} \quad \overline{\pi }:={\text {ess}}\sup \limits _{\tau \in \mathbb {R}_+} \pi ({\tau })<+\infty . \end{aligned}$$

Let us define the following functional space:

equipped with the norm

Notice that the initial condition of system (1)–(3) can be expressed as follows:

By the standard theory of functional differential equations (see, e.g., [38, 39]), we can verify that system (1) with boundary conditions (2) and initial condition (3) has a unique nonnegative continuous solution (S, e, i, R).
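The existence result above can be complemented by a numerical approximation of the solution. The following Python (NumPy) sketch discretizes the system implied by assumptions i–v with the same step in time and in age, so that the densities are transported exactly along the characteristics \(t-a=\text{const}\) and \(t-b=\text{const}\); the unknowns S and R are updated semi-implicitly so that every computed quantity stays nonnegative. All rate functions, parameter values, the truncation of the age interval, and the function name `simulate_seir_relapse` are hypothetical choices for illustration, not the values used in Sect. 7.

```python
import numpy as np


def simulate_seir_relapse(T=300.0, h=0.05, age_max=80.0,
                          A=1.0, mu=0.02, delta=0.05, S_init=40.0):
    """Approximate (S, e, i, R) on a truncated age grid; all rates are hypothetical."""
    ages = np.arange(0.0, age_max, h)            # shared grid for latency age a and infection age b
    theta = 0.004 * ages * np.exp(-0.2 * ages)   # vartheta(b): transmission rate
    phi = 0.3 * (1.0 - np.exp(-0.5 * ages))      # varphi(a): progression rate e -> i
    psi = np.full_like(ages, 0.1)                # psi(b): recovery rate i -> R
    nu1 = np.full_like(ages, 0.001)              # nu_1(a): disease death rate of exposed
    nu2 = np.full_like(ages, 0.01)               # nu_2(b): disease death rate of infected

    rate_e = phi + nu1 + mu                      # total exit rate from e(t, a)
    rate_i = psi + nu2 + mu                      # total exit rate from i(t, b)
    surv_e = np.exp(-h * rate_e)                 # survival over one step along a characteristic
    surv_i = np.exp(-h * rate_i)

    S, R = S_init, 0.0
    e = np.zeros_like(ages)                      # e_0(a) = 0
    i = np.exp(-ages)                            # i_0(b): small initial infected density
    N_max = S + h * e.sum() + h * i.sum() + R
    for _ in range(int(round(T / h))):
        # Mass leaving each age cell during the step, attributed to progression / recovery.
        progressed = h * np.sum(e * (1.0 - surv_e) * phi / rate_e)
        recovered = h * np.sum(i * (1.0 - surv_i) * psi / rate_i)
        J = h * np.sum(theta * i)                # force of infection (rectangle rule)

        # Semi-implicit updates: S and R stay nonnegative for any step size.
        S = (S + h * A) / (1.0 + h * (mu + J))
        R = (R + recovered) / (1.0 + h * (mu + delta))

        # Transport one age step; the content of the oldest cell leaves the grid.
        e[1:] = e[:-1] * surv_e[:-1]
        i[1:] = i[:-1] * surv_i[:-1]
        e[0] = S * J                             # boundary: e(t, 0) = S(t) J(t)
        i[0] = progressed / h + delta * R        # boundary: progression inflow plus relapse

        N_max = max(N_max, S + h * e.sum() + h * i.sum() + R)
    return S, e, i, R, N_max


S, e, i, R, N_max = simulate_seir_relapse()
print(f"S = {S:.3f}, R = {R:.3f}, largest total population = {N_max:.3f}")
```

Because the infection, progression, recovery and relapse fluxes are moved between classes as exact masses, the only losses are deaths and truncation, so the discrete total population mirrors the bound \(A/\mu _0\) used in Proposition 2.1.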

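Next, we define a continuous semi-flow \(\Phi \,: \, \mathbb {R}_+ \times X \rightarrow X\) generated by system (1)–(3) by

$$\begin{aligned} \Phi (t, x_0) := \big (S(t), e(t, \cdot ), i(t, \cdot ), R(t)\big ), \quad t \ge 0, \ x_0 \in X. \end{aligned}\tag{6}$$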

Hence,

Denote

where

Let

Then, we define the following biologically feasible region

Now, we can prove the following result:

Proposition 2.1

Considering system (1)–(3), then we have

- i.: \(\Gamma \) is positively invariant for \(\{\Phi (t,x_0) \}_{t\ge 0}\), that is, \(\Phi (t,x_0) \in \Gamma \) for \(x_0 \in \Gamma \) and \(t\ge 0\);

- ii.: \(\{\Phi (t,x_0)\}_{t\ge 0}\) is point dissipative and \(\Gamma \) attracts all points in X.

Proof

First, by the definition of the semi-flow \(\Phi \) given by (6), we have

where \(p_1\) and \(p_2\) are given by (7). Therefore, using the boundary conditions (2), we get

where \(\mu _0\) is given by (8). Hence, by the variation of constants formula, we find

which implies that \(\Phi (t,x_0) \in \Gamma \), \(t\ge 0\), for any solution of system (1)–(3) with \(x_0 \in \Gamma \). Thus, \(\Gamma \) is positively invariant under \(\{\Phi (t,x_0)\}_{t\ge 0}\). Moreover, it follows from (10) that \(\displaystyle \limsup _{t\rightarrow \infty }\big \Vert \Phi (t,x_0) \big \Vert _{X} \le \frac{A}{\mu _0}\) for any \(x_0 \in X\). Consequently, \(\{\Phi (t,x_0)\}_{t\ge 0}\) is point dissipative and \(\Gamma \) attracts all points in X. This completes the proof. \(\square \)
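The variation-of-constants step can be written out explicitly. The sketch below assumes that the total population \(N(t) := \Vert \Phi (t,x_0)\Vert _X\) satisfies the differential inequality \(N'(t) \le A - \mu _0 N(t)\) and that \(\Gamma \) consists of the points \(x \in X\) with \(\Vert x\Vert _X \le A/\mu _0\); both forms are assumptions of this sketch rather than quotations of (9) and of the definition of \(\Gamma \):

$$\begin{aligned} \frac{\text {d}}{\text {d} t}\big (e^{\mu _0 t} N(t)\big )&= e^{\mu _0 t}\big (N'(t) + \mu _0 N(t)\big ) \le A e^{\mu _0 t}, \\ N(t)&\le N(0) e^{-\mu _0 t} + \frac{A}{\mu _0}\big (1 - e^{-\mu _0 t}\big ) = \frac{A}{\mu _0} + \Big (N(0) - \frac{A}{\mu _0}\Big ) e^{-\mu _0 t}. \end{aligned}$$

Hence \(N(0) \le A/\mu _0\) implies \(N(t) \le A/\mu _0\) for all \(t \ge 0\) (positive invariance), and \(\limsup _{t\rightarrow \infty } N(t) \le A/\mu _0\) for every initial value (point dissipativity).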

The following properties are direct consequences of Proposition 2.1.

Proposition 2.2

If \(x_0 \in X\) and \(\Vert x_0 \Vert _X \le \eta \) for some constant \(\eta \ge \frac{A}{\mu _0}\), then the following statements hold true for \(t\ge 0\):

- i.: \( 0 \le S(t), \, \displaystyle \int \limits _{0}^{\infty } e(t,a) \text {d} a, \, \displaystyle \int \limits _{0}^{\infty } i(t,b) \text {d} b, \, R(t) \le \eta \);

- ii.: \(e(t,0) \le \overline{\vartheta }\, \eta ^2\), and \( i(t,0) \le (\overline{\varphi } + \delta )\eta \);

- iii.: \(\displaystyle \liminf _{t \rightarrow \infty } S(t) \ge \frac{A}{\mu + \overline{\vartheta } \eta }\).
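Items ii and iii follow from item i by direct estimates. The sketch below assumes the boundary conditions \(e(t,0) = S(t)\int _0^\infty \vartheta (b)i(t,b)\,\text {d}b\) and \(i(t,0) = \int _0^\infty \varphi (a)e(t,a)\,\text {d}a + \delta R(t)\) implied by assumptions i, ii and iv; note that bounding \(\int _0^\infty \vartheta (b) i(t,b)\,\text {d} b\) from above requires the essential supremum \(\overline{\vartheta }\):

$$\begin{aligned} e(t,0)&\le \overline{\vartheta }\, S(t) \int \nolimits _{0}^{\infty } i(t,b)\,\text {d} b \le \overline{\vartheta }\,\eta ^2, \qquad i(t,0) \le \overline{\varphi } \int \nolimits _{0}^{\infty } e(t,a)\,\text {d} a + \delta R(t) \le (\overline{\varphi } + \delta )\eta , \\ \frac{\text {d} S(t)}{\text {d} t}&\ge A - \big (\mu + \overline{\vartheta }\,\eta \big ) S(t) \quad \Longrightarrow \quad S(t) \ge \frac{A}{\mu + \overline{\vartheta }\eta } + \Big (S(0) - \frac{A}{\mu + \overline{\vartheta }\eta }\Big ) e^{-(\mu + \overline{\vartheta }\eta ) t}. \end{aligned}$$

Letting \(t \rightarrow \infty \) gives \(\liminf _{t\rightarrow \infty } S(t) \ge A/(\mu + \overline{\vartheta }\eta )\).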

3 Existence and local stability of equilibria

In this section, we will establish the existence of both disease-free and endemic equilibria of system (1)–(3). Furthermore, we will analyze the local asymptotic stability of these equilibria by using the linearization technique described in Webb [26, Section 4.5]. Before going on, for the sake of clarity, let us introduce the following notations:

with

Clearly, \(\zeta _1, \zeta _3 \le 1\). We now state the following result on the existence of the disease-free equilibrium:

Lemma 3.1

System (1)–(3) always has a unique disease-free equilibrium \(E^0 = (S^0, 0, 0, 0)\), where

$$\begin{aligned} S^0 = \frac{A}{\mu }. \end{aligned}\tag{13}$$

Proof

When there is no disease transmission (i.e., \(e(t,a)=i(t,b) =R(t)=0\) for all \(t,a,b \in \mathbb {R}_{+}\)), the disease-free equilibrium of system (1)–(3) must satisfy \(A-\mu S^0=0\), which gives \(S^0 = \frac{A}{\mu }\). Hence, without any restriction on the parameters, system (1)–(3) admits a unique disease-free equilibrium \(E^0=(S^0, 0, 0,0)\). This proves Lemma 3.1. \(\square \)

Next, we study the local asymptotic stability of the disease-free equilibrium \(E^0\) given in Lemma 3.1. Investigating the dynamical behavior of the disease-free equilibrium aims to identify the impact of disease elimination on the population.

Theorem 3.2

Let \(\mathcal {R}_0\) be given by (21). Then, the disease-free equilibrium \(E^0\) of system (1)–(3) is locally asymptotically stable if \(\mathcal {R}_0<1\), whereas it is unstable if \(\mathcal {R}_0>1\).

Proof

Let \(S^0\) be given by (13). Consider the perturbation variables \( \tilde{S}(t) = S(t)-S^0\), \(\tilde{e}(t,a)=e(t,a)\), \(\tilde{i}(t,b)= i(t,b) \), and \(\tilde{R}(t)=R(t)\). Linearizing system (1)–(3) around \(E^0\), we obtain

Furthermore, consider exponential solutions of the form \(\tilde{S}(t)= x_1 e^{\lambda t}\), \(\tilde{e}(t,a) = x_2(a) e^{\lambda t}\), \(\tilde{i}(t,b) = x_3(b) e^{\lambda t}\), and \(\tilde{R}(t)= x_4 e^{\lambda t}\), where \((x_1, x_2(a), x_3(b), x_4) \in X\) is to be determined and \(\lambda \in \mathbb {C}\). Substituting them into system (14) yields

Solving the second and third differential equations of system (15), we obtain

and

Before going on, we need to show that \(\lambda + \mu \not =0\) and \(\lambda + \mu +\delta \not =0\). To this end, suppose by contradiction that \(\lambda +\mu +\delta =0\). Then, inserting (17) into the fourth equation of system (15), we get \(x_3(0)=0\). This, together with the first equation of system (15), gives \(\lambda +\mu =0\), which is a contradiction since \(\delta >0\). Similarly, we can show that \(\lambda +\mu \not =0\). From the fourth equation of system (15), we obtain

Next, by substituting (16), (17) and (18) into the last equation of system (15), we find

where \(\widehat{\zeta }_1( \lambda ) \), \(\widehat{\zeta }_2( \lambda )\), and \( \widehat{\zeta }_3( \lambda )\) are the Laplace transforms of the functions \(\varphi \phi _1\), \(\vartheta \phi _2\) and \(\psi \phi _2\), respectively. Thus, the characteristic equation of the linear system (15) at \(E^0\) can be expressed as follows:

Note that G is a continuously differentiable function satisfying

Therefore, G is monotonically decreasing in \(\lambda \in \mathbb {R}\). Thus, by the intermediate value theorem, the equation \(G(\lambda )=1\) has a positive real root if \(G(0) > 1\). Hence, the disease-free equilibrium \(E^0\) of system (1)–(3) is unstable when \(G(0)>1\). Next, we consider \(G(0)<1\) and claim that all roots of Eq. (19) have negative real parts. To this end, suppose that \(\lambda \) is a complex root of \(G(\lambda ) = 1\) with \(\text {Re} (\lambda )\ge 0\). Taking the real part of Eq. (19) yields

where we have used \(\text {Re} (\lambda )\ge 0\). Hence, we have

which contradicts the assumption \(G(0)<1\). Thus, every eigenvalue \(\lambda \) of Eq. (19) has a negative real part if \(G(0) < 1\). At this stage, we define the basic reproduction number of system (1)–(3) by

$$\begin{aligned} \mathcal {R}_0 := G(0) = S^0 \zeta _1 \zeta _2 + \frac{\delta \zeta _3}{\mu + \delta }, \end{aligned}\tag{21}$$

which is the average number of secondary infections produced by an infected individual in a completely susceptible population (see, e.g., [3] for more details). In conclusion, it follows from the above analysis that the disease-free equilibrium \(E^0\) of system (1)–(3) is locally asymptotically stable whenever \(\mathcal {R}_0 < 1\) and unstable if \(\mathcal {R}_0 >1\). This completes the proof. \(\square \)
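To make the threshold concrete, the following Python (NumPy) sketch evaluates \(\mathcal {R}_{01} = S^0\zeta _1\zeta _2\), \(\mathcal {R}_{02} = \delta \zeta _3/(\mu +\delta )\) and \(\mathcal {R}_0\) by quadrature and, when \(\mathcal {R}_0>1\), locates the positive real root of \(G(\lambda )=1\) by bisection, relying on the monotonicity of G on the real line. It assumes that the characteristic function has the form \(G(\lambda ) = S^0\widehat{\zeta }_1(\lambda )\widehat{\zeta }_2(\lambda ) + \delta \widehat{\zeta }_3(\lambda )/(\lambda +\mu +\delta )\), which is what the linearization yields for the model described by assumptions i–v. The rate functions, parameter values, and the truncation of the age interval at 200 are hypothetical.

```python
import numpy as np

# Hypothetical parameters and age-dependent rates (illustration only, not from Sect. 7).
A, mu, delta = 1.0, 0.02, 0.05
x = np.linspace(0.0, 200.0, 20001)           # truncated grid used for both a and b
theta = 0.004 * x * np.exp(-0.2 * x)         # vartheta(b)
phi = 0.3 * (1.0 - np.exp(-0.5 * x))         # varphi(a)
psi = np.full_like(x, 0.1)                   # psi(b)
nu1 = np.full_like(x, 0.001)                 # nu_1(a)
nu2 = np.full_like(x, 0.01)                  # nu_2(b)


def trapezoid(f):
    """Composite trapezoidal rule on the grid x."""
    return float(np.sum((f[1:] + f[:-1]) * np.diff(x)) / 2.0)


def survival(rate):
    """exp(-int_0^s rate): the survival functions phi_1(a) and phi_2(b)."""
    steps = (rate[1:] + rate[:-1]) / 2.0 * np.diff(x)
    return np.exp(-np.concatenate(([0.0], np.cumsum(steps))))


phi1 = survival(phi + nu1 + mu)
phi2 = survival(psi + nu2 + mu)
S0 = A / mu


def laplace(f, lam):
    """Laplace transform of f at a real lam >= 0, truncated to the grid."""
    return trapezoid(f * np.exp(-lam * x))


def G(lam):
    """Assumed characteristic function at E^0 (see the lead-in)."""
    z1 = laplace(phi * phi1, lam)
    z2 = laplace(theta * phi2, lam)
    z3 = laplace(psi * phi2, lam)
    return S0 * z1 * z2 + delta * z3 / (lam + mu + delta)


def positive_root(tol=1e-10):
    """Bisection for G(lam) = 1 on lam > 0; requires G(0) > 1."""
    lo, hi = 0.0, 1.0
    while G(hi) > 1.0:      # G decreases to 0 as lam grows, so this loop ends
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if G(mid) > 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


R01 = S0 * laplace(phi * phi1, 0.0) * laplace(theta * phi2, 0.0)
R02 = delta * laplace(psi * phi2, 0.0) / (mu + delta)
R0 = G(0.0)
print(f"R01 = {R01:.4f}, R02 = {R02:.4f}, R0 = {R0:.4f}")
if R0 > 1.0:
    print(f"positive real eigenvalue: {positive_root():.5f}")
```

A positive real root signals the instability of \(E^0\) stated in Theorem 3.2; for \(\mathcal {R}_0<1\) the sketch deliberately skips the root search, since the dominant root is then negative and may lie outside the half-line where the truncated transforms are evaluated.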

Remark 3.3

Denote

$$\begin{aligned} \tilde{\zeta }_3 := \frac{\delta }{\mu + \delta }\, \zeta _3. \end{aligned}\tag{22}$$

According to (11), we have \( \zeta _3 = \displaystyle \int \limits _{0}^{\infty } \psi (b) e^{-\int \limits _{0}^{b}(\psi (\sigma ) + \nu _2(\sigma )+\mu ) \text {d} \sigma } \text {d} b \le 1\). Therefore, since \(\frac{\delta }{\mu + \delta } <1\), it follows that \(\tilde{\zeta }_3 <1\).

Remark 3.4

Recall that \(\vartheta (b)\) represents the age-dependent transmission rate and \(\varphi (a)\) denotes the rate at which an exposed individual becomes infectious. Thus,

$$\begin{aligned} \mathcal {R}_{01} := S^0 \, \zeta _1 \, \zeta _2 \end{aligned}$$

is the number of infectious individuals produced by a primary case after the incubation period, where

- i.: \(S^0 = \frac{A}{\mu }\) denotes the initial susceptible population size;

- ii.: \(\zeta _1 = \displaystyle \int \limits _{0}^{\infty } \varphi (a)\phi _1(a) \text {d} a\) denotes the probability that an exposed individual becomes infectious;

- iii.: \(\zeta _2 = \displaystyle \int \limits _{0}^{\infty } \vartheta (b)\phi _2(b) \text {d} b\) represents the total transmission rate of an infectious individual over their infectious period.

Moreover, since \(\psi (b)\) is the rate at which an infectious individual recovers and \(\delta \) is the relapse rate,

$$\begin{aligned} \mathcal {R}_{02} := \frac{\delta }{\mu + \delta }\, \zeta _3 \end{aligned}$$

is the number of infectious cases produced by a primary case after the relapse phase, where

- iv.: \(\frac{\delta }{\mu + \delta }\) refers to the proportion of recovered individuals who relapse and become infectious again;

- v.: \(\zeta _3 = \displaystyle \int \limits _{0}^{\infty } \psi (b)\phi _2(b) \text {d} b\) represents the probability that an infectious individual survives the infectious period and recovers.

Consequently, the basic reproduction number \(\mathcal {R}_0\) of system (1)–(3) can be expressed in the following way \(\mathcal {R}_0 = \mathcal {R}_{01} + \mathcal {R}_{02}\).
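The splitting \(\mathcal {R}_0 = \mathcal {R}_{01} + \mathcal {R}_{02}\) can be traced back to the characteristic equation. The derivation below is a sketch that assumes the linearized system (15) is the one obtained from the model described by assumptions i–v at \(E^0\), with \(\widehat{\zeta }_1, \widehat{\zeta }_2, \widehat{\zeta }_3\) the Laplace transforms of \(\varphi \phi _1\), \(\vartheta \phi _2\), \(\psi \phi _2\), and \(\zeta _k = \widehat{\zeta }_k(0)\). For a nontrivial mode with \(x_3(0) \ne 0\):

$$\begin{aligned} x_2(a)&= x_2(0)e^{-\lambda a}\phi _1(a), \qquad x_3(b) = x_3(0)e^{-\lambda b}\phi _2(b), \\ (\lambda +\mu +\delta )x_4&= \int \nolimits _{0}^{\infty } \psi (b)x_3(b)\,\text {d}b = x_3(0)\widehat{\zeta }_3(\lambda ), \\ x_2(0)&= S^0\int \nolimits _{0}^{\infty } \vartheta (b)x_3(b)\,\text {d}b = S^0 x_3(0)\widehat{\zeta }_2(\lambda ), \\ x_3(0)&= \int \nolimits _{0}^{\infty } \varphi (a)x_2(a)\,\text {d}a + \delta x_4 = x_3(0)\Big (S^0\widehat{\zeta }_1(\lambda )\widehat{\zeta }_2(\lambda ) + \frac{\delta \widehat{\zeta }_3(\lambda )}{\lambda +\mu +\delta }\Big ). \end{aligned}$$

Dividing the last line by \(x_3(0)\) gives \(G(\lambda )=1\) with

$$\begin{aligned} G(\lambda ) = S^0\widehat{\zeta }_1(\lambda )\widehat{\zeta }_2(\lambda ) + \frac{\delta \widehat{\zeta }_3(\lambda )}{\lambda +\mu +\delta }, \qquad G(0) = \underbrace{S^0\zeta _1\zeta _2}_{\mathcal {R}_{01}} + \underbrace{\frac{\delta }{\mu +\delta }\zeta _3}_{\mathcal {R}_{02}}. \end{aligned}$$

The first term collects new infections passing through the latent class, and the second collects secondary infectious periods generated by relapse.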

Next, we investigate the existence and local asymptotic stability of the endemic equilibrium of system (1)–(3). First, we give the following result, which ensures the existence of the endemic equilibrium when \(\mathcal {R}_0>1\).

Lemma 3.5

Let \(\mathcal {R}_0\) be defined by (21). Then, system (1)–(3) has a unique endemic equilibrium \(E^*= (S^*, e^*(a), i^*(b), R^*)\), if \(\mathcal {R}_0>1\), where

where \(\tilde{\zeta }_3<1\) is given by (22).

Proof

For system (1)–(3), an endemic equilibrium \(E^* = (S^*, e^*(a), i^*(b), R^*)\) must satisfy the following system

From the second and third differential equations of system (23), we obtain

and

where \(\phi _1(a)\) and \(\phi _2(b)\) are provided by (12). Furthermore, from the fourth equation of system (23) and by using (25), we can obtain

Next, by inserting (26) into the last equation of (23) and by using (24) and (25), we find

which implies that

The fact that \(\tilde{\zeta }_3 <1\) ensures that \(S^*\) is positive. Moreover, substituting (27) into the first equation of system (23) gives

Then, from the fifth equation of system (23) and by using (28), it follows

Lastly, by inserting (28) into Eq. (26), we get

This completes the proof of Lemma 3.5. \(\square \)
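Under the additional, hypothetical simplification that all rates are constant, \(\phi _1(a) = e^{-(\varphi +\nu _1+\mu )a}\) and \(\phi _2(b) = e^{-(\psi +\nu _2+\mu )b}\), so \(\zeta _1, \zeta _2, \zeta _3\) have closed forms and the endemic equilibrium can be computed directly. The Python sketch below uses relations derived here from the equilibrium equations of the model described by assumptions i–v (rather than quoted from (24)–(29)): \(S^* = (1-\tilde{\zeta }_3)/(\zeta _1\zeta _2)\), \(J^* = (A-\mu S^*)/S^*\), \(i^*(0) = J^*/\zeta _2\), \(e^*(0) = S^*J^*\) and \(R^* = \zeta _3 i^*(0)/(\mu +\delta )\). The function name and parameter values are illustrative only.

```python
def endemic_equilibrium(A, mu, delta, phi, nu1, theta, psi, nu2):
    """Endemic equilibrium data for constant rates (hypothetical simplification).

    With constant rates, phi_1(a) = exp(-(phi + nu1 + mu) a) and
    phi_2(b) = exp(-(psi + nu2 + mu) b), so the zeta's have closed forms.
    Returns None when R0 <= 1 (no endemic equilibrium).
    """
    zeta1 = phi / (phi + nu1 + mu)            # int varphi * phi_1
    zeta2 = theta / (psi + nu2 + mu)          # int vartheta * phi_2
    zeta3 = psi / (psi + nu2 + mu)            # int psi * phi_2
    zeta3_tilde = delta * zeta3 / (mu + delta)
    S0 = A / mu
    R0 = S0 * zeta1 * zeta2 + zeta3_tilde
    if R0 <= 1.0:
        return None
    S = (1.0 - zeta3_tilde) / (zeta1 * zeta2)  # from i*(0) = zeta1 e*(0) + delta R*
    J = (A - mu * S) / S                       # from A - mu S* - S* J* = 0
    i0 = J / zeta2                             # i*(0), since J* = zeta2 i*(0)
    e0 = S * J                                 # e*(0) = S* J*
    R = zeta3 * i0 / (mu + delta)              # R-equation at equilibrium
    return {"R0": R0, "S": S, "J": J, "e0": e0, "i0": i0, "R": R,
            "zeta1": zeta1, "zeta2": zeta2, "zeta3": zeta3}


eq = endemic_equilibrium(A=1.0, mu=0.02, delta=0.05, phi=0.3, nu1=0.001,
                         theta=0.002, psi=0.1, nu2=0.01)
print(eq)
```

The densities then follow as \(e^*(a) = e^*(0)\phi _1(a)\) and \(i^*(b) = i^*(0)\phi _2(b)\); the check \(S^* < S^0\) is exactly the condition \(\mathcal {R}_0 > 1\).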

Remark 3.6

Note that \(e^*(0)\) and \(i^*(0)\) can be written as follows: \(e^*(0) = S^* J^*\) and \(i^*(0)= W^*\), where

We can also observe that when \(J^*=0\), the endemic equilibrium \(E^*\) becomes the disease-free equilibrium \(E^0\).

In what follows, we analyze the local asymptotic stability of \(E^*\). Notice that the study of the dynamical behavior of the endemic equilibrium is intended to determine how disease spreads when it becomes endemic in a population.

Theorem 3.7

Suppose \(\mathcal {R}_0>1\). Then, the endemic equilibrium \(E^*\) is locally asymptotically stable.

Proof

Let \(S^*\), \(e^*(a)\), \(i^*(b)\), and \(R^*\) be given in Lemma 3.5. Consider the following perturbation variables: \(\bar{S}(t) = S(t)- S^* \), \(\bar{e}(t,a) = e(t,a) - e^*(a)\), \(\bar{i}(t,b) = i(t,b) -i^*(b)\), and \(\bar{R}(t)= R(t)- R^*\). Then, through linearization of system (1)–(3) around \(E^*\), we obtain the following linearized system:

where \(J^*\) is given by (29). Next, we consider exponential solutions of the form \(\bar{S}(t)= y_1 e^{\omega t}\), \(\bar{e}(t,a) = y_2(a) e^{\omega t}\), \(\bar{i}(t,b) = y_3(b) e^{\omega t}\) and \(\bar{R}(t)= y_4 e^{\omega t}\), where \((y_1, y_2(a), y_3(b), y_4) \in X\) is to be determined and \(\omega \in \mathbb {C}\). Substituting them into system (30) yields

Therefore, the solutions of the second and third differential equations of system (31) are given, respectively, by

and

Now, we show that \(\omega +\mu +J^*\not =0\) and \(\omega + \mu +\delta \not =0\). To this end, assume that \(\omega +\mu +\delta =0\). Then, it follows from (33) and the fourth equation of system (31) that \(y_3(0)=0\). Hence, the first equation of system (31) gives \(\omega +\mu +J^*=0\), which leads to a contradiction. In the same way, we can show that \(\omega +\mu +J^* \not =0\). Next, in view of system (31), the characteristic equation of the linearized system (30) at \(E^*\) can be expressed as follows:

where \(\widehat{\zeta }_1( \omega ) \), \(\widehat{\zeta }_2( \omega )\) and \( \widehat{\zeta }_3( \omega )\) are the Laplace transforms of the functions \(\varphi \phi _1\), \(\vartheta \phi _2\) and \(\psi \phi _2\), respectively. Thus, Eq. (34) can be rewritten as follows:

It is easy to see that if \(\mathcal {R}_0>1\), then

Assume that the equation \(H(\omega )=1\) has a complex root \(\omega \) such that \(\text {Re} (\omega )\ge 0\). Then, by taking the real part of Eq. (35), we have

which contradicts inequality (36), since \(\mathcal {R}_0>1\). Consequently, every eigenvalue \(\omega \) of \(H(\omega ) = 1\) has a negative real part if \(\mathcal {R}_0 > 1\). Therefore, the endemic equilibrium \(E^*\) is locally asymptotically stable if \(\mathcal {R}_0>1\). This completes the proof. \(\square \)

Remark 3.8

Biologically speaking, Theorems 3.2 and 3.7 imply that the disease can be eliminated from the community when \(\mathcal {R}_0<1\), whereas it persists and spreads through the population when \(\mathcal {R}_0>1\), provided that the initial sizes of the populations lie in the basin of attraction of \(E^0\) and \(E^*\), respectively. However, to ensure that the elimination or persistence of the disease is independent of the initial sizes of the populations, we need to show that \(E^0\) and \(E^*\), respectively, are globally asymptotically stable (see Theorems 6.1 and 6.6 below).

4 Existence of compact global attractor

In this section, we show that the semi-flow generated by system (1)–(3) admits a compact global attractor, which is needed to study the attractivity of the endemic equilibrium in Sect. 6. Following the approach presented in the monograph of Hale [40, Chapter 3], the existence of the global attractor is established with the help of the following result.

Lemma 4.1

[40, Theorem 3.4.6] If \(T(t) \,: \, X\rightarrow X\), \(t \in \mathbb {R}_+\) is asymptotically smooth, point dissipative and orbits of bounded sets are bounded, then there exists a global attractor.

The second and third hypotheses of Lemma 4.1 follow directly from Propositions 2.1 and 2.2. To derive the first hypothesis of Lemma 4.1 (i.e., the asymptotic smoothness of the semi-flow \(\Phi \)), we will use the following result.

Lemma 4.2

[40, Lemma 3.2.3] For each \(t\in \mathbb {R}_+\), suppose \(T(t) = S(t) + U(t) \,: \, X \rightarrow X\) has the property that U(t) is completely continuous and there is a continuous function \(k: \, \mathbb {R}_+ \times \mathbb {R}_+ \rightarrow \mathbb {R}_+ \) such that \(k(t, r) \rightarrow 0\) as \(t \rightarrow \infty \), and \( \vert S(t)x \vert \le k(t, r) \) if \(\vert x \vert < r\). Then, T(t), \(t\in \mathbb {R}_+\), is asymptotically smooth.

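Before we proceed, we need some preliminary ingredients. Firstly, by applying the method of characteristics [26, Chapter 1], we can solve the second and third equations of system (1), which are first-order hyperbolic partial differential equations, with boundary conditions (2) and initial conditions (3), along the characteristic lines \(t - a = \text {const}\) and \(t-b=\text {const}\), respectively, as follows:

$$\begin{aligned} e(t,a) = {\left\{ \begin{array}{ll} S(t-a) J(t-a) \phi _1(a), & t > a, \\ e(0,a-t) \dfrac{\phi _1(a)}{\phi _1(a-t)}, & t \le a, \end{array}\right. } \end{aligned}\tag{37}$$

and

$$\begin{aligned} i(t,b) = {\left\{ \begin{array}{ll} W(t-b) \phi _2(b), & t > b, \\ i(0,b-t) \dfrac{\phi _2(b)}{\phi _2(b-t)}, & t \le b, \end{array}\right. } \end{aligned}\tag{38}$$

where \(\phi _1\) and \(\phi _2\) are given by (12).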

Furthermore, we introduce the following proposition:

Proposition 4.3

The functions J(t) and W(t) given by (4) and (5), respectively, are Lipschitz continuous on \(\mathbb {R}_+ \), with Lipschitz constants \(L_J\) and \(L_W\), respectively.

Now, based on the above preparations, we are able to state the main result of this section.

Theorem 4.4

Assume \(\mathcal {R}_0>1\). Then, there exists a global attractor \(\textbf{A}\) for the solution semi-flow \(\Phi \) of system (1)–(3) in \(\Gamma \).

Proof

To show the asymptotic smoothness of the semi-flow \(\Phi \) defined by (6), we only need to apply Lemma 4.2. Specifically, for each \(t\in \mathbb {R}_+\) and \(x_0 \in \Gamma \), we define \(\Phi _1\) and \(\Phi _2\) by

where

and

where J(t) and W(t) are given by (4) and (5), respectively. Then, we have \(\Phi =\Phi _1 + \Phi _2\), and it is clear that \(\widehat{e}\), \(\widehat{i}\), \(\tilde{e}\) and \(\tilde{i}\) are nonnegative. Using (39) and (40), we obtain

where \(p_0= \min \{ \underline{p}_1, \underline{p}_2 \}\), which means that the assumption on \(\Phi _2\) stated in Lemma 4.2 is satisfied. Next, we show that \(\Phi _1\) is completely continuous. Let \(t\in \mathbb {R}_+\) and \(E \subseteq \Gamma \) be a bounded set. Define

To show that \(\Phi _1\) is completely continuous, it suffices to show that \(\Gamma _t\) is a precompact set. To do this, it is enough to prove that

is a precompact set, with the help of the Fréchet–Kolmogorov theorem [41, Page 275] in Yosida’s monograph. From the definitions of \(\Phi _1\) and \(\Gamma \), it follows that \(\Gamma _{t}(e,i)\) is bounded. Therefore, the first condition of the Fréchet–Kolmogorov theorem is satisfied. Moreover, according to (41) and (42), it is easy to see that

which implies that the third condition is also satisfied. Lastly, we need to verify the second condition of the Fréchet–Kolmogorov theorem, that is, to show that

and

uniformly in \(\Gamma _{t}(e,i)\). So, according to (42) we have \(\tilde{i}(0, \cdot ) = 0\). Thus, the expression (44) is automatically satisfied when \(t = 0\). Assume \(t > 0\) and \(h \in (0, t)\). Then, from (42), we have

Then, in view of Proposition 2.2, it follows

Next, with the help of Proposition 4.3, we obtain

where we have used that W(t) is Lipschitz continuous with constant \(L_W\). Further, we have

In summary, by collecting the inequalities (45), (46) and (47), we get

which implies that (44) is satisfied. Similarly, by repeating the same calculations as above, we obtain

Thus, Eq. (43) is also satisfied. This completes the proof of Theorem 4.4. \(\square \)

5 Uniform persistence

This section aims to show that system (1)–(3) is uniformly persistent whenever \(\mathcal {R}_0>1\), using the approach developed in [42, Chapter 9]. For convenience, for a function \(\mathcal {H}\,: \, \mathbb {R}_+ \rightarrow \mathbb {R}\), we denote

Then, we state the following two lemmas, which will be used in the discussion ahead.

Lemma 5.1

[43, Lemma 4.2] Let \(\mathcal {H}\,: \, \mathbb {R}_+ \rightarrow \mathbb {R}\) be a bounded and continuously differentiable function. Then, there exist sequences \(\{t_n\}\) and \(\{r_n \}\) such that \(t_n \rightarrow \infty \) and \(r_n \rightarrow \infty \), \(\mathcal {H}(t_n) \rightarrow \mathcal {H}_{\infty }\), \(\mathcal {H}(r_n)\rightarrow \mathcal {H}^{\infty }\), \(\mathcal {H}'(t_n)\rightarrow 0\), and \(\mathcal {H}'(r_n) \rightarrow 0\) as \(n \rightarrow \infty \).
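Lemma 5.1 (the fluctuation lemma) can be illustrated on a simple example. For the hypothetical test function \(\mathcal {H}(t) = \sin t + 1/(1+t)\), which is bounded and continuously differentiable with \(\mathcal {H}_\infty = -1\) and \(\mathcal {H}^\infty = 1\), the Python sketch below builds the sequences \(t_n\) and \(r_n\) of the lemma from local minima and local maxima, located by bisection on \(\mathcal {H}'\); at these points \(\mathcal {H}'\) vanishes while \(\mathcal {H}\) approaches its lower and upper limits.

```python
import math


def H(t):
    """Hypothetical bounded, continuously differentiable test function."""
    return math.sin(t) + 1.0 / (1.0 + t)


def dH(t):
    """Derivative of H."""
    return math.cos(t) - 1.0 / (1.0 + t) ** 2


def bisect_root(f, lo, hi, tol=1e-10):
    """Root of f on [lo, hi], assuming f(lo) and f(hi) have opposite signs."""
    f_lo = f(lo)
    if f_lo * f(hi) >= 0:
        raise ValueError("f must change sign on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = f(mid)
        if (f_mid < 0) == (f_lo < 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


minima_centers = [1.5 * math.pi + 2 * math.pi * n for n in range(1, 200)]
maxima_centers = [0.5 * math.pi + 2 * math.pi * n for n in range(1, 200)]
t_n = [bisect_root(dH, c - 0.5, c + 0.5) for c in minima_centers]  # local minima
r_n = [bisect_root(dH, c - 0.5, c + 0.5) for c in maxima_centers]  # local maxima
print(H(t_n[-1]), dH(t_n[-1]))  # H(t_n) approaches H_inf = -1 while dH(t_n) is about 0
print(H(r_n[-1]), dH(r_n[-1]))  # H(r_n) approaches H^inf = 1 while dH(r_n) is about 0
```

In the persistence proof, the same mechanism is applied to the components of the solution, so that the equations can be evaluated along sequences where the derivative vanishes asymptotically.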

Lemma 5.2

[38, Chapter 7] Suppose \(\mathcal {H}\,: \, \mathbb {R}_+ \rightarrow \mathbb {R}\) is a bounded function and \( y\in L^{1}_{+}(0, +\infty )\). Then, we have

Now, let us define the persistence function \(\rho \,: \, \Gamma \rightarrow \mathbb {R}_+ \), as follows

which gives the force of infection at time t. Furthermore, we set

Hence, it can be clearly seen that, for any \(x_0 \in \Gamma {\setminus } \Gamma _0\), we have \( \displaystyle \lim _{t \rightarrow \infty } \Phi (t, x_0) =E^0\). Moreover, let us introduce the following definition of the uniform persistence concept.

Definition 5.3

[42, Page 61] System (1)–(3) is said to be uniformly weakly \(\rho \)-persistent (respectively, uniformly strongly \(\rho \)-persistent) if there exists an \(\varepsilon > 0\), independent of the initial condition, such that

for any \(x_0 \in \Gamma _0\).

Now we are in a position to state the following result:

Theorem 5.4

Assume \(\mathcal {R}_0>1\). Then, system (1)–(3) is uniformly weakly \(\rho \)-persistent.

Proof

Since \(\mathcal {R}_0>1\), there exists a small \(\varepsilon _0>0\) such that

and

In what follows, we show by contradiction that system (1)–(3) is uniformly weakly \(\rho \)-persistent. Suppose otherwise; then there exists \(x_0 \in \Gamma _0 \) such that

Then, there exists \(t_0 \ge 0\) such that

Without loss of generality, we can assume that \(t_0 = 0\) since we can replace the initial condition with \(\Phi (t_0, x_0) \). Next, the first equation in (1) together with (51) provides

Thus, we have \(S_\infty \ge \frac{A}{\mu + \varepsilon _0}\). Therefore, there exists \(t_1\ge 0\) such that

Furthermore, the fourth equation of system (1), together with (38), gives

Then, by applying the Laplace transform on the inequality (54), we get

where \( \widehat{\zeta }_3\), \(\widehat{R}\), and \(\widehat{W}\) are the Laplace transforms of \(\psi \phi _2\), R(t), and W(t), respectively. Hence, we have

Moreover, (5), together with (53), gives

where

Now, taking the Laplace transform of both sides of (57), we find

where \(\widehat{W}\), \(\widehat{P}\), and \(\widehat{R}\) are the Laplace transforms of W(t), P(t), and R(t), respectively, with

Thus, we have

where \(\widehat{\zeta }_1\) and \(\widehat{\zeta }_2\) are the Laplace transforms of \(\varphi \phi _1\) and \(\vartheta \phi _2\), respectively. Next, inserting (59) into (58) and using (56), we obtain

Note that \(\widehat{W}(\lambda )<+\infty \) because W(t) is a bounded function; further, since \(x_0\in \Gamma _0\), we have \(\widehat{W}(\lambda )>0\) for all \(\lambda >0\). Then, dividing both sides of (60) by \(\widehat{W}(\lambda )\) and letting \(\lambda \rightarrow \varepsilon _0\), we immediately obtain

which contradicts (49), and hence the proof is complete. \(\square \)
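The mechanism behind the Laplace-transform argument above can be seen in a simplified setting. The following is a schematic sketch, not the exact chain (54)–(60): assume a generic kernel \(k\in L^1_+(0,+\infty )\), roughly standing in for the combination of \(\varphi \phi _1\), \(\vartheta \phi _2\), \(\psi \phi _2\) and the lower bound on S used in the proof, and a bounded, nonnegative function W that is not identically zero. Since the Laplace transform of a convolution is the product of the transforms, a renewal-type inequality for W carries over to the transforms:

$$\begin{aligned} W(t) \ge \int \limits _{0}^{t} k(s) W(t-s) \text {d} s \;\; (t\ge 0) \;\Longrightarrow \; \widehat{W}(\lambda ) \ge \widehat{k}(\lambda )\widehat{W}(\lambda ) \;\Longrightarrow \; \widehat{k}(\lambda ) \le 1, \quad \lambda >0. \end{aligned}$$

The last step divides by \(0<\widehat{W}(\lambda )<+\infty \). Consequently, if \(\varepsilon _0\) is chosen small enough that \(\widehat{k}(\varepsilon _0)>1\), taking \(\lambda =\varepsilon _0\) produces a contradiction; presumably this is the role of the conditions imposed on \(\varepsilon _0\) at the start of the proof.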

Now, in order to pass from uniform weak persistence to uniform strong persistence, we follow the approach described in [42, Chapter 9] (see also McCluskey [33, Section 8]). We consider total \(\Phi \)-trajectories of system (1)–(3) in the space X, where \(\Phi \) is the continuous semi-flow defined by (6). Let \(\textbf{x}(t)\,:\, \mathbb {R} \rightarrow X\) be a total \(\Phi \)-trajectory such that \(\textbf{x}(t)= (S(t), e(t, \cdot ), i(t, \cdot ), R(t))\) for all \(t \in \mathbb {R}\). Then, \(\textbf{x}(t+r)=\Phi (r, \textbf{x}(t))\) for all \(t\in \mathbb {R}\) and all \(r\in \mathbb {R}_+\). Hence, we have

for all \(t\in \mathbb {R}\) and \(a,b \in \mathbb {R}_+\). We now introduce the following lemmas, which will be used to establish uniform strong persistence.

Lemma 5.5

Let \(\textbf{x}(t)\) be a total trajectory in \(\Gamma \) for all \(t \in \mathbb {R}\). Then, the following statements hold: i. S(t) is strictly positive on \(\mathbb {R}\); ii. if \(\rho (\Phi (t))=0\) for all \(t \le 0\), then \(\rho (\Phi (t))=0\) for all \(t \ge 0\).

Proof

i. We first show that \(S(t)>0\) for all \(t\in \mathbb {R}\). Suppose, by way of contradiction, that (i) fails. Then there exists \(t^*\in \mathbb {R}\) such that \(S(t^*)=0\), and from (61) we have \(\frac{\text {d} S(t^*)}{\text {d} t}=A>0\). Since S is differentiable at \(t^*\) with a positive derivative and \(S(t^*)=0\), there exists a sufficiently small \(\epsilon >0\) such that \(S(t^*- \epsilon ) < 0\), which contradicts the nonnegativity of S on \(\Gamma \). Thus, S(t) is strictly positive on \(\mathbb {R}\).

ii. Assume that \(J(t) = 0\) for all \(t\le 0\). Then, the fourth and fifth equations of system (61) imply that \(R(t) \le 0\) for all \(t\le 0\). This, together with the last equation of system (61), gives

$$\begin{aligned} R(t)=0, \quad \text {for all } t \in \mathbb {R}. \end{aligned}\tag{62}$$

From the fourth and fifth equations of system (61), we obtain

$$\begin{aligned} J(t) = \int \limits _{0}^{\infty } \vartheta (b) \phi _2(b) \int \limits _{0}^{\infty } \varphi (a) \phi _1(a) S(t-a-b) J(t-a-b) \text {d} a \text {d} b + F(t), \end{aligned}\tag{63}$$

where

$$\begin{aligned} F(t)= \delta \int \limits _{0}^{\infty } \vartheta (b) \phi _2(b) R(t-b) \text {d} b. \end{aligned}$$

By a change of variables, (63) can be rewritten as

$$\begin{aligned} J(t)= & {} \int \limits _{-\infty }^{t} \vartheta (t-\sigma ) \phi _2(t-\sigma ) \int \limits _{0}^{\infty } \varphi (a) \phi _1(a) S(\sigma - a) J(\sigma -a) \text {d} a \text {d} \sigma +F(t) \\= & {} \int \limits _{-\infty }^{t} \vartheta (t-\sigma ) \phi _2(t-\sigma ) \int \limits _{-\infty }^{\sigma } \varphi (\sigma -\upsilon ) \phi _1(\sigma -\upsilon ) S(\upsilon ) J(\upsilon ) \text {d} \upsilon \text {d} \sigma +F(t). \end{aligned}$$

Here, if \(J(t)=0\) for all \(t\le 0\), it follows from (62) that \(F(t)=0\) for all \(t \in \mathbb {R}\); in addition, with the help of Proposition 2.1, we obtain

$$\begin{aligned} J(t) \le \overline{\vartheta } \eta \int \limits _{0}^{t} \int \limits _{0}^{\sigma }J(\upsilon ) \text {d} \upsilon \text {d} \sigma , \quad \text { for all } t\ge 0. \end{aligned}\tag{64}$$

Next, denote

$$\begin{aligned} \mathcal {B}(t) = \int \limits _{0}^{t} J(\upsilon )\text {d} \upsilon + \int \limits _{0}^{t} \int \limits _{0}^{\sigma }J(\upsilon ) \text {d} \upsilon \text {d} \sigma , \quad \text {for all } t\ge 0. \end{aligned}$$

Then, we have

$$\begin{aligned} \frac{\text {d} \mathcal {B}(t)}{\text {d} t}= & {} J(t) + \int \limits _{0}^{t} J(\upsilon )\text {d} \upsilon \\\le & {} \overline{\vartheta } \eta \int \limits _{0}^{t} \int \limits _{0}^{\sigma }J(\upsilon ) \text {d} \upsilon \text {d} \sigma + \int \limits _{0}^{t} J(\upsilon )\text {d} \upsilon \\\le & {} \gamma \mathcal {B}(t), \end{aligned}$$

where \(\gamma =\max \left\{ \overline{\vartheta } \eta ,1\right\} \). Hence, \(\mathcal {B}(t) \le \mathcal {B}(0)e^{\gamma t}\) for all \(t\ge 0\). Since \(\mathcal {B}(0)=0\), it follows that \(\mathcal {B}(t)=0\) for all \(t\ge 0\), and hence \(J(t)=0\) for all \(t\ge 0\). This completes the proof. \(\square \)
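The Gronwall-type step at the end of this proof can be made explicit. Since \(J\ge 0\), we have \(\mathcal {B}(t)\ge 0\), and the differential inequality \(\frac{\text {d} \mathcal {B}(t)}{\text {d} t}\le \gamma \mathcal {B}(t)\) gives

$$\begin{aligned} \frac{\text {d}}{\text {d} t}\left( e^{-\gamma t}\mathcal {B}(t)\right) = e^{-\gamma t}\left( \frac{\text {d} \mathcal {B}(t)}{\text {d} t}-\gamma \mathcal {B}(t)\right) \le 0 \;\Longrightarrow \; 0\le \mathcal {B}(t)\le e^{\gamma t}\mathcal {B}(0)=0, \quad t\ge 0. \end{aligned}$$

In particular, \(\int _0^t J(\upsilon )\text {d}\upsilon =0\) for every \(t\ge 0\), so the nonnegative function J vanishes almost everywhere on \([0,+\infty )\), and everywhere if J is continuous.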

Lemma 5.6

Let \(\textbf{x}(t)\) be a total trajectory in \(\Gamma \) for all \(t \in \mathbb {R}\). Then, \(\rho (\Phi (t))\) is either strictly positive or identically zero on \(\mathbb {R}\).

Proof

By Lemma 5.5, applied to the time-shifted trajectory, for any \(t^* \in \mathbb {R}\), if \(J(t)=0\) for all \(t\le t^*\), then \(J(t)=0\) for all \(t\ge t^*\). This means that either

i. J(t) is identically zero on \(\mathbb {R}\); or

ii. there exists a decreasing sequence \(\{t_n\}_{n \ge 1}\) such that \(t_n \rightarrow -\infty \) as \(n \rightarrow \infty \) and \(J(t_n) >0\).

In case (ii), let us denote

From (4) we have

where

Moreover, in view of (5) it yields

where

Next, combining (66) and (68), we find

Denote \(\displaystyle \inf _{t\in \mathbb {R}}S(t)=\underline{S}\). Then, after making some changes of variables, we can get

Therefore, we have

where

From (66)–(67), we have \(\tilde{J}_n(0)= J(t_n)>0\), and \(\tilde{J}_n\) is continuous at 0. By applying the result in the monograph of Smith and Thieme [42, Corollary B.6], we can show that there is a constant \(\xi > 0\), depending only on the function \(\gamma (t)\), such that \(J_n(t) > 0\) for all \(t > \xi \). Furthermore, using the definition of \(J_n(t)\) given by (65), we see that \(J(t) > 0\) for all \(t > \xi +t_n\). Since \(t_n \rightarrow -\infty \) as \(n\rightarrow \infty \), it follows that \(J(t)>0\) for all \(t\in \mathbb {R}\); that is, J is strictly positive on \(\mathbb {R}\). This proves Lemma 5.6. \(\square \)

Now, based on the above preparations, we will state the main result of this section.

Theorem 5.7

Assume \(\mathcal {R}_0>1\). Then, system (1)–(3) is uniformly strongly \(\rho \)-persistent.

Proof

By Theorem 4.4, the semi-flow \(\Phi \) generated by system (1)–(3) has a global compact attractor \(\textbf{A}\). Moreover, when \(\mathcal {R}_0>1\), Theorem 5.4 shows that system (1)–(3) is uniformly weakly \(\rho \)-persistent. Combining this with Lemma 5.5, Lemma 5.6, and [42, Theorem 5.2], we conclude that system (1)–(3) is uniformly strongly \(\rho \)-persistent. \(\square \)

Next, in accordance with [42, Theorem 5.7], we have the following result.

Theorem 5.8

There exists a compact attractor \(\mathbf{\tilde{A}}\) that attracts every solution with initial condition in \(\Gamma _0\). Moreover, \(\mathbf{\tilde{A}}\) is uniformly \(\rho \)-positive, i.e., there exists a positive constant \(\varpi \) such that

Remark 5.9

Epidemiologically, uniform persistence means, roughly speaking, that if the basic reproduction number is larger than unity, then after a sufficiently long time the proportion of infected individuals stays bounded away from 0, with a bound that does not depend on the initial condition.

6 Global stability

In this section, we present the main results of this paper. We start with the global stability of the disease-free equilibrium \(E^0\) of system (1)–(3), using the fluctuation lemma (Lemma 5.1). To this end, we state the following result:

Theorem 6.1

Assume \(\mathcal {R}_0<1\). Then, the disease-free equilibrium \(E^0\) of system (1)–(3) is globally asymptotically stable in \(\Gamma \).

Proof

By Theorem 3.2, it suffices to establish the global attractivity of \(E^0\). Let \((S,e(t,\cdot ),i(t, \cdot ), R)\) be a solution of system (1)–(3) with initial condition \((S_0, e_0, i_0,R_0) \) in \(\Gamma \). We first claim that \(W^\infty = J^\infty = R^\infty = 0\), which means that

By Lemma 5.1, there exists a sequence \(\{t_n \}\) such that \(t_n \rightarrow \infty \), \(R(t_n) \rightarrow R^\infty \) and \(\frac{\text {d} R(t_n)}{\text {d} t} \rightarrow 0\) as \(n \rightarrow \infty \). The last equation of system (1), together with (38), gives

Passing to the limit as \( n \rightarrow \infty \) and using Lemma 5.2, we find

which implies

Moreover, combining (4) with (38), we can write

Applying Lemma 5.2 again, we obtain

Furthermore, the expression for W(t) in (5), together with (37), gives

By Lemma 5.2, we obtain

where we have used \(S^\infty \le S^0\). Combining (70), (71), and (72) yields

Since \(\mathcal {R}_0<1\), it follows immediately that \(W^\infty =0\). Together with (70) and (71), this gives \( R^\infty =J^\infty =0\). Therefore, we deduce that

Lastly, recall that \(S^0=\frac{A}{\mu }\). It remains to show that \(\displaystyle \lim _{t \rightarrow \infty } S(t) =S^0\). Since \(J(t)\ge 0\), the first equation of (1) gives \(\frac{\text {d} S(t)}{\text {d} t}\le A-\mu S(t)\), so a comparison argument yields \(S_\infty \le S^\infty \le S^0\). Hence, it suffices to prove that \(S_\infty \ge S^0\).

By Lemma 5.1, there exists a sequence \(\{t_n \}\) such that \(t_n \rightarrow \infty \), \(S(t_n) \rightarrow S_\infty \) and \(\frac{\text {d} S(t_n)}{\text {d} t} \rightarrow 0\) as \(n \rightarrow \infty \). The first equation of (1), together with (37), allows us to write

Letting \(n \rightarrow \infty \) and using Lemma 5.2 together with \(W^\infty =0\), we obtain \(0 \ge A - \mu S_\infty \), which implies that \(S_\infty \ge S^0\). Therefore, based on the above discussion, we arrive at the following conclusion

Thus, the proof is complete. \(\square \)
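Lemma 5.2 is what allows the limits to pass through the convolution integrals in the proof above. In its standard form it states that \(\limsup _{t\rightarrow \infty }\int _0^t y(s)\mathcal {H}(t-s)\text {d}s\le \mathcal {H}^\infty \int _0^\infty y(s)\text {d}s\). The sketch below is a quick numerical sanity check with our own choice \(y(a)=e^{-a}\) (so \(\Vert y\Vert _{L^1}=1\)) and \(\mathcal {H}(t)=2+\sin t\) (so \(\mathcal {H}^\infty =3\)). Here the integral has the closed form \(2-\frac{3}{2}e^{-t}+\frac{\sin t-\cos t}{2}\), whose limit superior is \(2+\frac{\sqrt{2}}{2}\approx 2.707\), strictly below the bound 3. The trapezoidal quadrature and the late time window used to approximate the limit superior are our own discretization choices.

```python
import math

def convolution(y, H, t, n=2000):
    """Approximate int_0^t y(s) * H(t - s) ds with the composite trapezoidal rule."""
    step = t / n
    total = 0.5 * (y(0.0) * H(t) + y(t) * H(0.0))
    for k in range(1, n):
        s = k * step
        total += y(s) * H(t - s)
    return total * step

def y(a):
    return math.exp(-a)           # nonnegative and integrable, ||y||_1 = 1

def H(t):
    return 2.0 + math.sin(t)      # bounded, with limsup H = 3

# Sample a late time window longer than one period (2*pi) to approximate the limsup.
window = [40.0 + 0.05 * k for k in range(200)]
approx_limsup = max(convolution(y, H, t) for t in window)

bound = 3.0 * 1.0                 # H^infty * ||y||_1, the right-hand side of Lemma 5.2
exact_limsup = 2.0 + math.sqrt(2.0) / 2.0
print(f"approx limsup = {approx_limsup:.4f}, bound = {bound}")
```

The gap between the computed value and the bound reflects that \(\mathcal {H}\) attains its supremum only at isolated times, while the convolution averages it against the kernel y.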

In what follows, based on the Lyapunov functional technique and the LaSalle invariance principle (see [44, 45] for more details), we derive the global stability of the endemic equilibrium \(E^*\) of system (1)–(3). Before proceeding, we define the function \(g\,: \, (0, +\infty ) \longrightarrow \mathbb {R}_+\) by

$$\begin{aligned} g(x)=x-1-\ln x, \end{aligned}$$

which is a well-known ingredient in the construction of Lyapunov functionals for Volterra–Lotka systems [46]. Note that \(g'(x)= 1-\frac{1}{x}\). Therefore, g is decreasing on (0, 1] and increasing on \([1, +\infty )\), and its only extremum is the global minimum \(g(1)=0\). In particular, \(1-x+\ln x \le 0\) for \(x>0\), with equality if and only if \(x=1\).
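The properties of g listed above are easy to check numerically. The sketch below assumes the standard choice \(g(x)=x-1-\ln x\), which is consistent with \(g'(x)=1-\frac{1}{x}\) and with the global minimum at \(x=1\). It samples g on a logarithmic grid, checks the monotonicity on each side of \(x=1\), and compares \(g'\) with a central difference; the grid and step size are illustrative choices.

```python
import math

def g(x: float) -> float:
    """Volterra-type function g(x) = x - 1 - ln(x), defined for x > 0."""
    if x <= 0:
        raise ValueError("g is defined only for x > 0")
    return x - 1.0 - math.log(x)

def dg(x: float) -> float:
    """Exact derivative g'(x) = 1 - 1/x."""
    return 1.0 - 1.0 / x

# Logarithmic grid from 1e-3 to 1e3; index 30 is exactly x = 1.
grid = [10.0 ** (k / 10.0) for k in range(-30, 31)]
values = [g(x) for x in grid]

decreasing_on_left = all(values[j] > values[j + 1] for j in range(30))      # on (0, 1]
increasing_on_right = all(values[j] < values[j + 1] for j in range(30, 60))  # on [1, inf)

# Central-difference check of g'(x) = 1 - 1/x at a few sample points.
step = 1e-5
derivative_errors = [abs((g(x + step) - g(x - step)) / (2 * step) - dg(x))
                     for x in (0.5, 2.0, 5.0)]

print(min(values), grid[values.index(min(values))])
```

Because g is nonnegative and vanishes only at 1, terms such as \(g\left( \frac{S(t)}{S^*}\right) \) measure the distance from the endemic state in a way that is zero exactly at \(E^*\).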

The following lemma ensures that the Lyapunov functional defined in (78) is well defined.

Lemma 6.2

Assume \(\mathcal {R}_0>1\). Let \(\mathbf{{x}}(t)\) be a total trajectory in \(\mathbf{\tilde{A}}\) for all \(t \in \mathbb {R}\). Then, the following estimates hold:

where \(\varpi _1 = \frac{A}{\mu + \varpi }\), \(\varpi _2= \frac{ \varpi \varpi _1 }{\mu + \delta } \zeta _1 \zeta _3\), and \(\varpi \) is given in Theorem 5.8.

Proof

In view of (61) and by using (69), we obtain

which means that \( \displaystyle \liminf _{t \rightarrow \infty }S(t) \ge \frac{A}{\mu +\varpi }=\varpi _1\), for each point in \(\mathbf{\tilde{A}}\). Thus, by invariance, we get

Moreover, we have \(e(t,a)= S(t-a) J(t-a)\phi _1(a)\). Then, according to (69) and (75), we can write

Next, we have \(i(t,b) = W(t-b)\phi _2(b)\), for all \(t \in \mathbb {R}\), where

and hence, we have

Finally, from the equation for R in (61) and with the help of (77), we find

Hence, we have \( \displaystyle R_{\infty } \ge \frac{ \varpi \varpi _1}{\mu + \delta } \zeta _1 \zeta _3=\varpi _2\). Therefore, we have

These estimates hold for all \(t \in \mathbb {R}\), which completes the proof of Lemma 6.2. \(\square \)

The following straightforward lemmas will be used in the proof of Theorem 6.6.

Lemma 6.3

Each solution of system (1)–(3) satisfies

Lemma 6.4

Set \(\delta ^*= \frac{\delta }{(\mu +\delta ) \zeta _1 S^*}\). Then, we have

Lemma 6.5

Each solution of system (1)–(3) satisfies

With these preparations in place, we now prove the global asymptotic stability of the endemic equilibrium \(E^*\).

Theorem 6.6

Assume \(\mathcal {R}_0>1\). Then, the endemic equilibrium \(E^*\) of system (1)–(3) is globally asymptotically stable.

Proof

By Theorem 3.7, \(E^*\) is locally asymptotically stable, and the compact attractor \(\mathbf{\tilde{A}} \subset \Gamma \) of Theorem 5.8 attracts every solution with initial condition in \(\Gamma _0\). Hence, it remains to show that \(\mathbf{\tilde{A}} = \{ E^*\}\). Let \(\textbf{x}(t)=(S(t), e(t, \cdot ), i(t, \cdot ), R(t))\) be a total \(\Phi \)-trajectory in \(\mathbf{\tilde{A}}\) for all \(t\in \mathbb {R}\). By Lemma 6.2, there exists \(\varpi _0>0\) such that \(0 \le g(x) \le \varpi _0\), where x is any of \(\frac{S(t)}{S^*}\), \(\frac{e(t,a)}{e^*(a)}\), \(\frac{i(t,b) }{i^*(b)}\), and \(\frac{R(t)}{R^*}\), for all \(t\in \mathbb {R}\) and \(a, b \in \mathbb {R}_+\).

Define

where

with

where \(\delta ^*\) is given in Lemma 6.4. Note that for all \(a,b \in \mathbb {R}_{+}\), we have

Now, we show that \(\frac{\text {d} V(t)}{\text {d} t}\) is nonpositive. We compute the derivatives \(\frac{\text {d} V_1}{\text {d} t}\), \(\frac{\text {d} V_2}{\text {d} t}\), \(\frac{\text {d} V_3}{\text {d} t}\), and \(\frac{\text {d} V_4}{\text {d} t}\) separately and then combine them. First, differentiating \(V_1\) along solutions of system (1)–(3), we obtain

where we have used \(A= \mu S^* + S^* \displaystyle \int \limits _{0}^{\infty }\vartheta (b) i^*(b) \text {d} b \). Then, after some calculations and rearrangement, we find

With the help of Lemma 6.3, we can obtain

Next, differentiating \(V_2(t)\) along solutions of system (1)–(3), we obtain

where we have used

Applying integration by parts to (81) and taking (79) into account, we get

Thus, we have

After some calculations and simplification, we obtain

Similarly, the derivative of \(V_3(t)\) can be expressed as follows

Then, after some rearrangement, we obtain

Next, differentiating \(V_4\) and using \(\mu + \delta =\displaystyle \frac{1}{R^*} \int \limits _{0}^{\infty } \psi (b) i^*(b) \text {d} b \), we obtain

A direct computation then gives

In summary, by combining and rearranging the expressions (80), (82), (83), and (84) with the help of Lemma 6.4 and Lemma 6.5, the derivative of V(t) can be expressed as follows:

Therefore, \(\frac{\text {d} V(t)}{\text {d} t } \le 0\), so V(t) is non-increasing. Moreover, V(t) is bounded along the total trajectory \(\textbf{x}(\cdot )\), so the \(\alpha \)-limit set of \(\textbf{x}(\cdot )\) must be contained in \(\mathcal {M}\), the largest invariant subset of \(\left\{ \frac{\text {d} V(t)}{\text {d} t}=0 \right\} \). We now determine \(\mathcal {M}\). From \(\frac{\text {d} V(t)}{\text {d} t}=0\), it follows that \(S(t)=S^*\) for all \(t \in \mathbb {R}\). Substituting \(S(t)=S^*\) into the first equation of system (61) yields

In addition, from the first equation of system (23), we have

Equations (86) and (87) imply that \(\displaystyle \int \limits _{0}^{\infty } \vartheta (b) i(t,b)\text {d} b = \displaystyle \int \limits _{0}^{\infty } \vartheta (b) i^*(b)\text {d} b\). Moreover, we have

which implies that \(e(t,a)=e^*(a)\) for all \(t\in \mathbb {R}\). It then follows that \(i(t,b) =i^*(b)\) and \(R(t)=R^*\) for all \(t \in \mathbb {R}\). Consequently, \(\mathcal {M}=\{E^*\}\) is the largest invariant subset of \(\left\{ \frac{\text {d} V(t)}{\text {d} t}=0 \right\} \). Hence, by the Lyapunov–LaSalle invariance principle, the endemic equilibrium \(E^*\) is globally asymptotically stable whenever \(\mathcal {R}_0>1\). This completes the proof of Theorem 6.6. \(\square \)

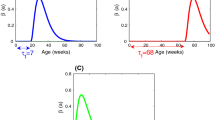

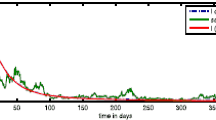

7 Numerical simulations

In this section, we present numerical simulations to illustrate the theoretical results obtained in the previous sections. We take herpes as an illustrative example that fits the SEIR model (1)–(3). To this end, the backward Euler and linearized finite difference methods are used to discretize the ODEs and PDEs in system (1)–(3), and the integrals are computed numerically using Simpson’s rule.

We use parameter values from previous research by Foss et al. [47]. The parameters A, \(\mu \), and \(\delta \) in system (1)–(3) are set as follows: \( A=275\) and \(\mu =0.014\), while \(\delta \) is varied.

The functions \(\nu _1(a)\) and \(\nu _2(b)\) are taken to be constant, with \(\nu _1(a)=\nu _2(b)=\nu =0.019\). Moreover, the functions \(\vartheta (b)\), \(\varphi (a)\), and \(\psi (b)\) are chosen as

where \(\beta _1\) is varied, \(\beta _2 =0.03\), and \(\beta _3=0.09\). The initial condition is chosen as

i. When \(\beta _1= 7\times 10^{-4}\) and \(\delta =1/78.5\), we have \(\mathcal {R}_0=0.7564<1\). Thus, according to Theorem 6.1, the disease-free equilibrium \(E^0\) is globally asymptotically stable (see Figs. 1 and 2); that is, the disease eventually dies out.

ii. When \(\beta _1= 7\times 10^{-3}\) and \(\delta =1/87.2\), we have \(\mathcal {R}_0=1.4502>1\). In view of Theorem 6.6, the endemic equilibrium \(E^*\) is globally asymptotically stable (see Figs. 3 and 4), which indicates that the disease persists in the population.
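A scheme of the kind described at the start of this section can be sketched as follows. This is a minimal illustration under explicitly stated assumptions, not the code behind Figs. 1–4. Since equations (1)–(3) are not restated here, we assume the form suggested by the relations used in the proofs: \(S'=A-\mu S-SJ\) with \(J(t)=\int _0^\infty \vartheta (b)i(t,b)\text {d}b\); \(e_t+e_a=-(\mu +\nu +\varphi (a))e\) with \(e(t,0)=S(t)J(t)\); \(i_t+i_b=-(\mu +\nu +\psi (b))i\) with \(i(t,0)=\int _0^\infty \varphi (a)e(t,a)\text {d}a+\delta R(t)\); and \(R'=\int _0^\infty \psi (b)i(t,b)\text {d}b-(\mu +\delta )R\). The rate functions are replaced by hypothetical constants \(\vartheta \equiv \beta _1\), \(\varphi \equiv \beta _2\), \(\psi \equiv \beta _3\), ages are truncated at a hypothetical maximum age, and the initial data are hypothetical; the helper names `simulate` and `simpson` are ours. The time step equals the age step, so the transport equations are advanced along characteristics with an implicit (backward Euler) loss term, the nonlinear term SJ is linearized by lagging J, and the age integrals use the composite Simpson rule.

```python
import math

def simpson(values, h):
    """Composite Simpson's rule on an equally spaced grid with an even number of intervals."""
    n = len(values) - 1
    if n < 2 or n % 2:
        raise ValueError("Simpson's rule needs an even number (>= 2) of intervals")
    odd = sum(values[1:n:2])
    even = sum(values[2:n - 1:2])
    return h / 3.0 * (values[0] + 4.0 * odd + 2.0 * even + values[-1])

def simulate(beta1, delta, A=275.0, mu=0.014, nu=0.019, beta2=0.03, beta3=0.09,
             h=0.5, age_max=100.0, t_end=200.0):
    """Hypothetical scheme with dt = da = h; returns a list of (S, J, R) per time step."""
    m = int(round(age_max / h))            # number of age intervals (even for Simpson)
    ages = [k * h for k in range(m + 1)]
    # Placeholder constant rates, NOT the paper's functional forms.
    theta = [beta1] * (m + 1)              # vartheta(b): transmission
    phi = [beta2] * (m + 1)                # varphi(a): progression from E to I
    psi = [beta3] * (m + 1)                # psi(b): transfer from I to R
    S, R = 0.9 * A / mu, 0.0               # hypothetical initial data
    e = [math.exp(-0.1 * a) for a in ages]
    i = [math.exp(-0.1 * b) for b in ages]
    history = []
    for _ in range(int(round(t_end / h))):
        J = simpson([th * x for th, x in zip(theta, i)], h)   # force of infection
        P = simpson([ph * x for ph, x in zip(phi, e)], h)     # inflow into I from E
        Q = simpson([ps * x for ps, x in zip(psi, i)], h)     # inflow into R from I
        # Backward Euler for the ODEs; the term S*J is linearized by lagging J.
        S = (S + h * A) / (1.0 + h * (mu + J))
        R = (R + h * Q) / (1.0 + h * (mu + delta))
        # Shift one age step along the characteristics, loss term treated implicitly.
        e = [S * J] + [e[k] / (1.0 + h * (mu + nu + phi[k + 1])) for k in range(m)]
        i = [P + delta * R] + [i[k] / (1.0 + h * (mu + nu + psi[k + 1])) for k in range(m)]
        history.append((S, J, R))
    return history
```

For instance, `simulate(7e-4, 1/78.5)` and `simulate(7e-3, 1/87.2)` use the two \((\beta _1, \delta )\) pairs of cases i and ii; because the rate functions and initial data are placeholders, the resulting trajectories need not match Figs. 1–4. One built-in sanity check is that the backward Euler update for S keeps \(S(t)\le A/\mu \) whenever \(S(0)\le A/\mu \), mirroring the bound \(S^\infty \le S^0\) used in the analysis.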

8 Discussion

In this paper, we have formulated and analyzed an SEIR epidemic model with relapse and with continuous age structure in both the latent and infectious classes, in order to understand how these epidemiological factors affect the spread of infectious diseases. We then studied the global asymptotic stability of each equilibrium of the model, using the fluctuation lemma for the disease-free equilibrium and an appropriate Lyapunov functional for the endemic equilibrium. Our theoretical results show that the threshold parameter \(\mathcal {R}_0\) completely governs the spread of the disease: if \(\mathcal {R}_0<1\), the disease-free equilibrium is globally asymptotically stable, which means that the disease can be eradicated from the community, whereas if \(\mathcal {R}_0>1\), the endemic equilibrium is globally asymptotically stable, indicating that the disease persists in the population. Control strategies should therefore aim to reduce the basic reproduction number below one. Recall the expression of \(\mathcal {R}_0\), namely,

Both terms must be reduced in order to lower \(\mathcal {R}_0\). The first term can be reduced by decreasing \(\zeta _1\) and \(\zeta _2\). The second term, however, is directly affected by the relapse rate \(\delta \), which must therefore be reduced, whereas the treatment rate \(\zeta \) has no such direct effect. Thus, strategies for controlling the disease may include early diagnosis of latent infections and reductions in both the transmission and relapse rates.

References

Castillo-Chávez, C., Song, B.: Dynamical models of tuberculosis and their applications. Math. Biosci. Eng. 1(2), 361–404 (2004)

Capasso, V.: Mathematical Structures of Epidemic Systems. Springer, Berlin, Heidelberg (1993). https://doi.org/10.1007/978-3-540-70514-7

Diekmann, O., Heesterbeek, J.A., Metz, J.A.: On the definition and the computation of the basic reproduction ratio \(\cal{R} _0\) in models for infectious diseases in heterogeneous populations. J. Math. Biol. 28, 365–382 (1990)

Kermack, W.O., McKendrick, A.G.: A contribution to the mathematical theory of epidemics. Proc. Royal Soc. London A 115(772), 700–721 (1927)

Xu, R., Ma, Z.: Global stability of a SIR epidemic model with nonlinear incidence rate and time delay. Nonlinear Anal. Real World Appl. 10(5), 3175–3189 (2009)

Zaman, G., Han Kang, Y., Jung, I.H.: Stability analysis and optimal vaccination of an SIR epidemic model. Biosystems 93(3), 240–249 (2008)

Yuan, X., Wang, F., Xue, Y., Liu, M.: Global stability of an SIR model with differential infectivity on complex networks. Phys. A 499, 443–456 (2018)

Hu, Z., Teng, Z., Zhang, L.: Stability and bifurcation analysis in a discrete SIR epidemic model. Math. Comput. Simul. 97, 80–93 (2014)

Tahir, H., Khan, A., Din, A., Khan, A., Zaman, G.: Optimal control strategy for an age-structured SIR endemic model. Discrete Contin. Dyn. Syst. Ser. S 14(7), 2535–2555 (2021)

Brookmeyer, R.: Incubation period of infectious diseases. In Wiley StatsRef: Statistics Reference (2015). https://doi.org/10.1002/9781118445112.stat05241.pub2

Wang, L., Xu, R.: Global stability of an SEIR epidemic model with vaccination. Int. J. Biomath. 09(06), 1650082 (2016)

McCluskey, C.C.: Global stability for an SEIR epidemiological model with varying infectivity and infinite delay. Math. Biosci. Eng. 6(3), 603–610 (2009)

Xue, C.: Study on the global stability for a generalized SEIR epidemic model. Comput. Intell. Neurosci. (2022). https://doi.org/10.1155/2022/8215214

Wang, J., Shu, H.: Global analysis on a class of multi-group SEIR model with latency and relapse. Math. Biosci. Eng. 13(1), 209–225 (2016)

Bernoussi, A.: Stability analysis of an SIR epidemic model with homestead-isolation on the susceptible and infectious, immunity, relapse and general incidence rate. Int. J. Biomath. 16(05), 2250102 (2023)

Pradeep, B.G.S.A., Ma, W., Wang, W.: Stability and Hopf bifurcation analysis of an SEIR model with nonlinear incidence rate and relapse. J. Stat. Manag. Syst. 20(3), 483–497 (2017)

Tudor, D.: A deterministic model for herpes infections in human and animal populations. SIAM Rev. 32(1), 136–139 (1990)

Wang, J., Pang, J., Liu, X.: Modelling diseases with relapse and nonlinear incidence of infection: a multi-group epidemic model. J. Biol. Dyn. 8(1), 99–116 (2014)

Guo, Z.K., Xiang, H., Huo, H.F.: Analysis of an age-structured tuberculosis model with treatment and relapse. J. Math. Biol. (2021). https://doi.org/10.1007/s00285-021-01595-1

Liu, L., Ren, X., Jin, Z.: Threshold dynamical analysis on a class of age-structured tuberculosis model with immigration of population. Adv. Difference Equ. (2017). https://doi.org/10.1186/s13662-017-1295-y

Huang, G., Liu, X., Takeuchi, Y.: Lyapunov functions and global stability for age-structured HIV infection model. SIAM J. Appl. Math. 72(1), 25–38 (2012)

Xu, J., Geng, Y., Zhou, Y.: Global dynamics for an age-structured HIV virus infection model with cellular infection and antiretroviral therapy. Appl. Math. Comput. 305, 62–83 (2017)

Shi, L., Wang, L., Zhu, L., Din, A., Qi, X., Wu, P.: Dynamics of an infection-age HIV diffusive model with latent infected cell and Beddington–DeAngelis infection incidence. Eur. Phys. J. Plus (2022). https://doi.org/10.1140/epjp/s13360-022-02428-w

Zou, L., Ruan, S., Zhang, W.: An age-structured model for the transmission dynamics of hepatitis B. SIAM J. Appl. Math. 70(8), 3121–3139 (2010)

Thieme, H.R., Castillo-Chávez, C.: How may infection-age-dependent infectivity affect the dynamics of HIV/AIDS? SIAM J. Appl. Math. 53(5), 1447–1479 (1993)

Webb, G.F.: Theory of Nonlinear Age-Dependent Population Dynamics. Marcel Dekker, New York (1985)

Magal, P., McCluskey, C.C., Webb, G.: Lyapunov functional and global asymptotic stability for an infection-age model. Appl. Anal. 89(7), 1109–1140 (2010)

McCluskey, C.C.: Delay versus age-of-infection-global stability. Appl. Math. Comput. 217(7), 3046–3049 (2010)

Melnik, V.A., Korobeinikov, A.: Lyapunov functions and global stability for SIR and SEIR models with age-dependent susceptibility. Math. Biosci. Eng. 10(2), 369–378 (2013)

Liu, W.M., Levin, S.A., Iwasa, Y.: Influence of nonlinear incidence rates upon the behaviour of SIRS epidemiological models. J. Math. Biol. 23, 187–204 (1986)

Henshaw, S., McCluskey, C.C.: Global stability of a vaccination model with immigration. Electron. J. Differ. Equ. 2015(92), 1–10 (2015)

Yang, Y., Li, J., Zhou, Y.: Global stability of two tuberculosis models with treatment and self-cure. Rocky Mt. J. Math. 42(4), 1367–1386 (2012)

McCluskey, C.C.: Global stability for an SEI epidemiological model with continuous age-structure in the exposed and infectious classes. Math. Biosci. Eng. 9(4), 819–841 (2012)

Yang, Y., Li, J., Ma, Z., Liu, L.: Global stability of two models with incomplete treatment for tuberculosis. Chaos, Solitons & Fractals 43(1), 79–85 (2010)

Hu, R., Liu, L., Ren, X., Liu, X.: Global stability of an information-related epidemic model with age-dependent latency and relapse. Ecol. Complex. 36, 30–47 (2018)

Xu, R.: Global dynamics of an epidemiological model with age of infection and disease relapse. J. Biol. Dyn. 12(1), 118–145 (2018)

Din, A., Li, Y.: Controlling heroin addiction via age-structured modeling. Adv. Difference Equ. (2020). https://doi.org/10.1186/s13662-020-02983-5

Iannelli, M.: Mathematical Theory of Age-Structured Population Dynamics. Applied Mathematics Monographs, vol. 7. Comitato Nazionale per le Scienze Matematiche, Consiglio Nazionale delle Ricerche (C.N.R.), Giardini, Pisa (1995)

Magal, P.: Compact attractors for time-periodic age-structured population models. Electron. J. Differ. Equ. 65, 1–35 (2001)

Hale, J.K.: Asymptotic Behavior of Dissipative Systems. American Mathematical Society, Providence (1988)

Yosida, K.: Functional Analysis, 2nd edn. Springer, Berlin, Heidelberg (1968)

Smith, H.L., Thieme, H.R.: Dynamical Systems and Population Persistence. Graduate Studies in Mathematics, vol. 118. American Mathematical Society, Providence (2011)

Hirsch, W.M., Hanisch, H., Gabriel, P.: Differential equation models of some parasitic infections: Methods for the study of asymptotic behavior. Commun. Pure Appl. Math. 38(6), 733–753 (1985)

Hale, J.K.: Dynamical systems and stability. J. Math. Anal. Appl. 26(1), 39–59 (1969)

Walker, J.A.: Dynamical Systems and Evolution Equations: Theory and Applications. Plenum Press, New York (1980)

Goh, B.S.: Global stability in many species systems. Am. Nat. 111(977), 135–142 (1977)

Foss, A.M., Vickerman, P.T., Chalabi, Z., Mayaud, P., Alary, M., Watts, C.H.: Dynamic modelling of herpes simplex virus type-2 (HSV-2) transmission: issue in structure uncertainty. Bull. Math. Biol. 71(3), 720–749 (2009)

Acknowledgements

I would like to thank the anonymous referees for their comments, which helped improve this paper.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

NABTi, A. Dynamical analysis of an age-structured SEIR model with relapse. Z. Angew. Math. Phys. 75, 84 (2024). https://doi.org/10.1007/s00033-024-02227-6


DOI: https://doi.org/10.1007/s00033-024-02227-6