Abstract

We prove the existence of solutions to Signorini’s problem for the Timoshenko beam by using a hybrid disturbance. This disturbance allows us to use semigroup theory to show existence and asymptotic stability. We show that the stability is exponential when the wave propagation speeds are equal. When the wave speeds are different, we show that the solution decays polynomially. This result is new. We perform numerical experiments to visualize the asymptotic properties.


1 Introduction

We study the mechanical and thermal evolution of a thermoelastic beam in unilateral contact. These contact problems arise naturally in many situations in industrial processes, when two or more materials can come into contact or lose contact as a result of thermoelastic expansion or contraction. Here, we consider the cross-contact problem with Timoshenko’s beam model. The physical setting is represented in Fig. 1.

The equations of motion and energy balance are described by

The mathematical modelling can be found in [1, 2]. Here, \(\varphi (x,t)\) stands for the transversal displacement of the point x on the beam, \(\psi \) is the rotation angle of the cross section and \(\theta \) is the temperature difference of the beam. Moreover, \(\rho _1=\rho A,\ \ \rho _2=\rho I,\ k=\kappa G A,\ \ b= EI,\) where E is Young’s modulus, G is the modulus of rigidity and \(\kappa \) is the transversal shear factor. The quantities \(\rho ,\ A\) and I are the density of the body, the area of the cross section and the moment of inertia, respectively. The constants \(\rho _3,\ \tau ,\ \sigma \ > 0\) represent the physical parameters from thermoelasticity theory. The initial conditions of the model are given by

and we use the following boundary conditions

For the free end of the beam, where contact with the obstacle can occur, we consider Signorini’s contact condition.

This condition ensures that the transversal displacement at \( x = L \) is restricted between stops \(g_1\) and \(g_2.\) The mathematical boundary conditions for this physical setting are as follows

where \(S=k(\varphi _x+\psi )\) and \(M=b\psi _{x}.\)

In a series of articles, Andrews et al. [3] and Kuttler and Shillor [4] studied the problem of one-dimensional semi-static thermoelastic contact. They proved the existence of global weak solutions of their respective models. The numerical aspects of the problem were studied in [5, 6]. Signorini’s problem for the Euler–Bernoulli thermoelastic beam system was considered in [7], where the authors showed the global existence of weak solutions that decay exponentially to zero. Finally, in [8], the authors proved the global existence of weak solutions of Signorini’s problem for Timoshenko’s thermoelastic beam model and, by introducing an additional friction mechanism, showed that the solutions decay exponentially to zero. This additional dissipative mechanism is what makes the proof of exponential stability work there. Here, we only consider the dissipation produced by the temperature difference; for this reason, we need the wave propagation speeds to be equal.

In the general case (different propagation speeds), we prove that the decay rate is polynomial. Our method is different and follows the theory of semigroups.

The main contribution of this article is the use of semigroup theory to solve Signorini’s problem. We do this by taking dynamic boundary conditions and treating Signorini’s problem through a Lipschitz disturbance, which yields the normal compliance condition; to arrive at the contact problem, we then use the observability inequalities. We believe that this method is stronger than the penalty method used in all the articles cited above, because it gives more information about the asymptotic behaviour of the solution under all boundary conditions, unlike the articles [7,8,9], where special boundary conditions had to be used to show the exponential decay. Furthermore, with this method it is possible to prove the polynomial decay of the solutions of Timoshenko’s contact problem. Finally, we believe that the polynomial decay rate that we obtain is optimal in the sense that it is the same rate as that obtained in [10, 11], where optimality is demonstrated.

The remaining part of this manuscript is organized as follows: Sects. 2 and 3 deal with the global existence and the uniform stability of the hybrid system, respectively. In Sect. 4, we consider the normal compliance condition as a Lipschitz disturbance, then we take the limit \( \epsilon \rightarrow 0 \) to show the existence of global weak solutions to Signorini’s problem. In addition, we show the exponential stability provided the wave propagation speeds of the system are equal, and the polynomial stability in the general case. Finally, in Sect. 5 we present numerical experiments that verify the decay properties of the solutions.

2 The semigroup setting

Our starting point is to consider the linear hybrid Timoshenko system that is given by

Verifying the initial data (1.2) and the boundary conditions (1.3) with \(\varphi (L,t)=v(t)\), where

Equation (2.2) is called the dynamic boundary condition. The equations describe the oscillations of the uniform cantilever curved beam with a load mass \( \epsilon \) at its tip, with a damping term proportional to the velocity. For the moment, we omit the superscript \(\epsilon \) in system (2.1)–(2.2); we use this dependence later, when we begin the limit process \(\epsilon \rightarrow 0\). The purpose of these boundary conditions is to apply the Lipschitz perturbation to obtain the normal compliance condition and then arrive at Signorini’s problem.
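To make the idea of a Lipschitz perturbation concrete, the following minimal sketch implements one common form of the normal compliance law, in which the reaction at the tip is proportional to the penetration beyond the stops with stiffness \(1/\epsilon \). The function name `normal_compliance` and this particular formula are assumptions made for illustration only; the perturbation actually used in the analysis is the one introduced in Sect. 4.

```python
def normal_compliance(v, g1, g2, eps):
    """Penalty reaction for a tip displacement v between stops g1 <= g2.

    Assumed illustrative form: (1/eps) * [ (v - g2)^+ - (g1 - v)^+ ].
    It vanishes while g1 <= v <= g2 and grows linearly with the
    penetration otherwise, so it is Lipschitz with constant 1/eps.
    """
    upper = max(v - g2, 0.0)   # penetration beyond the upper stop
    lower = max(g1 - v, 0.0)   # penetration beyond the lower stop
    return (upper - lower) / eps


if __name__ == "__main__":
    # Stops and penalization parameter taken from the experiment of Sect. 5.
    g1, g2, eps = -0.001, 0.001, 1e-9
    for v in (-0.002, 0.0, 0.0015):
        print(v, normal_compliance(v, g1, g2, eps))
```

As \(\epsilon \rightarrow 0\) the reaction for any fixed penetration grows without bound, which is the heuristic reason why the limit enforces \(g_1\le v\le g_2\).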

Let us introduce the Hilbert space \({\mathcal {H}}\)

where

which is a Hilbert space with the norm

Denoting by \(\Phi =\varphi _t\), \(\Psi =\psi _t\), \(V=v_t\) system (2.2) can be written as

where \(U=(\varphi ,\Phi ,\psi ,\Psi ,\theta ,v,V)\), \(U_0:=(\varphi _0,\varphi _1,\psi _0,\psi _1,\theta _0,v_0,v_1)\) and \({\mathcal {A}}\) is the operator

with domain of \({\mathcal {A}}\) given by

where

Moreover \({\mathcal {A}}\) is dissipative,

To show the well-posedness of (2.1)–(2.2), we only need to prove that \({\mathcal {A}}\) is the infinitesimal generator of a \(C_0\) semigroup. Since \({\mathcal {A}}\) is dissipative, it is enough to show that \(0\in \varrho ({\mathcal {A}})\), see [12]. That is, for any \(F=(f_1,f_2,f_3,f_4,f_5,f_6,f_7)^\top \in {\mathcal {H}}\), there exists a unique \(U\in D({\mathcal {A}})\) such that \({\mathcal {A}}U=F\). In fact, recalling the definition of \({\mathcal {A}}\), we get the system

and

Since \(\theta \) satisfies the Dirichlet boundary condition and \(\Psi \) is already given by \(f_3\), the Lax–Milgram lemma yields a unique \(\theta \in H^2(0,L)\). It remains to show the existence of \(\psi \) and \(\varphi \).

verifying the following boundary conditions

Denoting by \(U^i=(\varphi ^i,\psi ^i)\) the bilinear form

is symmetric, continuous and coercive over the convex set

Thus, for any \((f_6,f_7)\in L^2(0,L)\times L^2(0,L)\) there exists a unique weak solution to the above system (see Theorem 5.6 (Stampacchia), page 138 of [13]). Using the equations, we conclude that \((\varphi ,\Phi ,\psi ,\Psi ,\theta ,v,V)\in D({\mathcal {A}})\).

Theorem 2.1

The operator \({\mathcal {A}}\) is the infinitesimal generator of a \(C_0\) semigroup of contractions.

The above theorem implies the global existence of solution for the corresponding hybrid problem.

3 Asymptotic behaviour of the hybrid system

The main tool we use in this section is the result due to Pruess [14] and Borichev and Tomilov [15].
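For orientation, the two resolvent criteria are usually stated as follows for a \(C_0\)-semigroup of contractions \(S(t)=e^{{\mathcal {A}}t}\) on a Hilbert space \({\mathcal {H}}\). This is the standard textbook form of the results of [14] and [15]; the generic exponent \(\alpha >0\) and the \(O(\cdot )\) notation are notational assumptions and need not coincide with the labelling used below.

\[
S(t)\ \text{is exponentially stable}
\quad \Longleftrightarrow \quad
i{\mathbb {R}}\subset \varrho ({\mathcal {A}})
\ \ \text{and}\ \
\limsup _{|\lambda |\rightarrow \infty }\Vert (i\lambda I-{\mathcal {A}})^{-1}\Vert _{{\mathcal {L}}({\mathcal {H}})}<\infty ,
\]

\[
\text{if } i{\mathbb {R}}\subset \varrho ({\mathcal {A}}):\qquad
\Vert (i\lambda I-{\mathcal {A}})^{-1}\Vert _{{\mathcal {L}}({\mathcal {H}})}=O(|\lambda |^{\alpha })\ \ (|\lambda |\rightarrow \infty )
\quad \Longleftrightarrow \quad
\Vert S(t){\mathcal {A}}^{-1}\Vert _{{\mathcal {L}}({\mathcal {H}})}=O(t^{-1/\alpha })\ \ (t\rightarrow \infty ).
\]

In both cases the task reduces to estimating the solution U of the resolvent equation \(i\lambda U-{\mathcal {A}}U=F\) in terms of F for large \(|\lambda |\).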

Theorem 3.1

Let \(S(t)=e^{{\mathcal {A}}t}\) be a \(C_0\)-semigroup of contractions over a Hilbert space \({\mathcal {H}}\). Then ([14]), S(t) is exponentially stable if and only if

Moreover, if \(i{\mathbb {R}}\subset \varrho ({\mathcal {A}})\), then we have ([15])

Let us consider \(U=(\varphi ,\Phi ,\psi ,\Psi ,\theta ,v,V)^\top \in D({\mathcal {A}})\) and \(F=(f_1,f_2,f_3,f_4,f_5,f_6,f_7)^\top \) \(\in {\mathcal {H}}.\) The resolvent equation \(i\lambda U-{\mathcal {A}}U=F\) in terms of its components can be written as

From (2.4) and the resolvent equation \(i\lambda U-{\mathcal {A}}U=F\), we get

To obtain exponential stability, we use condition (1.6). Let us introduce the functionals

where

Note that in this case \(q'(x)\) is large in comparison with q for n large, therefore there exist positive constants such that

Lemma 3.1

For any \([\xi _1,\xi _2]\subset [0,L]\), the solution of system (2.1) satisfies

Proof

Multiplying Eq. (3.1)\(_4\) by \(q{\overline{M}}\), we get

Similarly, multiplying Eq. (3.1)\(_2\) by \(q{\overline{S}},\) we get

So, our result follows. \(\square \)

Let us denote by Q and R any functions satisfying

Lemma 3.2

Under the above conditions, we have

Proof

Multiplying (3.1)\(_4\) by \({\overline{\psi }}\) and using integration by parts, we get

from where we get (3.6). Similarly, multiplying (3.1)\(_2\) by \({\overline{\varphi }}\) we get the other inequality. \(\square \)

Lemma 3.3

Under the above conditions, we have that for any \([\xi _1,\xi _2]\subset [0,L]\) it follows

Proof

Integrating over ]0, L[ (3.1)\(_4\), we get

So, using Lemma 3.2 and (3.4) we get for \(\lambda \) large enough that

From Lemma 3.2, we get

from where our conclusion follows. \(\square \)

Theorem 3.2

The semigroup \(e^{{\mathcal {A}}t}\) associated with system (2.1) verifying (1.3) is exponentially stable provided (1.6) holds. If \(\chi _0\ne 0\) the semigroup decays polynomially to zero, that is

where c is independent of \(\epsilon \).

Proof

Multiplying (3.1)\(_5\) by \(\displaystyle \int \limits _x^L{\overline{\Psi }}\;{\text {d}}s\), we get

Therefore, we have

from where we get

From Lemma 3.3,

Using Gagliardo–Nirenberg’s inequality and relation (3.1), we get

Using Lemma 3.3, the above inequality and recalling the definition of \(J_2\) we get

To estimate \(J_3\), we use (3.6)

Using the same procedure as above in (3.11), we get

On the other hand, using interpolation we get

Recalling the definition of \(J_1\) and using (3.10) and (3.12), we get

where we used inequalities of the type

Recalling (3.2) and substitution of \(J_1\) and \(J_2\) into (3.9) yields

Using Lemma 3.2, we arrive at

Multiplying (3.1)\(_4\) by \({\overline{S}},\) we get

Recalling the definition of S and using Eq. (3.1)\(_2\) to rewrite \(G_0\), we get

where R is such that \(|R|\le C\Vert U\Vert \Vert F\Vert \). Therefore, we get

Using the observability inequalities (Lemma 3.1), we get

hence (3.14) implies

for \(\epsilon =\delta ^2.\) Substitution of the above expression in (3.18) and using (3.14) we get

Using Lemma 3.2 implies

Note that

If \(\chi _0=0\), exponential decay holds. Let us suppose that \(\chi _0\ne 0\). Using (3.14), we have

So we have

Therefore, by Theorem 3.1, polynomial decay holds. \(\square \)

4 The semilinear problem

Here, we prove the well-posedness of the abstract semilinear problem and we show, under suitable conditions, that the solution also decays polynomially to zero. Let \( {\mathcal {F}} \) be a locally Lipschitz function defined over a Hilbert space \( {\mathcal {H}} \). We assume that there exists a globally Lipschitz function \( \widetilde{{\mathcal {F}}_R}\) such that for any ball \( B_R=\{W\in {\mathcal {H}};\;\; \Vert W\Vert _{{\mathcal {H}}}\le R\} \),

Additionally, we assume that there exists a positive constant \(\kappa _0\) such that

Under these conditions, we have the following result.

Theorem 4.1

Let \(\{T(t)\}_{t\ge 0}\) be a \(C_0\) semigroup of contractions, exponentially or polynomially stable, with infinitesimal generator \({\mathbb {A}}\) over the phase space \({\mathcal {H}}\). Let \({\mathcal {F}}\) be locally Lipschitz on \({\mathcal {H}}\), satisfying conditions (4.1) and (4.2). Then, there exists a global solution to

that decays exponentially or polynomially, respectively.

Proof

By hypotheses, there exist positive constants \(c_0\) and \(\gamma \) such that \( \Vert T(t)\Vert \le c_0e^{-\gamma t}, \) and \(\widetilde{{\mathcal {F}}_R}\) globally Lipschitz with Lipschitz constant \(K_0\) verifying conditions (4.1) and (4.2). Let us consider the following space.

Using standard fixed point arguments, we can show that there exists only one global solution to

Multiplying the above equation by \(U^R\), we get that

Since the semigroup is contractive, its infinitesimal generator is dissipative, therefore

Using (4.2), we get

Note that for \(R> (1+\kappa _0)\Vert U_0\Vert _{{\mathcal {H}}}^2\), we have that

In particular, we have

This means that \(U^R\) is also a solution of system (4.3) and, because of the uniqueness, we conclude that \(U^R=U\). To show the exponential stability of system (4.3), it is enough to show the exponential decay for system (4.4). To do that, we use fixed point arguments.

Note that \({\mathcal {T}}\) is invariant over \(E_{\gamma -\delta }\) for \(\delta \) small, (\(\gamma >\delta \)). In fact, for any \(V\in E_{\gamma -\delta }\) we have

Hence, \({\mathcal {T}}(V)\in E_{\gamma -\delta }\). Using standard arguments, we show that \({\mathcal {T}}^n\) satisfies

Therefore, we have a unique fixed point satisfying

That is, U is a solution of (4.4), and since \({\mathcal {T}}\) is invariant over \(E_{\gamma -\delta }\), the solution decays exponentially. To show the polynomial stability, we consider the space

To show the invariance, we use

and use the same reasoning as above. \(\square \)

Let us consider the semilinear system

The above system can be written as

where \({\mathcal {A}}\) is given by (2.3) and \({\mathcal {F}}\) is given by

Note that \({\mathcal {F}}\) is a Lipschitz function verifying hypothesis (4.1)–(4.2). In fact, \({\mathcal {F}}(0)=0\). Moreover,

Theorem 4.2

The nonlinear semigroup defined by system (4.5) is exponentially stable, provided \(\chi _0=0\). Otherwise the solution decays polynomially as established in Theorem 3.2.

Proof

It is a direct consequence of Theorem 4.1. \(\square \)

Let us introduce the functionals

where q is as in (3.3); hence there exist positive constants \(C_0\) and \(C_1\) such that

Under the above conditions, we establish the observability inequalities for the evolution system.

Lemma 4.1

The solution of system (4.5) satisfies

Proof

Multiply Eq. (4.5)\(_1\) by \(q{\overline{S}}\) and Eq. (4.5)\(_2\) by \(q{\overline{M}}\), sum the results, integrate by parts and proceed as in the proof of Lemma 3.3. To obtain the second inequality, we use \(q_0\) instead of the function q given by (3.3). \(\square \)

Theorem 4.3

For any initial data \( (\varphi _0,\varphi _1,\psi _0,\psi _1,\theta _0)\in {\mathcal {H}}\), there exists a weak solution to Signorini’s problem (1.1)–(1.5), which decays as established in Theorem 4.2.

Proof

From Theorem 4.1, there exists only one solution to system (4.5) verifying

where

and

In particular, from Lemma 4.1 we have that

which means that the first order energy is uniformly bounded for any \(\epsilon >0\). Standard procedures imply that the solution of system (4.5) converges in the distributional sense to a solution of system (1.1). It remains to show that condition (1.4) holds. Using Theorem 4.1, we get that \(\varphi _t^\epsilon (L,t)\) and \(S^\epsilon (L,t)\) are bounded in \(L^2(0,T)\) for any \(\epsilon >0\), and so is \(v_{tt}^\epsilon \). Using (4.5)\(_4\), we get

for any \(u\in L^2(0,T;{\mathcal {K}})\cap H^1(0,T;L^2(0,L))\), where \({\mathcal {K}}=\{w\in H^1(0,L),\;\; g_1\le w(L)\le g_2\}.\) It is not difficult to see that

In fact, from (4.5)\(_4\) \(\epsilon v_{tt}^\epsilon \) is bounded by a constant depending on \(\epsilon \), in \(L^2(0,T)\), from (4.9) \(v_{t}^\epsilon \) is also uniformly bounded in \(L^2(0,T)\). Therefore, \(v_{t}^\epsilon \) is a continuous function, uniformly bounded in \(L^\infty (0,T)\). Making an integration by parts, we get

Hence,

Note that

for any \(g_1\le u(L,t)\le g_2\). Similarly, we get

Therefore, from the last two inequalities we arrive at

for any \(u\in H^1(0,T;L^2(0,L))\) such that \(g_1\le u(L,t)\le g_2\). Letting \(\epsilon \rightarrow 0\) and recalling that \(v=\varphi (L,t)\) we get

From this relation, we get (1.5). The proof of the existence is now complete. To show the asymptotic behaviour, we use Theorem 4.2 to get

So, using the semicontinuity of the norm and noting that \({\mathcal {N}}(0)=0,\) we obtain

where C is a positive constant independent of the parameter \(\epsilon .\) Thus, we conclude the exponential stability of Signorini’s problem. Similarly, we get the polynomial stability. \(\square \)

Remark 4.1

We believe that the polynomial rate of decay is optimal in the sense that it is the same rate obtained in [10, 11] where the authors show the optimality.

Remark 4.2

The uniqueness of the solution to Signorini’s problem (1.1)–(1.4) remains an open question.

The same approach can be used to show existence of the semilinear problem

Theorem 4.4

Under the same hypotheses as in Theorem 4.3, there is at least one solution to Signorini’s problem (4.11) satisfying (1.2)–(1.5).

Proof

As in Theorem 4.3, we consider the function

where f is given by (4.6). Note that \( {\mathcal {F}}(0)=0\). Applying the mean value theorem to \(g(s)=|s|^\alpha s\), we obtain the inequality

Taking the norm in \({\mathcal {H}}\) and since \(\varphi ^{\epsilon }_i\) and \(\psi ^{\epsilon }_i\) belong to \(H^{1}(0,L)\subset L^{\infty }(0,L),\) then we get

Therefore, \({\mathcal {F}}\) is locally Lipschitz. Since

then

Thus, there exists a positive constant \(c_0\) such that

Note that for this function, there exists the cut-off function

It is not difficult to check that

is globally Lipschitz. Using Theorem 4.1, our conclusion follows. \(\square \)
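The passage from a locally Lipschitz nonlinearity to a globally Lipschitz one can be illustrated on the scalar function \(g(s)=|s|^\alpha s\) used in the proof. The sketch below truncates the argument to \([-R,R]\) before applying g; this clipping construction and the names `g_cut` and `radius` are assumptions chosen for illustration and are not claimed to be the cut-off function used in the proof. By the mean value theorem, the truncated function is globally Lipschitz with constant \((\alpha +1)R^\alpha \) and coincides with g on \([-R,R]\).

```python
def g(s, alpha):
    """Power-type nonlinearity g(s) = |s|^alpha * s (locally Lipschitz)."""
    return abs(s) ** alpha * s


def g_cut(s, alpha, radius):
    """Truncated version: clip the argument to [-radius, radius] first.

    Illustrative assumption: this is one simple way to build a globally
    Lipschitz function that agrees with g on the ball |s| <= radius.
    """
    clipped = max(-radius, min(radius, s))
    return g(clipped, alpha)


if __name__ == "__main__":
    alpha, radius = 2.0, 1.0
    for s in (-3.0, -0.5, 0.0, 0.5, 3.0):
        print(s, g(s, alpha), g_cut(s, alpha, radius))
```

Outside the ball the truncated function is constant, which is what keeps its Lipschitz constant bounded while g itself grows superlinearly.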

5 Numerical approach

In this section, we consider the numerical solution of the penalized problem (4.5). We use the finite element method in space over (0, L) and finite differences in time.

5.1 Algorithms and numerical experiment

Let \(X_h\) be a partition of the interval \(\Omega =(0,L),\) that is, \( X_h=\{0=x_0<x_1<\cdots <x_N=L\},\ \ \ \Omega _{j+1}=(x_j,x_{j+1}), \) where \(N_e\) is the number of elements of the partition. We consider the finite-dimensional space \( S^h_1=\{u\in C(0,L); u\Big |_{\Omega _e}\in P_1(\Omega _e)\},\ \) where \(P_1\) is the set of linear polynomials over \(\Omega _e,\) and the subspaces \( U^h=\{u^h\in S^h_1; u^h(0)=0\}\ \text{ and }\ V^h=\{v^h\in S^h_1; v^h(L)=0\} \). We use a representation of the functions \(\varphi ^h\) and \(\psi ^h\) as in [16], so we have
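As a minimal sketch of the spatial discretization, the following code assembles the consistent mass and stiffness matrices of piecewise linear (\(P_1\)) elements on a uniform partition of (0, L). It is written for a single scalar unknown and does not reproduce the interpolation of [16] used for \(\varphi ^h\) and \(\psi ^h\); the function name `assemble_p1` and the uniform mesh are assumptions made for illustration.

```python
def assemble_p1(n_elems, length):
    """Assemble P1 mass and stiffness matrices on a uniform mesh.

    Returns (M, K) as dense lists of lists of size (n_elems + 1)^2, where
    M[i][j] = int phi_i phi_j dx and K[i][j] = int phi_i' phi_j' dx.
    Boundary conditions are not imposed here.
    """
    h = length / n_elems
    n = n_elems + 1                                   # nodes x_0, ..., x_N
    M = [[0.0] * n for _ in range(n)]
    K = [[0.0] * n for _ in range(n)]
    m_loc = [[h / 3.0, h / 6.0], [h / 6.0, h / 3.0]]  # element mass matrix
    k_loc = [[1.0 / h, -1.0 / h], [-1.0 / h, 1.0 / h]]  # element stiffness
    for e in range(n_elems):                          # element (x_e, x_{e+1})
        for a in range(2):
            for b in range(2):
                M[e + a][e + b] += m_loc[a][b]
                K[e + a][e + b] += k_loc[a][b]
    return M, K


if __name__ == "__main__":
    M, K = assemble_p1(4, 1.0)
    print(sum(map(sum, M)))        # total mass, equals the length of the interval
    print([sum(row) for row in K]) # constants lie in the kernel of K
```

A homogeneous Dirichlet condition such as \(u^h(0)=0\) would then be imposed by deleting the row and column of the corresponding node.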

where \(\phi _i(x),\ i = 1,\ldots , 2N,\) and \(\omega _i(x),\ i = 1,\ldots , N,\) are the global vector interpolation functions. So, we obtain the following dynamical problem in \({\mathbb {R}}^N\times {\mathbb {R}}^{2N}\).

where \(\mathbf{M }_1\) is the thermal capacity matrix, \(\mathbf{K }_1\) the conductivity matrix, \(\mathbf{C }_1\) the coupling matrix and \(\mathbf{F }_1\) the heat source vector; \(\mathbf{M }_2\) is the consistent mass matrix, \(\mathbf{K }_2(\mathbf{d }(t))\) the vector of consistent nodal elastic stiffness at time t, and \(\mathbf{F }_2(t)\) the vector of consistent generalized nodal applied forces at time t. Furthermore, \(\theta _0,\) \(\mathbf{d }_0\) and \(\mathbf{d }_1\) are the initial nodal temperature, displacement and velocity, respectively.

To solve the above system, we introduce a partition P of the time domain [0, T] into M intervals of length \(\Delta t\) such that \(0=t_0<t_1<\cdots <t_M=T,\) with \(t_{n+1}-t_n=\Delta t,\) and we use the well-known generalized trapezoidal rule and Newmark’s method (see [17, 18]). Our problem is nonlinear, so the numerical scheme becomes

where

and \(\beta ,\ \gamma \) and \(\alpha \) are parameters that govern the stability and accuracy of the methods.
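The role of \(\beta \) and \(\gamma \) can be seen on the simplest possible model. The sketch below applies Newmark’s method in predictor-corrector form to a linear undamped single-degree-of-freedom oscillator \(m\ddot{u}+ku=0\); the oscillator, the function name `newmark_sdof` and its arguments are assumptions made for illustration, and the thermal part of the scheme (governed by \(\alpha \)) and the contact nonlinearity are not included. With \(\beta =\frac{1}{4}\) and \(\gamma =\frac{1}{2}\) the method coincides with the trapezoidal rule, which preserves the discrete energy of a linear undamped system.

```python
def newmark_sdof(m, k, u0, v0, dt, n_steps, beta=0.25, gamma=0.5):
    """Newmark time stepping for m*u'' + k*u = 0 (illustrative sketch).

    Returns the displacement and velocity after n_steps steps of size dt.
    """
    u, v = u0, v0
    a = -k * u / m                                   # initial acceleration
    for _ in range(n_steps):
        # Predictors built from the known state at time t_n.
        u_pred = u + dt * v + dt * dt * (0.5 - beta) * a
        v_pred = v + dt * (1.0 - gamma) * a
        # Solve the equation of motion at t_{n+1} for the new acceleration.
        a = -k * u_pred / (m + beta * dt * dt * k)
        # Correctors.
        u = u_pred + beta * dt * dt * a
        v = v_pred + gamma * dt * a
    return u, v


if __name__ == "__main__":
    m, k = 1.0, 4.0
    u, v = newmark_sdof(m, k, u0=1.0, v0=0.0, dt=0.01, n_steps=1000)
    energy = 0.5 * m * v * v + 0.5 * k * u * u
    print(u, v, energy)
```

For the full problem the scalar division is replaced by the solution of a nonlinear algebraic system at each step, since the stiffness term depends on the displacement through the contact condition.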

Remark 5.1

A typical numerical difficulty for the Timoshenko system is shear locking. Several remedies have been proposed in the literature; we mention the classical references by Arnold [19], Hughes et al. [20] and Prathap and Bhashyam [21].

Remark 5.2

To obtain the computational results, we use a code implemented in C. The plots were produced with gnuplot.

5.1.1 Numerical experiment

To verify the asymptotic behaviour of the numerical solutions, we take the algorithm parameters \(\beta =\frac{1}{4},\ \gamma =\frac{1}{2}\) and \(\alpha =\frac{1}{2}.\) In these experiments, we consider the following initial conditions:

Also, we take a finite element mesh with \(h=0.00125\) and \(\Delta t= 10^{-6}\) s.

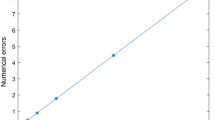

Experiment. We consider a rectangular beam with \(L= 1.0\) m, thickness 0.1 m, width 0.1 m, \(E=69\times 10^{9}\) N/\(\text{ m}^2,\) \(\rho =2700\) kg/\(\text{ m}^3,\) \(\nu =0.3\) (Poisson ratio), \(\tau =42\) W/(m K) and \(\varphi _t(x,0)=1-\cos (\frac{2\pi }{L} x).\) The penalization parameter is \(\epsilon =10^{-9}\) and \(g_2=-g_1=0.001\) m (Fig. 2).
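The coefficients \(\rho _1=\rho A,\ \rho _2=\rho I,\ k=\kappa GA,\ b=EI\) of Sect. 1 can be evaluated for this beam with a few lines of code. The sketch below makes three assumptions that are not stated in the text: the isotropic relation \(G=E/(2(1+\nu ))\), the rectangular-section formula \(I=\text{width}\cdot \text{thickness}^3/12\), and a shear factor \(\kappa =5/6\), a value commonly used for rectangular sections. The function name `beam_parameters` is likewise hypothetical.

```python
import math


def beam_parameters(width=0.1, thickness=0.1, young=69e9,
                    density=2700.0, poisson=0.3, shear_factor=5.0 / 6.0):
    """Timoshenko coefficients for a rectangular cross section (SI units).

    Assumptions: G = E / (2 (1 + nu)), I = width * thickness^3 / 12 and
    shear_factor = 5/6; none of these is specified in the experiment.
    """
    area = width * thickness
    inertia = width * thickness ** 3 / 12.0
    shear_modulus = young / (2.0 * (1.0 + poisson))
    rho1 = density * area
    rho2 = density * inertia
    k = shear_factor * shear_modulus * area
    b = young * inertia
    return {
        "rho1": rho1, "rho2": rho2, "k": k, "b": b,
        "c_shear": math.sqrt(k / rho1),   # speed associated with phi
        "c_bend": math.sqrt(b / rho2),    # speed associated with psi
    }


if __name__ == "__main__":
    for name, value in beam_parameters().items():
        print(f"{name:8s} = {value:.6g}")
```

Under these assumptions the two speeds \(\sqrt{k/\rho _1}\) and \(\sqrt{b/\rho _2}\) differ, since their ratio depends only on \(\kappa \) and \(\nu \). If equality of these two speeds is what the equal-speed condition requires, the experiment corresponds to the general case, where polynomial decay is expected.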

References

Lions, J.L., Lagnese, J.E.: Modelling Analysis and Control of Thin Plates. Collection RMA. Masson, Paris (1989)

Timoshenko, S.P.: On the correction for shear of the differential equation for transversal vibrations of prismatic bars. Philos. Mag. Ser. 6(41), 744–746 (1921)

Andrews, K.T., Shillor, M., Wright, S.: On the dynamic vibrations of an elastic beam in frictional contact with a rigid obstacle. J. Elast. 42, 1–30 (1996)

Kuttler, K.L., Shillor, M.: Vibrations of a beam between two stops. Dyn. Contin. Discrete Impuls. Syst. Sér. B Appl. Algorithms 8, 93–110 (2001)

Copetti, M.I.M., Elliott, C.M.: A one dimensional quasi-static contact problem in linear thermoelasticity. Eur. J. Appl. Math. 4, 151–174 (1993)

Dumont, Y., Paoli, L.: Vibrations of a beam between stops: convergence of a fully discretized approximation. Math. Model. Numer. Anal. 40, 705–734 (2006)

Muñoz Rivera, J.E., Arantes, S.: Exponential decay for a thermoelastic beam between two stops. J. Therm. Stress 31, 537–556 (2008)

Berti, A., Rivera, J.E.M., Naso, M.G.: A contact problem for a thermoelastic Timoshenko beam. Z. Angew. Math. Phys. 66, 1–20 (2014)

Muñoz Rivera, J.E., Oquendo, H.P.: Exponential stability to a contact problem of partially viscoelastic materials. J. Elast. 63, 87–111 (2001)

Almeida Júnior, D.S., Santos, M.L., Rivera, J.E.M.: Stability to 1-D thermoelastic Timoshenko beam acting on shear force. Z. Angew. Math. Phys. 65, 1233–1249 (2014)

Almeida, D.S., Santos, M.L., Rivera, J.E.M.: Stability to weakly dissipative Timoshenko systems. Math. Methods Appl. Sci. 36, 1965–1976 (2013)

Liu, Z., Zheng, S.: Exponential stability of semigroup associated with thermoelastic system. Quart. Appl. Math. 51(3), 535–545 (1993)

Brézis, H.: Functional Analysis, Sobolev Spaces and Partial Differential Equations, p. 599. Springer, New York (2011). https://doi.org/10.1007/978-0-387-70914-7

Pruess, J.: On the spectrum of \(C_0\)-semigroups. Trans. Am. Math. Soc. 284(2), 847–857 (1984)

Borichev, A., Tomilov, Y.: Optimal polynomial decay of functions and operator semigroups. Math. Ann. 347, 455–478 (2009)

Loula, A.D.F., Hughes, T.J.R., Franca, L.P.: Petrov–Galerkin formulation of the Timoshenko beam problem. Comput. Methods Appl. Mech. Eng. 63, 115–132 (1987)

Newmark, N.M.: A method of computation for structural dynamics. J. Eng. Mech. 85, 67–94 (1959)

Hughes, T.J.R.: The Finite Element Method: Linear Static and Dynamic Finite Element Analysis. Dover Civil and Mechanical Engineering Series. Dover Publications, New York (2000)

Arnold, D.N.: Discretization by finite elements of a model parameter dependent problem. Numer. Math. (1981). https://doi.org/10.1007/BF01400318

Hughes, T.J.R., Taylor, R.L., Kanoknukulchai, W.: A simple and efficient finite element method for plate bending. Int. J. Numer. Methods Eng. 11, 1529–1543 (1977). https://doi.org/10.1002/nme.1620111005

Prathap, G., Bhashyam, G.R.: Reduced integration and the shear-flexible beam element. Int. J. Numer. Methods Eng. 18, 195–210 (1982)

Acknowledgements

The authors would like to express their deepest gratitude to the anonymous referees for their comments and suggestions that have contributed greatly to the improvement of this article.


Cite this article

Rivera, J.E.M., da Costa Baldez, C.A. Stability for a boundary contact problem in thermoelastic Timoshenko’s beam. Z. Angew. Math. Phys. 72, 8 (2021). https://doi.org/10.1007/s00033-020-01437-y


DOI: https://doi.org/10.1007/s00033-020-01437-y

Keywords

- Timoshenko’s beams

- Thermoelasticity

- Contact problem

- Semilinear problem

- Asymptotic behaviour

- Numerical solution