Abstract

We introduce and analyze a B-spline collocation method for second-kind nonlinear Volterra integral equations. The method is based on B-spline interpolation with new end conditions, for which we establish existence and error estimates. The technique is developed separately for even- and odd-degree splines and yields super-convergent approximations for even-degree splines. We analyze the convergence of the method and derive error bounds. A series of numerical experiments shows that the numerical approximations agree closely with the theoretical predictions, indicating that the proposed method is an effective and robust tool for nonlinear Volterra integral equations.

1 Introduction

Integral equations form a branch of mathematics dealing with equations in which an unknown function appears under an integral sign. These equations arise in many areas of science and engineering, such as physics, chemistry, biology, economics, signal processing, potential theory, electrostatics and fluid dynamics [1,2,3,4,5,6,7,8,9,10,11,12]. The theory of integral equations is an important tool for understanding and solving complex problems in these fields. Consider the second kind nonlinear Volterra integral equation

\[ u(x)=g(x)+\int _{0}^{x}G(x,t,u(t))\,dt,\qquad x\in [0,T], \qquad (1.1) \]

where g and G are given functions. A large variety of numerical methods have been developed to approximate the numerical solution of Volterra integral equations. In [13], the higher degree fuzzy transform technique is used for the numerical solution of the second kind Volterra integral equations with singular and nonsingular kernels. A smoothing transformation technique based on quasi-linearization and product integration methods has been used for the numerical solution of nonlinear weakly singular Volterra integral equations in [14]. A solution has been obtained in [15], using the fixed point technique in the setting of dislocated extended b-metric space for the Volterra integral equations. Xiao-yong [16] developed a high-order algorithm for the solution of nonlinear Volterra integral equations with vanishing variable delays. The algorithm was a nontrivial extension of single step methods. A Runge–Kutta method based on the first kind Chebyshev polynomials has been developed to approximate the solution of nonlinear stiff Volterra integral equations of the second kind in [17]. In [18], a collocation method based on Chebyshev polynomial interpolation is developed for the numerical solution of Volterra integral equations of the second kind. This method used the roots of Chebyshev polynomial as collocation points. A lot of other numerical approaches such as Chebyshev collocation method [19], Romberg extrapolation method [20, 21], iterated Galerkin method [22] and finite difference method [23] have been used to approximate the numerical solution of Volterra integral equations of the second kind.

The use of spline functions for the numerical solution of integral equations dates back to the 1970s. Hung [24] used spline functions to approximate the solution of Volterra integral equations of the first and second kind. He obtained a convergent scheme based on linear and quadratic splines and proved that using cubic splines leads to divergent approximations. Tom [25] developed a spline-based approximation for the solution of Volterra integral equations of the second kind. It is proved that splines of degree greater than two with maximum degree of smoothness lead to unstable approximations for the solution of Volterra equations of the second kind. Tom [26] determined the conditions for stability of spline-based methods for the solution of Volterra integral equations. In [27], a \(C^2\)-cubic spline is used to approximate the solution of the Volterra integral equation of the second kind, but the question of stability is not answered. Kauthen [28] developed a polynomial spline collocation method for the solution of integral-algebraic equations of the Volterra type. In successive papers [29,30,31,32], Oja et al. discuss the stability and convergence of the spline collocation method for the solution of Volterra integral equations of the second kind. It is proved that solving Volterra integral equations with step-by-step collocation using m-th degree splines of class \(C^{m-1}[0,T]\) leads to unstable and divergent approximations for \(m\ge 3.\) In recent years, many researchers have attempted to approximate the solution of second kind Volterra integral equations using splines [33,34,35,36,37].

In the current work, we develop a new approach based on the B-spline collocation method for solving nonlinear Volterra integral equations of the second kind. Odd and even-degree splines are treated separately. For odd degree splines, we collocate the problem on the grid points of the uniform partition, while the midpoints of the partition are used for the even degrees. For \(m\ge 2\), some extra relations are needed to uniquely determine the spline approximation. To construct such extra relations, we collocate the problem at near boundary midpoints for odd values of m and near boundary grid points for even values of m. First, we prove that such a spline exists and obtain local error bounds for interpolating sufficiently smooth functions. Then, we use the method to approximate the solution of nonlinear Volterra integral equations of the second kind. It is proved that the method is super-convergent for even m.

The rest of the paper is organized as follows: In Sect. 2, we will introduce a B-spline collocation method with new end conditions and we will obtain some local error bounds to be used in the convergence analysis. In Sect. 3, we will construct our new approach based on proposed B-spline for the solution of the nonlinear Volterra integral equations. Section 4 is devoted to the convergence analysis and error bound of the method. In Sect. 5, we will solve some problems to show the applicability and efficiency of the proposed method.

2 B-Spline Collocation Method

B-splines are the splines with smallest compact support, which means that the basis functions are non-zero only within a small interval or segment of the domain. Such a property makes B-splines computationally efficient, as the calculations can be localized to these smaller segments. B-splines form a partition of unity, which means that their sum over the entire domain is equal to one. This property allows for the construction of a global approximation by combining local B-spline basis functions. Each basis function is associated with a specific control point and only affects a small region of the domain. By properly choosing the control points and their corresponding basis functions, it is possible to create a global approximation that accurately represents the behavior of the solution throughout the domain. The partition of unity property also ensures that the approximated solution satisfies important properties, such as conservation laws or boundary conditions. This is crucial in many applications where physical laws or constraints need to be preserved. The partition of unity and non-negativity of the B-spline basis functions are equivalent to the affine invariance and the convex hull properties of B-spline curves. Meaning that, a B-spline curve is contained in the convex hull of its control polyline. Consequently, as the interpolated function u moves from a to b and crosses a knot, a basis function becomes zero and a new non-zero basis function becomes effective. As a result, one control point whose coefficient becomes zero will leave the definition of the current convex hull and is replaced with a new control point whose coefficient becomes non-zero. One of the advantages of using B-splines for this purpose is their ability to provide a smooth and continuous representation of the solution. This is particularly important in the context of differential equations, where the solution is often expected to be differentiable and exhibits certain smoothness properties.
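The compact support and partition of unity properties described above can be checked numerically. The following is a minimal Python sketch, assuming a uniform extended knot vector and the standard Cox–de Boor recursion; the names `bspline_basis` and `uniform_extended_knots` are illustrative and do not come from the paper.

```python
def uniform_extended_knots(n, m, T=1.0):
    """Uniform knots x~_0..x~_{n+2m} with x~_m = 0 and x~_{m+n} = T (assumed layout)."""
    h = T / n
    return [(k - m) * h for k in range(n + 2 * m + 1)]


def bspline_basis(i, m, knots, x):
    """Value at x of the i-th B-spline of degree m via the Cox-de Boor recursion."""
    if m == 0:
        # Degree 0: indicator function of the half-open knot interval.
        return 1.0 if knots[i] <= x < knots[i + 1] else 0.0
    left = right = 0.0
    d1 = knots[i + m] - knots[i]
    d2 = knots[i + m + 1] - knots[i + 1]
    if d1 > 0:
        left = (x - knots[i]) / d1 * bspline_basis(i, m - 1, knots, x)
    if d2 > 0:
        right = (knots[i + m + 1] - x) / d2 * bspline_basis(i + 1, m - 1, knots, x)
    return left + right


def basis_values(n, m, x, T=1.0):
    """Values of all n+m basis functions at a point x in [0, T)."""
    knots = uniform_extended_knots(n, m, T)
    return [bspline_basis(i, m, knots, x) for i in range(n + m)]
```

For a point x strictly inside a subinterval, `basis_values(n, m, x)` should contain exactly m+1 positive entries (compact support) whose sum is 1 up to rounding (partition of unity).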

In what follows, we will provide a succinct overview of (cardinal) B-spline functions and their properties. Afterward, we will introduce a novel numerical scheme that utilizes the Gauss–Legendre quadrature method. This scheme aims to numerically solve integral equations with high precision and convergence. If you wish to delve deeper into the subject matter, we recommend referring to references [38] and [39] for comprehensive information on B-splines and Gauss–Legendre quadrature method.

Let \(\Delta \equiv \{0=x_{0}< x_{1}< \cdots < x_{n}=T\}\) be a uniform partition of the interval [0, T] with \(h=\frac{T}{n}\) as step size and \(\Omega \equiv \left\{ \tau _{i}=x_{i}-\frac{h}{2}\right\} _{i=1}^{n}\) be the set of mid-points of \(\Delta \). Also, denote by \(I_i=[x_{i-1},x_{i}), i=1,\ldots , n-1\) and \(I_n=[x_{n-1},x_{n}]\) the subintervals of the partition \(\Delta \). Given a positive integer \(m<n\), the space of piecewise polynomials of degree m is defined as

where \(\Pi _{m}\) is the space of polynomials of degree m. Now for \(\Delta \) and m defined above the space of polynomial spline of degree m with the simple knots at the points \(x_{0}, x_{1},...,x_{n}\) can be defined as

where \(C^{m-1}[0,T]\) is the space of \(m-1\) times continuously differentiable functions defined on [0, T]. It is the smoothest space of piecewise polynomials of degree m. Polynomial spline spaces are finite dimensional linear spaces with very convenient bases. In what follows, following [38], we introduce a basis for \(\mathcal{S}\mathcal{P}_{m}(\Delta )\) which has compact support and defines a partition of unity.

Let us define the extended partition \(\tilde{\Delta }=\{\tilde{x}_{i}\}_{i=0}^{n+2m}\), associated with \(\Delta \) with the knots \(\tilde{x}_{i}\) defined as

and

It should be noted that the interior knots \(\{\tilde{x}_{i}\}_{i=m}^{m+n}\) are uniquely determined while the first and last m points can be chosen arbitrarily. Let \(\tilde{\Delta }\) be the extended partition associated with \(\Delta \) and define

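where \((x-y)_{+}^{m}\) is the truncated power function

\[ (x-y)_{+}^{m}={\left\{ \begin{array}{ll} (x-y)^{m}, &{} x\ge y,\\ 0, &{} x<y, \end{array}\right. } \]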

and the divided difference operator \(\left[ \tilde{x}_{i},\ldots ,\tilde{x}_{m+i+1}\right] (x-y)_{+}^{m}\) is defined in [38] Definition 2.49. The set of functions \(\{\mathfrak {B}_i(x), ~ 0 \le i \le n+m-1 \}\) forms a basis for the space of spline functions \(\mathcal{S}\mathcal{P}_{m}(\Delta )\) which has compact support, that is

and

Moreover, the basis functions form a partition of unity, that is

\[ \sum _{i=0}^{n+m-1}\mathfrak {B}_i(x)=1,\qquad x\in [0,T]. \]

In another terminology using the cardinal spline function

the B-spline basis functions can be constructed as follows [40]

which means that

Therefore, any spline \(s\in \mathcal{S}\mathcal{P}_{m}(\Delta )\) can be written in the following form

\[ s(x)=\sum _{i=0}^{n+m-1}c_i\mathfrak {B}_i(x), \]

where \(c_i\), \(i = 0,\ldots , n+m-1\), are unknown coefficients to be determined. We define the interpolation conditions on the grid points of the partition for odd m and on the midpoints for even m. For \(f\in C[0,T]\), let s(x) satisfy the interpolatory conditions

\[ s(\tau _{i})=f(\tau _{i}),\qquad i=1,\ldots ,n, \qquad (2.2) \]

for even m, and

\[ s(x_{i})=f(x_{i}),\qquad i=0,\ldots ,n, \qquad (2.3) \]

for odd m.

As we know, the dimension of \(\mathcal{S}\mathcal{P}_{m}(\Delta )\) is \(n+m\); thus, in order to uniquely determine s(x), we need \(m-1\) end conditions for odd m and m end conditions for even m along with the interpolatory conditions. To avoid the utilization of derivatives of the solution at the initial point as additional conditions, we introduce the following alternative end conditions:

\[ s(x_{i})=f(x_{i}),\qquad i\in \{0,1,\ldots ,\delta \}\cup \{n-\delta ,\ldots ,n\}, \qquad (2.4) \]

for even m and

\[ s(\tau _{i})=f(\tau _{i}),\qquad i\in \{1,\ldots ,\sigma \}\cup \{n-\sigma +1,\ldots ,n\}, \qquad (2.5) \]

for odd m, where \(\delta =[\frac{m-1}{2}]\) and \(\sigma =[\frac{m}{2}]\) (\([\nu ]\) means the greatest integer not exceeding \(\nu \)).
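The counting argument above can be made concrete. The Python sketch below lists the interpolation points for a given degree m and lets one check that their number equals the dimension \(n+m\). It assumes the index sets \(\{0,\ldots ,\delta \}\cup \{n-\delta ,\ldots ,n\}\) (even m, grid points) and \(\{1,\ldots ,\sigma \}\cup \{n-\sigma +1,\ldots ,n\}\) (odd m, midpoints) used in Sect. 3; the helper name `collocation_points` is hypothetical.

```python
def collocation_points(n, m, T=1.0):
    """Return (main_points, extra_points) for a degree-m spline on n uniform subintervals.

    Even m: main points are the midpoints tau_1..tau_n, extras are grid points
            near both ends of the interval.
    Odd m:  main points are the grid points x_0..x_n, extras are midpoints
            near both ends of the interval.
    Assumes m < n so that the two extra index sets do not overlap.
    """
    h = T / n
    grid = [i * h for i in range(n + 1)]             # x_0, ..., x_n
    mids = [(i - 0.5) * h for i in range(1, n + 1)]  # tau_1, ..., tau_n
    if m % 2 == 0:
        delta = (m - 1) // 2
        idx = list(range(0, delta + 1)) + list(range(n - delta, n + 1))
        return mids, [grid[i] for i in idx]
    sigma = m // 2
    idx = list(range(1, sigma + 1)) + list(range(n - sigma + 1, n + 1))
    return grid, [mids[i - 1] for i in idx]
```

For every degree the total `len(main) + len(extra)` equals `n + m`, which matches the number of unknown coefficients \(c_i\).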

To establish the well-defined nature of the spline interpolation introduced above, it is necessary to refer to the following Theorem from [41], which provides a sufficient condition for the unique solvability of the resulting system.

Theorem 2.1

The coefficients matrix \(A=\left( \mathcal {B}_i(x_j)\right) \) of the B-spline, interpolating a function at the grid points \(x_j\) is nonsingular if and only if all diagonal elements \(\mathcal {B}_i(x_i)\) are nonzero.

The subsequent Theorem presents a local error estimate for the aforementioned spline interpolation at both the grid points and the midpoints of the partition. First, we recall the following Lemma from [42].

Lemma 2.1

Let \(A=\{a_{ij}\}\) be an \(n\times n\) matrix with \(|a_{ii}|\ge \sum _{j=1,j\ne i}^{n}|a_{ij}|+\varepsilon ,\) \(1\le i \le n\), where \(\varepsilon >0\), then we have \(\Vert A^{-1}\Vert _{\infty }<\varepsilon ^{-1}\).
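As a quick numerical illustration of Lemma 2.1, the following Python sketch computes the dominance margin \(\varepsilon \) of a matrix and the infinity norm of its inverse. It is only a sanity check under stated assumptions: the inverse is obtained by a small Gauss–Jordan routine written for this example, and all function names are illustrative.

```python
def dominance_margin(A):
    """Largest eps with |a_ii| >= sum_{j != i} |a_ij| + eps for every row i."""
    return min(
        abs(row[i]) - sum(abs(v) for j, v in enumerate(row) if j != i)
        for i, row in enumerate(A)
    )


def inf_norm(A):
    """Matrix infinity norm: the maximum absolute row sum."""
    return max(sum(abs(v) for v in row) for row in A)


def inverse(A):
    """Gauss-Jordan inverse with partial pivoting (intended for small dense matrices)."""
    n = len(A)
    # Augment A with the identity matrix.
    M = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[piv][col] == 0.0:
            raise ValueError("matrix is singular")
        M[col], M[piv] = M[piv], M[col]
        p = M[col][col]
        M[col] = [v / p for v in M[col]]
        for r in range(n):
            if r != col:
                f = M[r][col]
                M[r] = [a - f * b for a, b in zip(M[r], M[col])]
    return [row[n:] for row in M]


def tridiagonal(n, lower, diag, upper):
    """Dense n x n tridiagonal test matrix."""
    return [[diag if i == j else lower if i == j + 1 else upper if j == i + 1 else 0.0
             for j in range(n)] for i in range(n)]
```

For the test matrix `tridiagonal(6, 1.0, 4.0, 1.0)` the margin is \(\varepsilon =2\), so the lemma predicts that the infinity norm of the inverse does not exceed \(1/2\).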

Theorem 2.2

Let \(s\in \mathcal{S}\mathcal{P}_{m}(\Delta )\) be the unique spline interpolation for \(f\in C^{m+2}[0,T]\) satisfying interpolatory conditions (2.2)–(2.4) for even m or (2.3)–(2.5) for odd m, then

if m is even, and

if m is odd.

Proof

We will prove the first relation, since the second one can be proved in a similar manner. Consider the following consistency relation, which connects s(x) at the midpoints and grid points of the partition [40]

where

Let us define the error function \(e(x)=s(x)-f(x)\). Subtracting \(\sum _{j=-\delta -1}^{\delta +1}l_{i}f(x_{i+j})\) from both sides of (2.8), we have

which using the definition of e(x) the left hand side can be written as \(\sum _{j=-\delta -1}^{\delta +1}l_{i}e(x_{i+j})\) and by the interpolatory conditions (2.2), \(s(\tau _{k})\) in the right hand side can be replaced by \(f(\tau _{k})\) to obtain

By the assumptions \(f\in C^{m+2}[0,T]\), so \(M_1=\max _{0 \le x\le T}|f^{(m+2)}(x)|<\infty \). By expanding f in both terms in the right hand side of the relation above, using Taylor series expansion about \(x_{i}\) and simplifying we obtain

The system above contains \(n-m+1\) equations with \(n+1\) unknowns \(e(x_{i}),~ 0\le i\le n\). Using (2.4) and (2.10) together, we obtain the following \((n+1)\times (n+1)\) system

Obviously, certain elements within the above system are zero. By eliminating the unknowns corresponding to these zero elements, the size of the system can be reduced. Consequently, the system can be reformulated in its matrix form as shown below:

where

and

According to Theorem 2.1, the coefficients matrix \(\mathcal {A}\) is nonsingular if all the diagonal elements are nonzero. Specifically, all the diagonal elements of \(\mathcal {A}\) are equal to \(l_{\delta }\) which possesses the following value

Since \(m+\frac{1}{2}-\delta \in support (\mathcal {B}_{m+1})\) thus \(l_{\delta }\ne 0\) and the matrix \(\mathcal {A}\) is nonsingular. Therefore, from (2.12), we obtain

For \(m\le 6\), the system is strictly diagonally dominant and using Lemma 2.1 we have \(\left\| \mathcal {A}^{-1}\right\| _{\infty }<46\); thus, in this case the proof is complete. Let \(m>6\). It can be readily verified that for each B-spline basis function, all \(l_i\) values are positive and \(\sum _{i=0}^{m}l_i=1\), so \(\Vert \mathcal {A}\Vert _{_1}=1\) and \(\Vert \mathcal {A}\Vert _{_\infty }=1\). By the properties of the B-spline basis function \(\mathcal {B}_{m+1}\), it follows that

thus, \(\mathcal {A}\) is a symmetric matrix. On the other hand, the nonsingularity of \(\mathcal {A}\) implies that all of its eigenvalues are nonzero. Specifically, we have \(\mu (\mathcal {A}) \ne 0\), where \(\mu \) represents the smallest absolute value of the eigenvalues of \(\mathcal {A}\). From linear algebra, we have

where \(\rho \) represents the largest absolute value of the eigenvalues of \(\mathcal {A}\). On the other hand, by Gershgorin’s Theorem, all eigenvalues \(\lambda \) of \(\mathcal {A}\) must lie within the Gershgorin discs

which results

It is noteworthy that the numerical experiments show that for fixed m, by varying n, we have \(\rho (\mathcal {A})\ne 1\) which means that \(\Vert \mathcal {A}\Vert _{_2}<1\). Consequently, based on the principles of linear algebra for symmetric matrices, we have

which means that \(\Vert \mathcal {A}^{-1} \Vert _{_2}\) cannot be infinite. Denote by \(M_{2}\) the upper bound of \(\Vert \mathcal {A}^{-1} \Vert _{_2}\); then substituting into (2.14) we obtain

This together with (2.4) completes the proof of (2.6). \(\square \)

3 Numerical Method

In this section, we present a numerical method based on B-splines for solving integral equations. Representing the unknown function in the integral equation by B-splines gives a compact and accurate representation, which reduces the computational effort while preserving solution accuracy. Throughout this section, we detail the theoretical foundation, algorithmic implementation, and numerical considerations of the method. Its effectiveness is demonstrated through examples, including nonlinear integral equations, in Sect. 5.

The operator representation of the integral Eq. (1.1) is

\[ u=g+\mathcal {K}u, \qquad (3.1) \]

where \(\mathcal {K}:C^{m+1}\rightarrow C^{m+1}\) is a nonlinear integral operator of the following form:

\[ (\mathcal {K}u)(x)=\int _{0}^{x}G(x,t,u(t))\,dt. \qquad (3.2) \]

Assuming the existence and uniqueness of the solution to Eq. (3.1), our objective is to employ an m-th degree B-spline of the following form

to approximate the solution.

3.1 Even Degree B-Spline

Let m be even. Replacing u(x) with s(x) in Eq. (3.1) and collocating the resulting equation at the midpoints of the partition, we have

Then employing the B-spline basis functions at points \(\tau _{i}\), the Eq. (3.3) can be expressed as

for \(1 \le i \le n\). The system described above consists of n equations and \(m+n\) unknowns. In order to ensure the unique determination of the spline approximation, we require an additional m equations. To this end, we employ the collocation equation at m boundary and near-boundary grid points in the following manner

In terms of the B-spline basis functions, Eq. (3.5) can be expressed as follows

for \(i\in \{0,1,\ldots ,\delta \}\cup \{n-\delta ,\ldots ,n\}\). The relation (3.4) together with (3.6) yields a system of \(n+m\) equations involving \(n+m\) unknowns \(c_{j},~ j=0,\ldots ,n+m-1\), which must be solved to determine s(x). The solution of this system gives the desired approximation s(x) of u(x) in terms of the proposed B-spline basis functions.

To handle the integrals in (3.4) and (3.6), we employ the q-point Gauss–Legendre quadrature method. This numerical integration technique approximates integrals over a given interval by distributing q quadrature points in a way that optimally captures the behavior of the integrand [39]. By introducing the notation \(z(t)=G\left( \tau _{i},t,\sum _{j=0}^{n+m-1}c_{j}\mathcal {B}_{m+1}\left( \frac{t-x_0}{h}-j+2\right) \right) \) and a change of variable, the integrals in (3.4) can be reformulated as follows

Now by incorporating the Gauss–Legendre quadrature into our system of equations, we can efficiently compute the unknown coefficients \(c_j\), ultimately leading to a highly accurate approximation of s(x). We end up with

where \(\vartheta _{k}\) and \(\eta _{k}\) represent the weights and abscissas associated with the q-point Gauss–Legendre integration formula. In the same manner, by introducing the notation \(\tilde{z}(t)=G\left( x_{i},t,\sum _{j=0}^{n+m-1}c_{j}\mathcal {B}_{m+1}\left( \frac{t-x_0}{h}-j+2\right) \right) \), the integrals in (3.6) can be approximated as follows

3.2 Odd Degree B-Spline

For odd m, we collocate the problem at grid points of the partition as follows

for \( 0 \le i \le n\). Obviously, the above system of equations requires \(m-1\) extra relations to be uniquely solvable. In this case, the collocated problem

can be utilized at the near boundary mid points of the partition for \(i \in \{1,\ldots ,\sigma \}\cup \{n-\sigma +1,\ldots ,n\}\). It is worth noting that the integrals in (3.9) and (3.10) can be approximated in the same manner as (3.7) and (3.8).
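To illustrate the grid-point collocation of this subsection in the simplest setting, the following Python sketch treats the lowest odd degree \(m=1\) (hat functions, for which no extra end conditions are needed) and a linear kernel \(G(x,t,u)=K(x,t)u\), so that the collocation system is linear and lower triangular. These simplifications, the fixed 2-point Gauss–Legendre rule on each subinterval, and the name `solve_linear_volterra` are assumptions made for illustration only; the general nonlinear case would require an iterative solver such as Newton's method.

```python
import math

# 2-point Gauss-Legendre abscissas and weights on [-1, 1].
GL_NODES = (-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0))
GL_WEIGHTS = (1.0, 1.0)


def solve_linear_volterra(g, K, T, n):
    """Linear-spline (m = 1) collocation at grid points for
    u(x) = g(x) + int_0^x K(x, t) u(t) dt on [0, T].

    Returns the grid x_0..x_n and the coefficients c_0..c_n; for hat
    functions the coefficient c_i equals the spline value s(x_i).
    """
    h = T / n
    x = [i * h for i in range(n + 1)]
    c = [0.0] * (n + 1)
    for i in range(n + 1):
        # w[j] approximates int_0^{x_i} K(x_i, t) B_j(t) dt for j <= i.
        w = [0.0] * (i + 1)
        for k in range(1, i + 1):
            a, b = x[k - 1], x[k]
            for eta, theta in zip(GL_NODES, GL_WEIGHTS):
                t = 0.5 * (b - a) * eta + 0.5 * (a + b)  # map [-1, 1] to [a, b]
                kern = 0.5 * (b - a) * theta * K(x[i], t)
                w[k - 1] += kern * (b - t) / h  # hat function B_{k-1} on [a, b]
                w[k] += kern * (t - a) / h      # hat function B_k on [a, b]
        # Row i of the system: c_i - sum_{j <= i} w_j c_j = g(x_i).
        rhs = g(x[i]) + sum(w[j] * c[j] for j in range(i))
        c[i] = rhs / (1.0 - w[i])
    return x, c


def max_grid_error(x, c, exact):
    """Maximum absolute error at the grid points."""
    return max(abs(ci - exact(xi)) for xi, ci in zip(x, c))
```

For example, with `g = lambda x: 1.0` and `K = lambda x, t: 1.0` on [0, 1] the exact solution is \(e^{x}\), and the grid error of this linear-spline sketch decreases roughly like \(h^{2}\) as n is doubled.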

4 Convergence Analysis and Error Estimation

4.1 Spline Approximation Error

In this section, we embark on an in-depth exploration of the error estimation and convergence rates pertaining to the scheme proposed within this paper. Our objective is to rigorously assess the accuracy and convergence properties of the introduced methodology. We constructed a collocation method based on B-splines for the solution of

where \(\mathcal {K}\) is the Volterra integral operator (3.2). We will prove the convergence for even degree splines and the proof is similar for odd degrees. Therefore, let m be even, in order to achieve an error bound and analyze the convergence of the proposed method, we define the operator \(\mathcal {P}_n u:C^{m+1}\rightarrow {\mathcal{S}\mathcal{P}_{m}(\Delta )}\) such that, for any function \(\alpha \in C^{m+1}\) we have

Rewriting (3.3) and (3.5), we have

which by means of operator \(\mathcal {P}_n\) and (4.1) can be written in the operator form as

Also \(s\in \mathcal{S}\mathcal{P}_{m}(\Delta )\), thus \(\mathcal {P}_{n}s=s\) and we have

Lemma 4.1

The operator \(\mathcal {P}_{n}\) is uniformly bounded in \(C^{k}[0,T]\).

Proof

Using [38], Theorem 6.22, for every \(\alpha \in C[a,b]\), we have

so \(\mathcal {P}_{n}\) is uniformly bounded. \(\square \)

Lemma 4.2

The sequence of operators \(\mathcal {P}_{n}\) uniformly converges to identity operator.

Proof

Let \(\alpha \in C[0,T]\), then according to Lebesgue Lemma, we have

But the right hand side of the above inequality represents the error of best approximation for \(\alpha \) in \(\Pi _{m}.\) Suppose that \(u^{*}\) is the best approximation for \(\alpha \); then using Jackson’s Theorem in each interval \([\tau _{i},\tau _{i+1}]\) we have

Thus we have

and the proof is complete. \(\square \)

In fact from Theorem 2.2 for \(\alpha \in C^{m+1}[0,T]\) we have

and if \(\alpha \in C^{m+2}[0,T]\), for even m we have the following local bound

We will show that (4.3) has a unique solution that converges to the solution of (4.1) and finally, we will obtain the rate of convergence. Let us restate the following Theorem from [43].

Theorem 4.1

Suppose that operators T and \(T_{n}\) on the Banach space B can be represented as

where K is a nonlinear, completely continuous operator mapping B into another Banach space \(B'\) and P and \(P_{n}\) are continuous linear operators taking \(B'\) into B. Suppose that the operator equation

has a solution \(\upsilon \). A sufficient condition that \(\upsilon \) be an isolated solution of (4.4) in some sphere \(\Vert \omega -\upsilon \Vert \le \mu \hspace{2.84544pt}( \mu >0)\) is that K be differentiable at the point \(\upsilon \) and that the homogeneous equation

has only the trivial solution \(w=0.\) Suppose further that the sequence of operators \(P_{n}\) converges strongly to the operator P, then the equation

has a solution \(\upsilon _{n}\) satisfying \(\Vert \upsilon _{n}-\upsilon \Vert \le \mu \) for all sufficiently large n, \(\upsilon _{n}\rightarrow \upsilon \) as \(n\rightarrow \infty ,\) and the rate of convergence is bounded by

Proof

See [43]. \(\square \)

Theorem 4.2

For even m, let \(u\in C^{m+1}[a,b]\) and \(s\in \mathcal{S}\mathcal{P}_{m}(\Delta )\) be the exact and spline solutions of problem (1.1), respectively. If s is obtained by (3.4)–(3.6), then it converges to u as n tends to infinity and for some constant C, we have

Also, if \(u\in C^{m+2}[a,b]\), the following local error bound holds

Proof

Since \(\mathcal {P}_{n}\rightarrow I\) thus we have \(\mathcal {P}_{n}\mathcal {K}\rightarrow \mathcal {K}\) and the assumptions of Theorem 4.1 are satisfied. Hence, the collocation equation

has a solution s for which \(\Vert s- u\Vert \rightarrow 0\). Also, using Theorem 4.1 we have the following bound

where \(M'\) is some finite constant. On the other hand, we have

which combining with complete continuity of \(\mathcal {K}\) gives

Also since \(|\mathcal {P}_{n}g-g|_{_{x_{i}}}=O(h^{m+2}),\) it results that

\(\square \)

4.2 Effect of Quadrature Rule on Error

We used a q-point Gauss–Legendre quadrature method to approximate the integrals arising in the collocation system. It is well known that the error associated with q-point Gauss–Legendre quadrature for \(f\in C^{2q}[a,b]\) is bounded by [44]

\[ |E_{q}(f)|\le \frac{(b-a)^{2q+1}(q!)^4}{(2q+1)\left[ (2q)!\right] ^3}\max _{a\le \xi \le b}|f^{(2q)}(\xi )|. \]

Since we used quadrature in each subinterval, \(b-a\le h\). For the values of q used, the term \(\frac{(q!)^4}{(2q+1)[(2q)!]^3}\) remains bounded, thus

for small q. On the other hand, we proved that the error of the collocation system is \(O(h^{m+1})\) for odd m and \(O(h^{m+2})\) for even m. Thus, q should be chosen such that it satisfies

While the Newton-Cotes quadrature can be employed to approximate integrals in the collocation system, it is worth noting that its accuracy is inferior to Gauss quadrature method. Consequently, a greater number of quadrature nodes will be required for satisfactory results.
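The dependence of the quadrature error on the subinterval length can be observed directly. The Python sketch below applies a q-point Gauss–Legendre rule (with hard-coded nodes and weights for q = 1, 2, 3) on a single interval \([0,h]\) and estimates the local order by halving h; for a smooth integrand the textbook bound suggests an observed order close to \(2q+1\). The function names and the test integrand are illustrative assumptions.

```python
import math

# Gauss-Legendre abscissas and weights on [-1, 1] for q = 1, 2, 3.
RULES = {
    1: ((0.0,), (2.0,)),
    2: ((-1.0 / math.sqrt(3.0), 1.0 / math.sqrt(3.0)), (1.0, 1.0)),
    3: ((-math.sqrt(0.6), 0.0, math.sqrt(0.6)), (5.0 / 9.0, 8.0 / 9.0, 5.0 / 9.0)),
}


def gauss_legendre(f, a, b, q):
    """q-point Gauss-Legendre approximation of the integral of f over [a, b]."""
    nodes, weights = RULES[q]
    half, mid = 0.5 * (b - a), 0.5 * (a + b)
    return half * sum(w * f(half * eta + mid) for eta, w in zip(nodes, weights))


def local_order(f, exact, h, q):
    """Observed order of the error on [0, h] when h is halved.

    `exact(h)` must return the exact integral of f over [0, h].
    """
    e1 = abs(gauss_legendre(f, 0.0, h, q) - exact(h))
    e2 = abs(gauss_legendre(f, 0.0, 0.5 * h, q) - exact(0.5 * h))
    return math.log2(e1 / e2)
```

With `f = math.exp` and `exact = math.expm1`, the call `local_order(f, exact, 0.4, q)` should return a value near 3, 5 and 7 for q = 1, 2 and 3, which is one way to choose q so that the quadrature error does not dominate the collocation error.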

5 Some Test Problems

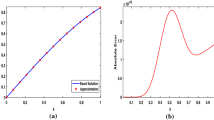

In this section, we solve some test problems of second kind Volterra integral equations to show the applicability of the proposed method. Two examples with smooth kernel and one with non-smooth kernel are solved. The acquired results are compared with various existing methods to demonstrate the efficacy of our approach. The maximum absolute errors and the experimental orders of convergence are presented in Tables 1, 2, 3, 4, 5, 6, 7. The experimental orders are calculated by

where \(E_n\) denotes the maximum absolute error obtained with \(n+1\) collocation points. All the programs are written in Mathematica 12, and run on a system with Intel Core i7-2670 2.20 GHz and 8 GB of RAM.
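As a hedged illustration of how such experimental orders are typically computed, the Python sketch below assumes the common doubling definition \(\log _{2}(E_{n}/E_{2n})\); this choice and the function name `experimental_orders` are assumptions and may differ from the exact formula used for the tables.

```python
import math


def experimental_orders(errors):
    """Experimental orders from maximum errors measured on successively doubled n.

    errors[k] is assumed to be the maximum absolute error for n = n0 * 2**k.
    Returns log2(E_n / E_{2n}) for each consecutive pair.
    """
    return [math.log2(errors[k] / errors[k + 1]) for k in range(len(errors) - 1)]
```

For errors that behave like \(Ch^{p}\), each returned value is close to p, so the list can be compared directly with the theoretical rate.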

Example 5.1

As the first test problem, consider the linear second kind Volterra integral equation

with the exact solution \(u(x)=\frac{1}{2}\left( \sin (x)+\cos (x)+e^{x}\right) \), where \(\bar{\lambda }\) is a constant parameter. In [30] and [31], it is shown that the step-by-step spline collocation procedure based on quadratic and cubic splines may diverge for some choices of collocation parameters. It is also shown that the nonlocal spline approach can perform better than the step-by-step algorithm. Here, we solve the test problem for various values of \(\bar{\lambda }\) using the quadratic and cubic spline approaches and compare the results with the splines in [30] and [31]. The results are tabulated in Tables 1 and 2. They show that our approach achieves more accurate results. Also, the method is stable and the solution remains bounded. We also ran the problem on the larger domain [0, 5] and tabulated the results in Table 3. The results show that the method remains accurate and stable on larger domains.

Example 5.2

Consider the following nonlinear second kind Volterra integral equation

where \(\Phi (x,t,u(t))=xt+u(t)\) and \(\phi (x,t,u(x))=\frac{x}{1+x}\ln (1+u(x))\). The exact solution is \(u(x)=\frac{1}{10}x^{10}\). The problem has already been solved using a cubic spline [45] and a method based on the mean-value Theorem [46]. We solved the problem using the cubic spline approach. The numbers of collocation points needed to reach the tolerance are tabulated in Table 4. Our method may require fewer collocation points than the existing methods.

Example 5.3

Consider the following second kind singular cordial Volterra integral equation

with \(\varphi (t/x)=(t/x)^{\xi -1}\) and \(\xi >0\). In [43], for \(\varphi \in L^{1}[0,1]\), it is proved that \(V_{\varphi }\in \mathcal {L}(C^{m})\) where \(V_{\varphi }\) is the cordial integral operator

and \(\mathcal {L}(C^{m})\) is the space of linear bounded operators from \(C^{m}\) to \(C^{m}\). So the new approach can be used successfully without any modifications.

We solved this problem for various kinds of exact solutions. The problem is solved for \(\xi =\frac{3}{2}\) using various degrees of splines, and the maximum absolute errors and orders of convergence are tabulated in Tables 4-7. Based on Theorem 4.2, the order of convergence depends on the regularity of the exact solution. The results in Tables 4-7 agree with the theoretical results. Also, the results show that even degree splines yield super-convergent approximations.

Example 5.4

Consider the following nonlinear second kind Volterra integral equation

with the exact solution \(u(x)=\cos (x)\). The problem is already solved by a cubic spline method with standard end conditions in [47]. In Table 9, we compared the results obtained by our cubic spline method (\(m=3\)) with those reported in [47]. It is obvious that our results are more accurate than the standard cubic spline. Also, we solved the problems in the larger domain \([0,T], T=2,3,4,5,\) and tabulated the maximum absolute errors in Table 10. The results show that the method is applicable for larger domains as well.

6 Conclusion

The paper has been concerned with the application of B-splines for the solution of second kind Volterra integral equations. It is already known from the literature that using splines with maximum order of regularity for the solution of second kind Volterra integral equations may lead to unstable approximations, depending on the choice of collocation parameters [30, 31]. We developed a method based on B-splines for second kind nonlinear Volterra integral equations which uses the grid points and midpoints of the uniform partition as collocation points. The method is stable and convergent. Also, we found super-convergence using even degree splines. We used the method for some test problems for which the step-by-step and nonlocal spline approaches are unstable. The obtained results show that the method is efficient and that the theory is supported by the numerical experiments. It is noteworthy that the method presented in this paper can be readily applied for approximating solutions to Fredholm integral equations, as well as integro-differential equations.

Availability of data and materials

Not applicable.

References

Linz, P.: Analytical and Numerical Methods for Volterra Equations. SIAM, Philadelphia (1985)

Wazwaz, A.M.: Linear and Nonlinear Integral Equations: Methods and Applications. Springer, Heidelberg (2011)

Atkinson, K., Han, W.: Theoretical Numerical Analysis: A Functional Analysis Framework. Texts in Applied Mathematics, vol. 39. Springer, Dordrecht (2009)

Baker, C.T.H.: The Numerical Treatment of Integral Equations. Monographs on Numerical Analysis, Clarendon Press, Oxford (1977)

Laurita, C., De Bonis, M.C., Sagaria, V.: A numerical method for linear Volterra integral equations on infinite intervals and its application to the resolution of metastatic tumor growth models. Appl. Numer. Math. 172, 475–496 (2022)

Ghaderi Aram, M., Beilina, L., Karchevskii, E.M.: An adaptive finite element method for solving 3d electromagnetic volume integral equation with applications in microwave thermometry. J. Comput. Phys. 459, 111122 (2022)

Klein, M., Ghai, S.K., Ahmed, U., Chakraborty, N.: Energy integral equation for premixed flame-wall interaction in turbulent boundary layers and its application to turbulent burning velocity and wall flux evaluations. Int. J. Heat Mass Tran. 196, 123230 (2022)

Yao, W.-W., Zhou, X.-P.: Meshless numerical solution for nonlocal integral differentiation equation with application in peridynamics. Eng. Anal. Bound. Elements 144(5), 569–582 (2022)

Chen, Y., Guo, Z.: Uniform sparse domination and quantitative weighted boundedness for singular integrals and application to the dissipative quasi-geostrophic equation. J. Differ. Equ. 378(5), 871–917 (2024)

Wu, J., Yang, B., Guo, T.: Well-posedness and regularity of mean-field backward doubly stochastic Volterra integral equations and applications to dynamic risk measures. J. Math. Anal. Appl. 535(1), 128089 (2024)

Assari, P., Dehghan, M.: A meshless local discrete Galerkin (MLDG) scheme for numerically solving two-dimensional nonlinear Volterra integral equations. Appl. Math. Comput. 350(1), 249–265 (2019)

Goligerdian, A., Khaksar-e Oshagh, M.: The numerical solution of a time-delay model of population growth with immigration using Legendre wavelets. Appl. Numer. Math. 197, 243–257 (2024)

Bahrami, F., Zeinali, M., Shahmorad, S.: Recursive higher order fuzzy transform method for numerical solution of Volterra integral equation with singular and nonsingular kernel. J. Comput. Appl. Math. 403(15), 113854 (2022)

Najafi, E.: Smoothing transformation for numerical solution of nonlinear weakly singular Volterra integral equations using quasilinearization and product integration methods. Appl. Numer. Math. 153(15), 540–557 (2020)

Karapinar, E., Panda, S.K., Atangana, A.: A numerical schemes and comparisons for fixed point results with applications to the solutions of Volterra integral equations in dislocated extended b-metric space. Alexandria Eng. J. 59(2), 815–827 (2020)

Xiao-yong, Z.: A new strategy for the numerical solution of nonlinear Volterra integral equations with vanishing delays. Appl. Math. Comput. 365, 124608 (2020)

Zhang, L., Ma, F.: Pouzet–Runge–Kutta–Chebyshev method for Volterra integral equations of the second kind. J. Comput. Appl. Math. 288, 323–331 (2015)

Hu, X., Wang, Z., Hu, B.: A collocation method based on roots of Chebyshev polynomial for solving Volterra integral equations of the second kind. Appl. Math. Lett. 29, 108804 (2023)

Afonso, S.M., Azevedo, J.S., da Silva, M.P.G.: Numerical analysis of the Chebyshev collocation method for functional Volterra integral equations. Appl. Math. Lett. 3, 521–536 (2020)

Tao, L., Yong, H.: Extrapolation method for solving weakly singular nonlinear Volterra integral equations of the second kind. J. Math. Anal. Appl. 324(1), 225–237 (2006)

Meštrović, M., Ocvirk, E.: An application of Romberg extrapolation on quadrature method for solving linear Volterra integral equations of the second kind. Appl. Math. Comput. 194(2), 389–393 (2007)

Lin, Y., Brunner, H., Zhang, S.: Higher accuracy methods for second-kind Volterra integral equations based on asymptotic expansions of iterated Galerkin methods. J. Int. Equ. Appl. 10(4), 375–237 (1998)

Jazbi, B., Jalalvand, M., Mokhtarzadeh, M.R.: A finite difference method for the smooth solution of linear Volterra integral equations. Int. J. Nonlinear Anal. Appl. 4(2), 1–10 (2013)

Hung, H.S.: The numerical solution of differential and integral equations by spline functions. In: Tech. Summary Report 1053, Math. Res. Center, U.S. Army, University of Wisconsin, Madison, MR 40 6130 (1970)

El Tom, M.E.A.: Application of spline functions to Volterra integral equations. Inst. Math. Appl. 8, 354–357 (1971)

El Tom, M.E.A.: On the numerical stability of spline function approximations to solutions of Volterra integral equations of the second kind. BIT 14, 136–143 (1974)

Netravali, A.N.: Spline approximation to the solution of the Volterra integral equation of the second kind. Math. Comput. 27(121), 99–106 (1973)

Kauthen, J.P.: The numerical solution of integral-algebraic equations of index 1 by polynomial spline collocation methods. Math. Comput. 70(236), 1503–1514 (2001)

Oja, P.: Stability of the spline collocation method for Volterra integral equations. J. Int. Equ. Appl. 13(2), 141–155 (2001)

Oja, P., Saveljeva, D.: Cubic spline collocation for Volterra integral equations. Computing 69(2), 319–337 (2002)

Oja, P., Deputat, V., Saveljeva, D.: Quadratic spline subdomain method for Volterra integral equations. Math. Model. Anal. 10(4), 335–344 (2005)

Kangro, R., Oja, P.: Convergence of spline collocation for Volterra integral equations. Appl. Numer. Math. 58, 1434–1447 (2005)

Murad, M.A., Harbi, S.H., Majee, S.N.: A solution of second kind Volterra integral equations using third order non-polynomial spline function. Baghdad Sci. J. 12(2), 406–411 (2015)

Gabbasov, N.S.: Special version of the spline method for integral equations of the third kind. Diff. Equ. 55(9), 1218–1225 (2019)

Maleknejad, Kh., Rostami, Y.: B-spline method for solving Fredholm integral equations of the first kind. Int. J. Indust. Math. 11(1):ID IJIM–1057 (2019)

Liu, Z., Liu, X., Xie, J., Huang, J.: The cardinal spline methods for the numerical solution of nonlinear integral equations. J. Chem. 3236813 (2020)

Ramazanov, A.K., Ramazanov, A.R.K., Magomedova, V.G.: On the dynamic solution of the Volterra integral equation in the form of rational spline functions. Math. Notes 111(4), 595–603 (2022)

Schumaker, L.L.: Spline Functions: Basic Theory. Cambridge University Press, Cambridge (2007)

Stoer, J., Bulirsch, R.: Introduction to Numerical Analysis. Texts in Applied Mathematics, 3rd edn. Springer, New York (2002)

Sakai, M.: Some new consistency relations connecting spline values at mesh and mid points. BIT Numer. Math. 23, 543–546 (1983)

Schoenberg, I.J., Whitney, A.: On Pólya frequency functions, III: the positivity of translation determinants with an application to the interpolation problem by spline curves. Trans. Am. Math. Soc. 74, 246–259 (1953)

Lucas, T.R.: Error bounds for interpolating cubic splines under various end conditions. SIAM J. Numer. Anal. 11(3), 569–584 (1974)

Vainikko, G.: The convergence of the collocation method for non-linear differential equations. USSR Comput. Math. Math. Phys. 6(1), 47–58 (1966)

Gautschi, W.: On the construction of Gaussian quadrature rules from modified moments. Math. Comput. 24, 245–260 (1970)

Sauter, S., Maleknejad, Kh., Torabi, P.: Numerical solution of a non-linear Volterra integral equation. Vietnam J. Math. 44, 5–28 (2016)

Martire, A.L., Angelis, P.D., Marchis, R.D.: A new numerical method for a class of Volterra and Fredholm integral equations. J. Comput. Appl. Math. 379, 112944 (2020)

Rashidinia, J., Ebrahimi, N.: Collocation method for linear and nonlinear Fredholm and Volterra integral equations. Appl. Math. Comput. 270, 156–164 (2015)

Funding

This research is not supported by any specific funding.

Author information

Contributions

M. Ghasemi and A. Goligerdian wrote and edited the main manuscript text. M. Ghasemi and S. Moradi ran the programs and performed the numerical tests. All authors wrote the theoretical part. All authors reviewed the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Ghasemi, M., Goligerdian, A. & Moradi, S. A Novel Super-Convergent Numerical Method for Solving Nonlinear Volterra Integral Equations Based on B-Splines. Mediterr. J. Math. 21, 129 (2024). https://doi.org/10.1007/s00009-024-02670-9

DOI: https://doi.org/10.1007/s00009-024-02670-9