Abstract

As an important tool of multi-way/tensor data analysis tool, Tucker decomposition has been applied widely in various fields. But traditional sequential Tucker algorithms have been outdated because tensor data is growing rapidly in term of size. To address this problem, in this paper, we focus on parallel Tucker decomposition of dense tensors on distributed-memory systems. The proposed method uses Hierarchical SVD to accelerate the SVD step in traditional sequential algorithms, which usually takes up most computation time. The data distribution strategy is designed to follow the implementation of Hierarchical SVD. We also find that compared with the state-of-the-art method, the proposed method has lower communication cost in large-scale parallel cases under the assumption of the α–β model.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

In computer science, tensors are N-way arrays. In early days, tensor data was dealt with by vectorization, which broke construction information of original data. With the development of higher order data processing, tensor analysis has drawn lots of attention and shown advantage to vectorization methods. As the most important tool in tensor analysis, tensor decomposition methods are widely applied in multi fields such as computer vision [1, 2], machine learning [3, 4], neuroscience [5], data mining [6], social network computing [7] and so on. There are several tensor decomposition methods, among which Tucker decomposition [8] is one of most famous ones.

Tucker decomposition is traditionally solved by HOOI [9] with quite expensive cost due to iteration progress and TTM (Tensor Times Matrix) chain computation. Since Higher Order Singular Values Decomposition (HOSVD) [10] plays the role of initial step in HOOI, its truncated version Truncated HOSVD (T-HOSVD) can be used to approximate the output of HOOI, with only a little accuracy lost but great reduction of computation cost. An even better approximation is Sequential Truncated HOSVD (ST-HOSVD) [11], which applies greedy strategy to the truncation progress and achieves improvements in both accuracy and speed.

Although ST-HOSVD has reduced lots of computation cost compared to traditional methods like HOOI, it’s still far from enough. With the development of our society and techniques, data sizes are growing rapidly. Traditional sequential solution within one computation node fails to handle Tucker decomposition of large tensor data today, due to not only extremely long computation time but also memory limit of one single node. To solve Tucker decomposition efficiently, recent years have seen multiple parallel algorithms on this issue.

Based on Hadoop [12], HaTen2 [13] can handle sparse tensor data of billion scale. This method divides complex tensor multiplications into large amount of unrelated vector dot products. To cut the dependencies of original sequential steps, HaTen2 takes additional operators which increases computation cost but improves parallelism a lot. For sparse tensors, this method reduces execution time on clusters with few additional tasks, while it costs much more for nonsparse cases. To deal with dense data, [14] proposes the first distributed dense Tucker decomposition algorithm on CPU clusters. It distributes tensor data across all modes. For example, let \({\mathcal{X}}\) be a tensor of size \({I}_{1}\times {I}_{2}\times {I}_{3}\), and \(p={p}_{1}\times {p}_{2}\times {p}_{3}\) be the number of available processors. \(\mathcal{X}\) is divided into tensor blocks of size \(\frac{{I}_{1}}{{p}_{1}}\times \frac{{I}_{2}}{{p}_{2}}\times \frac{{I}_{3}}{{p}_{3}}\), and each processor owns one block. This distribution strategy has been followed by several works as described below. [15] distributes tensor data using the same strategy and optimizes the TTM chain computation for parallel HOOI. It constructs TTM-trees to present TTM schemes and searches the best one with least computational load. GPUTensor [16] applies the same distribution strategy of [14] to Tucker decomposition on GPU platforms.

This paper focus on Tucker decomposition of dense tensors, in which case, [14] offers the current state-of-the-art method. Although there are some other methods extends it, they all follow its data distribution strategy. But actually, it has not optimized communication cost among different processors yet, which has a great influence on computation efficiency for parallel algorithms. As the data size increases day by day, large scale parallel techniques are in great need for data analysis. So in this paper, we present a new parallel Tucker decomposition method with optimized communication cost. Different from the methods mentioned above, we merge the gram matrix computation and eigen decomposition into one SVD step, which is implemented in parallel based on Hierarchical SVD [17] and leads to much less communication cost.

The rest of this paper is organized as follows. Section 2 is the preliminaries including basic concepts of tensor operations and a simple instruction of ST-HOSVD. Section 3 explains the details of the proposed method, focusing on implementation of parallel SVD and communication cost analysis. Section 4 is the experiments.

2 Preliminaries

2.1 Tensor Notations

In this paper, we denote a tensor by calligraphic letters, like \(\mathcal{X}\). The dimension of a tensor is called order (also called mode). The space of a real tensor of order N and size \({I_1} \times \ldots \times {I_N}\) is denoted as \({R^{{I_1} \times {I_2} \times \ldots \times {I_N}}}\).

Definition 1

(Matricization). The mode-n matricization of tensor \({\mathcal{X}} \in {R^{{I_n} \times {I_2} \times \ldots {I_N}}}\) is the matrix, denoted as \({\mathcal{X}}_{(n)}\in {R}^{{I}_{n}\times {\prod }_{k\ne n}{I}_{k}}\), whose columns are composed of all the vectors obtained from \(\mathcal{X}\) by fixing all indices but n-th.

Definition 2

(Folding Operator). Suppose \(\mathcal{X}\) be a tensor. The mode-n folding operator of a matrix \(M={\mathcal{X}}_{(n)}\), denoted as \({fold_{n}}(M)\), is the inverse operator of the unfolding operator.

Definition 3

(Mode-n Product, TTM operator, Tensor Times Matrix). Mode-n product of tensor \({\mathcal{X}} \in {R^{{I_n} \times {I_2} \times \ldots {I_N}}}\) and matrix \(A \in {R^{J \times {I_n}}}\) is denoted by \(\mathcal{X}{\times }_{\mathrm{n}}A\), whose size is \({I_1} \times \ldots \times {I_{n - 1}} \times J \times {I_{n + 1}} \times \ldots \times {I_N}\), and defined by \( \mathcal{X} {\times }_{\mathrm{n}}A=fol{d}_{n}(M)\).

Definition 4

(Gram Matrix). The Gram Matrix of a matrix \(M\) is \(M{M}^{T}\).

2.2 St-HOSVD

Tucker factorization is a method that decomposes a given tensor \(X \in {R^{{I_1} \times {I_2} \times \ldots \times {I_N}}}\) into N factor matrices \({U_1},{U_2}, \ldots ,{U_N}\) and a core tensor \(\mathcal{C}\) such that

In this paper, we consider the optimization of ST-HOSVD [10] to solve Tucker decomposition. The algorithm of ST-HOSVD is described as Algorithm 1 below.

3 The Proposed Method

In this section, we will describe the proposed method in detail. In ST-HOSVD (Algorithm 1), to obtain the factor matrix of every mode, one needs to calculate the gram matrix of \({\mathcal{X}_{\left( n \right)}}\) and its eigen decomposition. But actually, one SVD step is sufficient to obtain the factor matrix. What’s more, in parallel cases, using truncated SVD can reduce communication cost, as is explained later. This is also a reason of why we prefer SVD here.

The implementation of parallel SVD in the proposed method can be divided into 3 steps:

-

Distribute data to each processor.

-

Compute SVD of the local data on each processor.

-

Root processor gathers SVD results and merge them to get the factor matrix.

Step 2 and 3 are the key of the proposed method, and data distribution in step 1 should follow them. So Sect. 3.1 explains step 2 and 3 firstly, followed by Sect. 3.2 with accuracy analysis. Section 3.3 describes data distribution and analysis communication cost after that.

3.1 Hierarchical SVD

The matricization of a tensor is usually a highly rectangle matrix \(M\in {R}^{I\times D}\) with \(I\ll D\). To compute the SVD of \(M\) efficiently, a natural way is to divide \(M\) into smaller matrices such that \(M = \left[ {{M_1}|{M_2}\left| \ldots \right|{M_p}} \right]\) with \({M}_{i}\in {R}^{I\times {D}_{i}}\), and compute SVD for each \({\mathrm{M}}_{i}\) and then merge them. Actually, this is the exact idea of Hierarchical SVD [17], which computes \(U\) and \(S\) parallelly while discarding \(V\) for the SVD of \(M:U \cdot S \cdot {V^T}\). And we can see in Algorithm 1, in ST-HOSVD, to obtain the factor matrix of Tucker decomposition for mode n, one only needs the left singular vectors \(U\) from the SVD of \({\mathcal{X}_{\left( n \right)}} = U \cdot S \cdot {V^T}\).

The Hierarchical SVD algorithm calculates the SVD of each \({M}_{i}\) by \({U}_{i}{S}_{i}{V}_{i}^{T}\) and discards the right singular matrices \({V}_{i}^{T}\). Then it calculates the SVD of \(\left[ {{U_1}{S_1}|{U_2}{S_2}\left| \ldots \right|{U_p}{S_p}} \right]\) and output the left singular matrix and singular values. Figure 1 illustrates the progress of Hierarchical SVD.

The illustration of Hierarchical SVD [17].

In the real world, most tensor data are of low-rank, which means truncated SVD with only a few leading singular values may lose little information of the original data. In this case, using SVD instead of gram matrix can reduce communication cost. The reason runs as follows. Suppose every processor has a local matrix \({M}_{i}\in {R}^{I\times {D}_{i}}\). The local gram matrix is of size \(I\times I\), which is the transferring data size per processor. But if we use SVD and the truncated rank is d, then each processor only needs to transfer \({U}_{i}{S}_{i}\in {R}^{I\times d}\). As mentioned above, d is usually much smaller than I in real world data, so the transferring data size \(I\times d\) is also much smaller than that of local gram matrix computation.

In extremely large-scale parallel cases with very large p, one can calculate SVD with more levels to reduce communication cost further. See Fig. 2 as an example. But one should also note that more levels come with larger error for truncated SVD. So we suggest this strategy only serve to trade off accuracy for computation time, or cases of non-truncated SVD.

3.2 Discussion on Error of Hierarchical SVD

Now let’s come to the accuracy of Hierarchical SVD. For normal SVD, the error analysis is very clear. Let \(A\) be a matrix and \({s_{\text{i}}},{u_i},{v_i}\;\left( {i = 1, \ldots n} \right)\) be its singular values in descent order and the corresponding left singular vectors and right singular vectors. Let \({\left(A\right)}_{d}\) represents the recovered matrix of reduced SVD of \(A\) with rank \(d\le n\), that is,

Then \({\left(A\right)}_{d}\) is the best approximation of \(A\) such that the rank is at most d. And the approximation error is \({\Vert A-{\left(A\right)}_{d}\Vert }_{F}=\sqrt{{\sum }_{i=d+1}^{n}{s}_{i}^{2}}\).

In [17], there is also a theorem to guarantee the error bound of Hierarchical SVD with multiple layers. We presente it here as below.

Theorem 1

(from [17]). Let \(A\) be a matrix. Assume that U and S are the outputs of q-level Hierarchical SVD algorithm with input A. Then there exists a unitary matrix V such that \(US=AV+\varPsi \), where

From Theorem 1, \(US{V}^{T}=A+\varPsi {V}^{T}\), and \({\Vert \varPsi {V}^{T}\Vert }_{F}={\Vert \varPsi \Vert }_{F}\), then we can know that \(U\) from Hierarchical SVD is the normal left matrix of \(A+\varPsi {V}^{T}\), which is close to \(A\). This means that Hierarchical SVD may increase error of reduced SVD by at most \(\left[{\left(1+\sqrt{2}\right)}^{q+1}-1\right]\) times.

For 1-layer Hierarchical SVD, there is a tighter bound guaranteed by another theorem from [17] as below. Here, we denote \(\overline{A}:=US\), where the SVD of A is \(A=US{V}^{T}\).

Theorem 2.

Let \(A = \left[ {{A_1}|{A_2}\left| \ldots \right|{A_p}} \right]\), \(B = \left[ {\overline {{{\left( {A_1} \right)}_d}} |\overline {{{\left( {A_2} \right)}_d}} \left| \ldots \right|\overline {{{\left( {A_p} \right)}_d}} } \right]\), and \({B}^{^{\prime}}=B+\Psi \), then there exists a unitary matrix \(W\) such that

In Theorem 2, it is routine to see that \(\overline {{{\left( B \right)}_d}} = US\), where U and S are the outputs of 1-level Hierarchical SVD algorithm with input A. Setting \(\varPsi \) to zero matrix will lead to

So for 1-layer Hierarchical SVD, Hierarchical SVD may increase error of reduced SVD by at most \(3\sqrt{2}\) times, which is small than the bound in Theorem 1 \(\left[{\left(1+\sqrt{2}\right)}^{2}-1\right]=2+2\sqrt{2}\).

3.3 Data Distribution and Communication Cost

Consider a tensor \({\mathcal{X}} \in {R^{{I_n} \times {I_2} \times \ldots {I_N}}}\). Denote \(I = {I_1} \times {I_2} \times \ldots \times {I_N}\) be the number of entries of the tensor, and \({J}_{k}=\frac{I}{{I}_{k}}\) be the products of all \({I}_{i}\) except for \({I}_{k}\).

In STHOSVD, data distribution occurs when implementing Hierarchical SVD across processors. For every mode k, the proposed method applies Hierarchical SVD on \({\mathcal{X}}_{(k)}\), the matricization of the tensor. We distribute data in a natural way. Divide \({\mathcal{X}_{\left( k \right)}} = \left[ {{M_1}|{M_2}| \ldots |{M_p}} \right]\), where \({M}_{i}\in {R}^{{I}_{k}\times {D}_{i}}\) and \({\sum }_{i=1}^{p}{D}_{i}={J}_{k}\), and every processor owns one matrix block \({M}_{i}\). To achieve maximum performance, the matrix blocks should have sizes of almost the same.

Assume that \({M_1},{M_2} \ldots {M_p}\) happen to have the same size of \({I}_{k}\times \frac{{J}_{k}}{p}\). Using the α–β model (the latency cost vs the per-word transfer cost), the communication cost of distributing \({M_1},{M_2} \ldots {M_p}\) from root processor to other processors is \(\left(p-1\right)\mathrm{\upalpha }+{I}_{k}\frac{{J}_{k}}{p}\upbeta =\left(p-1\right)\mathrm{\upalpha }+\frac{I}{p}\upbeta \). After every processor finishes calculating the SVD of the assigned matrix block \({M}_{i}\), the root processor will gather \({U}_{i}{S}_{i}\) from all processors, and the communication cost is \(\mathrm{\upalpha }{\mathrm{log}}_{2}\left(p\right)+\left(p-1\right){I}_{k}{r}_{k}\upbeta \), where \({r}_{k}\) is the reduced rank of mode k. So the total communication cost when calculating factor matrix of mode k is \(\left(p-1\right)\mathrm{\upalpha }+\frac{I}{p}\upbeta +\mathrm{\upalpha }{\mathrm{log}}_{2}\left(p\right)+\frac{\left(p-1\right)}{p}{I}_{k}{r}_{k}\upbeta =\left(p-1+{\mathrm{log}}_{2}\left(p\right)\right)\mathrm{\upalpha }+\left[\frac{I}{p}+\frac{\left(p-1\right)}{p}{I}_{k}{r}_{k}\right]\upbeta \).

Consider the state-of-the-art method from [14], with \(p\) processors organized in a grid of size \({p_1} \times {p_2} \times \ldots \times {p_N}\). Calculation of factor matrix of mode k includes an MPI send-receive operator and reduce operator for gram matrix, see [14] for details. The communication cost is \(\left({p}_{k}-1\right)\left(\mathrm{\upalpha }+\frac{I}{p}\upbeta \right)+\mathrm{\upalpha}\,{\mathrm{log}}_{2}\left({p}_{k}\right)+\frac{\left({p}_{k}-1\right)}{p}{I}_{k}^{2}\upbeta =\left({p}_{k}-1+{\mathrm{log}}_{2}\left({p}_{k}\right)\right)\mathrm{\upalpha }+\left[\left({p}_{k}-1\right)\frac{I}{p}+\frac{\left({p}_{k}-1\right)}{p}{I}_{k}{r}_{k}\right]\upbeta \). For most cases, \(\frac{I}{p}\) is the dominant item. In large-scale parallel cases, p is very large so that every \({p}_{k}\) is large too. Comparing the proposed method with the state-of-the-art method from [14], it is easy to see that the former one has lower communication cost.

TTM operator is simple here. We need only to send the factor matrix \({{\varvec{U}}}_{{\varvec{k}}}\) from root to all processors and the latter ones update the local assigned matrix block by \({M_i} \leftarrow {M_i}\,{\times_k}\,{U_k}\). Here we ignore the communication cost cause it needs little data to transfer, and the case of the state-of-the-art method from [14] is the same.

The proposed distributed ST-HOSVD algorithm is summarized in Algorithm 2.

4 Experiments

We conduct two kinds of experiments in this section. The first one focuses on the merging results of Hierarchical SVD, and the second one focuses on the comparison of the proposed method and the state-of-the-art method.

4.1 Experiments of SVD

In this part, we generate a random matrix A of size 128 × 1024. The matrix A is divided into different number of blocks by columns (\(A = \left[ {{A_1}|{A_2}\left| \ldots \right|{A_p}} \right]\)), and the 1-layer Hierarchical SVD with reduced rank 64 is implemented on it. Reduced SVD is used here as a comparison. In Hierarchical SVD, the right singular matrix V is discarded, and the left singular matrix U is the key output. To evaluate the quality of U, we estimate the projection error of A by

For the normal reduced SVD, the error is 0.2962. For Hierarchical SVD, the results runs is presented in Table 1. We can see that the projection errors of Hierarchical SVD are very close to that of the normal reduced SVD. These results show Hierssarchical SVD has only little accuracy lost compared with normal reduced SVD.

4.2 Experiments of Tucker Decomposition

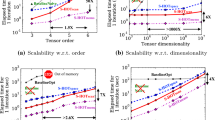

In this part, we present some experimental results to verify the performance of the proposed method. The experiments are implemented on a cluster with 128 nodes. Each node is equipped with 128 GB memory, a 32-core CPU of 2.0 GHz and a GPU of 13.3 TFLOPs peak single precision (FP32) Performance. We generate five tensor of size 1024 × 1024 × 1024 randomly, and compare the performances of the proposed method with the state-of-the-art method from [14]. The two methods are implemented using different number of nodes, namely 16, 32, 64, 128, to test their scalabilities. For each method, we apply it to the five tensors and set the reduced rank to be 512 for all modes. For the proposed method, the number of layers is set to 1 in Hierarchical SVD. The average executing time of each method is taken as its performance. The experimental result is reported in Table 2 and Fig. 3. It is clear that the proposed method has better performance with larger scale parallelism.

5 Conclusion

In this paper, we focus on the problem of high-performance Tucker decomposition on distributed-memory systems. We have proposed a method based on Hierarchical SVD for the problem, which has lower communication cost in large-scale cases compared with the state-of-the-art method. The experiments highlight the proposed method in term of executing time although there is a little accuracy lost.

In the future, we are going to explore how to make full use of the idea of Hierarchical SVD to improve parallel efficiency and reduce the error bound.

References

Barmpoutis, A.: Tensor body: real-time reconstruction of the human body and avatar synthesis from RGB-D. IEEE Trans. Cybern. 43(5), 1347–1356 (2013)

Tao, D., Li, X., Wu, X., Maybank, S.J.: General tensor discriminant analysis and Gabor features for gait recognition. IEEE Trans. Pattern Anal. Mach. Intell. 29(10), 1700–1715 (2007)

Li, X., Lin, S., Yan, S., Xu, D.: Discriminant locally linear embedding with high-order tensor data . IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 38(2), 342–352 (2008)

Signoretto, M., Tran Dinh, Q., De Lathauwer, L., Suykens, J.A.K.: Learning with tensors: a framework based on convex optimization and spectral regularization. Mach. Learn. 94(3), 303–351 (2013). https://doi.org/10.1007/s10994-013-5366-3

Mørup, M., Hansen, L.K., Herrmann, C.S., Parnas, J., Arnfred, S.M.: Parallel factor analysis as an exploratory tool for wavelet transformed event-related EEG. NeuroImage 29(3), 938–947 (2006)

Mørup, M.: Applications of tensor (multiway array) factorizations and decompositions in data mining. Wiley Interdisc. Rev. Data Mining Knowl. Disc. 1(1), 24–40 (2011)

Sun, J., Papadimitriou, S., Lin, C.-Y., Cao, N., Liu, S., Qian, W.: Multivis: content-based social network exploration through multi-way visual analysis. In: Proceedings of the 2009 SIAM International Conference on Data Mining, pp. 1064–1075. SIAM (2009)

Tucker, L.R.: Some mathematical notes on three-mode factor analysis. Psychometrika 31(3), 279–311 (1966)

De Lathauwer, L., De Moor, B., Vandewalle, J.: On the best rank-1 and rank-(R1, R2,…, RN) approximation of high-order tensors. SIAM J. Matrix Anal. Appl. 21(4), 1324–1342 (2000)

Lathauwer, L.D., Moor, B.D., Vandewalle, J.: A multilinear singular value decomposition. SIAM J. Matrix Anal. Appl. 21, 1253–1278 (2000)

Vannieuwenhoven, N., Vandebril, R., Meerbergen, K.: A new truncation strategy for the higher-order singular value decomposition. SIAM J. Sci. Comput. 34(2), A1027–A1052 (2012)

Hadoop information. https://hadoop.apache.org/

Jeon, I., Papalexakis, E.E., Kang, U., Faloutsos, C.: HaTen2: billion-scale tensor decompositions. In: 2015 IEEE 31st International Conference on Data Engineering, Seoul, 2015, pp. 1047–1058. https://doi.org/10.1109/ICDE.2015.7113355

Austin, W., Ballard, G., Kolda, T.G.: Parallel tensor compression for large-scale scientific data. In: 2016 IEEE International Parallel and Distributed Processing Symposium (IPDPS), Chicago, IL, pp. 912–922 (2016). https://doi.org/10.1109/IPDPS.2016.67

Chakaravarthy, V.T., Choi, J.W., Joseph, D.J., Murali, P., Sreedhar, D.: On optimizing distributed tucker decomposition for sparse tensors. In: ICS 2018: Proceedings of the 2018 International Conference on Supercomputing, pp. 374–384 (2018)

Zou, B., Li, C., Tan, L., Chen, H.: GPUTensor: efficient tensor factorization for context-aware recommendations. Inf. Sci. 299(4), 159–177 (2015)

Iwen, M.A., Ong, B.W.: A distributed and incremental SVD algorithm for agglomerative data analysis on large networks. SIAM J. Matrix Anal. Appl. 37(4), 1699–1718 (2016)

Acknowledgements

The work was supported by the National Key Research and Development Project of China (Grant No. 2019YFB2102500).

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper

Fang, Z., Qi, F., Dong, Y., Zhang, Y., Feng, S. (2021). Distributed Dense Tucker Decomposition Based on Hierarchical SVD. In: Ning, L., Chau, V., Lau, F. (eds) Parallel Architectures, Algorithms and Programming. PAAP 2020. Communications in Computer and Information Science, vol 1362. Springer, Singapore. https://doi.org/10.1007/978-981-16-0010-4_31

Download citation

DOI: https://doi.org/10.1007/978-981-16-0010-4_31

Published:

Publisher Name: Springer, Singapore

Print ISBN: 978-981-16-0009-8

Online ISBN: 978-981-16-0010-4

eBook Packages: Computer ScienceComputer Science (R0)