Abstract

This paper addresses the optimal deconvolution estimation problem for measurement-delay systems over a network subject to random packet dropouts, which are modeled by independent and identically distributed (i.i.d.) Bernoulli processes. First, the optimal state estimator is derived in the linear minimum mean square error (LMMSE) sense using the reorganized innovation analysis approach. Then, the noise estimator is obtained from the state estimator and the projection formula. Finally, a numerical example demonstrates the effectiveness of the proposed estimation approach.

1 Introduction

Recently, deconvolution estimation for linear systems has attracted much attention owing to its wide applications in image processing [1], oil exploration [2, 3] and other fields. Early work on deconvolution can be traced back to [2], which studies white noise estimation via the Kalman filter approach. Another approach, based on modern time series analysis, is presented in [4] and provides both input and measurement white noise estimators. However, communication networks are often unreliable and may introduce time delays and packet dropouts, and many results on these issues have been reported [5–17]. For time-delay problems, the state augmentation approach is applied in [5, 6], while [7] handles the delay with a partial difference Riccati equation approach.

The pioneering work on Kalman filtering with packet losses dates back to [8], where the statistics of an unobserved Bernoulli process are used to describe the observation uncertainty and to derive the estimator. In [9], the sensor measurements are encoded together and sent over the network in a single packet, so the Kalman filter receives either the complete observation (if the packet arrives) or none of it (if the packet is lost). In [10], the measurements are transmitted to the filter via two communication channels, whereas [11] allows the measurements of a networked filtering system to be sent through different communication channels; [11] is therefore more general than [10]. Results on multiple packet dropouts can be found in [12–17].

For nonlinear stochastic systems with multi-step transmission delays, multiple packet dropouts and correlated noises, recursive estimators are derived in [15] by the innovation analysis approach, with the noises assumed to be one-step autocorrelated and cross-correlated. In [16], multiple packet dropouts are modeled as random and described by a binary switching sequence obeying a conditional probability distribution, and the recursive estimators are obtained by an innovation analysis method and the orthogonal projection theorem.

In the works above, time delays are handled by the partial difference Riccati equation approach or the state augmentation approach, both of which may require a large amount of computation when the delay \(d_{l-1}\) is large. Moreover, packet dropouts are usually described by a scalar i.i.d. Bernoulli process, so all components of a measurement are either received or lost together, which is often unrealistic in practice. Motivated by these observations, this paper investigates deconvolution estimation for discrete-time systems with measurement delays and packet dropouts. The state estimator is first obtained from the projection formula and the reorganized innovation analysis approach, and the white noise estimator is then derived from it. The main contributions are as follows: (i) the packet dropouts are described by a diagonal matrix whose diagonal entries are mutually independent Bernoulli variables, each i.i.d. over time, and a closed-form solution is obtained by exploiting the Hadamard product; (ii) using the reorganized innovation analysis approach, the optimal state estimator and noise estimator are derived by solving l Riccati difference equations and one Lyapunov difference equation. When the delay \(d_{l-1}\) is large, the proposed approach is more efficient than the classical augmentation approach [5, 6] and the partial difference Riccati equation approach [7].

The rest of this paper is organized as follows. Section 2 formulates the problem and states the assumptions and remarks. In Sect. 3, the optimal state estimator is derived by the reorganized innovation analysis approach, and the white noise estimator is then obtained from the optimal state estimator and the projection formula. A numerical example illustrating the effectiveness of the approach is given in Sect. 4, and conclusions are drawn in Sect. 5.

Notation: Throughout this paper, the superscripts “\(-1\)” and “T” denote the inverse and the transpose of a matrix. \(\mathcal {R}^{n}\) denotes the n-dimensional Euclidean space, and \(\mathcal {R}^{n\times m}\) the linear space of all \(n\times m\) real matrices. The linear space spanned by the measurement sequence \(\{y(0), \ldots , y(k)\}\) is denoted by \(\mathcal {L}\{y(s)\}_{s=0}^{k}\). \(\odot \) denotes the Hadamard product. \(diag\{\lambda _{1},\ldots , \lambda _{n}\}\) denotes the diagonal matrix with diagonal elements \(\lambda _{1},\ldots , \lambda _{n}\). E denotes the mathematical expectation.

2 Problem Statement and Preliminaries

Consider the following linear system

where \({x}(k)\in \mathcal {R}^{n}\) is the unknown state and \({y}_{i}(k)\in \mathcal {R}^{m_{i}}\) is the measurement with delay \(d_{i}\). n(k) and \({v}_{i}(k)\) are zero-mean white Gaussian noises with covariances \(E \{{n}(k) {n}^T (j)\} ={Q}\delta _{k,j}\) and \(E\{{v}_{i}(k){v}^T_{i} (j)\} ={R_{i}}\delta _{k,j}\), respectively, where \(\delta _{k,j}\) is the Kronecker delta. Packet dropouts in the \(m_{i}\) channels of the i-th measurement are described by mutually independent Bernoulli random variables \(\xi _{ij}(k)\), \(j=1,\ldots ,m_{i}\), each i.i.d. over time, with \(Pr\{\xi _{ij}(k)= 1\} =\alpha _{ij}\) and \(Pr\{\xi _{ij}(k)= 0\} =1-\alpha _{ij}\); they form the diagonal dropout matrix \(\xi _{i}(k)=diag\{\xi _{i1}(k),\ldots ,\xi _{im_{i}}(k)\}\). The delays satisfy \(0=d_{0}<d_{1}<\cdots < d_{l-1}\). The initial state x(0) is a random vector with zero mean and covariance matrix D(0). The processes n(k), \(v_{i}(k)\), \(\xi _{i}(k)\) and the initial state x(0) are mutually uncorrelated.
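The measurement model can be simulated directly. The sketch below assumes, for illustration, that (1)–(2) take the common form \(x(k+1)=Ax(k)+n(k)\) and \(y_{i}(k)=\xi _{i}(k)B_{i}x(k-d_{i})+v_{i}(k)\); the function `simulate` and the matrices \(A\), \(B_{0}\), \(B_{1}\) in the usage lines are hypothetical. Because \(\xi _{i}(k)\) is diagonal, \(\xi _{i}(k)B_{i}x\) is simply the elementwise product of the 0/1 arrival vector with \(B_{i}x\).

```python
import numpy as np

def simulate(A, Q, Bs, Rs, alphas, ds, D0, N, rng):
    """Simulate x(k+1) = A x(k) + n(k) and y_i(k) = xi_i(k) B_i x(k - d_i) + v_i(k)
    (assumed form of (1)-(2)).

    Bs, Rs, alphas, ds are indexed by measurement i = 0..l-1; alphas[i] holds
    alpha_i1..alpha_im_i. y_i(k) exists only for k >= d_i (None before that).
    """
    n = A.shape[0]
    x = np.zeros((N + 1, n))
    x[0] = rng.multivariate_normal(np.zeros(n), D0)
    for k in range(N):
        x[k + 1] = A @ x[k] + rng.multivariate_normal(np.zeros(n), Q)
    ys, xis = [], []
    for B, R, alpha, d in zip(Bs, Rs, alphas, ds):
        m = B.shape[0]
        y = [None] * (N + 1)
        xi = np.zeros((N + 1, m))
        for k in range(d, N + 1):
            # one independent Bernoulli arrival variable per scalar channel
            xi[k] = rng.random(m) < np.asarray(alpha)
            v = rng.multivariate_normal(np.zeros(m), R)
            y[k] = xi[k] * (B @ x[k - d]) + v   # diag(xi) B x == xi * (B x)
        ys.append(y)
        xis.append(xi)
    return x, ys, xis

rng = np.random.default_rng(0)
A = np.array([[0.9, 0.1], [0.0, 0.8]])   # hypothetical system matrix
B0 = B1 = np.eye(2)                      # hypothetical measurement matrices
x, ys, xis = simulate(A, np.array([[1.0, 1.0], [1.0, 1.0]]), [B0, B1],
                      [np.eye(2), np.eye(2)], [[0.85, 0.73], [0.79, 0.89]],
                      [0, 15], np.array([[1.0, -1.0], [-1.0, 1.0]]), 100, rng)
```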

Let

Problem

For the given measurements \(\{y(k)\}^{N}_{k=0}\), find an LMMSE estimator \(\hat{x}(k\mid k)\) of x(k) and an LMMSE estimator \(\hat{n}(k\mid k+T)\) of n(k) such that

is minimized. Here \(T = 0\) corresponds to the filter, \(T >0\) to the smoother and \(T <0\) to the predictor.

Remark 1

From the distribution of \(\xi _{ij}(k)\), it is easy to deduce that \(E\{\xi _{ij}(k)\}=\alpha _{ij}\), \(E\{(\xi _{ij}(k)-\alpha _{ij})^{2}\}=\alpha _{ij}(1 -\alpha _{ij})\), \(E\{\xi _{ij}(k)(1-\xi _{ij}(k))\}=0\), \(E\{[\xi _{ij}(k)-\alpha _{ij}][\xi _{il}(s)-\alpha _{il}]\}=\alpha _{ij}(1-\alpha _{ij})\delta _{k,s}\delta _{j,l}\), and \(E\{\xi _{ij}(k)\xi _{il}(s)\}=\alpha _{ij}\alpha _{il}\) for \(k\ne s\) or \(j\ne l\).
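The moments in Remark 1 are easy to check by Monte Carlo. This minimal sketch uses the arrival probabilities of \(\phi _{0}\) from Sect. 4; `bernoulli_moments` is a hypothetical helper. The empirical covariance approaches \(diag\{\alpha _{ij}(1-\alpha _{ij})\}\), with vanishing off-diagonal entries because distinct channels are independent (the \(\delta _{j,l}\) factor), and \(\xi (1-\xi )\) is identically zero.

```python
import numpy as np

def bernoulli_moments(alpha, num_samples, rng):
    """Empirical moments of independent channels with Pr{xi_j = 1} = alpha[j]."""
    alpha = np.asarray(alpha, dtype=float)
    xi = (rng.random((num_samples, alpha.size)) < alpha).astype(float)
    centered = xi - alpha
    return {
        "mean": xi.mean(axis=0),                             # -> alpha_j
        "cov": centered.T @ centered / num_samples,          # -> diag{alpha_j (1 - alpha_j)}
        "xi_times_1_minus_xi": (xi * (1 - xi)).mean(axis=0), # exactly 0 for 0/1 variables
    }

rng = np.random.default_rng(0)
alpha = [0.85, 0.73]
m = bernoulli_moments(alpha, 200_000, rng)
print(np.round(m["mean"], 3))
print(np.round(m["cov"], 3))
```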

Remark 2

In the previous references, packet dropouts are usually described by a scalar i.i.d. Bernoulli process. In this paper, they are described by a diagonal matrix of independent Bernoulli variables, which is more realistic because each channel can lose its data independently. For time-delay systems, the state augmentation approach can be used to solve the optimal state estimation and white noise estimation problems, but it may bring a tremendous computational burden when the delay \(d_{l-1}\) is large. Therefore, this paper solves the deconvolution estimation problem with the reorganized innovation analysis approach to avoid this burden.
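The diagonal dropout model stays tractable because of a standard Hadamard-product identity of the kind collected in [18]: for diagonal \(\sigma \), \(\rho \) and any \(n\times m\) matrix A, \(\sigma A\rho =(\bar{\sigma }\bar{\rho }^{T})\odot A\) with \(\bar{\sigma }=[\sigma _{1},\ldots ,\sigma _{n}]^{T}\) and \(\bar{\rho }=[\rho _{1},\ldots ,\rho _{m}]^{T}\), so that \(E\{\sigma A\rho \}=E\{\bar{\sigma }\bar{\rho }^{T}\}\odot A\). We assume this is the type of statement used as Lemma 1 below. The sketch checks the identity and its consequence for the centred dropout matrix \(\Delta =diag\{\xi _{j}-\alpha _{j}\}\) with independent channels, \(E\{\Delta M\Delta \}=diag\{\alpha _{j}(1-\alpha _{j})\}\odot M\), which is what keeps the innovation covariance in closed form; the matrix M is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 3, 4
A = rng.standard_normal((n, m))
sigma = rng.standard_normal(n)
rho = rng.standard_normal(m)

# Deterministic identity: diag(sigma) A diag(rho) == (sigma rho^T) ⊙ A
lhs = np.diag(sigma) @ A @ np.diag(rho)
rhs = np.outer(sigma, rho) * A          # '*' is the elementwise (Hadamard) product
assert np.allclose(lhs, rhs)

# Expectation version for Delta = diag(xi - alpha) with independent channels
alpha = np.array([0.85, 0.73, 0.79])
M = np.array([[2.0, 0.5, 0.1], [0.5, 1.0, 0.3], [0.1, 0.3, 1.5]])
samples = 200_000
xi = (rng.random((samples, 3)) < alpha).astype(float)
delta = xi - alpha
# Monte Carlo estimate of E{Delta M Delta}: entry (j, k) averages delta_j * M_jk * delta_k
mc = np.einsum("sj,jk,sk->jk", delta, M, delta) / samples
exact = np.diag(alpha * (1 - alpha)) * M
print(np.round(mc, 3))
print(np.round(exact, 3))
```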

3 Main Results

In this section, we present analytical solutions to the optimal state estimation and white noise estimation problems based on the projection formula.

Lemma 1

([18]) Let \(\sigma = diag\{\sigma _{1},\ldots , \sigma _{n} \}\) and \(\rho = diag\{\rho _{1},\ldots ,\rho _{m}\}\) be two diagonal stochastic matrices, and A be any \(n \times m\) matrix. Then

3.1 Design of the Optimal State Estimator

We first design the optimal state estimator, which then leads to the optimal white noise estimator. Note that \(y_{i}(k)\) is an additional measurement of the state \(x(k-d_{i})\) obtained at time instant k with delay \(d_{i}\), so y(k) contains delayed information when \(k\ge d_{i}\). Following [19], the linear space \(\mathcal {L}\{y(s)\}_{s=0}^{k}\) contains the same information as

where the new observations

Obviously, \(Y_{0}(s)\), \(Y_{1}(s)\),..., \(Y_{l-1}(s)\) satisfy

where \(H_{i}=diag\{\xi _{0}(s),\xi _{1}(s+d_{1}),\ldots , \xi _{i}(s+d_{i})\}\bar{B}_{i}\), and

Clearly, the new measurements \(Y_{0}(s)\), \(Y_{1}(s), \ldots , Y_{i}(s)\) are delay-free, and the associated measurement noises \(V_{0}(s)\), \(V_{1}(s), \ldots , V_{i}(s)\) are zero-mean white noises with covariance matrices \(R_{V_{0}(s)}=R_{0}\), \(R_{V_{1}(s)}=diag\{R_{0},R_{1}\}, \ldots , R_{V_{i}(s)}=diag\{R_{0},R_{1},\ldots ,R_{i}\}\). The filter \(\hat{x}(k\mid k)\) is the projection of x(k) onto the linear space of

In order to compute the projection, we define the innovation sequence as follows:

where \(\hat{Y}(s,i)\) is the projection of \(Y_{i}(s)\) onto the linear space of

From (8)–(9), the innovation sequence is given by

where \(\phi _{i}=diag\{\alpha _{i1},\alpha _{i2},\ldots ,\alpha _{im_{i}}\}\), \(\tilde{x}(s,i)=x(s)-\hat{x}(s,i)\), and \(\hat{x}(s,i)\) is defined in the same way as \(\hat{Y}(s,i)\). The innovations \(\varepsilon (s,0)\), \(\varepsilon (s,1), \ldots , \varepsilon (s,l-1)\) of the reorganized sequence are mutually uncorrelated white noises. For convenience, we define

Based on (10) and Lemma 1, the reorganized innovation covariance matrices are calculated by

where

Next, we derive the covariance matrices of the one-step-ahead prediction errors as follows.

Lemma 2

The covariance matrices \(P_{i}(s+1)\) satisfy the following Riccati difference equations:

where \(R_{\varepsilon (s,i)}\) is given in (11), and \(D(s+1)\) is computed by

with initial value D(0).

Proof

Equation (15) follows readily from (1). By the projection formula, \(\hat{x}(s+1,i)\) is computed as

where \(K_{p}(s,i)=AP_{i}(s)\bar{B}_{i}^{T}diag\{\phi _{0}, \phi _{1}, \ldots ,\phi _{i}\}R_{\varepsilon (s,i)}^{-1}\). From (1) and (16), we have

Therefore, the prediction error covariance is obtained by

which is (12). By the definition of \(\hat{x}(s,i)\), we have \(\hat{x}(k-d_{i+1}+1,i)=\hat{x}(k-d_{i+1}+1,i+1)\). The proof is complete. \(\nabla \)
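The recursions of Lemma 2 can be sketched for a single level i. Assuming, for illustration, \(x(k+1)=Ax(k)+n(k)\) and \(y_{i}(k)=\xi _{i}(k)B_{i}x(k-d_{i})+v_{i}(k)\), the innovation \(\varepsilon (s,i)=Y_{i}(s)-\Phi _{i}\bar{B}_{i}\hat{x}(s,i)\) with \(\Phi _{i}=diag\{\phi _{0},\ldots ,\phi _{i}\}\) has covariance \(R_{\varepsilon (s,i)}=\Phi _{i}\bar{B}_{i}P_{i}(s)\bar{B}_{i}^{T}\Phi _{i}+[\Phi _{i}(I-\Phi _{i})]\odot (\bar{B}_{i}D(s)\bar{B}_{i}^{T})+R_{V_{i}(s)}\), and, with the gain \(K_{p}(s,i)\) from the proof, \(P_{i}(s+1)=AP_{i}(s)A^{T}-K_{p}(s,i)R_{\varepsilon (s,i)}K_{p}^{T}(s,i)+Q\) and \(D(s+1)=AD(s)A^{T}+Q\). These forms are derived under that assumption and may differ in presentation from (11), (12) and (15); `riccati_step` and the numerical values are hypothetical.

```python
import numpy as np

def riccati_step(A, Q, P, D, B_bar, phi_bar, R_V):
    """One step of the Lemma 2 recursions for a single level i (assumed model).

    P       : P_i(s), one-step prediction error covariance
    D       : D(s) = E{x(s) x(s)^T}
    B_bar   : stacked measurement matrix [B_0; ...; B_i]
    phi_bar : arrival probabilities on the diagonal of diag{phi_0, ..., phi_i}
    R_V     : diag{R_0, ..., R_i}
    Returns P_i(s+1), D(s+1), K_p(s,i) and R_eps(s,i).
    """
    Phi = np.diag(phi_bar)
    # Dropout term via the Hadamard identity: diag{alpha(1-alpha)} ⊙ (B D B^T)
    dropout = np.diag(phi_bar * (1.0 - phi_bar)) * (B_bar @ D @ B_bar.T)
    R_eps = Phi @ B_bar @ P @ B_bar.T @ Phi + dropout + R_V
    # K_p = A P B^T Phi R_eps^{-1}, computed with a linear solve instead of an inverse
    K = np.linalg.solve(R_eps.T, (A @ P @ B_bar.T @ Phi).T).T
    P_next = A @ P @ A.T - K @ R_eps @ K.T + Q
    P_next = 0.5 * (P_next + P_next.T)      # remove round-off asymmetry
    D_next = A @ D @ A.T + Q                # Lyapunov recursion for E{x x^T}
    return P_next, D_next, K, R_eps

A = np.array([[0.9, 0.1], [0.0, 0.8]])      # hypothetical system matrix
Q = np.array([[1.0, 1.0], [1.0, 1.0]])
B_bar = np.vstack([np.eye(2), np.eye(2)])  # hypothetical B_0 = B_1 = I
phi_bar = np.array([0.85, 0.73, 0.79, 0.89])
P = D = np.array([[1.0, -1.0], [-1.0, 1.0]])
for s in range(10):
    P, D, K, R_eps = riccati_step(A, Q, P, D, B_bar, phi_bar, np.eye(4))
print(np.round(P, 4))
```

Since \(D(s)-P_{i}(s)\) propagates as \(A(D-P)A^{T}+K_{p}R_{\varepsilon }K_{p}^{T}\), the prediction error covariance never exceeds the state covariance, which gives a quick sanity check on an implementation.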

We now present the filter, obtained from the projection formula in Hilbert space.

Theorem 1

For the system (1)–(2), the filter \(\hat{x}(k\mid k)\) is computed by

where the estimate \(\hat{x}(k,0)\) is computed by

with initial value \(\hat{x}(k-d_{1}+1,0)=\hat{x}(k-d_{1}+1,1)\). For \(i=1,\ldots ,l-2\), \(\hat{x}(k-d_{i}+1,i)\) is computed by

with initial value \(\hat{x}(k-d_{i+1}+1,i)=\hat{x}(k-d_{i+1}+1,i+1)\). Finally, \(\hat{x}(k-d_{l-1}+1,l-1)\) is computed by

with initial value \(\hat{x}(0,l-1)=0\).

Proof

Since \(\hat{x}(k\mid k)\) is the projection of x(k) onto the linear space of

by the projection theorem we have

which proves (18). Equations (19) and (20) follow from (16). The proof is complete. \(\nabla \)
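The filter of Theorem 1 can be sketched for \(l=2\) (one undelayed channel and one channel with delay \(d=d_{1}\ge 1\), as in Sect. 4). Under the illustrative model \(x(k+1)=Ax(k)+n(k)\), \(y_{i}(k)=\xi _{i}(k)B_{i}x(k-d_{i})+v_{i}(k)\), level 1 uses the stacked observation \(Y_{1}(s)=[y_{0}^{T}(s),y_{1}^{T}(s+d)]^{T}\) and level 0 uses \(Y_{0}(s)=y_{0}(s)\); each level runs \(\hat{x}(s+1,i)=A\hat{x}(s,i)+K_{p}(s,i)\varepsilon (s,i)\), level 0 starts from \(\hat{x}(k-d+1,0)=\hat{x}(k-d+1,1)\), and the final step is assumed to be \(\hat{x}(k\mid k)=\hat{x}(k,0)+P_{0}(k)B_{0}^{T}\phi _{0}R_{\varepsilon (k,0)}^{-1}\varepsilon (k,0)\). The function names are hypothetical, and this is a sketch under those assumptions rather than the authors' implementation.

```python
import numpy as np

def _update(A, Q, xhat, P, D, B, phi, R, y):
    """x_hat(s+1) = A x_hat(s) + K_p eps(s) with the assumed Lemma 2 recursions."""
    Phi = np.diag(phi)
    R_eps = Phi @ B @ P @ B.T @ Phi + np.diag(phi * (1 - phi)) * (B @ D @ B.T) + R
    K = A @ P @ B.T @ Phi @ np.linalg.inv(R_eps)
    eps = y - Phi @ B @ xhat                      # innovation eps(s, i)
    return A @ xhat + K @ eps, A @ P @ A.T - K @ R_eps @ K.T + Q, A @ D @ A.T + Q

def reorganized_filter(A, Q, B0, B1, phi0, phi1, R0, R1, d, D0, y0, y1):
    """x_hat(k|k) for k = 0..len(y0)-1, with l = 2 and delay d >= 1.

    y0[k] is the undelayed measurement; y1[k] (k >= d) measures x(k - d).
    """
    n = A.shape[0]
    B_bar = np.vstack([B0, B1])
    phi_bar = np.concatenate([phi0, phi1])
    R_bar = np.block([[R0, np.zeros((R0.shape[0], R1.shape[1]))],
                      [np.zeros((R1.shape[0], R0.shape[1])), R1]])
    # Level-1 quantities x_hat(s,1), P_1(s), D(s), starting at s = 0
    x1, P1, D1 = np.zeros(n), D0.copy(), D0.copy()
    out = []
    for k in range(len(y0)):
        s1 = k - d                                # newest s with Y_1(s) available
        if s1 >= 0:
            Y1 = np.concatenate([y0[s1], y1[s1 + d]])
            x1, P1, D1 = _update(A, Q, x1, P1, D1, B_bar, phi_bar, R_bar, Y1)
        # Level 0 restarts from x_hat(k-d+1, 0) = x_hat(k-d+1, 1) (or from s = 0)
        x0, P0, D = x1, P1, D1
        for s in range(max(s1 + 1, 0), k):
            x0, P0, D = _update(A, Q, x0, P0, D, B0, phi0, R0, y0[s])
        # Filter step with the newest undelayed measurement y0(k)
        Phi0 = np.diag(phi0)
        R_eps = Phi0 @ B0 @ P0 @ B0.T @ Phi0 + np.diag(phi0 * (1 - phi0)) * (B0 @ D @ B0.T) + R0
        gain = P0 @ B0.T @ Phi0 @ np.linalg.inv(R_eps)
        out.append(x0 + gain @ (y0[k] - Phi0 @ B0 @ x0))
    return np.array(out)
```

Level 1 advances one step per time instant while level 0 is re-run over at most d steps, and every covariance recursion stays \(n\times n\). When \(B_{1}=0\) the delayed channel carries no information and the output no longer depends on d, which gives a simple consistency check.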

Remark 3

Using the reorganized innovation approach, the optimal filter is obtained by solving l Riccati difference equations (12) and one Lyapunov difference equation (15), all of dimension \(\mathbf {n\times n}\). Alternatively, if we let

the delayed measurement equation (2) can be converted into a delay-free one, and the LMMSE estimator can then be designed with one Lyapunov equation and one Riccati equation of dimension \(\mathbf {(d_{l-1}+1)n \times (d_{l-1}+1)n}\). Hence, the proposed approach avoids the high-dimensional Riccati equation required by the augmentation approach.
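For comparison, the state augmentation route in Remark 3 can be sketched as follows, again assuming \(x(k+1)=Ax(k)+n(k)\) and \(y_{i}(k)=\xi _{i}(k)B_{i}x(k-d_{i})+v_{i}(k)\). Stacking \(z(k)=[x^{T}(k),x^{T}(k-1),\ldots ,x^{T}(k-d_{l-1})]^{T}\) yields a delay-free model \(z(k+1)=A_{a}z(k)+Gn(k)\), \(y_{i}(k)=\xi _{i}(k)C_{i}z(k)+v_{i}(k)\) whose state has dimension \((d_{l-1}+1)n\); `augment` and the matrices in the usage line are hypothetical.

```python
import numpy as np

def augment(A, B_list, d_list):
    """Delay-free model for z(k) = [x(k); x(k-1); ...; x(k-d)], d = max(d_list).

    Returns A_a, G and C_i with z(k+1) = A_a z(k) + G n(k) and
    y_i(k) = xi_i(k) C_i z(k) + v_i(k)  (assumed form of (1)-(2)).
    """
    n = A.shape[0]
    d = max(d_list)
    dim = (d + 1) * n
    A_a = np.zeros((dim, dim))
    A_a[:n, :n] = A                     # newest block follows x(k+1) = A x(k) + n(k)
    A_a[n:, :-n] = np.eye(d * n)        # shift register: older blocks move down
    G = np.zeros((dim, n))
    G[:n] = np.eye(n)
    C_list = []
    for B, di in zip(B_list, d_list):
        C = np.zeros((B.shape[0], dim))
        C[:, di * n:(di + 1) * n] = B   # y_i(k) reads the block holding x(k - d_i)
        C_list.append(C)
    return A_a, G, C_list

A_a, G, C_list = augment(np.array([[0.9, 0.1], [0.0, 0.8]]), [np.eye(2), np.eye(2)], [0, 15])
print(A_a.shape)  # -> (32, 32)
```

For the example of Sect. 4 (n = 2, d = 15), the augmented Riccati equation works with 32×32 matrices instead of 2×2 ones.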

3.2 Design of the Optimal White Noise Estimator

We derive the optimal white noise estimator \(\hat{n}(s\mid s+T)\) from the innovation sequence \(\varepsilon (0)\), \(\varepsilon (1),\ldots , \varepsilon (s+T)\). When \(T\le 0 \), n(s) is uncorrelated with \(\varepsilon (0)\), \(\varepsilon (1),\ldots , \varepsilon (s+T)\), so \(\hat{n}(s\mid s+T)=0\). When \(T>0\), the optimal input white noise smoother \(\hat{n}(s\mid s+T)\) is defined as

where the gain \(E\{n(s)\varepsilon ^{T}(s+T,i)\}R^{-1}_{\varepsilon (s+T,i)}\) is to be determined such that

is minimized. Using the projection formula, we now compute the optimal recursive input white noise smoother \(\hat{n}(s\mid s+T)\).

Theorem 2

For the system (1)–(2), the optimal recursive input white noise smoother is given by

with initial value \(\hat{n}(s\mid s)=0\), where the smoother gain \(M_{n}(s+T)\) satisfies

The covariance matrix \(P_{n}(s+T)\) is computed by

with initial value \(P_{n}(s)=0\).

Proof

Using the projection formula, we obtain

where the gain \(E\{n(s)\varepsilon ^{T}(s+T,i)\}R^{-1}_{\varepsilon (s+T,i)}\) is to be determined. Note that

where \(\varPsi _{p}(s,i)=A-K_{p}(s,i)diag\{\phi _{0},\phi _{1},\ldots ,\phi _{i}\}\bar{B}_{i}\). From (28), \(\tilde{x}(s+T,i)\) is given by

where,

Substituting (28) and (29) into (27), we have

From the above equations, it follows that

Since \(R_{\varepsilon (s+T, i)}\) is invertible, we define

Next, we derive the expression of \(P_{n}(s+T)\). From (24), \(\tilde{n}(s\mid s+T)\) is given by

which yields (25). The proof is complete. \(\nabla \)
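The structure of the smoother in Theorem 2 can be sketched for one fixed reorganized level, i.e., when the same level is used for all innovations \(\varepsilon (s+1),\ldots ,\varepsilon (s+T)\). Under the illustrative model \(x(k+1)=Ax(k)+n(k)\), n(s) first enters the prediction error through \(E\{n(s)\tilde{x}^{T}(s+1)\}=Q\), and since \(\tilde{x}(s+t+1)=\varPsi _{p}(s+t)\tilde{x}(s+t)\) plus terms uncorrelated with n(s), \(E\{n(s)\tilde{x}^{T}(s+t)\}=Q\varPsi _{p}^{T}(s+1)\cdots \varPsi _{p}^{T}(s+t-1)\); the gain is then \(M_{n}(s+t)=E\{n(s)\tilde{x}^{T}(s+t)\}\bar{B}^{T}\Phi R_{\varepsilon (s+t)}^{-1}\). This is our reconstruction under that assumption, not necessarily the exact form of (24)–(26); `noise_smoother` and its argument layout are hypothetical.

```python
import numpy as np

def noise_smoother(Q, Psi, B_bar, phi_bar, R_eps, eps):
    """n_hat(s|s+T) = sum_{t=1..T} M_n(s+t) eps(s+t) for one fixed level (sketch).

    Psi[t], R_eps[t] and eps[t] refer to time s+t for t = 1..T; index 0 is unused.
    Returns the estimate, the gains M_n(s+t) and the smoother error covariance.
    """
    T = len(eps) - 1
    Phi = np.diag(phi_bar)
    C = Q.copy()              # C_t = E{n(s) x_tilde(s+t)^T}; C_1 = Q
    n_hat = np.zeros(Q.shape[0])
    P_err = Q.copy()          # error covariance of n_hat(s|s) = 0
    gains = []
    for t in range(1, T + 1):
        if t > 1:
            C = C @ Psi[t - 1].T                         # C_t = C_{t-1} Psi_p(s+t-1)^T
        M = C @ B_bar.T @ Phi @ np.linalg.inv(R_eps[t])  # M_n(s+t)
        n_hat = n_hat + M @ eps[t]
        P_err = P_err - M @ R_eps[t] @ M.T               # innovations are mutually uncorrelated
        gains.append(M)
    return n_hat, gains, P_err
```

Each additional innovation can only reduce the error covariance, so \(Q-P_{err}\) stays positive semidefinite as T grows.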

Remark 4

Using the projection formula and the optimal state estimator in Theorem 1, the smoother in Theorem 2 follows directly. The results of this paper concern the state estimator and white noise estimator over a finite horizon; the infinite-horizon case remains open. In future work, we will study the state estimator and white noise estimator over an infinite horizon under the condition that A is a stable matrix.

4 Numerical Example

Consider the following linear measurement-delay system

with

\(x(0)=\left[ \begin{array}{c} -1\\ 1 \end{array}\right] ,\) \(\hat{x}(0,1)=\left[ \begin{array}{c} 0 \\ 0 \end{array}\right] \), \(D(0)=P(0)=\left[ \begin{array}{cc} 1 &{}-1\\ -1&{}1 \end{array}\right] \), \(\phi _{0}=diag\{0.85,0.73\}, \) \(\phi _{1}=diag\{0.79,0.89\} \), \(R_{0}=R_{1}=I_{2\times 2}\), \(Q=\left[ \begin{array}{cc} 1&{}1\\ 1&{}1\end{array}\right] \). n(k), \(v_{0}(k)\) and \(v_{1}(k)\) are white noises with zero mean and covariances Q, \(R_{0}\) and \(R_{1}\), respectively. We adopt d = 15, N = 100 in this simulation.
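The covariance data of the example can be checked quickly. Both D(0) and Q are positive semidefinite with eigenvalues 0 and 2, i.e., of rank one: \(Q=[1,1]^{T}[1,1]\), so n(k) acts only along \([1,1]^{T}\), and \(D(0)=[1,-1]^{T}[1,-1]\), whose range contains the given \(x(0)=[-1,1]^{T}\). The per-channel variances \(\alpha (1-\alpha )\) that enter the innovation covariance are 0.1275 and 0.1971 for \(\phi _{0}\), and 0.1659 and 0.0979 for \(\phi _{1}\). This minimal sketch covers only the parameters listed above.

```python
import numpy as np

# Parameters of the numerical example in Sect. 4
D0 = np.array([[1.0, -1.0], [-1.0, 1.0]])
Q = np.array([[1.0, 1.0], [1.0, 1.0]])
phi0 = np.array([0.85, 0.73])
phi1 = np.array([0.79, 0.89])

for name, M in (("D(0)", D0), ("Q", Q)):
    print(name, "eigenvalues:", np.linalg.eigvalsh(M), "rank:", np.linalg.matrix_rank(M))

# Per-channel dropout variances alpha (1 - alpha)
print("phi_0 variances:", phi0 * (1 - phi0))
print("phi_1 variances:", phi1 * (1 - phi1))
```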

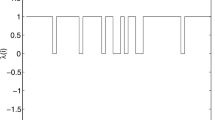

First, using Theorem 1, we obtain the simulation results in Figs. 1 and 2, which show that the filter using the additional delayed measurement channel (red line) tracks the true state x(k) better than the filter without it (green line). This indicates that the information carried by the delayed measurement channel is also important.

Next, we consider the influence of different packet dropout rates:

The results are shown in Figs. 3 and 4, where the filter for case 1 is represented by the green line and the filter for case 2 by the red line. Figures 3 and 4 show that the filter for case 2 tracks the true state better, because it is obtained with more information than the filter for case 1.

5 Conclusion

In this paper, we have investigated LMMSE state estimation and noise estimation for discrete-time systems with time delays and random packet losses, where the losses are modeled by i.i.d. Bernoulli processes. First, the optimal state estimator has been designed using the reorganized innovation analysis approach; it is obtained by solving l Riccati difference equations and one Lyapunov difference equation. Next, the optimal input white noise estimator has been derived from the innovation analysis approach and this state estimator. Finally, a numerical example has illustrated the effectiveness of the proposed approach. The present results are restricted to the finite horizon; in future work, we will address the state estimator and noise estimator over an infinite horizon under the condition that A is a stable matrix.

References

[1] Sadreazami H, Ahmad MO, Swamy MNS (2015) A robust multiplicative watermark detector for color images in sparse domain. IEEE Trans Circuits Syst II: Express Briefs 62(12):1159–1163

[2] Mendel JM (1977) White-noise estimators for seismic data processing in oil exploration. IEEE Trans Autom Control 22(5):694–706

[3] Mendel JM (1981) Minimum-variance deconvolution. IEEE Trans Geosci Remote Sens 19(3):161–171

[4] Deng Z, Zhang H, Liu S, Zhou L (1996) Optimal and self-tuning white noise estimators with application to deconvolution and filtering problem. Automatica 32(2):199–216

[5] Xiao L, Hassibi A, How JP (2000) Control with random communication delays via a discrete-time jump system approach. In: Proceedings of the American Control Conference, pp 2199–2204

[6] Wu X, Song X, Yan X (2015) Optimal estimation problem for discrete-time systems with multi-channel multiplicative noise. Int J Innov Comput Inf Control 11(6):1881–1895

[7] Song IY, Kim DY, Shin V, Jeon M (2012) Receding horizon filtering for discrete-time linear systems with state and observation delays. IET Radar Sonar Navig 6(4):263–271

[8] Nahi NE (1969) Optimal recursive estimation with uncertain observation. IEEE Trans Inf Theory 15(4):457–462

[9] Sinopoli B, Schenato L, Franceschetti M, Poolla K, Jordan M, Sastry S (2004) Kalman filtering with intermittent observations. IEEE Trans Autom Control 49(9):1453–1464

[10] Liu X, Goldsmith A (2004) Kalman filtering with partial observation losses. In: Proceedings of the 43rd IEEE Conference on Decision and Control, pp 4180–4186

[11] Gao S, Chen P (2014) Suboptimal filtering of networked discrete-time systems with random observation losses. Mathematical Problems in Engineering

[12] Zhang H, Song X, Shi L (2012) Convergence and mean square stability of suboptimal estimator for systems with measurement packet dropping. IEEE Trans Autom Control 57(5):1248–1253

[13] Han C, Wang W (2013) Deconvolution estimation of systems with packet dropouts. In: Proceedings of the 25th Chinese Control and Decision Conference, pp 4588–4593

[14] Sun S (2013) Optimal linear filters for discrete-time systems with randomly delayed and lost measurements with/without time stamps. IEEE Trans Autom Control 58(6):1551–1556

[15] Wang S, Fang H, Tian X (2015) Recursive estimation for nonlinear stochastic systems with multi-step transmission delay, multiple packet dropouts and correlated noises. Signal Process 115:164–175

[16] Feng J, Wang T, Guo J (2014) Recursive estimation for descriptor systems with multiple packet dropouts and correlated noises. Aerosp Sci Technol 32(1):200–211

[17] Li F, Zhou J, Wu D (2013) Optimal filtering for systems with finite-step autocorrelated noises and multiple packet dropouts. Aerosp Sci Technol 24(1):255–263

[18] Horn RA, Johnson CR (1991) Topics in Matrix Analysis. Cambridge University Press, New York

[19] Zhang H, Xie L, Zhang D, Soh YC (2004) A reorganized innovation approach to linear estimation. IEEE Trans Autom Control 49(10):1810–1814

Acknowledgments

This work is supported in part by the Shandong Provincial Key Laboratory for Novel Distributed Computer Software Technology, the Excellent Young Scholars Research Fund of Shandong Normal University, and the National Natural Science Foundation of China (61304013).

Copyright information

© 2016 Springer Science+Business Media Singapore

About this paper

Cite this paper

Duan, Z., Song, X., Yan, X. (2016). Deconvolution Estimation Problem for Measurement-Delay Systems with Packet Dropping. In: Jia, Y., Du, J., Zhang, W., Li, H. (eds) Proceedings of 2016 Chinese Intelligent Systems Conference. CISC 2016. Lecture Notes in Electrical Engineering, vol 404. Springer, Singapore. https://doi.org/10.1007/978-981-10-2338-5_32

DOI: https://doi.org/10.1007/978-981-10-2338-5_32

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-2337-8

Online ISBN: 978-981-10-2338-5

eBook Packages: Computer Science, Computer Science (R0)