Abstract

In this paper, the state estimation problem is investigated for discrete-time output-coupled complex networks with Markovian packet losses. Unlike most existing work on state estimation, which models packet dropout as a Bernoulli process, a Markov chain is used here to describe the random packet losses. Using Lyapunov functional theory and stochastic analysis, the estimator gains are characterized explicitly as the solution of certain linear matrix inequalities (LMIs). Finally, simulations illustrate the applicability of the proposed estimator design scheme.

This work is supported by the National Natural Science Foundation (NNSF) of China under Grants 61374180 and 61573194.

1 Introduction

Recently, increasing interest has been attracted to complex networks owing to successful research results in practical fields such as biology, the physical sciences, the social sciences and engineering [1]. Since the small-world and scale-free properties of complex networks were reported [2, 3], considerable effort has been devoted to investigating the dynamical behaviour of complex networks in several domains, mainly synchronization, state estimation, fault diagnosis and topology identification.

With the emergence of large-scale networks, it is common that only partial information about the nodes is accessible through the network outputs [4, 5]. It is therefore imperative to estimate the unknown node states via an effective state estimator. Many results have been obtained on state estimation of complex networks [6,7,8,9]. For instance, state estimation of complex neural networks with time delays was discussed in [6]. Moreover, state estimation of complex networks over a noisy transmission channel was studied in [8], and state estimation for complex networks with randomly occurring delays was investigated in [9].

In practice, transmission congestion is likely to cause packet dropout on network links, which affects the performance of complex networks. Some research has addressed Bernoulli packet dropout in complex networks [10,11,12]. Robust filtering for complex networks with Bernoulli packet dropout was studied in [10]. Similarly, the synchronization of complex networks with Bernoulli packet losses was investigated in [11]. In addition, state estimation for complex networks with stochastic packet dropout described by a Bernoulli random variable was studied in [12].

In practical networked systems, especially wireless communication networks, random packet dropout is often time-correlated and is modelled as a Markov process. That is, a Markovian packet-loss model exploits the temporal correlation of channel conditions during transmission. Accordingly, some results exist on Markovian packet dropout in networked systems [13,14,15,16]. The minimum data rate for mean-square stability with Markovian packet losses was studied in [15], and the stabilization of uncertain systems with random packet losses described by a Markov chain was investigated in [16]. However, research on Markovian packet losses in state estimation of complex dynamical networks is relatively scarce.

In this paper, we focus on state estimation for discrete-time complex networks with Markovian packet losses, where the transition probabilities are known. The network is output coupled, which economizes on channel resources. A state estimator is designed to ensure the stability of the estimation error. By employing the Lyapunov stability approach together with stochastic analysis, we derive sufficient criteria in the form of LMIs.

The rest of this paper is organized as follows. In Sect. 2, an output-coupled complex network with Markovian packet losses and the corresponding state estimator are presented. In Sect. 3, a sufficient criterion is derived in terms of LMIs and the desired estimator gain matrix is obtained. In Sect. 4, illustrative simulations are provided to demonstrate the applicability of the results. Finally, conclusions are drawn in Sect. 5.

2 Problem Formulation

We consider the following discrete-time complex network consisting of \( N \) coupled nonlinear nodes:

where \( x_{i} (k) = (x_{i1} (k),x_{i2} (k), \ldots ,x_{in} (k))^{T} \in R^{n} \) denotes the state vector of the \( i^{th} \) node, \( y_{i} (k) \in R^{m} \) is the output vector of the \( i^{th} \) node, \( A_{i} \in R^{n \times n} \) denotes a constant matrix, \( f( \cdot ):R^{n} \to R^{n} \) represents a nonlinear function with \( f(0) \equiv 0 \), and \( W = (w_{ij} )_{N \times N} \) is the coupling configuration matrix, which describes the topological structure of the network. If there is a connection from node \( i \) to node \( j(j \ne i) \), then \( w_{ij} = 1 \); otherwise \( w_{ij} = 0 \). As usual, the diagonal entries of \( W \) satisfy \( w_{ii} = - \sum\limits_{j = 1,j \ne i}^{N} {w_{ij} } \). \( \Gamma \in R^{n \times n} \) is the inner coupling matrix, and \( C_{i} \in R^{m \times n} \) stands for the output matrix.

In practice, it is difficult to access the states of a complex network completely. To obtain the state variables of network (1), the output \( y_{i} (k) \) is transmitted to the observer network. Data loss such as packet dropout may occur during transmission. It is therefore of great value to use the accessible output information to estimate the unknown node states of network (1) in spite of packet losses.

In this paper, the network measurements from the transmission channel are of the following form:

where \( \bar{y}_{i} (k) \in R^{m} \) is the actual measured output. The random variable \( r_{i} (k) \in \{ 0,1\} \) indicates the state of the packet at time \( k \): if \( r_{i} (k) = 0 \), the packet is lost; otherwise it is received successfully. The packet process in the transmission channel is modelled as a Markov chain with two states, reception and loss. The transition probability matrix of the Markov chain is defined by

where \( \zeta = \{ 0,1\} \) is the state space of the Markov chain, \( p \) is the failure probability when the previous packet was received, and \( q \) is the recovery probability from the loss state. To make the process \( \{ r_{i} (k)\} \) ergodic, we assume that \( p,q \in (0,1) \). Without loss of generality, the initial packet is assumed to be received successfully, that is, \( r_{i} (0) = 1 \).
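The two-state loss model can be simulated directly. The following is a minimal sketch, assuming \( p \) is the probability of moving from reception to loss and \( q \) the probability of moving from loss to reception, as described above. The function names `simulate_packet_chain` and `stationary_reception_probability` and the numerical values are illustrative and do not come from the paper.

```python
import random


def simulate_packet_chain(p, q, steps, rng):
    """Sample r(0), ..., r(steps-1) of a two-state Markov packet-loss chain.

    r = 1 means the packet is received, r = 0 means it is lost.
    p: probability of a loss given that the previous packet was received.
    q: probability of a reception given that the previous packet was lost.
    """
    r = [1]  # initial packet assumed received, r(0) = 1
    for _ in range(steps - 1):
        u = rng.random()
        if r[-1] == 1:
            r.append(0 if u < p else 1)
        else:
            r.append(1 if u < q else 0)
    return r


def stationary_reception_probability(p, q):
    """Long-run fraction of received packets for p, q in (0, 1)."""
    return q / (p + q)


if __name__ == "__main__":
    rng = random.Random(0)
    r = simulate_packet_chain(p=0.2, q=0.6, steps=100_000, rng=rng)
    print("empirical reception rate: ", sum(r) / len(r))
    print("stationary reception rate:", stationary_reception_probability(0.2, 0.6))
```

Unlike a Bernoulli model, consecutive samples of this chain are correlated whenever \( p + q \ne 1 \), which is the temporal dependence the Markovian description is meant to capture.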

For the purpose of estimating the states of network (1), we construct a state estimator as follows:

where \( \hat{x}_{i} (k) = (\hat{x}_{i1} (k),\hat{x}_{i2} (k), \ldots ,\hat{x}_{in} (k))^{T} \in R^{n} \) represents the estimate of the state of the \( i^{th} \) node in network (1), \( \hat{y}_{i} (k) \in R^{m} \) denotes the output of the \( i^{th} \) node in network (4), and \( K_{i} \in R^{n \times m} \) stands for the observer gain to be determined.

By applying the Kronecker product, networks (1), (2) and (4) can be expressed in the following compact form:

where

\( x(k) = (x_{1}^{T} (k),x_{2}^{T} (k), \ldots ,x_{N}^{T} (k))^{T} \), \( \hat{x}(k) = (\hat{x}_{1}^{T} (k),\hat{x}_{2}^{T} (k), \ldots ,\hat{x}_{N}^{T} (k))^{T} \), \( y(k) = (y_{1}^{T} (k),y_{2}^{T} (k), \ldots ,y_{N}^{T} (k))^{T} \), \( \hat{y}(k) = (\hat{y}_{1}^{T} (k),\hat{y}_{2}^{T} (k), \ldots ,\hat{y}_{N}^{T} (k))^{T} \), \( \bar{y}(k) = (\bar{y}_{1}^{T} (k),\bar{y}_{2}^{T} (k), \ldots ,\bar{y}_{N}^{T} (k))^{T} \), \( f(x(k)) = (f^{T} (x_{1} (k)),f^{T} (x_{2} (k)), \ldots ,f^{T} (x_{N} (k)))^{T} \), \( f(\hat{x}(k)) = (f^{T} (\hat{x}_{1} (k)),f^{T} (\hat{x}_{2} (k)), \ldots ,f^{T} (\hat{x}_{N} (k)))^{T} \), \( A = diag\{ A_{1} ,A_{2} , \ldots ,A_{N} \} \), \( C = diag\{ C_{1} ,C_{2} , \ldots ,C_{N} \} \), \( K = diag\{ K_{1} ,K_{2} , \ldots ,K_{N} \} \), \( r(k) = (diag\{ r_{1} (k),r_{2} (k), \ldots ,r_{N} (k)\} ) \otimes I_{n} \), and \( I_{n} \) is the \( n \)-dimensional identity matrix.
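To see how the Kronecker product collects the node-level coupling into one matrix, the sketch below compares a node-by-node update with the stacked update \( Ax + f(x) + (W \otimes \Gamma)Cx \) that appears in the derivation of \( e(k+1) \). It assumes \( m = n \) so that \( (W \otimes \Gamma)C \) is dimensionally consistent, takes the node-level coupling to be \( \sum_{j} w_{ij} \Gamma C_{j} x_{j} \), and uses made-up matrices and the nonlinearity \( 0.1\tanh \); none of these values come from the paper.

```python
import numpy as np


def block_diag(blocks):
    """Place the given blocks on the diagonal of a zero matrix."""
    rows = sum(b.shape[0] for b in blocks)
    cols = sum(b.shape[1] for b in blocks)
    out = np.zeros((rows, cols))
    i = j = 0
    for b in blocks:
        out[i:i + b.shape[0], j:j + b.shape[1]] = b
        i += b.shape[0]
        j += b.shape[1]
    return out


def f(x):
    """Hypothetical nonlinearity with f(0) = 0."""
    return 0.1 * np.tanh(x)


def node_update(A_list, C_list, W, Gamma, x_list):
    """Node-by-node update: A_i x_i + f(x_i) + sum_j w_ij Gamma C_j x_j."""
    N = len(x_list)
    nxt = []
    for i in range(N):
        coupling = sum(W[i, j] * (Gamma @ C_list[j] @ x_list[j]) for j in range(N))
        nxt.append(A_list[i] @ x_list[i] + f(x_list[i]) + coupling)
    return nxt


def stacked_update(A_list, C_list, W, Gamma, x):
    """Stacked update: A x + f(x) + (W kron Gamma) C x."""
    A = block_diag(A_list)
    C = block_diag(C_list)
    return A @ x + f(x) + np.kron(W, Gamma) @ C @ x


def demo(seed=0):
    """Build a random 3-node example (n = m = 2) and evaluate both updates."""
    rng = np.random.default_rng(seed)
    N, n = 3, 2
    W = np.array([[-1.0, 1.0, 0.0],
                  [0.0, -1.0, 1.0],
                  [1.0, 0.0, -1.0]])  # off-diagonal 0/1, zero row sums
    Gamma = rng.normal(size=(n, n))
    A_list = [rng.normal(size=(n, n)) for _ in range(N)]
    C_list = [rng.normal(size=(n, n)) for _ in range(N)]
    x_list = [rng.normal(size=n) for _ in range(N)]
    per_node = np.concatenate(node_update(A_list, C_list, W, Gamma, x_list))
    stacked = stacked_update(A_list, C_list, W, Gamma, np.concatenate(x_list))
    return W, per_node, stacked


if __name__ == "__main__":
    W, per_node, stacked = demo()
    print("row sums of W:", W.sum(axis=1))
    print("max difference:", np.max(np.abs(per_node - stacked)))
```

Block \( (i,j) \) of \( (W \otimes \Gamma)C \) is \( w_{ij}\Gamma C_{j} \), which is why the two updates coincide.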

Let the state error be

where \( \tilde{x}(k) = x(k) - \hat{x}(k) \) and \( \tilde{f}(x(k)) = f(x(k)) - f(\hat{x}(k)) \). For conciseness, let \( H = I_{Nn} - r(k) \), where \( 0 < H < I_{Nn} \) is assumed; then
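The step from the stacked network and estimator to the error dynamics can be written out as follows. This is a sketch that assumes the stacked estimator has the form shown in the second line (output coupling through \( C\hat{x}(k) \) and an innovation term driven by the received measurement \( r(k)Cx(k) \)); the resulting expression agrees with the second block row of \( e(k + 1) \) in the augmented system.

```latex
\begin{aligned}
x(k+1) &= A x(k) + f(x(k)) + (W \otimes \Gamma) C x(k), \\
\hat{x}(k+1) &= A \hat{x}(k) + f(\hat{x}(k)) + (W \otimes \Gamma) C \hat{x}(k)
              + K\big(r(k) C x(k) - C \hat{x}(k)\big), \\
\tilde{x}(k+1) &= A \tilde{x}(k) + \tilde{f}(x(k)) + (W \otimes \Gamma) C \tilde{x}(k)
              - K\big(C \tilde{x}(k) - (I_{Nn} - r(k)) C x(k)\big) \\
             &= \big[(A - KC) + (W \otimes \Gamma) C\big] \tilde{x}(k) + \tilde{f}(x(k)) + K H C x(k).
\end{aligned}
```

The third line uses \( r(k)Cx(k) - C\hat{x}(k) = C\tilde{x}(k) - (I_{Nn} - r(k))Cx(k) \), so the packet losses enter the error dynamics only through the term \( KHCx(k) \).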

Since \( x(k) \) and \( \tilde{x}(k) \) both appear in (9), we take the augmented state vector to be

It follows from (5) and (9) that

\( \begin{aligned} & e(k + 1) = \left[ {\begin{array}{*{20}c} {x(k + 1)} \\ {\tilde{x}(k + 1)} \end{array} } \right] \\ & = \left[ {\begin{array}{*{20}c} {Ax(k) + f(x(k)) + (W \otimes\Gamma )Cx(k)} \\ {[(A - KC) + (W \otimes\Gamma )C]\tilde{x}(k) + \tilde{f}(x(k)) + KHCx(k)}\end{array} } \right] \\ & = \left[ {\begin{array}{*{20}c} {f(x(k))} \\ {\tilde{f}(x(k))} \end{array} } \right] + \left[ {\begin{array}{*{20}c} {A + (W \otimes\Gamma )C} & 0 \\ {KHC} & {(A - KC) + (W \otimes\Gamma )C} \end{array} } \right]\left[ {\begin{array}{*{20}c} {x(k)} \\ {\tilde{x}(k)} \end{array} } \right] \\ & = \left[ {\begin{array}{*{20}c} {f(x(k))} \\ {\tilde{f}(x(k))} \end{array} } \right] + \left[ {\begin{array}{*{20}c} {A + (W \otimes\Gamma )C} & 0 \\ 0 & {(A - KC) + (W \otimes\Gamma )C} \end{array} } \right]\left[ {\begin{array}{*{20}c} {x(k)} \\ {\tilde{x}(k)} \end{array} } \right] + \left[ {\begin{array}{*{20}c} 0 & 0 \\ {KHC} & 0 \end{array} } \right]\left[ {\begin{array}{*{20}c} {x(k)} \\ {\tilde{x}(k)} \end{array} } \right] \\ & = \left[ {\begin{array}{*{20}c} {f(x(k))} \\ {\tilde{f}(x(k))} \end{array} } \right] + \left[ {\begin{array}{*{20}c} {A + (W \otimes\Gamma )C} & 0 \\ 0 & {(A - KC) + (W \otimes\Gamma )C} \end{array} } \right]\left[ {\begin{array}{*{20}c} {x(k)} \\ {\tilde{x}(k)} \end{array} } \right] + \left[ {\begin{array}{*{20}c} 0 & 0 \\ 0 & K \end{array} } \right]\left[ {\begin{array}{*{20}c} 0 & 0 \\ {HC} & 0 \end{array} } \right]\left[ {\begin{array}{*{20}c} {x(k)} \\ {\tilde{x}(k)} \end{array} } \right] \\ \end{aligned} \)

Let

\( B = \left[ {\begin{array}{*{20}c} {A + (W \otimes \Gamma )C} & 0 \\ 0 & {(A - KC) + (W \otimes \Gamma )C} \end{array} } \right] \), \( D_{1} = \left[ {\begin{array}{*{20}c} 0 & 0 \\ 0 & K \end{array} } \right] \), \( D_{2} = \left[ {\begin{array}{*{20}c} 0 & 0 \\ {HC} & 0 \end{array} } \right] \), \( h(x(k),\hat{x}(k)) = \left[ {\begin{array}{*{20}c} {f(x(k))} \\ {\tilde{f}(x(k))} \end{array} } \right] \),

then

Before deriving the main results, we state an assumption and a lemma that are used throughout this paper.

Assumption 1:

Suppose that \( f(0) = 0 \) and there exists a positive constant \( a \) such that
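A condition consistent with the terms \( \alpha a^{2} I \) in Theorem 1 is a global Lipschitz bound. The following form is inferred from that usage and should be read as an assumption about the intended condition:

```latex
\| f(u) - f(v) \| \le a \, \| u - v \| \quad \text{for all } u, v \in R^{n} .
```

With \( f(0) = 0 \), taking \( v = 0 \) also gives \( \| f(u) \| \le a \| u \| \).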

Lemma 1 (Schur Complement):

For a given real symmetric matrix \( \Pi = \left[ {\begin{array}{*{20}c} {\Pi _{11} } & {\Pi _{21} } \\ {\Pi _{12} } & {\Pi _{22} } \end{array} } \right] \), where \( \Pi _{11} =\Pi _{11}^{T} \), \( \Pi _{12} =\Pi _{21}^{T} \), \( \Pi _{22} =\Pi _{22}^{T} \), the condition \( \Pi < 0 \) is equivalent to
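The standard Schur complement statement is that a symmetric block matrix \( \left[ {\begin{array}{*{20}c} {S_{11} } & {S_{12} } \\ {S_{12}^{T} } & {S_{22} } \end{array} } \right] \) is negative definite if and only if \( S_{22} < 0 \) and \( S_{11} - S_{12} S_{22}^{-1} S_{12}^{T} < 0 \). The sketch below checks this equivalence numerically on small matrices; the names `is_neg_def`, `schur_condition` and `assemble` and the test matrices are illustrative.

```python
import numpy as np


def is_neg_def(M, tol=1e-10):
    """True if the symmetric part of M has all eigenvalues below -tol."""
    return bool(np.max(np.linalg.eigvalsh((M + M.T) / 2)) < -tol)


def schur_condition(S11, S12, S22):
    """Check S22 < 0 and S11 - S12 S22^{-1} S12^T < 0."""
    if not is_neg_def(S22):
        return False
    return is_neg_def(S11 - S12 @ np.linalg.solve(S22, S12.T))


def assemble(S11, S12, S22):
    """Build the full symmetric block matrix [[S11, S12], [S12^T, S22]]."""
    return np.block([[S11, S12], [S12.T, S22]])


if __name__ == "__main__":
    I = np.eye(2)
    for scale in (0.5, 2.0):
        S11, S12, S22 = -2.0 * I, scale * I, -1.0 * I
        full = assemble(S11, S12, S22)
        print(scale, is_neg_def(full), schur_condition(S11, S12, S22))
```

In an LMI setting the value of the lemma is that a condition which is quadratic in the unknowns, such as \( S_{12} S_{22}^{-1} S_{12}^{T} \), can be traded for a larger block matrix that is affine in them.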

3 Main Results

In this section, the LMI approach is applied to the state estimation problem for network (1) formulated above.

Theorem 1:

Under Assumption 1, network (4) is an effective state estimator of network (1) if there exist matrices \( P = P_{r(k)} > 0 \) and \( \bar{P} = P_{r(k + 1)} = (\Lambda \otimes I_{n} )P_{r(k)} > 0 \), with \( P = P^{T} = \left[ {\begin{array}{*{20}c} {P_{1} } & 0 \\ 0 & {P_{2} } \end{array} } \right] \), \( \bar{P} = \bar{P}^{T} = \left[ {\begin{array}{*{20}c} {\bar{P}_{1} } & 0 \\ 0 & {\bar{P}_{2} } \end{array} } \right] \) and \( \Lambda = diag(\Lambda _{1} ,\Lambda _{2} , \ldots ,\Lambda _{N} ) \), a matrix \( K \) and a scalar \( \alpha > 0 \) such that the LMI \( \varphi < 0 \) in (12) holds.

where

\( \begin{aligned} \pi_{1} & = A^{T} \bar{P}_{1} A + A^{T} \bar{P}_{1} (W \otimes\Gamma )C + C^{T} (W \otimes\Gamma )^{T} \bar{P}_{1} A + C^{T} (W \otimes\Gamma )^{T} \bar{P}_{1} (W \otimes\Gamma )C - P_{1} + \alpha a^{2} I \\ \pi_{2} & = C^{T} H^{T} K^{T} \bar{P}_{2} A + C^{T} H^{T} K^{T} \bar{P}_{2} (W \otimes\Gamma )C \\ \pi_{3} & = A^{T} \bar{P}_{2} A - C^{T} K^{T} \bar{P}_{2} A - A^{T} \bar{P}_{2} KC + A^{T} \bar{P}_{2} (W \otimes\Gamma )C - C^{T} K^{T} \bar{P}_{2} (W \otimes\Gamma )C \\ & \quad + C^{T} (W \otimes\Gamma )^{T} \bar{P}_{2} A - C^{T} (W \otimes\Gamma )^{T} \bar{P}_{2} KC + C^{T} (W \otimes\Gamma )^{T} \bar{P}_{2} (W \otimes\Gamma )C - P_{2} + \alpha a^{2} I \\ \pi_{4} & = A^{T} \bar{P}_{2} - C^{T} K^{T} \bar{P}_{2} + C^{T} (W \otimes\Gamma )^{T} \bar{P}_{2} . \\ \end{aligned} \)

Moreover, the state estimator gain can be determined by

Proof:

Construct a Lyapunov functional candidate as follows:

Calculating the difference of \( V(k,\,r(k)) \) along the trajectories of (11) and taking the mathematical expectation, one obtains

where

\( \chi = \left[ {\begin{array}{*{20}c} {e(k)} \\ {h(x(k),\hat{x}(k))} \end{array} } \right] \), \( P = P_{r(k)} > 0 \), \( \bar{P} = P_{r(k + 1)} = (\Lambda \otimes I_{n} )P_{r(k)} > 0, \)

\( \varphi_{1} = \left[ {\begin{array}{*{20}c} {B^{T} \bar{P}B + B^{T} \bar{P}D_{1} D_{2} + D_{2}^{T} D_{1}^{T} \bar{P}B + D_{2}^{T} D_{1}^{T} \bar{P}D_{1} D_{2} - P} & {B^{T} \bar{P} + D_{2}^{T} D_{1}^{T} \bar{P}} \\ {\bar{P}B + \bar{P}D_{1} D_{2} } & {\bar{P}} \end{array} } \right]. \)
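The block structure of \( \varphi_{1} \) follows from a direct expansion. As a sketch, assume the Lyapunov functional is the quadratic form \( V(k,r(k)) = e^{T}(k)P_{r(k)}e(k) \) and write the error system as \( e(k+1) = h + (B + D_{1}D_{2})e(k) \), with \( h = h(x(k),\hat{x}(k)) \) and with \( P \), \( \bar{P} \) as defined above:

```latex
\begin{aligned}
E\{V(k+1, r(k+1))\} - V(k, r(k))
&= \big[h + (B + D_{1}D_{2})e(k)\big]^{T} \bar{P} \big[h + (B + D_{1}D_{2})e(k)\big] - e^{T}(k) P e(k) \\
&= \chi^{T}
\begin{bmatrix}
(B + D_{1}D_{2})^{T} \bar{P} (B + D_{1}D_{2}) - P & (B + D_{1}D_{2})^{T} \bar{P} \\
\bar{P} (B + D_{1}D_{2}) & \bar{P}
\end{bmatrix}
\chi
= \chi^{T} \varphi_{1} \chi .
\end{aligned}
```

Multiplying out \( (B + D_{1}D_{2})^{T}\bar{P}(B + D_{1}D_{2}) \) gives the four terms \( B^{T}\bar{P}B + B^{T}\bar{P}D_{1}D_{2} + D_{2}^{T}D_{1}^{T}\bar{P}B + D_{2}^{T}D_{1}^{T}\bar{P}D_{1}D_{2} \) in the upper-left block of \( \varphi_{1} \).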

From Assumption 1, it is easy to show that
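One way to see this, assuming Assumption 1 is a Lipschitz bound \( \| f(u) - f(v)\| \le a\| u - v\| \) together with \( f(0) = 0 \), is to bound the two blocks of \( h = h(x(k),\hat{x}(k)) \) separately. The final inequality is the form that would produce the \( \alpha a^{2} I \) terms in Theorem 1:

```latex
\begin{aligned}
h^{T} h &= \| f(x(k)) \|^{2} + \| f(x(k)) - f(\hat{x}(k)) \|^{2} \\
        &\le a^{2} \| x(k) \|^{2} + a^{2} \| \tilde{x}(k) \|^{2} = a^{2} e^{T}(k) e(k), \\
        &\Rightarrow \quad \alpha \big( a^{2} e^{T}(k) e(k) - h^{T} h \big) \ge 0
        \quad \text{for any } \alpha > 0 .
\end{aligned}
```

Adding this non-negative quantity to \( \chi^{T}\varphi_{1}\chi \) leaves an upper bound on the expected difference of the Lyapunov functional.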

From (15) and (16), we obtain that

By Lemma 1, \( \varphi_{2} < 0 \) is equivalent to \( \varphi < 0 \). Hence, by the Lyapunov functional approach, the estimation error network is asymptotically stable in the mean square, which means that network (4) is an effective state estimator of network (1).

4 Simulations

In this section, an example is given to illustrate the criterion proposed in the previous section. Consider an output-coupled discrete-time complex network with 3 nodes. The network parameters are as follows:

Then select \( a = 0.4 \) in (16). Using the MATLAB LMI Toolbox, we obtain the following solution, including the gain matrix in Theorem 1:

Meanwhile, \( \alpha = 1.9009 \) is obtained in (16).

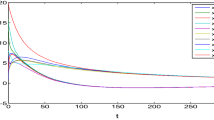

We choose the third state of each node to show the state trajectories of the nodes; the results are presented in Fig. 1. The simulations for all states of the error system are shown in Fig. 2. From these simulations, we can conclude that estimator (4) effectively estimates the node states of network (1) in the presence of Markovian packet losses, which illustrates the theoretical result.
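A simulation of this kind can be set up along the following lines. This is a minimal sketch with hypothetical parameters chosen only so that the example is easy to run: the matrices, the nonlinearity `f`, the gain `K` and the probabilities `p`, `q` are not the values of the example above, the gain is hand-picked and not computed from the LMI in Theorem 1, and \( m = n \) is assumed. The estimator update is an assumed stacked form driven by the received measurement \( r(k)Cx(k) \).

```python
import numpy as np


def f(x):
    """Hypothetical nonlinearity with f(0) = 0 and Lipschitz constant 0.1."""
    return 0.1 * np.tanh(x)


def simulate(steps=60, p=0.3, q=0.5, seed=0):
    """Simulate a 3-node network and its estimator under Markov packet loss.

    Returns the error norms ||x(k) - x_hat(k)|| and the indicators r_i(k).
    """
    rng = np.random.default_rng(seed)
    N, n = 3, 2
    W = np.array([[-1.0, 1.0, 0.0],
                  [0.0, -1.0, 1.0],
                  [1.0, 0.0, -1.0]])        # zero row sums
    Gamma = 0.05 * np.eye(n)                # inner coupling (hypothetical)
    A = 0.3 * np.eye(N * n)                 # stacked A = diag{A_i} (hypothetical)
    C = np.eye(N * n)                       # stacked C = diag{C_i}, m = n
    K = 0.2 * np.eye(N * n)                 # hand-picked gain, not from the LMI
    G = np.kron(W, Gamma) @ C               # output coupling (W kron Gamma) C

    x = rng.normal(size=N * n)              # true initial state
    x_hat = np.zeros(N * n)                 # estimator starts at the origin
    r = np.ones(N, dtype=int)               # r_i(0) = 1: first packets received

    err = np.empty(steps + 1)
    r_hist = np.empty((steps + 1, N), dtype=int)
    for k in range(steps + 1):
        err[k] = np.linalg.norm(x - x_hat)
        r_hist[k] = r
        R = np.kron(np.diag(r), np.eye(n))  # r(k) = diag{r_i(k)} kron I_n
        y_bar = R @ C @ x                   # measurements after packet loss
        x_next = A @ x + f(x) + G @ x
        x_hat = A @ x_hat + f(x_hat) + G @ x_hat + K @ (y_bar - C @ x_hat)
        x = x_next
        # Markov transition: received -> lost w.p. p, lost -> received w.p. q
        u = rng.random(N)
        r = np.where(r == 1, (u >= p).astype(int), (u < q).astype(int))
    return err, r_hist


if __name__ == "__main__":
    err, r_hist = simulate()
    print("initial error norm:", err[0])
    print("final error norm:  ", err[-1])
    print("fraction of packets received:", r_hist.mean())
```

With these contractive hypothetical parameters both the state and the error decay for any loss pattern, so the sketch shows only the mechanics of the loop (loss indicators, masked measurements, estimator update) and is not a reproduction of Fig. 1 or Fig. 2.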

5 Conclusions

In this paper, we have dealt with the problem of state estimation for discrete-time directed complex networks with coupled outputs. Packet losses often occur in practical transmission channels; we model them as Markovian packet dropout with known transition probabilities. By employing Lyapunov functional theory and stochastic analysis, a state observer has been constructed such that the estimation error is asymptotically stable in the mean square. A criterion has been established to guarantee the existence of the desired estimator gain matrix. Simulations have been presented to illustrate the applicability of the criterion.

References

1. Strogatz, S.H.: Exploring complex networks. Nature 410(6825), 268–276 (2001)

2. Watts, D.J., Strogatz, S.H.: Collective dynamics of ‘small-world’ networks. Nature 393(6684), 440–442 (1998)

3. Barabási, A.L., Albert, R.: Emergence of scaling in random networks. Science 286(5439), 509–512 (1999)

4. Fan, C.X., Jiang, G.P., Jiang, F.H.: Synchronization between two complex dynamical networks using scalar signals under pinning control. IEEE Trans. Circuits Syst. 57(11), 2991–2998 (2010)

5. Fan, C.X., Wan, Y.H., Jiang, G.P.: Topology identification for a class of complex dynamical networks using output variables. Chin. Phys. B 21(2), 020510 (2012)

6. Wang, Z., Ho, D.W.C., Liu, X.: State estimation for delayed neural networks. IEEE Trans. Neural Netw. 16(1), 279–284 (2005)

7. Liu, Y., Wang, Z., Liang, J., et al.: Synchronization and state estimation for discrete-time complex networks with distributed delays. IEEE Trans. Syst. Man Cybern. Part B 38(5), 1314–1325 (2008)

8. Fan, C.X., Jiang, G.P.: State estimation of complex dynamical network under noisy transmission channel. In: 2012 IEEE International Symposium on Circuits and Systems (ISCAS), pp. 2107–2110 (2012)

9. Wang, L.C., Wei, G.L., Shu, H.S.: State estimation for complex network with randomly occurring coupling delays. Neurocomputing 122, 513–520 (2013)

10. Zhang, J., Lyu, M., Karimi, H.R., Guo, P., Bo, Y.: Robust H∞ filtering for a class of complex networks with stochastic packet dropouts and time delays. Sci. World J. (2014)

11. Yang, M., Wang, Y.W., Yi, J.W., Huang, Y.H.: Stability and synchronization of directed complex dynamical networks with random packet loss: the continuous-time case and the discrete-time case. Int. J. Circuit Theory Appl. 41(12), 1272–1289 (2013)

12. Wu, X., Jiang, G.P.: State estimation for continuous-time directed complex dynamical network with random packet dropout. In: 2015 Chinese Control Conference (CCC), pp. 3696–3670 (2015)

13. Lv, B., Liang, J., Cao, J.: Robust distributed state estimation for genetic regulatory networks with Markovian jumping parameters. Commun. Nonlinear Sci. Numer. Simul. 16(10), 4060–4078 (2011)

14. Huang, M., Dey, S.: Stability of Kalman filtering with Markovian packet losses. Automatica 43(4), 598–607 (2007)

15. You, K., Xie, L.: Minimum data rate for mean square stability of linear systems with Markovian packet losses. IEEE Trans. Autom. Control 56(4), 772–785 (2011)

16. Okano, K., Ishii, H.: Stabilization of uncertain systems with finite data rates and Markovian packet losses. In: Proceedings of European Control Conference, Zürich, Switzerland, pp. 2368–2373, June 2013

© 2017 Springer Nature Singapore Pte Ltd.

Cao, S., Wan, Y. (2017). State Estimation for Discrete-Time Complex Dynamical Networks with Markovian Packet Losses. In: Yue, D., Peng, C., Du, D., Zhang, T., Zheng, M., Han, Q. (eds) Intelligent Computing, Networked Control, and Their Engineering Applications. ICSEE LSMS 2017 2017. Communications in Computer and Information Science, vol 762. Springer, Singapore. https://doi.org/10.1007/978-981-10-6373-2_55

DOI: https://doi.org/10.1007/978-981-10-6373-2_55

Publisher Name: Springer, Singapore

Print ISBN: 978-981-10-6372-5

Online ISBN: 978-981-10-6373-2
