Abstract

Diabetic retinopathy (DR), the most common eye disease among diabetic patients, occurs when a high glucose level damages the small blood vessels of the retina. It affects 80% of all patients who have had diabetes for 10 years or more, and it can lead to vision loss. Detecting diabetic retinopathy early protects patients from vision loss. The major sign of diabetic retinopathy is the presence of exudates. An exudate is fluid that filters from the circulatory system into lesions or areas of inflammation. Detecting retinal fundus diseases at an early stage helps ophthalmologists apply proper treatments that might eliminate the disease or decrease its severity. This paper focuses on the automatic detection of diabetic retinopathy by detecting exudates in colour fundus retinal images, and on classifying the severity of the lesions. The severity level of the disease is decided by software classifiers. Images at the normal, mild, moderate and severe levels can be given as inputs to the Graphical User Interface (GUI) window, which returns the corresponding output parameters and predictions. For the sample input images given, the output images of the parameter evaluations help everyone to detect disorders and help clinicians with the diagnosis.

1 Introduction

The number of diabetic patients in India is increasing at an alarming rate. Uncontrolled diabetes can lead to blindness, normally through diabetic retinopathy, which may affect both eyes simultaneously. If it is detected early and the required treatment is given, blindness can be avoided. Diabetic retinopathy affects 50% of diabetic patients. Diabetic maculopathy and diabetic neuropathy are other common complications in diabetic patients, and the possibility of blindness for these patients is 25% higher. Diabetic retinopathy is of two types: non-proliferative diabetic retinopathy and proliferative diabetic retinopathy [1, 2]. Vitreous haemorrhage and retinal detachment are the immediate complications leading to blindness if proper treatment is not provided in time. Once the disease reaches a complicated stage and vision is lost, the loss cannot be reversed. Hence diabetic patients whose vision is affected should be monitored periodically to assess the actual status of the retina. Apart from a multi-pronged disease control approach, it is therefore essential to use advanced technology that enables doctors to carry out regular real-time assessment of retinopathy in diabetic patients through telemedicine [3].

Diabetic retinopathy is a condition occurring in persons with diabetes, which causes progressive damage to the retina, the light sensitive lining at the back of the eye. It is a serious sight-threatening complication of diabetes. Diabetes is a disease that interferes with the body’s ability to use and store sugar, which can cause many health problems. Too much sugar in the blood can cause damage throughout the body, including the eyes. Over time, diabetes affects the circulatory system of the retina. Diabetic retinopathy is the result of damage to the tiny blood vessels that nourish the retina. They leak blood and other fluids that cause swelling of retinal tissue and clouding of vision. The condition usually affects both eyes. The longer a person has diabetes, the more likely they will develop diabetic retinopathy. If left untreated, diabetic retinopathy can cause blindness [4].

Symptoms of diabetic retinopathy include:

- Seeing spots or floaters in your field of vision
- Blurred vision
- Having a dark or empty spot in the center of your vision
- Difficulty seeing well at night

The ocular fundus is the only part of the human body through which the vascular network can be observed directly and non-invasively. It can provide a great deal of pathological information about eye diseases such as glaucoma and central serous retinopathy (CSR), and about early signs of systemic diseases such as diabetes, hypertension and cardiovascular disease. The number of patients with these diseases is increasing worldwide. Medical imaging technology is developing in such a way that mass screening of patients is possible in ophthalmology. Nevertheless, the scarcity of medical experts across all regions of a country limits mass screening in developing countries. Hence the development of an automated system for analyzing digital fundus images and diagnosing diseases is the greatest challenge when mass screening of patients is needed [5]. The present work involves the detection, quantification and analysis of retinal image parameters for various diseases, combined so that an intelligent system is developed for the diagnosis of glaucoma, diabetes, hypertension, stroke, heart attack and central serous retinopathy. A large number of retinal images of patients, with the necessary pathological data, were taken from the Regional Institute of Ophthalmology (Dept. of Ophthalmology), Medical College, Thiruvananthapuram for this work. A labelled diagram of the human eye and an image of the retina are shown in Fig. 1. Diabetes is detected from the presence of exudates and haemorrhages and from changes in blood vessel parameters such as the arteriolar-to-venular diameter ratio (AVR). Images obtained from the fundus camera are enhanced using filtering. Image segmentation is then performed to detect the optic disc, fovea, exudate areas and blood vessels. The connected component method, together with the concentric circle method, is used to determine the artery-vein width ratio. An algorithm is developed to detect and quantify the disease level from these parameters. The results were validated against the clinical data of the patients, with good results. A predictor system is developed to give the status of the patient from the analysis of the retinal image parameters using neural network techniques [6, 7].
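The connected component step mentioned above can be illustrated with a small sketch. The paper does not give its implementation, so the function name `component_sizes`, the use of 8-connectivity and the toy mask are all assumptions chosen for illustration. The sketch labels the connected regions of a binary mask (for example, candidate exudate pixels after segmentation) and reports the pixel count of each region.

```python
from collections import deque

def component_sizes(mask):
    """Return the pixel count of each 8-connected region of 1s in a 2D list.

    Hypothetical helper: regions are reported in raster-scan order of
    their first pixel.
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    sizes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill starting from an unvisited foreground pixel.
            seen[r][c] = True
            queue = deque([(r, c)])
            size = 0
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            sizes.append(size)
    return sizes

# Toy mask with three separate regions (illustrative data, not from the paper).
toy_mask = [
    [1, 1, 0, 0, 0],
    [1, 0, 0, 0, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0],
]
print(component_sizes(toy_mask))
```

Region sizes obtained this way could feed a later quantification step, for instance summing them to estimate total exudate area in pixels.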

A fundus camera, which is used to obtain fundus images of the human retina, is shown in Fig. 2. In the camera, the beams from two light sources, an incandescent lamp used for viewing the fundus and a flash lamp used for photography, are optically combined by a beam splitter [8].

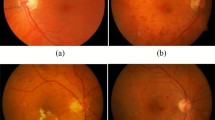

The fundus camera images of normal and diabetic patients are shown in Figs. 3 and 4. These images are used to detect and diagnose the disease, and the automatic detection method is explained in the next section.

2 Proposed Method

The Graphical User Interface (GUI) window for the detection of diabetic retinopathy is designed as shown in Fig. 5. The disease appears as dark red and yellow spots in retinal fundus images. The processing steps are as follows. First, input the image and resize it to the required size. Next, find the hue, saturation and intensity of the image [9]. Then find the contrast, homogeneity and correlation, and find a threshold value for each. Finally, use these values for disease detection.
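As an illustration of the texture step, contrast, homogeneity and correlation can be computed from a gray-level co-occurrence matrix (GLCM). The paper does not state how these three values are obtained, so the GLCM itself, the right-hand-neighbour offset and the function name `glcm_features` are assumptions made for this sketch, which uses plain Python only.

```python
import math

def glcm_features(image, levels):
    """Return (contrast, homogeneity, correlation) for a 2D list of gray levels.

    Assumes integer levels in 0..levels-1 and pairs each pixel with its
    right-hand neighbour; both choices are illustrative.
    """
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    for row in image:
        for left, right in zip(row, row[1:]):
            counts[left][right] += 1
            total += 1
    if total == 0:
        raise ValueError("image must be at least two pixels wide")
    # Normalise the counts to joint probabilities p[i][j].
    p = [[c / total for c in row] for row in counts]
    cells = [(i, j) for i in range(levels) for j in range(levels)]

    contrast = sum(p[i][j] * (i - j) ** 2 for i, j in cells)
    homogeneity = sum(p[i][j] / (1 + abs(i - j)) for i, j in cells)

    mu_i = sum(i * p[i][j] for i, j in cells)
    mu_j = sum(j * p[i][j] for i, j in cells)
    var_i = sum((i - mu_i) ** 2 * p[i][j] for i, j in cells)
    var_j = sum((j - mu_j) ** 2 * p[i][j] for i, j in cells)
    if var_i == 0 or var_j == 0:
        correlation = float("nan")  # undefined for a constant image
    else:
        cov = sum((i - mu_i) * (j - mu_j) * p[i][j] for i, j in cells)
        correlation = cov / math.sqrt(var_i * var_j)
    return contrast, homogeneity, correlation

# Small 4-level test image (illustrative data, not from the paper).
sample = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
print(glcm_features(sample, levels=4))
```

Each of the three returned values could then be compared against its own threshold, as the steps above describe. The thresholds themselves are not given in the paper, so none are assumed here.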

In machine vision, the color of each pixel is composed of Red, Green and Blue components, each stored in 8 bits (2 hexadecimal digits) [10]. The complete representation of a color therefore takes 6 hexadecimal digits. The problem is that a family of colors (such as orange and the shades close to it) cannot easily be recognised from that 6-digit representation. Moreover, changes in brightness cause large changes in the RGB representation of a given color. Therefore, the most widely used color representation in machine vision is the HSI color space, which consists of hue angle, color saturation and intensity [11]. To be independent of intensity variation, we use the HS space. This also makes the processing and computation faster.
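The benefit of the HS representation can be sketched with a per-pixel conversion. The formulas below are the commonly used geometric RGB-to-HSI conversion, not necessarily the exact variant used by the authors, and the function name `rgb_to_hsi` is hypothetical.

```python
import math

def rgb_to_hsi(r, g, b):
    """Convert 8-bit RGB to (hue in degrees, saturation 0..1, intensity 0..1)."""
    r, g, b = r / 255.0, g / 255.0, b / 255.0
    intensity = (r + g + b) / 3.0
    if intensity == 0:
        return 0.0, 0.0, 0.0  # black: hue and saturation set to 0 by convention
    saturation = 1.0 - min(r, g, b) / intensity
    numerator = 0.5 * ((r - g) + (r - b))
    denominator = math.sqrt((r - g) ** 2 + (r - b) * (g - b))
    if denominator == 0:
        hue = 0.0  # gray pixel: hue is undefined, 0 by convention
    else:
        ratio = max(-1.0, min(1.0, numerator / denominator))
        theta = math.degrees(math.acos(ratio))
        hue = theta if b <= g else 360.0 - theta
    return hue, saturation, intensity

# A bright and a dark orange differ strongly in RGB but share hue and saturation.
print(rgb_to_hsi(200, 100, 0))
print(rgb_to_hsi(100, 50, 0))
```

In this sketch only the intensity component differs between the two orange pixels, which is why dropping it and working in the HS plane makes the color test insensitive to brightness.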

Histogram equalization is usually described for a gray scale image. It can also be used on color images by applying the same method separately to the Red, Green and Blue components of the RGB color values. Doing so, however, may yield dramatic changes in the image’s color balance, since the relative distributions of the color channels change as a result of applying the algorithm [12]. If the image is first converted to another color space, the algorithm can be applied to the luminance or value channel without changing the hue and saturation of the image. There are several histogram equalization methods in 2D and 3D space. However, they can result in “whitening”, where the probability of bright pixels is higher than that of dark ones [13].
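A minimal sketch of equalizing only the value channel follows. It uses the HSV color space from Python's standard `colorsys` module as the "other color space"; that choice, the helper names `equalize` and `equalize_value_channel`, and the three sample pixels are assumptions for illustration rather than the authors' implementation.

```python
import colorsys

def equalize(levels, max_level=255):
    """Histogram-equalize a flat list of integer levels in 0..max_level."""
    hist = [0] * (max_level + 1)
    for v in levels:
        hist[v] += 1
    # Cumulative histogram: cdf[v] counts the pixels with level <= v.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = cdf[min(levels)]
    n = len(levels)
    if n == cdf_min:
        return list(levels)  # constant input: nothing to spread out
    return [round((cdf[v] - cdf_min) * max_level / (n - cdf_min)) for v in levels]

def equalize_value_channel(pixels):
    """Equalize only V of HSV for a list of 8-bit (r, g, b) tuples."""
    hsv = [colorsys.rgb_to_hsv(r / 255, g / 255, b / 255) for r, g, b in pixels]
    v_eq = equalize([round(v * 255) for _, _, v in hsv])
    result = []
    for (h, s, _), v in zip(hsv, v_eq):
        rgb = colorsys.hsv_to_rgb(h, s, v / 255)
        result.append(tuple(round(c * 255) for c in rgb))
    return result

# Three pixels of the same hue at different brightness (illustrative data).
print(equalize_value_channel([(40, 20, 10), (80, 40, 20), (120, 60, 30)]))
```

Because hue and saturation are carried through unchanged, the equalized pixels keep their color family up to 8-bit rounding, whereas equalizing R, G and B independently would not guarantee this.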

With a large number of variables, K-Means may be computationally faster than hierarchical clustering (if K is small). K-Means may also produce tighter clusters than hierarchical clustering, especially if the clusters are globular. Contrast is defined as the separation between the darkest and brightest areas of the image. Increasing the contrast increases the separation between dark and bright, making shadows darker and highlights brighter. Contrast enhancement plays a crucial role in image processing applications such as digital photography, medical image analysis and scientific visualization. Image enhancement is a technique that reduces image noise, removes artifacts and preserves details [14, 15]. Its purpose is to amplify certain image features for analysis, diagnosis and display. Contrast enhancement increases the total contrast of an image by making light colors lighter and dark colors darker at the same time. It does this by setting all color components below a specified lower bound to zero, and all color components above a specified upper bound to the maximum intensity (that is, 255). Color components between the lower and upper bounds are mapped onto a linear ramp of values between 0 and 255. Because the upper bound must be greater than the lower bound, the lower bound must be between 0 and 254, and the upper bound must be between 1 and 255 [16, 17].
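The lower-bound and upper-bound rule described above can be sketched as a small function. The name `stretch_contrast` and the sample values are hypothetical. The sketch operates on a flat list of 8-bit color components, and rounding the ramp to the nearest integer is an assumption.

```python
def stretch_contrast(values, lower, upper):
    """Linearly stretch 8-bit components so that lower maps to 0 and upper to 255."""
    if not (0 <= lower <= 254 and 1 <= upper <= 255 and lower < upper):
        raise ValueError("need 0 <= lower < upper <= 255")
    stretched = []
    for v in values:
        if v <= lower:
            stretched.append(0)          # at or below the lower bound: clip to zero
        elif v >= upper:
            stretched.append(255)        # at or above the upper bound: clip to maximum
        else:
            # Linear ramp between the two bounds.
            stretched.append(round((v - lower) * 255 / (upper - lower)))
    return stretched

# Illustrative components with bounds 50 and 135.
print(stretch_contrast([10, 50, 100, 135, 200], lower=50, upper=135))
```

For a color image the same function would be applied to each channel, or to the intensity channel alone if the color balance should be preserved.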

There are several reasons for an image/video to have poor contrast:

- the poor quality of the imaging device used,
- lack of expertise of the operator, and
- adverse external conditions at the time of acquisition.

These effects result in under-utilization of the available dynamic range. As a result, such images and videos may not reveal all the details in the captured scene, and may have a washed-out and unnatural look. In graphics and imaging, color saturation describes the intensity of color in the image [18]. A saturated image has overly bright colors. Using a graphics editing program, saturation can be increased on under-exposed images, or vice versa. Digital Image Correlation (DIC) is a full-field image analysis method, based on grey value digital images, that can determine the contour and the displacements of an object under load in three dimensions. Owing to rapid developments in high resolution digital cameras for static as well as dynamic applications, and in computer technology, the applications of this measurement method have broadened, and DIC techniques have proven to be a flexible and useful tool for deformation analysis.

The image shown in Fig. 6 is that of an acute diabetic patient. Likewise, images at the normal, mild, moderate and severe levels can be given as inputs to the GUI window to obtain the corresponding output parameters and predictions [19, 20]. For the sample input image given above, the output images of the parameters, which help everyone to detect disorders and help clinicians with the diagnosis, are shown in Fig. 7.

3 Conclusion

Based on the above images and values, all the parameters can be sent to highly qualified ophthalmologists. Final decisions, treatment methods, preventive medicines and consultation, if needed, should be left to the clinicians. The system can be used by biomedical engineers to help doctors and patients living in remote areas. If the system is developed to work online, doctors can assess the eye conditions of patients who are far away from hospitals. The system could also be deployed as a kiosk in remote areas, in the manner of ATMs, X-ray labs and clinical blood-testing labs. It would then be very useful and would reach the maximum number of patients around the globe. Doctors would not need to travel to every patient; patients could visit a nearby lab and consult a doctor only if needed.

At present we have designed only a software prototype of the system. Developing a hardware model will require sufficient funding just to build a sample. Only then can arrangements be made for commercialization of the product.

This will be a great boon for the common man (the actual beneficiary of this project), encouraging prevention of diabetes rather than cure through periodic review using this system. If a person does develop diabetes, the system will help in the periodic assessment of the various levels of the disease, thereby helping to prevent progression of the disorder.

References

S. Wild, G. Roglic, A. Green, R. Sicree, H. King, Global prevalence of diabetes estimates for the year 2000 and projections for 2030. Diab. Care 27(5), 1047–1053 (2004)

D.A. Quillen, Common causes of vision loss in elderly patients. Am. Family Physician 60(1), 99–108 (1999)

R. Klein, B.E.K. Klein, S.E. Moss, Visual impairment in diabetes. Ophthalmology 91(1), 1–9 (1984)

M.D. Saleh, C. Eswaran, An automated decision support system for non-proliferative diabetic retinopathy disease based on MAs and HAs detection. Comput. Methods Programs Biomed. 108(1), 186–196 (2012)

A. Kumar, A.K. Gaur, M. Srivastava, A segment based technique for detecting exudate from retinal fundus image. Procedia Technol. 6, 1–9 (2012)

M.M. Fraz, S.A. Barman, P. Remagnino, A. Hoppe, A. Basit, B. Uyyanonvara, A.R. Rudnicka, C.G. Owen, An approach to localize the retinal blood vessels using bit planes and centerline detection. Comput. Methods Programs Biomed. 108(2), 600–616 (2012)

L. Xu, S. Luo, A novel method for blood vessel detection from retinal images. Biomed. Eng. Online 9(1), 14 (2010)

M. Foracchia, E. Grisan, A. Ruggeri, Luminosity and contrast normalization in retinal images. Med. Image Anal. 9(3), 179–190 (2005)

M. Vlachos, E. Dermatas, Multi-scale retinal vessel segmentation using line tracking. Comput. Med. Imaging Graph. 34(3), 213–227 (2010)

K. Saranya, B. Ramasubramanian, S. Kaja Mohideen, A novel approach for the detection of new vessels in the retinal images for screening diabetic retinopathy, in International Conference on Communications and Signal Processing (ICCSP), IEEE, pp. 57–61 (2012)

K. Narasimhan, V.C. Neha, K. Vijayarekha, An efficient automated system for detection of diabetic retinopathy from fundus images using support vector machine and Bayesian classifiers, in International Conference on Computing, Electronics and Electrical Technologies (ICCEET), IEEE, pp. 964–969 (2012)

K.A. Goatman, A.D. Fleming, S. Philip, G.J. Williams, J.A. Olson, P.F. Sharp, Detection of new vessels on the optic disc using retinal photographs. IEEE Trans. Med. Imaging 30(4), 972–979 (2011)

B. Antal, A. Hajdu, An ensemble-based micro aneurysm detector for retinal images, in 18th IEEE International Conference on Image Processing (ICIP), IEEE, pp. 1621–1624 (2011)

M. Tamilarasi, K. Duraiswamy, Genetic based fuzzy seeded region growing segmentation for diabetic retinopathy images, in International Conference on Computer Communication and Informatics (ICCCI), IEEE, pp. 1–5 (2013)

B. Antal, A. Hajdu, An ensemble-based system for micro aneurysm detection and diabetic retinopathy grading. IEEE Trans. Biomed. Eng. 59(6), 1720–1726 (2012)

C. Köse, U. Şevik, C. Ikibaş, H. Erdöl, Simple methods for segmentation and measurement of diabetic retinopathy lesions in retinal fundus images. Comput. Methods Programs Biomed. 107(2), 274–293 (2012)

M.U. Akram, S. Khalid, A. Tariq, S.A. Khan, F. Azam, Detection and classification of retinal lesions for grading of diabetic retinopathy. Comput. Biol. Med. 45, 161–171 (2014)

D. Marín, A. Aquino, M.E. Gegúndez-Arias, J.M. Bravo, A new supervised method for blood vessel segmentation in retinal images by using gray-level and moment invariants-based features. IEEE Trans. Med. Imaging 30(1), 146–158 (2011)

A. Anzalone, F. Bizzarri, M. Parodi, M. Storace, A modular supervised algorithm for vessel segmentation in red-free retinal images. Comput. Biol. Med. 38(8), 913–922 (2008)

M.U. Akram, A. Tariq, S.A. Khan, M.Y. Javed, Automated detection of exudates and macula for grading of diabetic macular edema. Comput. Methods Programs Biomed. 114(2), 141–152 (2014)

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Prageeth, P.G., Sukesh Kumar, A., Mahadevan, K. (2019). Real Time Periodic Assessment of Retina of Diabetic Patients for Early Detection of Diabetic Retinopathy. In: Chattopadhyay, S., Roy, T., Sengupta, S., Berger-Vachon, C. (eds) Modelling and Simulation in Science, Technology and Engineering Mathematics. MS-17 2017. Advances in Intelligent Systems and Computing, vol 749. Springer, Cham. https://doi.org/10.1007/978-3-319-74808-5_50

DOI: https://doi.org/10.1007/978-3-319-74808-5_50

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-74807-8

Online ISBN: 978-3-319-74808-5

eBook Packages: Engineering, Engineering (R0)