Abstract

This work presents a real-time scheme for nonlinear discrete-time systems with input delays, based on a discrete recurrent high order neural network identifier and a block control based on sliding modes. The identifier is trained with an extended Kalman filter based algorithm, and the block control is used for trajectory tracking. Experimental results are included using a linear induction motor prototype with delays added to its input signals.

1 Introduction

Delays in systems are a source of instability and poor performance, and they make system analysis more complex [1, 2]. Time-delay systems mainly inherit their delays from their components, and examples are easily found in areas such as the chemical industry, hydraulic systems, metallurgical processing, and networked systems [1, 2].

System identification is the process of obtaining a mathematical model of a system from data collected in a practical experiment on that system [3]. Among the many techniques for system identification, neural networks stand out [3, 4].

The internal connections of recurrent high order neural networks (RHONNs) allow them to capture the response of complex nonlinear systems and give them characteristics such as robustness against noise, on-line and off-line training, and the possibility of incorporating a priori information about the system to be identified [5,6,7]. In addition, training neural networks with Kalman filter algorithms has proved to be reliable and practical, and it improves learning convergence and computational efficiency compared with backpropagation methods [5, 6].

Neural block control is a methodology that uses a neural identifier in the block controllable form of a system. Based on this model, a discrete control law is designed by combining discrete-time block control with the sliding modes technique [5].

A number of methodologies deal with systems with input delay [8,9,10,11]. Their main disadvantage is that they need a lot of information about the system. In our methodology, it is not necessary to know the model of the system, because the scheme works with the model obtained in the neural identification process. Moreover, most of them work in continuous time, which can be seen as a disadvantage given the tendency towards digital rather than analog systems [12]. We therefore present a scheme for discrete-time systems with input delays that is rather simple to work with and can be used in real time even if the model of the system is unknown or incomplete.

This paper differs from our previous works [13,14,15] in that none of them treats the case of input delay in the system, and some do not consider any kind of delay.

The paper is organized as follows. Sect. 2 is dedicated to neural identification using RHONNs and extended Kalman filter (EKF) training. The block control is presented in Sect. 3, and results are shown in Sect. 4. Finally, the conclusions are included in Sect. 5.

2 Neural Identification

Neural identification is the process of obtaining a mathematical model of a system by selecting a neural network and an adaptation law such that the neural network responds to an input in the same way as the system to be identified [3].

2.1 Recurrent High Order Neural Network Identification

In this work, we use the following RHONN series-parallel model:

where \( n \) is the state dimension, \( \widehat{x} \) is the neural network state vector, \( \omega \) is the weight vector, \( x \) is the plant state vector, \( u \) is the input vector to the neural network, \( l \) is the unknown time delay, and \( z_{i} \left( \cdot \right) \) is defined as follows:

with \( L_{i} \) the respective number of high-order connections, \( \left\{ {I_{1} , I_{2} , \cdots , I_{{L_{i} }} } \right\} \) a collection of non-ordered subsets of \( \left\{ {1, 2, \cdots , n + m} \right\} \), \( d_{ij} \left( k \right) \) non-negative integers, and the sigmoid function given by \( 1/\left( {1 + e^{ - \beta v} } \right) \), with \( \beta > 0 \) and \( v \) any real-valued variable.
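As an illustration of how the high-order terms can be evaluated, the following Python sketch assumes the arrangement common in the RHONN literature, in which the sigmoid is applied to each state and the inputs enter unchanged, and in which the network state is the inner product of the weights with \( z_{i} \). The function names, the 0-based index sets, and the example values are hypothetical and are not taken from the paper.

```python
import math


def sigmoid(v, beta=1.0):
    """Logistic function 1 / (1 + exp(-beta * v)), with beta > 0."""
    return 1.0 / (1.0 + math.exp(-beta * v))


def high_order_terms(x, u, index_sets, exponents, beta=1.0):
    """Build z_i as a list of products of powers of the entries of xi.

    Assumption: xi stacks the sigmoid of every state followed by the raw
    inputs, so it has n + m entries. index_sets[j] lists the (0-based)
    entries of xi used by the j-th term; exponents[j] lists their powers.
    """
    xi = [sigmoid(v, beta) for v in x] + list(u)
    z = []
    for subset, powers in zip(index_sets, exponents):
        term = 1.0
        for idx, power in zip(subset, powers):
            term *= xi[idx] ** power
        z.append(term)
    return z


def rhonn_state(weights, z):
    """Assumed network output: inner product of the weights with z_i."""
    return sum(w * zj for w, zj in zip(weights, z))


# Hypothetical example: one state, one input, two high-order terms.
z = high_order_terms(x=[0.0], u=[2.0],
                     index_sets=[(0,), (0, 1)],
                     exponents=[(1,), (1, 1)])
x_hat = rhonn_state([2.0, 3.0], z)
```

Because the assumed model is linear in the weights, the same vector \( z_{i} \) also serves as the gradient of the network state with respect to the weights.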

EKF Training Algorithm.

The training goal is to find the optimal weight vector which minimizes the prediction error. In this way, the weights \( \omega \) become the states to be estimated by the Kalman filter, and the identification error between \( x \) and \( \widehat{x} \) is considered as additive white noise [4]. The training algorithm is based on the EKF due to the nonlinearity of the neural network (1) and is defined in (4).

where \( \omega_{i} \in {\mathcal{R}}^{{L_{i} }} \) is the adapted weight vector, \( \eta \in {\mathcal{R}} \) is the learning rate, \( \widehat{x}_{i} \) is the \( i \)-th state variable of the neural network, \( K_{i} \in {\mathcal{R}}^{{L_{i} }} \) is the Kalman gain vector, \( R_{i} \in {\mathcal{R}} \) is the error noise covariance, \( H_{i} \in {\mathcal{R}}^{{L_{i} }} \) is a vector with entries \( H_{ij} = \left[ {\partial \widehat{x}_{i} \left( x \right)/\partial \omega_{ij} \left( k \right)} \right]^{T} \), \( P_{i} \in {\mathcal{R}}^{{L_{i} \times L_{i} }} \) is the weight estimation error covariance matrix, and \( Q_{i} \in {\mathcal{R}}^{{L_{i} \times L_{i} }} \) is the estimation noise covariance matrix. \( P_{i} \) and \( Q_{i} \) are initialized as diagonal matrices with entries \( P_{i} \left( 0 \right) \) and \( Q_{i} \left( 0 \right) \), respectively.
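A minimal Python sketch of one training step for a single neuron follows. It assumes the update form commonly used for EKF training of RHONNs, namely \( K_{i} = P_{i} H_{i} M_{i} \) with \( M_{i} = \left[ R_{i} + H_{i}^{T} P_{i} H_{i} \right]^{-1} \), \( \omega_{i} \left( k + 1 \right) = \omega_{i} \left( k \right) + \eta K_{i} e_{i} \) and \( P_{i} \left( k + 1 \right) = P_{i} - K_{i} H_{i}^{T} P_{i} + Q_{i} \), where \( e_{i} = x_{i} - \widehat{x}_{i} \). This form is an assumption and may differ in detail from (4). The function name `ekf_step` and the numerical values are hypothetical.

```python
def ekf_step(w, P, H, x_i, x_hat_i, eta, R, Q):
    """One assumed EKF update for the weights of a single neuron.

    w: list of L weights; P, Q: L x L nested lists; H: list of L partial
    derivatives of x_hat_i with respect to each weight; R, eta: scalars.
    Returns the updated weights, the updated covariance and the gain.
    """
    L = len(w)
    e = x_i - x_hat_i                                        # identification error
    PH = [sum(P[r][c] * H[c] for c in range(L)) for r in range(L)]   # P H
    M = 1.0 / (R + sum(H[r] * PH[r] for r in range(L)))      # scalar inverse
    K = [PH[r] * M for r in range(L)]                        # Kalman gain
    HtP = [sum(H[r] * P[r][c] for r in range(L)) for c in range(L)]  # H^T P
    w_next = [w[r] + eta * K[r] * e for r in range(L)]
    P_next = [[P[r][c] - K[r] * HtP[c] + Q[r][c] for c in range(L)]
              for r in range(L)]
    return w_next, P_next, K


# Hypothetical one-weight example: prediction 0, measurement 1.
w1, P1, K1 = ekf_step(w=[0.0], P=[[1.0]], H=[1.0],
                      x_i=1.0, x_hat_i=0.0, eta=1.0, R=1.0, Q=[[0.0]])
```

Since each neuron has a scalar output, \( M_{i} \) is a scalar and no matrix inversion is required, which keeps the cost per step at the order of \( L_{i}^{2} \) operations.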

RHONN Identification.

Consider the following nonlinear discrete-time system with input delay:

where \( x \in {\mathcal{R}}^{n} \), \( u \in {\mathcal{R}}^{m} \), \( F : {\mathcal{R}}^{n} \times {\mathcal{R}}^{m} \to {\mathcal{R}}^{n} \) is a nonlinear function and \( l = 1,2, \cdots \) is the unknown delay. Then, our identification process consists of approximating the system (5) with the RHONN (1) trained online with the EKF algorithm (4).

This identification process is validated by achieving a small error between the system outputs and the identifier outputs for the same inputs.
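The following Python sketch illustrates the two ingredients of this validation under simplifying assumptions: a scalar plant of the form \( x\left( {k + 1} \right) = F\left( {x\left( k \right), u\left( {k - l} \right)} \right) \) simulated with a delay line, and a root mean square error between two output sequences. The helper names, the choice of zero for inputs before \( k = 0 \), the example plant, and the use of RMSE as the error measure are all hypothetical.

```python
import math
from collections import deque


def simulate_delayed(F, x0, inputs, delay):
    """Simulate x(k+1) = F(x(k), u(k - delay)) for a scalar state.

    Assumption: inputs applied before k = 0 are taken as 0.
    """
    line = deque([0.0] * delay, maxlen=delay + 1)
    x, states = x0, [x0]
    for u in inputs:
        line.append(u)         # newest input enters the delay line
        u_delayed = line[0]    # oldest stored entry is u(k - delay)
        x = F(x, u_delayed)
        states.append(x)
    return states


def rmse(a, b):
    """Root mean square error between two equally long sequences."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)) / len(a))


# Hypothetical linear plant with a one-step input delay.
states = simulate_delayed(lambda x, u: 0.5 * x + u,
                          x0=0.0, inputs=[1.0, 0.0, 0.0], delay=1)
```

In practice the second argument of `rmse` would be the sequence produced by the identifier for the same inputs, so that a small value indicates a successful identification.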

3 Neural Block Control

The model of many practical nonlinear systems can be transformed into the block controllable form [5]:

where \( j = 1, \ldots ,r - 1 \), \( x \in {\mathcal{R}}^{n} \) is the state variable vector with \( x(k) = \left[ {x_{1} (k) \cdots x_{r} (k)} \right]^{T} \), \( \bar{x}_{j} (k) = \left[ {x_{1} (k) \cdots x_{j} (k)} \right]^{T} \), \( r \ge 2 \) is the number of blocks, \( u \in {\mathcal{R}}^{m} \), \( d(k) = \left[ {d_{1} (k) \cdots d_{j} (k) \cdots d_{r} (k)} \right]^{T} \) is the bounded unknown disturbance vector, and \( f_{j} \left( \cdot \right) \) and \( B_{j} \left( \cdot \right) \) are smooth nonlinear functions. Consider the following transformation [5]:

where \( x_{1}^{d} \) is the tracking reference, \( x_{i}^{d} \) is the desired value for \( x_{i} \), and \( {\rm K} \) is a Schur matrix. Using (7) and selecting \( S_{D} \left( k \right) = \chi_{r} \left( k \right) = 0 \), system (6) can be rewritten as (8):

Then, \( u\left( k \right) \) is defined in (9), where \( u_{eq} \) is calculated from \( S_{D} \left( {k + 1} \right) = 0 \) and \( u_{0} \) is the bound imposed by the available control resources.

Hence, the first step of the process is to design a RHONN identifier in block controllable form for the system to be identified, and the second is to obtain \( u\left( k \right) \) as in (9).
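A common way to combine \( u_{eq} \) and \( u_{0} \) in discrete-time sliding mode designs is to apply the equivalent control when its norm is within the bound and to scale it onto the bound otherwise. The Python sketch below assumes that form, which may differ from the exact expression in (9). The function name `bounded_control` and the example values are hypothetical.

```python
import math


def bounded_control(u_eq, u0):
    """Return u_eq if ||u_eq|| <= u0, else u_eq rescaled to norm u0.

    Assumed saturation rule; u_eq is a list of control components and
    u0 is a positive scalar bound on the control magnitude.
    """
    norm = math.sqrt(sum(c * c for c in u_eq))
    if norm <= u0:
        return list(u_eq)
    return [u0 * c / norm for c in u_eq]


# Hypothetical example: the equivalent control has norm 5.
u_inside = bounded_control([3.0, 4.0], u0=10.0)   # within the bound
u_scaled = bounded_control([3.0, 4.0], u0=1.0)    # scaled to norm 1
```

Scaling rather than clipping each component keeps the direction of the equivalent control while respecting the bound.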

4 Results

Test Description.

The neural block control is implemented in a Simulink® model for the linear induction motor prototype (Fig. 1), which is based on a dSPACE® board RTI1104 with a MATLAB®/Simulink® interface. The model communicates with the prototype through the dSPACE® tools. A subsystem that induces delays in the system input is created in Simulink®. Four seconds after the prototype starts, this subsystem switches the control signal to a version with a random time delay. This is achieved using the “Variable Transport Delay” block configured for variable time delay, which receives as input at each time step a random number from 0 to 10 multiplied by the sampling time, set to \( 0.0003\,\text{s}. \)
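The delay schedule described above can be sketched outside Simulink® as follows. This Python fragment only illustrates the timing logic and is not the Simulink® subsystem itself. It assumes that the random number is drawn uniformly from the real interval from 0 to 10, which the description does not specify, and the names `input_delay`, `SAMPLING_TIME`, `SWITCH_TIME` and `MAX_DELAY_SAMPLES` are hypothetical.

```python
import random

SAMPLING_TIME = 0.0003    # s, sampling time from the test description
SWITCH_TIME = 4.0         # s, instant at which the delayed signal takes over
MAX_DELAY_SAMPLES = 10.0  # upper end of the random range


def input_delay(t, rng):
    """Return the input delay in seconds applied at time t.

    Assumption: the random factor is a uniform real number in [0, 10].
    """
    if t < SWITCH_TIME:
        return 0.0
    return rng.uniform(0.0, MAX_DELAY_SAMPLES) * SAMPLING_TIME


rng = random.Random(0)  # fixed seed so that the sketch is repeatable
delays = [input_delay(k * 0.5, rng) for k in range(16)]  # t = 0.0 ... 7.5 s
```

Under these assumptions the added delay never exceeds 10 sampling periods, that is, 0.003 s.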

Experimental Results.

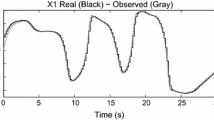

Table 1 shows the identification errors for all state variables. Fig. 2 shows two graphs: the first shows the velocity tracking, and the second shows the tracking of the flux magnitude, which is defined as:

5 Conclusions

In general, the proposed scheme adapts quickly even in the presence of real-time disturbances and the added delays in the input to the system. More specifically, the errors shown in Table 1 are small for all state variables, even for the alpha and beta currents, considering that their real values can be as high as 30 A. Figure 2 shows velocity tracking with good performance and a flux magnitude that is maintained around its reference. When the added time delay starts at 4 s, the performance changes noticeably; however, the presented RHONN identifier-control scheme is still capable of maintaining the dynamics of the desired trajectory. Moreover, it is important to note that real-time tests involve a series of external factors, such as imperfections of the prototype components, which induce noise in the reading of the signals. We are working on improving our scheme and the equipment available to test it.

References

1. Boukas, E., Liu, Z.: Deterministic and Stochastic Time-Delay Systems. Birkhäuser, Boston (2012)

2. Mahmoud, M.: Switched Time-Delay Systems: Stability and Control. Springer, Heidelberg (2014)

3. Norgaard, M., Ravn, O., Poulsen, N., Hansen, L.: Neural Networks for Modelling and Control of Dynamic Systems. Springer, New York (2000)

4. Fu, L., Li, P.: The research survey of system identification. In: 5th International Conference on Intelligent Human-Machine Systems and Cybernetics (IHMSC), Hangzhou, China, pp. 397–401. IEEE (2013)

5. Sanchez, E., Alanis, A., Loukianov, A.: Discrete-Time High Order Neural Control. Springer, Berlin (2008)

6. Haykin, S.: Neural Networks and Learning Machines. Prentice Hall, Upper Saddle River (2009)

7. Rovithakis, G., Christodoulou, M.: Adaptive Control with Recurrent High-order Neural Networks: Theory and Industrial Applications. Springer, London (2012)

8. Liu, M.: Neural network based fault tolerant control of a class of nonlinear systems with input time delay. In: Advances in Neural Networks - ISNN 2004: International Symposium on Neural Networks, Dalian, China, pp. 91–96. Springer (2004)

9. Spandan, R., Indra, K.: Adaptive robust tracking control of a class of nonlinear systems with input delay. Nonlinear Dyn. 85(2), 1124–1139 (2016)

10. Li, L., Niu, B.: Adaptive neural network tracking control for switched strict-feedback nonlinear systems with input delay. In: 2015 Sixth International Conference on Intelligent Control and Information Processing (ICICIP), Wuhan, China (2015)

11. Li, H., Wang, L., Du, H., Boulkroune, A.: Adaptive fuzzy backstepping tracking control for strict-feedback systems with input delay. IEEE Trans. Fuzzy Syst. 25, 642–652 (2017)

12. Ogata, K.: Discrete-time Control Systems. Prentice Hall, Upper Saddle River (1995)

13. Alanis, A., Rios, J., Rivera, J., Arana-Daniel, N., Lopez-Franco, C.: Real-time discrete neural control applied to a linear induction motor. Neurocomputing 164, 240–251 (2015)

14. Alanis, A., Rios, J., Arana-Daniel, N., Lopez-Franco, C.: Neural identifier for unknown discrete-time nonlinear delayed systems. Neural Comput. Appl. 27(8), 2453–2464 (2016)

15. Rios, J., Alanis, A., Lopez-Franco, M., Lopez-Franco, C., Arana-Daniel, N.: Real-time neural identification and inverse optimal control for a tracked robot. Adv. Mech. Eng. 9(3), 1–18 (2017)

Acknowledgments

The authors acknowledge the support of CONACYT Mexico through Projects CB256769 and CB258068 (“Project supported by Fondo Sectorial de Investigación para la Educación”).

Copyright information

© 2018 Springer International Publishing AG

About this paper

Cite this paper

Rios, J.D., Alanís, A.Y., Arana-Daniel, N., López-Franco, C. (2018). Neural Identifier-Control Scheme for Nonlinear Discrete Systems with Input Delay. In: Melin, P., Castillo, O., Kacprzyk, J., Reformat, M., Melek, W. (eds) Fuzzy Logic in Intelligent System Design. NAFIPS 2017. Advances in Intelligent Systems and Computing, vol 648. Springer, Cham. https://doi.org/10.1007/978-3-319-67137-6_26

DOI: https://doi.org/10.1007/978-3-319-67137-6_26

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-67136-9

Online ISBN: 978-3-319-67137-6

eBook Packages: Engineering, Engineering (R0)