Abstract

Both Smale’s alpha theory and Rump’s interval theorem provide the conditions which guarantee the existence of a simple solution of a square nonlinear system. In this paper, we generalize the conclusion provided by Rall to reveal the relationship between Smale’s alpha theory and Rump’s interval theorem. By point estimates, we propose the conditions under which the condition of Rump’s interval theorem holds. Furthermore, using only the information of the given system at the initial approximate point, we give the convergence conditions of interval Newton’s algorithm proposed by Rump.

Z. Li—This research was supported by Chinese National Natural Science Foundation under Grant Nos. 11601039, 11671169, 11501051, by the open fund Key Lab. of Symbolic Computation and Knowledge Engineering under Grant No. 93K172015K06, and by the Education Department of Jilin Province, “13th Five-Year” science and technology project under Grant No. JJKH20170618KJ.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Solving a nonlinear system in the form \(\varvec{f}(\varvec{x})=\varvec{0}\) with \(\varvec{f}={({f}_1, f_2, \ldots , f_n)}^{T}\) and \(\varvec{x}={(x_1, \ldots , x_n)}^T\) is one of the most fundamental problems in scientific computing. In this paper, we assume that \(\varvec{f}: \mathbb {R}^n \rightarrow \mathbb {R}^n\) and \(f_1, f_2, \ldots , f_n\) have all order continuous partial derivatives. Denote the Jacobian matrix of \(\varvec{f}\) at \(\varvec{x}\) by \(\varvec{f}'(\varvec{x})\).

Newton’s method and its modifications have long played an important role in solving nonlinear systems. Under certain conditions, Newton’s method constructs a sequence of iteration points that will converge to a solution of the given nonlinear system. In 1948, the author of [2] established the Kantorovich theorem based on the assumption that the Jacobian matrix of the nonlinear system is Lipschitz continuous on some domain. The Kantorovich theorem first gives the condition to ensure that a simple solution exists close to the initial point and the Newton iteration sequence quadratically converges to this simple solution. Using the technique of point estimates, Smale et al. [15,16,17] developed the alpha theory to locate and approximate simple solutions. The alpha theory requires only information concerning the nonlinear system at the initial point of the Newton iteration. By introducing the dominating sequence technique, Wang and Han [18] improved both the condition and conclusion of the alpha theory. With the aid of Schröder operator, Giusti et al. [3] provided a criterion for locating clusters of solutions of univariate nonlinear functions. Later on, Giusti et al. [4] generalized their results to locate breadth-one singular solutions of multivariate nonlinear systems. For the performance of the alpha theory, Hauenstein and Sottile [6] described the program alphaCertified to certify solutions of polynomial systems. Recently, Hauenstein and Levandovskyy [5] extended the program alphaCertified to verify solutions to polynomial-exponential systems.

Interval arithmetic is another important tool of verification methods. In 1960s, Krawczyk [9] first introduced an interval version of Newton’s method for verifying the existence of simple solutions. Moore [10] proposed computationally verifiable sufficient condition for interval Newton’s method given by Krawczyk. Rump [12] made interval Newton’s method perform better in practice, which is called Rump’s interval theorem and included in verifynlss function in INTLAB toolbox [13] in Matlab. Based on the deflation technique using smoothing parameters, Rump and Graillat [14] described a verification algorithm to verify multiple solutions of univariate nonlinear functions and double solutions of multivariate nonlinear systems. Further, Li and Zhi [8] provided an algorithm to verify breadth-one singular solutions of polynomial systems, which had been generalized to deal with the verification of isolated singular solutions in [7]. By combining interval algorithms with some other methods, Yang et al. [19] investigated the verification for real solutions of positive-dimensional polynomial systems.

In 1980, Rall [11] exhibited the relationship between the Kantorovich theorem and Moore’s interval theorem. By the quantities of the Kantorovich theorem, Rall provided the conditions under which Moore’s verifiable sufficient condition holds. For an initial approximate \(\varvec{x}^{(0)}\in \mathbb {R}^n \) and a radius \(\rho >0\), let \(\varvec{X}_{\rho }\) denote the set \(\{\varvec{x}: \Vert \varvec{x}-\varvec{x}^{(0)}\Vert _{\infty }<\rho \}\), and let \(\eta \), B, \(\kappa \) be the constants such that

where \(\varOmega \) is a sufficiently large region containing \(\varvec{x}^{(0)}\). Rall’s conclusion is that if

then

holds for any \(\rho \) satisfying the inequality

Since the alpha theory and Rump’s interval theorem are respectively the generalization of the Kantorovich theorem and Moore’s interval theorem, we generalize Rall’s conclusion to discuss the relationship between the alpha theory and Rump’s interval theorem in this paper. By only the information of the given system at the initial approximate point, we provide the conditions to guarantee that we can obtain an approximate point after finite Newton’s iterations, where this approximate iteration point corresponds to an interval solution satisfying the condition of Rump’s interval theorem. The next section will give some notation and background results.

2 Notation and Preliminaries

First of all, we emphasize that the norm \(\Vert \cdot \Vert \) of the vector and the matrix in this paper are both the infinite norm \(\Vert \cdot \Vert _{\infty }\) since the metric for the interval vector is closely related to the infinite norm.

Henceforward, we use boldface letters to express tuples and denote their entries by the same letter with subscripts, for example \(\varvec{\alpha }={({\alpha }_1, \ldots , {\alpha }_n)}^T\). Denote the usual product order on \(\mathbb {R}^n\) by \(\le \), that is, for arbitrary \(\varvec{\alpha }, \varvec{\beta }\in \mathbb {R}^n\), \(\varvec{\alpha }\le \varvec{\beta }\) if and only if \(\alpha _i\le \beta _i\) for \(1\le i\le n\).

For \(\varvec{x}\in \mathbb {R}^n\), if \(\varvec{f}'(\varvec{x})\) is nonsingular, then define

Given the initial approximate \(\varvec{x}^{(0)}\) with the associated simple root \(\varvec{x}^*\) of \(\varvec{f}\), we let \(\alpha \), \(\beta \) and \(\gamma \) to stand for \(\alpha (\varvec{f}, \varvec{x}^{(0)})\), \(\beta (\varvec{f}, \varvec{x}^{(0)})\) and \(\gamma (\varvec{f}, \varvec{x}^{(0)})\), respectively. Applying Newton’s method for \(\varvec{f}\) can get the Newton iteration sequence \(\{\varvec{x}^{(k)}\}\), that is,

The alpha theory provides the convergence conditions which ensure the sequence \(\{\varvec{x}^{(k)}\}\) converges to \(\varvec{x}^*\) only with the values \(\alpha \), \(\beta \) and \(\gamma \). The dominating sequence technique is a powerful tool for improving the alpha theory. The dominating sequence \(\{t^{(k)}\}\) is produced by the Newton iteration with the initial approximate \(t^{(0)}=0\) for the univariate function

where the equation \(h(t)=0\) has the following two solutions

The following theorem is a version of the alpha theory given by Wang and Han, where the condition on the quantity \(\alpha \) is best possible.

Theorem 1

[4, 18] If \(0<\alpha <3-2\sqrt{2}\), then for any \(t^{*}\le \rho <t^{**}\), the system \(\varvec{f}(\varvec{x})=\varvec{0}\) has exactly one simple root \(\varvec{x}^{*}\) in \(\overline{B}(\varvec{x}^{(0)}, \rho )\). In addition, the Newton iteration sequence \(\{\varvec{x}^{(k)}\}\) converges quadratically to \(\varvec{x}^{*}\), and the dominating sequence \(\{t^{(k)}\}\) increases and converges quadratically to \(t^*\). Furthermore, for all \(k\in \mathbb {N}\),

with

Denote the set of intervals by \(\mathbb {I}\mathbb {R}\). An interval vector \(\mathbf X =[\underline{\varvec{x}}, \overline{\varvec{x}}]\in \mathbb {I}\mathbb {R}^n\) with \(\underline{\varvec{x}}, \overline{\varvec{x}}\in \mathbb {R}^n\) and \(\underline{\varvec{x}}\le \overline{\varvec{x}}\) is defined by

For \(\varvec{x}\in \mathbb {R}^n\), \(\mathbf X =[\underline{\varvec{x}}, \overline{\varvec{x}}]\in \mathbb {I}\mathbb {R}^n\), \(\varvec{x}+\mathbf X =[\varvec{x}+\underline{\varvec{x}}, \varvec{x}+\overline{\varvec{x}}]\). Let \(\mathbf Y _\rho =\{\varvec{y}\in \mathbb {R}^n: \Vert \varvec{y}\Vert \le \rho \}\), then \(\overline{B}(\varvec{x}, \rho )=\varvec{x}+\mathbf Y _\rho \).

The norm of the interval vector \(\mathbf X =[\underline{\varvec{x}}, \overline{\varvec{x}}]\) is defined by

Besides \(\mathrm {int}(\mathbf X )\) designates the interior of the interval vector \(\mathbf X \). Given a set \(\mathbf Z \subset \mathbb {R}^n\), the interval hull of \(\mathbf Z \) is the narrowest interval vector containing \(\mathbf Z \), namely,

Given a continuous mapping \(\varvec{g}: \mathbb {R}^{n} \rightarrow \mathbb {R}^{m}\) and an interval vector \(\mathbf X \), the interval vector \(\varvec{g}(\mathbf X )\in \mathbb {I}\mathbb {R}^{m}\) is defined as

Given an interval matrix \(\mathbf A \in \mathbb {I}\mathbb {R}^{m\times n}\) and an interval vector \(\mathbf X \in \mathbb {I}\mathbb {R}^{n}\), the interval vector \(\mathbf A {} \mathbf X \) is defined by

Specially, the norm of the interval matrix \(\mathbf A \) is defined as

The following theorem is a version of the interval Newton’s method given by Rump.

Theorem 2

[12] Given \({\varvec{x}}^{(0)}\in \mathbb {R}^n\), \(\mathbf Y \in \mathbb {I} \mathbb {R}^n\) with \(\varvec{0}\in \mathbf Y \), \(R\in \mathbb {R}^{n\times n}\), if

then there exists a unique \({{\varvec{x}}^{*}}\in {\varvec{x}}^{(0)}+\mathbf Y \) such that \(\varvec{f}({{\varvec{x}}^{*}})=\varvec{0}\).

3 Main Results

To give the main results of this paper, we need the following functions,

Here we give some lemmas and one proposition from which the main theorem will easily follow.

Lemma 1

[15] Given \(\widetilde{\varvec{x}}\in \mathbb {R}^n\), if \(\gamma \Vert \widetilde{\varvec{x}}-{\varvec{x}}^{(0)}\Vert <1-\sqrt{2}/{2}\), then

Lemma 2

If \(0<\alpha < 3-2 \sqrt{2}\), then for all \(k\ge 1\),

Proof

If \(0<\alpha <3-2 \sqrt{2}\), then by Theorem 1,

Therefore

Since the right hand side of the above inequality monotonously increases from 0 to \(1-\sqrt{2}/2\) as \(\alpha \) goes from 0 to \(3-2\sqrt{2}\), it follows that (3) holds. By means of Lemma 1, (4) follows. \(\square \)

Lemma 3

If

then

Proof

Given an arbitrary real vector \(\varvec{y}\in \mathbf{Y }_{\rho }\), we expand the Jacobian matrix \(\varvec{f}'(\varvec{x}^{(0)}+\varvec{y})\) into power series and get

If (5) holds, then

Suppose that (5) (6) hold, then for an arbitrary real vector \(\varvec{y}\in \mathbf X _{\rho }\), we can infer that

which implies that (7) holds. \(\square \)

Lemma 4

Let

then for an arbitrary \(0< \alpha < \alpha ^*\), we have

Proof

According to Cartan’s root-finding formula, the equation \(g_3(\alpha )=0\) has only a positive real root \(\alpha ^*\) as in (8). Obviously, \(g'_1(\alpha )<0\) and \(g'_2(\alpha )>0\) for all \(0<\alpha < \alpha ^*\). Thus both \(\theta (\alpha )\) and \(\omega _2(\alpha )\) monotonously decrease on the interval \((0, 3-2\sqrt{2})\). A routine computation gives rise to

then for all \(0<\alpha <\alpha ^*\), \(\omega '_1(\alpha )>0\). This lemma follows immediately from what we have proved. \(\square \)

Proposition 1

Under the condition (5), the inequality (6) holds if and only if

Proof

Define \(\varDelta ={({q}/{2})}^2+{({p}/{3})}^3\) with

then

It follows by Lemma 4 that for all \(\alpha >0\), \(p<0\) and \(q<0\), then we have three cases to consider.

Case I: \(\alpha >\alpha ^*\). In this case, \(\varDelta >0\) and the equation

has only one real solution

An easy computation yields that for all \(\alpha >\alpha ^*\), \(\rho ^*>{(1-{\sqrt{2}}/{2})}/{\gamma }\). Therefore, in this case, (6) holds if and only if \(\rho >\rho ^*\), which contradicts the condition (5).

Case II: \(\alpha =\alpha ^*\). In this case, \(\varDelta =0\) and (11) has only two unequal real solutions

Clearly,

Hence in this case, (6) can not hold under the condition (5).

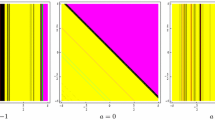

Case III: \(0<\alpha <\alpha ^*\). In this case, \(\varDelta <0\) and (11) has three unequal real solutions

Recalling Lemma 4, we know that for all \(0<\alpha <\alpha ^*\),

Thus in this case, (6) holds if and only if (10) holds.

As a whole, under the condition (5), the inequality (6) holds if and only if (9) and (10) hold. \(\square \)

On the basis of \(\alpha \), \(\beta \) and \(\gamma \), the following theorem provides the conditions such that (7) holds, which is an immediate conclusion of Proposition 1.

Theorem 3

If \(0<\alpha <{\alpha }^*\), then for any \(\rho \) satisfying the inequality

the condition (7) holds.

The following corollary indicates that the alpha theory is of greater precision than Rump’s interval theorem, which can be directly deduced by an easy computation.

Corollary 1

If \(0<\alpha <{\alpha }^*\), then

For the Newton iteration sequence \(\{ \varvec{x}^{(k)}\}\), define

In view of the quantity \(\alpha \) of the alpha theory proposed by Wang and Han [18], we give the following convergence condition of interval Newton’s algorithm proposed by Rump.

Proposition 2

Let \(\lceil \cdot \rceil \) be the integer ceiling function, \(p(\alpha )\) be defined by

and \(q(\alpha )\) be defined in (2). If \(0<\alpha < 3-2 \sqrt{2}\), then for any

the condition

holds for any \(\rho ^{(k)}\) satisfying the inequality

Proof

By Theorem 1 and Lemma 2, we know that if \(0<\alpha < 3-2 \sqrt{2}\), then for any \(k\in \mathbb {N}\),

If \(0<\alpha < 3-2 \sqrt{2}\), then for all \(k \in \mathbb {N}\),

which implies that for all \(k \in \mathbb {N}\),

Hence if \(0<\alpha < 3-2 \sqrt{2}\), then for all \(k \in \mathbb {N}\),

which implies that \(0<\alpha (\varvec{f},\varvec{x}^{(k)})<\alpha ^*\) holds if the iteration number k satisfies the inequality (13). Our conclusion will follow from Theorem 3. \(\square \)

With the aid of the quantity \(\alpha \) of the alpha theory given in [1], the conclusion of the above proposition can be improved as follows.

Proposition 3

If \(0<\alpha \le {(13-3\sqrt{17})}/{4}\), then for any \(k\ge 3\), the condition (14) holds for any \(\rho ^{(k)}\) satisfying the inequality (15).

Proof

Since the function \(\alpha / {\psi (\alpha )}^2\) monotonously increases on the interval \([0, 1-\sqrt{2}/2)\), it follows that \({\alpha }/{{\psi (\alpha )}^2}<1\) for any \(0<\alpha \le {(13-3\sqrt{17})}/{4}\). Recalling Proposition 1 in [15], we can deduce that if \(0<\alpha \le {(13-3\sqrt{17})}/{4}\), then for any \(k\in \mathbb {N}\),

As a result, if \(0<\alpha \le {(13-3\sqrt{17})}/{4}\), then \(0<\alpha (\varvec{f},\varvec{x}^{(3)})<\alpha ^*\). \(\square \)

4 Example

In this section, we propose some examples to illustrate our conclusion, which are done in Matlab R2012a with INTLAB V6 under Windows 7. In these examples, the true interval of the display as \({-0.0059\_\_\_\_\_\_\_\_\_\_}\) is obtained by subtracting and adding 1 to the last displayed digit, namely,

Example 1

Let

and \(\varvec{x}^{(0)}={(1.8, 1.8)}^T\). The program of the alpha theory computes

then by Proposition 3, we can immediately deduce that \(0<\alpha (\varvec{f}, \varvec{x}^{(3)})<\alpha ^*\). The values of \(\alpha (\varvec{f}, \varvec{x}^{(k)})\), \(\rho ^*(\varvec{f}, \varvec{x}^{(k)})\), \(\rho ^{**}(\varvec{f}, \varvec{x}^{(k)})\), \(k=1, 2, 3\), are shown in Table 1, and the values of \(\varvec{x}^{(k)}\), \(\rho ^{(k)}\), \(S(\mathbf{Y }_{\rho ^{(k)}}, \varvec{x}^{(k)})\), \(k=1, 2, 3\), are shown in Table 2.

Example 2

[11] Let

with

where \(t_i, w_i,i=1,2, \ldots , 9\), are respectively the nodes and weights of Gaussian integration rule of order 17 on the interval [0, 1] (See Table 3).

For the initial approximate

we have

It follows by Proposition 3 that \(0<\alpha (\varvec{f}, \varvec{x}^{(3)})<\alpha ^*\). To be precise,

Choose \(\rho ^{(3)}=2.206476714805001\mathrm {e}\text{- }09\), then \(S(\mathbf{Y }_{\rho ^{(3)}}, \varvec{x}^{(3)})\subseteq \mathrm {int}(\mathbf{Y }_{\rho ^{(3)}})\). The values of \(\varvec{x}^{(3)}\) and \(S(\mathbf{Y }_{\rho ^{(3)}}, \varvec{x}^{(3)})\) are shown in Table 4, where i stands for the coordinate index.

Example 3

Let

Given \(\varvec{x}^{(0)}\) with \(x_i^{(0)}=1.28\), \(i=1, 2, \ldots , 100\), it follows that

Recalling Proposition 2, we know that there exists \(k\in \mathbb {N}\) such that \(\alpha (\varvec{f}, \varvec{x}^{(k)})<\alpha ^*\). Indeed, for the first-step iteration point \(\varvec{x}^{(1)}\), we have

Choose \(\rho ^{(1)}=0.0229019461130694\), then \(S(\mathbf{Y }_{\rho ^{(1)}}, \varvec{x}^{(1)})\subseteq \mathrm {int}(\mathbf{Y }_{\rho ^{(1)}})\).

References

Blum, L., Cucker, F., Shub, M., Smale, S.: Complexity and Real Computation. Springer, New York (1998)

Kantorovich, L.V.: Functional analysis and applied mathematics. Uspehi. Mat. Nauk. 3(6), 89–185 (1948)

Giusti, M., Lecerf, G., Salvy, B., Yakoubsohn, J.C.: On location and approximation of clusters of zeros of analytic functions. Found. Comput. Math. 5(3), 257–311 (2005)

Giusti, M., Lecerf, G., Salvy, B., Yakoubsohn, J.C.: On location and approximation of clusters of zeros: case of embedding dimension one. Found. Comput. Math. 7(1), 1–58 (2007)

Hauenstein, J.D., Levandovskyy, V.: Certifying solutions to square systems of polynomial-exponential equations. J. Symb. Comput. 79(3), 575–593 (2015)

Hauenstein, J.D., Sottile, F.: Algorithm 921: alphacertified: certifying solutions to polynomial systems. ACM Trans. Math. Softw. 38(4), 1–20 (2011)

Li, N., Zhi, L.: Verified error bounds for isolated singular solutions of polynomial systems: case of breadth one. Theor. Comput. Sci. 479, 163–173 (2013)

Li, N., Zhi, L.: Verified error bounds for isolated singular solutions of polynomial systems. SIAM J. Numer. Anal. 52(4), 1623–1640 (2014)

Krawczyk, R.: Newton-algorithmen zur bestimmung von nullstellen mit fehlerschranken. Computing 4, 187–201 (1969)

Moore, R.E.: A test for existence of solutions to nonlinear system. SIAM J. Numer. Anal. 14(4), 611–615 (1977)

Rall, L.B.: A comparison of the existence theorems of Kantorvich and Moore. SIAM J. Numer. Anal. 17(1), 148–161 (1980)

Rump, S.M.: Solving algebraic problems with high accuracy. In: Kulisch, W.L., Miranker, W.L. (eds.) A New Approach to Scientific Computation, pp. 51–120. Academic Press, San Diego (1983)

Rump, S.M.: INTLAB-Interval Laboratory. Springer, Netherlands, Berlin (1999)

Rump, S.M., Graillat, S.: Verified error bounds for multiple roots of systems of nonlinear equations. Numer. Algorithm 54, 359–377 (2009)

Smale, S.: Newton method estimates from data at one point. The Merging of Disciplines: New Directions in Pure, Applied and Computational Mathematics, pp. 185C–196C. Springer, Berlin (1986)

Shub, M., Smale, S.: Computational complexity: on the geometry of polynomials and a theory of cost. I. Ann. Sci. Ècole Norm. Sup. 18(1), 107–142 (1985)

Shub, M., Smale, S.: Computational complexity: on the geometry of polynomials and a theory of cost. II. SIAM J. Comput. 15(1), 145–161 (1986)

Wang, X.H., Han, D.F.: On dominating sequence method in the point estimate and Smale theorem. Sci. China Ser. A 33(2), 135–144 (1990)

Yang, Z., Zhi, L., Zhu, Y.: Verfied error bounds for real solutions of positive-dimensional polynomial systems. In: The 2013 International Symposium on Symbolic and Algebraic Computation, pp. 371–378. ACM press, San Jose (2013)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Li, Z., Wan, B., Zhang, S. (2017). The Convergence Conditions of Interval Newton’s Method Based on Point Estimates. In: Gerdt, V., Koepf, W., Seiler, W., Vorozhtsov, E. (eds) Computer Algebra in Scientific Computing. CASC 2017. Lecture Notes in Computer Science(), vol 10490. Springer, Cham. https://doi.org/10.1007/978-3-319-66320-3_20

Download citation

DOI: https://doi.org/10.1007/978-3-319-66320-3_20

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-66319-7

Online ISBN: 978-3-319-66320-3

eBook Packages: Computer ScienceComputer Science (R0)