Abstract

We present a local convergence analysis of a sixth order iterative method for approximate a locally unique solution of an equation defined on the real line. Earlier studies such as Sharma et al. (Appl Math Comput 190:111–115, 2007) have shown convergence of these methods under hypotheses up to the fifth derivative of the function although only the first derivative appears in the method. In this study we expand the applicability of these methods using only hypotheses up to the first derivative of the function. Numerical examples are also presented in this study.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Newton-like methods are famous for approximating a locally unique solution \(x^*\) of equation

where \(F:D\subseteq {\mathbb {R}}\rightarrow {\mathbb {R}}\) is a differentiable nonlinear function and D is a convex subset of \({\mathbb {R}}.\) These methods are studied based on: semi-local and local convergence [2, 3, 15, 16, 20].

The methods such as Euler’s, Halley’s, super Halley’s, Chebyshev’s [2–5, 8, 12, 17, 20] require the evaluation of the second derivative \(F''\) at each step. To avoid this computation, many authors have used higher order multi-point methods [1, 2, 6, 9–11, 14, 16, 19, 20].

Newton’s method is undoubtedly the most popular method for approximating a locally unique solution \(x^*\) provided that the initial point is close enough to the solution. In order to obtain a higher order of convergence Newton-like methods have been studied such as Potra–Ptak, Chebyshev, Cauchy Halley and Ostrowski method. The number of function evaluations per step increases with the order of convergence. In the scalar case the efficiency index [13, 16, 20] \(EI=p^{\frac{1}{m}}\) provides a measure of balance where p is the order of the method and m is the number of function evaluations.

According to the Kung–Traub conjecture the convergence of any multi-point method without memory cannot exceed the upper bound \(2^{m-1}\) [16, 20] (called the optimal order). Hence the optimal order for a method with three function evaluations per step is 4. The corresponding efficiency index is \(EI= 4^{\frac{1}{3}}=1.58740\ldots \) which is better than Newtons method which is \(EI= 2^{\frac{1}{2}}=1.414\ldots \) Therefore, the study of new optimal methods of order four is important.

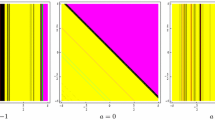

We study the local convergence analysis of three step King-like method with a parameter defined for each \(n=0,1,2,\ldots \) by

where \(x_0\in D \) is an initial point and \( \alpha \in {\mathbb {R}}\) a parameter. Sharma et al. [19] showed the sixth order of convergence of method (1.2) using Taylor expansions and hypotheses reaching up to the fourth derivative of function F although only the first derivative appears in method (1.2). These hypotheses limit the applicability of method (1.2). As a motivational example, let us define function F on \(D=[-\frac{1}{2},\frac{5}{2}]\) by

Choose \(x^*=1.\) We have that

Then, obviously function F does not have bounded third derivative in X, since \(F'''(x)\) is unbounded at \(x=0\) (i.e., unbounded in D). The results in [19] require that all derivatives up to the fourth are bounded. Therefore, the results in [19] cannot be used to show the convergence of method (1.2). However, our results can apply (see Example 3.3). Notice that, in-particular there is a plethora of iterative methods for approximating solutions of nonlinear equations defined on \({\mathbb {R}}\) [1, 2, 5–7, 9–11, 14, 16, 19, 20]. These results show that if the initial point \(x_0\) is sufficiently close to the solution \(x^*,\) then the sequence \(\{x_n\}\) converges to \(x^*.\) But how close to the solution \(x^*\) the initial guess \(x_0\) should be? These local results give no information on the radius of the convergence ball for the corresponding method. We address this question for method (1.2) in Sect. 2. The same technique can be used to other methods. In the present study we extend the applicability of these methods by using hypotheses up to the first derivative of function F and contractions. Moreover we avoid Taylor expansions and use instead Lipschitz parameters. Indeed, Taylor expansions and higher order derivatives are needed to obtain the equation of the local error and the order of convergence of the method. Using our technique we find instead the computational order of convergence (COC) or the approximate computational order of convergence that do not require the usage of Taylor expansions or higher order derivatives (see Remark 2.2, part 4). Moreover, using the Lipschitz constants we determine the radius of convergence of method (1.2). Notice also that the local error in [19] cannot be used to determine the radius of convergence of method (1.2).

We do not address the global convergence of the three-step King-like method (1.2) in this study. Notice however that the global convergence of King’s method [drop the third step of method (1.2) to obtain King’s method] has not been studied either. This is mainly due to the fact that these methods are considered as special case of Newton-like methods for which there are many results (see e.g [15]). Therefore, one can simply specialize global convergence results for Newton-like methods to obtain the specific results for method (1.2) or King’s method.

The paper is organized as follows. In Sect. 2 we present the local convergence analysis. We also provide a radius of convergence, computable error bounds and uniqueness result not given in the earlier studies using Taylor expansions. Special cases and numerical examples are presented in the concluding Sect. 3.

2 Local convergence analysis

We present the local convergence analysis of method (1.2) in this section. Let \(L_0 >0, L >0, M \ge 1\) be given parameters. It is convenient for the local convergence analysis of method (1.2) that follows to introduce some scalar functions and parameters. Define functions \(g_1, \, p,\, h_p,\, q,\, h_q\) on the interval \([0, \frac{1}{L_0})\) by

and parameter \(r_1\) by

We have that \(h_p(0)=h_q(0)=-1 <0\) and \(h_q(t)\rightarrow +\infty , h_p(t)\rightarrow +\infty \) as \(t\rightarrow \frac{1}{L_0}^-.\) It follows from the intermediate value theorem that functions \(h_p, h_q\) have zeros in the interval \((0, \frac{1}{L_0}).\) Denote by \(r_p, r_q\) the smallest such zeros. Moreover, define functions \(g_2\) and \(h_2\) on the interval \([0, r_p)\) by

and

We have that \(h_2(0)=-1 < 0\) and \(h_2(t)\rightarrow +\infty \) as \(t\rightarrow r_p^-.\) Denote by \(r_2\) the smallest zero of function \(h_2\) in the interval \((0, r_p).\) Furthermore, define functions \(g_3\) and \(h_3\) on the interval \([0, \min \{r_p, r_q\})\) by

and

We have that \(h_3(0)=-1 < 0\) since \(g_1(0)=g_2(0)=0\) and \(h_3(t)\rightarrow +\infty \) as \(t\rightarrow \min \{r_p, r_q\}.\) Denote by \(r_3\) the smallest zero of function \(h_3\) in the interval \((0, \min \{r_p, r_q\}).\) Set

Then, we have that

and for each \( t\in [0, r)\)

and

Denote by \(U(v,\rho ), \bar{U}(v,\rho )\) the open and closed balls in \({\mathbb {R}}\) with center \(v\in {\mathbb {R}}\) and of radius \(\rho >0.\) Next,we present the local convergence analysis of method (1.2) using the preceding notation.

Theorem 2.1

Let \(F: D\subset {\mathbb {R}}\rightarrow {\mathbb {R}}\) be a differentiable function. Suppose there exist \(x^*\in D,\, L_0 > 0,\, L >0,\, M \ge 1\) such that \( F(x^*)=0,\,\, F'(x^*)\ne 0\),

and

where the radius of convergence r is defined by (2.1). Then, the sequence \(\{x_n\}\) generated by method (1.2) for \(x_0\in U(x^*, r)-\{x^*\}\) is well defined, remains in \(U(x^*, r)\) for each \(n=0,1,2,\ldots \) and converges to \(x^*.\) Moreover, the following estimates hold

and

where the \(''g''\) functions are defined above Theorem 2.1. Furthermore, for \(T\in [r, \frac{2}{L_0})\) the limit point \(x^*\) is the only solution of equation \(F(x)=0\) in \(\bar{U}(x^*,T)\cap D\).

Proof

We shall show estimates (2.12)–(2.14) using mathematical induction. By hypothesis \(x_0\in {U}(x^*, r)-\{x^*\},\) (2.1) and (2.8), we have that

It follows from (2.15) and Banach Lemma on invertible functions [2, 3, 16, 17, 20] that \(F'(x_0)\ne 0\) and

Hence, \(y_0\) is well defined by the first sub-step of method (1.2) for \(n=0.\) Using (2.1), (2.3), (2.9) and (2.16) we get that

which shows (2.12) for \(n=0\) and \(y_0\in U(x^*, r).\) We can write that

Notice that \(|x^*+\theta (x_0-x^*)-x^*|=\theta |x_0-x^*| < r.\) Hence, we get that \(x^*+\theta (x_0-x^*)\in U(x^*, r).\) Then, by (2.10) and (2.18), we obtain that

We also have by (2.17) and (2.19) (for \(y_0=x_0\)) that

since \(y_0\in U(x^*, r)\). Next, we shall show \( F(x_0)-2F(y_0)\ne 0.\) Using (2.1), (2.4), (2.8), (2.20) and the hypothesis \(x_0\ne x^*,\) we have in turn that

It follows from (2.21) that

Hence, \(z_0\) and \(x_1\) are is well defined for \(n=0.\) Then, using (2.1), (2.5), (2.16), (2.17), (2.20) and (2.22) we get in turn that

which show (2.13) for \(n=0\) and \(z_0\in U(x^*, r).\) Next, we show that \( F(x_0){-}(\alpha -2)F(y_0)\ne 0.\) Using (2.1), (2.6), (2.8), (2.17) and \(x_0\ne x^*,\) we obtain in turn that

It follows from (2.24) that

Hence, \(x_1\) is well defined by the third sub-step of method (1.2) for \(n=0.\) Then using (2.1), (2.7),(2.16), (2.19) (for \(z_0=x_0\)), (2.23) (2.24) and (2.25), we obtain in turn that

so,

which shows (2.14) for \(n=0\) and \(x_1\in U(x^*, r).\) By simply replacing \(x_0, y_0, z_0,\, x_1\) by \(x_k, y_k, z_k, x_{k+1}\) in the preceding estimates we arrive at estimates (2.12) – (2.14). Then, it follows from the estimate \(|x_{k+1}-x^*| < |x_k-x^*| < r,\) we deduce that \(x_{k+1}\in U(x^*, r)\) and \(\lim _{k\rightarrow \infty }x_k=x^*.\) To show the uniqueness part, let \(Q=\int _0^1F'(y^*+\theta (x^*-y^*)d\theta \) for some \(y^*\in \bar{U}(x^*, T)\) with \(F(y^*)=0.\) Using (2.8), we get that

It follows from (2.27) and the Banach Lemma on invertible functions that Q is invertible. Finally, from the identity \(0=F(x^*)-F(y^*)=Q(x^*-y^*),\) we deduce that \(x^*=y^*\). \(\square \)

Remark 2.2

-

1.

In view of (2.8) and the estimate

$$\begin{aligned} |F'(x^*)^{-1}F'(x)|= & {} |F'(x^*)^{-1}(F'(x)-F'(x^*))+I|\\\le & {} 1+|F'(x^*)^{-1}(F'(x)-F'(x^*))| \le 1+L_0|x-x^*| \end{aligned}$$condition (2.10) can be dropped and M can be replaced by

$$\begin{aligned} M(t)=1+L_0 t \end{aligned}$$or

$$\begin{aligned} M(t)=M=2, \end{aligned}$$since \(t\in [0, \frac{1}{L_0}).\)

-

2.

The results obtained here can be used for operators F satisfying autonomous differential equations [2] of the form

$$\begin{aligned} F'(x)=P(F(x)) \end{aligned}$$where P is a continuous operator. Then, since \(F'(x^*)=P(F(x^*))=P(0),\) we can apply the results without actually knowing \(x^*.\) For example, let \(F(x)=e^x-1.\) Then, we can choose: \(P(x)=x+1.\)

-

3.

In [2, 3] we showed that \(r_1=\frac{2}{2L_0+L}\) is the convergence radius of Newton’s method:

$$\begin{aligned} x_{n+1}=x_n-F'(x_n)^{-1}F(x_n)\,\,\,\text {for each}\,\,\,n=0,1,2,\cdots \end{aligned}$$(2.28)under the conditions (2.8) and (2.9). It follows from the definition of r that the convergence radius r of the method (1.2) cannot be larger than the convergence radius \(r_1\) of the second order Newton’s method (2.28). As already noted in [2, 3] \(r_1\) is at least as large as the convergence radius given by Rheinboldt [18]

$$\begin{aligned} r_R=\frac{2}{3L}. \end{aligned}$$(2.29)The same value for \(r_R\) was given by Traub [20]. In particular, for \(L_0 < L\) we have that

$$\begin{aligned} r_R < r_1 \end{aligned}$$and

$$\begin{aligned} \frac{r_R}{r_1}\rightarrow \frac{1}{3}\,\,\, as\,\,\, \frac{L_0}{L}\rightarrow 0. \end{aligned}$$That is the radius of convergence \(r_1\) is at most three times larger than Rheinboldt’s.

-

4.

It is worth noticing that method (1.2) is not changing when we use the conditions of Theorem 2.1 instead of the stronger conditions used in [19]. Moreover, we can compute the computational order of convergence (COC) defined by

$$\begin{aligned} \xi = \ln \left( \frac{|x_{n+1}-x^*|}{|x_n-x^*|}\right) /\ln \left( \frac{|x_{n}-x^*|}{|x_{n-1}-x^*|}\right) \end{aligned}$$or the approximate computational order of convergence

$$\begin{aligned} \xi _1= \ln \left( \frac{|x_{n+1}-x_n|}{|x_n-x_{n-1}|}\right) /\ln \left( \frac{|x_{n}-x_{n-1}|}{|x_{n-1}-x_{n-2}|}\right) . \end{aligned}$$This way we obtain in practice the order of convergence in a way that avoids the bounds involving estimates using higher order Fréchet derivative of operator F.

3 Numerical examples

We present numerical examples in this section.

Example 3.1

Let \(D=(-\infty , +\infty ).\) Define function f of D by

Then we have for \(x^*=0\) that \(L_0=L=M=1.\) The parameters are

The comparison of radii are given in Table 1.

Example 3.2

Let \(D=[-1,1].\) Define function f of D by

Using (3.2) and \(x^*=0,\) we get that \(L_0=e-1 < L=e, M=2.\) The parameters are

The comparison of radii are given in Table 2.

Example 3.3

Returning back to the motivational example at the introduction of this study, we have \(L_0=L=96.662907,\,M=2.\) The parameters are

References

Amat, S., Busquier, S., Plaza, S.: Dynamics of the King’s and Jarratt iterations. Aequ. Math. 69, 212–213 (2005)

Argyros, I.K.: Convergence and Application of Newton-type Iterations. Springer, New York (2008)

Argyros, I.K., Hilout, Said: Computational Methods in Nonlinear Analysis. World Scientific Publ. Co., New Jersey (2013)

Argyros, I.K., Chen, D., Quian, Q.: The Jarratt method in Banach space setting. J. Comput. Appl. Math. 51, 103–106 (1994)

Argyros, I.K., George, S., Alberto, A.: Magrenan, high convergence order. J. Comput. Appl. Math. 282, 215–224 (2015)

Argyros, I.K., George, S.: Ball comparison between two optimal eight-order methods under weak conditions. SeMA Journal Boletin de la Sociedad Espaola de Matemtica Aplicada (2015). doi:10.1007/s40324-015-0035-z

Argyros, I.K., George, S.: Local convergence for an efficient eighth order iterative method with a parameter for solving equations under weak conditions. Int. J. Appl. Comput. Math. doi:10.1007/s40819-015-0078-y

Candela, V., Marquina, A.: Recurrence relations for rational cubic methods I: the Halley method. Computing 44, 169–184 (1990)

Chun, C., Neta, B., Scott, M.: Basins of attraction for optimal eighth order methods to find simple roots of nonlinear equations. Appl. Math. Comput. 227, 567–592 (2014)

Cordero, A., Maimo, J., Torregrosa, J., Vassileva, M.P., Vindel, P.: Chaos in King’s iterative family. Appl. Math. Lett. 26, 842–848 (2013)

Frontini, M., Sormani, E.: Some variants of Newton’s method with third order convergence. Appl. Math. Comput. 140, 419–426 (2003)

King, R.F.: A family of fourth-order methods for nonlinear equations. SIAM. J. Numer. Anal. 10, 876–879 (1973)

Kung, H.T., Traub, J.F.: Optimal order of one-point and multipoint iterations. J. Appl. Comput. Math. 21, 643–651 (1974)

Noor, M.A.: Some applications of quadrature formulas for solving nonlinear equations. Nonlinear Anal. Forum 12(1), 91–96 (2007)

Ortega, J.M., Rheinbolt, W.C.: Iterative Solution of Nonlinear Equations in Several Variables. Academic Press, New York (1970)

Petkovic, M.S., Neta, B., Petkovic, L., Džunič, J.: Multipoint Methods for Solving Nonlinear Equations. Elsevier, Amsterdam (2013)

Potra, F.A., Ptak, V.: Nondiscrete Induction and Iterative Processes, Research Notes in Mathematics, vol. 103. Pitman Publ., Boston (1984)

Rheinboldt, W.C.: An adaptive continuation process for solving systems of nonlinear equations. In: Tikhonov, A.N., et al. (eds.) Mathematical Models and Numerical Methods Publications, vol. 3(19), pp. 129–142. Banach Center, Warsaw

Sharma, J.R., Guha, R.K.: A family of modified Ostrowski methods with accelerated sixth order convergence. Appl. Math. Comput. 190, 111–115 (2007)

Traub, J.F.: Iterative Methods for the Solution of Equations. Prentice Hall, Englewood Cliffs (1964)

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Argyros, I.K., George, S. Ball convergence of a sixth order iterative method with one parameter for solving equations under weak conditions. Calcolo 53, 585–595 (2016). https://doi.org/10.1007/s10092-015-0163-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10092-015-0163-y