Abstract

The concept of an evolutionarily stable strategy (ESS), introduced by Smith and Price [4], is a refinement of Nash equilibrium in 2-player symmetric games in order to explain counter-intuitive natural phenomena, whose existence is not guaranteed in every game. The problem of deciding whether a game possesses an ESS has been shown to be \(\varSigma _{2}^{P}\)-complete by Conitzer [1] using the preceding important work by Etessami and Lochbihler [2]. The latter, among other results, proved that deciding the existence of ESS is both NP-hard and coNP-hard. In this paper we introduce a reduction robustness notion and we show that deciding the existence of an ESS remains coNP-hard for a wide range of games even if we arbitrarily perturb within some intervals the payoff values of the game under consideration. In contrast, ESS exist almost surely for large games with random and independent payoffs chosen from the same distribution [11].

The work of the second author was partially supported by the ERC Project ALGAME.

For a full version with detailed examples and proofs see https://arxiv.org/abs/1701.08108 [5].

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

1.1 Concepts of Evolutionary Games and Stable Strategies

Evolutionary game theory has proven itself to be invaluable when it comes to analysing complex natural phenomena. A first attempt to apply game theoretic tools to evolution was made by Lewontin [3] who saw the evolution of genetic mechanisms as a game played between a species and nature. He argued that a species would adopt the “maximin” strategy, i.e. the strategy which gives it the best chance of survival if nature does its worst. Subsequently, his ideas were improved by the seminal work of Smith and Price in [4] and Smith in [12] where the study of natural selection’s processes through game theory was triggered. They proposed a model in order to decide the outcome of groups consisting of living individuals, conflicting in a specific environment.

The key insight of evolutionary game theory is that a set of behaviours depends on the interaction among multiple individuals in a population, and the prosperity of any one of these individuals depends on that interaction of its own behaviour with that of the others. An evolutionarily stable strategy (ESS) is defined as follows: An infinite population consists of two types of infinite groups with the same set of pure strategies; the incumbents, that play the (mixed) strategy s and the mutants, that play the (mixed) strategy \(t \ne s\). The ratio of mutants over the total population is \(\epsilon \). A pair of members of the total population is picked uniformly at random to play a finite symmetric bimatrix game \(\varGamma \) with payoff matrix \(A_\varGamma \). Strategy s is an ESS if for every \(t \ne s\) there exists a constant ratio \(\epsilon _t\) of mutants over the total population, such that, if \(\epsilon < \epsilon _t\) the expected payoff of an incumbent versus a mutant is strictly greater than the expected payoff of a mutant versus a mutant. For convenience, we say that “s is an ESS of the game \(\varGamma \)”.

The concept of ESS tries to capture resistance of a population against invaders. This concept has been studied in two main categories: infinite population groups and finite population groups. The former was the one where this Nash equilibrium refinement was first defined and presented by [4]. The latter was studied by Schaffer [10] who shows that the finite population case is a generalization of the infinite population one. The current paper deals with the infinite population case which can be mathematically modelled in an easier way and in addition, its results may provide useful insight for the finite population case. (For an example of ESS analysis in an infinite population game see the full version [5].)

1.2 Previous Work

Searching for the exact complexity of deciding if a bimatrix game possesses an ESS, Etessami and Lochbihler [2] invent a nice reduction from the complement of the clique problem to a specific game with an appointed ESS, showing that the ess problem is coNP-hard. They also accomplish a reduction from the sat problem to ess, thus proving that ess is NP-hard too. This makes impossible for the ess to be NP-complete, unless NP = coNP. Furthermore, they provide a proof for the general ess being contained in \(\varvec{\varSigma }_\mathbf{2}^{{\varvec{P}}}\), the second level of the polynomial-time hierarchy, leaving open the question of what is the complexity class in which the problem is complete.

A further improvement of those results was made by Nisan [8], showing that, given a payoff matrix, the existence of a mixed ESS is coDP-hard. (See Papadimitriou and Yannakakis [9] for background on this class.) A notable consequence of both [2] and [8] is that the problem of recognizing a mixed ESS, once given along with the payoff matrix, is coNP-complete. However, the question of the exact complexity of ESS existence, given the payoff matrix, remained open. A few years later, Conitzer finally settles this question in [1], showing that ess is actually \(\varvec{\varSigma }_\mathbf{2}^{{\varvec{P}}}\)-complete.

On the contrary, Hart and Rinott [11] showed that if the symmetric bimatrix game is defined by a \(n \times n\) payoff matrix with elements independently randomly chosen according to a distribution F with exponential and faster decreasing tail, such as exponential, normal or uniform, then the probability of having an ESS with just 2 pure strategies in the support tends to 1 as n tends to infinity. In view of this result, and since the basic reduction of [2] used only 3 payoff values, it is interesting to consider whether ESS existence remains hard for arbitrary payoffs in some intervals.

1.3 Our Results

In the reduction of Etessami and Lochbihler that proves coNP-hardness of ess the values of the payoffs used, are \(0, \frac{k-1}{k}\) and 1, for \(k \in \mathbb {N}\). A natural question is if the hardness results hold when we arbitrarily perturb the payoff values within respective intervals (in the spirit of smoothed analysis [13]). In our work we extend the aforementioned reduction and show that the specific reduction remains valid even after significant changes of the payoff values.

We can easily prove that the evolutionarily stable strategies of a symmetric bimatrix game remain the exact same if we add, subtract or multiply (or do all of them) with a positive value its payoff matrix. However, that kind of value modification forces the entries of the payoff matrix to change in an entirely correlated manner, hence it does not provide an answer to our question. In this work, we prove that if we have partitions of entries of the payoff matrix with the same value for each partition, independent arbitrary perturbations of those values within certain intervals do not affect the validity of our reduction. In other words, we prove that determining ESS existence remains hard even if we perturb the payoff values associated with the reduction. En route we give a definition of “reduction robustness under arbitrary perturbations” and show how the reduction under examination adheres to this definition.

In contrast, [11] show that if the payoffs of a symmetric game are random and independently chosen from the same distribution F with “exponential or faster decreasing tail” (e.g. exponential, normal or uniform), then an ESS (with support of size 2) exists with probability that tends to 1 when n tends to infinity.

One could superficially get a non-tight version of our result by saying that (under supposed continuity assumptions in the ESS definition) any small perturbation of the payoff values will not destroy the reduction. However, in such a case (a) the continuity assumptions have to be precisely stated and (b) this does not explain why the ESS problem becomes easy when the payoffs are random [11].

In fact, the value of our technique is, firstly, to get as tight as possible ranges of the perturbation that preserve the reduction (and the ESS hardness) without any continuity assumptions, secondly, to indicate the basic difference from random payoff values (which is exactly the notion of partition of payoffs into groups in our definition of robustness, and the allowance of arbitrary perturbation within some interval in each group), and finally, the ranges of the allowed perturbations that we determine are quite tight. For the reduction to be preserved when we independently perturb the values (in each of our partitions arbitrarily), one must show that a system of inequalities has always a feasible solution, and we manage to show this in our final theorem. Our result seems to indicate that existence of an ESS remains hard despite a smoothed analysis [13].

An outline of the paper is as follows: In Sect. 2 we define the robust reduction notion and we provide a reduction, based on the one from [2], that is essentially modified in order to be robust. In Sect. 3 we give our main result and Sect. 4 refers to further work and conclusions.

1.4 Definitions and Notation

Background from Game Theory. A finite two-player strategic form game \(\varGamma = (S_{1}, S_{2}, u_{1}, u_{2})\) is given by finite sets of pure strategies \(S_1\) and \(S_2\) and utility, or payoff, functions \(u_1: S_{1} \times S_{2} \mapsto \mathbb {R}\) and \(u_2: S_{1} \times S_{2} \mapsto \mathbb {R}\) for the row-player and the column-player, respectively. Such a game is called symmetric if \(S_{1} = S_{2} =: S\) and \(u_{1}(i,j) = u_{2}(j,i)\) for all \(i,j \in S\).

In what follows, we are only concerned with finite symmetric two-player strategic form games, so we write \((S, u_1)\) as shorthand for \((S, S, u_{1}, u_{2})\), with \(u_{2}(j,i) = u_{1}(i,j)\) for all \(i,j \in S\). For simplicity assume \(S = {1,\dots ,n}\), i.e., pure strategies are identified with integers \(i, 1 \le i \le n\). The row-player’s payoff matrix \(A_{\varGamma } = (a_{i,j})\) of \(\varGamma = (S, u_1)\) is given by \(a_{i,j} = u_{1}(i,j)\) for \(i,j \in S\), so \(B_{\varGamma }=A_{\varGamma }^{T}\) is the payoff matrix of the column-player. Note that \(A_{\varGamma }\) is not necessarily symmetric, even if \(\varGamma \) is a symmetric game.

A mixed strategy \(s = (s(1),\dots ,s(n))^T\) for \(\varGamma = (S, u_1)\) is a vector that defines a probability distribution on s and, in the sequel, we will denote by s(i) the probability assigned by strategy s on the pure strategy \(i \in S\). Thus, \(s \in X\), where \(X = \Big \{s \in \mathbb {R}_{\ge 0}^{n} : \sum _{i=1}^{n}s(i) = 1 \Big \}\) denotes the set of mixed strategies in \(\varGamma \), with \(\mathbb {R}_{\ge 0}^{n}\) denoting the set of non-negative real number vectors \((x_{1},x_{2},\dots ,x_{n})\). s is called pure iff \(s(i) = 1\) for some \(i \in S\). In that case we identify s with i. For brevity, we generally use “strategy” to refer to a mixed strategy s, and indicate otherwise when the strategy is pure. In our notation, we alternatively view a mixed strategy s as either a vector \((s_{1},\dots ,s_{n})^T\), or as a function \(s : S \mapsto \mathbb {R}\), depending on which is more convenient in the context.

The expected payoff function, \(U_k : X \times X \mapsto \mathbb {R}\) for player \(k \in {1,2}\) is given by \(U_{k}(s,t) = \sum _{i,j \in S}s(i)t(j)u_{k}(i,j)\), for all \(s,t \in X\). Note that \(U_{1}(s,t) = s^{T}A_{\varGamma }t\) and \(U_{2}(s,t) = s^{T}A_{\varGamma }^{T}t\). Let s be a strategy for \(\varGamma = (S, u_1)\). A strategy \(t \in X\) is a best response to s if \(U_{1}(t,s) = \max _{t' \in X}U_{1}(t',s)\). The support supp(s) of s is the set \(\{ i \in S : s(i) > 0 \}\) of pure strategies which are played with non-zero probability. The extended support ext-supp(s) of s is the set \(\{ i \in S :U_{1}(i,s) = \max _{x \in X}U_{1}(x,s) \}\) of all pure best responses to s.

A pair of strategies (s, t) is a Nash equilibrium (NE) for \(\varGamma \) if s is a best response to t and t is a best response to s. Note that (s, t) is a NE if and only if supp(s)\(\subseteq \) ext-supp(t) and supp(t)\(\subseteq \) ext-supp(s). A NE (s, t) is symmetric if \(s = t\).

Definition 1

(Symmetric Nash equilibrium). A strategy profile (s, s) is a symmetric NE for the symmetric bimatrix game \(\varGamma = (S, u_1)\) if \(s^{T}A_{\varGamma }s \ge t^{T}A_{\varGamma }s\) for every \(t \in X\).

A definition of ESS equivalent to that presented in Subsect. 1.1 is:

Definition 2

(Evolutionarily stable strategy). A (mixed) strategy \(s \in X\) is an evolutionarily stable strategy (ESS) of a two-player symmetric game \(\varGamma \) if:

-

1.

(s, s) is a symmetric NE of \(\varGamma \), and

-

2.

if \(t \in X\) is any best response to s and \(t \ne s\), then \(U_{1}(s,t) > U_{1}(t,t)\).

Due to [7], we know that every symmetric game has a symmetric Nash equilibrium. The same does not hold for evolutionarily stable strategies (for example “rock-paper-scissors” does not have any pure or mixed ESS).

Definition 3

( ess problem). Given a symmetric two-player normal-form game \(\varGamma \), we are asked whether there exists an evolutionarily stable strategy of \(\varGamma \).

Background from Graph Theory. An undirected graph G is an ordered pair (V, E) consisting of a set V of vertices and a set E, disjoint from V, of edges, together with an incidence function \(\psi _G\) that associates with each edge of G an unordered pair of distinct vertices of G. If e is an edge and u and \(\upsilon \) are vertices such that \(\psi _{G}(e) = \{u, \upsilon \}\), then e is said to join u and \(\upsilon \), and the vertices u and \(\upsilon \) are called the ends of e. We denote the numbers of vertices and edges in G by \(\upsilon (G)\) and e(G); these two basic parameters are called the order and size of G, respectively.

Definition 4

(Adjacency matrix). The adjacency matrix of the above undirected graph G is the \(n \times n\) matrix \(A_G := (a_{u \upsilon })\), where \(a_{u \upsilon }\) is the number of edges joining vertices u and \(\upsilon \) and \(n = \upsilon (G)\).

Definition 5

(Clique). A clique of an undirected graph G is a complete subgraph of G, i.e. one whose vertices are joined with each other by edges.

Definition 6

( clique problem). Given an undirected graph G and a number k, we are asked whether there is a clique of size k.

As mentioned earlier, in what follows, \(\mathbb {R}_{\ge 0}^{n}\) denotes the set of non-negative real number vectors \((x_{1},x_{2},\dots ,x_{n})\) and \(n=|V|\).

Theorem 1

(Motzkin and Straus [6]). Let \(G = (V,E)\) be an undirected graph with maximum clique size d. Let \(\varDelta _1 = \Big \{ x \in \mathbb {R}_{\ge 0}^{n} : \sum _{i=1}^{n} x_{i} = 1 \Big \}\). Then \(\max _{x \in \varDelta _1} x^{T}A_{G}x = \frac{d-1}{d}\).

Corollary 1

Let \(G = (V,E)\) be an undirected graph with maximum clique size d. Let \(A_{G}^{\tau ,\rho }\) be a modified adjacency matrix of graph G where its entries with value 0 are replaced by \(\tau \in \mathbb {R}\) and its entries with value 1 are replaced by \(\rho \in \mathbb {R}\). Let \(\varDelta _1 = \Big \{ x \in \mathbb {R}_{\ge 0}^{n} : \sum _{i=1}^{n} x_{i} = 1 \Big \}\). Then \(\max _{x \in \varDelta _1} x^{T}A_{G}^{\tau ,\rho }x = \tau + (\rho -\tau )\frac{d-1}{d}\).

Proof

\( x^{T}A_{G}^{\tau ,\rho }x = x^{T}\left[ \tau \cdot \mathbf {1} + (\rho -\tau ) \cdot A_G\right] x = \tau + (\rho -\tau ) \cdot x^{T}A_{G}x\), where \(\mathbf {1}\) is the \(n \times n\) matrix with value 1 in every entry. By Theorem 1 the result follows. \(\square \)

Corollary 2

(Etessami and Lochbihler [2]). Let \(G = (V,E)\) be an undirected graph with maximum clique size d and let \(l \in \mathbb {R}_{\ge 0}\). Let \(\varDelta _l = \Big \{ x \in \mathbb {R}_{\ge 0}^{n} : \sum _{i=1}^{n} x_{i} = l \Big \}\). Then \(\max _{x \in \varDelta _l}x^{T}A_{G}x = \frac{d-1}{d}l^2\).

2 Robust Reductions

Definition 7

(Neighbourhood). Let \(v \in \mathbb {R}\). An (open) interval \(I(v)=[a,b]\) (\(I(v)=(a,b)\)) with \(a<b\) where \(a \le v \le b\), is called a neighbourhood of v of range \(|b-a|\).

Definition 8

(Robust reduction under arbitrary perturbations of values). We are given a valid reduction of a problem to a strategic game that involves a real matrix A of payoffs as entries \(a_{ij}\). A consists of m partitions, with each partition’s entries having the same value v(t), for \(t \in \{1,2,\dots ,m\}\). Let \(I(v(t)) \ne \emptyset \) be a neighbourhood of v(t) and \(w(t) \in I(v(t))\) be an arbitrary value in that neighbourhood. The reduction is called robust under arbitrary perturbations of values if it is valid for all the possible matrices W with entries w(t).

For a first extension based on the reduction of [2], see the full version [5].

2.1 A Robust Reduction from the Complement of clique to ess

In the sequel we extend the idea of Etessami and Lochbihler [2] by replacing the constant payoff values they use with variables, and finding the intervals they belong to in order for the reduction to hold. We replace the zeros and ones of their reduction with \(\tau \in \mathbb {R}\) and \(\rho \in \mathbb {R}\) respectively. We also replace their function \(\lambda '(k) = 1 - \frac{1}{k}\) with \(\lambda (k) = 1 - \frac{1}{k^x}\), where \(k \in \mathbb {N}\) and \(x \ge 3\). Note that we can normalize the game’s payoff values in [0, 1] and retain the exact same ESSs.

Given an undirected graph \(G=(V, E)\) we construct the following game \(\varGamma _{k,\tau ,\rho }^{x}(G) := (S, u_1)\) for suitable \(\tau <\rho \) to be determined later. Note that from now on we will only consider rational \(\tau \) and \(\rho \) so that every payoff value of the game is rational.

\(S=V \cup \{a,b,c\} \) are the strategies for the players where \(a,b,c \notin V\).

\(n= |V| \) is the number of nodes.

-

\(u_1(i,j)=\rho \text { for all } i,j \in V \text { with } (i,j) \in E \).

-

\(u_1(i,j)=\tau \text { for all } i,j \in V \text { with } (i,j) \notin E \).

-

\(u_1(z,a)=\rho \text { for all } z \in S-\{b,c\} \).

-

\(u_1(a,i)=\lambda (k)=1 - \frac{1}{k^x} \text { for all } i \in V \).

-

\(u_1(y,i)=\rho \text { for all } y \in \{b,c\} \text { and } i \in V \).

-

\(u_1(y,a)=\tau \text { for all } y \in \{b,c\} \).

-

\(u_1(z,y)=\tau \text { for all } z \in S \text { and } y \in \{b,c\} \).

Theorem 2

Let \(G=(V, E)\) be an undirected graph. The game \(\varGamma _{k,\tau ,\rho }^{x}(G)\) with

-

\( \rho \in \left( 1 + \frac{n^{x-1}-2^x}{2^{x}n^{x-1}(n-1)}, \quad 1 + \frac{(n+1)^x - n2^x}{2^{x}(n+1)^{x}(n-1)} \right] \quad and\)

\(\tau \in \left[ (1 - \rho )(n-1) + 1 - \frac{1}{n^{x-1}},\quad 1 - \frac{1}{2^x}\right) \)

or

-

\( \rho \in \left( 1 + \frac{(n+1)^x - n2^x}{2^{x}(n+1)^{x}(n-1)},\quad + \infty \right) \quad and \)

\(\tau \in \left[ (1 - \rho )(n-1) + 1 - \frac{1}{n^{x-1}},\quad (1-\rho )(n-1) + 1 - \frac{n}{(n+1)^x}\right) \)

has an ESS if and only if G has no clique of size k.

Proof

Let \(G=(V,E)\) be an undirected graph with maximum clique size d. We consider the game \(\varGamma _{k,\tau ,\rho }^{x}(G)\) defined above. Suppose s is an ESS of \(\varGamma _{k,\tau ,\rho }^{x}(G)\).

For the reduction we will prove three claims by using contradiction, that taken together show that the only possible ESS s of \(\varGamma _{k,\tau ,\rho }(G)\) is the pure strategy a. Here we should note that these three claims hold not only for the aforementioned intervals of \(\tau \) and \(\rho \), but for any \(\tau ,\rho \in \mathbb {R}\) for which \(\tau < \rho \). \(\square \)

Claim 1

The support of any possible ESS of \(\varGamma _{k,\tau ,\rho }^{x}(G)\) does not contain b or c (\(supp(s) \cap \{b,c\} = \emptyset \)).

Suppose \(supp(s) \cap \{b,c\} \ne \emptyset \).

Let \(t \ne s\) be a strategy with \(t(i)=s(i)\) for \(i \in V, t(y)=s(b)+s(c)\) and \(t(y')=0\) where \(y,y' \in \{b,c\}\) such that \(y \ne y'\) and \(s(y)=min\{s(b),s(c)\}\). Since \(u_1(b,z)=u_1(c,z)\) for all \(z \in S\),

which yields \(U_1(t,s) = U_1(s,s)\) and so t is a best response to s. Also,

which yields \(U_1(s,t) = U_1(t,t)\). But this is a contradiction since it should be \(U_1(s,t) > U_1(t,t)\) as s is an ESS.

Claim 2

The support of any possible ESS of \(\varGamma _{k,\tau ,\rho }^{x}(G)\) contains a (\(supp(s) \nsubseteq V\)).

Suppose \(supp(s) \subseteq V\).

Then, we denote by \(A_G\) the adjacency matrix of the graph G.

But this is a contradiction since s is an ESS and therefore a NE. From Claim 1 and Claim 2, it follows that \(a \in supp(s)\), i.e. \(s(a)>0\).

Claim 3

\(s(a)=1\).

Suppose \(s(a)<1\).

Since (s, s) is a NE, a is a best response to s and \(a \ne s\). Then \(U_1(s,a) = \sum _{z \in supp(s)}s(z)u_1(s,a)=\rho =U_1(a,a)\). But this is also a contradiction since it should be \(U_1(s,a) > U_1(a,a)\) as s is an ESS.

Therefore, the only possible ESS of \(\varGamma _{k,\tau ,\rho }^{x}(G)\) is the pure strategy a. Now we show the following lemma, which concludes also the proof of Theorem 2.

Lemma 1

The game \(\varGamma _{k,\tau ,\rho }^{x}(G)\) with the requirements of Theorem 2 has an ESS (strategy a) if and only if there is no clique of size k in graph G.

Proof

We consider two cases for k:

Case 1: \(d<k\). Let \(t \ne a\) be a best response to a. Then \(supp(t) \subseteq V \cup \{a\}\).

Let \(r= \sum _{i \in V}t(i)\). So \(r>0, (t \ne a)\) and \(t(a)=1-r\). Combining Corollaries 1 and 2 we get,

If we can show that \(E<0\) then strategy a is an ESS. We show why \(E<0\):

Let’s define the following function: \(f(k,d,\rho ) = (1-\rho )(d-1) + 1- \frac{d}{k^x}\) with the restrictions: \(k \ge d+1 , 1 \le d \le n , x \ge 3\).

By minimizing \(f(k,d,\rho )\) with respect to k and d, we end up to 2 cases determined by the interval to which \(\rho \) belongs. So,

Therefore, we can demand \(\tau \) to be strictly less than \(\tau ^*\), making \(U_{1}(t,t) - U_{1}(a,t)\) negative. We conclude that when \(d<k\) then strategy a is an ESS.

Case 2: \(d \ge k\). Let \(C \subseteq V\) be a clique of G of size k. Then t with \(t(i)=\frac{1}{k}\) for \(i \in C\) and \(t(j)=0\) for \(j \in S \setminus C\) is a best response to a and \(t \ne a\), and

If \(E' \ge 0\) then a cannot be an ESS. We explain why \(E' \ge 0\):

Let’s define the following function:

Then we define the function \(z(d,\rho )\):

Now, given that \(\tau \) needs to be at least \(\tau ^{**}\) but strictly less than \(\tau ^{*}\) the following should hold:

So we conclude that when \(d \ge k\) then strategy a is not an ESS. This completes the proof of Lemma 1 and Theorem 2. \(\square \)

Corollary 3

The ess problem with payoff values in the domains given in Theorem 2 is coNP-hard.

3 Our Main Result

Now we can prove our main theorem:

Theorem 3

Any reduction as in Theorem 2 for \(x=x_{0} \ge 3\) from the complement of the clique problem to the ess problem is robust under arbitrary perturbations of values in the intervals:

where \(x_{1} \in \left( x_0 , x_{0}\log _{n}(n+1)\right) \), \(C = \frac{(n+1)^{x_0}-n^{x_1}}{n^{x_1-1}(n+1)^{x_0}(n-1)}\), \(D = C(n-1)\), any \(A \in (0,C)\) and \(B = (C-A)(n-1)\).

Proof

We denote three partitions of the game’s payoff matrix \(U: U_\tau , U_\rho , U_\lambda \) disjoint sets, with \(U_\tau \,\cup \, U_\rho \,\cup \, U_\lambda = U\) and values \(\tau ,\rho ,\lambda \) of their entries respectively. Each set’s entries have the same value. For every \(\lambda \in \left[ 1-\frac{1}{k^{x_0}}, 1-\frac{1}{k^{x_1}} \right] \) there is a \(x=-\log _k(1-\lambda )\) in the interval \([x_0, x_1]\) such that \(\lambda = 1-\frac{1}{k^{x}}\), where \(x_0 \ge 3\) and \(x_1 \in (x_0, x_{0}\log _n(n+1))\). We will show that, for this x, any reduction with the values of \(\tau , \rho \) in the respective intervals stated in Theorem 2, is valid.

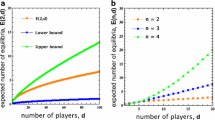

In Fig. 1, we show the validity area of \(\tau \) depending on \(\rho \) with parameter x, due to Theorem 2. The thin and thick plots bound the validity area (shaded) for \(x=x_0\) and \(x=x_1\) respectively.

While x increases, the parallel lines of the lower and upper bound of \(\tau \) move to the right, the horizontal line of the upper bound of \(\tau \) moves up, and the left acute angle as well as the top obtuse angle of the plot move to the left (by examination of the monotonicity of those bounds with respect to x).

The lower bound of \(\tau \) for an \(x=x' > x_0\) equals the upper bound of \(\tau \) for \(x=x_0\), when \(x'=x_0\log _n(n+1)\). Thus, for all \(\mathbf {x \in (x_0, x_0\log _n(n+1))}\) there is a non-empty intersection between the validity areas. We have picked an \(x=x_1 \in (x_0, x_0\log _n(n+1))\).

In Fig. 2, we show a zoom-in of the intersection of the validity areas of Fig. 1. Let the intersection of lines: \(1-\frac{1}{2^{x_0}}, (1-\rho )(n-1) + 1 - \frac{1}{n^{x_1-1}}\) be at point \(\rho =\rho _C\).

From the upper bound of \(\tau \) as a function of \(\rho \) we can see that \(\tan \varphi = n-1\).

For the rectangle with sides A, B shown in Fig. 2, the reduction is valid for all \(x \in [x_0,x_1]\), thus for all \(\lambda \in \left[ 1-\frac{1}{k^{x_0}}, 1-\frac{1}{k^{x_1}} \right] \). This completes the proof. \(\square \)

4 Conclusions and Further Work

In this work we introduce the notion of reduction robustness under arbitrary perturbations within an interval and we provide a generalized reduction based on the one in [2] that proves coNP-hardness of ess. We demonstrate that our generalised reduction is robust, thus showing that the hardness of the problem is preserved even after certain arbitrary perturbations of the payoff values of the derived game. As a future work we would like to examine the robustness of reductions for other hard problems, especially game-theoretic ones.

References

Conitzer, V.: The exact computational complexity of evolutionarily stable strategies. In: Chen, Y., Immorlica, N. (eds.) WINE 2013. LNCS, vol. 8289, pp. 96–108. Springer, Heidelberg (2013). doi:10.1007/978-3-642-45046-4_9

Etessami, K., Lochbihler, A.: The computational complexity of evolutionarily stable strategies. Int. J. Game Theory 37(1), 93–113 (2008). doi:10.1007/s00182-007-0095-0

Lewontin, R.: Evolution and the theory of games. J. Theor. Biol. 1(3), 382–403 (1961)

Smith, J.M., Price, G.R.: The logic of animal conflict. Nature 246(5427), 15–18 (1973)

Melissourgos, T., Spirakis, P.: Existence of evolutionarily stable strategies remains hard to decide for a wide range of payoff values. ArXiv e-prints, January 2017

Motzkin, T.S., Straus, E.G.: Maxima for graphs and a new proof of a theorem of Turán. Can. J. Math. 17, 533–540 (1965)

Nash, J.: Non-cooperative games. Ann. Math. 54(2), 286–295 (1951)

Nisan, N.: A note on the computational hardness of evolutionary stable strategies. Electron. Colloq. Comput. Complex. (ECCC) 13(076) (2006)

Papadimitriou, C., Yannakakis, M.: The complexity of facets (and some facets of complexity). J. Comput. Syst. Sci. 28(2), 244–259 (1984)

Schaffer, M.E.: Evolutionarily stable strategies for a finite population and a variable contest size. J. Theor. Biol. 132(4), 469–478 (1988)

Sergiu Hart, B.W., Rinott, Y.: Evolutionarily stable strategies of random games, and the vertices of random polygons. Ann. Appl. Probab. 18(1), 259–287 (2008)

Smith, J.M.: The theory of games and the evolution of animal conflicts. J. Theor. Biol. 47(1), 209–221 (1974)

Spielman, D.A., Teng, S.: Smoothed analysis: an attempt to explain the behavior of algorithms in practice. Commun. ACM 52(10), 76–84 (2009)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Melissourgos, T., Spirakis, P. (2017). Existence of Evolutionarily Stable Strategies Remains Hard to Decide for a Wide Range of Payoff Values. In: Fotakis, D., Pagourtzis, A., Paschos, V. (eds) Algorithms and Complexity. CIAC 2017. Lecture Notes in Computer Science(), vol 10236. Springer, Cham. https://doi.org/10.1007/978-3-319-57586-5_35

Download citation

DOI: https://doi.org/10.1007/978-3-319-57586-5_35

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-57585-8

Online ISBN: 978-3-319-57586-5

eBook Packages: Computer ScienceComputer Science (R0)