Abstract

This paper provides an overview of numerical aspects in the simulation of differential-algebraic equations (DAEs). Among other topics, we discuss the basic construction principles of frequently used discretization schemes, such as BDF methods, Runge–Kutta methods, and ROW methods, as well as their adaptation to DAEs. Moreover, topics like consistent initialization, stabilization, parametric sensitivity analysis, co-simulation techniques, aspects of real-time simulation, and contact problems are covered. Finally, some illustrative numerical examples are presented.

Keywords

- BDF methods

- Consistent initialization

- Contact problems

- Co-simulation

- Differential-algebraic equations

- Real-time simulation

- ROW methods

- Runge–Kutta methods

- Sensitivity analysis

- Stabilization

Subject Classifications:

1 Introduction

Simulation is a well-established and indispensable tool in scientific research as well as in industrial development processes. Efficient tools are needed that are capable of simulating complex processes in, e.g., mechanical engineering, process engineering, or electrical engineering. Many such processes (where appropriate after a spatial discretization of a partial differential equation) can be modeled as differential-algebraic equations (DAEs), which are implicit differential equations that typically consist of ordinary differential equations as well as algebraic equations. Often, DAEs are formulated automatically by software packages such as MODELICA or SIMPACK. In its general form, the initial value problem for a DAE on the compact interval I = [a, b] reads as

$$\displaystyle{ F(t,z(t),z^{{\prime}}(t)) = 0,\qquad z(a) = z_{a}, }$$(1.1)

where \(F: I \times \mathbb{R}^{n} \times \mathbb{R}^{n}\longrightarrow \mathbb{R}^{n}\) is a given function and \(z_{a} \in \mathbb{R}^{n}\) is an appropriate initial value at t = a. The task is to find a solution \(z: I\longrightarrow \mathbb{R}^{n}\) of (1.1). Throughout it is assumed that F is sufficiently smooth, i.e., it possesses all the continuous partial derivatives up to a requested order.

Please note that (1.1) is not just an ordinary differential equation in implicit notation, since we permit the Jacobian of F with respect to \(z^{{\prime}}\), i.e., \(F_{z^{{\prime}}}^{{\prime}}\), to be singular along a solution. In such a situation, (1.1) cannot be solved directly for \(z^{{\prime}}\). Particular examples with singular Jacobian are semi-explicit DAEs of type

$$\displaystyle{ \begin{array}{rcl} M\,x^{{\prime}}(t)& =&f(t,x(t),y(t)), \\ 0& =&g(t,x(t),y(t))\end{array} }$$

with a non-singular matrix M and the so-called differential state vector x and the algebraic state vector y. Such systems occur, e.g., in process engineering and mechanical multi-body systems. More generally, quasi-linear DAEs of type

$$\displaystyle{ Q(t,z(t))\,z^{{\prime}}(t) = f(t,z(t)) }$$

with a possibly singular matrix function Q frequently occur in electrical engineering.
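To make the singularity of \(F_{z^{{\prime}}}^{{\prime}}\) concrete, the following minimal sketch approximates this Jacobian by finite differences for a small semi-explicit system. The toy system, the function names, and the step size are illustrative assumptions and are not taken from the paper.

```python
def residual(t, z, zp):
    """Residual F(t, z, z') of the toy system x' = -x + y, 0 = x + y - 1 (assumed example)."""
    x, y = z
    xp, yp = zp
    return [xp - (-x + y), x + y - 1.0]


def jacobian_wrt_zp(t, z, zp, eps=1e-6):
    """Forward-difference approximation of the Jacobian of F with respect to z'."""
    base = residual(t, z, zp)
    n = len(zp)
    jac = [[0.0] * n for _ in range(n)]
    for col in range(n):
        shifted = list(zp)
        shifted[col] += eps
        perturbed = residual(t, z, shifted)
        for row in range(n):
            jac[row][col] = (perturbed[row] - base[row]) / eps
    return jac


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


J = jacobian_wrt_zp(0.0, [0.5, 0.5], [0.0, 0.0])
determinant = det2(J)
print(J, determinant)
```

The second row of the Jacobian vanishes because the algebraic equation does not contain \(y^{{\prime}}\), so the system cannot be solved for \(z^{{\prime}}\).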

The potential singularity of the Jacobian \(F_{z^{{\prime}}}^{{\prime}}\) has implications with regard to theoretical properties (existence and uniqueness of solutions, smoothness properties, structural properties, …) and with regard to the design of numerical methods (consistent initial values, order of convergence, stability, …). The solution theory for linear DAEs is reviewed in the recent survey paper [141]. A comprehensive structural analysis of linear and nonlinear DAEs can be found in the monographs [90] and [92]. While explicit ordinary differential equations (ODEs) can be viewed as well-behaved systems, DAEs are inherently ill-conditioned and the degree of ill-conditioning increases with the so-called (perturbation) index, compare [75, Definition 1.1]. As such, DAEs require suitable techniques for their numerical treatment.

To this end, the paper aims to provide an overview of the numerical treatment of the initial value problem. The intention is to cover the main ideas without too many technical details, which, if required, can be found in full detail in a large number of publications and excellent textbooks. Naturally not all developments can be covered, so we focus on a choice of methods and concepts that are relevant in industrial simulation environments for coupled systems of potentially large size. These concepts enhance basic integration schemes by adding features like sensitivity analysis (needed, e.g., in optimization procedures), contact dynamics, real-time schemes, or co-simulation techniques. Still, the core challenges with DAEs, namely ill-conditioning, consistent initial values, and index reduction, will be covered as well.

The outline of this paper is as follows. Section 2 introduces index concepts and summarizes stabilization techniques for certain classes of DAEs. Section 3 deals with the computation of the so-called consistent initial values for DAEs and their dependence on parameters. Note in this respect that DAEs, in contrast to ODEs, do not permit solutions for arbitrary initial values and thus techniques are required to find suitable initial values. The basics of the most commonly used numerical discretization schemes are discussed in Sect. 4, among them BDF methods, Runge–Kutta methods, and ROW methods. Co-simulation techniques for the interaction of different subsystems are presented in Sect. 5. Herein, the stability and convergence of the overall scheme are of particular importance. Section 6 discusses approaches for the simulation of time-critical systems in real-time. The influence of parameters on the (discrete and continuous) solution of an initial value problem is studied in Sect. 7. Hybrid systems and mechanical contact problems are discussed in Sect. 8.

Notation

We use the following notation. The derivative w.r.t. time of a function z(t) is denoted by \(z^{{\prime}}(t)\). The partial derivative of a function f with respect to a variable x will be denoted by \(f_{x}^{{\prime}} = \partial f/\partial x\). As an abbreviation of a function of type f(t, x(t)) we use the notation f[t].

2 Error Influence and Stabilization Techniques

DAEs are frequently characterized and classified according to their index. Various index definitions exist, for instance the differentiation index [62], the structural index [45], the strangeness index [90], the tractability index [92], and the perturbation index [75]. These index definitions are not equivalent for general DAEs (1.1), but they coincide for certain subclasses thereof, for instance semi-explicit DAEs in Hessenberg form. For our purposes we will focus on the differentiation index and the perturbation index only.

The differentiation index is one of the earliest index definitions for (1.1) and is based on a structural investigation of the DAE. It aims to identify the so-called underlying ordinary differential equation. To this end let the functions \(F^{(\,j)}: [t_{0},t_{f}] \times \mathbb{R}^{(\,j+2)n}\longrightarrow \mathbb{R}^{n}\) for the variables \(z,z^{{\prime}},\ldots,z^{(\,j+1)} \in \mathbb{R}^{n}\) for j = 0, 1, 2, … be defined by the recursion

Herein, F is supposed to be sufficiently smooth such that the functions \(F^{(\,j)}\) are well defined.
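The recursion can be sketched as repeated total differentiation of F with respect to time, where each level introduces one additional derivative of z as a new variable. The following display is a reconstruction under that assumption (arguments of the partial derivatives omitted); the exact layout and numbering used in the original are not reproduced.

```latex
% Sketch of the recursion, assuming level j is the total time derivative of level j-1.
\begin{align*}
F^{(0)}(t,z,z') &:= F(t,z,z'), \\
F^{(j)}(t,z,z',\ldots,z^{(j+1)}) &:=
  \frac{\partial F^{(j-1)}}{\partial t}
  + \sum_{\ell=0}^{j} \frac{\partial F^{(j-1)}}{\partial z^{(\ell)}}\, z^{(\ell+1)},
  \qquad j = 1,2,\ldots
\end{align*}
```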

The differentiation index is defined as follows:

Definition 2.1 (Differentiation Index, Compare [62])

The DAE (1.1) has differentiation index \(d \in \mathbb{N}_{0}\), if d is the smallest number in \(\mathbb{N}_{0}\) such that the so-called derivative array

$$\displaystyle{ F^{(\,j)}(t,z,z^{{\prime}},\ldots,z^{(\,j+1)}) = 0,\qquad j = 0,1,\ldots,d, }$$(2.4)

allows one to deduce a relation of type \(z^{{\prime}} = f(t,z)\) by algebraic manipulations.

If such a relation exists, then the corresponding ordinary differential equation (ODE) \(z^{{\prime}}(t) = f(t,z(t))\) is called the underlying ODE of the DAE (1.1).

The definition leaves some space for interpretation as it is not entirely clear what is meant by “algebraic manipulations.” However, for semi-explicit DAEs it provides a guideline to determine the differentiation index. Note that the special structure of semi-explicit DAEs is often exploited in the design of numerical schemes and stabilization techniques.

Definition 2.2 (Semi-Explicit DAE)

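A DAE of type

$$\displaystyle{ x^{{\prime}}(t) = f(t,x(t),y(t)), }$$(2.5)

$$\displaystyle{ 0 = g(t,x(t),y(t)) }$$(2.6)

is called a semi-explicit DAE. Herein, x(⋅) is referred to as the differential variable and y(⋅) as the algebraic variable. Correspondingly, (2.5) is called the differential equation and (2.6) the algebraic equation.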

For semi-explicit DAEs the common approach is to differentiate the algebraic equation w.r.t. time and to substitute the occurring derivatives of x by the right-hand side of the differential equation. This procedure is repeated until the resulting equation can be solved for y ′.

Example 2.1 (Semi-Explicit DAE with Differentiation Index One)

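Consider (2.5)–(2.6). Differentiation of the algebraic equation w.r.t. time yields

$$\displaystyle{ 0 = g_{t}^{{\prime}}[t] + g_{x}^{{\prime}}[t]\,f[t] + g_{y}^{{\prime}}[t]\,y^{{\prime}}(t). }$$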

Herein, we used the abbreviation f[t] for f(t, x(t), y(t)) and likewise for the partial derivatives of g.

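Now, if the Jacobian matrix \(g_{y}^{{\prime}}[t]\) is non-singular with a bounded inverse along a solution of the DAE, then the above equation can be solved for \(y^{{\prime}}\) by the implicit function theorem and together with the differential equation (2.5) we obtain the underlying ODE

$$\displaystyle{ \begin{array}{rcl} x^{{\prime}}(t)& =&f[t], \\ y^{{\prime}}(t)& =& - g_{y}^{{\prime}}[t]^{-1}\left (g_{t}^{{\prime}}[t] + g_{x}^{{\prime}}[t]\,f[t]\right )\end{array} }$$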

and the differentiation index is d = 1.

In the above example, the situation becomes more involved, if the Jacobian matrix \(g_{y}^{{\prime}}[t]\) is singular. If it actually vanishes, then one can proceed as in the following example.

Example 2.2 (Semi-Explicit DAE with Differentiation Index Two)

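Consider (2.5)–(2.6). Suppose g does not depend on y and thus \(g_{y}^{{\prime}}[t] \equiv 0\). By differentiation of the algebraic equation we obtain

$$\displaystyle{ 0 = g_{t}^{{\prime}}[t] + g_{x}^{{\prime}}[t]\,f[t] =: g^{(1)}(t,x(t),y(t)). }$$

A further differentiation w.r.t. time yields

$$\displaystyle{ 0 = (g^{(1)})_{t}^{{\prime}}[t] + (g^{(1)})_{x}^{{\prime}}[t]\,f[t] + (g^{(1)})_{y}^{{\prime}}[t]\,y^{{\prime}}(t) }$$

with \((g^{(1)})_{y}^{{\prime}}[t] = g_{x}^{{\prime}}[t]\,f_{y}^{{\prime}}[t]\). Now, if the matrix \(g_{x}^{{\prime}}[t]\,f_{y}^{{\prime}}[t]\) is non-singular with a bounded inverse along a solution of the DAE, then the above equation can be solved for \(y^{{\prime}}\) by the implicit function theorem and together with the differential equation (2.5) we obtain the underlying ODE

$$\displaystyle{ \begin{array}{rcl} x^{{\prime}}(t)& =&f[t], \\ y^{{\prime}}(t)& =& -\left ((g^{(1)})_{y}^{{\prime}}[t]\right )^{-1}\left ((g^{(1)})_{t}^{{\prime}}[t] + (g^{(1)})_{x}^{{\prime}}[t]\,f[t]\right )\end{array} }$$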

and the differentiation index is d = 2.
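As a small worked instance of this procedure, consider the scalar system below. It is an illustrative example chosen here and does not appear in the original text.

```latex
% Toy index-two example (an assumption for illustration): f(t,x,y) = y, g(t,x) = x - sin(t).
\begin{align*}
x'(t) &= y(t), & 0 &= x(t) - \sin t .
\intertext{First differentiation of the constraint, with $x'$ replaced by $y$:}
0 &= y(t) - \cos t =: g^{(1)}(t,x(t),y(t)), & (g^{(1)})_{y}' &= g_{x}'\,f_{y}' = 1 .
\intertext{Second differentiation:}
0 &= y'(t) + \sin t & \Longrightarrow\quad y'(t) &= -\sin t .
\end{align*}
```

Two differentiations are needed before \(y^{{\prime}}\) can be isolated, so the differentiation index of this example is two, and the underlying ODE is \(x^{{\prime}} = y\), \(y^{{\prime}} = -\sin t\).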

The procedure of the preceding examples works for semi-explicit Hessenberg DAEs, which are defined as follows:

Definition 2.3 (Hessenberg DAE)

(a) For a given k ≥ 2 the DAE

$$\displaystyle{ \begin{array}{lcrllclll} x_{1}^{{\prime}}(t) & =& f_{1}(t,&y(t),&x_{1}(t),&x_{2}(t),&\ldots,&x_{k-2}(t),&x_{k-1}(t)), \\ x_{2}^{{\prime}}(t) & =& f_{2}(t,& &x_{1}(t),&x_{2}(t),&\ldots,&x_{k-2}(t),&x_{k-1}(t)),\\ & \vdots & & & & \ddots \\ x_{k-1}^{{\prime}}(t)& =&f_{k-1}(t,& & & & &x_{k-2}(t),&x_{k-1}(t)), \\ 0 & =& g(t,& & & & & &x_{k-1}(t))\end{array} }$$(2.7)

is called Hessenberg DAE of order k, if the matrix

$$\displaystyle{ R(t):= g_{x_{k-1}}^{{\prime}}[t] \cdot f_{ k-1,x_{k-2}}^{{\prime}}[t]\cdots f_{ 2,x_{1}}^{{\prime}}[t] \cdot f_{ 1,y}^{{\prime}}[t] }$$(2.8)

is non-singular for all \(t \in [t_{0},t_{f}]\) with a uniformly bounded inverse \(\|R^{-1}(t)\| \leq C\) in \([t_{0},t_{f}]\), where C is a constant independent of t.

(b) The DAE

$$\displaystyle{ \begin{array}{lcr} x^{{\prime}}(t)& =&f(t,x(t),y(t)), \\ 0 & =& g(t,x(t),y(t))\end{array} }$$(2.9)

is called Hessenberg DAE of order 1, if the matrix \(g_{y}^{{\prime}}[t]\) is non-singular with \(\|g_{y}^{{\prime}}[t]^{-1}\| \leq C\) for all \(t \in [t_{0},t_{f}]\) and some constant C independent of t.

Herein, y is called algebraic variable and \(x = (x_{1},\ldots,x_{k-1})^{\top }\) in (a) and x in (b), respectively, is called differential variable.

By repeated differentiation of the algebraic constraint \(0 = g(t,x_{k-1}(t))\) w.r.t. time and simultaneous substitution of the derivatives of the differential variable by the corresponding differential equations, it is straightforward to show that the differentiation index of a Hessenberg DAE of order k is equal to k, provided the functions g and \(f_{j}\), j = 1, …, k − 1, are sufficiently smooth. In order to formalize this procedure, define

Differentiation of g (0) with respect to time and substitution of

leads to the equation

which is satisfied implicitly as well. Recursive application of this differentiation and substitution process leads to the algebraic equations

and

Since Eqs. (2.11)–(2.12) do not occur explicitly in the original system (2.7), these equations are called hidden constraints of the Hessenberg DAE. Note that the matrix R in (2.8) is given by \(\partial g^{(k-1)}/\partial y\).

A practically important subclass of Hessenberg DAEs are mechanical multibody systems in descriptor form given by

where \(q(\cdot ) \in \mathbb{R}^{n}\) denotes the vector of generalized positions, \(v(\cdot ) \in \mathbb{R}^{n}\) the vector of generalized velocities, and \(\lambda (\cdot ) \in \mathbb{R}^{m}\) are Lagrange multipliers. The mass matrix M is supposed to be symmetric and positive definite with a bounded inverse \(M^{-1}\) and thus, the second equation in (2.13) can be multiplied by \(M(t,q(t))^{-1}\). The vector f denotes the generalized forces and torques. The term \(g_{q}^{{\prime}}(t,q)^{\top }\lambda\) can be interpreted as a force that keeps the system on the algebraic constraint g(t, q) = 0.

The constraint g(t, q(t)) = 0 is called constraint on position level. Differentiation with respect to time of this algebraic constraint yields the constraint on velocity level

and the constraint on acceleration level

Replacing v ′ by

yields

If \(g_{q}^{{\prime}}(t,q)\) has full rank, then the matrix \(g_{q}^{{\prime}}(t,q)M(t,q)^{-1}g_{q}^{{\prime}}(t,q)^{\top }\) is non-singular and the latter equation can be solved for the algebraic variable λ. Thus, the differentiation index is three.
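The differentiation steps above can be written out as follows. This is only a sketch under two assumptions that are not taken from the text: the constraint is time-independent, g = g(q), and the equations of motion use the sign convention \(Mv^{{\prime}} = f - g_{q}^{{\prime}\top }\lambda\).

```latex
% Assumed descriptor form (sign convention and time-independent constraint are assumptions):
%   q' = v,   M(t,q) v' = f(t,q,v) - g_q'(q)^T \lambda,   0 = g(q).
\begin{align*}
\text{velocity level:}\quad & 0 = g_{q}'(q)\,v, \\
\text{acceleration level:}\quad & 0 = g_{qq}''(q)(v,v) + g_{q}'(q)\,v', \\
\text{with } v' = M^{-1}\bigl(f - g_{q}'(q)^{\top}\lambda\bigr):\quad
& g_{q}'(q)\,M^{-1}g_{q}'(q)^{\top}\lambda = g_{q}'(q)\,M^{-1}f + g_{qq}''(q)(v,v).
\end{align*}
```

Two differentiations lead to an equation that determines λ, and one further differentiation provides an expression for \(\lambda ^{{\prime}}\), which gives three differentiations in total.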

Remark 2.1

Note that semi-explicit DAEs are more general than Hessenberg DAEs since no regularity assumptions are imposed in Definition 2.2. In fact, without additional regularity assumptions, the class of semi-explicit DAEs is essentially as large as the class of general DAEs (1.1), since the settings \(z^{{\prime}}(t) = y(t)\) and F(t, z(t), y(t)) = 0 transform the DAE (1.1) into a semi-explicit DAE (some care has to be taken with regard to the smoothness of solutions, though).

2.1 Error Influence and Perturbation Index

The differentiation index is based on a structural analysis of the DAE, but it does not indicate how perturbations influence the solution. In contrast, the perturbation index addresses the influence of perturbations on the solution and thus it is concerned with the stability of DAEs. Note that perturbations frequently occur, for instance they are introduced by numerical discretization schemes.

Definition 2.4 (Perturbation Index, See [75])

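The DAE (1.1) has perturbation index \(p \in \mathbb{N}\) along a solution z on \([t_{0},t_{f}]\), if \(p \in \mathbb{N}\) is the smallest number such that for all functions \(\tilde{z}\) satisfying the perturbed DAE

$$\displaystyle{ F(t,\tilde{z}(t),\tilde{z}^{{\prime}}(t)) =\delta (t), }$$

there exists a constant S depending on F and \(t_{f} - t_{0}\) with

$$\displaystyle{ \|z(t) -\tilde{ z}(t)\| \leq S\left (\|z(t_{0}) -\tilde{ z}(t_{0})\| +\max _{t_{0}\leq \tau \leq t}\|\delta (\tau )\| +\ldots +\max _{t_{0}\leq \tau \leq t}\|\delta ^{(\,p-1)}(\tau )\|\right ) }$$(2.15)

for all \(t \in [t_{0},t_{f}]\), whenever the expression on the right is less than or equal to a given bound.

The perturbation index is p = 0, if the estimate

$$\displaystyle{ \|z(t) -\tilde{ z}(t)\| \leq S\left (\|z(t_{0}) -\tilde{ z}(t_{0})\| +\max _{t_{0}\leq \tau \leq t}\left \|\int _{t_{0}}^{\tau }\delta (s)\,ds\right \|\right ) }$$

holds. The DAE is said to be of higher index, if p ≥ 2.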

According to the definition of the perturbation index, higher index DAEs are ill-conditioned in the sense that small perturbations with high frequencies, i.e., with large derivatives, can have a considerable influence on the solution of a higher index DAE, as can be seen in (2.15). For some time it was believed that the difference between perturbation index and differentiation index is at most one, until it was shown in [34] that the difference between perturbation index and differentiation index can be arbitrarily large. However, for the subclass of Hessenberg DAEs as defined in Definition 2.3 both index concepts (and actually all other relevant index concepts) coincide.
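The effect of a small but highly oscillatory perturbation can be illustrated with a toy index-two system, x′ = y, 0 = x − sin t, whose perturbed constraint reads 0 = x − sin t − δ(t). The system, the perturbation δ(t) = ε sin(ωt), and all names in the following sketch are assumptions chosen for illustration. The perturbed solution is available in closed form, so no integrator is needed.

```python
import math


def max_errors(eps, omega, t_end=1.0, samples=20001):
    """Return (max |delta|, max |x error|, max |y error|) on [0, t_end].

    Perturbed solution of the toy problem: x = sin t + delta, y = cos t + delta'.
    """
    max_delta = max_dx = max_dy = 0.0
    for i in range(samples):
        t = t_end * i / (samples - 1)
        delta = eps * math.sin(omega * t)
        ddelta = eps * omega * math.cos(omega * t)  # derivative of the perturbation
        x_pert = math.sin(t) + delta
        y_pert = math.cos(t) + ddelta
        max_delta = max(max_delta, abs(delta))
        max_dx = max(max_dx, abs(x_pert - math.sin(t)))
        max_dy = max(max_dy, abs(y_pert - math.cos(t)))
    return max_delta, max_dx, max_dy


max_delta, max_dx, max_dy = max_errors(eps=1e-3, omega=1e3)
print(max_delta, max_dx, max_dy)
```

The perturbation and the error in x stay of size ε, whereas the error in the algebraic variable y is of size εω, reflecting the derivative of δ on the right-hand side of the estimate.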

The definition of the perturbation index shows that the degree of ill-conditioning increases with the perturbation index. Hence, in order to make a higher index DAE accessible to numerical methods it is advisable and common practice to reduce the perturbation index of a DAE. A straightforward idea is to replace the original DAE by a mathematically equivalent DAE with lower perturbation index. The index reduction process itself is nontrivial for general DAEs, since one has to ensure that it is actually the perturbation index, which is being reduced (and not some other index like the differentiation index).

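For Hessenberg DAEs, however, the index reduction process is straightforward as perturbation index and differentiation index coincide. Consider a Hessenberg DAE of order k as in (2.7). Then, by replacing the algebraic constraint \(0 = g(t,x_{k-1}(t))\) by one of the hidden constraints \(g^{(\,j)}\), j ∈ { 1, …, k − 1}, defined in (2.11) or (2.12) we obtain the Hessenberg DAE

$$\displaystyle{ \begin{array}{rcl} x_{1}^{{\prime}}(t)& =&f_{1}(t,y(t),x_{1}(t),\ldots,x_{k-1}(t)), \\ x_{i}^{{\prime}}(t)& =&f_{i}(t,x_{i-1}(t),\ldots,x_{k-1}(t)),\qquad i = 2,\ldots,k - 1, \\ 0& =&g^{(\,j)}(t,x_{k-1-j}(t),\ldots,x_{k-1}(t)),\end{array} }$$(2.17)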

where we use the setting \(x_{0}:= y\) for notational convenience. The Hessenberg DAE in (2.17) has perturbation index k − j. Hence, this simple index reduction strategy actually reduces the perturbation index, and it leads to a mathematically equivalent DAE with the same solution as the original DAE, if the initial values \(x(t_{0})\) and \(y(t_{0})\) satisfy the algebraic constraints \(g^{(\ell)}(t_{0},x_{k-1-\ell}(t_{0}),\ldots,x_{k-1}(t_{0})) = 0\) for all ℓ = 0, …, k − 1.

On the other hand, the index reduced DAE (2.17) in general permits additional solutions for those initial values \(x(t_{0})\) and \(y(t_{0})\), which merely satisfy the algebraic constraints \(g^{(\ell)}(t_{0},x_{k-1-\ell}(t_{0}),\ldots,x_{k-1}(t_{0})) = 0\) for all ℓ = j, …, k − 1, but not the neglected algebraic constraints with index ℓ = 0, …, j − 1. In the most extreme case j = k − 1 (the reduced DAE has index one) \(x(t_{0})\) can be chosen arbitrarily (assuming that the remaining algebraic constraint can be solved for \(y(t_{0})\) given the value of \(x(t_{0})\)). The following theorem shows that the use of inconsistent initial values leads to a polynomial drift off the neglected algebraic constraints in time, compare [73, Sect. VII.2].

Theorem 2.1

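Consider the Hessenberg DAE of order k in (2.7) and the index reduced DAE in (2.17) with j ∈ {1, …, k − 1}. Let x(t) and y(t) be a solution of (2.17) such that the initial values \(x(t_{0})\) and \(y(t_{0})\) satisfy the algebraic constraints \(g^{(\ell)}(t_{0},x_{k-1-\ell}(t_{0}),\ldots,x_{k-1}(t_{0})) = 0\) for all ℓ = j, …, k − 1. Then for ℓ = 1, …, j and \(t \geq t_{0}\) we have

$$\displaystyle{ g^{(\,j-\ell)}(t,x_{k-1-(\,j-\ell)}(t),\ldots,x_{k-1}(t)) =\sum _{\nu =0}^{\ell-1} \frac{1} {\nu !}(t - t_{0})^{\nu }g^{(\,j-\ell+\nu )}[t_{0}] }$$(2.18)

with \(g^{(\,j-\ell+\nu )}[t_{0}]:= g^{(\,j-\ell+\nu )}(t_{0},x_{k-1-(\,j-\ell+\nu )}(t_{0}),\ldots,x_{k-1}(t_{0}))\).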

Proof

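We use the abbreviation \(g^{(\ell)}[t]\) for \(g^{(\ell)}(t,x_{k-1-\ell}(t),\ldots,x_{k-1}(t))\) for notational convenience. Observe that

$$\displaystyle{ \frac{d} {dt}g^{(\ell)}[t] = g^{(\ell+1)}[t],\qquad \ell = 0,\ldots,j - 1, }$$

and thus

$$\displaystyle{ g^{(\ell)}[t] = g^{(\ell)}[t_{0}] +\int _{ t_{0}}^{t}g^{(\ell+1)}[\tau ]\,d\tau. }$$

We have \(g^{(\,j)}[t] = 0\) and thus for ℓ = 1:

$$\displaystyle{ g^{(\,j-1)}[t] = g^{(\,j-1)}[t_{0}]. }$$

This proves (2.18) for ℓ = 1. Inductively we obtain

$$\displaystyle{ g^{(\,j-\ell)}[t] = g^{(\,j-\ell)}[t_{0}] +\int _{ t_{0}}^{t}\sum _{\nu =0}^{\ell-2} \frac{1} {\nu !}(\tau -t_{0})^{\nu }g^{(\,j-\ell+1+\nu )}[t_{0}]\,d\tau =\sum _{ \nu =0}^{\ell-1} \frac{1} {\nu !}(t - t_{0})^{\nu }g^{(\,j-\ell+\nu )}[t_{0}], }$$

which proves the assertion. □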

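We investigate the practically relevant index-three case in more detail and consider the reduction to index one (i.e., k = 3 and j = 2). In this case Theorem 2.1 yields

$$\displaystyle{ g^{(1)}[t] = g^{(1)}[t_{0}], }$$(2.19)

$$\displaystyle{ g^{(0)}[t] = g^{(0)}[t_{0}] + (t - t_{0})\,g^{(1)}[t_{0}]. }$$(2.20)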

The drift-off property of the index reduced DAE causes difficulties for numerical discretization methods as the subsequent result shows, compare [73, Sect. VII.2].

Theorem 2.2

Consider the DAE (2.7) with k = 3 and the index reduced problem (2.17) with j = 2. Let \(z(t;t_{m},z_{m})\) denote the solution of the latter at time t with initial value \(z_{m}\) at \(t_{m}\), where \(z = (x_{1},x_{2},y)^{\top }\) denotes the vector of differential and algebraic states. Suppose the initial value \(z_{0}\) at \(t_{0}\) satisfies \(g^{(0)}[t_{0}] = 0\) and \(g^{(1)}[t_{0}] = 0\).

Let a numerical method generate approximations \(z_{n} = (x_{1,n},x_{2,n},y_{n})^{\top }\) of \(z(t_{n};t_{0},z_{0})\) at time points \(t_{n} = t_{0} + nh\), \(n \in \mathbb{N}_{0}\), with stepsize h > 0. Suppose the numerical method is of order \(p \in \mathbb{N}\), i.e., the local error satisfies

Then, for \(n \in \mathbb{N}\) the algebraic constraint \(g^{(0)} = g\) satisfies the estimate

with constants C, \(L_{0}\), and \(L_{1}\).

Proof

Since \(z_{0}\) satisfies \(g^{(0)}[t_{0}] = 0\) and \(g^{(1)}[t_{0}] = 0\), the solution \(z(t;t_{0},z_{0})\) satisfies these constraints for every t. For notational convenience we use the notation \(g^{(0)}(t,z(t))\) instead of \(g^{(0)}(t,x_{2}(t))\) and likewise for \(g^{(1)}\). For a given \(t_{n}\) we have

Exploitation of (2.19)–(2.20) with \(t_{0}\) replaced by \(t_{m}\) and \(t_{m+1}\), respectively, yields

where \(L_{0}\) and \(L_{1}\) are Lipschitz constants of \(g^{(0)}\) and \(g^{(1)}\). Together with (2.22) we thus proved the estimate

□

The estimate (2.21) shows that the numerical solution may violate the algebraic constraint with a quadratic drift term in t n for the setting in Theorem 2.2. This drift-off effect may lead to useless numerical simulation results, especially on long time horizons. For DAEs with even higher index, the situation becomes worse as the degree of the polynomial drift term depends on the j in (2.17), i.e., on the number of differentiations used in the index reduction.
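The drift-off can be observed qualitatively with a planar pendulum that is integrated in its index-one form, i.e., with the multiplier eliminated through the acceleration-level constraint. The following minimal sketch uses the explicit Euler method; the pendulum data, the constraint g(q) = q₁² + q₂² − ℓ², the sign convention m v′ = f − g_q′ᵀλ, and all names are assumptions made for illustration.

```python
GRAV, MASS, LENGTH = 9.81, 1.0, 1.0  # illustrative pendulum data (assumptions)


def rhs(q, v):
    """Index-one right-hand side: lambda follows from the acceleration-level constraint."""
    qq = q[0] * q[0] + q[1] * q[1]
    lam = MASS * (v[0] * v[0] + v[1] * v[1] - GRAV * q[1]) / (2.0 * qq)
    acc = (-2.0 * q[0] * lam / MASS, -GRAV - 2.0 * q[1] * lam / MASS)
    return v, acc


def position_drift(t_end, h=1e-3):
    """Integrate with explicit Euler and return |g(q)| at t_end."""
    q, v = (LENGTH, 0.0), (0.0, 0.0)  # consistent start: horizontal, at rest
    for _ in range(round(t_end / h)):
        dq, dv = rhs(q, v)
        q = (q[0] + h * dq[0], q[1] + h * dq[1])
        v = (v[0] + h * dv[0], v[1] + h * dv[1])
    return abs(q[0] ** 2 + q[1] ** 2 - LENGTH ** 2)


drift_short = position_drift(1.0)
drift_long = position_drift(10.0)
print(drift_short, drift_long)
```

Only the acceleration-level constraint is enforced in each step, so the position-level residual is free to accumulate discretization errors and grows with the length of the time horizon.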

2.2 Stabilization Techniques

The basic index reduction approach in the previous section may lead to unsatisfactory numerical results. One possibility to avoid the drift-off on numerical level is to perform a projection step onto the neglected algebraic constraints after each successful integration step for the index reduced system, see [18, 47].
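A projection step of this kind can be sketched for the planar pendulum as follows. After each explicit Euler step of the index-one system, the position is projected orthogonally onto the circle and the velocity onto its tangent. The pendulum data, the sign convention, and all names are illustrative assumptions; the schemes in [18, 47] are more general.

```python
import math

GRAV, MASS, LENGTH = 9.81, 1.0, 1.0  # illustrative pendulum data (assumptions)


def rhs(q, v):
    """Index-one right-hand side with lambda from the acceleration-level constraint."""
    qq = q[0] ** 2 + q[1] ** 2
    lam = MASS * (v[0] ** 2 + v[1] ** 2 - GRAV * q[1]) / (2.0 * qq)
    return v, (-2.0 * q[0] * lam / MASS, -GRAV - 2.0 * q[1] * lam / MASS)


def project(q, v):
    """Project q onto |q| = LENGTH, then v onto the velocity constraint q.v = 0."""
    norm = math.hypot(q[0], q[1])
    q = (LENGTH * q[0] / norm, LENGTH * q[1] / norm)
    qv = (q[0] * v[0] + q[1] * v[1]) / LENGTH ** 2
    v = (v[0] - qv * q[0], v[1] - qv * q[1])
    return q, v


def simulate(t_end, h=1e-3, use_projection=True):
    """Explicit Euler on the index-one system, optionally followed by projection."""
    q, v = (LENGTH, 0.0), (0.0, 0.0)
    for _ in range(round(t_end / h)):
        dq, dv = rhs(q, v)
        q = (q[0] + h * dq[0], q[1] + h * dq[1])
        v = (v[0] + h * dv[0], v[1] + h * dv[1])
        if use_projection:
            q, v = project(q, v)
    return q, v


q_end, v_end = simulate(5.0)
position_residual = abs(q_end[0] ** 2 + q_end[1] ** 2 - LENGTH ** 2)
velocity_residual = abs(q_end[0] * v_end[0] + q_end[1] * v_end[1])
print(position_residual, velocity_residual)
```

For this simple constraint both projections are available in closed form; for general constraints a few Newton-type iterations would typically replace them.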

Another idea is to use stabilization techniques to stabilize the index reduced DAE itself. The common approaches are Baumgarte stabilization, Gear–Gupta–Leimkuhler stabilization, and the use of overdetermined DAEs.

2.2.1 Baumgarte Stabilization

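The Baumgarte stabilization [22] was originally introduced for mechanical multibody systems (2.13). It can be extended to Hessenberg DAEs in a formal way. The idea is to replace the algebraic constraint in (2.7) by a linear combination of original and hidden algebraic constraints \(g^{(\ell)}\), ℓ ∈ { 0, 1, …, k − 1}. With the setting \(x_{0}:= y\), the resulting DAE reads as follows:

$$\displaystyle{ \begin{array}{rcl} x_{1}^{{\prime}}(t)& =&f_{1}(t,x_{0}(t),x_{1}(t),\ldots,x_{k-1}(t)), \\ x_{i}^{{\prime}}(t)& =&f_{i}(t,x_{i-1}(t),\ldots,x_{k-1}(t)),\qquad i = 2,\ldots,k - 1, \\ 0& =&\sum _{\ell=0}^{k-1}\alpha _{\ell}\,g^{(\ell)}(t,x_{k-1-\ell}(t),\ldots,x_{k-1}(t)).\end{array} }$$(2.23)

The DAE (2.23) has index one. The weights \(\alpha _{\ell}\), ℓ = 0, 1, …, k − 1, with \(\alpha _{k-1} = 1\) have to be chosen such that the associated differential equation

$$\displaystyle{ 0 =\sum _{ \ell=0}^{k-1}\alpha _{\ell}\,\eta ^{(\ell)}(t) }$$

is asymptotically stable with \(\|\eta ^{(\ell)}(t)\| \longrightarrow 0\) for ℓ ∈ { 0, …, k − 2} as t ⟶ ∞, compare [73, Sect. VII.2]. A proper choice of the weights is crucial since a balance between quick damping and low degree of stiffness has to be found.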

The Baumgarte stabilization was used for real-time simulations in [14, 31], but on the index-two level and not on the index-one level.
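For the index-three pendulum, a Baumgarte-type stabilization on the index-one level can be sketched as follows. The weights α₀ = γ², α₁ = 2γ, α₂ = 1 are one possible choice (a double root −γ of the characteristic polynomial), and the pendulum data, the sign convention m v′ = f − g_q′ᵀλ, and all names are assumptions made for illustration.

```python
GRAV, MASS, LENGTH = 9.81, 1.0, 1.0  # illustrative pendulum data (assumptions)


def multiplier(q, v, gamma):
    """lambda from g'' + 2*gamma*g' + gamma**2*g = 0 with g = |q|^2 - LENGTH^2."""
    qq = q[0] ** 2 + q[1] ** 2
    vv = v[0] ** 2 + v[1] ** 2
    qv = q[0] * v[0] + q[1] * v[1]
    g = qq - LENGTH ** 2
    return MASS * (vv - GRAV * q[1] + 2.0 * gamma * qv + 0.5 * gamma ** 2 * g) / (2.0 * qq)


def position_drift(t_end, gamma, h=1e-3):
    """Explicit Euler on the stabilized system; gamma = 0 is plain index reduction."""
    q, v = (LENGTH, 0.0), (0.0, 0.0)
    for _ in range(round(t_end / h)):
        lam = multiplier(q, v, gamma)
        acc = (-2.0 * q[0] * lam / MASS, -GRAV - 2.0 * q[1] * lam / MASS)
        q = (q[0] + h * v[0], q[1] + h * v[1])
        v = (v[0] + h * acc[0], v[1] + h * acc[1])
    return abs(q[0] ** 2 + q[1] ** 2 - LENGTH ** 2)


drift_plain = position_drift(10.0, gamma=0.0)
drift_stabilized = position_drift(10.0, gamma=10.0)
print(drift_plain, drift_stabilized)
```

Larger values of γ damp constraint violations faster but make the system stiffer, which is the trade-off mentioned above; with an explicit method the product γh has to remain small.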

2.2.2 Gear–Gupta–Leimkuhler Stabilization

The Gear–Gupta–Leimkuhler (GGL) stabilization [64] does not neglect algebraic constraints but couples them to the index reduced DAE using an additional multiplier. Consider the mechanical multibody system (2.13). The GGL stabilization reads as follows:

The DAE is of Hessenberg type (if multiplied by \(M^{-1}\)) and it has index two, if M is symmetric and positive definite and \(g_{q}^{{\prime}}\) has full rank. Differentiation of the first algebraic equation yields

Since \(g_{q}^{{\prime}}\) is supposed to be of full rank, the matrix \(g_{q}^{{\prime}}[t]g_{q}^{{\prime}}[t]^{\top }\) is non-singular and the equation implies μ ≡ 0.
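The argument can be written out as follows. The display is a sketch of the commonly used GGL form and assumes the sign convention \(Mv^{{\prime}} = f - g_{q}^{{\prime}\top }\lambda\) for (2.13); it is not a verbatim reproduction of the original equations.

```latex
% Assumed GGL form for the multibody system (sign convention is an assumption):
\begin{align*}
q' &= v - g_{q}'(t,q)^{\top}\mu, \\
M(t,q)\,v' &= f(t,q,v) - g_{q}'(t,q)^{\top}\lambda, \\
0 &= g(t,q), \\
0 &= g_{t}'(t,q) + g_{q}'(t,q)\,v.
\end{align*}
% Differentiating 0 = g(t,q) and using the velocity-level constraint:
\begin{align*}
0 = g_{t}' + g_{q}'\,q'
  = \underbrace{g_{t}' + g_{q}'\,v}_{=\,0} - g_{q}'\,g_{q}'^{\top}\mu
  = -\,g_{q}'\,g_{q}'^{\top}\mu .
\end{align*}
```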

The idea of the GGL stabilization can be extended to Hessenberg DAEs. To this end consider (2.7) and the index reduced DAE (2.17) with j ∈ { 1, …, k − 1} fixed. Define

and suppose the Jacobian

has full rank. A stabilized version of (2.17) is given by

where μ is an additional algebraic variable, \(x = (x_{1},\ldots,x_{k-1})^{\top }\), and \(f = (\,f_{1},\ldots,f_{k-1})^{\top }\). The stabilized DAE has index max{2, k − j}. Note that

Moreover,

and thus μ ≡ 0 since \(G_{x}^{{\prime}}\) was supposed to have full rank.

2.2.3 Stabilization by Over-Determination

The GGL stabilization approaches for the mechanical multibody system in (2.24) and the Hessenberg DAE in (2.25) are mathematically equivalent to the overdetermined DAEs

and

respectively, because the additional algebraic variable μ vanishes in either case. Hence, from an analytical point of view there is no difference between the respective systems. A different treatment is necessary from the numerical point of view, though. The GGL stabilized DAEs in (2.24) and (2.25) can be solved by standard discretization schemes, like BDF methods or methods of Runge–Kutta type, provided those are suitable for higher index DAEs. In contrast, the overdetermined DAEs require tailored numerical methods that are capable of dealing with overdetermined linear equations, which arise internally in each integration step. Typically, such overdetermined equations are solved in a least-squares sense, compare [56, 57] for details.

3 Consistent Initialization and Influence of Parameters

One of the crucial issues when dealing with DAEs is that a DAE in general only permits a solution for properly defined initial values, the so-called consistent initial values. The initial values not only have to satisfy those algebraic constraints that are explicitly present in the DAE, but hidden constraints have to be satisfied as well.

3.1 Consistent Initial Values

For the Hessenberg DAE (2.7) consistency is defined as follows.

Definition 3.1 (Consistent Initial Value for Hessenberg DAEs)

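The initial values \(x(t_{0}) = (x_{1}(t_{0}),\ldots,x_{k-1}(t_{0}))^{\top }\) and \(y(t_{0})\) are consistent with (2.7), if the equations

$$\displaystyle{ 0 = g^{(\ell)}(t_{0},x_{k-1-\ell}(t_{0}),\ldots,x_{k-1}(t_{0})),\qquad \ell = 0,\ldots,k - 2, }$$(3.1)

$$\displaystyle{ 0 = g^{(k-1)}(t_{0},y(t_{0}),x_{1}(t_{0}),\ldots,x_{k-1}(t_{0})) }$$(3.2)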

hold.

Finding a consistent initial value for a Hessenberg DAE typically consists of two steps. Firstly, a suitable \(x(t_{0})\) subject to the constraints (3.1) has to be determined. Secondly, given \(x(t_{0})\) with (3.1), Eq. (3.2) can be solved for \(y_{0} = y(t_{0})\) by Newton’s method, if the matrix \(R_{0} = \partial g^{(k-1)}/\partial y\) is non-singular at a solution (assuming that a solution exists). For mechanical multibody systems even a linear equation arises in the second step.

Example 3.1

Consider the mechanical multibody system (2.13). A consistent initial value \((q_{0},v_{0},\lambda _{0})\) at \(t_{0}\) must satisfy

with

The latter two equations yield a linear equation for \(v_{0}^{{\prime}}\) and \(\lambda _{0}\):

The matrix on the left-hand side is non-singular, if M is symmetric and positive definite and \(g_{q}^{{\prime}}(t_{0},q_{0})\) is of full rank.
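For a planar pendulum the linear system for the initial acceleration and the multiplier can be set up and solved directly. The following sketch assumes the constraint g(q) = q₁² + q₂² − ℓ², the sign convention m v′ = f − g_q′ᵀλ, and illustrative data; the small Gaussian elimination routine is included only to keep the example self-contained.

```python
GRAV, MASS, LENGTH = 9.81, 1.0, 1.0  # illustrative pendulum data (assumptions)


def solve_linear(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def initial_acceleration_and_multiplier(q0, v0):
    """Solve [[M, g_q^T], [g_q, 0]] (v0', lambda0) = (f, -g_qq(v0, v0))."""
    gq = [2.0 * q0[0], 2.0 * q0[1]]
    matrix = [[MASS, 0.0, gq[0]],
              [0.0, MASS, gq[1]],
              [gq[0], gq[1], 0.0]]
    rhs = [0.0, -MASS * GRAV, -2.0 * (v0[0] ** 2 + v0[1] ** 2)]
    return solve_linear(matrix, rhs)


q0, v0 = (1.0, 0.0), (0.0, 1.0)  # satisfies position and velocity constraints
position_residual = q0[0] ** 2 + q0[1] ** 2 - LENGTH ** 2
velocity_residual = 2.0 * (q0[0] * v0[0] + q0[1] * v0[1])
a1, a2, lam0 = initial_acceleration_and_multiplier(q0, v0)
print(a1, a2, lam0)
```

The positions and velocities have to satisfy the position-level and velocity-level constraints beforehand; the linear solve then delivers the remaining consistent components.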

Definition 3.2 (Consistent Initial Value for General DAEs, Compare [29, Sect. 5.3.4])

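For a general DAE (1.1) with differentiation index d the initial value \(z_{0} = z(t_{0})\) is said to be consistent at \(t_{0}\), if the derivative array

$$\displaystyle{ F^{(\,j)}(t_{0},z_{0},z_{0}^{{\prime}},\ldots,z_{0}^{(\,j+1)}) = 0,\qquad j = 0,1,\ldots,d, }$$(3.3)

in (2.4) has a solution \((z_{0},z_{0}^{{\prime}},\ldots,z_{0}^{(d+1)})\).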

Note that the system of nonlinear equations (3.3) in general has many solutions and additional conditions are required to obtain a particular consistent initial value, which might be relevant for a particular application. This can be achieved for instance by imposing additional constraints

which are known to hold for a specific application, compare [29, Sect. 5.3.4]. Of course, such additional constraints must not contradict the equations in (3.3).

If the user is not able to formulate relations in (3.4) such that the combined system of equations (3.3) and (3.4) returns a unique solution, then a least-squares approach could be used to find a consistent initial value closest to a ‘desired’ initial value, compare [33]:

In practical computations the major challenge for higher index DAEs is to obtain analytical expressions or numerical approximations of the derivatives in \(F^{(\,j)}\), j = 1, …, d. For this purpose computer algebra packages like MAPLE, MATHEMATICA, or the symbolic toolbox of MATLAB can be used. Algorithmic differentiation tools are suitable as well, compare [72] for an overview. A potential issue is redundancy in the constraints (3.3) and the identification of the relevant equations in the derivative array. Approaches for the consistent initialization of general DAEs can be found in [1, 30, 35, 51, 71, 78, 93, 108]. A different approach is used in [127] in the context of shooting methods for parameter identification problems or optimal control problems. Herein, the algebraic constraints of the DAE are relaxed such that they are satisfied for any initial value. Then, the relaxation terms are driven to zero in the superordinate optimization problem in order to ensure consistency with the original DAE.

3.2 Dependence on Parameters

Initial values may depend on parameters that are present in the DAE. Hence, either consistent initial values have to be recomputed for perturbed parameters or a parametric sensitivity analysis has to be performed, compare [66, 69]. Such issues frequently arise in the context of optimal control problems or parameter identification problems subject to DAEs, compare [68].

Example 3.2

Consider the equations of motion of a pendulum of mass m and length ℓ in the plane:

Herein, \((q_{1},q_{2})\) denotes the pendulum’s position, \((v_{1},v_{2})\) its velocity, and λ the stress in the bar. A consistent initial value \((q_{1,0},q_{2,0},v_{1,0},v_{2,0},\lambda _{0})\) has to satisfy the equations

Apparently, the algebraic component \(\lambda _{0}\) depends on the parameter \(p = (m,\ell,g)^{\top }\) according to

But in addition, the positions \(q_{1,0}\) and \(q_{2,0}\) depend on ℓ through the relation (3.5). So, if ℓ changes, then \(q_{1,0}\) and/or \(q_{2,0}\) have to change as well subject to (3.5) and (3.6). However, those equations in general do not uniquely define \(q_{1,0},q_{2,0},v_{1,0},v_{2,0}\), and the question arises which set of values one should choose.

Firstly, we focus on the recomputation of an initial value for perturbed parameters. As the previous example shows, there is not a unique way to determine such a consistent initial value. A common approach is to use a projection technique, compare, e.g., [69] for a class of index-two DAEs, [68, Sect. 4.5.1] for Hessenberg DAEs, or [33] for general DAEs.

Consider the general parametric DAE

and the corresponding derivative array

Remark 3.1

Please note that the differentiation index d of the general parametric DAE (3.8) may depend on p. For simplicity, we assume throughout that this is not the case (at least locally around a fixed nominal parameter).

Let \(\tilde{p}\) be a given parameter. Suppose \(\tilde{z}_{0} = z_{0}(\,\tilde{p})\) with \(\tilde{z}_{0}^{{\prime}} = z_{0}^{{\prime}}(\,\tilde{p}),\ldots,\tilde{z}_{0}^{(d+1)} = z_{0}^{(d+1)}(\,\tilde{p})\) is consistent. In order to find a consistent initial value for p, which is supposed to be close to \(\tilde{p}\), solve the following parametric constrained least-squares problem:

LSQ(p): Minimize

with respect to \((\xi _{0},\xi _{0}^{{\prime}},\ldots,\xi _{0}^{(d+1)})^{\top }\) subject to the constraints

Remark 3.2

In case of a parametric Hessenberg DAE it would be sufficient to consider the hidden constraints up to order k − 2 as the constraints in LSQ(p) and to compute a consistent algebraic component afterwards. Moreover, the quantities \(t_{0}\) and \(\tilde{z}_{0}^{(\,j)}\), j = 0, …, d + 1, could be considered as parameters of LSQ(p) as well, but here we are only interested in p.

The least-squares problem LSQ(p) is a parametric nonlinear optimization problem and allows for a sensitivity analysis in the spirit of [54] in order to investigate the sensitivity of a solution of LSQ(p) for p close to some nominal value \(\hat{p}\). Let

with \(\xi = (\xi _{0},\xi _{0}^{{\prime}},\ldots,\xi _{0}^{(d+1)})^{\top }\), \(\tilde{z} = (\tilde{z}_{0},\tilde{z}_{0}^{{\prime}},\ldots,\tilde{z}_{0}^{(d+1)})^{\top }\), and

denote the Lagrange function of LSQ(p).

Theorem 3.1 (Sensitivity Theorem, Compare [54])

Let G in (3.10) be twice continuously differentiable and \(\hat{p}\) a nominal parameter. Let \(\hat{\xi }\) be a local minimum of LSQ(\(\hat{p}\)) with Lagrange multiplier \(\hat{\mu }\) such that the following assumptions hold:

(a) Linear independence constraint qualification: \(G_{\xi }^{{\prime}}(\hat{\xi },\hat{p})\) has full rank.

(b) KKT conditions: \(0 = \nabla _{\xi }L(\hat{\xi },\hat{\mu },\hat{p})\) with L from (3.9).

(c) Second-order sufficient condition:

$$\displaystyle{L_{\xi \xi }^{{\prime\prime}}(\hat{\xi },\hat{\mu },\hat{p})(h,h) > 0\qquad \forall h\not =0:\ G_{\xi }^{{\prime}}(\hat{\xi },\hat{p})h = 0.}$$

Then there exist neighborhoods \(B_{\epsilon }(\hat{p})\) and \(B_{\delta }(\hat{\xi },\hat{\mu })\), such that LSQ(p) has a unique local minimum

for each \(p \in B_{\epsilon }(\hat{p})\). In addition, \((\xi (\,p),\mu (\,p))\) is continuously differentiable with respect to p with

The second equation in (3.11) reads

and in more detail using (3.10),

for j = 0, 1, …, d. Let us define \(S_{0}^{(\ell)}:= (\xi _{0}^{(\ell)})^{{\prime}}(\hat{p})\) for ℓ = 0, …, d + 1. Then, we obtain

and in particular for j = 0,

which is the linearization of (3.8) around \((t_{0},\xi _{0},\xi _{0}^{{\prime}},\hat{p})\) with respect to p. Taking into account the definition of the further components F ( j), j = 1, …, d + 1, of the derivative array, compare (2.3), we recognize that (3.12) provides a linearization of (2.3) with respect to p. Hence, the settings

provide consistent initial values for the sensitivity DAE

where S(t): = ∂ z(t; p)∕∂ p denotes the sensitivity of the solution of (3.8) with respect to the parameter p, compare Sect. 7. Herein, it is assumed that F is sufficiently smooth with respect to all arguments.

In summary, the projection approach using LSQ(p) offers several benefits. Firstly, it allows one to compute consistent initial values for the DAE itself. Secondly, the sensitivity analysis provides consistent initial values for the sensitivity DAE. Finally, the sensitivity analysis can be used to predict consistent initial values under perturbations through the Taylor expansion

for \(p \in B_{\varepsilon }(\hat{p})\).

4 Integration Methods

A vast number of numerical discretization schemes exist for DAEs. Most of them were originally designed for ODEs, such as BDF methods or Runge–Kutta methods. The methods for DAEs are typically (at least in part) implicit owing to the presence of algebraic equations. It is beyond the scope of the paper to provide a comprehensive overview of all the existing numerical discretization schemes for DAEs, since excellent textbooks with convergence results and many details are available, for instance [29, 73, 75–77, 90, 92, 134]. Our intention is to discuss the most commonly used methods, their construction principles, and some of their features. Efficient implementations rely on a number of additional ideas to reduce the computational cost.

All methods work on a grid

with \(N \in \mathbb{N}\) and step-sizes \(h_{k} = t_{k+1} - t_{k}\), k = 0, …, N − 1. The maximum step-size is denoted by \(h =\max \limits _{k=0,\ldots,N-1}h_{k}\). The methods generate a grid function \(z_{h}: \mathbb{G}_{h}\longrightarrow \mathbb{R}^{n}\) with \(z_{h}(t) \approx z(t)\) for \(t \in \mathbb{G}_{h}\), where z(t) denotes the solution of (1.1) with a consistent initial value \(z_{0}\). The discretization schemes can be grouped into one-step methods with

for a given consistent initial value \(z_{h}(t_{0}) = z_{0}\) and s-stage multi-step methods with

for given consistent initial values \(z_{h}(t_{0}) = z_{0},\ldots,z_{h}(t_{s-1}) = z_{s-1}\). Note that multi-step methods with s > 1 require an initialization procedure to compute \(z_{1},\ldots,z_{s-1}\). This can be realized by performing s − 1 steps of a suitable one-step method or by using multi-step methods with 1, 2, …, s − 1 stages successively for the first s − 1 steps.

The aim is to construct convergent methods such that the global error \(e_{h}: \mathbb{G}_{h}\longrightarrow \mathbb{R}^{n}\) defined by

satisfies

or even exhibits the order of convergence \(p \in \mathbb{N}\), i.e.

Herein, \(\varDelta _{h}:\{ z: [t_{0},t_{f}]\longrightarrow \mathbb{R}^{n}\}\longrightarrow \{z_{h}: \mathbb{G}_{h}\longrightarrow \mathbb{R}^{n}\}\) denotes the restriction operator onto the set of grid functions on \(\mathbb{G}_{h}\) defined by \(\varDelta _{h}(z)(t) = z(t)\) for \(t \in \mathbb{G}_{h}\).

A convergence proof for a specific discretization scheme typically follows the reasoning

$$\displaystyle{\text{consistency} + \text{stability}\quad \Longrightarrow \quad \text{convergence},}$$

compare [131]. Herein, consistency is not to be confused with consistent initial values. Instead, consistency of a discretization method measures how well the exact solution satisfies the discretization scheme. Detailed definitions of consistency and stability and convergence proofs for various classes of DAEs (index-one, Hessenberg DAEs up to order 3, constant/variable step-sizes) can be found in the above-mentioned textbooks [29, 73, 75–77, 90, 92, 134]. In general, one cannot expect the same order of convergence for differential and algebraic variables for higher index DAEs.
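The order of convergence can also be estimated empirically by comparing the global error on two grids with step-sizes h and h∕2. The following minimal Python sketch does this for the implicit Euler method applied to a small semi-explicit index-one test problem. The test DAE x′ = −2x + y, 0 = x − y with solution x(t) = y(t) = exp(−t) and all function names are illustrative assumptions and are not taken from the cited references.

```python
import math
import numpy as np

def implicit_euler_dae(h, t_end=1.0):
    """Implicit Euler for the assumed test DAE x' = -2x + y, 0 = x - y.

    Returns the maximum global error over all grid points, using the
    exact solution x(t) = y(t) = exp(-t) with x(0) = y(0) = 1.
    """
    n_steps = round(t_end / h)
    z = np.array([1.0, 1.0])  # consistent initial value (x0, y0)
    # One step solves the linear system
    #   (1/h + 2) x1 - y1 = x0 / h
    #           x1 - y1 = 0
    A = np.array([[1.0 / h + 2.0, -1.0],
                  [1.0, -1.0]])
    max_err = 0.0
    for k in range(1, n_steps + 1):
        z = np.linalg.solve(A, np.array([z[0] / h, 0.0]))
        exact = math.exp(-k * h)
        max_err = max(max_err, abs(z[0] - exact), abs(z[1] - exact))
    return max_err

def observed_order(h):
    """Estimate p from e(h) / e(h/2) ~ 2**p."""
    return math.log2(implicit_euler_dae(h) / implicit_euler_dae(h / 2.0))

if __name__ == "__main__":
    print("global error:", implicit_euler_dae(0.01))
    print("observed order:", observed_order(0.01))
```

For this linear test problem the observed order should be close to one, in agreement with the first-order convergence of the implicit Euler method.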

4.1 BDF Methods

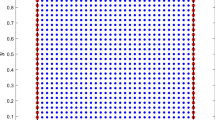

The Backward Differentiation Formulas (BDF) are implicit multi-step methods and were introduced in [40, 61]. A BDF method with \(s \in \mathbb{N}\) stages is based on the construction of interpolating polynomials, compare Fig. 1. Suppose the method has produced approximations \(z_{h}(t_{i+k})\), k = 0, …, s − 1, of z at the grid points \(t_{i+k}\), k = 0, …, s − 1. The aim is to determine an approximation \(z_{h}(t_{i+s})\) of \(z(t_{i+s})\), where i ∈ {0, …, N − s}.

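To this end, let P(t) be the interpolating polynomial of degree at most s with

$$\displaystyle{P(t_{i+k}) = z_{h}(t_{i+k}),\qquad k = 0,\ldots,s.}$$

The polynomial P can be expressed as

$$\displaystyle{P(t) =\sum _{k=0}^{s}z_{h}(t_{i+k})L_{k}(t),\qquad L_{k}(t) =\prod _{j=0,\,j\neq k}^{s} \frac{t - t_{i+j}} {t_{i+k} - t_{i+j}},}$$

where the \(L_{k}\)'s denote the Lagrange polynomials. Note that P interpolates the unknown vector \(z_{h}(t_{i+s})\), which is determined implicitly by the postulation that P satisfies the DAE (1.1) at \(t_{i+s}\), i.e.

$$\displaystyle{F(t_{i+s},z_{h}(t_{i+s}),P^{{\prime}}(t_{i+s})) = 0.}\tag{4.3}$$

The above representation of P yields

$$\displaystyle{P^{{\prime}}(t_{i+s}) =\sum _{k=0}^{s}z_{h}(t_{i+k})L_{k}^{{\prime}}(t_{i+s}) = \frac{1} {h_{i+s-1}}\sum _{k=0}^{s}\alpha _{k}z_{h}(t_{i+k})}$$

with \(\alpha _{k} = h_{i+s-1}L_{k}^{{\prime}}(t_{i+s})\), k = 0, …, s.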

Example 4.1

The BDF methods with s ≤ 6 and a constant step-size h read as follows, see [134, p. 335]:

Abbreviations: \(z_{i+k} = z_{h}(t_{i+k})\), k = 0, …, 6.
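The coefficients \(\alpha _{k} = h\,L_{k}^{{\prime}}(t_{i+s})\) of the constant step-size BDF methods can be computed directly from the Lagrange polynomials. The following Python sketch does so in exact rational arithmetic; it assumes a constant step-size scaled to h = 1 with nodes 0, 1, …, s, and the function name `bdf_coefficients` is hypothetical. The resulting method reads \(\sum _{k=0}^{s}\alpha _{k}z_{i+k} = h\,z^{{\prime}}\) at \(t_{i+s}\).

```python
from fractions import Fraction

def bdf_coefficients(s):
    """Return [alpha_0, ..., alpha_s] with alpha_k = L_k'(s).

    L_k is the Lagrange polynomial for the equidistant nodes 0, 1, ..., s
    (constant step-size, scaled to h = 1). The derivative is evaluated via
    L_k'(x) = sum_{m != k} 1/(x_k - x_m) * prod_{j != k, m} (x - x_j)/(x_k - x_j).
    """
    nodes = list(range(s + 1))
    x = Fraction(s)  # evaluation point t_{i+s}
    alphas = []
    for k in nodes:
        total = Fraction(0)
        for m in nodes:
            if m == k:
                continue
            term = Fraction(1, k - m)
            for j in nodes:
                if j != k and j != m:
                    term *= (x - j) / Fraction(k - j)
            total += term
        alphas.append(total)
    return alphas

if __name__ == "__main__":
    for s in range(1, 7):
        print(s, [str(a) for a in bdf_coefficients(s)])
```

For s = 1 this reproduces the implicit Euler method, \(z_{i+1} - z_{i} = h\,z^{{\prime}}\), and for s = 2 the familiar formula \(\tfrac{3}{2}z_{i+2} - 2z_{i+1} + \tfrac{1}{2}z_{i} = h\,z^{{\prime}}\).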

Introducing the expression for \(P^{{\prime}}(t_{i+s})\) into (4.3) yields the nonlinear equation

$$\displaystyle{F\left (t_{i+s},z_{h}(t_{i+s}), \frac{1} {h_{i+s-1}}\sum _{k=0}^{s}\alpha _{k}z_{h}(t_{i+k})\right ) = 0}\tag{4.4}$$

for \(z_{h}(t_{i+s})\). Suppose (4.4) possesses a solution \(z_{h}(t_{i+s})\) and the matrix pencil

$$\displaystyle{F_{z}^{{\prime}} + \frac{\alpha _{s}} {h_{i+s-1}}F_{z^{{\prime}}}^{{\prime}}}\tag{4.5}$$

is regular at this solution, i.e., there exists a step-size \(h_{i+s-1}\) such that the matrix (4.5) is non-singular. Then the implicit function theorem allows one to solve Eq. (4.4) locally for \(z_{h}(t_{i+s})\) and to express it in the form (4.2). In practice, Newton's method or the simplified Newton method is used to solve Eq. (4.4) numerically, which requires the non-singularity of the matrix in (4.5) at the Newton iterates.
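A single BDF step with Newton's method can be sketched as follows. The example uses the two-stage BDF method with constant step-size, started by one implicit Euler step, on an assumed nonlinear index-one test DAE x′ + y = 0, y³ + y − x³ − x = 0 (so that y = x and x(t) = exp(−t) for x(0) = 1). The test problem, the hand-coded Jacobians, and all function names are illustrative assumptions; production codes such as DASSL use considerably more refined strategies.

```python
import math
import numpy as np

def residual(z, zp):
    """F(z, z') for the assumed test DAE with z = (x, y)."""
    x, y = z
    return np.array([zp[0] + y, y**3 + y - x**3 - x])

def jacobians(z):
    """Partial derivatives F_z and F_{z'} of the test DAE."""
    x, y = z
    F_z = np.array([[0.0, 1.0],
                    [-3.0 * x**2 - 1.0, 3.0 * y**2 + 1.0]])
    F_zp = np.array([[1.0, 0.0],
                     [0.0, 0.0]])
    return F_z, F_zp

def bdf_step(history, alphas, h, tol=1e-10, max_iter=20):
    """Solve F(z_new, (1/h) * sum_k alpha_k z_k) = 0 for z_new by Newton.

    history holds z_i, ..., z_{i+s-1}; alphas holds alpha_0, ..., alpha_s.
    """
    lead = alphas[-1]
    past = sum(a * z for a, z in zip(alphas[:-1], history))
    z_new = history[-1].copy()  # predictor: last known value
    for _ in range(max_iter):
        zp = (lead * z_new + past) / h
        res = residual(z_new, zp)
        if np.linalg.norm(res) < tol:
            return z_new
        F_z, F_zp = jacobians(z_new)
        # Iteration matrix F_z + (alpha_s / h) F_{z'}
        z_new = z_new - np.linalg.solve(F_z + (lead / h) * F_zp, res)
    raise RuntimeError("Newton iteration did not converge")

def integrate(h, t_end=1.0):
    """BDF2 with constant step-size, initialized by one BDF1 step."""
    n = round(t_end / h)
    zs = [np.array([1.0, 1.0])]
    zs.append(bdf_step(zs[-1:], [-1.0, 1.0], h))
    for _ in range(n - 1):
        zs.append(bdf_step(zs[-2:], [0.5, -2.0, 1.5], h))
    return zs[-1]

if __name__ == "__main__":
    z = integrate(0.01)
    print("x(1) error:", abs(z[0] - math.exp(-1.0)), " y - x:", z[1] - z[0])
```

Note that each step requires the solution of a nonlinear system of dimension n = 2 only, independently of the number of stages s.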

BDF methods are appealing amongst implicit methods since the effort per integration step amounts to solving just one nonlinear equation of dimension n, whereas a fully implicit s-stage Runge–Kutta method requires solving a nonlinear equation of dimension n ⋅ s. For numerical purposes only the BDF methods with s ≤ 6 are relevant, since the methods for s > 6 are unstable. The maximal attainable order of convergence of an s-stage BDF method is s.

Convergence results assuming fixed step-sizes for BDF methods for certain subclasses of the general DAE (1.1), among them index-one problems and Hessenberg DAEs, can be found in [27, 63, 99, 111]. Variable step-sizes may result in non-convergent components of the algebraic variables for index-three Hessenberg DAEs, compare [64]. This is another motivation to use an index reducing stabilization technique as in Sect. 2.2.

The famous code DASSL, [29, 109], is based on BDF methods, but adds several features like an automatic step-size selection strategy, a variable order selection strategy, a root finding strategy, and a parametric sensitivity module to the basic BDF method. Moreover, the re-use of Jacobians for one or more integration steps and numerically efficient divided difference schemes for the calculation of the interpolating polynomial P increase the efficiency of the code. The code ODASSL by Führer [56] and Führer and Leimkuhler [57] extends DASSL to overdetermined DAEs, which occur, e.g., for the GGL stabilization in Sect. 2.2. In these codes, the error tolerances for the algebraic variables of higher index DAEs have to be scaled by powers of 1∕h compared to those of the differential states since otherwise the automatic step-size selection algorithm breaks down frequently, compare [110]. An enhanced version of DASSL is available in the package SUNDIALS, [80], which provides several methods (Runge–Kutta, Adams, BDF) for ODEs and DAEs in one software package.

4.2 Runge–Kutta Methods

A Runge–Kutta method with \(s \in \mathbb{N}\) stages for (1.1) is a one-step method of type

with the increment function

and the stage derivatives \(k_{j}(t,z,h)\), j = 1, …, s. The stage derivatives \(k_{j}\) are implicitly defined by the system of n ⋅ s nonlinear equations

where

are approximations of z at the intermediate time points \(t_{i} + c_{\ell }h\), ℓ = 1, …, s. The coefficients in the Runge–Kutta method are collected in the Butcher array

Commonly used Runge–Kutta methods for DAEs are the RADAU IIA methods and the Lobatto IIIA and IIIC methods. These methods are stiffly accurate, i.e., they satisfy \(c_{s} = 1\) and \(a_{sj} = b_{j}\) for j = 1, …, s. This is a very desirable property for DAEs since it implies that (4.9) and \(z_{i+1}^{(s)} = z_{h}(t_{i+1})\) hold at \(t_{i+1} = t_{i} + c_{s}h_{i}\). Runge–Kutta methods that are not stiffly accurate can be used as well. However, for those it has to be enforced that the approximation \(z_{h}(t_{i+1})\) satisfies the algebraic constraints of the DAE at \(t_{i+1}\). This can be achieved by projecting the output of the Runge–Kutta method onto the algebraic constraints, compare [18].

Example 4.2 (RADAU IIA)

The RADAU IIA methods with s = 1, 2, 3 are defined by the following Butcher arrays, compare [134, Example 6.1.5]:

The maximal attainable order of convergence is 2s − 1.
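The stiff accuracy property and the order 2s − 1 can be checked mechanically for a given Butcher array. The following Python sketch does this for the standard two-stage RADAU IIA coefficients in exact rational arithmetic. The order check uses the classical order conditions for ODEs up to order three only; order conditions for DAEs involve additional requirements, and the helper names are illustrative assumptions.

```python
from fractions import Fraction as Fr

# Standard two-stage RADAU IIA coefficients (Butcher array: c | A over b)
A = [[Fr(5, 12), Fr(-1, 12)],
     [Fr(3, 4), Fr(1, 4)]]
b = [Fr(3, 4), Fr(1, 4)]
c = [Fr(1, 3), Fr(1)]

def is_stiffly_accurate(A, b, c):
    """Check c_s = 1 and a_{sj} = b_j for j = 1, ..., s."""
    return c[-1] == 1 and A[-1] == b

def satisfies_ode_order_three(A, b, c):
    """Check the four classical ODE order conditions up to order three."""
    s = len(b)
    return all([
        sum(b) == 1,
        sum(b[j] * c[j] for j in range(s)) == Fr(1, 2),
        sum(b[j] * c[j] ** 2 for j in range(s)) == Fr(1, 3),
        sum(b[i] * A[i][j] * c[j] for i in range(s) for j in range(s)) == Fr(1, 6),
    ])

if __name__ == "__main__":
    print("stiffly accurate:", is_stiffly_accurate(A, b, c))
    print("ODE order conditions up to 3:", satisfies_ode_order_three(A, b, c))
```

Both checks succeed for these coefficients, consistent with the order 2s − 1 = 3 for s = 2.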

Example 4.3 (Lobatto IIIA and Lobatto IIIC)

The Lobatto IIIA methods with s = 2, 3 are defined by the following Butcher arrays, compare [134, Example 6.1.6]:

The Lobatto IIIC methods with s = 2, 3 are defined by the following Butcher arrays, compare [134, Example 6.1.8]:

The maximal attainable order of convergence is 2s − 2. A combined method of Lobatto IIIA and IIIC methods for mechanical multibody systems can be found in [124].

The main effort per integration step is to solve the system of nonlinear equations (4.8)–(4.9) for the unknown vector of stage derivatives \(k = (k_{1},\ldots,k_{s})^{\top }\) by Newton's method or by a simplified version of it, where the Jacobian matrix is kept constant for a couple of iterations or integration steps. Another way to reduce the computational effort is to consider ROW methods or half-explicit Runge–Kutta methods as in Sect. 4.3.

Convergence results and order conditions for Runge–Kutta methods applied to DAEs can be found in, e.g., [28, 75, 84, 85].

4.3 Rosenbrock-Wanner (ROW) Methods

In this section, we introduce and discuss the so-called Rosenbrock-Wanner (ROW) methods for DAEs, cf. [121], where H.H. Rosenbrock introduced this method class. ROW methods are one-step methods, which are based on implicit Runge–Kutta methods. In the literature, these methods are also called Rosenbrock methods, linearly-implicit or semi-implicit Runge–Kutta methods, cf. [73].

The motivation to introduce an additional class of integration methods is to avoid solving a fully nonlinear system of dimension n ⋅ s and to solve only linear systems instead. Thus, the key idea for the derivation of Rosenbrock-Wanner methods is to perform one Newton step to solve Eqs. (4.8)–(4.9) for a Runge–Kutta method with \(a_{ij} = 0\) for i < j (diagonally implicit RK method, cf. [73]). We rewrite these equations for such a method and an autonomous implicit DAE,

as follows:

Due to the fact that we consider a diagonally implicit RK method, the above equations are decoupled and can be solved successively. Then, performing one Newton step with starting value \(k_{\ell }^{(0)}\) leads to

for ℓ = 1, …, s.

We arrive at the general class of Rosenbrock-Wanner methods by proceeding with the following steps. First, we take as starting value \(k_{\ell }^{(0)} = 0\) for ℓ = 1, …, s. Then, the Jacobians are evaluated at the fixed point \(z_{h}(t_{i})\) instead of \(z_{i+1}^{(\ell -1)}\), which saves computational costs substantially. Moreover, linear combinations of the previous stages \(k_{j}\), j = 1, …, ℓ, are introduced. Finally, the method is extended to general non-autonomous implicit DAEs as in Eq. (1.1). We obtain the following class of Rosenbrock methods

with

The solution at the next time point \(t_{i+1}\) is computed exactly as in the case of Runge–Kutta methods:

with the stage derivatives \(k_{j}(t,z,h)\) defined by the linear system (4.14). An example for a ROW method is the linearly implicit Euler method (s = 1), for which the stage derivative is defined as follows

For semi-explicit DAEs of the form (2.5)–(2.6), a ROW method as defined above reads as

with

for ℓ = 1, …, s. Herein, we have set \(z = ((z^{x})^{\top },(z^{y})^{\top })^{\top } = (x^{\top },y^{\top })^{\top }\) and

Up to now, we have considered ROW methods with exact Jacobian matrices \(J_{z} = F_{z}\), \(J_{z^{{\prime}}} = F_{z^{{\prime}}}\). There is an additional class of integration methods, which uses arbitrary matrices for \(J_{z}\) (‘inexact Jacobians’); such methods are called W-methods, see [73, 146, 147].

We further remark that related integration methods can be derived if other starting values are used for the stage derivatives instead of \(k_{\ell }^{(0)} = 0\), as is done to derive Eq. (4.14), cf. [67, 68]. The methods derived there as well as ROW and W-methods can be seen to belong to the common class of linearized implicit Runge–Kutta methods.

An introduction and more detailed discussion of ROW methods can be found in [73]; convergence results for general one-step methods (including ROW methods) applied to DAEs are available in [41]. Moreover, ROW methods for index-one DAEs in semi-explicit form are studied in [53, 117, 120, 135]; index-one problems and singularly perturbed problems are discussed in [23, 24, 74]. Analysis results and specific methods for the equations of motion of mechanical multibody systems, i.e., index-three DAEs in semi-explicit form are derived in [145, 146]; compare also the results in [14, 106, 123].
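The construction principle of this section can be illustrated with the linearly implicit Euler method for an autonomous implicit DAE F(z, z′) = 0. Following the steps described above (one Newton step with starting value \(k^{(0)} = 0\) and Jacobians evaluated at \(z_{h}(t_{i})\)), the stage derivative solves the linear system \((F_{z^{{\prime}}} + hF_{z})\,k = -F(z_{h}(t_{i}),0)\); this arrangement is derived here from those steps and is stated as an assumption. The test DAE x′ + y = 0, y − x² = 0 with solution x(t) = 1∕(1 + t) for x(0) = 1 and all names in the Python sketch below are illustrative.

```python
import numpy as np

def residual(z, zp):
    """F(z, z') for the assumed test DAE x' + y = 0, y - x**2 = 0."""
    x, y = z
    return np.array([zp[0] + y, y - x**2])

def jacobians(z):
    """Exact Jacobians F_z and F_{z'} at z = (x, y)."""
    x, _ = z
    F_z = np.array([[0.0, 1.0],
                    [-2.0 * x, 1.0]])
    F_zp = np.array([[1.0, 0.0],
                     [0.0, 0.0]])
    return F_z, F_zp

def linearly_implicit_euler(h, t_end=1.0):
    """One linear solve per step: (F_z' + h F_z) k = -F(z_i, 0)."""
    z = np.array([1.0, 1.0])  # consistent initial value, y0 = x0**2
    for _ in range(round(t_end / h)):
        F_z, F_zp = jacobians(z)
        k = np.linalg.solve(F_zp + h * F_z, -residual(z, np.zeros(2)))
        z = z + h * k
    return z

if __name__ == "__main__":
    x, y = linearly_implicit_euler(1e-3)
    print("x(1) error:", abs(x - 0.5), " constraint residual:", y - x**2)
```

In contrast to a fully implicit method, no Newton iteration is needed. The price is that the nonlinear algebraic constraint is only satisfied approximately after each step.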

4.4 Half-Explicit Methods

In this section, we briefly discuss the so-called half-explicit Runge–Kutta methods, here for autonomous index-two DAEs in semi-explicit form. That is, we consider DAE systems of the form

with initial values \((x_{0},y_{0})\) that are assumed to be consistent. To derive the class of half-explicit Runge–Kutta methods, it is more convenient to use stages rather than the stage derivatives \(k_{\ell }\) as before. In particular, for the semi-explicit DAE (4.24), (4.25), we define stages for the differential and the algebraic variables as

Then, it holds

Using this notation and the coefficients of an explicit Runge–Kutta scheme, half-explicit Runge–Kutta methods as first introduced in [75] are defined as follows

The algorithmic procedure is as follows: We start with \(X_{i1} = x_{h}(t_{i})\) assumed to be consistent. Then, taking Eq. (4.28) for \(X_{i2}\) and inserting it into Eq. (4.29) leads to

which is a nonlinear equation that can be solved for \(Y_{i1}\). Next, we calculate \(X_{i2}\) from Eq. (4.28) and, accordingly, \(Y_{i2}\), etc. For methods with \(c_{s} = 1\), one obtains an approximation for the algebraic variable at the next time point by \(y_{h}(t_{i+1}) = Y_{is}\). The key idea behind this kind of integration scheme is to apply an explicit Runge–Kutta scheme for the differential variable and to solve for the algebraic variable implicitly.
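The simplest instance of this procedure is the half-explicit Euler method: an explicit Euler step for x in which the algebraic variable is chosen such that the new point satisfies the constraint. The Python sketch below applies it to an assumed index-two test problem x′ = (−x₂, x₁)⊤ + y·x, 0 = x₁² + x₂² − 1, whose solution for x(0) = (1, 0)⊤ is x(t) = (cos t, sin t)⊤, y(t) = 0. The test problem and all names are illustrative assumptions.

```python
import math
import numpy as np

def f(x, y):
    """Right-hand side of the differential part (assumed test problem)."""
    return np.array([-x[1], x[0]]) + y * x

def g(x):
    """Algebraic constraint 0 = g(x): the unit circle."""
    return x[0] ** 2 + x[1] ** 2 - 1.0

def half_explicit_euler_step(x, h, y_guess=0.0, tol=1e-12, max_iter=20):
    """Find Y with g(x + h f(x, Y)) = 0 by a scalar Newton iteration."""
    y = y_guess
    for _ in range(max_iter):
        x_new = x + h * f(x, y)
        phi = g(x_new)
        if abs(phi) < tol:
            return x_new, y
        # d/dY g(x + h f(x, Y)) = g_x(x_new) * h * f_y(x), with f_y(x) = x
        dphi = 2.0 * np.dot(x_new, h * x)
        y -= phi / dphi
    raise RuntimeError("Newton iteration did not converge")

def simulate(h, n_steps):
    x, y = np.array([1.0, 0.0]), 0.0
    for _ in range(n_steps):
        x, y = half_explicit_euler_step(x, h, y_guess=y)
    return x, y

if __name__ == "__main__":
    x, y = simulate(0.01, 100)
    print("constraint residual:", g(x))
    print("position error:", np.linalg.norm(x - np.array([math.cos(1.0), math.sin(1.0)])))
    print("algebraic variable:", y)
```

Only a nonlinear equation of the dimension of the algebraic variable has to be solved per stage, and the constraint is satisfied at every grid point up to the Newton tolerance.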

Convergence studies for this method class applied to index-two DAEs can be found in [26, 75]. In [7, 12, 105] the authors introduce a slight modification of the above stated scheme, which improves the method class concerning order conditions and computational efficiency. To be more precise, partitioned half-explicit Runge–Kutta methods for index-two DAEs in semi-explicit form are defined in the following way:

Results concerning the application of half-explicit methods to index-one DAEs are available in [13]; the application to index-three DAEs is discussed in [107].

4.5 Examples

Some illustrative examples with DAEs are discussed. Example 4.4 addresses an index-three mechanical multibody system of a car on a bumpy road. A docking maneuver of a satellite to a tumbling target is investigated in Example 4.5. Herein, the use of quaternions leads to a formulation with an index-one DAE.

Example 4.4

We consider a vehicle simulation for the ILTIS on a bumpy road section. A detailed description of the mechanical multibody system is provided in [128]. The system was modeled by SIMPACK [81] and the simulation results were obtained using the code export feature of SIMPACK and the BDF method DASSL [29]. The mechanical multibody system consists of 11 rigid bodies with a total of 25 degrees of freedom (DOF) (chassis with 6 DOF, wheel suspension with 4 DOF in total, wheels with 12 DOF in total, steering rod with 1 DOF, camera with 2 DOF). The motion is restricted by 9 algebraic constraints. Figure 2 illustrates the test track with bumps and the resulting pitch and roll angles, and the vertical excitation of the chassis. The integration tolerance within DASSL is set to \(10^{-4}\) for the differential states and to \(10^{8}\) for the algebraic states (i.e., no error control was performed for the algebraic states).

Example 4.5

We consider a docking maneuver of a service satellite (S) to a tumbling object (T) on an orbit around the earth, compare [103]. Both objects are able to rotate freely in space and quaternions are used to parametrize their orientation. Note that, in contrast to Euler angles, quaternions lead to a continuous parametrization of the orientation without singularities.

The relative dynamics of S and T are approximately given by the Clohessy–Wiltshire equations

where \((x,y,z)^{\top }\) is the relative position of S and T, \(a = (a_{x},a_{y},a_{z})^{\top }\) is a given control input (thrust) to S, \(n = \sqrt{\mu /a_{t }^{3}}\), \(a_{t}\) is the semi-major axis of the orbit (assumed to be circular), and μ is the gravitational constant.

The direction cosine matrix using quaternions \(q = (q_{1},q_{2},q_{3},q_{4})^{\top }\) is defined by

The matrix R(q) represents the rotation matrix from rotated to non-rotated state. The orientation of S and T with respect to an unrotated reference coordinate system is described by quaternions \(q^{S} = (q_{1}^{S},q_{2}^{S},q_{3}^{S},q_{4}^{S})^{\top }\) for S and \(q^{T} = (q_{1}^{T},q_{2}^{T},q_{3}^{T},q_{4}^{T})^{\top }\) for T. With the angular velocities \(\omega ^{S} = (\omega _{1}^{S},\omega _{2}^{S},\omega _{3}^{S})^{\top }\) and \(\omega ^{T} = (\omega _{1}^{T},\omega _{2}^{T},\omega _{3}^{T})^{\top }\) the quaternions obey the differential equations

where the operator ⊗ is defined by

Assuming a constant mass distribution and body fixed coordinate systems that coincide with the principal axes, S and T obey the gyroscopic equations

Herein \(u = (u_{1},u_{2},u_{3})^{\top }\) denotes a time-dependent torque input to S.

The quaternions are normalized to one by the algebraic constraints

which have to be obeyed since otherwise a drift-off would occur owing to numerical discretization errors. In order to incorporate these algebraic constraints, we treat \((q_{4}^{S},q_{4}^{T})^{\top }\) as algebraic variables and drop the differential equations for \(q_{4}^{S}\) and \(q_{4}^{T}\) in (4.34). In summary, we obtain an index-one DAE with differential state \((x,y,z,x^{{\prime}},y^{{\prime}},z^{{\prime}},\omega _{1}^{S},\omega _{2}^{S},\omega _{3}^{S},q_{1}^{S},q_{2}^{S},q_{3}^{S},\omega _{1}^{T},\omega _{2}^{T},\omega _{3}^{T},q_{1}^{T},q_{2}^{T},q_{3}^{T})^{\top }\in \mathbb{R}^{18}\), algebraic state \((q_{4}^{S},q_{4}^{T})^{\top }\in \mathbb{R}^{2}\), and time-dependent control input \((a,u)^{\top }\in \mathbb{R}^{6}\) for S.

Figure 3 shows some snapshots of a docking maneuver on the time interval [0, 667] with initial states

and parameters \(a_{t} = 7071000\), \(\mu = 398 \cdot 10^{12}\), \(J_{11}^{\alpha } = 1000\), \(J_{22}^{\alpha } = 2000\), \(J_{33}^{\alpha } = 1000\), α ∈ {S, T}. The integration tolerance within DASSL is set to \(10^{-10}\) for the differential states and to \(10^{-4}\) for the algebraic states. Figure 4 depicts the control inputs \(m \cdot a = m \cdot (a_{x},a_{y},a_{z})^{\top }\) with satellite mass m = 100 and \(u = (u_{1},u_{2},u_{3})^{\top }\). Finally, Fig. 5 shows the angular velocities \(\omega ^{S}\) and \(\omega ^{T}\).
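To put the time interval [0, 667] into perspective, the mean motion \(n = \sqrt{\mu /a_{t }^{3}}\) and the corresponding orbital period 2π∕n can be evaluated for the stated parameters. The short Python computation below assumes SI units (metres and seconds), which are not stated explicitly in the text; the variable names are illustrative.

```python
import math

a_t = 7071000.0   # semi-major axis of the circular orbit (assumed unit: m)
mu = 398e12       # gravitational constant (assumed unit: m^3 / s^2)

n = math.sqrt(mu / a_t**3)     # mean motion, roughly 1.06e-3 rad/s
period = 2.0 * math.pi / n     # orbital period, roughly 5.9e3 s

if __name__ == "__main__":
    print("mean motion n =", n)
    print("orbital period =", period)
    print("fraction of one orbit covered by [0, 667]:", 667.0 / period)
```

Under this unit assumption, the maneuver covers only a little more than a tenth of one orbit.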

5 Co-simulation

In numerical system simulation, it is an essential task to simulate the dynamic interaction of different subsystems, possibly from different physical domains, modeled with different approaches, to be solved with different numerical solvers (multiphysical system models). Especially in vehicle engineering, this becomes more and more important, because for a mathematical model of a modern passenger car or commercial vehicle, mechanical subsystems have to be coupled with flexible components, hydraulic subsystems, electronic and electric devices, and other control units. The mathematical models for all these subsystems are often given as DAEs, but, typically, they substantially differ in their complexities, time constants, and scales; hence, it is not advisable to combine all model equations into one entire DAE and to solve it numerically with one integration scheme. In contrast, modern co-simulation strategies aim at using a specific numerical solver, i.e., DAE integration method, for each subsystem and at exchanging only a limited number of coupling quantities at certain communication time points. Thus, it is important to analyze the behavior of such coupled simulation strategies (‘co-simulation’), where the coupled subsystems are mathematically described as DAEs.

In addition to that, also the coupling may be described with an algebraic constraint equation; that is, DAE-related aspects and properties also arise here. Typical examples for such situations are network modeling approaches in general and, in particular, modeling of coupled electric circuits and coupled substructures of mechanical multibody systems, see [11].

Co-simulation techniques and their theoretical background have been studied for a long time, see, for instance, the survey papers [83, 143]. More recently, a new interface standard has been developed, the ‘Functional Mock-Up Interface (FMI) for Model-Exchange and Co-Simulation’ (https://www.fmi-standard.org/). This interface is supported by more and more commercial CAE software tools and attracts increasing interest in industry for application projects. Additionally, the development of that standard and its release have also stimulated new research activities concerning co-simulation.

A coupled system of r ≥ 2 fully implicit DAE initial value problems reads as

with initial values assumed to be consistent and the (subsystem-) outputs

and the (subsystem-) inputs \(u_{i}\) that are given by coupling conditions

where we have set \(u:= (u_{1}^{\top },\ldots,u_{r}^{\top })^{\top }\), \(\xi := (\xi _{1}^{\top },\ldots,\xi _{r}^{\top })^{\top }\) and \(h:= (h_{1}^{\top },\ldots,h_{r}^{\top })^{\top }: \mathbb{R}^{n_{y}} \rightarrow \mathbb{R}^{n_{u}}\), with \(n_{y} = n_{y_{1}} +\ldots +n_{y_{r}}\), \(n_{u_{1}} +\ldots +n_{u_{r}} = n_{u}\). Moreover, we assume here

that is, the inputs of system i do not depend on its own output. If the subsystem DAEs are in semi-explicit form, Eq. (5.1) has to be replaced by

with \(t \in [t_{0},t_{f}]\) and \((x_{i}(t_{0}),y_{i}(t_{0})) = (x_{i,0},y_{i,0})\) with consistent initial values. This representation is called block-oriented; it describes the subsystems as blocks with inputs and outputs that are coupled.

In principle, it is possible to set up one monolithic system including the coupling conditions and output equations as additional algebraic equations:

This entire system could be solved with one single integration scheme, which is, however, as indicated above typically not advisable. In contrast, in co-simulation strategies, also referred to as modular time-integration [125] or distributed time integration [11], the subsystem equations are solved separately on consecutive time-windows. Herein, the time integration of each subsystem within one time-window or macro step can be realized with a different step-size adapted to the subsystem (multirate approach), or even with different appropriate integration schemes (multimethod approach). During the integration process of one subsystem, the needed coupling quantities, i.e., inputs from other subsystems, are approximated, usually based on previous results. At the end of each macro step, coupling data is exchanged. To be more precise, for the considered time interval, we introduce a (macro) time grid \(\mathbb{G}:= \{T_{0},\ldots,T_{N}\}\) with \(t_{0} = T_{0} < T_{1} <\ldots < T_{N} = t_{f}\). Then, the mentioned time-windows or macro steps are given by \([T_{n},T_{n+1}]\), n = 0, …, N − 1, and each subsystem is integrated independently from the others in each macro step \(T_{n} \rightarrow T_{n+1}\), only using a typically limited number of coupling quantities as information from the other subsystems. The macro time points \(T_{n}\) are also called communication points, since here, typically, coupling data is exchanged between the subsystems.

5.1 Jacobi, Gauss-Seidel, and Dynamic-Iteration Schemes

An overview on co-simulation schemes and strategies can be found, e.g., in [11, 104, 125]. There are, however, two main approaches to realizing the co-simulation sketched above. The crucial differences are the order in which the subsystems are integrated within the macro steps and, accordingly, how coupling quantities are handled and approximated. The first approach is a completely parallel scheme and is called Jacobi scheme (or co-simulation/coupling of Jacobi-type). As the name indicates, the subsystems are integrated here in parallel and, thus, they have to use extrapolated input quantities during the current macro step, cf. Fig. 6. In contrast to this, the second approach is sequential and is called Gauss-Seidel scheme (or co-simulation/coupling of Gauss-Seidel-type). For the special case of two coupled subsystems, r = 2, this looks as follows: one subsystem is integrated first on the current macro step using extrapolated input data, yielding a (numerical) solution for this first system. Then, the second subsystem is integrated on the current macro step, now using the already computed results from the first subsystem for the coupling quantities. The results from the first subsystem may be available on a fine micro time grid within the macro step or even as a function of time, e.g., as dense output from the integration method; additionally, (polynomial) interpolation may also be used, cf. Fig. 6.

The sequential Gauss-Seidel scheme can be generalized straightforwardly to r > 2 coupled subsystems: The procedure is sequential, i.e., the subsystems are numerically integrated one after another and for the integration of the i-th subsystem results from the subsystems 1, …, i − 1 are available for the coupling quantities, whereas data from chronologically upcoming subsystems i + 1, …, r have to be extrapolated based on information from previous communication points.

The extra- and interpolation, respectively, are realized using data from previous communication points and, typically, polynomial extra- and interpolation approaches are taken. That is, in the macro step \(T_{n} \rightarrow T_{n+1}\), the input of subsystem i is extrapolated using data from the communication points \(T_{n-k},\ldots,T_{n}\),

\(t \in [T_{n},T_{n+1}]\) and with the extrapolation polynomial \(\varPsi _{i}\) with degree ≤ k; for interpolation, e.g., for Gauss-Seidel schemes, we have correspondingly

The simplest extrapolation is that of zero order, k = 0, leading to ‘frozen’ coupling quantities

A third approach to simulating coupled systems is given by the so-called dynamic iteration schemes, [11, 20, 21], also referred to as waveform relaxation methods, [82, 94]. Here, the basic idea is to solve the subsystems iteratively on each macro step using coupling data information from previous iteration steps, in order to decrease simulation errors. Within each iteration step, the subsystems can be solved sequentially (Gauss-Seidel) or all in parallel (Jacobi or Picard), cf. [11, 20]. The schemes defined above are contained in a corresponding dynamic iteration scheme by performing exactly one iteration step.
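The difference between the Jacobi and the Gauss-Seidel scheme can be sketched for two coupled scalar ODE subsystems. The Python example below assumes the toy problem x₁′ = −x₁ + u₁, x₂′ = −x₂ + u₂ with coupling u₁ = x₂, u₂ = x₁, zero-order extrapolation of the inputs, linear interpolation of the already computed x₁ in the Gauss-Seidel case, and explicit Euler micro steps inside each macro step. All of these choices and all names are illustrative assumptions.

```python
import math

def integrate_subsystem(x, u_of_t, t0, H, micro_steps):
    """Explicit Euler micro steps for x' = -x + u(t) on [t0, t0 + H]."""
    dt = H / micro_steps
    t = t0
    for _ in range(micro_steps):
        x += dt * (-x + u_of_t(t))
        t += dt
    return x

def cosimulate(scheme, H, t_end=1.0, micro_steps=200):
    """Co-simulation of the assumed toy problem with macro step-size H."""
    x1, x2 = 1.0, 0.0
    for n in range(round(t_end / H)):
        T = n * H
        x1_old, x2_old = x1, x2
        # Subsystem 1 always sees the frozen output of subsystem 2.
        x1 = integrate_subsystem(x1_old, lambda t: x2_old, T, H, micro_steps)
        if scheme == "jacobi":
            # Parallel scheme: subsystem 2 also uses frozen data from T_n.
            u2 = lambda t: x1_old
        elif scheme == "gauss-seidel":
            # Sequential scheme: interpolate the new result of subsystem 1.
            x1_new = x1
            u2 = lambda t: x1_old + (t - T) / H * (x1_new - x1_old)
        else:
            raise ValueError("unknown scheme: " + scheme)
        x2 = integrate_subsystem(x2_old, u2, T, H, micro_steps)
    return x1, x2

def coupling_error(scheme, H, t_end=1.0):
    """Maximum error at t_end; exact: x1,2 = (1 +/- exp(-2 t)) / 2."""
    x1, x2 = cosimulate(scheme, H, t_end)
    d = math.exp(-2.0 * t_end)
    return max(abs(x1 - 0.5 * (1.0 + d)), abs(x2 - 0.5 * (1.0 - d)))

if __name__ == "__main__":
    for scheme in ("jacobi", "gauss-seidel"):
        print(scheme, [coupling_error(scheme, H) for H in (0.1, 0.05, 0.025)])
```

In this toy setting both schemes are limited by the zero-order extrapolation in subsystem 1, so the error decreases roughly linearly with the macro step-size for both of them; which scheme yields the smaller error depends on the problem.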

5.2 Stability and Convergence

First of all, we point out that there is a decisive difference between convergence and stability issues for coupled ODEs on the one hand and for coupled DAEs on the other hand. The stability problems that may appear for coupled ODEs with stiff coupling terms resemble the potential problems when applying an explicit integration method to stiff ODEs; thus, these difficulties can be avoided by using sufficiently small macro step-sizes \(H_{n} = T_{n+1} - T_{n}\), cf. [9, 11, 104]. In the DAE-case, however, reducing the macro steps does not generally lead to an improvement; here, it is additionally essential that a certain contractivity condition is satisfied, see [9, 11, 21, 125].
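The role of a contractivity condition can be illustrated with a deliberately simple toy problem in which the coupling is purely algebraic: 0 = y₁ − c·y₂ − r(t), 0 = y₂ − c·y₁ − r(t) with r(t) = 1 + t and exact solution y₁ = y₂ = r(t)∕(1 − c). A Jacobi scheme with zero-order extrapolation then amounts to one fixed-point sweep per macro step, and errors are multiplied by |c| in every macro step. The Python sketch below is an illustrative assumption and does not reproduce the condition or the setting of the cited references.

```python
def jacobi_algebraic_error(c, H, t_end=1.0):
    """Error at t_end of a Jacobi scheme with frozen inputs (toy problem).

    Each macro step evaluates y1 = c * y2_old + r(T), y2 = c * y1_old + r(T).
    """
    r = lambda t: 1.0 + t
    exact = lambda t: r(t) / (1.0 - c)
    y1 = y2 = exact(0.0)  # consistent initial values
    for k in range(1, round(t_end / H) + 1):
        T = k * H
        y1, y2 = c * y2 + r(T), c * y1 + r(T)
    return max(abs(y1 - exact(t_end)), abs(y2 - exact(t_end)))

if __name__ == "__main__":
    for c in (0.5, 1.5):
        print("c =", c, [jacobi_algebraic_error(c, H) for H in (0.1, 0.05, 0.025)])
```

For |c| < 1 the error decreases with the macro step-size, whereas for |c| > 1 it grows when the macro step-size is reduced, since more macro steps mean more amplification.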

5.2.1 The ODE-Case

For problems with coupled ODEs, convergence is studied, e.g., in [8, 10, 16, 17]. For coupled ODE systems that are free of algebraic loops (this is guaranteed, for instance, provided that there is no direct feed-through, i.e., \(\partial \varXi _{i}/\partial u_{i} = 0\), i = 1, …, r, for a precise definition see [10, 16]), we have the following global error estimate for a co-simulation with a Jacobi scheme with constant macro step-size H > 0 assumed to be sufficiently small,

where k denotes the order of the extrapolation, \(\varepsilon _{i}^{x}\) is the global error in subsystem i, and \(\varepsilon ^{x}\) is the overall global error, cf. [8, 10]. That is, the errors from the subsystems contribute to the global error, as well as the error from extra-(inter-)polation, \(\mathcal{O}(H^{k+1})\). These results can be straightforwardly deduced following classical convergence analysis for ODE time integration schemes.
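The contribution \(\mathcal{O}(H^{k+1})\) of the extrapolation can be observed numerically. The Python sketch below uses the assumed toy problem x₁′ = −x₁ + x₂, x₂′ = −x₂ + x₁ with x(0) = (1, 0)⊤, a Jacobi scheme with constant (k = 0) or linear (k = 1) extrapolation of the inputs, and exact subsystem solves, so that only the extrapolation error remains. For an input u(t) = u₀ + m·(t − Tₙ), the exact subsystem update is x(Tₙ₊₁) = a·x + (1 − a)·u₀ + m·(H − (1 − a)) with a = exp(−H). All names are illustrative assumptions.

```python
import math

def jacobi_error(H, k, t_end=1.0):
    """Error at t_end of a Jacobi scheme with extrapolation order k in {0, 1}."""
    a = math.exp(-H)
    x1, x2 = 1.0, 0.0
    prev = None  # values at the previous communication point
    for _ in range(round(t_end / H)):
        if k == 0 or prev is None:
            m1 = m2 = 0.0              # frozen inputs (also used in the first step)
        else:
            m1 = (x2 - prev[1]) / H    # slope of the input u1 = x2
            m2 = (x1 - prev[0]) / H    # slope of the input u2 = x1
        prev = (x1, x2)
        x1, x2 = (a * x1 + (1.0 - a) * x2 + m1 * (H - (1.0 - a)),
                  a * x2 + (1.0 - a) * x1 + m2 * (H - (1.0 - a)))
    d = math.exp(-2.0 * t_end)
    return max(abs(x1 - 0.5 * (1.0 + d)), abs(x2 - 0.5 * (1.0 - d)))

def observed_order(H, k):
    """Estimate the order from the errors for H and H/2."""
    return math.log2(jacobi_error(H, k) / jacobi_error(H / 2.0, k))

if __name__ == "__main__":
    for k in (0, 1):
        print("k =", k, "observed order:", observed_order(0.05, k))
```

The observed orders should be close to k + 1, i.e., about one for constant and about two for linear extrapolation.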

5.2.2 The DAE-Case

For detailed analysis and both convergence and stability results for coupled DAE systems, we refer the reader to [9, 11, 20, 125] and the literature cited therein. In the sequel we summarize and sketch some aspects from these research papers.

As already said, in the DAE case, the situation becomes more difficult. Following the lines of [20], we consider the following coupled DAE-IVP representation

with \(x = (x_{1}^{\top },\ldots,x_{r}^{\top })^{\top }\), \(y = (y_{1}^{\top },\ldots,y_{r}^{\top })^{\top }\) and initial conditions \((x_{i}(t_{0}),y_{i}(t_{0})) = (x_{i,0},y_{i,0})\), i = 1, …, r. For the following considerations, we assume that the IVP(s) possess a unique global solution and that the right-hand side functions \(f_{i}\), \(g_{i}\) are sufficiently often continuously differentiable and, moreover, that it holds

is non-singular for i = 1, …, r in a neighborhood of a solution (index-one condition for each subsystem). Notice that this representation differs from the previously stated block-oriented form. Equations (5.3)–(5.4) are, however, more convenient for deriving and stating the mentioned stability conditions. The coupling here is realized by the fact that the right-hand side functions \(f_{i}\), \(g_{i}\) of each subsystem depend on the entire vector of differential and algebraic variables.

As before, we denote by \(\tilde{\cdot }\) quantities that are only available as extra- or interpolated quantities. Thus, establishing a co-simulation scheme of Jacobi-type yields for the i-th subsystem in macro step \(T_{n} \rightarrow T_{n+1}\)

Accordingly, for a Gauss-Seidel-type scheme, we obtain

With \(g = (g_{1},\ldots,g_{r})^{\top }\), a sufficient (not generally necessary) contractivity condition for stability is derived and proven in [20]. The condition is given by

with \(\tilde{y} = (\tilde{y}_{1},\ldots,\tilde{y}_{r})\). For a detailed list of requirements and assumptions to be taken as well as for a proof and consequences, the reader is referred to [20, 21]. For the special case r = 2, the above condition leads to the following for the Jacobi-type scheme:

whereas, for the Gauss-Seidel-type scheme, we obtain

An immediate consequence is that for a Jacobi-scheme of two coupled DAEs with no coupling in the algebraic equation, i.e., \(g_{i,y_{j}} = 0\), for i ≠ j, we have α = 0.

As a further example, we discuss two mechanical multibody systems coupled via a kinematic constraint:

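with \(q = (q_{1}^{\top },q_{2}^{\top })^{\top }\) and \(G(q):= \partial \gamma /\partial q\) and \(G_{i}(q):= \partial \gamma /\partial q_{i}\), i = 1, 2. Performing an index reduction by twice differentiating the coupling constraint and setting \(v_{i}:= q_{i}^{{\prime}}\), \(a_{i}:= v_{i}^{{\prime}}\) as well as \(x_{i}:= (q_{i}^{\top },v_{i}^{\top })^{\top }\) and \(y_{1}:= a_{1}\), \(y_{2}:= (a_{2}^{\top },\lambda ^{\top })^{\top }\), \(f_{i}:= (v_{i}^{\top },a_{i}^{\top })^{\top }\), we are in the previously stated general framework: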

Herein, \(\gamma ^{(II)}\) contains the remainder of the second derivative of γ without the term \(G_{1}a_{1} + G_{2}a_{2}\).

That is, the only coupling is via algebraic variables and in algebraic equations. If we set up a Jacobi scheme, in macro step \(T_{n} \rightarrow T_{n+1}\), in subsystem 1, we have to use extrapolated values from subsystem 2, i.e., \(y_{2}\) is replaced by

and in subsystem 2, accordingly, \(\tilde{y}_{1}(t) =\varPsi _{ 2}^{y}(t;y_{1}(T_{n-k}),\ldots,y_{1}(T_{n}))\). The above contractivity condition in this case reads

with \(R_{i}:= G_{i}M_{i}^{-1}G_{i}^{\top }\), i = 1, 2.

Analogously, we can consider a Gauss-Seidel-type scheme. Starting with subsystem 1, we have to extrapolate \(y_{2}\) from previous macro steps, yielding \(x_{1}\), \(y_{1}\), which can then be evaluated during time integration of subsystem 2. Stating the contractivity condition for this case and noticing that only the algebraic variable λ has to be extrapolated from previous time points, the relevant (λ-)part of the condition requires

We observe in both cases that mass and inertia properties of the coupled systems may strongly influence the stability of the co-simulation. In particular for the latter sequential Gauss-Seidel scheme, the order of integration has an essential impact on stability, i.e., the choice of system 1 and 2, respectively, should be taken such that the left-hand side of (5.5) is as small as possible.

This result has been developed and proven earlier in [11] for a more general framework, which is slightly different from our setup and of which the coupled mechanical systems are also a special case. In that paper, a method for stabilization (reducing α) is suggested. In [125], the authors also study stability and convergence of coupled DAE systems in a rather general framework and propose a strategy for stabilization as well.

For the specific application field of electric circuit simulation, the reader is referred to [20, 21] and the references therein. A specific consideration of coupled mechanical multibody systems is provided in [8, 9] and in [126], where the coupling of a multibody system and a flexible structure is investigated and an innovative coupling strategy is proposed. Lately, analysis results on coupled DAE systems solved with different co-simulation strategies and stabilization approaches have been provided by the authors of [129, 130]. In [19], a multibody system model of a wheel-loader, described as an index-three DAE in a commercial software package, is coupled with a particle code for soft-soil modeling in order to establish a coupled digging simulation.

The general topic of coupled DAE systems is additionally discussed in the early papers [82, 89, 94].

A multirate integrator for constrained dynamical systems is derived in [96], which is based on a discrete variational principle. The resulting integrator is symplectic and momentum preserving.

6 Real-Time Simulation

An important field in modern numerical system simulation is real-time scenarios. Here, a numerical model is coupled with the real world and both are interacting dynamically. A typical area, in which such couplings are employed, is interactive simulators (‘human/man-in-the-loop’), such as driving simulators or flight simulators, see [58], but also interactively used software (simulators), e.g., for training purposes, cf. [98]. Apart from that, real-time couplings are used in tests for electronic control units (ECU tests) and devices (‘hardware-in-the-loop’—HiL), see, e.g., [15, 122] and in the field of model based controllers (‘model/software-in-the-loop’—MiL/SiL), see, e.g., [42, 43].

It is characteristic for all the mentioned fields that a numerical model replaces a part of the real world. In case of an automotive control unit test, the real control unit hardware is coupled with a numerical model of the rest of the considered vehicle; in case of an interactive driving simulator, the simulator hardware and, by that, the driver or the operator, respectively, is also coupled with a virtual vehicle. The benefits of such couplings are tremendous: tests and studies can be performed under fully accessible and reproducible conditions in the laboratory. Investigations and test runs with real cars and drivers can be reduced and partially avoided, which can save time, costs, and effort substantially.

From the perspective of the numerical model, it receives signals from the real world environment as inputs (e.g., the steering-wheel angle from the human driver in a simulator) and gives back its dynamical behavior as output (e.g., the car’s reaction is transmitted to the simulator hardware, which, in turn, follows that motion, making the driver feel as if sitting in a real car). It is crucial for a realistic realization of such a coupling that the simulation as well as the communication are sufficiently fast. That is, after delivering an input to the numerical model, the real world component expects a response after a fixed time ΔT, so the numerical model has to be simulated for that time span and has to feed back the response on time. This requires that the numerical simulation satisfies the real-time condition: the computation (or simulation) time \(\Delta T_{\text{comp}}\) has to be less than or equal to the simulated time ΔT.

Physical models are often described as differential equations (e.g., mechanical multibody systems that represent a vehicle model). Satisfying the real-time condition here means accordingly that the numerical time integration of the IVP

is executed with a total computation time that is less than or equal to ΔT. If a complete real-time simulation is to be run on a time horizon \([t_{0},t_{f}]\), which is divided by an equidistant time grid \(\{T_{0},\ldots,T_{N}\}\), \(t_{0} = T_{0}\), \(t_{f} = T_{N}\), \(T_{i+1} - T_{i} =\Delta T\), the real-time condition must be guaranteed for every subinterval of length ΔT. In fact, this is a coupling exactly as in classical co-simulation, with the decisive difference that one partner is not a numerical model but a real world component and, thus, the numerical model simulation must satisfy the real-time condition. Obviously, whether or not the real-time condition can be satisfied depends strongly both on the numerical time integration method and on the differential equation and its properties. In principle, any time integration method can be applied, provided that the resulting simulation satisfies the real-time condition.

The fulfillment of the real-time condition as stated above has, however, to be assured deterministically in each macro time step \(T_{i} \rightarrow T_{i} +\Delta T\), at least in applications where breaking this condition leads to a critical system shutdown (e.g., hardware simulators, HiL tests). Hence, the chosen integration methods should not have nondeterministic elements like step-size control or iterative inner methods (e.g., the solution of nonlinear systems by Newton-like methods), since varying iteration numbers lead to a varying computation time. Consequently, for real-time applications, time integration methods with fixed time steps and with a fixed number of possible iterations are preferred. Additionally, to save computation time, low-order methods are typically used, which is also motivated by the fact that in the mentioned application situations the coupled simulation need not be highly accurate, but it must be stable.
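As a rough illustration of these requirements, the following sketch advances a scalar ODE with a fixed number of explicit Euler substeps per macro step and counts the macro steps in which the measured computation time exceeds ΔT. The function name `run_real_time`, its parameters, and the use of wall-clock timing via `time.perf_counter` are assumptions for illustration; a real HiL setup would rely on a real-time operating system rather than on such a check.

```python
import time

def run_real_time(f, x0, dT, n_macro, n_sub=4):
    """Advance x' = f(x) over n_macro macro steps of length dT.

    Each macro step performs exactly n_sub explicit Euler substeps, so the
    amount of work per macro step is fixed and known in advance.
    Returns the final state and the number of macro steps that took longer
    than dT in wall-clock time.
    """
    x = float(x0)
    h = dT / n_sub
    overruns = 0
    for _ in range(n_macro):
        start = time.perf_counter()
        for _ in range(n_sub):               # fixed work, no step-size control
            x += h * f(x)
        dT_comp = time.perf_counter() - start
        if dT_comp > dT:                     # real-time condition violated
            overruns += 1
    return x, overruns

x_end, overruns = run_real_time(lambda x: -x, 1.0, dT=0.01, n_macro=100)
print(x_end, overruns)
```

The number of overruns depends on the machine and its load, so it is reported here rather than assumed.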

6.1 Real-Time Integration of DAEs

For non-stiff ODE models that have to be simulated under real-time conditions, even the simple explicit Euler scheme is frequently used. For stiff ODEs, the linearly implicit methods discussed in Sect. 4.3 are a natural choice, since for this class of methods only linear systems have to be solved internally, which leads to an a priori known, fixed, and moderate computational effort, see [14, 15, 49, 118] and the references therein.
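A minimal sketch of this idea is the linearly implicit Euler scheme for an autonomous ODE x′ = f(x), written here in the common form (I − hJ)(x_{n+1} − x_n) = h f(x_n) with J = f′(x_n). The function name and the stiff linear test problem are assumptions chosen for illustration.

```python
import numpy as np

def linearly_implicit_euler(f, jac, x0, h, n_steps):
    """Integrate x' = f(x) with one linear solve per step and no Newton loop."""
    x = np.asarray(x0, dtype=float)
    eye = np.eye(x.size)
    for _ in range(n_steps):
        J = jac(x)                                   # Jacobian at current state
        x = x + np.linalg.solve(eye - h * J, h * f(x))
    return x

# Stiff linear test problem: explicit Euler with h = 0.01 would be unstable
# for the fast component because |1 + h*(-1000)| = 9 > 1.
A = np.diag([-1000.0, -1.0])
x_end = linearly_implicit_euler(lambda x: A @ x, lambda x: A, [1.0, 1.0], 0.01, 100)
print(x_end)
```

The cost per step is one Jacobian evaluation and one linear solve, independent of the state, which is what makes the effort predictable.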

Since all typical and well-proven DAE time integration methods are at least partially implicit, leading to the need for iterative computations, it is a common approach to avoid DAE models for real-time applications already in the modeling process whenever possible (generally, for real-time applications, specific modeling techniques are often applied). However, in many application cases of practical relevance this cannot be avoided. For instance, the above-mentioned examples from the automotive area require a mechanical vehicle model, which is usually realized as a mechanical multibody system model, whose underlying equations of motion are often a DAE as stated in Eq. (2.13). Thus, there is a need for DAE time integration schemes that are stable and highly efficient also for DAEs of realistic complexity.

Time integration methods for DAEs with a special focus on real-time applications and the fulfillment of the real-time condition are addressed, e.g., in [14, 15, 31, 32, 39, 44, 49, 50, 119]. In the sequel, we present a specific integration method for the MBS equations of motion (2.13) in its index-two formulation on velocity-level.

For the special case of the semi-explicit DAE describing a mechanical multibody system, compare (2.13), the following linearly implicit method can be applied, which is based on the linearly implicit Euler scheme. The first step is to reduce the index from three to two by replacing the original algebraic equations by their first time derivative,

which is linear in v. The numerical scheme proposed in [14, 31] consists in handling the time-step for the position coordinates explicitly and requiring that the algebraic equation on velocity level is satisfied, i.e.,

In particular, this leads to the following set of linear equations:

where \(J_{q}:= \partial f/\partial q\,(q_{i},v_{i})\) and \(J_{v}:= \partial f/\partial v\,(q_{i},v_{i})\).
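The following sketch shows one possible realization of such a step; it is not taken from [14, 31], and the exact form used there may differ. It assumes the model q′ = v, M v′ = f(q, v) − G(q)⊤λ with the velocity constraint 0 = G(q)v, a constant mass matrix, and the constraint Jacobian evaluated at the new position. The function `mbs_step` and the planar pendulum test case are hypothetical.

```python
import numpy as np

def mbs_step(q, v, h, M, f, G, Jq, Jv):
    """One linearly implicit Euler step on velocity level (assumed form)."""
    q_new = q + h * v                        # explicit position update
    Gn = G(q_new)                            # constraint Jacobian at q_new
    A = M - h * Jv(q, v)
    n, m = q.size, Gn.shape[0]
    K = np.block([[A, h * Gn.T], [Gn, np.zeros((m, m))]])
    rhs = np.concatenate([A @ v + h * f(q, v) + h**2 * Jq(q, v) @ v,
                          np.zeros(m)])
    sol = np.linalg.solve(K, rhs)            # the only linear solve of the step
    return q_new, sol[:n], sol[n:]           # q, v, lambda at the new time

# Planar pendulum of length 1 in Cartesian coordinates, g(q) = (q.q - 1)/2.
mass, grav = 1.0, 9.81
M = mass * np.eye(2)
f = lambda q, v: np.array([0.0, -mass * grav])
G = lambda q: q.reshape(1, 2)
zero = lambda q, v: np.zeros((2, 2))         # f is constant, so Jq = Jv = 0
q, v = np.array([1.0, 0.0]), np.array([0.0, 0.0])
for _ in range(100):
    q, v, lam = mbs_step(q, v, 1e-3, M, f, G, zero, zero)
vel_res = abs((G(q) @ v)[0])                 # velocity constraint residual
pos_drift = abs(q @ q - 1.0) / 2             # |g(q)|, not enforced by the scheme
print(vel_res, pos_drift)
```

In this sketch the velocity constraint holds up to round-off, while the position constraint is not enforced and slowly drifts.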

An important issue is naturally the drift-off, cf. Sect. 2, in the neglected algebraic constraints. Here, in the above method for the index-two version of the MBS DAE, the error in the algebraic equation on position level, i.e., 0 = g(q), may grow linearly in time; this effect is even more severe since a low-order method is in use. Classical strategies to stabilize this drift are projection approaches, cf., e.g., [73, 100], which are usually of adaptive and iterative character. The authors in [14, 31] propose and discuss a non-iterative projection strategy, which consists, in fact, of one special Newton step for the KKT conditions related to the constrained optimization problem that is used for projection; thus, only one additional linear system has to be solved in each time step. The authors show that using this technique leads to a bound for the error on position level that is independent of time. An alternative way to stabilize the drift-off effect without substantially increasing the computational effort is the Baumgarte stabilization, cf. Sect. 2 and [31, 48, 122].
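A non-iterative projection of this kind might be sketched as follows. The sketch assumes that the projection problem is to minimize ½(p − q)⊤M(p − q) subject to g(p) = 0 and performs a single Newton step on its KKT conditions, started at p = q with zero multiplier. This objective, the function `project_once`, and the test data are assumptions; the specific variant in [14, 31] may differ.

```python
import numpy as np

def project_once(q, M, g, G):
    """One Newton step for  min 0.5*(p-q)^T M (p-q)  s.t.  g(p) = 0."""
    Gq = G(q)
    n, m = q.size, Gq.shape[0]
    # KKT matrix of the linearized problem at (p, mu) = (q, 0).
    K = np.block([[M, Gq.T], [Gq, np.zeros((m, m))]])
    rhs = np.concatenate([np.zeros(n), -g(q)])
    return q + np.linalg.solve(K, rhs)[:n]   # single linear solve, no iteration

# Unit-circle constraint g(q) = (q.q - 1)/2 with a drifted position.
g = lambda q: np.array([(q @ q - 1.0) / 2])
G = lambda q: q.reshape(1, 2)
q_drift = np.array([1.1, 0.0])
q_proj = project_once(q_drift, np.eye(2), g, G)
print(g(q_drift)[0], g(q_proj)[0])           # residual before and after
```

A single step does not satisfy the constraint exactly, but it reduces the residual considerably at the fixed cost of one linear solve.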

7 Parametric Sensitivity Analysis and Adjoints

The parametric sensitivity analysis is concerned with parametric initial value problems subject to DAEs on the interval \([t_{0},t_{f}]\) given by

where \(p \in \mathbb{R}^{m}\) is a parameter vector and the mapping \(z_{0}: \mathbb{R}^{m}\longrightarrow \mathbb{R}^{n}\) is at least continuously differentiable. We assume that the initial value problem possesses a solution for every p in some neighborhood of a given nominal parameter \(\hat{p}\) and denote the solution by z(t; p). In order to quantify the influence of the parameter on the solution, we are interested in the so-called sensitivities (sensitivity matrices)

Throughout we tacitly assume that the sensitivities actually exist.

In many applications, e.g., from optimal control or optimization problems involving DAEs, one is not directly interested in the sensitivities S(⋅ ) themselves but in the gradient of some function \(g: \mathbb{R}^{m}\longrightarrow \mathbb{R}\) defined by

where \(\varphi: \mathbb{R}^{n} \times \mathbb{R}^{m}\longrightarrow \mathbb{R}\) is continuously differentiable. Of course, if the sensitivities S(⋅ ) are available, the gradient of g at \(\hat{p}\) can easily be computed by the chain rule as

However, the explicit computation of S is often costly and should be avoided. Then the question arises whether there is an alternative representation of the gradient \(\nabla g(\hat{p})\) that avoids the explicit computation of S. Such a representation can be derived using an adjoint DAE. Both approaches are analytical in the sense that they provide the correct gradient if round-off errors are not taken into account.

Remark 7.1

The computation of the gradient using S is often referred to as the forward mode and the computation using adjoints as the backward or reverse mode in the context of automatic differentiation, compare [72]. Using automatic differentiation is probably the most convenient way to compute the above gradient, since powerful tools are available, see the web page www.autodiff.org.

The same kind of sensitivity investigations can be performed either for the problem (7.1)–(7.2) in continuous time or for discretizations thereof by means of one-step or multi-step methods.

7.1 Sensitivity Analysis in Discrete Time

7.1.1 The Forward Mode

Suppose a suitable discretization scheme of (7.1)–(7.2) is given, which provides approximations \(z_{h}(t_{i};p)\) at the grid points \(t_{i} \in \mathbb{G}_{h}\) in dependence on the parameter p. We are interested in the sensitivities

for a nominal parameter \(\hat{p} \in \mathbb{R}^{m}\). As the computations are performed on a finite grid, these sensitivities can be obtained by differentiating the discretization scheme with respect to p. This procedure is called internal numerical differentiation (IND) and was introduced in [25].

To be more specific, let \(\hat{p}\) be a given nominal parameter and consider the one-step method

Differentiating both equations with respect to p and evaluating the equations at \(\hat{p}\) yields

Herein, we used the abbreviation \([t_{i}]\) for \((t_{i},z_{h}(t_{i};\hat{p}),h_{i},\hat{p})\). Evaluation of (7.8)–(7.9) yields the desired sensitivities \(S_{h}(t_{i})\) of \(z_{h}(t_{i};\hat{p})\) at the grid points, if the increment function Φ of the one-step method is differentiable with respect to z and p and the function \(z_{0}\) is differentiable with respect to p. Note that the function \(z_{0}\) can be realized by the projection method in LSQ(p) in Sect. 3.2 and sufficient conditions for its differentiability are provided by Theorem 3.1.
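For the simplest one-step method, the explicit Euler scheme applied to an ODE z′ = f(z, p), the IND idea can be sketched as below. It is assumed here that the increment function is Φ = f, so that differentiating the step with respect to p gives the recursion S_{i+1} = S_i + h (f_z S_i + f_p). The function name and the scalar test problem are hypothetical.

```python
import numpy as np

def euler_with_sensitivity(f, fz, fp, z0, dz0, p, h, n_steps):
    """Explicit Euler for z' = f(z, p) together with S_h = dz_h/dp (IND)."""
    z = np.asarray(z0, dtype=float)
    S = np.asarray(dz0, dtype=float)         # derivative of z0(p), shape (n, m)
    for _ in range(n_steps):
        # The right-hand sides are evaluated before assignment, so both
        # updates use the old state z, as required by the differentiated step.
        z, S = z + h * f(z, p), S + h * (fz(z, p) @ S + fp(z, p))
    return z, S

# Test problem z' = -p*z, z(0) = 1 (independent of p), nominal p = 2.
f = lambda z, p: -p[0] * z
fz = lambda z, p: np.array([[-p[0]]])
fp = lambda z, p: np.array([[-z[0]]])
z_end, S_end = euler_with_sensitivity(f, fz, fp, [1.0], [[0.0]], [2.0], 0.01, 50)
# The discrete solution is z_N = (1 - h*p)^N, so dz_N/dp = -N*h*(1 - h*p)^(N-1).
print(z_end[0], S_end[0, 0], -50 * 0.01 * 0.98**49)
```

The computed sensitivity is the exact derivative of the discrete solution, not an approximation of it by finite differences.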

The computation of the partial derivatives of Φ is more involved. For a Runge–Kutta method, Eqs. (4.7)–(4.9) (with an additional dependence on the parameter p) have to be differentiated with respect to z and p. Details can be found in [68, Sect. 5.3.2].

The same IND approach can be applied to multi-step methods. Differentiation of the scheme (4.2) and the consistent initial values

with respect to p and evaluation at \(\hat{p}\) yields the formal scheme

More specifically, for an s-stage BDF method the function ψ is implicitly given by (4.4) (with an additional dependence on the parameter p). Differentiation of (4.4) with respect to p yields

and, if the iteration matrix \(M:= F_{z}^{{\prime}}[t_{i+s}] + \frac{\alpha _{s}} {h_{i+s-1}} F_{z^{{\prime}}}^{{\prime}}[t_{i+s}]\) is non-singular,

Herein, we used the abbreviation \([t_{i+s}] = \left (t_{i+s},z_{h}(t_{i+s}), \frac{1} {h_{i+s-1}} \sum \limits _{k=0}^{s}\alpha _{k}z_{h}(t_{i+k})\right )\).
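As a small worked instance, the sketch below applies this procedure to the implicit Euler method (the BDF method with s = 1, α_1 = 1, α_0 = −1) for a hypothetical linear index-one DAE F(z, z′, p) = (x′ + y, y − p x) = 0 with z = (x, y). Since F is linear in z, both the step and its sensitivity reduce to one linear solve with the same iteration matrix M. The test problem and the function name are assumptions for illustration.

```python
import numpy as np

def bdf1_with_sensitivity(p, h, n_steps, x0=1.0):
    """Implicit Euler for F = (x' + y, y - p*x) = 0 with S_h = dz_h/dp."""
    Fz = np.array([[0.0, 1.0], [-p, 1.0]])     # dF/dz
    Fzd = np.array([[1.0, 0.0], [0.0, 0.0]])   # dF/dz', singular for a DAE
    Mit = Fz + Fzd / h                         # iteration matrix M
    z = np.array([x0, p * x0])                 # consistent initial value z0(p)
    S = np.array([0.0, x0])                    # dz0/dp
    for _ in range(n_steps):
        # F is linear in z, so the implicit step is a single linear solve.
        z = np.linalg.solve(Mit, Fzd @ z / h)
        Fp = np.array([0.0, -z[0]])            # dF/dp at the new grid point
        # Differentiated scheme: M * S_new = (1/h) * Fzd * S_old - Fp.
        S = np.linalg.solve(Mit, Fzd @ S / h - Fp)
    return z, S

z_end, S_end = bdf1_with_sensitivity(p=2.0, h=0.01, n_steps=50)
# Discrete solution: x_N = (1 + h*p)^(-N), hence dx_N/dp = -N*h*(1 + h*p)^(-N-1).
print(z_end, S_end)
```

Reusing the iteration matrix of the nominal step for the sensitivity equation is what keeps the additional cost of the forward mode moderate in this setting.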

7.1.2 The Backward Mode and Adjoints

Consider the function g in (7.4) subject to a discretization scheme, i.e.