Abstract

The purpose of this paper is to solve the 0–1 k-item quadratic knapsack problem (kQKP), a problem of maximizing a quadratic function subject to two linear constraints. We propose an exact method based on semidefinite optimization. The semidefinite relaxation used in our approach includes simple rank one constraints, which can be handled efficiently by interior point methods. Furthermore, we strengthen the relaxation by polyhedral constraints and obtain approximate solutions to this semidefinite problem by applying a bundle method. We review other exact solution methods and compare all these approaches by experimenting with instances of various sizes and densities.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

The 0–1 k-item quadratic knapsack problem consists of maximizing a quadratic objective function subject to a linear capacity constraint with an additional equality cardinality constraint:

where n denotes the number of items, and all the data, k (number of items to be filled in the knapsack), \(a_j\) (weight of item j), \(c_{ij}\) (profit associated with the selection of items i and j) and b (capacity of the knapsack) are nonnegative integers. Without loss of generality, matrix \(C=(c_{ij})\) is assumed to be symmetric. Moreover, we assume that \(\max _{j=1,\ldots ,n} a_j \le b < \sum _{j=1}^n a_j\) in order to avoid either trivial solutions or variable fixing via constraint (1). Let us denote by \(k_{max}\) the largest number of items which could be filled in the knapsack, that is the largest number of the smallest \(a_j\) whose sum does not exceed b. We can assume that \(k \in \{2,\ldots ,k_{max} \}\), where \(k_{max}\) can be found in O(n) time [2]. Otherwise, either the value of the problem is equal to \(\max _{i=1,\ldots ,n} c_{ii}\) (for \(k=1\)), or the domain of (kQKP) is empty (for \(k > k_{max}\)).

(kQKP) is an NP-hard problem as it includes two classical NP-hard subproblems, the k-cluster problem [6] by dropping constraint (1), and the quadratic knapsack problem [18] by dropping constraint (2). Even more, the work of Bhaskara et al. [4] indicates that approximating k-cluster within a polynomial factor might be a harder problem than Unique Games. Rader and Woeginger [19] state negative results concerning the approximability of QKP if negative cost coefficients are present.

Applications of (kQKP) cover those found in previous references for k-cluster or classical quadratic knapsack problems (e.g., task assignment problems in a client-server architecture with limited memory), but also multivariate linear regression and portfolio selection. Specific heuristic and exact methods including branch-and-bound and branch-and-cut with surrogate relaxations have been designed for these applications (see, e.g., [3, 5, 9, 17, 22]).

The purpose of this paper is twofold.

-

1.

We introduce a new algorithm for solving (kQKP) and

-

2.

we briefly review other state of the art methods and compare the methods by running numerical experiments.

Our new algorithm consists of a branch-and-bound framework using

-

a combination of a semidefinite relaxation and polyhedral cutting planes to obtain tight upper bounds and

-

fast hybrid heuristics [16] for computing high quality lower bounds.

This paper is structured as follows. In Sect. 2 a semidefinite relaxation is derived, followed by a discussion of solving the semidefinite problems in Sect. 3. The relaxation is used inside a branch-and-bound framework, the various components of this branch-and-bound algorithm are discussed in Sect. 4. Other methods for solving (kQKP) and numerical results are presented in Sect. 5 and Sect. 6 concludes.

Notation. We denote by e the vector of all ones of appropriate size. \(\mathrm {diag}(X)\) refers to diagonal of X as a vector and \(\mathrm {Diag}(v)\) is the diagonal matrix having diagonal v.

2 A Semidefinite Relaxation of (kQKP)

In order to develop a branch-and-bound algorithm for solving (kQKP) to optimality we aim in finding strong upper bounds. Semidefinite optimization proved to provide such strong bounds, see e.g. [1, 20, 21].

A straightforward way to obtain a semidefinite relaxation is the following. Express all functions involved as quadratic functions, i.e. functions in \(xx^{t}\), replace the product \(xx^{t}\) by a matrix X and get rid of non-convexities by relaxing \(X=xx^{t}\) to \(X\succeq xx^{t}\).

Hence, we apply the following changes:

-

Replace the constraint \(e^{t}x = k\) by the constraint \((e^{t}x-k)^{2}=0\).

-

As for the capacity constraint, define \(b'\) to be the sum of the weights of the k smallest items. Clearly, \(b'\le a^{t}x\) is a valid constraint for (kQKP). Combining this redundant constraint with the capacity constraint we obtain \((b'-a^{t}x)(b-a^{t}x) \le 0\).

-

Transform the problem to a \(\pm 1\) problem by setting \(y=2x-e\).

-

Relax the problem by relaxing \(Y=yy^{t}\) to \(Y\succeq yy^{t}\), i.e., dropping the constraint Y being of rank one.

This procedure yields the following semidefinite problem:

with \(\tilde{E}= \tilde{e}\tilde{e}^{t}\), \(\tilde{e}= \begin{pmatrix}n-2k\\ e\end{pmatrix}\), \(\tilde{A}= \tilde{a}\tilde{a}^{t}\), \(\tilde{a}= \begin{pmatrix}a^{t}e-(b+b')\\ a\end{pmatrix}\), and appropriate\(\tilde{C}\).

Observation 1

(\(SDP_1\)) has no strictly feasible point and thus Slater’s condition does not hold.

Proof

Note that \({\langle \tilde{E},Y \rangle }=\tilde{e}^{t}Y\tilde{e}=0\) together with \(Y\succeq 0\) implies Y being singular and thus every feasible solution is singular.

\(\square \)

Observe that \(\tilde{e}= \begin{pmatrix}n-2k\\ e\end{pmatrix}\) is an eigenvector to the eigenvalue 0 of every feasible Y. Now consider matrix \(V=\begin{pmatrix}\frac{1}{2k-n}e^{t}\\ I_{n}\end{pmatrix}\). V spans the orthogonal complement of the span of eigenvector \(\tilde{e}\). Set \(Y=VXV^{t}\) to “project out” the 0-eigenvalue and consider the \(n\times n\) matrix X instead of the \((n+1) \times (n+1)\) matrix Y. The relationship between X and Y is simply given by

Looking at the effect of the constraints of (\({SDP_{1}}\)) on matrix X, we derive the following conditions.

-

From \(\mathrm {diag}(Y)=e\) we obtain the constraints

$$\begin{aligned} e^{t}Xe&= (2k-n)^{2}\\ \mathrm {diag}(X)&= e \end{aligned}$$ -

The left-hand side of constraint \({\langle \tilde{E},Y \rangle }=0\) translates into

$$\begin{aligned} {\langle \tilde{E},Y \rangle } = {\langle \tilde{E},VXV^{t} \rangle } = {\langle V^{t}\tilde{E}V,X \rangle } = {\langle 0,X \rangle } = 0 \end{aligned}$$and the constraint becomes obsolete.

-

Constraint \({\langle \tilde{A},Y \rangle } \le (b-b')^{2}\) yields the following.

$$\begin{aligned} {\langle \tilde{A},Y \rangle }&= {\langle \tilde{A},VXV^{t} \rangle } = {\langle V^{t}\tilde{A}V,X \rangle }=\\&={\langle (\frac{a^{t}e-(b+b')}{2k-n}e+a)(\frac{a^{t}e-(b+b')}{2k-n}e+a)^{t},X \rangle } \end{aligned}$$Hence,

$$\begin{aligned} {\langle (\frac{a^{t}e-(b+b')}{2k-n}e+a)(\frac{a^{t}e-(b+b')}{2k-n}e+a)^{t},X \rangle } \le (b-b')^{2} \end{aligned}$$

Defining \(\bar{a}= (\frac{a^{t}e-(b+b')}{2k-n}e+a)\) we finally obtain

where \(E=ee^{t}\), \(A=\bar{a}\bar{a}^{t}\), and appropriate cost matrix \(\bar{C}\).

Strengthening the Relaxation. Since we derived a relaxation from a problem in \(\pm 1\) variables, we can further tighten the bound by adding the well known triangle inequalities to the semidefinite relaxation (SDP). These are for any triple \(1 \le i< j < k \le n\):

For several problems formulated in \(\pm 1\) variables adding these constraints significantly improves the bound, see e.g. [20]. The set of matrices satisfying all triangle-inequalities is called the metric polytope and is denoted by MET. Thus, the strengthend semidefinite relaxation reads

3 Solving the Semidefinite Relaxations

3.1 Solving the Basic Relaxation (SDP)

The most prominent methods for solving semidefinite optimization problems are interior point methods. The interior point method is an iterative algorithm where in each iteration Newton’s method is applied in order to compute new search directions.

Consider the constraints \({\mathcal A}(X)=(\vdots )\) with \({\mathcal A}(X)= \left( \begin{array}{c} \langle A_{1},X \rangle \\ \langle A_{2},X \rangle \\ \vdots \\ \langle A_{m},X \rangle \end{array} \right) \). In each iteration we determine a search direction \(\varDelta y\) (y are variables in the dual semidefinite problem) by solving the system \(M \varDelta y = rhs\) where

Z denotes the (positive definite) matrix variable of the dual semidefinite program.

Forming this system matrix requires \(O(mn^{3}+m^{2}n^{2})\) steps and is among the most time-consuming operations inside the interior point algorithm. (The other time-consuming steps are maintaining positive definiteness of the matrices X and Z and linear algebra operations such as forming inverse matrices.)

The primal-dual pair of (SDP) in variables (X, s, y, Z, t) is given as follows.

Hence, the set of constraints is rather simple and the system matrix M reads

Even more, all data matrices have rank one which can be exploited when computing the inner products, e.g.,

Thus, the computation of the inner products of the matrices simplifies and computing the system matrix can be reduced from \(O(mn^{3}+m^{2}n^{2})\) to \(O(mn^{2}+m^{2}n)\). And since \(m=n+2\) in our case, we end up with \(O(n^{3})\).

Hence, (SDP) can be solved efficiently by interior point methods.

3.2 Solving the Strengthened Relaxation (\(SDP_{MET}\))

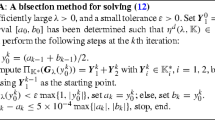

Problem (\(SDP_{MET}\)) has a considerably larger number of constraints than (SDP). Remember that \(X\in MET\) is described by \(4{n \atopwithdelims ()3}\) linear inequalities and thus solving (\(SDP_{MET}\)) by interior point methods is intractable. An alternative has been proposed in [12]. Therein the concept of bundle methods is used, in order to obtain an approximate optimizer on the dual functional and thus getting a valid upper bound on (\(SDP_{MET}\)), leading to a valid upper bound on (kQKP).

Bundle methods have been developed to minimize nonsmooth convex functions. To characterize the problem to be solved, an oracle has to be supplied that evaluates the function at a given point and computes an \(\epsilon \)-subgradient. The set of points, function values, and subgradients is collected in a “bundle”, which is used to construct a cutting plane model minorizing the function to be minimized. By doing a sequence of descent steps the cutting plane model is refined and one gets closer to the minimizer of the function.

We will apply the bundle method to minimize the dual functional of (\(SDP_{MET}\)). Let

i.e., the feasible region of (SDP). We introduce the dual functional

where \({\mathcal T}(X) \le e\) denotes the triangle inequalities (1). Minimizing \(f(\gamma )\) over \(\gamma \ge 0\) gives a valid upper bound on (kQKP). In fact, any \({\tilde{\gamma }} \ge 0\) gives a valid upper bound

Since we use this bound inside a branch-and-bound framework, this allows us to stop early and prune a node as soon as \(f(\tilde{\gamma })\) is smaller than some known lower bound. Furthermore, we do not rely on getting to the optimum. We will stop once we are “close” to optimum and branch, rather than investing time in dropping the bound by a tiny number.

Evaluating function (2) (the most time consuming step in the bundle method) amounts in solving (SDP) (with varying cost matrix), which can be done efficiently as discussed in the previous section. Having the maximizer \(X^{*}\) of (SDP), i.e. the function evaluation, a subgradient is given by \(g^{*}=e-{\mathcal T}(X^{*})\).

Dynamic Version of the Bundle Method. The number of variables \(\gamma \) in (2) is \(4{n \atopwithdelims ()3}\). This number is substantially larger than the dimension of the problem and we are interested only in those inequalities that are likely to be active at the optimum. Thus, we do not consider all triangle inequalities but work with a subset that is updated on a regular basis, say every fifth descent step. The update consists of

-

1.

adding the m inequalities being most violated by the current iterate X and

-

2.

removing constraints with \(\gamma \) close to 0 (an indicator for an inactive constraint).

In this way we are able to efficiently run the bundle algorithm by keeping the size of the variable vector \(\gamma \) reasonably small.

4 Branch and Bound

We develop an exact solution method for solving (kQKP) by designing a branch-and-bound framework using relaxation (\(SDP_{MET}\)) discussed above for getting upper bounds.

The remaining tools of our branch-and-bound algorithm are described in this section.

4.1 Heuristics for Obtaining Lower Bounds

We use two heuristics to obtain a global lower bound inside our algorithm: one that is executed at the root node and another one that is called at each other node in the branch-and-bound tree.

As a heuristic method at the root node we chose the primal heuristic denoted by \(H_{pri}\) in [16], which is an adaption of a well-known heuristic developed by Billionnet and Calmels [7] for the classical quadratic knapsack problem (QKP). This primal heuristic combines a greedy algorithm with local search.

At each node of the branch-and-bound tree, we apply a variable fixation heuristic inspired from \(H_{sdp}\) [16]. This heuristic method uses the solution of the semidefinite relaxation obtained at each node, it fixes variables under some treshold \(\epsilon >0\) to zero and applies the primal heuristic over the reduced problem. It updates the solution by performing a fill-up and exchange procedure over the unreduced problem. This procedure iterates, increasing \(\epsilon \) at each iteration, until the reduced problem is empty.

Both heuristics, the primal and the variable fixation one, are very fast and take only hundredths of a second for sizes of our interest.

4.2 Branching Rule and Search Strategy

As a branching variable we choose the “most fractional” variable, i.e., \(v=\mathrm {argmin}_{i}|\frac{1}{2} - x_{i}|\). The vector x is extracted from matrix X given by the semidefinite relaxation.

We traverse the search tree in a best first search manner, i.e., we always consider the node in the tree having the smallest upper bound.

4.3 Speed up for Small k

Whenever k, the number of items to be filled in the knapsack, is small, a branch-and-prune algorithm is triggered in order to speed-up the approach. No relaxation is performed at each node of the branch-and-prune tree and a fast depth first search strategy, in priority fixing variables to one, is implemented. We only check the feasibility of the current solution through the cardinality and capacity constraints.

This branch-and-prune approach is very fast, at most a few seconds, for very small k. So we embedded it into our branch-and-bound algorithm and run it at nodes where the remaining number of items to be filled in the current knapsack is very small (less or equal than 5 in practice). To solve the original problem, we can also replace the global branch-and-bound method using this branch-and-prune approach for small initial values of k, in practice we choose \(k \le 10\).

5 Numerical Results

We coded the algorithm in C++. For the function evaluation (i.e., solving (SDP)) we implemented a predictor-corrector variant of an interior point algorithm [14]. We use the ConicBundle Library of Ch. Helmberg [13] as framework for the bundle method to solve (\(SDP_{MET}\)).

We compare our method \( (B \& C)\) to:

-

(Cplex): IBM CPLEX solver, version 12.6.2 [11], with default settings. The original nonconvex 0–1 quadratic problem is given directly to CPLEX which is now able to deal with such a formulation.

-

(MIQCR+Cplex): our implementation of the MIQCR method [8]. MIQCR uses a semidefinite relaxation in order to obtain a problem having a convexified objective function; the resulting convex integer problem can then be solved by standard solvers. We use the CSDP solver [10] for solving the semidefinite relaxation to convexify the objective function, and IBM CPLEX 12.6.2 [11] with default settings to solve the reformulated convex problem.

-

(BiqCrunch): Also BiqCrunch [15] is an algorithm based on semidefinite and polyhedral relaxations within a branch-and-bound framework. In BiqCrunch a quadratic regularization term is added to the objective function of the semidefinite problem and a quasi-Newton method is used to compute the bounds. We use the BiqCrunch solver enhanced with our primal and variable fixation heuristics described in Sect. 4.1.

All experiments have been performed on an Intel i7-2600 quad core 3.4 GHz with 8 GB of RAM, using only one core. The computational results have been obtained for randomly generated instances from [16] with up to 150 variables. We choose \(k \in \{1,\dots , \lfloor \frac{n}{4}\rfloor \}\), b, \(a_j\) and \(c_{ij}\) are positive integers. The time limit for each approach is 3 h.

In Table 1 we display the run time of the overall algorithm, the gap at the root node, and the number of nodes produced during the branch-and-bound algorithm for each method. Each line of Table 1 represents average values over 10 instances for each n and \(\delta \) where n is the number of variables and \(\delta \) is the density of the profit matrix C. We put the number of instances solved within the time limit into brackets in case not all 10 instances could be solved. Average values are computed only over instances solved within the time limit.

The numerical experiments demonstrate that the methods having semidefinite optimization inside clearly outperform Cplex. In fact, Cplex already fails to solve all instances of size \(n=50\) within the time limit.

Instances with up to \(n=100\) variables can be solved most efficiently by the MIQCR approach, i.e., finding a convexified problem via semidefinite optimization and then solve the resulting convex problem using Cplex.

For \(n>100\), BiqCrunch performs best in terms of overall run time, but the domincance to MIQCR and our approach is not significant.

Our new approach provides by far the smallest gap at the root node. The high quality of our bound is also reflected in the number of nodes in the branch-and-bound tree. Our method explores a substantial smaller number of nodes than the other approaches.

Our approach is not superior to MIQCR or BiqCrunch in terms of overall computation time, however, the implementation is a prototype and there is room for speeding up the approach by experimenting with different settings in the branch-and-bound framework (such as branching strategies) as well as parameter settings in the bundle algorithm and in the update of the set of triangle inequalities. This is currently under investigation.

6 Conclusion

The 0–1 k-item quadratic knapsack problem is a challenging problem, as it includes two NP-hard problems, namely quadratic knapsack and k-cluster. We review approaches to solve this problem to optimality and introduce a new method, where the bound computation is based on a semidefinite relaxation. The derived basic semidefinite relaxation has only simple constraints, in fact all constraints are of rank one. This can be exploited in interior point methods to efficiently compute the system matrix. We strengthen the relaxation using triangle inequalities and solve the resulting semidefinite problem by a dynamic version of the bundle method.

To have a comparison with state of the art algorithms we implement the convexification algorithm MIQCR [8], use BiqCrunch [15] enhanced with our primal heuristics, and run Cplex. The numerical results prove that CPLEX is clearly outperformed by all the methods based on semidefinite programming. Our new method provides the tightest bound at the root node, while the overall computation time is smallest for MIQCR for \(n \le 100\) and BiqCrunch for larger n. An optimized implementation and a study of the best parameter settings for the various components inside our code is subject of further study.

References

Anjos, M.F., Ghaddar, B., Hupp, L., Liers, F., Wiegele, A.: Solving \(k\)-way graph partitioning problems to optimality: the impact of semidefinite relaxations and the bundle method. In: Jünger, M., Reinelt, G. (eds.) Facets of combinatorial optimization, pp. 355–386. Springer, Heidelberg (2013)

Balas, E., Zemel, E.: An algorithm for large zero-one knapsack problems. Oper. Res. 28, 1130–1154 (1980)

Bertsimas, D., Shioda, R.: Algorithm for cardinality-constrained quadratic optimization. Comput. Optim. Appl. 43, 1–22 (2009)

Bhaskara, A., Charikar, M., Guruswami, V., Vijayaraghavan, A., Zhou, Y.: Polynomial integrality gaps for strong SDP relaxations of densest \(k\)-subgraph. In: Proceedings of the Twenty-Third Annual ACM-SIAM Symposium on Discrete Algorithms, pp. 388–405 (2012)

Bienstock, D.: Computational study of a family of mixed-integer quadratic programming problems. Math. Programm. 74, 121–140 (1996)

Billionnet, A.: Different formulations for solving the heaviest k-subgraph problem. Inf. Syst. Oper. Res. 43(3), 171–186 (2005)

Billionnet, A., Calmels, F.: Linear programming for the 0–1 quadratic knapsack problem. Eur. J. Oper. Res. 92, 310–325 (1996)

Billionnet, A., Elloumi, S., Lambert, A.: Extending the QCR method to general mixed-integer programs. Math. Programm. 131(1–2), 381–401 (2012)

Bonami, P., Lejeune, M.: An exact solution approach for portfolio optimization problems under stochastic and integer constraints. Oper. Res. 57, 650–670 (2009)

Borchers, B.: CSDP, a C library for semidefinite programming. Optim. Meth. Softw. 11(1), 613–623 (1999)

IBM ILOG CPLEX Callable Library version 12.6.2. http://www-03.ibm.com/software/products/en/ibmilogcpleoptistud/

Fischer, I., Gruber, G., Rendl, F., Sotirov, R.: Computational experience with a bundle approach for semidefinite cutting plane relaxations of Max-Cut, equipartition. Math. Programm. Ser. B 105(2–3), 451–469 (2006)

Helmberg, C.: The conicbundle library for convex optimization, August 2015. https://www-user.tu-chemnitz.de/~helmberg/ConicBundle/Manual/index.html

Helmberg, C., Rendl, F., Vanderbei, R.J., Wolkowicz, H.: An interior-point method for semidefinite programming. SIAM J. Optim. 6(2), 342–361 (1996)

Krislock, N., Malick, J., Roupin, F.: Improved semidefinite bounding procedure for solving max-cut problems to optimality. Math. Program. Ser. A 143(1–2), 61–86 (2014)

Létocart, L., Plateau, M.-C., Plateau, G.: An efficient hybrid heuristic method for the 0–1 exact \(k\)-item quadratic knapsack problem. Pesquisa Operacional 34(1), 49–72 (2014)

Mitra, G., Ellison, F., Scowcroft, A.: Quadratic programming for portfolio planning: insights into algorithmic and computational issues. J. Asset Manage. 8, 249–258 (2007). Part ii: Processing of Portfolio Planning Models with Discrete Constraints

Pisinger, D.: The quadratic knapsack problem: a survey. Discrete Appl. Math. 155, 623–648 (2007)

Rader Jr., D.J., Woeginger, G.J.: The quadratic 0–1 knapsack problem with series-parallel support. Oper. Res. Lett. 30(3), 159–166 (2002)

Rendl, F., Rinaldi, G., Wiegele, A.: Solving max-cut to optimality by intersecting semidefinite, polyhedral relaxations. Math. Program. Ser. A 121(2), 307–335 (2010)

Rendl, F., Sotirov, R.: Bounds for the quadratic assignment problem using the bundle method. Math. Program. Ser. B 109(2–3), 505–524 (2007)

Shawa, D.X., Liub, S., Kopmanb, L.: Lagrangean relaxation procedure for cardinality-constrained portfolio optimization. Optim. Meth. Softw. 23, 411–420 (2008)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Létocart, L., Wiegele, A. (2016). Exact Solution Methods for the k-Item Quadratic Knapsack Problem. In: Cerulli, R., Fujishige, S., Mahjoub, A. (eds) Combinatorial Optimization. ISCO 2016. Lecture Notes in Computer Science(), vol 9849. Springer, Cham. https://doi.org/10.1007/978-3-319-45587-7_15

Download citation

DOI: https://doi.org/10.1007/978-3-319-45587-7_15

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-45586-0

Online ISBN: 978-3-319-45587-7

eBook Packages: Computer ScienceComputer Science (R0)