Abstract

The image signature concept can be a successful approach to image comparison in content-based retrieval, but it is a very challenging task. Fundamental for this purpose is defining signature similarity. There exist a lot of similarity models which measure similarity of images or their objects in multimedia databases. In order to deal with semantic retrieval, we have introduced a three stage search engine. At each stage, we need different but equally effective similarity measures. Here, we have analysed asymmetric and symmetric approaches to signature comparison. In our experiment, we present an extensive comparison of some similarity measures dedicated to image retrieval.

Access provided by CONRICYT-eBooks. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

In the recent years, many researchers have intensively analysed similarity evaluations between whole images, their fragments, or some image elements, such as contours. Content-based similarity models have been developed [1] so as to comply with the system needs and user requirements regarding semantic multimedia retrieval.

According to Beecks et al. [1], a similarity model between the query and image or a group of image objects can be determined, even for a large multimedia database, by working out only the distance between their corresponding feature representations. We claim that, even though a query is automatically generated by a CBIR system or is prepared manually by a user as, for instance, we proposed in our system introducing a special dedicated GUI [2], the introduction of the spatial object location is strongly recommended.

Therefore, we provide in this paper a comparison between the Beecks’ concept of feature signatures and their similarity measure, and our search engine concept where image signature and spatial object location are treated as global image information, but object features are only local. We decided to compare two kinds of signatures in order to check what gain it would bring if we found objects and compare their locations, as we proposed in our search engine [3, 4]. The aforementioned knowledge is crucial for the effectiveness of multimedia retrieval systems.

2 Signature Matching

2.1 Metrics Properties

Generally, when we analyse a metric space we assume by default that four basic conditions are satisfied:

-

Non-negativity: d(x, y) ≥ 0;

-

Identity: d(x, y) = 0 <=> x = y;

-

$$ {\text{Symmetry}}:d\left( {x,y} \right) = d\left( {y,x} \right); $$(1)

-

Triangle inequality: d(x, y) + d(y, z) ≥ d(x, z) for any points x, y, z of the set.

These conditions express our common notions of distance. For example, the distance between distinct points is positive and the distance from point A to B is equal to the distance from B to A.

We may also need to find the distance between two vectors, namely, feature vectors. Then, in a normed vector space (X, ||∙||) we can define a metric on X by

A metric defined in such a way is translation invariant and homogeneous. The most widely used similarity measure is the Euclidean measure. It can be applied to measure the distance between two points or between two feature vectors.

However, in real life the symmetry is questionable, for example, the way up a hill and down a hill takes different time. A similar situation is when we compare images. We can imagine various criteria, for instance, the number of particular elements or segments which constitute a query. Hence, when we select as a query an image among the previous matching images, we obtain a different set of matching images because the symmetry is incomplete.

In such a situation a quasimetric may be needed. A quasimetric is defined as a function that satisfies the previously mentioned axioms for a metric without symmetry:

This notion is more often used in practice than in mathematics, and that is why sometimes it is called a semimetrics [5].

2.2 Signatures

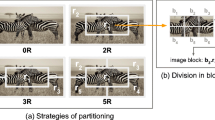

In our system [3], at the first stage, objects o ij are extracted from an image I i based on low-level features. These features are used for object classification. Additionally, the objects’ mutual spatial relationship is calculated based on the centroid locations and angles between vectors connecting them, with an algorithm proposed by Chang and Wu [6] and later modified by Guru and Punitha [7], to determine the first three principal component vectors (PCV oi , i = 1, …, 3 for each object o ij ). Spatial object location in an image is used as the global feature [4].

Definition 2.1

(Image signature [3])

Let the query be an image I q , such as I q = {o q1, o q2, …, o qn }, where o ij are objects. An image in the database is denoted as I b , I b = {o b1, o b2, …, o bm }. Let us assume that in the database there are, in total, M classes of the objects marked as L 1 , L 2 , …, L M . Then, as the image signature I i we denote the following vector:

where: nobc ik are the number of objects o ij of class L k segmented from an image I i . Note that the length of a signature is always the same and is equal to M.

As the second kind of signature we adopt the feature signature defined by Beecks et al. [8] in 2009 who aggregated features into a compact feature representation, for the purpose of effective calculation of similarity between two data objects. Rubner et al. [9], used two common feature representation types: feature histograms and feature signatures, which were worked out from global partitioning of the feature space and local feature clustering for each data object. Contrary to our approach, these authors applied global partitioning to the feature space, regardless of feature distributions of single objects, in order to create feature histograms which in turn correspond to the number of features located in the global partition.

According to Beecks et al. feature signature is defined as follows:

Definition 2.2

(Feature signature [1])

Let FS ⊆ R k be a feature space and C = C 1, …, C k be a local clustering of the features f 1, …, f n ∈FS of object o ij . Then a feature signature S o of length M i can be defined as a set of tuples from a FS × R + such as:

where: \( c_{k}^{o} = \frac{{\mathop \sum \nolimits_{{f \in c_{k} }} f}}{{|c_{k} |}} \) is a centroid of similar objects o ij of image I i and \( w_{k}^{o} = \frac{{|c_{k} |}}{n} \) is its weight.

It means that a feature signature S o of object o ij is a set of centroids \( c_{k}^{o} \) ∈ FS with the corresponding weights \( w_{k}^{o} \) ∈ R +.

According to Definition 2.2. carrying out the feature clustering individually for each data object reflects aggregation of feature distribution in a better way than any feature histogram. However, feature histograms are a special case of feature signatures, whose centroids stay the same for the whole database and the information about objects is reflected only via weights, which results in a limitation of object representation.

By this approach, Beecks et al. aggregated the objects’ location in the feature space which is substituted only by grouping similar feature values in signature and histogram form. They proposed only seven basic features: two coordinates, three components of colour and two texture descriptors, whereas we offered 45 features for a particular object, for example: moments of inertia and Zernike’s moments [10].

In our adaptation of their method, a number of objects of a particular class were interpreted as weights. Object centroids represent locations of real, early segmented, objects in the image space. Here, class centroids are situated in the geometrical centre among particular object centroids. We also use different methods to determine the similarity in these two approaches.

2.3 Similarity Functions

Asymmetry is one of the most controversial properties of similarity. In this subsection we describe the asymmetric approach to image signature matching and a signature quadratic form distance in comparison with standard similarity measures, such as Euclidean, absolute difference or Hamming. All the measures are implemented in our search engine [3, 4].

In order to answer the query I q , we compare it with each image I b from the database in the following way. A query image is obtained from the GUI, where the user constructs their own image from selected DB objects. First of all, we determine a similarity measure simsgn between the signatures of query I q and image I b :

computing it as an equivalent with the Hamming distance between two vectors of their signatures (cf. (4)), such that simsgn ≥ 0 and \( \mathop {max}\limits_{i} ( {\text{nob}}_{qi} - {\text{nob}}_{bi} ) \) ≤ thr, thr is the limitation of the quantity of elements of a particular class by which I q and I b can differ. It means that we select from the DB images with the same classes as the query. The above comparison is asymmetric because if we interchange the query and the image, we obtain negative similarity value, that is: simsgn (I q, I b ) = – simsgn (I b, I q ). Then, the condition of non-negativity of similarity is incomplete. This fact is crucial from the semantic matching point of view because the human brain recognizes things in context with others.

If the maximum component of (6) is bigger than a given threshold (a parameter of the search engine set by the user), then image I b is discarded. Otherwise, it goes to the next step and we find the spatial similarity simPCV (7) of images I q and I b , based on the City block distance between their PCVs as:

Definition 2.3

(Signature Quadratic Form Distance [1])

If S o = {\( c_{k}^{o} ,w_{k}^{o} , k = 1, \ldots ,M \)} and S q = {\( c_{k}^{q} ,w_{k}^{q} , k = 1, \ldots ,N \)} are two feature signatures, then the Signature Quadratic Form Distance (SQFD) between S o and S q is defined as:

where: A∈R (M+N)×(M+N) is the similarity matrix, w q = (\( w_{1}^{q} , \ldots ,w_{m}^{q} \)) and w o = (\( w_{1}^{o} \),…, \( w_{n}^{o} \)) are weight vectors and (w o | − w q ) = (\( w_{1}^{o} \), …, \( w_{n}^{o} , - w_{1}^{q} , \ldots , - w_{m}^{q} \)).

The similarity matrix A can be constructed assuming that there exists a similarity function f: FS × FS → R. The a k,l components of A are calculated as follows:

where: k, l = 1, …, N + M.

In our approach, there is the same number of classes for each image signature (N = M), hence we decided to assume the length of vectors w q and w o equal to M which implies the size of a square matrix A [M×M]. Then the signature form distance (8) can be simplified to the form:

and a k,l components are computed only for k, l = 1,…, M, according to (9). Here, in Definition 2.3 and in our approach, S q means a query signature, whereas S o means image signatures in the database. The signature similarity, computed according to SQFD (cf. (10)), gives more information than the one computed it according to simsgn (cf. (6)) which is seen in the results.

3 Results

Below we present examples of matching results obtained for the above-mentioned similarity measures. We applied two queries designed in our GUI. The former is a semidetached building with a hip roof and the latter is a terraced house with three gable roofs. From the semantic matching point of view, the best results should be images of houses with the same kinds of roofs and similar number of building segments. All figures present query (far left picture in each) and 11 best matched images which are ordered decreasingly, according to the similarity to the query. Figures 1 and 2 present results found according to the asymmetric image signature (cf. (6)). We can see that both results contain buildings with two flat roofs and one with a semicircular type, which are not desired.

Figures 3 and 4 present matching for both queries computed according to our modification of signature form distance (cf. (10)) and all results fulfil the semantic requirements. Figures 5 and 6 present matching for these same queries, computed according to the signature quadratic form distance (cf. (8)).

Generally, in the case of semantic comparison, it is difficult to compare these results in a quantitative way, so that is why we present the result in full form. This gives us the opportunity for a qualitative evaluation. Hence, as we can see, especially in Fig. 6, where there are no fully correct matchings because separate doors, stairs, balconies appear strongly undesirable. It happens because the Beecks’ team analysed less information about object spatial location than we did. Even though we used the simplified signature form distance, we obtained better results thanks to the fact that we added the separate object spatial location similarity (cf. (7)).

4 Discussion

In our analysis we have not decided to add such a popular approach as the SIFT method [11], because it mainly concentrates on finding a particular object similarity without a deep object spatial location analysis. The example of such a matching is shown in Fig. 7 where to the three terraced buildings seven houses with balconies were matched because a gutter or another less important element added in the query were found in the DB.

5 Conclusion

In this paper we compare the asymmetric and symmetric similarity measures applied to two kinds of signatures implemented in our content-based image retrieval system. In order to present the evaluation of the above-mentioned similarity measures, we used the database created in our institute containing mainly images of houses coming from the Internet.

We can observe that in a situation when a signature similarity is enhanced by object spatial location, the quality of semantic matching is better. All these similarity measures are applicable to signatures of different size and structure.

References

Beecks, C., Uysal, M.S. Seidl, T.: A comparative study of similarity measures for content-based multimedia retrieval. In: Multimedia and Expo (ICME), Suntec City, 19–23 July 2010

Jaworska, T.: Query techniques for CBIR. In: Andreasen, T., Christiansen, H., Kacprzyk, J., Larsen, H., Pasi, G., Pivert, O., De Tre, G., Vila, M.A., Yazici, A., Zadrożny, S. (eds.) Flexible Query Answering Systems, vol. 400, pp. 403–416. Springer, Cracow (2015)

Jaworska, T.: A search-engine concept based on multi-feature vectors and spatial relationship. In: Christiansen, H., De Tré, G., Yazici, A., Zadrożny, S., Larsen, H.L. (eds.) Flexible Query Answering Systems, vol. 7022, pp. 137–148. Springer, Ghent (2011)

Jaworska, T.: Spatial representation of object location for image matching in CBIR. In: Zgrzywa, A., Choroś, K., Siemiński, A. (eds.) New Research in Multimedia and Internet Systems, vol. 314, pp. 25–34. Springer, Wrocław (2014)

S. Rolewicz, Functional Analysis and Control Theory: Linear Systems, vol. Series: Mathematics and its applications, PWN-Polish Scientific Publishers, Warsaw 1987

Chang, C.-C., Wu, T.-C.: An exact match retrieval scheme based upon principal component analysis. Pattern Recogn. Lett. 16, 465–470 (1995)

Guru, D.S., Punitha, P.: An invariant scheme for exact match retrieval of symbolic images based upon principal component analysis. Pattern Recogn. Lett. 25, 73–86 (2004)

Beecks, C., Uysal, M.S. Seidl, T.: Signature quadratic form distances for content-based similarity. In: ACM Multimedia, Beijing, China, 19–24 Oct 2009

Rubner, Y., Tomasi, C., Guibas, L.J.: The earth mover’s distance as a metric for image retrieval. Int. J. Comput. Vision 40(2), 99–121 (2000)

Teague, M.R.: Image analysis via the general theory of moments. J. Opt. Soc. America 70(8), 920–930 (1980)

Lowe, D.G.: Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 60(2), 91–110 (2004)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2017 Springer International Publishing Switzerland

About this paper

Cite this paper

Jaworska, T. (2017). An Asymmetric Approach to Signature Matching. In: Zgrzywa, A., Choroś, K., Siemiński, A. (eds) Multimedia and Network Information Systems. Advances in Intelligent Systems and Computing, vol 506. Springer, Cham. https://doi.org/10.1007/978-3-319-43982-2_3

Download citation

DOI: https://doi.org/10.1007/978-3-319-43982-2_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-43981-5

Online ISBN: 978-3-319-43982-2

eBook Packages: EngineeringEngineering (R0)