Abstract

Population pharmacokinetics is the study of sources and correlates of variability in drug exposure and response. It is an important aspect of drug development and plays a key role in finding the right dose to inform product labeling decisions. Application of novel mathematical and statistical tools to the study of population pharmacokinetics has revolutionized the drug development process. Pharmacostatistical models composed of pharmacokinetic, pharmacodynamic, disease progression, trial design, and econometric components are widely used in decision-making at every stage of drug development. Nonlinear mixed-effects modeling methodology enables the analysis of sparsely collected pharmacokinetic and pharmacodynamic data from large-scale late-stage clinical trials to understand drug exposure–response relationships. Regulatory authorities such as the US FDA and EMEA have supported and worked with the pharmaceutical industry to bring about a successful culture of change in drug development, which has evolved into a concept called model-based drug development (MBDD). MBDD uses modeling and simulation to implement a “learn and confirm” paradigm. This chapter is intended to provide the reader with a basic understanding of the various methods involved in population pharmacokinetics with an emphasis on the current gold standard of nonlinear mixed-effects modeling methodology.

Keywords

- Population pharmacokinetics

- Nonlinear mixed-effects modeling (NONMEM)

- Pharmacokinetics

- Pharmacodynamics

- Pharmacometrics

Population pharmacokinetics is defined as the study of the variability in plasma drug concentrations between individuals when standard dosage regimens are administered [1]. Studying the sources and correlates of variability in plasma concentrations provides clinicians with important information for designing appropriate dosing regimens. Important sources of interindividual variability in drug exposure may be due to various factors such as food, drug–drug interactions, pathophysiological conditions, and patient demographics.

During the course of new drug development, it is imperative to understand the safety and efficacy of a new chemical entity by taking into account experimental results from preclinical and clinical studies. Clinical development of a drug includes phase I–IV studies in which a candidate compound progresses through studies in healthy volunteers to clinical trials in patient populations. These trials typically require collection of several plasma concentrations followed by pharmacokinetic data analysis (compartmental or non-compartmental methods) and statistical analysis to test the study hypothesis. This method is known as standard, two-stage population pharmacokinetic analysis. The methodology first requires the estimation of individual pharmacokinetic parameters and then calculation of the summaries that represent population parameters (mean and standard deviation); this is followed by hypothesis testing via statistical analysis. This classical clinical pharmacological approach is somewhat limited to the early phase clinical trials with healthy populations where extensive pharmacokinetic sampling is feasible. It is logistically impossible to collect such data in large-scale clinical trials (phase III) where only sparse samples (1–2 samples per subject) are collected at intermittent clinical visits. Data collected in this manner is not amenable to traditional pharmacokinetic analysis; nonetheless, these trials contain plasma concentration data from the relevant patient population in which the drug will ultimately be used.

Lack of pharmacokinetic methodology to analyze sparse data limits the utility of routine therapeutic drug monitoring from actual patient populations. Other approaches such as naïve pooling and naïve averaging of the data have been proposed to handle sparse sample data but were shown to result in large biases in parameter estimates or to lack the inference on variability [2].

The pioneering work by Drs. Sheiner and Beal on nonlinear mixed-effects modeling (NLME) approaches set the stage for sparse sample pharmacokinetic data analysis. The NLME approach is a parametric model-based approach to study population pharmacokinetics. It provides unbiased mean pharmacokinetic parameters as well as an estimate of variability by partitioning total variability in parameters into between-subject variability and residual variability [3]. The software developed to implement this analytical approach, NONMEM™, was named after the analytical method (nonlinear mixed-effects modeling); it was developed at the University of California, San Francisco, and is currently licensed and managed by Icon Development Solutions (Baltimore, MD). Currently, NONMEM™ is considered the gold standard for population pharmacokinetic analysis; however, other software options that use different algorithms for parameter estimation are also available. These include Monolix (Lixoft, France), Phoenix (Certara, USA), ADAPT (BMSR, University of Southern California, USA), and Pmetrics (LAPK, University of Southern California, USA). Of note, it is now common in the pharmacometrics community to use the term NONMEM to describe the software program as opposed to the NLME analytical approach. Also, for purposes of clarification, the terms “population pharmacokinetics” and “NLME approach” are used interchangeably in this chapter.

The objective of the current chapter is to describe the basic principles of population pharmacokinetic modeling. This will include basic terminology, statistical concepts of error structures, mixed-effects modeling, and methodology used to build a population pharmacokinetic model. An in-depth mathematical discussion is beyond the scope of this text. For a more extensive discourse, the reader is referred to reviews by Ene et al., Bonate et al., and Giltinan et al., as well as the NONMEM™ user guide (Icon Development Solutions Inc., MD, USA) [2, 4, 5].

1 Basic Terminology and Concepts

The term “model” in this chapter refers to a mathematical model that describes the pharmacokinetics or pharmacodynamics of a drug. These mathematical models originate from various compartmental model assumptions and are generally in the form of differential equations that describe the temporal profile of plasma concentrations that result from a particular dosage regimen. For example, the pharmacokinetic profile of a drug that is administered as an IV bolus and follows first-order elimination from a one-compartment model can be described by the following mathematical equation:

\[ {C}_j=\frac{\mathrm{Dose}}{\mathrm{Vd}}\cdot {e}^{-\frac{\mathrm{CL}}{\mathrm{Vd}}\cdot {t}_j} \tag{4.1} \]

where \( {C}_j \) represents the concentration at the jth time point; CL and Vd represent clearance and volume of distribution, respectively; and \( {t}_j \) is the time elapsed between dose administration and collection of the jth plasma sample. The above model consists of dose as input, time as an independent variable, concentration as a dependent variable, and CL and Vd as pharmacokinetic parameters. When a clinical pharmacokinetic experiment is conducted, post-dose plasma samples are collected from an individual at various time points. These longitudinal data are then fit to a model such as that described by Eq. 4.1 to estimate the individual pharmacokinetic parameters CL and Vd. The process of estimating the parameters by fitting a model to the data is called “modeling.” Once the appropriate pharmacokinetic model is fit to the data and the pharmacokinetic parameters are estimated, Eq. 4.1 can be used to calculate the plasma concentrations that result from various inputs (i.e., dose and dosing frequency); this process is referred to as pharmacokinetic simulation.

Modeling and simulation have become a vital component of clinical pharmacology and drug development programs, as they provide the tools for building predictive pharmacostatistical models. These predictive models are based on prior preclinical and clinical information and assist investigators in planning future confirmatory (phase III) clinical trials with a greater probability of success [6]. Pharmacometrics can be defined as the branch of science that is concerned with the interplay between mathematical models of biology, pharmacology, disease, and physiology. Pharmacometric data are used to describe and quantify interactions between xenobiotics and patients, including both beneficial and adverse effects [7]. This ideology has given birth to a new approach to developing drugs called model-based drug development (MBDD) [8]. MBDD involves the application of various mathematical and statistical modeling and simulation tools to assist in key drug development decisions, such as dosage selection and clinical trial design.
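The simulation step described above can be sketched in a few lines of Python. This is a minimal illustration of the one-compartment IV bolus model, not a fitted model: the function names and the dose, CL, and Vd values are hypothetical and chosen only for demonstration.

```python
import math


def conc_iv_bolus(dose, cl, vd, t):
    """One-compartment IV bolus: C(t) = Dose/Vd * exp(-(CL/Vd) * t)."""
    return dose / vd * math.exp(-(cl / vd) * t)


def simulate_profile(dose, cl, vd, times):
    """Predicted concentrations at each sampling time."""
    return [conc_iv_bolus(dose, cl, vd, t) for t in times]


# Hypothetical inputs: 100 mg dose, CL = 5 L/h, Vd = 50 L
times = [0, 1, 2, 4, 8, 12, 24]  # hours after the dose
profile = simulate_profile(100.0, 5.0, 50.0, times)

# Elimination half-life implied by the same parameters: ln(2) * Vd / CL
half_life = math.log(2) * 50.0 / 5.0
```

Changing `dose` or the sampling times and rerunning `simulate_profile` is the simplest form of the "what if" exploration that simulation supports.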

Pharmacokinetic modeling and simulation are both mathematically and statistically intensive, and a basic appreciation of both disciplines is necessary. These topics are briefly addressed here, but this is not an exhaustive review of either subject; for more information, readers are referred to detailed texts on linear algebra, calculus, mathematical statistics, and probability theory. A brief refresher on the required terminology follows. Random variables are real-valued functions on a sample space with a probability distribution function. The value of the random variable is determined by the outcome of a particular experiment. Random variables can be discrete, such as categorical scores for a pharmacodynamic effect, or continuous, such as plasma concentrations. The expectation of a random variable, or of a function of a random variable, can be calculated from probability theory for both discrete and continuous random variables; it represents a weighted average of the possible values that the variable can take [9]. Random variables can follow several probability distributions, such as Bernoulli, binomial, Poisson, geometric, hypergeometric, and negative hypergeometric for discrete variables and uniform, normal, exponential, gamma, chi-squared, and Cauchy for continuous variables. The central limit theorem provides the theoretical basis for the observation that many random phenomena obey, at least approximately, a normal probability distribution [9]. The normal distribution of a continuous random variable underlies many assumptions in population pharmacokinetic modeling. A univariate normal distribution is characterized by its mean and variance. A multivariate normal distribution is characterized by a mean vector and a variance–covariance matrix [4, 9].

1.1 Methods for Studying Population Pharmacokinetics

Pharmacokinetic parameters in a population differ between individuals due to intrinsic and extrinsic factors. Intrinsic factors include age, weight, gender, genetics, and metabolic status of individuals, and extrinsic factors include concomitant medications, comorbid conditions, and food. An individual pharmacokinetic model consists of individual pharmacokinetic parameters, while a population pharmacokinetic model consists of population pharmacokinetic parameters and variability parameters. Variability parameters of interest include between-subject variability, between-occasion variability, and residual variability arising from errors in analytical methods, sampling, dosing, etc. For this introductory text, we will not detail between-occasion (interoccasion) variability. Traditionally, various methods have been applied to the study of population pharmacokinetics. Although some methods are more common than others, we will discuss a variety of them to provide a complete picture of their use.

When drug administration and sampling schedules are identical in all subjects in a study, one can average the plasma concentrations at each time point and fit a model to the mean data. This approach is called the naïve average data (NAD) approach. It is not a reliable method for estimating population pharmacokinetic parameters because the averaging may smooth out the pharmacokinetic variability and change the temporal pharmacokinetic profile (e.g., a double-peak phenomenon in individual profiles may not appear in the population average profile). Moreover, this method does not provide any information on between-subject variability. This approach is currently used only for preclinical experiments, where sources of variability such as variability between animals or between occasions are smaller than those observed in a clinical setting [2].

In situations where the sampling schedules differ between individuals, a naïve pooled approach (NPA) can be used. Using this approach, plasma concentrations from all subjects are pooled and fit to a model as if they originated from a single individual [10]. This approach can provide reliable population pharmacokinetic parameter estimates but, as with NAD, it provides no information on parameter variability. Moreover, its estimates have been shown to be biased when between-subject variability is high and sampling schedules are heterogeneous.

A standard two-stage (STS) approach involves fitting each individual's pharmacokinetic data and then summarizing the individual estimates as means and variances to determine population parameters. This method is applicable in situations where extensive sampling is performed; however, simulation studies show that it yields upwardly biased variability parameters [3, 11, 12].
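The difference between the naïve pooled and standard two-stage approaches can be sketched as follows. This is an illustrative example only: it assumes a one-compartment IV bolus model fitted by log-linear least squares (a simplification, not the estimation method of any particular software), and the subjects, dose, and parameter values are hypothetical and noise-free.

```python
import math
import statistics


def fit_log_linear(times, concs):
    """Least-squares line through (t, ln C); returns (intercept, slope)."""
    logs = [math.log(c) for c in concs]
    t_bar = statistics.fmean(times)
    y_bar = statistics.fmean(logs)
    sxx = sum((t - t_bar) ** 2 for t in times)
    sxy = sum((t - t_bar) * (y - y_bar) for t, y in zip(times, logs))
    slope = sxy / sxx
    return y_bar - slope * t_bar, slope


def cl_vd_from_fit(dose, times, concs):
    """Convert the log-linear fit into CL and Vd for an IV bolus dose."""
    intercept, slope = fit_log_linear(times, concs)
    vd = dose / math.exp(intercept)  # intercept = ln(Dose / Vd)
    cl = -slope * vd                 # slope = -CL / Vd
    return cl, vd


def standard_two_stage(dose, subjects):
    """Stage 1: fit each subject; stage 2: summarize mean and SD."""
    fits = [cl_vd_from_fit(dose, t, c) for t, c in subjects]
    cls = [cl for cl, _ in fits]
    vds = [vd for _, vd in fits]
    return {
        "CL_mean": statistics.fmean(cls), "CL_sd": statistics.stdev(cls),
        "Vd_mean": statistics.fmean(vds), "Vd_sd": statistics.stdev(vds),
    }


def naive_pooled(dose, subjects):
    """Pool all observations and fit them as if from one individual."""
    all_t = [t for times, _ in subjects for t in times]
    all_c = [c for _, concs in subjects for c in concs]
    return cl_vd_from_fit(dose, all_t, all_c)


# Hypothetical, noise-free data for three subjects given 100 mg
times = [1.0, 2.0, 4.0, 8.0]
true_params = [(4.0, 40.0), (5.0, 50.0), (6.0, 60.0)]  # (CL, Vd)
subjects = [
    (times, [100.0 / vd * math.exp(-cl / vd * t) for t in times])
    for cl, vd in true_params
]
sts = standard_two_stage(100.0, subjects)
pooled_cl, pooled_vd = naive_pooled(100.0, subjects)
```

Note that `naive_pooled` returns a single pair of parameters with no variability estimate, whereas `standard_two_stage` returns both means and standard deviations, but only because every subject here has enough samples to be fitted individually.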

An NLME approach has been proposed as the appropriate theoretical mathematical framework for analyzing longitudinal pharmacokinetic data from clinical pharmacology studies [5, 10]. The NLME approach takes a middle path between the STS, NAD, and NPA approaches: it appropriately pools the samples from various individuals and fits a population model that comprises typical pharmacokinetic parameters and variability parameters. This approach can handle sparse samples in individuals (2–3 per subject) and nonuniform study designs and thus can be applied to data from late phase clinical trials and data from routine clinical practice. Some important features of NLME that differ from the traditional methods discussed above include (1) collection of relevant pharmacokinetic information from a target population, (2) identification and measurement of variability in drug exposure during development, and (3) determination of the sources and estimation of the magnitude of unexplained variability in the patient population [13].

It is vital to prospectively plan a population pharmacokinetic study with regard to study design (sample size, covariate selection), methodology, and analytical plan. The US FDA recommends population pharmacokinetic study designs that include single-trough, multiple-trough, and full-population pharmacokinetic sampling designs. The single-trough design has limited utility in that it only allows for inferences on drug clearance, and only if the samples are collected around the time of the true trough concentration of the drug. This design will not be useful in estimating other pharmacokinetic parameters such as the absorption rate constant. The multiple-trough design consists of two or more blood samples obtained near the time of the trough concentration under steady-state conditions. The full-population pharmacokinetic sampling design involves the collection of multiple post-dose samples (typically 1–6) at various times that may differ between individuals [13].

Pharmacokinetic variability was once considered a nuisance variable when it came to data analysis; however, it is now appreciated that the magnitude of random variability is important because drug safety and efficacy are inversely proportional to the unexplainable variability in a drug’s pharmacokinetic and pharmacodynamic profile. The model shown in Eq. 4.1 must take into account errors in individual observations. A correct representation of the model is as follows:

\[ {C}_j=\frac{\mathrm{Dose}}{\mathrm{Vd}}\cdot {e}^{-\frac{\mathrm{CL}}{\mathrm{Vd}}\cdot {t}_j}+{\varepsilon}_j \tag{4.2} \]

where \( {\varepsilon}_j \) is the error associated with the plasma concentration at the jth time point; the errors are generally assumed to be independent and normally distributed with a mean of zero and some (unknown) variance \( {\sigma}^2 \). The same model in a population context has at least one more level of variability in addition to the residual error described in Eq. 4.2. This additional level, referred to as between-subject variability (BSV), occurs at the pharmacokinetic parameter level. Interindividual differences in pharmacokinetic parameters must be accounted for in a population model. A typical population model for a group of subjects administered an IV bolus dose is written as follows:

\[ {C}_{ij}=\frac{{\mathrm{Dose}}_i}{{\mathrm{Vd}}_i}\cdot {e}^{-\frac{{\mathrm{CL}}_i}{{\mathrm{Vd}}_i}\cdot {t}_{ij}}+{\varepsilon}_{ij} \tag{4.3} \]

where \( {C}_{ij} \) represents plasma concentration in the ith subject at the jth time point; \( {\mathrm{Dose}}_i \) and \( {t}_{ij} \) represent individual dose and time of sample collection, respectively; \( {\mathrm{CL}}_i \) and \( {\mathrm{Vd}}_i \) represent individual clearance and volume of distribution, respectively. In a population model, we mathematically relate the individual pharmacokinetic parameters to the population parameters as shown in the equations below:

\[ {\mathrm{CL}}_i=\mathrm{TVCL}\cdot {e}^{{\eta}_{1i}} \tag{4.4} \]

\[ {\mathrm{Vd}}_i=\mathrm{TVVd}\cdot {e}^{{\eta}_{2i}} \tag{4.5} \]

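where TVCL and TVVd are the typical values for population clearance and volume of distribution, respectively; \( {\eta}_i \) (written \( {\eta}_{1i} \) for clearance and \( {\eta}_{2i} \) for volume of distribution) represents the difference between the population parameter and the individual parameter on a logarithmic scale. One can understand this by simply rearranging the variables in Eq. 4.4 or Eq. 4.5; for clearance:

\[ {\eta}_{1i}=\mathrm{LOG}\left({\mathrm{CL}}_i\right)-\mathrm{LOG}\left(\mathrm{TVCL}\right) \tag{4.6} \]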

The LOG in Eq. 4.6 is a natural logarithm, and \( {\eta}_i \) is the difference between an individual pharmacokinetic parameter and a typical population value. One can see from this equation that \( {\eta}_i \) can be either a positive or a negative value because a person can have a clearance value that is greater or less than the population average. The \( {\eta}_i \) is a vital concept to population modeling and mixed-effects concepts; it is discussed in greater detail below.
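A short numerical sketch may help make the sign and scale of \( {\eta}_i \) concrete. It assumes the exponential parameterization described above (individual value = typical value × exp(η)); the function names and the clearance values are hypothetical.

```python
import math


def eta_from_parameters(individual_value, typical_value):
    """eta = ln(individual) - ln(typical), using the natural logarithm."""
    return math.log(individual_value) - math.log(typical_value)


def individual_from_eta(typical_value, eta):
    """Inverse relationship: individual = typical * exp(eta)."""
    return typical_value * math.exp(eta)


tvcl = 5.0  # hypothetical typical clearance, L/h
eta_fast = eta_from_parameters(7.5, tvcl)     # clearance above typical: eta > 0
eta_slow = eta_from_parameters(2.5, tvcl)     # clearance below typical: eta < 0
eta_typical = eta_from_parameters(5.0, tvcl)  # typical subject: eta = 0
```

Because η acts on the log scale, the back-transformed individual clearance is always positive regardless of the sign of η.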

1.2 Fixed Effects, Random Effects, and Mixed Effects

Fixed effects are those variables whose levels represent an exhaustive set of all possible levels. Random effects are variables whose levels do not exhaust the set of possible levels, and each level is equally representative of the other levels [4]. Fixed effects are those that can be measured in an experiment; they include dosages and covariates such as age, gender, race, and creatinine clearance. Fixed effect parameters relate these fixed effects to the population pharmacokinetic parameters in a quantitative manner. For example, if one wants to relate creatinine clearance measured in individuals to the population clearance of a drug given as an IV bolus, then the population model is written as below:

\( {\mathrm{CRCL}}_i \) and θ in Eq. 4.7 represent the individual measured creatinine clearance and the effect of creatinine clearance on the typical population estimate of clearance (TVCL), respectively. The individual creatinine clearance is normalized to a reference value of 120 mL/min in this case. The θ in Eq. 4.7 is a fixed effect parameter. The covariate submodel can be in the form of additive, proportional, exponential, or power models [14]. Typical values for pharmacokinetic parameters in the model are also considered a special case of fixed effects, because they do not vary between individuals. Random effect parameters quantify the random unknown variability in the pharmacokinetic parameters and the residual variability in the concentrations. Random effects in the population model include between-subject random effects, which are quantified by between-subject variability, and residual random effects, which are quantified by residual variability or intraindividual variability. Because plasma concentrations are a result of multivariate normal distributions of pharmacokinetic parameters (i.e., multiple parameters in the model have different distributions), the parameters that quantify the random effects are represented in a variance–covariance matrix or covariance matrix. The \( {\eta}_i \) is assumed to be normally distributed with a mean of zero and a variance of \( {\omega}^2 \). The population model in Eq. 4.3 will include the following covariance matrix to quantify random effects:

\[ \Omega =\left[\begin{array}{cc}{\omega}_1^2& {\omega}_1{\omega}_2\\ {}{\omega}_2{\omega}_1& {\omega}_2^2\end{array}\right] \tag{4.8} \]

where \( {\omega}_1^2 \) and \( {\omega}_2^2 \) represent the variances of \( {\eta}_{1i} \) and \( {\eta}_{2i} \) in the population, which quantify between-subject variability in clearance and volume of distribution, respectively. The off-diagonal elements \( {\omega}_2{\omega}_1 \) and \( {\omega}_1{\omega}_2 \) represent the covariance between clearance and volume of distribution. Covariance matrices are generally represented as lower triangular matrices because the matrix is symmetric: the upper triangular elements are the same as the lower triangular elements.

The individual parameter estimates in nonlinear mixed-effects modeling are obtained using Bayesian methodology and are generally referred to as empirical Bayes estimates (EBEs). The phrase “empirical Bayes” emphasizes that the parameters of the prior distribution are estimated from the data and are then used as if they were known to obtain the posterior distribution [15]. When there is less information in an individual, the model assumes the person to be a typical individual, and the individual parameters shrink toward the population parameters. The opposite occurs when there is more information in an individual subject, which means more samples were collected for that person at informative time points. If the population model is adequate, the quality of the individual parameter estimates will depend heavily on the observed data. The variance of the EBE distribution will shrink toward zero as the quantity of information at the individual level is reduced; this phenomenon is defined as η-shrinkage. Similarly, in cases where data are less informative, the individual weighted residual (IWRES; discussed below) distribution shrinks toward zero, which is defined as ε-shrinkage and is sometimes called “overfitting” [15].
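η-shrinkage is often summarized as a single number. The sketch below uses one commonly reported definition, 1 − SD(EBE η)/ω; this formula is an assumption here, since the chapter itself gives no equation, and the η values and ω are hypothetical.

```python
import statistics


def eta_shrinkage(ebe_etas, omega_sd):
    """Assumed definition: 1 - SD(empirical Bayes etas) / omega.

    omega_sd is the model-estimated between-subject SD (square root of
    the variance omega^2). Values near 0 indicate informative individual
    data; values near 1 indicate EBEs collapsed toward the typical value.
    """
    return 1.0 - statistics.stdev(ebe_etas) / omega_sd


# Hypothetical EBEs for four subjects, with an estimated omega of 0.3
shrink_rich = eta_shrinkage([-0.3, -0.1, 0.1, 0.3], 0.3)
# Sparse data: every subject is pulled to the typical value (eta = 0)
shrink_sparse = eta_shrinkage([0.0, 0.0, 0.0, 0.0], 0.3)
```

When shrinkage is high, plots of EBEs against covariates become unreliable because the EBEs mostly reflect the population prior rather than the individual's data.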

The residual error (i.e., the difference between model predicted and observed concentration) can have a structure. The most important error structures encountered in pharmacokinetic modeling include additive, proportional, and combination errors. Additive error has the following structure:

\[ {y}_{ij}=i{\mathrm{pred}}_{ij}+{\varepsilon}_{ij} \tag{4.9} \]

where \( {y}_{ij} \) is the observed data in the ith individual at the jth time point; \( i{\mathrm{pred}}_{ij} \) is the predicted concentration in the ith individual at the jth time point; and \( {\varepsilon}_{ij} \) is the random effect with a mean of zero and a variance of \( {\sigma}^2 \). Additive error is also called homoscedastic error; this error is not dependent on the magnitude of the prediction (higher or lower concentration). Proportional error, as the name indicates, is proportional to the magnitude of the concentration in the following way:

\[ {y}_{ij}=i{\mathrm{pred}}_{ij}\cdot \left(1+{\varepsilon}_{ij}\right) \tag{4.10} \]

This is also equivalent to \( {y}_{ij}=i{\mathrm{pred}}_{ij}+i{\mathrm{pred}}_{ij}*{\varepsilon}_{ij} \).

In this type of error, the higher the concentration, the greater the error, but the coefficient of variation (ratio of the standard deviation to the mean) is constant. Thus, it is also called a constant coefficient of variation model. In this model there is an interaction between residual error (\( {\varepsilon}_{ij} \)) and between-subject variability (η), due to the dependency of \( i{\mathrm{pred}}_{ij} \) on the EBEs. An estimation algorithm that accounts for this η–ε interaction should be used to avoid biases in parameter estimation, as discussed below. A combination error model combines the additive and proportional error models and is also sometimes called a “slope and intercept” model as shown below:

\[ {y}_{ij}=i{\mathrm{pred}}_{ij}\cdot \left(1+{\varepsilon}_{1 ij}\right)+{\varepsilon}_{2 ij} \tag{4.11} \]

where \( {\varepsilon}_{1 ij} \) and \( {\varepsilon}_{2 ij} \) represent proportional and additive error components, respectively.
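The three residual error structures can be compared side by side in a short sketch. The function names and numerical values are hypothetical; the residual standard deviation for the combination model additionally assumes that the proportional and additive components are independent.

```python
import math


def additive_error(ipred, eps):
    """y = ipred + eps (same absolute error at every concentration)."""
    return ipred + eps


def proportional_error(ipred, eps):
    """y = ipred * (1 + eps) (error scales with the prediction)."""
    return ipred * (1.0 + eps)


def combined_error(ipred, eps_prop, eps_add):
    """y = ipred * (1 + eps_prop) + eps_add ("slope and intercept")."""
    return ipred * (1.0 + eps_prop) + eps_add


def residual_sd(ipred, sigma_prop, sigma_add):
    """SD of the residual at a given prediction, assuming the two
    error components are independent."""
    return math.sqrt((ipred * sigma_prop) ** 2 + sigma_add ** 2)


# Hypothetical: 10% proportional SD, 0.05 mg/L additive SD
sd_low = residual_sd(0.1, 0.10, 0.05)     # additive part dominates
sd_high = residual_sd(10.0, 0.10, 0.05)   # proportional part dominates
cv_10 = residual_sd(10.0, 0.10, 0.0) / 10.0     # pure proportional model
cv_100 = residual_sd(100.0, 0.10, 0.0) / 100.0  # same CV at higher conc.
```

The last two lines illustrate the constant coefficient of variation property of the purely proportional model.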

A mathematical model containing both fixed and random effects is called a mixed-effects model. Mixed-effects models can describe a linear or nonlinear relationship between an independent variable and a dependent variable. If the function describing this relationship is a linear model, then it is a linear mixed-effects model and is commonly used to assess bioequivalence data, QTc data, and dose-response relationships [16]. The functions that relate the plasma concentrations (dependent variables) to time (independent variables) are nonlinear as in Eq. 4.3, and nonlinear mixed-effects modeling (NONMEM) methodology is applied. As mixed-effects modeling includes random effect parameters, the optimization methods play an important role in estimating the parameters of the model. Several basic estimation algorithms that are commonly used in NONMEM methodology will be discussed below.

2 Estimation Methods Used in NONMEM

Parameter estimation in mixed-effects models is complex; ordinary least squares-based methods are not optimal when residual variance depends on the model parameters [4]. Although the estimation methods discussed here focus on those available in NONMEM™, other software packages with slightly different (or the same) algorithms are also available. Most NLME methods use a maximum likelihood approach for parameter estimation. The likelihood is the probability of the observed data given the model, written as a function (the likelihood function) of the model parameters; the maximum likelihood estimates (MLEs) are the parameter values at which this probability is maximal. Because the observations depend nonlinearly on the random effects, several mathematical approximations that linearize the model with respect to the random effects were developed to calculate the likelihood function [2].

The first-order (FO) approximation was the first to be used and takes a first-order Taylor series expansion of the population model with respect to the random effects around zero. Currently FO is not recommended due to the availability of better approximations such as first-order conditional estimation (FOCE) and first-order conditional estimation with interaction (FOCEI). FOCE takes a first-order Taylor series expansion around the conditional estimates of the interindividual random effect (\( {\eta}_i \)), instead of zero. The FOCEI method accounts for the interaction between the between-subject and within-subject variability components and should be used when heteroscedastic error models (e.g., proportional error) are used.
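The two linearizations can be written out explicitly. The following is a sketch under assumed notation: \( f \) denotes the structural model prediction, \( \theta \) the fixed effect parameters, \( {\hat{\eta}}_i \) the conditional estimate of \( {\eta}_i \), and the residual error is taken as additive for simplicity. None of these symbols are defined in the chapter.

```latex
% FO: first-order Taylor expansion around eta_i = 0
\[ y_{ij} \approx f(\theta, 0, t_{ij})
   + \left.\frac{\partial f}{\partial \eta_i}\right|_{\eta_i = 0} \eta_i
   + \varepsilon_{ij} \]

% FOCE: first-order Taylor expansion around the conditional estimate
\[ y_{ij} \approx f(\theta, \hat{\eta}_i, t_{ij})
   + \left.\frac{\partial f}{\partial \eta_i}\right|_{\eta_i = \hat{\eta}_i}
     (\eta_i - \hat{\eta}_i)
   + \varepsilon_{ij} \]
```

In both cases the approximated model is linear in \( {\eta}_i \), which is what makes the marginal likelihood tractable; FOCE is generally more accurate because the expansion point is closer to each individual's actual random effect.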

A number of newer NLME methods based on expectation–maximization (EM) principles have been introduced in the newer versions of NONMEM™, including stochastic approximation expectation maximization (SAEM) and Monte Carlo importance sampling (IMP, IMPMAP), along with Markov chain Monte Carlo (MCMC) Bayesian methods. The EM-based methods are advantageous because they do not use linearized approximations (e.g., FO, FOCE) and therefore can theoretically induce less bias. MCMC Bayesian methods do not provide point estimates but provide a series of fixed effect parameter values that are distributed according to their ability to fit the data. A comparison among FOCEI, iterative two-stage (ITS), IMP, IMPMAP, and Bayesian methods in a simulated, complex, target-mediated drug disposition model showed that the newer methods performed similarly to FOCEI in parameter bias and standard error of the estimate (SE) [17].

It is important to realize that the calculated objective function value, which represents the global fit statistic to the data, cannot be compared between estimation algorithms, as the method of calculation varies significantly. For instance, in the first-order approximation estimation methods, the NONMEM™ software calculates the objective function as equivalent to −2 × log likelihood. The difference in objective function between two hierarchical (nested) models is approximately chi-square (\( {\chi}^2 \)) distributed with q degrees of freedom, where q is the number of parameters by which the models differ. The NONMEM™ objective function can therefore be used for hypothesis testing for hierarchical models, such as covariate analysis; this process is called log likelihood ratio testing. The objective function calculated using the SAEM method in NONMEM™ cannot be used for hypothesis testing; however, the parameters do represent maximum likelihood estimates. In the newer versions of the NONMEM™ software, multiple estimation methods can be used in sequence, with SAEM used for parameter estimation and importance sampling-based methods (IMP, IMPMAP) used for hypothesis testing and calculation of the asymptotic standard errors of the parameters. Readers are referred to the NONMEM™ technical guide for mathematical derivations and further information on the differences in objective functions [18–20].

2.1 General Principles of Population Pharmacokinetic Model Development

Population pharmacokinetic models are hierarchical in nature in that they have a structural pharmacokinetic model, a covariate submodel, and a statistical model. The structural model consists of the compartmental model equation that describes the temporal profile of plasma concentrations. The statistical model includes submodels that may incorporate between-subject variability or interindividual variability, residual variability or intraindividual variability, and between-occasion variability. The structural model is generally based on a prior understanding of a drug’s pharmacokinetics from preclinical studies or phase I studies where extensive sampling was performed.

When only sparse data are available, the ability to identify a more complex compartmental model is compromised. For example, data from a drug that is optimally described by a two-compartment model may fit a one-compartment model better if plasma concentrations are missing during the drug’s distribution phase. Identifiability of a model and its parameters is an important consideration when framing a structural model. Structural identifiability is the ability to uniquely estimate a model’s parameters. Parameter identifiability is the ability to estimate a structurally identifiable model [4].

Let us consider a compartmental model that consists only of plasma concentration samples, yet we desire to estimate both renal and nonrenal clearance. It is impossible to separate these two parameters unless either the urine compartment or the nonrenal compartment (metabolite) is sampled. These identifiability issues arise quickly when the model gets complicated such as parent drug–metabolite models where both a parent drug and its metabolite are modeled in an integrated model such as in Fig. 4.2. In this model, it is not possible to estimate all three parameters: (1) metabolite formation rate, (2) volume of the metabolite compartment, and (3) metabolite elimination rate [21]. Generally, some assumptions involving the metabolic fraction or volume of metabolite compartments are made so that only two of the three parameters are estimated. Statistical models consist of between-subject variability in pharmacokinetic parameters and residual variability that cannot be explained by the between-subject variability. An exponential error model is generally used for between-subject variability in pharmacokinetic parameters to represent the log-normal distribution because negative values for pharmacokinetic parameters are not meaningful. Residual error models were discussed in the previous section, namely, proportional, additive, and combination error models.

First, a base model that includes a structural model with random effect parameters will be finalized. Generally, the base model will not contain any covariates. However, it is now common to include weight as a covariate for volume and clearance parameters based on established allometric scaling methods [22, 23]. The base model provides individual pharmacokinetic parameters (EBEs), which are used to evaluate potential covariate relationships using plots of EBEs versus covariates such as age, weight, and gender. When a large number of covariates are present, several screening methods are proposed, which use generalized additive modeling and Wald’s approximation to the likelihood ratio test [24, 25]. It is important to have an extensive discussion between the clinical and pharmacometrics teams to determine which covariates should be included in the model; this should be determined by clinical relevance and final utility of the model. Covariate modeling represents the model-based hypothesis testing framework and actually represents the act of finding sources and correlates of variability as per the definition of population pharmacokinetics. Two important methods currently used in covariate modeling are stepwise addition and full model estimation [26–28].
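A weight-based covariate model of the kind mentioned above can be sketched as a power model. The reference weight of 70 kg and the exponents of 0.75 for clearance and 1.0 for volume are conventional allometric choices assumed here for illustration; they are not stated in this chapter, and the function names and typical values are hypothetical.

```python
def allometric_cl(tvcl, weight_kg, ref_weight_kg=70.0, exponent=0.75):
    """Typical clearance scaled to body weight with a power model."""
    return tvcl * (weight_kg / ref_weight_kg) ** exponent


def allometric_vd(tvvd, weight_kg, ref_weight_kg=70.0, exponent=1.0):
    """Typical volume of distribution scaled to body weight."""
    return tvvd * (weight_kg / ref_weight_kg) ** exponent


# Hypothetical typical values for a 70 kg subject: CL = 5 L/h, Vd = 50 L
cl_35kg = allometric_cl(5.0, 35.0)  # clearance falls less than proportionally
vd_35kg = allometric_vd(50.0, 35.0)  # volume falls proportionally
```

Normalizing the covariate to a reference value keeps the typical-value parameter interpretable: it is the clearance (or volume) of a subject at the reference weight.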

Stepwise covariate modeling includes a forward addition step, in which covariates are progressively added based on their statistical significance, and a backward elimination step, in which each covariate parameter entered during forward addition is removed in turn and the statistical result is evaluated. As mentioned, the difference in objective function value between two hierarchical (nested) models, which is used as the global goodness-of-fit measure, approximately follows a χ² distribution. For hierarchical models, a drop in objective function value of at least 3.84 points upon addition of one new parameter to the base model (no covariates) is statistically significant (α = 0.05, one degree of freedom). When a significant covariate is removed from the model, the objective function must increase by a similar magnitude. Generally, a less stringent significance level is used for forward addition (α = 0.05) than for backward elimination (α = 0.01 or lower); this is done to control for false positives. An automated implementation of stepwise covariate modeling (SCM) is available in the PsN tools [29]. For nonhierarchical models, the Akaike information criterion can be used for model selection [30].
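The decision rules above can be sketched in a few lines of Python. This is a minimal illustration, assuming one added parameter (one degree of freedom) and the standard χ² critical values of 3.84 (α = 0.05) and 6.63 (α = 0.01); the function names are hypothetical and this is not the PsN SCM implementation. The AIC helper assumes that the objective function value equals minus twice the log-likelihood up to a constant.

```python
import math

# Standard chi-square critical values for one degree of freedom
CHI2_CRIT_1DF = {0.05: 3.84, 0.01: 6.63}


def lrt_p_value_1df(delta_ofv):
    """p-value for a change in OFV when one parameter is added (chi-square, 1 df)."""
    if delta_ofv <= 0:
        return 1.0
    # For 1 df: P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2))
    return math.erfc(math.sqrt(delta_ofv / 2.0))


def forward_keeps(ofv_base, ofv_with_cov, alpha=0.05):
    """Forward addition: keep the covariate if the OFV drop reaches the critical value."""
    return (ofv_base - ofv_with_cov) >= CHI2_CRIT_1DF[alpha]


def backward_keeps(ofv_full, ofv_without_cov, alpha=0.01):
    """Backward elimination: keep the covariate if removing it raises OFV enough."""
    return (ofv_without_cov - ofv_full) >= CHI2_CRIT_1DF[alpha]


def aic(ofv, n_params):
    """Akaike information criterion for comparing nonhierarchical models."""
    return ofv + 2 * n_params


print(round(lrt_p_value_1df(3.84), 3))
```

In this sketch a covariate that lowers the objective function by 5 points would enter during forward addition but would not survive backward elimination at α = 0.01, which is how the stricter second step limits false positives.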

For full model evaluation, it is recommended to add clinically relevant covariates and construct a full model without regard to statistical significance, and then reduce the model by backward elimination. In this approach, clinical relevance and utility of the covariate in clinical practice are more important than statistical significance [27, 31]. In cases where no explanatory covariate is available but the base model shows a clearly multimodal distribution of EBEs, one can apply mixture models, which assign each individual to one of two or more subpopulations. Mixture modeling helps to explain such multimodal distributions, and the probability of each mixture subpopulation is estimated as a parameter [32]. The objective function value (OFV) that is minimized in a mixture model is the sum of the OFVs for each patient (OFV_i), which in turn is obtained by summing the contributions of the k subpopulations (OFV_{i,k}). The individual probability of belonging to a subpopulation can be calculated using the OFV in that individual together with the total probability in the population [33]. An example of a mixture model for risperidone is discussed in the subsequent sections.
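The calculation of an individual's probability of belonging to each subpopulation can be sketched as follows. This is a hedged illustration, assuming that each individual contribution OFV_{i,k} equals minus twice the log of the individual likelihood under subpopulation k, so that the likelihood is proportional to exp(-OFV_{i,k}/2), and that the individual probability is the mixing-probability-weighted likelihood normalized across subpopulations. The function name and the numbers are hypothetical.

```python
import math


def subpopulation_probabilities(ofv_ik, mixing_probs):
    """Individual probabilities of belonging to each of k subpopulations.

    ofv_ik:       individual OFV contribution under each subpopulation
                  (assumed to be -2 * log individual likelihood)
    mixing_probs: estimated population probability of each subpopulation
    """
    # Subtract the minimum OFV before exponentiating for numerical stability;
    # the common factor cancels in the normalization.
    ofv_min = min(ofv_ik)
    weighted = [
        p * math.exp(-0.5 * (ofv - ofv_min))
        for ofv, p in zip(ofv_ik, mixing_probs)
    ]
    total = sum(weighted)
    return [w / total for w in weighted]


# Hypothetical individual whose data fit subpopulation 1 better (lower OFV)
probs = subpopulation_probabilities(ofv_ik=[10.0, 14.0], mixing_probs=[0.5, 0.5])
print([round(p, 3) for p in probs])
```

When the individual data do not discriminate between subpopulations (equal OFV contributions), the individual probabilities fall back to the population mixing probabilities.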

2.2 Evaluation of a Population Model

Population pharmacokinetic model development involves fitting many (100 or more) models with varying structural, statistical, and covariate components to arrive at a parsimonious model, that is, an irreducible model with no redundant parameters. Several model diagnostics are commonly used to make decisions at every stage of modeling. Commonly used diagnostics include changes in the likelihood-based objective function value, basic goodness of fit plots, residual plots, standard errors of the estimates, and normalized prediction distribution errors (NPDE). The objective function value is a global, objective measure of model fit and can be used to decide whether to retain a parameter in the model using the likelihood ratio test (LRT) for hierarchical models. It is important to recognize that the LRT theoretically does not apply to parameters with boundary conditions, such as between-subject variability parameters and absorption lag time. However, the LRT is applicable for inclusion decisions for covariance parameters (a covariance can be positive or negative). Diagnostic plots are generally created using the Xpose package for R, which was created specifically for evaluating population pharmacokinetic models [34, 35]. These plots enable the modeler to visually inspect whether the model-predicted concentrations match the observed data, to check model assumptions such as normality of random effects, and to identify statistical outliers and covariate relationships.

Population modeling results in individual predictions (IPRED), population predictions (PRED), residuals (RES, IWRES, CWRES, WRES, etc.), and EBEs. These variables are used along with covariates and time after dose to prepare diagnostic plots or goodness of fit (GOF) plots. The most commonly reported plots for population pharmacokinetic models, and those recommended in regulatory guidance, are discussed here. PREDs account for the between-subject variability that is explained by covariates, whereas IPREDs additionally incorporate the individual random effects [14]. Plots of observed data (DV) versus IPRED and PRED reveal structural model misspecification or the need for covariates to explain variability. A line of identity (solid line in Fig. 4.1 with a slope of one) and a trend line (dark solid line in Fig. 4.1, preferably a regression line) are recommended for DV versus IPRED or PRED plots. Any deviation between the line of identity and the trend line represents potential model misspecification. The DV versus PRED plots are more sensitive to covariate effects; the modeler compares them before and after inclusion of a particular covariate and looks for perceivable differences. The DV versus IPRED plots can look artificially good when shrinkage is high (>30 %). Commonly calculated residuals in population modeling include individual weighted residuals (IWRES), weighted residuals (WRES), and conditional weighted residuals (CWRES).
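The shrinkage threshold mentioned above can be checked with a short calculation. The sketch below assumes the commonly used definition of eta-shrinkage as one minus the ratio of the standard deviation of the EBEs to the estimated between-subject standard deviation (omega), as described in the shrinkage literature cited in this chapter [15]; the function names and example values are hypothetical.

```python
import math
import statistics


def eta_shrinkage(ebe_etas, omega_variance):
    """Eta-shrinkage = 1 - SD(EBE etas) / omega, with omega = sqrt(omega_variance)."""
    omega_sd = math.sqrt(omega_variance)
    return 1.0 - statistics.stdev(ebe_etas) / omega_sd


def shrinkage_is_high(ebe_etas, omega_variance, threshold=0.30):
    """Flag shrinkage above the 30 % level at which IPRED-based plots can mislead."""
    return eta_shrinkage(ebe_etas, omega_variance) > threshold


# Hypothetical EBEs that have collapsed toward zero relative to omega^2 = 0.09
etas = [-0.10, 0.05, 0.00, 0.08, -0.04, 0.02]
print(round(eta_shrinkage(etas, 0.09), 2), shrinkage_is_high(etas, 0.09))
```

When the data per individual are sparse, the EBEs shrink toward the population mean, the spread of the EBEs falls well below omega, and diagnostics built on IPRED or EBEs become less informative.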

Fig. 4.1 Basic goodness of fit plots in population pharmacokinetic model evaluation. Plots in the top panel have a line of identity (lighter solid line) and a regression line (darker solid line) as trend line. Plots in the bottom panel have a (lighter solid) horizontal line at zero and a (darker solid) smooth line as trend line. The data were simulated from a one-compartment IV bolus model in NONMEM and refitted with the same model to generate these plots

A residual is defined as the difference between the observed concentration and the predicted concentration in an individual. WRES is calculated as the ratio of the residual to a weight (generally based on the variance) and relies on the FO approximation. WRES is therefore not appropriate for use with the FOCE or FOCEI approximations to the true model. CWRES is calculated based on the FOCE approximation and has better properties for identifying model misspecification [36]. A plot of CWRES or IWRES versus time after dose, with a horizontal line at 0 and a trend line, is recommended for checking the independence of the residuals from the independent variable, which is a fundamental assumption in regression analysis. The trend line (preferably a smooth line) should be horizontal and must not show any trends (Fig. 4.1, bottom panel). The same should be the case with residual versus PRED plots. It is also suggested that any individual observations with an absolute CWRES > 6 be identified as statistical outliers, as the CWRES has a mean of zero and unit variance [31].

The standard error (SE) of each parameter estimate is obtained from the variance–covariance matrix generated during the minimization process; approximate 95 % confidence intervals of the parameters can be calculated as the parameter estimate ± 2*SE. Generally, an SE greater than or equal to 50 % of the parameter estimate indicates a parameter with high imprecision [30].

The histograms and Q–Q (quantile–quantile) plots of EBEs are used to check the assumption of normality. If all points fall on the line of unity, then the normality assumption is satisfied (Fig. 4.2). NPDE is a simulation-based diagnostic that is used for model discrimination. By derivation, NPDE follows a standard normal distribution (mean of 0 and standard deviation of 1) when the model is correct; any deviation of the computed NPDE from this distribution indicates a misspecification of the model [37].

Some other plots that are commonly used in population pharmacokinetic model development include IPRED versus time after dose, parameter versus parameter correlations, and EBE versus EBE plots [38].
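The numerical rules of thumb given above (the ± 2*SE confidence interval, the 50 % relative standard error limit, and the absolute CWRES > 6 outlier criterion) can be collected into a small Python sketch. The function names and example values are hypothetical; only the thresholds come from the text.

```python
def approx_ci_95(estimate, se):
    """Approximate 95 % confidence interval: estimate +/- 2 * SE."""
    return estimate - 2.0 * se, estimate + 2.0 * se


def relative_se_percent(estimate, se):
    """Standard error expressed as a percentage of the parameter estimate."""
    return 100.0 * se / abs(estimate)


def is_imprecise(estimate, se, limit_percent=50.0):
    """Flag parameters whose relative SE is at or above the 50 % limit."""
    return relative_se_percent(estimate, se) >= limit_percent


def flag_outliers(cwres, threshold=6.0):
    """Return indices of observations with |CWRES| above the threshold."""
    return [i for i, r in enumerate(cwres) if abs(r) > threshold]


# Hypothetical clearance estimate of 10 with SE of 2
print(approx_ci_95(10.0, 2.0), relative_se_percent(10.0, 2.0))
print(flag_outliers([0.5, -6.2, 3.0, 7.1]))
```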

Fig. 4.2 Histograms and Q–Q plots of the between-subject random effects from the same model as in Fig. 4.1, used to check the assumption of normality. A probability density curve was added to the histogram. Any deviation from the line of unity in the Q–Q plot represents a deviation from the normality assumption. ETA1 and ETA2 represent the random effect parameters on clearance and volume of distribution, respectively

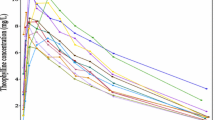

Once a final model is selected, several computing-intensive statistical methods are used for qualification and validation. These include visual predictive check (VPC), numerical predictive check (NPC), bootstrapping, cross-validation, and jack-knifing methods. VPC is a simulation-based diagnostic that takes into account all the model components (structural, fixed, and random effects) and is used to make model comparisons, suggest model improvements, and support the appropriateness of a model. VPC is conducted by first simulating several datasets with the same design aspects as the clinical trial that generated the observed data used for model development. Then percentiles (5th, 50th, and 95th) of all the simulated concentrations (not to be confused with IPRED or PRED) are calculated and overlaid in a plot with the corresponding percentiles of the observed data. The entire distribution of the observed data should match the predicted data from the model [39]. When there are major differences in study design, such as different doses and sample collection times, standardized VPC and prediction-corrected VPC are preferred over traditional VPCs [40, 41]. Numerical predictive check is very similar to VPC except that, instead of a visual display of concentrations, a metric (e.g., AUC) is calculated from the simulated datasets and compared to the observed data. Bootstrapping is a resampling-based technique in which the original data are resampled to create several bootstrap samples; the final model is then fit to all the samples to calculate the nonparametric CIs of the parameters and distributions. These CIs are generally considered more reliable than the parametric SE-based CIs calculated from the variance–covariance matrix. Please refer to the extensive descriptions of the cross-validation and jack-knifing techniques, which can identify influential subjects and provide a more robust evaluation of the predictive capabilities of a model [42, 44].
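The VPC percentile calculation and the bootstrap percentile interval described above can be sketched in Python. This is a simplified illustration under stated assumptions: a one-compartment IV bolus model with exponential between-subject variability and proportional residual error, hypothetical parameter values, and, for the bootstrap, a simple summary statistic standing in for the refit of the final model to each resampled dataset (which in practice is done with the estimation software).

```python
import math
import random


def percentile(values, q):
    """Percentile with linear interpolation between order statistics (q in 0..100)."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo = int(math.floor(pos))
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def simulate_profile(times, dose, tvcl, tvv, omega_cl, omega_v, prop_sd, rng):
    """Simulate one subject: C(t) = Dose / V * exp(-CL / V * t) with residual error."""
    cl = tvcl * math.exp(rng.gauss(0.0, omega_cl))
    v = tvv * math.exp(rng.gauss(0.0, omega_v))
    return [
        dose / v * math.exp(-cl / v * t) * (1.0 + rng.gauss(0.0, prop_sd))
        for t in times
    ]


def vpc_percentiles(times, n_sim, rng, **model):
    """5th, 50th, and 95th percentiles of simulated concentrations at each time."""
    profiles = [simulate_profile(times, rng=rng, **model) for _ in range(n_sim)]
    bands = []
    for j in range(len(times)):
        column = [profile[j] for profile in profiles]
        bands.append(tuple(percentile(column, q) for q in (5, 50, 95)))
    return bands


def bootstrap_ci(subject_values, statistic, n_boot, rng):
    """Nonparametric 95 % CI: resample subjects with replacement, recompute statistic."""
    n = len(subject_values)
    stats = []
    for _ in range(n_boot):
        sample = [subject_values[rng.randrange(n)] for _ in range(n)]
        stats.append(statistic(sample))
    return percentile(stats, 2.5), percentile(stats, 97.5)


# Hypothetical design and parameter values
times = [0.0, 1.0, 4.0, 8.0]
bands = vpc_percentiles(
    times, n_sim=2000, rng=random.Random(42),
    dose=100.0, tvcl=5.0, tvv=20.0, omega_cl=0.3, omega_v=0.2, prop_sd=0.1,
)
for t, (p5, p50, p95) in zip(times, bands):
    print(t, round(p5, 2), round(p50, 2), round(p95, 2))
```

In a real VPC these simulated bands would be overlaid on the same percentiles computed from the observed data, and each bootstrap replicate would involve re-estimating the full model rather than a simple statistic.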

2.3 Population Pharmacokinetic Modeling of Antipsychotics

In this section, several examples of population pharmacokinetic modeling applied to antipsychotic drugs are reviewed. Feng et al. reported an integrated population pharmacokinetic model for risperidone after oral administration, based on highly sparse sampling measurements from the CATIE study [43]. Risperidone is an atypical antipsychotic with selective antagonistic properties at serotonin 5-HT2 and dopamine D2 receptors [44]. The structural model was a one-compartment model with first-order absorption for risperidone, linked to a compartment for the active metabolite (9-hydroxyrisperidone) by formation clearance. The fraction of parent drug converted to metabolite was estimated as a function of parent clearance. Due to identifiability issues, the volume of the metabolite compartment was assumed to be the same as that of the parent compartment. In this study, a total of 1236 plasma risperidone and 9-hydroxyrisperidone concentrations were collected in 490 subjects. A clear multimodal distribution in individual risperidone clearance parameters was observed in the base model; this was likely because risperidone is metabolized by the polymorphic cytochrome P450 (CYP) 2D6 enzyme [45]. Therefore, a mixture model on the clearance parameter was used to capture the CYP2D6 polymorphism and explain the multimodal distribution in risperidone clearance. The mixture model successfully captured the CYP2D6 poor metabolizers (PM), intermediate metabolizers (IM), and extensive metabolizers (EM). The probability of being a PM, IM, or EM was estimated at 41 %, 52 %, and 7 %, respectively. The final model identified age as a significant covariate affecting 9-hydroxyrisperidone clearance.

Data from the above investigation suggest that older individuals may experience higher exposure to the active 9-hydroxy metabolite, thereby placing them at risk for toxicity. Combination error models with additive and proportional components were separately estimated for risperidone and its 9-hydroxy metabolite. Sherwin et al. applied the above model to data from 28 children and adolescents and successfully described the data, thereby suggesting that this model may be potentially useful for individualizing risperidone therapy in this population [46].
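The structure of an integrated parent–metabolite model of the kind described above can be sketched as a small system of differential equations. The Python example below is a generic illustration only: it assumes a depot with first-order absorption, a one-compartment parent, and a one-compartment metabolite formed from a fixed fraction of parent elimination, with the metabolite volume set equal to the parent volume. All parameter names and values are hypothetical and are not the estimates from the cited studies, and the fixed metabolic fraction is a simplification of the published parameterization.

```python
import math


def derivatives(state, ka, cl_p, v_p, fm, cl_m, v_m):
    """Rates of change of drug amounts in depot, parent, and metabolite compartments."""
    depot, parent, metab = state
    k_p = cl_p / v_p   # parent elimination rate constant
    k_m = cl_m / v_m   # metabolite elimination rate constant
    return (
        -ka * depot,
        ka * depot - k_p * parent,
        fm * k_p * parent - k_m * metab,
    )


def rk4_step(state, dt, **params):
    """Advance the system by one step with the classical Runge-Kutta method."""
    k1 = derivatives(state, **params)
    k2 = derivatives(tuple(s + 0.5 * dt * k for s, k in zip(state, k1)), **params)
    k3 = derivatives(tuple(s + 0.5 * dt * k for s, k in zip(state, k2)), **params)
    k4 = derivatives(tuple(s + dt * k for s, k in zip(state, k3)), **params)
    return tuple(
        s + dt / 6.0 * (a + 2.0 * b + 2.0 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def simulate(dose, t_end, dt=0.01, **params):
    """Integrate from an oral dose placed in the depot at time zero."""
    state = (dose, 0.0, 0.0)
    for _ in range(int(round(t_end / dt))):
        state = rk4_step(state, dt, **params)
    return state


# Hypothetical parameters; v_m is set equal to v_p for identifiability
params = dict(ka=1.0, cl_p=10.0, v_p=100.0, fm=0.5, cl_m=5.0, v_m=100.0)
depot, parent, metab = simulate(dose=100.0, t_end=2.0, **params)
print(round(parent / params["v_p"], 3), round(metab / params["v_m"], 3))
```

Because only the ratio of formed metabolite amount to metabolite volume is observed as a concentration, the metabolic fraction and the metabolite volume cannot both be estimated from plasma data alone, which is why one of them is fixed or constrained.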

Thyssen et al. studied the population pharmacokinetics of oral risperidone in children, adolescents, and adults [47]. The modeling was conducted using a pooled dataset of plasma concentration samples from 304 pediatric and 476 adult subjects. Separate models were developed for risperidone and for the active antipsychotic fraction (calculated as the sum of risperidone and 9-hydroxyrisperidone concentrations at each sample collection time point). The structural model consisted of two compartments with first-order absorption, with body weight added as a covariate on clearance and volume parameters based on allometric principles. Testing for statistical significance by LRT was not performed. In contrast to the study conducted by Feng et al. [43], mixture modeling was employed to describe the oral bioavailability of risperidone, with two subpopulations representing PMs and EMs. Age and creatinine clearance were identified as significant covariates affecting risperidone clearance. The probability of being an EM or a PM was estimated at 19 % and 81 %, respectively. Simulations from the model showed that risperidone and active antipsychotic fraction concentrations were similar in children, adolescents, and adults.

Like risperidone, clozapine is an atypical antipsychotic; it is used in the treatment of refractory schizophrenia. After oral administration, clozapine is extensively metabolized by CYP1A2 to form the pharmacologically active metabolite, norclozapine. Ismail et al. characterized the population pharmacokinetics of clozapine and norclozapine in an integrated model [48]. Data for this investigation were collected retrospectively and fit to a final model that included one compartment for the parent compound and one compartment for the metabolite. The volume of the metabolite compartment was fixed to twice that of the parent compartment to avoid the identifiability issue. The fraction of clozapine converted to norclozapine was estimated separately for tablet and suspension formulations and found to be 0.015 and 0.4, respectively. Age and gender were significant covariates affecting the clearance of norclozapine. Different absorption rate constants were estimated for the different formulations, with the suspension formulation displaying more rapid absorption than the tablet formulation [48].

3 Summary and Conclusion

Population pharmacokinetic modeling provides valuable tools for studying the pharmacokinetics of drugs in a real world patient population. Model development is typically performed in a stepwise manner in which a hierarchical model is built that contains both structural and statistical components. Population pharmacokinetic modeling involves an understanding and mastery of several key disciplines, including math, statistics, pharmacology, and pharmacokinetics. Expertise in all of these disciplines must be carefully applied to concentration versus time data to synthesize appropriate population pharmacokinetic models that can be used to optimize drug therapy.

References

Aarons L (1991) Population pharmacokinetics: theory and practice. Br J Clin Pharmacol 32:669–670

Ette EI, Williams PJ, Ahmad A (2007) In: Williams PJ, Ette EI (eds) Pharmacometrics: the science of quantitative pharmacology, John Wiley & Sons Inc, Hoboken, pp 265–285

Sheiner LB, Beal SL (1980) Evaluation of methods for estimating population pharmacokinetics parameters. I. Michaelis-Menten model: routine clinical pharmacokinetic data. J Pharmacokinet Biopharm 8:553–571

Bonate PL (2011) Pharmacokinetic and pharmacodynamic modeling and simulation, 2nd edn. Springer Science+Business Media, LLC, New York, NY, USA

Davidian M, Giltinan DM (1995) Nonlinear models for repeated measurement data (Chapman & Hall/CRC monographs on statistics & applied probability). Chapman & Hall, London

Sheiner LB (1997) Learning versus confirming in clinical drug development. Clin Pharmacol Ther 61:275–291

Barrett JS, Fossler MJ, Cadieu KD et al (2008) Pharmacometrics: a multidisciplinary field to facilitate critical thinking in drug development and translational research settings. J Clin Pharmacol 48:632–649

Lalonde RL, Kowalski KG, Hutmacher MM et al (2007) Model-based drug development. Clin Pharmacol Ther 82:21–32

Ross S (2014) A first course in probability, 9th edn. Pearson Education Limited, Harlow, England

Sheiner LB (1984) The population approach to pharmacokinetic data analysis: rationale and standard data analysis methods. Drug Metab Rev 15:153–171

Sheiner LB, Beal SL (1981) Evaluation of methods for estimating population pharmacokinetic parameters. II. Biexponential model and experimental pharmacokinetic data. J Pharmacokinet Biopharm 9:635–651

Sheiner LB, Beal SL (1983) Evaluation of methods for estimating population pharmacokinetic parameters. III. Monoexponential model: routine clinical pharmacokinetic data. J Pharmacokinet Biopharm 11:303–319

The US FDA (1999) www.fda.gov. [Online]. http://www.fda.gov/Drugs/GuidanceComplianceRegulatoryInformation/Guidances/ucm064982.htm

Mould D, Upton R (2012) Basic concepts in population modeling, simulation, and model-based drug development. CPT Pharmacometrics Syst Pharmacol 1:e6

Savic RM, Karlsson MO (2009) Importance of shrinkage in empirical bayes estimates for diagnostics: problems and solutions. AAPS J 11:558–569

Florian JA, Tornøe CW, Brundage R et al (2011) Population pharmacokinetic and concentration—QTc models for moxifloxacin: pooled analysis of 20 thorough QT studies. J Clin Pharmacol 51:1152–1162

Gibiansky L, Gibiansky E, Bauer R (2012) Comparison of Nonmem 7.2 estimation methods and parallel processing efficiency on a target-mediated drug disposition model. J Pharmacokinet Pharmacodyn 39:17–35

Beal SL, Sheiner LB (1992) NONMEM users guide- part VII. Conditional estimation methods. University of California, San Francisco

Bauer RJ (2013) NONMEM 7 technical guide. ICON Development Solutions, Ellicott City, Maryland

Wang Y (2007) Derivation of various NONMEM estimation methods. J Pharmacokinet Pharmacodyn 34:575–593

Bertrand J, Laffont CM, Mentré F et al (2011) Development of a complex parent-metabolite joint population pharmacokinetic model. AAPS J 13:390–404

Anderson BJ, McKee D, Holford NH (1997) Size, myths and the clinical pharmacokinetics of analgesia in paediatric patients. Clin Pharmacokinet 33:313–327

Holford NH (1996) A size standard for pharmacokinetics. Clin Pharmacokinet 30:329–332

Mandema JW, Verotta D, Sheiner LB (1992) Building population pharmacokinetic–pharmacodynamic models. I. Models for covariate effects. J Pharmacokinet Biopharm 20:511–528

Kowalski KG, Hutmacher MM (2001) Efficient screening of covariates in population models using Wald’s approximation to the likelihood ratio test. J Pharmacokinet Pharmacodyn 28:253–275

Wählby U, Jonsson EN, Karlsson MO (2002) Comparison of stepwise covariate model building strategies in population pharmacokinetic-pharmacodynamic analysis. AAPS PharmSci 4:E27

Gastonguay M (2004) A full model estimation approach for covariate effects: inference. AAPS meeting abstract W4354

Gastonguay M (2011) Full covariate models as an alternative to methods relying on statistical significance for inferences about covariate effects: a review of methodology and 42 case studies. PAGE Abstract

Lindbom L, Ribbing J, Jonsson EN (2004) Perl-speaks-NONMEM (PsN) – a Perl module for NONMEM related programming. Comput Methods Programs Biomed 75:85–94

Boeckmann AJ, Sheiner LB, Beal SL (2003) NONMEM user guide- part V, NONMEM Project Group, University of California, San Francisco.

Byon W, Smith MK, Chan P et al (2013) Establishing best practices and guidance in population modeling: an experience with an internal population pharmacokinetic analysis guidance. CPT Pharmacometrics Syst Pharmacol 2:e51

Frame B (2007) Mixture modeling with NONMEM V. Pharmacometrics: the science of quantitative pharmacology. Science+Business Media, LLC, New York, NY, USA. pp 723–757

Carlsson KC, Savic RM, Hooker AB et al (2009) Modeling subpopulations with the $MIXTURE subroutine in NONMEM: finding the individual probability of belonging to a subpopulation for the use in model analysis and improved decision making. AAPS J 11:148–154

Jonsson EN, Karlsson MO (1999) Xpose – an S-PLUS based population pharmacokinetic/pharmacodynamic model building aid for NONMEM. Comput Methods Programs Biomed 58:51–64

R Core Team (2014) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. http://www.R-project.org/

Hooker AC, Staatz CE, Karlsson MO (2007) Conditional weighted residuals (CWRES): a model diagnostic for the FOCE method. Pharm Res 24:2187–2197

Comets E, Brendel K, Mentré F (2008) Computing normalised prediction distribution errors to evaluate nonlinear mixed-effect models: the npde add-on package for R. Comput Methods Programs Biomed 90:154–166

Jonsson EN, Hooker A. [Online]. xpose.sourceforge.net/bestiarium_v1.0.pdf

Karlsson MO, Holford NH. (2008) A tutorial on visual predictive checks. PAGE 17 abstract: 1434.

Wang DD, Zhang S (2012) Standardized visual predictive check versus visual predictive check for model evaluation. J Clin Pharmacol 52:39–54

Bergstrand M, Hooker AC, Wallin JE, Karlsson MO (2011) Prediction-corrected visual predictive checks for diagnosing nonlinear mixed-effects models. AAPS J 13:143–151

Colby E, Bair E (2013) Cross-validation for nonlinear mixed effects models. J Pharmacokinet Pharmacodyn 40:243–252

Feng Y, Pollock BG, Coley K et al (2008) Population pharmacokinetic analysis for risperidone using highly sparse sampling measurements from the CATIE study. Br J Clin Pharmacol 66:629–639

Leysen JE, Gommeren W, Eens A et al (1988) Biochemical profile of risperidone, a new antipsychotic. J Pharmacol Exp Ther 247:661–670

Fang J, Bourin M, Baker GB (1999) Metabolism of risperidone to 9-hydroxyrisperidone by human cytochromes P450 2D6 and 3A4. Naunyn Schmiedebergs Arch Pharmacol 359:147–151

Sherwin CM, Saldaña SN, Bies RR et al (2012) Population pharmacokinetic modeling of risperidone and 9-hydroxyrisperidone to estimate CYP2D6 subpopulations in children and adolescents. Ther Drug Monit 34:535–544

Thyssen A, Vermeulen A, Fuseau E et al (2010) Population pharmacokinetics of oral risperidone in children, adolescents and adults with psychiatric disorders. Clin Pharmacokinet 49:465–478

Ismail Z, Wessels AM, Uchida H et al (2012) Age and sex impact clozapine plasma concentrations in inpatients and outpatients with schizophrenia. Am J Geriatr Psychiatry 20:53–60

Acknowledgment

The author would like to express his sincere gratitude and thanks to Mr. Raj Thatavarthi and Mr. Karthik Lingineni at GVK Biosciences Pvt., Ltd., for helping in procuring literature and plots.

© 2016 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Chaturvedula, A. (2016). Population Pharmacokinetics. In: Jann, M., Penzak, S., Cohen, L. (eds) Applied Clinical Pharmacokinetics and Pharmacodynamics of Psychopharmacological Agents. Adis, Cham. https://doi.org/10.1007/978-3-319-27883-4_4

DOI: https://doi.org/10.1007/978-3-319-27883-4_4

Publisher Name: Adis, Cham

Print ISBN: 978-3-319-27881-0

Online ISBN: 978-3-319-27883-4

eBook Packages: Medicine, Medicine (R0)