Abstract

In this article we present two very simple modifications to Differential Evolution (DE), one of the most competitive evolutionary algorithms of recent interest, to enhance its performance for the high-dimensional numerical functions while still preserving the simplicity of its algorithmic framework. Instead of resorting to complicated parameter adaptation schemes or incorporating additional local search methods, we present a simple strategy where the values of the scale factor (mutation step size) and crossover rate are switched in a uniformly random way between two extreme corners of their feasible ranges for different population members. Also each population member is mutated either by using the DE/rand/1 scheme (where the base vector to be perturbed is a randomly chosen member from the population) or by using the DE/best/1 scheme (where the base vector is the best member of the population). The population member is subjected to that mutation strategy which was responsible for the last successful update at the same population index under consideration. Our experiments based on the benchmark functions proposed for the competitions on large-scale global optimization with bound constraints held under the IEEE CEC (Congress on Evolutionary Computation) 2008 and 2010 competitions indicate that the basic DE algorithm with these simple modifications can indeed achieve very competitive results against the currently best known algorithms.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Over the past few decades, several families of evolutionary computing algorithms have been proposed for solving bound-constrained global optimization problems. Performances of these algorithms remain considerably good for problems with moderate number of decision variables or dimensions. However, most of them face difficulties in locating the global optimum with sufficient accuracy and without consuming too much Function Evaluations (FEs) as the number of dimensions of the search space increases beyond 100 or so. This is not surprising and is primarily caused by the exponential increase of the search volume with dimensions. Consider placing 100 points onto a real interval, say [0,1]. To obtain a similarly dense coverage, in terms of distance between adjacent points, the 10-dimensional space \( \left[ {0,1} \right]^{10} \) would require 10010 = 1020 points. The previously mentioned 100 points now appear as isolated points in a vast empty space. Usually the distance measures break down in higher dimensionalities and a search strategy that is valuable in small dimensions might be useless in large or even moderate dimensional search spaces.

Many real world problems demand optimization of a large number of variables. A few typical examples of such problems are shape optimization [1, 2], high-dimensional waveform inversion [3], and large scale economic load dispatch (involving 140 units or more) [4]. Recently researchers have been paying attention to the issue of designing scalable nature-inspired optimization techniques for optimizing very high dimensional functions. The ongoing interest of the scientific community is also evident from participations in the competitions on large scale single objective global optimization with bound constraints held under the IEEE CEC (Congress on Evolutionary Computation) 2008 and 2010 [5, 6].

The evolutionary methods for high-dimensional global optimization problems can be roughly categorized into three classes: the cooperative co-evolutionary methods, the micro Evolutionary Algorithms (EAs) and the Local Search (LS) based methods. Cooperative Co-Evolutionary Algorithms (CCEAs) [7–11] are well-known for solving high dimensional optimization problems and they result from an automatic divide and conquer approach. Recently some promising Cooperative Co-evolutionary (CC) algorithms were proposed like the CC versions of the Particle Swarm Optimization (CCPSO and CCPSO2) [10] and CC with Differential Grouping [11]. Micro–EAs (see for example [12–15]) are instances of typical EAs characterized by small population size and often simple fitness functions. Different forms of Memetic Algorithms (MAs) [16–19] developed by combining an LS method with a global evolutionary optimizer have been frequently applied to solve large scale function optimization problems.

Differential Evolution (DE) [20, 21] currently stands out as a very competitive evolutionary optimizer for continuous search spaces. Several attempts have been made to improve the performance of DE for moderate to high dimensional function optimization problems. Some noted DE-variants of current interest involve success-history based parameter adaptation strategies (like SaDE [22], JADE [23]), new mutation and crossover strategies (like Pro-DE [24], MDE-pBX [25]), and combining various offspring generation strategies (CoDE [26], EPSDE [27] etc.). For high-dimensional problems (more than 500 dimensions) DE has been adopted by methods encompassing all the three algorithmic philosophies outlined above. Owing to its inherent simplicity, DE was used as the base optimizer in Yang et al.’s first work [9] on random grouping based CCEAs. Zamuda et al. [28] extended DE by log-normal self-adaptation of its control parameters and by using cooperative co-evolution as a dimensional decomposition mechanism. Parsopoulos developed a cooperative micro-DE [29] for large scale global optimization. The self-adaptive DE was hybridized with MTS for large scale optimization by Zhao et al. [30]. Some other approaches of improving DE for high-dimensional function optimization can be found in [31–34].

We can see that in order to cope with the growing complexity of the problems to be solved, DE has been subjected to several modifications. However, despite the reported performance improvements, the improved DE algorithms are very often lacking one very important thing, that is the simplicity of the DE framework – the very reason why DE was and is loved by the practitioners of evolutionary computation. The present work is motivated by the question that can we improve DE for very high dimensional search spaces by simple parameter control strategies and by combining the basic ingredients of DE without any additional computation overheads (likely to be caused by external achieves, proximity and rank based parent selection schemes, additional local search schemes, keeping the long records of successful individuals etc.).

In this paper we present a simple DE scheme where the two crucial control-parameters of DE, namely the scale factor (equivalent to the mutation step size) F and the crossover rate Cr are switched between their respective limiting values in a uniformly random manner for each offspring generation process. Also each population member is mutated using either the DE/best/1 strategy or the DE/rand/1 strategy. The difference between these two strategies lies in the selection of the base vector to be perturbed. In case of DE/best/1, the base vector that has to be perturbed with the scaled difference of any two distinct population members is the best vector in the population yielding greatest fitness (i.e. smallest objective function value for a minimization problem). On the other hand, for the DE/rand/1 scheme, the base vector is a randomly chosen member from the current population. Each individual undergoes either of the two possible mutation strategies based on which strategy generated a successful offspring (which replaced the parent during selection) last time for the same population index. Thus, the choice of the mutation strategy depends on a unit length success memory of the record of just the last successful update. The proposed algorithm requires no tunable control parameter and is very easy to implement.

Switching of the scale factor between two extreme values (here 0.5 and 2) provides scopes for coarse search of large regions as well as refined search of smaller basins of attraction. Similarly by switching Cr values between 0 and 1, a balance between coordinate-wise search and generation of rotationally invariant search moves can be stricken. Our experiments indicate that the simple parameter switching coupled with the mixing of DE/best/1 and DE/rand/1 strategies can significantly improve the performance of DE on the high-dimensional function optimization problems. This conclusion is reached through a rigorous performance comparison of the proposed DE scheme with that of some of the most well-known large-scale optimizers including the winners of the two CEC competitions. While it is very hard (if not impossible) to analytically justify the suitability of the simple changes made to DE, we undertake some empirical studies based on the population spread and diversity to highlight the effectiveness of each of the modifications suggested.

2 The DE Algorithm

The initial generation of a standard DE algorithm consists of the four basic steps – initialization, mutation, recombination or crossover, and selection, of which, only last three steps are repeated into the subsequent DE generations. The generations continue till some termination criterion (such as exhaustion of maximum functional evaluations) is satisfied.

2.1 Initialization

DE begins search for the global optima in the \( D \) dimensional real parameter space by initiation of a random population of Np real-valued vectors whose components represent the D parameters of the optimization problem. A generalized notation used to identify the i th solution (real parameter vector) of the present generation G can be shown as:

Given the decision space bounds, \( \vec{X}_{\hbox{max} } = \left[ {x_{1,\hbox{max} } ,x_{2,\hbox{max} } , \ldots x_{D,\hbox{max} } } \right] \) and \( \vec{X}_{\hbox{min} } = \left[ {x_{1,\hbox{min} } ,x_{2,\hbox{min} } , \ldots x_{D,\hbox{min} } } \right] \), the j th dimension of i th individual can be initialized as:

where \( rand_{i,j} \) is a uniformly distributed random number lying in the range [0, 1] and it is instantiated anew for each ordered pair (i, j).

2.2 Mutation

In DE terminology, a population member (say i) of the current generation, known as the target vector, is chosen and is differentially mutated with the scaled difference vector(s) \( (\vec{X}_{{r_{1} ,G}} - \vec{X}_{{r_{2} ,G}}) \) to produce a mutant or donor vector. It is to be noted that the indices r 1 and r 2 are sampled from {1,2,…, Np} are different from the running index i and \( (r_{1} ,r_{2} \in \left\{ {1,2, \ldots Np} \right\}\backslash \{ i\} ) \). A scaling factor F, usually lying in the range [0.4, 2], scales the difference vector(s). Two commonly used DE mutation strategies are listed below:

where \( r_{1} ,r_{2} ,r_{3} \) are mutually exclusive indices that are stochastically selected from \( \left\{ { 1, 2, \ldots ,Np} \right\} \).

2.3 Crossover

In DE, the crossover step aims to combine the individual components of the parent and the mutant vector into a single offspring commonly known as trial vector \( \vec{U}_{i,G} = \left[ {u_{1,i,G} ,u_{2,i,G} , \ldots u_{D,i,G} } \right] \) DE primarily employs either of the two crossover strategies: exponential (two-point modulo) and binomial (uniform). Binomial crossover is preferred since it does away with the inherent representational bias in n-point crossover by simulating D random trials. Moreover a recent work [35] attributing to the sensitivity of crossover to population size has reported the exponential variant to be more prone as compared to its binomial counterpart. Owing to the aforesaid observations, here we employ binomial crossover to form the trial vector.

In order to implement the binomial crossover, the control parameter Crossover rate (Cr) is set to a fixed value lying in the range [0,1] and then D independent random numbers, between 0 and 1, are sampled uniformly and compared with Cr to decide which component is to be included in the trial vector. The method is outlined as:

where j r is a randomly chosen index from {1, 2, …, D} and it ensures that at least one component from the mutant vector is present in the offspring produced.

2.4 Selection

Finally, a selection process is performed through a one-to-one competition between the parent and the offspring to maintain a constant population size. The selection process can be described as:

where \( f(.) \) is the objective function to be minimized.

3 The Proposed Method

DE has 3 primary control parameters: the scale factor F, and the crossover rate Cr, and the population size Np. The performance of DE largely depends on F and Cr. Several efforts have been made in the past to control and adapt the value of these two parameters so that the algorithm may strike a balance between its explorative and exploitative behaviours on different fitness landscapes. Both of these parameters have their own allowable ranges. It is easy to see that Cr is similar to a probability value and hence it should lie in [0, 1]. Zaharie [36] derived a lower limit of F and her study revealed that if F be sufficiently small, the population can converge even in the absence of selection pressure. Ronkkonen et al. [37] stated that typically 0.4 < F < 0.95 with F = 0.9 can serve as a good first choice. They also opine that Cr should lie in (0, 0.2) when the function is separable, while in (0.9, 1) when the function’s variables are dependent. As is evident from [21] and the therein, numerous approaches have been proposed to improve the performance of DE by controlling or self-adapting these two control parameters and also by automating the choice of the appropriate offspring generation strategy. However, very often such methods may necessitate additional computational burdens, which, somewhat sacrifice the simplicity of DE. Thus, the question that naturally comes up is whether we can retain efficient search behaviour and adequate exploration-exploitation trade-off by doing something very simple? Can such easy modifications still result into a very competitive performance against the existing state-of-the-art? This article presents a humble contribution in this context.

The purpose of scaling factor F is to add weight to the difference vector and add it to base vector to produce mutant/donor vector. It is established in the study that F is strictly positive and greater than zero. A large value of \( F \) will support exploration, i.e. more of the feasible search volume can be covered. This property can be often desirable for solving high-dimensional optimization problems, since they possess a large search space. But, exploration is not helpful for converging and fine tuning of the solutions, necessary for detecting the optimum. A small value of \( F \) will serve the purpose of exploitation and support the convergence towards a solution.

In our proposal, for each population member, the F value is switched between 0.5 and 2 in a uniformly randomized way. Note that F = 2 is somewhat an unusual choice since this extreme value has not been reported for DE in commonly available papers. However, our experiments indicate that for the large scale problems this value can indeed enhance the performance than switching F between 0.5 and 1. When F is 2, the difference vector gets a higher importance. Consequently, the newly generated mutant point will be thrown relatively far from current base point, thereby enhancing the chances of venturing unexplored regions of the search space. When F takes a value of 0.5, the newly generated mutant point lies near by the base point as the difference vector gets very less weight. Thus it enhances the certainty of local neighborhood search. In Fig. 1 two possible distribution of the donor vectors have been shown around two mutant points generated by perturbing a base vector \( \vec{X}{}_{base} \) with two values of the scale factor, F = 0.5 and F = 2 on a 2D search space.

Similarly for each individual, the Cr value is switched between 0 and 1. Note that this means in our proposed DE variant can either the mutant/donor vector is directly accepted as the final offspring (for Cr = 1) or the final offspring differs from the target (parent) vector at a single index determined by j r (for Cr = 0). While the former situation corresponds to the generation of rotationally invariant points, the latter implies an axis parallel movement of the solution points.

Note that a plethora of DE variants has been published with different kinds of parametric (like sampling from a Cauchy or Gaussian distribution, see e.g. [22, 23]) and non-parametric (sampling from a uniform distribution like [31]) randomization in the tuning of F and Cr. However, such switching between only two extreme values has never been proposed earlier. In what follows we name the resulting DE variant as SWDE (Switching DE).

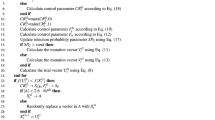

Each individual in SWDE can be mutated by any of the commonly used mutation strategies in DE. It is now well-known that DE/best/1 induces somewhat greedy search behaviour and hence can be recommended for unimodal functions. On the other hand, DE/rand/1 introduces more randomization (since different base vectors are perturbed to generate the mutant points for different individuals) and explorability and is, hence, suitable for multimodal functions. In our proposal we use a simple strategy for choosing any one of these two basic mutation schemes based on the success record of just the last successful update at the same population index. This means, at the first generation an individual is mutated either by DE/rand/1 or DE/best/1 chosen randomly. If the corresponding target individual is replaced by the trial (offspring), then the mutation scheme can be considered as a good choice, and will be used for that individual (of same index) in the next generation as well. If the trial is not selected for the individual, then the mutation strategy is unsuccessful, and in the next generation the other mutation scheme will be used for that individual.

We refer to this DE variant that combines the parameter switching scheme with a success-based selection of the mutation strategies as SWDE_Success (SWitching DE with Success-based selection of mutation scheme). Note that SWDE_Success does not require any control parameter to tune, except for the population size Np, which, however, is not really treated as a control parameter and is kept constant for most of the representative literature on DE. There is no need to fix any initial values for F and Cr, knowing only their feasible ranges would be fine. There is no parametric probability distribution whose parameters (like mean, variance, offset etc.) are required to be tuned.

Before moving on to the comparative study on standard benchmark suites, we would like to illustrate that DE with these simple modifications can indeed better preserve the population diversity, and thus can be helpful in retaining the useful information about promising search regions in a better way. Measurement of diversity level of the total population during the optimization work is another important aspect of empirical analysis, because maintaining diversity along the search process is another important aspect of large scale optimization. In proposed work “distance-to-average point” measurement for diversity of the population P G at generation G, as presented in [38], is used as follows:

where Np represents population size, L is the length of the longest diagonal in the search space of dimension D and \( \bar{x}_{j} \) is the average value of j-th dimension of the vector. Variation of population diversity with respect to iteration as defined in (6) for the population is plotted in Fig. 2 for the Rastrigin’s function in 50D. It is clear from the figure that the population of SWDE_Success never loses diversity prematurely. In this figure the red line depicts population diversity for SWDE_Success and blue one represents the same for standard DE.

In Fig. 3 we illustrate the distribution of population members of standard DE/best/1/bin (F = 0.8 and Cr = 0.8) and the proposed SWDE_Success on a 2D parameter space of the Rastrigin’s function. The figures present screenshots of the population at 1st, 5-th and 20-th iterations. Note that for the two algorithms, the iterations were started from the same initial population, so that the different spread of the evolving populations may be attributed to their internal search mechanisms only. It can be seen that the population members following the SWDE_Success scheme can capture the global optimum more efficiently while still preserving the population diversity.

4 Experiments and Results

A popular choice for evaluating the performance of the DE algorithm is to use the benchmark suite proposed for the IEEE CEC (Congress on Evolutionary Computation) competitions. These suites contain collection of functions of diverse nature, which can successfully validate the performance of an optimization algorithm in a variety of scenarios.

The CEC 2008 [5] and CEC 2010 [6] test suites are specially designed with large scale minimization problems (i.e. of dimensions D = 100, 500, and 1000), and thus, useful for testing our proposed DE variant (SWDE_Success). Following standard procedure, the mean and standard deviation of the error value is used to measure the performance of an algorithm. The error is calculated as the difference between the actual value of the global optimum and the obtained value of the optimum. Only for function F7 of CEC 2008, the absolute value of the obtained optimum is recorded and compared, because for that function, the globally optimal function value is unknown. The population size has been taken as 100 for SWDE_Success in all the cases. To evaluate the scalability of the algorithm in the worse condition, the comparison is rendered on 1000 and 2000 dimensional problems.

For all the CEC 2008 benchmark problems and for all algorithms compared, the maximum number of Function Evaluations (FEs) corresponding to each run was taken to be \( 5000 \times D \), where D denotes the dimensionality of the functions following [5]. Similarly for the CEC 2010 benchmarks, the maximum number of FEs corresponding to each run was fixed to \( 3000 \times D \) [6, 18]. The parametric settings for all the peer algorithms were kept similar to their respective literatures. For SWDE_Success, the population size was fixed to Np = 100 for all 1000D problems and Np = 150 for the 2000D problems. We find that this choice (which is standard and straight forward) provides consistently good performance on the used benchmarks, and little improvement takes place with increased execution time if we increase the population size any further.

A non-parametric statistical test called Wilcoxon’s rank sum test for independent samples [39] is conducted at the 5 % significance level in order to judge whether the results obtained with the best performing algorithm differ from the final results of rest of the competitors in a statistically significant way. In all result tables the statistical test results are summarized in the following way. If the final error yielded by an algorithm is statistically significantly different from that of the best performing algorithm on a particular function, then the mean error of the former is marked with a † symbol. If the difference of the error values found by one algorithm is not statistically significant, as compared to its best competitor, then the mean of this algorithm is marked with a ≈. The best performing algorithm in each case is marked with boldface.

In order to demonstrate how the proposed parameter switching scheme works harmoniously with the success-based mutation scheme, we begin with a comparative study among the proposed SWDE_Success, the standard DE/best/1/bin scheme with fixed F and Cr (F = 0.8, Cr = 0.9) and a SWDE that uses only DE/best/1 mutation scheme for all its population members. The obtained results are demonstrated in Table 1, which shows that SWDE provides much better results than DE/best/1 with fixed F and Cr values. Also it can be observed that SWDE_Success yields statistically better results as compared to both SWDE and DE/best/1 on all the 7 functions of the CEC 2008 test bed.

To compare the performance of SWDE_Success against the existing state-of-the-art, we consider the results of five other algorithms customised for large scale global optimization. Two of them are the variants of the Particle Swarm Optimisation (PSO) algorithm namely CCPSO2 [10] and EPUS-PSO (Efficient Population Utilization Strategy for Particle Swarm Optimization) [40], which use a variable grouping technique and an efficient population management scheme respectively. The third one is Sep-CMA-ES [41] a scalable variation of the popular CMA-ES optimization technique, which performs faster and better than the original algorithm, especially on separable functions. The fourth is MTS (Multiple Trajectory Search) [16], a hybrid local search method. The fifth one is MLCC (Multi-Level Cooperative Co-evolution) [42]. The results are listed Table 2, where SWDE_Success outperformed others, for all the functions except F6. For F6 CCPSO2 performed slightly better than SWDE_Success, however, result of the rank sum test indicates that this difference is not statistically significant. Thus, SWDE_Success is found to be more consistent on this benchmark suite. In terms of the average rank SWDE_Success is the clear winner, followed by CCPSO2 and MTS.

In Tables 3, 4 and 5 SWDE_Success results are compared with 11 other evolutionary optimizers including some recent DE-variants (DECC-ML, DECC-CG, DECC-DG, and DE/best/1/bin) on the CEC 2010 benchmarks. Out of the 20 high-dimensional functions SWDE_Success provided statistically significantly better results compared to all its peer algorithms on 14 functions, and ranked second in 3 functions. DECC-ML [43] performed best in three functions, namely F3, F11 and F16, jDELsgo [28] performed better on two functions F6 and F19, and MA-SW-Chains [18] performed better than others only in one case of F12. The rank sum test results indicate that on F3, the result of SWDE_Success is not statistically significantly different from the best result given by DECC_ML. Similarly for F6, the best result yielded by jDElsgo is statistically equivalent to that of SWDE_Success. For CEC 2010 functions also SWDE_Success holds the minimum average rank, the closest followers are jDELsgo, and MA-SW-Chains.

To further test the scalability of the proposed algorithm, three CEC 2008 functions (1 unimodal and 2 multimodals) of 2000 dimensions are used, as was also done in [10]. The performance is compared with two popular evolutionary large scale optimizers of diverse origins, CCPSO2 and Sep-CMA-ES [41]. The obtained results are summarized in Table 6. Sep-CMA-ES only performed better than SWDE_Success in the case of the separable function F1. However, according to the rank sum test, the difference between the final mean errors of sep-CMA-ES and SWDE_Success is not statistically meaningful. For the multimodal non-separable problems F3 and F7, SWDE_Success performed statistically better than both CCPSO2 and sep-CMA-ES. CCPSO2 took the second place for F7 and Sep-CMA-ES did the same for F3.

5 Conclusion

To address the problem of optimizing very high-dimensional numerical functions, this paper presents a new variant of DE, referred here as the SWDE_Success, which uses a simple switching scheme for the two key parameters of DE, the scale factor and the crossover rate. SWDE_Success also employs a success-based selection of either of the two kinds of mutation strategies. The algorithm uses two very common mutation strategies (the greedy DE/best/1 and the explorative DE/rand/1 schemes) and applies a simple scheme selection process, which only depends on the success of a mutation scheme in the previous iteration in terms of generating a successful offspring (one which could replace its parent during the selection).

Exploring a huge search volume (induced by the large number of variables) with a limited population of candidate solutions is challenging and it requires a judicious balance between the explorative and exploitative tendencies of an evolutionary algorithm. This requirement is nicely fulfilled by the random selection of the control parameter values from their extremities. Our results indicate that a combination of the high and unconventional value of F (= 2) with the low value (= 0.5) can be indeed very useful for solving benchmark functions. In contrast to some of the most prominent approaches (like [22, 23, 26, 27]) that sample F values from the interval of (0.4, 1) and Cr values from (0, 1), our results indicate that most of the useful information about F and Cr values can remain attached to the boundaries of their feasible regions. This point requires further analytical and experimental investigations in future.

The future works may also include a detailed study on the dynamics and search procedure of SWDE_Success, alongside an explanation of its success. Also the parameter switching strategy may be further investigated in other optimization scenarios like for moderate dimensional problems, for multi-objective, constrained and dynamic optimization problems.

References

Foli, K., Okabe, T., Olhofer, M., Jin, Y., Sendhoff, B.: Optimization of micro heat exchanger: CFD, analytical results and multiobjective evolutionary algorithms. Int. J. Heat Mass Transf. 49(5–6), 1090–1099 (2006)

Sonoda, T., Yamaguchi, Y., Arima, T., Olhofer, M., Sendhoff, B., Schreiber, H.A.: Advanced high turning compressor airfoils for low Reynolds number condition, part I: Design and optimization. J. Turbomach. 126(3), 350–359 (2004)

Wang, C., Gao, J.: High-dimensional waveform inversion with cooperative coevolutionary differential evolution algorithm. IEEE Geosci. Remote Sens. Lett. 9(2), 297–301 (2012)

Das, S., Suganthan, P.N.: Problem definitions and evaluation criteria for CEC 2011 competition on testing evolutionary algorithms on real world optimization problems. Technical Report, Jadavpur University, India and Nanyang Technological University, Singapore (2010)

Tang, K., Yao, X., Suganthan, P., MacNish, C., Chen, Y., Chen, C., Yang, Z.: Benchmark functions for the CEC’2008 special session and competition on large scale global optimization. In: Nature Inspired Computat. Applicat. Lab., Univ. Sci. Technol. China, Hefei, China, Tech. Rep. http://nical.ustc.edu.cn/cec08ss.php (2007)

Tang, K., Li, X., Suganthan, P., Yang, Z., Weise, T.: Benchmark functions for the CEC’2010 special session and competition on large scale global optimization. In: Nature Inspired Computat. Applicat. Lab., Univ. Sci. Technol. China, Hefei, China, Tech. Rep. http://nical.ustc.edu.cn/cec10ss.php (2009)

Potter, M., De Jong, K.: Cooperative coevolution: an architecture for evolving coadapted subcomponents. Evol. Comput. 8(1), 1–29 (2000)

Ray, T., Yao, X.: A cooperative coevolutionary algorithm with correlation based adaptive variable partitioning. In: Proceedings of the IEEE CEC, pp. 983–999, May 2009

Yang, Z., Tang, K., Yao, X.: Large scale evolutionary optimization using cooperative coevolution. Inf. Sci. 178(15), 2986–2999 (2008)

Li, X., Yao, X.: Cooperatively coevolving particle swarms for large scale optimization. IEEE Trans. Evol. Comput. 16(2), 210–224 (2011)

Omidvar, M.N., Li, X., Mei, Y., Yao, X.: Cooperative co-evolution with differential grouping for large scale optimization. IEEE Trans. Evol. Comput. 18(3), 378–393 (2013)

Krishnakumar, K.: Micro-genetic algorithms for stationary and non-stationary function optimization, SPIE 1196. Intell. Control Adapt. Syst. (1989). doi:10.1117/12.969927

Huang, T., Mohan, A.S.: Micro–particle swarm optimizer for solving high dimensional optimization problems. Appl. Math. Comput. 181(2), 1148–1154 (2006)

Dasgupta, S., Biswas, A., Das, S., Panigrahi, B.K., Abraham, A.: A micro-bacterial foraging algorithm for high-dimensional optimization. In: IEEE Congress on Evolutionary Computation (CEC 2009), pp. 785–792, Tondheim, Norway, May 2009

Rajasekhar, A., Das, S., Das, S.: μABC: a micro artificial bee colony algorithm for large scale global optimization. In: Soule, T. (ed.) Proceedings of the 14th Annual Conference Companion on Genetic and Evolutionary Computation (GECCO ‘12), pp. 1399–1400, ACM, New York, NY, USA. doi:10.1145/2330784.2330951. http://doi.acm.org/10.1145/2330784.2330951

Tseng, L.Y., Chen, C.: Multiple trajectory search for large scale global optimization. In: IEEE Congress on Evolutionary Computation (CEC 2008), pp. 3052–3059, Hong Kong, June 2008

Zhao, S.Z., Suganthan, P.N., Das, S.: Self-adaptive differential evolution with multi-trajectory search for large scale optimization. Soft. Comput. 15, 2175–2185 (2011)

Molina, D., Lozano, M., Herrera, F.: MA-SW-Chains: memetic algorithm based on local search chains for large scale continuous global optimization. In: IEEE Congress on Evolutionary Computation (CEC 2010), pp. 3153–3160, Barcelona, July, 2010

Molina, D., Lozano, M., Sánchez, A.M., Herrera, F.: Memetic algorithms based on local search chains for large scale continuous optimization problems: MA-SSW-Chains. Soft. Comput. 15, 2201–2220 (2011)

Storn, R., Price, K.: Differential evolution—a simple and efficient heuristic for global optimization over continuous spaces. J. Glob. Optim. 11(4), 341–359 (1997)

Das, S., Suganthan, P.N.: Differential evolution: a survey of the state-of-the-art. IEEE Trans. Evol. Comput. 15(1), 4–31 (2011)

Qin, A.K., Huang, V., Suganthan, P.: Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans. Evol. Comput. 13(2), 398–417 (2009)

Zhang, J., Sanderson, A.: JADE: adaptive differential evolution with optional external archive. IEEE Trans. Evol. Comput. 13(5), 945–958 (2009)

Epitropakis, M., Tasoulis, D., Pavlidis, N., Plagianakos, V., Vrahatis, M.: Enhancing differential evolution utilizing proximity based mutation operators. IEEE Trans. Evol. Comput. 15(1), 99–119 (2011)

Islam, S.M., Das, S., Ghosh, S., Roy, S., Suganthan, P.N.: An adaptive differential evolution algorithm with novel mutation and crossover strategies for global numerical optimization. IEEE Trans. Syst. Man Cybern. B Cybern. 42(2), 482–500 (2012)

Wang, Y., Cai, Z., Zhang, Q.: Differential evolution with composite trial vector generation strategies and control parameters. IEEE Trans. Evol. Comput. 15(1), 55–66 (2011)

Mallipeddi, R., Suganthan, P.N.: Differential evolution algorithm with ensemble of parameters and mutation and crossover strategies. In: Proc. Swarm Evol. Memet. Comput., Chennai, India, pp. 71–78 (2010)

Zamuda, A., Brest, J., Boˇskovi´c, B., Zumer, V.: Large scale global optimization using differential evolution with self-adaptation and cooperative co-evolution. In: IEEE Congress on Evolutionary Computation (CEC 2008), pp. 3718–3725, Hong Kong, June 2008

Parsopoulos, K.E.: Cooperative micro-differential evolution for high-dimensional problems. In: Genetic and Evolutionary Computation Conference 2009 (GECCO 2009), pp. 531–538, Montreal, Canada (2009)

Zhao, S.Z., Suganthan, P.N., Das, S.: Self-adaptive differential evolution with multi-trajectory search for large scale optimization. Soft. Comput. 15, 2175–2185 (2011)

Brest, J., Maučec, M.S.: Self-adaptive differential evolution algorithm using population size reduction and three strategies. Soft. Comput. 15(11), 2157–2174 (2011)

Wang, H., Wu, Z., Rahnamayan, S.: Enhanced opposition-based differential evolution for solving high-dimensional continuous optimization problems. Soft. Comput. 15(11), 2127–2140 (2011)

Weber, M., Neri, F., Tirronen, V.: Shuffle or update parallel differential evolution for large-scale optimization. Soft. Comput. 15(11), 2089–2107 (2011)

Wang, H., Wu, Z., Rahnamayan, S., Jiang, D.: Sequential DE enhanced by neighborhood search for large scale global optimization. In: IEEE Congress on Evolutionary Computation (CEC 2010), pp. 4056–4062, Barcelona, July, 2010

Zaharie, D.: Influence of crossover on the behavior of the differential evolution algorithm. Appl. Soft Comput. 9(3), 1126–1138 (2009)

Zaharie, D.: Critical values for the control parameters of differential evolution algorithms. In: Proc. 8th Int. Mendel Conf. Soft. Comput., pp. 62–67 (2002)

Ronkkonen, J., Kukkonen, S., Price, K.V.: Real parameter optimization with differential evolution. In: The 2005 IEEE Congress on Evolutionary Computation (CEC2005), vol. 1, pp. 506–513. IEEE Press (2005)

Hu, J., Zeng, J., Tan, Y.: A diversity-guided particle swarm optimizer for dynamic environments. In: Proceedings of Bio-Inspired Computational Intelligence Applivations, vol. 9, no. 3, pp. 239–247. Lecture Notes in Computer Science (2007)

Derrac, J., García, S., Molina, D., Herrera, F.: A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 1(1), 3–18 (2011)

Hsieh, S.T., Sun, T.Y., Liu, C.C., Tsai, S.J.: Efficient population utilization strategy for particle swarm optimizer. IEEE Trans. Syst. Man Cybern. B Cybern. 39(2), 444–456 (2009)

Ros, R., Hansen, N.: A simple modification in CMA-ES achieving linear time and space complexity. Lect. Notes Comput. Sci. 5199, 296–305 (2008)

Yang, Z., Tang, K., Yao, X.: Multilevel cooperative coevolution for large scale optimization. In: Proc. IEEE Congr. Evol. Comput., pp. 1663–1670, June 2008

Omidvar, M.N., Li, X., Yao, X.: Cooperative co-evolution with delta grouping for large scale non-separable function optimization. In: Proc. IEEE Congr. Evol. Comput., pp. 1762–1769, July 2010

Wang, Y., Huang, J., Dong, W.S., Yan, J.C., Tian, C.H., Li, M., Mo, W.T.: Two-stage based ensemble optimization framework for large-scale global optimization. Eur. J. Oper. Res. 228, 308–320 (2013)

Korošec, P., Šilc, J.: The differential ant-stigmergy algorithm for large scale real-parameter optimization. In: Ant Colony Optimization and Swarm Intelligence, Lecture Notes in Computer Science, vol. 5217, pp. 413–414, Springer, Berlin Heidelberg (2008)

Zhao, S., Liang, J., Suganthan, P.N., Tasgetiren, M.F.: Dynamic multi-swarm particle swarm optimizer with local search for large scale global optimization. In: Proceedings of IEEE Congress on Evolutionary Computation, pp. 3845–3852 (2008)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Das, S., Ghosh, A., Mullick, S.S. (2015). A Switched Parameter Differential Evolution for Large Scale Global Optimization – Simpler May Be Better. In: Matoušek, R. (eds) Mendel 2015. ICSC-MENDEL 2016. Advances in Intelligent Systems and Computing, vol 378. Springer, Cham. https://doi.org/10.1007/978-3-319-19824-8_9

Download citation

DOI: https://doi.org/10.1007/978-3-319-19824-8_9

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-19823-1

Online ISBN: 978-3-319-19824-8

eBook Packages: EngineeringEngineering (R0)