Abstract

In this chapter, we start by studying the problem of the optimal control, for a given fixed finite communication sequence. Then, we have formulated and solved the problem of the joint optimization of control and scheduling, for a given initial state. The numerical examples illustrating this method have pointed out that the obtained optimal schedule is extremely dependent on the chosen initial state x(0). These observations show that in the same way as the optimal control, the optimal scheduling depends on the current state of the system. However, from a computer science point of view, off-line scheduling has many advantages, essentially because it consumes a few computing resources and does not induce execution overheads. In order to obtain off-line schedules that are optimal from a certain point of view, it is necessary to use performance criteria depending on the intrinsic characteristics of the system, not on a particular initial state. This will be the objective of the next chapter. Nevertheless, this dependency between the optimal schedule and the plant state may be seen as promising way for improving the quality of control by means of plant state-based scheduling algorithms. The design of such algorithms will be studied in Chap. 6.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Optimal Integrated Control

- Resource-constrained Systems

- Communication Sequence

- Computer Science Point

- Mixed Integer Quadratic Programming (MIQP)

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

The general model of resource-constrained systems allows the on-line assignment of the sensors-to-controller and the controller-to-actuators scheduling vectors. This assignment may be based on a pre-computed off-line schedule or on an on-line scheduling algorithm. The problem of the optimal integrated control and off-line scheduling of the sensors-to-controller link is the dual problem of the optimal integrated control and off-line scheduling of the controller-to-actuators link, and may be solved in a similar way. However, the problem of the optimal integrated control and on-line scheduling of the sensors-to-controller link is different from the problem of the optimal integrated control and on-line scheduling of the controller-to-actuators link, and its formulation and solving are extremely dependant on the used technology (possibility of on-line arbitration based on the comparison of messages identifiers like in CAN networks for example). Furthermore, it is not clear whether an on-line scheduling algorithm of the sensors-to-controller link will be better (from a control performance point of view) than a predefined off-line scheduling algorithm, especially, because the sensors are physically distributed whereas the optimal on-line assignment of the sensors-to-controller link requires the knowledge of all sensors values, which may not be possible due to the communication constraints. To the best of the authors knowledge, this last question remains an open problem.

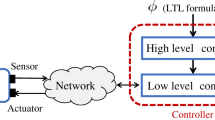

For that reason, in this chapter, as well as in the forthcoming Chaps. 5 and 6, we will assume that the state of the plant is available to the controller at each sampling period. This assumption will allow us to mainly focus on the problem of the optimal integrated control and scheduling of the controller-to-actuators link. Note that assuming that the state of the plant is available to the controller at each sampling period does not necessarily mean that we are restricted to a particular architecture, but rather that “state of the art methods”, such as an off-line scheduling, are deployed to obtain a satisfactory estimate of the state at the controller, using an adequate part of the bandwidth. Let 𝒮 be a resource-constrained system verifying this assumption. Furthermore, 𝒮 verifies p=n=b r , \(\sigma (k)=1_{n,1}, \forall k \in\mathbb{N}\) and 0<b w ≤m. To simplify the notation, let b=b w . In the model considered in the sequel, the resource-constrained system 𝒮 has two types of inputs: control inputs and scheduling inputs of the controller-to-actuators link. The fundamental problem that will be addressed is the problem of the joint optimization of control and scheduling. This problem may be viewed as the generalization of optimal control problems for linear sampled-data system. It naturally appears as having a hybrid character, since continuous aspects (system dynamics) as well as logical aspects (scheduling) interact.

Using the basic results of optimal control theory, we first describe the solution of the optimal control problem given a fixed communication sequence, over finite and infinite horizons. Then the problem of the optimal integrated control and scheduling of resource-constrained systems is formulated and solved. The formulation and solving of this problem are based on the existing theoretical tools from hybrid systems theory and especially the MLD framework [21], where this problem may be perfectly modeled. Finally, based on a numerical example, the solutions of this problem are studied.

1 Performance Index Definition

In order to introduce an appropriate measure of the “quality” of the control and scheduling, inspired by optimal control metrics [14], a quadratic cost function is associated to the system (3.2) (and implicitly to the resource-constrained system 𝒮).

where T f =NT s and Q c , R c and S c are positive definite matrices. These matrices define the design specifications of the ideal continuous-time controller. The sampled-data representation of the cost function J c (x c ,u c ,0,T f ) at the sampling period T s is

The expressions of Q 1, Q 2, Q 12 and Q 0 may be found in ([14], pp. 411–412). In the following, it is assumed that Q, Q 2 and Q 0 are positive definite matrices, where

Note that this representation does not involve any approximation and it is exact. Using this representation, the inter-sample behavior is taken into account.

2 Optimal Control over a Finite Horizon for a Fixed Communication Sequence

The problem of the optimal control, over a finite-time N, for a fixed admissible and maximal communication sequence δ N−1, may be formulated as follows:

Problem 4.1

Given an initial state x(0) and a final time N, find the optimal control sequence v N−1=(v(0),…,v(N−1)) that minimizes the cost function:

subject to

In order to solve this problem, we reconsider the state representation (3.12) of system 𝒮, which was established in Sect. 3.4 of Chap. 3. For a given maximal controller-to-actuators scheduling δ, system 𝒮 is described by the state equation (3.12a). The cost function J(x,u,0,N) may be rewritten in the form

where

and

Problem 4.1 is equivalent to the optimal control problem of a discrete linear time-varying system, given as follows:

Problem 4.2

Given an initial state x(0) and a final time N, find the optimal control sequence v N−1=(v(0),…,v(N−1)) that minimizes the cost function

subject to

Problem 4.2 is a classical optimal control problem of a discrete linear time-varying system. Different methods for its resolution were developed in control textbooks (see, for instance, [14]). Let:

and

The solution of this problem closely depends on the solution of an algebraic equation, involving the variable \(\tilde{S}(k)\) and described by

under the terminal condition

Equation (4.4) is the discrete algebraic Riccati equation associated to the Problem 4.2. Knowing that \(\tilde{Q}_{0}\) is semi-definite positive and \(\tilde{Q}_{2}(k)\) is definite positive (because D δ (k) is injective when δ is a maximal admissible communication sequence), than this equation admits a unique positive semi-definite solution. Consequently, Problem 4.2 admits a unique solution [14] defined by

with

3 Optimal Control over an Infinite Horizon for a Fixed Communication Sequence

Consider a T-periodic maximal communication sequence δ T−1 defined by

and verifying δ(k+T)=δ(k). Assume furthermore that δ∈𝒮c, where 𝒮c is the set of communication sequences that guarantee the reachability of system (3.12). The periodicity of the communication sequence induces the periodicity of the resource-constrained system 𝒮. As a result, matrices \(\tilde{A}(k)\), \(\tilde{B}(k)\) and \(\tilde{Q}(k)\) satisfy \(\tilde {A}(k+ T)=\tilde{A}(k)\), \(\tilde{B}(k+T)=\tilde{B}(k)\) and \(\tilde {Q}(k)= \tilde{Q}(k+T)\).

Let ι be a discrete time instant and H a positive integer, assume that N=ι+HT and consider the optimal control problem:

As illustrated in [35], a time invariant reformulation of the optimal control problem (4.8) may be obtained by using the lifting technique. The time invariant reformulation may be seen as a down sampled representation of system (3.12) with periodicity T, having an augmented input vector. In the following, the formulation of the time invariant representation is described.

Let Φ be the transition matrix associated with the state matrix \(\tilde{A}\) defined by:

Let Γ the matrix defined for s<l<s+T by

and for s=l by

Let

and

then for 0≤i≤T:

In particular, let

and

then the following relation is obtained:

Let Λ(i) the matrix defined for 0≤i<T by

then the cost function may be written

where

and \((\bar{Q}\iota)_{0}=\tilde{Q}_{0}\). Finally, the following optimal control problem is obtained

The corresponding discrete algebraic Riccati equation is given by:

with the terminal condition

where

The relationship between the solutions of Riccati equations (4.4) and (4.10), which are respectively, associated to the optimal control problems (4.8) and (4.9), is formalized by the following result.

Lemma 4.1

[35]

If \(\bar{S}_{\iota}(H)=\tilde{S}(\iota+NT)=\tilde{Q}_{0}\), then \(\bar {S}_{\iota}(q)=\tilde{S}(\iota+qT)\) for all q≤H.

This result follows from the fact that optimal control problems (4.8) and (4.9) are similar. By imposing the terminal condition \(\bar{S}_{\iota}(H)=\tilde{S}(\iota+NT)=\tilde{Q}_{0}\), called periodic generator, the optimal costs must be identical, which implies that the solutions of the Riccati equations (4.4) and (4.10) must be the same. As a result, when H→+∞, \(\bar{S}_{\iota}(q)\) converges to a constant solution \(\bar{S}_{\iota}\). Consequently, \(\tilde{S}(k)\) converges to a periodic solution, defined by \(\tilde{S}(k)=\bar{S}_{(k\ \mathrm{mod}\ T)}\). This periodic solution may be obtained by solving the algebraic Riccati equation associated with problem (4.9) when H→+∞ and for ι∈{1,…,T}. The optimal control gains may then be deduced from the relation (4.7) and are described by the sequence \((\tilde{K}(0),\ldots,\tilde{K}(T-1) )\).

This formulation will be of practical importance at the next chapters when the problems of the optimal integrated control and scheduling of resource-constrained systems will be tackled.

4 Finite-Time Optimal Integrated Control and Scheduling

In this paragraph, the problem of the finite-time optimal control and scheduling is formulated and translated into the mixed-integer quadratic programming (MIQP) formulation. We assume in the following that u(k)=0 and v(k)=0 for k<0, and that control commands u(k) and v(k) are subject to saturation constraints

where L i <0 and U i >0.

4.1 Problem Formulation

The finite-time optimal control and scheduling problem may be formalized as follows:

Problem 4.3

Given an initial state x(0) and a final time N, find the optimal control sequence v N−1=(v(0),…,v(N−1)) as well as the optimal communication sequence δ N−1=(δ(0),…,δ(N−1)) which minimizes the performance index:

subject to:

The problem of finding the optimal control sequence v N−1 for a given fixed communication sequence δ N−1 is a quadratic programming (QP) problem. The number of possible communication sequences is finite. The resolution of Problem 4.3 may be reduced to the exploration of all the feasible maximal communication sequences and the solving of a QP problem for each fixed communication sequence. However, in practice, the number of feasible communication sequences grows exponentially with N, which means that exhaustive search may not be applied to problems with large values of N.

The solution of the Problem 4.3 may be obtained by solving a simpler optimization problem, which may be seen as a constrained control problem, where the variables v N−1 are eliminated and the constraint (3.8) is replaced by (3.7). Let u N−1=(u(0),…,u(N−1)), this problem may be stated as follows:

Problem 4.4

Given an initial state x(0) and a final time N, find the optimal control sequence u N−1 as well as the optimal scheduling sequence δ N−1 that minimizes the performance index:

subject to

We observe that the constraints of optimal scheduling problem are composed of a set of linear equalities and inequalities as well as of the following logical formula:

In order to solve this problem, it is necessary to translate the logical formula (4.13) into linear inequalities. The connective “⟹” may be eliminated if (4.13) is rewritten in the following equivalent form

However, Eq. (4.14) contains terms which are the product of logical variables and continuous variables. Using the procedure described in [21], this product is translated into an equivalent conjunction of linear inequalities. For example, let

Then (4.14) may be rewritten in the equivalent form:

Note that the same procedure may be applied to

Let

and

then Problem 4.4 may be written in the form

where the expressions  , 𝒢, 𝒜 and

, 𝒢, 𝒜 and  are defined in the sequel.

are defined in the sequel.

Matrices 𝒜 and  include both the equality and the inequality constraints of the problem, and may be written in the form:

include both the equality and the inequality constraints of the problem, and may be written in the form:

It easy to see that the relation

is equivalent to

Matrices 𝒜eq and  describe equalities (3.4a), (4.14) and impose to the scheduling sequence of the controller-to-actuators link to be maximal. Matrix 𝒜eq is defined by

describe equalities (3.4a), (4.14) and impose to the scheduling sequence of the controller-to-actuators link to be maximal. Matrix 𝒜eq is defined by

where

-

SC is a N×mN matrix described by

$$\mathit{SC}= \left [ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1_{1,m} & 0_{1,m} & \cdots& 0_{1,m} \\ 0_{1,m} & 1_{1,m} & \cdots& 0_{1,m} \\ & & \ddots& \\ 0_{1,m} & 0_{1,m} & \cdots& 1_{1,m} \\ \end{array} \right ]. $$ -

ST is a n(N+1)×(n(N+1)+mN) matrix that is used to represent the constraint (3.4a):

$$\mathit{ST}= \left [ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c@{\quad }c@{\quad }c@{\quad }c@{\quad }c@{\quad }c} I_n & 0_{n,n} & 0_{n,n}& \cdots& 0_{n,n} & 0_{n,m} & 0_{n,m} & \cdots & 0_{n,m} \\ -A & I_n & 0_{n,n}& \cdots& 0_{n,n} & -B & 0_{n,m} & \cdots& 0_{n,m} \\ 0_{n,n} & -A & I_n & \cdots& 0_{n,n} & 0_{n,m} & -B & \cdots& 0_{n,m} \\ & & \ddots& \ddots& & & & \ddots& \\ 0_{n,n} & 0_{n,n} & & -A & I_n & 0_{n,m} & 0_{n,m} & \cdots& -B \end{array} \right ]. $$ -

UU is a mN×mN matrix described by

$$\mathit{UU}= \left [ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c@{\quad }c} I_m & 0_{m,m} & 0_{m,m} & \cdots& 0_{m,m} \\ -I_m & I_m & 0_{m,m} & \cdots& 0_{m,m} \\ 0_{m,m} & -I_m & I_m & \cdots& 0_{m,m} \\ & & \ddots & \ddots& \\ 0_{m,m} & 0_{m,m} & & -I_m & I_m \end{array} \right ]. $$

Matrix  is defined by

is defined by

Let U and L the vectors defined by

Matrices 𝒜in and  describe the constraints defining the variables ξ(k) and o(k) ((4.15) and (4.17)). Matrix 𝒜in is defined by

describe the constraints defining the variables ξ(k) and o(k) ((4.15) and (4.17)). Matrix 𝒜in is defined by

where

Next, the matrix  is defined by

is defined by

Finally, matrices  and 𝒢 are defined by

and 𝒢 are defined by

and respectively,

where

The Problem 4.3 is identical to Problem 4.4 augmented with the additional constraint

where the vector \(u^{f}(k) \in\mathbb{R}^{b}\) containing the b “free” elements of u(k) (i.e., the elements of u(k) whose indices i satisfy δ i (k)=1) is arranged according to the increasing order of their indices. As a consequence, the optimal solutions of Problem 4.3 may be deduced from the optimal solutions of Problem 4.4 using the mapping (3.9).

The problem (4.18) is a mixed-integer quadratic program. It may be solved using many efficient academic and commercial solvers. In this monograph, this problem was solved using the solver CPLEX, whose MIP solver is based on the branch and bound method.

4.2 The Branch and Bound Method

The branch and bound method is a general search method for finding optimal solutions of discrete and combinatorial optimization problems. It was introduced in 1960 by Land and Doig [145] for the solving of the traveling salesman problem. This method aims at exploring, in an intelligent way, the space of feasible solutions of the problem. That’s why it may be classified among the implicit enumeration methods. In the following, the principles of this algorithm will be described in the case of a minimization. In order to simplify our explanation, we will suppose that considered problem admits at least one optimal solution. Of course, the other cases may be easily taken into account.

4.2.1 General Concepts

As its name indicates it, this method is based on two complementary mechanisms: branching and bounding.

-

Branching makes it possible to decompose a given problem into subproblems (by adding additional constraints), such that the union of the feasible solutions of these subproblems forms a partition (in the worst case a covering) of the feasible solutions of the original problem. In this manner, the resolution of the original problem is reduced to the resolution of the subproblems obtained by its branching. Branching induces a hierarchical relation between the different subproblems. This relation may be described and represented using concepts from the graph theory. In fact, in the branch and bound method, branching is applied in a recursive way to the subproblems where it may be possible (at a given stage of the execution of the algorithm), to find an optimal solution of the initial problem. As a result, the subproblems obtained by branching may be seen as the child nodes of the problem to which branching was applied, which is called parent node. Thus, all these nodes form a rooted tree, whose root node represents the initial problem to solve. This tree is usually called search tree or decision tree.

-

Bounding consists in computing an upper and a lower bound of the optimal solution of a given node. The bounding stage allows the branch and bound to avoid exploring the nodes where it is possible to certify that they do not contain any optimal solution. In fact, if the upper bound of a subproblem A is larger than the lower bound of another subproblem B, then the optimal solution cannot lie in the feasible set of solutions of subproblem A. For that reason, it becomes useless to branch node A. Node A is then pruned.

A node is called solved if an optimal solution of the associated subproblem was obtained. This may occur, for example, when the constraints defining it (and which were added progressively along the different branching steps), reduce its set of feasible solutions to a singleton. In other situations, branching may make the subproblem sufficiently simple to be solved by polynomial algorithms. The solved nodes are the final nodes or leave nodes of the decision tree. The algorithm finishes when all the nodes were either solved or pruned.

Example 4.1

Figure 4.1 describes the search tree of problem 𝒫 defined by

The branch and bound algorithm may be parameterized using the following four rules:

-

branching rules, describing how to divide a given problem into subproblems,

-

bounding rules, defining how to compute the upper and lower bounds of a given subproblem,

-

selection rules, stating how to select the next problem to consider,

-

elimination rules, describing how to recognize the subproblems that do not contain optimal solutions and that should be eliminated.

4.2.2 Application to Mixed-Integer Programming

To the best of the authors’ knowledge, the application of the branch and bound method to solve mixed-integer nonlinear programs was proposed for the first time by Dakin [68]. The resolution of mixed-integer quadratic programs was studied by many authors, for example, [85, 86, 146]. In the paper by Fletcher and Leyffer [85], the branch and bound method was applied to solve mixed-integer quadratic programs, allowing to obtain better experimental results than the other methods.

In this paragraph, we consider programs in the form:

where f is a positive semi-definite function and I is a set of indices indexing the Boolean components of 𝒱. A basic branch and bound algorithm for solving program 𝒫 is given in the following listing (Algorithm 4.1).

In Algorithm 4.1, U represents the cost of the best feasible solution of problem 𝒫 at a given stage of the execution of the algorithm and L a lower bound of the optimal cost of 𝒫. The set  , used for the computation of L, represents the set of nodes that have been already evaluated (i.e., bounded) and that may contain the optimal solution. Problem 𝒫′ denotes the problem obtained from 𝒫 by relaxing the integrality constraints that are imposed on the variables indexed by I, that is, replacing the constraints

, used for the computation of L, represents the set of nodes that have been already evaluated (i.e., bounded) and that may contain the optimal solution. Problem 𝒫′ denotes the problem obtained from 𝒫 by relaxing the integrality constraints that are imposed on the variables indexed by I, that is, replacing the constraints

by the constraints

These variables are thus considered as continuous variables in [0,1]. Thus, 𝒫′ is a quadratic program, and may be solved efficiently. Its solving is the most important part of the bounding or evaluation phase. In fact, the optimal cost of 𝒫′ represents a lower bound of the optimal cost of 𝒫.

The algorithm begins by solving 𝒫′ and giving its solution x ∗(𝒫′). If this solution respects the integrality constraints, then it is also an optimal solution for 𝒫 and the algorithm ends. Otherwise, there exists at least one non Boolean variable 𝒱 j , j∈I in x ∗(𝒫′). The algorithm proceeds by branching problem 𝒫 into two subproblems

and

that are added to the list  . The procedure is reiterated by the selection of a subproblem 𝒬 from

. The procedure is reiterated by the selection of a subproblem 𝒬 from  , based on the predefined selection rules. It may be possible that the relaxed subproblem 𝒬′ does not have any feasible solution. In this situation, it is pruned from the list

, based on the predefined selection rules. It may be possible that the relaxed subproblem 𝒬′ does not have any feasible solution. In this situation, it is pruned from the list  without being branched. The algorithm finishes when the list

without being branched. The algorithm finishes when the list  becomes empty or when a suboptimal solution with a predefined absolute tolerance (defined by ε) is found.

becomes empty or when a suboptimal solution with a predefined absolute tolerance (defined by ε) is found.

4.3 An Illustrative Numerical Example

Consider the collection of three continuous-time linear time invariant (LTI) subsystems defined by

where the subsystems S (1) and S (3) are open-loop unstable in opposition to the subsystem S (2) which is open-loop stable. The global system S composed by S (1), S (2) and S (3) may be described by the state matrix A c and the input matrix B c defined by

and

Assume that the global system S is controlled by a discrete-time controller executed at the sampling period T s =2 ms. The control commands are sent to the actuators through a bus that can carry at most one control command every 2 ms (b=b w =1). Assume now that the design criteria of the optimal continuous time controller for each subsystem are defined by matrices \(Q_{c}^{(1)}=\operatorname {Diag}(100,10)\), \(R_{c}^{(1)}=1\), \(Q_{c}^{(2)}=\operatorname {Diag}(1000,10)\), \(R_{c}^{(2)}=1\), \(Q_{c}^{(3)}=\operatorname {Diag}(1000,1000,1,1)\) and \(R_{c}^{(3)}=1\) and the desired closed-loop specifications for the global system are described by the closed-loop weighting matrices:

and

where μ 1, μ 2 and μ 3 are the weighting coefficients. In this example, μ 1, μ 2 and μ 3 were chosen equal to the inverse of steady state performance index of each separate subsystem controlled through a bus having an infinite bandwidth (μ 1=2.9, μ 2=0.13 and μ 3=0.016).

Remark 4.1

The use of a resource-limited shared communication medium for the transmission of the control commands to the distributed actuators introduces a coupling between the three subsystems, which requires the weighting of relative importance of each subsystem using coefficients μ 1, μ 2 and μ 3. This contrasts with the optimal control problem without communication constraints, where these constants have no impact on the optimal control of the three independent subsystems.

The length of the optimal control and communication sequences is N=100. An optimal solution with an error bound of 1×10−5 was required. The global system is started from the initial state x(0)=[1 0 1 0 1 0 0 0]T. Its responses are depicted in Figs. 4.2 and 4.3. The optimal schedule is depicted in Fig. 4.4. In this schedule, δ i =1 means that the bus is dedicated to the transmission of control signal u i .

The first network slots are mainly dedicated to subsystem S (1) until it is stabilized. It may be observed that subsystem S (2), which is open-loop stable and whose response time is larger than S (1), needs only three time slots to be stabilized. After the stabilization of subsystems S (1) and S (2), the network resources are entirely dedicated to the stabilization of subsystem S (3). When the subsystem S (3) is close to the equilibrium state (from t=0.13 s), then its control signals changes are minor. Consequently, the scheduling has no significant impact on control since the control signals of the three subsystems are relatively constant explaining thus the shape of the scheduling diagram, after t=0.13 s. However, this optimal schedule is dependent on the initial conditions. If the initial condition x(0) is modified, then the optimal control and schedule would be different. It is clear that such an open-loop schedule, which is generated off-line, cannot be applied at runtime as static schedule. In fact, assume that subsystem S (1) is disturbed at t=0.024 s. Observing the schedule, no slots are allocated to the transmission of the messages of subsystem S (1) between t=0.024 s and t=0.128 s, which would induce performance degradations.

These observations show that in the same way as the optimal control, the optimal scheduling depends on the current state of the system. Consequently, fixed schedules may not be optimal if the system is started from another initial state or if it is disturbed at runtime.

5 Notes and Comments

In this chapter, we have started by studying the problem of optimal control for a given fixed finite communication sequence. Then, we have formulated and solved the problem of the joint optimization of control and scheduling, for a given initial state. The numerical examples illustrating this method have shown that the obtained optimal schedule depends on the chosen initial state x(0). In other words, similarly to the standard optimal control case, the optimal scheduling depends on the current state of the system. However, from a computer science point of view, off-line scheduling has many advantages, essentially because it consumes a few computing resources and does not induce execution overheads. In order to obtain off-line schedules that are optimal from a certain point of view, it is necessary to use performance criteria that depend on the intrinsic characteristics of the system, not on a particular initial state. This will be the objective of the next chapter.

Nevertheless, this dependency between the optimal schedule and the plant state may be seen as a promising way for improving the quality of control by means of plant state-based scheduling algorithms. The design of such algorithms will be studied in Chap. 6.

References

K.J. Åström, B. Wittenmark, Computer-Controlled Systems: Theory and Design (Prentice Hall, New York, 1997)

A. Bemporad, M. Morari, Control of systems integrating logic, dynamics, and constraints. Automatica 35(3), 407–427 (1999)

S. Bittanti, A.J. Laub, J.C. Willems (eds.), The Riccati Equation. The Periodic Riccati Equation (Springer, Berlin, 1991)

R.J. Dakin, A tree search algorithm for mixed integer programming problems. Comput. J. 8(3), 250–255 (1965)

R. Fletcher, S. Leyffer, Numerical experience with lower bounds for MIQP branch-and-bound. SIAM J. Optim. 8(2), 604–616 (1998)

O.E. Flippo, A.H.G. Rinnoy Kan, A note on benders decomposition in mixed-integer quadratic programming. Oper. Res. Lett. 9(2), 81–83 (1990)

A.H. Land, A.G. Doig, An automatic method for solving discrete programming problems. Econometrica 28(3), 497–520 (1960)

R. Lazimy, Improved algorithm for mixed-integer quadratic programs and a computational study. Math. Program. 32(1), 100–113 (1985)

Author information

Authors and Affiliations

Rights and permissions

Copyright information

© 2014 Springer International Publishing Switzerland

About this chapter

Cite this chapter

Çela, A., Ben Gaid, M., Li, XG., Niculescu, SI. (2014). Optimal Integrated Control and Scheduling of Resource-Constrained Systems. In: Optimal Design of Distributed Control and Embedded Systems. Communications and Control Engineering. Springer, Cham. https://doi.org/10.1007/978-3-319-02729-6_4

Download citation

DOI: https://doi.org/10.1007/978-3-319-02729-6_4

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-02728-9

Online ISBN: 978-3-319-02729-6

eBook Packages: EngineeringEngineering (R0)