Abstract

Diagnostic models (DMs) have been widely applied to binary response data. However, in the field of educational and psychological measurement, a wealth of ordinal data are collected to measure latent structures where the traditional binary attributes may not adequately describe the complex response patterns. Considering that, we propose an extension of the sparse latent class model (SLCM) with ordinal attributes, with the purpose of fully exploring the relationships between attributes and response patterns. Furthermore, we discuss the strict and generic identifiability conditions for the ordinal SLCMs. We apply the model to the Short Dark Triad data and revisit the underlying personality structure. Evidence supports that SLCMs have better model fit to this real data than the exploratory factor models. We also confirm the efficiency of a Gibbs algorithm in recovering the empirical item parameters via a Monte Carlo simulation study. This study discusses a way of constructing DMs with ordinal attributes which helps broaden its applicability to personality assessment.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

1 Introduction

Cognitive diagnostic assessments, aimed at providing fine-grained information about respondents’ mastery of latent attributes, have gained increasing research attention in recent decades. The considerable expansion of cognitive diagnostic models (CDMs) has heightened the need for an inclusive and comprehensive modeling approach, where the sparse latent class models (SLCM; Chen et al. 2020) have served this purpose to fit most existing CDMs in an exploratory fashion.

The SLCM was originally proposed with binary attributes, including the deterministic input, noisy, “and” gate model (DINA; De La Torre 2009; Junker & Sijtsma 2001); the deterministic input, noisy, “or” gate model (DINO; J. L. Templin & Henson, 2006); the reduced non-compensatory reparameterized unified model (NC-RUM; DiBello et al. 1995; Templin et al. 2010) and the compensatory-RUM model (C-RUM; Hagenaars 1993; Maris 1999); and the family of general diagnostic models (De La Torre 2011; Henson et al. 2009; von Davier 2005). With binary representations of latent attributes, examinees’ attribute patterns are composed of either “mastery” or “non-mastery.” However, binary attributes are sometimes not accurate enough to describe the level of mastery as respondents can theoretically possess a specific attribute to different extents. Bolt and Kim (2018) provided empirical evidence that attributes derived from the fraction subtraction test (Tatsuoka 1987) are oversimplified if defined as binary. In addition, previous research also found that allowing attributes to have multiple levels improved the model-data fit (Haberman et al. 2008; von Davier 2018). These justifications support that assuming multiple levels of attributes are sometimes more desirable than binary levels. Therefore, developing CDMs with polytomous attributes would maximize the understanding of response patterns in binary or even polytomous data.

Many existing CDMs have been developed to measure polytomous responses, such as the Ordered Category Attribute Coding DINA model (OCAC-DINA; Karelitz 2004), the reduced reparameterized unified models (R-RUM; Templin 2004), the log-linear cognitive diagnostic model (LCDM ; Templin & Bradshaw( 2013), the general diagnostic models (GDM; von Davier 2005), and the pG-DINA model (Chen & Culpepper 2020). Based on whether the interactions between attributes are considered, these models can be further specified as the main-effect cognitive diagnostic models (i.e., the OCAC-DINA, R-RUM, LCDM, and GDM) and the all-effect cognitive diagnostic models (i.e., the pG-DINA). The former involves only the main effects of the required attributes, whereas the latter involves both the main effects and interaction effects. With the between-attribute interaction effects being considered, we are able to discover all types of attribute relationships and how they can affect the observed responses. A fully saturated model is the most general parameterization of the joint attribute distribution, where all the main effects and interaction effects are taken into consideration. However, the challenge is, when the attributes or attribute levels increase, the item parameters increase exponentially. This risk of the overparameterization makes its application restricted to the confirmatory settings. The fact is for confirmatory models, accurate scoring requires the correct specification of item-attribute relationship. Otherwise, misspecification of the item structure could result in inaccurate classification. To this end, an exploratory model has been instrumental in promoting our understanding of the item-attribute structures when they are not prespecified.

With the intention of constructing an exploratory SLCM model, the key concern is to determine whether polytomous attributes are necessary and how many intermediate levels are appropriate. With data-defined polytomous attributes, the levels of attributes and their interpretations can be derived from the model-fitting process. With expert-defined polytomous attributes, the justifications of attribute levels can be provided by experts in the related areas, especially in the area of educational testing and psychopathology. In this study, we take on the first approach to explore the attribute dimensions and levels. Once an adequate model size is determined, we can move to the formal estimation of model parameters.

This chapter is organized in the following sections. The 2. A Sparse Latent Class Model with Polytomous Attributes section introduces the model parameterization and discusses the identifiability conditions for the model. The 3. Gibbs Sampling section provides a Gibbs algorithm for Markov chain Monte Carlo estimation with the potential to enforce the identifiability and monotonicity constraints. The 4. An Empirical Application section illustrates how well the model fits the Short Dark Triad (SD3) data compared to an exploratory item factor model and performs a Monte Carlo simulation study to assess the statistical properties and feasibility of our model. The 5. Discussion section provides a summary of this study and some potential directions for future research.

2 A Sparse Latent Class Model with Polytomous Attributes

2.1 Model Configurations

2.1.1 Unstructured Mixture Model

Consider a scale that consists of J items and K underlying attributes. We denote the vector of response probabilities for a latent class c on item j as \(\boldsymbol \theta _{cj}=\left (\theta _{cj0},\dots ,\theta _{cj,P-1}\right )'\), where the element θ cjp represents the probability of observing the response category p on item j by the latent class c. Note that the scale can be either dichotomous (P = 2) or polytomous (P > 2). Given the vector of response probability θ cj, the probability of observing an ordinal response y j is written as

where y j ∈{0, ⋯ , P − 1} and \(\mathcal {I}\) is an indicator function. To describe the observed response patterns \(\prod _{j=1}^{J}P_j\), we introduce a collection of K ordinal attributes with M levels. In this setting, a total of M K latent classes can be created. Let the latent class index be c ∈{0, …, M K − 1}; each latent class has a K-vector of latent ordinal attributes η ∈{0, …, M − 1}K that can be mapped to an integer index c via bijection η ′ v = c with v = (M K−1, M K−2, …, 1)′. Next, we assume the membership in class c follows a multinomial distribution with structural parameters \(\pi _c \in \{\pi _0,\dots ,\pi _{M^K-1}\}\) where π c denotes the probability of membership in latent class c and \(\sum _{c=0}^{M^K-1}\pi _c=1\). By integrating out the latent class variable c, we can write the likelihood of observing a random vector y = (y 1, …, y J) as

where \(\boldsymbol {\varTheta } \in \mathbb {R}^{J \times M^K \times P}\) denotes the response probability array and π denotes the structural parameter vector. In a sample of N respondents, we denote the ordinal responses for respondent i as y i, i = 1, …, N. The likelihood of observing this sample is

where Y denoted the N × J response matrix.

In addition, a cumulative link function Ψ(⋅) is proposed to define the ordinal responses (Culpepper 2019). Specifically, we compute the probability of response category p by taking the difference in two adjacent cumulative probabilities. The response probability for latent class c on item j is written as

where τ ∈{τ j,0, …, τ j,P}. We define τ j,0 = −∞, τ j,P = ∞ which result in \(\Psi \left (\tau _{j, 0}-\mu _{c j}\right )=0\), \(\Psi \left (\tau _{j, P}-\mu _{c j}\right )=1\). Here, μ cj is the latent class mean parameter discussed in the next section. Ψ(τ j,p − μ cj) denotes the cumulative probability of a response at level p or less.

The assumption of local independence implies the response distribution Y given B and π can be presented as

where y ij refers to individual i’s response on item j and α i denotes the attribute profile vector for individual i.

2.1.2 Structured Mixture Model

A challenge with unstructured mixture model is that, as K or M increases, the number of parameters per item M K grows exponentially. To reduce the number of parameters, we impose a specific structure on μ cj by representing μ cj as \(\mu _{cj}:=\boldsymbol {\alpha }_{c}^{\prime } \boldsymbol {\beta }_{j}\) where α c is attribute profile design vector and β j is an item parameter vector of the same length as α c. A saturated model is the most general exploratory model which includes all the main- and higher-order interaction terms of predictors. Within the saturated model framework, K attributes with M levels lead to a total number of M K predictors. We let \(\boldsymbol A = (\boldsymbol \alpha _0,\dots ,\boldsymbol \alpha _{M^K-1})\) be a M K × M K design matrix which comprises the attribute profile vector α for each latent class. Moreover, we let B = (β 1, …, β J) be a J × M K item coefficient matrix. In this way, since we assume a sparse pattern on B explained later, the number of effective parameters is greatly reduced.

We code the attribute profile η as the design vector α using a cumulative coding strategy. Specifically, for attribute k, we define

so that a k incorporates an intercept for the first element and main effects for the exceeding different attribute levels. Therefore, the attribute design vector for an arbitrary class can be written as

where \(\bigotimes \) is the Kronecker product sign which frames all possible cross-level interactions between K attributes. Below we illustrate how the coding system works with a specific example.

Table 21.1 displays the attribute profile matrix A for a saturated SLCM with K = 2 attributes and M = 4 levels per attribute, where the first column prints the latent class integer c and the second column prints the latent class label in the way that each digit represents to which level latent classes master the attributes. For instance, latent class “12” in the column name implies the possession of the first attribute to the first level, and the remaining columns in the table refer to the predictors which compose the attribute profile vector α as Eq. 21.8 describes. The design vector α contains intercept component “00”; main-effect components “01,” “02,” “03,” “10,” “20,” and “30”; and two-way interaction components “11,” “12,” “13,” “21,” “22,” “23,” “31,” “32,” and “33.” For instance, label “11” in the row name corresponds to the cross-attribute effect between the first level of attribute 1 and the first level of attribute 2. For latent class “12” ( η 1 = 1, η 2 = 2), component “11” (a 1 = 1, a 2 = 1) is active given the coding rule η 1 ≥ 1 and η 2 ≥ 1. Specifically, for latent class “12” (i.e., η 1 = 1, η 2 = 2), the active components refer to “00,” “01,” “02,” “10,” “11,” and “12” columns.

2.1.3 Model Identifiability

Model identifiability issues have received considerable attention in the study of CDMs. Traditionally, parameter constraints derived for model identifiability are often imposed on a design Q-matrix. The Q-matrix specifies item-attribute relationships by showing which attributes each item measures but not showing how attributes interact to affect response probabilities. Conversely, in SLCM, a sparsity matrix \(\boldsymbol \Delta _{J \times M^K}\) substitutes the role of the traditional Q-matrix but takes the inter-attribute relationships into consideration. The sparsity matrix \(\boldsymbol \Delta _{J \times M^K}\) depicts the underlying pattern of the item parameter matrix B, where J denotes the total amount of items and M K denotes the total amount of predictors. An element δ = 1 suggests its corresponding β is active, whereas an element δ = 0 suggests its corresponding β is 0.

Definition 2.1 presents a classic notion of likelihood identifiability where a different set of parameter values leads to different values of likelihood. To this end, a model must be identifiable to elicit consistent parameter estimates. Based on the work established by Culpepper (2019) for the SLCM with binary attributes and ordinal responses, and the work by Chen et al. (2020) with binary attributes and dichotomous responses, we propose identifiability conditions for the SLCM with ordinal responses and ordinal attributes.

Definition 2.1

A parameter set Ω(π, B) is identifiable, if there are two sets of parameters (π, B) and \((\boldsymbol {\bar \pi }, \bar {\boldsymbol {B}})\) such that \(\mathbb {P}(\boldsymbol \pi ,\boldsymbol {B}) = \mathbb {P}(\boldsymbol {\bar \pi },\bar {\boldsymbol {B}})\) implies \(\boldsymbol \pi = \boldsymbol {\bar \pi }\), \(\boldsymbol B = \bar {\boldsymbol {B}}\).

We define a parameter space Ω(π, B) for the latent class proportion parameter π and the item coefficient parameter B as

where \(\Omega _1(\boldsymbol \pi ) = \{\boldsymbol \pi \in \mathbb {R}^{M^K}:\sum _{c=0}^{M^K-1}\pi _c=1, \pi _c>0\}\) and \(\Omega _2(\boldsymbol B) = \{\boldsymbol B \in \mathbb {R}^{J \times M^K}\}\).

Conditioned on the attribute profile vector α, the joint distribution of Y is a product of multinomial distributions represented by a P J vector:

where \(\bigotimes \) refers to the Kronecker product and \(\boldsymbol \theta _{cj}=\left (\theta _{cj0},\dots ,\theta _{cj,P-1}\right )'\). Further, the marginal distribution of Y over the proportion parameter π is

2.1.4 Monotonicity Constraints

Imposing monotonicity constraints ensures that mastering any irrelevant skills to an item will not increase the endorsing probability. Xu (2017) and Xu and Shang (2018) proposed the monotonicity constraints as follows:

where c 0 represents the latent class that does not own any relevant attribute and \(\mu _{c_0j}\) denotes its latent class mean parameters. \(\mathbb {S}_{0}\) denotes a set of latent classes that own at least one but not all relevant attributes, and \(\mathbb {S}_{1}\) denotes a set of latent classes that own all the relevant attributes. Given \(\mu _{cj} = \boldsymbol \beta _j^{\prime }\boldsymbol \alpha _c\), we can derive a lower bound condition L cj for each item parameters β cj that if β cj is lower bounded, the constraints above are satisfied. The derivation details can be found in Chen et al. (2020).

2.1.5 Strict Identifiability

Theorem 2.2

The parameter space Ω(π, B) is strictly identifiable if conditions (S1) and (S2) are satisfied.

-

(S1)

When Δ matrix takes the form of \(\Delta =\left (\begin {array}{l} D_{1} \\ D_{2} \\ D^{\ast } \end {array}\right )\) after row swapping where D 1, D 2 \(\in \mathbb {D}_s\), \(\mathcal {D}_s{=}\left \{D \in \{0,1\}^{K \times M^{K}}: D{=}\left [\begin {array}{cccccccc} 0 & \boldsymbol 1_{M-1}^\prime & 0 & \ldots & 0 & \ldots &0 \\ 0 & 0 & \boldsymbol 1_{M-1}^\prime & \ldots & 0 & \ldots &0 \\ \vdots & 0 & 0 & \ddots & 0 & \ldots & \vdots \\ 0 & 0 & 0 & \ldots & \boldsymbol 1_{M-1}^\prime & \ldots & 0 \end {array}\right ]\right \}\).

Note: 1 M−1 is a vector of 1 of length M − 1 which represents the activeness of an attribute on all M − 1 levels.

-

(S2)

In D ∗, for any attribute k = 1, 2, …, K, there exists an item j > 2K, such that all the main-effect components regarding this attribute are active (δ j,k0, δ j,k1, …, δ j,k(M−1)) = 1.

The proof details are provided in Appendix “Strict Identifiability Proof”.

2.1.6 Generic Identifiability

In this section, we propose the generic identifiability condition in (G1) and (G2) in Theorem 2.4. The generic condition is less stringent than the strict conditions (S1) and (S2) given in 2.2. Generic identifiability allows part of the model parameters to be non-identifiable such that these exceptional values are of measure zero in the parameter space.

Definition 2.3

A parameter set ΩΔ(π, B) is generically identifiable if the Lebesgue measure of the unidentifiable space C Δ with respect to ΩΔ(π, B) is zero.

Theorem 2.4

The parameter space Ω Δ(π, B) is generically identifiable if condition (G1) and (G2) are satisfied.

-

(G1)

When Δ matrix takes the form of \(\Delta =\left (\begin {array}{l} D_{1} \\ D_{2} \\ D^{\ast } \end {array}\right )\) after row swapping where D 1, D 2 \(\in \mathbb {D}_g\), \(\mathbb {D}_g{=}\left \{D \in \{0,1\}^{K \times M^{K}}: D{=}\left [\begin {array}{cccccccc} * & \boldsymbol 1_{M-1}^\prime & * & \ldots & * & \ldots &* \\ * & * & \boldsymbol 1_{M-1}^\prime & \ldots & * & \ldots &* \\ \vdots & * & * & \ddots & * & \ldots & \vdots \\ * & * & * & \ldots & \boldsymbol 1_{M-1}^\prime & \ldots & * \end {array}\right ]\right \}\)

Note: 1 M−1 is a vector of 1 of length M − 1 which represents the activeness of a specific attribute on all levels.

-

(G2)

In D ∗, for any attribute k = 1, 2, …, K, there exists an item j > 2K, such that all the main-effect components regarding this attribute are active (δ j,k0, δ j,k1, …, δ j,k,M−1) = 1.

The proof details are provided in Appendix “Generic Identifiability Proof”.

3 Gibbs Sampling

Following the Bayesian model formulation displayed in Sect. 21.2.1, this section outlines an MCMC approach for the proposed SLCM models. First, we introduce a deterministic relationship between the observed ordinal response Y ij and a continuous augmented latent variable \(Y_{ij}^*\) as Eqs. 21.14 and 21.15 show. The augmented variable \(Y_{ij}^*\) is generated from a normal distribution conditioned on the latent class mean parameter \(\mu _{ij} = \boldsymbol \alpha _i^{\prime } \boldsymbol \beta _j\). If \(Y_{ij}^*\) falls into the range [τ jp, τ j,p+1), the random variable Y ij takes the value of p.

We consider a multinomial prior for latent attribute variable α i as α i ∼ Multinomial(π). The latent class structural probability π follows a conjugate Dirichlet distribution \(\boldsymbol \pi \sim \mbox{Dirichlet}\left (\boldsymbol d_0\right )\) with hyperparameter \(\boldsymbol d_0 = {\mathbf {1}}_{M^K}^{\prime }\).

In addition, we adopt a spike and slab prior for B as Culpepper (2019) described. For each single β parameter, we formulate the Bayesian model as follows:

where \((\sigma _{\beta }^2, w_{0}, w_{1})\) are user-specified hyperparameters and L jc refers to the lower bound for satisfying the monotonicity constraints mentioned in Sect. 21.2.1.4. Noted that the intercepts in Δ are always set to be active with δ j0 = 1.

As a binary variable, we sample δ jc from the following conditional distribution:

where \(A = (\boldsymbol \alpha _1, \dots , \boldsymbol \alpha _{M^K})\) refers to the attribute profile matrix, \(\boldsymbol y_j^*\) is the augmented responses vector, and β j(c) is the coefficient vector β j that discards the p-th element. Once δ jc is updated, we update β j(c) given the full conditional distribution:

Given Eqs. 21.16 and 21.17, the Bernoulli parameter \(\tilde {\omega }_{jc}\) is derived as

where A c refers to the c-th column in the design matrix A. Note the derivation details can be found in Chen et al. (2020). The full MCMC sampling process is summarized in Algorithm 1, whereas Algorithm 2 presents the detailed sampling steps of the parameter matrix B and Δ.

4 An Empirical Application

4.1 Short Dark Triad

In the past decade, a great interest has been directed to measure the dark pattern of behaviors, goals, and characters. The Dark Triad (DT; Paulhus & Williams 2002) is one of the most popularly studied personality constructs, which encompasses three substantive dimensions: Machiavellianism, narcissism, and psychopathy. However, different studies have made contrasting conclusions on the construct of the DT (Persson et al. 2019). For instance, Furnham et al. (2013) have argued that psychopathy sometimes subsumes Machiavellianism and narcissism inadvertently. Others have declared that Machiavellianism and psychopathy have the same core and should be deemed as the same measure (Garcia & Rosenberg 2016; Glenn & Sellbom 2015; McHoskey et al. 1998). Traditionally, assessment of the DT often requires distinct measures for each dimension. To simplify the process of data collection, a measure, namely, Short Dark Triad (SD3; Jones & Paulhus 2014), was created with 27 items selected in a 5-point Likert-type scale (1, “Strongly disagree”; 2,“Slightly disagree”; 3,“Neutral”; 4,“Slightly agree”; and 5, “Strongly agree”). The SD3 items are shown in Appendix “Short Dark Triad” .

In this study, we investigate the latent construct underlying the SD3 scale through exploratory SLCMs. The dataset are available on the Open Psychometrics website (https://openpsychometrics.org/tests/SD3/), where we select a random sample of N = 5000 observations. Original item affiliations to the three dimensions can be inferred from the item index in Appendix “Short Dark Triad” (“M” refers to “Machiavellianism”; “N” refers to “narcissism”; “P” refers to “psychopathy”).

4.2 Model Comparisons

Traditionally, exploratory factor analysis (EFA; Furnham et al. 2014) has been the most popular tool to excavate latent constructs underlying manifest variables in a self-reported questionnaire. Unlike EFA models which treat personality traits as continuous variables, the SLCM allows us to explore the potential for interpreting the personality trait as discrete variables. The purpose of this section is to (1) fit exploratory SLCM with different attributes (K = 2, 3, 4) and attribute levels (M = 2, 3, 4) and (2) compare the exploratory SLCM to EFA models with (K = 2, 3, 4) factors.

Using a Bayesian approach, we apply a tenfold cross-validation approach to estimate out-of-sample predictive accuracy using within-sample estimates. We choose the k-fold cross-validation approach due to its simplicity compared to the leave-one-out method. Considering that an increasing number of folds help reduce the bias term (Vehtari & Lampinen 2002) caused by data split, we partition the DT3 dataset (N = 5000) into ten subsets {y k∣k = 1, …, 10}. One fold is used as testing data (N = 500), and the remaining folds are used as training data (N = 4500). For fold k, the testing and training data are denoted as y k and y (−k), respectively. For each training data y (−k), we employ the algorithm discussed in Sect. 21.3 to obtain posterior draws of the exploratory SLCM and computed posterior means as the point estimates for B and Δ. Thresholds τ are fixed to be τ = {−∞, 0, 2, 4, ∞}. We run 10 Markov chain Monte Carlo (MCMC) chains, and for each MCMC chain, a total of 80, 000 iterations are generated. Specifically, within each chain, the first 20, 000 iterations are discarded as burn-in samples, and the left 60, 000 iterations are retained as posterior samples. Finally, one chain is chosen out of the ten MCMC chains as it generated the highest marginal likelihood. Note here the point estimates are computed by taking element-wise means for the item parameter matrix B and the structural parameter vector π over posterior distributions. With the posterior distributions estimated from the training data, we then evaluate model fit in the testing data using log point-wise predictive density (lpd) which is defined as

where Ω k = {B k, π k} represents the item parameters estimated from the training data y (−k). To compute lpd in practice, we evaluate the integration of Ω k using MCMC posterior draws, and the log point-wise predictive density for data points in the testing data y k is written as

where B k, s are the s th draws from the posterior distributions given the training data y (−k) and i is the index of individuals. The complete log predictive density can be calculated by summing all observations over the 10-folds as \(\widehat {\operatorname {lpd}} = \sum _{k=1}^{10} \widehat {\operatorname {lpd}}_k\). Furthermore, the marginal likelihood of response y i for the s th draws is written as

where the latent variable \(\alpha ^{k,s}_i\) is integrated out with the hyperparameter π. Since data y ij are independent response data conditioned on the model parameter β j and the attribute pattern α i, we have

For EFA models, we apply the function “MCMCordfactanal” in the R package MCMCpack (Martin et al. 2011) to perform Bayesian estimation for posterior inference. We use its default setting of 10, 000 burn-in and 20, 000 mcmc iterations, and for each training sample y (−k), we take 500 posterior draws to compute predictive accuracy in testing sample y k. Similar to SLCM, we calculate the lpd criterion for the EFA models wherein the integration in Eq. (21.22) is obtained via the function “hcubature” in the R package cubature (Narasimhan et al. 2020). A higher lpd value indicates a model with higher prediction accuracy. Note that, considering the variety of latent variables assumed by SLCM and EFA, we use the marginal likelihoods f m instead of the conditional likelihoods f c in the predictive likelihood function \(f_m\left (\boldsymbol y_i \mid \boldsymbol B^{k,s}, \boldsymbol \pi \right )\).

As seen in Table 21.2, inclusion of more factors leads to a superior fit of EFA models for the reason that more nuisance variance is considered. In the two-dimensional case of K = 2, the SLCM (\(\operatorname {lpd} \geq -183595.3\)) performs better than the EFA (\(\operatorname {lpd} = -186914.5\)) if the attribute is ordinal M ≥ 3. Furthermore, in the three-/four-dimensional case of K ≥ 3, the SLCM consistently outperform the EFA regardless of the choice of M. Overall, the lpd of SLCM shows a slight rise when the dimension K or attribute level M increases, suggesting that increasing either the dimension or attribute level could improve the model fit. At this point, the best fitting model is the SLCM with K = 2 attributes and M = 4 attribute levels (\(\operatorname {lpd} = -183{,}443.2\)).

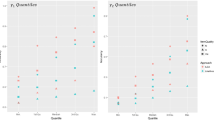

Given the information concerning the relative model fit, we estimated an exploratory SLCM with K = 2 and M = 4 in the same SD3 dataset (N = 5000). We ran 10 chains of length 80, 000 with a burn-in of 20, 000 iterations and keep the chain with the highest marginal likelihood. Figure 21.1 displays the estimated sparse structure of B, where we can summarize the two attributes as (1) narcissism and (2) Machiavellianism. The x-axis of Fig. 21.1 presents the predictors, where 01, 02, and 03 refer to the main-effect predictors of Machiavellianism; 10, 20, and 30 refer to the main-effect predictors of narcissism; and sparsity of the matrix is reflected on the fact that loadings manifest on the main-effect predictors. To this end, we can obtain a rough conclusion on the item and attribute relationships. In particular, items M1–M9, P1, P3, P5, P6, and P9 load mostly on Machiavellianism; items N1–N4, N6–N8, P2, and P7 load on narcissism; and items N5, N9, P4, and P8 load equally on the two dimensions.

As a fully saturated model, interactions can take place between any level of any two attributes. Figure 21.2 are stack barplots that clearly depict how increases in attributes correspond to changes in category response probabilities. The x- and y-axes indicate the latent classes and response probabilities; the stacked bars represent the five response categories. The barplots selectively present the item category response function for items M1, M7, N2, and P7. Item M1 has active coefficients β 00 = 2.0, β 01 = 0.5, β 02 = 0.6, β 03 = 0.8, β 21 = 0.3, β 22 = 0.1, and β 23 = 0.2. We can see that all main-effect terms regarding Machiavellianism manifest, while narcissism is active only on the interaction terms. In Fig. 21.3, latent classes 10, 20, and 30 that represents the group are less likely to endorse category 5 (5 = “Strongly agree) compared to other latent classes.

The active coefficients of item N2 are β 10 = 1.3, β 30 = 1.2, β 32 = 0.3, and β 33 = 0.9. We can tell that narcissism is more significant than Machiavellianism. In Fig. 21.3, latent classes 01, 02, and 03 which reflects the mastery of three levels on narcissism are most likely to endorse category 1 (5 = “Strongly disagree) compared to other latent classes.

Moreover, Fig. 21.3 shows the dominant response category for each latent class on the 27 items. Given a specific item, the value on the table represents the response category which a latent class has the highest probabilities to endorse over the other response categories.

4.3 Monte Carlo Simulation Study

This section presents a simulation study to evaluate the parameter recovery rate for SLCM with different sample sizes. We use the previous estimates of item parameter B and the structural parameter π (see Appendix “Empirical Item Parameters”) as the population parameters. Specifically, we have J = 27 items and assume the underlying dimension is K = 2 and attribute level is M = 4. The sample size is set to be n = 1000, 2000, 3000, with each sample size condition replicated for 100 times. For each replicated dataset, we run 10 chains where each single chain has a total of 60, 000 iterations with a burn-in sample of 20, 000 inside. The chain with the highest likelihood is chosen to perform posterior inference and compute the recovery accuracy. Note here we generate different attribute profiles α and responses Y per replication.

The estimation accuracy of B is evaluated in terms of the mean absolute deviation (MAD) for each single β. For each replication, we record the posterior mean of each single parameter in B as the estimates. Next, we compute the absolute deviation between the estimates and the true parameters. Then, we take the average of the absolute deviation over replications. Table 21.3 enunciates the MAD of βs with its activeness into consideration. In specific, the first row “B Δ” refers to the MAD averaged over the entire matrix B. The second row “B Δ=1” and the third row “B Δ=0” refer to the MAD averaged over the locations where δ s = 1 and δ s = 0, respectively. Likewise, we also compute and record the mean absolute deviation (MAD) for each π. Table 21.4 presents the recovery rate of Δ in terms of the proportion of entries that are correctly recovered. The first row “ Δ” refers to the proportion of correctly recovered δs over the whole matrix. The second row “ Δ = 1” and the third row “ Δ = 0” refer to the proportion of 1’s and 0’s in the population Δ matrix that are correctly recovered.

The result in Table 21.3 shows that the average EAD for B is 0.100, 0.069 and 0.052 for sample sizes of n = 1000, 2000, and 3000. The average EAD for π is 0.009, 0.008, and 0.007 corresponding to sample sizes of n = 1000, 2000, and 3000. Additionally, Table 21.4 shows the recovery rate for D is 0.883, 0.913, and 0.932 for sample sizes of n = 1000, 2000, and 3000. As seen, for both of them, the estimation accuracy rises as the sample size increases. Furthermore, in Table 21.3, we observe that the active entries in B have a larger bias than the inactive entries. Similarly, we have at least 0.954 of the inactive entries in D that are correctly estimated as “0” and at least 0.702 of the active entries in D that are correctly estimated as “1.” The simulation results support that the model can be mostly recovered by our Gibbs algorithm.

4.4 Model Convergence

To evaluate the convergence of the Gibbs sampler, we generate three chains for the SLCM with K = 2 and M = 4 under the most computationally intensive condition N = 3000. For each of the latent class mean parameter μ and the structural parameter π, the Gelman-Rubin proportional scale reduction factor (PSRF), also known as \(\hat {R}\), is calculated. A \(\hat {R}\) value of below 1.2 indicates the acceptable convergence. In our simulation, the maximum \(\hat {R}\) is found to be 0.97 for π and 1.06 for μ, with the 80, 000 iterations and 20, 000 burn-in samples inside. Therefore, we conclude the MCMC chains have reached a steady state.

5 Discussion

In this study, we propose a strict and generic model identifiability condition for SLCM with polytomous attributes, which expands the work of SLCM with binary attributes by Chen et al. (2020) and Culpepper (2019). We develop a Gibbs sampling algorithm with the design of enforcing the identifiability and monotonicity constraints. Specifically, with strict identifiability conditions imposed, we notice that the MCMC chains are often trapped and have a slow move forward. A possible explanation is that the strict conditions are too restrictive for the chains to search the right parameter space. Without explicitly enforcing the strict identifiability constraints, the models convergence in 80, 000 draws with estimates satisfying the proved generic identifiability conditions. The simulation results demonstrate that the algorithm is efficient in recovering the parameters in different sample sizes.

Overall, our study is innovative in the following aspects. First, we provide a successful case study of applying SLCM to a personality scale. Personality have traditionally been viewed as continuous traits instead of discrete categories, and factor analysis (FA) approach which assumes continuous latent variables is often used in personality measurement. However, with the estimated person scores, it is always a critical issue to identify cut-offs and classify individuals into different classes. To this end, if variables can be viewed more or less categorical, we can rely on the models with discrete latent variables to provide fine-grained information on the individual differences. Another advantage with discrete latent variables lies in its greatly reduced parameter space, with a potential of facilitating sampling. Overall, our study provides new insights into interpreting personality traits as a discrete measure. Plus, the model also has the potential of being applied to educational settings and contributes to better measurement for educational intervention (Chen & Culpepper 2020).

Second, our study, for the first time, compares SLCM and EFA models from an exploratory perspective. We found the SLCM fit significantly better than the EFA models with a higher prediction accuracy in several configurations. This finding has important implications for promoting applications of CDMs to the areas outside of educational measurement, where another early example is by Cho (2016) who has explored the construct validity of emotional intelligence in situational judgmental tests. In addition, our analysis of the item-attribute structures underlying SD3 supports the previous finding by Persson et al. (2019) that Machiavellianism and psychopathy are subsumable constructs. Moreover, they found the subscale composite scores for the three constructs contain relatively little specific variance, with an implication that reporting the total scores is more appropriate for SD3 than reporting the subscale scores. In our result, most items do not follow a simple structure pattern, which further support this statement that dimensions of SD3 are somewhat inseparable.

Third, from a methodology perspective, our paper addresses the model identifiability concerns of SLCM with polytomous attributes. The strict identifiability condition is way too restrictive in practice. For instance, when the number of attributes is relatively large compared to the items, (e.g., close to half the number of items), enforcing strict identifiability is equivalent to presuming a simple structure on all items. For personality assessment, a simple item structure is often unrealistic to achieve. For this reason, the generic identifiability that loosens some constraints broadens the model applicability.

There are still several recommendations for future study. First, although the MCMC chains successfully converge to the posterior distributions, the Gibbs samplers are still not efficient enough in exploring the parameter space. We have to run several chains and conduct a likelihood selection to find the one with best mixing. The difficulty of mixing could be due to the complexity of the saturated model wherein we have 16 parameters per item. To solve the mixing issue, future work is required to develop more flexible moves in the algorithm that can break local traps or jump between difference spaces.

Second, instead of framing the SLCM in an unstructured way, it is interesting to include a higher-order factor model (Culpepper & Chen 2019; De La Torre & Douglas 2004; Henson et al. 2009) or a multivariate normal distribution with a vector of thresholds and a polychoric correlation matrix (Chen & Culpepper 2020; Henson et al. 2009; Templin et al. 2008) in the latent class structure. There is also abundant room for future progress in selecting the competing structures for π.

Third, it is also possible to prespecify the number of attributes with a more established approach. For instance, Chen et al. (2021) present a crimp sampling algorithm to jointly infer the number of attribute for DINA model. In our study, the choice of attribute level is limited by the study design. Future work with focus on the selection of attribute level is greatly suggested.

Algorithm 1 Full Gibbs sampling algorithm

Algorithm 2 Full Gibbs sampling algorithm: B; Δ

References

Allman, E. S., Matias, C., Rhodes, J. A., et al. (2009). Identifiability of parameters in latent structure models with many observed variables. The Annals of Statistics, 37(6), 3099–3132. https://doi.org/10.1214/09-AOS689

Bolt, D. M., & Kim, J.-S. (2018). Parameter invariance and skill attribute continuity in the DINA model. Journal of Educational Measurement, 55(2), 264–280. https://doi.org/10.1111/jedm.12175

Chen, Y., Culpepper, S., & Liang, F. (2020). A sparse latent class model for cognitive diagnosis. Psychometrika, 85(1), 121–153. https://doi.org/10.1007/s11336-019-09693-2

Chen, Y., & Culpepper, S. A. (2020). A multivariate probit model for learning trajectories: A fine-grained evaluation of an educational intervention. Applied Psychological Measurement, 44(7–8), 515–530. https://doi.org/10.1177/0146621620920928

Chen, Y., Liu, Y., Culpepper, S. A., & Chen, Y. (2021). Inferring the number of attributes for the exploratory DINA model. Psychometrika, 86(1), 30–64. https://doi.org/10.1007/s11336-021-09750-9

Cho, S. H. (2016). An application of diagnostic modeling to a situational judgment test assessing emotional intelligence (Unpublished doctoral dissertation). University of Illinois at Urbana-Champaign.

Culpepper, S. A. (2019). An exploratory diagnostic model for ordinal responses with binary attributes: Identifiability and estimation. Psychometrika, 84(4), 921–940. https://doi.org/10.1007/s11336-019-09683-4

Culpepper, S. A., & Chen, Y. (2019). Development and application of an exploratory reduced reparameterized unified model. Journal of Educational and Behavioral Statistics, 44(1), 3–24. https://doi.org/10.3102/1076998618791306

De La Torre, J. (2009). DINA model and parameter estimation: A didactic. Journal of Educational and Behavioral Statistics, 34(1), 115–130. https://doi.org/10.3102/1076998607309474

De La Torre, J. (2011). The generalized DINA model framework. Psychometrika, 76(2), 179–199. https://doi.org/10.1007/s11336-011-9207-7

De La Torre, J., & Douglas, J. A. (2004). Higher-order latent trait models for cognitive diagnosis. Psychometrika, 69(3), 333–353. https://doi.org/10.1007/BF02295640

DiBello, L. V., Stout, W. F., & Roussos, L. A. (1995). Unified cognitive/psychometric diagnostic assessment likelihood-based classification techniques. In R. L. B. P. D. Nichols & S. F. Chipman (Eds.), Cognitively diagnostic assessment (pp. 361–390). Routledge. https://doi.org/10.4324/9780203052969

Furnham, A., Richards, S., Rangel, L., & Jones, D. N. (2014). Measuring malevolence: Quantitative issues surrounding the dark triad of personality. Personality and Individual Differences, 67, 114–121. https://doi.org/10.1016/j.paid.2014.02.001

Furnham, A., Richards, S. C., & Paulhus, D. L. (2013). The dark triad of personality: A 10 year review. Social and Personality Psychology Compass, 7(3), 199–216. https://doi.org/10.1111/spc3.12018

Garcia, D., & Rosenberg, P. (2016). The dark cube: dark and light character profiles (Vol. 4). PeerJ Inc. https://doi.org/10.7717/peerj.1675

Glenn, A. L., & Sellbom, M. (2015). Theoretical and empirical concerns regarding the dark triad as a construct. Journal of Personality Disorders, 29(3), 360–377. https://doi.org/10.1521/pedi_2014_28_162

Haberman, S. J., von Davier, M., & Lee, Y.-H. (2008). Comparison of multidimensional item response models: Multivariate normal ability distributions versus multivariate polytomous ability distributions. ETS Research Report Series, 2008(2), i–25. https://doi.org/10.1002/j.2333-8504.2008.tb02131

Hagenaars, J. A. (1993). Loglinear models with latent variables (No. 94). Sage Publications, Inc. https://dx.doi.org/10.4135/9781412984850

Henson, R. A., Templin, J. L., & Willse, J. T. (2009). Defining a family of cognitive diagnosis models using log-linear models with latent variables. Psychometrika, 74(2), 191–210. https://doi.org/10.1007/s11336-008-9089-5

Jones, D. N., & Paulhus, D. L. (2014). Introducing the short dark triad (SD3) a brief measure of dark personality traits. Assessment, 21(1), 28–41. https://doi.org/10.1177/1073191113514105

Junker, B. W., & Sijtsma, K. (2001). Cognitive assessment models with few assumptions, and connections with nonparametric item response theory. Applied Psychological Measurement, 25(3), 258–272. https://doi.org/10.1177/01466210122032064

Karelitz, T. M. (2004). Ordered category attribute coding framework for cognitive assessments (Unpublished doctoral dissertation). University of Illinois at Urbana-Champaign.

Kruskal, J. B. (1976). More factors than subjects, tests and treatments: an indeterminacy theorem for canonical decomposition and individual differences scaling. Psychometrika, 41(3), 281–293. https://doi.org/10.1007/BF02293554

Kruskal, J. B. (1977). Three-way arrays: rank and uniqueness of trilinear decompositions, with application to arithmetic complexity and statistics. Linear Algebra and Its Applications, 18(2), 95–138. https://doi.org/10.1016/0024-3795(77)90069-6

Maris, E. (1999). Estimating multiple classification latent class models. Psychometrika, 64(2), 187–212. https://psycnet.apa.org/doi/10.1007/BF02294535

Martin, A. D., Quinn, K. M., & Park, J. H. (2011). MCMCpack: Markov chain monte carlo in R. Journal of Statistical Software, 42(9), 1–21. https://doi.org/10.18637/jss.v042.i09

McHoskey, J. W., Worzel, W., & Szyarto, C. (1998). Machiavellianism and psychopathy. Journal of Personality and Social Psychology, 74(1), 192. https://doi.org/10.1037/0022-3514.74.1.192

Narasimhan, B., Johnson, S. G., Hahn, T., Bouvier, A., & Kiêu, K. (2020). cubature: Adaptive multivariate integration over hypercubes [Computer software manual]. (R package version 2.0.4.1)

Paulhus, D. L., & Williams, K. M. (2002). The dark triad of personality: Narcissism, Machiavellianism, and psychopathy. Journal of Research in Personality, 36(6), 556–563. https://doi.org/10.1016/S0092-6566(02)00505-6

Persson, B. N., Kajonius, P. J., & Garcia, D. (2019). Revisiting the structure of the short dark triad. Assessment, 26(1), 3–16. https://doi.org/10.1177/1073191117701192

Tatsuoka, K. K. (1987). Toward an integration of item-response theory and cognitive error diagnosis. In Diagnostic monitoring of skill and knowledge acquisition (pp. 453–488). Lawrence Erlbaum Associates, Inc. https://doi.org/10.4324/9780203056899

Templin, J. (2004). Generalized linear mixed proficiency models for cognitive diagnosis. (Unpublished doctoral dissertation). University of Illinois at Urbana-Champaign.

Templin, J., & Bradshaw, L. (2013). Measuring the reliability of diagnostic classification model examinee estimates. Journal of Classification, 30(2), 251–275. http://dx.doi.org/10.1007/s00357-013-9129-4

Templin, J., Henson, R. A., et al. (2010). Diagnostic measurement: Theory, methods, and applications. Guilford Press.

Templin, J. L., & Henson, R. A. (2006). Measurement of psychological disorders using cognitive diagnosis models. Psychological Methods, 11(3), 287–305. https://doi.org/10.1037/1082-989x.11.3.287

Templin, J. L., Henson, R. A., Templin, S. E., & Roussos, L. (2008). Robustness of hierarchical modeling of skill association in cognitive diagnosis models. Applied Psychological Measurement, 32(7), 559–574. https://doi.org/10.1177/0146621607300286

Vehtari, A., & Lampinen, J. (2002). Bayesian model assessment and comparison using cross-validation predictive densities. Neural Computation, 14(10), 2439–2468. https://doi.org/10.1162/08997660260293292

von Davier, M. (2005). A general diagnostic model applied to language testing data. ETS Research Report Series, 2005(2), i–35. https://doi.org/10.1348/000711007x193957

von Davier, M. (2018). Diagnosing diagnostic models: From von neumann’s elephant to model equivalencies and network psychometrics. Measurement: Interdisciplinary Research and Perspectives, 16(1), 59–70. https://doi.org/10.1080/15366367.2018.1436827

Xu, G. (2017). Identifiability of restricted latent class models with binary responses. The Annals of Statistics, 45(2), 675–707. http://www.jstor.org/stable/44245820

Xu, G., & Shang, Z. (2018). Identifying latent structures in restricted latent class models. Journal of the American Statistical Association, 113(523), 1284–1295. https://doi.org/10.1080/01621459.2017.1340889

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Appendices

Appendices

1.1 Short Dark Triad

See Table 21.5.

1.2 Empirical Item Parameters

See Table 21.6.

1.3 Strict Identifiability Proof

The proof is mainly based on Kruskal’s theorem (Kruskal 1976, 1977) and the tripartition strategy proposed by Allman et al. (2009). We first introduce the probability matrix H( Δ, B) and its Kruskal rank in Definitions 1 and 2.

Definition 1

The class-response matrix H( Δ, B) is a matrix of size M K × P J, wherein the rows denote attribute patterns and the columns denote response patterns. An arbitrary element (α c, y) in H( Δ, B) presents the probability of observing a response pattern y from the latent class with attribute profile α c:

Definition 2 (Kruskal Rank)

The Kruskal rank of matrix H is the largest number j such that every set of j columns in H is independent. If H has full row rank, the Kruskal rank of H is its row rank.

Theorem 3 (Allman et al. 2009)

Consider a general latent class model with r classes and \(\mathcal {J}\) items, where J ≥ 3. Suppose all entries of π are positive. If there exists a tripartition of the item set \(\mathcal {J}={1,2,\dots ,J}\) that divides \(\mathcal {J}\) into three disjoint, nonempty subsets \(\mathcal {J}_1\), \(\mathcal {J}_2\) , and \(\mathcal {J}_3\) such that the Kruskal ranks of the three class-response matrices H 1, H 2 , and H 3 satisfy

then the parameters of the model are uniquely determined, up to label switching.

To prove the model parameters are uniquely determined, we need to find three subsets of items in SLCMs that satisfy Theorem 3. Suppose we have three disjoint, nonempty subsets \(\mathcal {J}_1\), \(\mathcal {J}_2\), and \(\mathcal {J}_3\), the marginal probability of response Y can be reframed as a three-way tensor T of dimension \(P^{|\mathcal {J}_1|} \times P^{|\mathcal {J}_2|} \times P^{|\mathcal {J}_3|}\). Specifically, the (y 1, y 2, y 3)-th element in T is the marginal probability of the products of the three subsets items:

In other words, tensor T can be decomposed as an outer product of three vectors:

where \(\boldsymbol H_{\boldsymbol \alpha _c}(\boldsymbol D_i,\boldsymbol B_i)\) represents a row vector of M K in the class-probability matrix H(D i, B i) of size \(M^K \times P^{\mathcal {J}_I}\).

Kruskal (1976, 1977) and Allman et al. (2009) state that if the sum of the Kruskal ranks of H(D 1, B 1), H(D 2, B 2), and H(D 3, B 3) is greater or equal to 2M K + 2, the tensor decomposition is unique up to the row rescaling and label switching. Now we will give the proof by showing the existence of three item subsets \(\mathcal {J}_1\), \(\mathcal {J}_2\), and \(\mathcal {J}_3\). If the corresponding item-attribute matrices D 1, D 2, D 3 satisfy the structure of D s, D s, and D ∗ in Theorem 2.2, the Kruskal rank sum of their class-probability matrix H 1, H 2, and H 3 satisfies the minimum requirement 2M K + 2, and the model parameters are uniquely determined.

Proof 4 shows if D 1 and D 2 take the form of D s in Theorem 2.2, the class-response matrices H(D 1, B 1) and H(D 2, B 2) have a full Kruskal row rank of M K.

Proof 4

D s is the defined item structure matrix of dimension K × M K in Theorem 2.2, wherein the k-th item loads on all of the levels in attribute k, i.e., δ k = (δ k,0, …, δ k,M−1) = 1, and the corresponding item parameters β k,m ≠ 0 for m ∈{0, …, M − 1}. The class-response matrix H(D s, B s) of dimension M K × P K can be written as the Kronecker product of K sub-matrices H k:

where H k can be viewed as the attribute-category matrix of dimension M × P for the k-th item in D s. In H k, the rows indicate the attribute levels and columns indicate the response categories.

Given the item parameters are all nonzero β k,m ≠ 0, the latent class mean parameter μ k,m is different from each other given μ k,0 = β k,0, μ k,1 = β k,0 + β k,1,μ k,2 = β k,0 + β k,1 + β k,2, and μ k,M−1 = β k,0 + β k,1 + ⋯ + β k,M−1. Then, the rows in matrix H k are not linearly dependent so that H k is of full row Kruskal rank, namely, rank(H k) = M. For each item k in D s, we have rank(H k) = M. According to the property of Kronecker products, \( \boldsymbol H(\boldsymbol D_s,\boldsymbol B_s) = \bigotimes _{k=1}^{K} \boldsymbol H_{k} \) is also full Kruskal row rank with rank(H(D s, B s)) = M K.

The following Proof 5 shows if D 3 takes the form of D ∗ in Theorem 2.2, the class-response matrix H(D 3, B 3) has Kruskal row rank of 2.

Proof 5

Condition (S2) in Theorem 2.2 ensures the main-effect components of each attribute to be nonzero in at least one item in D ∗ so that D ∗ can distinguish every pair of latent classes. Specifically, there must exist an item j 0 in D ∗ that any two latent class c 1 and c 2 has different response probability matrix, i.e., \(\varTheta _{j_0,c_1} \neq \varTheta _{j_0,c_2}\). Therefore, there must exist two rows in H(D ∗, B ∗) that are independent of each other, implying that the Kruskal rank of H(D ∗, B ∗) is at least 2.

With Proofs 4 and 5, we have rank(H 1) + rank(H 2) + rank(H 3) ≥ 2M K + 2.

1.4 Generic Identifiability Proof

In the context of SLCMs, the item structure matrix Δ is a sparse matrix, so the real parameter space should be of dimension less than J × M K. To be differentiated from Eq. 21.9, we denote the parameter space with a sparsity structure Δ as

where \(\Omega _{\boldsymbol \Delta }^\ast (\boldsymbol B)\) presents the parameter space for B which have nonzero entry at position β when the corresponding δ = 1. Then, we define the unidentifiable parameter set C Δ as

As Definition 2.3 stated, ΩΔ(π, B) is a generically identifiable parameter space if the unidentifiable set C Δ is of measure zero within ΩΔ(π, B).

Similar to the strict identifiability proof, we will use the tripartition strategy to find three item subsets \(\mathcal {J}_1\), \(\mathcal {J}_2\), and \(\mathcal {J}_3\) that generate a tensor decomposition of D 1, D 2, and D 3. We proceed to show if D 1, D 2, and D 3 satisfy the structure of D g, D g, and D ∗ in Theorem 2.4, the Kruskal rank sum of the corresponding class-probability matrices H 1, H 2, and H 3 satisfies the minimum requirement 2M K + 2.

Proof 6

For H 1 and H 2, we use Theorem 7 to show that rank(H 1) = M K and rank(H 2) = M K hold almost everywhere in \(\Omega _{\boldsymbol D_1}\) and \(\Omega _{\boldsymbol D_2}\), respectively. Different from the Theorem 4 in Chen et al. (2020), we perform a transpose multiplication to the response-class matrix H(D g, B g) so that it can be transformed into a square matrix H(D g, B g)′ H(D g, B g) which has an accessible determinant function. Given Proof 10, we show \(G_{\boldsymbol D}(\boldsymbol B)\to \mathbb {R}\) is a real analytical function of B, and then we know \(\lambda _{\Omega _{\boldsymbol D}}(\boldsymbol A)\) has the Lebesgue measure zero. By Theorem 7, we can infer that H(D g, B g) is a full row rank matrix. Therefore, if \(\boldsymbol D_1 \in \mathbb {D}_g\) and \(\boldsymbol D_2 \in \mathbb {D}_g\), we have rank(H 1) + rank(H 2) = 2M K holds almost everywhere in \(\Omega _{\boldsymbol D_1} \bigotimes \Omega _{\boldsymbol D_2}\).

Theorem 7

Given \(\boldsymbol D \in \mathbb {D}_g\) , the corresponding class-response matrix H(D, B) is of full rank except some values of B from a measure zero set with respect to Ω D , i.e.,

where \(\lambda _{\Omega _{\boldsymbol D}}(A)\) denotes the Lebesgue measure of set A with respect to Ω D.

Proposition 8

If \(f:\mathbb {R}^n \to \mathbb {R}\) is a real analytic function which is not identically zero, then the set {x : f(x) = 0} has Lebesgue measure zero.

Remark 9

\(G_{\boldsymbol D}(\boldsymbol B) =\text{det}[\boldsymbol H(\boldsymbol \Delta ,\boldsymbol B)^{\prime } \boldsymbol H(\boldsymbol \Delta ,\boldsymbol B)]: \Omega _{\boldsymbol D} \to \mathbb {R}\) is a real analytic function of B.

Proof 10

G D(B) is a composition function:

where \(h(\boldsymbol \theta ):[0,1]^{K\times M^K}\) denotes a polynomial function and \(\boldsymbol \theta _{\boldsymbol \alpha _c}\) represents the probability vector for the latent class α c, which can be further written as the difference of two CDFs. A polynomial function is a real analytic function. Since the CDF is an integral of a real analytic function, the composition of real analytic functions (difference between two CDFs) is still a real analytic function. Furthermore, h(θ) is also a real analytic function of B. G D(B), as a determinant of h(θ)′ h(θ), is also a real analytic function.

Proof 11

For H 3, if D 3 takes the form of D ∗ in [S2], we can infer that there must exist an item j 0 in D 3 that for any two latent classes c 1 and c 2, we have \(\mu _{j_0,c_1} \neq \mu _{j_0,c_2}\). Then we have at least two rows in matrix H(D 3, B 3) to be independent of each other, implying that the Kruskal rank of H(D 3, B 3) is at least 2. The exceptional case could exist when β k,m = 0 holds for some k and m, which has Lebesgue measure zero with respect to \(\Omega _{\boldsymbol \Delta ^{\ast }}\). Consequently, rank(H 3) ≥ 2 holds almost everywhere in \(\Omega _{\boldsymbol \Delta ^{\ast }}\)

With Proofs 6 and 5, we have rank(H 1) + rank(H 2) + rank(H 3) ≥ 2M K + 2 holds almost everywhere in ΩΔ(π, B).

Rights and permissions

Copyright information

© 2023 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

He, S., Culpepper, S.A., Douglas, J. (2023). A Sparse Latent Class Model for Polytomous Attributes in Cognitive Diagnostic Assessments. In: van der Ark, L.A., Emons, W.H.M., Meijer, R.R. (eds) Essays on Contemporary Psychometrics. Methodology of Educational Measurement and Assessment. Springer, Cham. https://doi.org/10.1007/978-3-031-10370-4_21

Download citation

DOI: https://doi.org/10.1007/978-3-031-10370-4_21

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-10369-8

Online ISBN: 978-3-031-10370-4

eBook Packages: EducationEducation (R0)