Abstract

Structural fire safety requirements implicitly balance up-front investments in materials (protection or element sizing) with improved performance (loss reductions) in the unlikely event of a fire. For traditional prescriptive fire safety recommendations, the underlying target safety levels are not clear to the designer, nor is the associated balancing of risk and investment costs. While easy to apply, guidance / code-based approaches to the specification of fire protection / resistance have the severe disadvantage that the level of safety investment is not tailored to the specifics of the case, resulting in large overinvestments in some cases, and possibly insufficient structural fire safety in others. This observation is a major driver for the use of performance-based design (PBD) methodologies, where the fire safety design is tailored to the needs of the building. However, it is posited in this chapter that traditional PBD in a structural fire context is deterministic, with the safety foundation premised upon the collective experience of the profession. It is separately noted that building forms are increasingly uncommon in nature, due to material choice, height, failure consequences, etc., and, as such, collective experience is increasingly a weak safety foundation. This is where probabilistic methods add value and provide a quantified / explicit basis for demonstrating the adequacy of a design. This chapter, thus, focusses on uncertainties and uncertainty quantification in the context of structural performance in the event of fire, introducing reliability and risk concepts, with supporting data and applications.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

- Uncertainties

- Structural fire design

- Probabilistic methods

- Structural fire engineering

- Performance-based design

- Fuel load

- Heat transfer

- Probabilistic risk assessment

- Reliability

- Failure probability

- Safety margin

- Ventilation

- Probability distribution

- Statistical models

- Monte Carlo simulation

9.1 Introduction

9.1.1 Structural Design and Uncertainty

Probabilistic methods form the basis of verification of structural design under ambient conditions in most structural engineering standards around the world. Safety factors are specified based on statistical variations in load and resistance of a structure, applying reliability goals as a benchmark for verification that a structure provides an acceptable level of safety. Despite this fundamental principle, current structural fire engineering approaches are intrinsically deterministic and incorporate neither of the above two concepts of safety factors or reliability goals.

Instead, structural fire engineering analysis typically relies on the evaluation of the response of a structure to a single or very few deterministic scenarios which although often conservative do not normally account for uncertainties in the input, the modelling approach, or the output. This approach does not address in any meaningful way the amount of conservativeness inherent in a design, or provide any meaningful information about the actual level of safety. The evaluation is carried out in one of a number of ways, with verification done in either the time, the temperature, or the strength domain with the intent being simply to demonstrate that for a given scenario the fire resistance of an element or a structure is greater than or equal to the fire intensity. When verification is done in the temperature or the strength domain, this is almost always done based on the analysis of the response of the structure to one or a few design fires which represent a range of possible fires that could occur inside of that building. However, while these fires may be identified and elaborated using some risk-based technique , the analyses remain purely deterministic and the actual level of safety, margin of safety, probability of failure, or reliability is almost never calculated.

This deterministic demand/capacity evaluation in the strength or temperature domain is often termed performance-based design since the performance criteria may be set based on the unique features of the building in question and taking into account input from the various stakeholders in the project. The basic elements of performance-based design are defined in such a way as to allow the user freedom to compose any solution to a given engineering problem, allowing also the freedom to employ new techniques and technologies as they become available. The objectives must be clearly stated at the outset of the project, and any design solution which fulfils these objectives while still adhering to the performance targets of the design framework should be permitted. The effect of this on the spectrum of possible solutions available for any problem and the impact of this on verification requirements are shown in Fig. 9.1. As the design process tends towards a performance-based approach , the spectrum of possible solutions opens up, allowing more bespoke solutions to a problem.

Performance-based design is a necessity where buildings fall outside of either the classification afforded by prescriptive building codes around the world or where the materials or methods of construction are such that they introduce new risks or challenge the fire strategy of buildings in ways which were unforeseen in the development of the current regulations. In such cases, the building design falls outside of the bounds by which the fire engineering community can confidently rely on the collective experience of the profession (see Sect. 9.2). It is therefore not possible to ensure safety through the application of prescriptive codes based on the nearest existent classification. Therefore, two lines of action are open to the engineer: either the building design should be modified such that it falls within the scope of the classifications available or engineering analysis has to be undertaken to demonstrate that the level of safety provided by the building is consistent with the performance that may be expected by the society.

This may result in the situation whereby although the targets in terms of life, property, and business protection may remain similar to those in prescriptive design codes, these targets should typically remain independent of the prescriptive building code performance goals. Most legislative objectives are related to preventing loss of life—either of the building occupants or of the first responders working inside of a burning building [1]—and damage to neighbouring property. However, performance-based design also opens the possibility for alternative objectives to be considered such as limiting direct or indirect financial losses to a building’s owner, limiting environmental impact, or preservation of historic structures [2].

Also, when applying performance-based design , the collective experience of the profession in applying these techniques to the specific type of structure may be insufficient to guarantee a sufficient level of safety. In those situations, an explicit verification or quantification of the resulting safety level needs to be undertaken. This is discussed in some detail elsewhere [3]. In summary, and as discussed further in Sect. 9.2, this explicit verification of the safety level aims to ensure that the uncertainties associated with the demand/capacity evaluation do not result in a too high (unknown) likelihood of the structure not fulfilling the design objectives.

9.1.2 Importance of Considering Uncertainty

The basis of demand/capacity-based design in structural engineering is that the resistance of a structure is greater than the load applied on the structure. Consider, to illustrate the concept, an axially loaded element under ambient conditions (Fig. 9.2). The linear elastic response of the system may be defined according to several very simple relationships (see Table 9.1 for definitions):

Here, σ is the stress; P is an applied axial load; A is the cross-sectional area of the element; ε is the strain; E is the modulus of elasticity of the material; and L is the length of the element being analysed. Each of these relationships is related to the material properties, an external condition or input to the system, or a feature of the system.

Assuming some relationship between stress and strain which defines the modulus of elasticity , as well as the yield and ultimate stresses and strains as per Fig. 9.3, the failure of this system can be defined according to various different criteria, in function of the performance objective: for example, an evaluation based on a deformation criterion, i.e. ΔL > ΔL*, with ΔL* being a limiting deformation, or according to criteria based on the material response, e.g. σ > σ y; σ > σ u; ε > ε y; or ε > ε u.

Even for this simple system, the evaluation of such criteria incorporates, to some degree or another, uncertainties. In the case of the input to the system, there are uncertainties regarding the load which is applied. In the case of the system properties, there are uncertainties with regard to the material response as well as the geometry of the system. When considering the model chosen to analyse this system, there arise model uncertainties associated with the formulation of the material in the model or any discretization or simplifications to the model made by the user. Referring to the uncertainties inherent in the system, these may largely be attributed to aleatoric uncertainties , or aleatory variability , arising from the natural randomness in a process or in the input variables. This randomness can usually be measured and quantified. For discrete variables the randomness can generally be parametrized by different probability mass functions. Uncertainties related to the modelling of the system are referred to as epistemic uncertainties . Different models inherently contain a different degree of epistemic uncertainty. With regard to these epistemic uncertainties, the impact of this on structural design under ambient conditions is well illustrated in the work by Fröderberg and Thelandersson [4]; the impact on structural fire engineering is illustrated in the work by Lange and Boström [5].

At this point, it should be clear that the simple problem presented above contains a multitude of uncertainties: aleatory uncertainty arising from the input to the model in the form of the applied force or the different properties of the system and epistemic uncertainty arising from the modelling approach adopted and any simplifications or assumptions made. As a result of the combined effect of these uncertainties, we cannot always be sure that the condition of capacity being greater than demand, under any of the failure criteria identified above, is satisfied for a given design. The implication is that some degree of risk is being adopted in the acceptance of any model of this problem.

If temperature is introduced to this problem, the nature of the uncertainties remains largely the same; however the complexity of the problem multiplies. The stress-strain relationship of the material becomes a function of temperature, and thermal expansion means that both the cross-sectional area and the length of the element change. Each of the very simple relationships presented above now becomes also a function of temperature:

where α denotes the coefficient of thermal expansion, and ΔT denotes a change in temperature.

Having introduced temperature to the problem, it becomes also necessary to calculate temperature. As discussed in Chap. 5, the heat transfer inside the solid is governed by Fourier’s law, while convective and radiative heat transfers are to be taken into account at the surface. Each of these processes of heat transfer, conduction, convection, and radiation, now introduces additional variable uncertainties into our system, including conductivity, convective heat transfer coefficient , and emissivity required for calculation of heat transfer by radiation. The complexity of and thus the overall uncertainty associated with this simple problem have now increased dramatically, simply by the introduction of temperature. The certainty that the capacity is always greater than the demand, for any of the criteria listed, is now diminished.

As indicated in Sect. 9.1.1, the traditional means of addressing this uncertainty in structural fire engineering has always been to overestimate the load and to underestimate the capacity, thus accounting for uncertainties by increasing the nominal margin of safety. However, this indirect approach fails to acknowledge that engineering failures occur where the distributions of demand and capacity overlap, i.e. where demand > capacity within the tails of the distributions of the demand and capacity (see Fig. 9.6). Therefore, increasing the margin of safety by increasing the distance between the average demand and the average capacity in an arbitrary way cannot ensure that failure has a probability which is acceptably low to society. Thus, when an explicit verification of the safety level is required, the uncertainties associated with the design need to be explicitly considered.

9.1.3 Sources of Uncertainty

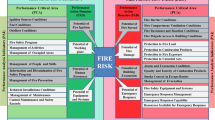

In structural fire engineering, uncertainties arise from many sources . Referring to the process of structural fire engineering described by Buchanan and Abu [6], Fig. 9.4, sources of aleatoric uncertainty can be seen to be introduced at every stage, and epistemic uncertainty arises depending on the models used at each stage. The nature of the sources of uncertainties means that uncertainties propagate through any analysis. The uncertain input variables are propagated through uncertain models which results in uncertain outputs from models.

Flow chart for calculating the strength of a structure exposed to fire, adopted from Buchanan and Abu [6]

When developing the fire model, uncertainties in the geometry of the fire compartment, fuel load , and fire characteristics arise. Arguably uncertainties associated with room geometry are significantly smaller than uncertainties associated with the fuel or the characteristics of the fire and can therefore be ignored. However, the fuel load and the fire characteristics are arguably very significant uncertainties in the entire process and generally cannot be ignored. In the Eurocode , uncertainties associated with the fuel load are treated by adopting some high-percentile fuel load from a distribution which varies with occupancy—increasing the demand for the design, as described above. Other uncertainties related to the fire characteristics however are not treated in any way satisfactorily; for example, the opening factor upon which the burning behaviour is largely dependent is usually treated entirely deterministically. Further uncertainty arises from the choice of fire model; as will be discussed later, different representations of fire (standard fire, parametric fire, travelling fire, zone models, field models) account for different factors related to the overall fire behaviour. The uncertainty associated with the use of these different models will be discussed later in this chapter.

Any uncertainties in the input variables to the fire model as well as uncertainties inherent in the fire model itself are propagated into an uncertainty for the thermal exposure which is an input to the heat transfer model , along with details of the geometry, the thermal properties, and the heat transfer coefficients. Element geometry is arguably similar to the room geometry in that the effects of uncertainties are likely to be relatively inconsequential compared with the uncertainties of thermal exposure, heat transfer coefficients , and material thermal properties. In this chapter, there is a discussion of the variability in thermal properties as well as heat transfer coefficients as input to the heat transfer model. Heat transfer is discussed in Chap. 5. It should be noted that any uncertainties in inputs or resulting from the modelling approach with respect to the fire model propagate through the analysis.

Uncertainties in the inputs to the heat transfer model are propagated, as well as any uncertainties in the model itself, to the structural model where the geometry, the applied loads, and the mechanical properties of the material all are subject to uncertainty. The overall effect of this propagation of uncertainty is a multiplicity of possible outcomes at every stage in the analysis process including in the final determination of the load capacity.

The above gives an overview of the many uncertainties associated with each of the steps in structural fire engineering analysis. These uncertainties can generally be parametrized by different probability distributions . The ability to do this depends to a large extent on the quality of information which is available about the specific variables. This is often cited as one of the most significant obstacles to the use of probabilistic methods in structural fire engineering that the rate of occurrence of events is typically so low that the informativeness of any resulting distributions is low. However, as will be shown in this chapter, many of the variables can be satisfactorily parametrized for a number of different applications. Where variables cannot be parametrized, or where epistemic uncertainties exist, then the sensitivity of solutions can be probed and engineering judgement can be exercised to ensure that design objectives are met.

9.2 Reliability and Risk Acceptance

9.2.1 Risk Acceptance in Structural Fire Design

As indicated in Sect. 9.1, traditional performance-based (structural) fire safety design is deterministic in nature, requiring the selection of design inputs, scenarios, and performance criteria that are deemed appropriately conservative by the engineer. In such a process, the safety level (or residual risk) associated with a given design is not evaluated, and the full spectrum of consequences and their associated probabilities are not interrogated. Instead, it is assumed that an adequate, but unquantified, level of safety is attained based upon engineering judgement and considerations: (a) that real fire events have occurred, with performance observed, and (b) that society has not expressed dissatisfaction with the levels of performance witnessed. In other words, the basis for acceptance of traditional performance-based design (or the safety foundation) is the experience of the fire safety profession (see left-hand side of Fig. 9.5) proposed in Hopkin et al. [7]. This safety foundation can only be justified where there are sufficient real fire events to observe, guide design processes, and offer society opportunities to express views on their dissatisfaction (or otherwise) of the consequences witnessed.

(Left) assumed basis of safe design, (right) demonstrated basis of safe design where experience is not an adequate basis, Hopkin et al. [7]

Traditional (structural) fire safety design and its associated safety foundation cannot, however, be extrapolated to exceptional structures, i.e. those with atypical consequences of failure or adopting innovative materials, as it is likely that insufficient instances exist where fires have occurred and performance is witnessed. For such complex cases, there is a need to explicitly evaluate the residual risk (see right-hand side of Fig. 9.5).

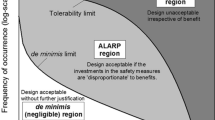

Within the framework presented by Van Coile et al. [3], there is an expectation that probabilistic risk assessment (PRA) methods be employed to demonstrate adequate safety for cases where the collective experience of the profession cannot be called upon to guide design approaches. In doing so, any design must be demonstrated to be tolerable to the society, and the residual risk as low as is reasonably practicable (ALARP). In structural safety, the full cost-benefit analysis implied by the ALARP evaluation is typically substituted by a reliability analysis , allowing to determine design acceptance based on structural failure probabilities only [3].

9.2.2 What Is Reliability?

ISO 2394:2015 [8] defines reliability as the ‘ability of a structure or structural member to fulfill the specified requirements, during the working life, for which it has been designed’. Reliability is expressed in terms of probability and can cover safety, serviceability, and durability of a structure. In the Eurocodes , no in-depth definition of reliability is given. However, in the fundamental requirements it is currently stated: ‘a structure shall be designed and executed in such a way that it will, during its intended life with appropriate degrees of reliability and in an economic way:

-

Remain fit for the use for which it is required; and.

-

Sustain all actions and influences likely to occur during execution and use’.

In the latter bullet point, a fire condition falls within the definition of ‘all actions’. To satisfy the above considerations in relation to reliability, Holicky [9] notes that there should be four important elements requiring consideration:

-

The definition of a failure, i.e. the limit state.

-

The time (reference) period under consideration.

-

The reliability level, i.e. an assessment of the failure probability .

-

The conditions of use (and the associated impact on the input uncertainties).

Importantly, the concept of absolute reliability does not generally exist (apart from in exceptional cases), i.e. few structures have a zero-failure probability and there must be an acceptance that there is a certain, small probability that a failure may occur within the intended lifespan of a structure [9]. This principle extends to structural design for fire safety, where structural elements or systems must have an acceptable failure probability that varies in function of the failure consequences. In the absence of such an acceptable failure probability , the drive towards absolute reliability would (sooner or later) result in grossly disproportionate costs to society, as more and more resources need to be spent to further reduce the failure probability.

In the context of structural design for fire, many fire safety objectives may exist (see Sect. 9.1.1), which are translated into functional requirements and performance criteria; see ISO 24679-1:2019 [10]. For each of the performance criteria, a reliability target can be specified, for example, a business continuity-driven performance requirement of a high certainty (reliability) of limited permanent deflection post-fire. In most common structural fire design situations, maintaining structural stability during fire is the primary functional requirement (relating, e.g., to a primary objective of life safety, possibly in conjunction with property protection). For this functional requirement , reliability in consideration of fire can be defined as the probability that the structure or structural member will maintain its load-bearing function in the event of fire, i.e. reliability is the complement of the failure probability . This definition of reliability in structural (fire) engineering will be applied herein.

Applying the above, the performance criterion can for example be specified as (i) a maximum deflection v max being smaller than a limiting value v lim or (ii) the load-bearing capacity of the structure R being larger than the load on the structure E (including self-weight). In the first illustrative case failure is defined by the exceedance of a (possibly deterministic) limiting deflection, while in the second case failure is defined as the exceedance of the resistance effect by the load effect . For the latter example, the failure probability definition is thus specified by Eq. (9.7). Thus, the limit defining the boundary between the failure domain and boundary of the safe domain is given by Z = R – E = 0. This is commonly referred to as the limit state corresponding with the performance criterion.

A limit state is a condition of a structure or component beyond which the structure no longer fulfils certain criteria for design. Examples of limit states in structural engineering include ultimate limit states beyond which it is expected that a structure will no longer carry the applied load and serviceability limit states beyond which it is expected that the level of comfort or confidence of the users of the building as a result of, e.g., deflections or vibrations, is no longer adequate. Ultimate limit states are of relevance for accidental actions such as fire whereas serviceability limit states have little arguable application for accidental actions.

In structural fire design situations, performance (and thus failure) is commonly evaluated given the occurrence of a fire. Consequently, the load reference period is recommended to be taken as the instantaneous load situation, i.e. an arbitrary-point-in-time load. Taking into account the specifics of the structure (i.e. the conditions of use referenced by Holicky [9]), the load and resistance effects are thus defined. A conceptual visualization of these is given in Fig. 9.6, showing the variation of the resistance effect R and the load effect E, as well as the ‘safety margin’ defined here by the difference in expected values μ R and μ E. As illustrated in Fig. 9.6, despite the nominal safety margin , situations of E exceeding R occur in the tails of the distribution. The acceptability of this observed failure probability now depends on the (availability of) maximum allowable failure probabilities, or in other words: target reliability levels.

9.2.3 Target Reliability Indices for Structural Design

Defining maximum allowable (target) failure probabilities is central to the application of reliability methods. Relative to the full ALARP evaluation highlighted in Sect. 9.2.1, specified target failure probabilities allow to omit cost evaluations from the design, thus restricting the design problem to engineering considerations (and not, e.g., discount rate assessments).

Commonly, a (target) failure probability is expressed in an alternative form as a reliability index (β), with

Φ −1 is the inverse standard normal cumulative distribution function, as applied amongst others in EN 1990. For completeness, the relationship between (P f) and (β) is as shown in Fig. 9.7. In the following subsections, target reliability indices for structural design are summarily presented both for ambient conditions and for fire.

9.2.3.1 Reliability Indices at Ambient Temperature

As noted in Sect. 9.1.1, reliability-based design has found wide application in structural engineering. For example, as the basis of the partial safety factors applied in the Structural Eurocodes , the target reliability index , β, governs everyday structural engineering practice. Different (recent) target values are, however, available from several sources [11].

Target failure probabilities (P f, t) for ambient design have received much attention in the literature, e.g. see Rackwitz [12] and Fischer et al. [13]. Target values have even been included in international standards, which can be linked to the Eurocode target reliability indices . ISO 2394:1998 [14] lists ‘example’ lifetime target reliabilities as a function of the failure consequence and the relative costs of safety measures (Table 9.2). Based on the formulation in ISO 2394:1998, these values have been informed by cost optimization and calibrated against existing practice. The standard further recommends the values 3.1, 3.8, and 4.3 to be used in ultimate limit state design based on both consequence of failure and cost of safety measures. Considering the general content of the standard, these values are considered applicable at an element level.

Target reliability indices specified in EN 1990 [15] as a function of the ‘reliability class’ are given in Table 9.3. The reliability classes can be associated with the consequence classes (i.e. high, medium, low). As also noted in ISO 2394:1998, considerations such as brittle or ductile failure may influence the chosen target.

The Eurocode target reliability indices are specified both for a 1-year reference period and a 50-year reference period (where 50 years equals the indicative design working life for common structures). Both sets, however, correspond with the same target reliability level, considering independence of yearly failure probabilities ; that is, irrespective of how long a structure has been standing, it is assumed that the per annum failure likelihood is constant. There is thus close agreement between β t,50 in Table 9.3 and lifetime targets in ISO 2394:1998.

The material-specific Eurocodes apply the 50-year reliability index of 3.8 on an element basis for the definition of partial safety factors . In case of additional redundancy in the system (e.g. due to robustness considerations), this will result in a higher system reliability index.

Target values for a 1-year reference period are given in the Probabilistic Model Code developed by the Joint Committee on Structural Safety [16]; see Table 9.4. These recommended values were derived from a calibration process with respect to the existing practice and are considered compatible with cost-benefit analyses, with explicit reference to the analysis by Rackwitz [12], and can be considered to relate to an updated recommendation relative to ISO2394:1998.

Table 9.4 is applicable to structural systems. In case of a single-element failure mode dominating system failure, these targets are directly applicable to the structural element. The target values are given as a function of the ratio ξ of the failure plus reconstruction cost to the construction cost and an obsolescence rate on the order of 3% is considered. For very large consequences (ξ > 10) an explicit cost-benefit analysis is recommended. The target reliabilities in Table 9.4 have been incorporated into ISO 2394:2015.

It is noteworthy that the reliability targets presented previously are in some manner linked to cost optimization, where the direct and indirect consequences resulting from ‘loss of the structure’ are taken into account. Mindful of the need for potential fatalities being tolerable, as is discussed by Van Coile et al. [3], this may be considered beyond the ambit of a direct life safety evaluation, which is generally concerned only with averting fatalities. Fischer et al. [17] proposed an alternative perspective, where (societal) life safety cost optimization is concerned solely with the preservation of life through incorporation of the life quality index (LQI) ; that is, safety investments are balanced directly against the reduction in risk to life. The obtained acceptable failure probability is then considered an absolute lower bound safety requirement for further reliability assessments and more general cost optimization considerations. This acceptable failure probability is given in Eq. (9.9) for coefficients of variation in the resistance and action effects of 0.1–0.3:

where C 1 is the marginal safety cost, γ s the discount rate, ω the obsolescence rate, N f the number of fatalities in case of failure, and SCCR the societal capacity to commit resource metric.

By way of an example, taking a consequence class 3 structure from ISO 2394:2015, the expected number of fatalities in the event of structural failure is less than 50 persons. If the building were in the UK, the SCCR for a 3% discount rate is $3,665,000 ppp (purchasing power parity) according to ISO 2394:2015. For a construction cost (C 0) of $40,000,000 ppp and a normal marginal safety cost (C 1/C 0) of 1%, the marginal safety cost is $400,000 ppp. Adopting an obsolescence rate of 2% and societal discount rate of 3%, the acceptable failure probability is 2 × 10−5 for a 1-year reference period. This would coincide with β = 4.1, i.e. a significantly less onerous reliability target when compared to the figures in EN 1990. This value should however be considered as an absolute lower bound, as it is (implicitly) assumed that there are no further benefits to society from the safety investment apart from averting fatalities [18]. For example, the benefit of reducing the risk of city conflagration or network resilience is not taken into account.

9.2.3.2 Reliability Targets and Fire

The application of the ambient reliability targets to structural fire design has received considerable research attention. In the Natural Fire Safety Concept (NFSC) [19], the Eurocode target reliability index of 3.8 (50-year reference), i.e. 4.7 for 1-year reference, was adopted as a starting point. By further assuming that the yearly probability of a fire-induced structural failure should be as unlikely as the yearly probability of a ‘normal-design’ structural failure, and considering fire-induced structural failures to be conditional on the occurrence of a ‘significant’ fire, the NFSC derives a target reliability index, β t,fi, for structural fire design through Eq. (9.10), with λ fi being the annual occurrence rate of a structurally significant fire:

Nevertheless, the NFSC goes on to consider that an acceptable target failure probability should be differentiated from that at ambient temperature in function of the building evacuation mode, in consideration that at the time of fire occurrence, in many buildings, occupants are actively encouraged to evacuate (reducing the potential number of fatalities), i.e.:

-

Normal evacuation: 1.3 × 10−4 [y−1].

-

Difficult evacuation: 1.3 × 10−5 [y−1].

-

No evacuation: 1.3 × 10−6 [y−1].

This concept is explored by Hopkin et al. [20] where the time-dependent failure probability of a steel structure is coupled with a stochastic evacuation timeline for a series of reference office buildings in determining so-named risk indicators.

One difficulty noted with the NFSC approach is discussed by Van Coile et al. [11] and Van Coile et al. [21]. There, it is highlighted that the Eurocode target reliability levels for ambient design which form the basis of the NFSC can be considered compatible with cost optimization considerations, as discussed above. The basic assumptions underlying the cost optimizations for ambient design conditions are however not necessarily applicable to structural fire design. Within Van Coile et al. [21] target failure probabilities are expressed in an alternative general form, in function of a damage to investment indicator (DII), expressed as

where b is the relative marginal safety investment cost, i.e. normalized to the construction cost (C 0), as defined by Eq. (9.12); λ is the failure-instigating event occurrence rate; and ξ is the relative failure costs, i.e. also normalized to the construction cost. In the case of fire, λ would be the structurally significant fire occurrence rate. For normal design conditions, lambda is expressed as one per annum, and the corresponding reliability target is for a 1-year reference period. This formulation is compatible with the traditional formulation underlying Rackwitz [12]:

Figure 9.8 presents the optimal reliability indices and failure probabilities in function of DII, as proposed by Van Coile et al. [21]. This formulation confirms the scaling of the target failure probability by the occurrence rate λ as proposed conceptually in the NFSC , under the condition however that the ratio of the other parameters in the DII (i.e. the costs of failure and the costs of further safety investments) remains unchanged. Investigation into the costs and benefits of structural fire protection is an area of ongoing research. Target safety levels for structural fire resistance have been derived, e.g. by Fischer [22] for steel structural elements and by Van Coile et al. [23] for concrete slabs.

Optimal failure probability and reliability index in function of the DII [21]

9.3 Uncertainty in Actions

Fire is an uncertain event. Depending upon building use, building size, fire strategy measures, fire safety management, etc., fire occurrence rates differ and so does the likelihood that a fire will develop to an extent that it is structurally significant. Once of an intensity to be considered structurally significant, the manifestation of the fire is uncertain and correspondingly the probability of a fire-induced structural failure. Sections 9.3.1 and 9.3.2 discuss uncertainties that arise in both the fire’s occurrence rate and development, alongside what uncertainty arises in mechanical action (load, moment, etc.). Section 9.4 speaks to uncertainty in the response of materials at elevated temperature.

9.3.1 Thermal Action

Uncertainty in the thermal action necessitates a separate consideration of the factors leading to a fire’s occurrence and its ability to become fully developed (i.e. fire occurrence rates and interventions), alongside those that influence the fire’s fully developed manifestation (i.e. fire modelling inputs). These are discussed separately in Sects. 9.3.1.1 and 9.3.1.2, respectively.

9.3.1.1 Fire Occurrence Rates and Interventions

Many events can occur between an ignition and a fire becoming fully developed. Jurisdiction-specific statistics are available which, when contrasted to building stock, give an indication of ignition rates. As fire statistics generally relate to reported fires, the thus obtained ignition frequencies should be considered to relate to fires which because of their severity, duration, or operational procedures warrant reporting.

However, subsequent to fire ignition , there will need to be a failure of numerous intervention mechanisms for the fire to become structurally significant. These could include (a) intervention of occupants via first-aid firefighting, (b) activation of automatic fire suppression systems, or (c) fire service operations.

In contributing to the development of Eurocode 1 , Part 1.2 [24], the natural fire safety concept (NFSC) project [19] explored some of the probabilistic aspects of structural fire design, with an emphasis on developing design methods that considered the relationships between early fire intervention measures and subsequent demands of the structural fire design. Table 9.5 summarizes some probabilistic factors for fire occurrence rate and differing intervention mechanisms. It should be noted that the values given likely vary significantly between jurisdictions. For sprinklers which are not installed according to standard, Schleich et al. indicate that a lower success rate (below 0.95) may be appropriate.

The NFSC makes further generalizations which are subsequently adopted in EN 1991-1-2:2002, grouping building types into ‘Danger of fire activation’ classifications , ranging from low to ultra-high, as given in Table 9.6. In this table, the probability of fire occurrence is again expressed per unit area but relates to probability of ignition and subsequent unsuccessful intervention by the occupants or fire service. That is, there is no consideration of active systems, such as sprinklers.

9.3.1.2 Fire Modelling Inputs

Once the fire can develop to an extent that it can be considered structurally significant, a fire model will be required to idealize the fire’s development/behaviour. Chapter 4 discusses the various fire models that can be employed. Generally, it is found that the following key inputs need to be defined (not in all cases for all models):

-

Growth/spread rate.

-

Fire load.

-

Ventilation conditions .

-

Near-field temperature.

9.3.1.2.1 Fire Growth Rate/Spread Rate

Studies on the variability in fire growth rates are limited in literature. In a residential context, Holborn et al. [25] estimated fire growth rate based on fire investigation data, with 1991 samples, gathered in the Greater London area. Fire damage area was assumed to be consistent with the fire area, for a heat release rate density (\( {\dot{Q}}^{{\prime\prime} } \)) of 250 kW/m2. Holborn et al. [25] proposed that the average fire growth parameter α (kW/s2) could be estimated by assuming a t 2 growth rate based on the area of fire damage when the fire was discovered (A 1) compared to when the fire brigade arrived (A 2), and the time intervals from ignition to discovery (t 1) and ignition to fire brigade arrival (t 2). This can be summarised as

From this it was determined, using assumed log-normal distribution parameters , that dwelling fires had a mean fire growth rate of 0.006 kW/s2, a standard deviation of 0.039 kW/s2, and a 95th percentile of 0.024 kW/s2.

Baker et al. [26] determined a residential growth rate distribution using zone modelling software B-RISK. A residential occupancy based on experiments undertaken in Sweden was modelled using probabilistic inputs for the ‘design fire generator’ (DFG) and by applying the Monte Carlo method. The outcome of the modelling indicated that a fire growth rate distribution could be approximated to a triangular distribution, with a minimum of 0 kW/s2, a maximum of 0.412 kW/s2, and a mode of 0.033 kW/s2.

In a commercial and public building context, Holborn et al. [25] also computed log-normal distribution parameters for the fire growth rate. However, the sample sizes were significantly reduced compared to the residential case . Results are given in Table 9.7.

Nilsson et al. [27] computed fire growth rate distribution parameters for commercial buildings based upon the data in the Swedish fire ‘Indicators, Data and Analysis’ (IDA). The IDA is a national database recording all rescue service responses. Given 2365 commercial fires, excluding arson, Nilsson et al. [27] like Holborn et al. [25] propose a log-normal distribution for the fire growth rate, with mean 0.011 kW/s2 and 95th percentile of 0.105 kW/s2.

Fire spread rates have been subject to further review, albeit no commonly accepted distributions are presented in the literature. Rackauskaite et al. [28] give spread rates which are computed from a range of large-scale fire experiments or real events . These are summarized in Table 9.8. Based upon operational experiences, Grimwood [29] gives faster spread rates, particularly for large open plan offices, as shown in Table 9.9. In the case of the LA Interstate Bank Fire, Grimwood notes that the fire took 66 min to travel 142 m laterally. In comparison, the fire spread laterally 80 m in 46 min at Telstar House, London.

9.3.1.2.2 Fire Load Density

Fire load density was subject to extensive surveys within CIB Working Group 14, led by Thomas [30]. Figures within CIB W14 influence the fire load densities adopted within the NFSC [19] and subsequently recommended in EN 1991-1-2:2002. Fire load density distributions within Eurocode 1 , Part 1.2, universally adopt a Gumbel type I distribution, with a coefficient of variation (COV) of 0.3. For different occupancy types, corresponding fire load densities are given in Table 9.10.

Zalok et al. [31] present a more contemporary review of fire loadings relative to the NFSC within commercial premises. The study undertook surveys in 168 commercial premises, concluding that fire load density generally followed a log-normal distribution. A summary of findings is given in Table 9.11.

Elhami Khorasani et al. [32] summarize the results of four fire load surveys across different countries. Data from the USA is then adopted to generate a new probabilistic model for fire load density , expressed in function of enclosure area. Equation (9.14) describes a probabilistic model for lightweight occupancies (office and clerical). Equation (9.15) gives a corresponding model for heavyweight occupancies (library, storage, file rooms):

where q is in units of MJ/m2; A f is the room size (m2); and ε is a random variable that is in accordance with the standard normal distribution.

The proposals of Elhami Khorasani et al. [32] are further developed by Xie et al. [33] who present a fire load density model for office and residential building types. Distributions for both occupancies are said to be log-normal with mean (μ qm – MJ/m2) and standard deviation (σ qm – MJ/m2) varying in function of enclosure area (A f – m2), as given in Eqs. (9.16) and (9.17) for offices, and Eqs. (9.18) and (9.19) for residential. The maximum enclosure sizes were c. 30 and 120 m2 for residential and offices, respectively:

9.3.1.2.3 Heat Release Rate

For fuel-controlled burning, the heat release rate density (\( {\dot{\mathrm{Q}}}^{{\prime\prime} } \) kW/m2) has importance. PD 7974–1:2019 [34], based on the work of Hopkin et al. [35], gives ranges for different occupancies as summarized in Table 9.12.

For most cases, \( \dot{Q^{{\prime\prime} }} \) corresponds with the maximum value estimated over the full duration of a fire. For hotels and industrial buildings, \( \dot{Q^{{\prime\prime} }} \) corresponds with the mean value estimated over a defined period of burning.

9.3.1.2.4 Ventilation Conditions

The breakage of openings and associated probabilities has not been subject to extensive research. Studies presented by Hopkin et al. [20] have adopted a uniform distribution between a lower and upper bound of 12.5% and 100% of the total opening area. This ventilation range has no basis other than to introduce some sensitivity to ventilation conditions . Analyses underpinning British Standard BS 9999:2017 by Kirby et al. [36] also adopt a uniform distribution but expressed in function of the opening size relative to the compartment floor area. These range from 5% to 40%, with opening heights varying from 30% to 100% of the compartment height.

The Joint Committee on Structural Safety (JCSS ) [37] provides a tentative probabilistic distribution for opening factor (O = A v√H/A t), where

with O max being the maximum opening factor (m0.5) assuming the failure of all non-fire-resisting external wall construction and ζ a random parameter that is log-normally distributed. The JCSS recommends that ζ have a mean of 0.2 and a standard deviation of 0.2, with any values exceeding unity suppressed so as not to generate negative opening factors.

9.3.1.2.5 Near-Field Temperature

Stern-Gottfried [38], in developing a travelling fire methodology, reviewed variability in near-field temperature at different points in time from ‘flashover’ in a limited number of large-scale fire experiments (Dalmarnock and Cardington fire tests). From this, it was determined that spatially resolved near-field temperatures followed a normal distribution. At different points in time, the mean near-field temperature varies. As such, Stern-Gottfried [38] proposes a relationship between average near-field temperature rise (ΔT avg) and coefficient of variation (δ). The relationship is defined by Eq. (9.21):

Stern-Gottfried [38] notes that Eq. (9.21) could be used as a nominal expression of the standard deviation for any temperature-time curve.

In the absence of alternative data, Hopkin et al. [20] used Eq. (9.21) to describe variability in the near-field temperature of travelling fires as part of a probabilistic framework. For travelling fires, Rackauskaite et al. [28] note temperatures of the near field to be in the range of 800–1200 °C. For a conservative case, early applications of the travelling fire method (e.g [39].) adopted a deterministic near-field temperature of 1200 °C. However, structural response is highly sensitive to this input, and therefore a treatment as a stochastic variable in some manner is advocated, e.g. a uniform distribution between 800 and 1200 °C.

9.3.2 Mechanical Action

9.3.2.1 Introduction

The uncertainty in load and associated actions on structures is discussed widely in the literature, e.g. JCSS [37], Ellingwood [40], and Holicky and Sykora [41]. The study of Ellingwood is specifically focused on fire events. The mechanical actions are traditionally subdivided into permanent actions and imposed (or variable) actions, and their variability with time is an aspect of particular relevance for structural fire engineering. Other mechanical loads include wind load, snow load, and earthquake load. The joint consideration of fire and, for example, earthquake loading may be necessary for exceptional building projects with high consequences of failure, i.e. a requirement for a very high reliability. Ellingwood however adopts a de minimis risk acceptance condition of the order of 10−6 for a 1-year reference period, which is subsequently applied as a screening probability for considering combinations of loads.

In design for normal conditions, the load variability is considered by a (characteristic or design) load with a low probability of being exceeded during the lifetime of the structure. Naturally, the day-to-day probability of occurrence of such high (design) load value is low, just as for the day-to-day probability of occurrence of a significant fire. Simultaneously taking into account both events would result in very onerous fire design requirements. Hence, the reduced safety and combination factors in the Eurocode (EN 1990) and in the ASCE design format (load and resistance factor design) (ASCE 7–16) lessen the required load under consideration for structural fire design compared to normal design conditions.

Thus, when directly taking into account the uncertainty in the permanent and imposed load effects , an arbitrary point in time (APIT) load is to be considered. This differs from the stochastic load models commonly considered for normal design conditions, where distribution models for the maximum load in a long (e.g. 50 years) reference period are applied.

A recent literature review by Jovanović et al. [42] of permanent and imposed load models applied in probabilistic structural fire engineering (PSFE) studies has shown that a large variation in models is commonly applied, notably for the imposed load effect . In summary, two distinct families of probabilistic models were discerned. These are revisited in the following Sect. 9.3.2.2, together with a discussion of background studies and recommended distributions to be applied in PSFE applications .

9.3.2.2 Permanent Load Model

9.3.2.2.1 Introduction

The permanent actions result from the self-weight of the structural elements and finishes, and can be considered time invariant [37, 40]. Hence, for the stochastic model of the permanent load, the models applied for normal design qualify as APIT permanent loads. This neglects possible combustion of finishes or structure, as is a standard and conservative approximation.

9.3.2.2.2 Background

Table 9.13 gives mean values and coefficient of variation for density γ for some common structural framing materials , while Table 9.14 lists standard values for the deviation of structural elements’ dimensions from their nominal values. Considering these standard deviations, the mean volume of a structural element exceeds its nominal value. The JCSS Probabilistic Model Code (PMC) however states in a simplifying manner that the mean value of the volume can be calculated directly from the mean value of the dimensions, and that the mean dimensions can be considered equal to their nominal value [37].

With both γ and the volume V described by a normal distribution, the self-weight l is in principle not normally distributed. However, when the coefficients of variation (COV) of the volume and density are small (which is generally the case), the resulting self-weight loads can nevertheless be assumed to be described by a normal distribution [43]. This has also been adopted in the JCSS PMC [37]. Considering Taylor expansion, the mean value of the self-weight μ l is given by μ γ·μ V. The coefficient of variation δ l can be estimated from Eq. (9.22), with standard values listed in Table 9.13 [41]:

When multiple materials or components contribute with their self-weight to the permanent load effect , this corresponds with an addition of normally distributed variables. When the constituent self-weights l i can be considered independent (with mean values μ li and standard deviation σ li), the overall permanent load is described by a normal distribution as well, with mean values μ G and standard deviation σ G given by

9.3.2.2.3 Commonly Applied Models in Probabilistic Structural Fire Engineering

When evaluating an existing building, evaluating the load effect through Eqs. (9.23) and (9.24) can be considered reasonable, and may allow a precise assessment of the appropriate probabilistic description of the permanent load. For general reliability studies and code calibration purposes, however, generally applicable models are preferred for generality (thus avoiding assumptions with respect to, e.g., floor build-up and materials).

As elaborated by Jovanović et al. [42], two models are commonly applied for describing the permanent load effect in PSFE . On the one hand, a series of studies (e.g [44].) and Iqbal and Harichandran [45] model the permanent load effect as a normal distribution with mean value equal to 1.05 G nom, with G nom as the nominal permanent load, and a coefficient of variation of 0.10. These studies have the 2005 study by Ellingwood as a common point of reference. The other series of studies (e.g [46, 47].) apply a normal distribution with mean value equal to G nom, and a COV of 0.10. These studies do not propose a differentiation of permanent load distribution by framing material.

9.3.2.2.4 Recommended Model for the Permanent Load

Considering the above, both commonly applied models agree on describing the permanent load by a normal distribution with a COV of 0.10. The normal distribution is in agreement with the background models. Taking into account Table 9.13, a COV of 0.10 can be considered a (practical) conservative assessment. Considering the discussed background information, the mean permanent load slightly exceeds its nominal value (in the order of 1% for concrete elements). It is considered preferable to neglect a 1% (order of magnitude) exceedance in accordance with the JCSS PMC recommendation than to set μ G equal to 1.05 G nom. This is considered to be compensated by the practical choice for a COV of 0.10.

In conclusion, the permanent load effect G is recommended to be described by a normal distribution, with mean equal to the nominal permanent load effect G nom, and COV of 0.10.

9.3.2.3 Live Load Model

9.3.2.3.1 Introduction

The live (or imposed) loads arise from a range of components, from building occupants to their possessions and movable items, like furniture. The total live load can be broken down into two components: (1) a sustained component and (2) an intermittent or transient component [37, 40, 41].

While both vary with time, by definition, a component of the sustained load is ever present—albeit its magnitude could vary. Figure 9.9 illustrates the difference between the sustained and intermittent live load components, adapted from Ellingwood [40].

Components of live load —sustained and intermittent, adapted from Ellingwood [40]

Normal people occupancy is generally included in the sustained load, e.g. Chalk and Corotis [48]. The intermittent live load on the other hand relates to exceptional events, such as overcrowding [48] or stacking of objects during refurbishing [37].

For PSFE , the arbitrary point in time (APIT) live load is of interest, and as the occurrence of the intermittent (transient) live load is by its conceptualization rare, it generally does not need to be taken into account simultaneously with fire exposure [40]. Ellingwood notes occurrence rates of c. 1/y and a duration of 1 day for the intermittent load. For a structurally significant fire occurrence rate of 10−6 per annum and duration of 4 h, the coincidence rate of a fire and intermittent live load is significantly below the proposed de minimis limit (10−6) leading Ellingwood to propose that the intermittent component be disregarded. While this can be considered sufficient for the general floor area of most buildings (e.g. offices, residential buildings), Jovanović et al. [42] state that care should be taken whenever the live load profile of the building has specific occurrence patterns or particular likelihood of overcrowding (e.g. sports stadia), or when considering buildings with high reliability requirements (e.g. high-rise structures). Figure 9.10 shows the coincidence rates of a 1-year returning intermittent live load and fire, for different compartment sizes and danger of activation (as defined in Table 9.6).

In the following, the APIT model for the sustained live load is discussed.

9.3.2.3.2 Background

The commonly applied live load models have been derived from load surveys conducted in the twentieth century.

Ellingwood and Culver [49] assessed an equivalent uniformly distributed APIT load Q from a 1974–1975 survey of US office buildings. The mean loads and COV are listed in Table 9.15 and include a nominal personnel load of 81 N/m2. Ellingwood and Culver report that no significant difference with UK data published in the early 1970s could be discerned, and list a gamma distribution as the appropriate distribution model.

Chalk and Corotis [48] list APIT sustained live loads for different occupancy types , taking into account data from multiple surveys (Table 9.16). Comparison with the office data listed in Table 9.15 confirms the order of magnitude values. Also Chalk and Corotis applied a gamma distribution in their calculations.

The JCSS PMC [37] tabulates live load distribution parameters as listed in Table 9.17 and recommends a gamma distribution for the APIT load . Reference is made to a limited number of documents, amongst which the 1989 CIB report [50] is of particular relevance. This report was drafted by Corotis and Sentler, which can reasonably be considered to imply a close relationship with the work presented in Table 9.16. The CIB report lists multiple surveys dating from 1893 to 1976. Looking into the PMC values for μ/Q nom, these are comparable to those listed in Table 9.16, with all categories except warehouses resulting in a value between 0.15 and 0.20.

With respect to the COV, the PMC specifies Eq. (9.25) for the standard deviation of the instantaneous imposed load. In this equation and Table 9.17, σ V is the standard deviation of the overall load intensity, σ U the standard deviation associated with the spatial variation of the load, A 0 an occupancy-specific reference area, A the loaded area, and κ an influence factor (commonly between 1 and 2.4; further taken as 2.2 for agreement with Ellingwood and Culver [49]). The COV for very large loaded areas is listed in Table 9.17 as COVinf, i.e. where the loaded area-dependent term in Eq. (9.25) reduces to zero. With the exception of the first-floor retail space, these COVs are smaller than those listed in Table 9.16. For small loaded areas, however, the COV resulting from Eq. (9.25) exceeds those in Table 9.16:

9.3.2.3.3 Commonly Applied Models in Probabilistic Structural Fire Engineering

With respect to the live load model , a wide variety of distribution models have been applied in PSFE . Not all studies however relate to APIT loads (for example using a load model for the maximum realization in a 50-year reference period instead). Limiting the discussion to APIT models, two families have been discerned in Jovanović et al. [42]:

-

1.

Gamma distribution with mean value μ/Q nom equal to 0.24 and COV of 0.60.

-

2.

Gumbel distribution with mean value μ/Q nom equal to 0.20 and COV of 1.10.

The first family has the 2005 Ellingwood study as a common point of reference. In this study, Ellingwood specifies μ/Q nom as being in the range of 0.24–0.50. In Ellingwood’s study (2005), reference is made to the data in Tables 9.15 and 9.16 and the underlying studies.

The second family models the APIT live load by the distribution for the maximum load in a 5-year reference period (i.e. ‘5y Gumbel distribution’). In essence, it is assumed that the imposed load can be modelled by a rectangular wave renewal process with a 5-year return period [51]. The 5-year return period corresponds with the expected time between renewals (changes in use and users [37]) for office buildings [41]. The specific distribution parameters listed above apply for office buildings designed in accordance with the Eurocode-recommended nominal (characteristic) imposed load of 2–3 kN/m2, considering the PMC load values , but can be used as a first approximation for other occupancies as well [41].

While both live load model families seem very distinct at first, the underlying data can reasonably be considered to be comparable, with both families linked to research by amongst others Corotis.

9.3.2.3.4 Recommended Model for the Imposed Load

The background documents agree on the use of a gamma distribution to describe the instantaneous sustained live load. Thus, it is adopted here as a recommendation based on precedent and considering the impossibility of negative values (note that the Gumbel distribution assigns a non-zero probability to negative realizations).

With respect to the distribution parameters, the background documents agree largely on the mean value μ for the sustained live load. Thus, for project-specific evaluations it is recommendable to define the mean sustained live load directly from listed data, such as the JCSS PMC [37]. The corresponding ratio μ/Q nom depends on the guidance-specific definition of Q nom. When defining Q nom through EN 1991-1-1:2002-recommended values, the ratio μ/Q nom is largely found to be in the range of 0.10–0.20. A value of 0.20 is considered reasonable for a first assessment for offices, residential areas, retail, hotels, and classrooms. A similar result is obtained for office buildings in accordance with ASCE 7–16, considering a Q nom recommendation of 65 psf.

The COV for the sustained live load can be considered dependent on the loaded area. For large loaded areas, a COV of 0.60 is found reasonable (see Table 9.17). For smaller loaded areas the COV is higher. Project-specific evaluations are again recommended when applicable. For general reliability assessments, a COV of 0.95 is recommended. This corresponds with the COV for office areas and classrooms at approximately 120 m2 loaded area. This value also results in a comparable ambient design reliability index compared to the Gumbel model with COV of 1.1 (which was used in the Eurocode background documents , i.e [47].).

In summary, for non-project-specific evaluations, excluding warehouses, the recommended model for the imposed load is given as follows:

-

For a large loaded area: Gamma distribution with μ/Q nom = 0.20, COV = 0.60.

-

For a small loaded area: Gamma distribution with μ/Q nom = 0.20, COV = 0.95.

9.3.2.4 Total Load Effect

9.3.2.4.1 Introduction and Commonly Applied Models

The models for the permanent load G and imposed load Q however do not convey the full story on the probabilistic modelling of mechanical actions. Additional stochastic factors are taken into account when combining the permanent and imposed load effects. Again, two distinct formulations are commonly applied: Eq. (9.26) with reference to Ravindra and Galambos [52] and Eq. (9.27) with reference to the JCSS PMC . Standard values for the stochastic variables are listed in Table 9.18:

Ravindra and Galambos [52] refer to Eq. (9.26) as an assumption, and explain that A and B are to be interpreted as characterizing the difference between computed and actual internal forces in the structure, while E is intended to characterize deviations introduced by characterizing a 3D structure into elements or subsystems and other simplifying assumptions (such as boundary conditions). They however do not mention a distribution type for these variables, and indicate that the mean values and COVs (as listed in Table 9.18) were ‘chosen’ and ‘assumed’ as ‘reasonable estimates based on data and judgements’, with further reference to a 1973 Washington University report.

The total load model of Eq. (9.27) is recommended in the JCSS PMC , where a difference is made in the recommended COV for K E in function of the considered load effect (axial load, moment). For frames, a COV of 0.1 is the higher value. Only for moments in plates the recommended value is higher at 0.2 [37]. The PMC provides no indication, however, as to the origin of these values. This formulation is nevertheless commonly applied in structural reliability calculations, and has been included in the background documents to the Eurocodes , e.g. Holicky and Sleich [47].

9.3.2.4.2 Recommended Model for the Total Load Effect

Neither of the above two models has extensive background available, and these are commonly applied based on precedent . The model of Eq. (9.27) is considered to have a greater authority considering its recommendation by the Joint Committee on Structural Safety, which is the common expert group on structural reliability of five international organizations (CEB, DIB, fib, IABSE, and RILEM).

Hence, the recommended total load model is given by Eq. (9.27) with K E being the model uncertainty for the total load effect , described by a log-normal distribution with mean 1.0 and COV 0.10.

Taking into account the recommended models for the permanent load G and the imposed load Q as defined above, and defining the load ratio χ by Eq. (9.28) (with nominal values corresponding with the characteristic values in the Eurocode design format ), the total load w is given in Fig. 9.11 relative to the nominal total load P nom = G nom + Q nom:

9.4 Materials and Applications

As stated in Sect. 9.1, one of the uncertainties that need to be addressed during structural analysis at high temperatures relates to properties of material during fire. This section provides an overview of existing studies on the subject.

9.4.1 Concrete

Strength of concrete is one of the primary properties that are required when analysing and quantifying performance of a concrete structural element (slabs, columns, beams, and walls) at normal or elevated temperatures. This section discusses the available test data on concrete strength retention factor and related temperature-dependent probabilistic models. When analysing reinforced concrete structures, the strength of reinforcement can be modelled following the discussion in the next section on steel material.

Qureshi et al. [53] compiled a database of existing tests on calcareous and siliceous concrete strength at high temperatures, keeping the two concrete types separate following a similar approach in the available deterministic Eurocode (EC) models [54]. A total of 242 data points for siliceous and 162 data points for calcareous concrete were collected. Concrete strength at high temperatures was normalized with respect to the measured strength (or average of multiple measurements) at 20 °C. A relatively large scatter in the data was observed across all temperature ranges. Qureshi et al. [53] followed two approaches to develop probabilistic models for the concrete compressive strength retention factor :

-

In the first approach, the data set was divided over different temperature groups with increments of 50 °C. Histograms for each temperature group were then constructed and compared with a number of different probability density functions (PDF) (e.g. log-normal, Weibull). The distribution that fits best over different temperature ranges and has a closed-form solution that can be implemented in computer codes was selected, and temperature-dependent functions for the parameters that would characterize the distribution were proposed.

-

In the second approach, the procedure by Elhami Khorasani et al. [55] was followed where a continuous temperature-dependent logistic function is fit to the data set using a Bayesian-based maximum likelihood calculation. In this approach, the logistic function can be a function of any form or defined with an existing deterministic function as the base (such as the EC model) with correction terms added to improve the fit to the data.

One important issue to be considered in developing probabilistic models at elevated temperatures is to ensure continuity and consistency in reliability appraisals in transition between ambient and elevated temperatures; therefore, it is important to note the existing assumptions that are applied at 20 °C. Holicky and Sykora [41] recommended the mean concrete strength at ambient temperature to be defined as the characteristic concrete strength plus two standard deviations, following a log-normal distribution with a coefficient of variation (COV) varying from 0.05 to 0.18 depending on the production procedure.

Using the approaches and considerations explained above, Qureshi et al. [53] proposed a Weibull distribution with parameters λ and k for calcareous and siliceous concrete strength retention factors, given that closed-form solutions of the Weibull distribution PDF f(x; λ, k) and quantile (i.e. inverse cumulative density function) Q( p; λ, k) are available, shown in Eqs. (9.29) and (9.30). In developing the model, distribution parameters at 20 °C were constrained to closely follow Holicky and Sykora’s recommendation. The size of data points above 700 °C was limited for calcareous concrete; therefore, in order to extend the model beyond 700 °C, it was assumed that the retention factor equals to zero at 1000 °C:

Figures 9.12 and 9.13 show the data set in comparison with the mean, and 5–95% quantiles of the probabilistic models based on the Weibull distribution fit for both calcareous and siliceous concrete. Eqs. (9.31)–(9.34) provide parameters of the Weibull distribution λ and k as a function of temperature T in Celsius.

For siliceous concrete :

For calcareous concrete :

Using the second approach explained above, Qureshi et al. [53] proposed continuous logistic functions as shown in Eqs. (9.35) and (9.36), where T is temperature in Celsius and ε is the standard normal distribution. A value of zero for ε generates the median of the function. Figures 9.14 and 9.15 show the data sets in comparison with the median and two standard deviation envelopes of the logistic functions for both calcareous and siliceous concrete.

For siliceous concrete :

For calcareous concrete :

Qureshi et al. [53] utilized the developed models from two approaches and evaluated probability of failure of reinforced concrete column sections under axial load. It was confirmed that the two models provide similar distribution of failure time and the results are not critically sensitive to the model choice.

9.4.2 Steel

For structural steel elements, the primary uncertainties of interest are the material properties and the variability in section profile. The former is discussed within this section, while the latter, as discussed before, carries less uncertainty compared to other random variables involved at elevated temperatures.

Elhami Khorasani et al. [55], Stephani et al. [56], and Qureshi et al. [53] presented a review of yield strength retention factors and discussed different probabilistic models for this parameter. The data set for the yield strength of steel used in the three studies was based on the data collected by the National Institute of Standards and Technology (NIST) [57]. The NIST study considered the sensitivity of stress-strain behaviour of structural steel to strain rate. Therefore, the data only include tests conducted with a strain rate that comply with the allowed strain rate in testing standards.

Steel yield strength at ambient temperature is typically defined as the 0.2% offset. However, the Eurocode (EC) retention factors at elevated temperatures [58] are based on the strength at a strain equal to 2%, which includes strain-hardening effects at lower temperatures. Such an effect is less significant at higher temperatures, where failure of a steel structure is expected to occur. Therefore, the NIST data set and existing studies, as listed above, considered measured data at both 0.2% offset and 2% strain. A total of 764 data points based on the 0.2% offset, covering a temperature range of 20–1038 °C, and 387 data points based on strain at 2% with a temperature range of 20–940 °C, were used to perform statistical analysis and quantify uncertainty of steel yield strength at elevated temperatures.

As discussed in the previous section, continuity with reliability appraisals at ambient temperature is important. Holicky and Sykora [41] recommended a log-normal distribution with mean equal to the characteristic yield strength plus two standard deviations, and COV of 0.07 to quantify uncertainty at ambient temperature. In the collected database, the retention factors were normalized based on the measured yield strength (or average strength in case of multiple measurements) at 20 °C. The majority of data points at 20 °C in the 0.2% data set are close to unity. It is hypothesized that the obtained variability at 20 °C for the 0.2% offset results from very limited intra-batch variability, together with limited inter-batch variability resulting from the different measurement sources. On the other hand, the 2% data set shows a scatter of data at 20 °C, reflecting uncertainty in material performance.

Stephani et al. [56] applied the first approach, based on a series of temperature groups and their histograms (as explained for the case of concrete material in the previous section) on the 0.2% data set. Two different statistical models , namely log-normal and a beta distribution bound by three times the standard deviation on both sides of the mean, were considered with varying means and COVs as a function of temperature. Stephani et al. coupled the proposed models with recommended statistics of steel yield strength at ambient temperature. Qureshi et al. [53] extended the work of Stephani et al. [56] by proposing continuous functions for model parameters varying with temperature where continuity at ambient temperature was also incorporated within the model. Qureshi et al. [53] proposed a log-normal distribution for 0.2% data. Equations (9.37) and (9.38) describe the model parameters ; Fig. 9.16 shows the measured data and the model. Qureshi et al. [53] applied the same approach to the 2% data, except in this case the model reflects the scatter in data at 20 °C rather than constraining the model to the recommended distributions for reliability measures at ambient temperature for 0.2% strain offset. Equations (9.39) and (9.40) describe the model parameters , and Fig. 9.17 shows the measured data and the model. In addition, Elhami Khorasani et al. [55] proposed a continuous logistic function for the 2% data. Figure 9.18 and Eq. (9.41) describe the model where T is temperature in Celsius, k y, θ is the EC steel retention factor , and ε is the standard normal distribution.

Parameters of log-normal distribution for 0.2% data:

Parameters of log-normal distribution for 2% data:

Logistic function for 2% data:

with \( {r}_{logit}=\ln \frac{\left({k}_{y,\theta }+{10}^{-6}\right)/1.7}{1-\left({k}_{y,\theta }+{10}^{-6}\right)/1.7} \).

The models discussed above, when applied to cases of isolated steel column subject to ISO 834 heating, gave comparable distributions of failure temperature for a particular loading condition. The logistic model (derived at 2% strain) implicitly captures the effect of strain hardening at lower temperatures, meaning that the choice of probabilistic model is important for cases where element failure could be expected at low (less than 400 °C) temperatures.

Elhami Khorasani et al. [55] also proposed a logistic function to capture uncertainty in the modulus of elasticity of steel , shown in Fig. 9.19 and Eq. (9.42) where T is the temperature in Celsius and ε is the standard normal distribution. The measured data set is from the National Institute of Standards and Technology (NIST) collected database [57]. The NIST data set can be grouped into three categories based on their measurement method: (1) static, (2) dynamic, and (3) unknown. Elhami Khorasani et al. [55] noted that previous discussions on the measurement method indicated that dynamic testing, in general, results in unconservative predictions of steel modulus. In addition, the analysis of structures under fire is equivalent to static thermal loading, and therefore, the data measured by dynamic testing were disregarded:

9.4.3 Timber

Timber is a graded material with highly variable properties. The material properties can be grouped into reference properties that are considered explicitly, while other properties are only assessed implicitly. Bending strength R m, bending modulus of elasticity E m, and density ρ are referred to as the reference material properties . JCSS [16] provides a list of expected values and coefficient of variation of timber properties such as the tension strength parallel or perpendicular to the grain, compression strength parallel or perpendicular to the grain, shear modulus, and shear strength as a function of the reference properties. For European softwood , JCSS [16] specifies a log-normal distribution for bending strength R m and bending modulus of elasticity E m with COVs of 0.25 and 0.13, respectively, and normal distribution with COV of 0.1 for density ρ. For glue-laminated timber , R m follows a log-normal distribution with COV of 0.15, but E m and ρ have similar distributions as the European softwood. More details can be found in JCSS (2006).

Three methods have been proposed in the literature on how to conduct structural analysis of timber structures at elevated temperatures. Brandon [59] discussed details of these approaches. One of these methods takes into account the mechanical properties of the material; the other two approaches calculate reduced capacity of a member during fire by reducing the cross section of the element as a function of the char layer. Charring rate is one of the basic quantities of assessment of fire resistance of wooden structural members. Due to the inherent variabilities and uncertainties involved in the fire exposure and the charring process, the charring rate is a factor with substantial uncertainty, which should be taken into account in any assessment of fire resistance of wooden members, in particular in the probabilistic approaches to assess fire resistance. In addition to the reduced dimensions from charring, the reduced cross-section method requires consideration of reduced material properties in a layer ahead of the char front, where timber has lost some strength due to increased temperature but has not charred. This layer is assumed to have zero strength in calculations.