Abstract

The rise of Performance Based Design methodologies for fire safety engineering has increased the interest of the fire safety community in the concepts of risk and reliability. Practical applications have, however, been severely hampered by the lack of an efficient, unbiased calculation methodology. On the one hand, the distribution types of model output variables in fire safety engineering are not known, and traditional distribution types such as the normal and lognormal distributions may result in unsafe approximations. Therefore, unbiased methods must be applied which make no (implicit) assumptions on the type of probability density function (PDF). Traditionally, these unbiased methods are based on Monte Carlo simulations. On the other hand, Monte Carlo simulations require a large number of model evaluations and are therefore too computationally expensive when large and nonlinear calculation models are applied, as is common in fire safety engineering. The methodology presented in this paper avoids this deadlock by making an unbiased estimate of the PDF based on only a very limited number of model evaluations. The methodology is known as the Maximum Entropy Multiplicative Dimensional Reduction Method (ME-MDRM) and results in a mathematical formula for the PDF describing the uncertain output variable. The method can be applied with existing models and calculation tools and allows the model evaluations to be run in parallel. The example applications given in the paper stem from the field of structural fire safety and illustrate the excellent performance of the method for probabilistic structural fire safety engineering. The ME-MDRM can, however, be considered applicable to other types of engineering models as well.

1 Introduction

Fire safety engineering in general and structural fire safety engineering in particular are closely linked to the concepts of risk and reliability. Although this is not always fully acknowledged, making decisions on (structural) fire safety requirements inherently entails balancing the improbability of a severe fire with the damage this fire may induce if it does occur. While in the past fire safety requirements were generally prescriptive in nature, the advent of Performance Based Design (PBD) for fire safety engineering has highlighted questions related to cost optimization and the definition of performance targets. Consequently, it comes as no surprise that there is growing interest within the fire safety community in topics of risk and reliability.

Applying reliability concepts to fire safety requires evaluating the uncertain response of the design in case of fire. Traditionally this is done using Monte Carlo simulations (MCS). Applications in the area of structural fire safety can be found in, amongst others, [16] and [12]. In the field of evacuation modelling MCS have been applied by [35], while [7] applied MCS for evaluating fire spread in forest fires, and [17] used MCS for assessing the implicit reliability requirements incorporated in current UK standards. These publications in different fields of fire safety engineering illustrate the interest of the fire safety community in the application of probabilistic methods. However, the Monte Carlo methodology applied in the references above requires a very large number of model realizations and therefore becomes impractical when computationally expensive models are used or when evaluating small occurrence probabilities. More computationally efficient methods exist, see for example [2, 11, 15, 28, 32], but these can be difficult to implement and/or require prior knowledge of the type of probability density function describing the uncertain response. This knowledge of the distribution of the response is generally not available for fire safety problems, which is why it is important to use unbiased methods (i.e. methods without distributional assumptions on the model output). Monte Carlo simulations are unbiased, but as mentioned above the application of MCS becomes impractical for computationally expensive models. In conclusion, the application of risk- and reliability-based concepts to (structural) fire safety engineering is currently severely hampered by the lack of a computationally efficient and unbiased methodology for evaluating the stochastic response of systems exposed to fire.

In this paper a computationally efficient methodology is adapted and, for the first time, applied to structural fire safety. The methodology makes an unbiased estimate of the probability density function (PDF) which describes the uncertain response of a fire-exposed structure or structural member, while requiring only a very limited number of model evaluations. Although the example applications illustrating the efficiency of the proposed method stem from the field of structural fire safety engineering, the method can be considered generally applicable to any engineering model.

2 Why Evaluate the Probability Density Function (PDF)?

2.1 Concepts of Failure and Failure Probabilities

For general engineering models, any model output \( Y \) can be considered as a function of a number of input variables \( X_i \). When evaluating, for example, the maximum temperature \( T_{max} \) in a fire-engulfed compartment, \( T_{max} \) will be a function of, amongst others, the fire load density, the compartment dimensions, the lining thermal properties, and the ventilation characteristics. Some variables may be well known and can consequently be modelled by a single deterministic value; in the model for \( T_{max} \) this will generally be the case for the compartment dimensions. Other variables may be less clearly defined and should be modelled as stochastic variables; in the example above, the fire load density falls in this latter category. Because the input variables \( X_i \) are uncertain, the model output \( Y \) will be uncertain as well. Denoting with \( \mathbf{x} \) the vector of stochastic variables and with \( h \) the modelled relationship, this yields:

\[ Y = h(\mathbf{x}) \qquad (1) \]

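Considering a failure criterion for the output variable \( Y \), for example failure if \( Y > y_{limit} \), the probability of failure \( P_f \) can be calculated from the PDF \( f_y \) describing \( Y \), through:

\[ P_f = P(Y > y_{limit}) = \int_{y_{limit}}^{\infty} f_y(y)\,\mathrm{d}y \qquad (2) \]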

The calculated probability of failure should subsequently be compared with an acceptability limit. For example, for structural failure in ambient design conditions, EN 1990 [6] specifies an acceptability limit \( P_{f,50} = \Phi(-3.8) = 7.23 \times 10^{-5} \) for structures with normal failure consequences when considering a 50 year reference period, where \( \Phi \) denotes the standard cumulative normal distribution function. When considering a 1 year reference period, the acceptability limit reduces to \( P_{f,1} = \Phi(-4.7) = 1.30 \times 10^{-6} \). These target safety levels are commonly applied at the level of structural elements through the design rules in the material-specific Eurocodes. As briefly acknowledged in EN 1990, application at the element level can generally be expected to result in a safety level of the global structure exceeding this target. This is, however, not always the case: for example, a series system of members that are not fully correlated will have a higher failure probability than each of its constituent members. For a further discussion on reliability concepts and their application in the Eurocode design format, reference is made to [14].
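The two EN 1990 targets quoted above follow from the reliability indices through \( P_f = \Phi(-\beta) \). A minimal Python sketch (standard library only) reproduces them; the last computation additionally assumes that failures in different years are independent, an assumption not made in the text, to show how the 1-year target translates to a 50-year horizon.

```python
import math


def std_normal_cdf(x: float) -> float:
    """Standard normal CDF Phi(x), written with the complementary error function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))


p_f50 = std_normal_cdf(-3.8)  # 50-year target, approx. 7.23e-5
p_f1 = std_normal_cdf(-4.7)   # 1-year target, approx. 1.30e-6

# Assumption (illustration only): independent years, so
# P(failure within 50 years) = 1 - (1 - P_f,1)^50
p_f50_from_p_f1 = 1.0 - (1.0 - p_f1) ** 50
print(f"{p_f50:.3e} {p_f1:.3e} {p_f50_from_p_f1:.3e}")
```

Under that independence assumption the 1-year target corresponds to roughly \( 6.5 \times 10^{-5} \) over 50 years, i.e. close to, but not exactly, the 50-year value \( \Phi(-3.8) \).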

In fire safety engineering, failures are conditional on the (uncertain) occurrence of fire. This is, for example, the case when considering a fire-induced structural failure or the failure of a smoke control system to maintain tenable conditions in a (single) staircase. Therefore, in fire safety engineering a conditional failure probability \( P_{f,fi} \) is evaluated (i.e. failure given the occurrence of fire). When a failure criterion is known, the conditional probability \( P_{f,fi} \) can be calculated from (2). If an acceptability limit has been defined with respect to the conditional failure probability, the acceptability of the design can subsequently be determined.

Often no explicit acceptability limit exists, but the current legislation in many countries allows for performance-based design solutions when it can be shown that the design is at least as safe as accepted prescriptive designs. In those situations, a comparison of the conditional failure probabilities \( P_{f,fi} \) allows the acceptability of the proposed design to be determined.

However, on its own the conditional failure probability does not provide any information on the appropriateness of the level of safety investment associated with the (accepted) design solution. When evaluating optimum levels of investment, as discussed in the next section of this paper, an absolute (annual) failure probability is required in order to determine the risk associated with fire-induced failures. This annual probability of failure \( P_{f,1} \) can be calculated from the conditional failure probability \( P_{f,fi} \) through (3), where \( p_{fi,1} \) is the annual probability of a significant fire:

\[ P_{f,1} = P_{f,fi} \cdot p_{fi,1} \qquad (3) \]

The qualitative reference to a “significant fire” acknowledges that a fire threatening the good performance of a smoke control system may be different from a fire threatening structural stability. If an acceptability limit for the annual failure probability is known, the acceptability of the design can be determined.

Evaluation of (3) is illustrated by the fault tree of Figure 1, for a situation where the “significant fire” is defined as a fully developed (post-flashover) fire, as is common in the area of structural fire safety. The conditional probability of failure of the system given a post-flashover fire is given by \( P_{f,fi} \) and will need to be evaluated using a specialized and possibly computationally expensive model. To calculate the overall annual probability of failure \( P_{f,1} \), this conditional probability \( P_{f,fi} \) is multiplied by the annual probability of fire ignition \( p_{ig,1} \), the probability \( p_{f,u} \) that the users fail to suppress the fire, the probability \( p_{f,fb} \) of no early suppression by the fire brigade, and the probability \( p_{f,s} \) that the suppression system fails to suppress the fire (\( p_{f,s} = 1 \) when no suppression system is present). Values for these suppression parameters are, for example, listed in [9], and fire ignition frequencies can be found in e.g. [5].
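The fault tree of Figure 1 reduces to a product of branch probabilities. The sketch below encodes that product; the function name and all numerical inputs are hypothetical placeholders chosen only to show the arithmetic, not values taken from [5] or [9].

```python
def annual_failure_probability(p_f_fi, p_ig_1, p_f_u, p_f_fb, p_f_s=1.0):
    """P_f,1 for the fault tree of Figure 1 (hypothetical helper).

    p_f_fi : conditional failure probability given a post-flashover fire
    p_ig_1 : annual probability of fire ignition
    p_f_u  : probability that the users fail to suppress the fire
    p_f_fb : probability of no early suppression by the fire brigade
    p_f_s  : probability that the suppression system fails (1.0 if absent)
    """
    for p in (p_f_fi, p_ig_1, p_f_u, p_f_fb, p_f_s):
        if not 0.0 <= p <= 1.0:
            raise ValueError("all inputs must be probabilities in [0, 1]")
    # The product of the fire-related branches plays the role of p_fi,1 in (3)
    p_fi_1 = p_ig_1 * p_f_u * p_f_fb * p_f_s
    return p_f_fi * p_fi_1


# Hypothetical inputs (no suppression system, so p_f_s keeps its default of 1.0)
p_f1_example = annual_failure_probability(p_f_fi=0.1, p_ig_1=1e-2, p_f_u=0.4, p_f_fb=0.1)
```

The expensive part in practice is \( P_{f,fi} \); the other factors are tabulated statistics, which is why the remainder of the paper focuses on the conditional response.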

Note that probabilistic calculations and an explicit evaluation of the failure probability are often avoided by considering characteristic values for the uncertain input variables, for example a 90% quantile for the fire load density. While this procedure seems very similar to the use of characteristic values and partial safety factors in traditional prescriptive design calculations, the two are fundamentally different: a system of prescribed characteristic values and partial safety factors is (or should be) based on underlying full-probabilistic calculations of \( P_f \) and a comparison with an (implicit) acceptability limit. In other words, when applying prescriptive design rules, the achievement of an acceptably low probability of failure can be assumed to result from the combination of characteristic values, safety factors and conservative assumptions, but this does not hold for innovative performance-based design solutions. For true reliability-based design solutions, an explicit evaluation of \( P_f \) is required, and therefore the PDF \( f_y \) has to be determined.

2.2 A Note on Acceptability Limits

Evaluating the acceptability of a design solution after evaluating Equation (2) or (3) presupposes the existence of acceptability limits. Unfortunately, these limits are not always clearly defined. Implicitly referring to Equation (3), it is sometimes even questioned in fire safety engineering whether any requirement is necessary in case of a low probability of occurrence \( p_{fi,1} \) of a significant fire.

In the area of smoke control systems for example, it is sometimes argued that the system can be designed considering a sprinklered design fire. Only considering a sprinklered fire for design purposes implies assuming that the combined probability of fire ignition and sprinkler failure is so low that the consequences resulting from a smoke control failure in case of a non-sprinklered fire can be deemed acceptable from a risk perspective.

Similarly, for structural fire safety it is sometimes questioned whether a significant fire rating of the structural elements makes sense economically, considering the low probability of occurrence of a fully developed fire and considering that a low expected fire load and beneficial ventilation characteristics may limit the fire severity. Note that the amount of fire rating which is considered ‘significant’ will depend on the specific characteristics of the design, among which the occupancy classification and the height of the structure; see [17] for structural fire rating requirements according to UK legislation. As for the smoke control example above, not requiring structural fire resistance implies assuming that the probability of a significant fire is sufficiently low to make a structural failure acceptable from a risk perspective. One well-known methodology which limits structural fire resistance requirements as a function of the probability of fire occurrence is the Natural Fire Safety Concept [9]. When applying the Natural Fire Safety Concept (NFSC), the annual failure probability calculated by (3) is compared to the EN 1990 target failure probability (1 year reference period) discussed earlier. The implementation of the NFSC has, however, encountered resistance, and the NFSC is currently, for example, not accepted in the UK. Considering the discussion above, it is important to fully understand the implied loss acceptance when using the NFSC to argue that no fire resistance is required; see also the discussion note in [30].

Although the limited qualitative justification paraphrased above cannot be considered sufficient grounds in itself for limiting investments in fire safety, it must be acknowledged that the occurrence rate of significant fires is low. This justifies a lower safety investment compared to other events with equally severe consequences but a higher occurrence rate. A scientifically and mathematically sound methodology for incorporating the uncertain occurrence of future extreme events when optimizing investments in safety has been presented by [23]. This methodology is known as Lifetime Cost Optimization (LCO) and balances additional safety investments against reductions in uncertain future losses when determining the optimum design solution. In its basic form, this optimum safety investment is determined by maximizing the utility function \( Z \) in Equation (4), with \( B(\mathbf{p}) \) the utility derived from the structure’s existence, \( C(\mathbf{p}) \) the initial cost of construction, \( D(\mathbf{p}) \) the costs due to failure, and \( \mathbf{p} \) the vector of design parameters \( p_i \) considered for optimization:

\[ Z(\mathbf{p}) = B(\mathbf{p}) - C(\mathbf{p}) - D(\mathbf{p}) \qquad (4) \]

This is conceptually illustrated in Figure 2. All contributions to the total utility \( Z \) have to be evaluated at a single reference point in time (for example, as a present value) through the application of a discount rate; see [20] for a discussion of discounting the utility of safety investments.

For the discussion here, it is mainly the failure cost \( D \) which is of interest, as it is assumed that the benefit \( B \) derived from the structure’s existence can often be considered independent of the level of safety investment, see [24]. The failure cost \( D \) depends, amongst others, on the costs \( C_F \) incurred at the time of failure for a single failure event, on the discount rate, and on the probability density function of future failure occurrences. The costs \( C_F \) can for example be evaluated through the framework presented in [19]. The discount rate is an economic parameter required to appreciate future costs, see [24] and [20]. As derived in [29], the probability density function of future failure occurrences can be calculated from the conditional failure probability \( P_{f,fi} \). Consequently, the derivation of optimum levels of safety investment also requires an evaluation of Equation (2). For a more detailed discussion and an introduction to concepts of Lifetime Cost Optimization for fire safety engineering, see [29] and [30].

It is noted without detailed discussion that not only the definition of acceptability limits is challenging, but also the definition of failure criteria is a difficult task at best. For example in the area of structural safety, straightforward strength criteria can be defined for statically determinate members, but for structural systems no such criteria exist. This leads to a number of difficult questions, e.g. whether local failure of secondary beams is considered acceptable, and whether the local collapse of the ceiling of a fire compartment should be considered as structural failure when the overall stability of the building is maintained.

On a conceptual note, the above difficulties can in principle be avoided, as it is not the (conditional) probability of a specific type of “failure” per se that is of interest, but the (conditional) probabilities of all possible states of the system in response to a fire. Each possible state of the system corresponds to a different total cost, taking into account the level of material damage, losses to human life and limb, and immaterial losses. Integrating the total costs over all possible damage states for a given design solution gives an assessment of the expected costs in case of the occurrence of a specific type of fire. Integrating over all possible fires allows the failure costs associated with different proposed design solutions to be compared, without requiring (in principle) an explicit definition of failure criteria. This may allow discussions on acceptability limits and failure criteria to be combined in a single methodology. This is not further investigated here, but again the evaluation of the uncertain response of the system is identified as a necessity. Consequently, knowledge of the PDF is also highly beneficial for proposing failure limits and acceptability criteria, and for cost optimization.

2.3 Traditional (Implicit) PDF Evaluation

Evaluating the PDF of an uncertain model output Y is traditionally done through Monte Carlo simulations (MCS), see [3]. Monte Carlo methods are based on generating a large number of random input vectors x and evaluating the corresponding model output. By doing so, a histogram of the output variable Y is obtained. However, when interested in extreme values of the model output Y—as is generally the case when considering the failure of a safety system—a very large number of Monte Carlo simulations is required in order to obtain a sufficient precision.
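The precision argument can be made quantitative with the standard binomial result for crude Monte Carlo: with \( N \) runs, the estimator \( \hat{P}_f = N_{fail}/N \) has a coefficient of variation of \( \sqrt{(1-P_f)/(N P_f)} \). The small sketch below (function names are illustrative) evaluates this and inverts it to the number of runs needed for a target precision.

```python
import math


def mcs_estimator_cov(p_f: float, n_runs: int) -> float:
    """Coefficient of variation of the crude MCS estimator N_fail / N (binomial)."""
    return math.sqrt((1.0 - p_f) / (n_runs * p_f))


def mcs_runs_needed(p_f: float, target_cov: float) -> float:
    """Number of crude MCS runs for which the estimator reaches target_cov."""
    return (1.0 - p_f) / (p_f * target_cov ** 2)


# A failure probability of 1e-4 estimated with a 10% coefficient of variation
n_required = mcs_runs_needed(1e-4, 0.10)     # about one million model runs
cov_10000 = mcs_estimator_cov(1e-4, 10_000)  # about 100% with 10000 runs
```

Each of these runs is a full evaluation of the (possibly expensive) fire model, which is what makes crude MCS impractical for small probabilities.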

Due to the requirement for a large number of simulations, the computational time can easily become infeasible when applying MCS in combination with computationally expensive models. More efficient adaptations of MCS do exist, for example Importance Sampling [8] and Markov Chain Monte Carlo [13]. However, while reducing the number of required model evaluations, these methods do not fundamentally alter the concept of running a large number of model evaluations to investigate the uncertainty of the model output. Alternatively, methods can be used which make a (relatively) efficient estimate of the moments (distribution parameters) of the output variable Y, see for example Latin Hypercube Sampling [22]. An estimate of the PDF describing Y is subsequently made by assuming the type of PDF describing Y and substituting the calculated moments. Assuming a PDF type can, however, prove inappropriate in fire safety engineering, as discussed in the introduction.

3 An Unbiased and Computationally Efficient Method

3.1 The ME-MDRM

Recently, a computationally very efficient method has been developed by [34]. The method is known as the (Fractional-Moment) Maximum Entropy Multiplicative Dimensional Reduction Method (ME-MDRM) and has been successfully applied to computationally very demanding structural Finite-Element calculations by [4]. The method makes an unbiased estimation of the PDF describing the uncertain model output Y by using the criterion of maximum entropy on a set of calculated model outputs or observed test results. The calculation concept for the maximum-entropy estimation is considered to be the mathematically correct procedure for avoiding biases with respect to the unknown PDF type or shape [18]. Furthermore, [21] and [34] propose the use of fractional moments for the maximum-entropy calculation, since these fractional moments are found to result in more stable estimates.

The computational requirements of the method are reduced by considering Gaussian interpolation—instead of crude Monte Carlo simulations—for calculating the aforementioned fractional moments. A further reduction of computational requirements is obtained by considering multiplicative dimensional reduction.

For a standard application of the method as proposed by [34], the total number of model evaluations required for application of the method equals nL + 1, with n the number of stochastic parameters and L the number of Gauss integration points. Consequently, in case of 5 stochastic parameters and 5 Gauss integration points, only 26 model evaluations are required, compared to thousands of model evaluations required for the application of traditional Monte Carlo methods. However, the required number of ME-MDRM model evaluations can be further reduced to n (L − 1) + 1, as shown below.
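The evaluation counts quoted above follow directly from the structure of the method: one run at the median point plus one run per Gauss point per variable, where for an odd number of Gauss points the central point coincides with the median run. A small helper (hypothetical name) makes the arithmetic explicit.

```python
def me_mdrm_runs(n: int, L: int = 5, reuse_median: bool = True) -> int:
    """Number of model evaluations for n stochastic variables and L Gauss points.

    reuse_median=False gives the nL + 1 count of the standard application in [34];
    reuse_median=True applies the reduction to n(L - 1) + 1, valid for odd L only.
    """
    if reuse_median and L % 2 == 1:
        return n * (L - 1) + 1
    return n * L + 1


print(me_mdrm_runs(5, reuse_median=False), me_mdrm_runs(5))
```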

Furthermore, the method can very easily be applied together with existing programs and models.

3.2 Computational Efficiency

If the model evaluation is computationally very expensive, the total time required for the probabilistic evaluation is governed by the number of model evaluations. Under this assumption, the computational efficiency of the ME-MDRM compared to an alternative method is fully determined by the number of model realizations required by that alternative method. In order to make an indicative comparison with other commonly used methods, a distinction must be made between biased and unbiased estimates.

Only Monte Carlo based methods and Maximum Entropy applications allow the user to make an unbiased estimate of the PDF. Note that here the denomination ‘Monte Carlo based methods’ refers to all types of methods whose PDF estimate is based on performing a large number of model evaluations; this includes methods based on deterministic sampling schemes. The relative efficiency gain obtained by the ME-MDRM is then defined by the ratio \( N_{MC}/(4n + 1) \), with \( N_{MC} \) the number of evaluations required for application of the Monte Carlo based method, considering 5 Gauss integration points for the ME-MDRM as discussed further. As long as the number of stochastic variables is small (say 10 stochastic variables), the ME-MDRM will easily be an order of magnitude faster than the Monte Carlo based method. For the crude MCS results presented further (10000 MCS), the ME-MDRM evaluation was between 150 and 500 times faster than the MCS.
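The efficiency ratio \( N_{MC}/(4n + 1) \) can be tabulated directly. The sketch below (function names are illustrative) evaluates it and also returns the largest number of stochastic variables for which the ME-MDRM remains at least a chosen factor faster than a Monte Carlo based method with a given budget of model runs.

```python
def speedup(n_mc: int, n: int) -> float:
    """Ratio N_MC / (4n + 1): ME-MDRM with 5 Gauss points versus N_MC model runs."""
    return n_mc / (4 * n + 1)


def max_variables_for_speedup(n_mc: int, factor: float = 10.0) -> int:
    """Largest n for which speedup(n_mc, n) >= factor."""
    return int((n_mc / factor - 1) // 4)


for n in (3, 5, 10, 20):
    print(n, round(speedup(10_000, n), 1))
```

With a budget of 10000 Monte Carlo runs, the ratio stays above 10 up to 249 stochastic variables, which illustrates why the order-of-magnitude gain is easily reached for the small numbers of variables considered here.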

For biased estimates, where the distribution of the model output Y has been chosen a priori, only the moments of this chosen distribution are assessed. The Maximum Entropy estimation presented further is based on the evaluation of the distributional moments, and thus a modified (reduced) version of the ME-MDRM methodology can directly be applied for this type of biased assessment. This reduced methodology will be denoted as MDRM–G and is presented in ‘Basic application example 2’. MDRM–G is based on the same model evaluations as the full ME-MDRM methodology and thus requires the same 4n + 1 model evaluations when considering 5 Gauss integration points. Again assuming that the number of model evaluations governs the total calculation time, the efficiency gain of the MDRM–G compared to the alternative biased method is given by \( N_{BM}/(4n + 1) \), with \( N_{BM} \) the number of model evaluations for the alternative biased method. This ratio will be smaller than the ratio obtained for the unbiased application above and may be close to unity in specific cases, when efficient alternative methodologies are applied.

This paper however strongly promotes the use of unbiased estimates for applications in fire safety engineering considering the lack of knowledge on standard distribution types for model outputs. For this unbiased assessment, the ME-MDRM is considered to result in a very significant gain in efficiency as discussed above.

3.3 The Calculation Methodology

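The methodology estimates the PDF describing the stochastic output variable \( Y \) through the principle of maximum entropy. As shown by [21], this principle results in an estimated PDF given by Equation (5), with \( m \) the estimation order, \( \lambda_i \) estimated coefficients and \( \alpha_i \) estimated exponents (with \( \alpha_0 = 0 \)):

\[ \hat{f}_y(y) = \exp\left( -\sum_{i=0}^{m} \lambda_i\, y^{\alpha_i} \right) \qquad (5) \]

The coefficient \( \lambda_0 \) normalizes the PDF, i.e. it ensures that the integral of the estimated PDF across the entire domain of \( Y \) equals 1, and is given by Equation (6):

\[ \lambda_0 = \ln \int \exp\left( -\sum_{i=1}^{m} \lambda_i\, y^{\alpha_i} \right) \mathrm{d}y \qquad (6) \]

The optimum values for the exponents \( \alpha_i \) and coefficients \( \lambda_i \) are determined by minimizing the Kullback–Leibler divergence between the true PDF and the estimated PDF [34]. Elaborating this mathematically results in the minimization criterion of Equation (7), with \( M_{Y}^{{\alpha_{i} }} \) the \( \alpha_i \)-th sample moment of \( Y \):

\[ \min_{\lambda_i,\,\alpha_i} \left[ \lambda_0 + \sum_{i=1}^{m} \lambda_i\, M_{Y}^{\alpha_i} \right] \qquad (7) \]

For a (random) set of \( N \) realizations \( y_j \), this sample moment is given by Equation (8):

\[ M_{Y}^{\alpha_i} = \frac{1}{N} \sum_{j=1}^{N} y_j^{\alpha_i} \qquad (8) \]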

Determining the set of exponents and coefficients which minimizes (7) can be realized through readily available optimization algorithms and results in a mathematical formulation for the estimated PDF in Equation (5). For many optimization algorithms (e.g. the simplex algorithm used in this paper), the solution may, however, be highly sensitive to the starting solution. As confirmed by Tagliani in personal correspondence with the author, it is advisable to perform a large number of independent optimizations, using a Monte Carlo simulation to generate the starting solutions. Alternatively, Latin Hypercube Sampling (LHS) can be applied for the starting solutions in order to increase efficiency; for a discussion on LHS see [22]. These repeated (randomized) optimizations increase the computational requirements compared to the original methodology presented in [34] but have the advantage of increased reliability of the final optimization result. As the computational requirements are centred on the evaluation of the (computationally expensive) model describing Y, and the optimizations only reuse the already calculated moments, the repeated optimizations do not require additional model evaluations and are computationally relatively inexpensive. The detailed methodology used in this paper for the minimization calculations is presented in the step by step calculation of ‘Basic Application Example 1’.
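A minimal Python sketch of the multi-start simplex minimization of (7) is given below, assuming NumPy and SciPy. It is not the author's implementation: the normalizing integral (6) is evaluated with the trapezoidal rule on a user-supplied finite grid of positive \( y \) values (assuming \( Y > 0 \) and a negligible density outside the grid), uniform random starting solutions stand in for the Monte Carlo or LHS starts mentioned above, and the moments are supplied as a function of \( \alpha \), so that no model evaluations are repeated during the optimization. All function names are hypothetical.

```python
import numpy as np
from scipy.integrate import trapezoid
from scipy.optimize import minimize
from scipy.special import gamma


def log_normaliser(lam, alpha, y_grid):
    """lambda_0 of Eq. (6), trapezoidal rule on y_grid (Y > 0 assumed)."""
    expo = -np.sum(lam[:, None] * y_grid[None, :] ** alpha[:, None], axis=0)
    g_max = expo.max()  # shift before exp() to avoid overflow
    return g_max + np.log(trapezoid(np.exp(expo - g_max), y_grid))


def me_objective(theta, moment_fn, y_grid, bounds):
    """Criterion (7): lambda_0 + sum_i lambda_i * M_Y^{alpha_i}."""
    m = theta.size // 2
    lam = theta[:m]
    alpha = np.clip(theta[m:], *bounds)  # exponents kept in the search range
    moments = np.array([moment_fn(a) for a in alpha])
    return log_normaliser(lam, alpha, y_grid) + lam @ moments


def fit_max_entropy(moment_fn, y_grid, m=4, n_starts=20, bounds=(-2.0, 2.0), seed=0):
    """Repeated Nelder-Mead (simplex) minimisation of (7) from random starts."""
    rng = np.random.default_rng(seed)
    best = None
    for _ in range(n_starts):
        x0 = np.concatenate([rng.uniform(-1.0, 1.0, m), rng.uniform(*bounds, m)])
        res = minimize(me_objective, x0, args=(moment_fn, y_grid, bounds),
                       method="Nelder-Mead",
                       options={"maxiter": 2000 * m, "xatol": 1e-6, "fatol": 1e-8})
        if best is None or res.fun < best.fun:
            best = res
    lam = best.x[:m]
    alpha = np.clip(best.x[m:], *bounds)
    lam0 = log_normaliser(lam, alpha, y_grid)
    pdf = np.exp(-lam0 - np.sum(lam[:, None] * y_grid[None, :] ** alpha[:, None], axis=0))
    return lam0, lam, alpha, pdf, best.fun


# Self-check: for Y ~ Exp(1), E[Y^a] = Gamma(1 + a) and f(y) = exp(-y) has the form (5).
y_grid = np.linspace(1e-6, 50.0, 5001)
lam0, lam, alpha, pdf, obj = fit_max_entropy(lambda a: gamma(1.0 + a), y_grid,
                                             m=1, n_starts=8, bounds=(0.2, 2.0))
```

The self-check uses a unit exponential distribution, whose PDF is exactly of the form (5) with \( m = 1 \) and \( \lambda_1 = \alpha_1 = 1 \); the minimum of (7) then equals the entropy of that distribution, which is 1. The exponent range is narrowed to [0.2, 2] in this check only because moments \( E[Y^\alpha] \) of the exponential distribution do not exist for \( \alpha \le -1 \).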

In principle, the estimation order m can be freely chosen, but while a higher estimation order will result in better agreement with the input data, a too-high estimation order may introduce spurious relationships for (unavoidably) limited sets of input data \( y_j \). Novi Inverardi and Tagliani [21] propose to evaluate the ME optimization of (5)–(7) for different m, and to choose the result for which the value of (7) is minimal, taking into account a penalty factor for increased m. For the applications further in this paper, this procedure does not, however, result in a clear preference for m, as the resulting minimized values are very close to each other; the preference may then depend on the starting solution or the optimization algorithm. This will be further investigated in follow-up research. In general, a third or fourth order (m = 3 or 4) has been found sufficient for the estimation of the PDF [4, 34]. In the application examples further, m = 4 will be maintained unless stated otherwise, as different analyses have shown that m = 4 is better capable of capturing non-traditional PDF shapes. In order to increase efficiency, the exponents \( \alpha_i \) can be limited to real numbers in the range [−2; 2].

In the discussion above, the evaluation of the sample moment \( M_{Y}^{{\alpha_{i} }} \) has not been elaborated in detail. As indicated by Equation (8), the sample moment can in principle be determined through a crude Monte Carlo simulation, but this would severely undermine the goal of avoiding the need to perform many computationally expensive evaluations of the model describing Y. This problem is alleviated by considering multiplicative dimensional reduction in conjunction with Gaussian interpolation. The derivations below assume that the probability density functions describing the stochastic input variables are known.

Applying multiplicative dimensional reduction, Equation (1) is conceptually approximated by Equation (9), with \( h_0 \) the model response when all \( n \) stochastic input variables are set equal to their median values \( \breve{\mu}_l \), and \( h_l \) the unidimensional cut functions defined by Equation (10):

\[ Y = h(\mathbf{x}) \approx h_0^{\,1-n} \prod_{l=1}^{n} h_l(x_l) \qquad (9) \]

\[ h_l(x_l) = h(\breve{\mu}_1, \ldots, \breve{\mu}_{l-1}, x_l, \breve{\mu}_{l+1}, \ldots, \breve{\mu}_n) \qquad (10) \]

The unidimensional cut functions effectively isolate the effect of the different stochastic input variables, and thus result in an approximation of the overall model response \( h(\mathbf{x}) \) when combined.

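Considering multiplicative dimensional reduction and assuming the different stochastic variables \( x_l \) to be independent, the kth moment of the stochastic model response \( Y \) is given by (11), with \( E[\cdot] \) the expectation operator and \( f_{x_l} \) the probability density function of \( x_l \):

\[ E[Y^k] \approx h_0^{\,k(1-n)} \prod_{l=1}^{n} E\left[ h_l^k(x_l) \right] = h_0^{\,k(1-n)} \prod_{l=1}^{n} \int h_l^k(x_l)\, f_{x_l}(x_l)\, \mathrm{d}x_l \qquad (11) \]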

The evaluation of the kth moment for the lth cut function is approximated with great accuracy by Gaussian quadrature. In its most basic form, Gaussian quadrature approximates the integral of a function \( g(z) \) over the entire domain of a standard normally distributed variable \( Z \) by a weighted sum over a limited number of well-chosen evaluation points \( z_j \), as given by Equation (12), with \( \phi \) the standard normal PDF, \( L \) the number of Gauss integration points (in most cases 5 integration points are sufficiently accurate), and \( w_j \) the associated Gauss weights [34]:

\[ \int_{-\infty}^{\infty} g(z)\, \phi(z)\, \mathrm{d}z \approx \sum_{j=1}^{L} w_j\, g(z_j) \qquad (12) \]

For L = 5, the Gauss points \( z_j \) and associated weights \( w_j \) are given in Table 1.
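Standard-normal Gauss points and weights for L = 5 can be regenerated with NumPy's probabilists' Gauss–Hermite rule `numpy.polynomial.hermite_e.hermegauss`, whose weights sum to \( \sqrt{2\pi} \) and therefore need rescaling to match the convention of (12). The last line is a sanity check: an L-point rule is exact for polynomials up to degree \( 2L - 1 \), so it must reproduce \( E[Z^8] = 105 \).

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss

# Probabilists' Gauss-Hermite rule: weight function exp(-z^2 / 2)
z, w = hermegauss(5)
w = w / np.sqrt(2.0 * np.pi)  # rescale so the weights sum to 1, as in Eq. (12)

for zj, wj in zip(z, w):
    print(f"z = {zj:+.6f}   w = {wj:.6f}")

# Exact for polynomials up to degree 2L - 1 = 9, e.g. E[Z^8] = 105
print(np.sum(w * z**8))
```

For odd L the rule contains the point \( z = 0 \), which is the property exploited further to reuse the median evaluation.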

Equation (12) can be generalized to (13) for variables \( X \) that are not standard normally distributed, with \( F_{x}^{ - 1} \) the inverse cumulative distribution function of \( X \):

\[ \int_{-\infty}^{\infty} g(x)\, f_x(x)\, \mathrm{d}x \approx \sum_{j=1}^{L} w_j\, g\!\left( F_x^{-1}\left( \Phi(z_j) \right) \right) \qquad (13) \]

This is a modification of the method used in [34], where different integration methodologies are suggested depending on the distribution type describing the stochastic variable. The additional approximation proposed here (i.e. the generalized Gauss integration) has the advantage of easier application of the methodology to any type of distribution and allows the number of model evaluations to be reduced further, as shown below. However, when the distribution function describing \( X \) is ‘non-traditional’ (for example a truncated or a discontinuous distribution), this additional approximation should not be applied.
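A sketch of the generalized rule (13), assuming NumPy and SciPy: the standard-normal Gauss points are mapped through \( F_x^{-1}(\Phi(z_j)) \) using the `ppf` method of a frozen `scipy.stats` distribution. The helper name and the Gumbel parameters below are hypothetical illustrations; the checks compare against closed-form results (the Gumbel mean \( \mathrm{loc} + \gamma_E \cdot \mathrm{scale} \), and the second moment of a normal variable, for which (13) reduces to (12) and is exact).

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss
from scipy.stats import gumbel_r, norm


def gauss_expectation(g, inv_cdf, L=5):
    """Approximate E[g(X)] with Eq. (13): sum_j w_j g(F_x^{-1}(Phi(z_j)))."""
    z, w = hermegauss(L)
    w = w / np.sqrt(2.0 * np.pi)   # standard normal weights, summing to 1
    x = inv_cdf(norm.cdf(z))       # Gauss points mapped to the distribution of X
    return float(np.sum(w * g(x)))


# Hypothetical Gumbel variable: 5-point estimate of the mean versus loc + gamma_E * scale
mean_gauss = gauss_expectation(lambda x: x, gumbel_r(loc=600.0, scale=150.0).ppf)
mean_exact = 600.0 + np.euler_gamma * 150.0

# For a normal X the rule is exact for low-order polynomials: E[X^2] = 2^2 + 0.5^2
second_moment = gauss_expectation(lambda x: x**2, norm(loc=2.0, scale=0.5).ppf)
```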

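Considering the kth power of the cut function \( h_l \) and the probability density functions \( f_{x_l} \) as specific situations of Equation (13), the application of Gaussian quadrature for the evaluation of Equation (11) is straightforward, resulting in an approximation for the moment \( M_{Y}^{{\alpha_{i} }} \) by:

\[ M_{Y}^{\alpha_i} \approx h_0^{\,\alpha_i(1-n)} \prod_{l=1}^{n} \sum_{j=1}^{L} w_j\, y_{j,l}^{\alpha_i} \qquad (14) \]

with \( y_{j,l} \) the model realization for the jth Gauss point in the lth cut function, as given by:

\[ y_{j,l} = h\left( \breve{\mu}_1, \ldots, \breve{\mu}_{l-1}, F_{x_l}^{-1}\left( \Phi(z_j) \right), \breve{\mu}_{l+1}, \ldots, \breve{\mu}_n \right) \qquad (15) \]

and \( h_0 \) the model result when all stochastic variables are given by their median value.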

In summary, Equation (14) replaces Equation (8). Consequently, the estimate of the full PDF describing Y is obtained by considering 1 model evaluation for \( h_0 \) and \( nL \) model evaluations for the cut functions. Note that calculating a different power \( \alpha_i \) in the minimization procedure of Equation (7) does not require new model evaluations.

Furthermore, if the number of Gauss integration points L is odd, one of the Gauss points \( z_j \) equals 0, so that the corresponding evaluation of each cut function coincides with the median evaluation \( h_0 \). This further reduces the number of required model calculations to 1 + n·(L − 1). Consequently, when considering 5 Gauss integration points, the total number of model evaluations required for approximating the PDF describing the output variable Y is 1 + 4n. This further reduction of the required number of simulations is made possible through the generalized Gauss integration scheme of (13) and is a modification of the original methodology presented in [34].
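The bookkeeping of (14) can be sketched in Python as follows, assuming NumPy and SciPy, independent inputs described by their inverse CDFs, an odd number of Gauss points, and a strictly positive model output (needed for fractional powers). The product model is a hypothetical test case that reuses the input statistics of Basic Application Example 1 but is not Equation (16): for a pure product the multiplicative decomposition (9) is exact, so the approximated moments can be compared with the closed-form lognormal moments \( E[Y^\alpha] = \exp(\alpha \mu_{\ln Y} + \alpha^2 \sigma_{\ln Y}^2 / 2) \). The evaluation counter confirms the \( 1 + n(L-1) \) model runs.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss
from scipy.stats import lognorm, norm


def mdrm_runs(model, inv_cdfs, L=5):
    """Evaluate h_0 and the cut-function values y_{j,l} (odd L, independent inputs)."""
    z, w = hermegauss(L)
    w = w / np.sqrt(2.0 * np.pi)
    median = np.array([F(0.5) for F in inv_cdfs])
    h0 = model(median)                          # single run at the median point
    y = np.empty((L, len(inv_cdfs)))
    for l, F in enumerate(inv_cdfs):
        for j, zj in enumerate(z):
            if np.isclose(zj, 0.0):
                y[j, l] = h0                    # central Gauss point = median: reuse h_0
            else:
                x = median.copy()
                x[l] = F(norm.cdf(zj))          # generalised Gauss point, Eq. (13)
                y[j, l] = model(x)
    return h0, y, w


def mdrm_moment(alpha, h0, y, w):
    """Eq. (14): h_0^(alpha(1-n)) * prod_l sum_j w_j y_{j,l}^alpha (requires y > 0)."""
    n = y.shape[1]
    return h0 ** (alpha * (1 - n)) * np.prod(w @ y ** alpha)


def product_model(x):
    """Hypothetical stand-in for an expensive model; counts its own evaluations."""
    product_model.calls += 1
    return x[0] * x[1] * x[2]


product_model.calls = 0
means, covs = np.array([3.0, 4.0, 2.0]), np.array([0.3, 0.5, 0.2])
sig = np.sqrt(np.log(1.0 + covs**2))            # lognormal parameters from mean and CoV
mu = np.log(means) - 0.5 * sig**2
inv_cdfs = [lognorm(s=s_l, scale=np.exp(mu_l)).ppf for s_l, mu_l in zip(sig, mu)]
h0, y, w = mdrm_runs(product_model, inv_cdfs)
```

Once the 13 runs are stored, `mdrm_moment` can be called for any exponent during the optimization of (7) without touching the model again.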

Note that for the remainder of this paper, the full methodology derived above will be denoted with ME-MDRM, applying the same name as introduced in [34].

3.4 Basic Application Example 1

3.4.1 Application Example

The application of the methodology is illustrated here by a mathematical example which can easily be recalculated. Consider Equation (16) with \( X_{1} \), \( X_{2} \) and \( X_{3} \) three independent lognormal variables. Given mean values of 3, 4 and 2 and coefficients of variation of 0.3, 0.5 and 0.2 for \( X_{1} \), \( X_{2} \) and \( X_{3} \) respectively, basic probability theory shows that the stochastic output variable Y is also lognormally distributed, with a mean value of 12.48 and a coefficient of variation of 0.646.
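The stated coefficient of variation can be checked with a few lines. Since Equation (16) is not reproduced here, the check assumes that Y is a product or quotient of \( X_{1} \), \( X_{2} \) and \( X_{3} \) with unit exponents, which the value 0.646 suggests; for such a model the log-variances of independent lognormal variables add, so \( 1 + V_{Y}^{2} = \prod_{l} (1 + V_{l}^{2}) \). The mean value depends on the exact form of Equation (16) and is not checked.

```python
import numpy as np

# Coefficients of variation of X1, X2, X3 (Section 3.4.1)
V = np.array([0.3, 0.5, 0.2])

# Assumption: Y is a product/quotient of the X_l with unit exponents,
# so the variances of ln X_l add up to the variance of ln Y.
sigma_ln_Y = np.sqrt(np.sum(np.log(1.0 + V**2)))
V_Y = np.sqrt(np.exp(sigma_ln_Y**2) - 1.0)
print(round(V_Y, 3))  # 0.646
```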

While the PDF of Y is known exactly, the ME-MDRM can be applied as well, requiring only 1 + 3·(5 − 1) = 13 model evaluations (for L = 5). These evaluations and the underlying values of \( z_{j} \) are given in Table 2, with \( z_{j,l} \) the considered Gauss integration point, \( x_{j,l} \) the corresponding realization of \( X_{l} \), and \( y_{j,l} \) the model evaluation calculated through Equation (16). Further details on the evaluation of \( y_{j,l} \) and the calculation of the ME-MDRM estimate are given below in a step-by-step overview of the calculation.

The combination with \( z_{j,l} = 0 \) for all three stochastic variables is evaluated only once and is listed in the first row of Table 2. Once the model has been evaluated for each of the Gauss point combinations, any moment \( M_{Y}^{{\alpha_{i} }} \) of Y is readily approximated through Equation (14). The optimization of Equation (7) can then be applied, resulting in values for the coefficients \( \lambda_{i} \) and exponents \( \alpha_{i} \).

The Maximum Entropy result for m = 3 is given in Table 3, defining the mathematical formulation of the estimated PDF through Equation (5).

A comparison of the analytical expression of the lognormal PDF and CDF with 10000 crude Monte Carlo simulations on the one hand, and the result of the ME-MDRM calculation on the other hand, is given in Figures 3 and 4. Note that the horizontal axis in Figure 2 has been chosen in an extended range in order to accentuate the differences. The ME-MDRM estimate almost perfectly matches the analytical expression for this simple mathematical example, making it very difficult to visually discern the differences between the curves.

Note: the basic application example above shows that the ME-MDRM results in a very precise estimate of \( f_{Y} \) when Y is lognormally distributed. Similar results have been obtained in test calculations with Y following a normal and a Gumbel distribution. Furthermore, [34] reports an excellent estimation performance of the Maximum Entropy principle for Y following a Weibull and a Pareto distribution. As the ME-MDRM results in a continuous estimate, the methodology cannot capture discontinuous PDFs. Similarly, as the ME-MDRM is based on a limited number of model evaluations and the estimation order m is necessarily limited, the method cannot accurately capture a hypothetical PDF with many intensity fluctuations [e.g. a wave-like density \( f_{Y}(y) \)].

3.5 Step by Step Overview of the Calculation

3.5.1 Introduction

A first application of the ME-MDRM may be quite challenging. Therefore, step-by-step calculation results for the example above are presented here to support an independent application of the ME-MDRM. As discussed earlier, the Maximum Entropy evaluation is based on an optimization calculation (the minimization of the Kullback–Leibler divergence). The optimization procedure described below is the specific one applied in this paper; other optimization methods can be used, and more efficient ones may exist. This remains a topic for future research.

As discussed, the Maximum Entropy evaluation is defined by the minimization of Equation (7), with \( M_{Y}^{{\alpha_{i} }} \) the fractional moment defined by (14). For clarity, these equations are reprinted below as (17) and (18).

Equation (18) depends on:

- \( h_{0} \), the model result when all stochastic variables are set to their median values;
- \( w_{j} \), the Gaussian weight for integration point j (given in Table 1 for L = 5 integration points);
- \( y_{j,l} \), the model result for the jth Gauss point in the lth cut function, i.e. the model result obtained when the lth stochastic variable is set to the value of the jth Gauss point and all other variables are evaluated at their median values;
- \( \alpha_{i} \), the exponent, which is optimized as part of the Maximum Entropy assessment.

For a given exponent \( \alpha_{i} \), (18) is readily evaluated using the weights \( w_{j} \) of Table 1 and the model evaluations \( y_{j,l} \) in Table 2.

The actual Maximum Entropy evaluation is obtained through Equation (17). In principle, this equation is minimized by varying both the coefficients \( \lambda_{i} \) and the exponents \( \alpha_{i} \). As discussed, the result of minimizing (17) has been found to depend on the starting solution of the optimization. Any methodology which circumvents this issue can in principle be applied; a number of different concepts were evaluated during the development of the current study.

One pragmatic concept which has proven reliable is to consider a large set (i.e. a Monte Carlo simulation) of exponents \( \alpha_{i} \) in the range [−2; 2] and to determine, for each realization, the associated \( \lambda_{i} \) which minimize (17). Subsequently, the minimum result across the set of \( \alpha_{i} \) values is retained. This effectively splits the minimization of (17) into two steps: a minimization over \( \lambda_{i} \) for given \( \alpha_{i} \), followed by a search for the minimum result across the \( \alpha_{i} \). To ensure that the \( \alpha_{i} \) are spread across the range of possible values and to improve the sampling efficiency, Latin Hypercube sampling has been used instead of traditional Monte Carlo sampling. This methodology has been applied for all calculations presented in this paper.

Considering the above, the ‘step by step’ evaluation of basic example 1 is given below:

3.5.2 Step 1

As a first step in the application of the ME-MDRM, the model realizations \( h_{0} \) and \( y_{j,l} \) have to be evaluated.

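It is important to note that evaluating a stochastic variable at its median value is equivalent to evaluating it at the realization corresponding with the Gauss point z = 0. Mathematically, this is written as:

\[ x_{l} = F_{X_{l}}^{ - 1} \left( {0.5} \right) = F_{X_{l}}^{ - 1} \left( {\Phi \left( 0 \right)} \right) \qquad (19) \]

with \( F_{X_{l}}^{ - 1} \) the inverse cumulative distribution function of the stochastic variable \( X_{l} \) and Φ the standard normal cumulative distribution function.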

Equation (19) is generally applicable. Consequently, for the example of Equation (16) above, \( h_{0} \) can be evaluated as (20). The result of (20), the Gauss point values (all 0) and the associated realizations \( x_{l} \) were given earlier in the first row of Table 2.

As the realizations \( x_{l} \) in (20) correspond both with the median value of the stochastic input variable \( X_{l} \) and with the realization of \( X_{l} \) for the Gauss point z = 0, (20) is also the result of every cut function evaluated at the 3rd Gauss point \( z_{3} = 0 \) (see Table 1 for the list of Gauss points for L = 5). Consequently, the result of (20) is used in each of the cut functions, but only a single model evaluation is needed. This is why the total number of model evaluations in the proposed ME-MDRM is 1 + n·(5 − 1) and not 1 + 5n. It also explains why the first row of Table 2 refers not only to \( h_{0} \), but also to \( y_{3,1} \), \( y_{3,2} \) and \( y_{3,3} \).

All other model realizations \( y_{j,l} \) are evaluated by setting the stochastic variable \( X_{l} \) to the value corresponding with Gauss point \( z_{j} \), and all other variables to their median values (i.e. Gauss point z = 0). For example, \( y_{1,1} \) is given by (21). The input values of (21) and the associated result \( y_{1,1} \) were given above in the second row of Table 2. All other model evaluations \( y_{j,l} \) required for evaluating (14) are calculated using similar equations and are listed in Table 2.
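Step 1 can be automated by generating all input vectors up front. The sketch below assumes the inputs are given as frozen `scipy.stats` distributions and uses 0-based indices in code (so index j = 2 corresponds to the paper's \( z_{3} = 0 \) for L = 5). The function name and its return format are hypothetical; the usage builds the three lognormal inputs of the example above.

```python
import numpy as np
from numpy.polynomial.hermite_e import hermegauss
from scipy import stats


def cut_design(dists, L=5):
    """Input vectors for the ME-MDRM model runs.

    Row 0 holds all variables at their medians (h_0, which for odd L also
    serves as the central cut point of every variable). For each variable l
    and each non-central Gauss point z_j, variable l is set to
    F^-1(Phi(z_j)) while all other variables stay at their medians.
    Returns (X, index) with index[k] = (j, l) (0-based), or None for row 0.
    """
    z, _ = hermegauss(L)
    medians = np.array([d.ppf(0.5) for d in dists])
    rows, index = [medians.copy()], [None]
    for l, d in enumerate(dists):
        for j, zj in enumerate(z):
            if abs(zj) < 1e-12:          # central point: reuse h_0
                continue
            x = medians.copy()
            x[l] = d.ppf(stats.norm.cdf(zj))
            rows.append(x)
            index.append((j, l))
    return np.array(rows), index


# Inputs of Basic Application Example 1: lognormal X1, X2, X3
means, covs = [3.0, 4.0, 2.0], [0.3, 0.5, 0.2]
dists = []
for mean, cov in zip(means, covs):
    s = np.sqrt(np.log(1.0 + cov**2))    # standard deviation of ln X
    dists.append(stats.lognorm(s=s, scale=mean * np.exp(-0.5 * s**2)))

X, index = cut_design(dists)
print(X.shape)  # (13, 3)
```

Each row of `X` is one model input vector; the outputs can be mapped back to \( h_{0} \) and \( y_{j,l} \) through `index`.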

3.5.3 Step 2

Step 2 is the generation of a Monte Carlo or Latin Hypercube set of exponents \( \alpha_{i} \), as discussed above. For an estimation order m = 3, every realization consists of three values \( \alpha_{1} \), \( \alpha_{2} \) and \( \alpha_{3} \). In total 1000 realizations have been considered. A selection of realizations is given in Table 4.
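Step 2 can be sketched with a hand-written Latin Hypercube sampler in NumPy (SciPy's `scipy.stats.qmc.LatinHypercube` would be an alternative). The range [−2; 2], m = 3 and 1000 sets follow the text; the function name and the fixed seed are illustrative.

```python
import numpy as np


def latin_hypercube_alphas(m=3, n_sets=1000, low=-2.0, high=2.0, seed=0):
    """Latin Hypercube sample of n_sets exponent vectors (alpha_1..alpha_m).

    Each of the m dimensions is split into n_sets equal strata; every stratum
    receives exactly one sample, and strata are paired randomly across
    dimensions by independent permutations.
    """
    rng = np.random.default_rng(seed)
    strata = np.array([rng.permutation(n_sets) for _ in range(m)]).T  # (n_sets, m)
    u = (strata + rng.random((n_sets, m))) / n_sets                    # in [0, 1)
    return low + (high - low) * u


alphas = latin_hypercube_alphas()
print(alphas.shape)  # (1000, 3)
```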

3.5.4 Step 3

Step 3 consists of the minimization of (17) for each combination of exponents \( \alpha_{i} \). Note that the parameter \( \lambda_{0} \) is a normalization constant defined by (6) and is therefore fully determined by the other parameters. The minimization is done using the simplex algorithm.

Optimum values of \( \lambda_{i} \) for the exponents \( \alpha_{i} \) generated in Step 2 are listed in Table 4, together with the associated value Z of the minimized function defined in (17).

3.5.5 Step 4

Step 4 entails choosing the combination of \( \alpha_{i} \) and \( \lambda_{i} \) from Step 3 which results in the minimum value of Z, as defined in (17), i.e. choosing the row in Table 4 with the minimum value of Z. This is de facto a Monte Carlo based optimization across the \( \alpha_{i} \), where the optimum coefficients \( \lambda_{i} \) for given exponents \( \alpha_{i} \) have been determined in the previous step.

Considering the results in Table 4, entry 629 gives the minimum value of Z. The associated values of \( \alpha_{i} \) and \( \lambda_{i} \) are listed in Table 3.
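Steps 3 and 4 can be sketched together as follows. The sketch assumes that Equation (17) is the usual fractional-moment dual function \( Z(\lambda) = \ln \int \exp\left( -\sum_{i=1}^{m} \lambda_{i} y^{\alpha_{i}} \right) dy + \sum_{i=1}^{m} \lambda_{i} M_{Y}^{\alpha_{i}} \), with \( \lambda_{0} \) from Equation (6) equal to the log-integral, and it evaluates the integral by the trapezoidal rule on a user-chosen positive grid `y` (an assumption; the paper does not state its integration scheme). "Simplex algorithm" is read here as the Nelder-Mead downhill simplex in SciPy. All function names are hypothetical.

```python
import numpy as np
from scipy.optimize import minimize


def _log_integral(g, y):
    """Stable log of the trapezoidal integral of exp(g(y)) over the grid y."""
    gmax = g.max()
    f = np.exp(g - gmax)
    return gmax + np.log(np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(y)))


def dual_objective(lam, alpha, moments, y):
    """Assumed form of Eq. (17): ln int exp(-sum lam_i y^a_i) dy + sum lam_i M_i."""
    g = -(np.power.outer(y, alpha) @ lam)
    return _log_integral(g, y) + lam @ moments


def fit_max_entropy(alpha_sets, moment_fn, y):
    """Step 3: optimal lambda for every alpha set; Step 4: keep the smallest Z."""
    best = None
    for alpha in alpha_sets:
        alpha = np.asarray(alpha, dtype=float)
        moments = np.array([moment_fn(a) for a in alpha])  # e.g. Eq. (14) estimates
        res = minimize(dual_objective, np.zeros(alpha.size),
                       args=(alpha, moments, y), method="Nelder-Mead",
                       options={"xatol": 1e-8, "fatol": 1e-10, "maxiter": 20000})
        if best is None or res.fun < best[2]:
            best = (alpha, res.x, res.fun)
    alpha, lam, Z = best
    lam0 = _log_integral(-(np.power.outer(y, alpha) @ lam), y)  # normalization, Eq. (6)
    return alpha, lam, lam0, Z
```

In the paper's setting, `alpha_sets` would be the Latin Hypercube set of Step 2 and `moment_fn` the estimate of Equation (14). As a sanity check, with the moments of a normal distribution (mean 10, standard deviation 2) and candidate sets (−1, −2) and (1, 2), the routine should select α = (1, 2) with λ ≈ (−2.5, 0.125), i.e. recover the normal density, which is itself of maximum entropy form for those exponents.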

3.5.6 Step 5

Considering \( \alpha_{i} \) and \( \lambda_{i} \) as defined by Step 4, the PDF \( f_{Y} \) is estimated mathematically by (5).

As an example, using the optimum \( \alpha_{i} \) and \( \lambda_{i} \) evaluated in Step 4 and listed in Table 3, \( f_{Y}(14) \) is estimated below through Equation (22). This result is an excellent match with the true analytical result (\( f_{Y}(14) \) indeed equals 0.043), as visualized in Figure 3.
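Step 5 and the analytical reference value can be evaluated numerically. `me_pdf` assumes that Equation (5) has the usual form \( f_{Y}(y) = \exp\left( -\lambda_{0} - \sum_{i=1}^{m} \lambda_{i} y^{\alpha_{i}} \right) \). The values of Table 3 are not reproduced here, so only the analytical lognormal value (mean 12.48, coefficient of variation 0.646) is evaluated. Both function names are hypothetical.

```python
import numpy as np


def me_pdf(y, lam0, lam, alpha):
    """Assumed form of Eq. (5): f_Y(y) = exp(-lam0 - sum_i lam_i * y**alpha_i)."""
    y = np.atleast_1d(np.asarray(y, dtype=float))
    return np.exp(-lam0 - np.power.outer(y, alpha) @ np.asarray(lam, dtype=float))


def lognormal_pdf(y, mean, cov):
    """Analytical lognormal PDF parameterized by its mean and coefficient of variation."""
    s2 = np.log(1.0 + cov**2)              # variance of ln Y
    mu = np.log(mean) - 0.5 * s2           # mean of ln Y
    return np.exp(-(np.log(y) - mu) ** 2 / (2.0 * s2)) / (y * np.sqrt(2.0 * np.pi * s2))


print(round(lognormal_pdf(14.0, 12.48, 0.646), 3))  # 0.043
```

Substituting the \( \lambda_{0} \), \( \lambda_{i} \) and \( \alpha_{i} \) of Table 3 into `me_pdf(14.0, ...)` would reproduce the estimate of Equation (22).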

3.6 Basic application example 2: efficient estimation of parameters of a known or assumed distribution type

The ME-MDRM as discussed above makes an unbiased estimate of the PDF describing the model output Y through the Maximum Entropy optimization of Equations (5)–(8). However, often a standard distribution type for Y exists or is assumed. When the distribution type is known, the goal of stochastic model evaluations is the estimation of the parameters of this known distribution. This has been referred to earlier as making a biased estimate of the PDF. The distribution parameters are traditionally estimated using, for example, Latin Hypercube Sampling (LHS), see [22].

For situations where the distribution type for Y is pre-determined, a reduced application of the ME-MDRM is possible in which the Maximum Entropy principle is not considered. In this reduced application, the Multiplicative Dimensional Reduction Method of Equation (9) is applied in conjunction with the Gaussian quadrature of Equation (14) to estimate the moments of the assumed distribution type for Y. Subsequently, the parameters describing the known distribution type can be derived from the estimated moments. This methodology for the estimation of parameters of an assumed distribution type is denoted hereafter as “MDRM–G”.

Consider for example a situation where Y is the resistance of a structural element. In this case a lognormal distribution would be a standard choice for describing the PDF [26], and MDRM–G can be applied to estimate the mean \( \mu_{Y} \) and standard deviation \( \sigma_{Y} \) which define the parameters of this lognormal distribution. This is done by applying Equation (14) to evaluate the first and second order moments of Y (i.e. setting \( \alpha_{i} \) to 1 and 2 respectively). While the first order moment is a direct estimate of the mean value \( \mu_{Y} \), the standard deviation \( \sigma_{Y} \) is approximated by Equation (23).
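The conversion from the two MDRM–G moments to lognormal parameters can be sketched as follows. It assumes that Equation (23) is the standard variance identity \( \sigma_{Y} = \sqrt{M_{Y}^{2} - (M_{Y}^{1})^{2}} \), where the superscripts of M denote moment orders; the function name is hypothetical, and the two moments would come from Equation (14) with α = 1 and α = 2.

```python
import numpy as np


def lognormal_from_moments(M1, M2):
    """MDRM-G: lognormal parameters from the estimated 1st and 2nd moments.

    Returns (std, mu_ln, sigma_ln): the standard deviation of Y and the
    mean and standard deviation of ln Y.
    """
    std = np.sqrt(M2 - M1**2)                          # assumed form of Eq. (23)
    sigma_ln = np.sqrt(np.log(1.0 + (std / M1) ** 2))
    mu_ln = np.log(M1) - 0.5 * sigma_ln**2
    return std, mu_ln, sigma_ln
```

With the exact moments of the example above (mean 12.48, coefficient of variation 0.646), the function returns a standard deviation of 12.48 × 0.646 ≈ 8.06.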

The efficiency of MDRM–G for estimating the parameters of the lognormal (LN) distribution is illustrated by Table 5, where the LN parameters for the example above are compared (i.e. analytical, MCS, and MDRM–G). For this specific example, MDRM–G with 13 model realizations yields a better estimate than 10000 MCS realizations.

While the application of the Maximum Entropy principle is recommended for making an unbiased estimate of the PDF when the distribution type is not known a priori, the above example illustrates that the application of MDRM–G can in itself give an excellent estimate of the parameters for a known or assumed distribution type.

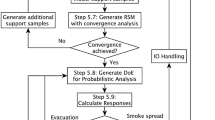

From a practical perspective, the procedure of Table 6 is recommended for choosing between the ME-MDRM result and an assumed distribution type with parameters determined with MDRM–G:

The relevance and efficiency of this proposed procedure are illustrated by the practical applications below. These applications consider different durations of the ISO 834 standard fire, which has been chosen as a common reference for fire safety engineers. In actual design and assessment applications, the relevant fire curve and exposure duration (when relevant) have to be determined depending on the building characteristics. The examples below are intended to illustrate the application of the ME-MDRM and do not entail a recommendation regarding the use of the ISO 834 standard fire curve or specific exposure durations.

4 Practical Application 1: Load Bearing Capacity of an Eccentrically Loaded Concrete Column Subjected to Fire

4.1 Introduction

The structural stability of (concrete) columns in case of fire exposure is of primary importance for the overall stability of the building. In order to allow for true risk and reliability-based decision making for structural fire safety, the structural reliability of concrete columns during fire exposure has to be evaluated. However, due to the strong non-linearity of the column behaviour and due to second order effects, advanced computationally expensive calculation tools need to be used, especially if interaction with floorplates or local fire exposure of continuous columns is to be modelled. As the evaluation of a single column can already be computationally expensive, performing Monte Carlo simulations for reliability analysis becomes untenable.

For specific situations and using analytical approximations, reliability calculations for concrete columns subjected to eccentric loads have been performed in [25] and [1] using Monte Carlo simulations and FORM.

A numerical model for an iterative second-order calculation of fire-exposed concrete columns has been developed by [33]. Here another approach is used, i.e. application of a Direct Stiffness Method (DSM) matrix calculation described in [31]. This calculation approach discretizes the structural frame (in casu a single column) in segments. In the example below a segment length of approximately 4 cm has been used. Each segment is characterized by its temperature- and load-dependent axial and bending stiffness, which are evaluated by a cross-sectional model. The mutual interaction of the different segments and their interaction with the external loads is incorporated in the stiffness matrix of the DSM. For given axial and bending stiffness of the individual segments, the DSM results in an evaluation of the displacement and member forces for the structural frame. As the segment stiffness is load dependent (i.e. depends on the member forces) and as the displacement of the frame results in second order effects, the method is evaluated iteratively until convergence. For a given vertical load and eccentricity the converged results correspond with those of the model by [33]. The DSM has the advantage that any type of (localized) exposure and intermediate restraints can be modelled. However, as the initial goal is an evaluation of the applicability of the ME-MDRM methodology and since an isolated column with pin connections at the top and bottom is one of the most generally relevant practical cases, the DSM is applied for the square column model as defined in Table 7 and Figure 5, considering exposure to 60 min ISO 834 standard fire.

Table 7 also specifies the considered stochastic variables and associated probabilistic models. For the temperature-dependent reduction factors \( k_{fc}(\theta) \) and \( k_{fy}(\theta) \) of the concrete compressive strength and the steel yield stress (where θ is the local material temperature), the probabilistic models given in [30] are applied. These models take the nominal reduction factor given in the Eurocodes as the mean value and use a temperature-dependent coefficient of variation V based on test data. For concrete, \( V_{k_{fc}}(\theta) \) = 0 at 20°C and 0.045 at 700°C, with linear interpolation for intermediate temperatures and \( V_{k_{fc}}(\theta) \) = 0.045 for temperatures above 700°C. For the reinforcement yield stress reduction factor a similar model is used, with \( V_{k_{fy}}(\theta) \) = 0 at 20°C, \( V_{k_{fy}}(\theta) \) = 0.052 at 500°C, linear interpolation for intermediate temperatures, and \( V_{k_{fy}}(\theta) \) = 0.052 for temperatures above 500°C. Note that the probabilistic model for the temperature-dependent reduction factor is independent of the probabilistic model for the reference 20°C material strength to which the reduction factor applies.
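The temperature-dependent coefficients of variation described above amount to clamped linear interpolation, sketched below with `numpy.interp`. Values below 20°C are held at 0, which is an assumption since the text only specifies the range from 20°C upwards. The nominal Eurocode reduction factors used as mean values are not included, and the function names are hypothetical.

```python
import numpy as np


def cov_kfc(theta):
    """CoV of the concrete strength reduction factor k_fc(theta), theta in deg C:
    0 at 20, 0.045 at 700, linear in between, constant outside that range."""
    return np.interp(theta, [20.0, 700.0], [0.0, 0.045])


def cov_kfy(theta):
    """CoV of the steel yield stress reduction factor k_fy(theta), theta in deg C:
    0 at 20, 0.052 at 500, linear in between, constant outside that range."""
    return np.interp(theta, [20.0, 500.0], [0.0, 0.052])


print(cov_kfc(360.0), cov_kfy(260.0))  # midpoints: 0.0225 and 0.026
```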

The ME-MDRM is applied to evaluate the PDFs of the load bearing capacity \( P_{\max} \) for a given eccentricity e, and of the maximum eccentricity \( e_{\max} \) for a given load P.

4.2 Load Bearing Capacity for a Given Eccentricity e

The load bearing capacity \( P_{\max} \) is evaluated for an eccentricity e = 5 mm as the maximum force for which equilibrium can be obtained in the DSM calculation. For larger P, the lateral deflection of the column results in a second order bending moment at the mid-section of the pinned column that exceeds the bending moment capacity of the column cross-section, resulting in failure of the column. \( P_{\max} \) is determined iteratively by successively refining its estimate, with a computational precision of 1 kN.
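The text does not specify how the estimate of \( P_{\max} \) is refined; bisection on a bracketing interval is one simple option, sketched below. `is_stable` is a hypothetical wrapper that would run the DSM for a load P (in kN) and report whether a converged equilibrium was found; the bracket and the 1 kN tolerance are inputs.

```python
def find_p_max(is_stable, p_low, p_high, tol=1.0):
    """Bisection for the largest load P for which equilibrium is found.

    Requires is_stable(p_low) to be True and is_stable(p_high) to be False.
    The bracket is halved until it is no wider than tol (kN).
    """
    if not is_stable(p_low) or is_stable(p_high):
        raise ValueError("need is_stable(p_low) and not is_stable(p_high)")
    while p_high - p_low > tol:
        p_mid = 0.5 * (p_low + p_high)
        if is_stable(p_mid):
            p_low = p_mid
        else:
            p_high = p_mid
    return p_low  # last load with a converged equilibrium (conservative side)
```

Returning the lower bracket keeps the result on the safe side of the true capacity, within the chosen tolerance.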

Since 6 stochastic variables are considered in accordance with Table 7, only 25 (6 × 4 + 1) model evaluations are required for applying the ME-MDRM methodology.

Results obtained through the ME-MDRM are compared with a histogram of 10000 Monte Carlo simulations in Figure 6, illustrating the excellent performance of the method for correctly capturing the shape of the PDF. Figure 6 clearly shows that a priori assuming a lognormal approximation would not result in a correct description of the shape of the PDF. Furthermore, as illustrated by Figure 7 the lognormal approximation results in an unsafe estimate of the occurrence rate of low values for P max . Admittedly the ME-MDRM result deviates as well from the observed cumulative frequency for low P max , but the deviation is conservative and the approximation is excellent for probabilities as small as 0.005, indicating for example that the ME-MDRM would very accurately predict a characteristic value for P max corresponding with a 99.5% exceedance probability.

On the other hand, the application of the reduced methodology of MDRM–G in itself gives an almost perfect estimate of the parameters for the lognormal distribution. This is illustrated by the excellent match of the two lognormal approximations with each other, both in Figure 6 and in Figure 7. The underlying estimates for the mean µ and standard deviation σ of P max are given in Table 8 for the MCS and MDRM–G respectively.

Above, the obtained PDF estimates are compared with an MCS histogram. In practical applications, only the ME-MDRM PDF and the assumed lognormal PDF with parameters estimated through MDRM–G would be available. When the proposed procedure of Table 6 is applied to choose between both options, the PDF shapes in Figure 6 do not match, and consequently the unbiased ME-MDRM estimate would be preferred. This choice for the ME-MDRM result agrees with the preference resulting from the comparison with MCS.

4.3 Maximum Eccentricity for a Given Vertical Load P

An alternative application of the DSM allows the evaluation of the maximum allowable eccentricity \( e_{\max} \) for a given column load P. The calculation methodology itself is identical to the one discussed above for determining \( P_{\max} \) for a given e.

Results of 10000 Monte Carlo simulations are compared in Figures 8 and 9 with the ME-MDRM estimation (25 model realizations) and with two lognormal approximations: one for which the parameters are estimated from the MCS, and one for which the parameters are estimated through application of MDRM–G (i.e. using the same 25 model realizations).

Again it is observed that the Maximum Entropy estimation of the PDF results in a very good estimate of the overall shape of the PDF. On the other hand, a priori assuming a lognormal distribution does not give a good match with the observed histogram.

Evaluating Figure 9, the occurrence rate of low \( e_{\max} \) is much better estimated through the ME-MDRM, although the result is admittedly slightly non-conservative compared to the observed histogram. Furthermore, a priori assuming a lognormal distribution results in a severe overestimation of the structural capacity.

The values obtained for the mean µ and standard deviation σ estimated through the MCS and the MDRM–G are given in Table 9, again illustrating the excellent approximation by the MDRM–G methodology.

As for P max above, the ME-MDRM estimated PDF and CDF result in a much better approximation compared to a priori choosing for a lognormal distribution. Applying the proposed procedure of Table 6 to choose between the ME-MDRM PDF and the assumed lognormal PDF with parameters estimated through MDRM–G would result in opting for the ME-MDRM estimate. This choice for the ME-MDRM result is in agreement with the preference resulting from the comparison with MCS.

5 Practical Application 2: Bending Moment Capacity of a Concrete Slab Exposed to Fire

Traditionally a lognormal distribution would be assumed for the PDF describing the bending moment capacity \( M_{R,fi,t} \) of a concrete slab during fire. However, considering the importance of the concrete cover c, a mixed-lognormal approximation should be used [30]. This very specific type of PDF could only be identified as part of a research project and through a large number of MCS. It is therefore of particular interest to evaluate how the ME-MDRM performs here, i.e. to assess whether the ME-MDRM is capable of identifying the irregularity of the PDF.

Consider the concrete slab configuration of Table 10. MCS of the bending moment capacity \( M_{R,fi,t} \) are executed for 240 min of ISO 834 standard fire exposure, using the approximate analytical model of Equation (24).

As Table 10 indicates 5 stochastic variables, only 21 model evaluations are needed for application of the ME-MDRM. The obtained PDF and CDF are compared in Figures 10 and 11 with the mixed-lognormal approximation, a traditional lognormal approximation (with parameters estimated both from the MCS and through MDRM–G) and the histogram of the MCS.

As shown in Figures 10 and 11, the ME-MDRM results in a very reasonable approximation and correctly identifies the irregular shape of the PDF. The irregularity of the PDF estimated with the ME-MDRM indicates to the user that more detailed analyses may be required. Ideally, these additional analyses identify the cause of the irregularity and provide the user with additional information for reframing the problem, which in this case results in considering a mixed-lognormal distribution, see [27] and [30].

Following the proposed procedure of Table 6 for estimating PDFs, the difference between the assumed lognormal estimate and the unbiased ME-MDRM estimate would result in opting for the ME-MDRM estimate. Note that this ME-MDRM estimated PDF agrees excellently with the observed cumulative frequency of the MCS down to cumulative probabilities of \( 10^{-2} \), indicating for example that a characteristic value with 99% exceedance probability is very accurately predicted.

For completeness the mean and standard deviation estimated from the MCS and through MDRM–G are compared in Table 11, further illustrating the excellent performance of MDRM–G in estimating parameters for an a priori assumed distribution.

6 Practical Application 3: Stochastic Response of a Concrete Portal Frame Exposed to Fire

6.1 Introduction

The ME-MDRM can also be applied to evaluate the response of structural systems where the overall structural behaviour is defined through the interaction of the different components. In the following, this interaction is considered by applying the Direct Stiffness Method (DSM) matrix calculation described earlier in Practical application 1.

The concrete portal frame of Figure 12 is considered to be exposed to 30 min of the ISO 834 standard fire. While the beam in the frame is considered to be exposed from 3 sides only (top surface air cooled in accordance with EN 1992-1-2), both columns are exposed to fire from 4 sides. Even for the simple portal frame of Figure 12, the interaction of the different components has to be considered to evaluate the overall structural response. This is among other things because the lateral support conditions of the top beam depend on the restraint (stiffness) exerted by the columns, which partially inhibits the thermal expansion of the top beam. This restraint in turn gives rise to fire-induced lateral forces pushing the columns outwards.

The parameters describing the top beam are given in Table 12, while the columns are described by the same data as given earlier in Table 7.

The time-dependent deformation of the frame is visualized in Figure 13, considering mean values for the stochastic variables of Tables 12 and 7. The displacements in Figure 13 have been scaled by a factor of 50 for clarity. Only half the frame is shown, as the problem setup is perfectly symmetrical (when considering characteristic or mean values for all variables).

Figure 13 clearly illustrates how the thermal expansion of the beam pushes the column outwards. Such effects have been identified, for example in [10], as a potential cause of premature structural failure in case of fire. In the conceptual model of Figure 12, the column is exposed to fire as well as the beam. Consequently, the thermal elongation of the column lifts the beam during fire exposure, as shown in Figure 13.

As the column partially restrains the expansion of the beam, the beam is subjected to an axial restraining force and the column to a shear force. The column shear force corresponding with Figure 13 is visualized in Figure 14. A shear force already exists at 0 min of exposure, because under ambient design conditions the assumptions underlying standard cross-section calculations also result in an elongation of the beam, as discussed in [31]. This beam elongation is partially restrained by the column.

Note that while the shear force in the column approximately doubles in the considered 30 min of ISO 834 fire exposure, this increase in shear force coincides with a reduction of the shear capacity due to the heating of the column.

6.2 Application of the ME-MDRM

In order to understand the uncertainty associated with the model outputs, a probabilistic evaluation is made.

A specific question concerns the relationship between the stochastic realizations for the left and right column: should they be considered independent, perfectly correlated, or correlated to an intermediate degree? Depending on the specifics of the construction method and planning, different levels of correlation may be appropriate. A high degree of correlation can be considered appropriate when both columns have been made simultaneously using the same concrete mix and reinforcement shipment.

As the goal is to illustrate the application of the ME-MDRM to structural systems, the above discussion is not elaborated further here. Both columns are considered to be perfectly correlated, as this preserves the symmetry applied in Figure 13. Exploiting symmetry reduces the computational requirements, which is an important consideration for the MCS validation of the ME-MDRM result.

Note that one of the advantages of the ME-MDRM would be a reduction in the number of required modelling assumptions (e.g. symmetry), as its computational cost is far lower than that of MCS.

The ME-MDRM is applied considering independent stochastic realizations for the columns (Table 7) and the beam (Table 12). This results in a total of 15 independent stochastic variables and 4·15 + 1 = 61 required model evaluations.

As the shear force is quasi-constant along the column (see Figure 14), only the force \( V_{\mathrm{column,connection}} \) at the beam-column connection is considered. The MCS and ME-MDRM results for this shear force are visualized in Figures 15 and 16, together with the PDF corresponding with an assumed lognormal distribution.

Similarly, results for the horizontal outward displacement \( u_{\mathrm{connection}} \) of the beam-column connection are visualized in Figures 17 and 18.

For this specific example, the ME-MDRM estimation is not fundamentally better than a priori opting for a lognormal distribution (whose parameters can be estimated efficiently through the MDRM–G methodology).

However, it should be emphasized that the logarithmic scales in the CDF visualizations accentuate the differences between the observed cumulative frequency and the estimate. Considering for example Figure 18, the ME-MDRM estimate gives an excellent approximation of the 95% quantile. For larger quantiles the approximation is less accurate, but conservative, as it overestimates the occurrence rate of large shear forces and large displacements respectively.

Furthermore, when focusing on the PDF visualizations, the match obtained through the ME-MDRM methodology is very good. Also, when increasing the estimation order m of the ME-MDRM to 5, a much better approximation is obtained.

Following the methodology proposed in Table 6 and maintaining m = 4, a practical recommendation would be to accept the lognormal distribution, as the ME-MDRM estimated PDF and the lognormal PDF with parameters estimated through MDRM–G are similar. As indicated in Figures 16 and 18, this lognormal distribution results in a good overall agreement with the observed MCS cumulative frequency.

For completeness, the MCS estimated parameters for the lognormal distribution are compared with the MDRM–G estimated parameters in Tables 13 and 14. Again, the efficiency of the MDRM–G methodology for estimating the parameters is illustrated.

7 Discussion

The application of the ME-MDRM methodology to concrete structures exposed to fire is shown to be very promising. Although the estimated CDF does not always accurately match the extreme quantiles of the observed cumulative frequency (crude Monte Carlo simulations), the overall shape of the PDF is very well approximated. Furthermore, the ME-MDRM estimation is found to be very accurate for estimating characteristic values of the model output, especially considering the limited number of required model evaluations.

The performance of the ME-MDRM is especially remarkable when the observed histogram deviates from a traditional (lognormal) distribution. In these cases the unbiased PDF estimate is clearly superior to a priori assuming a (lognormal) PDF type.

When a traditional distribution type is known or assumed, the parameters can be very efficiently estimated through a reduced application of the methodology (i.e. without the Maximum Entropy principle). This reduced methodology has been denoted as MDRM–G and can have significant practical applicability for studies where the parameters of an assumed/known PDF are currently estimated through for example Latin Hypercube Sampling.

From a practical perspective, it is recommended to evaluate both the unbiased ME-MDRM estimated PDF and the assumed PDF type with parameters calculated through MDRM–G. Note that only a single set of 1 + n·(L − 1) model evaluations is required to make both PDF estimates. If both PDFs are very similar, the assumed (known) PDF type may be considered appropriate. Whenever a significant difference exists between both estimates, the unbiased ME-MDRM estimate is recommended.

Note that the ME-MDRM methodology is compatible with any type of advanced calculation model and requires no complex interaction with the model's calculation core. More specifically, it suffices to generate the n·(L − 1) model input vectors in a readily available spreadsheet or mathematical tool and to include these values in the model input data. Once all n·(L − 1) simulations have been run, the results are collected and imported into a separate calculation tool that applies the Maximum Entropy methodology. Depending on the flexibility of the model (e.g. a black box with restricted access, or a flexible tool with clear input and output files), this process can be automated and all calculations can be run simultaneously or in a single batch. As the ME-MDRM model evaluations do not depend on intermediate results (unlike iterative methodologies), the method offers excellent opportunities for parallelization.
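Because every input vector is known before the first model run, the whole design can be generated up front and dispatched in parallel. The sketch below assumes L = 5 and that the centre Gauss point coincides with the median, so that the all-median run \( h_{0} \) is shared by every variable; this gives n·(L − 1) off-centre runs plus one median run, i.e. the 4n + 1 evaluations quoted in the conclusions. The helpers `build_design` and `run_all` are hypothetical; in practice `model` might write an input file and launch the external solver, whereas here it is any Python callable.

```python
import math
from concurrent.futures import ThreadPoolExecutor

# Off-centre nodes of the 5-point Gauss-Hermite rule (L = 5); the centre
# node z = 0 is the all-median run h0, shared by every variable.
_S10 = math.sqrt(10.0)
OFF_CENTRE_NODES = [-math.sqrt(5 + _S10), -math.sqrt(5 - _S10),
                    math.sqrt(5 - _S10), math.sqrt(5 + _S10)]


def build_design(inv_transforms):
    """List every (label, input vector) pair before any model is run."""
    medians = [t(0.0) for t in inv_transforms]
    design = [("h0", medians)]
    for l, t in enumerate(inv_transforms):
        for j, z in enumerate(OFF_CENTRE_NODES):  # j indexes off-centre nodes
            x = list(medians)
            x[l] = t(z)  # only the l-th variable leaves its median
            design.append(((j, l), x))
    return design


def run_all(model, design, max_workers=4):
    """Evaluate all design points independently and return {label: y}."""
    labels = [label for label, _ in design]
    vectors = [x for _, x in design]
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        outputs = list(pool.map(model, vectors))
    return dict(zip(labels, outputs))


# Three lognormal inputs -> 4n + 1 = 13 independent runs
inputs = [lambda z, s=s: math.exp(s * z) for s in (0.1, 0.2, 0.3)]
design = build_design(inputs)
print(len(design))  # 13
results = run_all(lambda x: x[0] * x[1] + x[2], design)
```

A thread pool is used because launching external solvers is typically I/O-bound; a process pool or a cluster scheduler would serve equally well, since no run depends on another.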

8 Conclusions

A computationally efficient methodology has been presented which makes an unbiased estimate of the probability density function (PDF) describing a stochastic model output Y. Making an unbiased estimate of the PDF (as opposed to a biased estimate where the PDF type is a priori known or assumed) is especially important in the field of fire safety engineering where the PDF type describing model output is generally not known.

The presented unbiased method is known as the Maximum Entropy Multiplicative Dimensional Reduction Method (ME-MDRM) and yields a mathematical formula for the estimated PDF while requiring only a very limited number of model evaluations. When the full methodology presented in the paper is applied, only 4n + 1 model evaluations are required, with n the number of stochastic input variables. The method can easily be used together with existing models and calculation tools. No modification of the calculation model itself is needed, and as the input vectors corresponding to these 4n + 1 model evaluations are fixed at the outset, the ME-MDRM has a large potential for parallel computing.

A reduced application of the method allows the moments of the model output Y to be estimated using the same limited set of 4n + 1 model evaluations. This reduced application has been denoted as the MDRM–G method and can be used to make a biased estimate of the PDF (i.e. an estimate where the PDF type is a priori known or assumed). This biased application is, however, not recommended when the PDF type has not been verified a priori.

Application of the ME-MDRM and MDRM–G is illustrated with example applications from the field of structural fire safety, indicating the excellent performance of the method in capturing non-traditional distribution shapes. While the examples come from the field of structural fire safety, the presented methodology is applicable to other types of engineering problems as well.

Abbreviations

- \( E[\cdot] \): Expected value operator
- \( F_{X} \): Cumulative distribution function for the variable X
- \( F_{X}^{-1} \): Inverse cumulative distribution function for the variable X
- \( f_{x_{l}} \): Probability density function describing the lth stochastic input variable
- \( f_{y} \): Probability density function describing Y
- \( \hat{f}_{y} \): ME-MDRM estimate for \( f_{y} \)
- \( h(\cdot) \): Model response indicator
- \( h_{l}(\cdot) \): Unidimensional cut function for \( h(\cdot) \) where all stochastic input variables except the lth are evaluated at their median value
- \( h_{0} \): Model response y when all stochastic variables are given by their median values
- L: Number of Gauss integration points for the Gaussian interpolation
- \( M_{Y}^{\alpha_{i}} \): \( \alpha_{i} \)th sample moment of Y
- m: Order of the Maximum Entropy estimate
- n: Number of stochastic variables
- \( P_{f} \): Probability of failure
- \( P_{f,fi} \): Probability of failure conditional on the occurrence of a ‘significant’ fire
- \( w_{j} \): Gauss weight for the jth Gauss integration point
- x: Vector of stochastic input variables \( x_{l} \)
- Y: The stochastic model output
- y: Realization of the model output
- \( y_{j,l} \): Model realization where the lth stochastic variable is defined by the jth Gauss integration point, and all other variables are evaluated at their median value
- \( z_{j} \): jth Gauss integration point
- \( \alpha_{i} \): Exponent i for the ME-MDRM estimate of the PDF
- \( \lambda_{i} \): Coefficient i for the ME-MDRM estimate of the PDF
- \( \lambda_{0} \): Normalization coefficient for the ME-MDRM estimate of the PDF
- \( \mu_{X} \): Mean value of stochastic variable X
- \( \hat{\mu}_{X} \): Estimated mean value of stochastic variable X
- \( \overset{\frown}{\mu}_{X} \): Median value of stochastic variable X
- \( \sigma_{X} \): Standard deviation of stochastic variable X
- Φ: Standard normal cumulative distribution function
- φ: Standard normal probability density function
- LHS: Latin Hypercube Sampling
- MCS: Monte Carlo simulations
- ME-MDRM: Maximum Entropy Multiplicative Dimensional Reduction Method
- MDRM–G: Parameter estimation based on Multiplicative Dimensional Reduction Method and Gaussian interpolation
- PDF: Probability density function

References

Aachenbach M, Morgenthal G (2015) Reliability of reinforced concrete columns subjected to standard fire. In: Proceedings of CONFAB 2015, 02-04/09, Glasgow, UK

Albrecht C, Hosser D (2011) A response surface methodology for probabilistic life safety analysis using advanced fire engineering tools. In: Proceedings of the 10th international symposium of the international association for fire safety science (IAFSS), 19-23/06, Maryland, USA, pp 1059–1072

Ang AH-S, Tang WH (2007) Probability concepts in engineering, 2nd edn. Wiley, New York

Balomenos GP, Genikomsou AS, Polak MA, Pandey MD (2015) Efficient method for probabilistic finite element analysis with application to reinforced concrete slabs. Eng Struct 103:85–101

BSI (2003) PD 7974-7:2003, Application of fire safety engineering principles to the design of buildings—Part 7: Probabilistic risk assessment. British Standard, London, UK

CEN (2002) EN 1990: Eurocode 0: basis of structural design. European Standard, Brussels, Belgium

Conedera A, Torriani D, Neff C, Ricotta C, Bajocco S, Pezzatti GB (2011) Using Monte Carlo simulations to estimate relative fire ignition danger in a low-to-medium fire-prone region. For Ecol Manag 261:2179–2187

Engelund S, Rackwitz R (1993) A benchmark study on importance sampling techniques in structural reliability. Struct Saf 12:255–276

European Commission (2002) Valorisation project—natural fire safety concept. Final report EUR 20349 (KI-NA-20-349-EN-S). http://bookshop.europa.eu

fib (2008) fib Bulletin 46: State-of-art report: Fire design of concrete structures—structural behaviour and assessment. Fédération internationale du béton (fib), Lausanne, Switzerland

Frantzich H, Magnusson SE, Holmquist B, Ryden J (1997) Derivation of partial safety factors for fire safety evaluation using the reliability index β method. In: Proceedings of the 5th international symposium of the international association for fire safety science (IAFSS), 03-07/03, Melbourne, Australia

Gernay T, Elhami Khorasani N, Garlock M (2016) Fire fragility curves for steel buildings in a community context: A methodology. Eng Struct 113:259–276

Geyer CJ (1992) Practical Markov chain Monte Carlo. Stat Sci 7:473–483

Gulvanessian H, Calgaro J-A, Holicky M (2012) Designers’ guide to eurocode: basis of structural design EN 1990, 2nd edn. ICE Publishing, London

Guo Q, Jeffers AE (2014) Direct differentiation method for response sensitivity analysis of structures in fire. Eng Struct 77:172–180

Hietaniemi J (2007) Probabilistic simulation of fire endurance of a wooden beam. Struct Saf 29:322–336

Hopkin D (2016) A review of fire resistance expectations for high-rise UK apartment buildings. Fire Technol 53:87–106

Jaynes E (1957) Information theory and statistical mechanics. Phys Rev 106:620–630

Lange D, Devaney S, Usmani A (2014) An application of the PEER performance based earthquake engineering framework to structures in fire. Eng Struct 66:100–115

Nathwani J, Lind NC, Pandey MD (1997) Affordable safety by choice: the life quality method. University of Waterloo, Waterloo

Novi Inverardi PL, Tagliani A (2003) Maximum entropy density estimation from fractional moments. Commun Stat Theory Methods 32:327–345

Olsson A, Sandberg G, Dahlblom O (2003) On Latin hypercube sampling for structural reliability analysis. Struct Saf 25:47–68

Rackwitz R (2001) Optimizing systematically renewed structures. Reliab Eng Syst Saf 73:269–280

Rosenblueth E, Mendoza E (1971) Reliability optimization in isostatic structures. J Eng Mech Div 97:1625–1642

Sidibé K, Duprat F, Pinglot M, Bourret B (2000) Fire safety of reinforced concrete columns. ACI Struct J 97:642–647

Torrent RJ (1978) The log-normal distribution: a better fitness for the results of mechanical testing of materials. Matér Constr 11:235–245

Van Coile R, Caspeele R, Taerwe L (2013) The mixed lognormal distribution for a more precise assessment of the reliability of concrete slabs exposed to fire. In: Proceedings of the 2013 European safety and reliability conference (ESREL), 29/09-02/10, Amsterdam, The Netherlands, pp 2693–2699

Van Coile R, Caspeele R, Taerwe L (2014a) Reliability-based evaluation of the inherent safety presumptions in common fire safety design. Eng Struct 77:181–192

Van Coile R, Caspeele R, Taerwe L (2014b) Lifetime cost optimization for the structural fire resistance of concrete slabs. Fire Technol 50:1201–1227

Van Coile R (2015) Reliability-based decision making for concrete elements exposed to fire. Doctoral dissertation. Ghent University, Belgium

Van Coile R (2016) Towards reliability-based structural fire safety: development and probabilistic applications of a direct stiffness method for concrete frames exposed to fire. Postgraduate dissertation. Ghent University, Belgium

Van Weyenberge B, Deckers X, Caspeele R, Merci B (2016) Development of a full probabilistic QRA method for quantifying the life safety risk in complex building designs. In: Proceedings of the 11th conference on performance-based codes and fire safety design methods, 23-25/05, Warsaw, Poland

Wang L, Caspeele R, Van Coile R, Taerwe L (2015) Extension of tabulated design parameters for rectangular columns exposed to fire taking into account second order effects and various fire models. Struct Concr 16:17–35

Zhang X (2013) Efficient computational methods for structural reliability and global sensitivity analyses. Doctoral dissertation. University of Waterloo, Waterloo, Canada

Zhang X, Li X, Hadjisophocleous G (2013) A probabilistic occupant evacuation model for fire emergencies using Monte Carlo methods. Fire Saf J 58:15–24


Cite this article

Van Coile, R., Balomenos, G.P., Pandey, M.D. et al. An Unbiased Method for Probabilistic Fire Safety Engineering, Requiring a Limited Number of Model Evaluations. Fire Technol 53, 1705–1744 (2017). https://doi.org/10.1007/s10694-017-0660-4
