Abstract

A direct multiple shooting (MS) method is implemented to solve optimal control problems (OCP) in the Mayer form. The use of an MS method gives rise to the so-called ‘continuity conditions’ that must be satisfied together with general algebraic equality and inequality constraints. The resulting finite nonlinear optimization problem is solved by a first-order descent method based on the filter methodology. In the equivalent tri-objective problem, the descent method aims to minimize the objective function, the violation of the ‘continuity conditions’ and the violation of the algebraic constraints simultaneously. The numerical experiments carried out with different types of benchmark OCP are encouraging.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

Keywords

24.1 Introduction

An optimal control problem (OCP) is a constrained optimization problem that has a set of dynamic equations as constraints. Application domains of OCP are varied [1]. There are three types of OCP that differ in the formulation of the functional to be optimized. For example, an OCP of the Lagrange form has the objective functional in its pure integral form as shown

where \(\mathbf {y} \in \mathbb {R}^{\bar{s}}\) is the vector of state variables of the dynamic system, \(\mathbf {u} \in U \subset \mathbb {R}^c\) is the vector of control or input variables and U represents a class of functions (in particular functions of class \(C^1\) and piecewise constant) and usually contains limitations to the control [2]. To convert problem (24.1) into the Mayer form, a new variable is added to the states vector \(\mathbf {y}\), such that \(y^{\prime }_{s}(t) = f_2(t, \mathbf {y}(t), \mathbf {u}(t))\) with the initial condition \(y_{s}(0) = 0\), where \(s=\bar{s}+1\) represents the total number of state variables. Thus, problem (24.1) becomes:

In the OCP we want to find \(\mathbf {u}\) that minimizes the objective functional J subject to the dynamic system of ordinary differential equations (ODE). The problem may have other more complex ‘terminal constraints’ \(H(T,\mathbf {y}(T),\mathbf {u}(T))~=~0\). States \(\mathbf {y}\) and control \(\mathbf {u}\) may also be constrained by algebraic equation constraints \(h_e(t, \mathbf {y}(t), \mathbf {u}(t)) = 0,\, e \in E\) and ‘path constraints’ \(g_j(t, \mathbf {y}(t), \mathbf {u}(t)) \le 0,\, j \in F\), where \(E=\left\{ 1,2,\right. \left. \ldots ,m \right\} \) and \(F=\left\{ 1,2,\ldots ,l \right\} \).

Methods for solving OCP like (24.2) can be classified into indirect and direct methods. Indirect methods use the first-order necessary conditions from Pontryagin’s maximum principle to reformulate the original problem into a boundary value problem. On the other hand, direct methods solve the OCP directly [3] transforming the infinite-dimensional OCP into a finite-dimensional optimization problem that can be solved by effective and well-established nonlinear programming (NLP) algorithms. All direct methods discretize the control variables but differ in the way they treat the state variables [4]. They are also classified as Discretize then Optimize strategies in contrast to the Optimize then Discretize strategies of the indirect methods [1].

This paper explores the use of a first-order descent method based on the filter methodology [5, 6] to solve the NLP problem, within a direct method for solving an OCP in the Mayer form. The use of a direct multiple shooting (MS) method gives rise to the so-called ‘continuity conditions’ that must be satisfied. The novelty here is that a filter methodology is used to minimize the objective function, the violation of the ‘continuity conditions’ and the violation of algebraic constraints simultaneously. The NLP problem is a tri-objective problem and the first-order descent method generates a search direction that is either the negative gradient of one of the functions to be minimized or a convex combination of negative gradients of two functions. To overcome the drawback of computing first derivatives, the gradients are approximated by finite differences.

The paper is organized as follows. Section 24.2 briefly describes the direct MS algorithm for solving the OCP in the Mayer form. The herein proposed first-order descent filter algorithm is discussed in Sect. 24.3, the numerical experiments are shown in Sect. 24.4 and we conclude the paper with Sect. 24.5.

24.2 Direct Multiple Shooting Method

In a direct single shooting (SS) method, only the controls are discretized in the NLP problem [3]. The dynamic system is solved by an ODE solver to get the state values for the optimization. Thus, simulation and optimization are carried out sequentially. On a specific grid defined by \(0 = t_1< t_2< \cdots< t_{N-1} < t_N = T\), where \(N-1\) is the total number of subintervals, the control \(\mathbf {u}(t)\) is discretized, namely using piecewise polynomial approximations. The simplest of all is a piecewise constant, \( \mathbf {u}(t) = \mathbf {q}^i, \,\,\ \text{ for } \,\,\ t \in [t_i, t_{i+1}] \,\, \text{ and } \,\, i=1, \ldots , N-1 \) so that \(\mathbf {u}(t)\) only depends on the control parameters \(\mathbf {q} = (\mathbf {q}^1, \mathbf {q}^2, \ldots , \mathbf {q}^{N-1})\) and \(\mathbf {u}(t) = \mathbf {u}(t,\mathbf {q})\). When the horizon length T is not fixed, the control parameter vector also includes T to define the optimization variables. The dynamic system is solved by (forward numerical integration) an ODE solver and the state variables \(\mathbf {y}(t)\) are considered as dependent variables \(\mathbf {y}(t,\mathbf {q})\). The main advantage of a direct SS method is the reduced number of decision variables (control parameters) in the NLP even for very large dynamic systems. However, unstable systems may be difficult to handle.

In a direct MS method, discretized controls and state values at the start nodes of the grid (grid points)—\(\mathbf {x}^i \in \mathbb {R}^s\), \(i=1,2,\ldots ,N-1\), known as MS node variables—are the decision variables for the NLP solver [7]. After the discretization of the controls, the ODE system is solved on each shooting subinterval \([t_i, t_{i+1}]\) independently, but they need to be linked by the auxiliary variables \(\mathbf {x}^i\), \(i=1,2,\ldots ,N-1\). They are the initial values for the state variables for the \(N-1\) independent initial value problems on the subintervals \([t_i, t_{i+1}]\):

where \(\mathbf {y} \in \mathbb {R}^s\). Trajectories \(\mathbf {y}^i(t; \mathbf {x}^i, \mathbf {q}^i)\) are obtained where the notation “\((t; \mathbf {x}^i, \mathbf {q}^i\))”, for the argument, means that they are dependent on t as well as on the specified values for the node variables \(\mathbf {x}^i\) and control parameters. The initial state values \(\mathbf {x}^i\) should satisfy the ‘continuity conditions’

(ensuring continuity of the solution trajectory), the initial value \(\mathbf {x}^1 = \mathbf {y}_0\) and the final state constraints \(\mathbf {x}^N = \mathbf {y}_T\) [4, 8].

We choose to implement a direct MS method since it can cope with differential and algebraic equations that show unstable dynamical behavior [7]. The main steps of the direct MS algorithm are shown in Algorithm 1.

24.3 First-Order Descent Filter Method

The herein proposed first-order descent filter method relies on descent directions for two constraint violation functions (handled separately) and for the objective function in order to converge towards the optimal solution of the NLP problem. One of the constraint violation functions emerges from the ‘continuity constraints’ violation (including initial state and final state constraints) and the other comes up from the state and control algebraic equality and inequality constraints. We assume that the NLP problem is a non-convex constrained optimization problem (COP). For practical purposes, we assume that the OCP is in the Mayer form, the ODE system has initial and boundary state values, state and control variables are constrained by algebraic equality and inequality constraints, and the explicit 4th. order Runge-Kutta integration formula is used to solve the dynamic system in each subinterval \([t_i, t_{i+1}]\) using 5 points.

As stated in the last section, the decision variables of the COP are the initial state values at the nodes \(\mathbf {x}^i \in \mathbb {R}^s\), \(i~=~1,\ldots ,N\) and the control variables \(\mathbf {q}^i \in \mathbb {R}^c\), \(i~=~1,\ldots ,N-1\). Besides possible algebraic constraints on the state and control variables, the ‘continuity constraints’ (24.3), the initial state and the final state constraints must be added to the optimization problem formulation. Thus, our COP has the following form:

where \(I=\left\{ 1,\ldots ,N-1 \right\} \) and \(I_N = I \cup \{N\}\). To solve the optimization problem (24.4), the set of ODE must be solved so that the ‘continuity constraints’ \(\mathbf {y}^i(t_{i+1}; \mathbf {x}^i, \mathbf {q}^i) - \mathbf {x}^{i+1} = 0\), the initial state and the final state constraints, the other equality and inequality constraints and the objective function are evaluated (see Algorithm 1). Since problem (24.4) has constraints, we seek optimal values for \(\mathbf {x}\) and \(\mathbf {q}\) such that all the constraints are satisfied—a feasible solution of the COP—and the objective function takes the least value.

24.3.1 Filter Methodology

To check solution feasibility, a measure for the violation of the constraints is adopted. To implement the herein proposed filter methodology, the constraints are fractionated into two sets and their violations are computed and handled separately. We denote the violation of the ‘continuity constraints’, initial state and final state constraints by the non-negative function:

where \(L=\{1,2,\ldots ,s\}\), noting that \(\theta (\mathbf {x},\mathbf {q})\) is zero if the solution \((\mathbf {x},\mathbf {q})\) satisfies these constraints, and is positive otherwise. These are the constraints that are more difficult to be satisfied and we need to priority drive the violation \(\theta \) to zero as soon as possible so that the ODE integration runs as close as possible to the exact values of the state variables.

To evaluate the algebraic equality and inequality constraints violation, a non-negative function p, also based on the Euclidean norm of vectors, is used

and similarly, \(p(\mathbf {x},\mathbf {q})=0\) when the corresponding constraints are satisfied, and \(p(\mathbf {x},\mathbf {q})>0\) otherwise. The violation of these constraints is also forced to converge to zero.

The extension of the filter methodology [5] into the descent algorithm to solve the COP is equivalent to the reformulation of the problem (24.4) as a tri-objective optimization problem that aims to minimize both the feasibility measures, defined by the constraint violation functions \(\theta (\mathbf {x},\mathbf {q})\) and \(p(\mathbf {x},\mathbf {q})\), and the optimality measure defined by the objective function \(y_s(T)\):

In our filter methodology, a filter \(\mathscr {F}\) is a finite set of triples \((\theta (\mathbf {x},\mathbf {q}), p(\mathbf {x},\mathbf {q}), y_{s}(T))\) that correspond to points \((\mathbf {x},\mathbf {q})\), none of which is dominated by any of the others in the filter. A point \((\hat{\mathbf {x}},\hat{\mathbf {q}})\) is said to dominate a point \((\mathbf {x},\mathbf {q})\) if and only if the following conditions are satisfied simultaneously:

with at least one inequality being strict. The filter is initialized to \(\mathscr {F} = \{(\theta ,p,y_{s}): \theta \ge \theta _{\max }, p \ge p_{\max } \}\), where \(\theta _{\max }, p_{\max }>0\) are upper bounds on the acceptable constraint violations. Let \(\mathscr {F}_k\) be the filter at iteration k of the algorithm. To avoid the acceptance of a trial point \((\bar{\mathbf {x}},\bar{\mathbf {q}})\) (approximation to the optimal solution), or the corresponding triple \((\theta (\bar{\mathbf {x}},\bar{\mathbf {q}}), p(\bar{\mathbf {x}},\bar{\mathbf {q}}), \bar{y}_{s}(T))\), that is arbitrary close to the boundary of the filter, the conditions of acceptability to the filter define an envelope around the filter and are as follows:

for all points \((\mathbf {x}^{(l)},\mathbf {q}^{(l)})\) that correspond to triples \((\theta (\mathbf {x}^{(l)},\mathbf {q}^{(l)}),p(\mathbf {x}^{(l)},\mathbf {q}^{(l)}),y^{(l)}_{s}(T))\) in the filter \(\mathscr {F}_k\). Points with constraint violations that exceed \(\theta _{\max }\) or \(p_{\max }\) are not acceptable. The constant \(\gamma \in (0,1)\) is fixed and the smaller the tighter is the envelope of acceptability. The above conditions impose a sufficient reduction on one of the feasibility measures or on the optimality measure for a point to be acceptable. When the point is acceptable to the filter, the filter is updated and whenever a point is added to the filter, all the dominated points are removed from it.

24.3.2 The First-Order Descent Filter Algorithm

The proposed first-order descent method is based on using gradient approximations of the functions, \(\theta \), p or \(y_{s}\), of the tri-objective problem (24.7), to define search directions coupled with a simple line search to compute a step size that gives a simple decrease on one of the measures \(\theta \), p or \(y_{s}\). Since \(\theta \) is the most difficult to reduce, priority is given to searching along the (negative) gradient of \(\theta \) or a (negative) combination of the gradient of \(\theta \) with the gradient of p or \(y_{s}\). See Algorithm 2. For easy of notation \( \mathbf {v} = \left( x^1_1,\ldots ,x^1_s, \ldots , x^N_1,\ldots ,x^N_s,q^1_1,\ldots ,q^{1}_c, \ldots , q^{N-1}_1,\ldots ,q^{N-1}_c \right) ^T \) is used to denote the vector of the decision variables (\(\mathbf {v} \in \mathbb {R}^{n_D}\;, n_D = N s+(N-1) c\)).

Each component i of the gradient of \(\theta \) with respect to the variable \(v_i\), at an iteration k, is approximated by

for a positive and sufficiently small constant \(\varepsilon \), being the vector \(\mathbf {e}_i \in \mathbb {R}^{n_D}\) the i column of the identity matrix. Similarly for the gradients approximation of p and \(y_{s}\).

To identify the best point computed so far, the below conditions (24.10) are imposed. Let \(\mathbf {v}^{best}\) be the current best approximation to the optimal solution of problem (24.7). A trial point, \(\bar{\mathbf {v}}\), will be the best point computed so far (replacing the current \(\mathbf {v}^{best}\)) if one of the conditions

holds, where \(\varTheta = \theta +p\). At each iteration, the algorithm computes a trial point \(\bar{\mathbf {v}}\), approximation to the optimal solution, by searching along a direction that is the negative gradient of \(\theta \), or a negative convex combination of the gradients of \(\theta \) and p, \(\theta \) and \(y_{s}\), or p and \(y_{s}\), at the current approximation \(\mathbf {v}\). The selected direction depends on information related to the magnitude of \(\theta \) and p, at \(\mathbf {v}\). For example, if \(p(\mathbf {v})\) is considered sufficiently small, i.e., \(0 \le p(\mathbf {v}) \le \eta _1\), while \(\theta (\mathbf {v})> \eta _1\) (for a small error tolerance \(\eta _1>0\)), then the direction is the negative gradient of \(\theta \) at \(\mathbf {v}\). The search for a step size \(\alpha \in (0,1]\) goals the reduction of \(\theta \) (‘\(M \leftarrow \theta \)’ in Algorithm 2). On the other hand, if both p and \(\theta \) are considered sufficiently small, then the direction is the negative convex combination of the gradients of \(\theta \) and \(y_s\), although the search for \(\alpha \) forces the reduction on \(\theta \).

If both \(\theta \) and p are not small yet (situation that occurs during the initial iterations) the direction is along the negative convex combination of the gradients of \(\theta \) and p, although the line search forces the reduction on \(\theta \). However, if \(0 \le \theta (\mathbf {v}) \le \eta _1\) but \(p(\mathbf {v}) > \eta _1\), then the direction is along the negative convex combination of the gradients of p and \(y_{s}\) and the line search forces the reduction on p. Further details are shown in the Algorithm 2.

The new trial point is accepted for further improvement if it satisfies the conditions to be acceptable to the current filter (see conditions (24.8)), although each trial point is considered as a new approximation to the optimal solution only if it is better than the previously saved best point, according to (24.10). In this situation, a new outer iteration—indexed by k in Algorithm 2—is carried out unless the convergence conditions are satisfied (see (24.11) below). If the trial point is accepted but it does not satisfy (24.10), \(\theta \), p and \(y_{s}\) are evaluated at the trial point and a new inner iteration—indexed by It—is carried out. This inner iterative process runs for a maximum of \(It_{\max }\) iterations.

The trial point might not be acceptable to the filter, in which case another inner iteration is tried. If the number of iterations with non acceptable trial points reaches \(It_{\max }\), the new direction is along the negative convex combination of the gradients of \(\theta \) and \(y_s\) (with a reduction on \(y_s\) in the line search); otherwise, the negative convex combination of the gradients of \(\theta \) and p (with a reduction on \(\theta \) in the line search) is tested.

The convergence conditions are said to be satisfied at a new trial point—the best point computed so far, \(\mathbf {v}^{best}\),—if

for small error tolerances \(\eta _1>0\) and \(\eta _2>0\), where the superscript pr.best refers to the previous best point. The outer iterative process also terminates if the number of iterations exceeds \(k_{\max }\).

24.4 Numerical Experiments

The new direct MS method based on descent directions and the filter methodology has been tested with seven OCP. The MATLAB\(^\circledR \) (MATLAB is a registered trademark of the MathWorks, Inc.) programming language is used to code the algorithm and the tested problems. The numerical experiments were carried out on a PC Intel Core i7–7500U with 2.7 GHz, 256 Gb SSD and 16 Gb of memory RAM. The values set to the parameters are shown in Table 24.1.

First, three problems with free terminal time T are solved. A simple approach is to apply the change of variable \(t = T \tau \), (with \(dt = T d\tau \)) which transforms the problem into a fixed boundary problem on the interval [0, 1] and treats T as an auxiliary variable. When the objective is to minimize T, an alternative is to add a new variable to the states vector \(\mathbf {y} \in \mathbb {R}^{s-1}\) such that \(y^{\prime }_{s}(t) = 1\), with initial value \(y_{s}(0) = 0\).

Problem 24.1

A simple car model (Dubins car) is formulated with three degrees of freedom where the car is imagined as a rigid body that moves in a plane [2]. The position of the car is given by \((x, y, \beta )\) where x and y are the directions and \(\beta \) is the angle with the X axis. The problem is to drive in minimum time the car from a position to the origin:

The results from both strategies to handle T free are shown in Table 24.2. The initial guesses were \(x(t_i)= 2,\, y(t_i)= 0,\, \beta (t_i)= 1, i\in I_N\) and \(u(t_i)= 0, i\in I\). The number of points considered in [0, T] is 11. The table shows the values of J, \(\theta \) and p achieved at iteration k, as well as the number of function evaluations, nfe, and the time in seconds, time. Optimal solution reported [2] is \(J^*= 4.32174\). The results are considered quite satisfactory. We show in Fig. 24.1a, b the optimal states trajectory and control respectively, obtained from the run that considers the change of variable \(t \rightarrow \tau \). Figure 24.1c displays the optimal control required to achieve identical states trajectory from the run that adds a new state variable. Slightly different optimal controls were obtained to reach identical states trajectory.

a States trajectory for Dubins car. b Optimal control for Dubins car. c Optimal control for Dubins car (when adding new \(y_s\)). d States trajectory for R allocation. e Optimal control for R allocation. f Optimal control for R allocation (when adding new \(y_s\)). g States trajectory for Zermelo. h Optimal control for Zermelo. i Optimal control for Zermelo (when adding new \(y_s\))

Problem 24.2

The resource allocation problem (R allocation) goals the assignment of resources in minimum time [2]:

Since \(u_2=1-u_1\) the control vector can be reduced to a scalar \(u_1 \equiv u \in [0,1]\). Using the initial guesses \(y_1(t_i)= 1,\, y_2(t_i)= 0, i\in I_N\), \(u(t_i)= 0, i\in I\) and \(N=11\), the results are shown in Table 24.2. Optimal solution reported [2] is \(J^*= 0.714118\). Figures 24.1d, e show the optimal states \(y_1,\, y_2\) and control \(u_1,\, u_2\) respectively, for the case where a change of variable is applied. Figure 24.1f shows the control for the case of handling T free through the adding of a new state variable. The states trajectory are similar to Fig. 24.1d.

Problem 24.3

Consider an unmanned aerial vehicle (Zermelo) flying in a horizontal plane with constant speed V, although the heading angle u(t) (control input) (with respect to the X axis) can be varied. Winds are assumed to be in the Y direction with speed w. The objective is to fly from point A \(=(0,1)\) to B \(=(0,0)\) in minimum time:

For \(V=1\), \(w=1/\sqrt{2}\) and using the initial guesses \(x(t_i)= 0,\, y(t_i)= 1, i\in I_N\) and \(u(t_i)= 0, i\in I\), the results are shown in Table 24.2 for \(N=11\). A value near \(T=3.5\) is exhibited in [9]. The optimal states \(x,\, y\) and control u (from the run based on the change of variable \(T \rightarrow \tau \)) are shown in Fig. 24.1g, h respectively. Figure 24.1i presents the optimal control obtained from the run that adds a new variable to the states vector.

The next three problems are OCP of the Lagrange form and the last problem is already in the Mayer form.

Problem 24.4

In a continuous stirred-tank chemical reactor (Tank reactor), \(y_1\) represents the deviation from the steady-state temperature, \(y_2\) represents the deviation from the steady-state concentration and u is the effect of the coolant flow on the chemical reaction [10]:

The optimal solution reported in [10], for \(T=0.78\) and \(R=0.1\), is \(J^*= 0.0268\). Using the initial guesses \(y_1(t_i)= 0.05,\, y_2(t_i)= 0, i\in I_N\) and \(u(t_i)= 0.75, i\in I\), with \(N~=~11\), the results are shown in Table 24.3. The proposed strategy has produced again a reasonably good solution. Figures 24.2a, b show the optimal states \(y_1, y_2\) and control u respectively.

States trajectory and optimal control. a States for problem Tank reactor. b Control for problem Tank reactor. c States for problem masstravel. d Control for problem masstravel. e States for problem trajectory. f Control for problem trajectory. g States for problem obstacle. h Control for problem obstacle

Problem 24.5

In the point mass maximum travel example (masstravel), the force u(t) that moves a mass to the longest distance is to be found (with \(T=10\) fixed):

The results, for \(k_0=0.1,\, k_1=0.2,\, k_2=1,\, k_3=1\) and \(N=11\), are shown in Table 24.3. The initial guesses were \(s(t_i)= 1,\, v(t_i)= 2, i\in I_N\) and \(u(t_i)= 5, i\in I\). When transforming the above form into the Mayer form, the objective function value is just s(T) (thus no new state variable was added to the states vector). To confirm convergence, the problem is also solved with \(\eta _1=1E{-}10\), \(\eta _2=1E{-}06\) in (24.11)—identified with \(^\S \) in Table 24.3. Figures 24.2c, d contain the states and control respectively.

Problem 24.6

(trajectory) Find u(t) that minimizes J (with \(T=3\) fixed) [4],

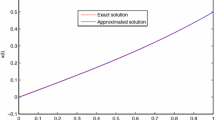

The obtained results for \(N=11\), with the initial guesses \(y(t_i)= 1, i\in I_N\) and \(u(t_i)= 0, i\in I\), are displayed in Table 24.3. Results with \(\eta _1=1E{-}10\), \(\eta _2=1E{-}06\) in (24.11) are also included. The Fig. 24.2e, f present the states and control respectively.

Problem 24.7

The obstacle problem (obstacle) can be reformulated as [3] (\(T=2.9\)):

Using the initial guesses \(y_1(t_i)= 0,\, y_2(t_i)= 0, i\in I_N\), \(u(t_i)= 0, i\in I\) and \(N=11\), the results are shown in Table 24.3. This problem is also solved with \(\eta _1=1E{-}10\), \(\eta _2=1E{-}06\) in (24.11) to analyze the convergence issue. Figures 24.2g, h show the states \(y_1, y_2\) and control u respectively.

24.5 Conclusions

A first-order descent method based on a filter methodology is proposed to solve a finite-dimensional nonlinear optimization problem that arises from the use of a direct multiple shooting method for OCP. The implemented filter method relies on three measures. The two feasibility measures are handled separately in order to give priority to the minimization of the ‘continuity constraints’ violation over the algebraic equality and inequality constraints violation and the objective function. This priority is patent by the use of search directions that are along either the negative of the gradient of the ‘continuity constraints’ violation function or a negative convex combination of that gradient and the gradient of the other constraints violation, or the objective function. Numerical derivatives are implemented in order to avoid computing the first derivatives of the involved functions. The numerical experiments carried out until now have shown that the presented strategy is worth pursuing.

Issues related to the extension of the proposed method to solving retarded OCP with constant delays in the state variables and in the control are now under investigation and will be the subject of a future paper.

References

Biegler L (2010) Nonlinear programming: concepts, algorithms, and applications to chemical processes. MOS-SIAM series on optimization. Society for Industrial and Applied Mathematics, Philadelphia, PA

Frego M (2014) Numerical methods for optimal control problems with applications to autonomous vehicles. PhD thesis, University of Trento

Schlegel M, Stockmann K, Binder T et al (2005) Dynamic optimization using adaptive control vector parameterization. Comput Chem Eng 29(8):1731–1751

Diehl M, Bock HG, Diedam H et al (2006) Fast direct multiple shooting algorithms for optimal robot control. In: Diehl M, Mombaur K (eds) Fast motions in biomechanics and robotics. Lecture notes in control and information sciences, vol 340, pp 65–93

Fletcher R, Leyffer S (2002) Nonlinear programming without a penalty function. Math Program Ser A 91(2):239–269

Audet C, Dennis JE Jr (2004) A pattern search filter method for nonlinear programming without derivatives. SIAM J Optim 14(4):980–1010

Assassa F, Marquardt W (2014) Dynamic optimization using adaptive direct multiple shooting. Comput Chem Eng 60:242–259

Schäder A, Kühl P, Diehl M et al (2007) Fast reduced multiple shooting methods for nonlinear model predictive control. Chem Eng Process 46(11):1200–1214

How JP 16.323 Principles of Optimal Control. Lecture 7 Numerical Solution in Matlab. Spring 2008. Massachusetts Institute of Technology: MIT OpenCourseWare. License: Creative Commons BY-NC-SA. http://ocw.mit.edu

Kirk DE (1970) Optimal control theory: an introduction. Prentice Hall Inc, NJ

Acknowledgments

We acknowledge the financial support of CIDEM, R&D unit funded by the FCT—Portuguese Foundation for the Development of Science and Technology, Ministry of Science, Technology and Higher Education, under the Project UID/EMS/0615/2019, and FCT within the Projects Scope: UIDB/00319/2020 and UIDB/00013/2020 and UIDP/00013/2020 of CMAT-UM.

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2021 The Editor(s) (if applicable) and The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Cite this chapter

Ramadas, G.C.V., Fernandes, E.M.G.P., Rocha, A.M.A.C., Costa, M.F.P. (2021). A Multiple Shooting Descent-Based Filter Method for Optimal Control Problems. In: Gaspar-Cunha, A., Periaux, J., Giannakoglou, K.C., Gauger, N.R., Quagliarella, D., Greiner, D. (eds) Advances in Evolutionary and Deterministic Methods for Design, Optimization and Control in Engineering and Sciences. Computational Methods in Applied Sciences, vol 55. Springer, Cham. https://doi.org/10.1007/978-3-030-57422-2_24

Download citation

DOI: https://doi.org/10.1007/978-3-030-57422-2_24

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-57421-5

Online ISBN: 978-3-030-57422-2

eBook Packages: Computer ScienceComputer Science (R0)