Abstract

Sparsity is an important prior for various signals, and sparsity-based methods have been widely used in hyperspectral image classification. This chapter introduces the sparse representation methodology and its related techniques for hyperspectral image classification. To start with, we provide a brief review on the mechanism, models, and algorithms of sparse representation classification (SRC). We then introduce several advanced SRC methods that can improve hyperspectral image classification accuracy by incorporating spatial–spectral information into SRC models. As a case study, a hyperspectral image SRC method based on adaptive spatial context is discussed in detail to demonstrate the performance of SRC methods in hyperspectral image classification.

Access provided by Autonomous University of Puebla. Download chapter PDF

Similar content being viewed by others

8.1 Introduction

In the last few decades, sparsity has become one of the most important concepts in the field of signal processing. Sparsity concept has been widely employed in a variety of fields, e.g., source separation, restoration, and compression. Sparse representation was originally derived from compressed sensing [1,2,3], suggesting that if a signal is sparse or compressive, the original signal can be reconstructed with a few number of samplings. By introducing sparsity in sampling, compressed sensing has achieved great success in information theory, image acquisition, image processing, medical imaging, remote sensing, etc. Compressed sensing has also motivated many researches on sparse representation. As a matter of fact, signals in real world may not be sparse in the original space, but they can be sparse in an appropriate basis.

Hyperspectral imaging sensors record reflected light in hundreds of narrow frequencies covering the visible, near-infrared, and shortwave infrared bands. This abundant spectral information yields more precise measures and makes it possible to gain insight into the material at each pixel in the image. Supervised classification plays a central role in hyperspectral image (HSI) analysis, such as land-use or land-cover mapping, forest inventory, or urban-area monitoring [4]. Many methods have been proposed for solving the HSI classification problem, such as logistic regression [5], support vector machines (SVM) [6], artificial neural networks [7], and k-nearest neighbor (KNN) classifier [8]. These methods can serve the purpose of generating acceptable classification results. However, the high dimensionality of hyperspectral data remains a challenge for HSI classification.

To address this problem, sparse representation [9, 10] has been employed for classifying high-dimensional signals. A sparse representation classification (SRC) method [10] has been first proposed for face recognition. A test signal is sparsely represented by an over-complete dictionary composed of labeled training samples. At the decision level, the label of each test sample is set as the class whose corresponding atoms maximally represent the original test sample. Since then, SRC has been widely used in face recognition [10, 11], speech recognition [12], and image super-resolution [13]. Chen et al. [14] proposed an SRC framework for solving the HSI classification problem, in which each sample is a pixel’s spectral responses. Inspired by this work, many improved SRC methods have been proposed for HSI classification.

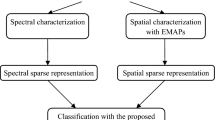

In this chapter, we investigate the SRC methods and present several advanced models of sparse representation for HSI classification. More specifically, we will give a case study of SRC method that improves the classification accuracy by incorporating the spectral–spatial information of HSI into the SRC framework.

8.2 Sparse Representation-Based HSI Classification

In the theory of sparse representation, given a dictionary, each signal can be linearly represented by a set of atoms in the dictionary. Designing an over-complete dictionary and obtaining the sparse representation vector through sparse coding are the two main goals of sparse representation.

In HSI classification, SRC assumes that the features belonging to the same class approximately lie in the same low-dimensional subspace spanned by dictionary atoms from the same class. Suppose we have M distinct classes and \( N_{i} (i = 1,2, \ldots ,M) \) training samples for each class. Each class has a sub-dictionary \( {\mathbf{D}}_{i} = {\mathbf{[d}}_{i,1} {\mathbf{,d}}_{i,2} , \ldots ,{\mathbf{d}}_{{i,N_{i} }} {\mathbf{]}} \in {\mathbb{R}}^{{B \times N_{i} }} \) in which the columns represent training samples and B is the number of spectral bands. A test pixel \( {\mathbf{x}} \in {\mathbb{R}}^{B} \) can be represented by a sparse linear combination of the training pixels as

where \( {\mathbf{D}} = [{\mathbf{D}}_{1} \,{\mathbf{D}}_{2} \ldots {\mathbf{D}}_{M} ] \in {\mathbb{R}}^{B \times N} \) with \( N = \sum\nolimits_{i = 1}^{M} {N_{i} } \) is the dictionary constructed by combining all sub-dictionaries \( \{ {\mathbf{D}}_{i} \}_{i = 1, \ldots ,M} \). \( {\varvec{\upalpha}} \in {\mathbb{R}}^{N} \) is an unknown sparse vector with K nonzero entries. Here, we denote \( K = \left\| {\varvec{\upalpha}} \right\|_{0} \). The sparse coefficient vector \( {\varvec{\upalpha}} \) is obtained by solving the following problem

where \( K_{0} \) is a pre-specified upper bound of K. The class label of x is determined by the minimal residual between x and its approximation from each class sub-dictionary, i.e.,

where \( {\varvec{\upalpha}}_{i} \) is the sub-vector corresponding to the i-th class, and \( {\mathbf{D}}_{i} \) denotes the sub-dictionary.

Problem (2) is NP-hard, and can be approximately solved by greedy algorithms, such as orthogonal match pursuit (OMP) and subspace pursuit (SP).

In OMP algorithm, we select one atom from the dictionary that is most correlated with the residual. The algorithmic flow of the OMP algorithm is described in Algorithm 8.1.

The procedure of SP algorithm is similar to that of OMP algorithm. The difference is that SP finds all the K atoms that satisfy (8.2) during one iteration. The complete procedure of SP algorithm is provided in Algorithm 8.2.

8.3 Advanced Models of Sparse Representation for Hyperspectral Image Classification

Many advanced methods based on SRC have been proposed for HSI classification.

In HSI, pixels within a small neighborhood usually consist of similar materials. Therefore, these pixels tend to have high spatial correlation [14]. The corresponding sparse coefficient vectors share a common sparsity pattern as follows.

Let \( \{ {\mathbf{x}}_{t} \}_{t = 1, \ldots ,T} \) be T pixels in a fixed window centered at \( {\mathbf{x}}_{1} \). These pixels can be represented by

In the joint sparsity model (JSM), the sparse vectors \( \{ {\varvec{\upalpha}}_{t} \}_{t = 1, \ldots ,T} \) share the same support \( \Omega \). S is a sparse matrix with \( \left|\Omega \right| \) nonzero rows, which can be obtained by solving the following optimization problem,

where \( \left\| {\mathbf{S}} \right\|_{{{\text{row}},0}} \) denotes the number of nonzero rows of S, and \( \left\| \cdot \right\|_{F} \) denotes the Frobenius norm. The problem in (8.5) can be approximately solved by the simultaneous version of OMP (SOMP). The label of the central pixel \( {\mathbf{x}}_{1} \) can be determined minimizing the total residual

where \( {\mathbf{S}}_{i} \) is the sub-sparse coefficient matrix corresponding to the i-th class.

Note that, the optimization models (8.2) and (8.5) are non-convex, and can be converted into convex versions by relaxing the norm constraints:

where \( \left\| {\varvec{\upalpha}} \right\|_{1} = \sum\limits_{i = 1}^{N} {\left| {\alpha_{i} } \right|} \) is the \( {\ell }_{1} \) norm, \( \left\| {\mathbf{S}} \right\|_{1,2} = \sum\limits_{i = 1}^{N} {\left\| {{\mathbf{s}}^{i} } \right\|_{2} } \) is the \( {\ell }_{1,2} \) norm, and \( {\mathbf{s}}^{i} \) represents the i-th row of S.

The JSM model enforces that the pixels in the neighborhood of the test sample are represented by the same atoms. However, if the neighboring pixels are on the boundary of several homogeneous regions, they would be classified into different classes. In this scenario, different sub-dictionaries should be used. Laplacian sparsity promotes sparse coefficients of neighboring pixels belonging to different clusters to be different from each other. For this reason, a weight matrix W is introduced, where \( w_{ij} \) represents the similarity between a pair of pixels \( {\mathbf{x}}_{i} \) and \( {\mathbf{x}}_{j} \) in the neighborhood of the text sample. As reported in [15], the optimization problem with additional Laplacian sparsity prior can be described as

where \( \lambda_{1} \) and \( \lambda_{2} \) are regularization parameters. \( {\mathbf{s}}_{i} \) is the i-th column of matrix S. Weight matrix W can characterize the similarity among neighboring pixels in the spectral space. If two pixels are similar, the weight value will be large. As a result, their corresponding sparse codes will be similar. On the other hand, if two pixels are less similar, the weight value will be small, allowing a large difference between their sparse codes. Laplacian sparsity prior is more flexible than the joint sparsity prior. In fundamental, the joint sparsity prior can be regarded as a special case of Laplacian sparsity. Laplacian sparsity prior can well characterize more pixels in the image, since the sparse codes of the neighboring pixels are not limited to have the same supports. Suppose \( {\mathbf{L}} = {\mathbf{I}} - {\mathbf{H}}^{ - 1/2} {\mathbf{WH}}^{ - 1/2} \) is the normalized symmetric Laplacian matrix and, H is the degree matrix computed from W. We can have the following new optimization problem:

In JSM model, each pixel is represented by the atoms in the dictionary, and is classified according to the residual between the sparse codes multiplying the sub-dictionary. It is a reasonable assumption that each pixel can only be represented by one sub-dictionary. This condition can be achieved by enforcing the sparse codes corresponding to one sub-dictionary to be active and other ones to be inactive. Group Lasso sums up the Euclidean norm of the sparse codes corresponding to all sub-dictionaries as the sparsity prior. In [15], group Lasso is introduced as the new regularization in the optimization problem, i.e.,

where \( g \subset \{ G_{1} ,G_{2} , \cdots G_{M} \} \), \( \sum\limits_{g \in G} {\left\| {{\varvec{\upalpha}}_{g} } \right\|_{2} } \) represents the group sparse prior defined in terms of M groups, \( \omega_{g} \) is the weight and is set to the square root of the cardinality of the corresponding group. Note here that \( {\varvec{\upalpha}}_{g} \) represents the coefficients of different groups. In a similar way, the group sparsity [15] can be employed in the JSM model as follows:

where \( \sum\limits_{g \in G} {\left\| {{\mathbf{S}}_{\text{g}} } \right\|_{F} } \) refers to the collaborative group Lasso regularization defined in terms of groups, and \( {\mathbf{S}}_{\text{g}} \) is the sub-matrix corresponding to the g-th sub-dictionary.

In models (8.11) and (8.12) only group sparsity is introduced, and the sparsity of the sparse code corresponding to sub-dictionary is not taken into consideration. When the sub-dictionary is over-complete, it is important to introduce the sparsity within each group [15]. The \( {\ell }_{1} \)-norm regularization can be incorporated into the objective function of (8.11) as follows:

Similarly, the problem in (8.13) can be extended to JSM as follows:

Another effective method is to introduce the correlation coefficient (CC) [16]. Traditionally, CC value is used to measure the correlation between different variables. In HSI classification, we can use CCs to determine whether pixels represent the same class. In general, CC can be calculated as follows:

where \( \text{var} ({\mathbf{x}}_{i} ) \) and \( \text{var} ({\mathbf{x}}_{j} ) \) are the variance of \( {\mathbf{x}}_{i} \) and \( {\mathbf{x}}_{j} \), respectively. \( {\mathbf{x}}_{iz} \) refers to the z-th element in \( {\mathbf{x}}_{i} \).\( u_{{{\mathbf{x}}_{i} }} = (1/B)\sum\nolimits_{z = 1}^{B} {{\mathbf{x}}_{iz} } \), and \( u_{{{\mathbf{x}}_{j} }} = (1/B)\sum\nolimits_{z = 1}^{B} {{\mathbf{x}}_{jz} } \) represents the mean values of the corresponding vectors. According to the definition of CC, we have \( \left| \rho \right| \le 1 \). Stronger correlation indicates that \( \rho \) is close to 1.

Following the method in [16], CCs among the training samples and test samples are first calculated. Given a test sample x and any training sample \( {\mathbf{d}}_{j}^{i} \), where \( {\mathbf{d}}_{j}^{i} \) represents the j-th atom in the i-th sub-dictionary. The CC between x and \( {\mathbf{d}}_{j}^{i} \) can be calculated as follows:

We define a matrix \( {\varvec{\uprho}}^{i} = \{ \rho_{1}^{i} ,\rho_{2}^{i} , \ldots ,\rho_{{N_{i} }}^{i} \} \). This matrix is sorted in descending order according to CCs among different training samples. Subsequently, the mean of L largest \( {\varvec{\uprho}}^{i} \) is calculated as the CC \( cor^{i} \). Assuming that the L largest \( {\varvec{\uprho}}^{i} \) consists of \( \{ \rho_{1}^{i} ,\rho_{2}^{i} , \ldots ,\rho_{L}^{i} \} \), the CC \( cor^{i} \) can be calculated as

Finally, the CC is combined with the JSM at the decision level to exploit the CCs among training and test samples as well as the representation residuals.

where \( cor^{i} \in [0,1] \) represents the CCs among pixels, and \( \lambda \) is the regularization parameter.

One more approach to improve SRC is kernel trick. As an extension of SRC, kernel SRC (KSRC) uses the kernel trick to project data into a feature space, in which the projected data are linearly separable.

Suppose the feature mapping function \( \phi :{\mathbb{R}}^{B} \to {\mathbb{R}}^{K} ,(B \le K) \) maps the features and also the dictionary to a high-dimensional feature space, \( {\mathbf{x}} \to \phi ({\mathbf{x}}) \), \( {\mathbf{D}} = [{\mathbf{d}}_{1} ,{\mathbf{d}}_{2} , \ldots ,{\mathbf{d}}_{N} ] \to \phi ({\mathbf{D}}) = [\phi ({\mathbf{d}}_{1} ),\phi ({\mathbf{d}}_{2} ), \ldots ,\phi ({\mathbf{d}}_{N} )] \, \). By replacing the mapped features and dictionary in (8.7), we have the KSRC model,

Similarly, the class label of x is determined as

It is worth mentioning that all \( \phi \) mappings used in KSRC occur in the form of inner products, allowing us to define a kernel function k for any samples \( {\mathbf{x}}_{i} \in {\mathbb{R}}^{B} \).

In this way, KSRC can be constructed using only the kernel function, without considering the mapping \( \phi \) explicitly. Then, the optimization problem can be rewritten as

where \( C = \frac{1}{2}{\mathbf{k}}({\mathbf{x}}_{i} ,{\mathbf{x}}_{j} ) \) is a constant, Q is a \( B \times B \) matrix with \( {\mathbf{Q}}_{ij} = {\mathbf{k}}({\mathbf{d}}_{i} ,{\mathbf{d}}_{j} ) \), and p is a \( B \times 1 \) vector with \( {\mathbf{p}}_{i} = {\mathbf{k}}({\mathbf{d}}_{i} ,{\mathbf{x}}) \). Analogously, the classification criterion can be rewritten as

where \( \delta_{i} ( \cdot ) \) is the characteristic function that selects coefficients within the i-th class and sets all other coefficients to zero.

Valid kernels are only those satisfying the Mercer’s condition [17, 18]. Some commonly used kernels in kernel methods include linear kernel, polynomial kernel, and Gaussian radial basis function kernel. Assuming \( {\mathbf{k}}_{1} \) and \( {\mathbf{k}}_{2} \) are two valid Mercer’s kernels over \( {\mathcal{X}} \times {\mathcal{X}} \) with \( {\mathbf{x}}_{i} \in {\mathcal{X}} \subseteq {\mathbb{R}}^{B} \) and \( z > 0 \), the direct sum \( {\mathbf{k}}({\mathbf{x}}_{i} ,{\mathbf{x}}_{j} ) = {\mathbf{k}}_{1} ({\mathbf{x}}_{i} ,{\mathbf{x}}_{j} ) + {\mathbf{k}}_{2} ({\mathbf{x}}_{i} ,{\mathbf{x}}_{j} ) \), tensor product \( {\mathbf{k}}({\mathbf{x}}_{i} ,{\mathbf{x}}_{j} ) = {\mathbf{k}}_{1} ({\mathbf{x}}_{i} ,{\mathbf{x}}_{j} ) \cdot {\mathbf{k}}_{2} ({\mathbf{x}}_{i} ,{\mathbf{x}}_{j} ) \), or scaling \( {\mathbf{k}}({\mathbf{x}}_{i} ,{\mathbf{x}}_{j} ) = z{\mathbf{k}}_{1} ({\mathbf{x}}_{i} ,{\mathbf{x}}_{j} ) \) are valid Mercer’s kernels [19].

A suitable kernel is a kernel whose structure reflects data relations. To properly define such a kernel, unlabeled information and geometrical relationships between labeled and unlabeled samples are very useful. The spatial–spectral kernel sparse representation is proposed [20], in which the neighboring filtering kernel is presented and the corresponding optimization algorithm is developed.

A full family of composite kernels (CKs) for the combination of spectral and spatial contextual information have been presented in SVM [21, 22]. These kernels are valid and are all suitable for KSRC. Although one can improve the performance of KSRC by CK, it is worth noting that the kernel should learn all high-order similarities between neighboring samples directly, and should reflect the data lying in complex manifolds. For these purposes, the neighbor filtering (NF) kernel would be a good choice, which computes the spatial similarity between neighboring samples in the feature space.

Given \( {\mathbf{x}}^{m} \in\Omega ,m = 1,2, \ldots ,\omega^{2} \), with \( \Omega \) being the spatial window \( \omega \) around pixel. Let \( \phi ({\mathbf{x}}^{m} ) \) be the image of \( {\mathbf{x}}^{m} \) under the mapping \( \phi \). In order to describe \( \phi ({\mathbf{x}}) \), a straightforward way is to use the average of spatially neighboring pixels in the kernel space. This method is similar to the mean filtering. The estimated vector is given by

However, the mean filtering rarely reflects relative contributions (which treats every neighboring pixel equally). To address this issue, the neighboring filtering is defined as

where \( {\mathbf{w}}^{m} = \exp ( - \gamma_{0} ||{\mathbf{x}} - {\mathbf{x}}^{m} ||_{2}^{2} ) \) and parameter \( \gamma_{0} > 0 \) acts as a degree of filtering.

Let us consider two different pixels \( {\mathbf{x}}_{i} \) and \( {\mathbf{x}}_{j} \). We are interested in defining a similarity function that estimates the proximity between them in a sufficiently rich feature space. A straightforward kernel function reflecting the similarity between them is obtained by evaluating the kernel function between the estimated vectors

which is referred to as neighbor filtering (NF) kernel. Similarly, we can define mean filtering (MF) kernel as follows:

which computes the spatial similarity between neighboring samples, whereas the cluster similarity is computed in the mean map kernel.

Since Q is a valid kernel, the objective function of (8.22) is convex, which is the same as the objective function of (8.19) except for the definition of Q and p. Therefore, alternating direction method of multipliers (ADMM) [23] can be used to solve this problem. By introducing a new variable \( {\mathbf{u}} \in {\mathbb{R}}^{B} \), the objective function can be rewritten as

ADMM imposes the constraint \( {\mathbf{u}} = {\mathbf{a}} \) which can be defined as

where \( t \ge 0 \) and \( \mu > 0 \). The minimizing solution \( {\varvec{\upalpha}}^{(t + 1)} \) is simply determined as

where I is the identity matrix. The minimizing solution \( {\mathbf{u}}^{(t + 1)} \) is the soft threshold [24],

where \( {\text{soft(}} \cdot ,\tau ) \) denotes the component-wise application of the soft-threshold function \( y \leftarrow {\text{sign}}(y)\hbox{max} \{ \left| y \right| - \tau ,0\} \).

The optimization algorithm for KSRC is summarized in Algorithm 8.3.

8.4 A Case Study of Hyperspectral Image Sparse Representation Classification Based on Adaptive Spatial Context

8.4.1 Model and Algorithm

In model (8.5), pixels in a fixed window centered at the test pixel are selected to be simultaneously sparse represented. All pixels in the fixed window have the same correlation with the center pixel. However, this condition does not always hold, especially for pixels located on the edge which can be seen as class boundary. It is obvious that pixels on the same side of the edge will have stronger correlation. Since different pixels have different spatial context, the definition of local structure for the adaptive spatial context is essential to HSI classification.

In the field of image recovery, steering kernel (SK) [25] is a popular local method, which can effectively express the adaptive local structure. This method starts with making an initial estimate of the image gradients using a gradient estimator, and then uses the estimate to measure the dominant orientation of the local gradients in the image [26]. The obtained orientation information is then used to adaptively “steer” the local kernel, resulting in elongated, elliptical contours spread along the directions of the local edge structure.

Taking into consideration that HSI generally contains hundreds of sub-images, a high-dimensional steering kernel (HDSK) [27] is defined where the gradient estimator contains every sub-image’s gradients. The gradients in vertical and horizontal directions are written as follows:

where \( {\mathbf{x}}_{i + 1}^{v} \) and \( {\mathbf{x}}_{i + 1}^{h} \) represent the neighboring pixels of \( {\mathbf{x}}_{i} \) in vertical and horizontal directions. HDSK for pixel \( {\mathbf{x}}_{i} \) is defined as

where \( {\mathbf{e}}_{i} \) and \( {\mathbf{e}}_{j} \) represent the coordinates of pixel \( {\mathbf{x}}_{i} \) and pixel \( {\mathbf{x}}_{j} \), respectively, h is the smoothing parameter used for controlling the supporting range of the steering kernel, and \( {\mathbf{C}}_{i} \) is the symmetric gradient covariance in vertical and horizontal directions in a \( M \times M \) window centered at \( {\mathbf{x}}_{i} \). A naïve estimate of this covariance matrix can be obtained by \( {\mathbf{C}}_{i} = {\mathbf{J}}_{i}^{T} {\mathbf{J}}_{i} \), where

Here, \( {\mathbf{x}}_{1} , \cdots ,{\mathbf{x}}_{M \times M} \) are the \( M \times M \) neighboring pixels in the local window centered at \( {\mathbf{x}}_{i} \). The resulting \( w_{ij} \) can be explained as the correlation between pixels \( {\mathbf{x}}_{i} \) and \( {\mathbf{x}}_{j} \). Since a large weight in steering kernel mean two pixels have strong correlation, HDSK could be an effective way to represent the local structure. For example, Fig. 8.1 shows the 10-th band image in the University of Pavia HSI and the calculated HDSKs for different pixels. It can be observed that when pixels are in a homogeneous region, the shape of HDSK is cycles without any directional preference. When the pixels are in the intersection or the boundary of different classes, the shape of HDSKs is oval and exhibits clear directional preference. The direction of the long axis of the oval indicates that similar pixels may appear in this direction.

Once having determined the local structure of a test pixel \( x_{i} \) using (8.20), we select P pixels whose weights are larger than the others. These pixels can be stacked as \( {\mathbf{X}}^{P} = [{\mathbf{x}}_{i1} \, {\mathbf{x}}_{i2} \ldots {\mathbf{x}}_{iP} ] \in {\mathbb{R}}^{B \times P} \), and \( {\mathbf{w}}^{P} = [w_{1} \, w_{2} \ldots w_{P} ]^{T} \) is the corresponding weight vector. It is believed that these selected P pixels have more compact inner patterns than those in a fixed window do. The adaptive spatial contextual information is introduced by the following problem:

Once the coefficient matrix \( {\mathbf{S}}^{P} \) is obtained, a new classifier is designed based on the HDSK. As the weights in the HDSK reflect the influence of neighboring pixels on the test pixel, the original decision rule (8.6) is replaced by

The joint sparse HSI classification method based on adaptive spatial context is named adaptive spatial context SOMP (ASC-SOMP), of which the general flow is summarized in Algorithm 8.4.

8.4.2 Experimental Results and Discussion

This section uses two real hyperspectral datasets to verify the effectiveness of ASC-SOMP algorithm. For each image, the pixel-wise SVM, SVM with composite kernel (SVM-CK) [19], OMP [14], SOMP [14] are compared with ASC-SOMP both visually and quantitatively. We select Gaussian radial basis function (RBF) for the pixel-wise SVM and SVM-CK methods, since RBF has proved its capability handling complex nonlinear class distributions. The parameters in SVM-based methods are obtained by fivefold cross-validation. For methods involved with composite kernels, the spatial kernels were built by using the mean and standard deviation of the neighboring pixels in a window per spectral channel. Each value of the results is obtained after performing ten Monte Carlo runs.

The training and test samples are randomly selected from the available ground truth map. The classification accuracy is evaluated by the overall accuracy (OA) which is defined as the ratio of the number of accurately classified samples to the number of test samples, the coefficient of agreement \( (\kappa ) \) which is the ratio of the amount of corrected agreement to the amount of expected agreement, and the average accuracy (AA). To be specific, OA is calculated by

where N is the total number of samples, and \( {\mathbf{E}}_{ij} \) represents the number of samples in class i which are miss-classified to class j.

AA is calculated by

The \( \kappa \) statistic is calculated by weighting the measured accuracies. This metric incorporates the diagonal and off-diagonal entries of the confusion matrix and is given by

8.4.2.1 Hyperspectral Dataset of AVIRIS Indian Pines

The Indian Pines image contains 145 × 145 pixels and 200 spectral reflectance bands, among which 24 water absorption bands have been removed. The ground truth contains 16 land cover classes and a total of 10366 labeled pixels. We randomly choose 10% of labeled samples for training, and use the rest 90% for testing. The false color image and ground truth are shown in Fig. 8.2a, b.

The parameters for ASC-SOMP algorithm are set to \( P = 120 \), \( K_{0} = 25 \), \( h = 25 \), and \( M = 21 \). The window size of SOMP algorithm is empirically set to \( 9 \times 9 \). The classification results, in terms of overall accuracy (OA), average accuracy (AA), \( \kappa \) statistic, and class individual accuracies, are shown in Table 8.1. The final maps are illustrated in Fig. 8.2c–g. It can be observed that ASC-SOMP algorithm achieves the highest OA of 96.79%, which is 1.5% higher than the second-highest OA. Classification results using different percentages of labeled samples for training are shown in Fig. 8.3. In this figure and the following, error bars indicate the standard deviation by random sampling. From Fig. 8.3, both numerical and statistical differences can be observed.

Next, we demonstrate the impact of the number of selected neighboring pixels P upon the performance of ASC-SOMP algorithm. We use 10% of data in each class as training samples. The number of selected pixels P ranges from \( P = 80 \) to \( P = 140 \), and the sparsity level \( K_{0} \) ranges from \( K_{0} = 5 \) to \( K_{0} = 45 \). The plots of overall accuracy evaluated on the entire test set are shown in Fig. 8.4. When \( K_{0} \ge 25 \) and \( P \ge 110 \), a relatively high classification accuracy can be achieved. Compared with SOMP algorithm, ASC-SOMP leads to the same optimal \( K_{0} \) value, but the optimal P value is significantly larger. As pixels are selected according to their spatial correlation to the center pixel, it is reasonable to select more pixels that can be sparsely represented simultaneously.

To investigate the effect of the introduced adaptive spatial context, we compare ASC-SOMP with traditional joint sparsity method in detail. It is obvious that SOMP is not able to identify any samples belonging to oats class. This observation is because oat pixels cover a very narrow region of size \( 10 \times 2 \) located in the middle-left of the image. In SOMP, the optimal \( 9 \times 9 \) local window centered at each oat pixel is dominated by pixels belonging to the other two adjacent classes. In contrast, ASC-SOMP achieves a 22.22% classification accuracy for oat class. By introducing adaptive spatial context, pixels distributed along the direction of the narrow region are selected as they have large correlation with the test pixel. On the other hand, pixels belonging to the other two classes whose weights are small have less impact upon our decision rule. Thus, better results can be obtained. However, the classification accuracy for oat class is still very low, because the total number of oat class is much less than the selected pixels to be sparsely represented simultaneously, and most of the selected pixels do not belong to oat class oat.

Taking into consideration that the effect of adaptive spatial context is clearer in the class boundary, more attention should be paid on the edge. We amplify the region of SOMP result and the region of ASC-SOMP result to verify the effect of adaptive spatial context. Figure 8.5 shows that our classification result has less wrong-classified pixels in the class boundary, demonstrating the advantages of the adaptive spatial context.

8.4.2.2 Hyperspectral Dataset of ROSIS Pavia University

The second hyperspectral data set was collected by the ROSIS optical sensor over the urban area of the Pavia University, Italy. The image size in pixels is \( 610 \times 340 \), with a very high spatial resolution of 1.3 m per pixel. The number of data channels in the acquired image is 103 (with the spectral range from 0.43 to 0.86 \( \mu \text{m} \)). Nine classes of interest were considered, including tree, asphalt, bitumen, gravel, metal sheet, shadow, bricks, meadow, and soil. Figure 8.6a, b shows the three-band false color image and the ground truth map, respectively. We randomly sampled 60 pixels for each class as the training samples and use the remainder as test samples. The optimal parameter settings for the ASC-SOMP method are \( P = 100 \) and \( K_{0} = 5 \). In SOMP, the window size was set to \( 9 \times 9 \), and the sparsity level was set to \( K_{0} = 15 \). We set \( h = 25 \) and \( M = 21 \) as in the previous set of experiments. The final classification maps are illustrated in Fig. 8.6c–g. The classification results, in term of overall accuracy (OA), average accuracy (AA), k statistic, and class individual accuracies, are provided in Table 8.2. The ASC-SOMP method outperforms other methods except for SVM-CK. SVM-CK achieves the best results since it is a spectral–spatial nonlinear kernel method. Figure 8.7 illustrates the classification accuracies by using different number of training samples. This result justifies the robustness of ASC-SOMP method. Figure 8.8 shows the performance in terms of overall accuracy with different numbers of selected pixels P at sparsity level \( K_{0} = 5 \) and \( K_{0} = 10 \), respectively. The number of selected pixels P ranges from 50 to 110. Figure 8.8 also shows that the overall accuracy improves as P value increases. This conclusion isconsistent with the conclusion drawn on the dataset of AVIRIS Indian Pines.

8.4.2.3 Discussion

The ASC-SOMP method and the nonlocal-weighted version of SOMP (NLW-JSRC) [28] both were developed for improving the original SOMP method. The weights for the neighboring pixels are calculated in both methods. We compared our method with NLW-JSRC. All experiments were performed using the same experimental setup as in the work of NLW-JSRC, where 9% of the labeled data are randomly sampled as the training samples, and the remainder of the data are used as test samples. Tables 8.3 and 8.4 present the comparisons of results by both methods. We can observe that the ASC-SOMP method outperforms the NLW-JSRC method, indicating that the steering kernel can better describe the spatial context than the nonlocal weights can.

h and M are two important parameters that control the supporting range of the steering kernel and determine the contributions of the selected pixels to the classification of test pixel. We further evaluate the classification accuracy on the two images for different h and M values. We use the same training samples as in previous experiments. h ranges from 1 to 45, and the window size M ranges from \( 13 \times 13 \) to \( 29 \times 29 \). Figure 8.9a indicates that the classification accuracy is relatively high when h is between 10 and 35. If h is too small, the variance of the weights is large, resulting in the outcome that a few pixels with large weights dominate the classification decision. If h is too large, on the other hand, the gap between different pixels’ weights is not clear enough, as the adaptive spatial context information is not used as much as possible. We can also observe from Fig. 8.9b that the classification accuracy is robust to the window size M as long as there are enough pixels to be selected.

8.5 Conclusions

Sparsity-based methods play an important role in HSI classification. Taking into consideration that the spectrum of a pixel lies in the low-dimensional subspace spanned by the training samples of the same class, sparse representation classification (SRC) is widely employed in HSI classification. Many advanced SRC models are presented to improve the classification accuracy, based on the structural sparsity priors, spectral–spatial information, kernel tricks, etc. This chapter reviews the structural sparsity priors and explains how the spectral–spatial information of HSI is incorporated into the SRC method. More specifically, a case study of HSI sparse representation classification based on adaptive spatial context is presented in detail. Experimental results demonstrate that, by combining SRC and adaptive spectral–spatial information, the performances of SRC can be significantly improved. Future work can be directed toward tensor sparse representation which can take full advantage of the high-order correlation in HSI and can preserve the spectral–spatial structure of HSI.

References

Donoho DL (2006) Compressed sensing. IEEE Trans Inf Theory 52(4):1289–1306

Baraniuk RG (2007) Compressive sensing. IEEE Signal Process Mag 24(4):118–121

Candès EJ, Romberg J, Tao T (2006) Robust uncertainty principles, Exact signal reconstruction from highly incomplete frequency information. IEEE Trans Inf Theory 52(2):489–509

Sun L, Zebin W, L Jianjun, X Liang, Wei Z (2015) Supervised spectral-spatial hyperspectral image classification with weighted markov random fields. IEEE Trans Geosci Remote Sens 53(3):1490–1503

Li J, Bioucas-Dias JM, Plaza A (2010) Semisupervised hyperspectral image segmentation using multinomial logistic regression with active learning. IEEE Trans Geosci Remote Sens 48(11):4085–4098

Melgani F, Bruzzone L (2004) Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans Geosci Remote Sens 42(8):1778–1790

Pan B, Shi Z, Xu X (2018) MugNet, deep learning for hyperspectral image classification using limited samples. ISPRS J Photogramm Remote Sens 145:108–119

Ma L, Crawford MM, Tian J (2010) Local manifold learning-based k-nearest-neighbor for hyperspectral image classification. IEEE Trans Geosci Remote Sens 48(11):4099–4109

Aharon M, Elad M, Bruckstein A (2006) K-SVD, an algorithm for designing overcomplete dictionaries for sparse representation. IEEE Trans Signal Process 54(11):4311

Wright J, Yang AY, Ganesh A et al (2009) Robust face recognition via sparse representation. IEEE Trans Pattern Anal Mach Intell 31(2):210–227

Wagner A, Wright J, Ganesh A et al (2012) Toward a practical face recognition system, robust alignment and illumination by sparse representation. IEEE Trans Pattern Anal Mach Intell 34(2):372–386

Gemmeke JF, Virtanen T, Hurmalainen A (2011) Exemplar-based sparse representations for noise robust automatic speech recognition. IEEE Trans Audio Speech Lang Process 19(7):2067–2080

Yang J, Wright J, Huang TS et al (2010) Image super-resolution via sparse representation. IEEE Trans Image Process 19(11):2861–2873

Chen Y, Nasrabadi NM, Tran TD (2011) Hyperspectral image classification using dictionary-based sparse representation. IEEE Trans Geosci Remote Sens 49(10):3973–3985

Sun X, Qu Q, Nasrabadi NM et al (2014) Structured priors for sparse-representation-based hyperspectral image classification. IEEE Geosci Remote Sens Lett 11(7):1235–1239

Tu B, Zhang X, Kang X et al (2018) Hyperspectral image classification via fusing correlation coefficient and joint sparse representation. IEEE Geosci Remote Sens Lett 15(3):340–344

Liu J, Wu Z, Xiao Z, Yang J (2017) Hyperspectral image classification via kernel fully constrained least squares. In: 2017 IEEE international geoscience and remote sensing symposium, Fort Worth, 23–28 July 2017, pp 2219–2222

Aizerman A, Braverman E, Rozoner L (1964) Theoretical foundations of the potential function method in pattern recognition learning. Autom Remote Control 25:821–837

Camps-Valls G, Gomez-Chova L, Muñoz-Mari’ J, Vila-Francés J, Calpe-Maravilla J (2006) Composite kernels for hyperspectral image classification. IEEE Geosci Remote Sens Lett 3(1):93–97

Liu J, Wu Z, Wei Z et al (2013) Spatial-spectral kernel sparse representation for hyperspectral image classification. IEEE J Sel Top Appl Earth Obs Remote Sens 6(6):2462–2471

Tuia D, Camps-Valls G (2001) Urban image classification with semisupervised multiscale cluster kernels. IEEE J Sel Top Appl Earth Obs Remote Sens 4(1):65–74

Gomez-Chova L, Camps-Valls G, Bruzzone L, Calpe-Maravilla J (2010) Mean map kernel methods for semisupervised cloud classification. IEEE Trans Geosci Remote Sens 48(1):207–220

Bioucas-Dias J, Figueiredo M (2010) Alternating direction algorithms for constrained sparse regression, Application to hyperspectral unmixing. In: Proceedings of WHISPERS, Reykjavik, Iceland, June 2010, pp 1–4. IEEE

Combettes P et al (2006) Signal recovery by proximal forward-backward splitting. Multiscale Model Simul 4(4):1168–1200

Takeda H, Farsiu S, Milanfar P (2007) Kernel regression for image processing and reconstruction. IEEE Trans Image Process 16(2):349–366

Feng X, Milanfar P (2002) Multiscale principal components analysis for image local orientation estimation. In: 36th Asilomar conference signals, systems and computers, Pacific Grove, CA

Xu Y, Wu Z, Wei ZH (2014) Joint sparse hyperspectral image classification based on adaptive spatial context. J Appl Remote Sens 8(1):083552

Zhang H et al (2013) A nonlocal weighted joint sparse representation classification method for hyperspectral imagery. IEEE J Sel Top Appl Earth Obs Remote Sens 7(6):2056–2065

Takeda H, Farsiu S, Milanfar P (2007) Kernel regression for image processing and reconstruction. IEEE Trans Image Process 16:349–366

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 Springer Nature Switzerland AG

About this chapter

Cite this chapter

Wu, Z., Xu, Y., Liu, J. (2020). Sparsity-Based Methods for Classification. In: Prasad, S., Chanussot, J. (eds) Hyperspectral Image Analysis. Advances in Computer Vision and Pattern Recognition. Springer, Cham. https://doi.org/10.1007/978-3-030-38617-7_8

Download citation

DOI: https://doi.org/10.1007/978-3-030-38617-7_8

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-38616-0

Online ISBN: 978-3-030-38617-7

eBook Packages: Computer ScienceComputer Science (R0)