Abstract

We investigate and computationally solve a shape optimization problem constrained by a variational inequality of the first kind, a so-called obstacle-type problem, with a gradient descent and a BFGS algorithm in the space of smooth shapes. In order to circumvent the numerical problems related to the non-linearity of the shape derivative, we consider a regularization strategy leading to novel possibilities to numerically exploit structures, as well as possible treatment of the regularized variational inequality constrained shape optimization in the context of optimization on infinite dimensional Riemannian manifolds.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Shape optimization is a classical topic in mathematics which is of high importance in a wide range of applications, e.g., acoustics [23], aerodynamics [19] and electrostatics [6]. Qualitative properties of optimal shapes such as minimum surfaces are investigated in classical shape optimization. In select cases, an analytical solution can be derived. In contrast, modern and application-oriented questions in shape optimization are concerned with specific calculations of shapes which are optimal with respect to a process which is mostly described by partial differential equations (PDE) or variational inequalities (VI). Consequently, the area of shape optimization builds a bridge between pure and applied mathematics. Recently, shape optimization gained new interest due to novel developments such as the usage of volumetric/weak formulations of shape derivatives. This paper, which focuses on VI constrained shape optimization problems, is based on recent results in the field of PDE constrained shape optimization and carries the achieved methodology over to shape optimization problems with constraints in the form of VIs. Thus, this paper can be seen as an extension of the Riemannian shape optimization framework for PDEs formulated in [24] to VI. Note that VI constrained shape optimization problems are very challenging because of the two main reasons: One needs to operate in inherently non-linear, non-convex and infinite-dimensional shape spaces and—in contrast to PDEs—one cannot expect the existence of shape derivatives for an arbitrary shape functional depending on solutions to variational inequalities.

So far, there are only very few approaches in the literature to the problem class of VI constrained shape optimization problems. In [12], shape optimization of 2D elasto-plastic bodies is studied, where the shape is simplified to a graph such that one dimension can be written as a function of the other. In [22, Chap. 4], shape derivatives of elliptic VI problems are presented in the form of solutions to again VIs. In [18], shape optimization for 2D graph-like domains are investigated. Also [14] presents existence results for shape optimization problems which can be reformulated as optimal control problems, whereas [4, 7] show existence of solutions in a more general set-up. In [18], level-set methods are proposed and applied to graph-like two-dimensional problems. Moreover, [8] presents a regularization approach to the computation of shape and topological derivatives in the context of elliptic VIs and, thus, circumventing the numerical problems in [22, Chap. 4]. However, all these mentioned problems have in common that one cannot expect for an arbitrary shape functional depending on solutions to VIs to obtain the shape derivative as a linear mapping (cf. [22, Example in Chap. 1]). E.g., in general, the shape derivative for the obstacle problems fails to be linear with respect to the normal component of the vector field defined on the boundary of the domain under consideration. In order to circumvent the numerical problems related to the non-linearity of the shape derivative (cf., e.g., [22, Chap. 4]) and in particular the non-existence of the shape derivative of a VI constrained shape optimization problem, [8] presents a regularization approach to the computation of shape and topological derivatives in the context of elliptic VIs. In this paper, we consider this regularization strategy, leading to novel possibilities to numerically exploit structures, as well as possible treatment of the regularized VI constrained shape optimization in the context of optimization on infinite dimensional manifolds.

This paper is structured as follows. In Sect. 2, we give a brief overview of the VI constrained shape optimization model class and regularization techniques on which we focus in this paper. Section 3 presents a way to solve the VI constrained shape model problem in the space of smooth shapes based on gradient representations via Steklov-Poincaré metrics. Finally, numerical results of the gradient descent and a Broyden-Fletcher-Goldfarb-Shanno (BFGS) algorithm are presented in Sect. 4.

2 VI Constrained Model Problem

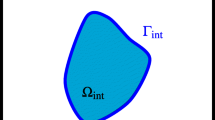

Let \(\varOmega \subset \mathbb {R}^2\) be a bounded domain equipped with a sufficiently smooth boundary \(\partial \varOmega \), which we will specify in more detail after stating the model problem. This domain is assumed to be partitioned in a subdomain \(\varOmega _\text {out}\subset \varOmega \) and an interior domain \(\varOmega _\text {int}\subset \varOmega \) with boundary \(\varGamma _\text {int}:=\partial \varOmega _\text {int}\) such that \(\varOmega _\text {out}\sqcup \varOmega _\text {int} \sqcup \varGamma _\text {int}=\varOmega \), where \(\sqcup \) denotes the disjoint union. We consider \(\varOmega \) depending on \(\mathrm {\Gamma _{int}}\), i.e., \(\varOmega =\varOmega (\mathrm {\Gamma _{int}})\). In the following, the boundary \(\varGamma _\text {int}\) of the interior domain is called the interface. In contrast to the outer boundary \(\partial \varOmega \), which is fixed, the inner boundary \(\mathrm {\Gamma _{int}}\) is variable. The interface is an element of an appropriate shape space. In this paper, we focus on the space of one-dimensional smooth shapes (cf. [16]) characterized by \(B_e:=B_e(S^1,\mathbb {R}^2):=\text {Emb}(S^1,\mathbb {R}^2) / \text {Diff}(S^1),\) i.e., the orbit space of \(\mathrm {Emb}(S^1,\mathbb {R}^2)\) under the action by composition from the right by the Lie group \(\mathrm {Diff}(S^1)\). Here, \(\mathrm {Emb}(S^1,{\mathbbm {R}}^2)\) denotes the set of all embeddings from the unit circle \(S^1\) into \({\mathbbm {R}}^2\), which contains all simple closed smooth curves in \({\mathbbm {R}}^2\). Note that we can think of smooth shapes as the images of simple closed smooth curves in the plane of the unit circle because the boundary of a shape already characterizes the shape. The set \(\mathrm {Diff}(S^1)\) is the set of all diffeomorphisms from \(S^1\) into itself, which characterize all smooth reparametrizations. These equivalence classes are considered because we are only interested in the shape itself and images are not changed by reparametrizations. More precisely, shapes that have been translated represent the same shape. In contrast, shapes with different scaling are not equivalent in this shape space. In [13], it is proven that the shape space \(B_e(S^1,\mathbb {R}^2)\) is a smooth manifold. For the sake of completeness it should be mentioned that the shape space \(B_e(S^1,\mathbb {R}^2)\) together with appropriate inner products is even a Riemannian manifold. In [17], a survey of various suitable inner products is given. In the following, we assume \(\mathrm {\Gamma _{int}}\in B_e\).

Let \(\nu >0\) be an arbitrary constant, \(\bar{y}\in L^2(\varOmega )\) and y solving the VI formulated in (3). For the objective function

we consider the following VI constrained shape optimization problem:

with y solving the following obstacle type variational inequality:

where \(f\in L^2(\varOmega )\) dependents on the shape,  denotes the duality pairing and \(a(\cdot ,\cdot )\) is a general bilinearform \( a:H_0^1(\varOmega )\times H_0^1(\varOmega ) \rightarrow \mathbb {R} ,\, (y, v) \mapsto \sum _{ij} a_{ij}y_{x_i}v_{x_j} + b yv \) defined by coefficient functions \(a_{ij}, b\in L^\infty (\varOmega )\), \(b\ge 0\).

denotes the duality pairing and \(a(\cdot ,\cdot )\) is a general bilinearform \( a:H_0^1(\varOmega )\times H_0^1(\varOmega ) \rightarrow \mathbb {R} ,\, (y, v) \mapsto \sum _{ij} a_{ij}y_{x_i}v_{x_j} + b yv \) defined by coefficient functions \(a_{ij}, b\in L^\infty (\varOmega )\), \(b\ge 0\).

With the tracking-type objective \(\mathcal {J}\) the model is fitted to data measurements \(\bar{y}\in L^2(\varOmega )\). The second term \(\mathcal {J}_\text {reg}\) in the objective function J is a perimeter regularization, which is frequently used to overcome ill-posedness of shape optimization problems. In (3), \(\psi \) denotes an obstacle which needs to be an element of \(L^1_{\text {loc}}(\varOmega )\) such that the set of admissible functions K is non-empty (cf. [22]). Then smoothness of the boundary \(\partial \varOmega \), where \(C^{1,1}\) regularity or polyhedricity is sufficient, and \(\psi \in H^2(\varOmega )\) ensure that the solution to (3) satisfies \(y\in H_0^1(\varOmega )\), see, e.g., [9, Remark 2.3]. Further, (3) can be equivalently expressed as a PDE with complementary constraints (cf. [11]):

The existence of solutions of any shape optimization problem is a non-trivial question. Shape optimization problems constrained by VIs are especially challenging because, in general, the shape derivative of VI constrained shape optimization problems is not linear (cf. [8, 22]). This potential non-linearity of the shape derivative complicates its use in algorithms. In order to circumvent the problems related to the non-linearity, we consider a regularized version of (2) constrained by (4)–(5). For convenience, we focus on a special bilinearform: We assume the bilinearform \(a(\cdot ,\cdot )\) of the state equation to correspond to the Laplacian \(-\varDelta \). In this setting, a regularized version is given by:

with \(\lambda _{\gamma ,c} = \text {max}_{\gamma }(0,\overline{\lambda }+c(y_{\gamma ,c}-\psi ))\), where \(\gamma , c>0\), \(0\le \overline{\lambda }\in L^2(\varOmega )\) fixed and

being a smoothed max-function. The convergence \(y_{\gamma ,c} \rightarrow y\) in \(H^1_0(\varOmega )\) of the regularized solution \(y_{\gamma ,c}\) to the unregularized solution y of (4) is guaranteed by a result in [15, Proposition 1]. Furthermore, the smoothness of the regularized PDE (7) guarantees the existence of adjoints, which in turn gives possibility to characterize a corresponding shape derivative of (6). In [8], it is mentioned that for a large parameter c the associated solution of the regularized state equation (7)–(8) using the unsmoothed \(\text {max}\)-function is an excellent approximation of the solution to the unregularized VI. Moreover, it is shown in [8] that the shape derivative for the regularized problem converges to the solution of a linear problem which depends linearly on a perturbation vector field. Numerical tests in [8] show the efficiency of the approach to introduce a regularization of the VI, which allows to apply the usual theory for obtaining shape derivatives. We refer to [15] for the shape derivative of (6)–(8) and the adjoint equation to (6)–(8), as well as the limiting objects and equations for \(\gamma , c \rightarrow \infty \). However, we want to point out that a proof of convergence of the optimal shapes generated by the steepest descent or BFGS method using the regularization parameters \(\gamma , c >0\) for \(\gamma , c \rightarrow \infty \) is yet to be done.

3 Algorithmic Details

This section presents a way to solve (6)–(8) computationally in the Riemannian manifold of smooth shapes. If we want to optimize on a Riemannian shape manifold, we have to find a representation of the shape derivative with respect to the Riemannian metric under consideration, called the Riemannian shape gradient, which is required to formulate optimization methods on a shape manifold. In [21], the authors present a metric based on the Steklov-Poincaré operator, which allows for the computation of the Riemannian shape gradient as a representative of the shape derivative in volume form. Besides saving analytical effort during the calculation process of the shape derivative, this technique is computationally more efficient than using an approach which needs the surface shape derivative form. For example, the volume form allows us to optimize directly over the hold-all domain \(\varOmega \) containing one or more elements \(\mathrm {\Gamma _{int}}\in B_e\), whereas the surface formulation would give us descent directions (in normal directions) for the boundary \(\varGamma _i\) only, which would not help us to move mesh elements around the shape. Additionally, when we are working with a surface shape derivative, we need to solve another PDE in order to get a mesh deformation in the hold-all domain \(\varOmega \) as outlined for example in [24].

We denote the shape derivative of J in direction of a vector field U which can be given in volume or surface form by \(DJ(\cdot )[U]\). In order to distinguish between surface and volume formulation, we use the notation \(DJ^{\text {surf}}(\cdot )[U]\), \(DJ^{\text {vol}}(\cdot )[U]\). Following the ideas presented in [21], we choose the Steklov-Poincaré metric

where \(S^{pr}:H^{-1/2}(\mathrm {\Gamma _{int}}) \rightarrow H^{1/2}(\mathrm {\Gamma _{int}}),\ \alpha \mapsto (\gamma _0 V)^T n\) denotes the projected Poincaré-Steklov operator with \(\text {tr}:H^1_0(\varOmega ,\mathbb {R}^2) \rightarrow H^{1/2}(\mathrm {\Gamma _{int}},\mathbb {R}^2)\) denoting the trace operator on Sobolev spaces for vector-valued functions and \(V\in H^1_0(X,\mathbb {R}^2)\) solving the Neumann problem

where \(a^{\text {deform}}:H_0^1(\varOmega ,{\mathbbm {R}}^2) \times H_0^1(\varOmega ,{\mathbbm {R}}^2) \rightarrow {\mathbbm {R}}\) is a symmetric and coercive bilinear form. If \(r\in L^1(\mathrm {\Gamma _{int}})\) denotes the \(L^2\)-shape gradient given by the surface formulation of the shape derivative  with n denoting the normal vector field and \(y_{\gamma ,c}\) denoting the solution of the regularized state equation (7)–(8), then a representation \(h\in T_{\mathrm {\Gamma _{int}}} B_e\cong \mathcal {C}^\infty (\mathrm {\Gamma _{int}})\) of the shape gradient in terms of \(G^S\) is determined by \(G^S(\phi ,h)=\left( r,\phi \right) _{L^2(\mathrm {\Gamma _{int}})} \, \forall \phi \in \mathcal {C}^\infty (\mathrm {\Gamma _{int}}).\) From this we get that the mesh deformation vector \(V\in H_0^1(\varOmega ,{\mathbbm {R}}^2)\) can be viewed as an extension of a Riemannian shape gradient to the hold-all domain \(\varOmega \) because of the identities

with n denoting the normal vector field and \(y_{\gamma ,c}\) denoting the solution of the regularized state equation (7)–(8), then a representation \(h\in T_{\mathrm {\Gamma _{int}}} B_e\cong \mathcal {C}^\infty (\mathrm {\Gamma _{int}})\) of the shape gradient in terms of \(G^S\) is determined by \(G^S(\phi ,h)=\left( r,\phi \right) _{L^2(\mathrm {\Gamma _{int}})} \, \forall \phi \in \mathcal {C}^\infty (\mathrm {\Gamma _{int}}).\) From this we get that the mesh deformation vector \(V\in H_0^1(\varOmega ,{\mathbbm {R}}^2)\) can be viewed as an extension of a Riemannian shape gradient to the hold-all domain \(\varOmega \) because of the identities

where \(v=(\text {tr}(V))^Tn,u=(\text {tr}(U))^Tn\in T_{\mathrm {\Gamma _{int}}}B_e\) with \(T_{\mathrm {\Gamma _{int}}} B_e\cong \mathcal {C}^\infty (\mathrm {\Gamma _{int}})\).

One option to choose the operator \(a^{\text {deform}}(\cdot , \cdot )\) is the bilinear form associated with the linear elasticity problem, i.e., \(a^{\text {elas}}(V, U):=\int _\varOmega (\lambda \text {tr} ( \epsilon (V) )\text {id} + 2 \mu \epsilon (V)) : \epsilon (U)\, dx,\) where \(\epsilon (U):= \frac{1}{2} \, (\nabla U + \nabla U^T)\), A : B denotes the Frobenius inner product for two matrices A, B and \(\lambda ,\mu \) denote the so-called Lamé parameters. To summarize, we need to solve the following deformation equation: find \(V \in H_0^1(\varOmega ,{\mathbbm {R}}^2)\) s.t.

In this equation, we need the solution \(y_{\gamma ,c}\) of the regularized state equation (7)–(8), and the solution \(p_{\gamma ,c}\) of the corresponding adjoint equation (cf. [15, Chapter 3]) in order to construct \(DJ(y_{\gamma ,c},\varOmega )[\cdot ]\). An alternative strategy to the regularization outlined is the linearized modified primal-dual active set (lmPDAS) algorithm formulated in [5, Algorithm 2]. The lmPDAS algorithm is based on the primal-dual active set (PDAS) algorithm given in [10] and on a linearization technique inspired by the concept of internal numerical differentiation [3].

The Riemannian shape gradient is required to formulate optimization methods in the shape space \(B_e\). In the setting of constrained shape optimization problems, a Lagrange-Newton method is obtained by applying a Newton method to find stationary points of the Lagrangian of the optimization problem. In contrast to this method, which requires the Hessian in each iteration, quasi-Newton methods only need an approximation of the Hessian. Such an approximation is realized, e.g., by a limited memory Broyden-Fletcher-Goldfarb-Shanno (BFGS) update. In the Steklov-Poincaré setting, such an update can be computed with the representation of the shape gradient with respect to \(G^S\) and a suitable vector transport (cf. [21]). The limited memory BFGS method (l-BFGS) for iteration j is summarized in Algorithm 1, where \(l \in \{2,3, \dots \}\) is the memory-length, \(V_i \in H^1_0(\varOmega , \mathbb {R}^2)\) are the volume representations of the gradients in \(T_{\mathrm {\Gamma _{int}}_i}B_e\) as by (12), \(S_i\in H^1_0(\varOmega )\) are the BFGS deformations generated in iteration i, \(\mathcal {T}_{S_{j-1}}\) denotes the vector transport associated to the update \(\varOmega _j = \text {exp}_{\varOmega _{j-1}}(\text {tr}(S_{j-1})^T n)\) and \(Y_i := V_{i+1} - \mathcal {T}_{S_i}V_{i} \in H^1_0(\varOmega , \mathbb {R}^2)\).

Remark 1

In general, we need the concept of the exponential map and vector transports in order to formulate optimization methods on a shape manifold. The calculations of optimization methods have to be performed in tangent spaces because manifolds are not necessarily linear spaces. This means points from a tangent space have to be mapped to the manifold in order to get a new shape-iterate, which can be realized with the help of the exponential map as used in Algorithm 1. However, the computation of the exponential map is prohibitively expensive in the most applications because a calculus of variations problem must be solved or the Christoffel symbols need be known. It is much easier and much faster to use a first-order approximation of the exponential map. In [1], it is shown that a so-called retraction is such a first-order approximation and sufficient in most applications. We refer to [20], where a suitable retraction and vector transport on \(B_e\) are given.

4 Numerical Results

We focus on a numerical experiment which is selected in order to demonstrate challenges arising for VI constrained shape optimization problems. To be more precise, we move and magnify a circle in the domain \(\varOmega =(0,1)^2\).

The right-hand side of (7), \(f \in L^2(\varOmega )\), is chosen as a shape dependent piecewise constant function \(f(x)=100\) for \(x\in \varOmega _{\text {int}}\) and \(f(x)=-10\) for \(x\in \varOmega {\setminus }\bar{\varOmega }_{\text {int}} \). Further, the perimeter regularization in Eq. (1) is weighted by \(\nu =0.00001\). The constants \(\gamma , c > 0\) in the regularized state equation are set to \(\gamma = 100, c=25\). The obstacle is given by

For our numerical test, we build artificial data \(\bar{y}\) by solving the state equation without obstacle for the setting that \(\varGamma _\text {int}:=\{(x,y)\in (0,1)^2:(x-0.6)^2+(y-0.5)^2 = 0.2^2 \}\). Then, we add noise to the measurements \(\bar{y}\), which is i.i.d. with \(\mathcal {N}(0.0,\, 0.5)\) for each mesh node. The Lamé parameter are chosen by \(\lambda =0\) and \(\mu \) as the solution of the following Laplace equation:

In order to solve our model problem formulated in Sect. 2, we focus on the strategy described in Sect. 3. This means after solving the state and adjoint equation, we compute a mesh deformation vector field by solving the deformation equation. The regularized state and adjoint equations are solved using the following discretizition. We use a Finite Element Method (FEM) with continuous Galerkin ansatz functions of first order and perform computations on unstructured meshes with up to approx. 2 300 vertices and 4 300 cells. All linear systems are solved with the preconditioned conjugate gradient solver of the software PETSc, which is used as a backend to the open source Finite Element Software FEniCS, see [2].

First, we focus on a steepest descent strategy. This means we add the mesh deformation vector field, which we get by solving the deformation equation, to all nodes in the finite element mesh. We implemented also a full BFGS strategy as described in Algorithm 1. Figures 1 and 2 present the results of the gradient and the BFGS method. The left pictures show the domain \(\varOmega \) together with the initial shape (small circle), the expected shape (dashed circle) and the shape iterates. One can see that the expected shape is only achieved with the BFGS method an not with the gradient method. This is due to some loss of shape information in \((0.75,1)\times (0,1)\). This could be explained by the structure of the limiting object \(p\in H^1_0(\varOmega )\) of the adjoints to the regularized problem, which is given by

where \(A:= \{x\in \varOmega : y(x) \ge \psi (x)\}\) denotes the active set of the variational inequality (4) (cf. [15, Theorem 1]). Due to the low obstacle \(\psi \) in \((0.75,1) \times (0,1)\), see (13), and the target \(\bar{y}\) being above \(\psi \) in \((0.75,1) \times (0,1)\), we have \((0.75,1) \times (0,1) \subset A\). Hence we have \(p_{\vert (0.75,1) \times (0,1)} = 0\) for the sensitivities, leaving no information in this area, resulting in shape derivatives which are 0 for directions \(V \in H^1_0(\varOmega , \mathbb {R}^2)\) with \(\text {supp}(V) \subset (0.75,1) \times (0,1)\). In this sense, only target shapes \(\varOmega \) that correspond to solutions \(\bar{y} \in H^1_0(\varOmega )\) which are below \(0.25 = \psi _{\vert (0.75,1) \times (0,1)}\) can be reached. This creates “blind areas” in the space of shapes \(B_e\) for the shapes not fulfilling this correspondence, which, due to the Laplace equation regarded, is mostly the case for shapes that partly lie in \((0.75,1) \times (0,1)\). The BFGS method only manages to reach the globally optimal shape since it generates a large step while the current shape iterate is still outside the blind area \((0.75,1) \times (0,1)\subset A\).

The right pictures of Figs. 1 and 2 show the decrease of the objective function and the mesh distance. In both methods, the shape distance between two shapes \(\varGamma _{\text {int}}^1,\varGamma _{\text {int}}^2\) is approximated by the integral \(\int _{x\in \varGamma _{\text {int}}^1} \max _{y\in \varGamma _{\text {int}}^2}\Vert x-y\Vert _2\, dx\), where \(\Vert \cdot \Vert _2\) denotes the euclidean norm. One sees that the BFGS method is superior to the gradient method: 5 iterations (BFGS) vs. 12 iterations (gradient method).

References

Absil, P.A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2008)

Alnæs, M.S., et al.: The FEniCS project version 1.5. Arch. Numer. Softw. 3(100), 9–23 (2015)

Bock, H.: Numerical treatment of inverse problems in chemical reaction kinetics. In: Ebert, K., Deuflhard, P., Jäger, W. (eds.) Modelling of Chemical Reaction Systems. Springer Series Chemical Physics, vol. 18, pp. 102–125. Springer, Heidelberg (1981). https://doi.org/10.1007/978-3-642-68220-9_8

Denkowski, Z., Migorski, S.: Optimal shape design for hemivariational inequalities. Universitatis Iagellonicae Acta Matematica 36, 81–88 (1998)

Führ, B., Schulz, V., Welker, K.: Shape optimization for interface identification with obstacle problems. Vietnam. J. Math. (2018). https://doi.org/10.1007/s10013-018-0312-0

Gangl, P., Laurain, A., Meftahi, H., Sturm, K.: Shape optimization of an electric motor subject to nonlinear magnetostatics. SIAM J. Sci. Comput. 37(6), B1002–B1025 (2015)

Gasiński, L.: Mapping method in optimal shape design problems governed by hemivariational inequalities. In: Cagnol, J., Polis, M., Zolésio, J.-P. (eds.) Shape Optimization and Optimal Design. Lecture Notes in Pure and Applied Mathematics, vol. 216, pp. 277–288. Marcel Dekker, New York (2001)

Hintermüller, M., Laurain, A.: Optimal shape design subject to elliptic variational inequalities. SIAM J. Control. Optim. 49(3), 1015–1047 (2011)

Ito, K., Kunisch, K.: Semi-smooth Newton methods for variational inequalities and their applications. M2AN Math. Model. Numer. Anal. 37, 41–62 (2003)

Ito, K., Kunisch, K.: Semi-smooth Newton methods for variational inequalities of the first kind. ESAIM Math. Model. Numer. Anal. 37(1), 41–62 (2003)

Kinderlehrer, D., Stampacchia, G.: An Introduction to Variational Inequalities and Their Applications, vol. 31. SIAM, Philadelphia (1980)

Kocvara, M., Outrata, J.: Shape optimization of elasto-plastic bodies governed by variational inequalities. In: Zolésio, J.-P. (ed.) Boundary Control and Variation. Lecture Notes in Pure and Applied Mathematics, vol. 163, pp. 261–271. Marcel Dekker, New York (1994)

Kriegl, A., Michor, P.: The Convient Setting of Global Analysis. Mathematical Surveys and Monographs, vol. 53. American Mathematical Society, Providence (1997)

Liu, W., Rubio, J.: Optimal shape design for systems governed by variational inequalities, Part 1: existence theory for the elliptic case. J. Optim. Theory Appl. 69(2), 351–371 (1991)

Luft, D., Schulz, V.H., Welker, K.: Efficient techniques for shape optimization with variational inequalities using adjoints. arXiv:1904.08650 (2019)

Michor, P., Mumford, D.: Riemannian geometries on spaces of plane curves. J. Eur. Math. Soc. 8, 1–48 (2006)

Michor, P., Mumford, D.: An overview of the Riemannian metrics on spaces of curves using the Hamiltonian approach. Appl. Comput. Harmon. Anal. 23, 74–113 (2007)

Myśliński, A.: Level set method for shape and topology optimization of contact problems. In: Korytowski, A., Malanowski, K., Mitkowski, W., Szymkat, M. (eds.) CSMO 2007. IAICT, vol. 312, pp. 397–410. Springer, Heidelberg (2009). https://doi.org/10.1007/978-3-642-04802-9_23

Schmidt, S., Ilic, C., Schulz, V., Gauger, N.: Three dimensional large scale aerodynamic shape optimization based on the shape calculus. AIAA J. 51(11), 2615–2627 (2013)

Schulz, V., Welker, K.: On optimization transfer operators in shape spaces. In: Schulz, V., Seck, D. (eds.) Shape Optimization. Homogenization and Optimal Control, pp. 259–275. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-90469-6_13

Schulz, V.H., Siebenborn, M., Welker, K.: Efficient PDE constrained shape optimization based on Steklov-Poincaré type metrics. SIAM J. Optim. 26(4), 2800–2819 (2016)

Sokolowski, J., Zolésio, J.-P.: An Introduction to Shape Optimization. Springer, Heidelberg (1992). https://doi.org/10.1007/978-3-642-58106-9

Udawalpola, R., Berggren, M.: Optimization of an acoustic horn with respect to efficiency and directivity. Int. J. Numer. Methods Eng. 73(11), 1571–1606 (2007)

Welker, K.: Optimization in the space of smooth shapes. In: Nielsen, F., Barbaresco, F. (eds.) GSI 2017. LNCS, vol. 10589, pp. 65–72. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-68445-1_8

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Luft, D., Welker, K. (2019). Computational Investigations of an Obstacle-Type Shape Optimization Problem in the Space of Smooth Shapes. In: Nielsen, F., Barbaresco, F. (eds) Geometric Science of Information. GSI 2019. Lecture Notes in Computer Science(), vol 11712. Springer, Cham. https://doi.org/10.1007/978-3-030-26980-7_60

Download citation

DOI: https://doi.org/10.1007/978-3-030-26980-7_60

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-26979-1

Online ISBN: 978-3-030-26980-7

eBook Packages: Computer ScienceComputer Science (R0)