Abstract

In this paper, we propose a reduction method for the multiobjective multiclass support vector machine (MMSVM), one of all-together method of the SVM. The method can maintain the discrimination ability, and reduce the computational complexity of the original MMSVM. First, we derive an approximate convex multiobjective optimization problem for the MMSVM by linearizing some constraints, and we secondly restrict the normal vectors of classifier candidates by using centroids obtained from the k-means clustering for each class dataset. The derived problem can be solved by the reference point method based on the centers of gravity of class datasets, in which the geometric margins between all pairs are exactly maximized. Some numerical experiments for benchmark problems show that the proposed method can reduce the computational complexity without decreasing its generalization ability widely.

Access provided by Autonomous University of Puebla. Download conference paper PDF

Similar content being viewed by others

Keywords

- Multiclass classification

- Support vector machine

- Multiobjective optimization

- Reference point method

- k-means clustering

1 Introduction

The binary support vector machine (SVM) [12] is one of popular machine learning methods, which finds a classifier with a high classification ability by maximizing the geometric margin between data and a separating hyperplane. In addition, various extended methods of the binary SVM have been investigated for multiclass classification. In this paper, we focus on all-together (AT) method among the extended ones, especially, the multiobjective multiclass SVM (MMSVM) [7]. It was reported that comparing with the simplest AT method maximizing functional margins, the MMSVM can obtain a classifier with a higher classification rate by maximizing exactly the geometric margin between each class pair. However, it requires a larger amount of computational resources than the simplest AT and other methods [7,8,9].

Therefore, in this paper, we propose a method of reducing the computational complexity without decreasing its generalization ability widely. First, we derive an approximate convex multi-objective optimization problem (MOP) by linearizing some constraints of the original non-convex MOP which is used to find a classifier in MMSVM. Secondly, we restrict the normal vectors of classifier candidates to a space spanned by centroids obtained from the preliminary k-means clustering. Thirdly, we solve the derived MOP by applying the reference point method. Through numerical experiments for benchmark problems, we evaluate performance of classifiers obtained by the proposed method and its computational complexity.

2 Multiclass Classification

The multiclass classification means discriminating data into more than two classes. We assume that a dataset \((x^i ,y_i ),\ i=1,\ldots ,l\) are generated by the same distribution P(x, y), where \(x^i \in \mathcal{R}^n\) denotes an n-dimensional input, and \(y_i \in M := \{1, \ldots , m\}\) denotes a label which the corresponding \(x^i\) should be classified into. The aim is finding a classifier f(x) which satisfies \( y_i =f(x^i ),\ i=1,\ldots ,l\) and which can correctly classify a new unknown input x from the same distribution. In this paper, we assume that there exists an appropriate feature space F and a corresponding function \(\phi \) \(: \mathcal{R}^n \rightarrow F\). Thus, we mainly discuss a linear classification on F which uses the kernel method.

In the representative SVMs for multiclass classification such as one-against-all (OAA) [2] and all-together (AT) methods [11, 12], the following discriminant function is often used:

where \(w^p,\ b^p,\ p \in M\) denote a weight vector and a bias value, respectively. Thus, the aim is finding appropriate \((w^p, b^p)\), \(p \in M\).

2.1 SVM Maximizing Functional Margins

As the simplest AT method, the SVM maximizing the sum of functional margins was proposed in [11, 12], which can be straightforwardly derived from the binary SVM.

where \(I_p := \{ i \in \{1, \ldots , l\} |\ y_i = p \}, p \in M\). Note that maximizing the functional margin in binary SVM can guarantee exact maximization of the distance between data and a separating hyperplane, called geometric margin, which can contribute its high generalization ability. On the other hand, in the problem (AT) for the multiclass classification, maximizing functional margins \(1/|| w^p - w^q ||\) does not necessarily guarantees the maximization of the geometric margins. Namely, the functional margin for a class pair pq does not necessarily represent the distance between the corresponding separating hyperplane:

and the closest data in classes \(\{ p, q \}\), as pointed out in [7], which is represented by

Thus, it might be difficult to expect the generalization ability similar to the binary SVM. The method of maximizing exactly geometric margins was already proposed in [7]. We introduce it in the next section.

2.2 SVM Maximizing Geometric Margins

In order to maximize exactly the geometric margins, an AT method called MMSVM was already proposed, which was formulated as the following multiobjective optimization problem (MOP) [7]:

where we define \( {\theta }_{pq}(w,\sigma ) = {\sigma _{pq}} / {\Vert w^p - w^q\Vert },\;\;q > p,\;\;p,q \in M. \) Note that (M) has more than two objective functions, and the number of them is that of all combinations of class pairs. In [7], it was shown that at any Pareto optimal solution \((w^*, b^*, \sigma ^*)\) of (M), each of objective functional values \({\theta }_{pq}(w^*,\sigma ^*)\) is equal to the geometric margin \( d_{pq}(w^*, b^*)\) of the corresponding class pair [7].

Since in general the optimal solutions of the MOP are often given as a set called Pareto optimal solutions, and, in addition, (M) is not convex. The problem is more difficult to solve than the single-objective optimization problem (SOP). However, a method of finding a Pareto optimal solution by solving a convex SOP was introduced, and the kernel method can be easily applied to (M).

Now, let’s consider the kernel method for (M). The weight vector \(w^p\) of the separating hyperplane is represented as a weighted sum of \(\phi (x^i)\) by introducing new decision variables \(\alpha ^{p}_{i} \in R,\;\;i = 1,\ldots ,l,\;\;p \in M\):

Then, by defining \(K := (\phi (x^1),\ldots ,\phi (x^l))^\top (\phi (x^1),\ldots ,\phi (x^l))\), \(\alpha ^{p} := (\alpha ^{p}_{1},\, \ldots ,\, \alpha ^{p}_{l})^\top \), \(p \in M\), \( \bar{\theta }_{pq}(\alpha ,\sigma ) := {\sigma _{pq}}/{ \sqrt{(\alpha ^p - \alpha ^q)^{\top } K (\alpha ^p - \alpha ^q)}}\), \(q > p\), \(p,q \in M\), \(\kappa (x^i) := (k(x^1,x^i),\ldots ,k(x^l,x^i))^\top \), \(i = 1,\ldots ,l\), (M) can be rewritten as

In addition, the discriminant function can be represented by \( f(x) = \mathop {\mathrm {argmax}}\limits _{p \in M} \{ {{\alpha ^p}^*}^\top \kappa (x) + {b^p}^* \}\), where \((\alpha ^*,b^*,\sigma ^*)\) is the Pareto optimal solution of (M2).

As a method of solving (M2), the \(\varepsilon \)-constraint method is used, which is one of popular scalarization methods for the MOP [4]. In the method, the SOP is derived instead of (M2), in which one of objective functions of (M2) is used as the objective function of the new SOP, and other objective functions are changed into its constraints by using an appropriate constant vector \(\varepsilon \). In addition, the following transformation was used to solve the SOP [7, 8].

Now, we focus on all positive eigenvalues values \({\lambda _1}, \ldots , \lambda _{\tau }\) of K, and the corresponding eigenvectors \( t^1, \ldots , t^{\tau }\), where \(\tau >0\) denotes the number of the positive eigenvalues. Then, we have that

Then, new decision variables \(z^p\) are defined as \( z^{p} := {\varLambda }^{\frac{1}{2}} {T}^\top \alpha ^p\), \(p \in M\), and the following convex SOP is obtained:

where \(\bar{t}^i{}^\top \) denotes the i-th row vector of T, the constant \(\varepsilon _{pq}\), \(p, q \in M\) is appropriately selected for the feasibility of (\(\varepsilon \)M2), and class pair rs is appropriately selected. Note that the constraint \( \sigma _{rs} = c_{rs}\) with a sufficiently large constant \(c_{rs}\) is added so that (\(\varepsilon \)M2) is convex, and a large \(c_{rs}\) guarantees that the optimal solution of (\(\varepsilon \)M2) is Pareto optimal [7]. Moreover, (\(\varepsilon \)M2) is a second-order cone programming problem (SOCP), which is a convex problem having the second-order and linear constraints, and which can be effectively solved by using some primal-dual interior method [1]. In addition, numerical experiments showed that the geometric margins of separating hyperplanes constructed by the optimal solution of (\(\varepsilon \)M2) are larger than those obtained by the functional margin method (AT), and that classifiers of (M2) obtained by (\(\varepsilon \)M2) have better classification ability than (AT) [7,8,9].

Next, let us evaluate the computational resources required to solve (\(\varepsilon \)M2) and (AT). Since in (\(\varepsilon \)M2), a constant vector \(\varepsilon \) is determined by the optimal solution of (AT), (\(\varepsilon \)M2) requires solving (AT). In addition, CPU time of solving an SOCP (\(\varepsilon \)M2) is considerably larger than that of (AT) because of many decision variables. Moreover, if the element number l of all datasets is large, the diagonalization of \(l \times l\) matrix K also requires a large amount of computational resources. Therefore, in [5], an approximation method for (M) was proposed, in which a single-objective SOCP is derived by introducing the sum of objective functions of (M) and linearizing the right-hand side of the first and second constraints of (M). The SOCP is easily solved due to its convexity, and an feasible solution of (M) can be easily obtained from the optimal solution of the SOCP. The numerical experiments showed that the generalization ability of classifiers obtained by approximation method is better than that of (AT).

In this paper, we derive an approximate MOP by using the same approximation technique, and, furthermore, we restrict the normal vectors of classifier candidates to a space spanned by centroids obtained from a preliminary clustering in order to reduce its computational complexity.

3 Approximate MMSVM

In this section, we introduce the following problem by defining \( \delta _{pq} := \sigma _{pq}^2\) and putting a constant upper limit \(\rho \ge 1\) on \( \delta _{pq}\), \(q>p,\ p,q\in M\).

Here, \(\eta _{pq}\) is defined by \( \eta _{pq}(w, \delta ) = {\Vert w^p - w^q \Vert ^2}/{ 2 \delta _{pq}}\), \(q>p,\ p,q\in M\). If \(\rho \) is sufficiently large, (S1) can be considered to be equivalent to (M). Then, in order to approximate (S1), we replace the right-hand sides of the first and second constraint inequalities with  by using a constant \(\rho \) in the same way to [5]. Then, we obtain

by using a constant \(\rho \) in the same way to [5]. Then, we obtain

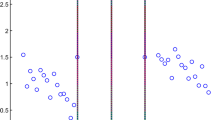

Figure 1 shows the relation of  and

and  , which shows that

, which shows that  for any \(\delta \in [1, \rho ]\). By making use of the property, for any Pareto optimal solution \((w, b, \delta )\) of (S2), we can obtain a feasible solution \((w, b, \delta ')\) of (S1) as follows:

for any \(\delta \in [1, \rho ]\). By making use of the property, for any Pareto optimal solution \((w, b, \delta )\) of (S2), we can obtain a feasible solution \((w, b, \delta ')\) of (S1) as follows:

Since (S2) can be regarded as convex, it is easier to solve the problem than (M2). In addition, we can show the following properties between solutions of (S1) and (S2): The relation of objective function values at \((w, b, \delta )\) and \((w, b, \delta ')\) is given by

Thus, for the Pareto solution or the feasible solution \((\bar{w}, \bar{b}, \bar{\delta })\) of (S1) which dominate \((w, b, \delta ')\) such that \(\eta _{pq}(\bar{w}, \bar{b}, \bar{\delta }) \le \eta _{pq}(w, b, \delta ')\), \(q>p,\;p,q\in M,\) we have that

The approximate method for MMSVM proposed in this section is called AMMSVM.

4 AMMSVM Based on K-Means Clustering

Next, we introduce a dimension reduction which restricts the weights of (1) of separating hyperplanes to AMMSVM. This method is based on the assumption that the representation of appropriate weights of the separating hyperplanes does not need all datasets such as (1), and thus, the weights can be represented by the weighted sum of a smaller number of data. Namely, instead of using all \(\phi (x^i), i = 1, \ldots , l\), the method selects representative points in F for weights \(w^p\) of each \(p \in M\). Since by using this restriction, the feasible region of the proposed problem is smaller than the original (M2), solving time and used memories can be expected to be widely reduced. At the same time, the proposed method keeps all the constraints of (M2) which guarantee that all training data is correctly classified. Thus, the method is quite different from a reduction method of deleting training data by some preliminary technique [3]. The proposed method uses the k-means clustering [6] with an appropriate number of clusters to each class data \( \phi (x^{i}),\ i \in I_{p}\), and centroids of obtained clusters are used as representative points for the class.

4.1 k-Means Clustering

In the proposed method, the k-means clustering is applied to each dataset \(\phi (x^{i}),\ i \in I_{p}\) for class p in order to obtain clusters \( \{ \phi (x^l) \}_{l \in I^k_p}\), \(k=1,\ldots , c_p\), such that \(I_p = \cup _{k=1}^{c_p} I^k_p\), individually, which means minimizing the following function:

where \(c_p\) denotes the number of clusters which is appropriately selected for class p. In the numerical experiments at Sect. 5, we set \(c_p= \lfloor r |I_p| \rfloor \), and r is a small constant. Centroids of each cluster k are given by \(\psi ^{p,k} = \sum _{i \in I^{k}_p} \phi (x^{i})/{|I_p^k|} \). Here, note that the kernel method can be easily applied to the clustering method.

It is well-known that the k-means does not necessarily find the global minimum of \(E^p\). Thus, we executed 20 times k-means clustering and select the centroids of the clustering in which the least \(E^p\) was obtained in the numerical experiments.

4.2 Dimension Reduction Based on k-Means Clustering

The centroids obtained by the k-means clustering for the dataset in class p are represented by \(\psi ^{p,k}\), \(k = 1,\ldots , c_p\). By introducing new decision variables \(\beta ^{p}_{q,k}\), \(k=1, \ldots , n_p\), \(p, q\in M\), the weight \(w_{p}\) of AMMSVM for class \(p \in M\) are given by

where matrix \(\varPsi \) and a decision vector \(\beta ^p\) is defined as

and \(c_{all}\) is defined as \(\sum _{p\in M}c_p\). Then, the discriminant function is represented by

and the decision variables \((\beta ^p,b^p)\), \(q>p,\;p,q\in M\) are determined by solving the following MOP:

The geometric margins between all class pairs are maximized by solving (KMS) under the restriction (3). Moreover, since a centroid of each cluster is represented by the weighted sum of \(\phi (x^i)\), the kernel method can be applied to (KMS).

Now, similarly to (2), we have that \( \varPsi ^\top \varPsi = \hat{T} \hat{\varLambda } \hat{T}^\top \), where \(\hat{\varLambda } \in \mathcal{R}^{\tau _c \times \tau _c}\) is a diagonal matrix whose diagonal components are all positive eigenvalues of \(\varPsi ^\top \varPsi \), \(T \in \mathcal{R}^{c_{all} \times \tau _c}\) consists of the corresponding eigenvectors, and \(\tau _c\) denotes the number of the positive eigenvalues. Then, by introducing decision variables:

and defining as \( \bar{k}^p(x) := \left( \sum _{j\in I^1_p}k(x^j,x) /{|I^1_p|} \right. ,\, \dots ,\,\left. \sum _{j\in I^{c_p}_p}k(x^j,x) / {|I^{c_p}_p|} \right) ^{\top }\) and \(\bar{\kappa }(x) := \left( \bar{k}^1(x),\dots , \bar{k}^m(x) \right) ^\top \), we can transform (KMS) to the following MOP:

We can easily show that any Pareto optimal solution of (KMS) is obtained by solving (KMS2) as follows:

Theorem 1

For a Pareto optimal solution \((z^*,b^*,\delta ^*)\) of (KMS2), a solution \((\beta ^*,b^*,\delta ^*)\) of which \(\beta ^*\) is defined as \( \beta ^{p*} = \hat{T} \hat{\varLambda }^{-\frac{1}{2}}z^{p*}, \;\;p\in M\) is Pareto optimal for (KMS). Conversely, for a Pareto optimal solution \((\beta ^*,b^*,\delta ^*)\) of (KMS), \((z^*,b^*,\delta ^*)\) in which \(z^*\) is defined by (4) is Pareto optimal for (KMS2).

4.3 Solving Based on Reference Point Method

In this subsection, we apply the reference point method to solve (KMS2), which finds a Pareto optimal solution by minimizing the distance between a given reference point and Pareto optimal solutions in the objective space. The following SOP can be derived:

Here, \(r^*_{pq}\) is a given reference point which is used as the criterion of minimizing. In (KMS3), the distance between the reference point and Pareto optimal solutions is measured by the augmented Tchebyshev function, in which \(\omega \) is a weight vector which determines a balance between objective functions, and \(\mu \) shows the rate of the second term to the first one. The weight vector \(\omega \) and reference point \(r^*\) are selected as the following three kinds of pairs:

where \(g^p\) denotes the center of gravity of the data set of class p, namely, \(g^p = (1/|I_p|)\sum _{i\in I_p} \phi (x^i)\). The selection is based on the idea that the appropriate balance of margins is roughly estimated at the balance of the distances between the centers of gravity. Then, the discriminant function is represented by

where \((z^*,b^*,\delta ^*)\) denotes the optimal solution of (KMS3). Although (KMS3) is not smooth, an equivalent smooth SOCP can be easily derived, which means that a Pareto optimal solution of (KMS3) can be easily obtained by solving the SOCP. Moreover, in the numerical experiments, we solve the dual problem of the smooth SOCP problem because its computational time can be expected to be less than that of the original problem.

4.4 Comparison of Computational Complexities

Now, let us compare computational complexities of (\(\varepsilon \)M2) and (KMS3). The constant vector \(\varepsilon \) used in (\(\varepsilon \)M2), are determined by solutions of (AT), which is a large-scale quadratic optimization problem using all class datasets at once. On the other hand, the calculation of centroids for (KMS3) requires a considerably small amount of computational resources even if it is executed 20 times because the k-means clustering is individually applied to each class dataset.

Next, let us compare the diagonalization of kernel matrices used in (\(\varepsilon \)M2) and the dual problem of (KMS3), and the numbers of the decision variables and constraints of them. The size of matrices diagonalized for (\(\varepsilon \)M2) and (KMS3) are \(l \times l\) and \( c_{all} \times c_{all}\), respectively. The numbers of decision variables of (\(\varepsilon \)M2) and the dual problem of (KMS3) are \( m(m+2\tau +1)/2 -1\) and \( m(m + \tau _c)\), respectively, and the sizes of constraints of them are \( (m-1)(l + m/2) -1 \) and \((m-1)(l +3m/2))\), respectively. Since in general, we have that \( m \le \tau _c \le c_{all} \ll \tau \le l\), more reduction can be expected if \(c_{all}\) is small.

5 Numerical Experiments

We applied the existing methods, AT and MMSVM and the variations of the proposed methods to seven benchmark problems [10], and compared the mean correct classification rate and mean CPU time by using the 10-fold cross-validation, in which hyperparameters were appropriately selected. To solve optimization problems, we used software package MOSEK. As variations of the proposed methods, we used the AMMSVM which does not use the dimension reduction based on the k-means clustering and which is solved by the reference point method, and KMSs in which r was varied in \( \{ 0.05,\, 0.1, 0.15, 0.2 \}\), which are represented by AMM, KMS5, KMS10, KMS15 and KMS20, respectively, and three kinds of reference point and weights, R0, R1 and R2, were used for AMMSVM and each KMS. We used the RBF kernel, namely, \(k(x,y) = \exp \left( - \gamma \Vert x - y \Vert ^2\right) \).

The results are shown in Tables 1 and 2. In Table 1, the numbers in parentheses denote the best hyperparameters of each method: \((\gamma )\) in AT and MMSVM, and \((\gamma , \rho , \mu )\) in AMMSVM and KMSs. The italic and bold number denote the first and second best classification rate for each problem.

Table 1 shows that MMSVM obtained a high classification rate for many problems, while the classification rates of AMMSVM and KMSs with a large r are equal or slightly smaller than those of MMSVM. The classification rates of KMSs mostly increase as r increases. In particular, although the rates are considerably low if r is small for Ecoli, the highest rate was obtained by the KMS with \(r=0.15\). In addition, AMMSVM and KMSs achieved a higher rate than MMSVM for some problems. The superiority of AMMSVM and KMSs is considered to be caused by the diversity of obtained solutions: The reference point method used in AMMSVM and KMSs finds a solution under various kinds of balances of objective functions, while in MMSVM, a single margin is maximized by the \(\varepsilon \)-constraint method using the optimal solution of (AT). From Table 2, we can see that AMMSVM reduced CPU time than AT or MMSVM without a dimension reduction, and KMSs did more greatly for large-scale problems even if \(r = 0.15\) or 0.20. Comparing performance of KMSs using three kinds of reference point and weights, namely, KMSs with R0, R1 and R2, each method obtained a high rate for different problems, though no significant difference was observed.

6 Conclusion

In this paper, we have proposed an approximate method of MMSVM which approximates its non-convex MOP by linearizing the constraints, and a reduction method which restricts the normal vectors of separating hyperplanes by using centroids from the k-means clustering. Through numerical experiments, we have observed that the proposed methods are effective to reduce the computational resources without decreasing the classification ability widely.

References

Alizadeh, F., Goldfarb, D.: Second-order cone programming. Math. Program. Ser. B 95, 3–51 (2003)

Bottou, L., et al.: Comparison of classifier methods: a case study in handwriting digit recognition. In: Proceedings of the 12th IAPR International Conference on Pattern Recognition, pp. 77–87 (1994)

Boyang, L., Qiangwei, W., Jinglu, H.: A fast SVM training method for very large datasets. In: Proceedings of the 2009 International Joint Conference on Neural Networks, pp. 14–19 (2009)

Ehrgott, M.: Multicriteria Optimization. Springer, Heidelberg (2005). https://doi.org/10.1007/3-540-27659-9

Kusunoki, Y., Tatsumi, K.: A multi-class support vector machine based on geometric margin maximization. In: Huynh, V.-N., Inuiguchi, M., Tran, D.H., Denoeux, T. (eds.) IUKM 2018. LNCS (LNAI), vol. 10758, pp. 101–113. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-75429-1_9

MacQueen, J.B.: Some methods for classification and analysis of multivariate observations. In: Fifth Berkeley Symposium on Mathematics, Statistics and Probability, pp. 281–297 (1967)

Tatsumi, K., Tanino, T., Hayashida, K.: Multiobjective multiclass support vector machines maximizing geometric margins. Pac. J. Optim. 6(1), 115–140 (2010)

Tatsumi, K., Kawachi, R., Tanino, T.: Nonlinear extension of multiobjective multiclass support vector machine. In: Proceedings of the IEEE SMC, pp. 1338–1343 (2010)

Tatsumi, K., Tanino, T.: Support vector machines maximizing geometric margins for multi-class classification. Off. J. Span. Soc. Stat. Oper. Res. 22(3), 815–840 (2014)

Lichman, M.: UCI machine learning repository (2013). http://archive.ics.uci.edu/ml

Weston, J., Watkins, C.: Multi-class Support Vector Machines. In: Verleysen, M. (ed.) ESANN99, Belgium, Brussels (1999)

Vapnik, V.N.: Statistical Learning Theory. Wiley, NewYork (1998)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Tatsumi, K., Sugimoto, T., Kusunoki, Y. (2019). Approximate Multiobjective Multiclass Support Vector Machine Restricting Classifier Candidates Based on k-Means Clustering. In: Seki, H., Nguyen, C., Huynh, VN., Inuiguchi, M. (eds) Integrated Uncertainty in Knowledge Modelling and Decision Making. IUKM 2019. Lecture Notes in Computer Science(), vol 11471. Springer, Cham. https://doi.org/10.1007/978-3-030-14815-7_23

Download citation

DOI: https://doi.org/10.1007/978-3-030-14815-7_23

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-14814-0

Online ISBN: 978-3-030-14815-7

eBook Packages: Computer ScienceComputer Science (R0)