Abstract

It is a well-known fact that mathematical functions that are timelimited (or spacelimited) cannot be simultaneously bandlimited (in frequency). Yet the finite precision of measurement and computation unavoidably bandlimits our observation and modeling of scientific data, and we often only have access to, or are only interested in, a study area that is temporally or spatially bounded. In the geosciences we may be interested in spectrally modeling a time series defined only on a certain interval, or we may want to characterize a specific geographical area observed using an effectively bandlimited measurement device. It is clear that analyzing and representing scientific data of this kind will be facilitated if a basis of functions can be found that are “spatiospectrally” concentrated, i.e., “localized” in both domains at the same time. Here, we give a theoretical overview of one particular approach to this “concentration” problem, as originally proposed for time series by Slepian and coworkers in the 1960s. We show how this framework leads to practical algorithms and statistically performant methods for the analysis of signals and their power spectra in one and two dimensions, particularly for applications in the geosciences, and for scalar and vectorial signals defined on the surface of a unit sphere.

1 Introduction

It is well appreciated that functions cannot have finite support in the temporal (or spatial) and spectral domain at the same time (Slepian 1983). Finding and representing signals that are optimally concentrated in both is a fundamental problem in information theory which was solved in the early 1960s by Slepian, Landau, and Pollak (Slepian and Pollak 1961; Landau and Pollak 1961, 1962). The extensions and generalizations of this problem (Daubechies 1988, 1990; Daubechies and Paul 1988; Cohen 1989) have strong connections with the burgeoning field of wavelet analysis. In this contribution, however, we shall not talk about wavelets, the scaled translates of a “mother” with vanishing moments, the tool for multiresolution analysis (Daubechies 1992; Flandrin 1998; Mallat 1998). Rather, we devote our attention entirely to what we shall collectively refer to as “Slepian functions,” in multiple Cartesian dimensions and on the sphere.

These we understand to be orthogonal families of functions that are all defined on a common, e.g., geographical, domain, where they are either optimally concentrated or within which they are exactly limited, and which at the same time are exactly confined within a certain bandwidth or maximally concentrated therein. The measure of concentration is invariably a quadratic energy ratio, which, though only one choice out of many (Donoho and Stark 1989; Freeden and Windheuser 1997; Riedel and Sidorenko 1995; Freeden and Schreiner 2010; Michel 2010), is perfectly suited to the nature of the problems we are attempting to address. These are, for example: How do we make estimates of signals that are noisily and incompletely observed? How do we analyze the properties of such signals efficiently, and how can we represent them economically? How do we estimate the power spectrum of noisy and incomplete data? What are the particular constraints imposed by dealing with potential-field signals (gravity, magnetism, etc.) and how is the altitude of the observation point, e.g., from a satellite in orbit, taken into account? What are the statistical properties of the resulting signal and power spectral estimates?

These and other questions have been studied extensively in one dimension, that is, for time series, but until the twenty-first century, remarkably little work had been done in the Cartesian plane or on the surface of the sphere. For the geosciences, the latter two domains of application are nevertheless vital for the obvious reasons that they deal with information (measurement and modeling) that is geographically distributed on (a portion of) a planetary surface. In our own recent series of papers (Wieczorek and Simons 2005, 2007; Simons and Dahlen 2006, 2007; Simons et al. 2006, 2009; Dahlen and Simons 2008; Plattner and Simons 2013, 2014) we have dealt extensively with Slepian’s problem in spherical geometry. Asymptotic reductions to the plane (Simons et al. 2006; Simons and Wang 2011) then generalize Slepian’s early treatment of the multidimensional Cartesian case (Slepian 1964).

In this chapter we provide a framework for the analysis and representation of geoscientific data by means of Slepian functions defined for time series, on two-dimensional Cartesian, and spherical domains. We emphasize the common ground underlying the construction of all Slepian functions, discuss practical algorithms, and review the major findings of our own recent work on signal (Wieczorek and Simons 2005; Simons and Dahlen 2006) and power spectral estimation theory on the sphere (Wieczorek and Simons 2007; Dahlen and Simons 2008). Compared to the first edition of this work (Simons 2010), we now also include a section on vector-valued Slepian functions that brings the theory in line with the modern demands of (satellite) gravity, geomagnetic, or oceanographic data analysis (Freeden 2010; Olsen et al. 2010; Grafarend et al. 2010; Martinec 2010; Sabaka et al. 2010).

2 Theory of Slepian Functions

In this section we review the theory of Slepian functions in one dimension, in the Cartesian plane, and on the surface of the unit sphere. The one-dimensional theory is quite well known and perhaps most accessibly presented in the textbook by Percival and Walden (1993). It is briefly reformulated here for consistency and to establish some notation. The two-dimensional planar case formed the subject of one of Slepian’s lesser-known papers (Slepian 1964) and is reviewed here also. We do not discuss alternatives by which two-dimensional Slepian functions are constructed by forming the outer product of pairs of one-dimensional functions. While this approach has produced some useful results (Hanssen 1997; Simons et al. 2000), it does not solve the concentration problem sensu stricto. The spherical scalar case was treated in most detail, and for the first time, by ourselves elsewhere (Wieczorek and Simons 2005; Simons et al. 2006; Simons and Dahlen 2006), though two very important early studies by Slepian, Grünbaum, and others laid much of the foundation for the analytical treatment of the spherical concentration problem for cases with special symmetries (Gilbert and Slepian 1977; Grünbaum 1981). The spherical vector case was treated in its most general form by ourselves elsewhere (Plattner et al. 2012; Plattner and Simons 2013, 2014), but had also been studied, in less general detail, by researchers interested in medical imaging (Maniar and Mitra 2005; Mitra and Maniar 2006) and optics (Jahn and Bokor 2012, 2013). Finally, we recast the theory in the context of reproducing-kernel Hilbert spaces, through which the reader may appreciate some of the connections with radial basis functions, splines, and wavelet analysis, which are commonly formulated in such a framework (Freeden et al. 1998; Michel 2010).

2.1 Spatiospectral Concentration for Time Series

2.1.1 General Theory in One Dimension

We use t to denote time or one-dimensional space and ω for angular frequency, and adopt a normalization convention (Mallat 1998) in which a real-valued time-domain signal f(t) and its Fourier transform F(ω) are related by

The problem of finding the strictly bandlimited signal

that is maximally, though by virtue of the Paley-Wiener theorem (Daubechies 1992; Mallat 1998) never completely, concentrated into a time interval | t | ≤ T, was first considered by Slepian, Landau, and Pollak (Slepian and Pollak 1961; Landau and Pollak 1961). The optimally concentrated signal is taken to be the one with the least energy outside of the interval:

Bandlimited functions g(t) satisfying the variational problem (3) have spectra G(ω) that satisfy the frequency-domain convolutional integral eigenvalue equation

The corresponding time- or spatial-domain formulation is

The “prolate spheroidal eigentapers” \(g_{1}(t),g_{2}(t),\ldots\) that solve Eq. (5) form a doubly orthogonal set. When they are chosen to be orthonormal over infinite time \(\vert t\vert \leq \infty\), they are also orthogonal over the finite interval \(\vert t\vert \leq T\):

A change of variables and a scaling of the eigenfunctions transforms Eq. (4) into the dimensionless eigenproblem

Equation (7) shows that the eigenvalues \(\lambda _{1}>\lambda _{2}>\ldots\) and suitably scaled eigenfunctions \(\psi _{1}(x),\psi _{2}(x),\ldots\) depend only upon the time-bandwidth product TW. The sum of the concentration eigenvalues \(\lambda\) relates to this product by

The eigenvalue spectrum of Eq. (7) has a characteristic step shape, showing significant (\(\lambda \approx 1\)) and insignificant (\(\lambda \approx 0\)) eigenvalues separated by a narrow transition band (Landau 1965; Slepian and Sonnenblick 1965). Thus, this “Shannon number” is a good estimate of the number of significant eigenvalues or, roughly speaking, \(N^{\mathrm{1D}}\) is the number of signals f(t) that can be simultaneously well concentrated into a finite time interval \(\vert t\vert \leq T\) and a finite frequency interval \(\vert \omega \vert \leq W\). In other words (Landau and Pollak 1962), \(N^{\mathrm{1D}}\) is the approximate dimension of the space of signals that is “essentially” timelimited to T and bandlimited to W, and using the orthogonal set \(g_{1},g_{2},\ldots,g_{N^{\mathrm{1D}}}\) as its basis is parsimonious.
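The step-shaped spectrum and the role of the Shannon number can be illustrated numerically. The following is a minimal sketch, not the procedure used in this chapter: it assumes that the scaled kernel of Eq. (7) has the standard sinc form \(\sin [TW(x - x')]/[\pi (x - x')]\) on \(\vert x\vert \leq 1\), for which the trace gives \(N^{\mathrm{1D}} = 2TW/\pi\), and it discretizes the integral by Gauss-Legendre (Nyström) quadrature. The function name `slepian_1d_nystrom` and the value TW = 10 are hypothetical choices for illustration.

```python
import numpy as np


def slepian_1d_nystrom(c, n_nodes=64):
    """Nystrom solution of the scaled concentration problem on [-1, 1].

    c is the time-bandwidth product TW. The kernel is ASSUMED to be
    D(x, x') = sin(c (x - x')) / (pi (x - x')).
    """
    x, w = np.polynomial.legendre.leggauss(n_nodes)  # nodes and weights
    # np.sinc(u) = sin(pi u) / (pi u), so this equals sin(c d) / (pi d)
    D = (c / np.pi) * np.sinc(c * (x[:, None] - x[None, :]) / np.pi)
    sw = np.sqrt(w)
    K = sw[:, None] * D * sw[None, :]  # symmetrized quadrature matrix
    lam, v = np.linalg.eigh(K)
    order = np.argsort(lam)[::-1]      # sort by decreasing concentration
    lam, v = lam[order], v[:, order]
    psi = v / sw[:, None]              # eigenfunction samples at the nodes
    return x, w, lam, psi


TW = 10.0
x, w, lam, psi = slepian_1d_nystrom(TW)
shannon = 2.0 * TW / np.pi

print(f"Shannon number 2TW/pi = {shannon:.3f}")
print("sum of eigenvalues     =", round(float(lam.sum()), 3))
print("leading eigenvalues    =", np.round(lam[:10], 6))
print("eigenvalues above 0.5  =", int(np.sum(lam > 0.5)))
```

In this sketch the eigenfunction samples `psi` are normalized over the interval \(\vert x\vert \leq 1\) rather than over the whole line, which differs from the normalization adopted in the text by a factor involving \(\lambda\).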

2.1.2 Sturm-Liouville Character and Tridiagonal Matrix Formulation

The integral operator acting upon ψ in Eq. (7) commutes with a differential operator that arises in expressing the three-dimensional scalar wave equation in prolate spheroidal coordinates (Slepian and Pollak 1961; Slepian 1983), which makes it possible to find the scaled eigenfunctions ψ by solving the Sturm-Liouville equation

where \(\chi \neq \lambda\) is the associated eigenvalue. The eigenfunctions ψ(x) of Eq. (9) can be found at discrete values of x by diagonalization of a simple symmetric tridiagonal matrix (Slepian 1978; Grünbaum 1981; Percival and Walden 1993) with elements

The matching eigenvalues \(\lambda\) can then be obtained directly from Eq. (7). The discovery of the Sturm-Liouville formulation of the concentration problem posed in Eq. (3) proved to be a major impetus for the widespread adoption and practical applications of the “Slepian” basis in signal identification, spectral analysis, and numerical analysis. Compared to the sequence of eigenvalues \(\lambda\), the spectrum of the eigenvalues χ is extremely regular, and thus the solution of Eq. (9) is readily amenable to finite-precision numerical computation (Percival and Walden 1993).
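The commutation property can be checked numerically in the discrete setting. The sketch below is an illustration under stated assumptions: it uses the discrete-time tridiagonal matrix in the form given by Percival and Walden (1993) for sequences of length N and half-bandwidth W in cycles per sample, whose scaling may differ from that of Eq. (10), together with the discrete sinc concentration kernel. The function names and the values N = 64, NW = 4 are hypothetical.

```python
import numpy as np


def dpss_tridiagonal(N, W):
    """Symmetric tridiagonal matrix that commutes with the sinc kernel
    (discrete-time form; ASSUMED scaling, see Percival and Walden 1993)."""
    t = np.arange(N)
    diag = ((N - 1 - 2 * t) / 2.0) ** 2 * np.cos(2 * np.pi * W)
    off = t[1:] * (N - t[1:]) / 2.0
    return np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)


def sinc_kernel(N, W):
    """Discrete concentration kernel sin(2 pi W (t - t')) / (pi (t - t'))."""
    t = np.arange(N)
    return 2 * W * np.sinc(2 * W * (t[:, None] - t[None, :]))


N, NW = 64, 4.0
W = NW / N
T = dpss_tridiagonal(N, W)
A = sinc_kernel(N, W)

# Diagonalize the well-conditioned tridiagonal matrix, largest chi first
chi, V = np.linalg.eigh(T)
chi, V = chi[::-1], V[:, ::-1]

# Concentration eigenvalues follow as Rayleigh quotients of the kernel
lam = np.einsum("ia,ij,ja->a", V, A, V)
commutator = np.linalg.norm(A @ T - T @ A)

print("norm of commutator     =", commutator)
print("leading lambda         =", np.round(lam[:10], 8))
print("smallest spacing, chi  =", np.min(np.abs(np.diff(chi))))
print("spacing of first two lambda =", lam[0] - lam[1])
```

The design choice here mirrors the text: the eigenvectors come from the matrix with the regular spectrum, and the nearly degenerate values \(\lambda\) are only evaluated afterwards.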

2.2 Spatiospectral Concentration in the Cartesian Plane

2.2.1 General Theory in Two Dimensions

A square-integrable function f(x) defined in the plane has the two-dimensional Fourier representation

We use g(x) to denote a function that is bandlimited to \(\mathcal{K}\), an arbitrary subregion of spectral space,

Following Slepian (1964), we seek to concentrate the power of g(x) into a finite spatial region \(\mathcal{R}\subset \mathbb{R}^{2}\), of area A:

Bandlimited functions g(x) that maximize the Rayleigh quotient (13) solve the Fredholm integral equation (Tricomi 1970)

The corresponding problem in the spatial domain is

The bandlimited spatial-domain eigenfunctions \(g_{1}(\mathbf{x}),g_{2}(\mathbf{x}),\ldots\) and eigenvalues \(\lambda _{1} \geq \lambda _{2} \geq \ldots\) that solve Eq. (15) may be chosen to be orthonormal over the whole plane \(\|\mathbf{x}\| \leq \infty\) in which case they are also orthogonal over \(\mathcal{R}\):

Concentration to the disk-shaped spectral band \(\mathcal{K} =\{ \mathbf{k}:\| \mathbf{k}\| \leq K\}\) allows us to rewrite Eq. (15) after a change of variables and a scaling of the eigenfunctions as

where the region \(\mathcal{R}_{{\ast}}\) is scaled to area 4π and \(J_{1}\) is the first-order Bessel function of the first kind. Equation (17) shows that, also in the two-dimensional case, the eigenvalues \(\lambda _{1},\lambda _{2},\ldots\) and the scaled eigenfunctions \(\psi _{1}(\boldsymbol{\xi }),\psi _{2}(\boldsymbol{\xi }),\ldots\) depend only on the combination of the circular bandwidth K and the spatial concentration area A, where the quantity \(K^{2}A/(4\pi )\) now plays the role of the time-bandwidth product TW in the one-dimensional case. The sum of the concentration eigenvalues \(\lambda\) defines the two-dimensional Shannon number \(N^{\mathrm{2D}}\) as

Just as \(N^{\mathrm{1D}}\) in Eq. (8), \(N^{\mathrm{2D}}\) is the product of the spectral and spatial areas of concentration multiplied by the “Nyquist density” (Daubechies 1988, 1992). And, similarly, it is the effective dimension of the space of “essentially” space- and bandlimited functions in which the set of two-dimensional functions \(g_{1},g_{2},\ldots,g_{N^{\mathrm{2D}}}\) may act as a sparse orthogonal basis.

After a long hiatus since the work of Slepian (1964), the two-dimensional problem has recently been the focus of renewed attention in the applied mathematics community (de Villiers et al. 2003; Shkolnisky 2007), and applications to the geosciences are following suit (Simons and Wang 2011). Numerous numerical methods exist to use Eqs. (14) and (15) in solving the concentration problem (13) on two-dimensional Cartesian domains. An example of Slepian functions on a geographical domain in the Cartesian plane can be found in Fig. 1.

Fig. 1. Bandlimited eigenfunctions \(g_{1},g_{2},\ldots,g_{4}\) that are optimally concentrated within the Columbia Plateau, a physiographic region in the United States centered on 116.02°W 43.56°N (near Boise City, Idaho) of area \(A \approx 145 \times 10^{3}\) km\(^{2}\). The concentration factors \(\lambda _{1},\lambda _{2},\ldots,\lambda _{4}\) are indicated; the Shannon number \(N^{\mathrm{2D}} = 10\). The top row shows a rendition of the eigenfunctions in space on a grid with 5 km resolution in both directions, with the convention that positive values are blue and negative values red, though the sign of the functions is arbitrary. The spatial concentration region is outlined in black. The bottom row shows the squared Fourier coefficients \(\vert G_{\alpha }(\mathbf{k})\vert ^{2}\) as calculated from the functions \(g_{\alpha }(\mathbf{x})\) shown, on a wavenumber scale that is expressed as a fraction of the Nyquist wavenumber. The spectral limitation region is shown by the black circle at wavenumber K = 0.0295 rad/km. All areas for which the absolute value of the functions plotted is less than one hundredth of the maximum value attained over the domain are left white. The calculations were performed by the Nyström method using Gauss-Legendre integration of Eq. (17) in the two-dimensional spatial domain (Simons and Wang 2011).
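As a quick consistency check on the numbers quoted for the Columbia Plateau example, the two-dimensional Shannon number \(K^{2}A/(4\pi )\) can be evaluated directly. This is a small arithmetic sketch; the function name is hypothetical, and the Nyquist wavenumber is taken to be π divided by the grid spacing, which is an assumption about how the wavenumber axis of the figure is scaled.

```python
import math


def shannon_number_2d(K, area):
    """Two-dimensional Shannon number K^2 A / (4 pi) for a circular band."""
    return K**2 * area / (4.0 * math.pi)


K = 0.0295        # bandwidth in rad/km, from the figure caption
A = 145e3         # area in km^2, from the figure caption
dx = 5.0          # grid spacing in km, from the figure caption

N2D = shannon_number_2d(K, A)
k_nyquist = math.pi / dx  # ASSUMED definition of the Nyquist wavenumber

print(f"N2D is approximately {N2D:.2f}")
print(f"K as a fraction of the Nyquist wavenumber: {K / k_nyquist:.3f}")
```

The result rounds to the value of 10 quoted in the caption.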

2.2.2 Sturm-Liouville Character and Tridiagonal Matrix Formulation

If in addition to the circular spectral limitation, space is also circularly limited, in other words, if the spatial region of concentration or limitation \(\mathcal{R}\) is a circle of radius R, then a polar coordinate, \(\mathbf{x} = (r,\theta )\), representation

may be used to decompose Eq. (17) into a series of nondegenerate fixed-order eigenvalue problems, after scaling,

The solutions to Eq. (20) also solve a Sturm-Liouville equation on \(0 \leq \xi \leq 1\). In terms of \(\varphi (\xi ) = \sqrt{\xi }\,\psi (\xi )\),

for some \(\chi \neq \lambda\). When m = ±1∕2 Eq. (21) reduces to Eq. (9). By extension to \(\xi> 1\) the fixed-order “generalized prolate spheroidal functions” \(\varphi _{1}(\xi ),\varphi _{2}(\xi ),\ldots\) can be determined from the rapidly converging infinite series

where \(\varphi (0) = 0\) and the fixed-m expansion coefficients \(d_{l}\) are determined by recursion (Slepian 1964) or by diagonalization of a symmetric tridiagonal matrix (de Villiers et al. 2003; Shkolnisky 2007) with elements given by

where the parameter l ranges from 0 to some large value that ensures convergence. The desired concentration eigenvalues \(\lambda\) can subsequently be obtained by direct integration of Eq. (17), or, alternatively, from

An example of Slepian functions on a disk-shaped region in the Cartesian plane can be found in Fig. 2. The solutions were obtained by the Nyström method with Gauss-Legendre integration of Eq. (17) in the two-dimensional spatial domain. These differ only very slightly from the results of computations carried out using the diagonalization of Eqs. (23) directly, as shown and discussed by us elsewhere (Simons and Wang 2011).

Fig. 2. Bandlimited eigenfunctions \(g_{\alpha }(r,\theta )\) that are optimally concentrated within a Cartesian disk of radius R = 1. The dashed circle denotes the region boundary. The Shannon number \(N^{\mathrm{2D}} = 42\). The eigenvalues \(\lambda _{\alpha }\) have been sorted to a global ranking with the best-concentrated eigenfunction plotted at the top left and the 30th best in the lower right. Blue is positive and red is negative and the color axis is symmetric, but the sign is arbitrary; regions in which the absolute value is less than one hundredth of the maximum value on the domain are left white. The calculations were performed by Gauss-Legendre integration in the two-dimensional spatial domain, which sometimes leads to slight differences in the last two digits of what should be identical eigenvalues for each pair of non-circularly-symmetric eigenfunctions.

2.3 Spatiospectral Concentration on the Surface of a Sphere

2.3.1 General Theory in “Three” Dimensions

We denote the colatitude of a geographical point \(\mathbf{\hat{r}}\) on the unit sphere surface \(\Omega =\{ \mathbf{\hat{r}}:\| \mathbf{\hat{r}}\| = 1\}\) by \(0 \leq \theta \leq \pi\) and the longitude by 0 ≤ ϕ < 2π. We use R to denote a region of \(\Omega\), of area A, within which we seek to concentrate a bandlimited function of position \(\mathbf{\hat{r}}\). We express a square-integrable function \(f(\mathbf{\hat{r}})\) on the surface of the unit sphere as

using orthonormalized real surface spherical harmonics (Edmonds 1996; Dahlen and Tromp 1998)

The quantity \(0 \leq l \leq \infty\) is the angular degree of the spherical harmonic, and \(-l \leq m \leq l\) is its angular order. The function \(P_{lm}(\mu )\) defined in Eq. (28) is the associated Legendre function of integer degree l and order m. Our choice of the constants in Eqs. (26) and (27) orthonormalizes the harmonics on the unit sphere:

and leads to the addition theorem in terms of the Legendre functions \(P_{l}(\mu ) = P_{l0}(\mu )\) as

To maximize the spatial concentration of a bandlimited function

within a region R, we maximize the energy ratio

Maximizing Eq. (32) leads to the positive-definite spectral-domain eigenvalue equation

and we may equally well rewrite Eq. (33) as a spatial-domain eigenvalue equation:

where \(P_{l}\) is the Legendre function of degree l. Equation (34) is a homogeneous Fredholm integral equation of the second kind, with a finite-rank, symmetric, Hermitian kernel. We choose the spectral eigenfunctions of the operator in Eq. (33b), whose elements are \(g_{\alpha lm}\), \(\alpha = 1,\ldots,(L + 1)^{2}\), to satisfy the orthonormality relations

The finite set of bandlimited spatial eigensolutions \(g_{1}(\mathbf{\hat{r}}),g_{2}(\mathbf{\hat{r}}),\ldots,g_{(L+1)^{2}}(\mathbf{\hat{r}})\) can be made orthonormal over the whole sphere \(\Omega\) and orthogonal over the region R:

In the limit of a small area \(A \rightarrow 0\) and a large bandwidth \(L \rightarrow \infty\) and after a change of variables, a scaled version of Eq. (34) will be given by

where the scaled region \(R_{{\ast}}\) now has area 4π and \(J_{1}\) again is the first-order Bessel function of the first kind. As in the one- and two-dimensional case, the asymptotic, or “flat-Earth” eigenvalues \(\lambda _{1} \geq \lambda _{2} \geq \ldots\) and scaled eigenfunctions \(\psi _{1}(\boldsymbol{\xi }),\psi _{2}(\boldsymbol{\xi }),\ldots\) depend upon the maximal degree L and the area A only through what is once again a space-bandwidth product, the “spherical Shannon number,” this time given by

Irrespective of the particular region of concentration that they were designed for, the complete set of bandlimited spatial Slepian eigenfunctions \(g_{1},g_{2},\ldots,g_{(L+1)^{2}}\) is a basis for bandlimited scalar processes anywhere on the surface of the unit sphere (Simons et al. 2006; Simons and Dahlen 2006). This follows directly from the fact that the spectral localization kernel (33b) is real, symmetric, and positive definite: its eigenvectors \(g_{1lm},g_{2lm},\ldots,g_{(L+1)^{2}lm}\) form an orthogonal set as we have seen. Thus the Slepian basis functions \(g_{\alpha }(\mathbf{\hat{r}})\), \(\alpha = 1,\ldots,(L + 1)^{2}\), given by Eq. (31) simply transform the same-sized limited set of spherical harmonics \(Y _{lm}(\mathbf{\hat{r}})\), \(0 \leq l \leq L\), \(-l \leq m \leq l\), that are a basis for the same space of bandlimited spherical functions with no power above the bandwidth L. The effect of this transformation is to order the resulting basis set such that the energy of the first \(N^{\mathrm{3D}}\) functions, \(g_{1}(\mathbf{\hat{r}}),\ldots,g_{N^{\mathrm{3D}}}(\mathbf{\hat{r}})\), with eigenvalues \(\lambda \approx 1\), is concentrated in the region R, whereas the remaining eigenfunctions, \(g_{N^{\mathrm{3D}}+1}(\mathbf{\hat{r}}),\ldots,g_{(L+1)^{2}}(\mathbf{\hat{r}})\), are concentrated in the complementary region \(\Omega \setminus R\). As in the one- and two-dimensional case, therefore, the reduced set of basis functions \(g_{1},g_{2},\ldots,g_{N^{\mathrm{3D}}}\) can be regarded as a sparse, global basis suitable to approximate bandlimited processes that are primarily localized to the region R. The dimensionality reduction is dependent on the fractional area of the region of interest. In other words, the full dimension of the space \((L + 1)^{2}\) can be “sparsified” to an effective dimension of \(N^{\mathrm{3D}} = (L + 1)^{2}A/(4\pi )\) when the signal of interest resides in a particular geographic region.
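The basis transformation described above can be made concrete in the simplest setting. The following is a minimal sketch, restricted by assumption to zonal (order m = 0) harmonics and to a polar cap of colatitudinal radius Θ, for which the localization kernel reduces to one-dimensional integrals of products of Legendre polynomials. The function name, the bandwidth L = 18, and the cap radius of 40° are hypothetical choices; general regions require the methods cited in the text.

```python
import numpy as np
from numpy.polynomial import legendre as npleg


def zonal_cap_kernel(L, theta_cap, n_nodes=128):
    """D_{ll'} = integral over a polar cap of Y_{l0} Y_{l'0} (m = 0 only)."""
    mu0 = np.cos(theta_cap)
    x, w = npleg.leggauss(n_nodes)
    mu = 0.5 * (1 - mu0) * x + 0.5 * (1 + mu0)  # map [-1, 1] to [mu0, 1]
    wq = 0.5 * (1 - mu0) * w
    P = npleg.legvander(mu, L)                  # P[i, l] = P_l(mu_i)
    # Orthonormal harmonics: Y_l0 = sqrt((2l+1)/(4 pi)) P_l; the longitude
    # integral contributes 2 pi, leaving the factor sqrt((2l+1)/2) per term
    X = P * np.sqrt((2 * np.arange(L + 1) + 1) / 2.0)
    return (X * wq[:, None]).T @ X


L = 18
theta_cap = np.deg2rad(40.0)

D = zonal_cap_kernel(L, theta_cap)
lam, G = np.linalg.eigh(D)
lam, G = lam[::-1], G[:, ::-1]      # best concentrated first

# Shannon number for all orders combined, N3D = (L+1)^2 A / (4 pi),
# with cap area A = 2 pi (1 - cos(theta_cap))
shannon = (L + 1) ** 2 * (1 - np.cos(theta_cap)) / 2.0

print(f"N3D for the cap (all orders) = {shannon:.2f}")
print("zonal eigenvalues:", np.round(lam, 4))
```

Because the columns of `G` are orthonormal, they merely rotate the L + 1 zonal harmonics into a new basis of the same space, ordered by concentration within the cap. Setting the cap radius to π returns the identity matrix, as orthonormality over the whole sphere requires.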

Numerical methods for the solution of Eqs. (33) and (34) on completely general domains on the surface of the sphere were discussed by us elsewhere (Simons et al. 2006; Simons and Dahlen 2006, 2007). An example of Slepian functions on a geographical domain on the surface of the sphere is found in Fig. 3.

Fig. 3. Bandlimited L = 60 eigenfunctions \(g_{1},g_{2},\ldots,g_{12}\) that are optimally concentrated within Antarctica. The concentration factors \(\lambda _{1},\lambda _{2},\ldots,\lambda _{12}\) are indicated; the rounded Shannon number is \(N^{\mathrm{3D}} = 102\). The order of concentration is left to right, top to bottom. Positive values are blue and negative values are red; the sign of an eigenfunction is arbitrary. Regions in which the absolute value is less than one hundredth of the maximum value on the sphere are left white. We integrated Eq. (33b) over a splined high-resolution representation of the boundary, using Gauss-Legendre quadrature over the colatitudes, and analytically in the longitudinal dimension (Simons and Dahlen 2007).

2.3.2 Sturm-Liouville Character and Tridiagonal Matrix Formulation

In the special but important case in which the region of concentration is a circularly symmetric cap of colatitudinal radius \(\Theta\), centered on the North Pole, the colatitudinal parts \(g(\theta )\) of the separable functions

which solve Eq. (34), or, indeed, the fixed-order versions

are identical to those of a Sturm-Liouville equation for the \(g(\theta )\). In terms of \(\mu =\cos \theta\),

with \(\chi \neq \lambda\). This equation can be solved in the spectral domain by diagonalization of a simple symmetric tridiagonal matrix with a very well-behaved spectrum (Simons et al. 2006; Simons and Dahlen 2007). This matrix, whose eigenvectors correspond to the \(g_{lm}\) of Eq. (31) at constant m, is given by

Moreover, when the region of concentration is a pair of axisymmetric polar caps of common colatitudinal radius \(\Theta\) centered on the North and South Pole, the \(g(\theta )\) can be obtained by solving the Sturm-Liouville equation

where \(L_{p} = L\) or \(L_{p} = L - 1\) depending on whether the order m of the functions \(g(\theta,\phi )\) in Eq. (39) is odd or even and whether the bandwidth L itself is odd or even. In their spectral form the coefficients of the optimally concentrated antipodal polar-cap eigenfunctions only require the numerical diagonalization of a symmetric tridiagonal matrix with analytically prescribed elements and a spectrum of eigenvalues that is guaranteed to be simple (Simons and Dahlen 2006, 2007), namely,

The concentration values \(\lambda\), in turn, can be determined from the defining Eqs. (33) or (34). The spectra of the eigenvalues χ of Eqs. (42) and (44) display roughly equant spacing, without the numerically troublesome plateaus of nearly equal values that characterize the eigenvalues \(\lambda\). Thus, for the special cases of symmetric single and double polar caps, the concentration problem posed in Eq. (32) is not only numerically feasible also in circumstances where direct solution methods are bound to fail (Albertella et al. 1999), but essentially trivial in every situation. In practical applications, the eigenfunctions that are optimally concentrated within a polar cap can be rotated to an arbitrarily positioned circular cap on the unit sphere using standard spherical harmonic rotation formulae (Edmonds 1996; Blanco et al. 1997; Dahlen and Tromp 1998).
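For the single polar cap, the commutation between the localization kernel and a tridiagonal matrix can be verified numerically. The sketch below is restricted by assumption to order m = 0 and takes the tridiagonal elements to be \(T_{ll} = -l(l + 1)\cos \Theta\) and \(T_{l\,l+1} = [l(l + 2) - L(L + 2)](l + 1)/\sqrt{(2l + 1)(2l + 3)}\); this form is an assumption on our part that should be checked against Eq. (42). The function names and parameter values are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as npleg


def zonal_cap_kernel(L, theta_cap, n_nodes=128):
    """Localization kernel for a polar cap at order m = 0."""
    mu0 = np.cos(theta_cap)
    x, w = npleg.leggauss(n_nodes)
    mu = 0.5 * (1 - mu0) * x + 0.5 * (1 + mu0)
    wq = 0.5 * (1 - mu0) * w
    X = npleg.legvander(mu, L) * np.sqrt((2 * np.arange(L + 1) + 1) / 2.0)
    return (X * wq[:, None]).T @ X


def zonal_cap_tridiagonal(L, theta_cap):
    """ASSUMED tridiagonal matrix for order m = 0 (see lead-in)."""
    l = np.arange(L + 1)
    diag = -l * (l + 1) * np.cos(theta_cap)
    lo = l[:-1]
    off = (lo * (lo + 2) - L * (L + 2)) * (lo + 1) / np.sqrt(
        (2 * lo + 1) * (2 * lo + 3)
    )
    return np.diag(diag) + np.diag(off, 1) + np.diag(off, -1)


L = 18
theta_cap = np.deg2rad(40.0)
D = zonal_cap_kernel(L, theta_cap)
T = zonal_cap_tridiagonal(L, theta_cap)

commutator = np.linalg.norm(D @ T - T @ D)

# Eigenvectors from the tridiagonal matrix; lambda as Rayleigh quotients
chi, G = np.linalg.eigh(T)
lam = np.einsum("ia,ij,ja->a", G, D, G)
lam_direct = np.linalg.eigvalsh(D)

print("norm of commutator     =", commutator)
print("smallest spacing, chi  =", np.min(np.diff(chi)))
print("smallest spacing, lam  =", np.min(np.diff(lam_direct)))
```

Comparing the two printed spacings illustrates why the diagonalization of the tridiagonal matrix is numerically preferable: its eigenvalues are well separated, whereas those of the kernel cluster near 0 and 1.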

2.4 Vectorial Slepian Functions on the Surface of a Sphere

2.4.1 General Theory in “Three” Vectorial Dimensions

The expansion of a real-valued square-integrable vector field \(\mathbf{f}(\mathbf{\hat{r}})\) on the unit sphere \(\Omega\) can be written as

using real vector surface spherical harmonics (Dahlen and Tromp 1998; Sabaka et al. 2010; Gerhards 2011) that are constructed from the scalar harmonics in Eq. (26), as follows. In the vector spherical coordinates \((\mathbf{\hat{r}},\boldsymbol{\hat{\theta }},\boldsymbol{\hat{\phi }})\) and using the surface gradient \(\boldsymbol{\nabla }_{1} =\boldsymbol{\hat{\theta }} \partial _{\theta } +\boldsymbol{\hat{\phi }} (\sin \theta )^{-1}\partial _{\phi }\), we write for \(l > 0\) and \(-l \leq m \leq l\),

together with the purely radial \(\mathbf{P}_{00} = (4\pi )^{-1/2}\mathbf{\hat{r}}\), and setting \(f_{00}^{B} = f_{00}^{C} = 0\) for every vector field f. The remaining expansion coefficients (45b) are naturally obtained from Eq. (45a) through the orthonormality relationships

The vector spherical-harmonic addition theorem (Freeden and Schreiner 2009) implies the limited result

As before we seek to maximize the spatial concentration of a bandlimited spherical vector function

within a certain region R, in the vectorial case by maximizing the energy ratio

The maximization of Eq. (52) leads to a coupled system of positive-definite spectral-domain eigenvalue equations, \(\mathrm{for}\,\,0 \leq l \leq L\,\,\mathrm{and}\, - l \leq m \leq l\),

Of the below matrix elements that complement the equations above, Eq. (54a) is identical to Eq. (33b),

and the transpose of Eq. (54c) switches its sign. The radial vectorial concentration problem (53a)–(54a) is identical to the corresponding scalar case (33) and can be solved separately from the tangential equations. Altogether, in the space domain, the equivalent eigenvalue equation is

a homogeneous Fredholm integral equation with a finite-rank, symmetric, separable, bandlimited kernel. Further reducing Eq. (55) using the full version of the vectorial addition theorem does not yield much additional insight.

After collecting the spheroidal (radial, consoidal) and toroidal expansion coefficients in a vector,

and the kernel elements \(D_{lm,l'm'}\), \(B_{lm,l'm'}\), and \(C_{lm,l'm'}\) of Eq. (54) into the submatrices \(\boldsymbol{\mathsf{D}}\), \(\boldsymbol{\mathsf{B}}\), and \(\boldsymbol{\mathsf{C}}\), we assemble

In this new notation Eq. (53) reads as an \([3(L + 1)^{2} - 2] \times [3(L + 1)^{2} - 2]\)-dimensional algebraic eigenvalue problem

whose eigenvectors \(\boldsymbol{\mathsf{g}}_{1},\boldsymbol{\mathsf{g}}_{2},\ldots,\boldsymbol{\mathsf{g}}_{3(L+1)^{2}-2}\) are mutually orthogonal in the sense

The associated eigenfields \(\mathbf{g}_{1}(\mathbf{\hat{r}}),\mathbf{g}_{2}(\mathbf{\hat{r}}),\ldots,\mathbf{g}_{3(L+1)^{2}-2}(\mathbf{\hat{r}})\) are orthogonal over the region R and orthonormal over the whole sphere \(\Omega\):

The relations (60) for the spatial domain are equivalent to their matrix counterparts (59). The eigenfield \(\mathbf{g}_{1}(\mathbf{\hat{r}})\) with the largest eigenvalue \(\lambda _{1}\) is the element in the space of bandlimited vector fields with most of its spatial energy within region R; the eigenfield \(\mathbf{g}_{2}(\mathbf{\hat{r}})\) is the next best-concentrated bandlimited function orthogonal to \(\mathbf{g}_{1}(\mathbf{\hat{r}})\) over both \(\Omega\) and R; and so on. Finally, as in the scalar case, we can sum up the eigenvalues of the matrix \(\boldsymbol{\mathsf{K}}\) to define a vectorial spherical Shannon number

To establish the last equality we used the relation (50). Given the decoupling of the radial from the tangential solutions that is apparent from Eq. (57), we may subdivide the vectorial spherical Shannon number into a radial and a tangential one. These are \(N^{r} = (L + 1)^{2}A/(4\pi )\) and \(N^{t} = [2(L + 1)^{2} - 2]A/(4\pi )\), respectively.
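The split into radial and tangential Shannon numbers is simple arithmetic, sketched below for a hypothetical polar cap of colatitudinal radius 20° at bandwidth L = 60. The function name and the choice of region are assumptions made only for illustration; any region enters solely through its fractional area \(A/(4\pi )\).

```python
import math


def vector_shannon_numbers(L, area):
    """Radial, tangential, and total vectorial Shannon numbers."""
    frac = area / (4.0 * math.pi)            # fractional area of the region
    n_radial = (L + 1) ** 2 * frac
    n_tangential = (2 * (L + 1) ** 2 - 2) * frac
    return n_radial, n_tangential, n_radial + n_tangential


L = 60
theta_cap = math.radians(20.0)
area = 2.0 * math.pi * (1.0 - math.cos(theta_cap))  # cap area on unit sphere

n_r, n_t, n_vec = vector_shannon_numbers(L, area)
print(f"radial: {n_r:.1f}, tangential: {n_t:.1f}, total: {n_vec:.1f}")
```

The total equals \([3(L + 1)^{2} - 2]A/(4\pi )\), the dimension of the algebraic eigenvalue problem scaled by the fractional area.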

Numerical solution methods were discussed by Plattner and Simons (2014). An example of tangential vectorial Slepian functions on a geographical domain on the surface of the sphere is found in Fig. 4.

Fig. 4. Twelve tangential Slepian functions \(\mathbf{g}_{1},\mathbf{g}_{2},\ldots,\mathbf{g}_{12}\), bandlimited to L = 60, optimally concentrated within Australia. The concentration factors \(\lambda _{1},\lambda _{2},\ldots,\lambda _{12}\) are indicated. The rounded tangential Shannon number \(N^{t} = 112\). Order of concentration is left to right, top to bottom. Color is absolute value (red the maximum) and circles with strokes indicate the direction of the eigenfield on the tangential plane. Regions in which the absolute value is less than one hundredth of the maximum absolute value on the sphere are left white.

2.4.2 Sturm-Liouville Character and Tridiagonal Matrix Formulation

When the region of concentration R is a symmetric polar cap with colatitudinal radius \(\Theta\) centered on the north pole, special rules apply that greatly facilitate the construction of the localization kernel (57). There are reductions of Eq. (54) to some very manageable integrals that can be carried out exactly by recursion. Solutions for the polar cap can be rotated anywhere on the unit sphere using the same transformations that apply in the rotation of scalar functions (Edmonds 1996; Blanco et al. 1997; Dahlen and Tromp 1998; Freeden and Schreiner 2009).

In the axisymmetric case the matrix elements (54a)–(54c) reduce to

using the derivative notation \(X'_{lm} = dX_{lm}/d\theta\) for the normalized associated Legendre functions of Eq. (27). Equation (66) can be easily evaluated. The integrals over the product terms \(X_{lm}X_{l'm}\) in Eq. (64) can be rewritten using Wigner 3j symbols (Wieczorek and Simons 2005; Simons et al. 2006; Plattner and Simons 2014) to simple integrals over \(X_{l\,2m}\) or \(X_{l0}\) which can be handled recursively (Paul 1978). Finally, in Eq. (65) integrals of the type \(X'_{lm}X'_{l'm}\) and \(m^{2}(\sin \theta )^{-2}X_{lm}X_{l'm}\) can be rewritten as integrals over undifferentiated products of Legendre functions (Ilk 1983; Eshagh 2009; Plattner and Simons 2014). All in all, these computations are straightforward to carry out and lead to block-diagonal matrices at constant order m, which are relatively easily diagonalized.

As this chapter went to press, Jahn and Bokor (2014) reported the exciting discovery of a differential operator that commutes with the tangential part of the concentration operator (55), and a tridiagonal matrix formulation for the tangential vectorial concentration problem to axisymmetric domains. They achieve this feat by a change of basis by which to reduce the vectorial problem to a scalar one that is separable in \(\theta\) and ϕ, using the special functions \(X'_{lm} \pm m(\sin \theta )^{-1}X_{lm}\) (Sheppard and Török 1997). Hence they derive a commuting differential operator and a corresponding spectral matrix for the concentration problem. By their approach, the solutions to the fixed-order tangential concentration problem are again solutions to a Sturm-Liouville problem with a very simple eigenvalue spectrum, and the calculations are always fast and stable, much as they are for the radial problem which completes the construction of vectorial Slepian functions on the sphere.

2.5 Midterm Summary

It is interesting to reflect, however heuristically, on the commonality of all of the above aspects of spatiospectral localization, in the slightly expanded context of reproducing-kernel Hilbert spaces (Yao 1967; Nashed and Walter 1991; Daubechies 1992; Amirbekyan et al. 2008; Kennedy and Sadeghi 2013). In one dimension, the Fourier orthonormality relation and the “reproducing” properties of the spatial delta function are given by

In two Cartesian dimensions the equivalent relations are

and on the surface of the unit sphere we have, for the scalar case,

and for the vector case, we have the sum of dyads

The integral-equation kernels (5b), (15b), (34b), and (55b) are all bandlimited spatial delta functions which are reproducing kernels for bandlimited functions of the types in Eqs. (2), (12), (31), and (51):

The equivalence of Eq. (71) with Eq. (5b) is through the Euler identity, and the reproducing properties follow from the spectral forms of the orthogonality relations (67) and (68), which are self-evident by change of variables, and from the spectral form of Eq. (69), which is Eq. (29). Much as the delta functions of Eqs. (67)–(70) set up the Hilbert spaces of all square-integrable functions on the real line, in two-dimensional Cartesian space and on the surface of the sphere (both scalar and vector functions), the kernels (71) and (74) induce the equivalent subspaces of bandlimited functions in their respective dimensions. Inasmuch as the Slepian functions are the integral eigenfunctions of these reproducing kernels in the sense of Eqs. (5a), (15a), (34a), and (55a), they are complete bases for their bandlimited subspaces (Slepian and Pollak 1961; Landau and Pollak 1961; Daubechies 1992; Flandrin 1998; Freeden et al. 1998; Plattner and Simons 2014). Therein, the \(N^{\mathrm{1D}}\), \(N^{\mathrm{2D}}\), \(N^{\mathrm{3D}}\), or \(N^{\mathrm{vec}}\) best time- or space-concentrated members allow for sparse, approximate expansions of signals that are spatially concentrated to the one-dimensional interval \(t \in [-T,T] \subset \mathbb{R}\), the Cartesian region \(\mathbf{x} \in \mathcal{R}\subset \mathbb{R}^{2}\), or the spherical surface patch \(\mathbf{\hat{r}} \in R \subset \Omega\).

As a corollary to this behavior, the infinite sets of exactly time- or spacelimited (and thus band-concentrated) versions of the functions \(g\) and \(\mathbf{g}\), which are the eigenfunctions of Eqs. (5), (15), (34), and (55) with the domains appropriately restricted, are complete bases for square-integrable scalar or vector functions on the intervals to which they are confined (Slepian and Pollak 1961; Landau and Pollak 1961; Simons et al. 2006; Plattner and Simons 2013). Expansions of such wideband signals in the small subset of their \(N^{\mathrm{1D}}\), \(N^{\mathrm{2D}}\), \(N^{\mathrm{3D}}\), or \(N^{\mathrm{vec}}\) most band-concentrated members provide reconstructions which are constructive in the sense that they progressively capture all of the signal in the mean-squared sense, in the limit of letting their numbers grow to infinity. This second class of functions can be trivially obtained, up to a multiplicative constant, from the bandlimited Slepian functions \(g\) and \(\mathbf{g}\) by simple time- or space limitation. While Slepian (Slepian and Pollak 1961; Slepian 1983), for this reason perhaps, never gave them a name, we have been referring to those as \(h\) (and \(\mathbf{h}\)) in our own investigations of similar functions on the sphere (Simons et al. 2006; Simons and Dahlen 2006; Dahlen and Simons 2008; Plattner and Simons 2013).

3 Problems in the Geosciences and Beyond

Taking all of the above at face value but referring again to the literature cited thus far for proof and additional context, we return to considerations closer to home, namely, the estimation of geophysical (or cosmological) signals and/or their power spectra, from noisy and incomplete observations collected at or above the surface of the spheres “Earth” or “planet” (or from inside the sphere “sky”). We restrict ourselves to real-valued scalar measurements, contaminated by additive noise for which we shall adopt idealized models. We focus exclusively on data acquired and solutions expressed on the unit sphere. Elsewhere (Simons and Dahlen 2006; Simons et al. 2009) we have considered generalizations to problems involving satellite potential-field data collected at altitude. We furthermore note that descriptions of the scalar gravitational and magnetic potential may be sufficient to capture the behavior of the corresponding gravity and magnetic vector fields, but that with vectorial Slepian functions, versatile and demanding satellite data analysis problems can be handled robustly even in the presence of noise that may be strongly heterogeneous spatially and/or over the individual vector components.

Speaking quite generally, the two different statistical problems that arise when geomathematical scalar spherical data are being studied are, (i) how to find the “best” estimate of the signal given the data and (ii) how to construct from the data the “best” estimate of the power spectral density of the signal in question. There are problems intermediate between either case, for instance, those that utilize the solutions to problems of the kind (i) to make inference about the power spectral density without properly solving any problems of kind (ii). Mostly such scenarios, e.g., in localized geomagnetic field analysis (Beggan et al. 2013), are born out of necessity or convenience. We restrict our analysis to the “pure” end-member problems.

Thus, let there be some real-valued scalar data distributed on the unit sphere, consisting of “signal,” s and “noise,” n, and let there be some region of interest \(R \subset \Omega\); in other words, let

We assume that the signal of interest can be expressed by way of spherical harmonic expansion as in Eq. (25), and that it is, itself, a realization of a zero-mean, Gaussian, isotropic, random process, namely,

For illustration we furthermore assume that the noise is a zero-mean stochastic process with an isotropic power spectrum, i.e., \(\langle n(\mathbf{\hat{r}})\rangle = 0\) and \(\langle n_{lm}n_{l'm'}\rangle = N_{l}\,\delta _{ll'}\delta _{mm'}\), and that it is statistically uncorrelated with the signal. We refer to power as white when \(S_{l} = S\) or \(N_{l} = N\), or, equivalently, when \(\langle n(\mathbf{\hat{r}})n(\mathbf{\hat{r}}')\rangle = N\delta (\mathbf{\hat{r}},\mathbf{\hat{r}}')\). Our objectives are thus (i) to determine the best estimate \(\hat{s}_{lm}\) of the spherical harmonic expansion coefficients \(s_{lm}\) of the signal and (ii) to find the best estimate \(\hat{S}_{l}\) for the isotropic power spectral density \(S_{l}\). While in the physical world there can be no limit on bandwidth, practical restrictions force any and all of our estimates to be bandlimited to some maximum spherical harmonic degree L, thus by necessity \(\hat{s}_{lm} = 0\) and \(\hat{S}_{l} = 0\) for l > L:

This limitation, combined with the statements of Eq. (75) on the data coverage or the study region of interest, naturally leads us back to the concept of “spatiospectral concentration,” and, as we shall see, solving either problem (i) or (ii) will gain from involving the “localized” scalar Slepian functions rather than, or in addition to, the “global” spherical harmonics basis.

This leaves us to clarify what we understand by “best” in this context. While we adopt the traditional statistical metrics of bias, variance, and mean-squared error to appraise the quality of our solutions (Cox and Hinkley 1974; Bendat and Piersol 2000), the resulting connections to sparsity will be real and immediate, owing to the Slepian functions being naturally instrumental in constructing efficient, consistent, and/or unbiased estimates of either \(\hat{s}_{lm}\) or \(\hat{S}_{l}\). Thus, we define

for problem (i), where the lack of subscript indicates that we can study variance, bias, and mean-squared error of the estimate of the coefficients \(\hat{s}_{lm}\) but also of their spatial expansion \(\hat{s}(\mathbf{\hat{r}})\). For problem (ii) on the other hand, we focus on the estimate of the isotropic power spectrum at a given spherical harmonic degree l by identifying

Depending on the application, the “best” estimate could mean the unbiased one with the lowest variance (Tegmark 1997; Tegmark et al. 1997; Bond et al. 1998; Oh et al. 1999; Hinshaw et al. 2003), it could be simply the minimum-variance estimate having some acceptable and quantifiable bias (Wieczorek and Simons 2007), or, as we would usually prefer, it would be the one with the minimum mean-squared error (Simons and Dahlen 2006; Dahlen and Simons 2008).

3.1 Problem (i): Signal Estimation from Spherical Data

3.1.1 Spherical Harmonic Solution

Paraphrasing results elaborated elsewhere (Simons and Dahlen 2006), we write the bandlimited solution to the damped inverse problem

where η ≥ 0 is a damping parameter, by straightforward algebraic manipulation, as

where \(\bar{D}_{lm,l'm'}\), the kernel that localizes to the region \(\bar{R} = \Omega \setminus R\), complements \(D_{lm,l'm'}\) given by Eq. (33b) which localizes to R. Given the eigenvalue spectrum of the latter, its inversion is inherently unstable, thus Eq. (80) is an ill-conditioned inverse problem unless η > 0, as has been well known, e.g., in geodesy (Xu 1992; Sneeuw and van Gelderen 1997). Elsewhere (Simons and Dahlen 2006) we have derived exact expressions for the optimal value of the damping parameter η as a function of the signal-to-noise ratio under certain simplifying assumptions. As can be easily shown, without damping the estimate is unbiased but effectively incomputable; the introduction of the damping term stabilizes the solution at the cost of added bias. And of course when \(R = \Omega\), Eq. (81) is simply the spherical harmonic transform, as in that case, Eq. (33b) reduces to Eq. (29), in other words, then \(D_{lm,l'm'} =\delta _{ll'}\delta _{mm'}\).
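The stabilizing effect of the damping term can be illustrated with a small numerical sketch. It assumes, as the text suggests but the equations above do not spell out here, that the damped normal operator takes the form \(\mathbf{D} + \eta \bar{\mathbf{D}}\) with \(\bar{\mathbf{D}} = \mathbf{I} -\mathbf{D}\). The eigenvalues, the random eigenvector matrix `Q`, and the value of `eta` are all hypothetical choices made for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical concentration eigenvalues, from well to poorly concentrated
lam = np.array([0.999, 0.9, 0.5, 1e-2, 1e-6])

# Random orthogonal matrix standing in for the eigenvectors of the kernel
Q, _ = np.linalg.qr(rng.standard_normal((lam.size, lam.size)))

D = Q @ np.diag(lam) @ Q.T       # toy localization kernel for the region R
D_bar = np.eye(lam.size) - D     # toy kernel for the complement of R (assumed I - D)

eta = 0.1                        # illustrative damping parameter
cond_undamped = np.linalg.cond(D)
cond_damped = np.linalg.cond(D + eta * D_bar)

print(f"condition number without damping: {cond_undamped:.3e}")
print(f"condition number with eta = {eta}: {cond_damped:.3e}")
```

Under these assumptions the eigenvalues of the damped operator are \(\lambda +\eta (1-\lambda )\), so the smallest one is lifted from near zero to near η: the condition number drops from roughly 10^6 to about 10 in this toy case.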

3.1.2 Slepian Basis Solution

The trial solution in the Slepian basis designed for this region of interest R, i.e.,

would be completely equivalent to the expression in Eq. (77) by virtue of the completeness of the Slepian basis for bandlimited functions everywhere on the sphere and the unitarity of the transform (31) from the spherical harmonic to the Slepian basis. The solution to the undamped (η = 0) version of Eq. (80) would then be

which, being completely equivalent to Eq. (81) for η = 0, would be computable and biased, only when the expansion in Eq. (82) were to be truncated to some finite \(J < (L + 1)^{2}\) to prevent the blowup of the eigenvalues \(\lambda\). Assuming for simplicity of the argument that \(J = N^{\mathrm{3D}}\), the essence of the approach is now that the solution

will be sparse (in achieving a bandwidth L using \(N^{\mathrm{3D}}\) Slepian instead of \((L + 1)^{2}\) spherical-harmonic expansion coefficients) yet good (in approximating the signal as well as possible in the mean-squared sense in the region of interest R) and of geophysical utility (assuming we are dealing with spatially localized processes that are to be extracted, e.g., from global satellite measurements) as shown by Han and Simons (2008), Simons et al. (2009), and Harig and Simons (2012).

3.2 Bias and Variance

In concluding this section let us illustrate another welcome by-product of our methodology, by writing the mean-squared error for the spherical harmonic solution (81) compared to the equivalent expression for the solution in the Slepian basis, Eq. (83). We do this as a function of the spatial coordinate, in the Slepian basis for both, and, for maximum clarity of the exposition, using the contrived case when both signal and noise should be white (with power S and N, respectively) as well as bandlimited (which technically is impossible). In the former case, we get

while in the latter, we obtain

All \((L + 1)^{2}\) basis functions are required to express the mean-squared estimation error, whether in Eq. (85) or in Eq. (86). The first term in both expressions is the variance, which depends on the measurement noise. Without damping or truncation the variance grows without bounds. Damping and truncation alleviate this at the expense of added bias, which depends on the characteristics of the signal, as given by the second term. In contrast to Eq. (85), however, the Slepian expression (86) has disentangled the contributions due to noise/variance and signal/bias by projecting them onto the sparse set of well-localized and the remaining set of poorly localized Slepian functions, respectively. The estimation variance is felt via the basis functions \(\alpha = 1 \rightarrow N^{\mathrm{3D}}\) that are well concentrated inside the measurement area, and the effect of the bias is relegated to those \(\alpha = N^{\mathrm{3D}} + 1 \rightarrow (L + 1)^{2}\) functions that are confined to the region of missing data.

When forming a solution to problem (i) in the Slepian basis by truncation according to Eq. (84), changing the truncation level J to values lower or higher than the Shannon number \(N^{\mathrm{3D}}\) amounts to navigating the trade-off space between variance, bias (or “resolution”), and sparsity in a manner that is captured with great clarity by Eq. (86). We refer the reader elsewhere (Simons and Dahlen 2006, 2007) for more details, and, in particular, for the case of potential fields estimated from data collected at satellite altitude, treated in detail in chapter Potential-Field Estimation Using Scalar and Vector Slepian Functions at Satellite Altitude of this book.

3.3 Problem (ii): Power Spectrum Estimation from Spherical Data

Following Dahlen and Simons (2008) we will find it convenient to regard the data \(d(\mathbf{\hat{r}})\) given in Eq. (75) as having been multiplied by a unit-valued boxcar window function confined to the region R,

The power spectrum of the boxcar window (87) is

3.3.1 The Spherical Periodogram

Should we decide that an acceptable estimate of the power spectral density of the available data is nothing else but the weighted average of its spherical harmonic expansion coefficients, we would be forming the spherical analogue of what Schuster (1898) named the “periodogram” in the context of time series analysis, namely,

3.3.2 Bias of the Periodogram

Upon doing so we would discover that the expected value of such an estimator would be the biased quantity

where, as it is known in astrophysics and cosmology (Peebles 1973; Hauser and Peebles 1973; Hivon et al. 2002), the periodogram “coupling” matrix

governs the extent to which an estimate \(\hat{S}_{l}^{\mathrm{SP}}\) of \(S_{l}\) is influenced by spectral leakage from power in neighboring spherical harmonic degrees l′ = l ± 1, l ± 2, …, all the way down to 0 and up to \(\infty\). In the case of full data coverage, \(R = \Omega\), or of a perfectly white spectrum, \(S_{l} = S\), however, the estimate would be unbiased – provided the noise spectrum, if known, can be subtracted beforehand.

3.3.3 Variance of the Periodogram

The covariance of the periodogram estimator (89) would moreover be suffering from strong wideband coupling of the power spectral densities in being given by

Even under the commonly made assumption that the power spectrum is slowly varying within the main lobe of the coupling matrix, such coupling would be nefarious. In the “locally white” case we would have

Only in the limit of whole-sphere data coverage will Eqs. (92) or (93) reduce to

which is the “planetary” or “cosmic” variance that can be understood on the basis of elementary statistical considerations (Jones 1963; Knox 1995; Grishchuk and Martin 1997). The strong spectral leakage for small regions (A ≪ 4π) is highly undesirable and makes the periodogram “hopelessly obsolete” (Thomson and Chave 1991), or, to put it kindly, “naive” (Percival and Walden 1993), just as it is for one-dimensional time series.

In principle it is possible – after subtraction of the noise bias – to eliminate the leakage bias in the periodogram estimate (89) by numerical inversion of the coupling matrix \(K_{ll'}\). Such a “deconvolved periodogram” estimator is unbiased. However, its covariance depends on inverting the periodogram coupling matrix, which is only feasible when the region R covers most of the sphere, A ≈ 4π. For any region whose area A is significantly smaller than 4π, the periodogram coupling matrix (91) will be too ill-conditioned to be invertible.

Thus, much like in problem (i) we are faced with bad bias and poor variance, both of which are controlled by the lack of localization of the spherical harmonics and their non-orthogonality over incomplete subdomains of the unit sphere. Both effects are described by the spatiospectral localization kernel defined in (33b), which, in the quadratic estimation problem (ii), appears in “squared” form in Eq. (92). Undoing the effects of the wideband coupling between degrees at which we seek to estimate the power spectral density by inversion of the coupling kernel is virtually impossible, and even if we could accomplish this to remove the estimation bias, this would much inflate the estimation variance (Dahlen and Simons 2008).

3.3.4 The Spherical Multitaper Estimate

We therefore take a page out of the one-dimensional power estimation playbook of Thomson (1982) by forming the “eigenvalue-weighted multitaper estimate.” We could weight single-taper estimates adaptively to minimize quality measures such as estimation variance or mean-squared error (Thomson 1982; Wieczorek and Simons 2007), but in practice, these methods tend to be rather computationally demanding. Instead we simply multiply the data \(d(\mathbf{\hat{r}})\) by the Slepian functions or “tapers” \(g_{\alpha }(\mathbf{\hat{r}})\) designed for the region of interest prior to computing power and then averaging:

3.3.5 Bias of the Multitaper Estimate

The expected value of the estimate (95) is

where the eigenvalue-weighted multitaper coupling matrix, using Wigner 3-j functions (Varshalovich et al. 1988; Messiah 2000), is given by

It is remarkable that this result depends only upon the chosen bandwidth L and is completely independent of the size, shape, or connectivity of the region R, even as \(R = \Omega\). Moreover, every row of the matrix in Eq. (97) sums to unity, which ensures that the multitaper spectral estimate \(\hat{S}_{l}^{\mathrm{MT}}\) has no leakage bias in the case of a perfectly white spectrum provided the noise bias is subtracted as well: \(\langle \hat{S}_{l}^{\mathrm{MT}}\rangle -\sum M_{ll'}N_{l'} = S\) if \(S_{l} = S\).

3.3.6 Variance of the Multitaper Estimate

Under the moderately colored approximation, which is more easily justified in this case because the coupling (97) is confined to a narrow band of width less than or equal to 2L + 1, with L the bandwidth of the tapers, the eigenvalue-weighted multitaper covariance is

where, using Wigner 3-j and 6-j functions (Varshalovich et al. 1988; Messiah 2000),

In this expression \(B_{e}\), the boxcar power (88), which we note does depend on the shape of the region of interest, appears again, summed over angular degrees limited by 3-j selection rules to 0 ≤ e ≤ 2L. The sum in Eq. (98) is likewise limited to degrees 0 ≤ p ≤ 2L. The effect of tapering with windows bandlimited to L is to introduce covariance between the estimates at any two different degrees l and l′ that are separated by fewer than 2L + 1 degrees. Equations (98) and (99) are very efficiently computable, which should make them competitive with, e.g., jackknifed estimates of the estimation variance (Chave et al. 1987; Thomson and Chave 1991; Thomson 2007).

The crux of the analysis lies in the fact that the matrix of the spectral covariances between single-tapered estimates is almost diagonal (Wieczorek and Simons 2007), showing that the individual estimates that enter the weighted formula (95) are almost uncorrelated statistically. This embodies the very essence of the multitaper method. It dramatically reduces the estimation variance at the cost of small increases of readily quantifiable bias.
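The variance reduction can be made tangible with a one-dimensional analogue in the spirit of Thomson (1982). The sketch below is not the spherical estimator of Eq. (95): it builds discrete Slepian sequences by direct eigendecomposition of a sinc concentration kernel, then compares the untapered periodogram of a white-noise series with an eigenvalue-weighted multitaper estimate. The sample size `N`, half-bandwidth `W`, and the choice to keep `K = 2NW - 1` tapers are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(2)
N = 256                   # number of samples
W = 4.0 / N               # half-bandwidth in cycles per sample
K = int(2 * N * W) - 1    # tapers kept, just below the 1-D Shannon number 2NW

# Sinc concentration kernel sin(2 pi W (j-k)) / (pi (j-k)); its eigenvectors
# are discrete Slepian sequences. Note np.sinc(x) = sin(pi x) / (pi x).
n = np.arange(N)
diff = n[:, None] - n[None, :]
kernel = 2.0 * W * np.sinc(2.0 * W * diff)

lam, g = np.linalg.eigh(kernel)               # ascending eigenvalues
lam, g = lam[::-1][:K], g[:, ::-1][:, :K]     # K best-concentrated, unit-norm tapers

x = rng.standard_normal(N)                    # white noise with unit power

# Periodogram: untapered (boxcar-windowed) estimate
periodogram = np.abs(np.fft.rfft(x)) ** 2 / N

# Single-taper estimates, one column per taper, then eigenvalue-weighted average
single = np.abs(np.fft.rfft(g * x[:, None], axis=0)) ** 2
multitaper = single @ lam / lam.sum()

print("variance across frequency, periodogram:", periodogram.var())
print("variance across frequency, multitaper :", multitaper.var())
```

For white noise both estimates scatter around the true unit power, but the multitaper estimate does so with visibly smaller variance, because it averages several nearly uncorrelated single-taper estimates. Direct diagonalization of the kernel is adequate for a sketch; the leading eigenvalues are nearly degenerate, which is one reason practical codes tend to rely on commuting-operator formulations instead.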

4 Practical Considerations

In this section we now turn to the very practical context of sampled, e.g., geodetic, data on the sphere. We shall deal exclusively with bandlimited scalar functions, which are equally well expressed in the spherical harmonic as the Slepian basis, namely:

whereby the Slepian-basis expansion coefficients are obtained as

If the function of interest is spatially localized in the region R, a truncated reconstruction using Slepian functions built for the same region will constitute a very good, and sparse, local approximation to it (Simons et al. 2009):

We represent any sampled, bandlimited function f by an M-dimensional column vector

where \(f_{j} = f(\mathbf{\hat{r}}_{j})\) is the value of f at pixel j, and M is the total number of sampling locations. In the most general case the distribution of pixel centers will be completely arbitrary (Hesse et al. 2010). The special case of equal-area pixelization of a 2-D function \(f(\mathbf{\hat{r}})\) on the unit sphere \(\Omega\) is analogous to the equispaced digitization of a 1-D time series. Integrals will then be assumed to be approximated with sufficient accuracy by a Riemann sum over a dense set of pixels,

We have deliberately left the integration domain out of the above equations to cover both the cases of sampling over the entire unit sphere surface \(\Omega\), in which case the solid angle \(\Delta \Omega = 4\pi /M\) (case 1) as well as over an incomplete subdomain \(R \subset \Omega\), in which case \(\Delta \Omega = A/M\), with A the area of the region R (case 2). If we collect the real spherical harmonic basis functions \(Y_{lm}\) into an \((L + 1)^{2} \times M\)-dimensional matrix

and the spherical harmonic coefficients of the function into an \((L + 1)^{2} \times 1\)-dimensional vector

we can write the spherical harmonic synthesis in Eq. (100) for sampled data without loss of generality as

We will adhere to the notation convention of using sans-serif fonts (e.g., \(\boldsymbol{\mathsf{f}}\), \(\boldsymbol{\mathsf{Y}}\)) for vectors or matrices that depend on at least one spatial variable, and serifed fonts (e.g., f, D) for those that are entirely composed of “spectral” quantities. In the case of dense, equal-area, whole-sphere sampling, we have an approximation to Eq. (29):

where the elements of the \((L + 1)^{2} \times (L + 1)^{2}\)-dimensional spectral identity matrix I are given by the Kronecker deltas \(\delta _{ll'}\delta _{mm'}\). In the case of dense, equal-area sampling over some closed region R, we find instead an approximation to the \((L + 1)^{2} \times (L + 1)^{2}\)-dimensional “spatiospectral localization matrix”:

where the elements of D are those defined in Eq. (33b).
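A minimal numerical check of these two Riemann-sum approximations follows. It assumes a Fibonacci lattice as a stand-in for a dense, approximately equal-area pixelization, a bandwidth of L = 1 with explicitly written orthonormal real harmonics, and a polar cap of colatitudinal radius 40° as the region R. The helper names `fibonacci_sphere` and `real_harmonics_L1` are hypothetical.

```python
import numpy as np

def fibonacci_sphere(M):
    """Quasi-equal-area points on the unit sphere (Fibonacci lattice)."""
    i = np.arange(M)
    z = 1.0 - (2.0 * i + 1.0) / M                  # equal-area bands in z = cos(theta)
    phi = 2.0 * np.pi * i / ((1.0 + np.sqrt(5.0)) / 2.0)
    s = np.sqrt(1.0 - z**2)
    return s * np.cos(phi), s * np.sin(phi), z

def real_harmonics_L1(x, y, z):
    """Orthonormal real spherical harmonics up to degree L = 1, shape (4, M)."""
    c0 = np.sqrt(1.0 / (4.0 * np.pi))
    c1 = np.sqrt(3.0 / (4.0 * np.pi))
    return np.vstack([c0 * np.ones_like(z), c1 * y, c1 * z, c1 * x])

M = 20000
x, y, z = fibonacci_sphere(M)
Y = real_harmonics_L1(x, y, z)       # (L + 1)^2 x M matrix of sampled harmonics
dOmega = 4.0 * np.pi / M             # solid angle per pixel

# Case 1, whole-sphere sampling: Y Y^T dOmega approximates the identity
I_approx = Y @ Y.T * dOmega

# Case 2, regional sampling: only the pixels inside the polar cap contribute
in_cap = z >= np.cos(np.deg2rad(40.0))
Y_R = Y[:, in_cap]
D_approx = Y_R @ Y_R.T * dOmega      # approximate localization matrix

# The trace of D should equal the Shannon number (L + 1)^2 A / (4 pi)
area = in_cap.sum() * dOmega
shannon = 4.0 * area / (4.0 * np.pi)

print(np.round(I_approx, 4))
print("trace of D:", np.trace(D_approx), " Shannon number:", shannon)
```

The regional matrix is no longer close to the identity, which is the discrete expression of the non-orthogonality of the spherical harmonics over an incomplete subdomain.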

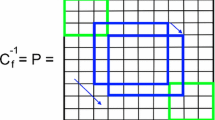

Let us now introduce the \((L + 1)^{2} \times (L + 1)^{2}\)-dimensional matrix of spectral Slepian eigenfunctions by

This is the matrix that contains the eigenfunctions of the problem defined in Eq. (33), which we rewrite as

where the diagonal matrix with the concentration eigenvalues is given by

The spectral orthogonality relations of Eq. (35) are

where the elements of the \((L + 1)^{2} \times (L + 1)^{2}\)-dimensional Slepian identity matrix I are given by the Kronecker deltas \(\delta _{\alpha \beta }\). We write the Slepian functions of Eq. (31) as

where the \((L + 1)^{2} \times M\)-dimensional matrix holding the sampled spatial Slepian functions is given by

Under a dense, equal-area, whole-sphere sampling, we will recover the spatial orthogonality of Eq. (36) approximately as

whereas for dense, equal-area sampling over a region R we will get, instead,

With this matrix notation we shall revisit both estimation problems of the previous section.
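The chain from the localization matrix to the sampled Slepian functions can be sketched numerically as follows. The sketch assumes a Fibonacci lattice for the sampling, a bandwidth of L = 2 with explicitly written orthonormal real harmonics, a 40° polar cap as the region, and the convention that the sampled Slepian functions are obtained by multiplying the transposed eigenvector matrix into the sampled harmonics. The helper names and the variable `G_spec` for the spectral eigenvector matrix are hypothetical.

```python
import numpy as np

def fibonacci_sphere(M):
    """Quasi-equal-area points on the unit sphere (Fibonacci lattice)."""
    i = np.arange(M)
    z = 1.0 - (2.0 * i + 1.0) / M
    phi = 2.0 * np.pi * i / ((1.0 + np.sqrt(5.0)) / 2.0)
    s = np.sqrt(1.0 - z**2)
    return s * np.cos(phi), s * np.sin(phi), z

def real_harmonics_L2(x, y, z):
    """Orthonormal real spherical harmonics up to degree L = 2, shape (9, M)."""
    a = 1.0 / (4.0 * np.pi)
    return np.vstack([
        np.sqrt(a) * np.ones_like(z),
        np.sqrt(3 * a) * y, np.sqrt(3 * a) * z, np.sqrt(3 * a) * x,
        np.sqrt(15 * a) * x * y, np.sqrt(15 * a) * y * z,
        np.sqrt(5 * a / 4) * (3 * z**2 - 1),
        np.sqrt(15 * a) * x * z, np.sqrt(15 * a / 4) * (x**2 - y**2),
    ])

M = 20000
x, y, z = fibonacci_sphere(M)
Y = real_harmonics_L2(x, y, z)
dOmega = 4.0 * np.pi / M
in_cap = z >= np.cos(np.deg2rad(40.0))      # region R: a 40-degree polar cap

# Discrete localization matrix and its eigendecomposition D G = G Lambda
D = Y[:, in_cap] @ Y[:, in_cap].T * dOmega
lam, G_spec = np.linalg.eigh(D)
order = np.argsort(lam)[::-1]               # sort by decreasing concentration
lam, G_spec = lam[order], G_spec[:, order]  # columns are spectral eigenvectors

# Sampled spatial Slepian functions, one per row
G = G_spec.T @ Y

whole = G @ G.T * dOmega                           # close to the identity
regional = G[:, in_cap] @ G[:, in_cap].T * dOmega  # close to diag(lam)

print("eigenvalues:", np.round(lam, 4))
print("sum of eigenvalues:", lam.sum())
```

The eigenvalues fall between zero and one and sum to the Shannon number, so only the first one or two functions are well concentrated in a cap this small at this low bandwidth.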

4.1 Problem (i), Revisited

4.1.1 Spherical Harmonic Solution

If we treat Eq. (107) as a noiseless inverse problem in which the sampled data \(\boldsymbol{\mathsf{f}}\) are given but from which the coefficients f are to be determined, we find that for dense, equal-area, whole-sphere sampling, the solution

is simply the discrete approximation to the spherical harmonic analysis formula (25). For dense, equal-area, regional sampling we need to calculate

Both of these cases are simply the relevant solutions to the familiar overdetermined spherical harmonic inversion problem (Kaula 1967; Menke 1989; Aster et al. 2005) for discretely sampled data, i.e., the least-squares solution to Eq. (107),

for the particular cases described by Eqs. (108) and (109). In Eq. (119) we furthermore recognize the discrete version of Eq. (81) with η = 0, the undamped solution to the minimum mean-squared error inverse problem posed in continuous form in Eq. (80). From the continuous limiting case Eq. (81), we thus discover the general form that damping should take in regularizing the ill-conditioned inverse required in Eqs. (119) and (120). Its principal property is that it differs from the customary ad hoc practice of adding small values on the diagonal only. Finally, in the most general and admittedly most commonly encountered case of randomly scattered data, we require the Moore-Penrose pseudo-inverse

which is constructed by inverting the singular value decomposition (svd) of \(\boldsymbol{\mathsf{Y}}^{\mathsf{T}}\) with its singular values truncated beyond where they fall below a certain threshold (Xu 1998). Solving Eq. (121) by truncated svd is equivalent to inverting a truncated eigenvalue expansion of the normal matrix \(\boldsymbol{\mathsf{Y}}\boldsymbol{\mathsf{Y}}^{\mathsf{T}}\) as it appears in Eq. (120), as can be easily shown.
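The stated equivalence between the truncated svd and the truncated eigenvalue expansion of the normal matrix is easy to verify numerically. The sketch below uses a random matrix as a stand-in for the transposed harmonics matrix and random numbers as a stand-in for the data; the sizes `M`, `K`, and the number `k` of retained singular values are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(1)
M, K, k = 60, 6, 4                      # samples, unknowns, retained singular values
Yt = rng.standard_normal((M, K))        # stands in for Y^T (M x K), hypothetical values
f = rng.standard_normal(M)              # stands in for the scattered data

# Route 1: truncated-svd pseudo-inverse of Y^T applied to the data
U, s, Vt = np.linalg.svd(Yt, full_matrices=False)
coef_svd = Vt[:k].T @ ((U[:, :k].T @ f) / s[:k])

# Route 2: truncated eigenvalue expansion of the normal matrix Y Y^T
w, V = np.linalg.eigh(Yt.T @ Yt)        # ascending eigenvalues, equal to s**2
w, V = w[::-1][:k], V[:, ::-1][:, :k]   # keep the k largest
coef_eig = V @ ((V.T @ (Yt.T @ f)) / w)

print("largest difference between the two routes:",
      np.max(np.abs(coef_svd - coef_eig)))
```

Both routes project the data onto the same leading singular subspace, so the two coefficient vectors agree to rounding error. The sign ambiguity of the eigenvectors is harmless because each eigenvector enters twice.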

4.1.2 Slepian Basis Solution

If we collect the Slepian expansion coefficients of the function f into the \((L + 1)^{2} \times 1\)-dimensional vector

the expansion (100) in the Slepian basis takes the form

where we used Eqs. (113) and (114) to obtain the second equality. Comparing Eq. (123) with Eq. (107), we see that the Slepian expansion coefficients of a function transform to and from the spherical harmonic coefficients as

Under dense, equal-area sampling with complete coverage, the coefficients in Eq. (123) can be estimated from

the discrete, approximate version of Eq. (101). For dense, equal-area sampling in a limited region R, we get

As expected, both of the solutions (125) and (126) are again special cases of the overdetermined least-squares solution

as applied to Eqs. (116) and (117). We encountered Eq. (126) before in the continuous form of Eq. (83); it solves the undamped minimum mean-squared error problem (80). The regularization of this ill-conditioned inverse problem may be achieved by truncation of the concentration eigenvalues, e.g., by restricting the size of the \((L + 1)^{2} \times (L + 1)^{2}\)-dimensional operator \(\boldsymbol{\mathsf{G}}\boldsymbol{\mathsf{G}}^{\mathsf{T}}\) to its first J × J subblock. Finally, in the most general, scattered-data case, we would be using an eigenvalue-truncated version of Eq. (127), or, equivalently, forming the pseudo-inverse

The solutions (118)–(120) and (125)–(127) are equivalent and differ only by the orthonormal change of basis from the spherical harmonics to the Slepian functions. Indeed, using Eqs. (114) and (124) to transform Eq. (127) into an equation for the spherical harmonic coefficients and comparing with Eq. (120) exposes the relation

which is a trivial identity for case 1 (insert Eqs. 108, 116 and 113) and, after substituting Eqs. (109) and (117), entails

for case 2, which holds by virtue of Eq. (113). Equation (129) can also be verified directly from Eq. (114), which implies

The popular but labor-intensive procedure by which the unknown spherical harmonic expansion coefficients of a scattered data set are obtained by forming the Moore-Penrose pseudo-inverse as in Eq. (121) is thus equivalent to determining the truncated Slepian solution of Eq. (126) in the limit of continuous and equal-area, but incomplete data coverage. In that limit, the generic eigenvalue decomposition of the normal matrix becomes a specific statement of the Slepian problem as we encountered it before, namely,

Such a connection has been previously pointed out for time series (Wingham 1992) and leads to the notion of “generalized prolate spheroidal functions” (Bronez 1988) should the “Slepian” functions be computed from a formulation of the concentration problem in the scattered data space directly, rather than being determined by sampling those obtained from solving the corresponding continuous problem, as we have described here.

Above, we showed how to stabilize the inverse problem of Eq. (120) by damping. We dealt with the case of continuously available data only; the form in which it appears in Eq. (81) makes it clear that damping is hardly practical for scattered data. Indeed, it requires knowledge of the complementary localization operator \(\bar{\mathbf{D}}\), in addition to being sensitive to the choice of η, whose optimal value depends implicitly on the unknown signal-to-noise ratio (Simons and Dahlen 2006). The data-driven approach taken in Eq. (121) is the more sensible one (Xu 1998). We have now seen that, in the limit of continuous partial coverage, this corresponds to the optimal solution of the problem formulated directly in the Slepian basis. It is consequently advantageous to also work in the Slepian basis in case the data collected are scattered but closely collocated in some region of interest. Prior knowledge of the geometry of this region and a prior idea of the spherical harmonic bandwidth of the data to be inverted allows us to construct a Slepian basis for the situation at hand, and the problem of finding the Slepian expansion coefficients of the unknown underlying function can be solved using Eqs. (127) and (128). The measure within which this approach agrees with the theoretical form of Eq. (126) will depend on how regularly the data are distributed within the region of study, i.e., on the error in the approximation (117). But if indeed the scattered-data Slepian normal matrix \(\boldsymbol{\mathsf{G}}\boldsymbol{\mathsf{G}}^{\mathsf{T}}\) is nearly diagonal in its first J × J-dimensional block due to the collocated observations having been favorably, if irregularly, distributed, then Eq. (126), which, strictly speaking, requires no matrix inversion, can be applied directly. 
If this is not the case, but the data are still collocated or we are only interested in a local approximation to the unknown signal, we can restrict \(\boldsymbol{\mathsf{G}}\) to its first J rows, prior to diagonalizing \(\boldsymbol{\mathsf{G}}\boldsymbol{\mathsf{G}}^{\mathsf{T}}\) or performing the svd of a partial \(\boldsymbol{\mathsf{G}}^{\mathsf{T}}\) as necessary to calculate Eqs. (127) and (128). Compared to solving Eqs. (120) and (121), the computational savings will still be substantial, as only when \(R \approx \Omega\) will the operator \(\boldsymbol{\mathsf{Y}}\boldsymbol{\mathsf{Y}}^{\mathsf{T}}\) be nearly diagonal. Truncation of the eigenvalues of \(\boldsymbol{\mathsf{Y}}\boldsymbol{\mathsf{Y}}^{\mathsf{T}}\) is akin to truncating the matrix \(\boldsymbol{\mathsf{G}}\boldsymbol{\mathsf{G}}^{\mathsf{T}}\) itself, which is diagonal or will be nearly so. With the theoretically, continuously determined, sampled Slepian functions as a parametrization, the truncated expansion is easy to obtain and the solution will be locally faithful within the region of interest R. In contrast, should we truncate \(\boldsymbol{\mathsf{Y}}\boldsymbol{\mathsf{Y}}^{\mathsf{T}}\) itself, without first diagonalizing it, we would be estimating a low-degree approximation of the signal which would have poor resolution everywhere. See Slobbe et al. (2012) for a set of examples in a slightly expanded and numerically more challenging context.
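The truncated Slepian-basis estimation described above can be sketched for noise-free synthetic data. The sketch assumes a Fibonacci lattice restricted to a 40° polar cap as the sampling, a bandwidth of L = 2, and random "true" coefficients; the helper names, `G_spec`, and the function `truncated_fit` are hypothetical. It compares an ordinary least-squares fit on the first J rows of the sampled Slepian matrix with the inversion-free form that divides by the concentration eigenvalues.

```python
import numpy as np

def fibonacci_sphere(M):
    """Quasi-equal-area points on the unit sphere (Fibonacci lattice)."""
    i = np.arange(M)
    z = 1.0 - (2.0 * i + 1.0) / M
    phi = 2.0 * np.pi * i / ((1.0 + np.sqrt(5.0)) / 2.0)
    s = np.sqrt(1.0 - z**2)
    return s * np.cos(phi), s * np.sin(phi), z

def real_harmonics_L2(x, y, z):
    """Orthonormal real spherical harmonics up to degree L = 2, shape (9, M)."""
    a = 1.0 / (4.0 * np.pi)
    return np.vstack([
        np.sqrt(a) * np.ones_like(z),
        np.sqrt(3 * a) * y, np.sqrt(3 * a) * z, np.sqrt(3 * a) * x,
        np.sqrt(15 * a) * x * y, np.sqrt(15 * a) * y * z,
        np.sqrt(5 * a / 4) * (3 * z**2 - 1),
        np.sqrt(15 * a) * x * z, np.sqrt(15 * a / 4) * (x**2 - y**2),
    ])

M = 20000
x, y, z = fibonacci_sphere(M)
dOmega = 4.0 * np.pi / M
in_cap = z >= np.cos(np.deg2rad(40.0))
Y_R = real_harmonics_L2(x[in_cap], y[in_cap], z[in_cap])   # harmonics sampled in R

# Slepian basis for the cap, sorted by decreasing concentration
lam, G_spec = np.linalg.eigh(Y_R @ Y_R.T * dOmega)
lam, G_spec = lam[::-1], G_spec[:, ::-1]
G_R = G_spec.T @ Y_R                                        # Slepian functions in R

# Synthetic bandlimited "truth" and its noise-free regional samples
rng = np.random.default_rng(3)
f_true = rng.standard_normal(9)
data = Y_R.T @ f_true

def truncated_fit(J):
    """Least-squares Slepian coefficients from the J best-concentrated functions."""
    coef, *_ = np.linalg.lstsq(G_R[:J].T, data, rcond=None)
    misfit = np.linalg.norm(G_R[:J].T @ coef - data) / np.linalg.norm(data)
    return coef, misfit

coef_J, misfit_J = truncated_fit(3)
coef_full, misfit_full = truncated_fit(9)

# Inversion-free form: project onto the Slepian functions, divide by eigenvalues
coef_J_direct = (G_R[:3] @ data) * dOmega / lam[:3]

# Back-transform the full solution to spherical harmonic coefficients
f_sh = G_spec @ coef_full

print("relative regional misfit, J = 3:", misfit_J)
print("relative regional misfit, J = 9:", misfit_full)
```

Because the Slepian functions in this sketch were built from the same sampling that supplies the data, their regional normal matrix is exactly diagonal and the two routes to the truncated coefficients coincide. With genuinely scattered data the agreement would only be approximate, to the extent that the sampling is regular.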

4.1.3 Bias and Variance

For completeness we briefly return to the expressions for the mean-squared estimation error of the damped spherical-harmonic and the truncated Slepian function methods, Eqs. (85) and (86), which we quoted for the example of “white” signal and noise with power S and N, respectively. Introducing the \((L + 1)^{2} \times (L + 1)^{2}\)-dimensional spectral matrices

we handily rewrite the “full” version of Eq. (85) in two spatial variables as the error covariance matrix

We subdivide the matrix with Slepian functions into the truncated set of the best-concentrated \(\alpha = 1 \rightarrow J\) and the complementary set of remaining \(\alpha = J + 1 \rightarrow (L + 1)^{2}\) functions, as follows:

and similarly separate the eigenvalues, writing

Likewise, the identity matrix is split into two parts, \(\bar{\mathbf{I}}\) and \(\boldsymbol{\underline{\mathrm{I}}}\). If we now also redefine

the equivalent version of Eq. (86) is readily transformed into the full spatial error covariance matrix

In selecting the Slepian basis we have thus successfully separated the effect of the variance and the bias on the mean-squared reconstruction error of a noisily observed signal. If the region of observation is a contiguous closed domain \(R \subset \Omega\) and the truncation should take place at the Shannon number \(J = N^{\mathrm{3D}}\), we have thereby identified the variance as due to noise in the region where data are available and the bias to signal neglected in the truncated expansion – which, in the proper Slepian basis, corresponds to the regions over which no observations exist. In practice, the truncation will happen at some J that depends on the signal-to-noise ratio (Simons and Dahlen 2006) and/or on computational considerations (Slobbe et al. 2012).

Finally, we shall also apply the notions of discretely acquired data to the solutions of problem (ii), below.

4.2 Problem (ii), Revisited

We need two more pieces of notation in order to rewrite the expressions for the spectral estimates (89) and (95) in the “pixel basis.” First we construct the M × M-dimensional symmetric spatial matrix collecting the fixed-degree Legendre polynomials evaluated at the angular distances between all pairs of observation points,

The elements of \(\boldsymbol{\mathsf{P}}_{l}\) are thus \(\sum _{m=-l}^{l}Y _{lm}(\mathbf{\hat{r}}_{i})Y _{lm}(\mathbf{\hat{r}}_{j})\), by the addition theorem, Eq. (30). And finally, we define \(\boldsymbol{\mathsf{G}}_{l}^{\alpha }\), the M × M symmetric matrix with elements given by

4.2.1 The Spherical Periodogram

The expression equivalent to Eq. (89) is now written as

whereby the column vector \(\boldsymbol{\mathsf{d}}\) contains the sampled data as in the notation for Eq. (103). This lends itself easily to computation, and the statistics of Eqs. (90)–(93) hold, approximately, for sufficiently densely sampled data.
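As a rough illustration of how such a quadratic form might be evaluated, the sketch below builds the pairwise Legendre matrix and applies it to a data vector. It rests on several assumptions that are not taken from the displayed equations: the matrix elements are normalized as \((2l+1)/(4\pi)\,P_l(\mathbf{\hat{r}}_i\cdot\mathbf{\hat{r}}_j)\), consistent with the addition theorem for spherical harmonics orthonormal on the unit sphere; the pixels are equal-area with solid angle `pixel_area`; and the prefactor \((4\pi/A)\,(\Delta\Omega)^2/(2l+1)\) is inferred from the continuous definition of the periodogram over a region of area A. The function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre


def legendre_pair_matrix(colat, lon, l):
    """M x M symmetric matrix (2l+1)/(4 pi) * P_l(cos Delta_ij) (assumed scaling)."""
    colat = np.asarray(colat, dtype=float)
    lon = np.asarray(lon, dtype=float)
    # Unit position vectors of the observation points.
    r = np.column_stack((np.sin(colat) * np.cos(lon),
                         np.sin(colat) * np.sin(lon),
                         np.cos(colat)))
    # Cosines of the angular distances between all pairs, guarded against rounding.
    cos_delta = np.clip(r @ r.T, -1.0, 1.0)
    # Coefficient vector that selects the single Legendre polynomial of degree l.
    coeffs = np.zeros(l + 1)
    coeffs[l] = 1.0
    return (2 * l + 1) / (4 * np.pi) * legendre.legval(cos_delta, coeffs)


def periodogram(d, colat, lon, l, area, pixel_area):
    """Degree-l periodogram as a quadratic form in the data (assumed prefactor)."""
    d = np.asarray(d, dtype=float)
    P_l = legendre_pair_matrix(colat, lon, l)
    return (4 * np.pi / area) * pixel_area**2 / (2 * l + 1) * (d @ P_l @ d)
```

Because the matrix depends only on the sampling geometry and the degree, it can be computed once and reused for any data vector observed at the same locations.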

4.2.2 The Spherical Multitaper Estimate

Finally, the expression equivalent to Eq. (95) becomes

Both Eqs. (141) and (142) are quadratic forms, earning them the nickname “quadratic spectral estimators” (Mullis and Scharf 1991). The key difference with the maximum-likelihood estimator popular in cosmology (Bond et al. 1998; Oh et al. 1999; Hinshaw et al. 2003), which can also be written as a quadratic form (Tegmark 1997), is that neither \(\boldsymbol{\mathsf{P}}_{l}\) nor \(\boldsymbol{\mathsf{G}}_{l}^{\alpha }\) depends on the unknown spectrum itself, so that both can be easily precomputed. In contrast, maximum-likelihood estimation is inherently nonlinear, requiring iteration to converge to the most probable estimate of the power spectral density (Dahlen and Simons 2008). As such, given a pixel grid, a region of interest R and a bandwidth L, Eq. (142) produces a consistent localized multitaper power spectral estimate in one step.

The estimate (142) has the statistical properties that we listed earlier as Eqs. (96)–(99). These continue to hold when the data pixelization is fine enough to have integral expressions of the kind (104) be exact. As mentioned before, for completely irregularly and potentially non-densely distributed discrete data on the sphere, “generalized” Slepian functions (Bronez 1988) could be constructed specifically for the purpose of their power spectral estimation and used to build the operator (140).

5 Conclusions

What is the information contained in a bandlimited set of scientific observations made over an incomplete, e.g., temporally or spatially limited sampling domain? How can this “information,” e.g., an estimate of the signal itself, or of its energy density, be determined from noisy data, and how shall it be represented? These seemingly age-old fundamental questions, which have implications beyond the scientific (Slepian 1976), had been solved – some say, by conveniently ignoring them – heuristically, by engineers, well before receiving their first satisfactory answers given in the theoretical treatment by Slepian, Landau, and Pollak (Slepian and Pollak 1961; Landau and Pollak 1961, 1962), first for “continuous” time series, later generalized to the multidimensional and discrete cases (Slepian 1964; Slepian 1978; Bronez 1988). By the “Slepian functions” in the title of this contribution, we have lumped together all functions that are “spatiospectrally” concentrated, quadratically, in the original sense of Slepian. In one dimension, these are the “prolate spheroidal functions” whose popularity is as enduring as their utility. In two Cartesian dimensions, and on the surface of the unit sphere, both scalar and vectorial, their time for applications in geomathematics has come.

The answers to the questions posed above are as ever relevant for the geosciences of today. There, we often face the additional complications of irregularly shaped study domains, scattered observations of noise-contaminated potential fields, perhaps collected from an altitude above the source by airplanes or satellites, and an acquisition and model-space geometry that is rarely if ever symmetric. Thus the Slepian functions are especially suited for geoscientific applications and for studying any type of geographical information, in general.

Two problems that are of particular interest in the geosciences, but also further afield, are how to form a statistically “optimal” estimate of the signal giving rise to the data and how to estimate the power spectral density of such a signal. The first, an inverse problem that is linear in the data, applies to forming mass flux estimates from time-variable gravity, e.g., by the GRACE mission (Harig and Simons 2012), or to the characterization of the terrestrial or planetary magnetic fields by satellites such as CHAMP, Swarm, or MGS. The second, which is quadratic in the data, is of interest in studying the statistics of the Earth’s or planetary topography and magnetic fields (Lewis and Simons 2012; Beggan et al. 2013) and especially for the cross-spectral analysis of gravity and topography (Wieczorek 2008), which can yield important clues about the internal structure of the planets. The second problem is also of great interest in cosmology, where missions such as WMAP and Planck are mapping the cosmic microwave background radiation, which is best modeled spectrally to constrain models of the evolution of our universe.

Slepian functions, as we have shown by focusing on the scalar case in spherical geometry, provide the mathematical framework to solve such problems. They are a convenient and easily obtained doubly orthogonal mathematical basis in which to express, and thus by which to recover, signals that are geographically localized or incompletely (and noisily) observed. For this they are much better suited than the traditional Fourier or spherical harmonic bases, and they are more “geologically intuitive” than wavelet bases in retaining a firm geographic footprint and preserving the traditional notions of frequency or spherical harmonic degree. They are furthermore extremely performant as data tapers to regularize the inverse problem of power spectral density determination from noisy and patchy observations, which can then be solved satisfactorily without costly iteration. Finally, by the interpretation of the Slepian functions as their limiting cases, much can be learned about the statistical nature of such inverse problems when the data provided are themselves scattered within a specific areal region of study.

References

Albertella A, Sansò F, Sneeuw N (1999) Band-limited functions on a bounded spherical domain: the Slepian problem on the sphere. J Geodesy 73:436–447

Amirbekyan A, Michel V, Simons FJ (2008) Parameterizing surface-wave tomographic models with harmonic spherical splines. Geophys J Int 174(2):617–628. doi:10.1111/j.1365-246X.2008.03809.x

Aster RC, Borchers B, Thurber CH (2005) Parameter estimation and inverse problems. Volume 90 of International Geophysics Series. Elsevier Academic, San Diego

Beggan CD, Saarimäki J, Whaler KA, Simons FJ (2013) Spectral and spatial decomposition of lithospheric magnetic field models using spherical Slepian functions. Geophys J Int 193:136–148. doi:10.1093/gji/ggs122

Bendat JS, Piersol AG (2000) Random data: Analysis and measurement procedures, 3rd edn. Wiley, New York

Blanco MA, Flórez M, Bermejo M (1997) Evaluation of the rotation matrices in the basis of real spherical harmonics. J Mol Struct (Theochem) 419:19–27

Bond JR, Jaffe AH, Knox L (1998) Estimating the power spectrum of the cosmic microwave background. Phys Rev D 57(4):2117–2137

Bronez TP (1988) Spectral estimation of irregularly sampled multidimensional processes by generalized prolate spheroidal sequences. IEEE Trans Acoust Speech Signal Process 36(12):1862–1873

Chave AD, Thomson DJ, Ander ME (1987) On the robust estimation of power spectra, coherences, and transfer functions. J Geophys Res 92(B1):633–648

Cohen L (1989) Time-frequency distributions – a review. Proc IEEE 77(7):941–981

Cox DR, Hinkley DV (1974) Theoretical statistics. Chapman and Hall, London

Dahlen FA, Simons FJ (2008) Spectral estimation on a sphere in geophysics and cosmology. Geophys J Int 174:774–807. doi:10.1111/j.1365-246X.2008.03854.x

Dahlen FA, Tromp J (1998) Theoretical global seismology. Princeton University Press, Princeton

Daubechies I (1988) Time-frequency localization operators: a geometric phase space approach. IEEE Trans Inform Theory 34:605–612

Daubechies I (1990) The wavelet transform, time-frequency localization and signal analysis. IEEE Trans Inform Theory 36(5):961–1005

Daubechies I (1992) Ten lectures on wavelets. Volume 61 of CBMS-NSF Regional Conference Series in Applied Mathematics. Society for Industrial & Applied Mathematics, Philadelphia

Daubechies I, Paul T (1988) Time-frequency localisation operators – a geometric phase space approach: II. The use of dilations. Inverse Probl 4(3):661–680

de Villiers GD, Marchaud FBT, Pike ER (2003) Generalized Gaussian quadrature applied to an inverse problem in antenna theory: II. The two-dimensional case with circular symmetry. Inverse Probl 19:755–778

Donoho DL, Stark PB (1989) Uncertainty principles and signal recovery. SIAM J Appl Math 49(3):906–931

Edmonds AR (1996) Angular momentum in quantum mechanics. Princeton University Press, Princeton

Eshagh M (2009) Spatially restricted integrals in gradiometric boundary value problems. Artif Sat 44(4):131–148. doi:10.2478/v10018-009-0025-4

Flandrin P (1998) Temps-Fréquence, 2nd edn. Hermès, Paris

Freeden W (2010) Geomathematics: Its role, its aim, and its potential. In: Freeden W, Nashed MZ, Sonar T (eds) Handbook of geomathematics, chap 1. Springer, Heidelberg, pp 3–42. doi:10.1007/978-3-642-01546-5_1

Freeden W, Schreiner M (2009) Spherical functions of mathematical geosciences: a scalar, vectorial, and tensorial setup. Springer, Berlin

Freeden W, Schreiner M (2010) Special functions in mathematical geosciences: an attempt at a categorization. In: Freeden W, Nashed MZ, Sonar T (eds) Handbook of geomathematics, chap 31. Springer, Heidelberg, pp 925–948. doi:10.1007/978-3-642-01546-5_31

Freeden W, Windheuser U (1997) Combined spherical harmonic and wavelet expansion – a future concept in Earth’s gravitational determination. Appl Comput Harmon Anal 4:1–37

Freeden W, Gervens T, Schreiner M (1998) Constructive approximation on the sphere. Clarendon, Oxford

Gerhards C (2011) Spherical decompositions in a global and local framework: theory and an application to geomagnetic modeling. Int J Geomath 1(2):205–256. doi:10.1007/s13137-010-0011-9

Gilbert EN, Slepian D (1977) Doubly orthogonal concentrated polynomials. SIAM J Math Anal 8(2):290–319

Grafarend EW, Klapp M, Martinec Z (2010) Spacetime modeling of the Earth’s gravity field by ellipsoidal harmonics. In: Freeden W, Nashed MZ, Sonar T (eds) Handbook of geomathematics, chap 7. Springer, Heidelberg, pp 159–252. doi:10.1007/978-3-642-01546-5_7

Grishchuk LP, Martin J (1997) Best unbiased estimates for the microwave background anisotropies. Phys Rev D 56(4):1924–1938

Grünbaum FA (1981) Eigenvectors of a Toeplitz matrix: discrete version of the prolate spheroidal wave functions. SIAM J Alg Disc Methods 2(2):136–141

Han S-C, Simons FJ (2008) Spatiospectral localization of global geopotential fields from the Gravity Recovery and Climate Experiment (GRACE) reveals the coseismic gravity change owing to the 2004 Sumatra-Andaman earthquake. J Geophys Res 113:B01405. doi:10.1029/2007JB004927

Hanssen A (1997) Multidimensional multitaper spectral estimation. Signal Process 58:327–332

Harig C, Simons FJ (2012) Mapping Greenland’s mass loss in space and time. Proc Natl Acad Sci 109(49):19934–19937. doi:10.1073/pnas.1206785109

Hauser MG, Peebles PJE (1973) Statistical analysis of catalogs of extragalactic objects. II. The Abell catalog of rich clusters. Astrophys J 185:757–785

Hesse K, Sloan IH, Womersley RS (2010) Numerical integration on the sphere. In: Freeden W, Nashed MZ, Sonar T (eds) Handbook of geomathematics, chap 40. Springer, Heidelberg, pp 1187–1219. doi:10.1007/978-3-642-01546-5_40