Abstract

This chapter deals with the fundamental mathematical tools and the associated computational aspects for constructing the stochastic models of random matrices that appear in the nonparametric method of uncertainties and in the random constitutive equations for multiscale stochastic modeling of heterogeneous materials. The explicit construction of ensembles of random matrices is presented, together with numerical tools for constructing general ensembles of random matrices; both can be used in high stochastic dimension. The developments are illustrated for the nonparametric multiscale stochastic modeling of heterogeneous linear elastic materials and for the nonparametric stochastic models of uncertainties in computational structural dynamics.

Keywords

- Random matrix

- Symmetric random matrix

- Positive-definite random matrix

- Nonparametric uncertainty

- Nonparametric method for uncertainty quantification

- Random vector

- Maximum entropy principle

- Non-Gaussian

- Generator

- Random elastic medium

- Uncertainty quantification in linear structural dynamics

- Uncertainty quantification in nonlinear structural dynamics

- Parametric-nonparametric uncertainties

- Identification

- Inverse problem

- Statistical inverse problem

1 Introduction

It is well known that the parametric method for uncertainty quantification consists in constructing stochastic models of the uncertain physical parameters of a computational model that results from the discretization of a boundary value problem. The parametric method is efficient for taking into account the variabilities of physical parameters, but it cannot take into account the model uncertainties induced by the modeling errors that are introduced during the construction of the computational model. The nonparametric method for uncertainty quantification is a way of constructing a stochastic model of the model uncertainties induced by the modeling errors. It is also an approach for constructing stochastic models of constitutive equations of materials involving non-Gaussian tensor-valued random fields, such as in the framework of elasticity, thermoelasticity, electromagnetism, etc. Random matrix theory is a fundamental and efficient tool for the stochastic modeling of the matrices that appear in the nonparametric method of uncertainties and in the random constitutive equations for multiscale stochastic modeling of heterogeneous materials.
The applications of the nonparametric stochastic modeling of uncertainties and of the random matrix theory presented in this chapter have been developed and validated for many fields of computational sciences and engineering, in particular for dynamical systems encountered in aeronautics and aerospace engineering [7, 20, 78, 88, 91, 94], in biomechanics [30, 31], in environment [32], in nuclear engineering [9, 12, 13, 29], in soil-structure interaction and for the wave propagations in soils [4, 5, 26, 27], in rotor dynamics [79, 80, 82] and vibration of turbomachines [18, 19, 22, 70], in vibroacoustics of automotive vehicles [3, 38–40, 61], but also, in continuum mechanics for multiscale stochastic modeling of heterogeneous materials [48, 49, 51–53], for the heat transfer in complex composites and for their nonlinear thermomechanic analyses [97, 98].

The chapter is organized as follows:

- Notions on random matrices and on the nonparametric method for uncertainty quantification: What is a random matrix and what is the nonparametric method for uncertainty quantification?

- Brief history concerning the random matrix theory and the nonparametric method for UQ and its connection with the random matrix theory.

- Overview and mathematical notations used in the chapter.

- Maximum entropy principle (MaxEnt) for constructing random matrices.

- Fundamental ensemble for the symmetric real random matrices with a unit mean value.

- Fundamental ensembles for positive-definite symmetric real random matrices.

- Ensembles of random matrices for the nonparametric method in uncertainty quantification.

- The MaxEnt as a numerical tool for constructing ensembles of random matrices.

- The MaxEnt for constructing the pdf of a random vector.

- Nonparametric stochastic model for constitutive equation in linear elasticity.

- Nonparametric stochastic model of uncertainties in computational linear structural dynamics.

- Parametric-nonparametric uncertainties in computational nonlinear structural dynamics.

- Some key research findings and applications.

2 Notions on Random Matrices and on the Nonparametric Method for Uncertainty Quantification

2.1 What Is a Random Matrix?

A real (or complex) matrix is a rectangular or a square array of real (or complex) numbers, arranged in rows and columns. The individual items in a matrix are called its elements or its entries.

A real (or complex) random matrix is a matrix-valued random variable, which means that its entries are real (or complex) random variables. The random matrix theory is related to the fundamental mathematical methods required for constructing the probability distribution of such a random matrix, for constructing a generator of independent realizations, for analyzing some algebraic properties and some spectral properties, etc.

Let us give an example illustrating the types of problems addressed by random matrix theory. Consider a random matrix \([\mathbf{A}]\), defined on a probability space \((\varTheta,\mathcal{T},\mathcal{P})\), with values in a set \(\mathbb{S}_{n}\) of matrices, which is a subset of the set \(\mathbb{M}_{n}^{S}(\mathbb{R})\) of all the symmetric (n × n) real matrices. Thus, for θ in Θ, the realization \([\mathbf{A}(\theta )]\) is a deterministic matrix in \(\mathbb{S}_{n} \subset \mathbb{M}_{n}^{S}(\mathbb{R})\). Fundamental questions concern the definition and the construction of the probability distribution \(P_{[\mathbf{A}]}\) of such a random matrix. If this probability distribution is defined by a probability density function (pdf) with respect to a volume element \(d^{S}A\), that is, by a mapping \([A]\mapsto p_{[\mathbf{A}]}([A])\) from \(\mathbb{M}_{n}^{S}(\mathbb{R})\) into \(\mathbb{R}^{+} = [0,+\infty [\) whose support is \(\mathbb{S}_{n}\) (which implies that \(p_{[\mathbf{A}]}([A]) = 0\) if \([A]\notin \mathbb{S}_{n}\)), then how must the volume element \(d^{S}A\) be defined, how is the integration over \(\mathbb{M}_{n}^{S}(\mathbb{R})\) defined, and what are the methods and tools for constructing the pdf \(p_{[\mathbf{A}]}\) and its generator of independent realizations? For instance, such a pdf cannot simply be defined by giving the pdf of every entry \([\mathbf{A}]_{jk}\), for several reasons, among them the following. As random matrix \([\mathbf{A}]\) is symmetric, its entries are not all algebraically independent, and therefore only the n(n + 1)∕2 random variables \(\{[\mathbf{A}]_{jk},1 \leq j \leq k \leq n\}\) must be considered. In addition, if \(\mathbb{S}_{n}\) is the subset \(\mathbb{M}_{n}^{+}(\mathbb{R})\) of all the positive-definite symmetric (n × n) real matrices, then there is an algebraic constraint that relates the random variables \(\{[\mathbf{A}]_{jk},1 \leq j \leq k \leq n\}\) so that \([\mathbf{A}]\) takes its values in \(\mathbb{M}_{n}^{+}(\mathbb{R})\), and such an algebraic constraint implies that all these random variables are statistically dependent.
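The algebraic constraint mentioned above can be made concrete in the smallest nontrivial case. The following minimal sketch (the helper names `is_positive_definite` and `make_sym2` are hypothetical, introduced only for this illustration) builds symmetric (2 × 2) matrices from their three independent entries and tests positive definiteness with a Cholesky factorization: for fixed diagonal entries, the admissible range of the off-diagonal entry depends on the diagonal, so the entries cannot be prescribed independently.

```python
import numpy as np

def is_positive_definite(a: np.ndarray) -> bool:
    """Return True if the symmetric matrix `a` is positive definite."""
    try:
        np.linalg.cholesky(a)   # succeeds only for positive-definite input
        return True
    except np.linalg.LinAlgError:
        return False

def make_sym2(a11: float, a22: float, a12: float) -> np.ndarray:
    """Build a symmetric (2 x 2) matrix from its 3 independent entries."""
    return np.array([[a11, a12], [a12, a22]])

# With A11 = 1 and A22 = 4, positive definiteness requires A12**2 < A11 * A22 = 4.
print(is_positive_definite(make_sym2(1.0, 4.0, 1.5)))  # off-diagonal entry inside the range
print(is_positive_definite(make_sym2(1.0, 4.0, 2.5)))  # off-diagonal entry outside the range
```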

2.2 What Is the Nonparametric Method for Uncertainty Quantification?

The parametric method for uncertainty quantification consists in constructing stochastic models of the uncertain physical parameters (geometry, boundary conditions, material properties, etc.) of a computational model that results from the discretization of a boundary value problem. The parametric method, which introduces prior and posterior stochastic models of the uncertain physical parameters of the computational model, cannot take into account the model uncertainties induced by the modeling errors that are introduced during the construction of the computational model.

The nonparametric method for uncertainty quantification consists in constructing a stochastic model of both the uncertain physical parameters and the model uncertainties induced by the modeling errors, without separating the effects of the two types of uncertainties. Such an approach consists in directly constructing stochastic models of the matrices representing the operators of the problem considered, rather than in using the parametric method for the uncertain physical parameters on which these matrices depend. Initially developed for uncertainty quantification in computational structural dynamics, the nonparametric method has been extended to the construction of stochastic models of matrices of computational models, such as the nonparametric stochastic model for the constitutive equation in linear elasticity.

The parametric-nonparametric method for uncertainty quantification consists in using simultaneously, in a computational model, the parametric method for constructing stochastic models of some of its uncertain physical parameters and the nonparametric method for constructing a stochastic model of both the other uncertain physical parameters and the model uncertainties induced by the modeling errors, while separating the effects of the two types of uncertainties.

Consequently, the nonparametric method for uncertainty quantification uses the random matrix theory.

3 A Brief History

3.1 Random Matrix Theory (RMT)

The random matrix theory (RMT) was introduced and developed in mathematical statistics by Wishart and others in the 1930s and was intensively studied by physicists and mathematicians in the context of nuclear physics. These works began with Wigner [125] in the 1950s and were developed considerably in the 1960s by Dyson, Mehta, Wigner [36, 37, 126], and others. In 1965, Porter [92] published a volume of important papers in this field, followed, in 1967, by the first edition of the Mehta book [72], whose second edition [73], published in 1991, gives a synthesis of the random matrix theory. For applications in physics, an important ensemble of the random matrix theory is the Gaussian orthogonal ensemble (GOE), whose elements are real symmetric random matrices with statistically independent entries and which are invariant under orthogonal linear transformations (this ensemble can be viewed as a generalization of a Gaussian real-valued random variable to a symmetric real square random matrix).

For an introduction to multivariate statistical analysis, we refer the reader to [2], for an overview on explicit probability distributions of ensembles of random matrices and their properties, to [55] and, for analytical mathematical methods devoted to the random matrix theory, to [74].

RMT has been used in domains other than nuclear physics. In 1984 and 1986, Bohigas et al. [14, 15] found that the level fluctuations of the quantum Sinai billiard could be predicted with the GOE of random matrices. In 1989, Weaver [124] showed that the higher frequencies of an elastodynamic structure consisting of a small aluminum block behaved like the eigenvalues of a matrix belonging to the GOE. Then, Bohigas, Legrand, Schmidt, and Sornette [16, 65, 66, 99] studied the high-frequency spectral statistics with the GOE for elastodynamics and vibration problems in the high-frequency range. Langley [64] showed that, in the high-frequency range, the system of natural frequencies of linear uncertain dynamic systems is a non-Poisson point process. These results have been validated for the high-frequency range in elastodynamics. A synthesis of these aspects related to quantum chaos and random matrix theory, devoted to linear acoustics and vibration, can be found in the book edited by Wright and Weaver [127].

3.2 Nonparametric Method for UQ and Its Connection with the RMT

The nonparametric method was initially introduced by Soize [106, 107] in 1999–2000 for uncertainty quantification in computational linear structural dynamics in order to take into account the model uncertainties induced by the modeling errors that could not be addressed by the parametric method. The concept of the nonparametric method then consisted in modeling the generalized matrices of the reduced-order model of the computational model by random matrices. It should be noted that the terminology “nonparametric” is not at all connected to “nonparametric statistics” but was introduced to show the differences between the well-known parametric method, which consists in constructing a stochastic model of the uncertain physical parameters of the computational model, and the newly proposed nonparametric method, which consisted in modeling the generalized matrices of the reduced-order model by random matrices related to the operators of the problem. Later, the parametric-nonparametric method was introduced [113].

Early in the development of the concept of the nonparametric method, a problem occurred in the choice of ensembles of random matrices. Indeed, the ensembles of random matrices coming from the RMT were not adapted to the stochastic modeling required by the nonparametric method. For instance, the GOE of random matrices could not be used for the generalized mass matrix, which must be positive definite, which is not the case for a random matrix belonging to the GOE. Consequently, new ensembles of random matrices had to be developed [76, 107, 108, 110, 115], using the maximum entropy (MaxEnt) principle, for implementing the concept of the nonparametric method for various computational models in mechanics, for which the matrices must verify various algebraic properties. In addition, parameterizations of the new ensembles of random matrices were introduced in the different constructions in order to quantify simply the level of uncertainties. These ensembles of random matrices have been constructed with a parameterization exhibiting a small number of hyperparameters, which allows the hyperparameters to be identified using experimental data by solving a statistical inverse problem for random matrices that are, in general, in very high dimension. In these constructions, for certain types of available information, an explicit solution of the MaxEnt principle has been obtained, giving an explicit description of the ensembles of random matrices and of the corresponding generators of realizations. Nevertheless, for other cases of available information coming from computational models, there is no explicit solution of the MaxEnt, and therefore, a numerical tool adapted to the high dimension had to be developed [112].

Finally, during these last 15 years, the nonparametric method has been extensively used and extended, with experimental validations, to many problems in linear and nonlinear structural dynamics, in fluid-structure interaction and in vibroacoustics, in unsteady aeroelasticity, in soil-structure interaction, in continuum mechanics of solids for the nonparametric stochastic modeling of the constitutive equations in linear and nonlinear elasticity, in thermoelasticity, etc. A brief overview of the experimental validations and applications in different fields is given in the last section (Sect. 15).

4 Overview

This chapter consists of two main parts:

- The first part is devoted to the presentation of ensembles of random matrices that are explicitly described and also deals with an efficient numerical tool for constructing ensembles of random matrices when an explicit construction cannot be obtained. The presentation is focused on the fundamental results and tools related to ensembles of random matrices that are useful for constructing nonparametric stochastic models for uncertainty quantification in computational mechanics and in computational science and engineering and, in such a framework, for the construction of nonparametric stochastic models of random tensors or tensor-valued random fields, and also for the nonparametric stochastic models of uncertainties in linear and nonlinear structural dynamics.

  All the ensembles of random matrices that have been developed for the nonparametric method of uncertainties in computational sciences and engineering are given hereinafter using a unified presentation based on the MaxEnt principle, which allows us not only to learn about the useful ensembles of random matrices for which the probability distributions and the associated generators of independent realizations are explicitly known but also to present a general tool for constructing any ensemble of random matrices, possibly using computation in high dimension.

- The second part deals with the nonparametric method for uncertainty quantification, which uses the new ensembles of random matrices that have been constructed in the context of the development of the nonparametric method and that are detailed in the first part. The presentation is limited to the nonparametric stochastic model for the constitutive equation in linear elasticity, to the nonparametric stochastic model of uncertainties in computational linear structural dynamics for damped elastic structures but also for viscoelastic structures, and to the parametric-nonparametric uncertainties in computational nonlinear structural dynamics. In the last section (Sect. 15), a brief bibliographical analysis is given concerning the propagation of uncertainties using nonparametric or parametric-nonparametric stochastic models of uncertainties, some additional ingredients useful for the nonparametric stochastic modeling of uncertainties, some experimental validations of the nonparametric method of uncertainties, and finally some applications of the nonparametric stochastic modeling of uncertainties in different fields of computational sciences and engineering.

5 Notations

The following algebraic notations are used throughout this chapter.

5.1 Euclidean and Hermitian Spaces

Let \(\mathbf{x} = (x_{1},\ldots,x_{n})\) be a vector in \(\mathbb{K}^{n}\) with \(\mathbb{K} = \mathbb{R}\) (the set of all the real numbers) or \(\mathbb{K} = \mathbb{C}\) (the set of all the complex numbers). The Euclidean space \(\mathbb{R}^{n}\) (or the Hermitian space \(\mathbb{C}^{n}\)) is equipped with the usual inner product \(< \mathbf{x},\mathbf{y} >=\sum\nolimits_{ j=1}^{n}x_{j}\overline{y}_{j}\) and the associated norm \(\Vert \mathbf{x}\Vert = < \mathbf{x},\mathbf{x} >^{1/2}\), in which \(\overline{y}_{j}\) is the complex conjugate of the complex number \(y_{j}\) and where \(\overline{y}_{j} = y_{j}\) when \(y_{j}\) is a real number.

5.2 Sets of Matrices

- \(\mathbb{M}_{n,m}(\mathbb{R})\) is the set of all the (n × m) real matrices.

- \(\mathbb{M}_{n}(\mathbb{R}) = \mathbb{M}_{n,n}(\mathbb{R})\) is the set of all the square (n × n) real matrices.

- \(\mathbb{M}_{n}(\mathbb{C})\) is the set of all the (n × n) complex matrices.

- \(\mathbb{M}_{n}^{S}(\mathbb{R})\) is the set of all the symmetric (n × n) real matrices.

- \(\mathbb{M}_{n}^{+0}(\mathbb{R})\) is the set of all the semipositive-definite symmetric (n × n) real matrices.

- \(\mathbb{M}_{n}^{+}(\mathbb{R})\) is the set of all the positive-definite symmetric (n × n) real matrices.

The ensembles of real matrices are such that \(\mathbb{M}_{n}^{+}(\mathbb{R}) \subset \mathbb{M}_{n}^{+0}(\mathbb{R}) \subset \mathbb{M}_{n}^{S}(\mathbb{R}) \subset \mathbb{M}_{n}(\mathbb{R})\).

5.3 Kronecker Symbol, Unit Matrix, and Indicator Function

The Kronecker symbol is denoted as \(\delta _{jk}\) and is such that \(\delta _{jk} = 0\) if j ≠ k and \(\delta _{jj} = 1\). The unit (or identity) matrix in \(\mathbb{M}_{n}(\mathbb{R})\) is denoted as \([I_{n}]\) and is such that \([I_{n}]_{jk} =\delta _{jk}\). Let \(\mathbb{S}\) be any subset of any set \(\mathbb{M}\), possibly with \(\mathbb{S} = \mathbb{M}\). The indicator function \(M\mapsto \mathbb{1}_{\mathbb{S}}(M)\) defined on set \(\mathbb{M}\) is such that \(\mathbb{1}_{\mathbb{S}}(M) = 1\) if \(M \in \mathbb{S} \subset \mathbb{M}\) and \(\mathbb{1}_{\mathbb{S}}(M) = 0\) if \(M\not\in \mathbb{S}\).

5.4 Norms and Usual Operators

- (i) The determinant of a matrix [G] in \(\mathbb{M}_{n}(\mathbb{R})\) is denoted as det[G], and its trace is denoted as \(\mathrm{tr}[G] =\sum\nolimits_{ j=1}^{n}G_{jj}\).

- (ii) The transpose of a matrix [G] in \(\mathbb{M}_{n,m}(\mathbb{R})\) is denoted as \([G]^{T}\), which is in \(\mathbb{M}_{m,n}(\mathbb{R})\).

- (iii) The operator norm of a matrix [G] in \(\mathbb{M}_{n,m}(\mathbb{R})\) is denoted as \(\Vert G\Vert =\sup _{\Vert \mathbf{x}\Vert \leq 1}\Vert [G]\,\mathbf{x}\Vert\) for \(\mathbf{x}\) in \(\mathbb{R}^{m}\), which is such that \(\Vert [G]\,\mathbf{x}\Vert \leq \Vert G\Vert \,\Vert \mathbf{x}\Vert\) for all \(\mathbf{x}\) in \(\mathbb{R}^{m}\).

- (iv) For [G] and [H] in \(\mathbb{M}_{n,m}(\mathbb{R})\), we denote \(\ll [G],[H] \gg =\mathrm{ tr}\{[G]^{T}[H]\}\), and the Frobenius norm (or Hilbert-Schmidt norm) \(\Vert G\Vert _{F}\) of [G] is such that \(\Vert G\Vert _{F}^{2} =\ll [G],[G] \gg =\mathrm{ tr}\{[G]^{T}[G]\} =\sum\nolimits_{ j=1}^{n}\sum\nolimits_{k=1}^{m}G_{jk}^{2}\), which is such that \(\Vert G\Vert \leq \Vert G\Vert _{F} \leq \sqrt{n} \Vert G\Vert\).
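The notations (iii) and (iv) can be checked numerically. The short sketch below uses NumPy (an assumption of this illustration; the variable names are arbitrary) to evaluate the inner product ≪[G], [H]≫, the operator norm, and the Frobenius norm of a random (n × m) matrix and to verify the stated chain of inequalities between the two norms.

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 4, 6
G = rng.standard_normal((n, m))
H = rng.standard_normal((n, m))

inner = np.trace(G.T @ H)             # <<G, H>> = tr{G^T H}
op_norm = np.linalg.norm(G, 2)        # operator norm (largest singular value)
fro_norm = np.linalg.norm(G, "fro")   # Frobenius (Hilbert-Schmidt) norm

# tr{G^T H} is the sum of the entrywise products
assert np.isclose(inner, np.sum(G * H))
# ||G||_F^2 = tr{G^T G}
assert np.isclose(fro_norm**2, np.trace(G.T @ G))
# ||G|| <= ||G||_F <= sqrt(n) ||G||
assert op_norm <= fro_norm <= np.sqrt(n) * op_norm
```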

5.5 Order Relation in the Set of All the Positive-Definite Real Matrices

Let [G] and [H] be two matrices in \(\mathbb{M}_{n}^{+}(\mathbb{R})\). The notation [G] > [H] means that the matrix [G] − [H] belongs to \(\mathbb{M}_{n}^{+}(\mathbb{R})\).

5.6 Probability Space, Mathematical Expectation, and Space of Second-Order Random Vectors

The mathematical expectation relative to a probability space \((\varTheta,\mathcal{T},\mathcal{P})\) is denoted as E. The space of all the second-order random variables, defined on \((\varTheta,\mathcal{T},\mathcal{P})\), with values in \(\mathbb{R}^{n}\), equipped with the inner product \(((\mathbf{X},\mathbf{Y})) = E\{ < \mathbf{X},\mathbf{Y} >\}\) and with the associated norm \(\vert \vert \vert \mathbf{X}\vert \vert \vert = ((\mathbf{X},\mathbf{X}))^{1/2}\), is a Hilbert space denoted as \(\mathcal{L}_{n}^{2}\).

6 The MaxEnt for Constructing Random Matrices

The measure of uncertainties using the entropy of information was introduced by Shannon [103] in the framework of the development of information theory. The maximum entropy (MaxEnt) principle (that is to say, the maximization of the level of uncertainties) was introduced by Jaynes [58] and allows a prior probability model of any random variable to be constructed under the constraints defined by the available information. This principle is a major tool for constructing prior probability models. All the ensembles of random matrices presented hereinafter (including the well-known Gaussian orthogonal ensemble) are constructed in the framework of a unified presentation using the MaxEnt. This means that the probability distributions of the random matrices belonging to these ensembles are constructed using the MaxEnt.

6.1 Volume Element and Probability Density Function (PDF)

This section deals with the definition of a probability density function (pdf) of a random matrix \([\mathbf{G}]\) with values in the Euclidean space \(\mathbb{M}_{n}^{S}(\mathbb{R})\) (the set of all the symmetric (n × n) real matrices, equipped with the inner product \(\ll [G],[H] \gg =\mathrm{ tr}\{[G]^{T}[H]\}\)). In order to define the integration on Euclidean space \(\mathbb{M}_{n}^{S}(\mathbb{R})\) correctly, it is necessary to define the volume element on this space.

6.1.1 Volume Element on the Euclidean Space of Symmetric Real Matrices

In order to understand the principle of the construction of the volume element on Euclidean space \(\mathbb{M}_{n}^{S}(\mathbb{R})\), the construction of the volume element on Euclidean spaces \(\mathbb{R}^{n}\) and \(\mathbb{M}_{n}(\mathbb{R})\) is first introduced.

- (i) Volume element on Euclidean space \(\mathbb{R}^{n}\). Let \(\{\mathbf{e}_{1},\ldots,\mathbf{e}_{n}\}\) be the orthonormal basis of \(\mathbb{R}^{n}\) such that \(\mathbf{e}_{j} = (0,\ldots,1,\ldots,0)\) is the vector whose entries are all zero except for a 1 in position j. Consequently, \(< \mathbf{e}_{j},\mathbf{e}_{k} >=\delta _{jk}\). Any vector \(\mathbf{x} = (x_{1},\ldots,x_{n})\) in \(\mathbb{R}^{n}\) can then be written as \(\mathbf{x} =\sum\nolimits_{ j=1}^{n}x_{j}\,\mathbf{e}_{j}\). This Euclidean structure on \(\mathbb{R}^{n}\) defines the volume element \(d\mathbf{x}\) on \(\mathbb{R}^{n}\) such that \(d\mathbf{x} =\prod\nolimits_{ j=1}^{n}dx_{j}\).

- (ii) Volume element on Euclidean space \(\mathbb{M}_{n}(\mathbb{R})\). Similarly, let \(\{[b_{jk}]\}_{jk}\) be the orthonormal basis of \(\mathbb{M}_{n}(\mathbb{R})\) such that \([b_{jk}] = \mathbf{e}_{j}\,\mathbf{e}_{k}^{T}\). Consequently, we have \(\ll [b_{jk}],[b_{j'k'}] \gg =\delta _{jj'}\,\delta _{kk'}\). Any matrix [G] in \(\mathbb{M}_{n}(\mathbb{R})\) can be written as \([G] =\sum\nolimits_{ j,k=1}^{n}G_{jk}\,[b_{jk}]\) in which \(G_{jk} = [G]_{jk}\). This Euclidean structure on \(\mathbb{M}_{n}(\mathbb{R})\) defines the volume element dG on \(\mathbb{M}_{n}(\mathbb{R})\) such that \(dG =\prod\nolimits_{ j,k=1}^{n}dG_{jk}\).

- (iii) Volume element on Euclidean space \(\mathbb{M}_{n}^{S}(\mathbb{R})\). Let \(\{[b_{jk}^{S}],1 \leq j \leq k \leq n\}\) be the orthonormal basis of \(\mathbb{M}_{n}^{S}(\mathbb{R})\) such that \([b_{jj}^{S}] = \mathbf{e}_{j}\,\mathbf{e}_{j}^{T}\) and \([b_{jk}^{S}] = (\mathbf{e}_{j}\,\mathbf{e}_{k}^{T} + \mathbf{e}_{k}\,\mathbf{e}_{j}^{T})/\sqrt{2}\) if j < k. We have \(\ll [b_{jk}^{S}],[b_{j'k'}^{S}] \gg =\delta _{jj'}\,\delta _{kk'}\) for j ≤ k and j′ ≤ k′. Any symmetric matrix [G] in \(\mathbb{M}_{n}^{S}(\mathbb{R})\) can be written as \([G] =\sum\nolimits_{1\leq j\leq k\leq n}G_{jk}^{S}\,[b_{jk}^{S}]\) in which \(G_{jj}^{S} = G_{jj}\) and \(G_{jk}^{S} = \sqrt{2}\,G_{jk}\) if j < k. This Euclidean structure on \(\mathbb{M}_{n}^{S}(\mathbb{R})\) defines the volume element \(d^{S}G\) on \(\mathbb{M}_{n}^{S}(\mathbb{R})\) such that \(d^{S}G =\prod\nolimits_{1\leq j\leq k\leq n}dG_{jk}^{S}\). Since \(dG_{jk}^{S} = \sqrt{2}\,dG_{jk}\) for each of the n(n − 1)∕2 pairs j < k, the volume element is then given by

$$\displaystyle{ d^{S}G = 2^{n(n-1)/4}\,\prod _{ 1\leq j\leq k\leq n}\,dG_{jk}. }$$(8.1)
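The construction in item (iii) can be verified numerically. The sketch below (the helper names `sym_basis` and `sym_coords` are hypothetical) builds the basis of symmetric matrices with the √2 normalization of the off-diagonal elements, checks that it is orthonormal for the inner product tr{[G]ᵀ[H]}, reconstructs a symmetric matrix from its coordinates, and recovers the factor 2^{n(n−1)∕4} as the product of the √2 scalings.

```python
import numpy as np

def sym_basis(n):
    """Orthonormal basis {[b^S_jk], j <= k} of the symmetric (n x n) matrices."""
    basis = {}
    eye = np.eye(n)
    for j in range(n):
        for k in range(j, n):
            e_jk = np.outer(eye[j], eye[k])
            basis[(j, k)] = e_jk if j == k else (e_jk + e_jk.T) / np.sqrt(2.0)
    return basis

def sym_coords(G):
    """Coordinates G^S_jk: G_jj on the diagonal, sqrt(2) G_jk for j < k."""
    n = G.shape[0]
    return {(j, k): (G[j, k] if j == k else np.sqrt(2.0) * G[j, k])
            for j in range(n) for k in range(j, n)}

n = 4
basis = sym_basis(n)
A = np.random.default_rng(1).standard_normal((n, n))
G = 0.5 * (A + A.T)                               # arbitrary symmetric test matrix
coords = sym_coords(G)
G_rebuilt = sum(coords[jk] * basis[jk] for jk in basis)

# Gram matrix of the basis for the inner product <<B1, B2>> = tr{B1^T B2}
gram = np.array([[np.trace(b1.T @ b2) for b2 in basis.values()]
                 for b1 in basis.values()])

# One factor sqrt(2) per pair j < k gives 2^{n(n-1)/4}
jacobian = np.sqrt(2.0) ** (n * (n - 1) // 2)
print(np.allclose(G_rebuilt, G), np.allclose(gram, np.eye(len(basis))), jacobian)
```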

6.1.2 Probability Density Function of a Symmetric Real Random Matrix

Let \([\mathbf{G}]\) be a random matrix, defined on a probability space \((\varTheta,\mathcal{T},\mathcal{P})\), with values in \(\mathbb{M}_{n}^{S}(\mathbb{R})\), whose probability distribution \(P_{[\mathbf{G}]} = p_{[\mathbf{G}]}([G])\,d^{S}G\) is defined by a pdf \([G]\mapsto p_{[\mathbf{G}]}([G])\) from \(\mathbb{M}_{n}^{S}(\mathbb{R})\) into \(\mathbb{R}^{+} = [0,+\infty [\) with respect to the volume element \(d^{S}G\) on \(\mathbb{M}_{n}^{S}(\mathbb{R})\). This pdf verifies the normalization condition,

$$\displaystyle{ \int _{\mathbb{M}_{n}^{S}(\mathbb{R})}p_{[\mathbf{G}]}([G])\,d^{S}G = 1, }$$(8.2)

in which the volume element \(d^{S}G\) is defined by Eq. (8.1).

6.1.3 Support of the Probability Density Function

The support of pdf \(p_{[\mathbf{G}]}\), denoted as \(\mathrm{supp}\,p_{[\mathbf{G}]}\), is any subset \(\mathbb{S}_{n}\) of \(\mathbb{M}_{n}^{S}(\mathbb{R})\), possibly with \(\mathbb{S}_{n} = \mathbb{M}_{n}^{S}(\mathbb{R})\). For instance, we can have \(\mathbb{S}_{n} = \mathbb{M}_{n}^{+}(\mathbb{R}) \subset \mathbb{M}_{n}^{S}(\mathbb{R})\), which means that \([\mathbf{G}]\) is a random matrix with values in the positive-definite symmetric (n × n) real matrices. Thus, \(p_{[\mathbf{G}]}([G]) = 0\) for [G] not in \(\mathbb{S}_{n}\), and Eq. (8.2) can be rewritten as

$$\displaystyle{ \int _{\mathbb{S}_{n}}p_{[\mathbf{G}]}([G])\,d^{S}G = 1. }$$(8.3)

It should be noted that, in the context of the construction of the unknown pdf \(p_{[\mathbf{G}]}\), it is assumed that support \(\mathbb{S}_{n}\) is a given (known) set.

6.2 The Shannon Entropy as a Measure of Uncertainties

The Shannon measure [103] of uncertainties of random matrix \([\mathbf{G}]\) is defined by the entropy of information (Shannon’s entropy), \(\mathcal{E}(p_{[\mathbf{G}]})\), of pdf \(p_{[\mathbf{G}]}\) whose support is \(\mathbb{S}_{n} \subset \mathbb{M}_{n}^{S}(\mathbb{R})\), such that

$$\displaystyle{ \mathcal{E}(p_{[\mathbf{G}]}) = -\int _{\mathbb{S}_{n}}p_{[\mathbf{G}]}([G])\,\log {\bigl (p_{[\mathbf{G}]}([G])\bigr )}\,d^{S}G, }$$(8.4)

which can be rewritten as \(\mathcal{E}(p_{[\mathbf{G}]}) = -E\{\log {\bigl (p_{[\mathbf{G}]}([\mathbf{G}])\bigr )}\}\). For any pdf \(p_{[\mathbf{G}]}\) defined on \(\mathbb{M}_{n}^{S}(\mathbb{R})\) and with support \(\mathbb{S}_{n}\), entropy \(\mathcal{E}(p_{[\mathbf{G}]})\) is a real number. The uncertainty increases when the Shannon entropy increases: the smaller the Shannon entropy, the lower the level of uncertainties. If \(\mathcal{E}(p_{[\mathbf{G}]})\) goes to −∞, then the level of uncertainties goes to zero, and random matrix \([\mathbf{G}]\) goes to a deterministic matrix for the convergence in probability distribution (in probability law).
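The limit behavior described above can be illustrated in the scalar case n = 1, for which a symmetric (1 × 1) random matrix is a real random variable. The sketch below assumes a Gaussian pdf with standard deviation σ (an illustrative choice, not taken from this section), whose entropy has the well-known closed form ½ log(2πe σ²), and shows that the entropy decreases without bound as σ goes to zero, that is, as the random variable tends to a deterministic value.

```python
import numpy as np

def gaussian_entropy(sigma: float) -> float:
    """Shannon (differential) entropy of a scalar Gaussian pdf with std `sigma`."""
    return 0.5 * np.log(2.0 * np.pi * np.e * sigma**2)

sigmas = [1.0, 1e-1, 1e-3, 1e-6]
entropies = [gaussian_entropy(s) for s in sigmas]
for s, e in zip(sigmas, entropies):
    # the entropy decreases toward minus infinity as sigma decreases to zero
    print(f"sigma = {s:g}  entropy = {e:.3f}")
```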

6.3 The MaxEnt Principle

As explained before, the use of the MaxEnt principle requires a correct definition of the available information related to random matrix \([\mathbf{G}]\), for which pdf \(p_{[\mathbf{G}]}\) (which is unknown, with a given support \(\mathbb{S}_{n}\)) has to be constructed.

6.3.1 Available Information

It is assumed that the available information related to random matrix \([\mathbf{G}]\) is represented by the following equation on \(\mathbb{R}^{\mu }\), where μ is a finite positive integer,

$$\displaystyle{ \mathbf{h}(p_{[\mathbf{G}]}) = \mathbf{0}, }$$(8.5)

in which \(p_{[\mathbf{G}]}\mapsto \mathbf{h}(p_{[\mathbf{G}]}) = (h_{1}(p_{[\mathbf{G}]}),\ldots,h_{\mu }(p_{[\mathbf{G}]}))\) is a given functional of \(p_{[\mathbf{G}]}\), with values in \(\mathbb{R}^{\mu }\). For instance, if the mean value \(E\{[\mathbf{G}]\} = [\underline{G}]\) of \([\mathbf{G}]\) is a given matrix in \(\mathbb{S}_{n}\), and if this mean value \([\underline{G}]\) corresponds to the only available information, then \(h_{\alpha }(p_{[\mathbf{G}]}) =\int _{\mathbb{S}_{n}}G_{jk}\,p_{[\mathbf{G}]}([G])\,\,d^{S}G -\underline{G}_{jk}\), in which α = 1, …, μ is associated with the couple of indices (j, k) such that 1 ≤ j ≤ k ≤ n and where μ = n(n + 1)∕2.

6.3.2 The Admissible Sets for the pdf

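The following admissible sets \(\mathcal{C}_{\mathrm{ free}}\) and \(\mathcal{C}_{\mathrm{ ad}}\) are introduced for defining the optimization problem resulting from the use of the MaxEnt principle in order to construct the pdf of random matrix \([\mathbf{G}]\). The set \(\mathcal{C}_{\mathrm{ free}}\) is made up of all the pdf \(p: [G]\mapsto p([G])\), defined on \(\mathbb{M}_{n}^{S}(\mathbb{R})\), with support \(\mathbb{S}_{n} \subset \mathbb{M}_{n}^{S}(\mathbb{R})\),

$$\displaystyle{ \mathcal{C}_{\mathrm{ free}} =\{ [G]\mapsto p([G]): \mathbb{M}_{n}^{S}(\mathbb{R}) \rightarrow \mathbb{R}^{+},\ \mathrm{supp}\,p = \mathbb{S}_{n}\}. }$$(8.6)

The set \(\mathcal{C}_{\mathrm{ ad}}\) is the subset of \(\mathcal{C}_{\mathrm{ free}}\) made up of all the pdf p in \(\mathcal{C}_{\mathrm{ free}}\) that satisfy the constraint defined by Eq. (8.5),

$$\displaystyle{ \mathcal{C}_{\mathrm{ ad}} =\{ p \in \mathcal{C}_{\mathrm{ free}},\ \mathbf{h}(p) = \mathbf{0}\}. }$$(8.7)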

6.3.3 Optimization Problem for Constructing the pdf

The use of the MaxEnt principle for constructing the pdf \(p_{[\mathbf{G}]}\) of random matrix \([\mathbf{G}]\) yields the following optimization problem:

$$\displaystyle{ p_{[\mathbf{G}]} =\arg \max _{p\in \mathcal{C}_{\mathrm{ ad}}}\mathcal{E}(p). }$$(8.8)

The optimization problem defined by Eq. (8.8) on set \(\mathcal{C}_{\mathrm{ ad}}\) is transformed into an optimization problem on \(\mathcal{C}_{\mathrm{ free}}\) by introducing the Lagrange multipliers associated with the constraints defined by Eq. (8.5) [58, 60, 107]. This type of construction and the analysis of the existence and the uniqueness of a solution of the optimization problem defined by Eq. (8.8) are detailed in Sect. 10.
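As a sketch of this transformation, assume (hypothetical notation, not introduced in this section) that the constraint is linear in the pdf, \(\mathbf{h}(p) =\int _{\mathbb{S}_{n}}\mathbf{g}([G])\,p([G])\,d^{S}G -\mathbf{f}\), with \(\mathbf{g}\) a given mapping with values in \(\mathbb{R}^{\mu }\) and \(\mathbf{f}\) a given vector in \(\mathbb{R}^{\mu }\). Treating the normalization condition as an additional constraint with multiplier \(\lambda _{0}\), and denoting by \(\boldsymbol{\lambda }\) in \(\mathbb{R}^{\mu }\) the multiplier associated with \(\mathbf{h}\), the standard Lagrangian reads

$$\displaystyle{ \mathcal{L}(p,\lambda _{0},\boldsymbol{\lambda }) = \mathcal{E}(p) - (\lambda _{0} - 1)\Bigl (\int _{\mathbb{S}_{n}}p([G])\,d^{S}G - 1\Bigr )- <\boldsymbol{\lambda },\mathbf{h}(p) >. }$$

Setting to zero its variation with respect to p gives \(-\log p([G]) -\lambda _{0}- <\boldsymbol{\lambda },\mathbf{g}([G]) >= 0\) on \(\mathbb{S}_{n}\), so that a stationary point has the exponential form

$$\displaystyle{ p([G]) = \mathbb{1}_{\mathbb{S}_{n}}([G])\,c_{0}\,\exp \{- <\boldsymbol{\lambda },\mathbf{g}([G]) >\},\quad c_{0} = e^{-\lambda _{0}}, }$$

in which the multipliers are then determined by the normalization condition and by \(\mathbf{h}(p) = \mathbf{0}\). Under these assumptions, this is only the formal first-order condition; existence and uniqueness are the questions deferred to Sect. 10.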

7 A Fundamental Ensemble for the Symmetric Real Random Matrices with a Unit Mean Value

A fundamental ensemble for the symmetric real random matrices is the Gaussian orthogonal ensemble (GOE), which is an ensemble of random matrices \([\mathbf{G}]\), defined on a probability space \((\varTheta,\mathcal{T},\mathcal{P})\), with values in \(\mathbb{M}_{n}^{S}(\mathbb{R})\), defined by a pdf \(p_{[\mathbf{G}]}\) on \(\mathbb{M}_{n}^{S}(\mathbb{R})\) with respect to the volume element \(d^{S}G\), for which the support \(\mathbb{S}_{n}\) of \(p_{[\mathbf{G}]}\) is \(\mathbb{M}_{n}^{S}(\mathbb{R})\), and satisfying the additional properties defined hereinafter.

7.1 Classical Definition [74]

The additional properties of a random matrix \([\mathbf{G}]\) belonging to the GOE are (i) invariance under any real orthogonal transformation, that is to say, for any orthogonal (n × n) real matrix [R] such that \([R]^{T}[R] = [R]\,[R]^{T} = [I_{n}]\), the pdf (with respect to \(d^{S}G\)) of the random matrix \([R]^{T}[\mathbf{G}]\,[R]\) is equal to the pdf \(p_{[\mathbf{G}]}\) of random matrix \([\mathbf{G}]\), and (ii) statistical independence of all the real random variables \(\{\mathbf{G}_{jk},1 \leq j \leq k \leq n\}\).

7.2 Definition by the MaxEnt and Calculation of the pdf

As an alternative to the properties introduced in the classical definition, the additional properties of a random matrix \([\mathbf{G}]\) belonging to the GOE are the following. For all 1 ≤ j ≤ k ≤ n and 1 ≤ j′ ≤ k′ ≤ n,

$$\displaystyle{ E\{\mathbf{G}_{jk}\} = 0,\quad E\{\mathbf{G}_{jk}\,\mathbf{G}_{j'k'}\} =\delta _{jj'}\,\delta _{kk'}\,\frac{\delta ^{2}} {n + 1}\,(1 +\delta _{jk}), }$$(8.9)

in which δ > 0 is a given positive-valued hyperparameter whose interpretation is given below (it should not be confused with the Kronecker symbol \(\delta _{jk}\)). The GOE is then defined using the MaxEnt principle for the available information given by Eq. (8.9), which defines mapping \(\mathbf{h}\) (see Eq. (8.5)). The corresponding ensemble is written as \(\mathrm{GOE}_{\delta }\). In Eq. (8.9), the first equation means that the symmetric random matrix \([\mathbf{G}]\) is centered, and the second one means that its fourth-order covariance tensor is diagonal. Using the MaxEnt principle for random matrix \([\mathbf{G}]\) yields the following unique explicit expression for the pdf \(p_{[\mathbf{G}]}\) with respect to the volume element \(d^{S}G\):

$$\displaystyle{ p_{[\mathbf{G}]}([G]) = c_{\mathbf{G}}\,\exp \Bigl \{-\frac{n + 1} {4\delta ^{2}} \,\mathrm{tr}\{[G]^{2}\}\Bigr \}, }$$(8.10)

in which \(c_{\mathbf{G}}\) is the constant of normalization such that Eq. (8.2) is verified. It can then be deduced that \(\{\mathbf{G}_{jk},1 \leq j \leq k \leq n\}\) are independent Gaussian real random variables such that Eq. (8.9) is verified. Consequently, for all 1 ≤ j ≤ k ≤ n, the pdf (with respect to dg on \(\mathbb{R}\)) of the Gaussian real random variable \(\mathbf{G}_{jk}\) is \(p_{\mathbf{G}_{jk}}(g) = (\sqrt{2\pi }\sigma _{jk})^{-1}\exp \{ - g^{2}/(2\sigma _{jk}^{2})\}\), in which the variance of random variable \(\mathbf{G}_{jk}\) is \(\sigma _{jk}^{2} = (1 +\delta _{jk})\,\delta ^{2}/(n + 1)\).
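The link between the matrix pdf and the entrywise Gaussian pdfs can be checked numerically. The sketch below assumes that the exponent of the matrix pdf is −(n + 1)∕(4δ²) tr{[G]²} (the form consistent with the variances σ²_jk = (1 + δ_jk) δ²∕(n + 1) stated above) and verifies, for an arbitrary symmetric matrix, that this exponent equals the sum over j ≤ k of the independent Gaussian exponents −G²_jk∕(2σ²_jk).

```python
import numpy as np

n, delta = 5, 0.3
rng = np.random.default_rng(2)
A = rng.standard_normal((n, n))
G = 0.5 * (A + A.T)                          # arbitrary symmetric test matrix

# Exponent of the matrix pdf: -(n + 1) / (4 delta^2) * tr{G^2}
log_kernel_matrix = -(n + 1) / (4.0 * delta**2) * np.trace(G @ G)

# sigma_jk^2 = (1 + kron_jk) delta^2 / (n + 1), with kron the Kronecker symbol
kron = np.eye(n)
sigma2 = (1.0 + kron) * delta**2 / (n + 1)

# Sum of the independent Gaussian exponents -g^2 / (2 sigma_jk^2) over j <= k
upper = np.triu_indices(n)
log_kernel_entries = -np.sum(G[upper] ** 2 / (2.0 * sigma2[upper]))

print(log_kernel_matrix, log_kernel_entries)
```

The factor (1 + δ_jk) in the variance compensates for the fact that each off-diagonal entry appears twice in tr{[G]²}, whereas each diagonal entry appears once.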

7.3 Decentering and Interpretation of Hyperparameter δ

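Let \([\mathbf{G}^{\mathrm{GOE}}]\) be the random matrix with values in \(\mathbb{M}_{n}^{S}(\mathbb{R})\) such that \([\mathbf{G}^{\mathrm{GOE}}] = [I_{n}] + [\mathbf{G}]\) in which \([\mathbf{G}]\) is a random matrix belonging to the \(\mathrm{GOE}_{\delta}\) defined before. Therefore \([\mathbf{G}^{\mathrm{GOE}}]\) is not centered and its mean value is \(E\{[\mathbf{G}^{\mathrm{GOE}}]\} = [I_{n}]\). The coefficient of variation of the random matrix \([\mathbf{G}^{\mathrm{GOE}}]\) is defined [109] by

\[
\delta^{\mathrm{GOE}} = \Bigl\{\frac{E\{\Vert[\mathbf{G}^{\mathrm{GOE}}] - [I_{n}]\Vert_{F}^{2}\}}{\Vert[I_{n}]\Vert_{F}^{2}}\Bigr\}^{1/2} = \Bigl\{\frac{1}{n}\,E\{\Vert[\mathbf{G}^{\mathrm{GOE}}] - [I_{n}]\Vert_{F}^{2}\}\Bigr\}^{1/2},
\]

and \(\delta^{\mathrm{GOE}} = \delta\). The parameter \(2\delta /\sqrt{n + 1}\) can be used to specify a scale.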

7.4 Generator of Realizations

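For θ ∈ Θ, any realization \([\mathbf{G}^{\mathrm{GOE}}(\theta)]\) is given by \([\mathbf{G}^{\mathrm{GOE}}(\theta)] = [I_{n}] + [\mathbf{G}(\theta)]\) with, for \(1 \leq j \leq k \leq n\), \(\mathbf{G}_{kj}(\theta) = \mathbf{G}_{jk}(\theta)\) and \(\mathbf{G}_{jk}(\theta) = \sigma_{jk}\,U_{jk}(\theta)\), in which \(\{U_{jk}(\theta)\}_{1\leq j\leq k\leq n}\) is the realization of \(n(n+1)/2\) independent copies of a normalized (centered and unit variance) Gaussian real random variable.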
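The generator described above can be sketched in a few lines of NumPy. This is a minimal illustration, not code from the chapter: the function name `sample_g_goe`, the seed, and the values of `n` and `delta` are assumptions chosen for the example. The last line estimates the coefficient of variation from the samples, which is expected to be close to the prescribed δ.

```python
import numpy as np

def sample_g_goe(n, delta, rng):
    """One realization of [I_n] + [G], with [G] in GOE_delta (hypothetical helper)."""
    # Standard deviations sigma_jk = delta * sqrt((1 + delta_jk) / (n + 1)),
    # where delta_jk is the Kronecker symbol (1 on the diagonal, 0 elsewhere).
    sigma = delta * np.sqrt((1.0 + np.eye(n)) / (n + 1.0))
    # n(n+1)/2 independent normalized Gaussian variables on the upper triangle.
    U = np.triu(rng.standard_normal((n, n)))
    G_upper = sigma * U
    # Symmetrize: G_kj = G_jk (the diagonal is counted once).
    G = G_upper + np.triu(G_upper, k=1).T
    return np.eye(n) + G

rng = np.random.default_rng(0)
n, delta = 6, 0.3
samples = np.array([sample_g_goe(n, delta, rng) for _ in range(5000)])

# Empirical coefficient of variation: sqrt(E{||G_GOE - I||_F^2} / n).
delta_hat = np.sqrt(np.mean(np.sum((samples - np.eye(n)) ** 2, axis=(1, 2))) / n)
```

Nothing in this construction prevents a realization from having negative eigenvalues, which is the limitation discussed in the next subsection.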

7.5 Use of the GOE Ensemble in Uncertainty Quantification

The GOE can then be viewed as a generalization of the Gaussian real random variables to the Gaussian symmetric real random matrices. It can be seen that [G GOE] is with values in \(\mathbb{M}_{n}^{S}(\mathbb{R})\) but is not positive. In addition, for all fixed n,

(i) It has been proved by Weaver [124] and others (see [127] and included references) that the GOE is well adapted for describing universal fluctuations of the eigenfrequencies for generic elastodynamical, acoustical, and elastoacoustical systems, in the high-frequency range corresponding to the asymptotic behavior of the largest eigenfrequencies.

(ii) On the other hand, random matrix \([\mathbf{G}^{\mathrm{GOE}}]\) cannot be used for stochastic modeling of a symmetric real matrix for which a positiveness property and an integrability of its inverse are required. Such a situation is similar to the following one that is well known for the scalar case. Let us consider the scalar equation in u: \((\underline{G} + G)\,u = v\) in which v is a given real number, \(\underline{G}\) is a given positive number, and G is a positive parameter. This equation has a unique solution \(u = (\underline{G} + G)^{-1}v\). Let us assume that G is uncertain and is modeled by a centered random variable \(\mathbf{G}\). We then obtain the random equation in U: \((\underline{G} + \mathbf{G})\,U = v\). If the random solution U must have finite statistical fluctuations, that is to say, U must be a second-order random variable (this is generally required due to physical considerations), then \(\mathbf{G}\) cannot be chosen as a Gaussian second-order centered real random variable. With such a Gaussian stochastic modeling, the solution \(U = (\underline{G} + \mathbf{G})^{-1}v\) is not a second-order random variable: \(E\{U^{2}\} = +\infty\) due to the non-integrability of the function \(G \mapsto (\underline{G} + G)^{-2}\) at point \(G = -\underline{G}\).

8 Fundamental Ensembles for Positive-Definite Symmetric Real Random Matrices

In this section, we present fundamental ensembles of positive-definite symmetric real random matrices, \(\mathrm{SG}_{0}^{+}\), \(\mathrm{SG}_{\varepsilon}^{+}\), \(\mathrm{SG}_{b}^{+}\), and \(\mathrm{SG}_{\lambda}^{+}\), which have been developed and analyzed for constructing other ensembles of random matrices used for the nonparametric stochastic modeling of matrices encountered in uncertainty quantification.

-

The ensemble \(\mathrm{SG}_{0}^{+}\) is a subset of all the positive-definite symmetric real (n × n) random matrices for which the mean value is the unit matrix and for which the lower bound is the zero matrix. This ensemble has been introduced and analyzed in [107, 108] in the context of the development of the nonparametric method of model uncertainties induced by modeling errors in computational dynamics. This ensemble has later been used for constructing other ensembles of random matrices encountered in the nonparametric stochastic modeling of uncertainties [110].

-

The ensemble \(\mathrm{SG}_{\varepsilon}^{+}\) is a subset of all the positive-definite symmetric real (n × n) random matrices for which the mean value is the unit matrix and for which there is an arbitrary lower bound that is a positive-definite matrix controlled by an arbitrary positive number ɛ that can be chosen as small as is desired [114]. In such an ensemble, the lower bound does not correspond to a given matrix that results from a physical model, but allows for assuring a uniform ellipticity for the stochastic modeling of elliptic operators encountered in uncertainty quantification of boundary value problems. The construction of this ensemble is directly derived from ensemble \(\mathrm{SG}_{0}^{+}\).

-

The ensemble SG + b is a subset of all the positive-definite random matrices for which the mean value is either not given or is equal to the unit matrix [28, 50] and for which a lower bound and an upper bound are given positive-definite matrices. In this ensemble, the lower bound and the upper bound are not arbitrary positive-definite matrices, but are given matrices that result from a physical model. The ensemble is interesting for the nonparametric stochastic modeling of tensors and tensor-valued random fields for describing uncertain physical properties in elasticity, poroelasticity, thermics, etc.

-

The ensemble SG + λ , introduced in [76], is a subset of all the positive-definite random matrices for which the mean value is the unit matrix, for which the lower bound is the zero matrix, and for which the second-order moments of diagonal entries are imposed. In the context of the nonparametric stochastic modeling of uncertainties, this ensemble allows for imposing the variances of certain random eigenvalues of stochastic generalized eigenvalue problems, such as the eigenfrequency problem in structural dynamics.

8.1 Ensemble SG0 + of Positive-Definite Random Matrices With a Unit Mean Value

8.1.1 Definition of SG0 + Using the MaxEnt and Expression of the pdf

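The ensemble \(\mathrm{SG}_{0}^{+}\) of random matrices \([\mathbf{G}_{0}]\), defined on the probability space \((\varTheta,\mathcal{T},\mathcal{P})\), with values in the set \(\mathbb{M}_{n}^{+}(\mathbb{R}) \subset \mathbb{M}_{n}^{S}(\mathbb{R})\), is constructed using the MaxEnt with the following available information, which defines mapping h (see Eq. (8.5)):

\[
E\{[\mathbf{G}_{0}]\} = [I_{n}], \qquad E\{\log(\det[\mathbf{G}_{0}])\} = \nu_{G_{0}}, \qquad \vert\nu_{G_{0}}\vert < +\infty.
\]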

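The support of the pdf is the subset \(\mathbb{S}_{n} = \mathbb{M}_{n}^{+}(\mathbb{R})\) of \(\mathbb{M}_{n}^{S}(\mathbb{R})\). This pdf \(p_{[\mathbf{G}_{0}]}\) (with respect to the volume element \(d^{S}G\) on the set \(\mathbb{M}_{n}^{S}(\mathbb{R})\)) verifies the normalization condition and is written as

\[
p_{[\mathbf{G}_{0}]}([G]) = \mathbb{1}_{\mathbb{M}_{n}^{+}(\mathbb{R})}([G])\; c_{G_{0}}\,\bigl(\det[G]\bigr)^{(n+1)\frac{1-\delta^{2}}{2\delta^{2}}}\exp\Bigl\{-\frac{n+1}{2\delta^{2}}\,\mathrm{tr}[G]\Bigr\}. \tag{8.14}
\]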

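The positive parameter δ is such that \(0 < \delta < (n+1)^{1/2}(n+5)^{-1/2}\). It allows the level of statistical fluctuations of random matrix \([\mathbf{G}_{0}]\) to be controlled and is defined by

\[
\delta = \Bigl\{\frac{E\{\Vert[\mathbf{G}_{0}] - [I_{n}]\Vert_{F}^{2}\}}{\Vert[I_{n}]\Vert_{F}^{2}}\Bigr\}^{1/2} = \Bigl\{\frac{1}{n}\,E\{\Vert[\mathbf{G}_{0}] - [I_{n}]\Vert_{F}^{2}\}\Bigr\}^{1/2}. \tag{8.15}
\]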

The normalization positive constant \(c_{G_{0}}\) is such that

where, for all z > 0, \(\varGamma(z) =\int_{0}^{+\infty}t^{z-1}e^{-t}\,dt\). Note that \(\{[\mathbf{G}_{0}]_{jk}, 1 \leq j \leq k \leq n\}\) are dependent random variables. If \((n+1)/\delta^{2}\) is an integer, then this pdf coincides with the Wishart probability distribution [2, 107]. If \((n+1)/\delta^{2}\) is not an integer, then this probability density function can be viewed as a particular case of the Wishart distribution, in infinite dimension, for stochastic processes [104].

8.1.2 Second-Order Moments

Random matrix \([\mathbf{G}_{0}]\) is such that \(E\{\Vert\mathbf{G}_{0}\Vert^{2}\} \leq E\{\Vert\mathbf{G}_{0}\Vert_{F}^{2}\} < +\infty\), which proves that \([\mathbf{G}_{0}]\) is a second-order random variable. The mean value of random matrix \([\mathbf{G}_{0}]\) is the unit matrix \([I_{n}]\). The covariance \(C_{jk,j'k'} = E\{([\mathbf{G}_{0}]_{jk} - [I_{n}]_{jk})\,([\mathbf{G}_{0}]_{j'k'} - [I_{n}]_{j'k'})\}\) of the real-valued random variables \([\mathbf{G}_{0}]_{jk}\) and \([\mathbf{G}_{0}]_{j'k'}\) is \(C_{jk,j'k'} = \delta ^{2}(n + 1)^{-1}{\bigl \{\delta _{j'k}\,\delta _{jk'} +\delta _{jj'}\,\delta _{kk'}\bigr \}}\). The variance of real-valued random variable \([\mathbf{G}_{0}]_{jk}\) is \(\sigma_{jk}^{2} = C_{jk,jk} = \delta^{2}(n+1)^{-1}(1+\delta_{jk})\).
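As a short consistency check (a derivation added here, using only the variances stated above), summing the variances over all the entries recovers the hyperparameter δ. There are n diagonal entries with variance \(2\delta^{2}/(n+1)\) and \(n(n-1)\) off-diagonal entries with variance \(\delta^{2}/(n+1)\), so that

\[
E\{\Vert[\mathbf{G}_{0}] - [I_{n}]\Vert_{F}^{2}\} = \sum_{j=1}^{n}\sum_{k=1}^{n}\sigma_{jk}^{2} = \frac{\delta^{2}}{n+1}\,\bigl\{2n + n(n-1)\bigr\} = n\,\delta^{2},
\qquad
\frac{E\{\Vert[\mathbf{G}_{0}] - [I_{n}]\Vert_{F}^{2}\}}{\Vert[I_{n}]\Vert_{F}^{2}} = \frac{n\,\delta^{2}}{n} = \delta^{2}.
\]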

8.1.3 Invariance of Ensemble SG0 + Under Real Orthogonal Transformations

Ensemble SG +0 is invariant under real orthogonal transformations. This means that the pdf (with respect to d S G) of the random matrix [R]T[G 0] [R] is equal to the pdf (with respect to d S G) of random matrix [G 0] for any real orthogonal matrix [R] belonging to \(\mathbb{M}_{n}(\mathbb{R})\).

8.1.4 Invertibility and Convergence Property When Dimension Goes to Infinity

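Since \([\mathbf{G}_{0}]\) is a positive-definite random matrix, \([\mathbf{G}_{0}]\) is invertible almost surely, which means that for \(\mathcal{P}\)-almost all θ in Θ, the inverse \([\mathbf{G}_{0}(\theta)]^{-1}\) of the matrix \([\mathbf{G}_{0}(\theta)]\) exists. This last property does not guarantee that \([\mathbf{G}_{0}]^{-1}\) is a second-order random variable, that is to say, that \(E\{\Vert [\mathbf{G}_{0}]^{-1}\Vert _{F}^{2}\} =\int _{\varTheta }\Vert [\mathbf{G}_{0}(\theta )]^{-1}\Vert _{F}^{2}\,d\mathcal{P}(\theta )\) is finite. However, it is proved [108] that

\[
E\{\Vert[\mathbf{G}_{0}]^{-1}\Vert^{2}\} \leq E\{\Vert[\mathbf{G}_{0}]^{-1}\Vert_{F}^{2}\} < +\infty, \tag{8.17}
\]

and that the following fundamental property holds:

\[
\forall\, n \geq 2, \qquad E\{\Vert[\mathbf{G}_{0}]^{-1}\Vert^{2}\} \leq C_{\delta} < +\infty, \tag{8.18}
\]

in which \(C_{\delta}\) is a positive finite constant that is independent of n but that depends on δ. This means that \(n \mapsto E\{\Vert[\mathbf{G}_{0}]^{-1}\Vert^{2}\}\) is a bounded function from \(\{n \geq 2\}\) into \(\mathbb{R}^{+}\).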

It should be noted that the invertibility property defined by Eqs. (8.17) and (8.18) is due to the constraint \(E\{\log (\det [\mathbf{G}_{0}])\} =\nu _{G_{0}}\) with \(\vert \nu _{G_{0}}\vert < +\infty \). This is the reason why the truncated Gaussian distribution restricted to \(\mathbb{M}_{n}^{+}(\mathbb{R})\) does not satisfy this invertibility condition that is required for stochastic modeling in many cases.

8.1.5 Probability Density Function of the Random Eigenvalues

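Let \(\boldsymbol{\Lambda } = (\varLambda _{1},\ldots,\varLambda _{n})\) be the positive-valued random eigenvalues of the random matrix \([\mathbf{G}_{0}]\) belonging to ensemble \(\mathrm{SG}_{0}^{+}\), such that \([\mathbf{G}_{0}]\,\boldsymbol{\Phi }^{j} =\varLambda _{j}\,\boldsymbol{\Phi }^{j}\) in which \(\boldsymbol{\Phi }^{j}\) is the random eigenvector associated with the random eigenvalue \(\varLambda_{j}\). The joint probability density function \(p_{\boldsymbol{\Lambda }}(\boldsymbol{\lambda }) = p_{\varLambda _{1},\ldots,\varLambda _{n}}(\lambda _{1},\ldots,\lambda _{n})\) with respect to \(d\boldsymbol{\lambda } = d\lambda _{1}\ldots d\lambda _{n}\) of \(\boldsymbol{\Lambda } = (\varLambda _{1},\ldots,\varLambda _{n})\) is written [107] as

\[
p_{\boldsymbol{\Lambda}}(\boldsymbol{\lambda}) = \mathbb{1}_{[0,+\infty[}(\lambda_{1})\cdots\mathbb{1}_{[0,+\infty[}(\lambda_{n})\; c_{\varLambda}\,\Bigl\{\prod_{j=1}^{n}\lambda_{j}\Bigr\}^{(n+1)\frac{1-\delta^{2}}{2\delta^{2}}}\Bigl\{\prod_{\alpha<\beta}\vert\lambda_{\beta}-\lambda_{\alpha}\vert\Bigr\}\exp\Bigl\{-\frac{n+1}{2\delta^{2}}\sum_{k=1}^{n}\lambda_{k}\Bigr\},
\]

in which \(c_{\varLambda}\) is a constant of normalization defined by the equation \(\int_{0}^{+\infty}\!\!\cdots\int_{0}^{+\infty} p_{\boldsymbol{\Lambda }}(\boldsymbol{\lambda })\,d\boldsymbol{\lambda } = 1\). All the random eigenvalues \(\varLambda_{j}\) of random matrix \([\mathbf{G}_{0}]\) in \(\mathrm{SG}_{0}^{+}\) are positive almost surely, while this assertion is not true for the random eigenvalues \(\varLambda_{j}^{\mathrm{GOE}}\) of the random matrix \([\mathbf{G}^{\mathrm{GOE}}] = [I_{n}] + [\mathbf{G}]\) in which \([\mathbf{G}]\) is a random matrix belonging to the \(\mathrm{GOE}_{\delta}\) ensemble.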

8.1.6 Algebraic Representation and Generator of Realizations

The generator of realizations of random matrix \([\mathbf{G}_{0}]\) whose pdf is defined by Eq. (8.14) is directly deduced from the following algebraic representation of \([\mathbf{G}_{0}]\) in \(\mathrm{SG}_{0}^{+}\). Random matrix \([\mathbf{G}_{0}]\) is written as \([\mathbf{G}_{0}] = [\mathbf{L}]^{T}[\mathbf{L}]\) in which \([\mathbf{L}]\) is an upper triangular real (n × n) random matrix such that:

(i) The random variables \(\{[\mathbf{L}]_{jk}, j \leq k\}\) are independent;

(ii) For j < k, the real-valued random variable \([\mathbf{L}]_{jk}\) is written as \([\mathbf{L}]_{jk} = \sigma_{n}U_{jk}\) in which \(\sigma_{n} = \delta\,(n+1)^{-1/2}\) and where \(U_{jk}\) is a real-valued Gaussian random variable with zero mean and variance equal to 1;

(iii) For j = k, the positive-valued random variable \([\mathbf{L}]_{jj}\) is written as \([\mathbf{L}]_{jj} =\sigma _{n}\sqrt{2V _{j}}\) in which \(\sigma_{n}\) is defined before and where \(V_{j}\) is a positive-valued gamma random variable whose pdf is

\[
p_{V_{j}}(v) = \mathbb{1}_{\mathbb{R}^{+}}(v)\,\frac{1}{\varGamma(a_{j})}\,v^{a_{j}-1}\,e^{-v},
\]

in which \(a_{j} = \frac{n+1} {2\delta ^{2}} + \frac{1-j} {2}\).

It should be noted that the random variables \(\{U_{jk}\}_{1\leq j<k\leq n}\) and \(\{V_{j}\}_{1\leq j\leq n}\) are statistically independent, and that the pdf of each diagonal element \([\mathbf{L}]_{jj}\) of random matrix \([\mathbf{L}]\) depends on the rank j of the entry.

For θ ∈ Θ, any realization \([\mathbf{G}_{0}(\theta)]\) is then deduced from the algebraic representation given before, using the realization \(\{U_{jk}(\theta)\}_{1\leq j<k\leq n}\) of \(n(n-1)/2\) independent copies of a normalized (zero mean and unit variance) Gaussian real random variable and using the realization \(\{V_{j}(\theta)\}_{1\leq j\leq n}\) of the n independent positive-valued gamma random variables \(V_{j}\) with parameters \(a_{j}\).
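The algebraic representation above translates almost line by line into NumPy. The sketch below is illustrative only: the function name `sample_g0`, the seed, and the numerical values are assumptions, and the gamma variables are taken with unit scale (shape \(a_{j}\)), which is the convention under which the mean of the realizations is the unit matrix.

```python
import numpy as np

def sample_g0(n, delta, rng):
    """One realization of [G_0] = [L]^T [L] (hypothetical helper name)."""
    if not 0.0 < delta < np.sqrt((n + 1.0) / (n + 5.0)):
        raise ValueError("delta must satisfy 0 < delta < sqrt((n+1)/(n+5))")
    sigma_n = delta / np.sqrt(n + 1.0)
    # (ii) strictly upper part: L_jk = sigma_n * U_jk, U_jk ~ N(0, 1), j < k.
    L = sigma_n * np.triu(rng.standard_normal((n, n)), k=1)
    # (iii) diagonal: L_jj = sigma_n * sqrt(2 V_j), V_j ~ Gamma(shape a_j, scale 1),
    # with a_j = (n + 1) / (2 delta^2) + (1 - j) / 2 for j = 1, ..., n.
    j = np.arange(1, n + 1)
    a = (n + 1.0) / (2.0 * delta**2) + (1.0 - j) / 2.0
    L[np.diag_indices(n)] = sigma_n * np.sqrt(2.0 * rng.gamma(shape=a, scale=1.0))
    return L.T @ L

rng = np.random.default_rng(0)
n, delta = 5, 0.3
samples = np.array([sample_g0(n, delta, rng) for _ in range(5000)])

mean_hat = samples.mean(axis=0)   # expected to be close to the unit matrix
delta_hat = np.sqrt(np.mean(np.sum((samples - np.eye(n)) ** 2, axis=(1, 2))) / n)
min_eig = min(np.linalg.eigvalsh(G).min() for G in samples[:200])  # positive by construction
```

Because each realization is built as \([L]^{T}[L]\) with a positive diagonal in \([L]\), positive definiteness holds for every sample rather than only on average.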

8.2 Ensemble SG ɛ + of Positive-Definite Random Matrices with a Unit Mean Value and an Arbitrary Positive-Definite Lower Bound

The ensemble SG + ɛ is a subset of all the positive-definite random matrices for which the mean value is the unit matrix and for which there is an arbitrary lower bound that is a positive-definite matrix controlled by an arbitrary positive number ɛ that can be chosen as small as is desired. In this ensemble, the lower bound does not correspond to a given matrix that results from a physical model.

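Ensemble \(\mathrm{SG}_{\varepsilon}^{+}\) is the set of the random matrices \([\mathbf{G}]\) with values in \(\mathbb{M}_{n}^{+}(\mathbb{R})\), which are written as

\[
[\mathbf{G}] = \frac{1}{1+\varepsilon}\,\bigl\{[\mathbf{G}_{0}] + \varepsilon\,[I_{n}]\bigr\},
\]

in which \([\mathbf{G}_{0}]\) is a random matrix in \(\mathrm{SG}_{0}^{+}\), with mean value \(E\{[\mathbf{G}_{0}]\} = [I_{n}]\), and for which the level of statistical fluctuations is controlled by the hyperparameter δ defined by Eq. (8.15) and where ɛ is any positive number (note that for ɛ = 0, \(\mathrm{SG}_{\varepsilon}^{+} = \mathrm{SG}_{0}^{+}\) and then \([\mathbf{G}] = [\mathbf{G}_{0}]\)). This definition shows that, almost surely,

\[
[\mathbf{G}] - [G_{\ell}] = \frac{1}{1+\varepsilon}\,[\mathbf{G}_{0}] > 0,
\]

in which the lower bound is the positive-definite matrix \([G_{\ell}] = c_{\varepsilon}[I_{n}]\) with \(c_{\varepsilon} = \varepsilon/(1+\varepsilon)\). For all ɛ > 0, we have

\[
E\{[\mathbf{G}]\} = [I_{n}], \qquad E\{\log(\det([\mathbf{G}] - [G_{\ell}]))\} = \nu_{G_{\varepsilon}},
\]

with \(\nu _{G_{\varepsilon }} =\nu _{G_{0}} - n\log (1+\varepsilon )\). The coefficient of variation \(\delta_{G}\) of random matrix \([\mathbf{G}]\), defined by

\[
\delta_{G} = \Bigl\{\frac{E\{\Vert[\mathbf{G}] - [I_{n}]\Vert_{F}^{2}\}}{\Vert[I_{n}]\Vert_{F}^{2}}\Bigr\}^{1/2}, \tag{8.24}
\]

is such that

\[
\delta_{G} = \frac{\delta}{1+\varepsilon},
\]

where δ is the hyperparameter defined by Eq. (8.15).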

8.2.1 Generator of Realizations

For θ ∈ Θ, any realization [G(θ)] of [G] is given by \([\mathbf{G}(\theta )] = \frac{1} {1+\varepsilon }\{[\mathbf{G}_{0}(\theta )] +\varepsilon \, [I_{n}]\}\) in which [G 0(θ)] is a realization of random matrix [G 0] constructed as explained before.
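The realization formula above is a one-line affine map. The sketch below applies it to a stand-in matrix and checks numerically that the smallest eigenvalue of the result stays above \(c_{\varepsilon} = \varepsilon/(1+\varepsilon)\). The function name `shift_to_sg_eps` and the test matrix are assumptions for illustration; in practice `G0` would come from the generator of \([\mathbf{G}_{0}]\).

```python
import numpy as np

def shift_to_sg_eps(G0, eps):
    """Map a realization of [G_0] to [G] = ([G_0] + eps [I_n]) / (1 + eps)."""
    n = G0.shape[0]
    return (G0 + eps * np.eye(n)) / (1.0 + eps)

# Stand-in for a realization of [G_0]: any symmetric positive-definite matrix.
rng = np.random.default_rng(1)
B = rng.standard_normal((4, 4))
G0 = B.T @ B

eps = 1e-3
G = shift_to_sg_eps(G0, eps)
c_eps = eps / (1.0 + eps)
min_eig = np.linalg.eigvalsh(G).min()   # bounded below by c_eps
```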

8.2.2 Lower Bound and Invertibility

For all ɛ > 0, the bilinear form b(X, Y) = (([G] X, Y)) on \(\mathcal{L}_{n}^{2} \times \mathcal{L}_{n}^{2}\) is such that

Random matrix \([\mathbf{G}]\) is invertible almost surely and its inverse \([\mathbf{G}]^{-1}\) is a second-order random variable, \(E\{\Vert[\mathbf{G}]^{-1}\Vert_{F}^{2}\} < +\infty\).

8.3 Ensemble SG b + of Positive-Definite Random Matrices with Given Lower and Upper Bounds and with or without Given Mean Value

The ensemble SG + b is a subset of all the positive-definite random matrices for which the mean value is either the unit matrix or is not given and for which a lower bound and an upper bound are given positive-definite matrices. In this ensemble, the lower bound and the upper bound are not arbitrary positive-definite matrices, but are given matrices that result from a physical model.

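The ensemble \(\mathrm{SG}_{b}^{+}\) is constituted of random matrices \([\mathbf{G}_{b}]\), defined on the probability space \((\varTheta,\mathcal{T},\mathcal{P})\), with values in the set \(\mathbb{M}_{n}^{+}(\mathbb{R}) \subset \mathbb{M}_{n}^{S}(\mathbb{R})\), such that

\[
[G_{\ell}] < [\mathbf{G}_{b}] < [G_{u}],
\]

in which the lower bound \([G_{\ell}]\) and the upper bound \([G_{u}]\) are given matrices in \(\mathbb{M}_{n}^{+}(\mathbb{R})\) such that \([G_{\ell}] < [G_{u}]\). The support of the pdf \(p_{[\mathbf{G}_{b}]}\) (with respect to the volume element \(d^{S}G\) on \(\mathbb{M}_{n}^{S}(\mathbb{R})\)) of random matrix \([\mathbf{G}_{b}]\) is the subset \(\mathbb{S}_{n}\) of \(\mathbb{M}_{n}^{+}(\mathbb{R}) \subset \mathbb{M}_{n}^{S}(\mathbb{R})\) such that

\[
\mathbb{S}_{n} = \bigl\{\,[G] \in \mathbb{M}_{n}^{+}(\mathbb{R}) \;\vert\; [G_{\ell}] < [G] < [G_{u}]\,\bigr\}.
\]

The available information associated with the presence of the lower and upper bounds is defined by

\[
E\{\log(\det([\mathbf{G}_{b}] - [G_{\ell}]))\} = \nu_{\ell}, \qquad E\{\log(\det([G_{u}] - [\mathbf{G}_{b}]))\} = \nu_{u}, \tag{8.28}
\]

in which \(\nu_{\ell}\) and \(\nu_{u}\) are two constants such that \(\vert\nu_{\ell}\vert < +\infty\) and \(\vert\nu_{u}\vert < +\infty\). The mean value \([\underline{G}_{b}] = E\{[\mathbf{G}_{b}]\}\) is given by

\[
[\underline{G}_{b}] = \int_{\mathbb{S}_{n}} [G]\; p_{[\mathbf{G}_{b}]}([G])\, d^{S}G. \tag{8.29}
\]

The positive parameter \(\delta_{b}\), which allows the level of statistical fluctuations of random matrix \([\mathbf{G}_{b}]\) to be controlled, is defined by

\[
\delta_{b} = \Bigl\{\frac{E\{\Vert[\mathbf{G}_{b}] - [\underline{G}_{b}]\Vert_{F}^{2}\}}{\Vert[\underline{G}_{b}]\Vert_{F}^{2}}\Bigr\}^{1/2}. \tag{8.30}
\]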

8.3.1 Definition of SG b + for a Non-given Mean Value Using the MaxEnt

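The mean value \([\underline{G}_{b}]\) of random matrix \([\mathbf{G}_{b}]\) is not given and therefore does not constitute available information. In this case, the ensemble \(\mathrm{SG}_{b}^{+}\) is constructed using the MaxEnt with the available information given by Eq. (8.28) (that defines mapping h introduced in Eq. (8.5) and rewritten for \(p_{[\mathbf{G}_{b}]}\)). The pdf \(p_{[\mathbf{G}_{b}]}\) is the generalized matrix-variate beta-type I pdf [55]:

\[
p_{[\mathbf{G}_{b}]}([G]) = \mathbb{1}_{\mathbb{S}_{n}}([G])\; c_{G_{b}}\,\bigl(\det([G] - [G_{\ell}])\bigr)^{\alpha-(n+1)/2}\,\bigl(\det([G_{u}] - [G])\bigr)^{\beta-(n+1)/2}, \tag{8.31}
\]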

in which \(c_{G_{b}}\) is the normalization constant and where α > (n − 1)∕2 and β > (n − 1)∕2 are two real parameters that are unknown and that depend on the two unknown constants ν ℓ and ν u . The mean value [G b ] must be calculated using Eqs. (8.29) and (8.31), and the hyperparameter δ b , which characterizes the level of statistical fluctuations, must be calculated using Eqs. (8.30) and (8.31). Consequently, [G b ] and δ b depend on α and β. It can be seen that, for n ≥ 2, the two scalar parameters α and β are not sufficient for identifying the mean value [G b ] that is in \(\mathbb{S}_{n}\) and the hyperparameter δ b . An efficient algorithm for generating realizations of [G b ] can be found in [28].

8.3.2 Definition of SG b + for a Given Mean Value Using the MaxEnt

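The mean value \([\underline{G}_{b}]\) of random matrix \([\mathbf{G}_{b}]\) is given such that \([G_{\ell}] < [\underline{G}_{b}] < [G_{u}]\). In this case, the ensemble \(\mathrm{SG}_{b}^{+}\) is constructed using the MaxEnt with the available information given by Eqs. (8.28) and (8.29), which define mapping h introduced in Eq. (8.5). Following the construction proposed in [50], the following change of variable is introduced:

\[
[\mathbf{A}_{0}] = ([\mathbf{G}_{b}] - [G_{\ell}])^{-1} - [G_{\ell u}]^{-1}, \qquad [G_{\ell u}] = [G_{u}] - [G_{\ell}] \in \mathbb{M}_{n}^{+}(\mathbb{R}). \tag{8.32}
\]

This equation shows that the random matrix \([\mathbf{A}_{0}]\) is with values in \(\mathbb{M}_{n}^{+}(\mathbb{R})\). Introducing the mean value \([\underline{A}_{0}] = E\{[\mathbf{A}_{0}]\}\) that belongs to \(\mathbb{M}_{n}^{+}(\mathbb{R})\) and its Cholesky factorization \([\underline{A}_{0}] = [\underline{L}_{0}]^{T}[\underline{L}_{0}]\) in which \([\underline{L}_{0}]\) is an upper triangular real (n × n) matrix, random matrix \([\mathbf{A}_{0}]\) can be written as \([\mathbf{A}_{0}] = [\underline{L}_{0}]^{T}[\mathbf{G}_{0}]\,[\underline{L}_{0}]\) with \([\mathbf{G}_{0}]\) that belongs to ensemble \(\mathrm{SG}_{0}^{+}\) depending on the hyperparameter δ defined by Eq. (8.15). The inversion of Eq. (8.32) yields

\[
[\mathbf{G}_{b}] = [G_{\ell}] + \bigl([\mathbf{A}_{0}] + [G_{\ell u}]^{-1}\bigr)^{-1}.
\]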

It can then be seen that for any arbitrary small ɛ 0 > 0 (for instance, ɛ 0 = 10−6), we have

For δ and \([\underline{L}_{0}]\) fixed, for θ in Θ, the realization \([\mathbf{G}_{0}(\theta)]\) of random matrix \([\mathbf{G}_{0}]\) in \(\mathrm{SG}_{0}^{+}\) is constructed using the generator of \([\mathbf{G}_{0}]\), which has been detailed before. The mean value \(E\{[\mathbf{G}_{b}]\}\) and the hyperparameter \(\delta_{b}\) defined by Eq. (8.30) are estimated with the corresponding realization \([\mathbf{G}_{b}(\theta )] = [G_{\ell}] + \left ([\underline{L}_{0}]^{T}\,[\mathbf{G}_{0}(\theta )]\,[\underline{L}_{0}] + [G_{\ell u}]^{-1}\right )^{-1}\) of random matrix \([\mathbf{G}_{b}]\). Let \(\mathcal{U}_{L}\) be the set of all the upper triangular real (n × n) matrices \([\underline{L}_{0}]\) with positive diagonal entries. For a fixed value of δ, and for a given target value of \([\underline{G}_{b}]\), the value \([\underline{L}_{0}^{\mathrm{opt}}]\) of \([\underline{L}_{0}]\) is calculated by solving the optimization problem

\[
[\underline{L}_{0}^{\mathrm{opt}}] = \arg\min_{[\underline{L}_{0}] \in \mathcal{U}_{L}} \mathcal{F}([\underline{L}_{0}]),
\]

in which the cost function \(\mathcal{F}\) is deduced from Eq. (8.34) and is written as
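The realization formula for \([\mathbf{G}_{b}(\theta)]\) given in this subsection can be sketched as follows. This is an illustration under stated assumptions, not the algorithm of [50]: the function name `gb_realization`, the bounds, and the stand-in matrices are hypothetical, and \([G_{\ell u}]\) is taken to be \([G_{u}] - [G_{\ell}]\), which is the choice that makes the result lie strictly between the two bounds. The optimization over \([\underline{L}_{0}]\) is not shown.

```python
import numpy as np

def gb_realization(G0, L0, G_low, G_up):
    """[G_b] = [G_l] + ([L0]^T [G0] [L0] + [G_lu]^{-1})^{-1} (hypothetical helper)."""
    G_lu = G_up - G_low                      # assumption: [G_lu] = [G_u] - [G_l]
    A0 = L0.T @ G0 @ L0                      # positive definite when G0 is
    return G_low + np.linalg.inv(A0 + np.linalg.inv(G_lu))

rng = np.random.default_rng(2)
n = 3
G_low, G_up = 0.5 * np.eye(n), 2.0 * np.eye(n)   # illustrative bounds
B = rng.standard_normal((n, n))
G0 = B.T @ B + 0.1 * np.eye(n)                   # stand-in for a realization of [G_0]
# Upper triangular matrix with positive (unit) diagonal, i.e. an element of U_L.
L0 = np.triu(rng.standard_normal((n, n)), k=1) + np.eye(n)

Gb = gb_realization(G0, L0, G_low, G_up)
gap_low = np.linalg.eigvalsh(Gb - G_low).min()   # positive: [G_l] < [G_b]
gap_up = np.linalg.eigvalsh(G_up - Gb).min()     # positive: [G_b] < [G_u]
```

In an identification loop, realizations produced this way would be averaged to estimate \(E\{[\mathbf{G}_{b}]\}\) for each candidate \([\underline{L}_{0}]\).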

8.4 Ensemble SG λ + of Positive-Definite Random Matrices with a Unit Mean Value and Imposed Second-Order Moments

The ensemble SG + λ is a subset of all the positive-definite random matrices for which the mean value is the unit matrix, for which the lower bound is the zero matrix, and for which the second-order moments of diagonal entries are imposed. In the context of nonparametric stochastic modeling of uncertainties, this ensemble allows for imposing the variances of certain random eigenvalues of stochastic generalized eigenvalue problems.

8.4.1 Definition of SG λ + Using the MaxEnt and Expression of the pdf

The ensemble SG + λ of random matrices [G λ ], defined on the probability space \((\varTheta,\mathcal{T},\mathcal{P})\), with values in the set \(\mathbb{M}_{n}^{+}(\mathbb{R}) \subset \mathbb{M}_{n}^{S}(\mathbb{R})\), is constructed using the MaxEnt with the following available information, which defines mapping h (see Eq. (8.5)):

in which \(\vert \nu _{G_{\lambda }}\vert < +\infty \), with m < n, and where \(s_{1}^{2},\ldots,s_{m}^{2}\) are m given positive constants. The pdf \(p_{[\mathbf{G}_{\lambda }]}\) (with respect to the volume element \(d^{S}G\) on the set \(\mathbb{M}_{n}^{S}(\mathbb{R})\)) has a support that is the subset \(\mathbb{S}_{n} = \mathbb{M}_{n}^{+}(\mathbb{R})\) of \(\mathbb{M}_{n}^{S}(\mathbb{R})\). The pdf verifies the normalization condition and is written [76] as

in which \(C_{G_{\lambda }}\) is the normalization constant and α is a parameter such that \(n + 2\alpha - 1 > 0\), where \([\mu]\) is a diagonal real (n × n) matrix such that \(\mu_{jj} = (n + 2\alpha - 1)/2\) for j > m and where \(\mu_{11},\ldots,\mu_{mm}\) and \(\tau_{1},\ldots,\tau_{m}\) are 2m positive parameters, which are expressed as a function of α and \(s_{1}^{2},\ldots,s_{m}^{2}\). The level of statistical fluctuations of random matrix \([\mathbf{G}_{\lambda}]\) is controlled by the positive hyperparameter δ that is defined by

and where δ is such that

8.4.2 Generator of Realizations

For given m < n, δ, and \(s_{1}^{2},\ldots,s_{m}^{2}\), the explicit generator of realizations of random matrix \([\mathbf{G}_{\lambda}]\) whose pdf is defined by Eq. (8.38) is detailed in [76].

9 Ensembles of Random Matrices for the Nonparametric Method in Uncertainty Quantification

In this section, we present the ensembles \(\mathrm{SE}_{0}^{+}\), \(\mathrm{SE}_{\varepsilon}^{+}\), \(\mathrm{SE}^{+0}\), \(\mathrm{SE}^{\mathrm{rect}}\), and \(\mathrm{SE}^{\mathrm{HT}}\) of random matrices, which result from some transformations of the fundamental ensembles introduced before. These ensembles of random matrices are useful for performing the nonparametric stochastic modeling of matrices encountered in uncertainty quantification of computational models in structural dynamics, acoustics, vibroacoustics, fluid-structure interaction, unsteady aeroelasticity, soil-structure interaction, etc., but also in solid mechanics (elasticity tensors of random elastic continuous media, matrix-valued random fields for heterogeneous microstructures of materials), thermics (thermal conductivity tensor), electromagnetism (dielectric tensor), etc.

The ensembles of random matrices, devoted to the construction of nonparametric stochastic models of matrices encountered in uncertainty quantification, are briefly summarized below and then are mathematically detailed:

-

The ensemble \(\mathrm{SE}_{0}^{+}\) is a subset of all the positive-definite random matrices for which the mean values are given and differ from the unit matrix (unlike ensemble \(\mathrm{SG}_{0}^{+}\)) and for which the lower bound is the zero matrix. This ensemble is constructed as a transformation of ensemble \(\mathrm{SG}_{0}^{+}\) that keeps all the mathematical properties of ensemble \(\mathrm{SG}_{0}^{+}\) such as the positiveness.

-

The ensemble \(\mathrm{SE}_{\varepsilon}^{+}\) is a subset of all the positive-definite random matrices for which the mean value is a given positive-definite matrix and for which there is an arbitrary lower bound that is a positive-definite matrix controlled by an arbitrary positive number ɛ that can be chosen as small as is desired. In this ensemble, the lower bound does not correspond to a given matrix that results from a physical model. This ensemble is constructed as a transformation of ensemble \(\mathrm{SG}_{\varepsilon}^{+}\) and has the same area of use as ensemble \(\mathrm{SE}_{0}^{+}\) for stochastic modeling in uncertainty quantification, with the difference that a lower bound is required in the stochastic modeling for mathematical reasons.

-

The ensemble SE+0 is similar to ensemble SG +0 but is constituted of semipositive-definite (m × m) real random matrices for which the mean value is a given semipositive-definite matrix. This ensemble is constructed as a transformation of positive-definite (n × n) real random matrices belonging to ensemble SG +0 , with n < m, in which the dimension of the null space is m − n. Such an ensemble is useful for the nonparametric stochastic modeling of uncertainties such as those encountered in structural dynamics in presence of rigid body displacements.

-

The ensemble SErect is an ensemble of rectangular random matrices for which the mean value is a given rectangular matrix and which is constructed using ensemble SE + ɛ . This ensemble is useful for the nonparametric stochastic modeling of some uncertain coupling operators encountered, for instance, in fluid-structure interaction and in vibroacoustics.

-

The ensemble SEHT is a set of random functions with values in the set of the complex matrices such that the real part and the imaginary part are positive-definite random matrices that are constrained by an underlying Hilbert transform induced by a causality property. This ensemble allows for a nonparametric stochastic modeling in uncertainty quantification encountered, for instance, in linear viscoelasticity.

9.1 Ensemble SE0 + of Positive-Definite Random Matrices with a Given Mean Value

The ensemble \(\mathrm{SE}_{0}^{+}\) is a subset of all the positive-definite random matrices for which the mean values are given and differ from the unit matrix (unlike ensemble \(\mathrm{SG}_{0}^{+}\)). This ensemble is constructed as a transformation of ensemble \(\mathrm{SG}_{0}^{+}\) that keeps all the mathematical properties of ensemble \(\mathrm{SG}_{0}^{+}\) such as the positiveness [107].

9.1.1 Definition of Ensemble SE0 +

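Any random matrix \([\mathbf{A}_{0}]\) in ensemble \(\mathrm{SE}_{0}^{+}\) is defined on the probability space \((\varTheta,\mathcal{T},\mathcal{P})\), is with values in \(\mathbb{M}_{n}^{+}(\mathbb{R}) \subset \mathbb{M}_{n}^{S}(\mathbb{R})\), and is such that

\[
E\{[\mathbf{A}_{0}]\} = [\underline{A}], \qquad E\{\log(\det[\mathbf{A}_{0}])\} = \nu_{A_{0}}, \qquad \vert\nu_{A_{0}}\vert < +\infty, \tag{8.41}
\]

in which the mean value \([\underline{A}]\) is a given matrix in \(\mathbb{M}_{n}^{+}(\mathbb{R})\).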

9.1.2 Expression of [A0] as a Transformation of [G0] and Generator of Realizations

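Positive-definite mean matrix \([\underline{A}]\) is factorized (Cholesky) as

\[
[\underline{A}] = [L_{A}]^{T}\,[L_{A}],
\]

in which \([L_{A}]\) is an upper triangular matrix in \(\mathbb{M}_{n}(\mathbb{R})\). Taking into account Eq. (8.41) and the definition of ensemble \(\mathrm{SG}_{0}^{+}\), any random matrix \([\mathbf{A}_{0}]\) in ensemble \(\mathrm{SE}_{0}^{+}\) is written as

\[
[\mathbf{A}_{0}] = [L_{A}]^{T}\,[\mathbf{G}_{0}]\,[L_{A}], \tag{8.43}
\]

in which the random matrix \([\mathbf{G}_{0}]\) belongs to ensemble \(\mathrm{SG}_{0}^{+}\), with mean value \(E\{[\mathbf{G}_{0}]\} = [I_{n}]\), and for which the level of statistical fluctuations is controlled by the hyperparameter δ defined by Eq. (8.15).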

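Generator of realizations. For all θ in Θ, the realization \([\mathbf{G}_{0}(\theta)]\) of \([\mathbf{G}_{0}]\) is constructed as explained before. The realization \([\mathbf{A}_{0}(\theta)]\) of random matrix \([\mathbf{A}_{0}]\) is calculated by \([\mathbf{A}_{0}(\theta)] = [L_{A}]^{T}[\mathbf{G}_{0}(\theta)]\,[L_{A}]\).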
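A minimal NumPy sketch of this transformation is given below; the function name `a0_realization` and the numerical matrices are assumptions for illustration. One practical detail: `numpy.linalg.cholesky` returns the lower triangular factor C with \(C\,C^{T}\) equal to the input, so the upper triangular factor \([L_{A}]\) used in the text is its transpose.

```python
import numpy as np

def a0_realization(G0, A_mean):
    """[A_0] = [L_A]^T [G_0] [L_A], with A_mean = [L_A]^T [L_A] (hypothetical helper)."""
    # NumPy gives the lower factor C with A_mean = C C^T, hence [L_A] = C^T.
    L_A = np.linalg.cholesky(A_mean).T
    return L_A.T @ G0 @ L_A

A_mean = np.array([[4.0, 1.0],
                   [1.0, 3.0]])             # illustrative positive-definite mean
G0 = np.array([[1.10, 0.05],
               [0.05, 0.90]])               # stand-in for a realization of [G_0]

A0 = a0_realization(G0, A_mean)
A0_at_identity = a0_realization(np.eye(2), A_mean)   # reproduces the mean matrix
```

Since \(E\{[\mathbf{G}_{0}]\} = [I_{n}]\), the linearity of the map is what guarantees that the mean of \([\mathbf{A}_{0}]\) is the prescribed matrix.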

Remark 1.

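It should be noted that the mean matrix \([\underline{A}]\) could also be written as \([\underline{A}] = [\underline{A}]^{1/2}\,[\underline{A}]^{1/2}\) in which \([\underline{A}]^{1/2}\) is the square root of \([\underline{A}]\) in \(\mathbb{M}_{n}^{+}(\mathbb{R})\), and the random matrix \([\mathbf{A}_{0}]\) could then be written as \([\mathbf{A}_{0}] = [\underline{A}]^{1/2}\,[\mathbf{G}_{0}]\,[\underline{A}]^{1/2}\).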

9.1.3 Properties of Random Matrix [A0]

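Any random matrix \([\mathbf{A}_{0}]\) in ensemble \(\mathrm{SE}_{0}^{+}\) is a second-order random variable,

\[
E\{\Vert\mathbf{A}_{0}\Vert^{2}\} \leq E\{\Vert\mathbf{A}_{0}\Vert_{F}^{2}\} < +\infty,
\]

and its inverse \([\mathbf{A}_{0}]^{-1}\) exists almost surely and is a second-order random variable,

\[
E\{\Vert[\mathbf{A}_{0}]^{-1}\Vert^{2}\} \leq E\{\Vert[\mathbf{A}_{0}]^{-1}\Vert_{F}^{2}\} < +\infty.
\]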

9.1.4 Covariance Tensor and Coefficient of Variation of Random Matrix [A0]

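The covariance \(C_{jk,j'k'} = E\{([\mathbf{A}_{0}]_{jk} - \underline{A}_{jk})([\mathbf{A}_{0}]_{j'k'} - \underline{A}_{j'k'})\}\) of random variables \([\mathbf{A}_{0}]_{jk}\) and \([\mathbf{A}_{0}]_{j'k'}\) is written as

\[
C_{jk,j'k'} = \frac{\delta^{2}}{n+1}\,\bigl\{\underline{A}_{j'k}\,\underline{A}_{jk'} + \underline{A}_{jj'}\,\underline{A}_{kk'}\bigr\},
\]

and the variance \(\sigma_{jk}^{2} = C_{jk,jk}\) of random variable \([\mathbf{A}_{0}]_{jk}\) is

\[
\sigma_{jk}^{2} = \frac{\delta^{2}}{n+1}\,\bigl\{\underline{A}_{jk}^{2} + \underline{A}_{jj}\,\underline{A}_{kk}\bigr\}.
\]

The coefficient of variation \(\delta _{A_{0}}\) of random matrix \([\mathbf{A}_{0}]\) is defined by

\[
\delta_{A_{0}} = \Bigl\{\frac{E\{\Vert\mathbf{A}_{0} - \underline{A}\Vert_{F}^{2}\}}{\Vert\underline{A}\Vert_{F}^{2}}\Bigr\}^{1/2}.
\]

Since \(E\{\Vert\mathbf{A}_{0} - \underline{A}\Vert_{F}^{2}\} = \sum_{j=1}^{n}\sum_{k=1}^{n}\sigma_{jk}^{2}\), we have

\[
\delta_{A_{0}} = \frac{\delta}{\sqrt{n+1}}\,\Bigl\{1 + \frac{(\mathrm{tr}[\underline{A}])^{2}}{\mathrm{tr}\{[\underline{A}]^{2}\}}\Bigr\}^{1/2}. \tag{8.49}
\]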
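The sum of variances mentioned above leads to a closed form for \(\delta_{A_{0}}\) in terms of δ and the mean matrix, namely \(\delta_{A_{0}} = \delta\,(n+1)^{-1/2}\{1 + (\mathrm{tr}[\underline{A}])^{2}/\mathrm{tr}\{[\underline{A}]^{2}\}\}^{1/2}\). This expression is obtained here by propagating the covariance of \([\mathbf{G}_{0}]\) through the Cholesky transformation, so it should be read as a derived result rather than a quotation. The sketch below evaluates it and cross-checks it against the direct sum of entrywise variances; the function names and the test matrix are hypothetical.

```python
import numpy as np

def delta_a0(A_mean, delta):
    """Closed-form coefficient of variation of [A_0] (hypothetical helper)."""
    n = A_mean.shape[0]
    ratio = np.trace(A_mean) ** 2 / np.trace(A_mean @ A_mean)
    return delta / np.sqrt(n + 1.0) * np.sqrt(1.0 + ratio)

def delta_a0_from_variances(A_mean, delta):
    """Same quantity from sigma_jk^2 = delta^2/(n+1) * (A_jk^2 + A_jj A_kk)."""
    n = A_mean.shape[0]
    d = np.diag(A_mean)
    var = delta**2 / (n + 1.0) * (A_mean**2 + np.outer(d, d))
    return np.sqrt(var.sum() / np.sum(A_mean**2))

A_mean = np.array([[4.0, 1.0, 0.0],
                   [1.0, 3.0, 0.5],
                   [0.0, 0.5, 2.0]])        # illustrative mean matrix
delta = 0.25
closed_form = delta_a0(A_mean, delta)
from_sum = delta_a0_from_variances(A_mean, delta)
at_identity = delta_a0(np.eye(3), delta)    # reduces to delta for the unit matrix
```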

9.2 Ensemble SE ɛ + of Positive-Definite Random Matrices with a Given Mean Value and an Arbitrary Positive-Definite Lower Bound

The ensemble \(\mathrm{SE}_{\varepsilon}^{+}\) is a set of positive-definite random matrices for which the mean value is a given positive-definite matrix and for which there is an arbitrary lower bound that is a positive-definite matrix controlled by an arbitrary positive number ɛ that can be chosen as small as is desired. In this ensemble, the lower bound does not correspond to a given matrix that results from a physical model. This ensemble is then constructed as a transformation of ensemble \(\mathrm{SG}_{\varepsilon}^{+}\) and has the same area of use as ensemble \(\mathrm{SE}_{0}^{+}\) for stochastic modeling in uncertainty quantification, with the difference that a lower bound is required in the stochastic modeling for mathematical reasons.

9.2.1 Definition of Ensemble SE ɛ +

For a fixed positive value of parameter ɛ (generally chosen very small, such as \(10^{-6}\)), any random matrix \([\mathbf{A}]\) in ensemble \(\mathrm{SE}_{\varepsilon}^{+}\) is defined on probability space \((\varTheta,\mathcal{T},\mathcal{P})\), is with values in \(\mathbb{M}_{n}^{+}(\mathbb{R}) \subset \mathbb{M}_{n}^{S}(\mathbb{R})\), and is such that

\[
[\mathbf{A}] = [L_{A}]^{T}\,[\mathbf{G}]\,[L_{A}],
\]

in which \([L_{A}]\) is the upper triangular matrix in \(\mathbb{M}_{n}(\mathbb{R})\) corresponding to the Cholesky factorization \([L_{A}]^{T}[L_{A}] = [\underline{A}]\) of the positive-definite mean matrix \([\underline{A}] = E\{[\mathbf{A}]\}\) of random matrix \([\mathbf{A}]\), and where the random matrix \([\mathbf{G}]\) belongs to ensemble \(\mathrm{SG}_{\varepsilon}^{+}\), with mean value \(E\{[\mathbf{G}]\} = [I_{n}]\) and for which the coefficient of variation \(\delta_{G}\) is defined by Eq. (8.24) as a function of the hyperparameter δ defined by Eq. (8.15), which allows the level of statistical fluctuations to be controlled. It should be noted that for ɛ = 0, \([\mathbf{G}] = [\mathbf{G}_{0}]\), which yields \([\mathbf{A}] = [\mathbf{A}_{0}]\), and consequently, the ensemble \(\mathrm{SE}_{\varepsilon}^{+}\) coincides with \(\mathrm{SE}_{0}^{+}\).

Generator of realizations. For all θ in Θ, the realization [G(θ)] of [G] is constructed as explained before. The realization [A(θ)] of random matrix [A] is calculated by [A(θ)] = [L A ]T[G(θ)] [L A ].

9.2.2 Properties of Random Matrix [A]

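Almost surely, we have

\[
[\mathbf{A}] - [A_{\ell}] = \frac{1}{1+\varepsilon}\,[\mathbf{A}_{0}] > 0,
\]

in which \([\mathbf{A}_{0}]\) is defined by Eq. (8.43) and where the lower bound is the positive-definite matrix \([A_{\ell}] = c_{\varepsilon}[\underline{A}]\) with \(c_{\varepsilon} = \varepsilon/(1+\varepsilon)\), and we have the following properties:

\[
E\{[\mathbf{A}]\} = [\underline{A}], \qquad E\{\log(\det([\mathbf{A}] - [A_{\ell}]))\} = \nu_{A},
\]

with \(\nu _{A} =\nu _{A_{0}} - n\log (1+\varepsilon )\). For all ɛ > 0, random matrix \([\mathbf{A}]\) in ensemble \(\mathrm{SE}_{\varepsilon}^{+}\) is a second-order random variable,

\[
E\{\Vert\mathbf{A}\Vert^{2}\} \leq E\{\Vert\mathbf{A}\Vert_{F}^{2}\} < +\infty,
\]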

and the bilinear form b A (X, Y) = (([A] X, Y)) on \(\mathcal{L}_{n}^{2} \times \mathcal{L}_{n}^{2}\) is such that

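Random matrix \([\mathbf{A}]\) is invertible almost surely and its inverse \([\mathbf{A}]^{-1}\) is a second-order random variable,

\[
E\{\Vert[\mathbf{A}]^{-1}\Vert^{2}\} \leq E\{\Vert[\mathbf{A}]^{-1}\Vert_{F}^{2}\} < +\infty.
\]

The coefficient of variation \(\delta_{A}\) of random matrix \([\mathbf{A}]\), defined by

\[
\delta_{A} = \Bigl\{\frac{E\{\Vert\mathbf{A} - \underline{A}\Vert_{F}^{2}\}}{\Vert\underline{A}\Vert_{F}^{2}}\Bigr\}^{1/2},
\]

is such that

\[
\delta_{A} = \frac{1}{1+\varepsilon}\,\delta_{A_{0}},
\]

in which \(\delta _{A_{0}}\) is defined by Eq. (8.49).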

9.3 Ensemble SE+0 of Semipositive-Definite Random Matrices with a Given Semipositive-Definite Mean Value

The ensemble SE+0 is similar to ensemble SG +0 but is constituted of semipositive-definite (m × m) real random matrices [A] for which the mean value is a given semipositive-definite matrix. This ensemble is constructed [110] as a transformation of positive-definite (n × n) real random matrices [G 0] belonging to ensemble SG +0 , with n < m.

9.3.1 Algebraic Structure of the Random Matrices in SE+0

The ensemble \(\mathrm{SE}^{+0}\) is constituted of random matrices \([\mathbf{A}]\) with values in the set \(\mathbb{M}_{m}^{+0}(\mathbb{R})\) such that the null space of \([\mathbf{A}]\), denoted as \(\mathrm{null}([\mathbf{A}])\), is deterministic and is a subspace of \(\mathbb{R}^{m}\) with a fixed dimension \(\mu_{\mathrm{null}} < m\). This deterministic null space is defined as the null space of the mean value \([\underline{A}] = E\{[\mathbf{A}]\}\) that is given in \(\mathbb{M}_{m}^{+0}(\mathbb{R})\). We then have

\[
\mathrm{null}([\mathbf{A}]) = \mathrm{null}([\underline{A}]).
\]

There is a rectangular matrix \([R_{A}]\) in \(\mathbb{M}_{n,m}(\mathbb{R})\), with \(n = m - \mu_{\mathrm{null}}\), such that

\[
[\underline{A}] = [R_{A}]^{T}\,[R_{A}].
\]

Such a factorization is performed using classical algorithms [47].

9.3.2 Definition and Construction of Ensemble SE+0

The ensemble \(\mathrm{SE}^{+0}\) is then defined as the subset of all the second-order random matrices \([\mathbf{A}]\), defined on probability space \((\varTheta,\mathcal{T},\mathcal{P})\), with values in the set \(\mathbb{M}_{m}^{+0}(\mathbb{R})\), which are written as

\[
[\mathbf{A}] = [R_{A}]^{T}\,[\mathbf{G}]\,[R_{A}],
\]

in which \([\mathbf{G}]\) is a positive-definite symmetric (n × n) real random matrix belonging to ensemble \(\mathrm{SG}_{\varepsilon}^{+}\), with mean value \(E\{[\mathbf{G}]\} = [I_{n}]\) and for which the coefficient of variation \(\delta_{G}\) is defined by Eq. (8.24) as a function of the hyperparameter δ defined by Eq. (8.15), which allows the level of statistical fluctuations to be controlled.

Generator of realizations. For all θ in Θ, the realization [G(θ)] of [G] is constructed as explained before. The realization [A(θ)] of random matrix [A] is calculated by [A(θ)] = [R A ]T[G(θ)] [R A ].
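The factorization of the semipositive-definite mean matrix and the resulting realization can be sketched as follows. The source refers to classical algorithms [47] for the factorization without naming one; the eigendecomposition used here is one possible choice and is an assumption of this example, as are the function names, the tolerance, and the test matrices (a free-free three-mass spring chain whose null space is spanned by the rigid body displacement).

```python
import numpy as np

def factor_semipositive(A_mean, tol=1e-10):
    """Return [R_A] (n x m) with A_mean = [R_A]^T [R_A] (hypothetical helper)."""
    w, Q = np.linalg.eigh(A_mean)            # eigenvalues in ascending order
    keep = w > tol * w.max()                 # discard the null space
    return (Q[:, keep] * np.sqrt(w[keep])).T

def a_realization(G, R_A):
    """[A] = [R_A]^T [G] [R_A] for a realization [G] of size n x n."""
    return R_A.T @ G @ R_A

# Mean matrix with a one-dimensional null space spanned by (1, 1, 1).
A_mean = np.array([[ 1.0, -1.0,  0.0],
                   [-1.0,  2.0, -1.0],
                   [ 0.0, -1.0,  1.0]])
R_A = factor_semipositive(A_mean)            # shape (2, 3): n = m - mu_null
G = np.array([[1.05, 0.02],
              [0.02, 0.97]])                 # stand-in for a realization of [G]
A = a_realization(G, R_A)
rigid_body = A @ np.ones(3)                  # zero vector: null space is preserved
```

The realization keeps the deterministic null space of the mean matrix because \([R_{A}]\) annihilates it regardless of the value of \([\mathbf{G}(\theta)]\).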

9.4 Ensemble SErect of Rectangular Random Matrices with a Given Mean Value

The ensemble SErect is an ensemble of rectangular random matrices for which the mean value is a given rectangular matrix and which is constructed with the MaxEnt. Such an ensemble depends on the available information and consequently, is not unique. We present hereinafter the construction proposed in [110], which is based on the use of a fundamental algebraic property for rectangular real matrices, which allows ensemble SE + ɛ to be used.

9.4.1 Decomposition of a Rectangular Matrix

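Let \([\underline{A}]\) be a rectangular real matrix in \(\mathbb{M}_{m,n}(\mathbb{R})\) for which the null space is reduced to {0} (\([\underline{A}]\,\mathbf{x} = \mathbf{0}\) yields \(\mathbf{x} = \mathbf{0}\)). Such a rectangular matrix \([\underline{A}]\) can be written as

\[
[\underline{A}] = [U]\,[\underline{T}], \tag{8.61}
\]

in which the square matrix \([\underline{T}]\) and the rectangular matrix \([U]\) are such that

\[
[\underline{T}] \in \mathbb{M}_{n}^{+}(\mathbb{R}), \qquad [U] \in \mathbb{M}_{m,n}(\mathbb{R}), \qquad [U]^{T}[U] = [I_{n}]. \tag{8.62}
\]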

The construction of the decomposition defined by Eq. (8.61) can be performed, for instance, by using the singular value decomposition of [A].
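As an illustration of the construction by singular value decomposition, the sketch below computes a factorization of a full-column-rank rectangular matrix into a matrix with orthonormal columns and a symmetric positive-definite square matrix (the polar decomposition). The function name `polar_decompose` and the random test matrix are assumptions for the example.

```python
import numpy as np

def polar_decompose(A):
    """Return (U, T) with A = U T, U^T U = I_n, T symmetric positive definite.

    Assumes A is m x n with m >= n and a null space reduced to {0}.
    """
    # Thin SVD: A = W diag(s) Vt, with W of size m x n and Vt of size n x n.
    W, s, Vt = np.linalg.svd(A, full_matrices=False)
    U = W @ Vt                        # m x n, orthonormal columns
    T = Vt.T @ (s[:, None] * Vt)      # n x n, equals V diag(s) V^T
    return U, T

rng = np.random.default_rng(3)
A = rng.standard_normal((5, 3))       # illustrative rectangular matrix
U, T = polar_decompose(A)
```

The positive-definite factor T is the part that is randomized in the ensemble defined next, while U is kept deterministic.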

9.4.2 Definition of Ensemble SErect

Let \([\underline{A}]\) be a given rectangular real matrix in \(\mathbb{M}_{m,n}(\mathbb{R})\) with a null space reduced to {0} and whose decomposition \([\underline{A}] = [\underline{U}]\,[\underline{T}]\) is given by Eqs. (8.61) and (8.62). Since the symmetric real matrix \([\underline{T}]\) is positive definite, there is an upper triangular matrix \([L_{T}]\) in \(\mathbb{M}_{n}(\mathbb{R})\) such that \([\underline{T}] = [L_{T}]^{T}\,[L_{T}]\), which corresponds to the Cholesky factorization of matrix \([\underline{T}]\).

A random rectangular matrix \([\mathbf{A}]\) belonging to ensemble SErect is a second-order random matrix defined on probability space \((\varTheta,\mathcal{T},\mathcal{P})\), with values in \(\mathbb{M}_{m,n}(\mathbb{R})\), whose mean value is the rectangular matrix \([\underline{A}] = E\{[\mathbf{A}]\}\), and which is written as

$$\displaystyle{ [\mathbf{A}] = [\underline{U}]\,[\mathbf{T}], }$$(8.63)

in which the random (n × n) matrix \([\mathbf{T}]\) belongs to ensemble \(\mathrm{SE}_{\varepsilon}^{+}\) and is then written as

$$\displaystyle{ [\mathbf{T}] = [L_{T}]^{T}\,[\mathbf{G}]\,[L_{T}]. }$$(8.64)

The random matrix \([\mathbf{G}]\) belongs to ensemble \(\mathrm{SG}_{\varepsilon}^{+}\): it is a positive-definite symmetric (n × n) real random matrix with mean value \(E\{[\mathbf{G}]\} = [I_{n}]\), for which the coefficient of variation \(\delta_{G}\) is defined by Eq. (8.24) as a function of hyperparameter δ defined by Eq. (8.15), which allows the level of statistical fluctuations to be controlled.

Generator of realizations. For all θ in Θ, the realization \([\mathbf{G}(\theta)]\) of \([\mathbf{G}]\) is constructed as explained before. The realization \([\mathbf{A}(\theta)]\) of random matrix \([\mathbf{A}]\) is calculated by \([\mathbf{A}(\theta)] = [\underline{U}]\,[L_{T}]^{T}\,[\mathbf{G}(\theta)]\,[L_{T}]\).
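A minimal numerical sketch of this generator is given below. It assumes the decomposition is computed from the thin singular value decomposition, as the text suggests. The mean matrix is a hypothetical 3 × 2 example, and `sample_identity_mean_spd` is again a placeholder returning a positive-definite sample with mean \([I_{n}]\), not the generator of \([\mathbf{G}]\) described earlier in the chapter.

```python
import numpy as np

def polar_factors(A):
    """Return (U, T) with A = U @ T, U.T @ U = I_n and T symmetric positive definite.
    Assumes A (m x n) has a null space reduced to {0}."""
    W, s, Vt = np.linalg.svd(A, full_matrices=False)   # A = W @ diag(s) @ Vt
    U = W @ Vt                                         # (m, n), orthonormal columns
    T = Vt.T @ (s[:, None] * Vt)                       # (n, n), equals Vt.T diag(s) Vt
    return U, T

def sample_identity_mean_spd(n, rng, dof=50):
    """Stand-in for a realization of [G]: normalized Wishart sample with mean I_n.
    Placeholder only, NOT the chapter's generator."""
    W = rng.standard_normal((dof, n))
    return W.T @ W / dof

rng = np.random.default_rng(1)

# Hypothetical mean matrix with m = 3, n = 2 and full column rank.
A_mean = np.array([[2.0, 0.0],
                   [1.0, 1.0],
                   [0.0, 3.0]])

U, T = polar_factors(A_mean)
L_T = np.linalg.cholesky(T).T        # upper triangular factor, T = L_T.T @ L_T
G = sample_identity_mean_spd(2, rng) # realization of [G]
A = U @ L_T.T @ G @ L_T              # realization of [A]
```

Replacing `G` by the identity matrix returns `A_mean`, which is consistent with the mean-value property of the construction when \(E\{[\mathbf{G}]\} = [I_{n}]\).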

9.5 Ensemble SEHT of a Pair of Positive-Definite Matrix-Valued Random Functions Related by a Hilbert Transform

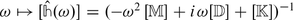

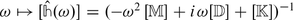

The ensemble SEHT is a set of random functions \(\omega \mapsto [\mathbf{Z}(\omega)] = [\mathbf{K}(\omega)] + i\omega\,[\mathbf{D}(\omega)]\) indexed by \(\mathbb{R}\) with values in a subset of all the (n × n) complex matrices such that \([\mathbf{K}(\omega)]\) and \([\mathbf{D}(\omega)]\) are positive-definite random matrices that are constrained by an underlying Hilbert transform induced by a causality property [115].

9.5.1 Defining the Deterministic Matrix Problem

We consider a family of complex (n × n) matrices [Z(ω)] depending on a parameter ω in \(\mathbb{R}\), such that [Z(ω)] = iω [D(ω)] + [K(ω)] where i is the pure imaginary complex number (\(i = \sqrt{-1}\)) and where, for all ω in \(\mathbb{R}\),

(i) [D(ω)] and [K(ω)] belong to \(\mathbb{M}_{n}^{+}(\mathbb{R})\).

(ii) [D(−ω)] = [D(ω)] and [K(−ω)] = [K(ω)].

(iii) Matrices [D(ω)] and [K(ω)] are such that

$$\displaystyle{ \omega \,[D(\omega )] = [\widehat{N}^{I}(\omega )],\quad [K(\omega )] = [K_{0}] + [\widehat{N}^{R}(\omega )]. }$$(8.65)

The real matrices \([\widehat{N}^{R}(\omega )]\) and \([\widehat{N}^{I}(\omega )]\) are the real part and the imaginary part of the (n × n) complex matrix \([\widehat{N}(\omega )] =\int _{\mathbb{R}}e^{-i\omega t}[N(t)]\,dt\) that is the Fourier transform of an integrable function t ↦ [N(t)] from \(\mathbb{R}\) into \(\mathbb{M}_{n}(\mathbb{R})\) such that [N(t)] = [0] for t < 0 (causal function). Consequently, \(\omega \mapsto [\widehat{N}^{R}(\omega )]\) and \(\omega \mapsto [\widehat{N}^{I}(\omega )]\) are continuous functions on \(\mathbb{R}\), which go to [0] as \(\vert \omega \vert \rightarrow +\infty\) and which are related by the Hilbert transform [90],

$$\displaystyle{ [\widehat{N}^{R}(\omega )] = \frac{1} {\pi } \mathrm{p.v.}\int _{-\infty }^{+\infty }\frac{1} {\omega -\omega '}\,[\widehat{N}^{I}(\omega ')]\,d\omega ', }$$(8.66)

in which p.v. denotes the Cauchy principal value. The real matrix \([K_{0}]\) belongs to \(\mathbb{M}_{n}^{+}(\mathbb{R})\) and can be written as

$$\displaystyle{ [K_{0}] = [K(0)] + \frac{2} {\pi } \int _{0}^{+\infty }[D(\omega )]\,d\omega =\lim _{\vert \omega \vert \rightarrow +\infty }[K(\omega )], }$$(8.67)

and consequently, we have the following equation:

$$\displaystyle{ [K(\omega )] = [K(0)] + \frac{\omega } {\pi }\,\mathrm{p.v.}\int _{-\infty }^{+\infty }\frac{1} {\omega -\omega '}\,[D(\omega ')]\,d\omega '. }$$(8.68)
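The step from Eqs. (8.65) to (8.67) to Eq. (8.68) can be sketched as follows. This is a short derivation that assumes ω ↦ [D(ω)] is integrable on \(\mathbb{R}\), as Eq. (8.67) implicitly requires. Substituting \([\widehat{N}^{I}(\omega ')] =\omega '\,[D(\omega ')]\) into Eq. (8.66) and using the identity \(\omega '/(\omega -\omega ') =\omega /(\omega -\omega ') - 1\) gives

$$\displaystyle\begin{array}{rcl} [K(\omega )]& =& [K_{0}] + \frac{1} {\pi }\,\mathrm{p.v.}\int _{-\infty }^{+\infty } \frac{\omega '} {\omega -\omega '}\,[D(\omega ')]\,d\omega ' \\ & =& [K_{0}] -\frac{1} {\pi }\int _{-\infty }^{+\infty }[D(\omega ')]\,d\omega ' + \frac{\omega } {\pi }\,\mathrm{p.v.}\int _{-\infty }^{+\infty }\frac{1} {\omega -\omega '}\,[D(\omega ')]\,d\omega '.\end{array}$$

Since [D(−ω)] = [D(ω)], the second term is equal to \(-\frac{2} {\pi } \int _{0}^{+\infty }[D(\omega ')]\,d\omega '\), and Eq. (8.67) shows that the first two terms sum to [K(0)], which yields Eq. (8.68).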

9.5.2 Construction of a Nonparametric Stochastic Model

The construction of a nonparametric stochastic model then consists in modeling, for all real ω, the positive-definite symmetric (n × n) real matrices [D(ω)] and [K(ω)] by random matrices \([\mathbf{D}(\omega)]\) and \([\mathbf{K}(\omega)]\) such that

For ω ≥ 0, the construction of the stochastic model of the family of random matrices [D(ω)] and [K(ω)] is carried out as follows:

(i) Constructing the family \([\mathbf{D}(\omega)]\) of random matrices such that, for fixed ω, \([\mathbf{D}(\omega)] = [L_{D}(\omega)]^{T}\,[\mathbf{G}_{D}]\,[L_{D}(\omega)]\), where \([L_{D}(\omega)]\) is the upper triangular real (n × n) matrix resulting from the Cholesky decomposition of the positive-definite symmetric real matrix \([D(\omega)] = [L_{D}(\omega)]^{T}\,[L_{D}(\omega)]\) and where \([\mathbf{G}_{D}]\) is an (n × n) random matrix that belongs to ensemble \(\mathrm{SG}_{\varepsilon}^{+}\), for which the hyperparameter δ is rewritten as \(\delta_{D}\). Hyperparameter \(\delta_{D}\) allows the level of uncertainties to be controlled for random matrix \([\mathbf{D}(\omega)]\).

(ii) Constructing the random matrix \([\mathbf{K}(0)] = [L_{K(0)}]^{T}\,[\mathbf{G}_{K(0)}]\,[L_{K(0)}]\) in which \([L_{K(0)}]\) is the upper triangular real (n × n) matrix resulting from the Cholesky decomposition of the positive-definite symmetric real matrix \([K(0)] = [L_{K(0)}]^{T}\,[L_{K(0)}]\) and where \([\mathbf{G}_{K(0)}]\) is an (n × n) random matrix that belongs to ensemble \(\mathrm{SG}_{\varepsilon}^{+}\), for which the hyperparameter δ is rewritten as \(\delta_{K}\). Hyperparameter \(\delta_{K}\) allows the level of uncertainties to be controlled for random matrix \([\mathbf{K}(0)]\).

(iii) For fixed ω ≥ 0, constructing the random matrix \([\mathbf{K}(\omega)]\) using the equation

$$\displaystyle{ [\mathbf{K}(\omega )] = [\mathbf{K}(0)] + \frac{\omega } {\pi }\,\mathrm{p.v.}\int _{-\infty }^{+\infty }\frac{1} {\omega -\omega '}\,[\mathbf{D}(\omega ')]\,d\omega ', }$$(8.71)

or equivalently,

$$\displaystyle{ [\mathbf{K}(\omega )] = [\mathbf{K}(0)] + \frac{2\,\omega ^{2}} {\pi } \,\mathrm{p.v.}\int _{0}^{+\infty } \frac{1} {\omega ^{2} -\omega '^{2}}\,[\mathbf{D}(\omega ')]\,d\omega '. }$$(8.72)

The last equation can also be rewritten, with the change of variable ω′ = ωu, as the following equation recommended for computation (because the singularity at u = 1 is independent of ω):

$$\displaystyle\begin{array}{rcl} [\mathbf{K}(\omega )]& =& [\mathbf{K}(0)] + \frac{2\,\omega } {\pi } \,\mathrm{p.v.}\int _{0}^{+\infty }\, \frac{1} {1 - u^{2}}\,[\mathbf{D}(\omega u)]\,du, \\ & =& [\mathbf{K}(0)] + \frac{2\,\omega } {\pi } \,\,\lim _{\eta \rightarrow 0}\left \{\int _{0}^{1-\eta } +\int _{ 1+\eta }^{+\infty }\right \} \frac{1} {1 - u^{2}}\,[\mathbf{D}(\omega u)]\,du.\end{array}$$(8.73)

(iv) For fixed ω < 0, \([\mathbf{K}(\omega)]\) is calculated using the even property, \([\mathbf{K}(\omega)] = [\mathbf{K}(-\omega)]\).

With such a construction, it can be verified that, for all ω ≥ 0, \([\mathbf{K}(\omega)]\) is a positive-definite random matrix. The following sufficient condition is proved in [115]. If, for every real vector \(\mathbf{y} = (y_{1},\ldots,y_{n})\), the random function \(\omega \mapsto \langle [\mathbf{D}(\omega)]\,\mathbf{y},\mathbf{y}\rangle\) is almost surely decreasing in ω for ω ≥ 0, then, for all ω ≥ 0, \([\mathbf{K}(\omega)]\) is a positive-definite random matrix.
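Step (iii) can be sketched numerically for one realization, using Eq. (8.73). The quadrature below is an assumption of this sketch and is not taken from the chapter. The principal value on (0, 2) is handled by pairing the points u = 1 − s and u = 1 + s, so that the singularity at u = 1 cancels. The tail (2, +∞) is mapped to (0, 1) by u = 2/t, and both integrals use a midpoint rule. The function name `k_from_d` and the test family D(ω) = C/(1 + ω²) are hypothetical. For this family, a direct calculation from Eq. (8.72) gives K(ω) = K(0) + C ω²/(1 + ω²), which is used as a check.

```python
import numpy as np

def k_from_d(omega, K_zero, D, n_quad=2000):
    """Evaluate K(omega) = K(0) + (2 omega / pi) p.v. int_0^inf D(omega u) / (1 - u^2) du
    for one realization, where D is a callable returning an (n, n) array."""
    omega = abs(omega)                         # even property, step (iv)
    h = 1.0 / n_quad
    mid = (np.arange(n_quad) + 0.5) * h        # midpoints of (0, 1)
    total = np.zeros_like(K_zero, dtype=float)
    for s in mid:
        # u in (0, 2): pair u = 1 - s with u = 1 + s; the singular parts cancel
        total += h * (D(omega * (1.0 - s)) / (s * (2.0 - s))
                      - D(omega * (1.0 + s)) / (s * (2.0 + s)))
    for t in mid:
        # u in (2, inf): change of variable u = 2 / t, du = -2 / t^2 dt
        total += h * 2.0 * D(omega * 2.0 / t) / (t * t - 4.0)
    return K_zero + (2.0 * omega / np.pi) * total

# Hypothetical realization: D(omega) = C / (1 + omega^2), decreasing for omega >= 0.
C = np.array([[2.0, 0.5],
              [0.5, 1.0]])
K_zero = np.array([[4.0, 1.0],
                   [1.0, 3.0]])

def D(w):
    return C / (1.0 + w * w)

K_at_3 = k_from_d(3.0, K_zero, D)
```

In this example ω ↦ ⟨D(ω) y, y⟩ is decreasing for every y, so the sufficient condition quoted above applies and the computed K(ω) is expected to be positive definite.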

10 MaxEnt as a Numerical Tool for Constructing Ensembles of Random Matrices

In the previous sections, we have presented fundamental ensembles of random matrices constructed with the MaxEnt principle. For these fundamental ensembles, the optimization problem defined by Eq. (8.8) has been solved exactly, which has allowed us to construct the ensembles explicitly and to describe the generators of realizations explicitly. This was possible thanks to the type of available information used to define the admissible set (see Eq. (8.7)). In many cases, the available information does not allow the Lagrange multipliers to be calculated explicitly and, thus, does not allow the optimization problem defined by Eq. (8.8) to be solved explicitly.

When no explicit solution exists for constructing the pdf of random matrices using the MaxEnt principle under the constraints defined by the available information, the first difficulty is the computation of the Lagrange multipliers with an adapted algorithm that must be robust in high dimension. In addition, this computation requires the calculation of integrals in high dimension, which can be estimated only by the Monte Carlo method. Therefore, a generator of realizations of the pdf, which is parameterized by the unknown Lagrange multipliers that are currently being calculated, must be constructed. This problem is particularly difficult in high dimension. An advanced and efficient methodology is presented hereinafter for the case of high dimension [112]; it therefore also covers the case of small dimension and, hence, any dimension.

10.1 Available Information and Parameterization

Let \([\mathbf{A}]\) be a random matrix defined on the probability space \((\varTheta,\mathcal{T},\mathcal{P})\), with values in any subset \(\mathbb{S}_{n}\) of \(\mathbb{M}_{n}^{S}(\mathbb{R})\), possibly with \(\mathbb{S}_{n} = \mathbb{M}_{n}^{S}(\mathbb{R})\). For instance, \(\mathbb{S}_{n}\) can be \(\mathbb{M}_{n}^{+}(\mathbb{R})\). Let \(p_{[\mathbf{A}]}\) be the pdf of \([\mathbf{A}]\) with respect to the volume element \(d^{S}A\) on \(\mathbb{M}_{n}^{S}(\mathbb{R})\) (see Eq. (8.1)). The support of pdf \(p_{[\mathbf{A}]}\), denoted as supp \(p_{[\mathbf{A}]}\), is \(\mathbb{S}_{n}\). Thus, \(p_{[\mathbf{A}]}([A]) = 0\) for [A] not in \(\mathbb{S}_{n}\), and the normalization condition is written as

$$\displaystyle{ \int _{\mathbb{S}_{n}}p_{[\mathbf{A}]}([A])\,d^{S}A = 1. }$$(8.74)

The available information is defined by the following equation on \(\mathbb{R}^{\mu }\):

$$\displaystyle{ E\{\boldsymbol{\mathcal{G}}([\mathbf{A}])\} = \mathbf{f}, }$$(8.75)

in which \(\mathbf{f} = (f_{1},\ldots,f_{\mu })\) is a given vector in \(\mathbb{R}^{\mu }\) with μ ≥ 1, where \([A]\mapsto \boldsymbol{\mathcal{G}}([A]) = (\mathcal{G}_{1}([A]),\ldots,\mathcal{G}_{\mu }([A]))\) is a given mapping from \(\mathbb{S}_{n}\) into \(\mathbb{R}^{\mu }\), and where E is the mathematical expectation. For instance, mapping \(\boldsymbol{\mathcal{G}}\) can be defined by the mean value \(E\{[\mathbf{A}]\} = [\underline{A}]\), in which \([\underline{A}]\) is a given matrix in \(\mathbb{S}_{n}\), and by the condition \(E\{\log (\det [\mathbf{A}])\} = c_{A}\) in which \(\vert c_{A}\vert < +\infty\). A parameterization of set \(\mathbb{S}_{n}\) is introduced such that any matrix [A] in \(\mathbb{S}_{n}\) is written as

$$\displaystyle{ [A] = [\mathcal{A}(\mathbf{y})], }$$(8.76)

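in which \(\mathbf{y} = (y_{1},\ldots,y_{N})\) is a vector in \(\mathbb{R}^{N}\) and where \(\mathbf{y}\mapsto [\mathcal{A}(\mathbf{y})]\) is a given mapping from \(\mathbb{R}^{N}\) into \(\mathbb{S}_{n}\). Let \(\mathbf{y}\mapsto \mathbf{g}(\mathbf{y}) = (g_{1}(\mathbf{y}),\ldots,g_{\mu }(\mathbf{y}))\) be the mapping from \(\mathbb{R}^{N}\) into \(\mathbb{R}^{\mu }\) such that

$$\displaystyle{ \mathbf{g}(\mathbf{y}) =\boldsymbol{ \mathcal{G}}([\mathcal{A}(\mathbf{y})]). }$$(8.77)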

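Let \(\mathbf{Y} = (Y_{1},\ldots,Y_{N})\) be an \(\mathbb{R}^{N}\)-valued second-order random variable for which the probability distribution on \(\mathbb{R}^{N}\) is represented by the pdf \(\mathbf{y}\mapsto p_{\mathbf{Y}}(\mathbf{y})\) from \(\mathbb{R}^{N}\) into \(\mathbb{R}^{+} = [0,+\infty [\) with respect to \(d\mathbf{y} = dy_{1}\ldots dy_{N}\). The support of function \(p_{\mathbf{Y}}\) is \(\mathbb{R}^{N}\). Function \(p_{\mathbf{Y}}\) satisfies the normalization condition:

$$\displaystyle{ \int _{\mathbb{R}^{N}}p_{\mathbf{Y}}(\mathbf{y})\,d\mathbf{y} = 1. }$$(8.78)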

For random vector \(\mathbf{Y}\), the available information is deduced from Eqs. (8.75) to (8.77) and is written as

$$\displaystyle{ E\{\mathbf{g}(\mathbf{Y})\} = \mathbf{f}. }$$(8.79)

10.1.1 Example of Parameterization

If \(\,\mathbb{S}_{n} = \mathbb{M}_{n}^{+}(\mathbb{R})\), then the parameterization, \([A] = [\mathcal{A}(\mathbf{y})]\), of [A] can be constructed in several ways. In order to obtain good properties for the random matrix \([\mathbf{A}] = [\mathcal{A}(\mathbf{Y})]\) in which \(\mathbf{Y}\) is an \(\mathbb{R}^{N}\)-valued second-order random variable, deterministic matrix [A] is written as

with ɛ > 0, where \([A_{0}]\) belongs to \(\mathbb{M}_{n}^{+}(\mathbb{R})\) and where \([L_{A}]\) is the upper triangular (n × n) real matrix corresponding to the Cholesky factorization \([L_{A}]^{T}\,[L_{A}] = [\underline{A}]\) of the mean matrix \([\underline{A}] = E\{[\mathbf{A}]\}\) that is given in \(\mathbb{M}_{n}^{+}(\mathbb{R})\). Positive-definite matrix \([A_{0}]\) can be written in two different forms (inducing different properties for random matrix \([\mathbf{A}]\)):

(i) Exponential-type representation [54, 86]. Matrix \([A_{0}]\) is written as \([A_{0}] =\exp\nolimits_{\mathbb{M}}([G])\) in which the matrix [G] belongs to \(\mathbb{M}_{n}^{S}(\mathbb{R})\) and where \(\exp\nolimits_{\mathbb{M}}\) denotes the exponential of the symmetric real matrices.

(ii) Square-type representation [86, 111]. Matrix \([A_{0}]\) is written as \([A_{0}] = [L]^{T}\,[L]\) in which [L] belongs to the set \(\mathcal{U}_{L}\) of all the upper triangular (n × n) real matrices with positive diagonal entries and where \([L] = \mathcal{L}([G])\) in which \(\mathcal{L}\) is a given mapping from \(\mathbb{M}_{n}^{S}(\mathbb{R})\) into \(\mathcal{U}_{L}\).

For these two representations, the parameterization is constructed by taking for \(\mathbf{y}\) the N = n(n + 1)∕2 independent entries \(\{[G]_{jk},\,1 \leq j \leq k \leq n\}\) of symmetric real matrix [G]. Then for all \(\mathbf{y}\) in \(\mathbb{R}^{N}\), \([A] = [\mathcal{A}(\mathbf{y})]\) is in \(\mathbb{S}_{n}\), that is to say, is a positive-definite matrix.
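The two representations of \([A_{0}]\) can be illustrated with a short sketch that maps any vector y in \(\mathbb{R}^{N}\) to a positive-definite matrix. Only \([A_{0}]\) is built here; the final assembly of [A] with \([L_{A}]\) and ɛ is not shown. The function names are hypothetical, the ordering of the entries of y (row by row over the upper triangle) is an arbitrary choice, and the particular mapping used for the square-type case (exponential of the diagonal entries) is an assumption of this sketch, since the text only requires a given mapping into upper triangular matrices with positive diagonal entries.

```python
import numpy as np

def sym_from_vector(y, n):
    """Build the symmetric matrix [G] from its N = n(n+1)/2 entries G_jk, j <= k."""
    G = np.zeros((n, n))
    G[np.triu_indices(n)] = y            # fill the upper triangle row by row
    return G + np.triu(G, 1).T           # mirror the strictly upper part

def expm_sym(G):
    """Exponential of a symmetric real matrix via its eigen-decomposition."""
    lam, Q = np.linalg.eigh(G)
    return (Q * np.exp(lam)) @ Q.T       # Q diag(exp(lam)) Q^T

def square_type(G):
    """[A0] = L^T L with L upper triangular and positive diagonal.
    Hypothetical mapping: off-diagonal entries copied, diagonal exponentiated."""
    L = np.triu(G, 1) + np.diag(np.exp(np.diag(G)))
    return L.T @ L

n = 3
N = n * (n + 1) // 2
rng = np.random.default_rng(2)
y = rng.standard_normal(N)               # any y in R^N is admissible

G = sym_from_vector(y, n)
A0_exp = expm_sym(G)                     # exponential-type representation
A0_sq = square_type(G)                   # square-type representation
```

Both constructions return a positive-definite matrix for every y, which is what allows the support of the pdf of Y to be the whole space \(\mathbb{R}^{N}\).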

10.2 Construction of the pdf of Random Vector Y Using the MaxEnt

The unknown pdf \(p_{\mathbf{Y}}\) with support \(\mathbb{R}^{N}\), whose normalization condition is given by Eq. (8.78), is constructed using the MaxEnt principle for which the available information is defined by Eq. (8.79). This construction is detailed in Sect. 11.

11 MaxEnt for Constructing the pdf of a Random Vector

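Let \(\mathbf{Y} = (Y_{1},\ldots,Y_{N})\) be an \(\mathbb{R}^{N}\)-valued second-order random variable for which the probability distribution \(P_{\mathbf{Y}}(d\mathbf{y})\) on \(\mathbb{R}^{N}\) is represented by the pdf \(\mathbf{y}\mapsto p_{\mathbf{Y}}(\mathbf{y})\) from \(\mathbb{R}^{N}\) into \(\mathbb{R}^{+} = [0,+\infty [\) with respect to \(d\mathbf{y} = dy_{1}\ldots dy_{N}\). The support of function \(p_{\mathbf{Y}}\) is \(\mathbb{R}^{N}\). Function \(p_{\mathbf{Y}}\) satisfies the normalization condition

$$\displaystyle{ \int _{\mathbb{R}^{N}}p_{\mathbf{Y}}(\mathbf{y})\,d\mathbf{y} = 1. }$$(8.80)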

The unknown pdf \(p_{\mathbf{Y}}\) is constructed using the MaxEnt principle for which the available information is

$$\displaystyle{ E\{\mathbf{g}(\mathbf{Y})\} = \mathbf{f}, }$$(8.81)

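in which \(\mathbf{y}\mapsto \mathbf{g}(\mathbf{y}) = (g_{1}(\mathbf{y}),\ldots,g_{\mu }(\mathbf{y}))\) is a given mapping from \(\mathbb{R}^{N}\) into \(\mathbb{R}^{\mu }\). Equation (8.81) is rewritten as

$$\displaystyle{ \int _{\mathbb{R}^{N}}\mathbf{g}(\mathbf{y})\,p_{\mathbf{Y}}(\mathbf{y})\,d\mathbf{y} = \mathbf{f}. }$$(8.82)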

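Let \(\mathcal{C}_{p}\) be the set of all the integrable positive-valued functions \(\mathbf{y}\mapsto p(\mathbf{y})\) on \(\mathbb{R}^{N}\), whose support is \(\mathbb{R}^{N}\). Let \(\mathcal{C}\) be the set of all the functions p belonging to \(\mathcal{C}_{p}\) and satisfying the constraints defined by Eqs. (8.80) and (8.82),

$$\displaystyle{ \mathcal{C} = \left \{p \in \mathcal{C}_{p}\,,\ \int _{\mathbb{R}^{N}}p(\mathbf{y})\,d\mathbf{y} = 1\,,\ \int _{\mathbb{R}^{N}}\mathbf{g}(\mathbf{y})\,p(\mathbf{y})\,d\mathbf{y} = \mathbf{f}\right \}. }$$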

The maximum entropy principle [58] consists in constructing \(p_{\mathbf{Y}}\) in \(\mathcal{C}\) such that

$$\displaystyle{ p_{\mathbf{Y}} =\arg \max _{p\in \mathcal{C}}\,\mathcal{E}(p), }$$

in which the Shannon entropy \(\mathcal{E}(p)\) of p is defined [103] by