Abstract

We consider perturbed Hammerstein integral equations of the form

$$\begin{aligned} y(t)=\gamma _1(t)F_1\big (\varphi _1(y),\dots ,\varphi _{n_1}(y)\big )+\gamma _2(t)F_2\big (\psi _1(y),\dots ,\psi _{n_2}(y)\big )+\lambda \int _0^1G(t,s)f(s,y(s))\ ds, \end{aligned}$$

where the \(\varphi _i\)’s and \(\psi _i\)’s are linear functionals realized as Stieltjes integrals with associated signed Stieltjes measures. Positive solutions of the integral equation can be related to positive solutions of boundary value problems, and we demonstrate that such problems have solutions under relatively mild hypotheses on the functions \(F_1\), \(F_2\), and f. We provide examples to illustrate the applicability of the results and their relationship to existing results in the literature, and we mention applications to radially symmetric solutions of PDEs as well as beam deflection modeling. Our results are achieved by using a nonstandard order cone together with an associated nonstandard open set.


1 Introduction

In this paper we consider perturbed Hammerstein integral equations of the form

$$\begin{aligned} y(t)=\gamma _1(t)F_1\big (\varphi _1(y),\dots ,\varphi _{n_1}(y)\big )+\gamma _2(t)F_2\big (\psi _1(y),\dots ,\psi _{n_2}(y)\big )+\lambda \int _0^1G(t,s)f(s,y(s))\ ds, \end{aligned}$$ (1.1)

where \(0\le t\le 1\). In (1.1) the functions \(\varvec{\xi }\mapsto F_i(\varvec{\xi })\), for \(i=1,2\), are assumed to be continuous, nondecreasing scalar-valued maps on \({\mathbb {R}}^{n_i}\), and the collection of functionals \(\{\varphi _i\}_{i=1}^{n_1}\) and \(\{\psi _j\}_{j=1}^{n_2}\), where \(n_1\), \(n_2\in {\mathbb {N}}\), are assumed to be linear functionals realized as

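$$\begin{aligned} \varphi _i(y):=\int _{[0,1]}y(t)\ d\alpha _i(t) \end{aligned}$$

and

$$\begin{aligned} \psi _j(y):=\int _{[0,1]}y(t)\ d\beta _j(t), \end{aligned}$$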

where the measures associated to these Stieltjes integrals are allowed to be signed. In addition, the maps \(t\mapsto \gamma _i(t)\), \((t,s)\mapsto G(t,s)\), and \((t,y)\mapsto f(t,y)\) are continuous on [0, 1], \([0,1]\times [0,1]\), and \([0,1]\times [0,+\infty )\), respectively; the precise hypotheses utilized are given in Sect. 2. Notice that solutions of integral equation (1.1) can be associated to solutions of various nonlocal boundary value problems (BVPs) with multiple nonlocal elements. BVPs with one or more nonlocal elements can be used in modeling temperature regulation, beam deformation and displacement, and chemical reactor theory among other applications—see Infante and Pietramala [16, 18] and Cabada, Infante, and Tojo [4] for additional details.

We would like to mention a few immediate applications of our results. Since we study the very general integral equation (1.1), two specific applications are as follows:

radially symmetric solutions of the elliptic PDE

$$\begin{aligned} -(\Delta u)(\varvec{x})=a(t)g(|u(\varvec{x})|)\text {, }0<R_1\le |\varvec{x}|\le R_2<+\infty \text {, }\varvec{x}\in {\mathbb {R}}^{n} \end{aligned}$$ (1.2)

equipped with (radially symmetric) nonlocal boundary conditions.

solutions to ODEs of the form

$$\begin{aligned} u^{(4)}(t)=f(t,u(t))\text {, }t\in (0,1) \end{aligned}$$ (1.3)

subject to nonlocal boundary conditions.

In the case of problem (1.2) it is known (see, for example, Goodrich [13], Infante and Pietramala [19], Lan [25], and Lan and Webb [26]) that one can transform PDE (1.2) into an ODE. Then, in the case of nonlocal boundary conditions, we can study the existence of solutions of (1.2) by studying the corresponding problem for (1.1).

In the case of problem (1.3) this type of ODE can model the deflections of an elastic beam—see, for example, either Anderson and Hoffacker [1] or Infante and Pietramala [17]. In this case, if we equip (1.3) with nonlocal boundary conditions, then this can represent controllers on the beam that affect, for example, the displacement of the beam or the shearing force at a point. So, if (1.3) is equipped with the nonlocal boundary condition

where \(\varphi (u)\) is a nonlocal element, then this could model a controller located along the bar that affects the bending moment at the right endpoint (\(t=1\)) of the bar.

Our primary contribution is to show that we can obtain the existence of a positive solution to problem (1.1), for example, by assuming relatively mild hypotheses on the functions and functionals appearing in the perturbed Hammerstein equation. In particular, in past works on problems involving nonlinear, nonlocal elements it has been common to assume that the functions \(F_1\) and \(F_2\) satisfy either asymptotic or uniform growth conditions. Here, by contrast, in some cases the only condition we need impose on either \(F_1\) or \(F_2\) is a pointwise condition—see Theorem 2.5. In addition, we demonstrate in Remark 2.10 that in some cases our methodology is more broadly and easily applicable than competing methodologies.

More specifically, in previous papers by the author [9, 10] relatively general nonlocal conditions were considered. However, in those works it was required that such nonlocal elements be “asymptotically related” to a linear nonlocal element, an idea borrowed, essentially, from regularity theory (cf. Chipot and Evans [6] or Foss and Goodrich [8], for example). This resulted in somewhat technical conditions that had to be checked in order to apply the results; see, for example, [9, Conditions (H1)–(H7), (H1b), (H2b), (H4b)] and [10, (H1)–(H6)].

In addition, recent papers by the author, namely [11,12,13,14], have considered rather general nonlocal, nonlinear elements. However, the methods utilized in [11,12,13,14] do not permit multiple nonlocal elements as in (1.1). By contrast, they only permit a single nonlocal element. The admission of two or more nonlocal elements requires a slightly different approach. The reason for this is that, as explained later in this section, our methods rely on controlling the size of the nonlocal elements—i.e., we want to have as precise information as possible regarding the value of \(\varphi _i(y)\) and \(\psi _j(y)\) for each i and j. This, however, turns out to be a somewhat delicate problem. Essentially, this is a topological problem since for sets \(\{E_j\}_{j=1}^{n}\) it is generally not the case that \(\partial \left( \bigcap _{j=1}^{n}E_j\right) =\bigcap _{j=1}^{n}\partial E_j\). As a consequence of this topological fact, while we have good control over the size of any one nonlocal element, it turns out to be difficult to control simultaneously the size of all \(n_1+n_2\) elements appearing in (1.1)—this is due to the construction of the sets \({\widehat{V}}_{\rho ,\varphi _i}\) and \({\widehat{V}}_{\rho ,\psi _i}\) in (1.5). Therefore, for these reasons we must proceed somewhat more cautiously when dealing now with multiple nonlocal elements as opposed to a single element, and this is reflected in the fact that we consider here only the case in which the functions \(F_1\) and \(F_2\) are nondecreasing.
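The topological fact invoked above can already be seen for open intervals of the real line. The following is a minimal Python sketch; the helper names `intersect` and `boundary` and the intervals `E1`, `E2` are hypothetical choices made only for illustration.

```python
from typing import Optional, Set, Tuple

Interval = Tuple[float, float]  # open interval (lo, hi) with lo < hi


def intersect(e1: Interval, e2: Interval) -> Optional[Interval]:
    """Intersection of two open intervals, or None if it is empty."""
    lo, hi = max(e1[0], e2[0]), min(e1[1], e2[1])
    return (lo, hi) if lo < hi else None


def boundary(e: Optional[Interval]) -> Set[float]:
    """Topological boundary in R of an open interval (empty set for None)."""
    return set() if e is None else {e[0], e[1]}


# Illustrative sets: E1 = (0, 2) and E2 = (1, 3), so E1 ∩ E2 = (1, 2).
E1, E2 = (0.0, 2.0), (1.0, 3.0)
boundary_of_intersection = boundary(intersect(E1, E2))
intersection_of_boundaries = boundary(E1) & boundary(E2)
print(boundary_of_intersection, intersection_of_boundaries)
```

Here a boundary point of the intersection lies on the boundary of only one of the two sets and in the interior of the other. This mirrors the situation in the proof of Theorem 2.5, where a function on the boundary of the intersection satisfies \(\varphi _j(y)\le \rho \) for every j but equality is only guaranteed for some index \(j_0\).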

Furthermore, we would like to mention that in a recent paper of Cianciaruso, Infante, and Pietramala [7] the authors treat very general nonlocal elements, somewhat akin to the formulation in (1.1). However, the authors’ methodology requires one to find linear functionals that act as upper and lower bounds on the nonlocal element. Moreover, their results apply to nonlocal elements with positive Stieltjes measures only. By contrast our methodology applies to signed measures and, in addition, our conditions on \(F_1\) and \(F_2\) can be pointwise in character, which, in particular, means that we do not have to search for linear functionals to act as appropriate upper and lower bounds on our nonlocal elements. In fact, we show by means of Example 2.9 and Remark 2.10 that in some cases our methodology is more straightforward, easier to apply, and more widely applicable than other methodologies, including the one introduced in [7]. In addition, since the recent paper by Cabada, Infante, and Tojo [5] makes use of the same basic hypotheses on the nonlocal elements as in [7], albeit in a more general overall problem, the same comments apply to the methodology utilized in [5].

In order to deduce our main results we utilize the nonstandard cone \({\mathcal {K}}\) defined by

$$\begin{aligned} {\mathcal {K}}:=\Big \{y\in {\mathscr {C}}([0,1])\ : \ y\ge 0\text {, }\min _{t\in [a,b]}y(t)\ge \eta _0\Vert y\Vert \text {, }\varphi _i(y)\ge C_0^i\Vert y\Vert \text { for each }i\in {\mathbb {N}}_1^{n_1}\text {, }\psi _j(y)\ge D_0^j\Vert y\Vert \text { for each }j\in {\mathbb {N}}_1^{n_2}\Big \}, \end{aligned}$$ (1.4)

where \(\eta _0\in (0,1]\), \((a,b)\Subset (0,1)\), and \(S_0\subseteq [0,1]\) is some set of full measure on which, for each \(i\in {\mathbb {N}}_1^{n_1}:=\{1,2,\dots ,n_1\}\) and \(j\in {\mathbb {N}}_1^{n_2}:=\{1,2,\dots ,n_2\}\), the quantities

$$\begin{aligned} C_0^i:=\inf _{s\in S_0}\frac{1}{{\mathscr {G}}(s)}\int _0^1G(t,s)\ d\alpha _i(t)\quad \text {and}\quad D_0^j:=\inf _{s\in S_0}\frac{1}{{\mathscr {G}}(s)}\int _0^1G(t,s)\ d\beta _j(t) \end{aligned}$$

in (1.4) are positive real numbers; here and throughout we define the map \(s\mapsto {\mathscr {G}}(s)\) by \({\mathscr {G}}(s):=\sup _{t\in [0,1]}G(t,s)\). Hence, in our analysis of problem (1.1) we reduce the collection of possible solution functions to those that result in the coercivity of the functionals \(\varphi _i\) and \(\psi _j\). The cone \({\mathcal {K}}\) was first introduced by the author in some recent papers [11,12,13,14], none of which, as already mentioned, addressed the general formulation suggested by (1.1). Strictly speaking, our methodology does not require the Harnack-like inequality \(\min _{t\in [a,b]}y(t)\ge \eta _0\Vert y\Vert \) in \({\mathcal {K}}\), but its inclusion can improve the existence results for problem (1.1).

As a consequence of our focus on the coercivity of the functionals, we also utilize a nonstandard open set that proves useful in improving the existence theorems one can provide in special cases. In particular, here we make use of the sets, which are relatively open in \({\mathcal {K}}\),

$$\begin{aligned} {\widehat{V}}_{\rho ,\varphi _i}:=\left\{ y\in {\mathcal {K}}\ : \ \varphi _i(y)<\rho \right\} \quad \text {and}\quad {\widehat{V}}_{\rho ,\psi _j}:=\left\{ y\in {\mathcal {K}}\ : \ \psi _j(y)<\rho \right\} . \end{aligned}$$ (1.5)

These types of sets were used by the author in [11] in the context of fractional-order differential equations. By using these sets together with the classical Leray–Schauder index, we demonstrate that we can improve the existence results that can be achieved. The use of (1.5) is only feasible since we have in hand the coercivity conditions in \({\mathcal {K}}\). Thus, this is an upshot of restricting the elements of \({\mathcal {K}}\) to those that cause the linear functionals to be coercive. In any case, we describe \({\widehat{V}}_{\rho ,\varphi _i}\) and \({\widehat{V}}_{\rho ,\psi _i}\) in greater detail in Sect. 2.

To conclude this section we briefly mention some of the literature related to our study of problem (1.1) that has not already been mentioned. The papers by Picone [27] and Whyburn [30], while classical, are of historical value for those interested in the origins of boundary value problems with nonlocal-type boundary conditions. More recently, numerous papers have appeared in the last several years on various problems (e.g., perturbed Hammerstein integral equations, ordinary differential equations, elliptic partial differential equations) with nonlocal elements. For example, Webb and Infante [28, 29] provided an elegant and general framework for addressing nonlocal boundary value problems. Cabada, Cid, and Infante [3], Infante [16], Infante, Minhós, and Pietramala [20], Jankowski [21], Karakostas and Tsamatos [22, 23], Karakostas [24], and Yang [31, 32] similarly considered boundary value problems with nonlocal boundary conditions. In addition, Anderson [2] specifically considered nonlinear boundary conditions in a relatively general context. Finally, the monographs by Guo and Lakshmikantham [15] and by Zeidler [33] are good references for the topological fixed point theory used in our proofs in Sect. 2.

But as alluded to earlier in this section, among the preceding papers that treat nonlinear, nonlocal boundary conditions, none of them utilizes pointwise-type conditions in the context of multiple nonlocal elements, owing to the fact that each of these works uses more classical cones and associated open sets. Here, by contrast, we demonstrate explicitly that the use of the \({\widehat{V}}\)-type sets in certain circumstances can yield some advantages over more traditional approaches even when arbitrarily many nonlocal elements are treated simultaneously.

2 Preliminaries, main result, and discussion

We begin by stating the various conditions that we impose on integral equation (1.1). These are listed as (H1)–(H5) below. Note that conditions (H1), (H3), and (H4) impose various regularity and structural conditions on the functionals \(\varphi _i\) and \(\psi _j\) as well as the kernel G. Condition (H2) imposes regularity and growth conditions on the functions \(\gamma _i\) and \(F_i\). Finally, condition (H5) ensures that \(\gamma _1\), \(\gamma _2\in {\mathcal {K}}\). Note that throughout this paper we denote by \(\Vert \cdot \Vert \) the usual maximum norm on the space \({\mathscr {C}}([0,1])\).

- H1::

For each \(i\in {\mathbb {N}}_1^{n_1}\) and \(j\in {\mathbb {N}}_{1}^{n_2}\) the functionals \(\varphi _i(y)\) and \(\psi _j(y)\) have the form

$$\begin{aligned} \varphi _i(y):=\int _{[0,1]}y(t)\ d\alpha _i(t)\text {, }\psi _j(y):=\int _{[0,1]}y(t)\ d\beta _j(t), \end{aligned}$$

where \(\alpha _i\), \(\beta _j\ : \ [0,1]\rightarrow {\mathbb {R}}\) are of bounded variation on [0, 1]. Moreover, we denote by \(C_1^i\), \(D_1^j>0\) finite constants such that

$$\begin{aligned} |\varphi _i(y)|\le C_1^i\Vert y\Vert \quad \text {and}\quad |\psi _j(y)|\le D_1^j\Vert y\Vert , \end{aligned}$$

for each \(y\in {\mathscr {C}}([0,1])\).

- H2::

- (1):

The functions \(\gamma _1\), \(\gamma _2\ : \ [0,1]\rightarrow [0,+\infty )\) and \(f\ : \ [0,1]\times [0,+\infty )\rightarrow [0,+\infty )\) are continuous.

- (2):

There exists at least one index \(i_0\in \{1,2\}\) such that \(\Vert \gamma _{i_0}\Vert >0\).

- (3):

For each \(i=1,2\) the function \(F_i\ : \ {\mathbb {R}}^{n_i}\rightarrow {\mathbb {R}}\) is monotone increasing in the sense that if \(\varvec{x}\le \varvec{y}\), then \(F_i(\varvec{x})\le F_i(\varvec{y})\); note that we follow the convention that given \(\varvec{x}:=(x_1,x_2,\dots ,x_n)\), \(\varvec{y}:=(y_1,y_2,\dots ,y_n)\in {\mathbb {R}}^{n}\), then \(\varvec{x}\le \varvec{y}\) if and only if \(x_i\le y_i\) for each \(i\in {\mathbb {N}}_1^{n}\).

- H3::

The map \(G\ : \ [0,1]\times [0,1]\rightarrow [0,+\infty )\) satisfies:

- (1):

\(G\in L^1([0,1]\times [0,1])\);

- (2):

for a.e. \(s\in [0,1]\) it follows that

$$\begin{aligned} \lim _{t\rightarrow \tau }|G(t,s)-G(\tau ,s)|=0 \end{aligned}$$

for each \(\tau \in [0,1]\);

- (3):

\({\mathscr {G}}(s)=\sup _{t\in [0,1]}G(t,s)<+\infty \) for each \(s\in [0,1]\); and

- (4):

there exists an interval \((a,b)\Subset (0,1)\) and a constant \(\eta _0:=\eta _0(a,b)\in (0,1]\) such that

$$\begin{aligned} \min _{t\in [a,b]}G(t,s)\ge \eta _0{\mathscr {G}}(s), \end{aligned}$$

for each \(s\in [0,1]\).

- H4::

Assume that for each \(i\in {\mathbb {N}}_1^{n_1}\) and \(j\in {\mathbb {N}}_1^{n_2}\) the maps

$$\begin{aligned} s\mapsto \frac{1}{{\mathscr {G}}(s)}\int _0^1G(t,s)\ d\alpha _i(t)\quad \text {and}\quad s\mapsto \frac{1}{{\mathscr {G}}(s)}\int _0^1G(t,s)\ d\beta _j(t) \end{aligned}$$

are defined for each \(s\in S_0\), where \(S_0\subseteq [0,1]\) has full measure (i.e., \(\left| S_0\right| =1\)), and that the constants defined by

$$\begin{aligned} C_0^i:=\inf _{s\in S_0}\frac{1}{{\mathscr {G}}(s)}\int _0^1G(t,s)\ d\alpha _i(t) \end{aligned}$$

and

$$\begin{aligned} D_0^j:=\inf _{s\in S_0}\frac{1}{{\mathscr {G}}(s)}\int _0^1G(t,s)\ d\beta _j(t) \end{aligned}$$

satisfy

$$\begin{aligned} C_0^i\text {, }D_0^j\in (0,+\infty ), \end{aligned}$$

for each \(i\in {\mathbb {N}}_1^{n_1}\) and \(j\in {\mathbb {N}}_1^{n_2}\).

- H5::

For \(k=1,2\) we have that

$$\begin{aligned} \varphi _i\left( \gamma _k\right) \ge C_0^i\Vert \gamma _k\Vert \text { and }\psi _j\left( \gamma _k\right) \ge D_0^j\Vert \gamma _k\Vert , \end{aligned}$$

for each \(i\in {\mathbb {N}}_1^{n_1}\) and \(j\in {\mathbb {N}}_1^{n_2}\), and also that

$$\begin{aligned} \min _{t\in [a,b]}\gamma _k(t)\ge \eta _0\Vert \gamma _k\Vert \end{aligned}$$

for each \(k=1,2\), where \(\eta _0\), a, and b are the same numbers as in condition (H3.4) above.

Remark 2.1

Note that by condition (H5) it follows that \({\mathcal {K}}\ne \varnothing \).
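To make conditions (H3.4) and (H4) concrete, the following Python sketch checks them on a grid for one illustrative kernel. Everything specific here is an assumption made for illustration and is not prescribed by the hypotheses: the kernel `G` is the Green's function of \(-y''\) with Dirichlet conditions, the interval is \([a,b]=\left[ \frac{1}{4},\frac{3}{4}\right] \) with \(\eta _0=\frac{1}{4}\), and the functionals are the point evaluations \(y\mapsto y\left( \frac{1}{2}\right) \) and \(y\mapsto y\left( \frac{3}{4}\right) \), for which the Stieltjes integral of \(G(\cdot ,s)\) is simply \(G(\tau ,s)\).

```python
def G(t: float, s: float) -> float:
    """Green's function of -y'' = h with y(0) = y(1) = 0 (illustrative kernel)."""
    return t * (1.0 - s) if t <= s else s * (1.0 - t)


def script_G(s: float) -> float:
    """sup over t in [0, 1] of G(t, s); for this kernel it equals s * (1 - s)."""
    return s * (1.0 - s)


a, b, eta0 = 0.25, 0.75, 0.25
s_grid = [k / 1000 for k in range(1, 1000)]            # interior points of (0, 1)
t_grid = [a + (b - a) * k / 200 for k in range(201)]   # points of [a, b]

# (H3.4): min over t in [a, b] of G(t, s) >= eta0 * script_G(s) for each s.
harnack_ok = all(
    min(G(t, s) for t in t_grid) >= eta0 * script_G(s) - 1e-12 for s in s_grid
)


def C0(tau: float) -> float:
    """Grid approximation of inf_s G(tau, s) / script_G(s) for phi(y) = y(tau)."""
    return min(G(tau, s) / script_G(s) for s in s_grid)


C0_half, C0_three_quarters = C0(0.5), C0(0.75)
print(harnack_ok, C0_half, C0_three_quarters)
```

For this kernel the infimum in (H4) is approached as \(s\rightarrow 0^+\) or \(s\rightarrow 1^-\), so the grid values slightly overestimate the constants; they are positive, which is what (H4) requires.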

Next we provide some properties of the sets \({\widehat{V}}_{\rho ,\varphi _i}\) and \({\widehat{V}}_{\rho ,\psi _j}\), which are important for the results that follow. The proofs of these results can be isolated from [11], and so, we do not repeat the proofs here. Note that in Lemma 2.2 and the sequel we denote by \(\Omega _{\rho }\), for \(\rho >0\), the set

$$\begin{aligned} \Omega _{\rho }:=\left\{ y\in {\mathcal {K}}\ : \ \Vert y\Vert <\rho \right\} . \end{aligned}$$

Lemma 2.2

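For each fixed \(\rho >0\), \(i\in {\mathbb {N}}_1^{n_1}\), and \(j\in {\mathbb {N}}_1^{n_2}\), it holds that

$$\begin{aligned} \Omega _{\rho /C_1^i}\subseteq {\widehat{V}}_{\rho ,\varphi _i}\subseteq \Omega _{\rho /C_0^i} \end{aligned}$$

and that

$$\begin{aligned} \Omega _{\rho /D_1^j}\subseteq {\widehat{V}}_{\rho ,\psi _j}\subseteq \Omega _{\rho /D_0^j}. \end{aligned}$$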

Lemma 2.3

For each fixed \(\rho >0\), \(i\in {\mathbb {N}}_1^{n_1}\), and \(j\in {\mathbb {N}}_1^{n_2}\), each of the sets \({\widehat{V}}_{\rho ,\varphi _i}\) and \({\widehat{V}}_{\rho ,\psi _j}\) defined in (1.5) is a (relatively) open set in \({\mathcal {K}}\) and, furthermore, is bounded.
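The way the coercivity constants control the size of the \({\widehat{V}}\)-type sets can be sketched in two lines. The following derivation is only a sketch under stated assumptions: we assume that each \(y\in {\mathcal {K}}\) satisfies \(\varphi _i(y)\ge C_0^i\Vert y\Vert \) (the coercivity built into the cone) and that \({\widehat{V}}_{\rho ,\varphi _i}\) collects those \(y\in {\mathcal {K}}\) with \(\varphi _i(y)<\rho \), as the proof of Theorem 2.5 indicates; the bound \(|\varphi _i(y)|\le C_1^i\Vert y\Vert \) is condition (H1).

```latex
% Sketch for y in K and a fixed index i, using
%   C_0^i ||y|| <= phi_i(y) <= C_1^i ||y||.
\begin{aligned}
\Vert y\Vert <\frac{\rho }{C_1^i}\ &\Longrightarrow \ \varphi _i(y)\le C_1^i\Vert y\Vert <\rho ,\\
\varphi _i(y)<\rho \ &\Longrightarrow \ \Vert y\Vert \le \frac{\varphi _i(y)}{C_0^i}<\frac{\rho }{C_0^i}.
\end{aligned}
```

Under these assumptions every element of \({\widehat{V}}_{\rho ,\varphi _i}\) has norm less than \(\rho /C_0^i\), while every \(y\in {\mathcal {K}}\) with \(\Vert y\Vert <\rho /C_1^i\) belongs to \({\widehat{V}}_{\rho ,\varphi _i}\); this is consistent with the bound \(\rho _2/C_0^j\) appearing in condition (2) of Theorem 2.5. The same reasoning applies to \(\psi _j\) with \(D_0^j\) and \(D_1^j\).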

We next introduce some notation that we use in this section.

Remark 2.4

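For compact sets \(X\subset [0,1]\) and \(Y\subseteq [0,+\infty )\) we denote by \(f_{X\times Y}^{m}\) the number

$$\begin{aligned} f_{X\times Y}^{m}:=\min _{(t,y)\in X\times Y}f(t,y) \end{aligned}$$

and by \(f_{X\times Y}^{M}\) the number

$$\begin{aligned} f_{X\times Y}^{M}:=\max _{(t,y)\in X\times Y}f(t,y). \end{aligned}$$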

Now we state and prove the first main existence result for problem (1.1), which considers the case in which \(F_1(\varvec{\xi })\not \equiv 0\) and \(F_2(\varvec{\xi })\equiv 0\). Note that in what follows we define the operator \(T\ : \ {\mathcal {K}}\rightarrow {\mathscr {C}}([0,1])\) by

$$\begin{aligned} (Ty)(t):=\gamma _1(t)F_1\big (\varphi _1(y),\dots ,\varphi _{n_1}(y)\big )+\gamma _2(t)F_2\big (\psi _1(y),\dots ,\psi _{n_2}(y)\big )+\lambda \int _0^1G(t,s)f(s,y(s))\ ds. \end{aligned}$$

Then, in the usual way, a fixed point of T corresponds to a solution of integral equation (1.1).

Theorem 2.5

Assume that conditions (H1)–(H5) are satisfied with \(\Vert \gamma _1\Vert >0\), \(F_1(\varvec{\xi })\not \equiv 0\), and \(F_2(\varvec{\xi })\equiv 0\). In addition, assume that there exist numbers \(\rho _1\), \(\rho _2>0\), with \(\rho _1\ne \rho _2\), such that each of the following is true.

- (1)

For the number \(\rho _1\) it holds that

$$\begin{aligned} \begin{aligned}&F_1(\varvec{0})\left( \min _{1\le j\le n_1}\varphi _{j}\left( \gamma _1\right) \right) \\&\quad \quad +\lambda \left( f_{[a,b]\times \left[ \frac{\eta _0\rho _1}{\max _{1\le j\le n_1}C_1^j},\frac{\rho _1}{\min _{1\le j\le n_1}C_0^j}\right] }^m\right) \min _{1\le j\le n_1}\int _a^b\int _0^1 G(t,s)\ d\alpha _{j}(t)\ ds>\rho _1, \end{aligned} \end{aligned}$$

for the numbers \(a\), \(b\in {\mathbb {R}}\) in condition (H3.4).

- (2)

For the number \(\rho _2\) it holds that

$$\begin{aligned} \begin{aligned}&F_1(\rho _2,\rho _2,\dots ,\rho _2)\left( \max _{1\le j\le n_1}\varphi _{j}\left( \gamma _1\right) \right) \\&\quad +\lambda \left( f_{[0,1]\times \left[ 0,\min _{1\le j\le n_1}\frac{\rho _2}{C_0^j}\right] }^{M}\right) \max _{1\le j\le n_1}\int _0^1\int _0^1 G(t,s)\ d\alpha _{j}(t)\ ds<\rho _2. \end{aligned} \end{aligned}$$

Then problem (1.1) has at least one positive solution.
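Once the constants are known, conditions (1) and (2) of Theorem 2.5 are plain numerical inequalities. The following Python sketch evaluates them; the function name `theorem_2_5_conditions`, its parameters, and the sample numbers in the usage lines are hypothetical and chosen only for illustration. The caller is assumed to supply \(f^m\) and \(f^M\) already computed on the boxes named in the theorem.

```python
import math
from typing import Callable, Sequence, Tuple


def theorem_2_5_conditions(
    F1: Callable[[Sequence[float]], float],
    phi_gamma1: Sequence[float],  # phi_j(gamma_1), j = 1..n_1
    inner_ab: Sequence[float],    # int_a^b int_0^1 G(t,s) d alpha_j(t) ds
    inner_01: Sequence[float],    # int_0^1 int_0^1 G(t,s) d alpha_j(t) ds
    f_min: float,                 # f^m on the box of condition (1)
    f_max: float,                 # f^M on the box of condition (2)
    lam: float,
    rho1: float,
    rho2: float,
) -> Tuple[bool, bool]:
    """Return (condition (1) holds, condition (2) holds)."""
    if rho1 <= 0 or rho2 <= 0 or rho1 == rho2:
        raise ValueError("rho1 and rho2 must be positive and distinct")
    n1 = len(phi_gamma1)
    lhs1 = F1([0.0] * n1) * min(phi_gamma1) + lam * f_min * min(inner_ab)
    lhs2 = F1([rho2] * n1) * max(phi_gamma1) + lam * f_max * max(inner_01)
    return (lhs1 > rho1, lhs2 < rho2)


# Hypothetical data with n_1 = 2 (all numbers below are assumptions).
conds = theorem_2_5_conditions(
    F1=lambda xi: xi[0] ** 2 + math.sqrt(xi[1]),
    phi_gamma1=[0.5, 0.25],
    inner_ab=[3 / 32, 1 / 16],
    inner_01=[1 / 8, 3 / 32],
    f_min=70.0,
    f_max=0.02,
    lam=1.0,
    rho1=4.0,
    rho2=0.4,
)
print(conds)
```

Note that \(F_1\) enters only through the two values \(F_1(\varvec{0})\) and \(F_1(\rho _2,\dots ,\rho _2)\), which reflects the pointwise character of the hypotheses.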

Proof

It is clear that the operator T is completely continuous due to the regularity conditions imposed by conditions (H1)–(H5). At the same time by repeating arguments given in [11] one can show that \(T({\mathcal {K}})\subseteq {\mathcal {K}}\). So, we do not show that here.

Instead we will now show that \(\mu y\ne Ty\) for all \(\mu \ge 1\) and \( y\in \partial \left( \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _2,\varphi _j}\right) \). To this end, suppose for contradiction that there exists a number \(\mu \ge 1\) and a function \( y\in \partial \left( \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _2,\varphi _j}\right) \) such that \(\mu y=Ty\). Now, since

we know that

for each \(1\le j\le n_1\). Furthermore, because y is in the boundary of \(\bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _2,\varphi _j}\), there must exist an index \(j_0\in {\mathbb {N}}_{1}^{n_1}\) such that

Consequently, applying \(\varphi _{j_0}\) to both sides of the operator equation \(\mu y=Ty\) we obtain

Moreover, since \(\varvec{\xi }\mapsto F_1(\varvec{\xi })\) is a monotone map it follows that

where we have utilized inequality (2.1). Thus, we conclude that

Now, as mentioned above we have that \(\varphi _j(y)\le \rho _2\) for each \(j\in {\mathbb {N}}_1^{n_1}\). Consequently, by the coercivity of the functionals \(\varphi _j\), it follows that

for each \(j\in {\mathbb {N}}_1^{n_1}\). Therefore, we conclude that

for each \(j\in {\mathbb {N}}_1^{n_1}\). In particular, this means that

In addition, we notice that

Thus, from the preceding inequality, estimate (2.2), and condition (2) in the statement of the theorem we estimate

which is a contradiction. Consequently, we conclude that

On the other hand, let \(e(t):=\gamma _1(t)\); recall that \(\Vert \gamma _1\Vert \ne 0\) and that \(\gamma _1\in {\mathcal {K}}\). We claim that \(y\ne Ty+\mu e\) for each \(\mu \ge 0\) and \( y\in \partial \left( \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _1,\varphi _j}\right) \). So, suppose for contradiction that there do exist \(\mu \ge 0\) and \( y\in \partial \left( \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _1,\varphi _j}\right) \) such that \(y=Ty+\mu e\).

Now, since \( y\in \partial \left( \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _1,\varphi _j}\right) \) it follows that

for all \(1\le j\le n_1\). As in the preceding part of the proof, there must exist an index \(j_0\in {\mathbb {N}}_1^{n_1}\) such that \(\varphi _{j_0}(y)=\rho _1\). Applying this functional to both sides of the equation \(y=Ty+\mu e\) we obtain the estimate

Moreover, together with the monotonicity of the map \(\varvec{\xi }\mapsto F_1(\varvec{\xi })\), it follows that

Consequently, we deduce that

At the same time, since \(\varphi _{j_0}(y)=\rho _1\) we know that

whence

Similarly, since \( y\in \partial \left( \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _1,\varphi _j}\right) \), it follows that

for each \(j\in {\mathbb {N}}_1^{n_1}\), from which it follows that

for each \(t\in [0,1]\). Likewise it follows that

for each \(s\in [a,b]\). All in all, then, we conclude that

for each \(t\in [a,b]\). Consequently, we deduce that for \( y\in \partial \left( \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _1,\varphi _j}\right) \) we must have that

for each \(t\in [a,b]\). It thus follows from the above estimates together with inequality (2.3) that

which is a contradiction; note that to obtain inequality (2.4) we have used that

a.e. \(s\in [0,1]\). Therefore, we conclude that

In summary, we conclude that there exists a function \(y_0\) satisfying

if \(\rho _2>\rho _1>0\), or satisfying

if \(\rho _1>\rho _2>0\), such that \(Ty_0=y_0\). Note that if \( y\in \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _1,\varphi _j}\), then \(\varphi _j(y)<\rho _1\) for each \(1\le j\le n_1\). At the same time, if \( y\in \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _2,\varphi _j}\), then \(\varphi _j(y)<\rho _2\) for each \(1\le j\le n_1\). Therefore, if \(\rho _2>\rho _1>0\), then it follows that if \( y\in \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _1,\varphi _j}\), then

for each \(1\le j\le n_1\), whence \( y\in \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _2,\varphi _j}\). Consequently, we conclude that

and this inclusion is strict provided that \(\rho _1\ne \rho _2\). Similarly, if \(\rho _1>\rho _2>0\), then

with strict inclusion. In other words, we may safely conclude that

in case \(\rho _2>\rho _1>0\), and similarly that

in case \(\rho _1>\rho _2>0\), thus ensuring that \(y_0\) does not belong to the empty set. Finally, since the function \(y_0\) is a positive solution of problem (1.1), the proof is thus complete. \(\square \)

We next present a variation of Theorem 2.5 that allows for slightly more flexibility in some circumstances.

Corollary 2.6

Assume that conditions (H1)–(H5) are satisfied with \(\Vert \gamma _1\Vert >0\), \(F_1(\varvec{\xi })\not \equiv 0\), and \(F_2(\varvec{\xi })\equiv 0\). In addition, assume that there exist numbers \(\rho _1\), \(\rho _2>0\), with \(\rho _1\ne \rho _2\), such that each of the following is true.

- (1)

For the number \(\rho _1\) it holds that

$$\begin{aligned} \begin{aligned}&F_1\left( \frac{\rho _1C_0^1}{\max _{1\le j\le n_1}C_1^j},\frac{\rho _1C_0^2}{\max _{1\le j\le n_1}C_1^j},\dots ,\frac{\rho _1C_0^{n_1}}{\max _{1\le j\le n_1}C_1^j}\right) \left( \min _{1\le j\le n_1}\varphi _{j}\left( \gamma _1\right) \right) \\&\quad \quad +\lambda \left( f_{[a,b]\times \left[ \frac{\eta _0\rho _1}{\max _{1\le j\le n_1}C_1^j},\frac{\rho _1}{\min _{1\le j\le n_1}C_0^j}\right] }^m\right) \min _{1\le j\le n_1}\int _a^b\int _0^1 G(t,s)\ d\alpha _{j}(t)\ ds>\rho _1, \end{aligned} \end{aligned}$$

for the numbers \(a\), \(b\in {\mathbb {R}}\) in condition (H3.4).

- (2)

For the number \(\rho _2\) it holds that

$$\begin{aligned} \begin{aligned}&F_1(\rho _2,\rho _2,\dots ,\rho _2)\left( \max _{1\le j\le n_1}\varphi _{j}\left( \gamma _1\right) \right) \\&\quad +\lambda \left( f_{[0,1]\times \left[ 0,\min _{1\le j\le n_1}\frac{\rho _2}{C_0^j}\right] }^{M}\right) \max _{1\le j\le n_1}\int _0^1\int _0^1 G(t,s)\ d\alpha _{j}(t)\ ds<\rho _2. \end{aligned} \end{aligned}$$

Then problem (1.1) has at least one positive solution.

Proof

The proof is essentially the same as that of Theorem 2.5 with one exception. Instead of using the monotonicity of \(F_1\) to write

we instead recall that

for each \(j\in {\mathbb {N}}_1^{n_1}\). Then noticing that

for each \(j\in {\mathbb {N}}_1^{n_1}\), it follows upon combining these two preceding estimates that

from which the desired conclusion follows. \(\square \)

Remark 2.7

Evidently numerous variations of the argument in condition (1) of Corollary 2.6 can be written.

Numerous other variations of Theorem 2.5 may be provided if one desires. For example, instead of applying \(\varphi _{j_0}\) to both sides of the operator equation \(\mu y=Ty\) in the first part of the proof of Theorem 2.5, we could instead apply \(\psi _{j_0}\). Nonetheless, for the sake of brevity we provide only one such corollary. This corollary demonstrates that if both \(F_1(\varvec{\xi })\not \equiv 0\) and \(F_2(\varvec{\xi })\not \equiv 0\), then a modified version of Theorem 2.5 may be written.

Corollary 2.8

Assume that conditions (H1)–(H5) are satisfied. In addition, assume that there exist numbers \(\rho _1\), \(\rho _2>0\), with \(\rho _1\ne \rho _2\), such that each of the following is true.

- (1)

For the number \(\rho _1\) it holds that

$$\begin{aligned} \begin{aligned}&F_1(\varvec{0})\left( \min _{1\le j\le n_1}\varphi _{j}\left( \gamma _1\right) \right) \\&\quad \quad +\lambda \left( f_{[a,b]\times \left[ \frac{\eta _0\rho _1}{\max _{1\le j\le n_1}C_1^j},\frac{\rho _1}{\min _{1\le j\le n_1}C_0^j}\right] }^m\right) \min _{1\le j\le n_1}\int _a^b\int _0^1 G(t,s)\ d\alpha _{j}(t)\ ds>\rho _1. \end{aligned} \end{aligned}$$

- (2)

For the number \(\rho _2\) it holds that

$$\begin{aligned} \begin{aligned}&F_1(\rho _2,\rho _2,\dots ,\rho _2)\left( \max _{1\le j\le n_1}\varphi _j\left( \gamma _1\right) \right) \\&\quad +F_2\left( D_1^1\min _{1\le j\le n_1}\left\{ \frac{\rho _2}{C_0^j}\right\} ,\dots ,D_1^{n_2}\min _{1\le j\le n_1}\left\{ \frac{\rho _2}{C_0^j}\right\} \right) \left( \max _{1\le j\le n_1}\varphi _j\left( \gamma _2\right) \right) \\&\quad +\lambda \left( f_{[0,1]\times \left[ 0,\min _{1\le j\le n_1}\frac{\rho _2}{C_0^j}\right] }^{M}\right) \max _{1\le j\le n_1}\int _0^1\int _0^1 G(t,s)\ d\alpha _{j}(t)\ ds\\&<\rho _2. \end{aligned} \end{aligned}$$

Then problem (1.1) has at least one positive solution.

Proof

Since \((t,\varvec{\xi })\mapsto \gamma _2(t)F_2(\varvec{\xi })\) is a nonnegative map, by repeating exactly the same steps as in the proof of Theorem 2.5 we obtain that

So, we will not repeat that part of the proof.

On the other hand, the proof that

changes slightly. As before, assume for contradiction the existence of \(\mu \ge 1\) and \( y\in \partial \left( \bigcap _{j=1}^{n_1}{\widehat{V}}_{\rho _2,\varphi _j}\right) \) such that \(\mu y=Ty\). Then \(\varphi _j(y)\le \rho _2\) for each \(1\le j\le n_1\) and there exists \(j_0\in {\mathbb {N}}_1^{n_1}\) such that \(\varphi _{j_0}(y)=\rho _2\). Just as in the proof of Theorem 2.5 we find that

Now, as calculated in the proof of Theorem 2.5 we notice that

This means that

for each \(j\in {\mathbb {N}}_1^{n_1}\). Since the map \(\varvec{\xi }\mapsto F_2(\varvec{\xi })\) is increasing, it follows that

And from the preceding estimates we conclude that

Consequently, condition (2) in the statement of the corollary yields the contradiction \(\rho _2<\rho _2\), and so, we conclude that

Thus, in the end we are able to arrive at the same conclusion as in the proof of Theorem 2.5. And this completes the proof. \(\square \)

We conclude with a couple of examples to illustrate the application of the result and, moreover, to compare its application to other related results in the literature. We will specialize our example to the case in which \(n_1=2\) and \(F_2(\varvec{\xi })\equiv 0\) in order to keep the example clearer and more manageable. More complicated situations can be treated, of course.

In addition, while this example illustrates the application of the results to the case of a second-order ODE with nonlocal BCs, as was mentioned in Sect. 1 it is easy to see that the same calculations lead to applications to radially symmetric solutions of elliptic PDEs as well as beam deflection models with nonlocal controllers. Since these extensions are straightforward, we give the example in the situation of a second-order ODE to focus more clearly on the application of the results rather than various applications.

Example 2.9

Consider the boundary value problem given by

Observe that in this case we define \(F_1\), \(F_2\ : \ [0,+\infty )\times [0,+\infty )\rightarrow {\mathbb {R}}\) by

If we put \(\gamma _1(t):=1-t\) and \(\gamma _2(t)\equiv 0\) as well as

in integral equation (1.1), then solutions of the integral equation correspond to solutions of boundary value problem (2.5). Notice, importantly, that the map \(\varvec{x}\mapsto F_1(\varvec{x})\) is increasing in each argument in the sense that if \(\varvec{x}\le \varvec{y}\), then \(F_1(\varvec{x})\le F_1(\varvec{y})\).

We now perform some preliminary calculations. Notice that

In addition, we calculate the following; note that we have elected to put \([a,b]:=\left[ \frac{1}{4},\frac{3}{4}\right] \) in this example.

So,

Note also that \(\eta _0:=\frac{1}{4}\) here and that each of the following is true.

Then using (2.6)–(2.7) we see that condition (1) in Theorem 2.5 reduces to

whereas condition (2) reduces to

We note that in order for condition (2) to be satisfied we must have that

and this inequality is satisfied only if \(\rho _2\in (0.382,1)\) to three decimal places of accuracy; thus, it is not a vacuous condition. For example, if we select \(\rho _1:=4\) and \(\rho _2:=\frac{2}{5}\), then both conditions are satisfied provided that

and

In other words, problem (1.1) will have at least one positive solution, \(y_0\), satisfying the localization \( y_0\in \overline{{\widehat{V}}_{4}}\setminus {\widehat{V}}_{0.4}\) provided that

assuming that this set is nonempty, which requires that

Alternatively, if we set \(\lambda =1\), then problem (2.5) becomes

It then follows from Theorem 2.5 that problem (2.8) will have at least one positive solution provided that f satisfies (to three decimal places of accuracy)

Remark 2.10

It is worth considering the result of Example 2.9 in the context of the recent results by Cianciaruso, Infante, and Pietramala [7]. In particular, we will show that while the methodology developed in our paper applies easily to problem (2.5) as demonstrated by Example 2.9, the methodology developed in [7] does not apply so easily to the same problem.

Utilizing the methodology developed in [7] and applying it to problem (2.5) we would need to find, among other things, a linear functional \(\alpha ^{\rho }\) such that

for each \(y\in \partial \Omega _{\rho }:=\{y\in {\mathscr {C}}([0,1])\ : \ \Vert y\Vert =\rho \}\), for some number \(\rho >0\). Importantly, \(\alpha ^{\rho }\) must satisfy the auxiliary condition

Suppose that we choose

for some constant \(A>0\) to be determined. Condition (2.9) requires that

so that \(A<2\). Since

and

if we employ the same Harnack inequality as before (as the authors of [7] do as well), namely \(\min _{\frac{1}{4}\le t\le \frac{3}{4}}y(t)\ge \frac{1}{4}\Vert y\Vert \), then it follows that if

then \(\left( y\left( \frac{1}{2}\right) \right) ^2+\sqrt{y\left( \frac{3}{4}\right) }<\alpha ^{\rho }(y)\) will hold as desired. As A increases, the range of values of \(\Vert y\Vert \) such that (2.10) is satisfied increases. Recalling from above that \(A<2\) must hold, if we put \(A=2\), then (2.10) becomes

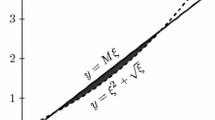

which has no real-valued solutions in \(\Vert y\Vert \). In other words, a straightforward analysis of \(\alpha ^{\rho }\) does not show it to be an admissible “upper bound functional” in the methodology of [7]. Similar comments apply to the choice \(\alpha ^{\rho }(y):=Ay\left( \frac{3}{4}\right) \). The drawing below shows the configuration of the graph of the maps \(\xi \mapsto \xi ^2+\sqrt{\xi }\) and \(\xi \mapsto \frac{1}{2}\xi \).

Notice that the dashed curve, which is the graph of \(y=\xi ^2+\sqrt{\xi }\), lies above the solid curve, which is the graph of \( y=\frac{1}{2}\xi \).
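The ordering of the two curves can also be checked directly. For \(0<\xi \le 1\) we have \(\sqrt{\xi }\ge \xi >\frac{1}{2}\xi \), and for \(\xi >1\) we have \(\xi ^2>\xi >\frac{1}{2}\xi \), so \(\xi ^2+\sqrt{\xi }>\frac{1}{2}\xi \) for every \(\xi >0\). The following is a minimal numerical sketch of the same comparison. The function names `curve` and `line`, the grid, and its range are illustrative assumptions and are not part of the paper.

```python
import math


def curve(xi: float) -> float:
    """The map xi -> xi^2 + sqrt(xi) (the dashed curve in the drawing)."""
    return xi**2 + math.sqrt(xi)


def line(xi: float, slope: float = 0.5) -> float:
    """The map xi -> slope * xi (the solid curve when slope = 1/2)."""
    return slope * xi


# Illustrative log-spaced grid on [1e-6, 1e3]; the range is an assumption,
# chosen only so that both small and large values of xi are sampled.
grid = [10 ** (-6 + 9 * k / 20000) for k in range(20001)]

# True if the dashed curve is strictly above the solid line at every grid point.
always_above = all(curve(xi) > line(xi) for xi in grid)

# Smallest sampled value of curve(xi) / xi. A line slope * xi can rise above
# the curve somewhere only if slope exceeds the infimum of this ratio.
min_ratio = min(curve(xi) / xi for xi in grid)

print(always_above)
print(min_ratio)
```

On this grid the smallest ratio is approximately 1.89, in agreement with the exact minimum \(3\cdot 2^{-2/3}\) of \(\xi +\xi ^{-1/2}\), attained at \(\xi =2^{-2/3}\). A line through the origin therefore needs slope larger than about 1.89 before it can lie above the curve anywhere, which is far from the slope \(\frac{1}{2}\) considered here.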

On the other hand, if we try

for constants A, \(B>0\) to be determined, then since we again need that \({\widehat{\alpha }}\left( \gamma _1\right) <1\) it follows that the constants A and B must satisfy

At the same time using the Harnack inequality \(\min _{\frac{1}{4}\le t\le \frac{3}{4}}y(t)\ge \frac{1}{4}\Vert y\Vert \) we may estimate \({\widehat{\alpha }}^{\rho }\) from below by

Thus, if

is satisfied for each \(y\in \partial \Omega _{\rho }\), for some \(\rho >0\), then the functional \({\widehat{\alpha }}^{\rho }\) will serve as a suitable upper bound, provided that inequality (2.11) holds. But conditions (2.11)–(2.12) are not compatible since there exist no values of A, B, \(\rho >0\) such that \(\Vert y\Vert =\rho \) implies (2.11)–(2.12).

All in all, as regards problem (2.5), we see that the methodology presented in this paper may be more easily applicable, since it does not rely on discovering a functional \(\alpha ^{\rho }\) and then demonstrating that it acts as a suitable upper bound on the nonlocal element. Rather, by means of \({\mathcal {K}}\) and the \({\widehat{V}}\)-type sets, we can restrict our analysis to “pointwise values”. So, in some cases the methodology introduced here may be easier and more flexible to apply than competing methodologies.

Remark 2.11

We remark that while we have compared our methodology with competing methodologies in the case of nonlinear, nonlocal boundary conditions, Theorem 2.5 nonetheless also applies in the case of linear nonlocal boundary conditions.

References

Anderson, D.R., Hoffacker, J.: Existence of solutions for a cantilever beam problem. J. Math. Anal. Appl. 323, 958–973 (2006)

Anderson, D.R.: Existence of three solutions for a first-order problem with nonlinear nonlocal boundary conditions. J. Math. Anal. Appl. 408, 318–323 (2013)

Cabada, A., Cid, J.A., Infante, G.: A positive fixed point theorem with applications to systems of Hammerstein integral equations. Bound. Value Probl. 2014, 254 (2014)

Cabada, A., Infante, G., Tojo, F.A.F.: Nonzero solutions of perturbed Hammerstein integral equations with deviated arguments and applications. Topol. Methods Nonlinear Anal. 47, 265–287 (2016)

Cabada, A., Infante, G., Tojo, F.A.F.: Nonlinear perturbed integral equations related to nonlocal boundary value problems. Fixed Point Theory 19, 65–92 (2018)

Chipot, M., Evans, L.C.: Linearisation at infinity and Lipschitz estimates for certain problems in the calculus of variations. Proc. R. Soc. Edinb. Sect. A 102, 291–303 (1986)

Cianciaruso, F., Infante, G., Pietramala, P.: Solutions of perturbed Hammerstein integral equations with applications. Nonlinear Anal. Real World Appl. 33, 317–347 (2017)

Foss, M., Goodrich, C.S.: On partial Hölder continuity and a Caccioppoli inequality for minimizers of asymptotically convex functionals between Riemannian manifolds. Ann. Mat. Pura Appl. (4) 195, 1405–1461 (2016)

Goodrich, C.S.: Positive solutions to boundary value problems with nonlinear boundary conditions. Nonlinear Anal. 75, 417–432 (2012)

Goodrich, C.S.: On nonlinear boundary conditions satisfying certain asymptotic behavior. Nonlinear Anal. 76, 58–67 (2013)

Goodrich, C.S.: Coercive nonlocal elements in fractional differential equations. Positivity 21, 377–394 (2017)

Goodrich, C.S.: The effect of a nonstandard cone on existence theorem applicability in nonlocal boundary value problems. J. Fixed Point Theory Appl. 19, 2629–2646 (2017)

Goodrich, C.S.: New Harnack inequalities and existence theorems for radially symmetric solutions of elliptic PDEs with sign changing or vanishing Green’s function. J. Differ. Equ. 264, 236–262 (2018)

Goodrich, C.S.: Radially symmetric solutions of elliptic PDEs with uniformly negative weight. Ann. Mat. Pura Appl. (4) 197, 1585–1611 (2018)

Guo, D., Lakshmikantham, V.: Nonlinear Problems in Abstract Cones. Academic Press, Boston (1988)

Infante, G.: Nonlocal boundary value problems with two nonlinear boundary conditions. Commun. Appl. Anal. 12, 279–288 (2008)

Infante, G., Pietramala, P.: A cantilever equation with nonlinear boundary conditions. Electron. J. Qual. Theory Differ. Equ., Special Edition I, No. 15 (2009)

Infante, G., Pietramala, P.: Multiple nonnegative solutions of systems with coupled nonlinear boundary conditions. Math. Methods Appl. Sci. 37, 2080–2090 (2014)

Infante, G., Pietramala, P.: Nonzero radial solutions for a class of elliptic systems with nonlocal BCs on annular domains. NoDEA Nonlinear Differ. Equ. Appl. 22, 979–1003 (2015)

Infante, G., Minhós, F., Pietramala, P.: Non-negative solutions of systems of ODEs with coupled boundary conditions. Commun. Nonlinear Sci. Numer. Simul. 17, 4952–4960 (2012)

Jankowski, T.: Positive solutions to fractional differential equations involving Stieltjes integral conditions. Appl. Math. Comput. 241, 200–213 (2014)

Karakostas, G.L., Tsamatos, P.Ch.: Existence of multiple positive solutions for a nonlocal boundary value problem. Topol. Methods Nonlinear Anal. 19, 109–121 (2002)

Karakostas, G.L., Tsamatos, P.Ch.: Multiple positive solutions of some Fredholm integral equations arisen from nonlocal boundary-value problems. Electron. J. Differ. Equ. 30, 17 (2002)

Karakostas, G.L.: Existence of solutions for an \(n\)-dimensional operator equation and applications to BVPs. Electron. J. Differ. Equ. (71) (2014)

Lan, K.Q.: Multiple positive solutions of semilinear differential equations with singularities. J. Lond. Math. Soc. (2) 63, 690–704 (2001)

Lan, K.Q., Webb, J.R.L.: Positive solutions of semilinear differential equations with singularities. J. Differ. Equ. 148, 407–421 (1998)

Picone, M.: Su un problema al contorno nelle equazioni differenziali lineari ordinarie del secondo ordine. Ann. Scuola Norm. Sup. Pisa Cl. Sci. 10, 1–95 (1908)

Webb, J.R.L., Infante, G.: Positive solutions of nonlocal boundary value problems: a unified approach. J. Lond. Math. Soc. (2) 74, 673–693 (2006)

Webb, J.R.L., Infante, G.: Non-local boundary value problems of arbitrary order. J. Lond. Math. Soc. (2) 79, 238–258 (2009)

Whyburn, W.M.: Differential equations with general boundary conditions. Bull. Am. Math. Soc. 48, 692–704 (1942)

Yang, Z.: Existence and nonexistence results for positive solutions of an integral boundary value problem. Nonlinear Anal. 65, 1489–1511 (2006)

Yang, Z.: Existence of nontrivial solutions for a nonlinear Sturm-Liouville problem with integral boundary conditions. Nonlinear Anal. 68, 216–225 (2008)

Zeidler, E.: Nonlinear Functional Analysis and Its Applications, I: Fixed-Point Theorems. Springer, New York (1986)

Additional information

Communicated by Ti-Jun Xiao.

Cite this article

Goodrich, C.S. Pointwise conditions for perturbed Hammerstein integral equations with monotone nonlinear, nonlocal elements. Banach J. Math. Anal. 14, 290–312 (2020). https://doi.org/10.1007/s43037-019-00017-1

DOI: https://doi.org/10.1007/s43037-019-00017-1

Keywords

- Hammerstein integral equation

- Nonlocal boundary condition

- Nonlinear boundary condition

- Positive solution

- Coercivity