Abstract

This research presents a comprehensive study on predicting the compressive strength (CS) of PET-fiber-reinforced concrete (PFRC) using three decision tree-based machine learning models: Decision Tree (DT), Random Forest (RF), and Gradient Boosting Machine (GBM) regressors. To enhance the predictive capabilities of these models, their hyperparameters were optimized using the metaheuristic Dolphin Echolocation Optimization (DEO) technique. The input features considered for the models are the binder content, water-to-binder (W/B) ratio, coarse and fine aggregate contents, and PET fiber volume fraction. The target variable is the compressive strength of the concrete samples. Extensive experimentation was conducted to analyze and compare the effectiveness of each model. The results demonstrate that the DEO-tuned Random Forest outperformed the other two models, achieving the highest accuracy in predicting the CS of PFRC. SHAP (Shapley Additive Explanations) and Sobol sensitivity analyses were conducted to explore how sensitive the compressive strength prediction is to each input feature. The Sobol sensitivity analysis assessed the significance of the input features and their interactions, whereas the SHAP values revealed the specific effect of each feature on the model output. The sensitivity analyses identified the binder content, fiber volume fraction, and W/B ratio as the most influential factors in determining compressive strength.

Introduction

Owing to its distinctive properties and potential advantages, PET fiber-reinforced concrete (PFRC) has received considerable interest in civil engineering. In PFRC, PET fibers are incorporated into the concrete mixture, which enhances the mechanical and durability characteristics of the resulting composite material (Marthong & Marthong, 2016). One of the main benefits of PET fiber reinforcement is improved tensile strength and toughness: concrete, although strong in compression, is inherently weak in tension (Weckert et al., 2011). PET fibers bridge the micro-cracks that form during the early stages of loading, distributing stress and limiting crack propagation (Benkharbeche et al., 2021). This property makes PFRC particularly suitable for applications where enhanced crack resistance is desired, such as pavements, industrial floors, and precast elements. Another significant benefit of PFRC is its effect on the durability and service life of concrete structures. PET fibers act as a barrier against the ingress of water, chloride ions, and other harmful substances that can corrode reinforcing steel or deteriorate the concrete matrix (Naidu Gopu & Joseph, 2022). By mitigating damage from chemical attack, PFRC offers improved resistance to environmental factors and can extend the lifespan of structures, reducing maintenance and repair costs. PFRC also contributes to sustainable construction because it uses recycled PET. Incorporating PET fibers in concrete offers an environmentally friendly response to the growing problem of plastic waste (Rao et al., 2022, 2023): diverting PET bottles and other plastic waste from landfills and repurposing them as construction materials provides a valuable avenue for recycling and waste reduction. The procedure for producing PET fibers of various aspect ratios (AR) is illustrated in Fig. 1.

Generation of PET fiber (Meza et al., 2021)

Strength is an important characteristic of any cementitious material (Parhi et al., 2023; Pradhan et al., 2022c), and higher strength is generally an indicator of good durability (2022b; Pradhan et al., 2022a). Predicting compressive strength therefore plays a vital role in evaluating and optimizing construction materials, including PFRC, which is gaining attention in the construction industry for its improved mechanical properties and environmental benefits. Accurate prediction of the CS of PFRC supports informed decisions throughout the planning, design, construction, and management of infrastructure projects (Nafees et al., 2023). Machine learning algorithms have shown their potential for accurately predicting material properties, including compressive strength, by learning complex relationships from historical data (Kaveh & Khalegi, 1998; Kaveh & Khavaninzadeh, 2023; Parhi & Patro, 2023; Singh et al., 2023; Parhi & Panigrahi, 2023). Reliable prediction models help engineers optimize mixture proportions, select suitable reinforcement strategies, and meet strength specifications, which in turn improves the structural performance, durability, cost-effectiveness, and sustainability of construction projects.

To predict the CS of PFRC, three decision tree-based machine learning models, namely Decision Tree, Random Forest, and Gradient Boosting Machine regressors, were used in this study. The hyperparameters of all the models were optimized using the metaheuristic Dolphin Echolocation Optimization technique. SHAP and Sobol sensitivity analyses were used to assess the sensitivity of compressive strength to each input feature. All the models were developed in Python on Google Colab.

Research significance

This study implements three decision tree-based machine learning regressors, i.e., Decision Tree, Random Forest, and Gradient Boosting Machine, to predict the CS of PFRC. Decision trees are easy to interpret because their decision-making process can be visualized as a tree of clear if–else rules. They can handle both numerical and categorical data without extensive normalization or scaling, since their splits depend only on the ordering of feature values. Decision trees are also less sensitive to outliers, train and predict quickly, especially on small data sets, and make minimal assumptions about the data distribution, which suits them to non-linear problems. Random forests construct multiple decision trees and combine their predictions, which improves accuracy and reduces overfitting compared with a single decision tree. They mitigate the impact of noisy data and provide feature-importance estimates that aid feature selection. In addition, the individual trees of a random forest are trained independently, so training is easily parallelized. Gradient Boosting Machines (GBMs) achieve high predictive accuracy by combining the predictions of many weak learners, typically decision trees. Many GBM implementations handle missing data without extensive imputation and accommodate various data types, including numerical and categorical variables. GBMs also work with a variety of loss functions, which makes them well suited to regression tasks.

These methods also have limitations. Decision trees tend to overfit, particularly when they become deep and complex, which results in poor generalization to unseen data. In addition, the discrete splits used by decision trees produce piecewise-constant predictions that may not capture smooth, continuous relationships well. Decision trees are also unstable: slight variations in the training data can produce very different trees. Random forests, as ensembles of many decision trees, are harder to interpret than a single tree. They also require more computational resources and time than individual decision trees, especially when handling large data sets or many trees, and storing many trees increases memory consumption. GBMs can be sensitive to noisy data because the boosting process keeps trying to fit the noisy samples during training, which increases the risk of overfitting. GBMs with many iterations or deep trees can be computationally expensive and memory-intensive. Furthermore, tuning GBM hyperparameters such as the learning rate, number of iterations, and tree depth is non-trivial and requires careful parameter optimization.

To address these limitations, the metaheuristic Dolphin Echolocation Optimization method was used for hyperparameter optimization. Its adaptability, efficient exploration of the search space, and robustness are intended to increase the prediction accuracy of the models. A database was compiled from the published literature and pre-processed. Tenfold cross-validation was used for training and testing to obtain the best-performing model. Finally, two sensitivity analysis methods, SHAP and Sobol, were used to assess the influence of the input features.

Machine learning algorithms

Decision tree (DT)

A Decision Tree Regressor is a machine learning algorithm for regression tasks, that is, for predicting continuous numerical values. It uses a hierarchical structure of decision nodes and leaf nodes to make predictions from the input features (Gu et al., 2021). The Decision Tree Regressor works by recursively splitting the data based on the values of the input features (de Ville, 2013). At each decision node, it selects the most informative feature and threshold by maximizing the reduction in variance or another suitable criterion, so that the resulting subsets are as homogeneous as possible in terms of the target variable. Each leaf node holds a prediction value for the target variable, typically the mean target value of the training samples that reach it. When a new data point is encountered, it traverses the tree from the root node to a leaf according to its feature values, and the value associated with that leaf is returned as the output. A key advantage of the Decision Tree Regressor is its interpretability: the resulting tree structure reveals how the features relate to the target variable and makes the decision-making process easy to follow. In addition, decision trees can handle both numerical and categorical features and, in many implementations, missing values without extensive pre-processing. Its hierarchical structure, interpretability, and ability to handle various feature types make the Decision Tree Regressor a versatile and popular choice for regression tasks. Figure 2 shows the flow chart of the decision tree regressor algorithm.
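The split criterion can be made concrete with a short example. The sketch below searches one numeric feature for the threshold that maximizes the reduction in the sum of squared errors, which is the same as the variance reduction weighted by node size. It is a minimal illustration, not the implementation used in this study. The function name `best_split` and the W/B and strength values are hypothetical.

```python
import numpy as np


def best_split(x, y):
    """Return (threshold, sse_reduction) for the best binary split of one feature.

    The reduction is SSE(parent) - [SSE(left) + SSE(right)], i.e. the
    size-weighted variance reduction that regression trees typically maximize.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    order = np.argsort(x)
    xs, ys = x[order], y[order]
    parent_sse = np.sum((ys - ys.mean()) ** 2)
    best_thr, best_gain = None, 0.0
    for i in range(1, len(ys)):
        if xs[i] == xs[i - 1]:
            continue  # identical feature values cannot be separated
        left, right = ys[:i], ys[i:]
        child_sse = np.sum((left - left.mean()) ** 2) + np.sum((right - right.mean()) ** 2)
        gain = parent_sse - child_sse
        if gain > best_gain:
            # Place the threshold midway between the two neighboring values
            best_thr, best_gain = (xs[i - 1] + xs[i]) / 2, gain
    return best_thr, best_gain


# Hypothetical W/B ratios and compressive strengths (MPa), for illustration only
wb = [0.35, 0.40, 0.45, 0.50, 0.55, 0.60]
cs = [52, 50, 48, 35, 33, 31]
threshold, gain = best_split(wb, cs)
print(threshold, gain)
```

Here the best split falls between W/B = 0.45 and 0.50, where the strengths separate into two tight groups. A full tree repeats this search over every feature at every node.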

Flow chart of decision tree algorithm (Gajowniczek & Ząbkowski, 2021)

Random forest (RF)

The Random Forest Regressor is a powerful and popular machine learning method for predicting continuous numerical variables (Chen & Ishwaran, 2012). It is an ensemble learning technique that combines multiple decision trees into a reliable and precise predictive model (Sagi & Rokach, 2018). A single decision tree can be vulnerable to overfitting and instability. A random forest alleviates these problems by combining bagging with random feature selection. Each tree is trained on a random bootstrap sample of the training data and considers only a random subset of the features when choosing its splits. This produces a diverse set of trees whose predictions are distinct but individually flawed. During prediction, the random forest aggregates the predictions of all the trees by averaging (for regression) or majority voting (for classification). This ensemble approach mainly reduces the variance of the final prediction, resulting in improved generalization and robustness. The strength of the random forest regressor lies in its ability to handle complex relationships and interactions among variables. It can capture nonlinearities, handle high-dimensional data, and cope with outliers and, in many implementations, missing values. Moreover, random forests are less sensitive to the choice of hyperparameters than many other machine learning algorithms, which makes them relatively easy to use and tune. Random forests also provide feature-importance estimates. This information aids feature selection, dimensionality reduction, and understanding of the factors influencing the outcome. Owing to their robustness, accuracy, and interpretability, random forest regressors have found applications in many domains. Figure 3 depicts the flow chart of the random forest regression algorithm.
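The sketch below uses scikit-learn's `RandomForestRegressor` on synthetic data, not the study's database. It shows the two sources of randomness described above and confirms that the forest's regression output is the mean of its trees' outputs. The synthetic target and the hyperparameter values are illustrative assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor

rng = np.random.default_rng(42)
# Synthetic stand-in for five mix-design inputs (NOT the study's data)
X = rng.uniform(size=(120, 5))
y = 60 * X[:, 0] - 40 * X[:, 1] + 5 * np.sin(6 * X[:, 4]) + rng.normal(0, 2, 120)

rf = RandomForestRegressor(
    n_estimators=200,
    max_features=0.6,   # random subset of features considered at each split
    bootstrap=True,     # each tree is fitted on a bootstrap sample of the rows
    random_state=0,
)
rf.fit(X, y)

# The regression output of the forest is the average of its trees' outputs
per_tree = np.stack([tree.predict(X[:5]) for tree in rf.estimators_])
manual = per_tree.mean(axis=0)
print(np.allclose(manual, rf.predict(X[:5])))  # True

# The spread across trees shows how much the individual trees disagree
tree_spread = per_tree.std(axis=0)
```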

Flow chart of random forest model (Singh et al., 2021)

Gradient boosting machine (GBM)

The Gradient Boosting Machine Regressor is a powerful machine learning algorithm that is widely used for regression tasks because of its strong predictive capabilities (Zhou et al., 2021). It belongs to the ensemble learning family, which combines several weak learners into a reliable and precise predictive model. The algorithm works sequentially: weak learners, typically decision trees, are trained in an additive fashion (Badirli et al., 2020). Each new tree focuses on reducing the errors made by the trees before it. The algorithm iteratively fits new trees to the residuals of the current ensemble and so gradually improves its predictive performance. For a squared-error loss, these residuals are the negative gradient of the loss, which gives the method its name. A key strength of the Gradient Boosting Machine Regressor is its ability to handle complex relationships and non-linear interactions within the data. By building on the hierarchical structure of decision trees, it captures intricate patterns and nonlinearities. This makes it effective at capturing both local and global dependencies in the data, leading to accurate predictions. The algorithm incorporates regularization techniques, such as shrinkage and tree pruning, to prevent overfitting and improve generalization. Shrinkage scales down the contribution of each tree by a learning rate, producing a more conservative and robust model. Tree pruning removes unnecessary branches and nodes, simplifying the model and enhancing its interpretability. Figure 4 illustrates the flow chart of the gradient boosting method.
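A minimal least-squares boosting loop illustrates the residual fitting and shrinkage described above. It uses scikit-learn's `DecisionTreeRegressor` as the weak learner. This is a sketch on synthetic data, not scikit-learn's `GradientBoostingRegressor` or the model used in this study. The helpers `fit_gbm` and `predict_gbm` and their settings are hypothetical.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor


def fit_gbm(X, y, n_trees=100, learning_rate=0.1, max_depth=3):
    """Least-squares gradient boosting with shrinkage (illustrative sketch)."""
    f0 = float(np.mean(y))                      # initial constant model
    pred = np.full(len(y), f0)
    trees, train_mse = [], [float(np.mean((y - pred) ** 2))]
    for m in range(n_trees):
        # For squared loss, the negative gradient is simply the residual
        residuals = y - pred
        tree = DecisionTreeRegressor(max_depth=max_depth, random_state=m)
        tree.fit(X, residuals)
        # Shrinkage: add only a fraction of each tree's correction
        pred = pred + learning_rate * tree.predict(X)
        trees.append(tree)
        train_mse.append(float(np.mean((y - pred) ** 2)))
    return {"f0": f0, "trees": trees, "lr": learning_rate, "train_mse": train_mse}


def predict_gbm(model, X):
    """Constant start plus the shrunken contribution of every tree."""
    out = np.full(len(X), model["f0"])
    for tree in model["trees"]:
        out += model["lr"] * tree.predict(X)
    return out


rng = np.random.default_rng(0)
X = rng.uniform(size=(200, 3))                  # synthetic inputs, not the study's data
y = 10 * X[:, 0] + 5 * X[:, 1] ** 2 + rng.normal(0, 0.5, 200)
model = fit_gbm(X, y)
print(model["train_mse"][0], model["train_mse"][-1])
```

Each tree's leaf values are the means of the residuals it was fitted to. As a result, a boosting step with a learning rate between 0 and 2 cannot increase the training MSE. A small learning rate trades slower progress for better generalization.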

Flow chart of GBM model (Zhang et al., 2021)

Metaheuristic optimization

Dolphin echolocation optimization (DEO)

Dolphin Echolocation Optimization (DEO) is an optimization algorithm inspired by the remarkable echolocation abilities of dolphins (Kaveh, 2017a). This nature-inspired algorithm mimics the way dolphins use echolocation to locate objects and navigate their surroundings (Kaveh & Farhoudi, 2016b). DEO has gained attention in the field of optimization for its ability to solve complex problems and find near-optimal solutions (Kaveh, 2017b). DEO is a population-based method in which multiple candidate solutions are generated simultaneously to explore the search space efficiently (Kaveh & Farhoudi, 2013). Just as dolphins emit sound waves and listen to the echoes to perceive their environment, the DEO algorithm maintains a set of candidate solutions and evaluates their fitness against predefined objectives (Kaveh et al., 2018). By iteratively refining these solutions through a combination of exploration and exploitation, DEO aims to converge toward optimal or near-optimal solutions. A key advantage of the DEO algorithm is its effective balance between exploration and exploitation. Dolphins continuously adapt their echolocation to changing conditions. In the same way, DEO adjusts its search process over the iterations, shifting gradually from broad exploration toward the best regions found so far according to a predefined convergence schedule. This adaptability helps the algorithm escape local optima and explore diverse regions of the search space, ultimately improving the quality of the solutions obtained. DEO is also robust and versatile. It can handle both single-objective and multi-objective optimization tasks, which makes it potentially applicable to a wide range of real-world problems, such as engineering design, financial modeling, and data analysis.
Its unique characteristics, including the balance between exploration and exploitation, adaptability, and versatility, make it a valuable tool for solving complex optimization problems across different domains. Figure 5 shows the echolocation of dolphins in nature.
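The DEO loop can be sketched for the discrete case, which suits hyperparameter grids. The following is a simplified Python reading of the main DEO steps described by Kaveh & Farhoudi (2013):

- each dolphin holds one alternative index per variable;
- fitness is accumulated over neighboring alternatives within an effective radius;
- a predefined probability PP of re-selecting the best alternative rises from `pp1` to 1 over the loops.

It is not the implementation used in this study. The function `deo_maximize`, the default radius, the reflection rule at the ends of each alternative list, and the use of the best location found so far are our assumptions. Fitness must be non-negative, with larger values being better.

```python
import numpy as np


def deo_maximize(fitness, alternatives, n_locations=20, n_loops=30,
                 pp1=0.1, power=1.0, radius=None, eps=1e-6, seed=0):
    """Simplified Dolphin Echolocation Optimization over discrete alternatives.

    fitness      -- callable: list of chosen values -> non-negative score (higher is better)
    alternatives -- one sorted sequence of candidate values per variable
    Returns (best_values, best_fitness). Assumes n_loops >= 2.
    """
    rng = np.random.default_rng(seed)
    sizes = [len(a) for a in alternatives]
    if radius is None:                      # effective radius R_e (assumed default)
        radius = max(1, max(sizes) // 4)
    # Each row is one dolphin "location": an alternative index per variable
    locs = np.column_stack([rng.integers(0, s, size=n_locations) for s in sizes])
    best_idx, best_fit = None, -np.inf

    for loop in range(1, n_loops + 1):
        # Predefined probability of re-selecting the best alternative (rises to 1)
        pp = pp1 + (1 - pp1) * (loop ** power - 1) / (n_loops ** power - 1)
        pp = min(pp, 1.0)
        fits = np.array([fitness([alt[i] for alt, i in zip(alternatives, loc)])
                         for loc in locs])
        k = int(np.argmax(fits))
        if fits[k] > best_fit:              # keep the best location found so far
            best_fit, best_idx = float(fits[k]), locs[k].copy()

        new_locs = np.empty_like(locs)
        for j, size in enumerate(sizes):
            if size == 1:
                new_locs[:, j] = 0
                continue
            # Accumulative fitness: spread each dolphin's fitness over nearby
            # alternatives with the triangular weight (R_e - |d|) / R_e
            af = np.zeros(size)
            for loc, f in zip(locs, fits):
                for d in range(-radius, radius + 1):
                    pos = abs(int(loc[j]) + d)          # reflect below index 0
                    if pos >= size:
                        pos = 2 * (size - 1) - pos      # reflect above the last index
                    pos = min(max(pos, 0), size - 1)    # guard for very short lists
                    af[pos] += (radius - abs(d)) / radius * f
            af += eps                       # keep every alternative reachable
            af[best_idx[j]] = 0.0           # the best alternative receives exactly pp
            p = (1 - pp) * af / af.sum()
            p[best_idx[j]] = pp
            new_locs[:, j] = rng.choice(size, size=n_locations, p=p)
        locs = new_locs

    best = [alt[i] for alt, i in zip(alternatives, best_idx)]
    return best, best_fit


# Toy check on a 21 x 21 integer grid whose optimum is at (7, 13)
def toy_fitness(v):
    return 1.0 / (1.0 + (v[0] - 7) ** 2 + (v[1] - 13) ** 2)


best, score = deo_maximize(toy_fitness, [list(range(21)), list(range(21))])
print(best, score)
```

For hyperparameter tuning, each entry of `alternatives` would be a sorted list of candidate values (for example, tree depths). The `fitness` function would then be a non-negative cross-validation score.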

Echolocation of Dolphin (Kaveh & Farhoudi, 2016a)

Hyperparameter optimization

Hyperparameter optimization is a critical step in developing accurate regression models that effectively capture the underlying relationships within a data set (Feurer & Hutter, 2019). Regression models rely on hyperparameters. These are adjustable settings that are fixed before training, such as the maximum depth of a tree or the number of trees in an ensemble, and they determine the model's behavior and performance. Optimizing these hyperparameters is essential for improving the model's predictive capability. Hyperparameter optimization systematically searches through combinations of hyperparameter values to identify the configuration that yields the best results. The objective is to find the set of hyperparameters that maximizes the model's performance metric or minimizes its prediction error, typically estimated on validation data. Careful tuning improves a model's ability to predict outcomes accurately. The result is better generalization, greater robustness, and better performance in real-world applications.

In this study, the hyperparameters of all the decision tree-based algorithms were optimized using the DEO algorithm. The pseudo-code of the optimized regression models is shown in Tables 1, 2, and 3.
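Any search strategy, whether DEO, grid search, or random search, only needs a callable that scores one hyperparameter configuration. The sketch below shows one plausible form of that objective for a decision tree: the mean 10-fold cross-validated RMSE, mapped to a positive larger-is-better score. The function `dt_fitness`, the synthetic data, the candidate values, and the 1/(1 + RMSE) mapping are assumptions. They are not taken from Tables 1, 2, and 3.

```python
import numpy as np
from sklearn.model_selection import KFold, cross_val_score
from sklearn.tree import DecisionTreeRegressor

# Synthetic stand-in data (120 samples, 5 inputs); NOT the study's database
rng = np.random.default_rng(1)
X = rng.uniform(size=(120, 5))
y = 50 * X[:, 0] - 30 * X[:, 1] + 10 * X[:, 4] ** 2 + rng.normal(0, 2, 120)

cv = KFold(n_splits=10, shuffle=True, random_state=0)


def dt_fitness(params):
    """Hypothetical objective: positive score derived from mean 10-fold CV RMSE."""
    max_depth, min_samples_leaf = params
    model = DecisionTreeRegressor(max_depth=max_depth,
                                  min_samples_leaf=min_samples_leaf,
                                  random_state=0)
    # scikit-learn reports the negated RMSE so that higher is better
    scores = cross_val_score(model, X, y, cv=cv,
                             scoring="neg_root_mean_squared_error")
    rmse = -scores.mean()
    return 1.0 / (1.0 + rmse)   # in (0, 1]; larger means lower CV error


# Exhaustive evaluation of a small grid; a metaheuristic would sample it instead
candidates = [(d, m) for d in (2, 4, 6, 8, None) for m in (1, 2, 4, 8)]
best = max(candidates, key=dt_fitness)
print(best, dt_fitness(best))
```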

Data set preparation

A robust, well-organized database is of utmost importance in machine learning. Machine learning models rely heavily on data to learn patterns, make accurate predictions, and provide meaningful insights (Asteris et al., 2021). A good database therefore serves as the foundation of a successful machine learning model, because the accuracy and usefulness of the data directly determine how well the model works. A good database also facilitates efficient data preprocessing and feature engineering. Data preprocessing involves tasks such as cleaning, normalization, and handling missing values, while feature engineering transforms raw data into meaningful features that capture relevant information (Ahmed & Iqbal, 2023). A well-structured database simplifies both processes, enabling researchers and practitioners to prepare the data effectively and extract valuable insights.

To predict the CS of PFRC, a database was constructed from the published literature (Adnan & Dawood, 2020; Foti, 2011, 2013; Fraternali et al., 2011; Irwan et al., 2013; Kim et al., 2010; Marthong, 2015; Marthong & Sarma, 2016; Mohammed & Rahim, 2020; Mohammed Ali, 2021; Nibudey et al., 2013; Ochi et al., 2007; Pelisser et al., 2012; Rahmani et al., 2013). The database consisted of 120 data points. Its small size reflects the limited number of experimental studies on PET fiber-reinforced concrete. The input features were binder content (mostly cement), water-to-binder ratio (W/B), fine aggregate (FA), coarse aggregate (CA), and fiber volume fraction (FVF), and the output was compressive strength. The statistics of the data set are shown in Table 4.

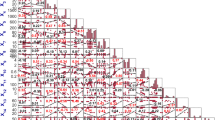

The Pearson correlation matrix, shown in Fig. 6, is a statistical tool for examining the relationships between variables in a data set. It measures the strength and direction of the linear association between each pair of variables. The matrix displays correlation coefficients ranging from −1 to 1. A value of −1 represents a perfect negative correlation, 1 a perfect positive correlation, and 0 no linear correlation. The Pearson correlation matrix thus shows the degree of association between different variables. A high positive correlation between two variables indicates that they tend to increase or decrease together, while a high negative correlation indicates an inverse relationship. A coefficient close to zero indicates no linear relationship between the variables. Here, the correlations among the input features ranged from negligible to moderate.
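Each entry of the matrix is the covariance of two variables divided by the product of their standard deviations. The short NumPy sketch below computes this directly and checks it against `np.corrcoef`. The function name `pearson_matrix` and the demonstration columns are illustrative, not the study's data.

```python
import numpy as np


def pearson_matrix(data):
    """Pearson correlation matrix of the columns of `data` (n_samples x n_vars)."""
    data = np.asarray(data, dtype=float)
    centered = data - data.mean(axis=0)
    cov = centered.T @ centered                 # unnormalized covariance matrix
    std = np.sqrt(np.diag(cov))                 # matching unnormalized spreads
    return cov / np.outer(std, std)             # r_ij = cov_ij / (s_i * s_j)


rng = np.random.default_rng(0)
x = rng.normal(size=50)
# Columns: x, a perfect positive transform, a perfect negative one, unrelated noise
demo = np.column_stack([x, 2 * x + 1, -x, rng.normal(size=50)])
r = pearson_matrix(demo)
print(np.round(r, 2))
```

The normalization constant of the covariance cancels in the ratio, so it is omitted.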

Figure 7 shows a density distribution plot, also known as a kernel density plot, which visualizes the distribution of a continuous variable. It reveals the shape, spread, and concentration of data points across the range of the variable. Such plots are widely used in data analysis and statistics to understand the underlying distribution of a data set. A density distribution plot is constructed by estimating the probability density function (PDF) of the data. A kernel function, such as a Gaussian kernel, is placed on each data point, and the contributions are summed. The result is a smooth, continuous curve that represents the overall distribution.
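The kernel-summing construction can be written in a few lines of NumPy. The sketch below places a Gaussian kernel on each sample, averages them, and uses Scott's rule of thumb for the bandwidth. The bandwidth rule is our assumption; the study does not state which bandwidth was used. The function name `gaussian_kde_1d` and the synthetic strengths are also illustrative.

```python
import numpy as np


def gaussian_kde_1d(samples, grid, bandwidth=None):
    """Gaussian kernel density estimate of `samples`, evaluated at `grid`."""
    samples = np.asarray(samples, dtype=float)
    grid = np.asarray(grid, dtype=float)
    n = samples.size
    if bandwidth is None:
        # Scott's rule of thumb in one dimension: h = s * n^(-1/5)
        bandwidth = samples.std(ddof=1) * n ** (-1 / 5)
    z = (grid[:, None] - samples[None, :]) / bandwidth
    kernels = np.exp(-0.5 * z ** 2) / np.sqrt(2 * np.pi)    # standard normal kernel
    # Sum the kernels and rescale so that the estimate integrates to 1
    return kernels.sum(axis=1) / (n * bandwidth)


rng = np.random.default_rng(0)
strength = rng.normal(40, 8, size=120)   # synthetic strengths (MPa), not Table 4 data
grid = np.linspace(0, 80, 2001)
density = gaussian_kde_1d(strength, grid)
```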

Metrics for model evaluation

Several criteria are commonly used to judge the accuracy, precision, and generalizability of regression models. Together, these metrics describe how well a model predicts continuous numerical values. The coefficient of determination (R²) measures the proportion of the target variable's variance that the regression model accounts for. It is at most 1 and typically lies between 0 and 1, and a higher value indicates a better fit. It can be negative on test data when the model performs worse than simply predicting the mean. The explained variance (EV) score also measures the proportion of variance in the target variable that the model explains. It differs from R² only in ignoring a constant offset (mean bias) in the predictions, and a higher score again indicates a better fit. The mean absolute error (MAE) is the average absolute difference between the predicted and actual values. The mean absolute percentage error (MAPE) is the average absolute percentage difference between the predicted and actual values. It is useful for assessing the relative accuracy of the predictions when the target variable spans a wide range of values. The root mean squared error (RMSE) is the square root of the mean squared error (MSE) and measures the average magnitude of the prediction errors. Because it is expressed in the original units of the target variable, it is easy to interpret. Below are the equations for the evaluation metrics.
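The standard definitions of these metrics are written out in NumPy below as a cross-check. Here MAPE is returned in percent, whereas scikit-learn's `mean_absolute_percentage_error` returns a fraction. The function name `regression_metrics` and the sample values are hypothetical.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """Standard regression metrics; y_true must be non-zero for MAPE."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    residual = y_true - y_pred
    ss_res = np.sum(residual ** 2)                   # residual sum of squares
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)   # total sum of squares
    return {
        "R2": 1 - ss_res / ss_tot,
        # EV uses the variance of the residuals, so a constant bias is ignored
        "EV": 1 - np.var(residual) / np.var(y_true),
        "MAE": np.mean(np.abs(residual)),
        "MAPE": 100 * np.mean(np.abs(residual / y_true)),  # percent
        "RMSE": np.sqrt(np.mean(residual ** 2)),
    }


# Hypothetical measured vs. predicted strengths (MPa)
m = regression_metrics([10, 20, 30, 40], [12, 18, 33, 37])
print(m)
```

In this example, the residuals have zero mean, so R² and EV coincide (both 0.948).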

Results and discussion

Model training and hyperparameter optimization

All the models were trained and tested using tenfold cross-validation, a commonly used technique for assessing a model's performance and generalization ability. The data set is divided into ten equal-sized subsets, or folds. The model is then trained and evaluated ten times, each time using nine folds for training and the remaining fold for validation. As a result, every sample in the data set is used for validation exactly once and for training nine times. The results from the ten folds are then averaged to provide an overall assessment of the model's performance. Tenfold cross-validation reduces the dependence of the evaluation on a particular data partition and provides a more robust estimate of performance on unseen data. This makes it a valuable tool for selecting models, tuning hyperparameters, and comparing algorithms while guarding against overfitting.
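The fold bookkeeping can be checked directly. With scikit-learn's `KFold` on 120 samples, the number of data points in this study, each index lands in the validation set once and in the training set nine times. The shuffle setting and seed are illustrative assumptions.

```python
import numpy as np
from sklearn.model_selection import KFold

n_samples = 120                               # size of the database in this study
kf = KFold(n_splits=10, shuffle=True, random_state=0)
val_counts = np.zeros(n_samples, dtype=int)
train_counts = np.zeros(n_samples, dtype=int)
for train_idx, val_idx in kf.split(np.arange(n_samples)):
    train_counts[train_idx] += 1              # 108 samples per training fold
    val_counts[val_idx] += 1                  # 12 samples per validation fold
print(val_counts.min(), val_counts.max())     # 1 1
print(train_counts.min(), train_counts.max()) # 9 9
```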

The hyperparameters of all the models were optimized using DEO. Emulating the adaptive, exploratory character of dolphin echolocation, DEO evaluates a population of candidate hyperparameter configurations. Over successive iterations, it progressively concentrates its search around the best configurations found. Table 5 shows the optimum hyperparameters of the three ML models.

Decision tree (DT)

A DEO (Dolphin Echolocation Optimization)-tuned decision tree regressor was employed to predict the CS of PFRC. The goal was to investigate how accurately this approach estimates compressive strength from the selected input features. The data set covered a wide range of concrete mixtures with varying proportions of PET fibers. The model achieved a moderate level of accuracy: 94% in training, falling to 83% in testing. Such a drop is not uncommon in machine learning models. However, it suggests that the optimized model overfitted the training data to some extent, which reduced its generalization capability. The regression plots for training and testing are shown in Figs. 8 and 9, respectively. Table 6 gives the metric scores for both phases.

Random forest (RF)

The performance of the DEO (Dolphin Echolocation Optimization)-tuned random forest regressor in predicting the CS of PFRC was investigated. The prediction of a random forest is the average of the predictions of its constituent trees. Its accuracy is therefore influenced by hyperparameters such as the number of decision trees. The random forest regressor showed strong predictive capability, with an accuracy of 97% in the training phase and 91% in the testing phase. These high values indicate that the model captured a large portion of the variance in the data and generalized well to unseen samples. To assess the model's performance visually, regression plots of actual versus predicted values were generated for the training and testing data sets, as shown in Figs. 8 and 9, respectively. These plots show how closely the predicted values align with the actual values. Additional performance metrics of the model are presented in Table 6.

Gradient boosting machine (GBM)

The accuracy of the DEO-tuned gradient boosting machine regressor in predicting the CS of PFRC was also evaluated. In the training phase, the model achieved a high accuracy of 96% (Fig. 8), but in the testing phase the accuracy dropped to 87% (Fig. 9). The high training accuracy indicates that the DEO-tuned GBM regressor captured the underlying relationships between the input features and the compressive strength of the training samples. The drop in testing accuracy, however, suggests that the model overfitted the training data to some degree. Overfitting occurs when a model becomes overly complex and begins to memorize training instances rather than learning the underlying patterns. As a result, its performance on unseen data suffers. Note also that predicting concrete strength with high precision is inherently difficult because of the complexity of the material and the many contributing factors. Table 6 gives additional details about the model metrics.

Comparison among models

To compare and visualize the optimized models in more detail, six plots were used: an error distribution plot, a Bland–Altman plot, an error comparison plot, a cumulative distribution function (CDF) plot, a quantile–quantile (QQ) plot, and box plots. All comparisons were made on the testing phase.

The error distribution histogram (Fig. 10) was used to analyze the performance of the optimized models in predicting the CS of PFRC. It shows the distribution and magnitude of the errors made by each model as the frequency of errors within different ranges, or bins. The x-axis represents the error intervals, and the y-axis the number of samples falling within each interval. The errors of the RF and GBM models exhibited a roughly symmetric distribution with no pronounced skew. This indicates that, on average, the predictions of the DEO-tuned RF and GBM regressors were relatively close to the actual compressive strength values of the PET fiber-reinforced concrete samples.

In this study, the Bland–Altman plot (Fig. 11) was used to assess the agreement between the predicted and the experimentally measured compressive strengths. The plot shows the difference between each pair of values against their mean. This reveals both bias (a systematic offset) and variability (the spread of the differences). For the DT and GBM models, the mean difference deviates from zero, indicating a consistent systematic bias in their predictions.
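The quantities behind a Bland–Altman plot are simple to compute. The sketch below returns the plot coordinates, the bias (mean difference), and the conventional 95% limits of agreement (bias ± 1.96 standard deviations of the differences). The sign convention (predicted minus measured), the function name `bland_altman`, and the sample values are assumptions for illustration.

```python
import numpy as np


def bland_altman(measured, predicted):
    """Return plot coordinates, bias, and 95% limits of agreement."""
    measured = np.asarray(measured, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    means = (measured + predicted) / 2       # x-axis: average of each pair
    diffs = predicted - measured             # y-axis: difference (assumed sign convention)
    bias = diffs.mean()                      # systematic offset; 0 means no bias
    sd = diffs.std(ddof=1)                   # sample standard deviation of differences
    limits = (bias - 1.96 * sd, bias + 1.96 * sd)
    return means, diffs, bias, limits


# Hypothetical measured vs. predicted strengths (MPa)
means, diffs, bias, (lo, hi) = bland_altman([30, 40, 50, 60], [32, 41, 53, 62])
print(bias, lo, hi)
```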

The line plot (Fig. 12) compares the actual and predicted compressive strengths for all the models. The predicted trend follows the actual values more consistently for the RF model than for the other two models, showing a close association between actual and predicted values.

The cumulative distribution function (CDF) plot (Fig. 13) was used to analyze the distribution of the prediction errors. For each error value, it gives the proportion of errors at or below that value, which helps characterize the error distribution. The smooth, S-shaped CDF curves suggest that the errors are approximately normally distributed. For the RF model, most errors cluster around zero, indicating a central tendency close to zero error.
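An empirical CDF of the errors is obtained by sorting them and assigning each the fraction of errors at or below it. The sketch below also reports the share of predictions within a chosen absolute error. The helper names `ecdf` and `fraction_within` and the sample errors are hypothetical.

```python
import numpy as np


def ecdf(errors):
    """Empirical CDF: sorted errors x and F(x) = fraction of errors <= x.

    With tied values, the last point of each tied run gives the CDF value.
    """
    x = np.sort(np.asarray(errors, dtype=float))
    F = np.arange(1, x.size + 1) / x.size
    return x, F


def fraction_within(errors, tol):
    """Share of predictions whose absolute error does not exceed `tol`."""
    return float(np.mean(np.abs(np.asarray(errors, dtype=float)) <= tol))


x, F = ecdf([3.0, -1.0, 2.0, 0.0])
print(x, F)   # [-1.  0.  2.  3.] [0.25 0.5  0.75 1.  ]
```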

The QQ plot of errors (Fig. 14) was used to assess the distributional assumptions and goodness of fit of the regression models for predicting the CS of PFRC. A QQ plot compares the quantiles of the observed errors with those expected under a theoretical distribution, typically the normal distribution in regression analysis. In this study, the quantiles of the observed errors were plotted against the quantiles of the standard normal distribution. The goal was to evaluate whether the errors follow a normal distribution, a common assumption in regression models. For the RF model, most points fell close to the reference line, indicating a reasonably good fit to a normal distribution. For the other two models, slight deviations in the tails suggest that the errors do not fully adhere to the normality assumption.
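The coordinates of a normal QQ plot can be computed with the standard library's `statistics.NormalDist`. The sorted, standardized errors are paired with standard normal quantiles at the plotting positions (i − 0.5)/n. That plotting-position formula is one common choice among several and is our assumption here, as is the helper name `normal_qq`.

```python
import numpy as np
from statistics import NormalDist


def normal_qq(errors):
    """Coordinates for a normal QQ plot of the errors.

    Returns (theoretical standard normal quantiles, standardized sorted errors).
    Points near the line y = x indicate approximately normal errors.
    """
    e = np.sort(np.asarray(errors, dtype=float))
    n = e.size
    probs = (np.arange(1, n + 1) - 0.5) / n             # plotting positions
    theoretical = np.array([NormalDist().inv_cdf(p) for p in probs])
    standardized = (e - e.mean()) / e.std(ddof=1)
    return theoretical, standardized


# Errors placed exactly at normal quantiles (mean 3, sd 2) should plot on a line
errors = [NormalDist(3, 2).inv_cdf((i - 0.5) / 9) for i in range(1, 10)][::-1]
theo, std_err = normal_qq(errors)
print(np.corrcoef(theo, std_err)[0, 1])
```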

To gain further insight into the performance of the DEO-tuned models in predicting the CS of PFRC, box plots of the errors (Fig. 15) were constructed. A box plot summarizes the distribution of errors through the median, the quartiles, and potential outliers. The median indicates the central tendency of the errors and the typical size of a model's errors. The medians of the RF and GBM errors are closer to zero, suggesting that these models tend to make smaller errors. The quartiles describe the spread of the errors. The interquartile range (IQR) is defined as the difference between the third quartile (Q3) and the first quartile (Q1), and it measures the spread of the middle half of the errors around the median. The narrower IQR of the RF model indicates more consistent performance. The wider IQRs of the other two models indicate greater variability in their errors. Overall, the RF model exhibited a relatively low median error, suggesting that, on average, its predictions were close to the actual compressive strength values.
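The statistics a box plot displays can be computed directly. The sketch below returns the quartiles, the median, and the IQR, and flags outliers with the common 1.5 × IQR whisker rule. That rule is an assumption, since the study does not state its whisker convention. The helper name `box_stats` and the sample errors are hypothetical.

```python
import numpy as np


def box_stats(errors, whisker=1.5):
    """Quartiles, IQR, and outliers beyond Q1 - w*IQR or Q3 + w*IQR."""
    e = np.asarray(errors, dtype=float)
    q1, median, q3 = np.percentile(e, [25, 50, 75])   # linear interpolation
    iqr = q3 - q1
    low_fence, high_fence = q1 - whisker * iqr, q3 + whisker * iqr
    outliers = e[(e < low_fence) | (e > high_fence)]
    return {"Q1": q1, "median": median, "Q3": q3, "IQR": iqr, "outliers": outliers}


stats = box_stats([-3, -1, 0, 1, 2, 3, 15])   # hypothetical errors (MPa)
print(stats)
```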

From the above evaluation, it can be concluded that the DEO-tuned RF model offers the highest accuracy, robustness, and generalizability of the three models in predicting the CS of PFRC.

Sensitivity analysis (SA)

SHAP sensitivity analysis

SHAP (Shapley Additive Explanations) is a technique for quantifying the contribution of each feature to the predictions of a machine learning model. It is based on the Shapley value from cooperative game theory (Lundberg & Lee, 2017): each feature is treated as a player, and a prediction is distributed among the features according to their average marginal contribution over all possible coalitions of the other features. Unlike traditional feature importance methods that evaluate features in isolation, SHAP averages each feature's contribution over all combinations of the other features, so interaction effects are shared among the features involved, and it can reveal relationships that a simple feature importance ranking may miss. For each instance in the data set, SHAP assigns every feature a value indicating how much it contributed to that prediction. A positive SHAP value indicates that the feature increased the prediction relative to the baseline, whereas a negative value indicates that it decreased the prediction. The SHAP values of all features for an instance sum to the difference between the model's prediction for that instance and the base value, which is the average prediction over the background data set. Interpreting these values identifies the most important features and shows how they affect the model's predictions. This feature-level attribution improves the interpretability and transparency of the model, helps build trust in it, and can expose potential biases or confounding factors.
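To make the additivity property concrete, the Python sketch below computes exact Shapley values by enumerating every feature coalition, using an interventional value function: features in the coalition take the instance's values, and the remaining features keep the values of each row of a background data set. This brute-force approach needs on the order of 2^n model evaluations and is only practical for a handful of features; for tree ensembles, the `TreeExplainer` of the `shap` package computes SHAP values far more efficiently. The toy model, the helper `exact_shapley`, and the random background data are hypothetical and do not represent the model or data set of this study.

```python
import itertools
import math

import numpy as np


def exact_shapley(f, x, background):
    """Exact interventional Shapley values of f at instance x.

    Features in a coalition are fixed to x's values; the other features keep
    the values of each background row, and the predictions are averaged.
    """
    x = np.asarray(x, dtype=float)
    background = np.asarray(background, dtype=float)
    n = x.size

    def value(coalition):
        data = background.copy()
        cols = list(coalition)
        if cols:
            data[:, cols] = x[cols]
        return float(np.mean(f(data)))

    phi = np.zeros(n)
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for coalition in itertools.combinations(others, size):
                phi[i] += weight * (value(coalition + (i,)) - value(coalition))
    return phi


def toy_model(X):
    """Hypothetical stand-in for a fitted regressor (not the study's RF model)."""
    return 2.0 * X[:, 0] + X[:, 1] * X[:, 2]


rng = np.random.default_rng(1)
background = rng.uniform(0.0, 1.0, size=(200, 3))
x = np.array([0.9, 0.2, 0.7])
phi = exact_shapley(toy_model, x, background)
base_value = toy_model(background).mean()
# Additivity: base value + sum of SHAP values recovers the prediction for x.
print(phi, base_value + phi.sum(), toy_model(x[None, :])[0])
```

Because the first feature enters the toy model only through the additive term 2x₁, its SHAP value reduces to twice its deviation from the background mean, while the contribution of the product term is split between the second and third features.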

Sobol sensitivity analysis

Sobol sensitivity analysis is a widely used method for quantifying the relative importance of the input variables of a mathematical or computational model. It is a variance-based method that decomposes the variance of the model output into contributions from the individual input variables and from their interactions (Saltelli et al., 1999). Rather than varying one input at a time while holding the others fixed, Sobol analysis samples all inputs simultaneously over their ranges, typically with Monte Carlo or quasi-random sequences, and estimates the sensitivity indices from the resulting model evaluations. The first-order index measures the share of the output variance caused by a single input variable acting alone, whereas the total-order index also includes the contributions of all interactions involving that variable. In theory, both indices lie between 0 and 1, with values closer to 1 indicating a greater influence on the model output. Sobol analysis offers several advantages. First, it provides a quantitative ranking of the input variables, allowing researchers to focus on the most influential factors. Second, it captures non-linear and interaction effects that may not be evident from simple correlation analysis. Third, inputs with total-order indices close to zero can be fixed or removed, which reduces the dimensionality of the problem and the computational cost.
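The sampling-based estimation described above can be sketched in Python with NumPy alone. The sketch assumes independent, uniformly distributed inputs and uses two standard pick-and-freeze estimators: Saltelli's estimator for the first-order index and Jansen's estimator for the total-order index. It is illustrated on the Ishigami benchmark function rather than on the study's RF model, and `sobol_indices` is a hypothetical helper, not the routine used by the authors. To analyse a trained regressor, its prediction function would replace `ishigami`, with the bounds set to the ranges of the mix-design variables.

```python
import numpy as np


def sobol_indices(f, d, n, bounds, seed=0):
    """Monte Carlo estimates of first-order (S) and total-order (ST) Sobol indices.

    Saltelli's estimator is used for S and Jansen's estimator for ST, with
    inputs sampled independently and uniformly within `bounds`.
    """
    rng = np.random.default_rng(seed)
    low, high = np.asarray(bounds, dtype=float).T
    A = low + (high - low) * rng.random((n, d))
    B = low + (high - low) * rng.random((n, d))
    fA, fB = f(A), f(B)
    var = np.var(np.concatenate([fA, fB]))  # total output variance
    S, ST = np.zeros(d), np.zeros(d)
    for i in range(d):
        ABi = A.copy()
        ABi[:, i] = B[:, i]  # A with column i taken from B
        fABi = f(ABi)
        S[i] = np.mean(fB * (fABi - fA)) / var
        ST[i] = 0.5 * np.mean((fA - fABi) ** 2) / var
    return S, ST


def ishigami(X, a=7.0, b=0.1):
    """Ishigami test function, a standard sensitivity-analysis benchmark."""
    return np.sin(X[:, 0]) + a * np.sin(X[:, 1]) ** 2 + b * X[:, 2] ** 4 * np.sin(X[:, 0])


S, ST = sobol_indices(ishigami, d=3, n=100_000, bounds=[(-np.pi, np.pi)] * 3)
print(np.round(S, 2), np.round(ST, 2))
```

For the Ishigami function with a = 7 and b = 0.1, the analytical values are S ≈ (0.31, 0.44, 0.00) and ST ≈ (0.56, 0.44, 0.24), so the estimates should land close to these. The third input is a useful check: it has no effect on its own but a clear total-order effect through its interaction with the first input.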

Sensitivity analysis result

The SHAP analysis was performed on the DEO-optimized RF model, which was the most accurate of the three models, to determine the relative importance of the input features and their influence on the model's predictions. The violin plot in Fig. 16 shows the distribution of the SHAP values of each feature across the samples, while the bar plot in Fig. 17 shows the mean absolute SHAP value, that is, the average impact of each feature on the model output. The results revealed that the binder content had the largest impact on the predicted compressive strength, followed by FA and CA.
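A bar plot of average feature impact, such as Fig. 17, is conventionally built by averaging the absolute SHAP values of each feature over all samples. The Python sketch below shows that aggregation step on a small, hypothetical SHAP matrix (the numbers and feature names are illustrative, not values from the study). Taking absolute values matters because positive and negative contributions of the same feature would otherwise cancel: in the example, the first feature has a signed mean of only 1.0 but a mean absolute SHAP value of 5.0.

```python
import numpy as np


def global_importance(shap_values, feature_names):
    """Rank features by mean absolute SHAP value (the quantity in a SHAP bar plot)."""
    mean_abs = np.abs(np.asarray(shap_values, dtype=float)).mean(axis=0)
    order = np.argsort(mean_abs)[::-1]  # largest average impact first
    return [(feature_names[k], float(mean_abs[k])) for k in order]


# Hypothetical SHAP matrix: rows are samples, columns are features.
phi = np.array([
    [4.0, -1.0, 0.5],
    [-6.0, 2.0, 0.5],
    [5.0, -3.0, -1.0],
])
print(global_importance(phi, ["feature_a", "feature_b", "feature_c"]))
```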

The total-order sensitivity indices, calculated using the Sobol method, are shown in Fig. 18. The total-order index accounts for both the direct effect of a variable and its indirect effects through interactions with other variables, and therefore gives a more complete picture of its influence than the first-order index alone. The FVF and W/B ratio had the highest total-order indices and were therefore the most influential factors in determining the compressive strength of PET fiber-reinforced concrete. Because the total-order index includes interaction effects, these high values reflect the combined contribution of each variable acting alone and through its interactions, not its individual contribution only.
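The difference between first-order and total-order indices, and why a high total-order index does not by itself imply a large individual effect, follows from the standard definitions. The two-variable function in the example below is hypothetical and is not the PFRC model:

```latex
S_i = \frac{\operatorname{Var}\!\left[\mathbb{E}(Y \mid X_i)\right]}{\operatorname{Var}(Y)},
\qquad
S_{T_i} = \frac{\mathbb{E}\!\left[\operatorname{Var}(Y \mid \mathbf{X}_{\sim i})\right]}{\operatorname{Var}(Y)}
        = 1 - \frac{\operatorname{Var}\!\left[\mathbb{E}(Y \mid \mathbf{X}_{\sim i})\right]}{\operatorname{Var}(Y)},
\qquad
S_{T_i} - S_i \ge 0 .

\text{Example: } Y = X_1 X_2, \quad X_1, X_2 \sim \mathcal{U}(-1, 1) \text{ independent}:

\mathbb{E}(Y \mid X_1) = X_1\,\mathbb{E}(X_2) = 0 \;\Rightarrow\; S_1 = 0,

\operatorname{Var}(Y) = \mathbb{E}(X_1^2)\,\mathbb{E}(X_2^2) = \tfrac{1}{3}\cdot\tfrac{1}{3} = \tfrac{1}{9},
\qquad
\mathbb{E}\!\left[\operatorname{Var}(Y \mid X_2)\right] = \mathbb{E}(X_2^2)\,\operatorname{Var}(X_1) = \tfrac{1}{9}
\;\Rightarrow\; S_{T_1} = 1 .
```

Here X₁ has no effect on its own (S₁ = 0) yet accounts for all of the output variance through its interaction with X₂ (S_T1 = 1), so the gap S_Ti − S_i directly measures the share of variance that variable i contributes through interactions.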

The two sensitivity analyses disagree noticeably. SHAP identifies binder content and aggregate content as the most influential features, whereas Sobol analysis identifies fiber volume fraction and W/B ratio. One reason is that SHAP is a local method: it explains individual predictions of the fitted model, and its global ranking is an aggregate of these local attributions, which depends on the model and on the distribution of the samples in the database. The Sobol method, in contrast, is a global, variance-based method (Zhang et al., 2015) that evaluates the model over Monte Carlo samples of the whole input space and explicitly accounts for interactions between the input variables through the total-order indices. The analysis indicated that the interaction between the water–binder ratio and fiber volume fraction had a notable effect on compressive strength, suggesting that the combined influence of these variables exceeds their separate contributions. The other input features, such as binder content and aggregate content, had relatively lower sensitivity indices. Thus, SHAP provides more detail about the behavior of the fitted model on the available data, whereas the Sobol method, by accounting for interactions across the input space, appears to give a more reliable indication of the features that govern the CS of PFRC.

Conclusion

This study implemented DEO-tuned decision tree-based machine learning algorithms to predict the CS of PFRC. The following conclusions can be drawn from the study:

- Three decision tree-based machine learning models, namely the Decision Tree, Random Forest, and Gradient Boosting Machine regressors, were applied to predict the compressive strength of PET fiber-reinforced concrete.
- The hyperparameters of all models were optimized using the Dolphin Echolocation Optimization technique, a metaheuristic algorithm developed for complex optimization problems (Kaveh & Farhoudi, 2013).
- SHAP and Sobol sensitivity analyses were employed to evaluate the sensitivity of the CS of PFRC to each input feature.
- The Dolphin Echolocation Optimization technique effectively optimized the hyperparameters of the machine learning models, enhancing their predictive capabilities.
- The optimized Random Forest model showed the highest accuracy of the three models in both the training and testing phases.
- The SHAP analysis provided insights into the contributions of individual features and their impact on the predicted compressive strength.
- The Sobol sensitivity analysis quantified the relative importance of the input variables and identified the key drivers of compressive strength.
- Binder content, fiber volume fraction, and W/B ratio were identified as the most influential input features.
- Further research and validation using independent data sets are recommended to confirm the applicability and generalizability of the proposed methodology.

Data availability

Data will be made available on request.

References

Adnan, H. M., & Dawood, A. O. (2020). Strength behavior of reinforced concrete beam using re-cycle of PET wastes as synthetic fibers. Case Studies in Construction Materials, 13, e00367. https://doi.org/10.1016/j.cscm.2020.e00367

Ahmed, Z. S., & Iqbal, K. (2023). Automated signal detection and prioritization in FAERS data using machine learning algorithms for pharmacovigilance. Journal of Advanced Analytics in Healthcare Management, 7(1), 77–95.

Asteris, P. G., Skentou, A. D., Bardhan, A., Samui, P., & Pilakoutas, K. (2021). Predicting concrete compressive strength using hybrid ensembling of surrogate machine learning models. Cement and Concrete Research, 145, 106449. https://doi.org/10.1016/j.cemconres.2021.106449

Badirli, S., Liu, X., Xing, Z., Bhowmik, A., Doan, K., & Keerthi, S. S. (2020). Gradient boosting neural networks: GrowNet. arXiv.

Benkharbeche, H., Rokbi, M., Rahmouni, Z. E. A., Ghebouli, M., Grine, M., & Baali, B. (2021). Effect of fibers orientation on the fracture of polymer concrete based on quartz, polyester and jute fabrics. Defect and Diffusion Forum, 406, 511–520. https://doi.org/10.4028/www.scientific.net/DDF.406.511

Chen, X., & Ishwaran, H. (2012). Random forests for genomic data analysis. Genomics, 99(6), 323–329. https://doi.org/10.1016/j.ygeno.2012.04.003

de Ville, B. (2013). Decision trees. WIREs Computational Statistics, 5(6), 448–455. https://doi.org/10.1002/wics.1278

Feurer, M., & Hutter, F. (2019). Hyperparameter optimization. Automated machine learning: Methods, systems, challenges (pp. 3–33). Springer International Publishing.

Foti, D. (2011). Preliminary analysis of concrete reinforced with waste bottles PET fibers. Construction and Building Materials, 25(4), 1906–1915. https://doi.org/10.1016/j.conbuildmat.2010.11.066

Foti, D. (2013). Use of recycled waste pet bottles fibers for the reinforcement of concrete. Composite Structures, 96, 396–404. https://doi.org/10.1016/j.compstruct.2012.09.019

Fraternali, F., Ciancia, V., Chechile, R., Rizzano, G., Feo, L., & Incarnato, L. (2011). Experimental study of the thermo-mechanical properties of recycled PET fiber-reinforced concrete. Composite Structures, 93(9), 2368–2374. https://doi.org/10.1016/j.compstruct.2011.03.025

Gajowniczek, K., & Ząbkowski, T. (2021). Interactive decision tree learning and decision rule extraction based on the ImbTree entropy and ImbTree AUC packages. Processes, 9(7), 1107. https://doi.org/10.3390/pr9071107

Gu, S., Wang, J., Hu, G., Lin, P., Zhang, C., Tang, L., & Xu, F. (2021). Prediction of wind-induced vibrations of twin circular cylinders based on machine learning. Ocean Engineering, 239, 109868. https://doi.org/10.1016/j.oceaneng.2021.109868

Irwan, J. M., Asyraf, R. M., Othman, N., Koh, K. H., Annas, M. M. K., & Faisal, S. K. (2013). The mechanical properties of PET fiber reinforced concrete from recycled bottle wastes. Advanced Materials Research, 795, 347–351. https://doi.org/10.4028/www.scientific.net/AMR.795.347

Kaveh, A. (2017a). Advances in metaheuristic algorithms for optimal design of structures. Springer International Publishing.

Kaveh, A. (2017b). Applications of metaheuristic optimization algorithms in civil engineering. Springer International Publishing.

Kaveh, A., & Farhoudi, N. (2013). A new optimization method: Dolphin echolocation. Advances in Engineering Software, 59, 53–70. https://doi.org/10.1016/j.advengsoft.2013.03.004

Kaveh, A., & Farhoudi, N. (2016a). Dolphin echolocation optimization for design of cantilever retaining walls. Computer Science Engineering, 17, 193–211.

Kaveh, A., & Farhoudi, N. (2016b). Dolphin echolocation optimization: Continuous search space. Advances in Computational Design, 1(2), 175–194. https://doi.org/10.12989/ACD.2016.1.2.175

Kaveh, A., Hoseini Vaez, S., & Hosseini, P. (2018). Simplified dolphin echolocation algorithm for optimum design of frame. Smart Structures and Systems. https://doi.org/10.12989/sss.2018.21.3.321

Kaveh, A., & Khalegi, A. (1998). Prediction of strength for concrete specimens using artificial neural networks. Advances in engineering computational technology (pp. 165–171). Civil-Comp Press.

Kaveh, A., & Khavaninzadeh, N. (2023). Efficient training of two ANNs using four meta-heuristic algorithms for predicting the FRP strength. Structures, 52, 256–272. https://doi.org/10.1016/j.istruc.2023.03.178

Kim, S. B., Yi, N. H., Kim, H. Y., Kim, J.-H.J., & Song, Y.-C. (2010). Material and structural performance evaluation of recycled PET fiber reinforced concrete. Cement and Concrete Composites, 32(3), 232–240. https://doi.org/10.1016/j.cemconcomp.2009.11.002

Lundberg, S. M., & Lee, S.-I. (2017). A unified approach to interpreting model predictions. Advances in neural information processing systems. Curran Associates Inc.

Marthong, C. (2015). Effects of PET fiber arrangement and dimensions on mechanical properties of concrete. The IES Journal Part A, 8(2), 111–120. https://doi.org/10.1080/19373260.2015.1014304

Marthong, C., & Marthong, S. (2016). An experimental study on the effect of PET fibers on the behavior of exterior RC beam-column connection subjected to reversed cyclic loading. Structures, 5, 175–185. https://doi.org/10.1016/j.istruc.2015.11.003

Marthong, C., & Sarma, D. K. (2016). Influence of PET fiber geometry on the mechanical properties of concrete: An experimental investigation. European Journal of Environmental and Civil Engineering, 20(7), 771–784. https://doi.org/10.1080/19648189.2015.1072112

Meza, A., Pujadas, P., Meza, L. M., Pardo-Bosch, F., & López-Carreño, R. D. (2021). Mechanical optimization of concrete with recycled PET fibres based on a statistical-experimental study. Materials, 14(2), 240. https://doi.org/10.3390/ma14020240

Mohammed, A. A., & Rahim, A. A. F. (2020). Experimental behavior and analysis of high strength concrete beams reinforced with PET waste fiber. Construction and Building Materials, 244, 118350. https://doi.org/10.1016/j.conbuildmat.2020.118350

Mohammed Ali, TKh. (2021). Shear strength of a reinforced concrete beam by PET fiber. Environment, Development and Sustainability, 23(6), 8433–8450. https://doi.org/10.1007/s10668-020-00974-w

Nafees, A., Althoey, F., Khan, S., Sikandar, M. A., Alyami, S. H., Rehman, M. F., Javed, M. F., & Eldin, S. M. (2023). Plastic concrete mechanical properties prediction based on experimental data. Case Studies in Construction Materials, 18, e01831. https://doi.org/10.1016/j.cscm.2023.e01831

Naidu Gopu, G., & Joseph, S. A. (2022). Corrosion behavior of fiber-reinforced concrete—A review. Fibers, 10(5), 38. https://doi.org/10.3390/fib10050038

Nibudey, R. N., Nagarnaik, P. B., Parbat, D. K., & Pande, A. M. (2013). A model for compressive strength of PET fiber reinforced concrete. American Journal of Engineering Research, 2(12), 367–372.

Ochi, T., Okubo, S., & Fukui, K. (2007). Development of recycled PET fiber and its application as concrete-reinforcing fiber. Cement and Concrete Composites, 29(6), 448–455. https://doi.org/10.1016/j.cemconcomp.2007.02.002

Parhi, S. K., Dwibedy, S., Panda, S., & Panigrahi, S. K. (2023). A comprehensive study on controlled low strength material. Journal of Building Engineering. https://doi.org/10.1016/j.jobe.2023.107086

Parhi, S. K., & Panigrahi, S. K. (2023). Alkali–silica reaction expansion prediction in concrete using hybrid metaheuristic optimized machine learning algorithms. Asian Journal of Civil Engineering. https://doi.org/10.1007/s42107-023-00799-8

Parhi, S. K., & Patro, S. K. (2023). Prediction of compressive strength of geopolymer concrete using a hybrid ensemble of grey wolf optimized machine learning estimators. Journal of Building Engineering, 71, 106521. https://doi.org/10.1016/j.jobe.2023.106521

Pelisser, F., Montedo, O. R. K., Gleize, P. J. P., & Roman, H. R. (2012). Mechanical properties of recycled PET fibers in concrete. Materials Research, 15, 679–686. https://doi.org/10.1590/S1516-14392012005000088

Pradhan, P., Panda, S., Kumar Parhi, S., & Kumar Panigrahi, S. (2022a). Effect of critical parameters on the fresh properties of Self Compacting geopolymer concrete. Materials Today: Proceedings. https://doi.org/10.1016/j.matpr.2022.02.506

Pradhan, P., Panda, S., Kumar Parhi, S., & Kumar Panigrahi, S. (2022b). Variation in fresh and mechanical properties of GGBFS based self-compacting geopolymer concrete in the presence of NCA and RCA. Materials Today: Proceedings. https://doi.org/10.1016/j.matpr.2022.03.337

Pradhan, P., Panda, S., Kumar Parhi, S., & Kumar Panigrahi, S. (2022c). Factors affecting production and properties of self-compacting geopolymer concrete: A review. Construction and Building Materials, 344, 128174. https://doi.org/10.1016/j.conbuildmat.2022.128174

Rahmani, E., Dehestani, M., Beygi, M. H. A., Allahyari, H., & Nikbin, I. M. (2013). On the mechanical properties of concrete containing waste PET particles. Construction and Building Materials, 47, 1302–1308. https://doi.org/10.1016/j.conbuildmat.2013.06.041

Rao, M. M., Patro, S. K., & Acharya, P. K. (2023). Utilisation of plastic waste as synthetic fiber and aggregate in concrete: A review. The Open Civil Engineering Journal. https://doi.org/10.2174/18741495-v17-e230113-2022-HT31-3975-4

Rao, M. M., Patro, S. K., & Basarkar, S. S. (2022). Mechanical and post-cracking performance of recycled high density polyethylene fiber reinforced concrete. Journal of the Institution of Engineers Series A, 103(2), 519–530. https://doi.org/10.1007/s40030-022-00625-5

Sagi, O., & Rokach, L. (2018). Ensemble learning: A survey. WIREs Data Mining and Knowledge Discovery, 8(4), e1249. https://doi.org/10.1002/widm.1249

Saltelli, A., Tarantola, S., & Chan, K.P.-S. (1999). A quantitative model-independent method for global sensitivity analysis of model output. Technometrics, 41(1), 39–56. https://doi.org/10.1080/00401706.1999.10485594

Singh, S., Patro, S. K., & Parhi, S. K. (2023). Evolutionary optimization of machine learning algorithm hyperparameters for strength prediction of high-performance concrete. Asian Journal of Civil Engineering. https://doi.org/10.1007/s42107-023-00698-y

Singh, U., Rizwan, M., Alaraj, M., & Alsaidan, I. (2021). A machine learning-based gradient boosting regression approach for wind power production forecasting: A step towards smart grid environments. Energies, 14(16), 5196. https://doi.org/10.3390/en14165196

Weckert, S., Weerasooriya, T., & Gunnarson, C. A. (2011). Loading rate effect on the tensile failure of concrete and its constituents using diametrical compression and direct tension. In T. Proulx (Ed.), Dynamic behavior of materials, Volume 1. Conference Proceedings of the Society for Experimental Mechanics Series (pp. 13–27). Springer.

Zhang, T., Lin, W., Vogelmann, A. M., Zhang, M., Xie, S., Qin, Y., & Golaz, J.-C. (2021). Improving convection trigger functions in deep convective parameterization schemes using machine learning. Journal of Advances in Modeling Earth Systems, 13(5), e2020MS002365. https://doi.org/10.1029/2020MS002365

Zhang, X., Trame, M., Lesko, L., & Schmidt, S. (2015). Sobol sensitivity analysis: A tool to guide the development and evaluation of systems pharmacology models. CPT Pharmacometrics and Systems Pharmacology, 4(2), 69–79. https://doi.org/10.1002/psp4.6

Zhou, Y., Liu, Y., Wang, D., & Liu, X. (2021). Comparison of machine-learning models for predicting short-term building heating load using operational parameters. Energy and Buildings, 253, 111505. https://doi.org/10.1016/j.enbuild.2021.111505

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information


Contributions

Conceptualization and Methodology: Suraj K Parhi and Sanjaya K Patro; Formal analysis and investigation: Suraj K Parhi; Writing—original draft preparation: Suraj K Parhi; Writing—review and editing: Suraj K Parhi and Sanjaya K Patro; Supervision: Sanjaya K Patro


Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the research presented in this study.


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Parhi, S.K., Patro, S.K. Compressive strength prediction of PET fiber-reinforced concrete using Dolphin echolocation optimized decision tree-based machine learning algorithms. Asian J Civ Eng 25, 977–996 (2024). https://doi.org/10.1007/s42107-023-00826-8


DOI: https://doi.org/10.1007/s42107-023-00826-8