Abstract

We study a natural Wasserstein gradient flow on manifolds of probability distributions with discrete sample spaces. We derive the Riemannian structure for the probability simplex from the dynamical formulation of the Wasserstein distance on a weighted graph. We pull back the geometric structure to the parameter space of any given probability model, which allows us to define a natural gradient flow there. In contrast to the natural Fisher–Rao gradient, the natural Wasserstein gradient incorporates a ground metric on sample space. We illustrate the analysis of elementary exponential family examples and demonstrate an application of the Wasserstein natural gradient to maximum likelihood estimation.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The statistical distance between histograms plays a fundamental role in statistics and machine learning. It provides the geometric structure on statistical manifolds [3]. Learning problems usually correspond to minimizing a loss function over these manifolds. An important example is the Fisher–Rao metric on the probability simplex, which has been studied especially within the field of information geometry [3, 6]. A classic result due to Chentsov [11] characterizes this Riemannian metric as the only one, up to scaling, that is invariant with respect to natural statistical embeddings by Markov morphisms (see also [9, 21, 32]). Using the Fisher–Rao metric, a natural Riemannian gradient descent method is introduced [2]. This natural gradient has found numerous successful applications in machine learning (see, e.g., [1, 27, 35, 36, 40]).

Optimal transport provides another statistical distance, named Wasserstein or Earth Mover’s distance. In recent years, this metric has attracted increasing attention within the machine learning community [5, 16, 31]. One distinct feature of optimal transport is that it provides a distance among histograms that incorporates a ground metric on sample space. The \(L^2\)-Wasserstein distance has a dynamical formulation, which exhibits a metric tensor structure. The set of probability densities with this metric forms an infinite-dimensional Riemannian manifold, named density manifold [20]. The gradient descent method in the density manifold, called Wasserstein gradient flow, has been widely studied in the literature; see [34, 38] and references.

A question intersecting optimal transport and information geometry arises: What is the natural Wasserstein gradient descent method on the parameter space of a statistical model? In optimal transport, the Wasserstein gradient flow is studied on the full space of probability densities, and shown to have deep connections with the ground metrics on sample space deriving from physics [33], fluid mechanics [10] and differential geometry [25]. We expect that these relations also exist on parametrized probability models, and that the Wasserstein gradient flow can be useful in the optimization of objective functions that arise in machine learning problems. By incorporating a ground metric on sample space, this method can serve to implement useful priors in the learning algorithms.

We are interested in developing synergies between the information geometry and optimal transport communities. In this paper, we take a natural first step in this direction. We introduce the Wasserstein natural gradient flow on the parameter space of probability models with discrete sample spaces. The \(L^2\)-Wasserstein metric on discrete states was introduced in [12, 26, 29]. Following the settings from [13, 14, 17, 22], the probability simplex forms the Riemannian manifold called Wasserstein probability manifold. The Wasserstein metric on the probability simplex can be pulled back to the parameter space of a probability model. This metric allows us to define a natural Wasserstein gradient method on parameter space.

We note that one finds several formulations of optimal transport for continuous sample spaces. On the one hand, there is the static formulation, known as Kantorovich’s linear programming [38]. Here, the linear program is to find the minimal value of a functional over the set of joint measures with given marginal histograms. The objective functional is given as the expectation value of the ground metric with respect to a joint probability density measure. On the other hand, there is the dynamical formulation, known as the Benamou-Brenier formula [8]. This dynamic formulation gives the metric tensor for measures by lifting the ground metric tensor of sample spaces. Both static and dynamic formulations are equivalent in the case of continuous state spaces. However, the two formulations lead to different metrics in the simplex of discrete probability distributions. The major reason for this difference is that the discrete sample space is not a length space.Footnote 1 Thus the equivalence result in classical optimal transport is no longer true in the setting of discrete sample spaces. We note that for the static formulation, there is no Riemannian metric tensor for the discrete probability simplex. See [14, 26] for a detailed discussion.

In the literature, the exploration of connections between optimal transport and information geometry was initiated in [4, 18, 39]. These works focus on the distance function induced by linear programming on discrete sample spaces. As we pointed out above, this approach can not cover the Riemannian and differential structures induced by optimal transport. In this paper, we use the dynamical formulation of optimal transport to define a Riemannian metric structure for general statistical manifolds. With this, we obtain a natural gradient operator, which can be applied to any optimization problem over a parameterized statistical model. In particular, it is applicable to maximum likelihood estimation. Other works have studied the Gaussian family of distributions with \(L^2\)-Wasserstein metric [30, 37]. In that particular case, the constrained optimal transport metric tensor can be written explicitly and the corresponding density submanifold is a totally geodesic submanifold. In contrast to those works, our discussion is applicable to arbitrary parametric models.

This paper is organized as follows. In Sect. 2 we briefly review the Riemannian manifold structure in probability space introduced by optimal transport in the cases of continuous and discrete sample spaces. In Sect. 3 we introduce Wasserstein statistical manifolds by isometric embedding into the probability manifold, and in Sect. 4 we derive the corresponding gradient flows. In Sect. 5 we discuss a few examples.

2 Optimal transport on continuous and discrete sample spaces

In this section, we briefly review the results of optimal transport. We introduce the corresponding Riemannian structure for simplices of probability distributions with discrete support.

2.1 Optimal transport on continuous sample space

We start with a review of the optimal transport problem on continuous spaces. This will guide our discussion of the discrete state case. For related studies, we refer the reader to [20, 38] and the many references therein.

Denote the sample space by \((\Omega , g^\Omega )\). Here \(\Omega \) is a finite dimensional smooth Riemannian manifold, for example, \(\mathbb {R}^d\) or the open unit ball therein. Its inner product is denoted by \(g^\Omega \) and its volume form by dx. Denote the geodesic distance of \(\Omega \) by \(d_\Omega :\Omega \times \Omega \rightarrow \mathbb {R}_+\).

Consider the set \(\mathcal {P}_2(\Omega )\) of Borel measurable probability density functions on \(\Omega \) with finite second moment. Given \(\rho ^0, \rho ^1\in \mathcal {P}_2(\Omega )\), the \(L^2\)-Wasserstein distance between \(\rho ^0\) and \(\rho ^1\) is denoted by \(W:\mathcal {P}(\Omega )\times \mathcal {P}(\Omega )\rightarrow \mathbb {R}_+\). There are two equivalent ways of defining this distance. On one hand, there is the static formulation. This refers to the following linear programming problem:

where the infimum is taken over the set \(\Pi (\rho ^0, \rho ^1)\) of joint probability measures on \(\Omega \times \Omega \) that have marginals \(\rho ^0\), \(\rho ^1\).

On the other hand, the Wasserstein distance W can be written in a dynamic formulation, where a probability path \(\rho :[0,1]\rightarrow \mathcal {P}_2(\Omega )\) connecting \(\rho ^0\), \(\rho ^1\) is considered. This refers to a variational problem known as the Benamou-Brenier formula:

where the infimum is taken over the set of Borel potential functions \([0,1]\times \Omega \rightarrow \mathbb {R}\). Each potential function \(\Phi \) determines a corresponding density path \(\rho \) as the solution of the continuity equation

Here \(\text {div}\) and \(\nabla \) are the divergence and gradient operators in \(\Omega \). The continuity equation is well known in physics.

The equivalence of the static (1) and dynamic (2) formulations is well known (for continuous \(\Omega \)). For the reader’s convenience we give a sketch of proof in the appendix. In this paper we focus on the variational formulation (2). In fact, this formulation entails the definition of a Riemannian structure as we now discuss. For simplicity, we only consider the set of smooth and strictly positive probability densities

Denote \( \mathcal {F}(\Omega ):=C^{\infty }(\Omega )\) the set of smooth real valued functions on \(\Omega \). The tangent space of \(\mathcal {P}_+(\Omega )\) is given by

Given \(\Phi \in \mathcal {F}(\Omega )\) and \(\rho \in \mathcal {P}_+(\Omega )\), define

We assume the zero flux condition

In view of the continuity equation, the zero flux condition is equivalent to requiring that \(\int _\Omega \frac{\partial \rho }{\partial t}dx = 0\), which means that the space integral of \(\rho \) is always 1. When \(\Omega \) is compact without boundary, this is automatically satisfied. This is also true when \(\Omega = \mathbb {R}^d \) and \(\rho \) has finite second moment. Thus \(V_\Phi \in T_{\rho }\mathcal {P}_+(\Omega )\). The elliptic operator \(\nabla \cdot (\rho \nabla )\) identifies the function \(\Phi \) on \(\Omega \) modulo additive constants with a tangent vector \(V_{\Phi }\) of the space of densities (for more details see [20, 25]). This gives an isomorphism

Define the Riemannian metric (inner product) on the tangent space of positive densities \(g^W:{T_\rho }\mathcal {P}_+(\Omega )\times {T_\rho }\mathcal {P}_+(\Omega )\rightarrow \mathbb {R}\) by

where \(\Phi (x)\), \(\tilde{\Phi }(x)\in \mathcal {F}(\Omega )/\mathbb {R}\). This inner product endows \(\mathcal {P}_+(\Omega )\) with an infinite dimensional Riemannian metric tensor. In other words, the variational problem (2) is a geometric action energy in \((\mathcal {P}_+(\Omega ), g^W)\) in the sense of [8, 25]. In literature [20], \((\mathcal {P}_+(\Omega ), g^W)\) is called density manifold.

2.2 Dynamical optimal transport on discrete sample spaces

We translate the dynamical perspective from the previous section to discrete state spaces, i.e., we replace the continuous space \(\Omega \) by a discrete space \(I=\{1,\ldots , n\}\).

To encode the metric tensor of discrete states, we first need to introduce a ground metric notion on sample space. We do this in terms of a graph with weighted edges, \(G=(V, E, \omega )\), where \(V=I\) is the vertex set, E is the edge set, and \(\omega =(\omega _{ij})_{i,j\in I}\in \mathbb {R}^{n\times n}\) are the edge weights. These weights satisfy

As mentioned above, the weights encode the ground metric on the discrete state space. More precisely, we write

where \(d^G_{ij}\) represents the distance or ground metric between states i and j. The set of neighbors or adjacent vertices of i is denoted by \(N(i)=\{j\in V:(i,j)\in E\}\).

The probability simplex supported on the vertices of G is defined by

Here \(p=(p_1,\ldots , p_n)\) is a probability vector with coordinates \(p_i\) corresponding to the probabilities assigned to each node \(i\in I\). We denote the relative interior of the probability simplex by \(\mathcal {P}_+(I)\). This consists of the strictly positive probability distributions, \(p\in \mathcal {P}(I)\) with \(p_i>0\), \(i\in I\).

Next we introduce the variational problem (2) on discrete states. First we need to define the “metric tensor” on graphs. A vector field \(v=(v_{ij})_{i,j\in V}\in \mathbb {R}^{n\times n}\) on G is a skew-symmetric matrix:

A potential function \(\Phi =(\Phi _i)_{i=1}^n\in \mathbb {R}^{n}\) defines a gradient vector field \(\nabla _G\Phi =(\nabla _G\Phi _{ij})_{i,j\in V}\in \mathbb {R}^{n\times n}\) on the graph G by the finite differences

Here we use \(\sqrt{\omega }\) rather than \(1/d^G\) for simplicity of notations. In this way, we can represent the gradient, divergence, and Laplacian matrix in a multiplicity of weight, instead of dividing the ground metric.

We define an inner product of vector fields \(v_{ij}\), \(\tilde{v}_{ij}\) at each state \(i \in I\) by

In particular, the gradient vector field \(\nabla _G\Phi \) defines a kinetic energy at each state \(i \in I\) by

We next define the expectation value of kinetic energy with respect to a probability distribution p:

This can also be written as

where

There are two definitions hidden in (4). First, \({\text {div}}_G:\mathbb {R}^{n\times n}\rightarrow \mathbb {R}^n\) maps any given vector field m on the graph G to a potential function

Second, the probability weighted gradient vector field \(m=p\nabla _G\Phi \) defined by

where \(\frac{p_i+p_j}{2}\) represents the probability weight on the edge \((i,j)\in E\).

We are now ready to introduce the \(L^2\)-Wasserstein metric on \(\mathcal {P}_+(I)\).

Definition 1

For any \(p^0\), \(p^1\in \mathcal {P}_+(I)\), define the Wasserstein distance \(W:\mathcal {P}_+(I)\times \mathcal {P}_+(I)\rightarrow \mathbb {R}\) by

Here the infimum is taken over pairs \((p(t), \Phi (t))\) with \(p\in H^1((0,1), \mathbb {R}^{n})\) and \(\Phi :[0, 1]\rightarrow \mathbb {R}^n\) measurable, satisfying

Remark 1

It is worth mentioning that the metric given in Definition 1 is different from the metric defined by linear programming. In other words, denote the distance \(d^G(i,j)\) between two vertices i and j as the length of a shortest (i, j)-path. If \((i,j)\in E\), then \(d^G(i,j)\) is same as the ground metric defined in (3). Then

The reason for this in-equivalence is that the discrete sample space I is not a length space. In other words, there is no continuous path in I connecting two nodes in I. For more details see discussions in the appendix.

2.3 Wasserstein geometry and discrete probability simplex

In this section we introduce the primal coordinates of the discrete probability simplex with \(L^2\)-Wasserstein Riemannian metric. Our discussion follows the recent work [22]. The probability simplex \(\mathcal {P}(I)\) is a manifold with boundary. To simplify the discussion, we focus on the interior \(\mathcal {P}_+(I)\). The geodesic properties on the boundary \(\partial \mathcal {P}(I)\) have been studied in [17].

Let us focus on the Riemannian structure. In the following we introduce an inner product on the tangent space

Denote the space of potential functions on I by \( \mathcal {F}(I)=\mathbb {R}^{n}\). Consider the quotient space

where \([\Phi ]=\{(\Phi _1+c,\ldots , \Phi _n+c) :c\in \mathbb {R}\}\) are functions defined up to addition of constants.

We introduce an identification map via (4)

In [12] it is shown that \(V_\Phi :\mathcal {F}(I)/\mathbb {R}\rightarrow T_p\mathcal {P}_+(I)\) is a well defined map which is linear and one-to-one. I.e., \( \mathcal {F}(I)/\mathbb {R}\cong T_p^*\mathcal {P}_+(I)\), where \(T_p^*\mathcal {P}_+(I)\) is the cotangent space of \(\mathcal {P}_+(I)\). This identification induces the following inner product on \(T_p\mathcal {P}_+(I)\).

We first present this in a dual formulation, which is known in the literature [25].

Definition 2

(Inner product in dual coordinates) The inner product \(g_p^W :T_p\mathcal {P}_+(I)\times T_p\mathcal {P}_+(I) \rightarrow \mathbb {R}\) takes any two tangent vectors \(V_{\Phi }\) and \(V_{\tilde{\Phi }}\in T_p\mathcal {P}_+(I)\) to

We shall now give the inner product in primal coordinates. The following matrix operator will be the key to the Riemannian metric tensor of \((\mathcal {P}_+(I), g^W)\).

Definition 3

(Linear weighted Laplacian matrix) Given \(I=\{1,\ldots , n\}\) and a weighted graph \(G=(I,E,\omega )\), the matrix function \(L(\cdot ):\mathbb {R}^n\rightarrow \mathbb {R}^{n\times n}\) is defined by

where

-

\(D \in \mathbb {R}^{|E|\times n}\) is the discrete gradient operator

$$\begin{aligned} D_{(i,j)\in {E}, k\in V}={\left\{ \begin{array}{ll} \sqrt{\omega _{ij}}, &{} \text {if }i=k,\hbox { }i>j\\ -\sqrt{\omega _{ij}}, &{} \text {if }j=k,\hbox { }i>j\\ 0, &{} \text {otherwise} \end{array}\right. }, \end{aligned}$$ -

\(-D^{\mathsf {T}}\in \mathbb {R}^{n\times |E|}\) is the discrete divergence operator, also called oriented incidence matrix [15], and

-

\(\Lambda (a)\in \mathbb {R}^{|E|\times |E|}\) is a weight matrix depending on a,

$$\begin{aligned} \Lambda (a)_{(i,j)\in E, (k,l)\in E}={\left\{ \begin{array}{ll} \frac{a_i+a_j}{2} &{} \text {if }(i,j)=(k,l)\in E\\ 0 &{} \text {otherwise} \end{array}\right. }. \end{aligned}$$

Consider some \(p\in \mathcal {P}_+(I)\). From spectral graph theory [15], we know that L(p) can be decomposed as

Here \(0<\lambda _1(p)\le \cdots \le \lambda _{n-1}(p)\) are the eigenvalues of L(p) in ascending order, and \(U(p)=(u_0(p),u_1(p),\cdots , u_{n-1}(p))\) is the corresponding orthogonal matrix of eigenvectors with

We write \(L(p)^{\dagger }\) for the pseudo-inverse of L(p), i.e.,

With \(\sigma =L(p)\Phi \), \(\tilde{\sigma }=L(p)\tilde{\Phi }\), we see that

Now we are ready to give the inner product in primal coordinates.

Definition 4

(Inner product in primal coordinates) The inner product \(g^{W}_p:T_p\mathcal {P}_+(I)\times T_p\mathcal {P}_+(I)\rightarrow \mathbb {R}\) is defined by

In other words, the variational problem from Definition 1 is a minimization of geometry energy functional in \(\mathcal {P}_+(I)\), i.e.,

This defines a Wasserstein Riemannian structure on the probability simplex. For more details of Riemannian formulas see [22]. Following [20] we could call \((\mathcal {P}_+(I), g^W)\) discrete density manifold. However, this could be easily confused with other notions from information geometry, and hence we will use the more explicit terminology Wasserstein statistical manifold, or Wasserstein manifold for short.

3 Wasserstein statistical manifold

In this section we study parametric probability models endowed with the \(L^2\)-Wasserstein Riemannian metric. We define this in the natural way, by pulling back the Riemannian structure from the Wasserstein manifold that we discussed in the previous section. This allows us to introduce a natural gradient flow on the parameter space of a statistical model.

3.1 Wasserstein statistical manifold

Consider a statistical model defined by a triplet \((\Theta , I, p)\). Here, \(I=\{1,\ldots , n\}\) is the sample space, \(\Theta \) is the parameter space, which is an open subset of \(\mathbb {R}^d\), \(d\le n-1\), and \(p:\Theta \rightarrow \mathcal {P}_+(I)\) is the parametrization function,

In the sequel we will assume that \(\text {rank}(J_{\theta } p(\theta ))=d\), so that the parametrization is locally injective.

We define a Riemannian metric g on \(\Theta \) as the pull-back of metric \(g^W\) on \(\mathcal {P}_+(I)\). In other words, we require that \(p:(\Theta ,g)\rightarrow (\mathcal {P}_+(I), g^W)\) is an isometric embedding:

Here \(d p(\theta )(a)=\big (\sum _{j=1}^n\frac{\partial p_i(\theta )}{\partial \theta _j}a_j\big )_{i=1}^n=J_\theta p(\theta )a\), where \(J_\theta p(\theta )\) is the Jacobi matrix of \( p(\theta )\) with respect to \(\theta \). We arrive at the following definition.

Definition 5

For any pair of tangent vectors \(a,b\in T_\theta \Theta =\mathbb {R}^d\), define

where \(J_\theta p(\theta )=(\frac{\partial p_i(\theta )}{\partial \theta _j})_{1\le i\le n, 1\le j\le d}\in \mathbb {R}^{n\times d}\) is the Jacobi matrix of the parametrization p, and \(L( p(\theta ))^{\mathcal {\dagger }}\in \mathbb {R}^{n\times n}\) is the pseudo-inverse of the linear weighted Laplacian matrix.

This inner product is consistent with the restriction of the Wasserstein metric \(g^W\) to \( p(\Theta )\). For this reason, we call \( p(\Theta )\), or \((\Theta , I, p)\), together with the induced Riemannian metric g, Wasserstein statistical manifold.

We need to make sure that the embedding procedure is valid, because the metric tensor \(L(p)^{\mathcal {\dagger }}\) is only of rank \(n-1\). The next lemma shows that \((\Theta , g)\) is a well defined d-dimensional Riemannian manifold.

Lemma 6

For any \(\theta \in \Theta \), we have

In addition, \(g_{\theta }\) is smooth as a function of \(\theta \), so that \((\Theta , g)\) is a smooth Riemannian manifold.

Proof

We only need to show that \(J_\theta p(\theta )^{\mathsf {T}}L( p(\theta ))^{\mathcal {\dagger }}J_\theta p(\theta )\in \mathbb {R}^{d\times d}\) is a positive definite matrix. Consider

where \(0\in \mathbb {R}^{n-1}\). Since L(p) only has one simple eigenvalue 0 with eigenvector \(u_0\), then

Since \(u_0^{\mathsf {T}} p(\theta )=\frac{1}{\sqrt{n}}\sum _{i=1}^np_i(\theta )=0\), we have that \(u_0^{\mathsf {T}}\frac{\partial p(\theta )}{\partial \theta _j}=\frac{1}{\sqrt{n}}\sum _{i=1}^n\frac{\partial p_i(\theta )}{\partial \theta _j}=0\), i.e.,

Left multiply \(u_0\) into (7), we obtain

Thus \(c=0\), and (7) forms

Since \(\text {rank}(J_{\theta } p(\theta ))=d<n\), we have \(a=0\), which finishes the proof. \(\square \)

We illustrate some geometric calculations on parameter space \((\Theta , g)\). For simplicity of illustration, we assume \(\Theta \subset \mathbb {R}^d\), and denote a matrix function \(G(\theta )\in \mathbb {R}^{d\times d}\) with \(g_\theta (\dot{\theta }, \dot{\theta })=\dot{\theta }^{\mathsf {T}}G(\theta )\dot{\theta }\), i.e.,

Under this notation, given \(\theta _0\), \(\theta _1\in \Theta \), the Riemannian distance on \((\Theta , g)\) is defined by the geometric action functional:

Denote \(\theta (t)=\theta _t\), and \(S_t\) is the Legendre transformation of \(\dot{\theta }_t\) in \((\Theta , g)\), then the cotangent geodesic flow satisfies

It is worth recalling the following facts. If p is an identity map, then (10) translates to

In addition, if \(I=\Omega \) and we replace i by x and \(p_i(t)\) by \(\rho (t,x)\), the above becomes

which are the standard continuity and Hamilton-Jacobi equations on \(\Omega \). For these reasons, we call the two equations in (10) the continuity equation and the Hamilton-Jacobi equation on parameter space.

3.2 Geometry calculations in statistical manifold

We next present the geometric formulas in a probability model. This approach connects the geometry formulas in the full probability set to the ones in a submanifold \((p(\Theta ), g)\), and in the parameter space \((\Theta , g)\).

We first study the orthogonal projection operator from \((\mathcal {P}_+(I), g^W)\) to \((p(\Theta ), g)\).

Theorem 7

Given \(\theta \in \Theta \), for any tangent vector \(\sigma \in T_{p(\theta )}\mathcal {P}_+(I)\), there exists a unique orthogonal decomposition

with \(\sigma ^{\parallel }\in T_{ p(\theta )}p(\Theta )\) and \(\sigma ^{\perp }\in N_{ p(\theta )} p(\Theta )\), i.e., \(g^W_{p(\theta )}(\sigma ^{\parallel }, \sigma ^{\perp })=0\). At each point \(p(\theta )\), the projection matrix

gives the decomposition by

where \(\mathbb {I}\) is an identity matrix in \(\mathbb {R}^{n\times n}\).

Proof

We first prove that (11) is a decomposition. It is to check that \(g^W_{p(\theta )}(\sigma ^{\parallel }, \sigma ^{\perp })=0\), i.e.

Recall \(G(\theta )=J_\theta p(\theta )^{\mathsf {T}}L( p(\theta ))^{\mathcal {\dagger }}J_\theta p(\theta )\). We check that

which shows the claim. We next prove the uniqueness of decomposition (11). Suppose there are two decomposition \(\sigma =\sigma ^{||}+\sigma ^{\perp }\), \(\tilde{\sigma }=\tilde{\sigma }^{||}+\tilde{\sigma }^{\perp }\), where \({\sigma }^{||}=J_\theta p(\theta )\dot{\theta }\) and \(\tilde{\sigma }^{||}=J_\theta p(\theta )\dot{\tilde{\theta }}\). From the definition, then

Since \(G(\theta )\) is positive definite, we have \(\dot{\theta }=\dot{\tilde{\theta }}\) and \(\sigma ^{||}={\tilde{\sigma }}^{||}\), which finishes the proof. \(\square \)

We next present the second fundamental form for submanifold \((p(\Theta ), g)\). Given any \(\sigma \), \(\tilde{\sigma }\in T_{ p(\theta )}p(\Theta )\), consider the orthogonal decomposition of Levi–Civita connection in \((\mathcal {P}_+(I), g^W)\):

The second fundamental form is the orthogonal part of this decomposition, i.e., \(B_{p(\theta )}(\sigma , \tilde{\sigma }):=(\nabla ^W_{\sigma }\tilde{\sigma })^{\perp }\).

Proposition 8

(Second fundamental form) Let \(\nabla _G\cdot \circ \nabla _G\cdot :\mathbb {R}^n\times \mathbb {R}^n\rightarrow \mathbb {R}^n\) so that, for any \(\Phi \), \(\tilde{\Phi }\in \mathbb {R}^n\),

Then

Proof

As shown in [22, Proposition 11], the Christoffel formula in \((\mathcal {P}_+(I), g^W)\) satisfies

Following the projection operator \(H(p(\theta ))\), we finish the proof. \(\square \)

We next establish the parallel transport and geodesic equation in \((p(\Theta ), g)\).

Proposition 9

(Parallel transport) Let \(p(\theta _t)\in p(\Theta )\), \(t\in (0,1)\) be a smooth curve. Consider a vector field \(\sigma _t\in T_{p(\theta _t)} p(\Theta )\) along curve \( p(\theta _t)\). Then the equation for \(\sigma _t\) to be parallel along \( p(\theta _t)\) satisfies

If \(\sigma _t=\dot{p}(\theta _t)\), then the geodesic equation satisfies

Proof

The parallel equation in a submanifold is given by

In other words, we have

where \(\nabla ^W\) is defined in (12). Let \(\sigma _t=\dot{p}(\theta _t)\), then

This means that

Following the projection operator and (12), we finish the proof. \(\square \)

We last present the curvature tensor in \((p(\Theta ), g)\), denoted by \(R(\cdot , \cdot )\cdot :T_{p(\Theta )} p(\Theta )\times T_{p(\theta )} p(\Theta )\times T_{p(\theta )} p(\Theta )\rightarrow T_{p(\theta )} p(\Theta )\).

Proposition 10

(Curvature tensor) Given \(\sigma _1\), \(\sigma _2\), \(\sigma _3\), \(\sigma _4\in T_{ p(\theta )} p(\Theta )\), then

where m, \(n:T_{ p(\theta )} p(\Theta )\times T_{ p(\theta )} p(\Theta )\rightarrow T_{ p(\theta )} p(\Theta )\) are symmetric, antisymmetric operators respectively, which are defined by

and

Proof

The curvature tensor in submanifold relates to the one in full manifold as follows:

where \(R_W\) is the curvature tensor of \((\mathcal {P}_+(I), g^W)\) derived in [22, Proposition 6]. Combining \(R_W\) and the second fundamental form in Proposition 8, we derive the result. \(\square \)

4 Gradient flow on Wasserstein statistical manifold

In this section we introduce the natural Riemannian gradient flow on Wasserstein statistical manifold \((\Theta , g)\).

4.1 Gradient flow on parameter space

Consider a smooth loss function \(F:\mathcal {P}_+(I)\rightarrow \mathbb {R}\). Thus we focus on the composition \(F\circ p:\Theta \rightarrow \mathbb {R}\). The Riemannian gradient of \(F( p(\theta ))\) is defined as follows. Given \(\nabla _g F( p(\theta ))\in T_\theta \Theta \), we have

where \(\nabla _\theta F( p(\theta ))\cdot a= \sum _{i=1}^d\frac{\partial }{\partial \theta _i}F( p(\theta ))a_i\). The gradient flow satisfies

The next theorem establishes an explicit formulation of the gradient flow.

Theorem 11

(Wasserstein gradient flow) The gradient flow of a functional \(F: \mathcal {P}_+(I)\rightarrow \mathbb {R}\) is given by

where \(\nabla _{\theta }\) is the Euclidean gradient of \(F( p(\theta ))\) with respect to \(\theta \). More explicitly,

where \(\nabla _p\) is the Euclidean gradient of F(p) with respect to p.

Proof

The proof follows directly from (13). Notice that

and \(J_\theta p(\theta )^{\mathsf {T}}L( p(\theta ))^{\mathcal {\dagger }}J_\theta p(\theta )\) is an invertible matrix. Hence

We compute \(\nabla _\theta F( p(\theta ))\) as

This concludes the proof of (14). \(\square \)

Equation (14) is the generalization of Wasserstein gradient flow in probability simplex to the one on parameter space. If p is an identity map with the parameter space \(\Theta \) equal to the entire probability simplex, then (14) is

which is the Wasserstein gradient flow on the discrete probability simplex. In particular, if \(I=\Omega \), then it represents

which is the Wasserstein gradient flow on \(\Omega \). From now on, we call (14) the Wasserstein gradient flow on parameter space.

The definition of Wasserstein gradient flow shares many similarities with the steepest gradient descent defined as follows. Consider

where \(\epsilon \in \mathbb {R}_+\) is a given small constant. By taking the second-order Taylor approximation of the Wasserstein distance at \(\theta \), we get

where \(G(\theta )\) is the metric tensor of \((\Theta , g)\) defined in (8), inherited from Wasserstein manifold. We take the first-order approximation of \(F( p(\theta + h))\) in (15) by

By the Lagrangian method with Lagrange multiplier \(\lambda \), we have

The above derivations lead to the Wasserstein natural gradient direction

Remark 2

In the standard Fisher–Rao natural gradient [2], we replace (15) by

where \({\text {KL}}\) stands for the Kullback-Leibler divergence (relative entropy) from \( p(\theta )\) to \( p(\theta + h)\). Our definition changes the KL-divergence by the Wasserstein distance.

4.2 Displacement convexity on parameter space

The Wasserstein structure on the statistical manifold not only provides us the gradient operator, but also the Hessian operator on \((\Theta , g)\). The latter allows us to introduce the displacement convexity on parameter space.

We first review some facts. One remarkable property of Wasserstein geometry is that it yields a correspondence between differential operators on sample space and differential operators on probability space. E.g., the Hessian operator on Wasserstein manifold is equal to the expectation of Hessian operator on sample space.

An important example is stochastic relaxation. Given \(f(x)\in C^{\infty }(\Omega )\), consider

It is known that the Hessian operator of \( F(\rho )\) on Wasserstein manifold satisfies

One can show that \({\text {Hess}}f\succeq \lambda \mathbb {I}\) if and only if \({\text {Hess}}_W F(\rho )(V_\Phi , V_\Phi )\succeq \lambda g^W_\rho (V_\Phi , V_\Phi )\). This means that f is \(\lambda \)-geodesic convex in \((\Omega , g^\Omega )\) if and only if \( F(\rho )\) is \(\lambda \)-geodesic convex in \((\mathcal {P}(\Omega ), g^W)\). In literature [38], the geodesic convexity on Wasserstein manifold is known as the displacement convexity.

In this section we would like to extend the displacement convexity to parameter space \(\Theta \). In other words, we relate the parameter to the differential structures of sample space via constrained Wasserstein geometry \((\Theta , g)\). If \(\Theta \) is the full probability manifold, our definition coincides with the classical Hessian operator in sample space.

Definition 12

(Displacement convexity on parameter space) Given \( F\circ p:\Theta \rightarrow \mathbb {R}\), we say that \(F(p(\theta ))\) is \(\lambda \)-displacement convex if for any constant speed geodesic \(\theta _t\), \(t\in [0,1]\) connecting \(\theta _0,\theta _1\in (\Theta , g)\), it holds that

where \({\text {Dist}}\) is defined in (9). If \(F(p(\theta ))=\sum _{i=1}^n f_ip_i(\theta )\) is \(\lambda \)-displacement convex, then we call \(f\in \mathbb {R}^n\) \(\lambda \)-convex in \((\Theta , I, p)\).

Remark 3

In particular, the displacement convexity of KL divergence relates to the Ricci curvature lower bound on sample space. We elaborate this notion in [23].

We next derive the displacement convexity condition for stochastic relaxation.

Theorem 13

Assume \(\Theta \subset \mathbb {R}^d\) is a compact set and \(f=(f_i)_{i=1}^n\in \mathbb {R}^n\). Then f is \(\lambda \)-convex if and only if

for any \(\Phi \in \mathcal {F}(I)/\mathbb {R}\) and \(\theta \in \Theta \). Here \(\Gamma :\mathbb {R}^n\times \mathbb {R}^n \rightarrow \mathbb {R}^n\) is given by

and B is the second fundamental form given in Proposition 8.

Proof

If \(\Theta \) is a compact set, then the \(\lambda \)-displacement convexity of \(F(p(\theta ))\) is equivalent to

where \({\text {Hess}}_g F\) is the Hessian operator in \((\Theta , g)\). We next calculate this Hessian operator explicitly. Notice that

where \(\text {Hess}_W\) is the Hessian operator in \((\mathcal {P}_+(I), g^W)\). Denote the above in dual coordinates, i.e. \(\sigma =\tilde{\sigma }=V_\Phi =V_{\tilde{\Phi }}=L(p(\theta ))\Phi \), and follow the geometric computations in [22, Proposition 18], we finish the proof. \(\square \)

Here \(\Gamma \) is the discrete Bakry-Emery Gamma one operator [7]. The geometry of Wasserstein manifold is directly related to the expectation of Bakry-Emery Gamma one operators [22]. In particular, if p is the identity mapping and \(I=\Omega \), then our definition (16) represents

i.e.

for any \(\rho \), and vector fields \(\nabla \Phi \). The above inequality is same as requiring \(\text {Hess}f\succeq \lambda I\). Our definition extends this concept to parameter space.

4.3 Numerical methods

Here we discuss the numerical computation of the Wasserstein metric and the Wasserstein gradient flow.

Let us give a simple reformulation of the gradient that can be useful in practice, where typically \(n\gg d\). Note that

Hence (7) can be written as

In this formulation, the computation of the pseudo inverse of \(L( p(\theta ))\in \mathbb {R}^{n\times n}\) is not needed, and the computation complexity reduces to that of obtaining the pseudo inverse of \(J_\theta p(\theta )\in \mathbb {R}^{n\times d}\).

Given the gradient flow (7), there are two standard choices of time discretization, namely the forward Euler scheme and the backward Euler scheme. Denote the step size by \(\lambda >0\). The forward Euler method computes a discretized trajectory by

while the backward Euler method computes

where \(\text {Dist}\) is the geodesic distance in parameter space \((\Theta , g)\).

In the information geometry literature, the forward Euler method is often referred to as natural gradient method. In Wasserstein geometry, the backward Euler method is often called the Jordan–Kinderlehrer–Otto (JKO) scheme. In the following we give pseudo code for both numerical methods.

In practice, the forward Euler method is usually easier to implement than the backward Euler method. We would also suggest to implement the natural Wasserstein gradient using this method for minimization problems. As known in optimization, the JKO scheme can also be useful for non-smooth objective functions. Moreover, the backward Euler method is usually unconditionally stable, which means that one can choose a large step size h for computations.

5 Examples

Example 1

( Wasserstein geodesics) Consider the sample space \(I=\{1,2,3\}\) with an unweighted graph \(1-2-3\). The probability simplex for this sample space is a triangle in \(\mathbb {R}^3\):

Following Definition 1, the \(L^2\)-Wasserstein distance is given by

where the infimum is taken over paths \(\Phi :[0,1]\rightarrow \mathbb {R}^3\). Each \(\Phi \) defines \(p:[0,1]\rightarrow \mathbb {R}^3\) as the solution of the differential equation

with boundary condition \(p(0)=p^0\), \(p(1)=p^1\).

Consider local coordinates in (17). We parametrize a probability vector as \(p=(p_1, 1-p_1-p_3, p_3)\), with parameters \((p_1, p_3)\). Then (17) can be written as

where the infimum is taken over paths \(p:[0,1]\rightarrow \mathcal {P}_+(I)\). We also compare the Wasserstein metric (18) with the Fisher–Rao metric. In this case, the Fisher–Rao metric function is given by

This clearly demonstrates the difference between Wasserstein Riemannian metric and Fisher–Rao metric. We would also compare the dynamical optimal transport with the statistical one. In particular, if the ground metric is given by \(c_{12}=1\), \(c_{13}=2\), \(c_{23}=1\), which is of homogenous degree one type. Then the statistical optimal transport defined by

can be reformulated by

Here the statistical formulation does not provide a Riemannian metric, but gives a Finslerian metric.

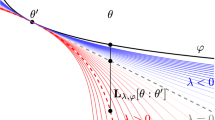

We next compute (18) numericallyFootnote 2 for different choices of the boundary conditions \(p^0\), \(p^1\). We fix three distributions

and solve (18) for three choices of the boundary conditions:

This gives us a geodesic triangle between \(q^1, q^2, q^3\), which is illustrated in Fig. 1. It can be seen that \((\mathcal {P}_+(I), W)\) has a non Euclidean geometry. Moreover, we see that the geodesics depend on the graph structure on sample space, where state 2 is qualitatively different from states 1 and 3.

The Wasserstein geodesic triangle from Example 1 plotted in the probability simplex (left) and in exponential parameter space (right). The path connecting \(q^1\) and \(q^3\) bends towards \(q^2\); something that does not happen for the other two paths. This illustrates how, as a result of the ground metric on sample space, state 2 is treated differently from 1 and 3

We can make the same derivations in terms of an exponential parametrization. Consider the parameter space \(\Theta =\{\theta =(\theta _1,\theta _2)\in \mathbb {R}^2\}\) and the parametrization \(p:\Theta \rightarrow \mathcal {P}_+(I)\) with

We rewrite the Wasserstein metric (18) in terms of \(\theta \). Denote \( p(\theta ^k)=p^k\), \(k=0, 1\). Then the Wasserstein metric in the coordinate system \(\theta \) is

The resulting geodesic triangle in \(\Theta \) is plotted in the right panel of Fig. 1.

For comparison, we compute the exponential geodesic triangle between the same distributions \(q^1,q^2,q^3\). This is shown in Fig. 2. In this case, there is no distinction between the states 1, 2, 3 and the three paths are symmetric. The exponential geodesic between two distributions \(p^0\) and \(p^1\) is given by \((p^0)^{1-t}(p^1)^{t}/ \sum _x(p^0)^{1-t}(p^1)^{t}\), \(t\in [0,1]\).

Example 2

(Wasserstein gradient flow on an independence model) We next illustrate the Wasserstein gradient flow over the independence model of two binary variables. The sample space is \(I = \{-1,+1\}^2\). For simplicity, we denote the states by \(a=(-1,-1)\), \(b=(-1, +1)\), \(c=(+1,-1)\), \(d=(+1,+1)\). We consider the square graph

with vertices I, edges \(E=\{\{a, b\},\{b,d\}, \{a,c\},\{c,d\} \}\), and weights \(\omega =( \omega _{ab}, \omega _{bd}, \omega _{ac}, \omega _{cd})\in \mathbb {R}^E\) attached to the edges. The edge weights correspond to the inverse squared ground metric that we assign to the sample space I. The probability simplex for this sample space is the tetrahedron

Following Definition 4, the Wasserstein metric tensor is given by \(g_p^W=L(p)^{\mathcal {\dagger }}\), which is the inverse of the linear weighted Laplacian metric L from Definition 3. In this example the latter is

The independence model consist of the joint distributions that satisfy \(p(x_1, x_2)=p(x_1)p(x_2)\). This can be parametrized in terms of \(\Theta =\{\xi =(\xi _1,\xi _2)\in [0,1]^2\}\), where \(\xi _1=p_1(x_1=+1)\), \(\xi _2=p_2(x_2=+1)\) describe the marginal probability distributions. The parametrization \(p:\Theta \rightarrow \mathcal {P}(I)\) is then

The model \( p(\Theta ) \subset \mathcal {P}(I)\) is a two dimensional manifold. The parameter space \(\Theta \) inherits the Riemannian structure \(g^W\) from \(\mathcal {P}(I)\), which is computed as follows. Denote the Jacobi matrix of the parametrization by

Then \(g^W\) induces a metric tensor on \(\Theta \) given by

We now consider a discrete optimization problem via stochastic relaxation and illustrate the gradient flow. Consider following potential function on I, taken from [28]:

We are to minimize \(F(\mathbf{p})=\mathbb {E}_\mathbf{p}[ f]\), i.e.,

By Theorem 11, the Wasserstein gradient flow is

For our function, the standard Euclidean gradient is \(\nabla _\xi F( p(\xi ))=(-4+12\xi _2, -2+12\xi _1)^{\mathsf {T}}\). The matrix G is computed numerically from J and L.

Negative Wasserstein gradient on the parameter space \([0,1]^2\) of the two-bit independence model from Example 2. We fix the state graph shown on the top left, and a function f with values shown in gray next to the state nodes. We evaluate the gradient flow for three different choices of the graph weight \(\omega _{bd}\). When the weight \(\omega _{bd}\) is small, the flow from d towards b (a local minimum) is suppressed. A large weight has the opposite effect. The contours are for the objective function \(F( p(\xi )) = \mathbb {E}_{ p(\xi )}[f]\)

In Fig. 3 we plot the negative Wasserstein gradient vector field in the parameter space \(\Theta =[0,1]^2\). As can be seen, the Wasserstein gradient direction depends on the ground metric on sample space (encoded in the edge weights). If b and d are far away, there is higher tendency to go c, rather than b. This reflects the intuition that, the more ground distance between b and d, the harder for the probability distribution to move from its concentration place b to d. We observe that the the attraction region of the two local minimizers changes dramatically as the ground metric between b and d changes, i.e., as \(\omega _{bd}\) varies from 0.1, 1, 10. This is different in the Fisher–Rao gradient flow, plotted in Fig. 4, which is independent of the ground metric on sample space.

The above result illustrates the displacement convexity shown in Theorem 16. Different ground metric exhibits different displacement convexity of f on parameter space \((\Theta , g)\). These properties lead to different convergence regions of Wasserstein gradient flows.

Fisher–Rao gradient vector field for the same objective function of Fig. 3

Example 3

(Wasserstein gradient for maximum likelihood estimation) In maximum likelihood estimation, we seek to minimize the Kullback-Leibler divergence

where q is the empirical distribution of some given data. The Wasserstein gradient flow of \({\text {KL}}(q \Vert p(\theta ))\) satisfies

In this example we consider hierarchical log-linear models as our parametrized probability models, which are an important type of exponential families describing interactions among groups of random variables. Concretely, for an inclusion closed set S of subsets of \(\{1,\ldots , n\}\), the hierarchical model \(\mathcal {E}_S\) for n binary variables is the set of distributions of the form

for all possible choices of parameters \(\theta _\lambda \in \mathbb {R}\), \(\lambda \in S\). Here the \(\phi _\lambda \) are real valued functions with \(\phi _\lambda (x)=\phi _\lambda (y)\) whenever \(x_i=y_i\) for all \(i\in \lambda \). We consider two different choices of \(\phi _\lambda \), \(\lambda \in S\), corresponding to two different parametrizations of the model.

-

Our first choice are the orthogonal characters

$$\begin{aligned} \sigma _\lambda (x) = \prod _{i\in \lambda } (-1)^{x_i} = e^{ i \pi \langle 1_\lambda , x\rangle }, \quad x\in \{0,1\}^n, \end{aligned}$$which can be interpreted as a Fourier basis for the space of real valued functions over binary vectors.

-

As an alternative choice we consider the basis of monomials

$$\begin{aligned} \pi _\lambda (x) = \prod _{i\in \lambda } x_i, \quad x\in \{0,1\}^n, \end{aligned}$$which is not orthogonal, but is frequently used in practice.

When \(S=\{ \lambda \subseteq \{1,\ldots , n\}:|\lambda |\le k\}\), the model is called k-interaction model. We consider k-interaction models with \(k=1,\ldots , n\) (independence model, pair interaction model, three way interaction model, etc.), with the two parametrizations, \(\sigma \) (orthogonal sufficient statistics) and \(\pi \) (non-orthogonal sufficient statistics).

We compare the Euclidean, Fisher, and Wasserstein gradients. For binary variables, the Hamming distance is a natural ground metric notion. Accordingly, we define the Wasserstein metric with the uniformly weighted graph of the binary cube. We sampled a few target distributions on \(\{0,1\}^n\) uniformly at random (uniform Dirichlet). For each target distribution, we initialize the model at the uniform distribution, \(\theta _0=0\). The gradient descent parameter iteration is

where G is the corresponding metric (Euclidean, Fisher, or Wasserstein), \(\nabla \) is the standard gradient operator with respect to the model parameter \(\theta \), and \(\gamma _t\in \mathbb {R}_+\) is the learning rate (step size). The choice of the learning rate \(\gamma _t\) is important and the optimal value may vary for different methods and problems. We implemented an adaptive method to handle this as follows. We set an initial learning rate \(\gamma _0=0.001\), and at each iteration t, if the divergence does not decrease, we scale down the learning rate by a factor of 3 / 4. We also tried a few other methods, including backtracking line search and Adam [19], which is a method based on adaptive estimates of lower-order moments of the gradient. The stopping criterion was that the infinity norm of the expectation parameter matched the data expectation parameter to within 1 percent.

The results are shown in Fig. 5. The convergence to the final value can be monitored in terms of the normalized area under the optimization curve, \(\sum _{t=1}^T (D_t-D_T)/(D_0-D_T)\), where \(D_t\) is the divergence value at iteration t, and T is the final time. All methods achieved similar values of the divergence, except for the Euclidean gradient with non-orthogonal parametrization, which did not always reach the minimum. For the Fisher and Wasserstein gradients, the learning paths were virtually identical under the two different model parametrizations, as we already expected from the fact that these are covariant gradients. On the other hand, for the Euclidean gradient, the paths (and the number of iterations) were heavily dependent on the model parametrization, with the orthogonal basis usually being a much better choice than the non-orthogonal basis. In terms of the number of iterations until the convergence criterion was satisfied, the comparison is difficult because different methods work best with different step sizes. With the simple adaptive method and a suitable initial step size, the Wasserstein gradient was faster than the Euclidean and Fisher gradients. On the other hand, using Adam to adapt the step size, orthogonal Euclidean, Fisher, and Wasserstein were comparable.

Divergence minimization for random target distributions on \(\{0,1\}^n\), \(n=7\), over k-interaction models with \(k=1,\ldots ,n\). Shown is the average value of the divergence after optimization by Euclidean, Fisher, and Wasserstein gradient descent, and the corresponding number of gradient iterations. Orthogonal and non-orthogonal parametrization are indicated by \(\sigma \) and \(\pi \). The right hand side shows histograms of the normalized area under the optimization curves. The top figures are using a simple adaptive method for selecting the step size, and the bottom figures are using Adam

6 Discussion

We introduced the Wasserstein statistical manifolds, which are submanifolds of the probability simplex with the \(L^2\)-Wasserstein Riemannian metric tensor. With this, we defined an optimal transport natural gradient flow on parameter space.

The Wasserstein distance has already been discussed with divergences in information geometry and also shown to be useful in machine learning, for instance in training restricted Boltzmann machines and generative adversarial networks. In this work, we used the Wasserstein distance to define a geometry on the parameter space of a statistical model. Following this geometry, we establish a corresponding natural gradient and displacement convexity on parameter space.

We presented an application of the Wasserstein natural gradient method to maximum likelihood estimation in hierarchical probability models. The experiments show that, in combination with a suitable step size, the Wasserstein gradient can be a competitive optimization method and even reduce the required number of parameter iterations compared both to Euclidean and Fisher gradient methods. It will be essential to conduct further experimental studies to better understand the effects of the learning rate, as well as the interplay of ground metric, model, and optimization problem. In our current implementation, the Wasserstein gradient involved heavier computational costs compared to the Euclidean and Fisher gradients. For applications, it will be important to explore efficient computation and approximation approaches.

Regarding the theory, we suggest that many studies from information geometry will have a natural analog or extension in the Wasserstein statistical manifold. Some questions to consider include the following. Is it possible to characterize the Wasserstein metric on probability manifolds through an invariance requirement of Chentsov type? For instance, the work [32] formulates extensions of Markov embeddings for polytopes and weighted point configurations. Is there a weighted graph structure for which the corresponding Wasserstein metric recovers the Fisher metric?

The critical innovation coming from the Wasserstein gradient in comparison to the Fisher gradient is that it incorporates a ground metric in sample space. We suggest that this could have a positive effect not only concerning optimization, as discussed above, but also regarding generalization performance, in interplay with the optimization. The reason is that the ground metric on sample space provides means to introduce preferences in the hypothesis space. The specific form of such a regularization still needs to be developed and investigated. In this regard, a natural question is how to define natural ground metric notions. These could be fixed in advance or trained.

We hope that this paper contributes to strengthening the emerging interactions between information geometry and optimal transport, in particular, to machine learning problems, and to develop better natural gradient methods.

Notes

A length space is one in which the distance between points can be measured as the infimum length of continuous curves between them.

We use the direct method, which is a standard technique in optimal control. Here the time is discretized, and the sum replacing the integral is minimized by means of gradient descent with respect to \((p(t)_i)_{i=1,3, t\in \{t_1,\ldots , t_N\}} \in \mathbb {R}^{2\times N}\). A reference for these techniques is [24].

References

Amari, S.: Neural learning in structured parameter spaces-natural Riemannian gradient. In: Mozer, M.C., Jordan, M.I., Petsche, T. (eds.) Advances in Neural Information Processing Systems 9, pp. 127–133. MIT, London (1997)

Amari, S.: Natural gradient works efficiently in learning. Neural Comput. 10(2), 251–276 (1998)

Amari, S.: Information Geometry and Its Applications. Number volume 194 in Applied mathematical sciences. Springer, Tokyo (2016)

Amari, S., Karakida, R., Oizumi, M.: Information geometry connecting Wasserstein distance and Kullback-Leibler divergence via the Entropy-Relaxed Transportation Problem (2017). arXiv:1709.10219 [cs, math]

Arjovsky, M., Chintala, S., Bottou, L.: Wasserstein GAN (2017). arXiv:1701.07875 [cs, stat]

Ay, N., Jost, J., Lê, H., Schwachhöfer, L.: Information Geometry Ergebnisse der Mathematik und ihrer Grenzgebiete. 3. Folge / A Series of Modern Surveys in Mathematics. Springer, Berlin (2017)

Bakry, D., Émery, M.: Diffusions hypercontractives. In: Azéma, J., Yor, M. (eds.) Séminaire de Probabilités XIX 1983/84, pp. 177–206. Springer, Berlin (1985)

Benamou, J.-D., Brenier, Y.: A computational fluid mechanics solution to the Monge-Kantorovich mass transfer problem. Numerische Mathematik 84(3), 375–393 (2000)

Campbell, L.: An extended Čencov characterization of the information metric. Proc. Am. Math. Soc. 98, 135–141 (1986)

Carlen, E.A., Gangbo, W.: Constrained Steepest Descent in the 2-Wasserstein Metric. Ann. Math. 157(3), 807–846 (2003)

Čencov, N.N.: Statistical Decision Rules and Optimal Inference. Translations of Mathematical Monographs, vol. 53. American Mathematical Society, Providence (1982). (Translation from the Russian edited by Lev J. Leifman)

Chow, S.-N., Huang, W., Li, Y., Zhou, H.: Fokker–Planck equations for a free energy functional or markov process on a graph. Arch. Ration. Mech. Anal. 203(3), 969–1008 (2012)

Chow, S.-N., Li, W., Zhou, H.: A discrete Schrodinger equation via optimal transport on graphs (2017). arXiv:1705.07583 [math]

Chow, S.-N., Li, W., Zhou, H.: Entropy dissipation of Fokker–Planck equations on graphs. Discrete Contin. Dyn. Syst. A 38(10), 4929–4950 (2018)

Chung, F. R. K.: Spectral Graph Theory. Number no. 92 in Regional conference series in mathematics. In: Published for the Conference Board of the mathematical sciences by the American Mathematical Society, Providence, R.I. (1997)

Frogner, C., Zhang, C., Mobahi, H., Araya-Polo, M., Poggio, T.: Learning with a Wasserstein loss (2015). arXiv:1506.05439 [cs, stat]

Gangbo, W., Li, W., Mou, C.: Geodesic of minimal length in the set of probability measures on graphs. accepted in ESAIM: COCV (2018)

Karakida, R., Amari, S.: Information geometry of wasserstein divergence. In: Nielsen, F., Barbaresco, F. (eds.) Geometric Science of Information, pp. 119–126. Springer, Cham (2017)

Kingma, D. P., Adam, J. Ba.: A method for stochastic optimization (2014). CoRR, arXiv:1412.6980

Lafferty, J.D.: The density manifold and configuration space quantization. Trans. Am. Math. Soc. 305(2), 699–741 (1988)

Lebanon, G.: Axiomatic geometry of conditional models. IEEE Trans. Inf. Theory 51(4), 1283–1294 (2005)

Li, W.: Geometry of probability simplex via optimal transport (2018). arXiv:1803.06360 [math]

Li, W., Montufar, G.: Ricci curvature for parameter statistics via optimal transport (2018). arXiv:1807.07095

Li, W., Yin, P., Osher, S.: Computations of optimal transport distance with fisher information regularization. J. Sci. Comput. 75, 1581–1595 (2017)

Lott, J.: Some geometric calculations on Wasserstein space. Commun. Math. Phys. 277(2), 423–437 (2007)

Maas, J.: Gradient flows of the entropy for finite Markov chains. J. Funct. Anal. 261(8), 2250–2292 (2011)

Malagò, L., Matteucci, M., Pistone, G.: Towards the geometry of estimation of distribution algorithms based on the exponential family. In: Proceedings of the 11th Workshop Proceedings on Foundations of Genetic Algorithms, FOGA ’11, New York, NY, USA, 2011. ACM, pp. 230–242

Malagò, L., Pistone, G.: Natural gradient flow in the mixture geometry of a discrete exponential family. Entropy 17(12), 4215–4254 (2015)

Mielke, A.: A gradient structure for reaction–diffusion systems and for energy-drift-diffusion systems. Nonlinearity 24(4), 1329–1346 (2011)

Modin, K.: Geometry of matrix decompositions seen through optimal transport and information geometry. J. Geometr. Mech. 9(3), 335–390 (2017)

Montavon, G., Müller, K.-R., Cuturi, M.: Wasserstein training of restricted boltzmann machines. In: Lee, D.D., Sugiyama, M., Luxburg, U.V., Guyon, I., Garnett, R. (eds.) Advances in Neural Information Processing Systems 29, pp. 3718–3726. Curran Associates Inc, Red Hook (2016)

Montúfar, G., Rauh, J., Ay, N.: On the Fisher metric of conditional probability polytopes. Entropy 16(6), 3207–3233 (2014)

Nelson, E.: Quantum Fluctuations. Princeton series in physics. Princeton University Press, Princeton (1985)

Otto, F.: The geometry of dissipative evolution equations: the porous medium equation. Commun. Partial Diff. Equ. 26(1–2), 101–174 (2001)

Pascanu, R., Bengio, Y.: Revisiting natural gradient for deep networks. In: International Conference on Learning Representations 2014 (Conference Track) (2014)

Peters, J., Vijayakumar, S., Schaal, S.: Natural actor-critic. In: Gama, J., Camacho, R., Brazdil, P.B., Jorge, A.M., Torgo, L. (eds.) Machine Learning: ECML 2005, pp. 280–291. Springer, Berlin (2005)

Takatsu, A.: Wasserstein geometry of Gaussian measures. Osaka J. Math. 48(4), 1005–1026 (2011)

Villani, C.: Optimal Transport: Old and New. Number 338 in Grundlehren der mathematischen Wissenschaften. Springer, Berlin (2009)

Wong, T.-K.: Logarithmic divergences from optimal transport and Rényi geometry (2017). arXiv:1712.03610 [cs, math, stat]

Yi, S., Wierstra, D., Schaul, T., Schmidhuber, J.: Stochastic search using the natural gradient. In: Proceedings of the 26th Annual International Conference on Machine Learning, ICML ’09, New York, NY, USA. ACM, pp. 1161–1168 (2009)

Acknowledgements

The authors would like to thank Prof. Luigi Malagò for his inspiring talk at UCLA in December 2017. This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (Grant agreement no 757983).

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix

In this appendix we review the equivalence of static and dynamical formulations of the \(L^2\)-Wasserstein metric formally. For more details see [38].

Consider the duality of linear programming.

By standard considerations, the supremum in the last formula is attained when

This means that \(\Phi ^1\), \(\Phi ^0\) are related to the viscosity solution of the Hamilton-Jacobi equation on \(\Omega \):

with \(\Phi ^0(x)=\Phi (0,x)\), \(\Phi ^1(x)=\Phi (1,x)\). Hence (21) becomes

By the duality of above formulas, we can obtain variational problem (1). In other words, consider the dual variable of \(\Phi _t=\Phi (t,x)\) by the density path \(\rho _t=\rho (t,x)\), then

The third equality is derived by integration by parts w.r.t. t and the fourth equality is by switching infimum and supremum relations and integration by parts w.r.t. x.

In the above derivations, the relation of Hopf–Lax formula (22) and Hamilton–Jacobi equation (23) plays a key role for the equivalence of static and dynamic formulations of the Wasserstein metric. This is also a consequence of the fact that the sample space \(\Omega \) is a length space, i.e.,

However, in a discrete sample space I, there is no path \(\gamma (t)\in I\) connecting two discrete points. Thus the relation between (22) and (23) does not hold on I. This indicates that in discrete sample spaces, the Wasserstein metric in Definition 1 can be different from the one defined by linear programming (5). See many related discussions in [12, 26].

Notations

We use the following notations.

Continuous/discrete sample space | \(\Omega \) | I |

|---|---|---|

Inner product | \(g^\Omega \) | \(g^I\) |

Gradient | \(\nabla \) | \(\nabla _G\) |

divergence | \(\text {div}\) | \(\text {div}_G\) |

Hessian in \(\Omega \) | Hess | |

Potential function set | \(\mathcal {F}(\Omega )\) | \(\mathcal {F}(I)\) |

Weighted Laplacian operator | \(-\nabla \cdot (\rho \nabla )\) | L(p) |

Continuous/discrete probability space | \(\mathcal {P}_+(\Omega )\) | \(\mathcal {P}_+(I)\) |

|---|---|---|

Probability distribution | \(\rho \) | p |

Tangent space | \(T_\rho \mathcal {P}_+(\Omega )\) | \(T_p\mathcal {P}_+(I)\) |

Wasserstein metric tensor | \(g^W\) | \(g^W\) |

Dual coordinates | \(\Phi (x)\) | \((\Phi _i)_{i=1}^n\) |

Primal coordinates | \(\sigma (x)\) | \((\sigma _i)_{i=1}^n\) |

First differential operator | \(\delta _\rho \) | \(\nabla _p\) |

Second differential operator | \(\delta ^2_{\rho \rho }\) | |

Gradient operator | \(\nabla _W\) | |

Hessian operator | \(\text {Hess}_W\) | |

Levi–Civita connection | \(\nabla ^W_{\cdot }\cdot \) |

Parameter space/Probability model | \(\Theta \) | \(p(\Theta )\) |

|---|---|---|

Inner product | \(g_\theta \) | \(g_{p(\theta )}\) |

Tangent space | \(T_\theta \Theta \) | \(T_{p(\theta )}p(\Theta )\) |

\(L^2\)-Wasserstein matrix | \(G(\theta )\) | |

\(L^2\)-Wasserstein distance | \(\text {Dist}\) | \(\text {Dist}\) |

Second fundamental form | \(B(\cdot , \cdot )\) | |

Projection operator | H | |

Levi–Civita connection | \((\nabla ^W_\cdot \cdot )^{||}\) | |

Jacobi operator | \(J_\theta \) | |

First differential operator | \(\nabla _\theta \) | |

Gradient operator | \(\nabla _g\) | |

Hessian operator | \(\text {Hess}_g\) |

Rights and permissions

About this article

Cite this article

Li, W., Montúfar, G. Natural gradient via optimal transport. Info. Geo. 1, 181–214 (2018). https://doi.org/10.1007/s41884-018-0015-3

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s41884-018-0015-3