Abstract

Estimating how well vehicles are tracked in traffic videos is a highly desirable analysis, especially under challenging weather conditions. In this paper, a fine-tuned Kalman filter based tracking system is proposed so that it works robustly on traffic videos. The tracking efficacy has been tested and tuned by integrating a system that calculates the distance between adjacent vehicles as a case study. The integrated system could serve as a traffic warning system in line with the allowable traffic safety standards. The analysis has been carried out in two phases. In phase (1), the performance indices of the Kalman filter (initial estimation error, motion noise, and measurement noise) are varied. We have measured both the average number of assigned tracks and the processing time in order to reach the best tuning decision. From the observations, changing the initial estimation error has no effect on tracking performance, whereas increasing either the motion noise or the measurement noise has an adverse impact on it. In phase (2), the integrated system is applied to a degraded version of a captured urban traffic video to measure tracking performance in the presence of salt and pepper, Gaussian, and speckle video degradations. Such video disturbances can stand in for some challenging weather conditions (e.g., rain, fog, and reduced light conditions). The average number of assigned tracks clearly degrades in the presence of video disturbance, depending on the percentage of occurrence and the statistical features (mean and variance) of the degradation. Twelve different types of filtering mask have been applied in order to measure the average number of assigned tracks (correct predictions) after the cleaning process. We have measured the deviation between the noise-free and the noisy traffic video to study the effect of each filter mask for each type of video disturbance. These deviation measurements provide a decision-making criterion for the tuning that best increases the efficacy of vehicular tracking.


1 Introduction

Intelligent transportation systems (ITS) refer to a variety of tools, software, hardware, and communication technologies that can be applied in an integrated fashion to improve the efficiency and safety of vehicular traffic [1, 2]. ITS provide support to enhance the operation of transportation services, transit management, and information to travelers [3, 4]. Research in ITS targets improvements in the safety of future transportation systems by integrating safety-enhancing functions within vehicles. Technologies such as radar/lidar, loop detectors, and traffic video analysis have been used to provide such safety features. We discuss these technologies in Sect. 2 below.

On-road automated vehicular detection and tracking has been considered one of the most valuable research topics over the past decades [5, 6]. It plays a vital role in the evolution of intelligent transportation systems (ITS). Many techniques have been developed for on-road vehicular detection. They can be classified into software-based computer vision techniques and hardware-based active sensor techniques using millimeter radar and lidar. Computer vision offers a strong approach to analyzing traffic videos. Vehicle interaction, automated traffic warning, traffic rule violation, and congestion are good examples of problems that can be targeted using surveillance cameras installed on the road. Foreground estimation, background estimation, and motion tracking are classical visual techniques for detecting and classifying vehicles on highways. Video analysis of urban areas is still more challenging because it depends on road parameters such as traffic density, the variety of road users, and the degree of occlusion [7]. Comparing the proposed algorithms with one another is also difficult, as there is no standardized benchmark dataset [8].

ITS provide the opportunity to establish functions in the infrastructure and/or the vehicle to mitigate safety deficiencies. For example, sensors on the main line highway could provide an advance warning about an oncoming vehicle to side street traffic at a stop-controlled intersection, to compensate for any sight distance deficiencies for the side street traffic. In-vehicle sensors could provide advance warning to inattentive or drowsy drivers before hitting another vehicle or object, or before running off the road. The possibilities to improve the safety of our transportation system are endless [9].

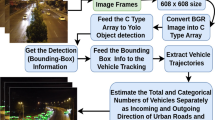

In this paper, video analysis is proposed in order to study vehicular tracking under different weather conditions (e.g., rain, fog, and reduced light conditions). Such analysis has rarely been performed in spite of being highly desirable. The proposed traffic video analysis sets up a Kalman filter tracker and evaluates it in the presence of simulated weather conditions. The paper uses three different types of video degradation noise (salt and pepper, speckle, and Gaussian) with different levels of occurrence to analyze traffic videos. In addition, a system of filters is applied to the degraded version of the test video, producing a new record for each filter mask. We calculate the distance between two vehicles as an example application of vehicular tracking. Calculating this distance supports an automated warning system that increases safety. This may reduce aggressive driving behavior and give drivers more time to react to road events. Figure 1 shows the main block diagram of the introduced study.

The rest of this article is organized as follows: Sect. 2 reviews ITS vehicular tracking technologies; Sect. 3 presents the distance estimation and tracking algorithm; Sect. 4 describes the code setup; Sect. 5 presents the experimental results; and Sect. 6 concludes the paper.

2 ITS vehicular tracking technologies

This section gives an overview of the following ITS technologies: radar/lidar and computer vision. One of the most widely used sensors for detecting vehicles is the active millimeter radar. Typically, a frequency-modulated continuous waveform signal is emitted. Once the received waveform has been demodulated, its frequency content is analyzed. The distance between the active sensor and the vehicle of interest is then calculated from the frequency shift between the transmitted and the received signal. Tracking of the detected objects is performed according to their motion characteristics [10].
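As a rough illustration of how a frequency shift maps to a distance, the sketch below assumes a linear (sawtooth) chirp and a stationary target, so that Doppler effects are ignored. The function name, the parameter names, and the numeric values are illustrative assumptions and do not come from the cited radar systems.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def fmcw_range(beat_frequency_hz, chirp_bandwidth_hz, chirp_duration_s):
    """Range (m) to a stationary target for a linear FMCW chirp.

    The beat frequency is the shift between transmitted and received
    signals; dividing it by the chirp slope gives the round-trip delay.
    """
    if chirp_bandwidth_hz <= 0 or chirp_duration_s <= 0:
        raise ValueError("bandwidth and duration must be positive")
    chirp_slope = chirp_bandwidth_hz / chirp_duration_s  # Hz per second
    round_trip_delay = beat_frequency_hz / chirp_slope   # seconds
    return SPEED_OF_LIGHT * round_trip_delay / 2.0


# Hypothetical chirp: 150 MHz swept in 1 ms, observed beat of 50 kHz.
print(round(fmcw_range(50e3, 150e6, 1e-3), 2))  # about 50 m
```

A moving target adds a Doppler term to the beat frequency, which practical radars separate out by other means; that step is outside this sketch.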

Vehicular detection and tracking based on active millimeter radar works fairly well in challenging weather conditions (rain, fog, and darkness). However, noisy measurements require an extensive cleaning process. Millimeter radar detects and tracks all moving objects, so a classification process is necessary to classify those objects as vehicles according to their relative acceleration, motion, and size. Furthermore, detection of stopped vehicles is performed fairly well [10, 11].

Like millimeter radar, lidar detects and tracks all moving objects, and a classification process is again necessary to classify those objects as vehicles according to their relative acceleration, motion, and size. However, lidar provides cleaner measurements and is more sensitive to precipitation than radar. Lidar uses rotating hexagonal mirrors which split the laser beam [11]. The upper three beams are used for detecting vehicles and obstacles, whereas the lower three beams are used for detecting road features and landmarks [12]. Lidar cost remains a challenging issue.

Vehicular detection and tracking based on computer vision uses a system of installed surveillance cameras. Camera-based acquisition systems provide a wide field of view, which allows vehicular detection and tracking across multiple lanes [13]. The imaging system contains a lens and a charge-coupled device (CCD). Computer vision requires sophisticated computations because of the large number of homogeneous mapped pixels in the digital video frames. Successive video frames provide researchers with a rich visual information source for manual inspection. In addition, installation and maintenance cause no traffic disruption. With computer vision, recognizing objects as vehicles is easier than with active lidar and radar, and there is no need for any classification process [14].

One of the most foundational and valuable computer vision based techniques is the Kalman filter (KF). The Kalman filter is an estimator which infers the parameters of interest from inaccurate, indirect, and uncertain observations [15]. Its filtering is based on linear mean square error estimation, and its main target is to minimize the estimated error covariance under some presumed conditions. The Kalman filter produces good results due to its optimality and structure; in addition, it offers a convenient form for online real-time processing. Moreover, the KF does not just clean up the data observations, but also projects these observations into an enhanced version of the measurements [16]. The basic mathematical model for the KF involves a discrete-time nonlinear dynamic system as follows:

\[ x_{k + 1} = F\left( {x_{k} ,v_{k} } \right),\qquad y_{k} = H\left( {x_{k} ,n_{k} } \right) \]

where \( x_{k} \) represents the unobserved state of the system, \( y_{k} \) represents the only observed signal, \( v_{k} \) represents the process noise which drives the dynamic system, and \( n_{k} \) represents the observation noise [15]. The dynamic system models F and H are assumed to be known. In state estimation, the KF is the best predictor, able to achieve a recursive maximum likelihood estimation of the state [16]. The KF model can be expressed as follows:

\[ \widehat{{X_{k} }} = K_{k} Z_{k} + \left( {1 - K_{k} } \right)\widehat{{X_{k - 1} }} \]

where \( \widehat{{X_{k} }} \) is the current estimate, \( K_{k} \) is the Kalman filter gain with discrete values (\( K_{1} \), \( K_{2} \), \( K_{3} \), …), \( Z_{k} \) is the measured value, and \( \widehat{{X_{k - 1} }} \) is the previous estimate.
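A minimal numeric sketch of the recursive form, in which the current estimate is a gain-weighted blend of the new measurement and the previous estimate, is given below. The fixed gain of 0.5 and the measurement values are assumptions chosen only to make the arithmetic easy to follow; a real KF recomputes the gain at every step.

```python
def kf_scalar_update(previous_estimate, measurement, gain):
    """Blend the measurement Z_k with the previous estimate using gain K_k."""
    return gain * measurement + (1.0 - gain) * previous_estimate


estimate = 0.0                     # assumed starting estimate
measurements = [10.0, 10.0, 10.0]  # hypothetical measurements Z_1..Z_3
history = []
for z in measurements:
    estimate = kf_scalar_update(estimate, z, gain=0.5)
    history.append(estimate)

print(history)  # [5.0, 7.5, 8.75]
```

Each step closes half of the remaining gap to the measurement, which is why a larger gain makes the estimate follow the measurements more quickly.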

The Kalman filter has five performance indices which directly affect the accuracy of the tracking methodology:

1. Motion model. The KF can follow one of two motion models. The first is the constant velocity model, where the velocity of the moving object is assumed to be constant. The second is the constant acceleration model, where the object is assumed to be accelerating at a constant rate.

2. Initial location. For the KF to be able to track a certain object, its initial location must be known. This parameter is given in X and Y coordinates.

3. Initial estimation error. This parameter expresses the amount of error in the X and Y directions which the KF should accept before deeming a certain track “unacceptable” and dropping it altogether. It only affects the accuracy of the first few predictions, since the KF adapts and creates its own estimation error values later on based on the previous results. Increasing this value makes it possible for the KF to adapt faster, but it might also make the first few predictions inaccurate.

4. Motion noise. This parameter represents the acceptable deviation from the set motion model, because the model might not fit the object’s velocity or acceleration perfectly. Increasing this parameter can make the KF more suitable for the object’s movement, but it might also cause some inaccuracy.

5. Measurement noise. This value is given as a scalar. Increasing it causes the KF to adhere more closely to the set motion model.
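To make the roles of these indices concrete, the following sketch implements a one-dimensional constant velocity Kalman filter in plain Python. It is not the authors' Matlab implementation: the class name, the single-axis state, and the numeric values in the usage lines are assumptions made for illustration.

```python
class ConstantVelocityKF1D:
    """Constant velocity Kalman filter along one axis (state: position, velocity)."""

    def __init__(self, initial_location, initial_estimate_error,
                 motion_noise, measurement_noise, dt=1.0):
        self.x = [float(initial_location), 0.0]
        # Initial estimation error: variances of position and velocity.
        self.P = [[float(initial_estimate_error[0]), 0.0],
                  [0.0, float(initial_estimate_error[1])]]
        self.Q = (float(motion_noise[0]), float(motion_noise[1]))
        self.R = float(measurement_noise)
        self.dt = dt

    def predict(self):
        dt = self.dt
        position, velocity = self.x
        self.x = [position + dt * velocity, velocity]
        (a, b), (c, d) = self.P
        # P <- F P F^T + Q with F = [[1, dt], [0, 1]] and diagonal Q.
        self.P = [[a + dt * (b + c) + dt * dt * d + self.Q[0], b + dt * d],
                  [c + dt * d, d + self.Q[1]]]
        return self.x[0]

    def correct(self, measurement):
        (a, b), (c, d) = self.P
        innovation = measurement - self.x[0]
        s = a + self.R          # innovation variance, H = [1, 0]
        k0, k1 = a / s, c / s   # Kalman gain for position and velocity
        self.x = [self.x[0] + k0 * innovation, self.x[1] + k1 * innovation]
        # P <- (I - K H) P
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]
        return self.x[0]


# Hypothetical centroid moving 2 pixels per frame along one axis.
kf = ConstantVelocityKF1D(0.0, (200.0, 50.0), (100.0, 25.0), 100.0)
for frame in range(1, 31):
    kf.predict()
    kf.correct(2.0 * frame)
print(kf.x)  # position close to 60, velocity close to 2
```

In this sketch a larger `measurement_noise` shrinks the gain, so the estimate stays closer to the motion model prediction, while a larger `motion_noise` inflates the predicted covariance and pulls the estimate toward each new measurement.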

3 Distance estimation and tracking algorithm

Figure 2 introduces the main algorithm for estimating the distance between two adjacent vehicles. It covers how vehicles are detected in a video, how each vehicle is kept tracked through that video according to its centroid, and how any other moving objects are eliminated by specifying a minimum threshold size of interest. The following steps summarize the algorithm:

Step 1 Read the traffic video containing multiple moving vehicles according to Algorithm 1.

Step 2 Separate the background from the foreground (vehicles). A number of consecutive frames are taken and the pixels are divided into static and dynamic pixels. The foreground detector uses a background subtraction technique. The methodology is as follows:

a) Specify a reference frame which represents the background of interest. The background model frame is thereby initialized.

b) Estimate a threshold value that satisfies the required detection rate. The selection of the threshold plays a vital role in the subtraction operation.

c) Identify and classify each pixel according to its brightness and chromaticity compared with the corresponding pixel in the background frame. The four pixel classes are summarized as follows:

Class 1: moving foreground pixels. Both chromaticity and brightness differ from the expected values in the background frame.

Class 2: shaded background pixels. Chromaticity is similar to that in the background frame, but brightness is lower.

Class 3: ordinary background pixels. Both chromaticity and brightness are similar to those in the background frame.

Class 4: highlighted background pixels. Chromaticity is similar to that in the background frame, but brightness is higher.

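The result is a binary frame in which black represents the background and white represents the moving objects, as shown in Figs. 3 and 4. The mathematical representation of the background subtraction is as follows:

\[ x_{t} \left( s \right) = \left\{ {\begin{array}{*{20}l} 1 & {{\text{if}}\;d\left( {I_{s,t} ,B_{s} } \right) > \tau } \\ 0 & {\text{otherwise}} \\ \end{array} } \right. \]

where I is the observed video sequence, \( I_{s,t} \) is the foreground model at pixel s and time t, \( B_{s} \) is the background model at pixel s, B is the static background, τ is the threshold, \( x_{t} \) is the motion mask, and d is the distance between \( I_{s,t} \) and \( B_{s} \). The occurrence probability of color I at pixel s is given by:

\[ P\left( {I_{s,t} } \right) = \sum\limits_{i = 1}^{K} {w_{i,s,t} \,N\left( {\mu_{i,s,t} ,\varSigma_{i,s,t} } \right)} \]

where \( N\left( {\mu_{i,s,t} ,\varSigma_{i,s,t} } \right) \) is the ith Gaussian model, \( w_{i,s,t} \) is the corresponding weight, and K is the number of Gaussian components.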
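The thresholding rule behind the motion mask can be sketched as follows for greyscale frames stored as nested lists. The choice of the absolute grey-level difference as the distance d, the function name, and the pixel values are assumptions for illustration; the Gaussian mixture background model is not reproduced here.

```python
def motion_mask(frame, background, threshold):
    """Mark a pixel as foreground (1) when it differs from the background by more than the threshold."""
    return [[1 if abs(pixel - bg) > threshold else 0
             for pixel, bg in zip(frame_row, background_row)]
            for frame_row, background_row in zip(frame, background)]


# Hypothetical 2 x 3 greyscale frames (0..255).
background = [[10, 10, 10],
              [10, 10, 10]]
frame = [[10, 200, 12],
         [11, 190, 10]]

print(motion_mask(frame, background, threshold=30))  # [[0, 1, 0], [0, 1, 0]]
```

A lower threshold marks more pixels as moving and raises the false detection rate, which is why the choice of threshold in step b) matters.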

Step 3 Apply morphological operations to preprocess the test video and remove undesirable objects. This analysis has two phases. The first phase gets rid of undesirable moving objects that are smaller (e.g., birds) or larger (e.g., pedestrians) than the desired moving vehicles [17, 18]. This phase relies on adaptive thresholding. Algorithm 2 introduces the phase 1 morphological operations.

The second phase of the morphological analysis fills the undetected pixels in the vehicle windows. The filling is performed by morphological closing, which is obtained by applying a dilation followed by an erosion. Closing enlarges the bright boundaries of the foreground objects (vehicles) in each frame and shrinks the background holes inside those vehicle regions. Algorithm 3 introduces phase 2 [19].
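Closing as dilation followed by erosion can be sketched on a small binary mask as below. The 3 x 3 square structuring element, the border handling (only in-bounds neighbours are considered), and the test mask are assumptions for illustration; the structuring element used in the paper is not stated.

```python
def _filter_3x3(image, reducer):
    """Apply reducer (max or min) over each pixel's in-bounds 3 x 3 neighbourhood."""
    height, width = len(image), len(image[0])
    out = [[0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            window = [image[rr][cc]
                      for rr in range(max(0, r - 1), min(height, r + 2))
                      for cc in range(max(0, c - 1), min(width, c + 2))]
            out[r][c] = reducer(window)
    return out


def dilate(image):
    return _filter_3x3(image, max)


def erode(image):
    return _filter_3x3(image, min)


def close(image):
    """Morphological closing: dilation followed by erosion."""
    return erode(dilate(image))


# Hypothetical 7 x 7 mask: a 3 x 3 vehicle blob with a one-pixel hole.
mask = [[0] * 7 for _ in range(7)]
for r in range(2, 5):
    for c in range(2, 5):
        mask[r][c] = 1
mask[3][3] = 0  # undetected pixel, e.g. a window

closed = close(mask)
print(closed[3][3])  # 1: the hole has been filled
```

The blob keeps its 3 x 3 outline after closing; only the interior hole changes.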

The proposed system has been used without accounting for possible object losses caused by conditions that change the appearance of the objects.

Step 4 Apply blob analysis in order to isolate the blobs (vehicles) in each binary frame. A blob consists of a group of connected pixels which represents a vehicle of interest. Blob analysis can extract the most salient statistical features: area, perimeter, centroid, and bounding box [20]. These features are used to classify the blobs and so to decide whether or not they contain the objects we are concerned with. In this paper, we calculate and assign the following properties to each detected vehicle: the area of the object, the bounding box of the object, and the X and Y coordinates of the blob’s centroid [24]. Figures 3 and 4 show the output of the proposed system for two successive frames after applying blob analysis and calculating the centroids of the moving objects [21, 22]. The blob detector is based on the normalized Laplacian of Gaussian, (LoG)norm:

\[ L\left( {x,y,t} \right) = g\left( {x,y,t} \right) * f\left( {x,y} \right) \]

where \( g\left( {x,y,t} \right) = \frac{1}{{2\pi t^{2} }}e^{{ - \frac{{x^{2} + y^{2} }}{{2t^{2} }}}} \) is the Gaussian kernel and f(x, y) is the video frame of interest. The (LoG)norm can be estimated as follows:

\[ \nabla_{norm}^{2} L = t^{2} \left( {L_{xx} + L_{yy} } \right) \]
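The feature extraction part of blob analysis (area, bounding box, centroid, and rejection of blobs below a minimum size) can be sketched with a plain connected-component search. This is a simplified stand-in that uses 8-connectivity and a breadth-first search rather than the Laplacian of Gaussian detector; the function name, the dictionary keys, and the test mask are assumptions.

```python
from collections import deque


def find_blobs(mask, min_area=1):
    """Return area, bounding box (x, y, width, height) and centroid (x, y) of each blob."""
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for r in range(height):
        for c in range(width):
            if not mask[r][c] or seen[r][c]:
                continue
            queue, pixels = deque([(r, c)]), []
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < height and 0 <= nx < width
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            area = len(pixels)
            if area < min_area:
                continue  # too small to be a vehicle
            ys = [p[0] for p in pixels]
            xs = [p[1] for p in pixels]
            blobs.append({
                "area": area,
                "bbox": (min(xs), min(ys),
                         max(xs) - min(xs) + 1, max(ys) - min(ys) + 1),
                "centroid": (sum(xs) / area, sum(ys) / area),
            })
    return blobs


# Hypothetical binary frame: two vehicle-sized blobs and one stray pixel.
binary_frame = [
    [1, 1, 0, 0, 0, 0],
    [1, 1, 0, 0, 0, 1],
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]
for blob in find_blobs(binary_frame, min_area=2):
    print(blob)
```

With `min_area=2` the stray pixel is discarded, leaving the two larger blobs with centroids (0.5, 0.5) and (4.0, 3.5).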

Step 5 For each detected blob, perform the following:

- Assign an ID number that identifies the vehicle throughout the duration of its appearance in the video, as shown in Figs. 3 and 4.

- Apply the KF to track the vehicle and associate its detections throughout the video with a single track.

- Calculate the duration for which the object has been detected.

- Estimate the total visible count, which indicates in how many frames the object has been detected.

- Estimate the consecutive invisible count, which indicates for how many consecutive frames the object has been undetectable.

Step 6 Estimate the distance between two adjacent vehicles from the spatial coordinates of the centroid of each blob (calculated in step 4). The distance between the centroids (x1, y1) and (x2, y2) of two blobs is calculated using the Euclidean equation:

\[ d = \sqrt {\left( {x_{2} - x_{1} } \right)^{2} + \left( {y_{2} - y_{1} } \right)^{2} } \]
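A small sketch of the centroid distance and of a distance alarm built on top of it follows. The alarm threshold is a hypothetical value in pixels; converting pixels to metres would require camera calibration, which is not covered here.

```python
import math


def centroid_distance(centroid_a, centroid_b):
    """Euclidean distance between two (x, y) centroids, in pixels."""
    return math.hypot(centroid_b[0] - centroid_a[0],
                      centroid_b[1] - centroid_a[1])


def distance_alarm(centroid_a, centroid_b, min_safe_distance):
    """True when two vehicles are closer than the assumed safe distance."""
    return centroid_distance(centroid_a, centroid_b) < min_safe_distance


print(centroid_distance((0, 0), (3, 4)))                        # 5.0
print(distance_alarm((0, 0), (3, 4), min_safe_distance=10.0))   # True
```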

Step 7 For each of the following frames, the KF predicts the new location of each blob and places a bounding box around it [21]. The KF is used instead of other object tracking methods [e.g., the Hidden Markov Model (HMM)] [22, 23] because it offers several advantages: it predicts the future locations of moving vehicles, it reduces the noise introduced by inaccurate detections, it facilitates the association of multiple objects with their tracks, and it tracks multiple moving vehicles with lower processing time.

In addition, the Euclidean distance is used instead of other distance estimation techniques [e.g., the k-nearest neighbors algorithm (k-NN)] [24, 25] because it offers an acceptable processing time and simple computations [26, 27]. In contrast, k-NN is a learning methodology that requires more sophisticated analysis, since its main applications are as a classifier, detector, and predictor [28,29,30,31]. In the proposed analysis, a simple way of calculating the distance is required because the centroid values of each detected blob vary continuously from frame to frame.

Step 8 Whenever the position of a vehicle is predicted, some error is expected. To ensure that vehicles remain tracked in spite of changes in position, speed, and acceleration, we calculate the distance between the centroids of each blob in two consecutive frames using the Euclidean equation of step 6. If this distance is less than a specified threshold value, the prediction is deemed “accurate” and the track’s confidence level is incremented. If it is greater than the threshold value, the prediction is deemed “inaccurate” and the track’s confidence level is decremented. To illustrate this mechanism, a cost matrix, shown in Fig. 5, is created. This matrix consists of M rows and N columns, where M is the number of tracks (predictions) and N is the number of detections. Element (i, j) of this matrix represents the cost of matching the ith prediction to the jth detection, calculated via the Euclidean distance equation. If this cost is low, a match between the prediction and the detection is achieved; otherwise the match does not happen. Another parameter that goes into deciding whether a track is assigned to a detection is the “cost of non-assignment”, which represents the cost of leaving a prediction or a detection unassigned. The higher this parameter is, the more likely it is that detections and predictions are matched. Figure 5 shows the cost matrix produced by the proposed system.
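The interplay between the cost matrix and the cost of non-assignment can be sketched as follows. The matching here is an exhaustive search over all partial matchings, which is only practical for a handful of tracks and is not necessarily the solver used in the paper; the function names and coordinates are assumptions.

```python
import math


def cost_matrix(predictions, detections):
    """Element (i, j) is the Euclidean distance from prediction i to detection j."""
    return [[math.dist(p, d) for d in detections] for p in predictions]


def assign(cost, cost_of_non_assignment):
    """Return the (track, detection) pairs minimising the total cost.

    Every unassigned track and every unassigned detection contributes
    cost_of_non_assignment to the total.
    """
    n_tracks = len(cost)
    n_detections = len(cost[0]) if cost else 0
    best = {"total": math.inf, "pairs": []}

    def search(track, used, pairs, total):
        if total >= best["total"]:
            return  # cannot beat the best matching found so far
        if track == n_tracks:
            total += cost_of_non_assignment * (n_detections - len(used))
            if total < best["total"]:
                best["total"], best["pairs"] = total, list(pairs)
            return
        # Option 1: leave this track unassigned.
        search(track + 1, used, pairs, total + cost_of_non_assignment)
        # Option 2: assign it to any detection that is still free.
        for det in range(n_detections):
            if det not in used:
                used.add(det)
                pairs.append((track, det))
                search(track + 1, used, pairs, total + cost[track][det])
                pairs.pop()
                used.remove(det)

    search(0, set(), [], 0.0)
    return best["pairs"]


# Hypothetical predicted and detected centroids (pixels).
predicted = [(10, 10), (50, 50)]
detected = [(12, 11), (48, 52), (200, 200)]
costs = cost_matrix(predicted, detected)

print(assign(costs, cost_of_non_assignment=20.0))  # [(0, 0), (1, 1)]
print(assign(costs, cost_of_non_assignment=1.0))   # []
```

With a non-assignment cost of 20 both tracks are matched and the distant third detection is left to start a new track; with a cost of 1 it is cheaper to leave everything unmatched, which shows why a higher value leads to more matches.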

Step 9 For the predictions deemed “accurate” in steps 7 and 8, perform the following:

- Update the bounding box of the object to the currently detected one instead of the previously predicted one.

- Add 1 to the age of the track.

- Add 1 to the visibility count of the track.

- Set the invisibility count to 0.

Step 10 For the predictions deemed “inaccurate” in steps 7 and 8, perform the following:

- Add 1 to the age of the track.

- Add 1 to its invisibility count.

Step 11 Delete tracks with frequent inaccurate predictions, i.e. tracks that stay invisible for a certain number of consecutive frames.

Step 12 Create a new track for every new vehicle that enters the camera’s field of view, and assign it a corresponding track structure so that the KF can start tracking it.
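The bookkeeping of steps 9 to 11 can be sketched with a small track record. The field names, the starting counts of a new track, and the deletion limit of three invisible frames are assumptions for illustration, since the paper does not state its values.

```python
from dataclasses import dataclass


@dataclass
class Track:
    track_id: int
    bbox: tuple                       # (x, y, width, height)
    age: int = 1                      # frames since the track was created
    total_visible_count: int = 1      # frames in which it was detected
    consecutive_invisible_count: int = 0


def update_assigned(track, detected_bbox):
    """Step 9: the prediction was matched to a detection."""
    track.bbox = detected_bbox
    track.age += 1
    track.total_visible_count += 1
    track.consecutive_invisible_count = 0


def update_unassigned(track):
    """Step 10: no detection matched the prediction in this frame."""
    track.age += 1
    track.consecutive_invisible_count += 1


def delete_lost_tracks(tracks, max_invisible_frames):
    """Step 11: drop tracks that stayed invisible for too many consecutive frames."""
    return [t for t in tracks
            if t.consecutive_invisible_count < max_invisible_frames]


car = Track(track_id=1, bbox=(10, 10, 40, 20))
update_assigned(car, (12, 10, 40, 20))
for _ in range(3):
    update_unassigned(car)

print(car)
print(delete_lost_tracks([car], max_invisible_frames=3))  # []
```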

4 Code setup

The software of the proposed system has been developed using Matlab 2015 release (a) on a PC with 4 GB of RAM and a 2.5 GHz dual core processor. A graphical user interface has been integrated for collecting the required observations of the two phases of the computer vision based analysis.

5 Experimental results

The objective is to calculate the distance between adjacent vehicles as an application of vehicular tracking. The main procedure is to measure the efficiency of the tracking by analyzing a raw test video with ideal weather conditions (i.e., no noise) and with simulated challenging weather conditions (i.e., added noise). A complete GUI has been built with the following indicators: number of assigned tracks, average number of assigned tracks, number of cars, distance alarm indicator, and processing time indicator. Figure 6 shows screenshots of the interface.

5.1 The no-noise case

The number of assigned tracks (correct predictions) has been measured in each frame of the test video in response to varying the following KF parameters: initial estimation error, motion noise, and measurement noise. The motion model has been set to the constant velocity model and the initial location has been set to the coordinates of the centroids. Figures 7, 8, and 9 show the relation between the frame number (horizontal axis) and the number of assigned tracks in each frame (vertical axis).

In Fig. 7, the initial estimation error, i.e. the error along the [X Y] directions which the KF should accept before deeming a track “unacceptable” and dropping it altogether, is varied. Table 1 lists all case studies of the initial estimation error, obtained by gradually varying its coordinate values for a 500-frame test video. In each case, the average number of assigned tracks over all frames has been calculated, as well as the processing time. It is observed that the value of the initial estimation error does not have a significant effect on the number of assigned tracks (correct predictions), which remains unchanged across all case studies. This is because the KF adapts and changes its estimation error value based on the input data.

Figure 8 is concerned with the change of motion noise across four categories of observations. Table 2 lists all motion noise case studies. From the obtained results, choosing a motion noise value above [150, 150] would be unsuitable. Increasing its value allows a large deviation from the set motion model, which causes inaccuracy that appears as a drop in the average number of assigned tracks. This drop reflects wrong predictions, which lead to inaccurate tracking.

Figure 9 is concerned with the change of measurement noise across five categories of observations. Table 3 lists all case studies of interest. Increasing the measurement noise makes the estimate of the average number of assigned tracks inaccurate. The number of assigned tracks does increase with the measurement noise, but this increase could still have an adverse impact in the long run. From all the observations discussed above, a first-stage fine-tuned tracking criterion has been concluded by adjusting the performance indices of the KF (for a 500-frame test video) as follows:

- Choose the initial estimation error to have any coordinates below 500.

- Choose the motion noise to have any coordinates below 150.

- Choose the measurement noise to have any value below 150.

5.2 The with-noise case

The analysis of the non-ideal test video starts by adding noise to the test video. The conclusions discussed above have been taken into account when modifying the proposed GUI system. These modifications are needed to analyze the test video under different disturbances. The tracking accuracy has then been tested again.

The main purpose of this phase is to create a simple simulation of some of the most challenging weather conditions. The system has been modified by adding salt and pepper noise, Gaussian noise, and speckle noise as three types of video disturbance with different percentages of occurrence. In addition, a system of filters has been added to the interface in order to measure the performance (correct tracking) after the cleaning process. Twelve filtering systems have been applied to each type of noise: average, maximum, minimum, Wiener, disk, Laplacian, Laplacian of Gaussian (LoG), motion, Sobel, Prewitt, median, and Gaussian filters. The observations are as follows. For salt and pepper noise, increasing the percentage of occurrence makes the number of assigned tracks deviate from the ideal (zero noise) value. For Gaussian noise with varying mean and variance, the number of assigned tracks is degraded compared with the ideal case (zero noise); however, this degradation can be considered insignificant for small mean values. Finally, for speckle noise, the degradation is very small at large variance. Tables 4, 5, and 6 show the effect of video degradation on tracking accuracy.
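The three disturbance types can be sketched for a greyscale frame with intensities in [0, 1] as below. The parameterisation (a density for salt and pepper, mean and variance for Gaussian, variance for speckle) follows common image processing conventions and is an assumption about the setup; in particular, the speckle model here uses zero-mean Gaussian multiplicative noise.

```python
import random


def _clip(value):
    return min(1.0, max(0.0, value))


def add_salt_and_pepper(frame, density, rng):
    """Set roughly `density` of the pixels to 0 (pepper) or 1 (salt)."""
    noisy = [row[:] for row in frame]
    for row in noisy:
        for c in range(len(row)):
            u = rng.random()
            if u < density / 2:
                row[c] = 0.0
            elif u < density:
                row[c] = 1.0
    return noisy


def add_gaussian(frame, mean, variance, rng):
    """Additive noise: pixel + n, with n drawn from N(mean, variance)."""
    sigma = variance ** 0.5
    return [[_clip(p + rng.gauss(mean, sigma)) for p in row] for row in frame]


def add_speckle(frame, variance, rng):
    """Multiplicative noise: pixel + pixel * n, with n drawn from N(0, variance)."""
    sigma = variance ** 0.5
    return [[_clip(p + p * rng.gauss(0.0, sigma)) for p in row] for row in frame]


rng = random.Random(0)                    # fixed seed for repeatability
clean = [[0.5] * 4 for _ in range(4)]     # hypothetical flat grey frame
print(add_salt_and_pepper(clean, density=0.2, rng=rng))
print(add_gaussian(clean, mean=0.0, variance=0.01, rng=rng))
print(add_speckle(clean, variance=0.04, rng=rng))
```

Because speckle noise is multiplicative, dark regions are barely affected while bright regions are disturbed the most, unlike the additive Gaussian case.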

The GUI was then switched to the filtering video mode, as shown in Fig. 10. New observations have been recorded after applying the cleaning methodology of each filter mask to each type of noise at its different percentages of occurrence. These observations are listed in Tables 8, 9, and 10. The main target was to measure the efficiency of each filter mask for each type of noise disturbance at different levels of occurrence. This was accomplished by measuring the deviation of the average number of assigned tracks between the zero noise test video (recorded as approximately 1.996) and the noisy version. These deviations are summarized in Table 7, which compares the observations listed in Tables 8, 9, and 10 (calculations after cleaning) with the ideal value. The observations are as follows. For speckle noise, the Wiener and disk filters score the least deviation from the original values, so they appear to be the most accurate, and their processing times are approximately the same. For Gaussian noise, the median filter shows the least deviation from the original values, so it is the most suitable filter for this type of noise; however, its processing time is high. Finally, for salt and pepper noise, the median filter scores the least deviation from the original values, so it is the most suitable one; however, its processing time is high. Table 11 shows snapshots of the system output for the three types of video noise after the cleaning process. From all the observations discussed above, a second-stage fine-tuned tracking criterion is recommended: use the median, Wiener, and disk filter masks according to the type of video degradation.
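As a sketch of the cleaning step and of the deviation measure, the code below applies a 3 x 3 median filter to a frame with one salt pixel and computes the absolute deviation of an average track count from the zero noise value of 1.996 reported above. The window size, the border handling, the use of an absolute difference, and the sample average of 1.5 are assumptions for illustration.

```python
from statistics import median


def median_filter_3x3(frame):
    """Replace each pixel with the median of its in-bounds 3 x 3 neighbourhood."""
    height, width = len(frame), len(frame[0])
    out = [[0.0] * width for _ in range(height)]
    for r in range(height):
        for c in range(width):
            window = [frame[rr][cc]
                      for rr in range(max(0, r - 1), min(height, r + 2))
                      for cc in range(max(0, c - 1), min(width, c + 2))]
            out[r][c] = median(window)
    return out


def deviation_from_ideal(average_assigned_tracks, ideal_average=1.996):
    """Absolute deviation from the zero noise average number of assigned tracks."""
    return abs(ideal_average - average_assigned_tracks)


# Hypothetical 3 x 3 frame with a single salt pixel in the centre.
noisy_frame = [[0.5, 0.5, 0.5],
               [0.5, 1.0, 0.5],
               [0.5, 0.5, 0.5]]
print(median_filter_3x3(noisy_frame))   # the salt pixel is removed
print(deviation_from_ideal(1.5))        # hypothetical average after filtering
```

Ranking the filters by this deviation for each noise type reproduces the kind of comparison summarized in Table 7, with the smallest deviation indicating the most suitable filter.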

6 Conclusion

Measuring and enhancing the tracking efficiency for moving vehicles in an urban video is an important and challenging research topic, especially under abnormal weather conditions. A new system has been developed for calculating the distance between adjacent vehicles as an application of vehicular tracking. Calculating this distance supports an automated warning system for drivers. A two-phase analysis has been carried out in order to establish such a system in both ideal and challenging weather conditions. Phase 1 adjusts the performance indices of the KF in order to achieve the best tracking for a noise-free test video. We recommend that the values of the initial estimation error, motion noise, and measurement noise be below one quarter of the total number of frames of the video of interest (in our case, we used a 500-frame test video and the performance indices were below 150 for best tracking). For a noisy test video, the first step is to set the KF parameters as discussed above. We then recommend the use of Wiener, disk, and median filters according to the type of disturbance (Wiener and disk filter masks for speckle noise, and the median filter for both Gaussian noise and salt and pepper noise). Future work should be directed toward cascading filters, with a focus on realistic conditions during evaluation and taking the level of complexity into consideration. In addition, a comparison study is planned with other algorithms for detecting and tracking moving vehicles, such as the Otsu method, the k-nearest neighbors algorithm (k-NN), and the Hidden Markov Model (HMM).

References

An S, Lee BH, Shin DR (2011) A survey of intelligent transportation systems. In: Proceedings of 2011 Third International Conference on Computational Intelligence, Communication Systems and Networks (CICSyN). pp 332–337

Wang F (2010) Parallel control and management for intelligent transportation systems: concepts, architectures, and applications. IEEE Trans Intell Transp Syst 11(3):630

Yan G, Yang W, Yang W (2011) A secure and intelligent parking system. IEEE Intell Transp Mag 18

Zaldivar J, Calafate T, Cano J, Manzoni P (2011) Providing accident detection in vehicular networks through OBD-II devices and android-based smartphones. 5th IEEE workshop on user mobility and vehicular networks, on-move, pp 813

Zhang J, Wang F, Wang K, Lin W, Xu X, Chen C (2011) Data-driven intelligent transportation systems. IEEE Trans Intell Transp Syst 12(4):1624

Faouzi N, Leung H, Kurian A (2011) Data fusion in intelligent transportation systems progress and challenges—a survey. Inf Fus 4–10:5

Buch N, Velastin SA, Orwel J (2011) A review of computer vision techniques for the analysis of urban Traffic. IEEE Trans Intell Transp Syst 12(3):920–939

Sivaraman S, Trivedi MM (2013) Looking at vehicles on the road: a survey of vision based vehicle detection, tracking, and behavior analysis. IEEE Trans Intell Transp Syst 14(4):1773–1795

Sivaraman S, Trivedi M (2013) Integrated lane and vehicle detection, localization, and tracking: a synergistic approach. IEEE Trans Intell Transp Syst 14(2):907–917

S Sato, M Hashimoto, M Takita, K Takagi, T Ogawa (2010) Multilayer lidar-based pedestrian tracking in urban environments. In proc. IEEE IV, pp 849–854

Garcia F, Cerri P, Broggi A, Armingo JM, de la Escalera A (2009) Vehicle detection based on laser radar. Springer Verlag, New York

Xue L, Jiang C, Chang H, Yang Y, Qin W, Yuan W (2012) A novel Kalman filter for combining outputs of MEMS gyroscope array. Elsevier, New York, pp 745–746

Guo J, Williams BM, Huang W (2014) Adaptive Kalman filter approach for stochastic short-term traffic flow rate prediction and uncertainty quantification. Transp Res Part C Emerg Technol 43(Part 1):50–51

Ko CN, Lee CM (2012) Short-term load forecasting using SVR (support vector regression)-based radial basis function neural network with dual extended Kalman filter. Elsevier, New York, p 413

Y Tian, Y Wang, X Chen, Z Tan (2014) Kalman-filter-based state estimation for system information exchange in a multi-bus islanded microgrid. Proceedings of the 7th IET international conference on power electronics, p 1

PL Houtekamer, X Deng, HL Mitchell, SJ Beak, N Gagnon (2014) Higher resolution in an operational ensemble Kalman filter. IEEE, p 1143

AH Cherif, K Boussetta, G Diaz, D Fedoua (2016) A probabilistic convergecast protocol for vehicle to infrastructure communication in ITS architecture. 13th IEEE annual consumer communications and networking conference (CCNC), pp 776–777

Y Yao, G Xiong, K Wang, Fe Zhu, FY Wang (2013) Vehicle detection method based on active basis model and symmetry in ITS. Conference on intelligent transportation systems, IEEE, 16th international, ITSC, pp 614–618

T Edwards, J Moore, M Loukadaki, P Jaworski (2011) A network assisted vehicle for ADAS and ITS testing. 14th international IEEE conference on intelligent transportation systems, pp 681–685

Sofia Janet R, Bagyamani J (2015) Traffic analysis on highways based on image processing. Int J Comput Intell Inf 5(1):14–23

Sivaraman S, Manubhai M (2013) Integrated lane and vehicle detection localization, and tracking: a synergistic approach. IEEE Trans Intell Transp Syst IEEE 14(2):906

Kamal MS, Chowdhury L, Khan MI, Ashour AS, Manuel J, Tavares RS, Dey N (2017) Hidden Markov model and Chapman Kolmogrov for protein structures prediction from images. Comput Biol Chem 68:231–244

MS Kamal, S Parvin, AS Ashour, F Shi, N Dey (2017) De-Bruijn graph with MapReduce framework towards metagenomic data classification. Int J Inf Technol 9:59–75. http://springerlink.bibliotecabuap.elogim.com/article/10.1007/s41870-017-0005-z. Accessed Mar 2017

Kamal MS, Dey N, Ashour AS, Ripon SH, Balas VE, Kaysar MS (2017) FbMapping: an automated system for monitoring facebook data. Neural Netw World 27:27–57

Kamal MdS, Nimmy SF, Parvin S (2016) Performance evaluation comparison for detecting DNA structural break through big data analysis. Comput Syst Sci Eng 31:275–289

Kamal MS, Dey N, Nimmy SF, Ripon SH, Ali NY, Ashour AS, Karaa WA, Shi F (2016) Evolutionary framework for coding area selection from cancer data. Neural Comput Appl. https://doi.org/10.1007/s00521-016-2513-3

MS Kamal¸ MI Khan, K Deb, L Chowdhury, N Dey (2016) An optimized graph based metagenomic gene classification approach: metagenomic gene analysis, chap 12. In: Dey N, Ashour A (eds) Classification and clustering in biomedical signal processing. IGI Global, Advances in bioinformatics and biomedical engineering (ABBE) book series. pp 290–314. https://doi.org/10.4018/978-1-5225-0140-4

S Ripon, MS Kamal, S Hossain, N Dey (2016) Theoretical analysis of different classifiers under reduction rough data set: a brief proposal. Int J Rough Sets Data Anal (IJRSDA) 5(1)

Kamal MS, Khan MI (2014) Performance evaluation of Warshall algorithm and dynamic programming for Markov chain in local sequence alignment. Interdiscip Sci Comput Life Sci 7(1):78–81

Lamba A, Kumar D (2016) Optimization of KNN with Firefly algorithm. BIJIT BVICAM’s Int J Inf Technol 8(2):997–1003

Soundarya K (2014) Video denoising based on stationary wavelet transform and center weighted median filter. BIJIT BVICAM’s Int J Inf Technol 6(1):722–726


Cite this article

Ata, M.M., El-Darieby, M. & El-nabi, M.A. A fine tuned tracking of vehicles under different video degradations. Int. j. inf. tecnol. 10, 417–434 (2018). https://doi.org/10.1007/s41870-018-0171-7
