Abstract

We propose a new multiple criteria decision-making method that combines two distance-based aggregation methods: the technique for order preference by similarity to ideal solution (TOPSIS) and the ordered weighted average distance (OWAD) operator. The TOPSIS method measures the distances of the alternatives to the positive ideal and negative ideal solutions and then chooses the best solution according to the relative closeness. However, it does not consider the decision-maker’s attitude. The OWAD operator combines the OWA operator with a distance measure and can express the decision-maker’s attitude. Therefore, we combine TOPSIS and the OWAD operator into a new approach, the OWAD–TOPSIS method. The influence of the weights in the OWAD operator on the decision-making results is analyzed. A neat-OWAD operator-based OWAD–TOPSIS method is then proposed, in which the weights change dynamically with the distance values and the preference parameter. Finally, a numerical example illustrates the proposed approach, and the results are analyzed for different parameter values.

1 Introduction

We often make decisions from a number of alternatives, actions, or candidates (Hwang and Yoon 1981; Pedrycz and Chen 2011, 2015a, b). Multiple criteria decision-making (MCDM) provides efficient solutions for these problems (Pedrycz and Chen 2015a; Lin et al. 2009a, b; Dagdeviren et al. 2009; Lee et al. 2009). Considering the uncertain nature of decision-making problems, fuzzy MCDM methods have also been proposed (Zadeh 1965; Blanco-Mesa et al. 2017; Yazdanbakhsh and Dick 2018) and applied to decision-making problems such as portfolio selection (Tiryaki and Ahlatcioglu 2005), personnel selection (Dursun and Karsak 2010; Safarzadegan Gilan et al. 2012) and decision support systems (Hung et al. 2010; Noor-E-Alam et al. 2011; Peng et al. 2011; Li and Kao 2009; Chen and Hong 2014). Dheena and Mohanraj (2011) considered multiple criteria decision-making combined with fuzzy set theory for location site selection. Yan et al. (2011) and Yager (2004) dealt with multiple criteria decision-making problems with multiple priorities. Blanco-Mesa et al. (2017) provided a comprehensive review of the developments of fuzzy decision-making problems and their applications.

Among the various MCDM approaches, the technique for order preference by similarity to ideal solution (TOPSIS) is a popular and efficient approach to solving multiple criteria decision-making problems (Behzadian et al. 2012; Zyoud and Fuchs-Hanusch 2017; Zavadskas et al. 2016). The TOPSIS method was first introduced by Hwang and Yoon (1981). Its basic idea is to choose the best alternative by simultaneously considering the shortest distance to the positive ideal solution and the longest distance from the negative ideal solution. The TOPSIS method has been widely used in various decision-making problems (Dursun et al. 2011; Chen et al. 2016; Wang and Chen 2017; Zyoud and Fuchs-Hanusch 2017; Zavadskas et al. 2016; Lee and Chen 2008). Wang and Elhag (2006) proposed a fuzzy TOPSIS method based on alpha-level sets in fuzzy numbers and applied it to bridge risk assessment. Kabak et al. (2012) combined fuzzy ANP and fuzzy TOPSIS approaches to develop a more accurate personnel selection methodology. Xu and Zhang (2013) proposed hesitant fuzzy TOPSIS with incomplete information. Zhang and Xu (2014, 2015) also extended TOPSIS to Pythagorean fuzzy sets and intuitionistic fuzzy sets, respectively. Behzadian et al. (2012), Zyoud and Fuchs-Hanusch (2017) and Zavadskas et al. (2016) gave comprehensive reviews of the TOPSIS method and its applications in various decision-making problems. Recently, Liang and Xu (2017) further extended TOPSIS to the hesitant Pythagorean fuzzy sets case. Yoon and Kim (2017) incorporated the decision-maker’s behavioral tendency into TOPSIS by accommodating the loss aversion concept from behavioral economics. Wu et al. (2018) proposed an interval type-2 fuzzy TOPSIS model for large-scale group decision-making problems with social network information. Dwivedi et al. (2018) proposed a generalized fuzzy TOPSIS method as a versatile evaluation model by extending the calculation of closeness coefficients.

The TOPSIS method thus provides an effective way to aggregate decision-making information. In recent years, various decision-making information aggregation methods have been proposed (Yager 1988; Mardani et al. 2018), and the aggregation methods often depend upon the preferences of decision-makers (Yoon and Kim 2017; Dwivedi et al. 2018). The ordered weighted averaging (OWA) operator, introduced by Yager (1988), is a widely applied and important type of aggregation operator for dealing with decision-makers’ opinions. Various extensions of the OWA operator, such as ordered weighted geometric averaging operators, the neat OWA operator, the induced OWA operator, the ordered weighted average distance operator, and the generalized OWA operator, have also been proposed (Laengle et al. 2017; Morshedizadeh et al. 2018; Torra 2004; Emrouznejad and Marra 2014; He et al. 2017; Mardani et al. 2018; Yager et al. 2011). Emrouznejad and Marra (2014), He et al. (2017) and Mardani et al. (2018) gave comprehensive reviews on this topic. The ordered weighted average distance (OWAD) operator, which was introduced by Xu and Chen (2008), is a combination of the OWA operator and a distance measure. Merigó and Gil-Lafuente (2010, 2011) used the Hamming distance with the OWA operator in sport management and in the selection of financial products. Xu and Wang (2011) developed the linguistic ordered weighted distance (LOWD) operator. They also investigated some families of the LOWD operator and developed a procedure for linguistic decision problems based on the developed linguistic distance operators. Vizuete Luciano et al. (2012) introduced a new process based on the use of the OWAD operator in the Hungarian algorithm. Scherger et al. (2017) developed a goodness index based on the Hamming distance and the OWAD operator and applied it to the diagnosis of business failure. Merigo et al. (2018) proposed probabilistic ordered weighted averaging distance operators and applied them to the asset management problem.

In addition to separate studies of the TOPSIS method and the OWA or OWAD operators, attempts to combine the TOPSIS method with the OWA operator have also appeared in recent years. Xu and Liu (2007) constructed a human resource evaluation model based on extended continuous OWA operators and the TOPSIS method. Dursun and Karsak (2010) and Dursun et al. (2011) proposed a fuzzy TOPSIS method based on the principles of fusion of fuzzy information and the 2-tuple linguistic representation model with the OWA operator, and used the combined method to evaluate health-care waste management and personnel selection. Chen et al. (2011) proposed a hybrid approach that integrates the OWA operator into the TOPSIS method to tackle multiple criteria decision-making problems. The information processing schemes are applied to a group decision support procedure. Liu and Zhang (2014) proposed a TOPSIS-based consensus model for group decision-making with incomplete interval fuzzy preference relations using the induced ordered weighted averaging operator. Wang et al. (2016) integrated an OWA–TOPSIS framework in intuitionistic fuzzy settings for multiple attribute decision-making problems.

From the literature review, we find that a restriction of the TOPSIS method is that it is neutral with respect to the decision-maker’s attitudinal character. In contrast, the OWAD operator, as an extension of the OWA operator, has the flexibility to produce various aggregation results according to the decision-maker’s attitudinal character in different situations. Furthermore, both TOPSIS and OWAD use distance values in the aggregation process. As far as we know, there have been no attempts to combine the OWAD operator and the TOPSIS method. The purpose of this paper is to propose a method that combines the two. The TOPSIS method provides a systematic framework for ranking alternatives by comparing the distances to the positive ideal and from the negative ideal solutions, while the OWAD operator aggregates the distance values by considering the attitudinal character of the decision-maker. The specific computing procedures of the OWAD–TOPSIS approach are given, and they include TOPSIS as a special case. The influence of the weights on the final solution is analyzed, and an additive neat-OWAD operator with an influence parameter is proposed, in which the weights change dynamically with the aggregated elements without requiring the elements’ order information.

The rest of the paper is organized as follows. Section 2 introduces the basic concepts of the OWAD operator and the TOPSIS method. Section 3 presents the connections between the OWAD operator and the TOPSIS method, and then proposes a new neat-OWAD operator. Section 4 gives a numerical example. Section 5 summarizes the main results and draws conclusions.

2 Preliminaries

Here, we briefly introduce the basic concepts of the ordered weighted average distance (OWAD) operator and the solution process of the TOPSIS method.

2.1 Ordered weighted average distance operator

The ordered weighted average distance (OWAD) operator is an extension of the ordered weighted averaging (OWA) operator (Xu and Chen 2008). In addition to static weight assignment methods, the OWA operator can also have dynamic weights that depend on the aggregated elements. Such an operator is called a neat-OWA operator, and its aggregation value is independent of the order of the elements (La Red et al. 2011; Liu and Lou 2006; Liu 2008).

Definition 1

(Yager 1988) Yager’s OWA operator of dimension n is a mapping \(\phi :\mathbb {R}^n\rightarrow \mathbb {R}\) that has an associated weight vector \(\mathbf {W}=(w_1,w_2,\ldots ,w_n)^T\) with \(w_i\in [0,1]\) and \(\sum _{i=1}^nw_i=1\), such that

$$\begin{aligned} \phi (x_1,x_2,\ldots ,x_n)=\sum _{i=1}^nw_iy_i, \end{aligned}$$(1)

where \(y_i\) is the ith highest value in the set \(\{x_1,\ldots ,x_n\}\).

The definition of neat-OWA operator is given as follows.

Definition 2

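(Liu 2008) For aggregated elements \(X=(x_1,x_2,\ldots ,x_n)\), \(x_i\in [a,b]\), and a function \(f(x)\geq 0\) with \(f(x_i)\ne 0\) for at least one i, an additive neat-OWA (ANOWA) operator determined by the weighting function f(x) is a neat-OWA operator with weights \(W=(w_1,w_2,\ldots ,w_n)^T\) defined as follows:

$$\begin{aligned} w_i=\frac{f(x_i)}{\sum _{j=1}^nf(x_j)},\quad i=1,2,\ldots ,n. \end{aligned}$$(2)

The aggregation result of the neat-OWA operator is

$$\begin{aligned} F(X)=\sum _{i=1}^nw_ix_i=\frac{\sum _{i=1}^nf(x_i)x_i}{\sum _{j=1}^nf(x_j)}. \end{aligned}$$(3)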
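As an illustration, the additive neat-OWA operator of Definition 2 can be sketched in a few lines of Python. The function name `anowa`, the sample data, and the choice \(f(x)=x\) are assumptions made for this example only. The sketch shows that the weights are computed from the elements themselves, so no reordering is needed and the result does not depend on the order of the arguments.

```python
def anowa(values, f):
    """Additive neat-OWA: weights w_i = f(x_i) / sum_j f(x_j), no reordering needed."""
    fx = [f(x) for x in values]
    if any(v < 0 for v in fx):
        raise ValueError("the weighting function must be non-negative")
    total = sum(fx)
    if total == 0:
        raise ValueError("f(x_i) must be non-zero for at least one i")
    weights = [v / total for v in fx]
    result = sum(w * x for w, x in zip(weights, values))
    return result, weights


# Hypothetical data and weighting function f(x) = x.
result, weights = anowa([1, 2, 3], lambda x: x)
# The same elements in another order give the same aggregated value.
shuffled_result, _ = anowa([3, 1, 2], lambda x: x)
print(result, weights, shuffled_result)
```

With \(f(x)=x\), larger elements receive larger weights, so the aggregated value lies above the arithmetic mean of the elements.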

The ordered weighted average distance operator is an aggregation operator that uses OWA operators and distance measures in the same formulation (Xu and Chen 2008). The OWAD operator differs from the OWA operator in that the reordering step is applied to the individual distances rather than to the individual argument values. It can be defined as follows for two sets \(X=\{x_1,x_2,\ldots ,x_n\}\), \(Y=\{y_1,y_2,\ldots ,y_n\}\).

Definition 3

(Xu and Chen 2008) An OWAD operator of dimension n is a mapping OWAD: \(R^n \times R^n \rightarrow R\) that has an associated weighting vector W with \(\sum ^n_{j=1}w_j=1\) and \(w_j\in [0,1]\) such that:

$$\begin{aligned} \mathrm{OWAD}(X,Y)=\sum _{j=1}^nw_jd_j, \end{aligned}$$(4)

where \(d_j\) is the jth largest of the distances \(|x_i-y_i|\), and \(x_i\) and \(y_i\) are the ith arguments of the sets X and Y.

The OWAD operator provides a parameterized family of distance aggregation operators between the minimum and the maximum. The maximum distance is obtained when \(w_1=1\) and \(w_j=0\) for all \(j\ne 1\), and the minimum distance when \(w_n=1\) and \(w_j=0\) for all \(j\ne n\). The average distance is obtained with \(w_j=\frac{1}{n}\), \(1\leq j \leq n\).
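The three special cases just described (maximum, minimum, and average distance) can be checked with a minimal Python sketch of the OWAD operator. The function name `owad` and the sample vectors are hypothetical and serve only to illustrate Definition 3.

```python
def owad(x, y, weights):
    """OWAD: weights are applied to the distances |x_i - y_i| sorted in descending order."""
    if not (len(x) == len(y) == len(weights)):
        raise ValueError("x, y and weights must have the same length")
    if abs(sum(weights) - 1.0) > 1e-9 or any(w < 0 or w > 1 for w in weights):
        raise ValueError("weights must lie in [0, 1] and sum to 1")
    distances = sorted((abs(a - b) for a, b in zip(x, y)), reverse=True)
    return sum(w * d for w, d in zip(weights, distances))


# Hypothetical arguments; the individual distances are 5, 9 and 2.
x = [5, 1, 8]
y = [10, 10, 10]
owad_max = owad(x, y, [1.0, 0.0, 0.0])       # all weight on the largest distance
owad_min = owad(x, y, [0.0, 0.0, 1.0])       # all weight on the smallest distance
owad_avg = owad(x, y, [1 / 3, 1 / 3, 1 / 3])  # equal weights give the average distance
print(owad_max, owad_min, owad_avg)
```

Any other admissible weight vector yields a value between `owad_min` and `owad_max`.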

2.2 The TOPSIS method

The TOPSIS method is a multiple criteria method to identify solutions from a finite set of alternatives (Hwang and Yoon 1981; Behzadian et al. 2012). The basic principle is that the chosen best alternative should have the shortest distance from the positive ideal solution and the farthest distance from negative ideal solution. The procedure of TOPSIS method can be expressed in a series of steps.

- Step 1:

Calculate the normalized decision-matrix. Three types of normalization are commonly used. Consider the decision-matrix \(A=(a_{ij})_{m\times n}\), \(i=1,2,\ldots ,m, j=1,2,\ldots ,n\), where \(a_{ij}\) is the performance rating of the ith alternative, \(A_i\), with respect to the jth criterion, \(G_j\). The normalized value \(b_{ij}, i=1,2,\ldots ,m, j=1,2,\ldots ,n\) is calculated with sum-based normalization:

$$\begin{aligned} b_{ij}=\frac{a_{ij}}{\sum ^m_{i=1}a_{ij}}. \end{aligned}$$(5)

- Step 2:

Calculate the weighted normalized decision-matrix. The weighted normalized value \(c_{ij}\) is calculated as \(c_{ij}=w_{j}b_{ij}, i=1,2,\ldots ,m, j=1,2,\ldots ,n\), where \(w_j\) is the weight of the jth criterion \(G_j\), and \(\sum _{j=1}^{n}w_j=1\). Then, determine the positive ideal and negative ideal solutions:

$$\begin{aligned} C^+&=\{c^+_1,c_2^+,\ldots ,c_n^+\}=\{(\max _ic_{ij}\mid j\in I),(\min _ic_{ij}\mid j\in J)\} \end{aligned}$$(6a)

$$\begin{aligned} C^-&=\{c^-_1,c_2^-,\ldots ,c_n^-\}=\{(\min _ic_{ij}\mid j\in I),(\max _ic_{ij}\mid j\in J)\}, \end{aligned}$$(6b)

where \(I\) is associated with benefit criteria, and \(J\) is associated with cost criteria.

- Step 3:

Calculate the separation measures, using the n-dimensional distance. The Manhattan distance separations of each alternative from the positive ideal and the negative ideal solutions are given as follows:

$$\begin{aligned} d_i^+&=\sum _{j=1}^n|c_{ij}-c_j^+|, i=1,2,\ldots ,m. \end{aligned}$$(7a)

$$\begin{aligned} d_i^-&=\sum _{j=1}^n|c_{ij}-c_j^-|, i=1,2,\ldots ,m. \end{aligned}$$(7b)

- Step 4:

Calculate the relative closeness degree to the positive ideal solution. The relative closeness of the alternative \(A_i\) with respect to the positive ideal solution \(C^+\) is defined as follows:

$$\begin{aligned} R_i=\frac{d_i^-}{d_i^-+d_i^+}, i=1,2,\ldots ,m. \end{aligned}$$(8)

Since \(d_i^- \geq 0\) and \(d_i^+\geq 0\), clearly \(R_i\in [0,1]\).

- Step 5:

Rank the preference order. The alternatives are ranked in decreasing order of the relative closeness \(R_i\).
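The five steps above can be sketched as a short Python function. This is a minimal illustration rather than a reference implementation: the function name `topsis`, the boolean list `benefit` (marking the criteria in \(I\)), and the three-alternative data set are assumptions made for the example.

```python
def topsis(matrix, weights, benefit):
    """Return (closeness, ranking) for a decision matrix of m alternatives x n criteria."""
    n = len(weights)
    # Step 1: sum-based normalization, column by column (Eq. 5).
    col_sums = [sum(row[j] for row in matrix) for j in range(n)]
    # Step 2: weighted normalized matrix and the two ideal solutions (Eq. 6).
    c = [[weights[j] * row[j] / col_sums[j] for j in range(n)] for row in matrix]
    cols = list(zip(*c))
    pos = [max(col) if benefit[j] else min(col) for j, col in enumerate(cols)]
    neg = [min(col) if benefit[j] else max(col) for j, col in enumerate(cols)]
    closeness = []
    for row in c:
        # Step 3: Manhattan separations (Eq. 7).
        d_pos = sum(abs(v - p) for v, p in zip(row, pos))
        d_neg = sum(abs(v - q) for v, q in zip(row, neg))
        # Step 4: relative closeness (Eq. 8); assumes d_pos + d_neg > 0.
        closeness.append(d_neg / (d_neg + d_pos))
    # Step 5: indices of the alternatives, best first.
    ranking = sorted(range(len(matrix)), key=lambda i: closeness[i], reverse=True)
    return closeness, ranking


# Hypothetical data: three alternatives, two equally weighted benefit criteria.
matrix = [[9, 9], [5, 5], [1, 1]]
closeness, ranking = topsis(matrix, [0.5, 0.5], [True, True])
print(closeness, ranking)
```

In this toy example the first alternative coincides with the positive ideal solution and the third with the negative ideal solution, so their closeness values are 1 and 0, respectively.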

3 Extending the TOPSIS method to the OWAD–TOPSIS method with the OWAD operator

The TOPSIS method aggregates the criteria with distances in an objective way, while the OWAD operator uses the distances to reflect the preferences of the decision-maker in a subjective way. Here, we connect the TOPSIS method with the OWAD operator and extend the traditional TOPSIS method into the OWAD–TOPSIS method, which allows the TOPSIS method to integrate the preference of the decision-maker.

3.1 The procedure for combining the OWAD operator and the TOPSIS method

Both OWAD operator and TOPSIS method are used to measure the distance to ideal and negative ideal solutions to choose the best choice. As we know, OWAD operator can modify the aggregation results according to the preferences of the decision-maker, but the aggregation result of TOPSIS method does not have this feature yet. Therefore, we try to combine OWAD operator with the TOPSIS method.

The new approach combines the OWAD operator and the TOPSIS method together. The specific procedures are given as follows.

- Step 1:

Calculate the normalized decision-matrix. The normalized value \(b_{ij}, i=1,2,\ldots ,m, j=1,2,\ldots ,n\) is calculated with sum-based normalization as follows:

$$\begin{aligned} b_{ij}=\frac{a_{ij}}{\sum ^m_{i=1}a_{ij}}. \end{aligned}$$(9)

- Step 2:

Calculate the weighted normalized decision-matrix. The weighted normalized value \(c_{ij}\) is calculated as \(c_{ij}=w_{j}b_{ij}, i=1,2,\ldots ,m, j=1,2,\ldots ,n\), where \(w_j\) is the weight of the jth criterion \(G_j\), and \(\sum _{j=1}^{n}w_j=1\). Then, determine the positive ideal and negative ideal solutions:

$$\begin{aligned} C^+&=\{c^+_1,c_2^+,\ldots ,c_n^+\}=\{(\max _ic_{ij}\mid j\in I),(\min _ic_{ij}\mid j\in J)\} \end{aligned}$$(10a)

$$\begin{aligned} C^-&=\{c^-_1,c_2^-,\ldots ,c_n^-\}=\{(\min _ic_{ij}\mid j\in I),(\max _ic_{ij}\mid j\in J)\}, \end{aligned}$$(10b)

where \(I\) is associated with benefit criteria, and \(J\) is associated with cost criteria.

- Step 3:

Calculate the separation measures, using the n-dimensional Manhattan distance. The separations of each alternative from the positive ideal and negative ideal solutions, taking the decision-maker’s preference into account, are given as follows:

$$\begin{aligned} d_i^+&=\sum _{j=1}^nw_{ij}d^+_{i{\sigma (j)}}, i=1,2,\ldots ,m. \end{aligned}$$(11a)

$$\begin{aligned} d_i^-&=\sum _{j=1}^nw_{ij}d^-_{i{\sigma (j)}}, i=1,2,\ldots ,m. \end{aligned}$$(11b)

Here, \(d^+_{i{\sigma (j)}}\) is the jth largest of the distances \(|c_{ik}-c_k^+|\), \(k=1,2,\ldots ,n\), and \(d^-_{i{\sigma (j)}}\) is the jth largest of the distances \(|c_{ik}-c_k^-|\). \(w_{ij}\) is the OWAD weight attached to the jth largest distance of alternative \(A_i\); different weight vectors may be used for the distances to the positive ideal and to the negative ideal solutions.

- Step 4:

Calculate the relative closeness degree to the positive ideal solution. The relative closeness of the alternative \(A_i\) with respect to the positive ideal solution \(C^+\) is defined as follows:

$$\begin{aligned} R_i=\frac{d_i^-}{d_i^-+d_i^+}, i=1,2,\ldots ,m. \end{aligned}$$(12)

Since \(d_i^- \geq 0\) and \(d_i^+\geq 0\), clearly \(R_i\in [0,1]\).

- Step 5:

Rank the preference order. The alternatives are ranked in decreasing order of the relative closeness \(R_i\).

We call this method the OWAD–TOPSIS method. The difference between the traditional TOPSIS method and the new OWAD–TOPSIS method is that the new approach uses OWAD weights to represent the decision-maker’s attitude. It can represent the decision-maker’s preference information and can change dynamically with the decision-maker’s attitude. The traditional TOPSIS method is only a simple aggregation of distance measures. The OWAD–TOPSIS method inherits the feature of the OWAD operator that the weights are determined by the ordered position of each distance rather than by the specific distance values.
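To make the role of the OWAD weights concrete, the following Python sketch implements the procedure under a few assumptions: the names `owad_topsis`, `w_pos`, and `w_neg` are hypothetical, one OWAD weight vector is shared by all alternatives for each of the two distances, and the data are invented for illustration.

```python
def owad_aggregate(distances, owad_weights):
    """Weight the distances by their rank (largest first), as in Eq. (11)."""
    ordered = sorted(distances, reverse=True)
    return sum(w * d for w, d in zip(owad_weights, ordered))


def owad_topsis(matrix, crit_weights, benefit, w_pos, w_neg):
    """Return (closeness, ranking); w_pos and w_neg are the OWAD weight vectors."""
    n = len(crit_weights)
    col_sums = [sum(row[j] for row in matrix) for j in range(n)]
    c = [[crit_weights[j] * row[j] / col_sums[j] for j in range(n)] for row in matrix]
    cols = list(zip(*c))
    pos = [max(col) if benefit[j] else min(col) for j, col in enumerate(cols)]
    neg = [min(col) if benefit[j] else max(col) for j, col in enumerate(cols)]
    closeness = []
    for row in c:
        d_pos = owad_aggregate([abs(v - p) for v, p in zip(row, pos)], w_pos)
        d_neg = owad_aggregate([abs(v - q) for v, q in zip(row, neg)], w_neg)
        # Assumes d_pos + d_neg > 0 for every alternative.
        closeness.append(d_neg / (d_neg + d_pos))
    ranking = sorted(range(len(matrix)), key=lambda i: closeness[i], reverse=True)
    return closeness, ranking


# Hypothetical data: two specialists (A1, A3) and one balanced alternative (A2).
matrix = [[9, 1], [5, 5], [1, 9]]
crit = [0.5, 0.5]
benefit = [True, True]
# Neutral attitude: equal OWAD weights reproduce the TOPSIS closeness values.
neutral, _ = owad_topsis(matrix, crit, benefit, [0.5, 0.5], [0.5, 0.5])
# Pessimistic attitude: largest distance to the ideal, smallest to the negative ideal.
pessimistic, pess_rank = owad_topsis(matrix, crit, benefit, [1.0, 0.0], [0.0, 1.0])
print(neutral, pessimistic, pess_rank)
```

Under the neutral weights all three alternatives tie, whereas the pessimistic weights penalize the alternatives that perform poorly on one criterion and place the balanced alternative first.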

3.2 The analysis of weight values in OWAD–TOPSIS method

Next, we analyze the different cases of weight values in the OWAD–TOPSIS method and prove that our method includes the TOPSIS method as a special case. Then, we propose a parameterized OWAD–TOPSIS model by applying a neat-OWAD operator based on a power function.

The influences of weights in the OWAD operator can be discussed in the following different cases:

- 1.

For the distance to the positive ideal solution, if the weights are in descending order (larger weights on larger distances), the aggregation result increases, and the attitude of the decision-maker is pessimistic. In the extreme case \(W^*=(1,0,\ldots ,0)\), we obtain the maximum distance to the ideal solution. On the other hand, if the weights are in ascending order, the aggregation result decreases, and the attitude of the decision-maker is optimistic. In the extreme case \(W_* =(0,0,\ldots ,0,1)\), we obtain the minimum distance to the ideal solution.

- 2.

For the distance to the negative ideal solution, if the weights are in descending order, the aggregation result increases, and the attitude of the decision-maker is optimistic. In the extreme case \(W^*=(1,0,\ldots ,0)\), we obtain the maximum distance to the negative ideal solution. On the other hand, if the weights are in ascending order, the aggregation result decreases, and the attitude of the decision-maker is pessimistic. In the extreme case \(W_* =(0,0,\ldots ,0,1)\), we obtain the minimum distance to the negative ideal solution.

- 3.

For the distance to either the positive ideal or the negative ideal solution, if the weights are equal, that is, \(W_A=(\frac{1}{n},\frac{1}{n},\ldots ,\frac{1}{n})\), then the aggregation result is the original Manhattan distance scaled by \(\frac{1}{n}\), which leaves the relative closeness unchanged, and the decision-maker’s attitude is neutral.

Next, we will prove that the neutral attitude of the decision-maker in the OWAD–TOPSIS method can include the traditional TOPSIS method as a special case.

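As

$$\begin{aligned} d_i^+=\sum _{j=1}^n|c_{ij}-c_j^+|,\quad d_i^-=\sum _{j=1}^n|c_{ij}-c_j^-|,\quad i=1,2,\ldots ,m. \end{aligned}$$

If we attach the weight vector \(W=(\frac{1}{n},\frac{1}{n},\ldots ,\frac{1}{n})\) to the distances, then the aggregation results are modified to

$$\begin{aligned} \tilde{d}_i^+=\sum _{j=1}^n\frac{1}{n}|c_{ij}-c_j^+|=\frac{d_i^+}{n},\quad \tilde{d}_i^-=\sum _{j=1}^n\frac{1}{n}|c_{ij}-c_j^-|=\frac{d_i^-}{n}. \end{aligned}$$

The relative closeness of the alternative \(A_i\) with respect to the positive ideal solution \(C^+\) can then be expressed as follows:

$$\begin{aligned} \tilde{R}_i=\frac{\tilde{d}_i^-}{\tilde{d}_i^-+\tilde{d}_i^+}=\frac{d_i^-/n}{d_i^-/n+d_i^+/n}=\frac{d_i^-}{d_i^-+d_i^+}=R_i, \end{aligned}$$

which demonstrates that the traditional TOPSIS method is a special case of the OWAD–TOPSIS method with \(w_i=\frac{1}{n},1\leq i\leq n\).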

3.3 Weight assignment method with neat-OWAD operator

Just as the OWAD operator is an extension of the OWA operator, the neat-OWAD operator can be seen as an extension of the neat-OWA operator. The neat-OWA operator is more flexible in aggregating information than the OWA operator, because its weights change dynamically with the aggregated elements. In aggregating distance values, the neat-OWA operator can implement the idea that large deviations should be given more important weights than small deviations. Therefore, we propose the neat-OWAD operator as a combination of the neat-OWA operator and a distance measure. Its aggregation result changes dynamically with the distance-dependent weights. The neat-OWAD operator can be defined as follows for two sets \(X=\{x_1,x_2,\ldots ,x_n\}\), \(Y=\{y_1,y_2,\ldots ,y_n\}\).

Definition 4

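A neat-OWAD operator of dimension n is a mapping neat-OWAD: \(R^n \times R^n \rightarrow R\) that has an associated weighting vector W with \(\sum ^n_{j=1}w_j=1\) and \(w_j\in [0,1]\), such that:

$$\begin{aligned} \text{neat-OWAD}(X,Y)=\sum _{i=1}^nw_id_i,\quad w_i=\frac{f(d_i)}{\sum _{j=1}^nf(d_j)}, \end{aligned}$$

where \(d_i=|x_i-y_i|\), and \(x_i\) and \(y_i\) are the ith arguments of the sets X and Y.

If we set the function \(f(x)=x^\alpha\) to express the influence on the distance weight, then we have

$$\begin{aligned} w_i=\frac{d_i^{\alpha }}{\sum _{j=1}^nd_j^{\alpha }},\quad \text{neat-OWAD}(X,Y)=\frac{\sum _{i=1}^nd_i^{\alpha +1}}{\sum _{j=1}^nd_j^{\alpha }}. \end{aligned}$$

Next, we discuss the behavior of the neat-OWAD operator as the parameter \(\alpha\) (\(\alpha \geq 0\)) changes.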

- 1.

When \(\alpha =0\), \(W=(\frac{1}{n},\frac{1}{n},\ldots ,\frac{1}{n})\). All the weight values become the same, and the neat-OWAD operator becomes the simple average operator. Furthermore, the OWAD–TOPSIS method becomes the traditional TOPSIS method.

- 2.

When \(0<\alpha\), the weights have the same order of the distance information; the bigger the distance is, the bigger the corresponding weight becomes. For neat-OWAD operator, the aggregation result is bigger than the average value, and increases with the value of \(\alpha\).

- 3.

When \(\alpha \rightarrow +\infty\), all the weights approach zero except the weights of the biggest distance value.

The distance weights depend on the distance values and on the parameter \(\alpha\). When the decision-maker’s attitude is neutral, \(\alpha =0\) and all the weights are the same. Otherwise, \(\alpha\) acts as an amplifier that controls how strongly the weights emphasize larger distance values.

For simplicity, we take \(\alpha =1\) as an example in the following discussion. As different distances should correspond to different weights, the weights of the neat-OWAD operator are expressed as functions of the distances. Integrating the neat-OWAD operator into the TOPSIS approach therefore makes the weights more reasonable and flexible. The steps of this new approach are listed as follows.
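The three cases above can be checked numerically with a small Python sketch of the neat-OWAD operator with \(f(x)=x^\alpha\). The function name `neat_owad` and the sample vectors are assumptions made for illustration.

```python
def neat_owad(x, y, alpha):
    """neat-OWAD with f(d) = d**alpha: weights w_i = d_i**alpha / sum_j d_j**alpha."""
    d = [abs(a - b) for a, b in zip(x, y)]
    powered = [di ** alpha for di in d]  # note: 0 ** 0 evaluates to 1 in Python
    total = sum(powered)
    if total == 0:
        raise ValueError("all distances are zero, so the weights are undefined for alpha > 0")
    weights = [p / total for p in powered]
    value = sum(w * di for w, di in zip(weights, d))
    return value, weights


# Hypothetical arguments; the individual distances are 1, 2 and 3.
x = [1, 2, 3]
y = [0, 0, 0]
v0, w0 = neat_owad(x, y, 0)     # equal weights: the simple average
v1, w1 = neat_owad(x, y, 1)     # weights proportional to the distances
v2, w2 = neat_owad(x, y, 2)
v50, w50 = neat_owad(x, y, 50)  # almost all weight on the largest distance
print(v0, v1, v2, v50)
```

The aggregated value grows with \(\alpha\) from the average distance towards the largest distance, which matches the qualitative behavior described in the three cases.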

- Step 1:

Calculate the normalized decision-matrix. The normalized value \(b_{ij}, i=1,2,\ldots ,m, j=1,2,\ldots ,n\) is calculated with sum-based normalization as follows:

$$\begin{aligned} b_{ij}=\frac{a_{ij}}{\sum ^m_{i=1}a_{ij}}. \end{aligned}$$(20)

- Step 2:

Calculate the weighted normalized decision-matrix. The weighted normalized value \(c_{ij}\) is calculated as \(c_{ij}=w_{j}b_{ij}, i=1,2,\ldots ,m, j=1,2,\ldots ,n\), where \(w_j\) is the weight of the jth criterion \(G_j\), and \(\sum _{j=1}^{n}w_j=1\). Then, determine the ideal and negative ideal solutions:

$$\begin{aligned} C^+&=\{c^+_1,c_2^+,\ldots ,c_n^+\}=\{(\max _ic_{ij}\mid j\in I),(\min _ic_{ij}\mid j\in J)\} \end{aligned}$$(21a)

$$\begin{aligned} C^-&=\{c^-_1,c_2^-,\ldots ,c_n^-\}=\{(\min _ic_{ij}\mid j\in I),(\max _ic_{ij}\mid j\in J)\}, \end{aligned}$$(21b)

where \(I\) is associated with benefit criteria, and \(J\) is associated with cost criteria.

- Step 3:

Calculate the separation measures, using the n-dimensional Manhattan distance. The separations of each alternative from the positive ideal and negative ideal solutions, taking the preference into account, are given as follows, where the last expression in each line corresponds to \(\alpha =1\):

$$\begin{aligned} d_i^+&=\sum _{j=1}^n|c_{ij}-c_j^+|w_{ij}=\sum _{j=1}^n\frac{|c_{ij}-c_j^+|^{\alpha +1}}{\sum ^n_{k=1}|c_{ik}-c_k^+|^{\alpha }}=\sum _{j=1}^n\frac{|c_{ij}-c_j^+|^2}{\sum ^n_{k=1}|c_{ik}-c_k^+|} \end{aligned}$$(22a)

$$\begin{aligned} d_i^-&=\sum _{j=1}^n|c_{ij}-c_j^-|w_{ij}=\sum _{j=1}^n\frac{|c_{ij}-c_j^-|^{\alpha +1}}{\sum ^n_{k=1}|c_{ik}-c_k^-|^{\alpha }}=\sum _{j=1}^n\frac{|c_{ij}-c_j^-|^2}{\sum ^n_{k=1}|c_{ik}-c_k^-|}. \end{aligned}$$(22b)

- Step 4:

Calculate the relative closeness degree to the positive ideal solution. The relative closeness of the alternative \(A_i\) with respect to the positive ideal solution \(C^+\) is defined as follows:

$$\begin{aligned} R_i=\frac{d_i^-}{d_i^-+d_i^+}, i=1,2,\ldots ,m. \end{aligned}$$(23)

Since \(d_i^- \geq 0\) and \(d_i^+\geq 0\), clearly \(R_i\in [0,1]\).

- Step 5:

Rank the preference order. The alternatives are ranked in decreasing order of the relative closeness \(R_i\).
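The neat-OWAD-based procedure can be sketched in Python as follows. The function names, the default \(\alpha =1\), the convention of returning a zero separation when all distances of an alternative vanish, and the data are assumptions made for this example. They are not taken from the numerical example of Section 4.

```python
def neat_owad_distance(distances, alpha):
    """Aggregate distances with weights d_j**alpha / sum_k d_k**alpha (Eq. 22)."""
    powered = [d ** alpha for d in distances]
    total = sum(powered)
    if total == 0:  # every distance is zero (only possible here when alpha > 0)
        return 0.0
    return sum(p * d for p, d in zip(powered, distances)) / total


def neat_owad_topsis(matrix, crit_weights, benefit, alpha=1.0):
    """Return (closeness, ranking) for the neat-OWAD-based OWAD-TOPSIS procedure."""
    n = len(crit_weights)
    col_sums = [sum(row[j] for row in matrix) for j in range(n)]
    c = [[crit_weights[j] * row[j] / col_sums[j] for j in range(n)] for row in matrix]
    cols = list(zip(*c))
    pos = [max(col) if benefit[j] else min(col) for j, col in enumerate(cols)]
    neg = [min(col) if benefit[j] else max(col) for j, col in enumerate(cols)]
    closeness = []
    for row in c:
        d_pos = neat_owad_distance([abs(v - p) for v, p in zip(row, pos)], alpha)
        d_neg = neat_owad_distance([abs(v - q) for v, q in zip(row, neg)], alpha)
        # Assumes d_pos + d_neg > 0 for every alternative.
        closeness.append(d_neg / (d_neg + d_pos))
    ranking = sorted(range(len(matrix)), key=lambda i: closeness[i], reverse=True)
    return closeness, ranking


# Hypothetical data: three alternatives, two equally weighted benefit criteria.
matrix = [[8, 2], [5, 5], [3, 9]]
r0, rank0 = neat_owad_topsis(matrix, [0.5, 0.5], [True, True], alpha=0)
r1, rank1 = neat_owad_topsis(matrix, [0.5, 0.5], [True, True], alpha=1)
print(r0, rank0)
print(r1, rank1)
```

With \(\alpha =0\) the closeness values coincide with those of the traditional TOPSIS method, and the first two alternatives tie. With \(\alpha =1\) the balanced second alternative moves ahead of the first, while the third alternative stays on top.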

4 Numerical example

The new OWAD–TOPSIS model can be applied in a wide range of problems. A numerical example based on Shih et al. (2007) is used to illustrate the process of the neat-OWAD operator and TOPSIS method combination.

A local chemical company tries to recruit an online manager. The company’s human resources department provides some relevant selection tests, which serve as the benefit attributes to be evaluated. There are 17 qualified candidates on the list, and four decision-makers are responsible for the selection. In this paper, we use the TOPSIS method to solve a single decision-maker’s problem rather than a group decision-making problem, so the initial data have been preprocessed accordingly. The basic data, including objective and subjective attributes (only quantitative information here), are listed in Tables 1 and 2.

We now explain the specific steps as applied to personnel selection.

- Step 1:

Normalize the element values of the alternatives; the results are given in Table 3. Because all criteria are benefit criteria, we choose the sum-based normalization method here.

- Step 2:

Calculate the weighted normalized decision-matrix. With the weights listed in Table 1, the positive ideal and negative ideal solutions are determined in Table 4.

- Step 3:

Calculate the separation measures, using the n-dimensional Manhattan distance. When \(\alpha =1\), the separations of each alternative from the positive ideal and negative ideal solutions are given in Tables 5 and 6. The results for other values of \(\alpha\) are given in the “Appendix”.

- Step 4:

Calculate the relative closeness degree. The calculation results are given in Table 7. Since \(R_i=d_i^-/(d_i^-+d_i^+)\), a relative closeness near one means that the solution is close to the positive ideal solution, and a relative closeness near zero means that the solution is far from the positive ideal solution.

- Step 5:

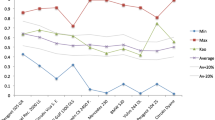

Rank the preference order in Table 8.

- (a)

When \(\alpha =0\), all the weight values are the same and the method is equivalent to the TOPSIS method. According to the ranking \(A3>A9>A16\), A3 is the best candidate for the position.

- (b)

When \(\alpha =0.5\), according to the ranking \(A3>A9>A16\), A3 is the best candidate for the position.

- (c)

When \(\alpha =1\), according to the ranking \(A3>A9>A16\), A3 is the best candidate for the position.

- (d)

When \(\alpha =2\), according to the ranking \(A3>A9>A16\), A3 is the best candidate for the position.

We can see that, although the weights are different, A3, A9, and A16 always rank first, second, and third, and A2, A10, and A12 always rank as the last three. The reason is that these candidates’ overall performances are clearly much better or much worse than those of the others. The ranking positions of the other alternatives change with the weights, which correspond to the decision-maker’s attitude. The combination of the neat-OWAD operator and the TOPSIS method takes the decision-maker’s preference information into account. Furthermore, the weight values are determined dynamically by the distances to the positive ideal and negative ideal solutions. When \(\alpha =0\), all the weights are equal and the combined approach reduces to the TOPSIS method, which is shown in bold in Table 8. When \(\alpha>0\), the aggregation value changes dynamically with the weights.

5 Conclusions

In this paper, we analyze the connection between the TOPSIS method and the OWAD operator for multiple criteria decision-making. A new decision model that combines the TOPSIS method and the OWAD operator is proposed, and it includes TOPSIS as a special case. The new approach keeps the framework of the TOPSIS method and also integrates the attitudinal flexibility of the OWAD operator. A dynamic neat-OWAD operator with a parameterized attitudinal character is proposed, which can integrate the objective information and the subjective preference of the problem.

In the future, we will develop extensions that use other types of mathematical functions in the neat-OWA operator to express the weights. Furthermore, we will extend this aggregation process to fuzzy settings such as interval sets, intuitionistic fuzzy sets, and linguistic environments.

References

Behzadian M, Otaghsara SK, Yazdani M, Ignatius J (2012) A state-of-the-art survey of TOPSIS applications. Expert Syst Appl 39(17):13,051–13,069

Blanco-Mesa F, Merigo JM, Gil-Lafuente AM (2017) Fuzzy decision making: a bibliometric-based review. J Intell Fuzzy Syst 32(3):2033–2050

Chen SM, Hong JA (2014) Fuzzy multiple attributes group decision making based on ranking interval type-2 fuzzy sets and the TOPSIS method. IEEE Trans Syst Man Cybern Syst 44(2):1665–1673

Chen SM, Cheng SH, Lan TC (2016) Multicriteria decision making based on the TOPSIS method and similarity measures between intuitionistic fuzzy values. Inf Sci 367:279–295

Chen Y, Li KW, Liu SF (2011) An OWA–TOPSIS method for multiple criteria decision analysis. Expert Syst Appl 38(5):5205–5211

Dagdeviren M, Yavuz S, Kilinc N (2009) Weapon selection using the AHP and TOPSIS methods under fuzzy environment. Expert Syst Appl 36(4):8143–8151

Dheena P, Mohanraj G (2011) Multicriteria decision-making combining fuzzy set theory, ideal and anti-ideal points for location site selection. Expert Syst Appl 38(10):13,260–13,265

Dursun M, Karsak EE (2010) A fuzzy MCDM approach for personnel selection. Expert Syst Appl 37(6):4324–4330

Dursun M, Ertugrul Karsak E, Karadayi MA (2011) A fuzzy MCDM approach for health-care waste management. World Acad Sci Eng Technol 73:858–864

Dwivedi G, Srivastava RK, Srivastava SK (2018) A generalised fuzzy TOPSIS with improved closeness coefficient. Expert Syst Appl 96:185–195

Emrouznejad A, Marra M (2014) Ordered weighted averaging operators 1988–2014: a citation-based literature survey. Int J Intell Syst 29(11):994–1014

He XR, Wu YY, Yu DJ, Merigo JM (2017) Exploring the ordered weighted averaging operator knowledge domain: a bibliometric analysis. Int J Intell Syst 32(11):1151–1166

Hung KC, Julian P, Chien T, Jin WT (2010) A decision support system for engineering design based on an enhanced fuzzy MCDM approach. Expert Syst Appl 37(1):202–213

Hwang C, Yoon K (1981) Multiple attribute decision making: methods and applications. Springer, Berlin

Kabak M, Burmaoǧlu S, Kazançoǧlu Y (2012) A fuzzy hybrid MCDM approach for professional selection. Expert Syst Appl 39(3):3516–3525

La Red DL, Dona JM, Pelaez JI, Fernandez EB (2011) WKC-OWA, a new neat-OWA operator to aggregate information in democratic decision problems. Int J Uncertain Fuzziness Knowl Based Syst 19(5):759–779

Laengle S, Loyola G, Merigo JM (2017) Mean-variance portfolio selection with the ordered weighted average. IEEE Trans Fuzzy Syst 25(2):350–362

Lee LW, Chen SM (2008) Fuzzy multiple attributes group decision-making based on the extension of TOPSIS method and interval type-2 fuzzy sets. International conference on machine learning and cybernetics. IEEE, Kunming, pp 3260–3265

Lee WS, Tzeng GH, Guan JL, Chien KT, Huang JM (2009) Combined MCDM techniques for exploring stock selection based on Gordon model. Expert Syst Appl 36(3):6421–6430

Li YM, Kao CP (2009) TREPPS: a trust-based recommender system for peer production services. Expert Syst Appl 36(2, Part 2):3263–3277

Liang D, Xu Z (2017) The new extension of TOPSIS method for MCDM with hesitant Pythagorean fuzzy sets. Appl Soft Comput 60:167–179

Lin CT, Lee C, Chen WY (2009a) An expert system approach to assess service performance of travel intermediary. Expert Syst Appl 36(2):2987–2996

Lin CT, Lee C, Wu CS (2009b) Optimizing a marketing expert decision process for the private hotel. Expert Syst Appl 36(3):5613–5619

Liu F, Zhang WG (2014) TOPSIS-based consensus model for group decision-making with incomplete interval fuzzy preference relations. IEEE Trans Cybern 44(8):1283–1294

Liu X, Lou H (2006) Parameterized additive neat OWA operators with different orness levels. Int J Intell Syst 21(10):1045–1072

Liu XW (2008) A general model of parameterized OWA aggregation with given orness level. Int J Approx Reason 48:598–627

Mardani A, Nilashi M, Zavadskas EK, Awang SR, Zare H, Jamal NM (2018) Decision making methods based on fuzzy aggregation operators: three decades review from 1986 to 2017. Int J Inf Technol Decis Mak 17(2):391–466

Merigó JM, Gil-Lafuente AM (2010) New decision-making techniques and their application in the selection of financial products. Inf Sci 180(11):2085–2094

Merigó JM, Gil-Lafuente AM (2011) Decision-making in sport management based on the OWA operator. Expert Syst Appl 38(8):10408–10413

Merigó JM, Zhou LG, Yu DJ, Alrajeh N, Alnowibet K (2018) Probabilistic OWA distances applied to asset management. Soft Comput 22(15):4855–4878

Morshedizadeh M, Kordestani M, Carriveau R, Ting DSK, Saif M (2018) Power production prediction of wind turbines using a fusion of MLP and ANFIS networks. IET Renew Power Gener 12(9):1025–1033

Noor-E-Alam M, Lipi TF, Ahsan Akhtar Hasin M, Ullah AMMS (2011) Algorithms for fuzzy multi expert multi criteria decision making (ME-MCDM). Knowl Based Syst 24(3):367–377

Pedrycz W, Chen SM (2011) Granular computing and intelligent systems: design with information granules of higher order and higher type. Springer, Heidelberg

Pedrycz W, Chen SM (2015a) Granular computing and decision-making: interactive and iterative approaches. Springer, Heidelberg

Pedrycz W, Chen SM (2015b) Information granularity, big data, and computational intelligence. Springer, Heidelberg

Peng Y, Zhang Y, Tang Y, Li S (2011) An incident information management framework based on data integration, data mining, and multi-criteria decision making. Decis Support Syst 51(2):316–327

Safarzadegan Gilan S, Sebt MH, Shahhosseini V (2012) Computing with words for hierarchical competency based selection of personnel in construction companies. Appl Soft Comput 12(2):860–871

Scherger V, Terceno A, Vigier H (2017) The OWA distance operator and its application in business failure. Kybernetes 46(1):114–130

Shih HS, Shyur HJ, Lee ES (2007) An extension of TOPSIS for group decision making. Math Comput Model 45(7–8):801–813

Tiryaki F, Ahlatcioglu M (2005) Fuzzy stock selection using a new fuzzy ranking and weighting algorithm. Appl Math Comput 170(1):144–157

Torra V (2004) OWA operators in data modeling and reidentification. IEEE Trans Fuzzy Syst 12(5):652–660

Vizuete Luciano E, Merigo JM, Gil-Lafuente AM, Boria Reverte S (2012) OWA operators in the assignment process: the case of the Hungarian algorithm. In: International conference on modeling and simulation in engineering, economics, and management. Lecture notes in business information processing, vol 115. Springer, Berlin, pp 166–177

Wang CY, Chen SM (2017) Multiple attribute decision making based on interval-valued intuitionistic fuzzy sets, linear programming methodology, and the extended TOPSIS method. Inf Sci 397:155–167

Wang TR, Liu J, Li JZ, Niu CH (2016) An integrating OWA–TOPSIS framework in intuitionistic fuzzy settings for multiple attribute decision making. Comput Ind Eng 98:185–194

Wang YM, Elhag TMS (2006) Fuzzy TOPSIS method based on alpha level sets with an application to bridge risk assessment. Expert Syst Appl 31(2):309–319

Wu T, Liu XW, Liu F (2018) An interval type-2 fuzzy TOPSIS model for large scale group decision making problems with social network information. Inf Sci 432:392–410

Xu G, Liu P (2007) The evaluation study of human resource based on extended C-OWA operators and TOPSIS method. In: International conference on wireless communications, networking and mobile computing. IEEE, pp 4461–4465

Xu Y, Wang H (2011) Distance measure for linguistic decision making. Syst Eng Procedia 1:450–456

Xu Z, Chen J (2008) Ordered weighted distance measure. J Syst Sci Syst Eng 17(4):432–445

Xu Z, Zhang XL (2013) Hesitant fuzzy multi-attribute decision making based on TOPSIS with incomplete weight information. Knowl Based Syst 52:53–64

Yager RR (1988) On ordered weighted averaging aggregation operators in multicriteria decision making. IEEE Trans Syst Man Cybern 18(1):183–190

Yager RR (2004) Modeling prioritized multicriteria decision making. IEEE Trans Syst Man Cybern Part B Cybern 34(6):2396–2404

Yager RR, Kacprzyk J, Beliakov G (eds) (2011) Recent developments in the ordered weighted averaging operators: theory and practice. Springer, Berlin

Yan HB, Huynh VN, Nakamori Y, Murai T (2011) On prioritized weighted aggregation in multi-criteria decision making. Expert Syst Appl 38:812–823

Yazdanbakhsh O, Dick S (2018) A systematic review of complex fuzzy sets and logic. Fuzzy Sets Syst 338:1–22

Yoon KP, Kim WK (2017) The behavioral TOPSIS. Expert Syst Appl 89:266–272

Zadeh LA (1965) Fuzzy sets. Inf Control 8(3):338–353

Zavadskas EK, Mardani A, Turskis Z, Jusoh A, Nor KMD (2016) Development of TOPSIS method to solve complicated decision-making problems: an overview on developments from 2000 to 2015. Int J Inf Technol Decis Mak 15(3):645–682

Zhang X, Xu Z (2014) Extension of TOPSIS to multiple criteria decision making with Pythagorean fuzzy sets. Int J Intell Syst 29(12):1061–1078

Zhang X, Xu Z (2015) Soft computing based on maximizing consensus and fuzzy TOPSIS approach to interval-valued intuitionistic fuzzy group decision making. Appl Soft Comput 26:42–56

Zyoud SH, Fuchs-Hanusch D (2017) A bibliometric-based survey on AHP and TOPSIS techniques. Expert Syst Appl 78:158–181

Acknowledgements

This work was supported by the National Natural Science Foundation of China (NSFC) (71371049, 71771051).

Appendix

1. When \(\alpha =0\), each weight equals \(1/7\). In this case the weights are equal, and the combination of the neat-OWAD operator and the TOPSIS method is equivalent to the TOPSIS method. The distances to the positive ideal and negative ideal solutions are given in Tables 9 and 10.

2. When \(\alpha =0.5\), the distances to the positive ideal and negative ideal solutions are given in Tables 11 and 12.

3. When \(\alpha =2\), the distances to the positive ideal and negative ideal solutions are given in Tables 13 and 14. Because the normalized distances lie between 0 and 1, the weights and aggregated values become very small as \(\alpha\) increases. We therefore keep four decimal places and do not discuss larger values of \(\alpha\).
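
The effect of \(\alpha\) on the weights in the three cases above can be illustrated with a minimal sketch. It assumes a power-form neat weight, \(w_j = d_j^{\alpha } / \sum _k d_k^{\alpha }\), which is one common parameterized neat OWA form and reproduces the equal weights \(1/7\) at \(\alpha =0\); the operator actually used for Tables 9 to 14 may differ, for instance in how the weights are normalized. The function names and the seven distance values are hypothetical and are not taken from the tables.

```python
from typing import List


def neat_weights(distances: List[float], alpha: float) -> List[float]:
    """Assumed power-form neat weights: w_j = d_j**alpha / sum_k d_k**alpha."""
    powered = [d ** alpha for d in distances]
    total = sum(powered)
    if total == 0:
        raise ValueError("all powered distances are zero; weights are undefined")
    return [p / total for p in powered]


def neat_owad(distances: List[float], alpha: float) -> float:
    """Aggregate the distances with the assumed neat weights."""
    weights = neat_weights(distances, alpha)
    return sum(w * d for w, d in zip(weights, distances))


# Hypothetical normalized distances for seven criteria (illustrative only).
distances = [0.10, 0.25, 0.40, 0.55, 0.70, 0.85, 1.00]

for alpha in (0, 0.5, 2):
    weights = [round(w, 4) for w in neat_weights(distances, alpha)]
    print(f"alpha={alpha}: weights={weights}, "
          f"aggregate={round(neat_owad(distances, alpha), 4)}")
```

Under this assumed form the weights depend only on the distance values themselves, so reordering the distances does not change the aggregate, and \(\alpha =0\) reduces to the arithmetic mean of the distances.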

About this article

Cite this article

Liu, X., Wang, L. An extension approach of TOPSIS method with OWAD operator for multiple criteria decision-making. Granul. Comput. 5, 135–148 (2020). https://doi.org/10.1007/s41066-018-0131-4

DOI: https://doi.org/10.1007/s41066-018-0131-4